EEC 693/793 Special Topics in Electrical Engineering: Secure and Dependable Computing

- Slides: 31

EEC 693/793 Special Topics in Electrical Engineering
Secure and Dependable Computing
Lecture 11
Wenbing Zhao
Department of Electrical and Computer Engineering
Cleveland State University
wenbing@ieee.org
Spring 2007 EEC 693: Secure & Dependable Computing

Outline
• Reminder: wiki page due 4/5
• Dependability concepts (some review)
• Fault, error and failure (some review)
• Fault/failure detection in distributed systems
• Consensus in asynchronous distributed systems

Dependable System
• Dependability:
  – Ability to deliver service that can justifiably be trusted
  – Ability to avoid service failures that are more frequent or more severe than is acceptable
• When service failures are more frequent or more severe than acceptable, we say there is a dependability failure
• For a system to be dependable, it must be
  – Available - e.g., ready for use when we need it
  – Reliable - e.g., able to provide continuity of service while we are using it
  – Safe - e.g., does not have a catastrophic consequence on the environment
  – Secure - e.g., able to preserve confidentiality

Approaches to Achieving Dependability
• Fault Avoidance - how to prevent, by construction, the fault occurrence or introduction
• Fault Removal - how to minimize, by verification, the presence of faults
• Fault Tolerance - how to provide, by redundancy, a service complying with the specification in spite of faults
• Fault Forecasting - how to estimate, by evaluation, the presence, the creation, and the consequence of faults

Graceful Degradation
• If a specified fault scenario develops, the system must still provide a specified level of service. Ideally, the performance of the system degrades gracefully
  – The system must not suddenly collapse when a fault occurs, or as the extent of the faults increases
  – Rather, it should continue to execute part of the workload correctly

Quantitative Dependability Measures
• Reliability - a measure of continuous delivery of proper service - or, equivalently, of the time to failure
  – It is the probability of surviving (potentially despite failures) over an interval of time
• For example, the reliability requirement might be stated as a 0.999999 reliability for a 10-hour mission. In other words, the probability of failure during the mission may be at most 10^-6
• Hard real-time systems such as flight control and process control demand high reliability, since a failure could mean loss of life
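To make the 10-hour mission example concrete, the sketch below converts a reliability target into a tolerable failure rate. It assumes a constant failure rate (the exponential model R(t) = exp(-λt)), which the slide does not state; the function names are hypothetical.

```python
import math


def failure_rate_for(reliability, mission_hours):
    """Largest constant failure rate (per hour) that still meets the target.

    Assumes the exponential model R(t) = exp(-rate * t); this model is an
    assumption of the sketch, not something the slide specifies.
    """
    return -math.log(reliability) / mission_hours


def reliability_at(rate_per_hour, hours):
    """Probability of surviving `hours` with a constant failure rate."""
    return math.exp(-rate_per_hour * hours)


rate = failure_rate_for(0.999999, 10)   # roughly 1e-7 failures per hour
print(rate, reliability_at(rate, 10))
```

Under this assumption, a 0.999999 reliability over 10 hours corresponds to a failure rate of about one failure per ten million operating hours.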

Quantitative Dependability Measures
• Availability - a measure of the delivery of correct service with respect to the alternation of correct service and out-of-service
  – It is the probability of being operational at a given instant of time
• A 0.999999 availability means that the system is not operational at most one hour in a million hours
• A system with high availability may in fact fail. However, failure frequency and recovery time should be small enough to achieve the desired availability
• Soft real-time systems such as telephone switching and airline reservation require high availability
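The relationship between failure frequency, recovery time, and availability can be sketched numerically. The example below uses the common steady-state formula availability = MTTF / (MTTF + MTTR); that formula and the function names are assumptions of this sketch and are not taken from the slide.

```python
def availability(mttf_hours, mttr_hours):
    """Steady-state availability from mean time to failure and to repair.

    MTTF / (MTTF + MTTR) is a standard textbook formula, used here as an
    assumption; the slide only defines availability as a probability.
    """
    return mttf_hours / (mttf_hours + mttr_hours)


def downtime_hours(avail, period_hours):
    """Expected out-of-service time over a period at a given availability."""
    return (1.0 - avail) * period_hours


# One hour of repair per 999,999 hours of service gives six nines.
print(availability(999_999, 1))
# Six nines over a million hours allows about one hour of downtime.
print(downtime_hours(0.999999, 1_000_000))
```

The same availability can be reached by failing rarely with slow recovery or failing more often with fast recovery, which is why a highly available system may still fail.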

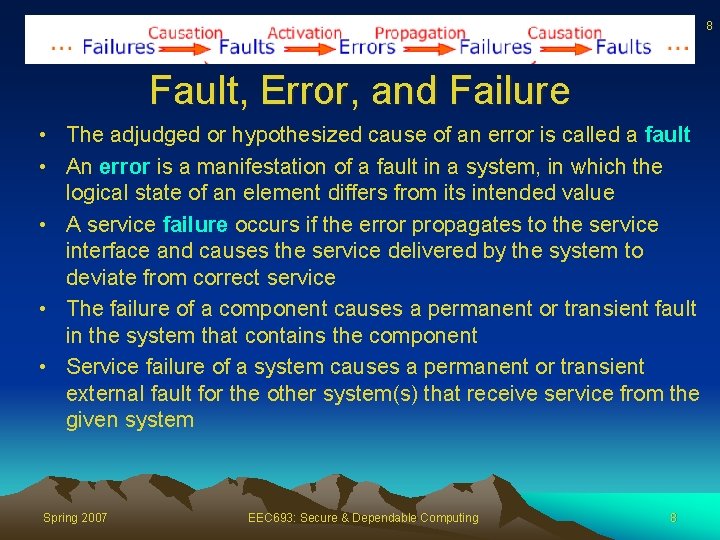

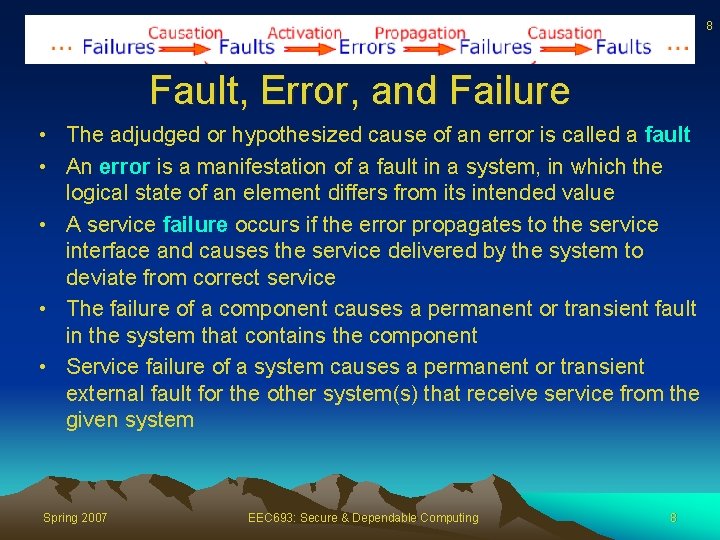

Fault, Error, and Failure
• The adjudged or hypothesized cause of an error is called a fault
• An error is a manifestation of a fault in a system, in which the logical state of an element differs from its intended value
• A service failure occurs if the error propagates to the service interface and causes the service delivered by the system to deviate from correct service
• The failure of a component causes a permanent or transient fault in the system that contains the component
• Service failure of a system causes a permanent or transient external fault for the other system(s) that receive service from the given system

Fault
• Faults can arise during all stages in a computer system's evolution - specification, design, development, manufacturing, assembly, and installation - and throughout its operational life
• Most faults that occur before full system deployment are discovered through testing and eliminated
• Faults that are not removed can reduce a system's dependability when it is in the field
• A fault can be classified by its duration, nature of output, and correlation to other faults

Fault Types - Based on Duration
• Permanent faults are caused by irreversible device/software failures within a component due to damage, fatigue, improper manufacturing, or bad design and implementation
  – Permanent software faults are also called Bohrbugs
  – Easier to detect
• Transient/intermittent faults are triggered by environmental disturbances or incorrect design
  – Transient software faults are also referred to as Heisenbugs
  – Studies show that Heisenbugs are the majority of software faults
  – Harder to detect

Fault Types - Based on Nature of Output
• Malicious fault: a fault that causes a unit to behave arbitrarily or maliciously. Also referred to as a Byzantine fault
  – A sensor sending conflicting outputs to different processors
  – Compromised software system that attempts to cause service failure
• Non-malicious faults: the opposite of malicious faults
  – Faults that are not caused with malicious intention
  – Faults that exhibit themselves consistently to all observers, e.g., fail-stop
• Malicious faults are much harder to detect than non-malicious faults

Fail-Stop System
• A system is said to be fail-stop if it responds to up to a certain maximum number of faults by simply stopping, rather than producing incorrect output
• A fail-stop system typically has many processors running the same tasks and comparing the outputs. If the outputs do not agree, the whole unit turns itself off
• A system is said to be fail-safe if one or more safe states can be identified, that can be accessed in case of a system failure, in order to avoid catastrophe
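The "run the same task on several processors and compare" idea can be sketched in a few lines. This is only an illustration: the replicas are plain Python callables standing in for processors, and `FailStop` and `run_fail_stop` are hypothetical names.

```python
class FailStop(Exception):
    """Raised when the unit shuts itself down instead of emitting output."""


def run_fail_stop(replicas, task_input):
    """Run the same task on every replica and compare the outputs.

    If any replica disagrees, the whole unit stops (raises FailStop)
    rather than returning a possibly incorrect result.
    """
    outputs = [replica(task_input) for replica in replicas]
    if any(out != outputs[0] for out in outputs[1:]):
        raise FailStop(f"replica outputs disagree: {outputs}")
    return outputs[0]


def square(x):
    return x * x


def faulty_square(x):
    # Simulated faulty processor (an assumption for the demo).
    return x * x + 1


print(run_fail_stop([square, square, square], 4))   # all replicas agree
try:
    run_fail_stop([square, faulty_square, square], 4)
except FailStop as stop:
    print("unit stopped:", stop)
```

Note that comparison only detects the fault; it does not mask it. Masking would require voting among the replicas instead of stopping.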

Fault Types - Based on Correlation
• Component faults may be independent of one another or correlated
• A fault is said to be independent if it does not directly or indirectly cause another fault
• Faults are said to be correlated if they are related. Faults could be correlated due to physical or electrical coupling of components
• Correlated faults are more difficult to detect than independent faults

Fail Fast to Reduce Heisenbugs
• The bugs that software developers hate most:
  – The ones that show up only after hours of successful operation, under unusual circumstances
  – The stack trace usually does not provide useful information
• This kind of bug can have many causes, such as
  – Not checking the boundary of an array
  – Invalid defensive programming (this is what fail fast addresses)
• Reference
  – http://www.martinfowler.com/ieeeSoftware/failFast.pdf

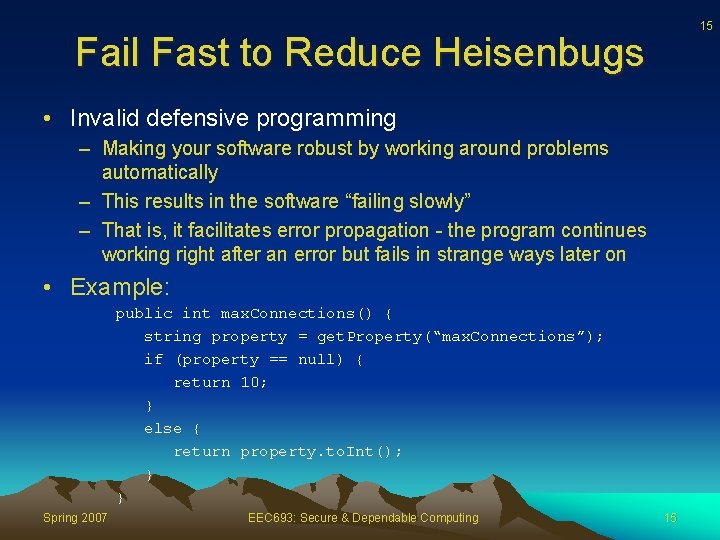

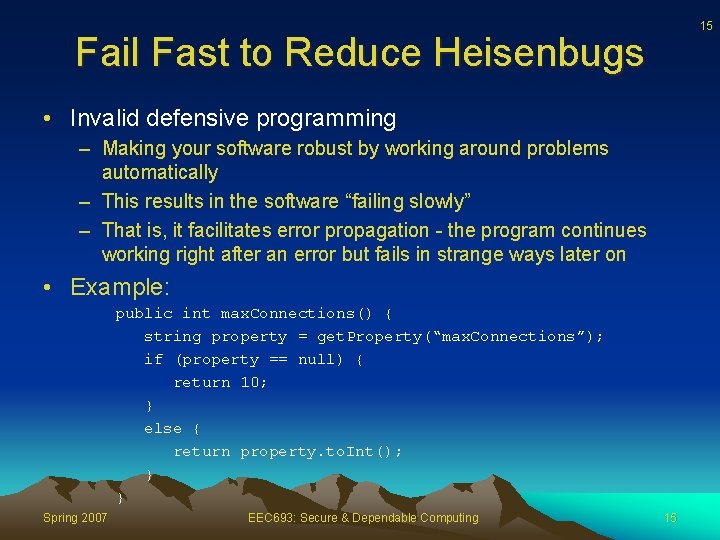

Fail Fast to Reduce Heisenbugs
• Invalid defensive programming
  – Making your software robust by working around problems automatically
  – This results in the software "failing slowly"
  – That is, it facilitates error propagation: the program continues working right after an error but fails in strange ways later on
• Example:

    public int maxConnections() {
        string property = getProperty("maxConnections");
        if (property == null) {
            return 10;  // silently falls back to a default, hiding the missing property
        } else {
            return property.toInt();
        }
    }

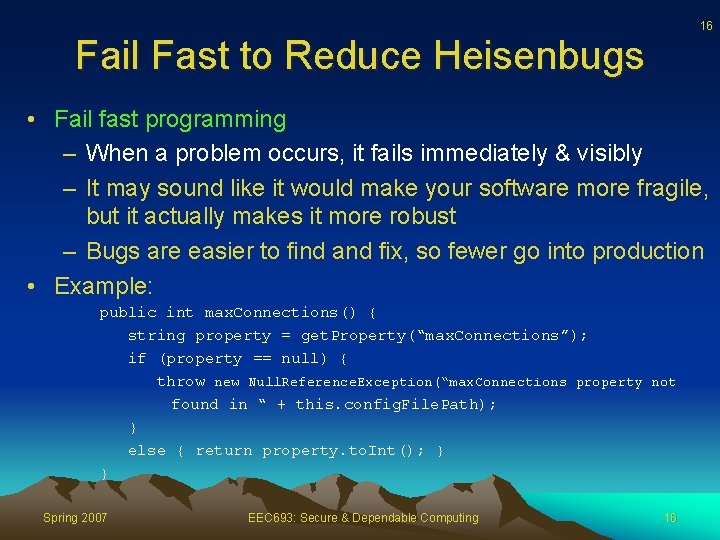

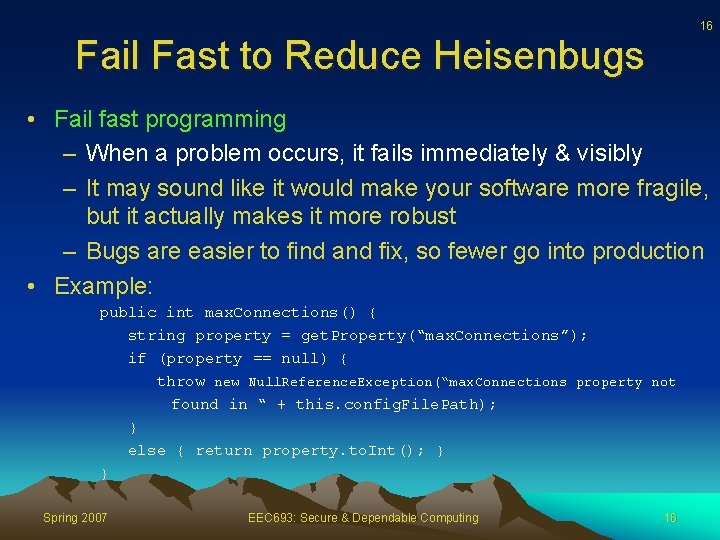

Fail Fast to Reduce Heisenbugs
• Fail fast programming
  – When a problem occurs, it fails immediately & visibly
  – It may sound like it would make your software more fragile, but it actually makes it more robust
  – Bugs are easier to find and fix, so fewer go into production
• Example:

    public int maxConnections() {
        string property = getProperty("maxConnections");
        if (property == null) {
            // fail immediately and say exactly what is missing and where
            throw new NullReferenceException("maxConnections property not found in " + this.configFilePath);
        } else {
            return property.toInt();
        }
    }
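The contrast between the two styles can be tried out directly. The sketch below is a Python rendering of the slides' `maxConnections` example, with a plain dict standing in for the configuration file; the function names and the `config_path` parameter are hypothetical.

```python
def max_connections_defensive(config):
    """'Fail slowly': a missing property is silently replaced by a default."""
    return int(config.get("maxConnections", 10))


def max_connections_fail_fast(config, config_path="<unknown config file>"):
    """Fail fast: a missing property is reported immediately and visibly."""
    if "maxConnections" not in config:
        raise KeyError(f"maxConnections property not found in {config_path}")
    return int(config["maxConnections"])


# A typo in the key: the operator wanted 500 connections.
config = {"maxConections": "500"}

print(max_connections_defensive(config))   # quietly uses 10, the typo goes unnoticed
try:
    max_connections_fail_fast(config, "app.properties")
except KeyError as err:
    print("startup aborted:", err)          # the typo is caught at startup
```

With the defensive version the system runs with the wrong limit and the symptom shows up much later, far from the cause. With the fail-fast version the error message points at the cause.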

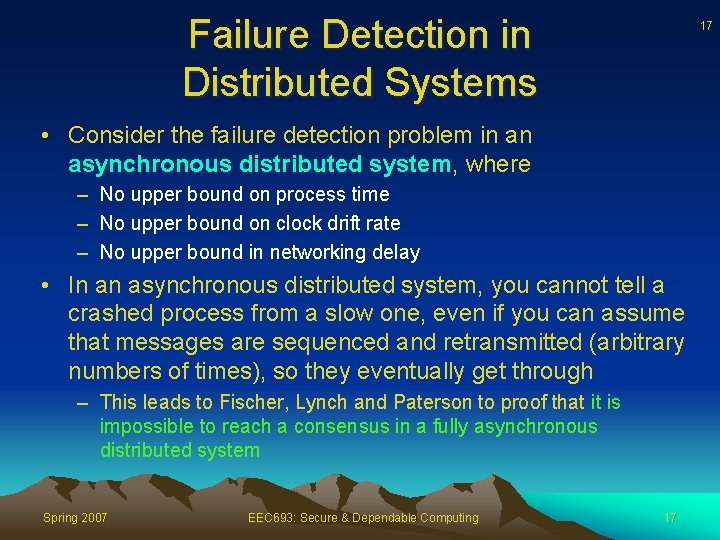

Failure Detection in Distributed Systems
• Consider the failure detection problem in an asynchronous distributed system, where there is
  – No upper bound on processing time
  – No upper bound on clock drift rate
  – No upper bound on network delay
• In an asynchronous distributed system, you cannot tell a crashed process from a slow one, even if you can assume that messages are sequenced and retransmitted (arbitrary numbers of times), so they eventually get through
  – This led Fischer, Lynch, and Paterson to prove that it is impossible to guarantee consensus in a fully asynchronous distributed system

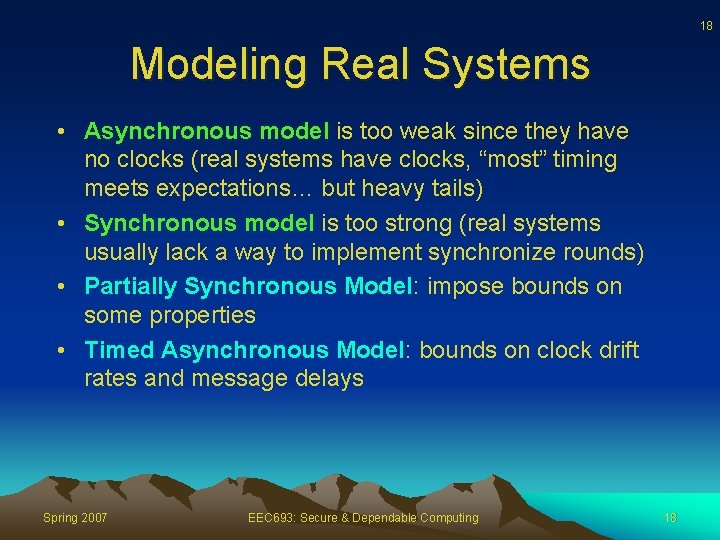

Modeling Real Systems
• The asynchronous model is too weak since it has no clocks (real systems have clocks, and "most" timing meets expectations, but with heavy tails)
• The synchronous model is too strong (real systems usually lack a way to implement synchronized rounds)
• Partially Synchronous Model: impose bounds on some properties
• Timed Asynchronous Model: bounds on clock drift rates and message delays

Consensus Problem
• Assumptions
  – Asynchronous distributed systems
  – Complete network graph
  – Reliable FIFO broadcast communication
  – Deterministic processes, {0, 1} initial values
  – Fail-stop failures are possible
• Solution requirement for consensus
  – Agreement: All processes decide on the same value
  – Validity: If a process decides on a value, then there was a process that started with that value
  – Termination: All processes that do not fail eventually decide
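The three requirements can be read as predicates over the outcome of a run. The sketch below checks them for a finished execution; the dictionary-based representation and the name `check_consensus` are assumptions made for illustration.

```python
def check_consensus(initial, decisions, crashed=frozenset()):
    """Check agreement, validity, and termination for one finished run.

    initial:   {process id: initial value (0 or 1)}
    decisions: {process id: decided value} for processes that decided
    crashed:   ids of processes that failed during the run
    """
    decided_values = set(decisions.values())
    correct = set(initial) - set(crashed)
    return {
        # Agreement: no two processes decided differently.
        "agreement": len(decided_values) <= 1,
        # Validity: every decided value was some process's initial value.
        "validity": decided_values <= set(initial.values()),
        # Termination: every process that did not fail has decided.
        "termination": correct <= set(decisions),
    }


initial = {"p1": 0, "p2": 1, "p3": 1}
print(check_consensus(initial, {"p1": 1, "p2": 1}, crashed={"p3"}))
print(check_consensus(initial, {"p1": 0, "p2": 1, "p3": 1}))
```

The first run satisfies all three properties even though p3 crashed; the second violates agreement because p1 decided differently from the others.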

Impossibility Results
• FLP Impossibility of Consensus
  – A single faulty process can prevent consensus
  – Because a slow process is indistinguishable from a crashed one
• Chandra/Toueg showed that FLP impossibility applies to many problems, not just consensus
  – In particular, they show that FLP applies to group membership and reliable multicast
  – So these practical problems are impossible in asynchronous systems
  – They also look at the weakest condition under which consensus can be solved
• Ways to bypass the impossibility result
  – Use an unreliable failure detector
  – Use a randomized consensus algorithm

Chandra/Toueg Idea
• Separate problem into
  – The consensus algorithm itself
  – A "failure detector" - a form of oracle that announces suspected failure
• Aim: determine the weakest oracle for which consensus is always solvable

Failure Detector Properties
• Completeness: detection of every crash
  – Strong completeness: Eventually, every process that crashes is permanently suspected by every correct process
  – Weak completeness: Eventually, every process that crashes is permanently suspected by some correct process

Failure Detector Properties
• Accuracy: does it make mistakes?
  – Strong accuracy: No process is suspected before it crashes
  – Weak accuracy: Some correct process is never suspected
  – Eventual {strong/weak} accuracy: there is a time after which {strong/weak} accuracy is satisfied

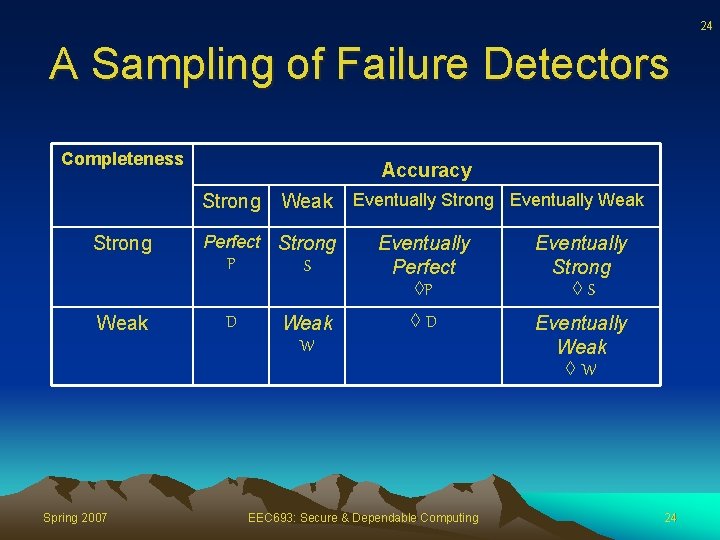

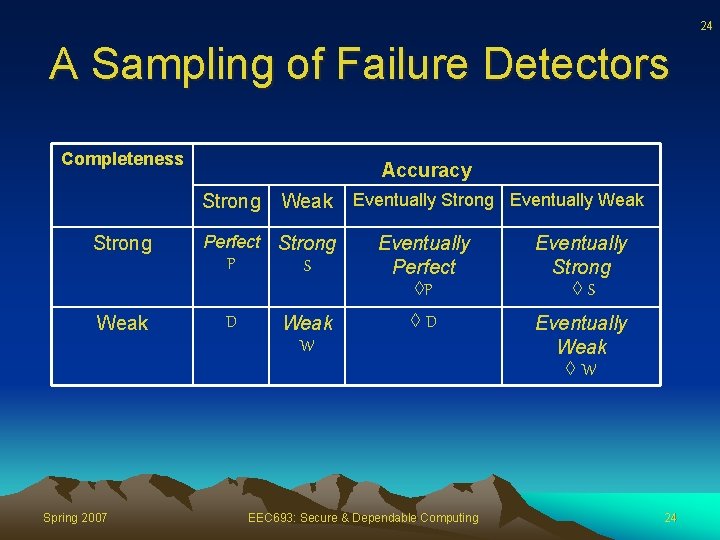

A Sampling of Failure Detectors

| Completeness | Strong accuracy | Weak accuracy | Eventually strong accuracy | Eventually weak accuracy |
|---|---|---|---|---|
| Strong | Perfect (P) | Strong (S) | Eventually Perfect (◇P) | Eventually Strong (◇S) |
| Weak | D | Weak (W) | ◇D | Eventually Weak (◇W) |

Perfect Detector
• Named Perfect, written P
• Strong completeness and strong accuracy
• Detects every crash (eventually and permanently)
• Never makes mistakes

Example of a Failure Detector
• The detector they call ◇W: "eventually weak"
• More commonly: ◇W, read "diamond-W"
• Defined by two properties:
  – There is a time after which every process that crashes is suspected by some correct process {weak completeness}
  – There is a time after which some correct process is never suspected by any correct process {eventual weak accuracy}
• E.g., we can eventually agree upon a leader. If it crashes, we eventually, accurately detect the crash

◇W: Weakest Failure Detector
• ◇W is the weakest failure detector for which consensus is guaranteed to be achieved
• Algorithm
  – Rotate a token around a ring of processes
  – Decision can occur once the token makes it around once without a change in failure-suspicion status for any process
  – Subsequently, as the token is passed, each recipient learns the decision outcome

Building Systems with ◇W
• Unfortunately, this failure detector is not implementable in a purely asynchronous system
• This is the weakest failure detector that solves consensus
• Using timeouts, we can make mistakes at arbitrary times
  – A correct process might be suspected
  – But timeouts are the most widely used failure detection mechanism
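A timeout-based detector of the kind used in practice can be sketched as follows. It suspects a peer whose heartbeat is overdue and, when a suspected peer turns out to be alive, withdraws the suspicion and lengthens that peer's timeout. The class name, the doubling policy, and the explicit `now` argument (used instead of a real clock so the example is deterministic) are all assumptions of this sketch.

```python
class HeartbeatDetector:
    """Unreliable failure detector driven by heartbeats and timeouts."""

    def __init__(self, peers, timeout=1.0, backoff=2.0):
        self.timeout = {p: timeout for p in peers}   # per-peer patience
        self.last_seen = {p: 0.0 for p in peers}     # time of last heartbeat
        self.suspected = set()
        self.backoff = backoff

    def heartbeat(self, peer, now):
        """Record a heartbeat from `peer` received at time `now`."""
        if peer in self.suspected:
            # We were wrong: the peer was slow, not crashed.
            # Withdraw the suspicion and wait longer next time.
            self.suspected.discard(peer)
            self.timeout[peer] *= self.backoff
        self.last_seen[peer] = now

    def check(self, now):
        """Suspect every peer whose heartbeat is overdue; return suspects."""
        for peer, seen in self.last_seen.items():
            if now - seen > self.timeout[peer]:
                self.suspected.add(peer)
        return set(self.suspected)


d = HeartbeatDetector(["a", "b"], timeout=1.0)
d.heartbeat("a", 0.5)
d.heartbeat("b", 0.5)
print(d.check(1.0))    # nobody overdue yet
print(d.check(2.0))    # both overdue, both suspected (possibly wrongly)
d.heartbeat("a", 2.1)  # "a" was only slow; its timeout doubles
print(d.check(4.0))    # only "b" is still suspected
```

A crashed peer never sends another heartbeat, so it stays suspected. A slow but correct peer is suspected only until its timeout has grown past its actual delay, which gives eventual accuracy only if delays are in fact eventually bounded.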

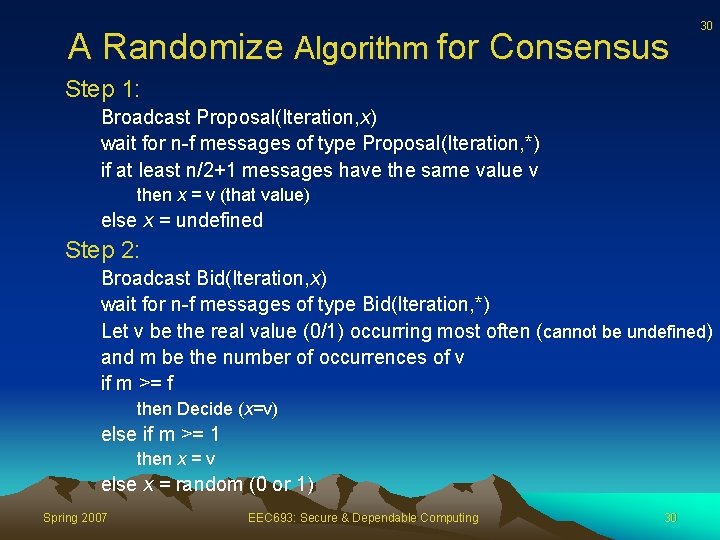

A Randomized Algorithm for Consensus
• Assumption
  – n: total number of processes
  – f: total number of faulty processes
  – n > 2f
• Algorithm

    Iteration = 0; x = initial value (0 or 1)
    Do Forever:
        Iteration = Iteration + 1
        Step 1
        Step 2

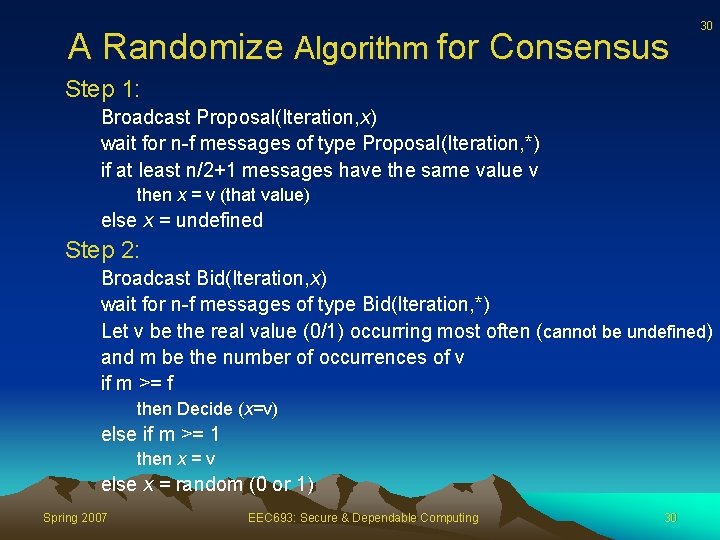

A Randomized Algorithm for Consensus

    Step 1:
        Broadcast Proposal(Iteration, x)
        wait for n-f messages of type Proposal(Iteration, *)
        if at least n/2+1 messages have the same value v
            then x = v (that value)
            else x = undefined
    Step 2:
        Broadcast Bid(Iteration, x)
        wait for n-f messages of type Bid(Iteration, *)
        Let v be the real value (0/1) occurring most often (cannot be undefined)
            and m be the number of occurrences of v
        if m >= f + 1 then Decide (x = v)
        else if m >= 1 then x = v
        else x = random (0 or 1)
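The two steps can be exercised with a small simulation. This is a sketch under simplifying assumptions: rounds run in lock step, crashed processes are down from the start, and asynchrony is modeled by letting each receiver see an arbitrary n - f of the messages sent by live processes. It decides on at least f + 1 matching bids, the threshold in Ben-Or's randomized protocol, which this algorithm appears to follow. The function name and its parameters are hypothetical.

```python
import random
from collections import Counter

UNDEFINED = None  # stands for the "undefined" proposal value


def randomized_consensus(initial, f, crashed=frozenset(), seed=0, max_rounds=10_000):
    """Simulate the two-step protocol; return ({process: decision}, rounds)."""
    n = len(initial)
    assert n > 2 * f and len(crashed) <= f
    rng = random.Random(seed)
    alive = [p for p in range(n) if p not in crashed]
    x = {p: initial[p] for p in alive}
    decided = {}

    def receive(messages):
        # Modeling assumption: an arbitrary n - f of the live messages arrive.
        return rng.sample(messages, n - f)

    for rnd in range(1, max_rounds + 1):
        # Step 1: exchange proposals; keep v only if a strict majority of
        # all n processes is known to back it.
        proposals = [x[p] for p in alive]
        bid = {}
        for p in alive:
            value, count = Counter(receive(proposals)).most_common(1)[0]
            bid[p] = value if count >= n // 2 + 1 else UNDEFINED

        # Step 2: exchange bids; decide, adopt the bid, or flip a coin.
        bids = [bid[p] for p in alive]
        for p in alive:
            real = Counter(b for b in receive(bids) if b is not UNDEFINED)
            if real:
                v, m = real.most_common(1)[0]
                x[p] = v
                if m >= f + 1:
                    decided.setdefault(p, v)
            else:
                x[p] = rng.randint(0, 1)

        if len(decided) == len(alive):
            return decided, rnd
    raise RuntimeError("no decision within max_rounds")


print(randomized_consensus([1, 1, 1, 1, 1], f=2, crashed={4}))
print(randomized_consensus([0, 1, 0, 1, 1], f=2, crashed={0}, seed=7))
```

Processes that have decided keep participating with their decided value, so the remaining live processes can reach the same decision in the following round.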

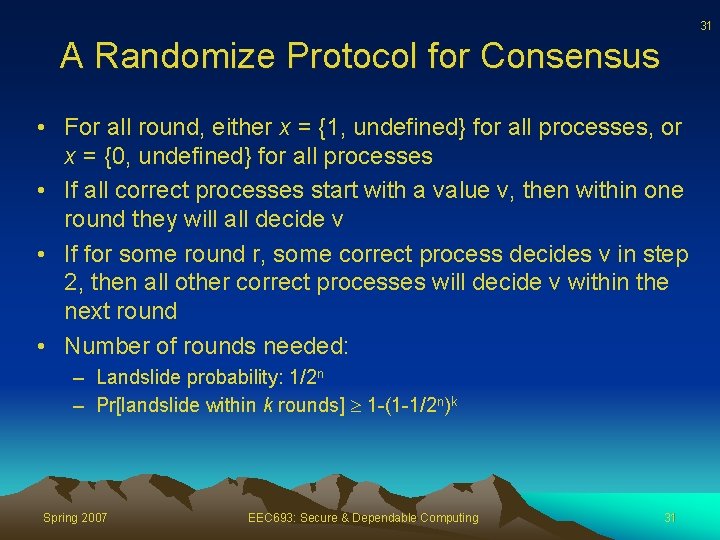

A Randomized Protocol for Consensus
• In every round, either x ∈ {1, undefined} for all processes, or x ∈ {0, undefined} for all processes
• If all correct processes start with a value v, then within one round they will all decide v
• If for some round r, some correct process decides v in step 2, then all other correct processes will decide v within the next round
• Number of rounds needed:
  – Landslide probability: 1/2^n
  – Pr[landslide within k rounds] ≥ 1 - (1 - 1/2^n)^k
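The round bound is easy to evaluate numerically. The sketch below reads "landslide" as all n coin flips landing on one given value in a round, so that each round succeeds independently with probability 1/2^n; that reading and the function names are assumptions of this example.

```python
import math


def landslide_within(n, k):
    """Lower bound on Pr[landslide within k rounds] for n processes."""
    p = 0.5 ** n                      # per-round landslide probability
    return 1.0 - (1.0 - p) ** k


def rounds_for(n, target):
    """Smallest k for which the bound reaches `target` (0 < target < 1)."""
    p = 0.5 ** n
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))


for n in (3, 5, 10):
    print(n, landslide_within(n, 10), rounds_for(n, 0.99))
```

The bound shows that the number of rounds needed grows exponentially in n in the worst case. Runs often finish much sooner, because a decision only needs enough processes to agree, not a unanimous coin flip.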