ECE 408 Fall 2015 Applied Parallel Programming Lecture

- Slides: 27

ECE 408 Fall 2015 Applied Parallel Programming
Lecture 19: GPU System Architecture

© David Kirk/NVIDIA and Wen-mei W. Hwu, 2007-2012, ECE 408/CS 483, ECE 498 AL, University of Illinois, Urbana-Champaign

Imaging for IoT

- Principles of a camera
- Design of a CMOS imaging system
- Mobile imaging in Android / iOS
- Image processing basics
- Image / video coding
- Computer vision basics
- 3D imaging
- Applications: robots, cars, drones, VR/AR, mobile devices, things
- Project: build something cool
- Looking for ~4 students to help me create this course
- Juniors/Seniors/MS students
  - CompE + DSP background

Project Timeline

- Today: project proposal (5 slides max / PPT)
- Week of Nov 1st: project review #1 with staff
- Week of Nov 16th: project review #2 with staff
- Week of Dec 7th: demo to staff
- Week of Dec 7th: poster session

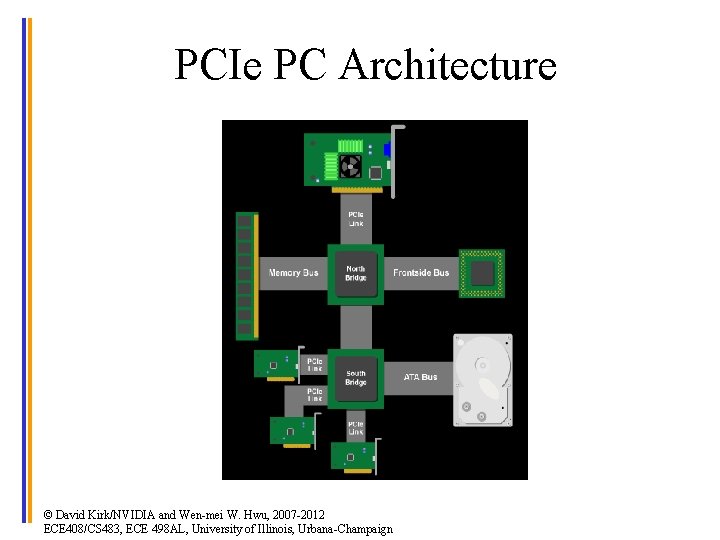

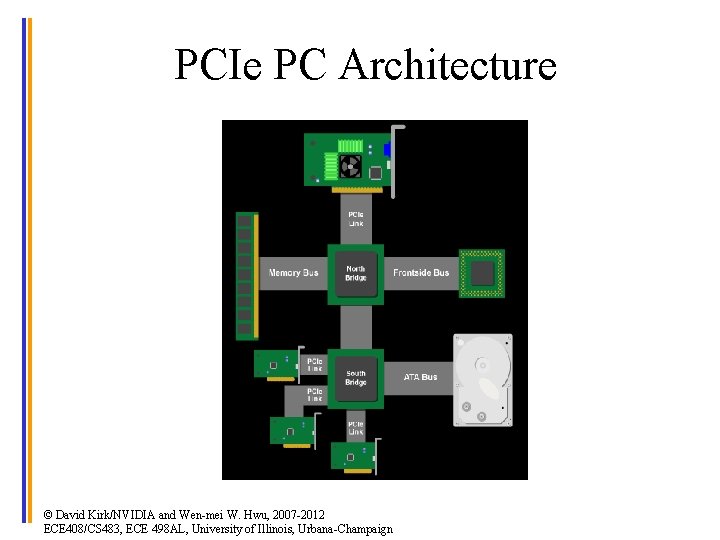

PCIe PC Architecture

Data Transfer Overheads

- The overheads between key components ultimately dictate system performance
  - Especially true for massively parallel systems processing massive amounts of data
  - Tricks like buffering, reordering, and caching can temporarily defy the rules in some cases
  - Ultimately, the performance falls back to what the data transfer architecture dictates

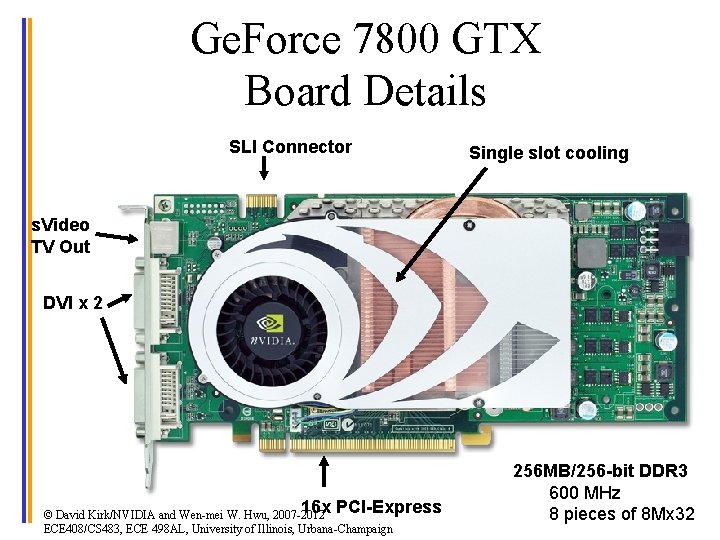

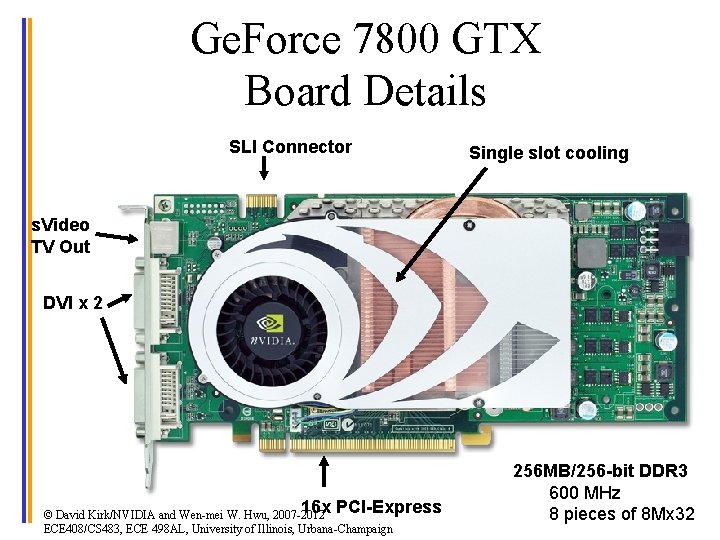

GeForce 7800 GTX Board Details

- SLI connector
- Single-slot cooling
- sVideo TV out
- DVI x 2
- 16x PCI-Express
- 256 MB / 256-bit DDR3, 600 MHz, 8 pieces of 8M x 32

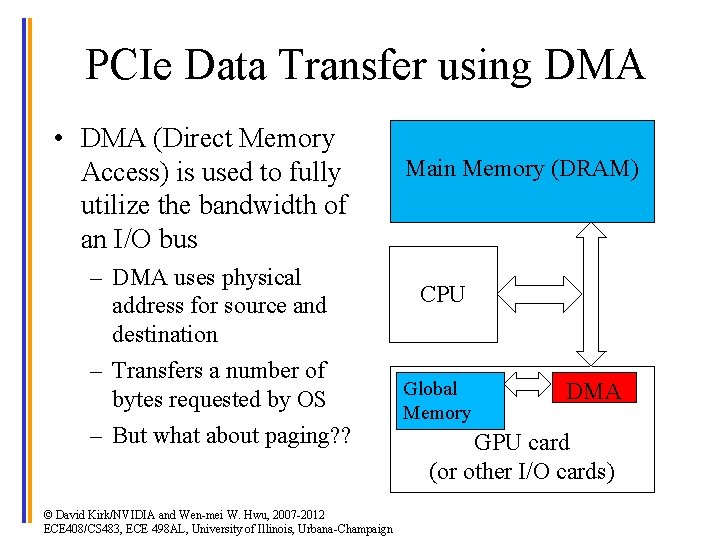

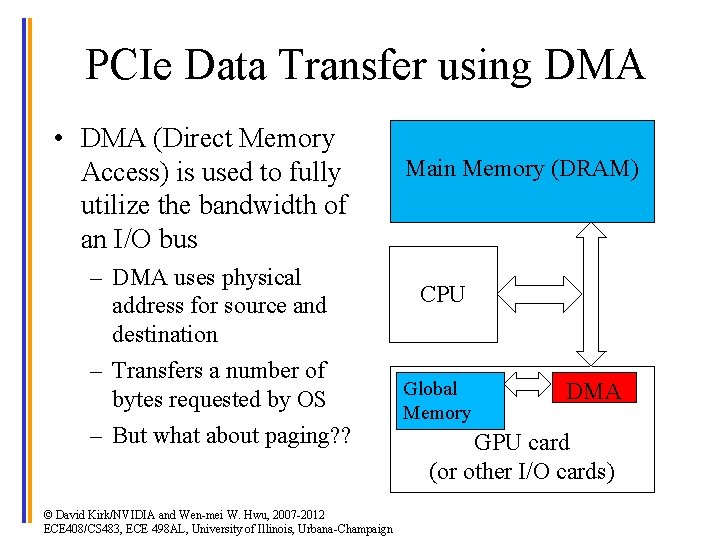

PCIe Data Transfer using DMA

- DMA (Direct Memory Access) is used to fully utilize the bandwidth of an I/O bus
  - DMA uses physical addresses for source and destination
  - Transfers the number of bytes requested by the OS
  - But what about paging?

Diagram labels: CPU, Main Memory (DRAM), DMA, Global Memory, GPU card (or other I/O cards)

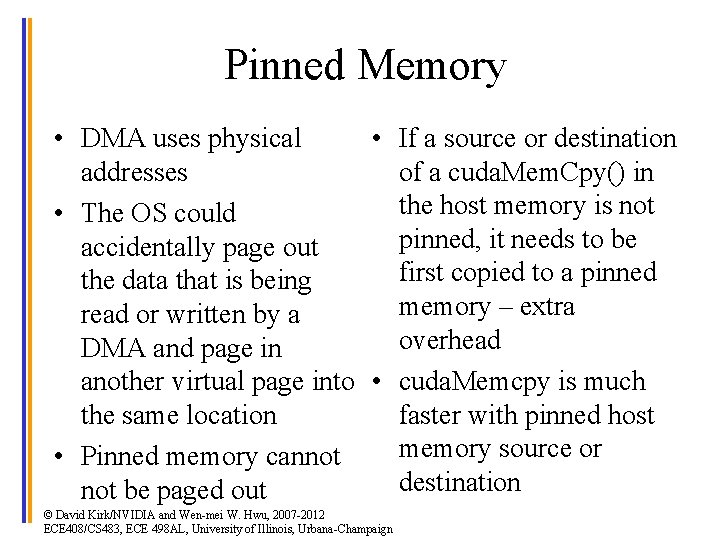

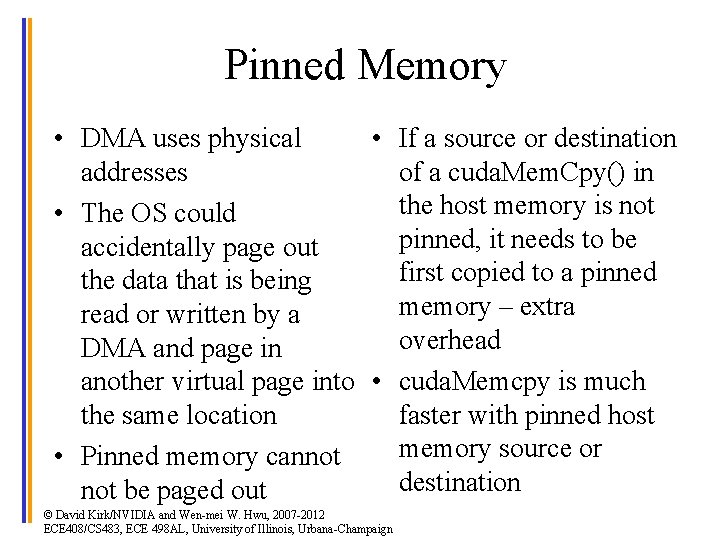

Pinned Memory

- DMA uses physical addresses
- The OS could accidentally page out the data that is being read or written by a DMA and page in another virtual page into the same location
- Pinned memory cannot be paged out
- If a source or destination of a cudaMemcpy() in the host memory is not pinned, it needs to be first copied to a pinned memory, which adds overhead
- cudaMemcpy is much faster with a pinned host memory source or destination

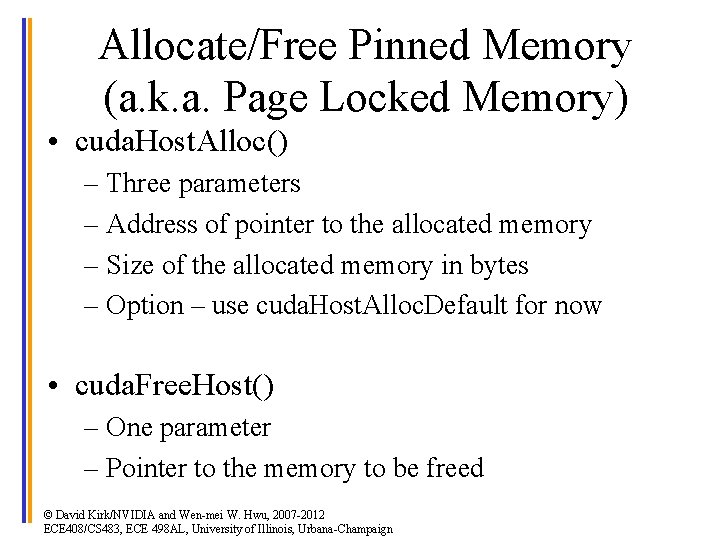

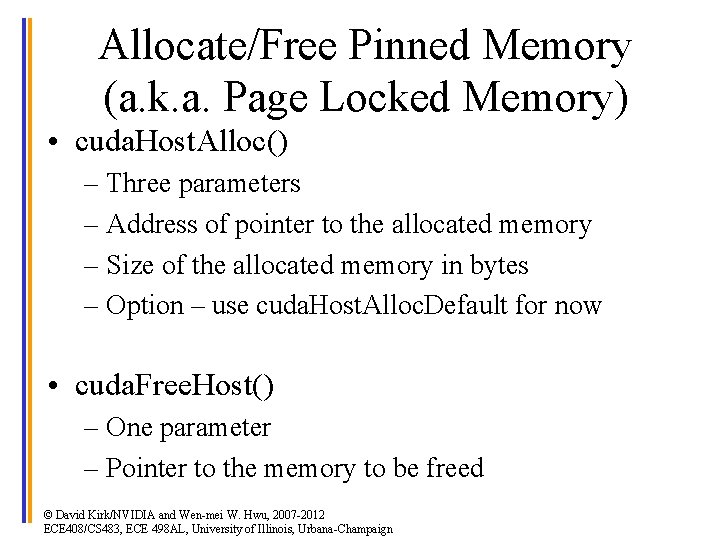

Allocate/Free Pinned Memory (a.k.a. Page-Locked Memory)

- cudaHostAlloc()
  - Three parameters
  - Address of pointer to the allocated memory
  - Size of the allocated memory in bytes
  - Option: use cudaHostAllocDefault for now
- cudaFreeHost()
  - One parameter
  - Pointer to the memory to be freed
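The two calls above can be sketched as a minimal, self-contained program. The buffer size, the `CHECK` error-handling macro, and the variable names are illustrative assumptions, not part of the lecture.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t e_ = (call);                                        \
        if (e_ != cudaSuccess) {                                        \
            fprintf(stderr, "CUDA error %s at %s:%d\n",                 \
                    cudaGetErrorString(e_), __FILE__, __LINE__);        \
            exit(EXIT_FAILURE);                                         \
        }                                                               \
    } while (0)

int main() {
    const size_t n = 1 << 20;                 // illustrative element count
    const size_t bytes = n * sizeof(float);

    // Parameters: address of the pointer, size in bytes, option flag.
    float *h_A = NULL;
    CHECK(cudaHostAlloc((void **)&h_A, bytes, cudaHostAllocDefault));

    // The pinned buffer is used like memory returned by malloc().
    for (size_t i = 0; i < n; ++i) h_A[i] = (float)i;

    float *d_A = NULL;
    CHECK(cudaMalloc((void **)&d_A, bytes));
    CHECK(cudaMemcpy(d_A, h_A, bytes, cudaMemcpyHostToDevice));

    CHECK(cudaFree(d_A));
    CHECK(cudaFreeHost(h_A));                 // one parameter: the pointer to free
    printf("pinned buffer of %zu bytes allocated, copied, and freed\n", bytes);
    return 0;
}
```

Pinned memory must be released with cudaFreeHost(), not free().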

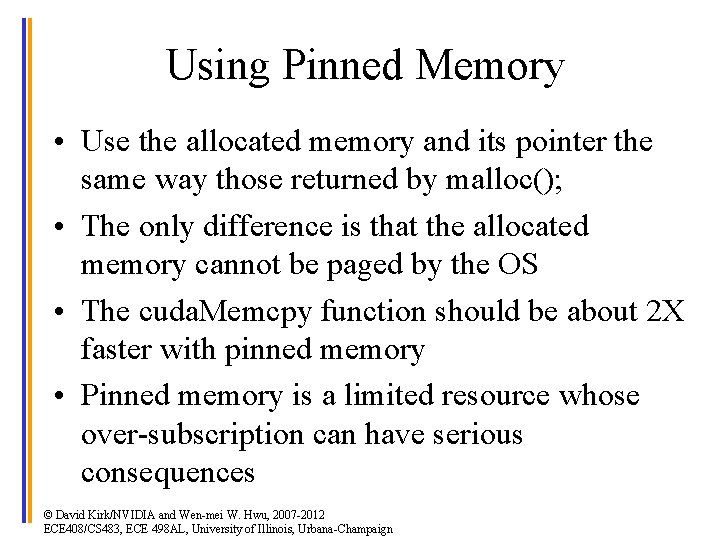

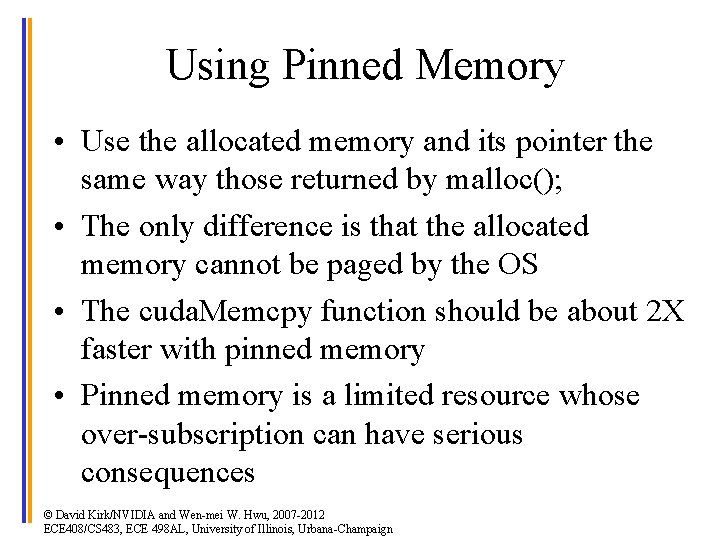

Using Pinned Memory

- Use the allocated memory and its pointer the same way as those returned by malloc()
- The only difference is that the allocated memory cannot be paged out by the OS
- The cudaMemcpy function should be about 2X faster with pinned memory
- Pinned memory is a limited resource whose over-subscription can have serious consequences
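One way to check the speed difference on a particular machine is to time the same host-to-device copy from a pageable buffer and from a pinned buffer. The sketch below does this with CUDA events; the 64 MiB size, the `CHECK` macro, and the `timeCopyMs` helper are assumptions made for illustration, and the measured ratio depends on the system.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t e_ = (call);                                        \
        if (e_ != cudaSuccess) {                                        \
            fprintf(stderr, "CUDA error %s at %s:%d\n",                 \
                    cudaGetErrorString(e_), __FILE__, __LINE__);        \
            exit(EXIT_FAILURE);                                         \
        }                                                               \
    } while (0)

// Hypothetical helper: time one host-to-device cudaMemcpy in milliseconds.
static float timeCopyMs(float *d_dst, const float *h_src, size_t bytes) {
    cudaEvent_t start, stop;
    CHECK(cudaEventCreate(&start));
    CHECK(cudaEventCreate(&stop));
    CHECK(cudaEventRecord(start, 0));
    CHECK(cudaMemcpy(d_dst, h_src, bytes, cudaMemcpyHostToDevice));
    CHECK(cudaEventRecord(stop, 0));
    CHECK(cudaEventSynchronize(stop));
    float ms = 0.0f;
    CHECK(cudaEventElapsedTime(&ms, start, stop));
    CHECK(cudaEventDestroy(start));
    CHECK(cudaEventDestroy(stop));
    return ms;
}

int main() {
    const size_t bytes = (size_t)64 << 20;    // 64 MiB, an illustrative size

    float *h_pageable = (float *)malloc(bytes);
    if (h_pageable == NULL) {
        fprintf(stderr, "malloc failed\n");
        return EXIT_FAILURE;
    }
    float *h_pinned = NULL;
    float *d_buf = NULL;
    CHECK(cudaHostAlloc((void **)&h_pinned, bytes, cudaHostAllocDefault));
    CHECK(cudaMalloc((void **)&d_buf, bytes));

    // Touch both buffers so the pages exist before timing.
    memset(h_pageable, 0, bytes);
    memset(h_pinned, 0, bytes);

    timeCopyMs(d_buf, h_pinned, bytes);       // warm-up copy, result ignored
    float msPageable = timeCopyMs(d_buf, h_pageable, bytes);
    float msPinned = timeCopyMs(d_buf, h_pinned, bytes);
    printf("pageable: %.3f ms, pinned: %.3f ms\n", msPageable, msPinned);

    CHECK(cudaFree(d_buf));
    CHECK(cudaFreeHost(h_pinned));
    free(h_pageable);
    return 0;
}
```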

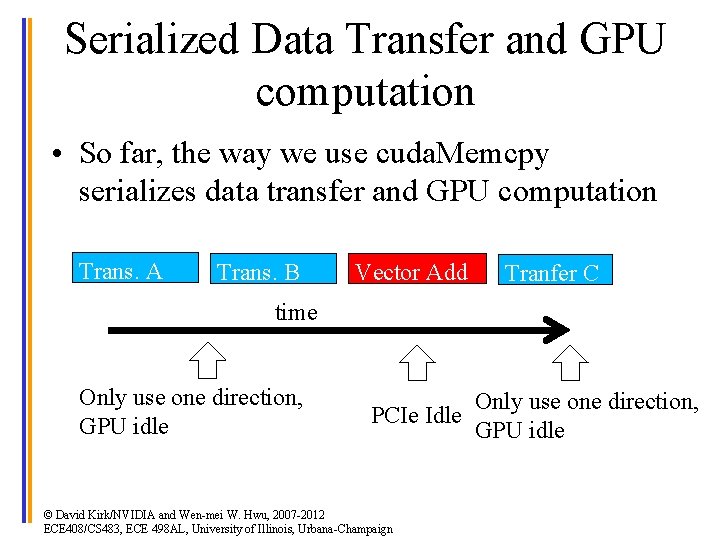

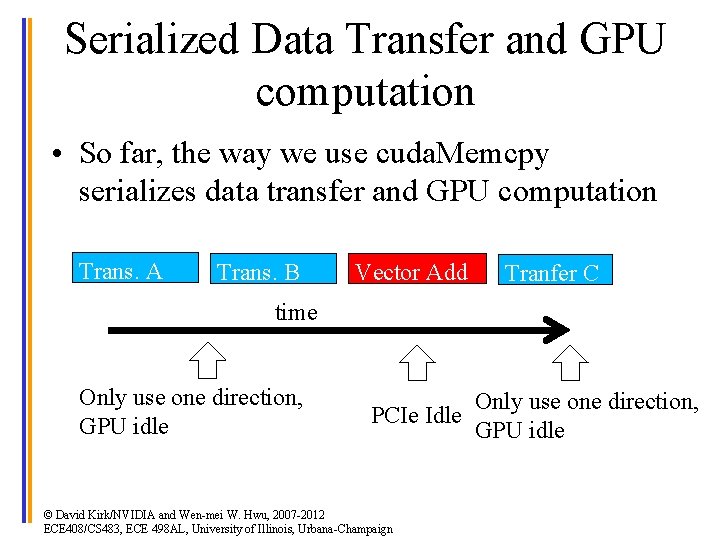

Serialized Data Transfer and GPU Computation

- So far, the way we use cudaMemcpy serializes data transfer and GPU computation

Timeline (left to right): Trans. A, Trans. B, Vector Add, Transfer C. During the transfers only one PCIe direction is used and the GPU is idle; during Vector Add, PCIe is idle.
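For reference, the serialized pattern looks roughly like the sketch below: each step waits for the previous one to finish. The kernel body, the vector length, and the `CHECK` macro are assumptions chosen for illustration.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t e_ = (call);                                        \
        if (e_ != cudaSuccess) {                                        \
            fprintf(stderr, "CUDA error %s at %s:%d\n",                 \
                    cudaGetErrorString(e_), __FILE__, __LINE__);        \
            exit(EXIT_FAILURE);                                         \
        }                                                               \
    } while (0)

__global__ void vecAdd(const float *A, const float *B, float *C, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) C[i] = A[i] + B[i];
}

int main() {
    const int n = 1 << 20;                    // illustrative vector length
    const size_t bytes = n * sizeof(float);

    float *h_A = (float *)malloc(bytes);
    float *h_B = (float *)malloc(bytes);
    float *h_C = (float *)malloc(bytes);
    if (!h_A || !h_B || !h_C) return EXIT_FAILURE;
    for (int i = 0; i < n; ++i) { h_A[i] = (float)i; h_B[i] = 2.0f * i; }

    float *d_A, *d_B, *d_C;
    CHECK(cudaMalloc((void **)&d_A, bytes));
    CHECK(cudaMalloc((void **)&d_B, bytes));
    CHECK(cudaMalloc((void **)&d_C, bytes));

    // Trans. A, Trans. B: the GPU compute units are idle during these copies.
    CHECK(cudaMemcpy(d_A, h_A, bytes, cudaMemcpyHostToDevice));
    CHECK(cudaMemcpy(d_B, h_B, bytes, cudaMemcpyHostToDevice));

    // Vector Add: PCIe is idle while the kernel runs.
    vecAdd<<<(n + 255) / 256, 256>>>(d_A, d_B, d_C, n);
    CHECK(cudaGetLastError());

    // Transfer C: this copy starts only after the kernel has finished.
    CHECK(cudaMemcpy(h_C, d_C, bytes, cudaMemcpyDeviceToHost));

    int errors = 0;
    for (int i = 0; i < n; ++i)
        if (h_C[i] != 3.0f * i) ++errors;
    printf("mismatches: %d\n", errors);

    CHECK(cudaFree(d_A)); CHECK(cudaFree(d_B)); CHECK(cudaFree(d_C));
    free(h_A); free(h_B); free(h_C);
    return errors == 0 ? 0 : EXIT_FAILURE;
}
```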

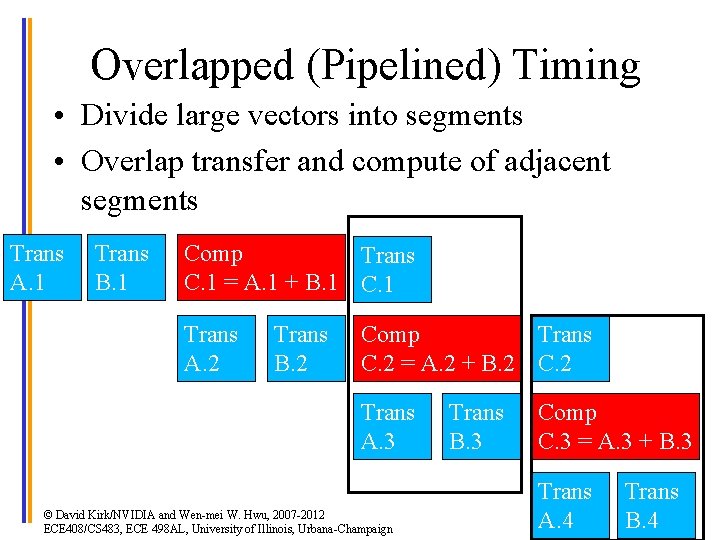

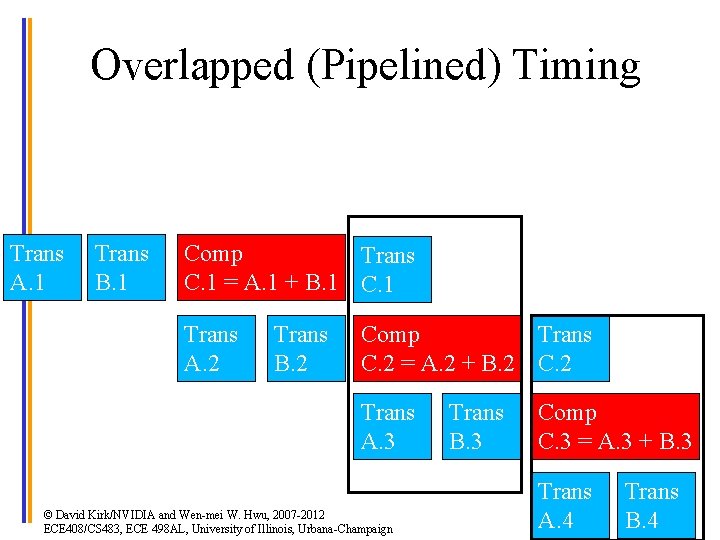

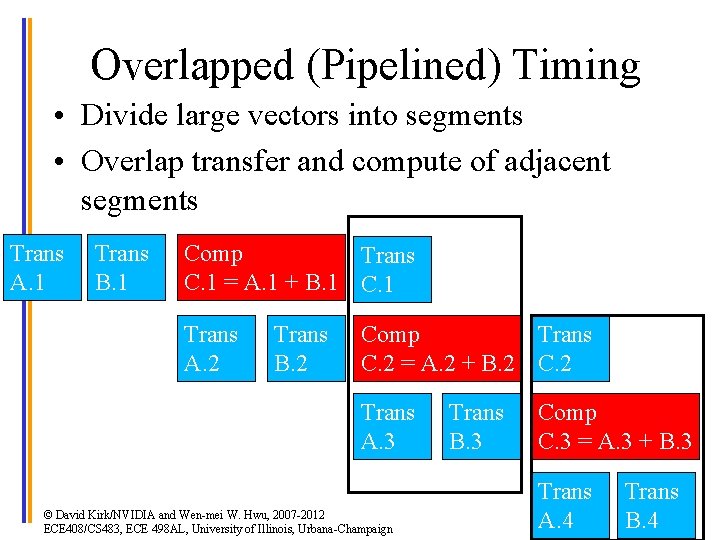

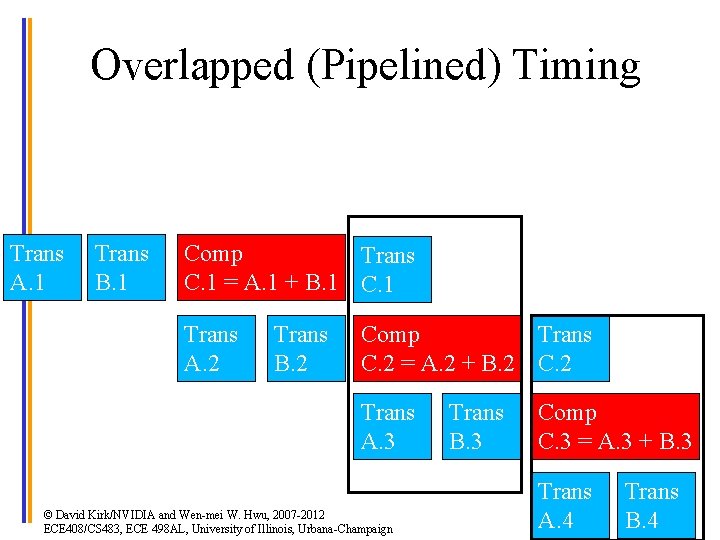

Overlapped (Pipelined) Timing

- Divide large vectors into segments
- Overlap transfer and compute of adjacent segments

Timing diagram (staggered rows, one per segment):

- Segment 1: Trans A.1, Trans B.1, Comp C.1 = A.1 + B.1, Trans C.1
- Segment 2: Trans A.2, Trans B.2, Comp C.2 = A.2 + B.2, Trans C.2
- Segment 3: Trans A.3, Trans B.3, Comp C.3 = A.3 + B.3
- Segment 4: Trans A.4, Trans B.4
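A rough, idealized model of the potential gain can be written down under stated assumptions; it is a sketch, not a result from the lecture. Suppose the vectors are split into $N$ equal segments and that, per segment, the input transfers of A and B together take $t_{in}$, the kernel takes $t_{comp}$, and the output transfer of C takes $t_{out}$ (all three symbols are introduced here for illustration). Assume also that the three stages of different segments can overlap completely, which requires hardware able to copy in both directions while a kernel runs.

```latex
\begin{align*}
T_{\text{serial}}    &= N \,\bigl(t_{in} + t_{comp} + t_{out}\bigr) \\
T_{\text{pipelined}} &\approx \bigl(t_{in} + t_{comp} + t_{out}\bigr)
                       + (N - 1)\,\max\bigl(t_{in},\, t_{comp},\, t_{out}\bigr)
\end{align*}
```

Under these assumptions, for large $N$ the pipelined time is dominated by the slowest of the three stages, so overlap helps most when transfer and compute times are comparable.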

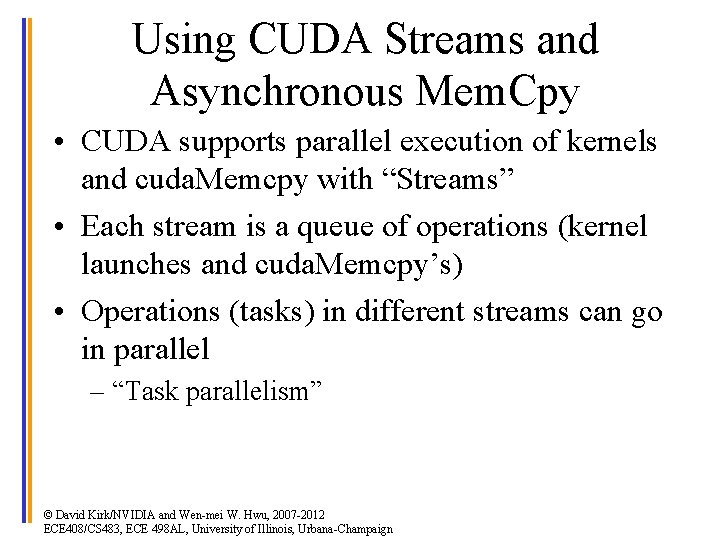

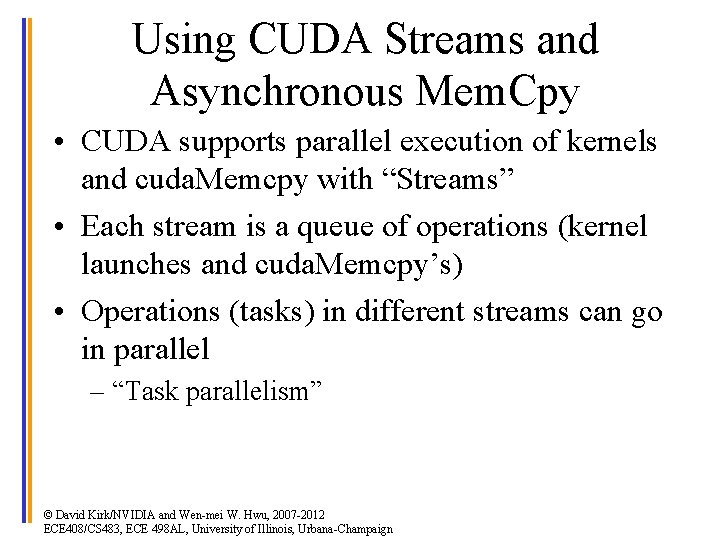

Using CUDA Streams and Asynchronous MemCpy

- CUDA supports parallel execution of kernels and cudaMemcpy with “Streams”
- Each stream is a queue of operations (kernel launches and cudaMemcpy calls)
- Operations (tasks) in different streams can go in parallel
  - “Task parallelism”

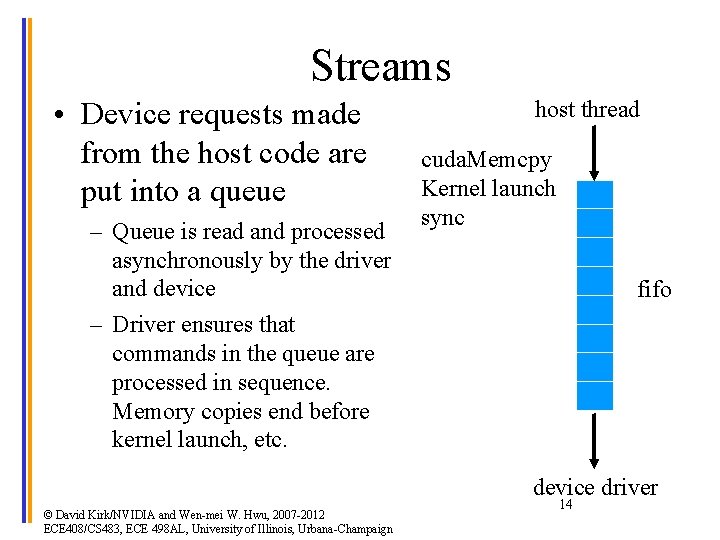

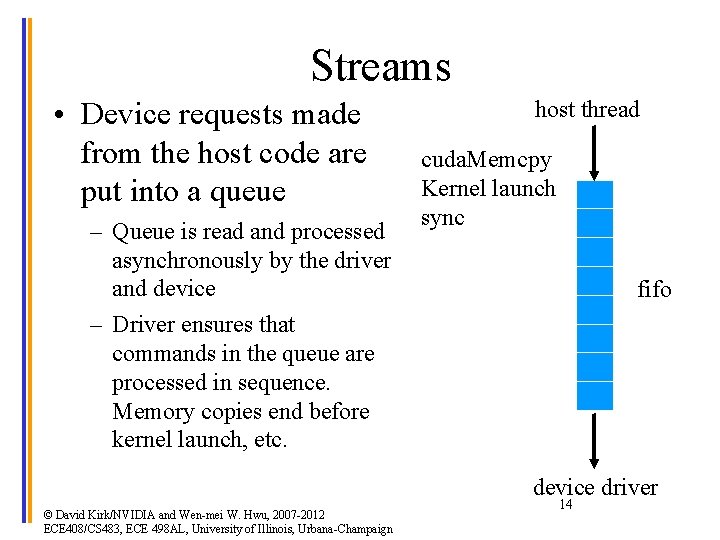

Streams

- Device requests made from the host code are put into a queue
  - The queue is read and processed asynchronously by the driver and device
  - The driver ensures that commands in the queue are processed in sequence: memory copies end before kernel launch, etc.

Diagram labels: host thread, cudaMemcpy, kernel launch, sync, FIFO, device driver

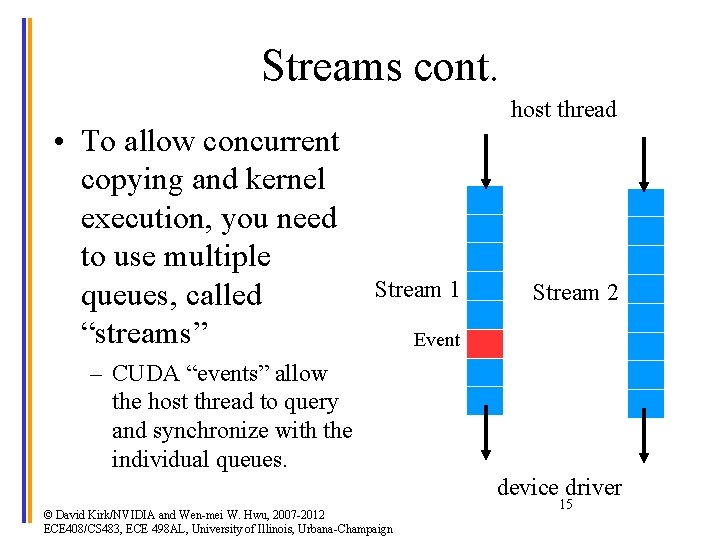

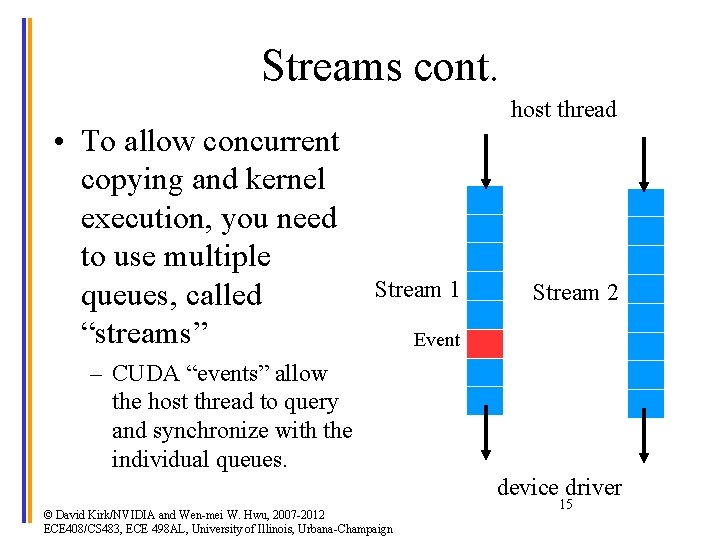

Streams cont.

- To allow concurrent copying and kernel execution, you need to use multiple queues, called “streams”
  - CUDA “events” allow the host thread to query and synchronize with the individual queues

Diagram labels: host thread, Stream 1, Stream 2, Event, device driver
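A minimal sketch of how the host can query and synchronize with one stream through an event is shown below. The `scale` kernel, the buffer size, and the `CHECK` macro are hypothetical and exist only to give the stream some work.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t e_ = (call);                                        \
        if (e_ != cudaSuccess) {                                        \
            fprintf(stderr, "CUDA error %s at %s:%d\n",                 \
                    cudaGetErrorString(e_), __FILE__, __LINE__);        \
            exit(EXIT_FAILURE);                                         \
        }                                                               \
    } while (0)

// Hypothetical kernel used only to keep the stream busy.
__global__ void scale(float *x, int n, float s) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] *= s;
}

int main() {
    const int n = 1 << 20;
    const size_t bytes = n * sizeof(float);

    float *h_x = NULL, *d_x = NULL;
    CHECK(cudaHostAlloc((void **)&h_x, bytes, cudaHostAllocDefault));
    CHECK(cudaMalloc((void **)&d_x, bytes));
    for (int i = 0; i < n; ++i) h_x[i] = 1.0f;

    cudaStream_t stream1;
    cudaEvent_t done;
    CHECK(cudaStreamCreate(&stream1));
    CHECK(cudaEventCreate(&done));

    // Queue copy-in, kernel, and copy-out in stream1, then mark the end with an event.
    CHECK(cudaMemcpyAsync(d_x, h_x, bytes, cudaMemcpyHostToDevice, stream1));
    scale<<<(n + 255) / 256, 256, 0, stream1>>>(d_x, n, 2.0f);
    CHECK(cudaGetLastError());
    CHECK(cudaMemcpyAsync(h_x, d_x, bytes, cudaMemcpyDeviceToHost, stream1));
    CHECK(cudaEventRecord(done, stream1));

    // Query: returns immediately and reports whether stream1 has reached the event.
    cudaError_t status = cudaEventQuery(done);
    if (status == cudaErrorNotReady) {
        printf("stream1 is still working\n");
    } else {
        CHECK(status);
        printf("stream1 already finished\n");
    }

    // Synchronize: block the host thread until the event has completed.
    CHECK(cudaEventSynchronize(done));
    printf("h_x[0] = %.1f\n", h_x[0]);

    CHECK(cudaEventDestroy(done));
    CHECK(cudaStreamDestroy(stream1));
    CHECK(cudaFree(d_x));
    CHECK(cudaFreeHost(h_x));
    return 0;
}
```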

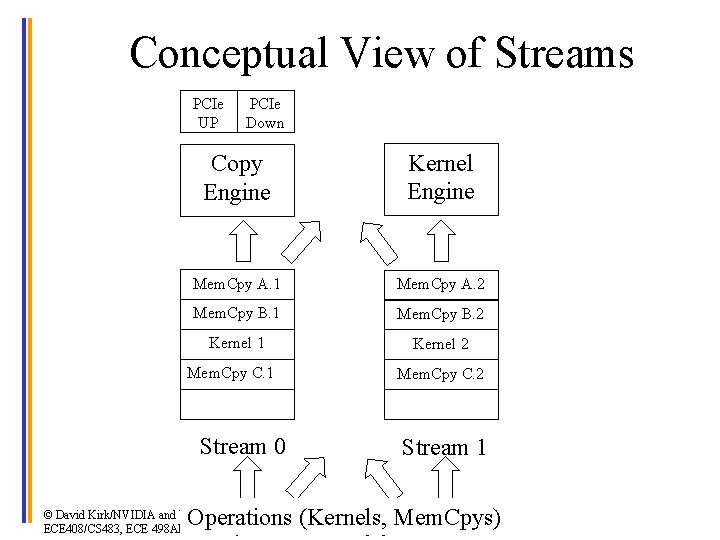

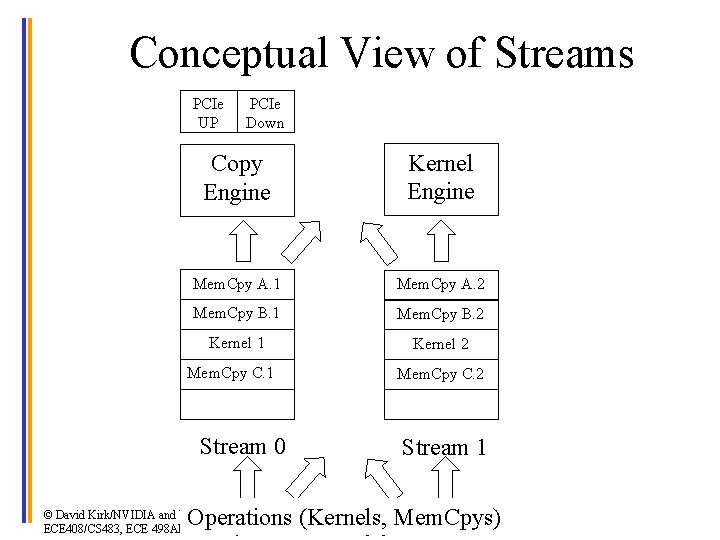

Conceptual View of Streams

Diagram: operations (kernels, MemCpys) are queued per stream and feed the Copy Engine (PCIe up, PCIe down) and the Kernel Engine.

- Stream 0: MemCpy A.1, MemCpy B.1, Kernel 1, MemCpy C.1
- Stream 1: MemCpy A.2, MemCpy B.2, Kernel 2, MemCpy C.2
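How many copy engines a given device exposes, and whether it can run kernels concurrently, can be read from the device properties. The short sketch below prints two such fields; the `CHECK` macro is a hypothetical helper, and the interpretation in the comments is a simplification.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t e_ = (call);                                        \
        if (e_ != cudaSuccess) {                                        \
            fprintf(stderr, "CUDA error %s at %s:%d\n",                 \
                    cudaGetErrorString(e_), __FILE__, __LINE__);        \
            exit(EXIT_FAILURE);                                         \
        }                                                               \
    } while (0)

int main() {
    int dev = 0;
    cudaDeviceProp prop;
    CHECK(cudaGetDevice(&dev));
    CHECK(cudaGetDeviceProperties(&prop, dev));

    // asyncEngineCount: number of asynchronous copy engines on the device.
    //   0 means copies cannot overlap kernel execution; 2 or more typically
    //   allows copies in both directions to proceed at the same time.
    // concurrentKernels: nonzero if kernels from different streams may overlap.
    printf("device %d (%s): asyncEngineCount = %d, concurrentKernels = %d\n",
           dev, prop.name, prop.asyncEngineCount, prop.concurrentKernels);
    return 0;
}
```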

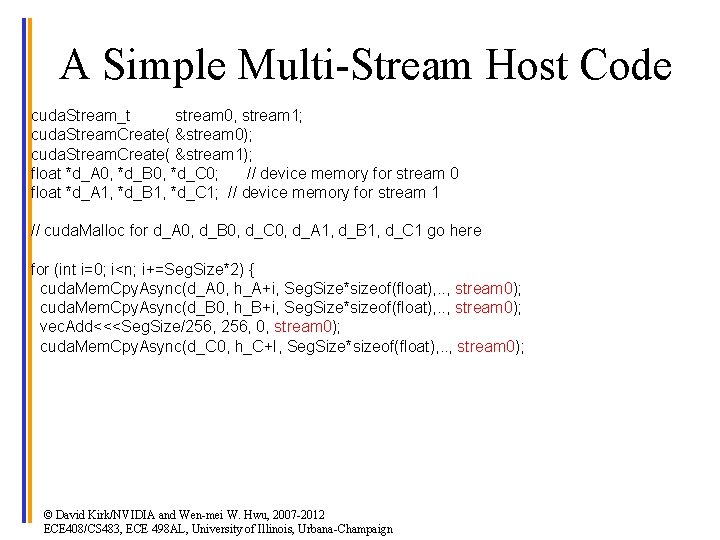

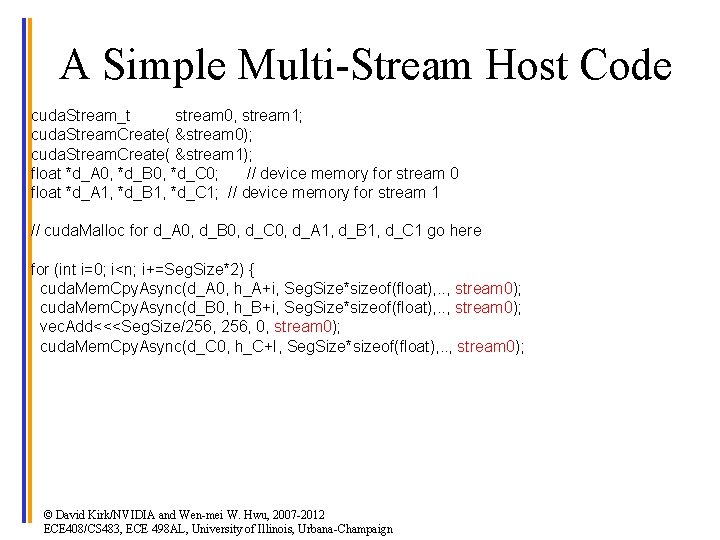

A Simple Multi-Stream Host Code

    cudaStream_t stream0, stream1;
    cudaStreamCreate(&stream0);
    cudaStreamCreate(&stream1);
    float *d_A0, *d_B0, *d_C0; // device memory for stream 0
    float *d_A1, *d_B1, *d_C1; // device memory for stream 1
    // cudaMalloc for d_A0, d_B0, d_C0, d_A1, d_B1, d_C1 go here
    for (int i = 0; i < n; i += SegSize * 2) {
        cudaMemcpyAsync(d_A0, h_A + i, SegSize * sizeof(float), .., stream0);
        cudaMemcpyAsync(d_B0, h_B + i, SegSize * sizeof(float), .., stream0);
        vecAdd<<<SegSize / 256, 256, 0, stream0>>>(d_A0, d_B0, …);
        // destination first: copy the result from device d_C0 back to host h_C
        cudaMemcpyAsync(h_C + i, d_C0, SegSize * sizeof(float), .., stream0);
        // the loop body continues on the next slide

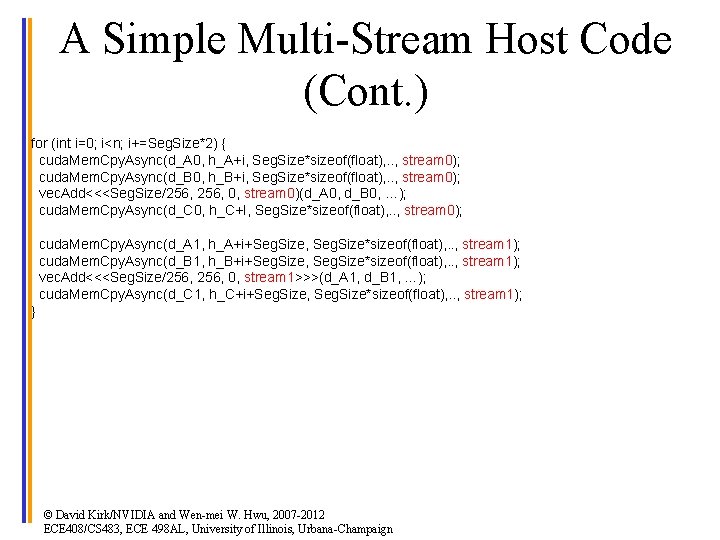

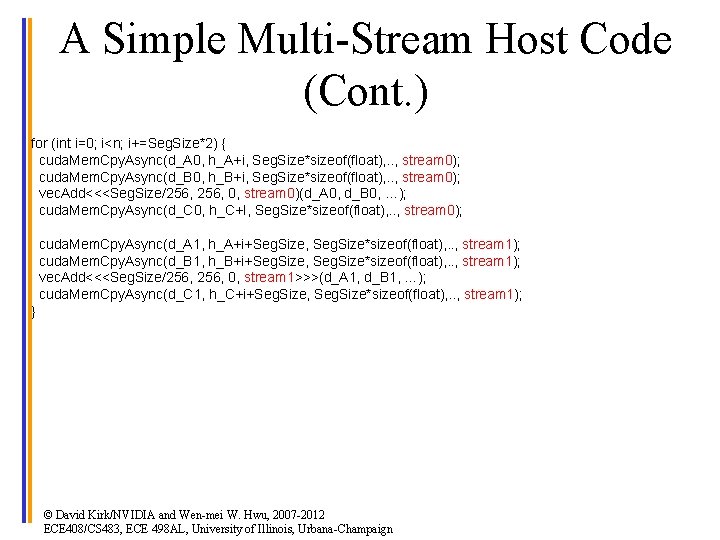

A Simple Multi-Stream Host Code (Cont.)

    for (int i = 0; i < n; i += SegSize * 2) {
        // segment i: all operations issued to stream 0
        cudaMemcpyAsync(d_A0, h_A + i, SegSize * sizeof(float), .., stream0);
        cudaMemcpyAsync(d_B0, h_B + i, SegSize * sizeof(float), .., stream0);
        vecAdd<<<SegSize / 256, 256, 0, stream0>>>(d_A0, d_B0, …);
        cudaMemcpyAsync(h_C + i, d_C0, SegSize * sizeof(float), .., stream0);
        // segment i + SegSize: all operations issued to stream 1
        cudaMemcpyAsync(d_A1, h_A + i + SegSize, SegSize * sizeof(float), .., stream1);
        cudaMemcpyAsync(d_B1, h_B + i + SegSize, SegSize * sizeof(float), .., stream1);
        vecAdd<<<SegSize / 256, 256, 0, stream1>>>(d_A1, d_B1, …);
        cudaMemcpyAsync(h_C + i + SegSize, d_C1, SegSize * sizeof(float), .., stream1);
    }

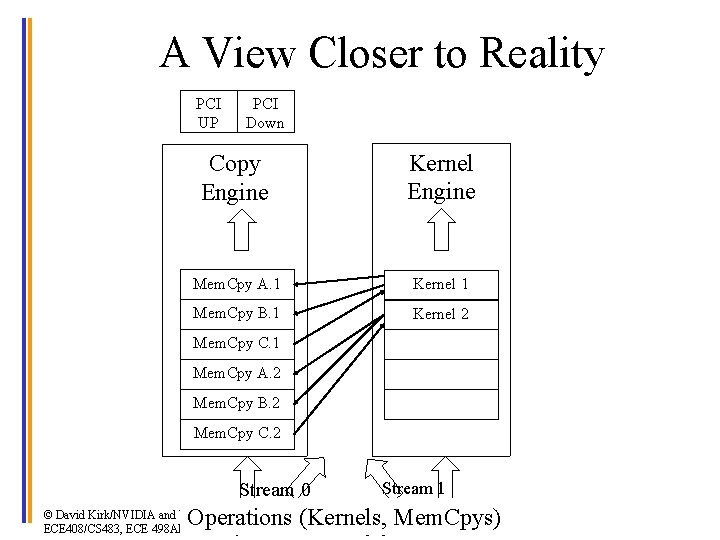

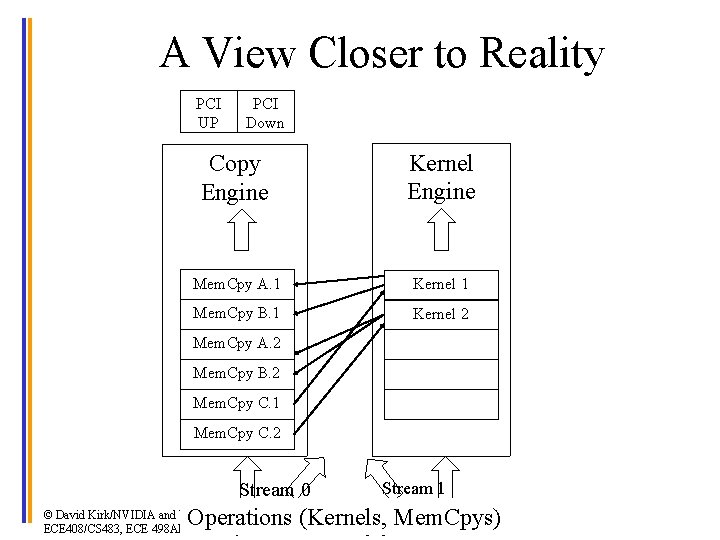

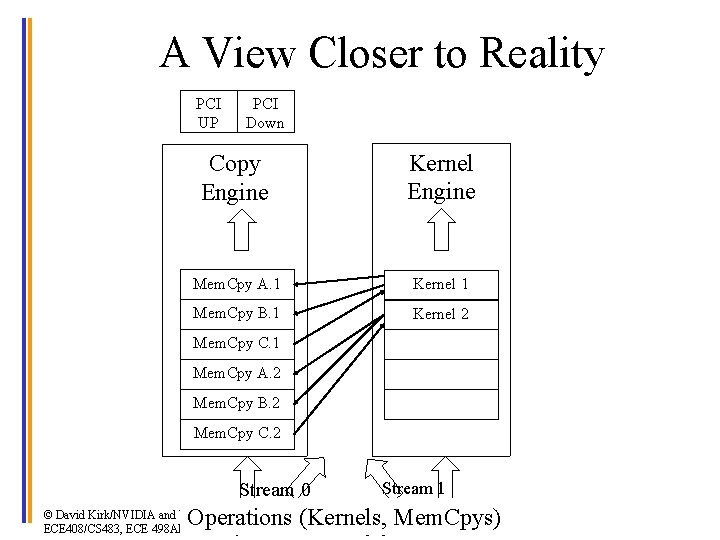

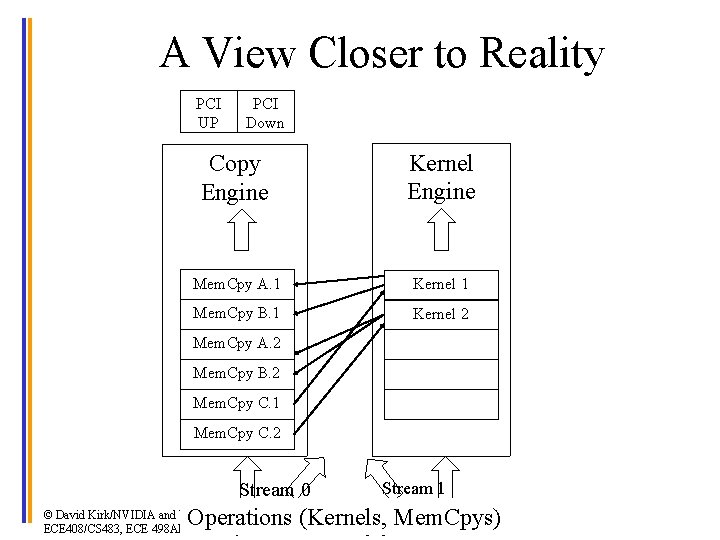

A View Closer to Reality

Diagram: operations (kernels, MemCpys) from Stream 0 and Stream 1 are merged into one queue per engine.

- Copy Engine queue (PCIe up, PCIe down): MemCpy A.1, MemCpy B.1, MemCpy C.1, MemCpy A.2, MemCpy B.2, MemCpy C.2
- Kernel Engine queue: Kernel 1, Kernel 2

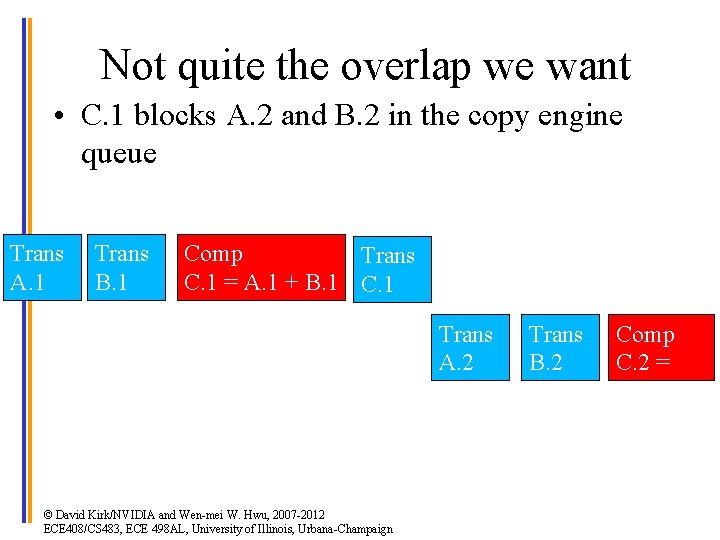

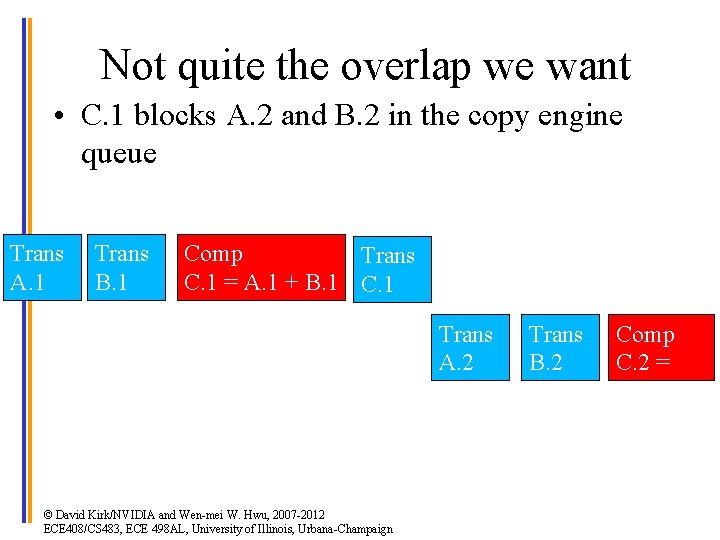

Not quite the overlap we want

- C.1 blocks A.2 and B.2 in the copy engine queue

Timing diagram: Trans A.1, Trans B.1, Comp C.1 = A.1 + B.1, Trans C.1, Trans A.2, Trans B.2, Comp C.2 = A.2 + B.2

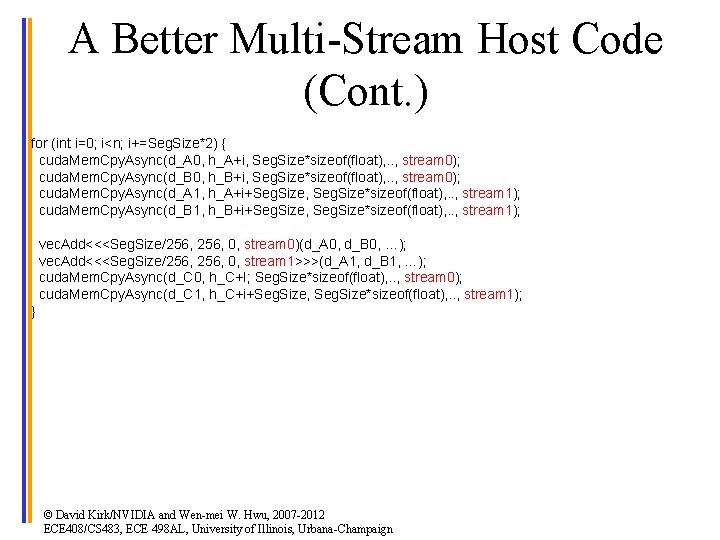

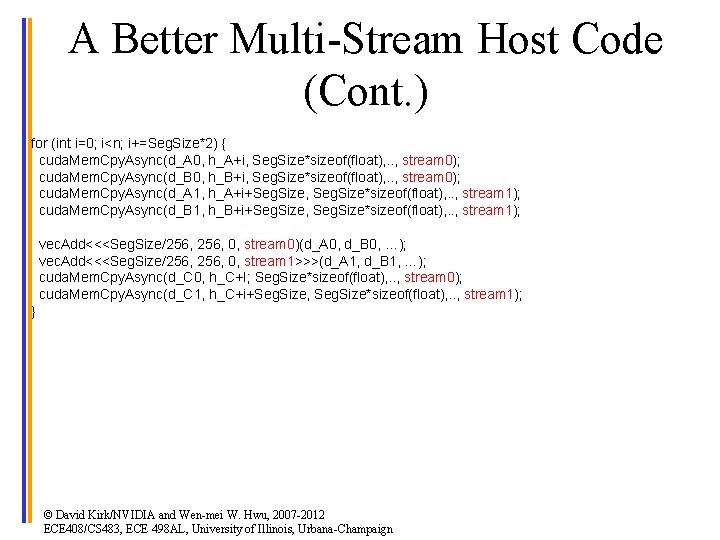

A Better Multi-Stream Host Code (Cont.)

    for (int i = 0; i < n; i += SegSize * 2) {
        // issue the input copies of both segments first
        cudaMemcpyAsync(d_A0, h_A + i, SegSize * sizeof(float), .., stream0);
        cudaMemcpyAsync(d_B0, h_B + i, SegSize * sizeof(float), .., stream0);
        cudaMemcpyAsync(d_A1, h_A + i + SegSize, SegSize * sizeof(float), .., stream1);
        cudaMemcpyAsync(d_B1, h_B + i + SegSize, SegSize * sizeof(float), .., stream1);
        // then both kernels
        vecAdd<<<SegSize / 256, 256, 0, stream0>>>(d_A0, d_B0, …);
        vecAdd<<<SegSize / 256, 256, 0, stream1>>>(d_A1, d_B1, …);
        // then both output copies (destination first: device d_C back to host h_C)
        cudaMemcpyAsync(h_C + i, d_C0, SegSize * sizeof(float), .., stream0);
        cudaMemcpyAsync(h_C + i + SegSize, d_C1, SegSize * sizeof(float), .., stream1);
    }
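A complete, compilable version of this issue order might look like the sketch below. The elided pieces of the slide code are filled in here as assumptions: the copy directions, the kernel's third and fourth arguments, the value of `SegSize`, the pinned host buffers, and the `CHECK` macro are all illustrative choices rather than the lecture's exact code.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t e_ = (call);                                        \
        if (e_ != cudaSuccess) {                                        \
            fprintf(stderr, "CUDA error %s at %s:%d\n",                 \
                    cudaGetErrorString(e_), __FILE__, __LINE__);        \
            exit(EXIT_FAILURE);                                         \
        }                                                               \
    } while (0)

__global__ void vecAdd(const float *A, const float *B, float *C, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) C[i] = A[i] + B[i];
}

int main() {
    const int SegSize = 1 << 18;              // assumed segment size, multiple of 256
    const int n = 8 * SegSize;                // assumed length, multiple of 2 * SegSize
    const size_t segBytes = SegSize * sizeof(float);
    const size_t bytes = n * sizeof(float);

    // Asynchronous copies need pinned host memory to overlap with kernels.
    float *h_A, *h_B, *h_C;
    CHECK(cudaHostAlloc((void **)&h_A, bytes, cudaHostAllocDefault));
    CHECK(cudaHostAlloc((void **)&h_B, bytes, cudaHostAllocDefault));
    CHECK(cudaHostAlloc((void **)&h_C, bytes, cudaHostAllocDefault));
    for (int i = 0; i < n; ++i) { h_A[i] = (float)i; h_B[i] = 2.0f * i; }

    cudaStream_t stream0, stream1;
    CHECK(cudaStreamCreate(&stream0));
    CHECK(cudaStreamCreate(&stream1));

    float *d_A0, *d_B0, *d_C0;                // device memory for stream 0
    float *d_A1, *d_B1, *d_C1;                // device memory for stream 1
    CHECK(cudaMalloc((void **)&d_A0, segBytes));
    CHECK(cudaMalloc((void **)&d_B0, segBytes));
    CHECK(cudaMalloc((void **)&d_C0, segBytes));
    CHECK(cudaMalloc((void **)&d_A1, segBytes));
    CHECK(cudaMalloc((void **)&d_B1, segBytes));
    CHECK(cudaMalloc((void **)&d_C1, segBytes));

    for (int i = 0; i < n; i += SegSize * 2) {
        // Input copies for both segments are queued before any output copy.
        CHECK(cudaMemcpyAsync(d_A0, h_A + i, segBytes, cudaMemcpyHostToDevice, stream0));
        CHECK(cudaMemcpyAsync(d_B0, h_B + i, segBytes, cudaMemcpyHostToDevice, stream0));
        CHECK(cudaMemcpyAsync(d_A1, h_A + i + SegSize, segBytes, cudaMemcpyHostToDevice, stream1));
        CHECK(cudaMemcpyAsync(d_B1, h_B + i + SegSize, segBytes, cudaMemcpyHostToDevice, stream1));

        vecAdd<<<SegSize / 256, 256, 0, stream0>>>(d_A0, d_B0, d_C0, SegSize);
        vecAdd<<<SegSize / 256, 256, 0, stream1>>>(d_A1, d_B1, d_C1, SegSize);
        CHECK(cudaGetLastError());

        CHECK(cudaMemcpyAsync(h_C + i, d_C0, segBytes, cudaMemcpyDeviceToHost, stream0));
        CHECK(cudaMemcpyAsync(h_C + i + SegSize, d_C1, segBytes, cudaMemcpyDeviceToHost, stream1));
    }
    CHECK(cudaDeviceSynchronize());           // wait for both streams to drain

    int errors = 0;
    for (int i = 0; i < n; ++i)
        if (h_C[i] != 3.0f * i) ++errors;
    printf("mismatches: %d\n", errors);

    CHECK(cudaFree(d_A0)); CHECK(cudaFree(d_B0)); CHECK(cudaFree(d_C0));
    CHECK(cudaFree(d_A1)); CHECK(cudaFree(d_B1)); CHECK(cudaFree(d_C1));
    CHECK(cudaStreamDestroy(stream0));
    CHECK(cudaStreamDestroy(stream1));
    CHECK(cudaFreeHost(h_A)); CHECK(cudaFreeHost(h_B)); CHECK(cudaFreeHost(h_C));
    return errors == 0 ? 0 : EXIT_FAILURE;
}
```

Reusing the per-stream device buffers across loop iterations is safe here because operations within one stream execute in issue order, so the next input copy into a buffer cannot start before the previous kernel and output copy in that stream have finished.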

A View Closer to Reality

Diagram: operations (kernels, MemCpys) from Stream 0 and Stream 1 are merged into one queue per engine.

- Copy Engine queue (PCIe up, PCIe down): MemCpy A.1, MemCpy B.1, MemCpy A.2, MemCpy B.2, MemCpy C.1, MemCpy C.2
- Kernel Engine queue: Kernel 1, Kernel 2

Overlapped (Pipelined) Timing

Timing diagram (staggered rows, one per segment):

- Segment 1: Trans A.1, Trans B.1, Comp C.1 = A.1 + B.1, Trans C.1
- Segment 2: Trans A.2, Trans B.2, Comp C.2 = A.2 + B.2, Trans C.2
- Segment 3: Trans A.3, Trans B.3, Comp C.3 = A.3 + B.3
- Segment 4: Trans A.4, Trans B.4

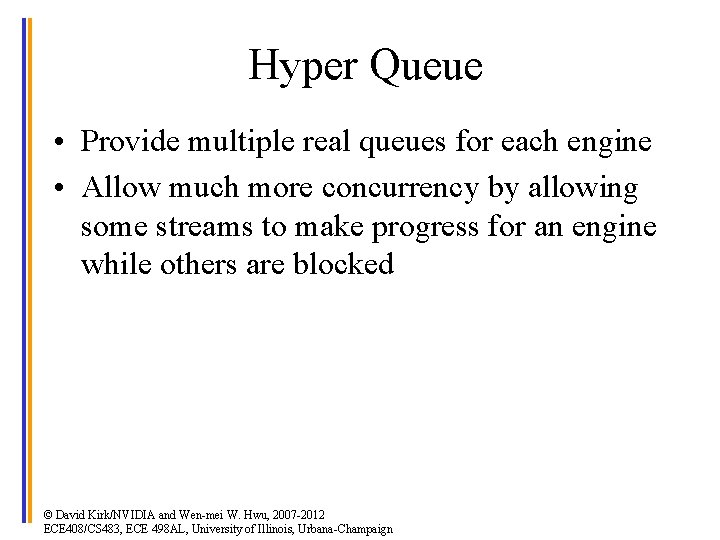

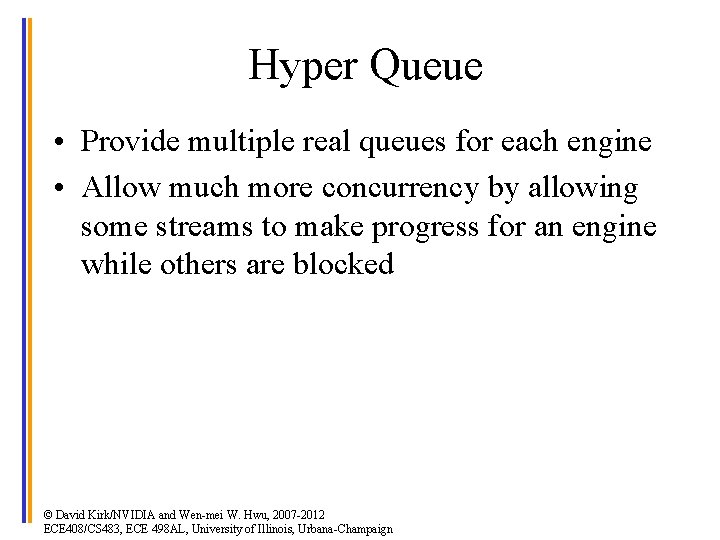

Hyper Queue

- Provides multiple real queues for each engine
- Allows much more concurrency by letting some streams make progress on an engine while others are blocked

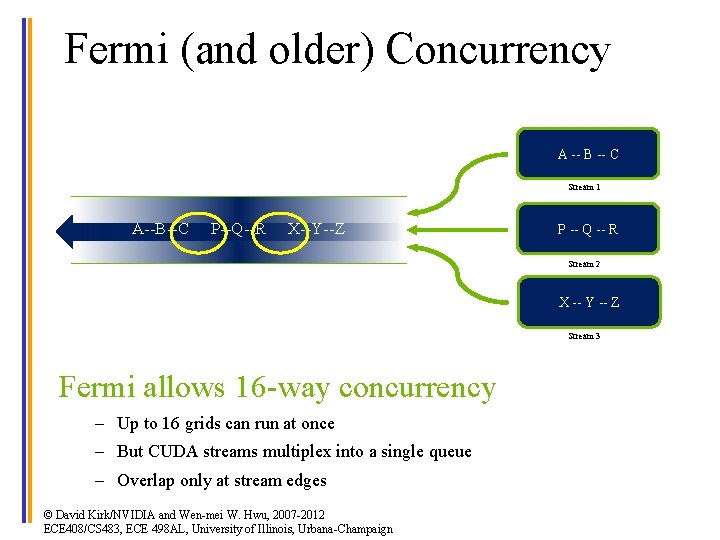

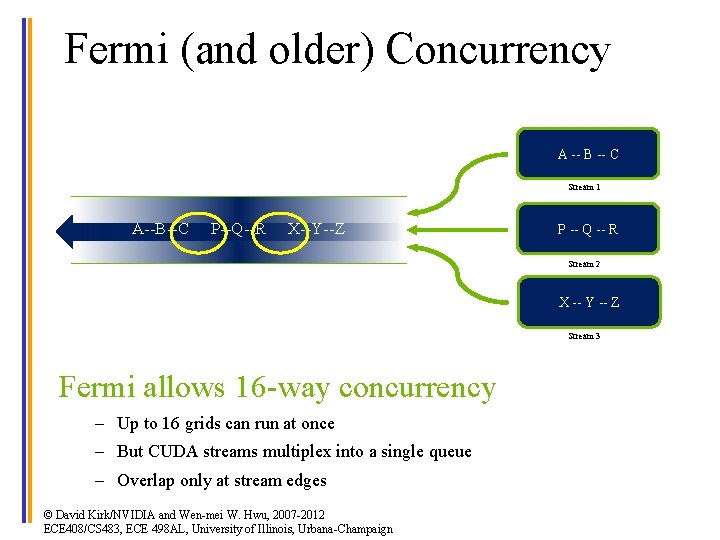

Fermi (and older) Concurrency

Diagram: Stream 1 (A -- B -- C), Stream 2 (P -- Q -- R), and Stream 3 (X -- Y -- Z) feed a single hardware work queue holding A--B--C P--Q--R X--Y--Z.

- Fermi allows 16-way concurrency
  - Up to 16 grids can run at once
  - But CUDA streams multiplex into a single queue
  - Overlap only at stream edges

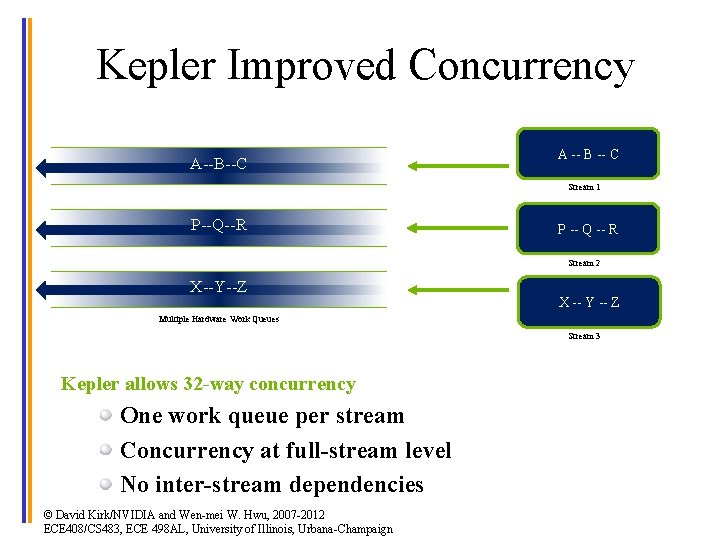

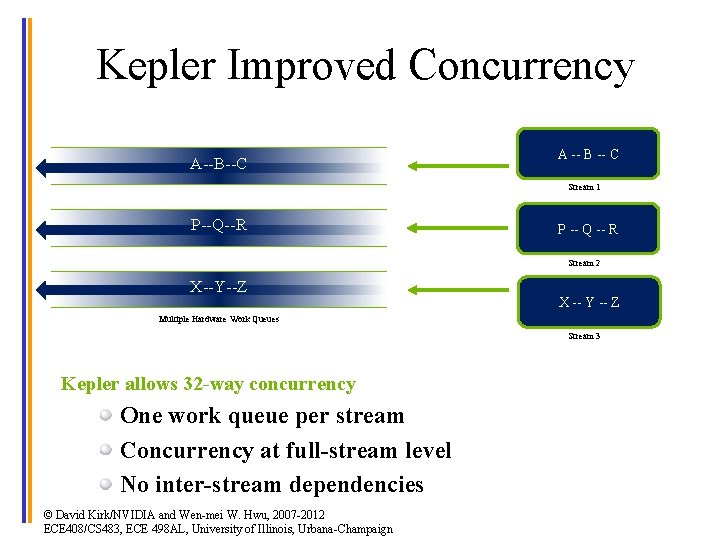

Kepler Improved Concurrency

Diagram: Stream 1 (A -- B -- C), Stream 2 (P -- Q -- R), and Stream 3 (X -- Y -- Z) each feed their own hardware work queue (multiple hardware work queues).

- Kepler allows 32-way concurrency
  - One work queue per stream
  - Concurrency at full-stream level
  - No inter-stream dependencies
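The three-stream picture can be reproduced with a small experiment: issue three dependent kernels into each of three streams and time the whole batch. This is a sketch under assumptions; the `busy` kernel, its iteration count, and the `CHECK` macro are hypothetical, and whether the streams actually overlap depends on the GPU generation and on available resources.

```cuda
#include <chrono>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical helper: abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t e_ = (call);                                        \
        if (e_ != cudaSuccess) {                                        \
            fprintf(stderr, "CUDA error %s at %s:%d\n",                 \
                    cudaGetErrorString(e_), __FILE__, __LINE__);        \
            exit(EXIT_FAILURE);                                         \
        }                                                               \
    } while (0)

// Hypothetical single-block kernel: small enough that several can be resident at once.
__global__ void busy(float *x, int iters) {
    float v = x[threadIdx.x];
    for (int k = 0; k < iters; ++k) v = v * 0.999f + 1.0f;
    x[threadIdx.x] = v;
}

int main() {
    const int numStreams = 3;                 // Stream 1, 2, 3 in the diagram
    const int kernelsPerStream = 3;           // A-B-C, P-Q-R, X-Y-Z
    const int threads = 256;
    const int iters = 200000;                 // illustrative amount of work

    cudaStream_t streams[numStreams];
    float *d_buf[numStreams];
    for (int s = 0; s < numStreams; ++s) {
        CHECK(cudaStreamCreate(&streams[s]));
        CHECK(cudaMalloc((void **)&d_buf[s], threads * sizeof(float)));
        CHECK(cudaMemset(d_buf[s], 0, threads * sizeof(float)));
    }
    CHECK(cudaDeviceSynchronize());

    auto t0 = std::chrono::steady_clock::now();
    // Kernels within one stream run in order; different streams are independent.
    for (int s = 0; s < numStreams; ++s)
        for (int k = 0; k < kernelsPerStream; ++k)
            busy<<<1, threads, 0, streams[s]>>>(d_buf[s], iters);
    CHECK(cudaGetLastError());
    CHECK(cudaDeviceSynchronize());
    auto t1 = std::chrono::steady_clock::now();

    double ms = std::chrono::duration<double, std::milli>(t1 - t0).count();
    printf("%d kernels in %d streams took %.3f ms\n",
           numStreams * kernelsPerStream, numStreams, ms);

    for (int s = 0; s < numStreams; ++s) {
        CHECK(cudaFree(d_buf[s]));
        CHECK(cudaStreamDestroy(streams[s]));
    }
    return 0;
}
```

Comparing this time against the same nine kernels issued into a single stream gives a rough indication of how much stream-level concurrency the device provides.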

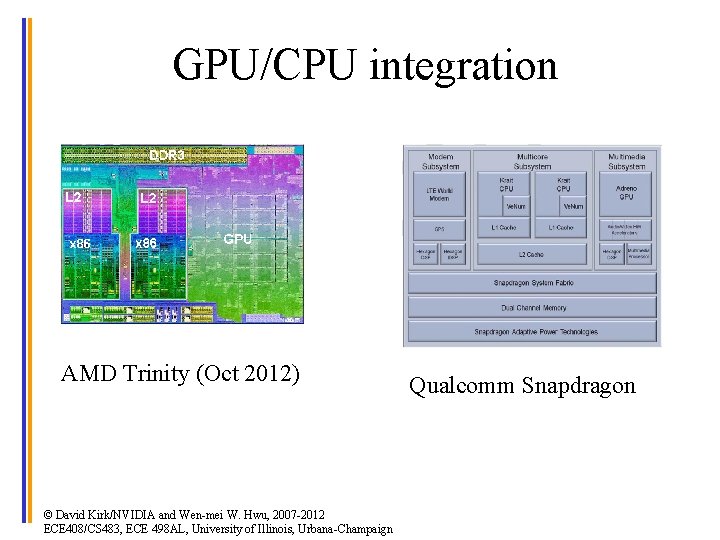

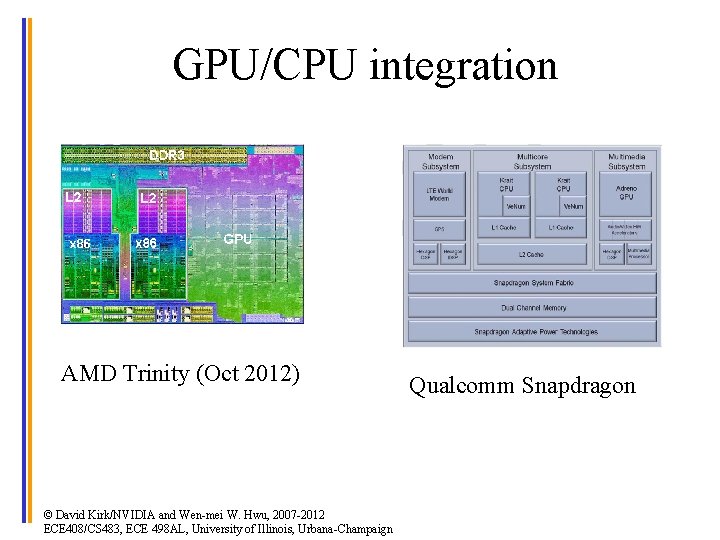

GPU/CPU integration

Examples shown: AMD Trinity (Oct 2012), Qualcomm Snapdragon