ECE 408 CS 483 Fall 2015 Applied Parallel

- Slides: 9

ECE 408 / CS 483 Fall 2015 Applied Parallel Programming Lecture 3: Introduction to CUDA C (Part 2) © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2012 ECE 408/CS 483, University of Illinois, Urbana-Champaign 1

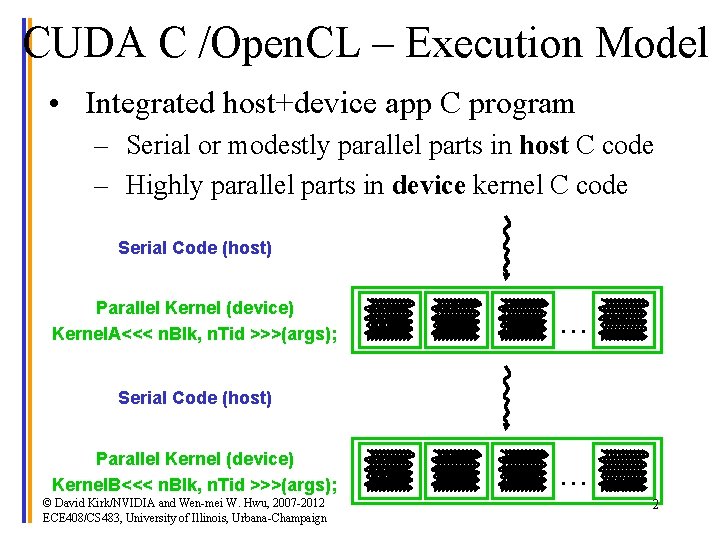

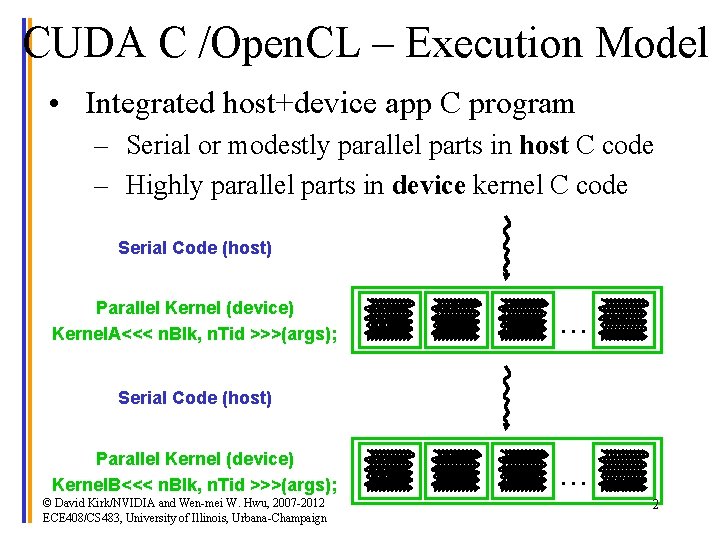

CUDA C /Open. CL – Execution Model • Integrated host+device app C program – Serial or modestly parallel parts in host C code – Highly parallel parts in device kernel C code Serial Code (host) Parallel Kernel (device) Kernel. A<<< n. Blk, n. Tid >>>(args); . . . Serial Code (host) Parallel Kernel (device) Kernel. B<<< n. Blk, n. Tid >>>(args); © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2012 ECE 408/CS 483, University of Illinois, Urbana-Champaign . . . 2

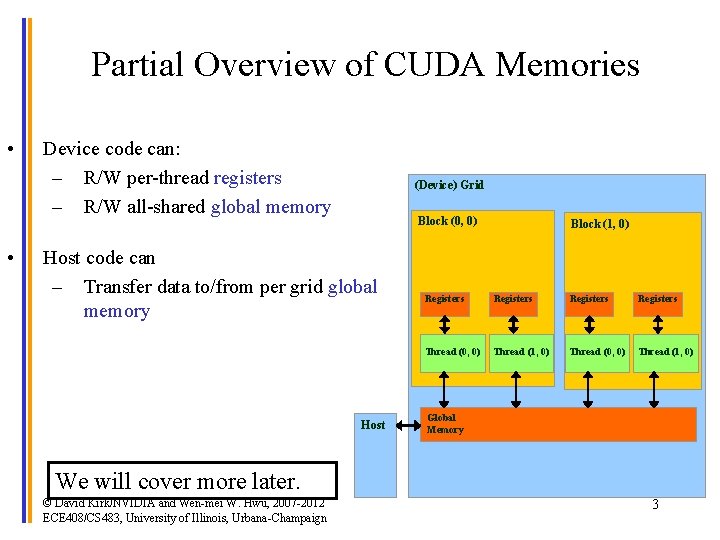

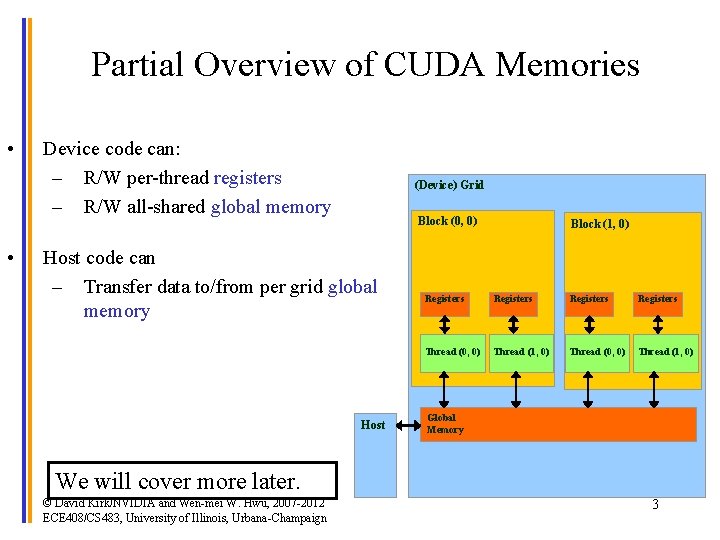

Partial Overview of CUDA Memories • • Device code can: – R/W per-thread registers – R/W all-shared global memory (Device) Grid Block (0, 0) Host code can – Transfer data to/from per grid global memory Host Block (1, 0) Registers Thread (0, 0) Thread (1, 0) Global Memory We will cover more later. © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2012 ECE 408/CS 483, University of Illinois, Urbana-Champaign 3

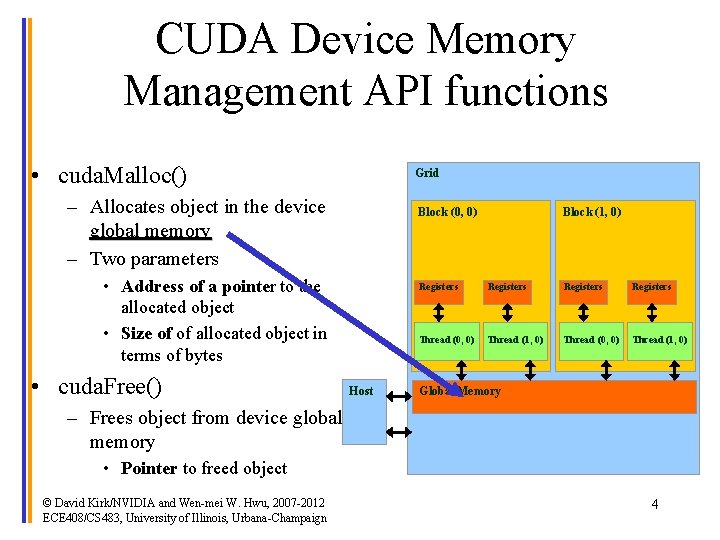

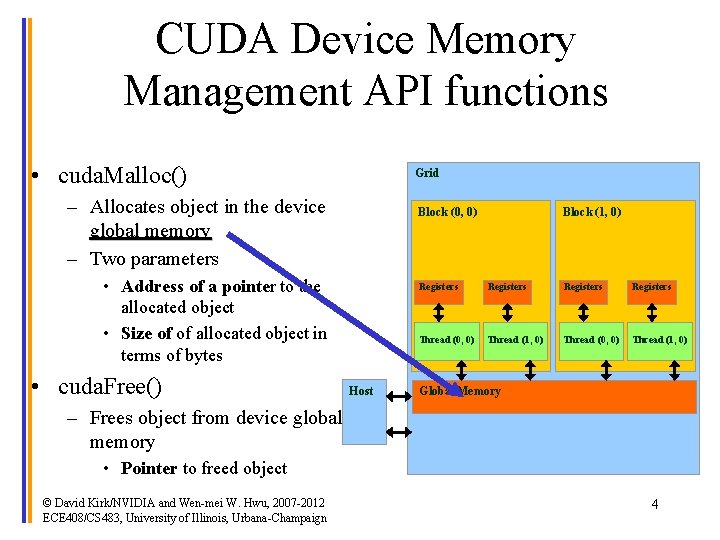

CUDA Device Memory Management API functions • cuda. Malloc() Grid – Allocates object in the device global memory – Two parameters Block (0, 0) • Address of a pointer to the allocated object • Size of of allocated object in terms of bytes • cuda. Free() Host Block (1, 0) Registers Thread (0, 0) Thread (1, 0) Global Memory – Frees object from device global memory • Pointer to freed object © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2012 ECE 408/CS 483, University of Illinois, Urbana-Champaign 4

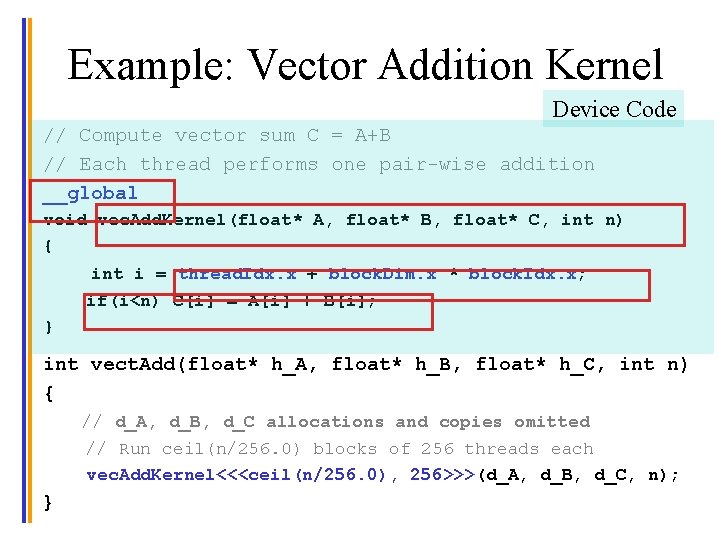

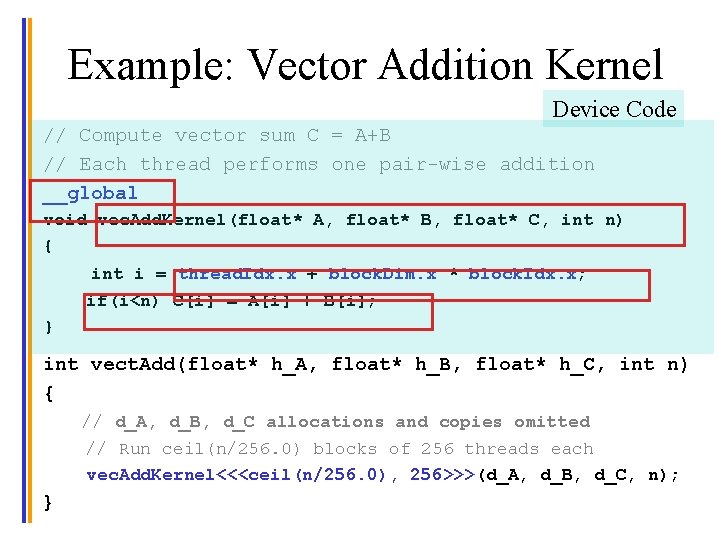

Example: Vector Addition Kernel Device Code // Compute vector sum C = A+B // Each thread performs one pair-wise addition __global__ void vec. Add. Kernel(float* A, float* B, float* C, int n) { int i = thread. Idx. x + block. Dim. x * block. Idx. x; if(i<n) C[i] = A[i] + B[i]; } int vect. Add(float* h_A, float* h_B, float* h_C, int n) { // d_A, d_B, d_C allocations and copies omitted // Run ceil(n/256. 0) blocks of 256 threads each vec. Add. Kernel<<<ceil(n/256. 0), 256>>>(d_A, d_B, d_C, n); } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2012 ECE 408/CS 483, University of Illinois, Urbana-Champaign 5

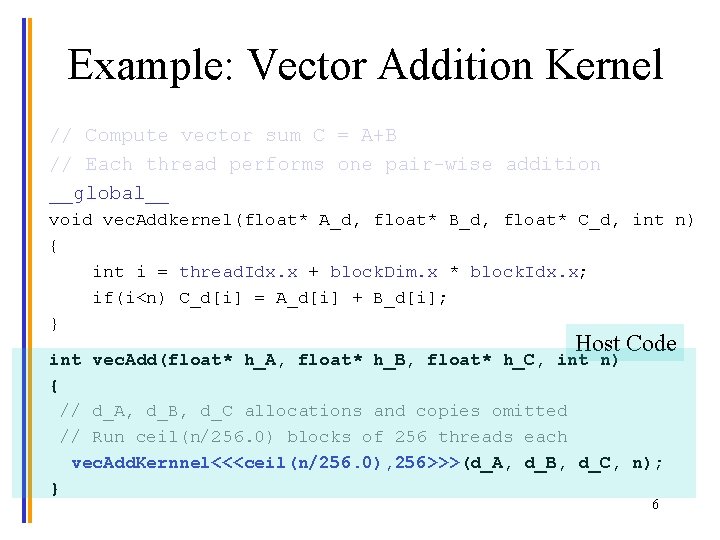

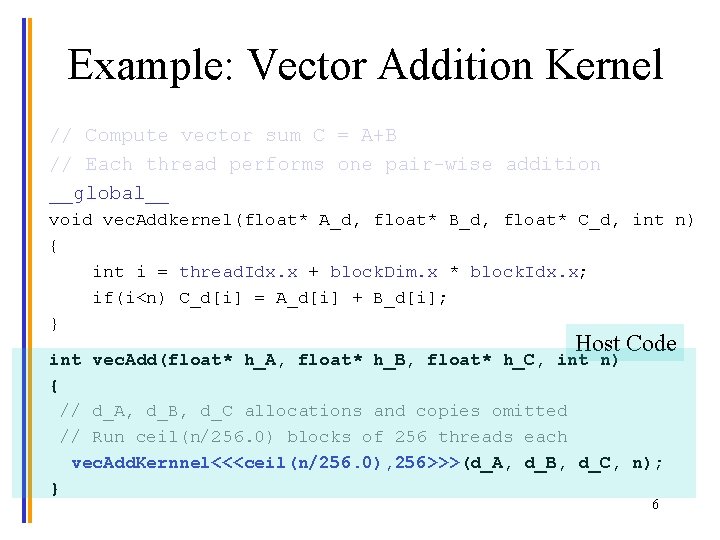

Example: Vector Addition Kernel // Compute vector sum C = A+B // Each thread performs one pair-wise addition __global__ void vec. Addkernel(float* A_d, float* B_d, float* C_d, int n) { int i = thread. Idx. x + block. Dim. x * block. Idx. x; if(i<n) C_d[i] = A_d[i] + B_d[i]; } Host Code int vec. Add(float* h_A, float* h_B, float* h_C, int n) { // d_A, d_B, d_C allocations and copies omitted // Run ceil(n/256. 0) blocks of 256 threads each vec. Add. Kernnel<<<ceil(n/256. 0), 256>>>(d_A, d_B, d_C, n); } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2012 ECE 408/CS 483, University of Illinois, Urbana-Champaign 6

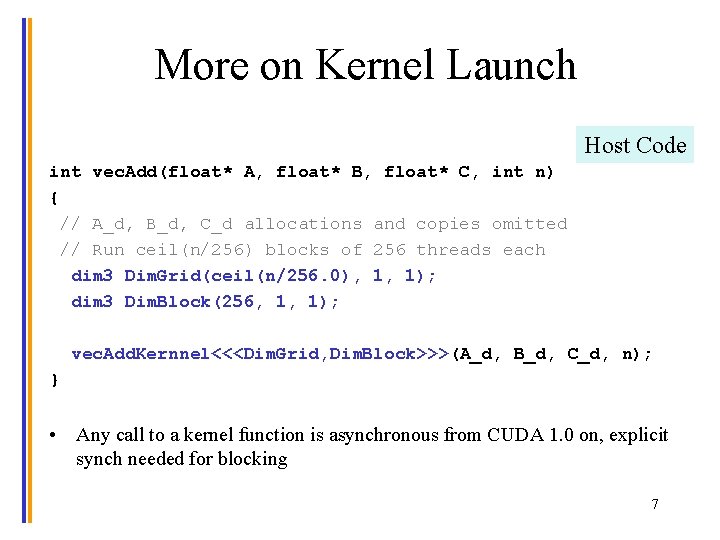

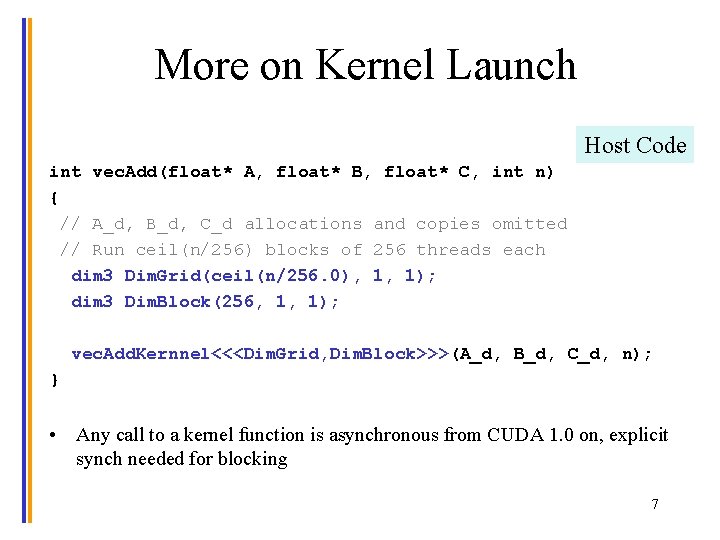

More on Kernel Launch Host Code int vec. Add(float* A, float* B, float* C, int n) { // A_d, B_d, C_d allocations and copies omitted // Run ceil(n/256) blocks of 256 threads each dim 3 Dim. Grid(ceil(n/256. 0), 1, 1); dim 3 Dim. Block(256, 1, 1); vec. Add. Kernnel<<<Dim. Grid, Dim. Block>>>(A_d, B_d, C_d, n); } • Any call to a kernel function is asynchronous from CUDA 1. 0 on, explicit synch needed for blocking © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2012 ECE 408/CS 483, University of Illinois, Urbana-Champaign 7

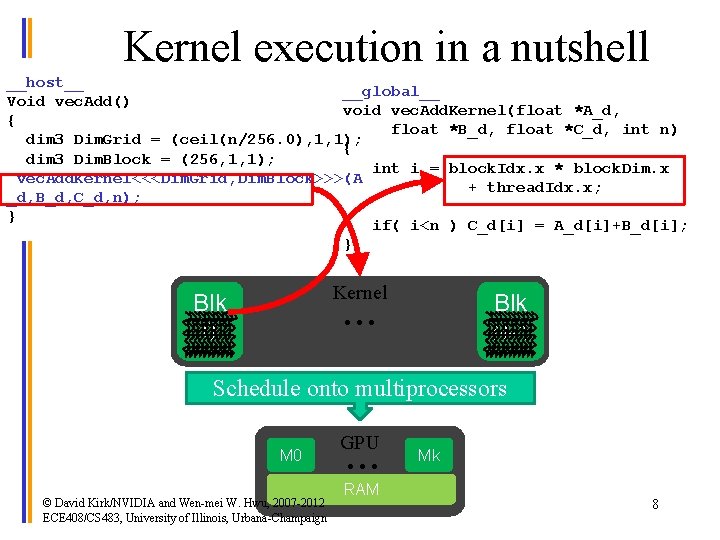

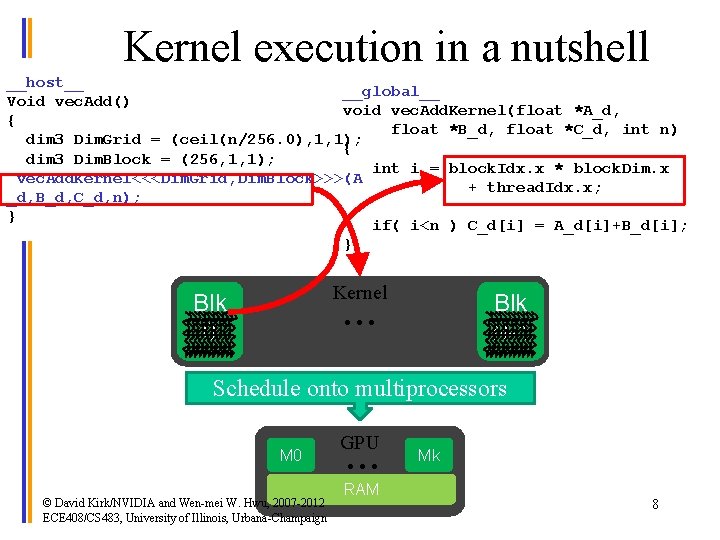

Kernel execution in a nutshell __host__ __global__ Void vec. Add() void vec. Add. Kernel(float *A_d, { float *B_d, float *C_d, int n) dim 3 Dim. Grid = (ceil(n/256. 0), 1, 1); { dim 3 Dim. Block = (256, 1, 1); int i = block. Idx. x * block. Dim. x vec. Add. Kernel<<<Dim. Grid, Dim. Block>>>(A + thread. Idx. x; _d, B_d, C_d, n); } if( i<n ) C_d[i] = A_d[i]+B_d[i]; } Kernel • • • Blk 0 Blk N-1 Schedule onto multiprocessors M 0 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2012 ECE 408/CS 483, University of Illinois, Urbana-Champaign GPU • • • RAM Mk 8

QUESTIONS? © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2012 ECE 408/CS 483, University of Illinois, Urbana-Champaign 9