
- Slides: 37

Discretized Streams: Fault-Tolerant Streaming Computation at Scale
Matei Zaharia, Tathagata Das (TD), Haoyuan (HY) Li, Timothy Hunter, Scott Shenker, Ion Stoica
DS 503 Team #26: Praneeth Nooli and Rahul Ghadge
Special thanks: Prof. Mohd. Eltabakh

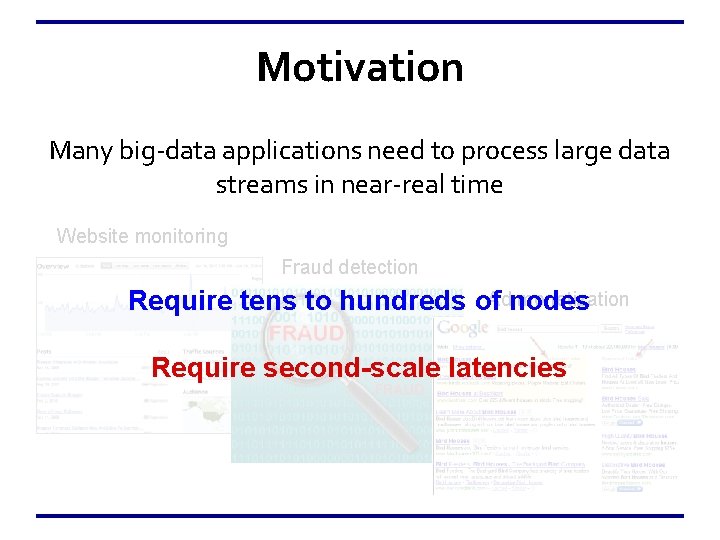

Motivation
• Many big-data applications need to process large data streams in near-real time: website monitoring, fraud detection, ad monetization
• Require tens to hundreds of nodes
• Require second-scale latencies

Challenge
• Stream processing systems must recover from failures and stragglers quickly and efficiently
– More important for streaming systems than batch systems
• Traditional streaming systems don’t achieve these properties simultaneously

Outline
• Limitations of Traditional Streaming Systems
• Discretized Stream Processing
• Unification with Batch and Interactive Processing

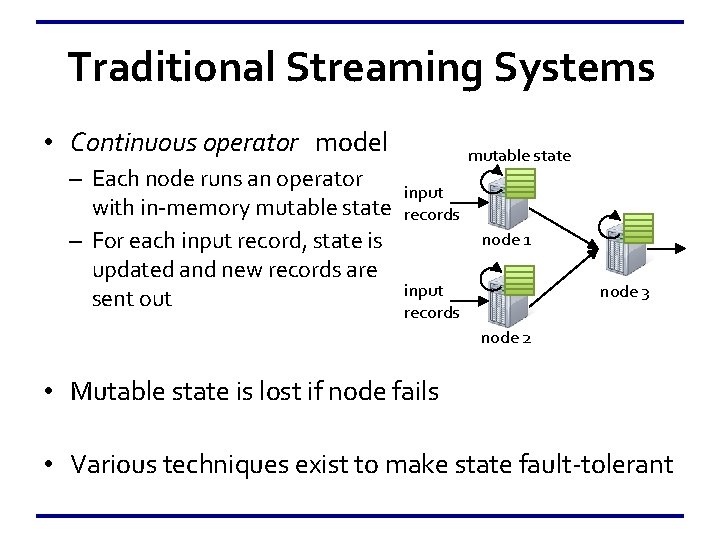

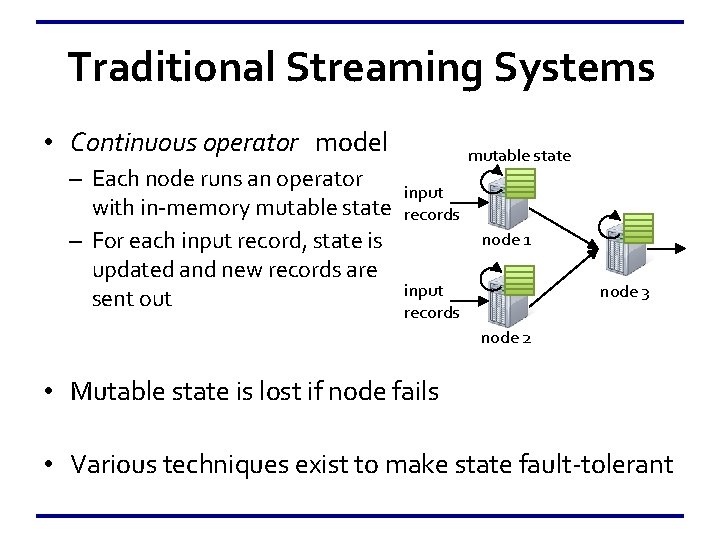

Traditional Streaming Systems
• Continuous operator model
– Each node runs an operator with in-memory mutable state
– For each input record, state is updated and new records are sent out
• Mutable state is lost if a node fails
• Various techniques exist to make state fault-tolerant
[Diagram: input records flow through node 1, node 2 and node 3, each holding mutable state]

![Fault-tolerance in Traditional Systems: Node Replication](https://slidetodoc.com/presentation_image/416f45fc31da8f67a32a4ebe57a9ebb6/image-7.jpg)

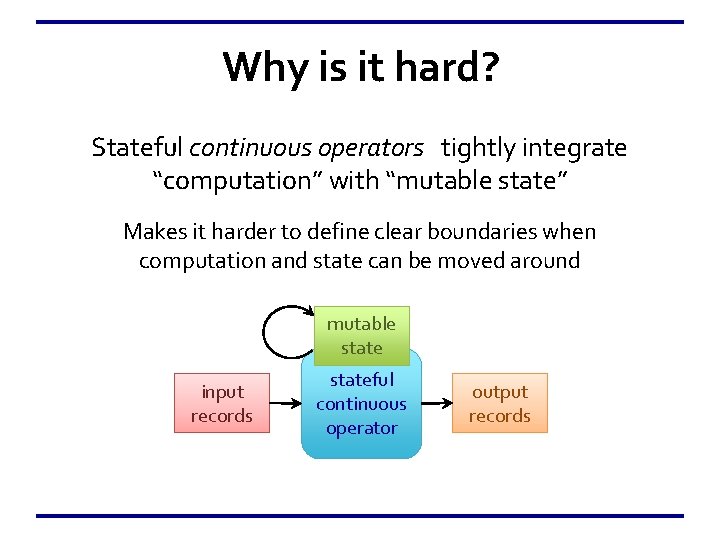

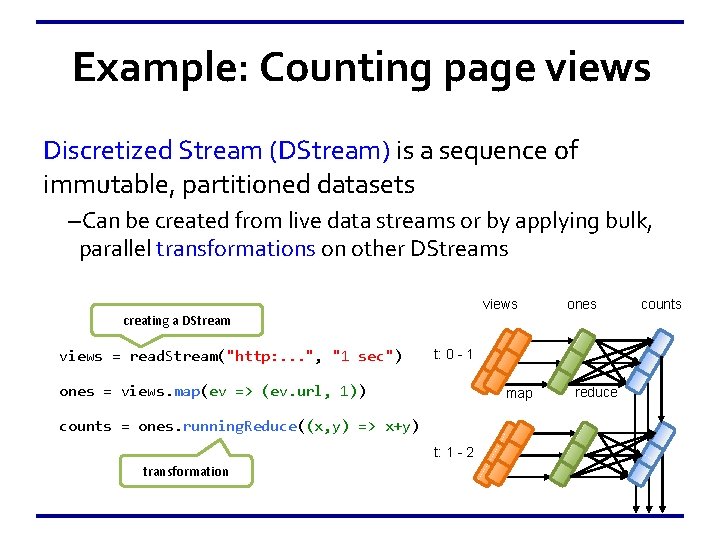

Fault-tolerance in Traditional Systems
Node Replication [e.g. Borealis, Flux]
• A separate set of “hot failover” nodes processes the same data streams
• A synchronization protocol ensures exact ordering of records in both sets
• On failure, the system switches over to the failover nodes
Fast recovery, but 2x hardware cost

![Fault-tolerance in Traditional Systems: Upstream Backup](https://slidetodoc.com/presentation_image/416f45fc31da8f67a32a4ebe57a9ebb6/image-8.jpg)

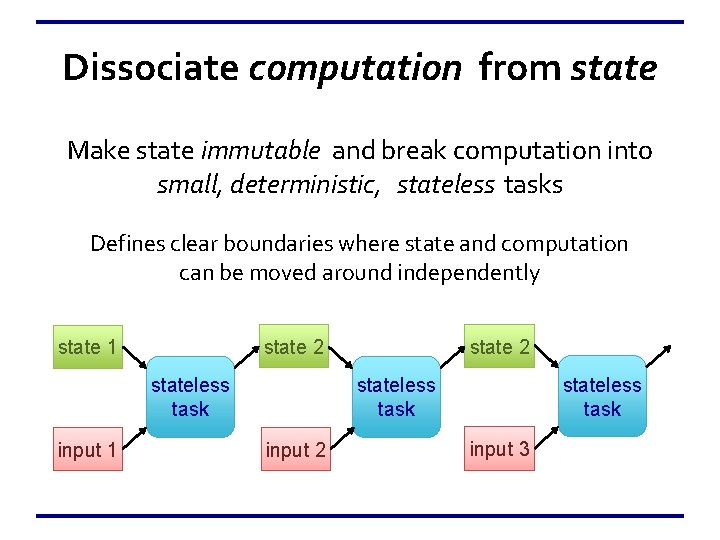

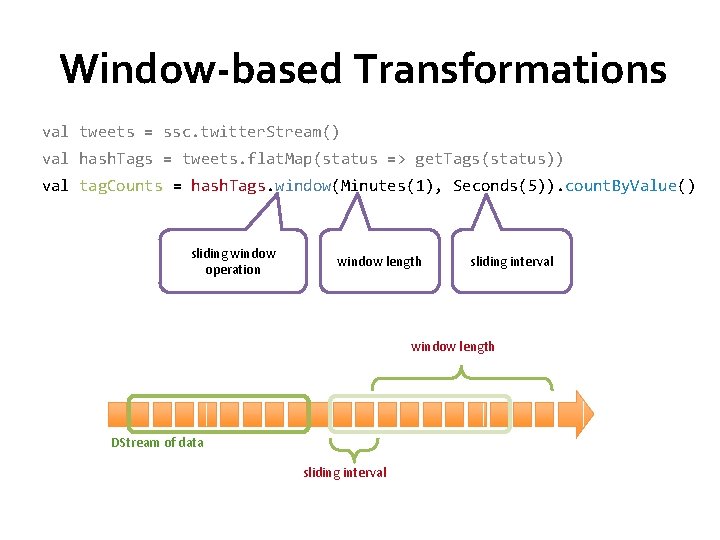

Fault-tolerance in Traditional Systems
Upstream Backup [e.g. TimeStream, Storm]
• Each node maintains a backup of the records it has forwarded since the last checkpoint
• A “cold failover” node is maintained
• On failure, upstream nodes replay the backup records serially to the failover node to recreate the state
Only need 1 standby, but slow recovery

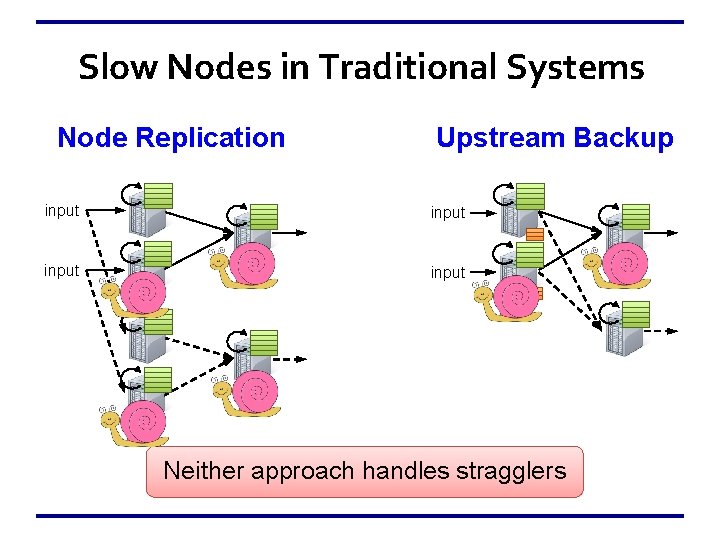

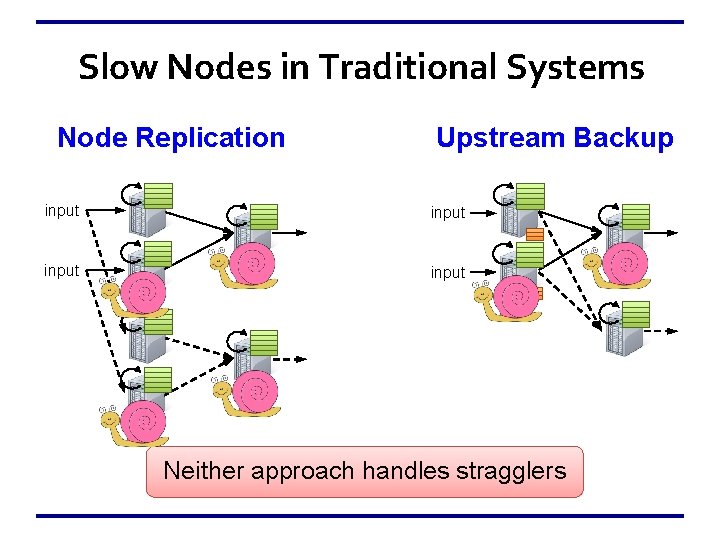

Slow Nodes in Traditional Systems
[Diagrams: Node Replication and Upstream Backup, each with a slow node]
Neither approach handles stragglers

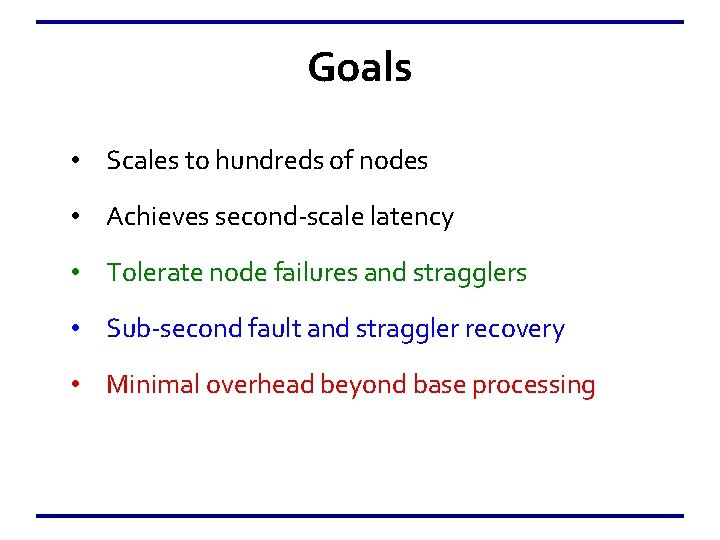

Goals
• Scale to hundreds of nodes
• Achieve second-scale latency
• Tolerate node failures and stragglers
• Sub-second fault and straggler recovery
• Minimal overhead beyond base processing

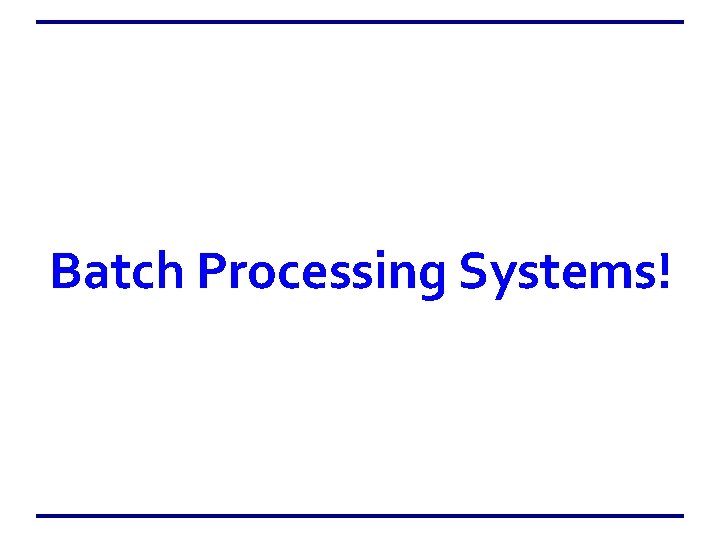

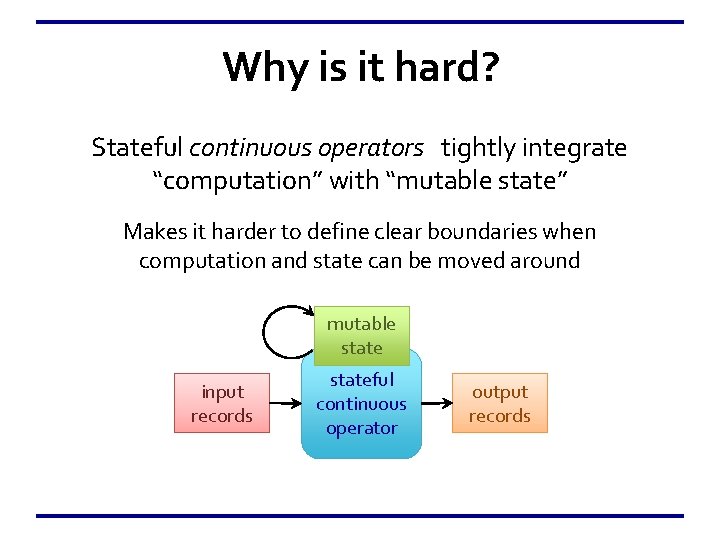

Why is it hard?
• Stateful continuous operators tightly integrate “computation” with “mutable state”
• This makes it harder to define clear boundaries at which computation and state can be moved around
[Diagram: input records → stateful continuous operator (mutable state) → output records]

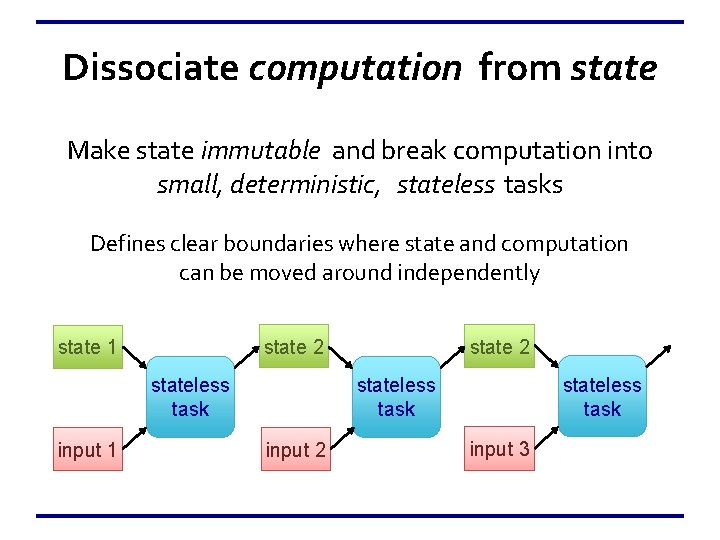

Dissociate computation from state
• Make state immutable and break computation into small, deterministic, stateless tasks
• Defines clear boundaries where state and computation can be moved around independently
[Diagram: input 1 → stateless task → state 1; state 1 and input 2 → stateless task → state 2; input 3 ...]
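The idea on this slide can be sketched in plain Scala. This is a minimal illustration, not Spark code: `StatelessTaskSketch`, `State` and `task` are hypothetical names, and the per-key count is an assumed example of "state". The point is only that each new state is a pure function of the previous immutable state and one input batch.

```scala
// Hypothetical model of "immutable state + stateless tasks"; not Spark's API.
object StatelessTaskSketch {
  // Immutable state: a running count per key (assumed example).
  type State = Map[String, Int]

  // A deterministic, stateless task: the result depends only on the arguments,
  // so it can be re-run on any node and will produce the same new state.
  def task(prev: State, input: Seq[String]): State =
    input.foldLeft(prev) { (state, key) =>
      state.updated(key, state.getOrElse(key, 0) + 1)
    }
}
```

For example, `task(Map.empty, Seq("a", "b", "a"))` yields a state with `a -> 2` and `b -> 1`, and feeding that state plus a new input `Seq("b")` into `task` yields `a -> 2`, `b -> 2`. The earlier state is never modified.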

Batch Processing Systems!

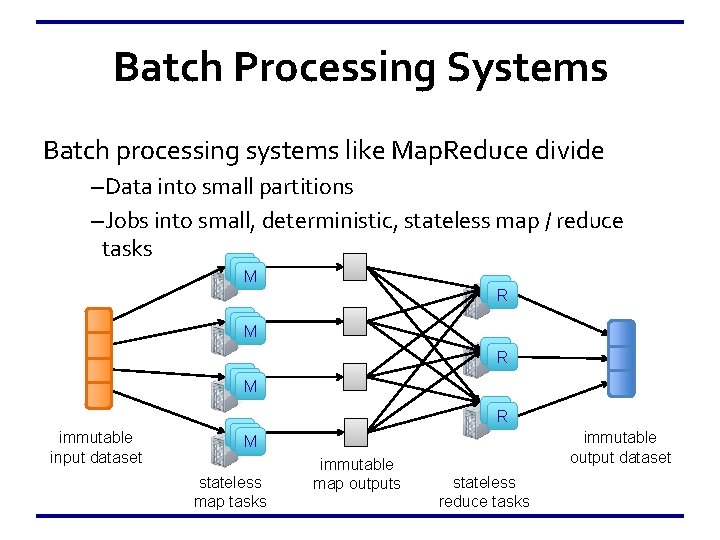

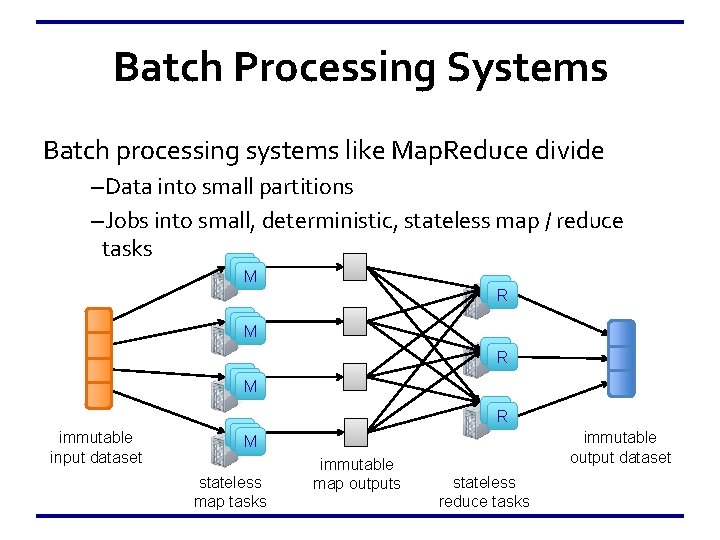

Batch Processing Systems
Batch processing systems like MapReduce divide
– Data into small partitions
– Jobs into small, deterministic, stateless map / reduce tasks
[Diagram: immutable input dataset → stateless map tasks (M) → immutable map outputs → stateless reduce tasks (R) → immutable output dataset]

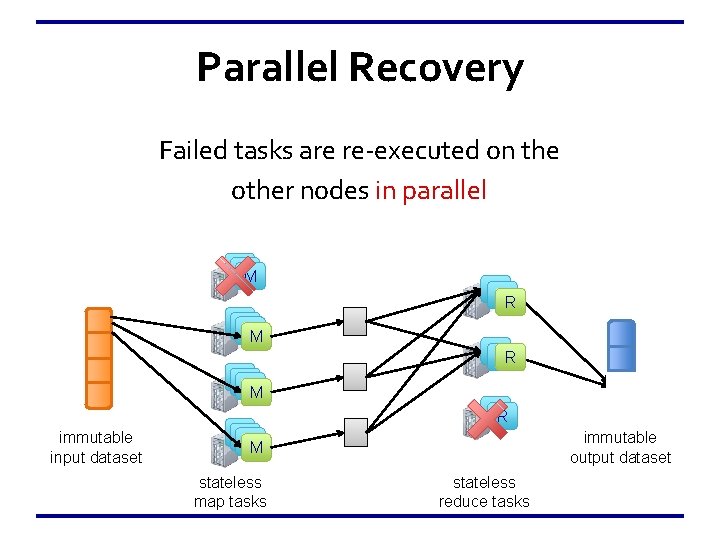

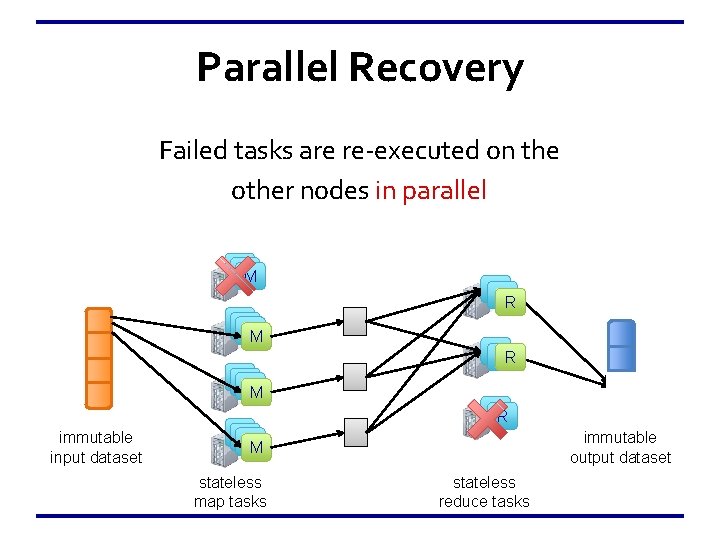

Parallel Recovery
Failed tasks are re-executed on the other nodes in parallel
[Diagram: immutable input dataset → stateless map tasks (M) → stateless reduce tasks (R) → immutable output dataset, with the failed node's tasks spread over the remaining nodes]

Discretized Stream Processing

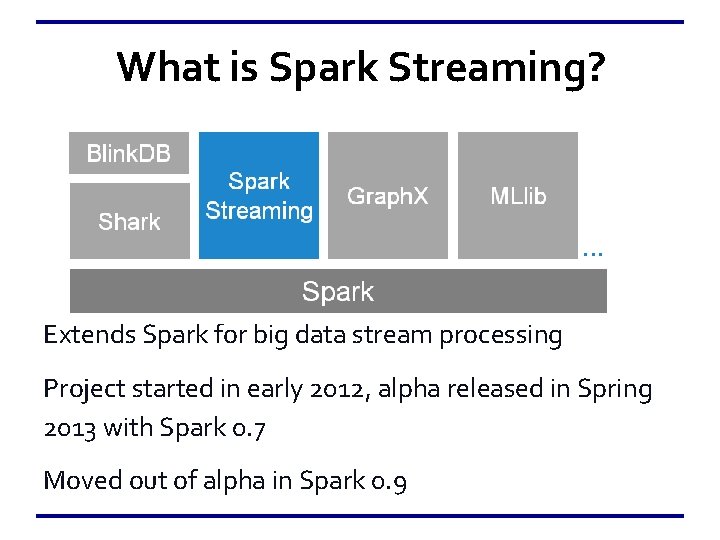

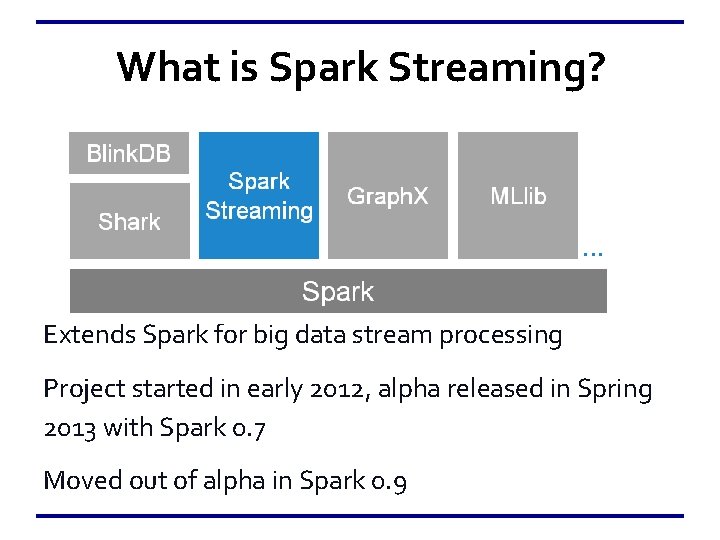

What is Spark Streaming?
• Extends Spark for big data stream processing
• Project started in early 2012, alpha released in Spring 2013 with Spark 0.7
• Moved out of alpha in Spark 0.9

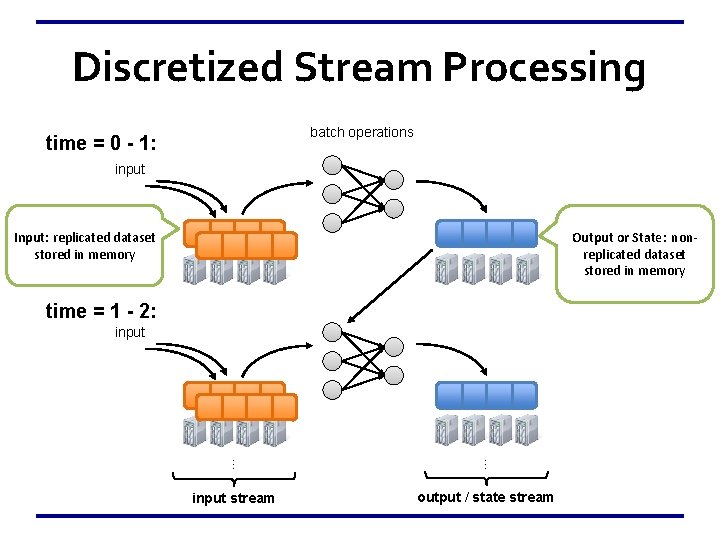

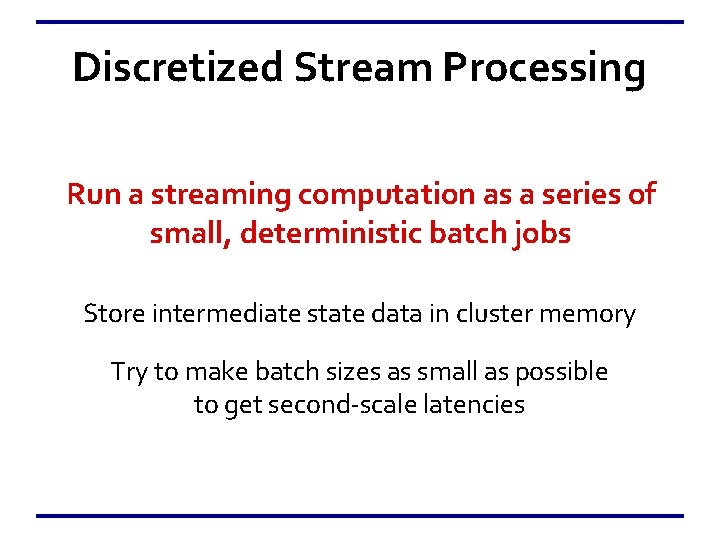

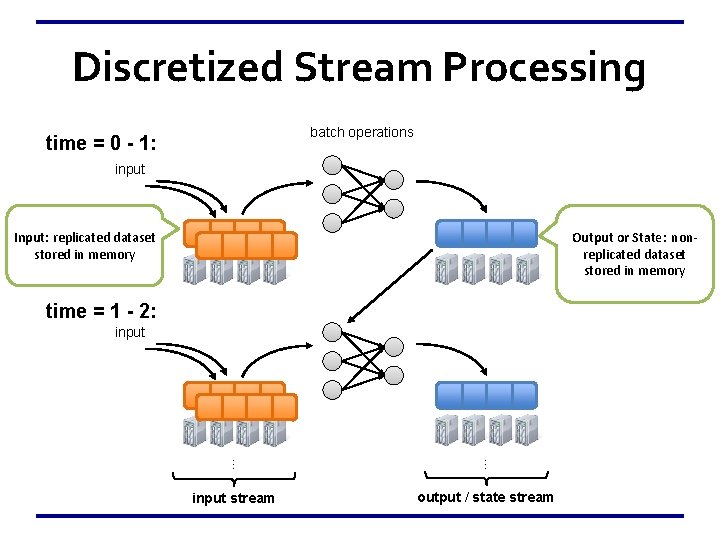

Discretized Stream Processing
• Run a streaming computation as a series of small, deterministic batch jobs
• Store intermediate state data in cluster memory
• Try to make batch sizes as small as possible to get second-scale latencies

Discretized Stream Processing
• Input: replicated dataset stored in memory
• Output or state: non-replicated dataset stored in memory
[Diagram: the input stream is cut into intervals (time = 0-1, time = 1-2, ...); batch operations on each interval's input produce the output / state stream]
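As a rough illustration of what "discretizing" means, the sketch below cuts a list of timestamped records into per-interval batches. It is a plain-Scala model under stated assumptions, not how Spark Streaming ingests data: `Record`, `discretize` and the millisecond timestamps are all hypothetical.

```scala
// Hypothetical model of cutting a stream into interval batches; not Spark's API.
object DiscretizeSketch {
  // Assumed record shape: an arrival time in milliseconds plus a payload.
  final case class Record(timeMs: Long, value: String)

  // Assign each record to interval index timeMs / batchMs and return the
  // batches in time order. Each batch is an immutable dataset.
  def discretize(records: Seq[Record], batchMs: Long): Seq[(Long, Seq[String])] = {
    require(batchMs > 0, "batch interval must be positive")
    records
      .groupBy(r => r.timeMs / batchMs)
      .toSeq
      .sortBy(_._1)
      .map { case (interval, rs) => (interval, rs.sortBy(_.timeMs).map(_.value)) }
  }
}
```

With a 1000 ms interval, records arriving at 100 ms and 900 ms land in interval 0 and a record at 1500 ms lands in interval 1. A smaller `batchMs` gives lower latency at the cost of more, smaller jobs.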

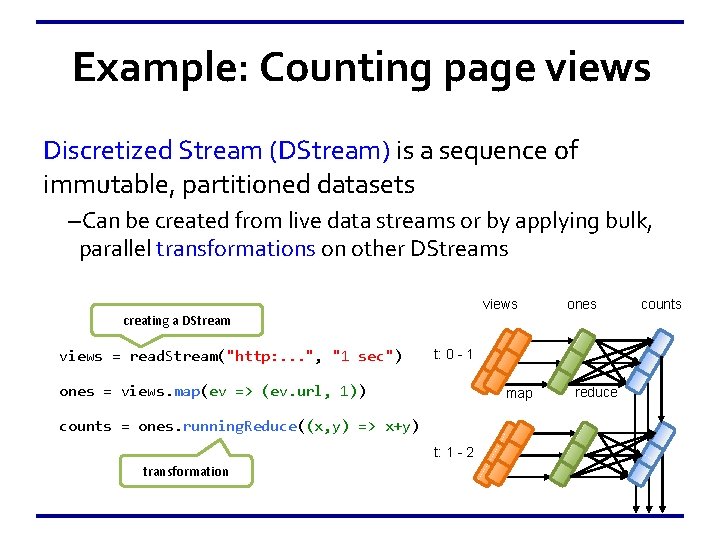

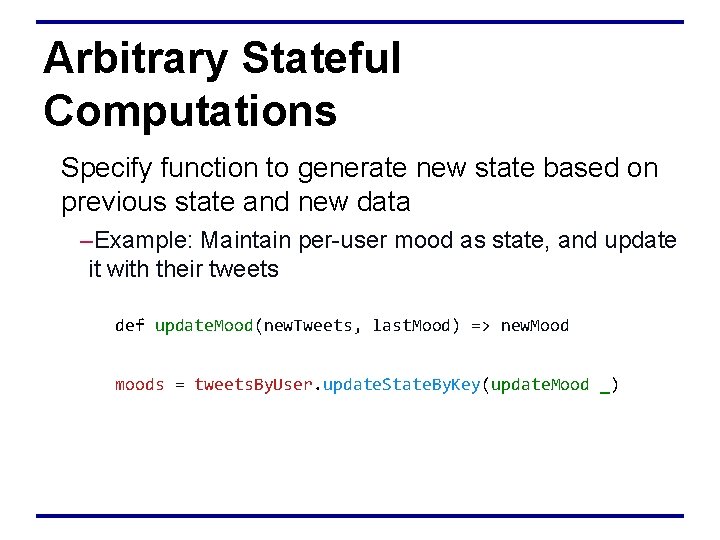

Example: Counting page views
• A Discretized Stream (DStream) is a sequence of immutable, partitioned datasets
– Can be created from live data streams or by applying bulk, parallel transformations on other DStreams

    views = readStream("http://...", "1 sec")        // creating a DStream
    ones = views.map(ev => (ev.url, 1))              // transformation
    counts = ones.runningReduce((x, y) => x + y)

[Diagram: in each interval (t: 0-1, t: 1-2, ...), views is mapped to ones, which is reduced into counts]
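The page-view pipeline above can be mimicked on ordinary Scala collections to show what each interval computes. This is a sketch under assumptions, not the Spark Streaming implementation: `Event`, `step` and `runningCounts` are hypothetical names, and each inner `Seq` stands in for one interval's batch.

```scala
// Hypothetical plain-collections model of the views -> ones -> counts pipeline.
object PageViewSketch {
  final case class Event(url: String)

  // One interval: map each event to (url, 1), reduce by key within the batch,
  // then merge the result into the previous running counts.
  def step(counts: Map[String, Int], batch: Seq[Event]): Map[String, Int] = {
    val ones = batch.map(ev => (ev.url, 1))
    val batchCounts = ones.groupMapReduce(_._1)(_._2)(_ + _)
    batchCounts.foldLeft(counts) { case (acc, (url, n)) =>
      acc.updated(url, acc.getOrElse(url, 0) + n)
    }
  }

  // scanLeft keeps the counts of every interval as a separate immutable value,
  // mirroring "a sequence of immutable datasets".
  def runningCounts(batches: Seq[Seq[Event]]): Seq[Map[String, Int]] =
    batches.scanLeft(Map.empty[String, Int])(step).tail
}
```

Calling `runningCounts` on two batches, `Seq(Event("/a"), Event("/b"))` followed by `Seq(Event("/a"))`, gives counts of `/a -> 1, /b -> 1` after the first interval and `/a -> 2, /b -> 1` after the second.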

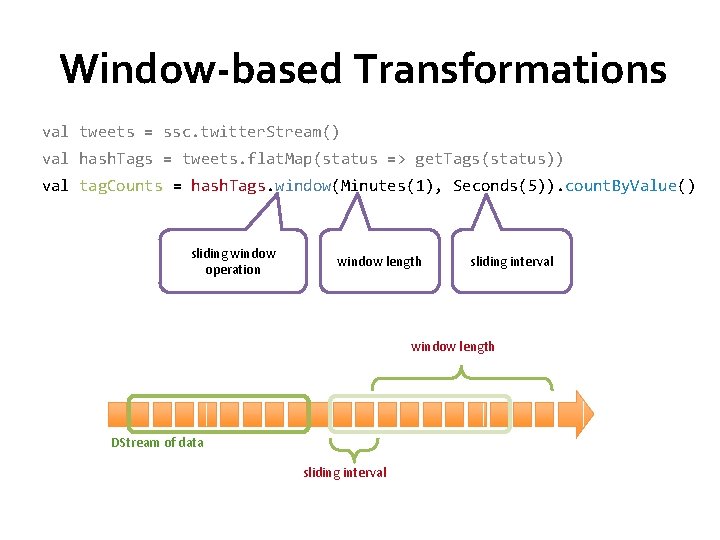

Window-based Transformations

    val tweets = ssc.twitterStream()
    val hashTags = tweets.flatMap(status => getTags(status))
    val tagCounts = hashTags.window(Minutes(1), Seconds(5)).countByValue()

• window(...) is the sliding window operation: Minutes(1) is the window length, Seconds(5) is the sliding interval
[Diagram: a DStream of data with the window length and sliding interval marked]
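To make window length and sliding interval concrete, here is a small plain-Scala model. It is an assumption-laden sketch rather than Spark's windowing code: `windowedCounts` is a hypothetical function, and both parameters are expressed as a number of batches instead of a duration.

```scala
// Hypothetical model of window(length, slide).countByValue() over batches.
object WindowSketch {
  // batches: the tags seen in each batch interval, in time order.
  // windowLen and slide are counted in batches (an assumption of this sketch).
  def windowedCounts(
      batches: Seq[Seq[String]],
      windowLen: Int,
      slide: Int
  ): Seq[Map[String, Int]] = {
    require(windowLen > 0 && slide > 0, "window length and slide must be positive")
    (slide to batches.length by slide).map { end =>
      // The window covers the last windowLen batches ending at `end`.
      val start = math.max(0, end - windowLen)
      batches.slice(start, end).flatten.groupMapReduce(identity)(_ => 1)(_ + _)
    }
  }
}
```

With `windowLen = 2` and `slide = 1`, each result covers the current batch and the one before it, so consecutive windows overlap by one batch. In the slide's example the window spans one minute of batches and is recomputed every five seconds.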

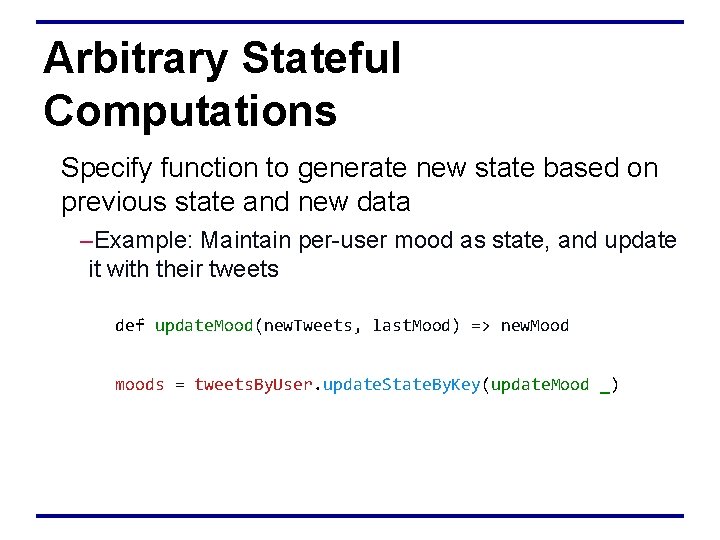

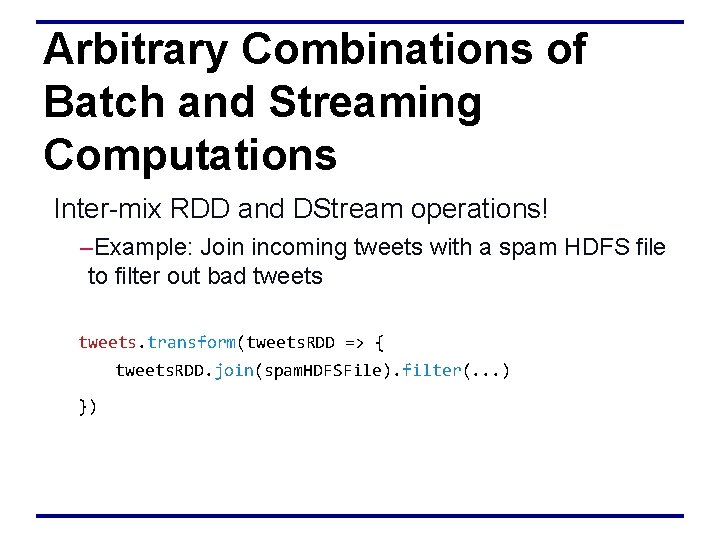

Arbitrary Stateful Computations
• Specify a function to generate new state based on previous state and new data
– Example: Maintain per-user mood as state, and update it with their tweets

    def updateMood(newTweets, lastMood) => newMood
    moods = tweetsByUser.updateStateByKey(updateMood _)
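The update pattern on this slide can be modeled without Spark. In the sketch below, the `updateStateByKey` helper is a hypothetical stand-in written over plain maps, and the "mood" is assumed to be an integer score driven by smiley faces, which the slide does not specify. The update function takes the new values for a key and the optional previous state, and returns the optional new state.

```scala
// Hypothetical plain-collections model of per-key state updates; not Spark's API.
object StateByKeySketch {
  // For every key seen so far or in this batch, call `update` with the key's
  // new values and its previous state; keep the key only if a state is returned.
  def updateStateByKey[K, V, S](prev: Map[K, S], batch: Seq[(K, V)])(
      update: (Seq[V], Option[S]) => Option[S]
  ): Map[K, S] = {
    val grouped: Map[K, Seq[V]] = batch.groupMap(_._1)(_._2)
    val keys = prev.keySet ++ grouped.keySet
    keys.flatMap { k =>
      update(grouped.getOrElse(k, Seq.empty), prev.get(k)).map(s => k -> s)
    }.toMap
  }

  // Assumed mood model: +1 per tweet containing ":)", -1 per tweet containing ":(".
  def updateMood(newTweets: Seq[String], lastMood: Option[Int]): Option[Int] = {
    val delta = newTweets.map { t =>
      if (t.contains(":)")) 1 else if (t.contains(":(")) -1 else 0
    }.sum
    Some(lastMood.getOrElse(0) + delta)
  }
}
```

Each call returns a new immutable map, so the moods of every interval remain available for recomputation. A user with no tweets in a batch keeps their previous mood because `updateMood` is still invoked with an empty sequence.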

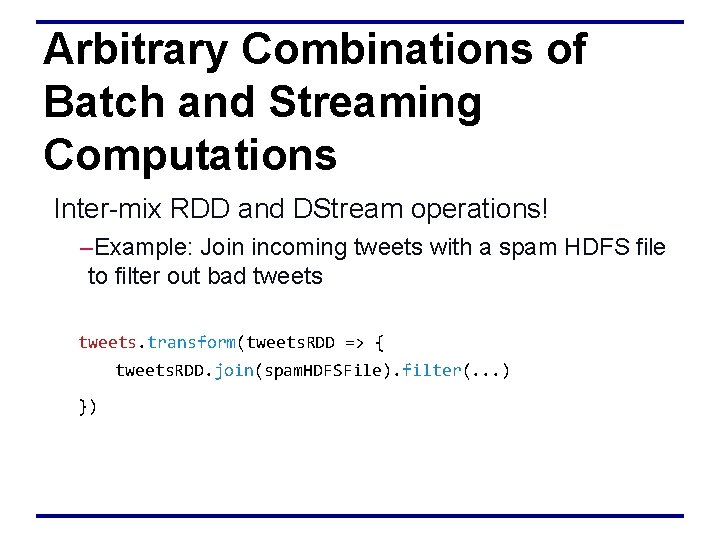

Arbitrary Combinations of Batch and Streaming Computations
• Inter-mix RDD and DStream operations!
– Example: Join incoming tweets with a spam HDFS file to filter out bad tweets

    tweets.transform(tweetsRDD => {
      tweetsRDD.join(spamHDFSFile).filter(...)
    })

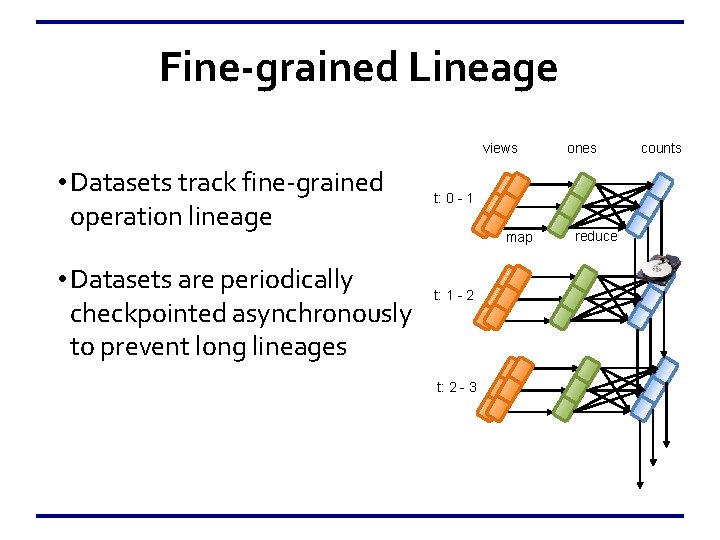

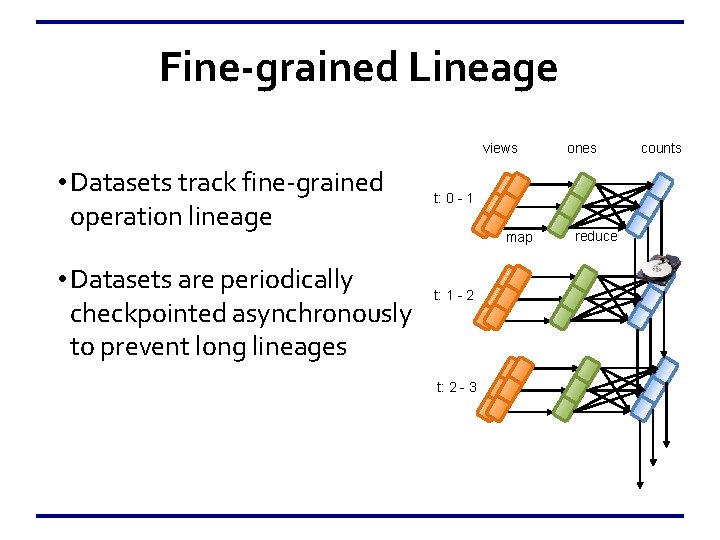

Fine-grained Lineage
• Datasets track fine-grained operation lineage
• Datasets are periodically checkpointed asynchronously to prevent long lineages
[Diagram: for t: 0-1, t: 1-2 and t: 2-3, views is mapped to ones, which is reduced into counts]

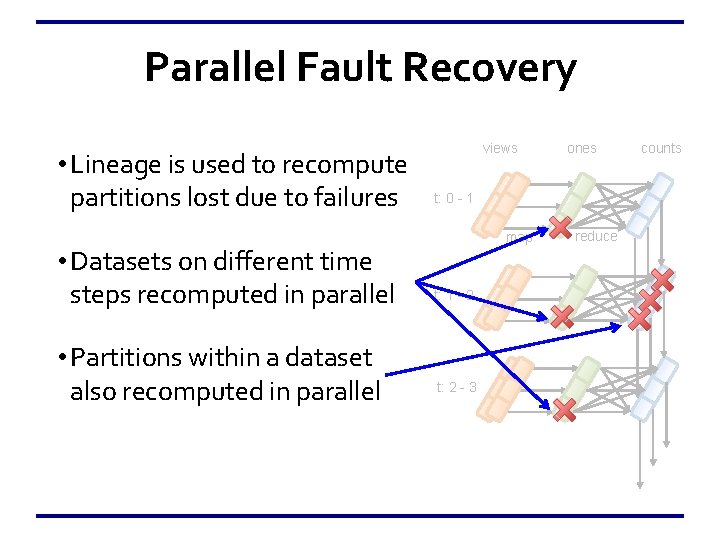

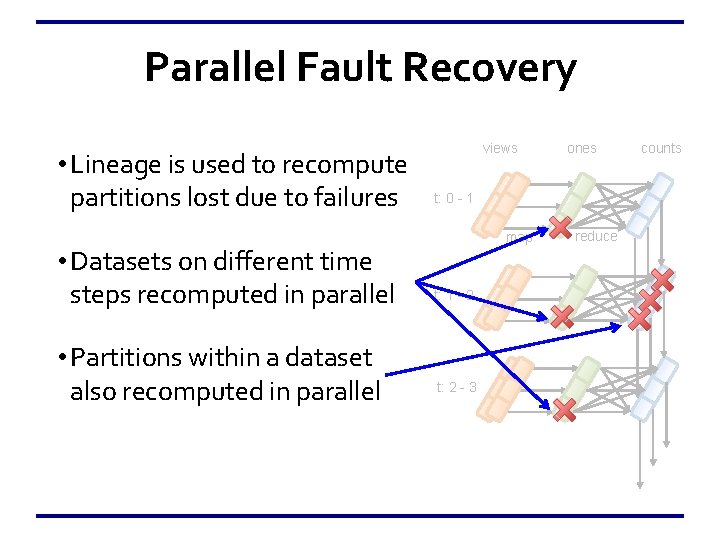

Parallel Fault Recovery
• Lineage is used to recompute partitions lost due to failures
• Datasets on different time steps are recomputed in parallel
• Partitions within a dataset are also recomputed in parallel
[Diagram: for t: 0-1, t: 1-2 and t: 2-3, views is mapped to ones, which is reduced into counts]
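Lineage-based recovery can be illustrated with a toy dataset type that remembers how each partition is derived. This is a simplified sketch under assumptions, not Spark's RDD implementation: `Dataset`, `Source`, `Mapped` and `recover` are hypothetical, only a map-style dependency is modeled, and the loop here runs sequentially where a cluster would hand each lost partition to a different node.

```scala
// Hypothetical model of recomputing lost partitions from lineage; not Spark's API.
object LineageSketch {
  // A dataset knows how to (re)compute any one of its partitions.
  sealed trait Dataset { def compute(partition: Int): Seq[Int] }

  // Source data is assumed to be reliably stored (the slides say input is replicated).
  final case class Source(partitions: Vector[Seq[Int]]) extends Dataset {
    def compute(partition: Int): Seq[Int] = partitions(partition)
  }

  // Lineage: a parent dataset plus the deterministic function applied to it.
  final case class Mapped(parent: Dataset, f: Int => Int) extends Dataset {
    def compute(partition: Int): Seq[Int] = parent.compute(partition).map(f)
  }

  // Recompute only the lost partitions. Each one depends on nothing but its
  // own lineage, which is what allows the work to be spread across nodes.
  def recover(ds: Dataset, lost: Seq[Int]): Map[Int, Seq[Int]] =
    lost.map(p => p -> ds.compute(p)).toMap
}
```

Because `compute` is deterministic, recomputing a partition gives exactly the data that was lost, and partitions that survived are never touched.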

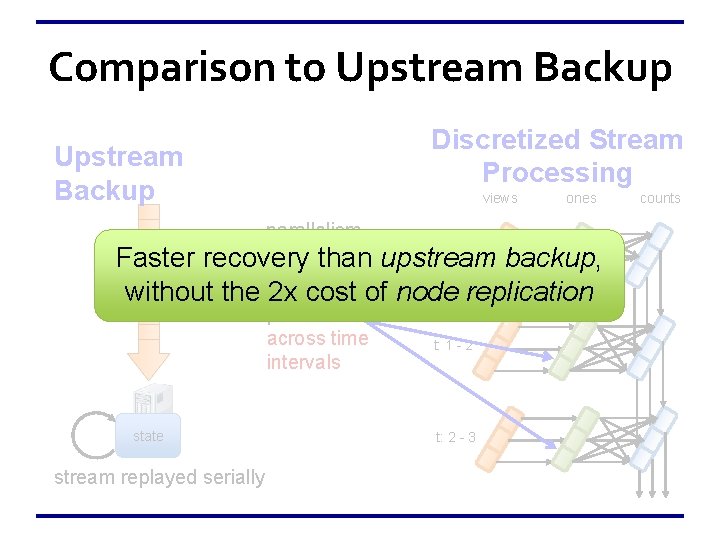

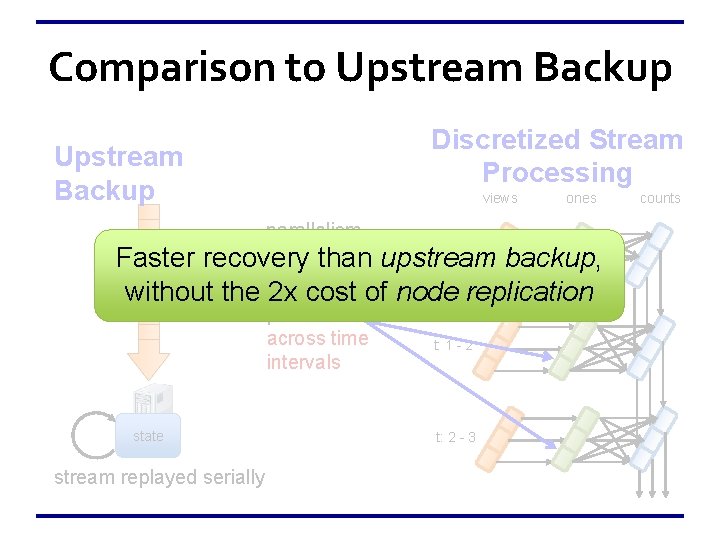

Comparison to Upstream Backup
• Discretized Stream Processing: parallelism within a batch and parallelism across time intervals
• Upstream Backup: stream replayed serially
• Faster recovery than upstream backup, without the 2x cost of node replication
[Diagram: views, ones and counts for t: 0-1, t: 1-2 and t: 2-3, next to a single stateful node being replayed]

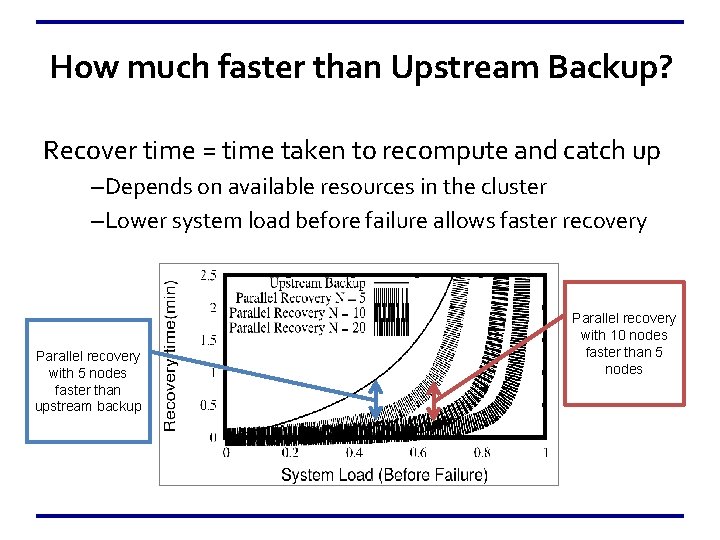

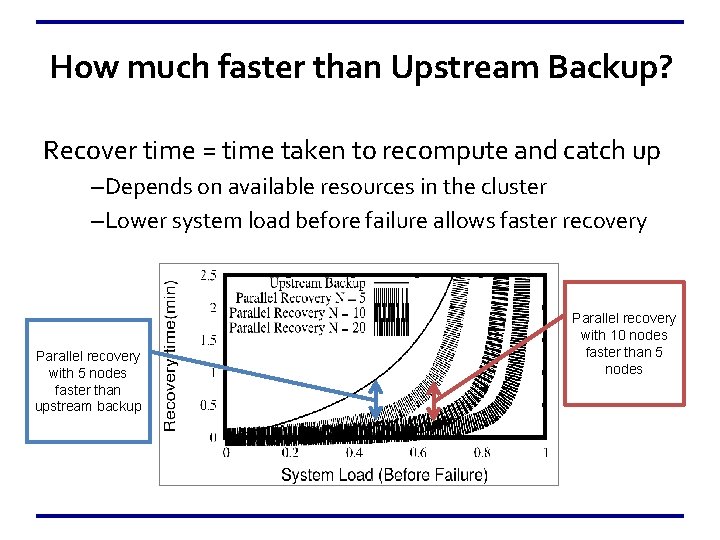

How much faster than Upstream Backup?
• Recovery time = time taken to recompute and catch up
– Depends on available resources in the cluster
– Lower system load before failure allows faster recovery
• Parallel recovery with 5 nodes is faster than upstream backup
• Parallel recovery with 10 nodes is faster than with 5 nodes

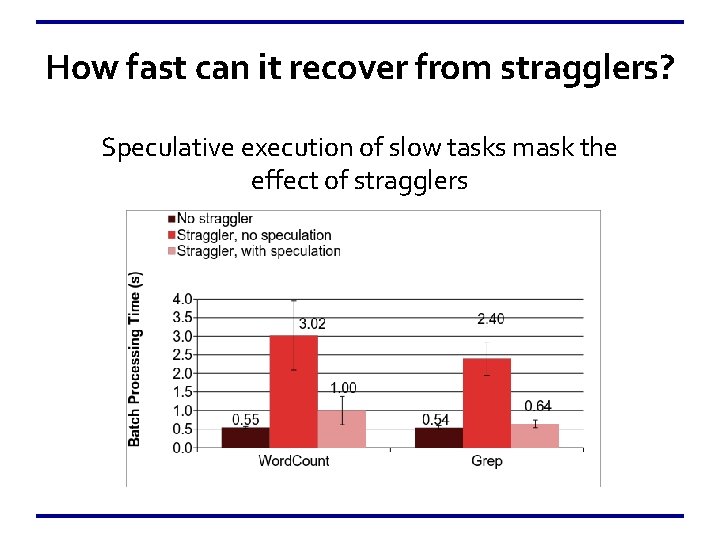

Parallel Straggler Recovery
• Straggler mitigation techniques
– Detect slow tasks (e.g. 2x slower than other tasks)
– Speculatively launch more copies of the tasks in parallel on other machines
• Masks the impact of slow nodes on the progress of the system
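The detection rule can be sketched as a simple threshold check. The slide only says "2x slower than other tasks", so comparing against the median running time is an assumption of this sketch, and `stragglers` is a hypothetical helper, not a Spark function. Tasks it returns would be candidates for a speculative copy on another machine.

```scala
// Hypothetical straggler detector; the median baseline is an assumption.
object StragglerSketch {
  // durations: task id -> seconds the task has been running so far.
  // A task is flagged when it exceeds `factor` times the median duration.
  def stragglers(durations: Map[String, Double], factor: Double = 2.0): Set[String] = {
    if (durations.isEmpty) Set.empty
    else {
      val sorted = durations.values.toVector.sorted
      val median = sorted(sorted.length / 2)
      durations.collect { case (id, d) if d > factor * median => id }.toSet
    }
  }
}
```

Since tasks are deterministic and stateless, running a second copy is safe: whichever copy finishes first supplies the result.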

Evaluation

Spark Streaming
• Implemented using the Spark processing engine*
– Spark allows datasets to be stored in memory, and automatically recovers them using lineage
• Modifications were required to reduce job launching overheads from seconds to milliseconds
[*Resilient Distributed Datasets - NSDI, 2012]

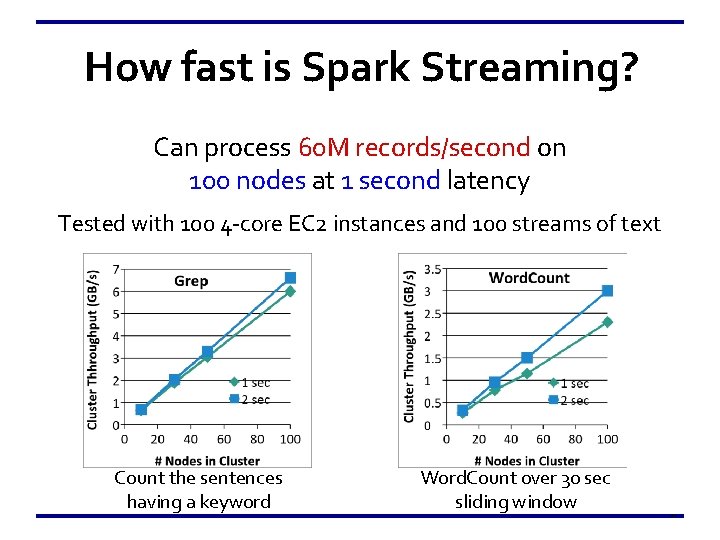

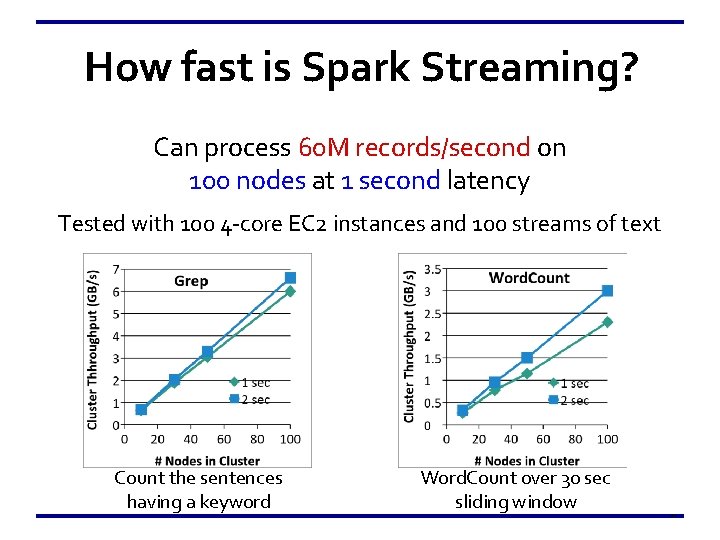

How fast is Spark Streaming?
• Can process 60M records/second on 100 nodes at 1 second latency
• Tested with 100 4-core EC2 instances and 100 streams of text
• Workloads: count the sentences having a keyword; WordCount over a 30 sec sliding window

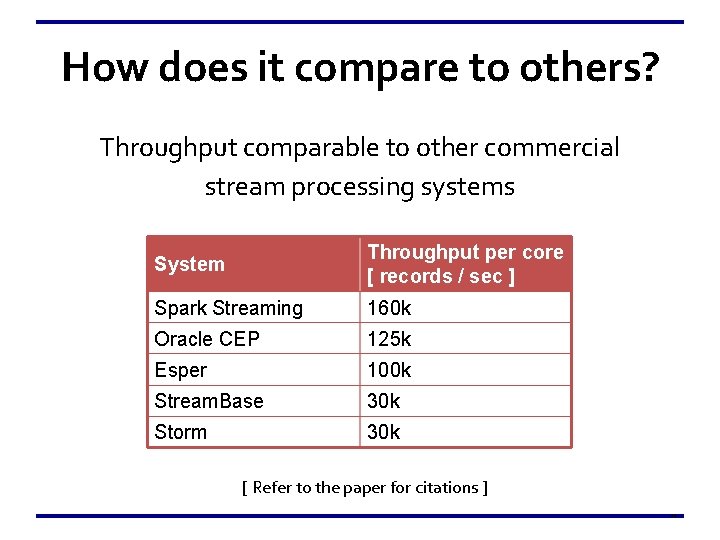

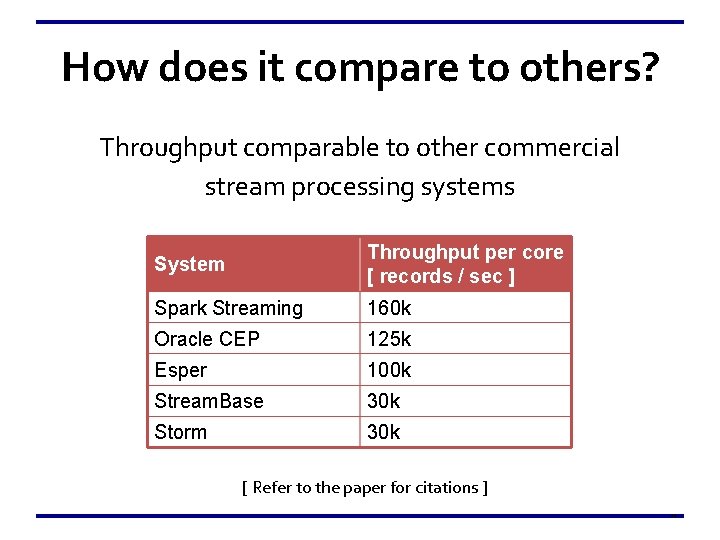

How does it compare to others?
Throughput comparable to other commercial stream processing systems

| System | Throughput per core [records / sec] |
| --- | --- |
| Spark Streaming | 160k |
| Oracle CEP | 125k |
| Esper | 100k |
| StreamBase | 30k |
| Storm | 30k |

[Refer to the paper for citations]

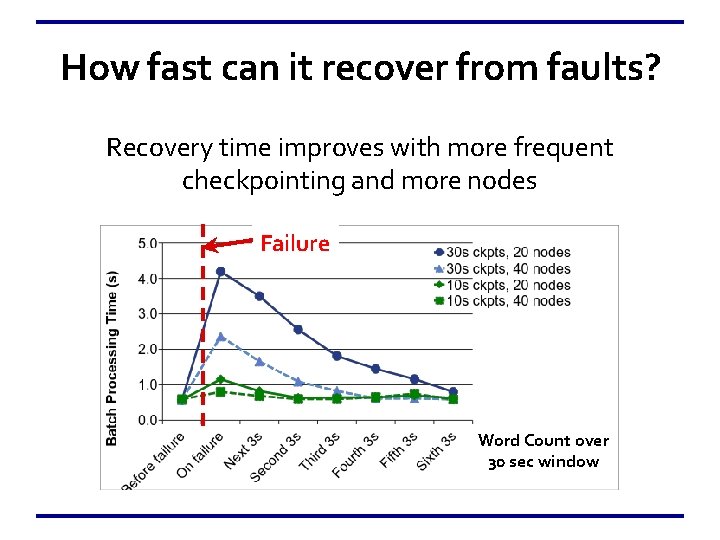

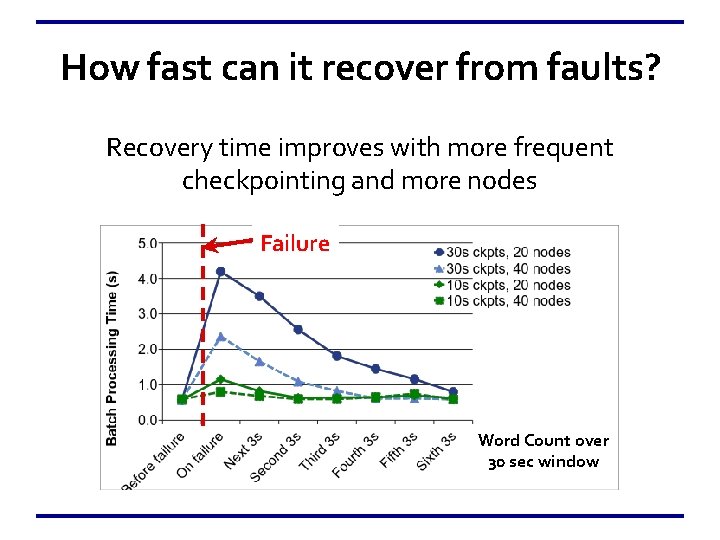

How fast can it recover from faults?
Recovery time improves with more frequent checkpointing and more nodes
[Plot: WordCount over a 30 sec window, with the point of failure marked]

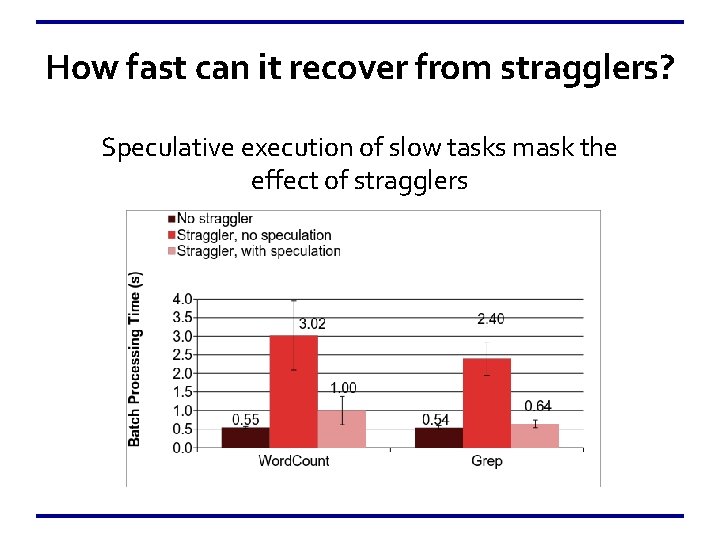

How fast can it recover from stragglers?
Speculative execution of slow tasks masks the effect of stragglers

Unification with Batch and Interactive Processing

Unification with Batch and Interactive Processing
• Discretized Streams creates a single programming and execution model for running streaming, batch and interactive jobs
• Combine live data streams with historic data

    liveCounts.join(historicCounts).map(...)

• Interactively query live streams

    liveCounts.slice("21:00", "21:05").count()

Takeaways
• Large-scale streaming systems must handle faults and stragglers
• Discretized Streams model streaming computation as a series of batch jobs
– Uses simple techniques to exploit parallelism in streams
– Scales to 100 nodes with 1 second latency
– Recovers from failures and stragglers very fast
• Spark Streaming is open source - spark-project.org
– Used in production by ~10 organizations!