Dictionary data structures for the Inverted Index

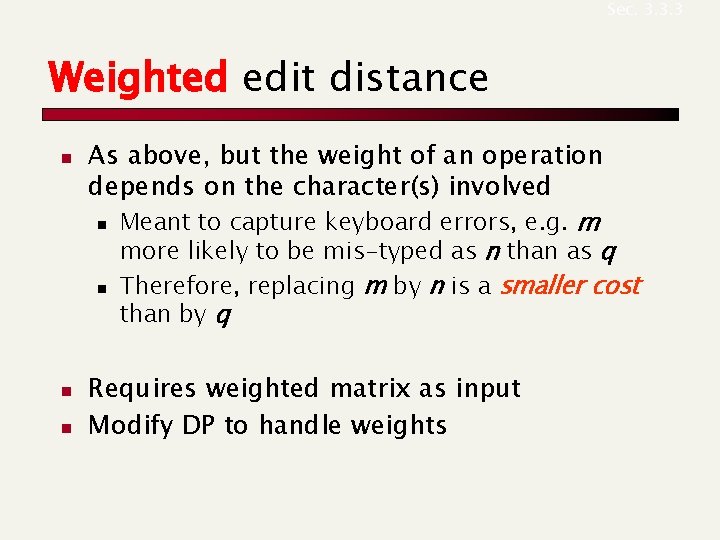

Ch. 3 This lecture
- Dictionary data structures
  - Exact search
  - Prefix search
- “Tolerant” retrieval
  - Edit-distance queries
  - Wild-card queries
  - Spelling correction
  - Soundex

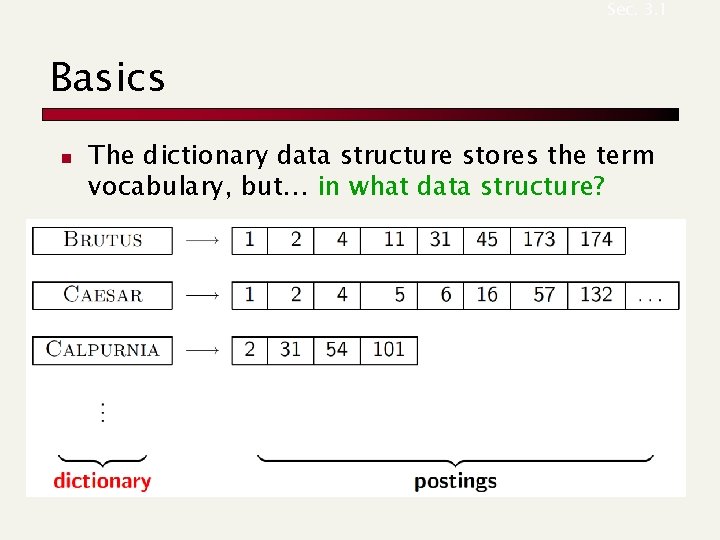

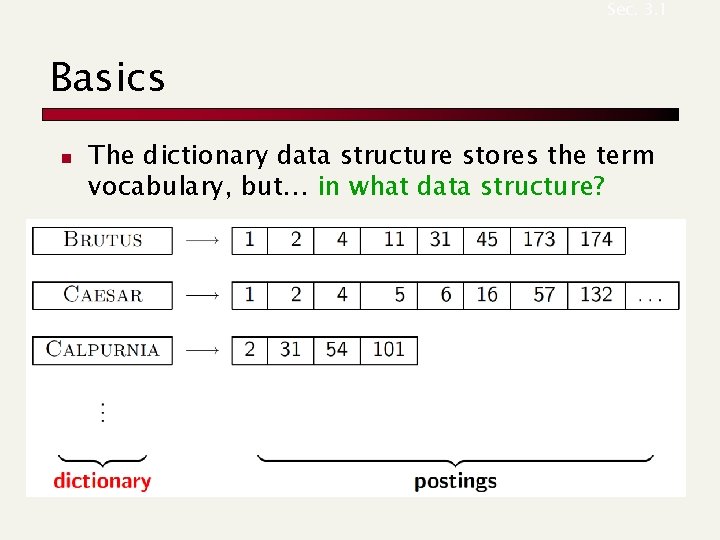

Sec. 3.1 Basics
- The dictionary data structure stores the term vocabulary, but… in what data structure?

![Sec. 3.1 A naïve dictionary](https://slidetodoc.com/presentation_image/2525070015d8c0fe0ca99b3cd89cb8ff/image-4.jpg)

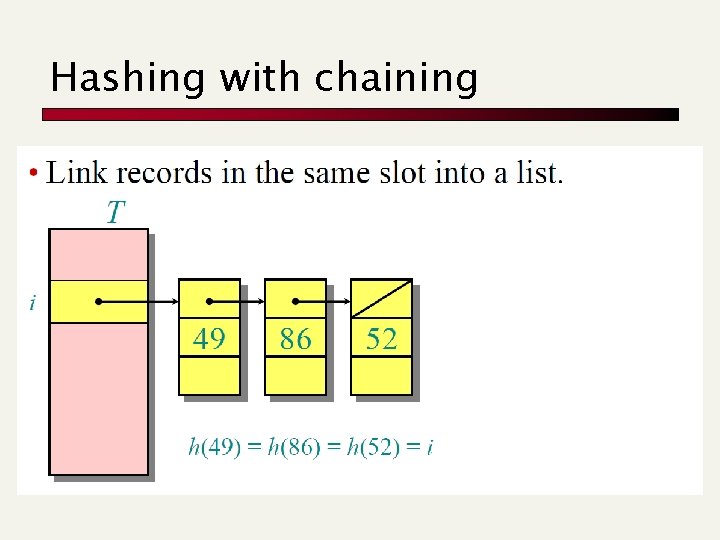

Sec. 3.1 A naïve dictionary
- An array of struct:

| Field | Size |
|---|---|
| char[20] | 20 bytes |
| int | 4/8 bytes |
| Postings * | 4/8 bytes |

- How do we store a dictionary in memory efficiently?
- How do we quickly look up elements at query time?

Sec. 3.1 Dictionary data structures
- Two main choices:
  - Hash table
  - Tree or trie
- Some IR systems use hashes, some trees/tries

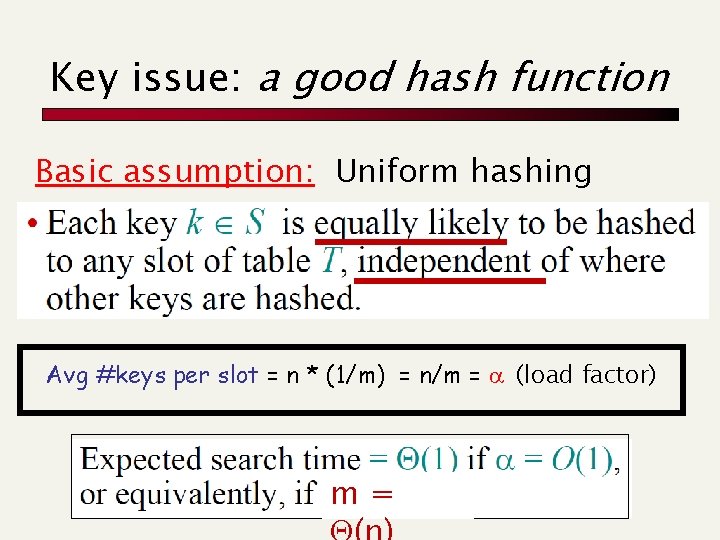

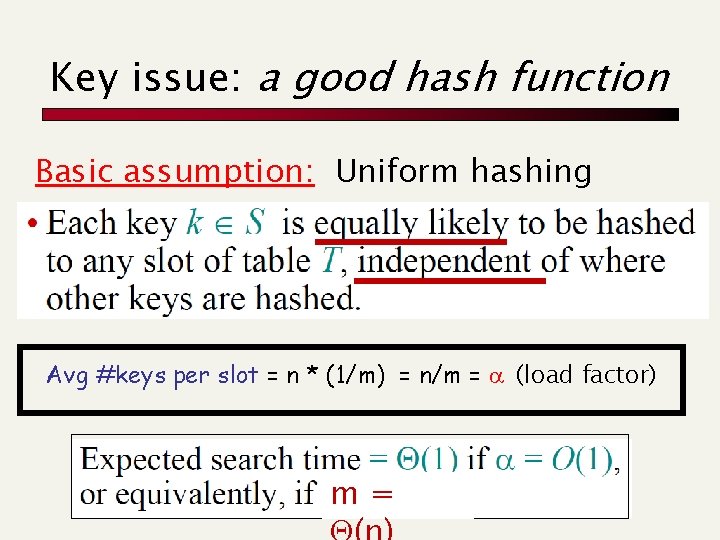

Hashing with chaining

- Key issue: a good hash function
- Basic assumption: uniform hashing
- Avg #keys per slot = n · (1/m) = n/m = α (load factor), where n = number of keys and m = number of slots
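As a minimal sketch of hashing with chaining, the class below keeps one Python list per slot and reports the load factor n/m. The class name `ChainedHashTable` and its methods are illustrative assumptions, and Python's built-in `hash()` merely stands in for the "good hash function" the slide asks for.

```python
class ChainedHashTable:
    """Hash table with chaining: each slot holds a list of (key, value) pairs."""

    def __init__(self, m=8):
        self.m = m                              # number of slots
        self.n = 0                              # number of stored keys
        self.slots = [[] for _ in range(m)]

    def _slot(self, key):
        # Assumption: the built-in hash() plays the role of a good hash function.
        return hash(key) % self.m

    def put(self, key, value):
        chain = self.slots[self._slot(key)]
        for i, (k, _) in enumerate(chain):
            if k == key:                        # key already present: overwrite
                chain[i] = (key, value)
                return
        chain.append((key, value))
        self.n += 1

    def get(self, key, default=None):
        # Cost is proportional to the chain length, n/m on average
        # under the uniform hashing assumption.
        for k, v in self.slots[self._slot(key)]:
            if k == key:
                return v
        return default

    def load_factor(self):
        return self.n / self.m


table = ChainedHashTable(m=4)
for doc_freq, term in enumerate(["abaco", "box", "paolo", "patrizio", "pippo", "zoo"]):
    table.put(term, doc_freq)
print(table.load_factor())   # 6 keys over 4 slots -> 1.5
```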

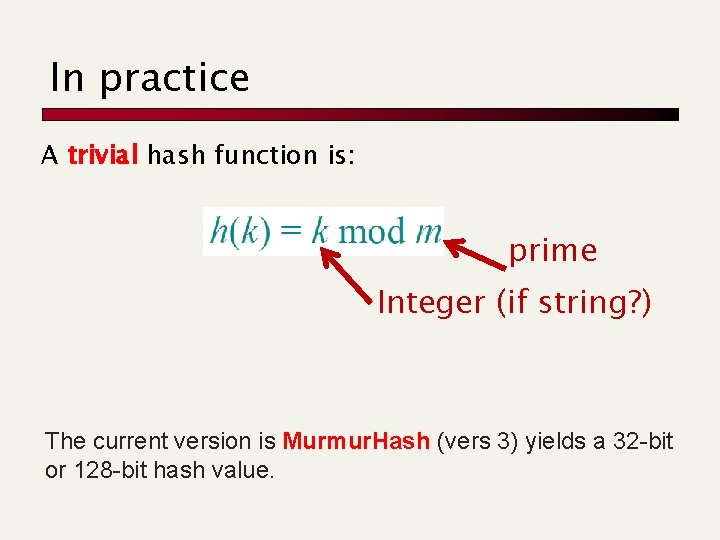

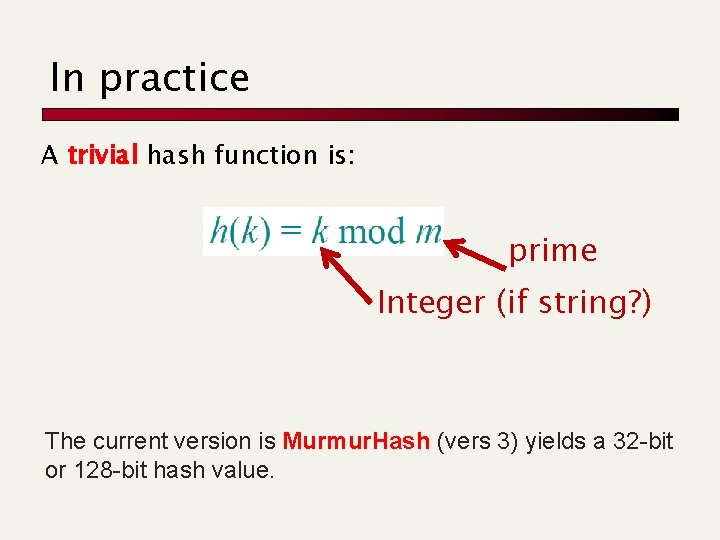

In practice
- A trivial hash function reduces an integer key modulo a prime (but what if the key is a string?)
- The current version, MurmurHash3, yields a 32-bit or 128-bit hash value.

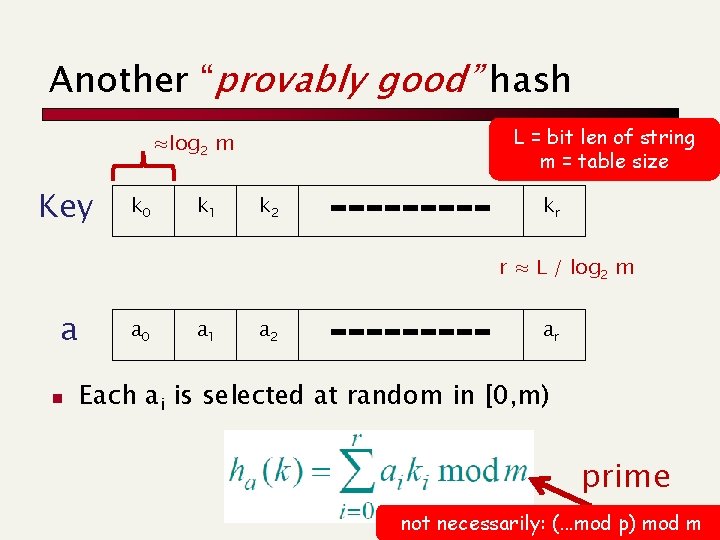

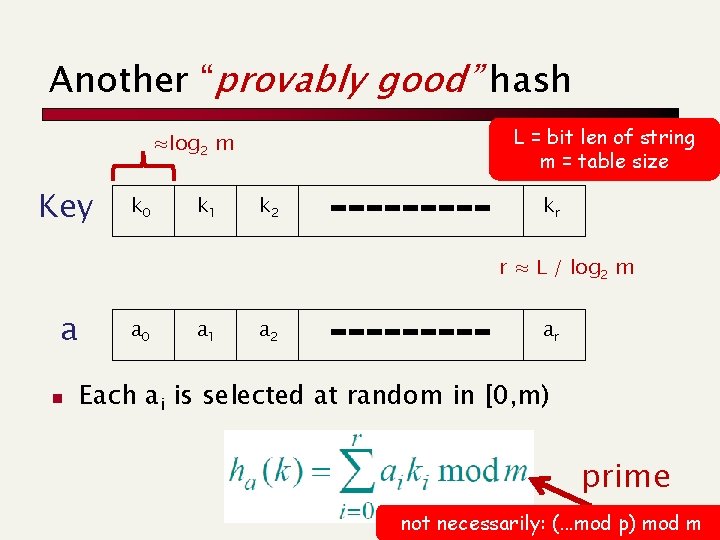

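Another “provably good” hash
- L = bit length of the string, m = table size (prime)
- Split the key k into chunks $k_0, k_1, k_2, \ldots, k_r$ of $\approx \log_2 m$ bits each, so $r \approx L / \log_2 m$
- Take a vector $a = (a_0, a_1, a_2, \ldots, a_r)$, where each $a_i$ is selected at random in $[0, m)$
- m need not be prime: use (… mod p) mod m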
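The scheme above can be sketched as follows, assuming the standard dot-product form $h_a(k) = \sum_i a_i k_i \bmod m$ (the formula itself did not survive in the slide text, so this form is an assumption). The function name `make_universal_hash` and the `max_bits` parameter are hypothetical, and the sketch assumes m is prime.

```python
import random

def make_universal_hash(m, max_bits, rng=None):
    """Return h(key) = (sum_i a_i * k_i) mod m for byte-string keys.

    Assumptions: m is a prime >= 2, and keys are at most max_bits long.
    """
    rng = rng or random.Random()
    chunk_bits = m.bit_length() - 1             # floor(log2 m): every chunk is < m
    num_chunks = -(-max_bits // chunk_bits)     # ceil(max_bits / chunk_bits)
    a = [rng.randrange(m) for _ in range(num_chunks)]   # each a_i random in [0, m)
    mask = (1 << chunk_bits) - 1

    def h(key: bytes) -> int:
        if 8 * len(key) > max_bits:
            raise ValueError("key longer than max_bits")
        # Note: leading zero bytes are ignored by this integer encoding.
        k = int.from_bytes(key, "big")
        total = 0
        for a_i in a:                           # consume the key chunk by chunk
            total += a_i * (k & mask)
            k >>= chunk_bits
        return total % m

    return h


h = make_universal_hash(m=101, max_bits=160, rng=random.Random(42))
slot = h(b"judgment")                           # some slot in [0, 101)
```

Drawing a fresh vector `a` gives a different function from the same family, which is what a rehash would do.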

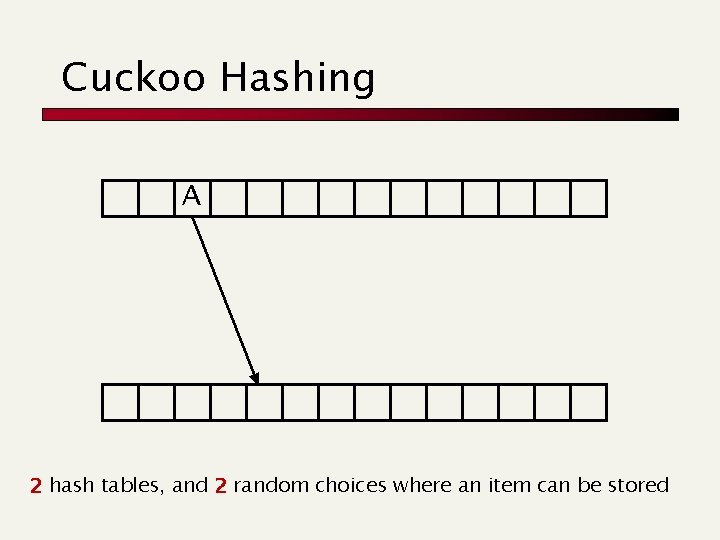

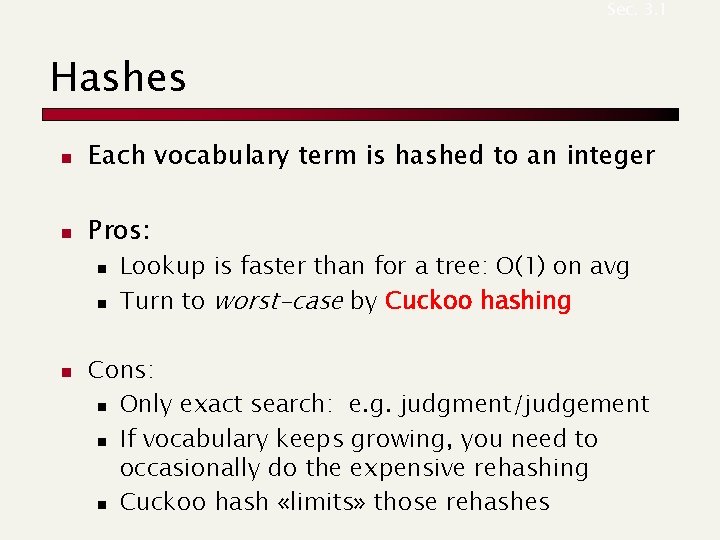

Sec. 3.1 Hashes
- Each vocabulary term is hashed to an integer
- Pros:
  - Lookup is faster than for a tree: O(1) on average
  - Turned into worst-case O(1) by Cuckoo hashing
- Cons:
  - Only exact search: e.g. judgment/judgement
  - If the vocabulary keeps growing, you need to occasionally do the expensive rehashing
  - Cuckoo hashing «limits» those rehashes

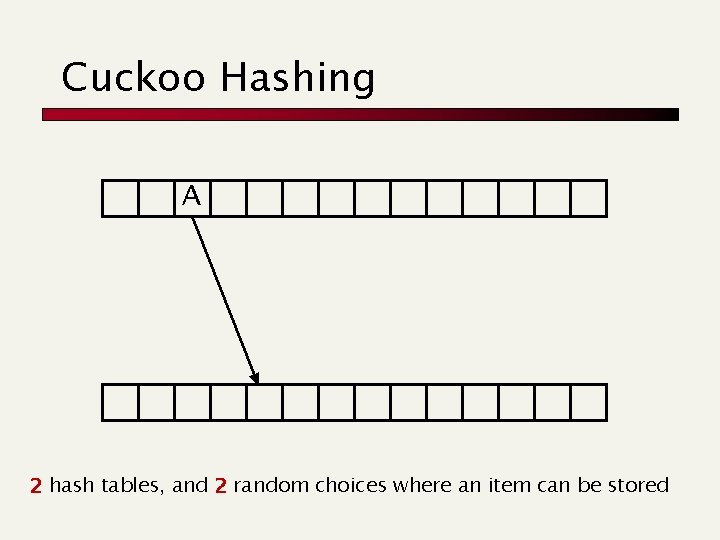

Cuckoo Hashing
- 2 hash tables, and 2 random choices where an item can be stored

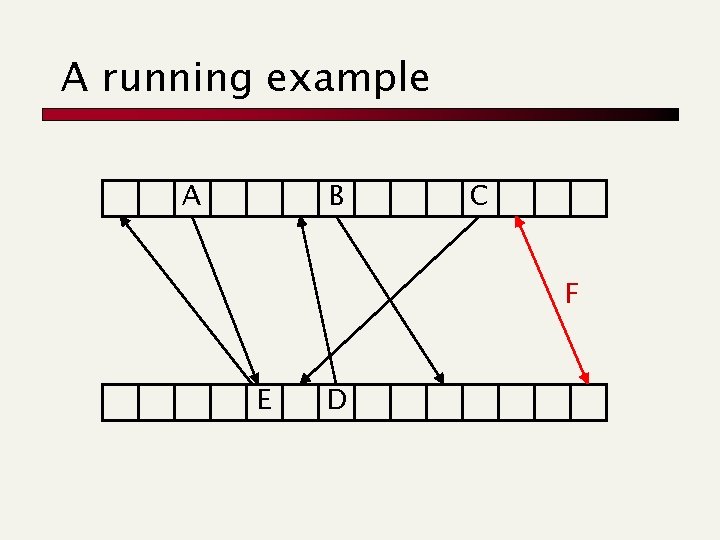

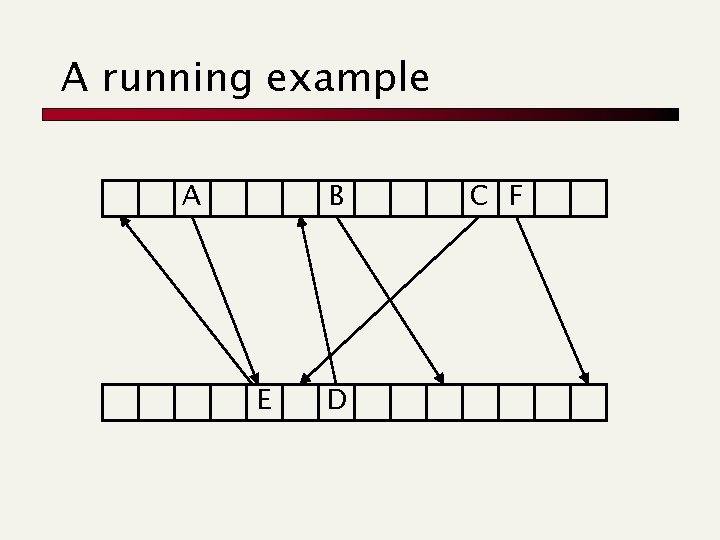

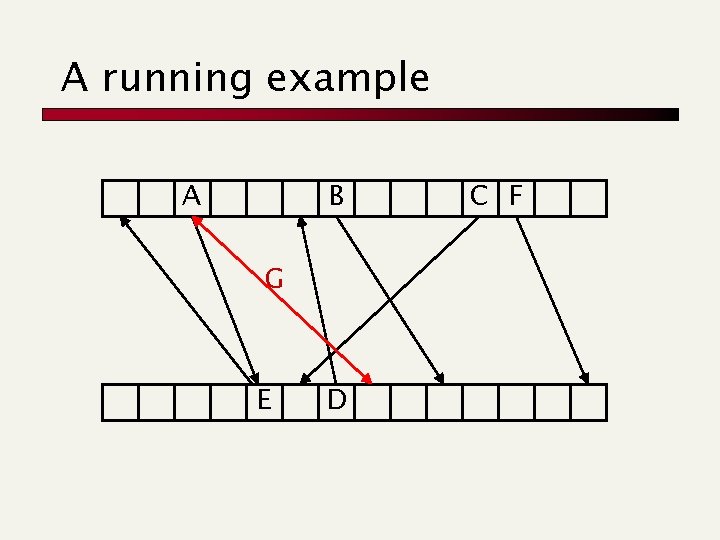

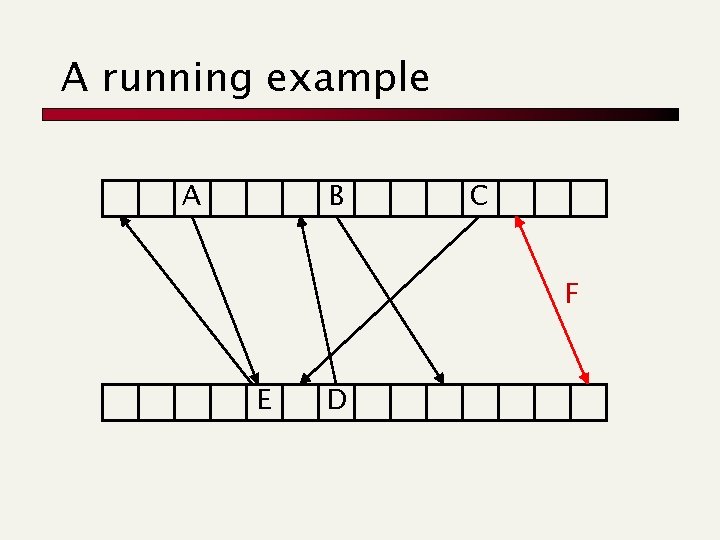

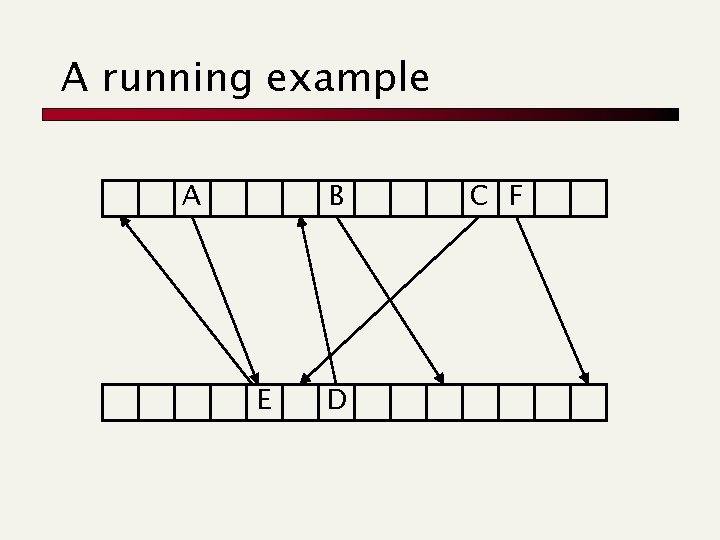

A running example (figure: the cells hold keys A, B, C, F, E, D)

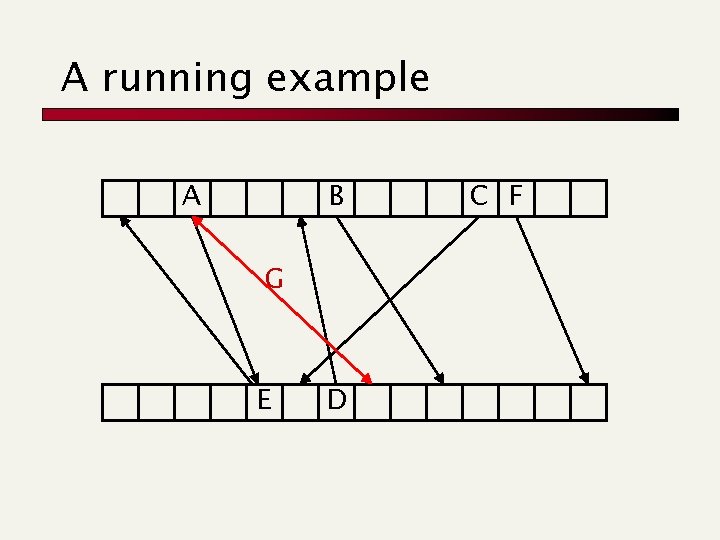

A running example (figure: the cells hold keys A, B, E, D, C, F)

A running example (figure: the cells hold keys A, B, G, E, D, C, F)

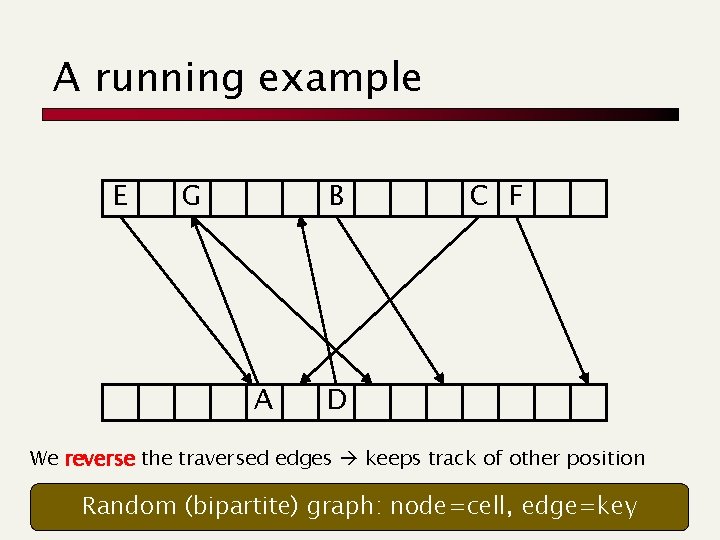

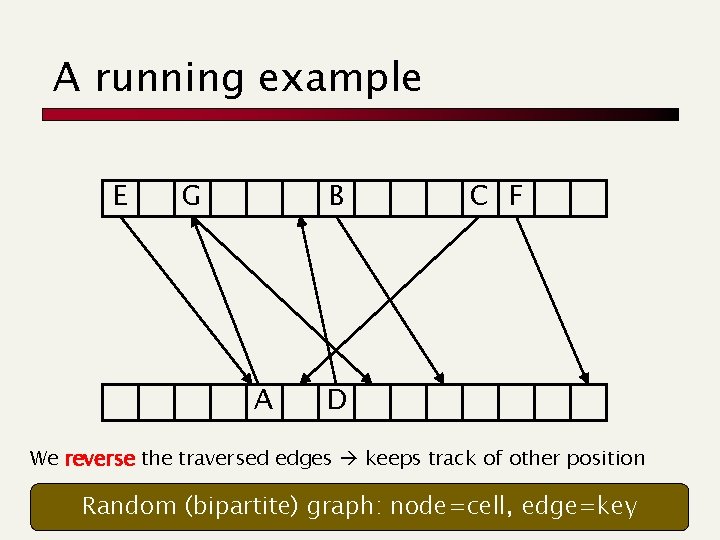

A running example (figure: the cells hold keys E, G, B, A, C, F, D)
- Random (bipartite) graph: node = cell, edge = key
- Each edge keeps track of the key's other position
- We reverse the traversed edges

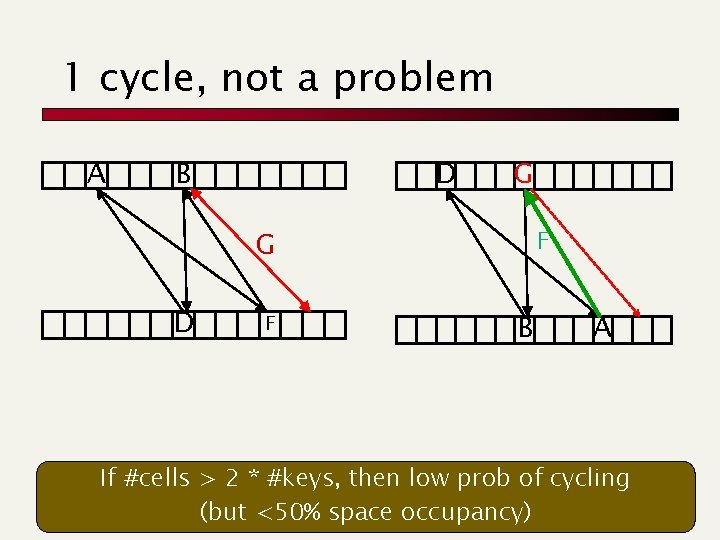

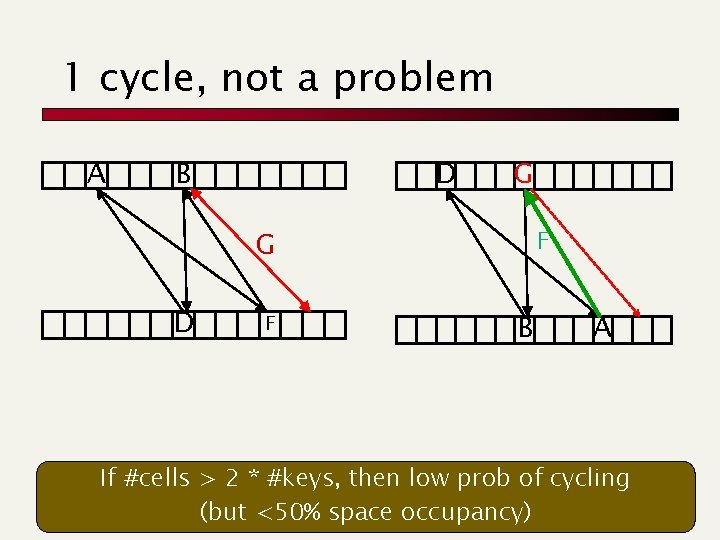

1 cycle, not a problem (figure: a cycle through the cells of keys A, B, D, F, G)
- If #cells > 2 · #keys, then the probability of cycling is low (but space occupancy is < 50%)

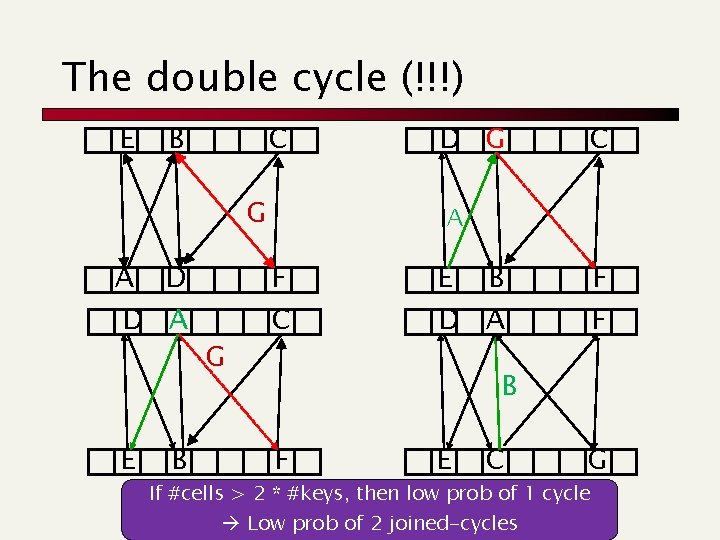

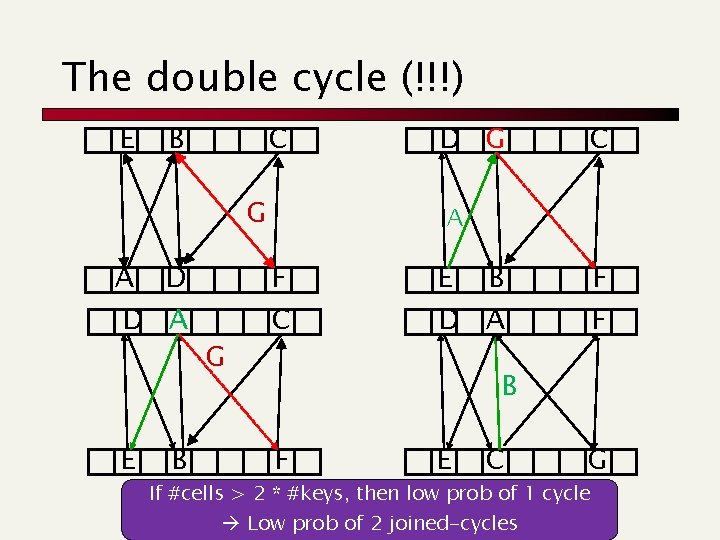

The double cycle (!!!) (figure: two joined cycles through the cells of keys A to G)
- If #cells > 2 · #keys, then the probability of 1 cycle is low
- Hence the probability of 2 joined cycles is low
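A minimal sketch of cuckoo insertion with two tables follows. The class name `CuckooHashTable`, the `max_kicks` bound used to detect a probable cycle, the salted use of Python's `hash()`, and the choice to double the tables on rehash are all assumptions of this sketch, not details taken from the slides.

```python
import random

class CuckooHashTable:
    """Set of keys stored in 2 tables; each key has one candidate cell per table."""

    def __init__(self, cells_per_table=8, max_kicks=32, seed=0):
        self.size = cells_per_table
        self.max_kicks = max_kicks              # eviction bound before giving up
        self.rng = random.Random(seed)
        self.tables = [[None] * self.size, [None] * self.size]
        self._new_salts()

    def _new_salts(self):
        # Assumption: salting hash() emulates drawing two random hash functions.
        self.salts = [self.rng.getrandbits(64), self.rng.getrandbits(64)]

    def _pos(self, which, key):
        return hash((self.salts[which], key)) % self.size

    def __contains__(self, key):
        # Worst-case lookup: exactly two cells are probed.
        return any(self.tables[t][self._pos(t, key)] == key for t in (0, 1))

    def insert(self, key):
        if key in self:
            return
        which = 0
        for _ in range(self.max_kicks):
            pos = self._pos(which, key)
            evicted = self.tables[which][pos]
            self.tables[which][pos] = key
            if evicted is None:
                return
            key = evicted                       # evicted key moves to its other table
            which = 1 - which
        self._rehash(key)                       # probable cycle: rebuild everything

    def _rehash(self, pending):
        keys = [k for t in self.tables for k in t if k is not None] + [pending]
        self.size *= 2                          # design choice of this sketch
        self.tables = [[None] * self.size, [None] * self.size]
        self._new_salts()
        for k in keys:
            self.insert(k)


terms = [f"term{i}" for i in range(50)]
cuckoo = CuckooHashTable(cells_per_table=4, max_kicks=8, seed=1)
for term in terms:
    cuckoo.insert(term)
```

Lookups never follow an eviction path, which is why they stay at two probes regardless of how the insertions went.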

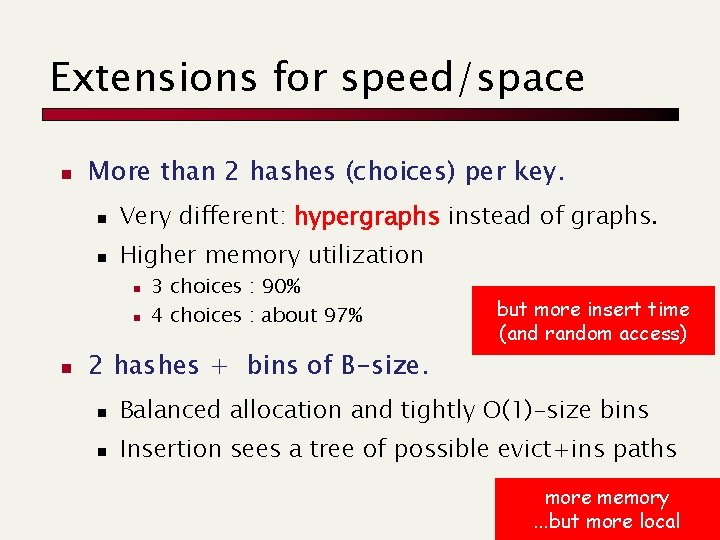

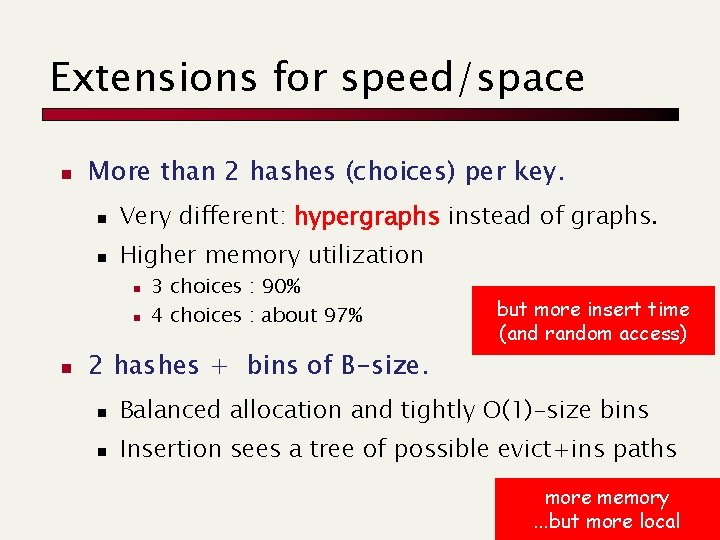

Extensions for speed/space
- More than 2 hashes (choices) per key.
  - Very different: hypergraphs instead of graphs.
  - Higher memory utilization
    - 3 choices: 90%
    - 4 choices: about 97%
  - but more insert time (and random access)
- 2 hashes + bins of B-size.
  - Balanced allocation and tightly O(1)-size bins
  - Insertion sees a tree of possible evict+ins paths
  - more memory... but more local

Prefix search

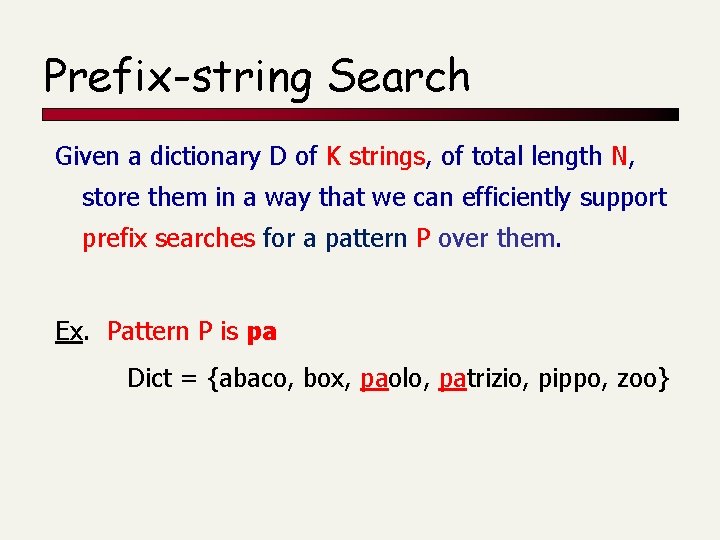

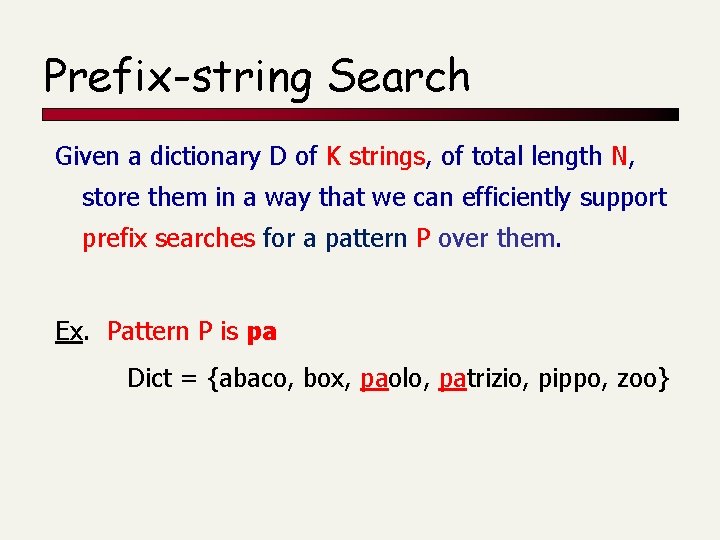

Prefix-string Search
- Given a dictionary D of K strings, of total length N, store them in a way that we can efficiently support prefix searches for a pattern P over them.
- Ex. Pattern P is pa, Dict = {abaco, box, paolo, patrizio, pippo, zoo}
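As a baseline for the problem stated above (not a structure named on the slide), a sorted array already supports prefix search with two binary searches, because all strings sharing a prefix are contiguous in sorted order. The function name `prefix_range` is hypothetical, and the sketch assumes no dictionary string contains the code point U+10FFFF.

```python
from bisect import bisect_left

def prefix_range(sorted_dict, p):
    """Return all strings in the sorted list that start with p."""
    lo = bisect_left(sorted_dict, p)
    # Assumption: U+10FFFF sorts after any character used in the dictionary,
    # so p + U+10FFFF is an upper bound for every string with prefix p.
    hi = bisect_left(sorted_dict, p + chr(0x10FFFF))
    return sorted_dict[lo:hi]


D = sorted(["abaco", "box", "paolo", "patrizio", "pippo", "zoo"])
print(prefix_range(D, "pa"))   # ['paolo', 'patrizio']
```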

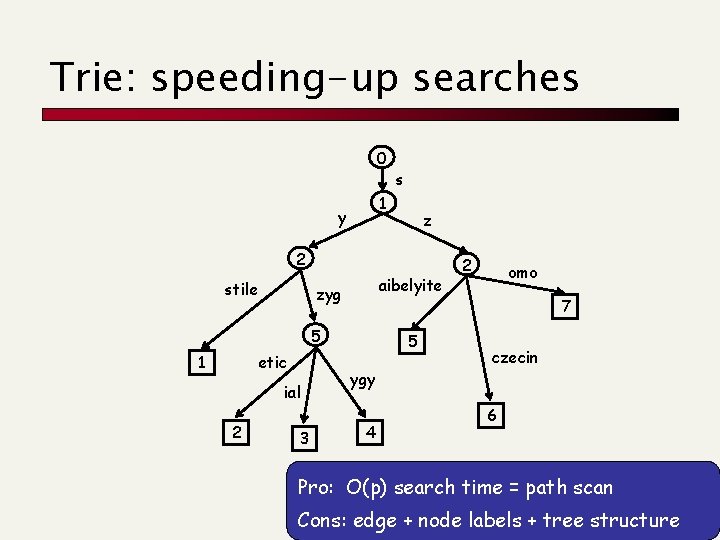

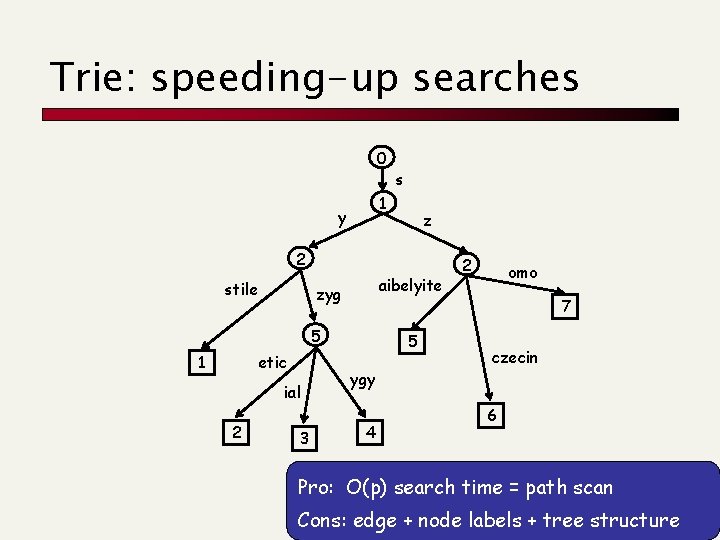

Trie: speeding-up searches (figure: compacted trie with edge labels s, y, z, stile, aibelyite, zyg, etic, ial, omo, czecin, ygy)
- Pro: O(p) search time = path scan
- Cons: edge + node labels + tree structure

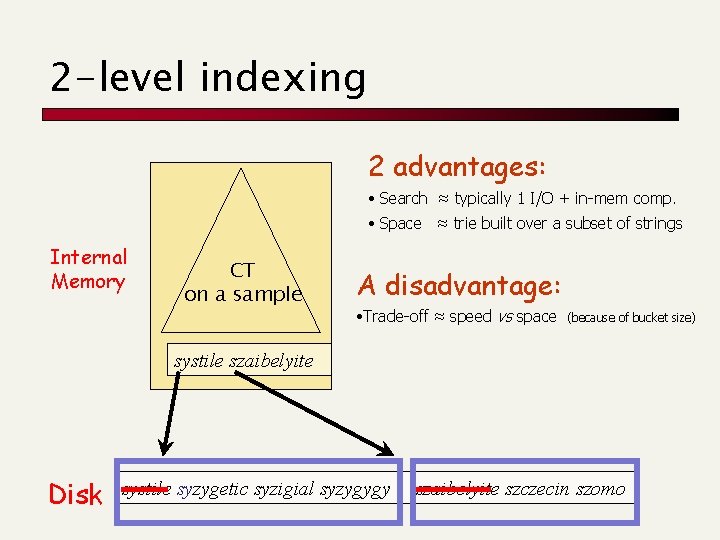

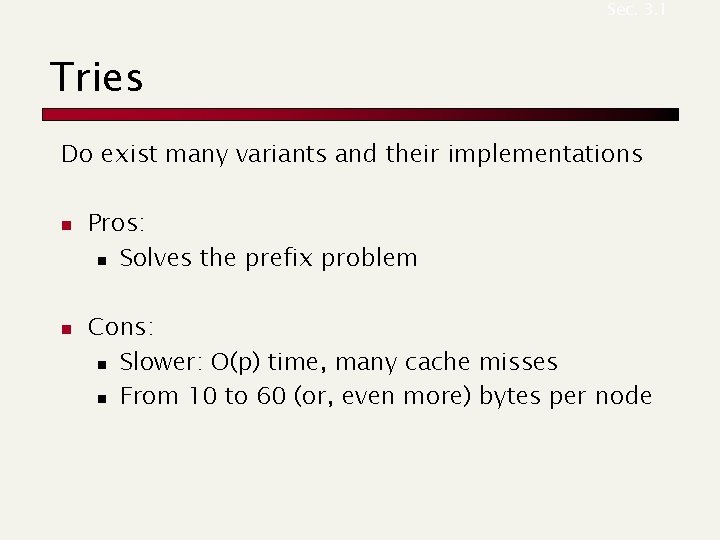

Sec. 3.1 Tries
- Many variants and implementations exist
- Pros:
  - Solves the prefix problem
- Cons:
  - Slower: O(p) time, many cache misses
  - From 10 to 60 (or even more) bytes per node
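The prefix search over a trie can be sketched as below. This is a plain (uncompacted) trie with one character per edge, chosen for brevity; the slides show a compacted variant. The names `TrieNode`, `Trie` and `with_prefix` are illustrative assumptions.

```python
class TrieNode:
    __slots__ = ("children", "is_word")

    def __init__(self):
        self.children = {}      # one outgoing edge per character
        self.is_word = False    # True if a dictionary string ends here


class Trie:
    def __init__(self, words=()):
        self.root = TrieNode()
        for word in words:
            self.insert(word)

    def insert(self, word):
        node = self.root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True

    def with_prefix(self, p):
        """Scan the path spelled by p (O(p) steps), then collect the subtree."""
        node = self.root
        for ch in p:
            node = node.children.get(ch)
            if node is None:
                return []
        found = []
        stack = [(node, p)]
        while stack:
            current, spelled = stack.pop()
            if current.is_word:
                found.append(spelled)
            for ch, child in current.children.items():
                stack.append((child, spelled + ch))
        return sorted(found)


trie = Trie(["systile", "syzygetic", "syzygial", "syzygy",
             "szaibelyite", "szczecin", "szomo"])
print(trie.with_prefix("syz"))   # ['syzygetic', 'syzygial', 'syzygy']
```

The per-node dictionary in this sketch is one concrete source of the per-node space overhead listed among the cons.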

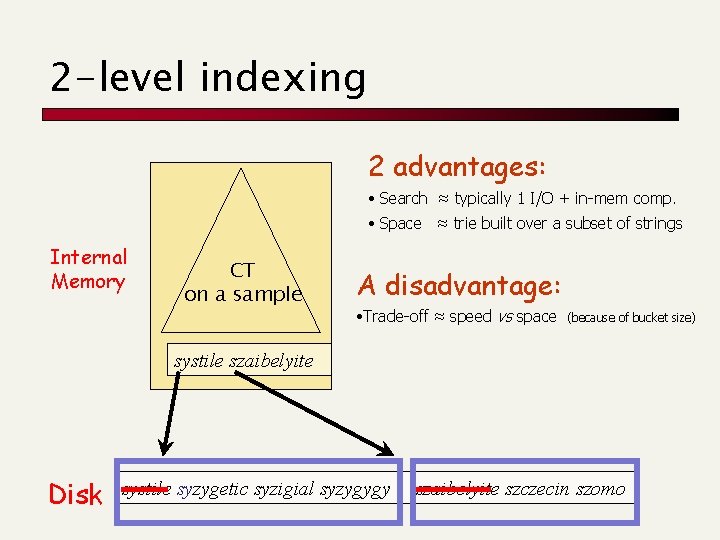

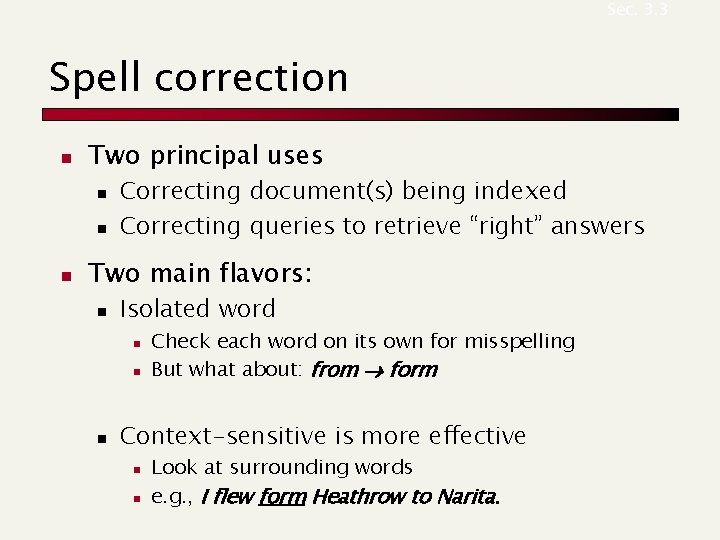

2-level indexing
- Internal memory: CT on a sample (systile, szaibelyite)
- Disk: systile, syzygetic, syzygial, syzygy, szaibelyite, szczecin, szomo

2 advantages:
- Search ≈ typically 1 I/O + in-mem comp.
- Space ≈ trie built over a subset of strings

A disadvantage:
- Trade-off ≈ speed vs space (because of bucket size)
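A minimal sketch of the two-level scheme, under simplifying assumptions: a sorted Python list of bucket heads stands in for the in-memory trie on the sample, and plain lists stand in for disk buckets. The function names `build_two_level` and `lookup` and the `bucket_size` parameter are hypothetical.

```python
from bisect import bisect_right

def build_two_level(sorted_strings, bucket_size):
    """Split the sorted dictionary into buckets; keep each bucket's first string in memory."""
    buckets = [sorted_strings[i:i + bucket_size]
               for i in range(0, len(sorted_strings), bucket_size)]
    sample = [bucket[0] for bucket in buckets]   # the in-memory level
    return sample, buckets

def lookup(sample, buckets, term):
    """In-memory search picks one bucket; scanning it models the single I/O."""
    i = bisect_right(sample, term) - 1
    if i < 0:
        return False                             # term precedes every bucket
    return term in buckets[i]


words = ["systile", "syzygetic", "syzygial", "syzygy",
         "szaibelyite", "szczecin", "szomo"]
sample, buckets = build_two_level(words, bucket_size=4)
print(sample)   # ['systile', 'szaibelyite']
```

A larger `bucket_size` shrinks the in-memory sample but lengthens the scan inside the bucket, which is the speed versus space trade-off noted above.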

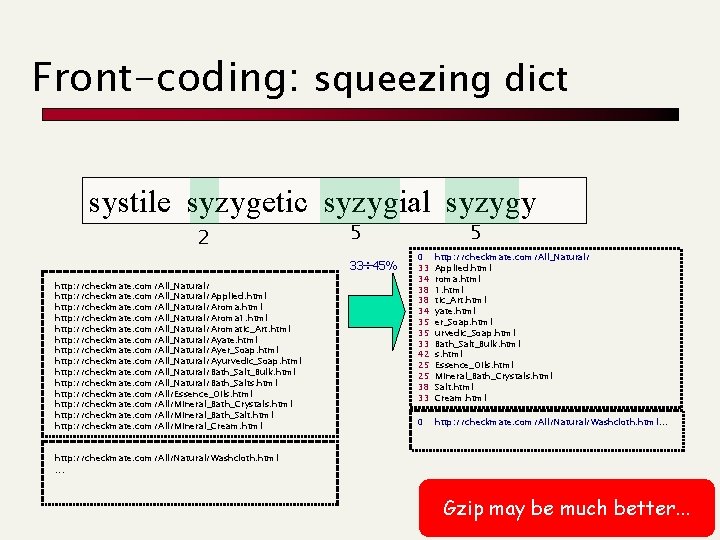

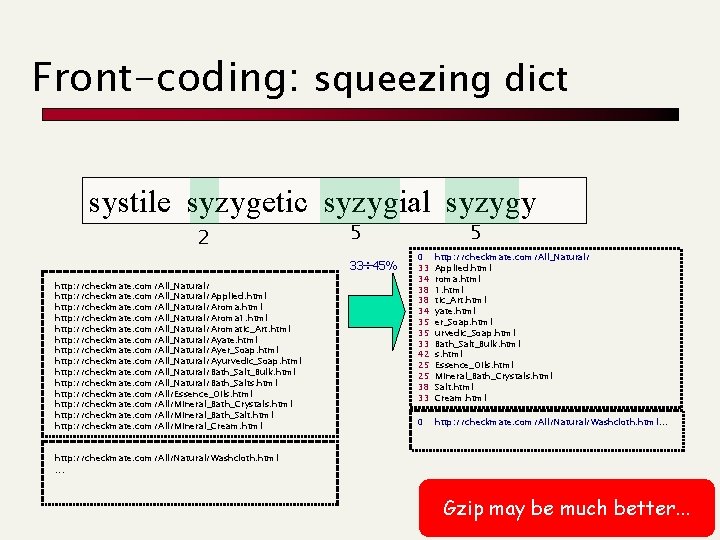

Front-coding: squeezing dict
- Each string is stored as the length of the prefix it shares with the previous string, followed by the remaining suffix.
- systile, syzygetic, syzygial, syzygy becomes: systile, 2 zygetic, 5 ial, 5 y
- Figure annotated on the slide for the URL example: 45%
- Gzip may be much better...

Uncompressed URLs:
- http://checkmate.com/All_Natural/
- http://checkmate.com/All_Natural/Applied.html
- http://checkmate.com/All_Natural/Aroma.html
- http://checkmate.com/All_Natural/Aroma1.html
- http://checkmate.com/All_Natural/Aromatic_Art.html
- http://checkmate.com/All_Natural/Ayate.html
- http://checkmate.com/All_Natural/Ayer_Soap.html
- http://checkmate.com/All_Natural/Ayurvedic_Soap.html
- http://checkmate.com/All_Natural/Bath_Salt_Bulk.html
- http://checkmate.com/All_Natural/Bath_Salts.html
- http://checkmate.com/All/Essence_Oils.html
- http://checkmate.com/All/Mineral_Bath_Crystals.html
- http://checkmate.com/All/Mineral_Bath_Salt.html
- http://checkmate.com/All/Mineral_Cream.html
- http://checkmate.com/All/Natural/Washcloth.html
- ...

Front-coded URLs:

| Shared prefix length | Remaining suffix |
|---|---|
| 0 | http://checkmate.com/All_Natural/ |
| 33 | Applied.html |
| 34 | roma.html |
| 38 | 1.html |
| 38 | tic_Art.html |
| 34 | yate.html |
| 35 | er_Soap.html |
| 35 | urvedic_Soap.html |
| 33 | Bath_Salt_Bulk.html |
| 42 | s.html |
| 24 | /Essence_Oils.html |
| 25 | Mineral_Bath_Crystals.html |
| 38 | Salt.html |
| 33 | Cream.html |
| 0 | http://checkmate.com/All/Natural/Washcloth.html |
| ... | ... |
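Front coding can be sketched as a pair of functions: the encoder emits, for each string, the length of the prefix shared with its predecessor plus the remaining suffix, and the decoder rebuilds the strings in order. The names `front_encode` and `front_decode` are illustrative; the output is kept as Python tuples rather than a packed byte layout.

```python
def front_encode(sorted_strings):
    """Encode each string as (shared-prefix length with previous string, suffix)."""
    encoded = []
    prev = ""
    for s in sorted_strings:
        lcp = 0
        limit = min(len(prev), len(s))
        while lcp < limit and prev[lcp] == s[lcp]:
            lcp += 1
        encoded.append((lcp, s[lcp:]))
        prev = s
    return encoded

def front_decode(pairs):
    """Rebuild the strings: keep lcp characters of the previous one, append the suffix."""
    decoded = []
    prev = ""
    for lcp, suffix in pairs:
        prev = prev[:lcp] + suffix
        decoded.append(prev)
    return decoded


words = ["systile", "syzygetic", "syzygial", "syzygy"]
print(front_encode(words))
# [(0, 'systile'), (2, 'zygetic'), (5, 'ial'), (5, 'y')]
```

Decoding is sequential, so reaching a string in the middle of a block means decoding everything before it; this is one reason to restart the coding (shared prefix length 0) at bucket boundaries.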

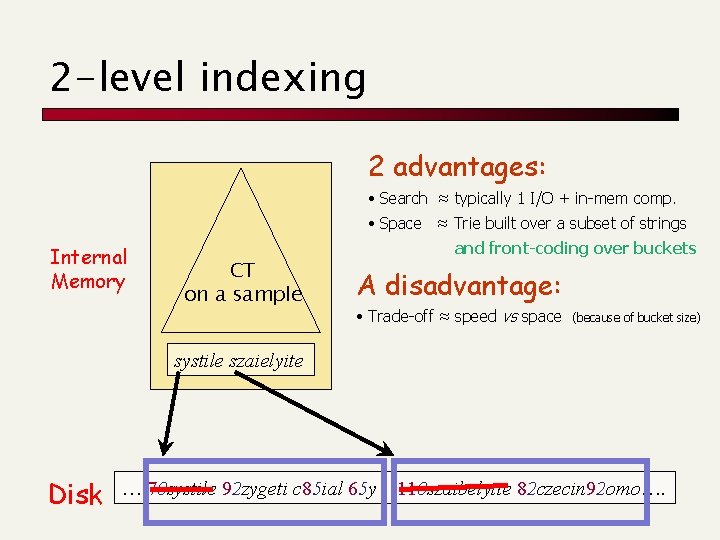

2-level indexing
- Internal memory: CT on a sample (systile, szaibelyite)
- Disk, front-coded buckets: … 70 systile 92 zygetic 85 ial 65 y 110 szaibelyite 82 czecin 92 omo …

2 advantages:
- Search ≈ typically 1 I/O + in-mem comp.
- Space ≈ trie built over a subset of strings and front-coding over buckets

A disadvantage:
- Trade-off ≈ speed vs space (because of bucket size)

Spelling correction

Sec. 3.3 Spell correction
- Two principal uses
  - Correcting document(s) being indexed
  - Correcting queries to retrieve “right” answers
- Two main flavors:
  - Isolated word
    - Check each word on its own for misspelling
    - But what about: from → form
  - Context-sensitive is more effective
    - Look at surrounding words
    - e.g., I flew form Heathrow to Narita.

Sec. 3.3.2 Isolated word correction
- Fundamental premise: there is a lexicon from which the correct spellings come
- Two basic choices for this
  - A standard lexicon such as
    - Webster’s English Dictionary
    - An “industry-specific” lexicon, hand-maintained
  - The lexicon of the indexed corpus
    - E.g., all words on the web
    - All names, acronyms etc. (including the mis-spellings)
    - Mining algorithms to derive the possible corrections

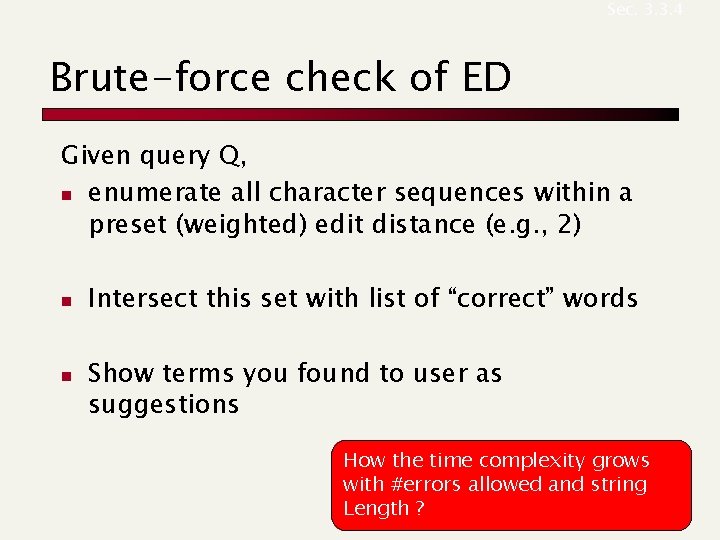

Sec. 3.3.2 Isolated word correction
- Given a lexicon and a character sequence Q, return the words in the lexicon closest to Q
- What’s “closest”?
- We’ll study several measures
  - Edit distance (Levenshtein distance)
  - Weighted edit distance
  - n-gram overlap

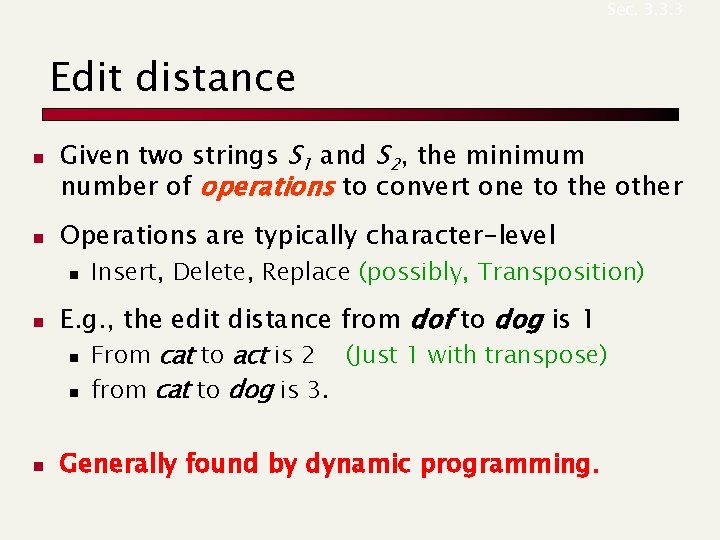

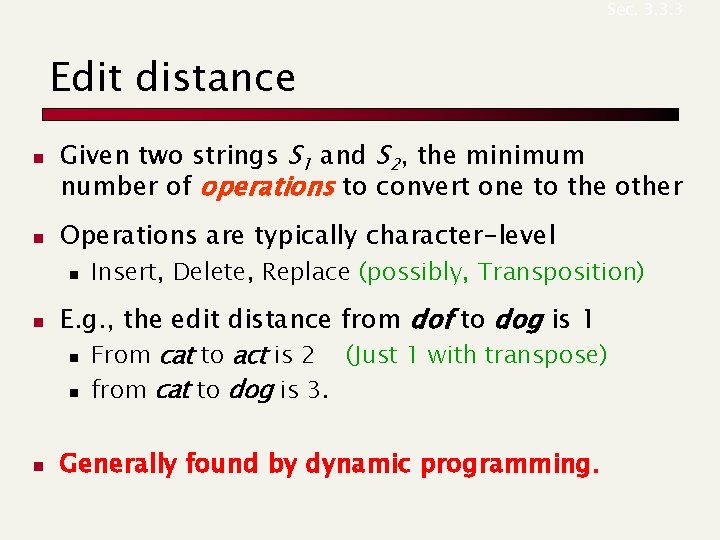

Sec. 3.3.4 Brute-force check of ED
- Given query Q, enumerate all character sequences within a preset (weighted) edit distance (e.g., 2)
- Intersect this set with the list of “correct” words
- Show the terms you found to the user as suggestions
- How does the time complexity grow with the number of errors allowed and the string length?
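The brute-force check can be sketched as below for unweighted edits (insert, delete, replace). The function names `edits1`, `within` and `suggest`, and the restriction to a lowercase ASCII alphabet, are assumptions of this sketch.

```python
import string

def edits1(word, alphabet=string.ascii_lowercase):
    """All strings obtained from word by one insert, delete or replace."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = {a + b[1:] for a, b in splits if b}
    replaces = {a + c + b[1:] for a, b in splits if b for c in alphabet}
    inserts = {a + c + b for a, b in splits for c in alphabet}
    return deletes | replaces | inserts

def within(word, k, alphabet=string.ascii_lowercase):
    """All strings at edit distance <= k from word, built one edit at a time."""
    seen = {word}
    frontier = {word}
    for _ in range(k):
        frontier = {e for w in frontier for e in edits1(w, alphabet)} - seen
        seen |= frontier
    return seen

def suggest(query, lexicon, k=2):
    """Intersect the enumerated candidates with the list of correct words."""
    return sorted(within(query, k) & set(lexicon))


lexicon = ["dog", "cat", "door", "of", "dot"]
print(suggest("dof", lexicon, k=1))   # ['dog', 'dot', 'of']
print(suggest("dof", lexicon, k=2))   # ['dog', 'door', 'dot', 'of']
```

Each extra allowed error multiplies the candidate set by roughly (string length × alphabet size), so the enumeration grows exponentially in the number of errors.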

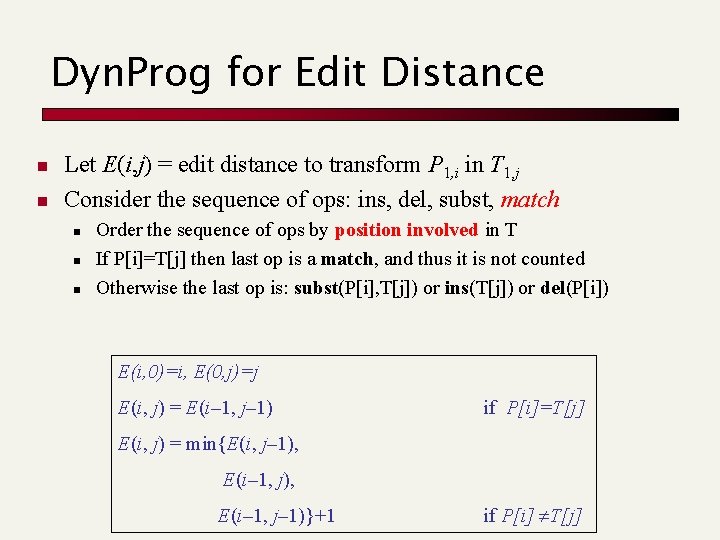

Sec. 3.3.3 Edit distance
- Given two strings S1 and S2, the minimum number of operations to convert one to the other
- Operations are typically character-level
  - Insert, Delete, Replace (possibly, Transposition)
- E.g., the edit distance from dof to dog is 1
  - From cat to act is 2 (just 1 with transpose)
  - From cat to dog is 3.
- Generally found by dynamic programming.

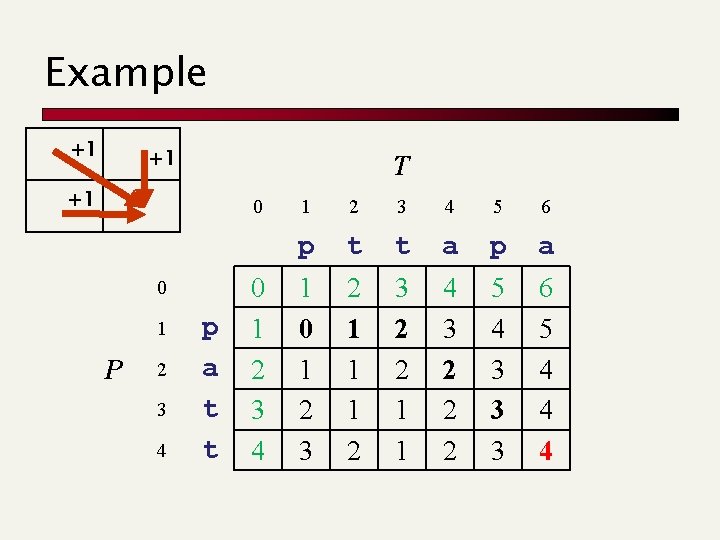

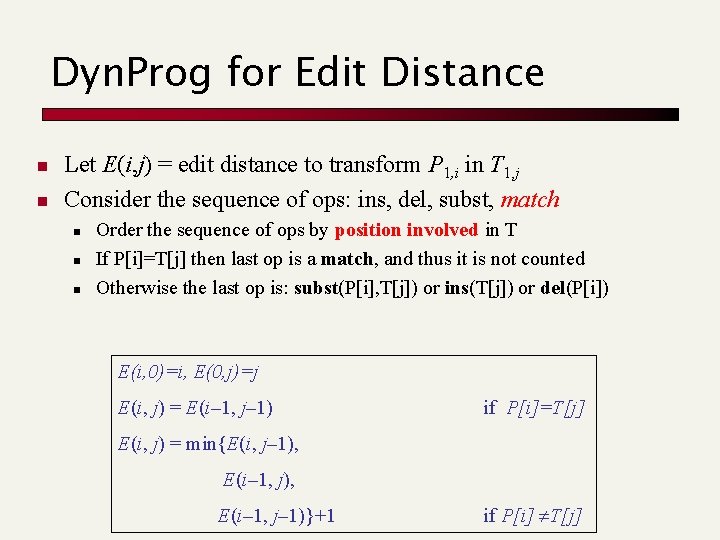

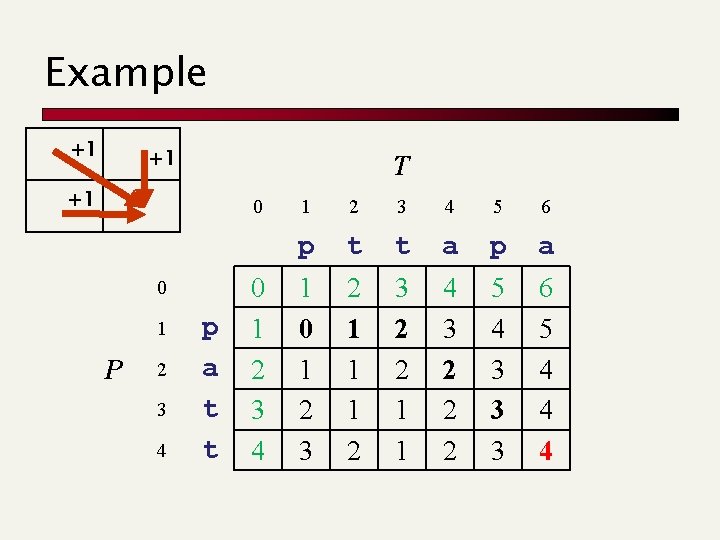

Dyn. Prog for Edit Distance
- Let E(i, j) = edit distance to transform P[1, i] into T[1, j]
- Consider the sequence of ops: ins, del, subst, match
- Order the sequence of ops by the position involved in T
- If P[i] = T[j] then the last op is a match, and thus it is not counted
- Otherwise the last op is: subst(P[i], T[j]) or ins(T[j]) or del(P[i])

$$
E(i,0) = i, \qquad E(0,j) = j
$$

$$
E(i,j) =
\begin{cases}
E(i-1,\,j-1) & \text{if } P[i] = T[j] \\
\min\{E(i,\,j-1),\; E(i-1,\,j),\; E(i-1,\,j-1)\} + 1 & \text{if } P[i] \neq T[j]
\end{cases}
$$

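Example: P = patt (rows), T = pttapa (columns). Each cell is reached from the left (+1), from above (+1), or diagonally (+1, or 0 on a match).

| P \ T |   | p | t | t | a | p | a |
|---|---|---|---|---|---|---|---|
|   | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
| p | 1 | 0 | 1 | 2 | 3 | 4 | 5 |
| a | 2 | 1 | 1 | 2 | 2 | 3 | 4 |
| t | 3 | 2 | 1 | 1 | 2 | 3 | 4 |
| t | 4 | 3 | 2 | 1 | 2 | 3 | 4 |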
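The dynamic program can be sketched as follows, filling the table E row by row and taking, on a mismatch, the cheapest of insertion, deletion and substitution. The function name `edit_distance` is illustrative; strings are 0-indexed in Python, so `p[i - 1]` corresponds to P[i].

```python
def edit_distance(p, t):
    """Unit-cost edit distance between p and t (insert, delete, substitute)."""
    n, m = len(p), len(t)
    E = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        E[i][0] = i                     # delete the first i characters of p
    for j in range(m + 1):
        E[0][j] = j                     # insert the first j characters of t
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            if p[i - 1] == t[j - 1]:
                E[i][j] = E[i - 1][j - 1]            # match: not counted
            else:
                E[i][j] = 1 + min(E[i][j - 1],       # ins(T[j])
                                  E[i - 1][j],       # del(P[i])
                                  E[i - 1][j - 1])   # subst(P[i], T[j])
    return E[n][m]


print(edit_distance("patt", "pttapa"))   # 4
print(edit_distance("dof", "dog"))       # 1
print(edit_distance("cat", "act"))       # 2 (no transposition operation here)
print(edit_distance("cat", "dog"))       # 3
```

Time and space are both O(|P| · |T|); keeping only the previous row would reduce the space to O(|T|).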

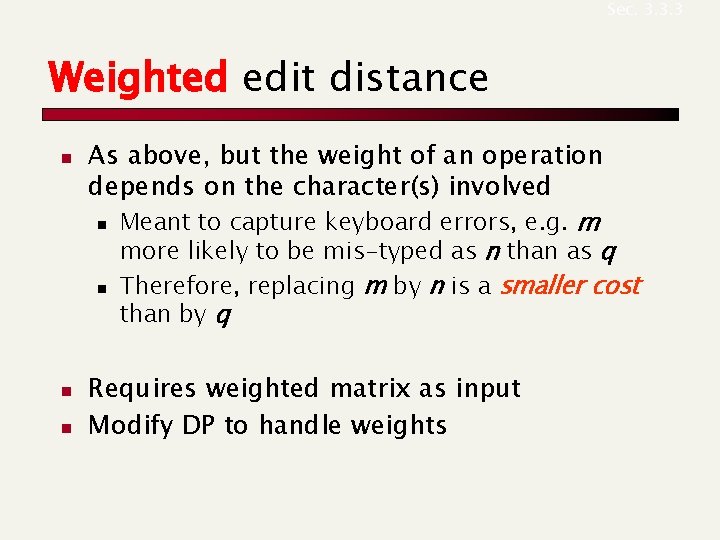

Sec. 3.3.3 Weighted edit distance
- As above, but the weight of an operation depends on the character(s) involved
  - Meant to capture keyboard errors, e.g. m more likely to be mis-typed as n than as q
  - Therefore, replacing m by n is a smaller cost than by q
- Requires weighted matrix as input
- Modify DP to handle weights
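One way to modify the DP for weights is sketched below: the substitution cost comes from a caller-supplied function, which plays the role of the weight matrix. The function names, the fixed insertion and deletion costs, and the example cost values (0.5 for m/n, 1.0 otherwise) are hypothetical choices for illustration only.

```python
def weighted_edit_distance(p, t, subst_cost, ins_cost=1.0, del_cost=1.0):
    """Edit distance where substituting a by b costs subst_cost(a, b) >= 0."""
    n, m = len(p), len(t)
    E = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        E[i][0] = E[i - 1][0] + del_cost
    for j in range(1, m + 1):
        E[0][j] = E[0][j - 1] + ins_cost
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            a, b = p[i - 1], t[j - 1]
            diagonal = 0.0 if a == b else subst_cost(a, b)
            E[i][j] = min(E[i - 1][j - 1] + diagonal,   # match or substitution
                          E[i][j - 1] + ins_cost,       # insertion
                          E[i - 1][j] + del_cost)       # deletion
    return E[n][m]


def keyboard_cost(a, b):
    # Hypothetical weights: m and n are adjacent keys, so confusing them is cheap.
    return 0.5 if {a, b} == {"m", "n"} else 1.0


print(weighted_edit_distance("moon", "noon", keyboard_cost))   # 0.5
print(weighted_edit_distance("moon", "qoon", keyboard_cost))   # 1.0
```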