Deep Learning Neural Network with Memory (2) Hung-yi Lee

- Slides: 22

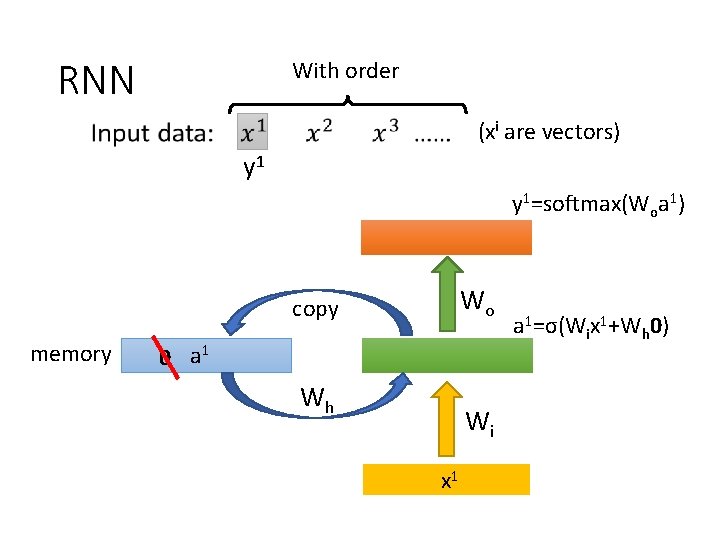

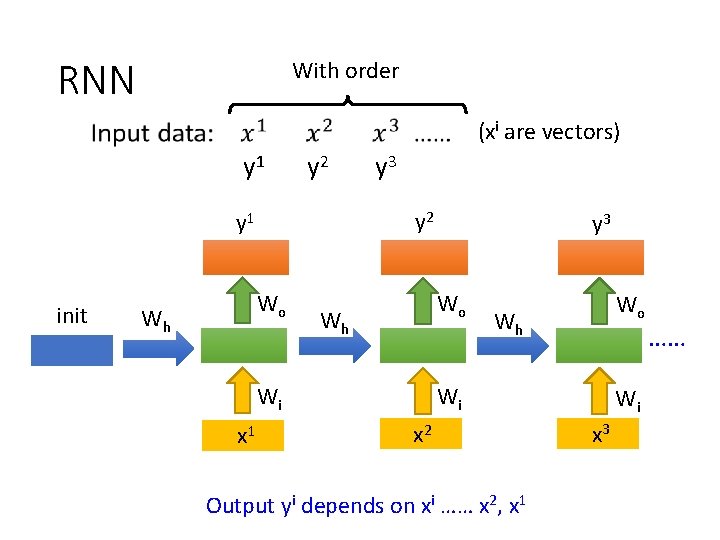

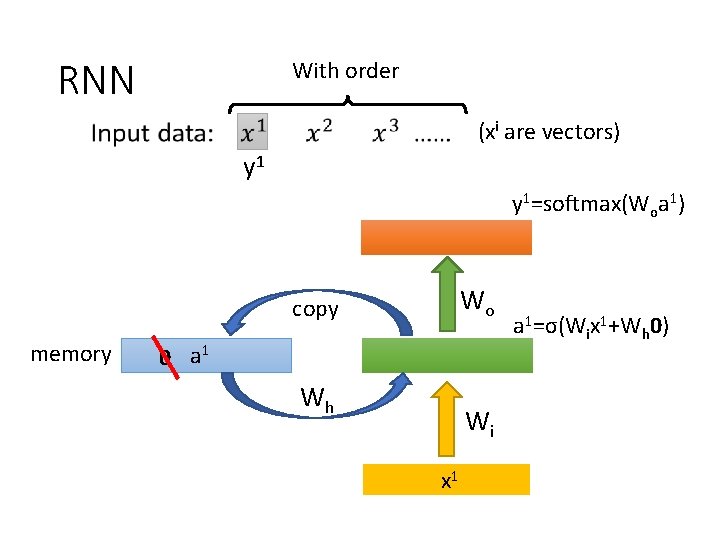

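RNN: the inputs arrive in order ($x^i$ are vectors). At the first step the memory holds 0:

$$a^1 = \sigma(W_i x^1 + W_h \mathbf{0}), \qquad y^1 = \mathrm{softmax}(W_o a^1)$$

$a^1$ is then copied into the memory.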

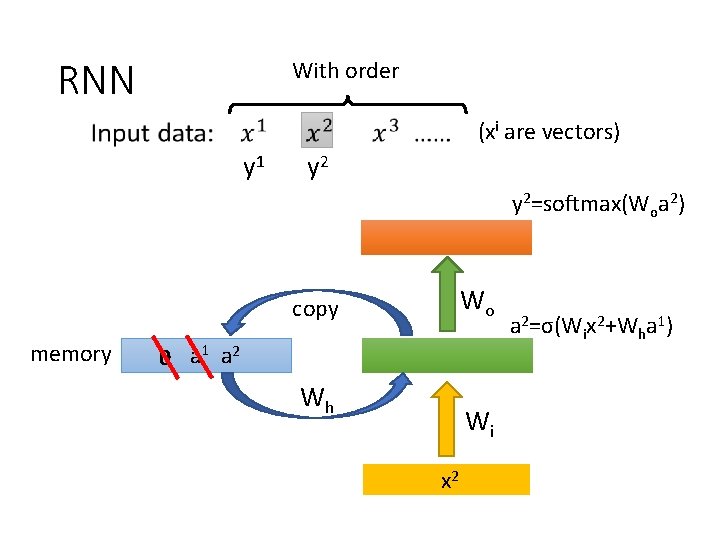

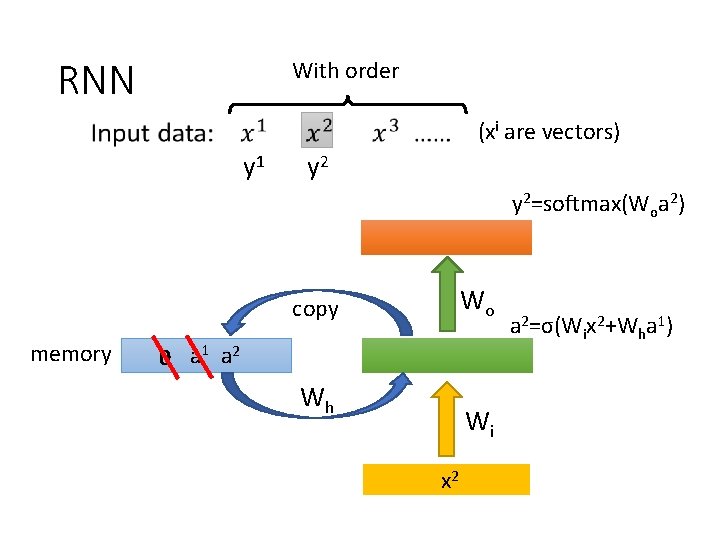

RNN, second step: the memory now holds $a^1$:

$$a^2 = \sigma(W_i x^2 + W_h a^1), \qquad y^2 = \mathrm{softmax}(W_o a^2)$$

$a^2$ is then copied into the memory.

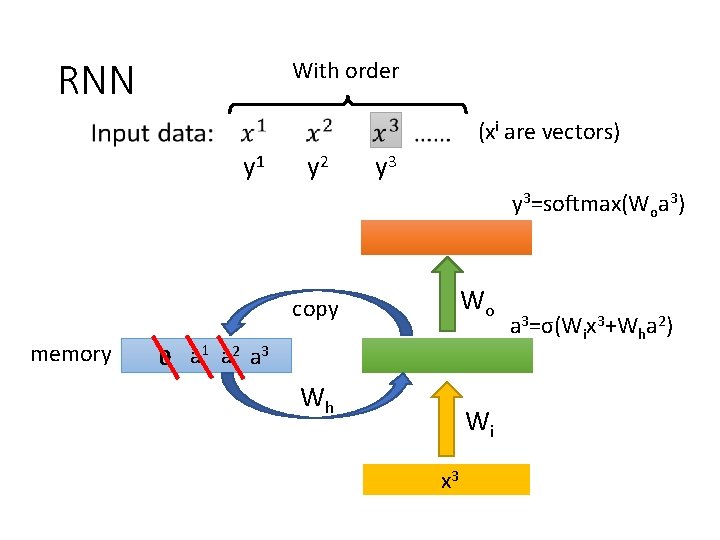

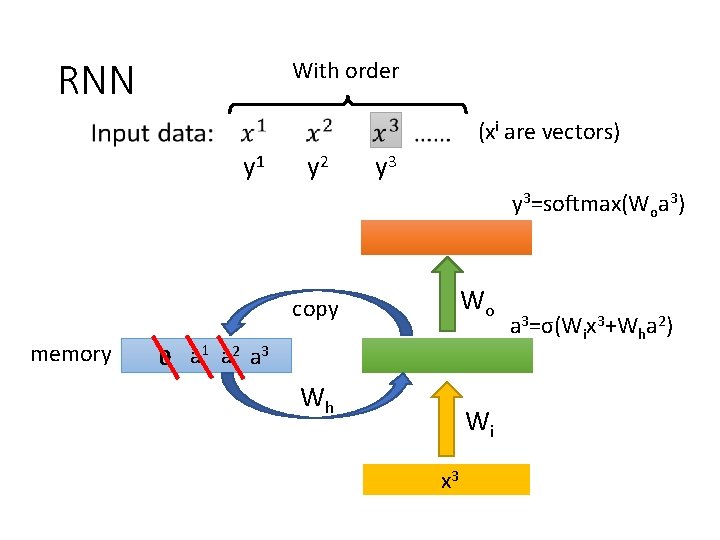

RNN, third step: the memory now holds $a^2$:

$$a^3 = \sigma(W_i x^3 + W_h a^2), \qquad y^3 = \mathrm{softmax}(W_o a^3)$$

$a^3$ is then copied into the memory.

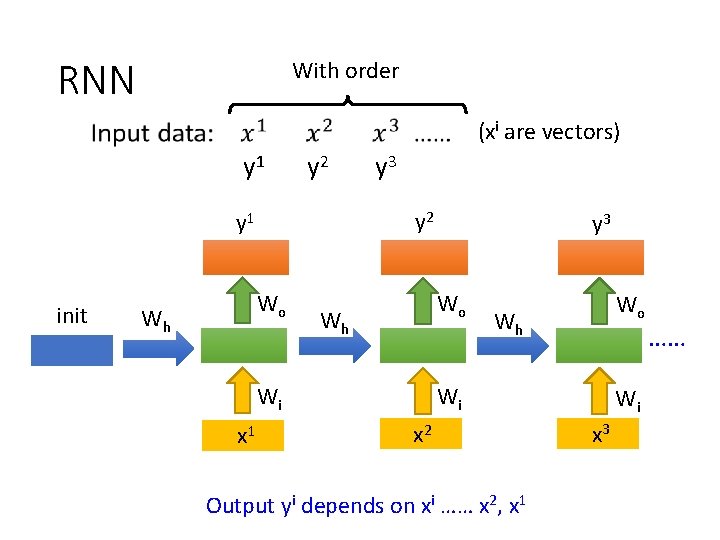

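RNN, unrolled over the ordered inputs ($x^i$ are vectors): starting from the initial memory init, every step applies the same $W_i$, $W_h$ and $W_o$ to produce $y^1, y^2, y^3, \dots$ The output $y^i$ depends on $x^i, \dots, x^2, x^1$.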
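The recurrence on the preceding slides can be sketched in a few lines of NumPy. This is only an illustrative sketch: the function name `rnn_forward`, the layer sizes and the random weights are assumptions made for the example, not part of the slides.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def rnn_forward(xs, Wi, Wh, Wo, init=None):
    """Apply a = sigmoid(Wi x + Wh a_prev), y = softmax(Wo a) to each input in order."""
    a = np.zeros(Wh.shape[0]) if init is None else init  # memory starts at 0 unless init is given
    ys = []
    for x in xs:
        a = sigmoid(Wi @ x + Wh @ a)   # new hidden state, kept as the memory for the next step
        ys.append(softmax(Wo @ a))
    return ys

# Hypothetical sizes: 2-dimensional inputs, 3 hidden units, 4 output classes.
rng = np.random.default_rng(0)
Wi, Wh, Wo = rng.normal(size=(3, 2)), rng.normal(size=(3, 3)), rng.normal(size=(4, 3))
xs = [rng.normal(size=2) for _ in range(3)]
ys = rnn_forward(xs, Wi, Wh, Wo)
print(len(ys), ys[2].shape)
```

Because the memory is carried forward, changing the first input changes the third output, while changing the third input leaves the first output untouched.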

RNN Training: Backpropagation Through Time (BPTT). Given the training data, the error $\delta$ at an output is propagated backward through the unrolled steps to update $W_i$, $W_h$ and $W_o$. The initial memory init is also trainable.
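A minimal sketch of BPTT for this network follows. The slide does not state the loss, so the example assumes a cross-entropy loss on the last output only, with a hypothetical integer class label `target`; the function names are likewise illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def forward(params, xs):
    """Return all hidden states (a[0] is init) and the last output."""
    Wi, Wh, Wo, init = params
    a = [init]
    for x in xs:
        a.append(sigmoid(Wi @ x + Wh @ a[-1]))
    return a, softmax(Wo @ a[-1])

def loss(params, xs, target):
    # Assumed loss: cross-entropy of the last output against a class index.
    _, y = forward(params, xs)
    return -np.log(y[target])

def bptt(params, xs, target):
    """Gradients of the assumed loss w.r.t. Wi, Wh, Wo and init."""
    Wi, Wh, Wo, init = params
    a, y = forward(params, xs)
    dz_out = y.copy()
    dz_out[target] -= 1.0              # softmax + cross-entropy gradient at the output
    dWo = np.outer(dz_out, a[-1])
    da = Wo.T @ dz_out                 # error arriving at the last hidden state
    dWi, dWh = np.zeros_like(Wi), np.zeros_like(Wh)
    for t in range(len(xs), 0, -1):    # walk backward through time
        dz = da * a[t] * (1.0 - a[t])  # back through the sigmoid
        dWi += np.outer(dz, xs[t - 1]) # shared weights: accumulate over steps
        dWh += np.outer(dz, a[t - 1])
        da = Wh.T @ dz                 # error passed to the previous step
    return dWi, dWh, dWo, da           # the final da is the gradient w.r.t. init
```

The last returned value is the gradient with respect to init, which is what makes the initial memory trainable alongside the weights.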

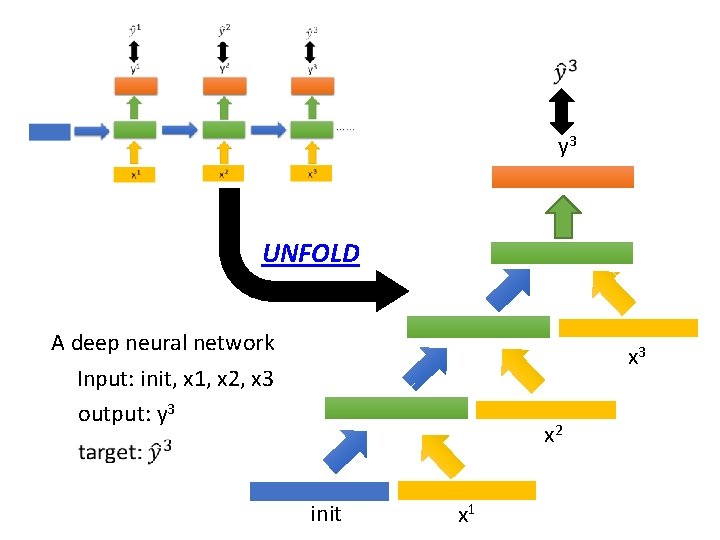

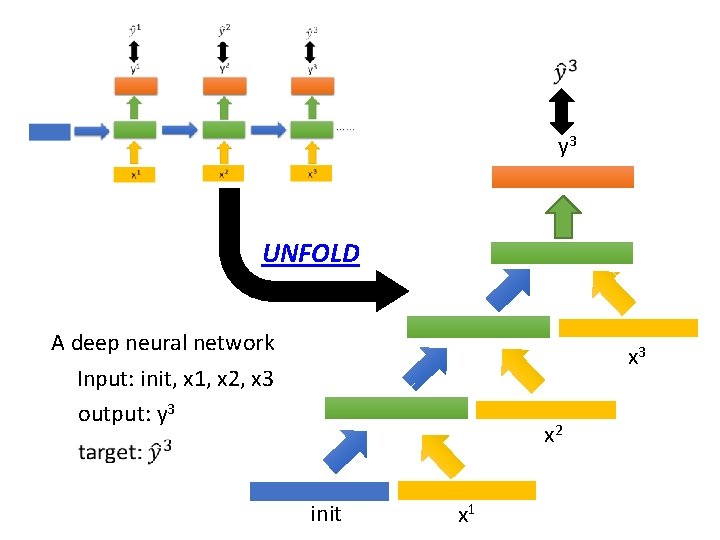

UNFOLD: unfolded over time, the RNN is a deep neural network. Input: init, $x^1$, $x^2$, $x^3$. Output: $y^3$.

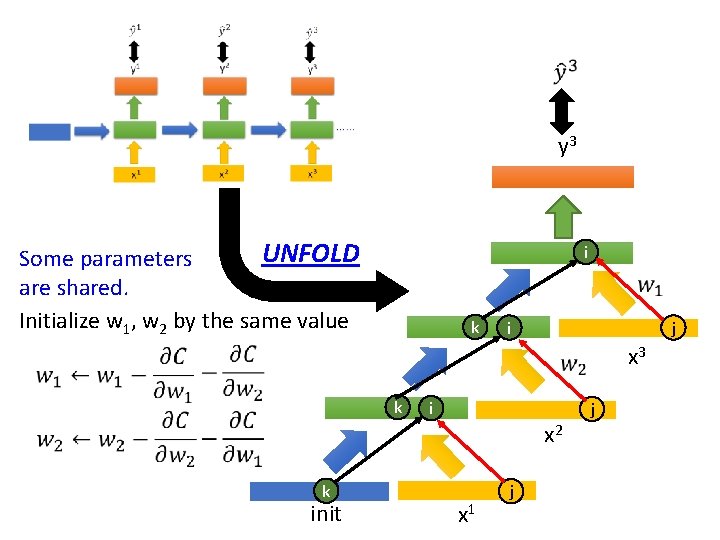

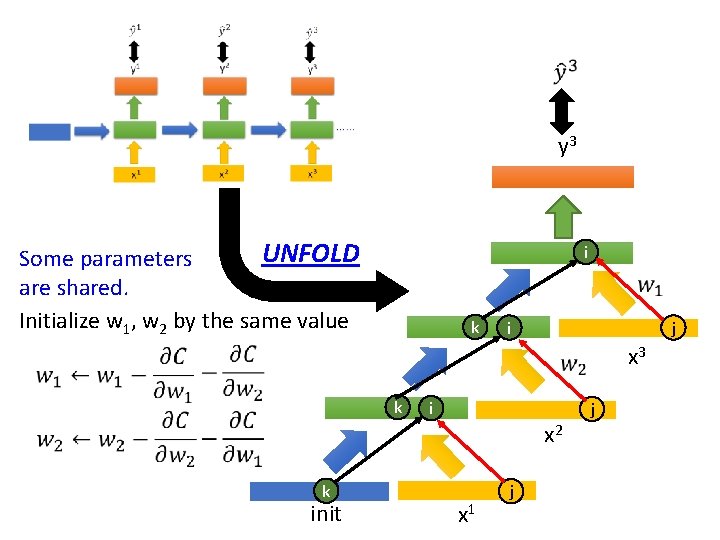

UNFOLD: some parameters are shared across the unfolded layers. Initialize the tied copies $w_1$, $w_2$ by the same value.
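One way to see how tied copies stay equal during training is to note that the gradient with respect to a shared weight is the sum of the gradients with respect to each copy. The sketch below uses a hypothetical two-layer scalar network `y = w2 * (w1 * x)`; the setup and function names are assumptions for illustration only.

```python
def grads_per_copy(w1, w2, x):
    """For y = w2 * (w1 * x), return (dy/dw1, dy/dw2)."""
    return w2 * x, w1 * x

def shared_grad(w, x):
    """Gradient of y = w * (w * x) w.r.t. the single shared weight w."""
    g1, g2 = grads_per_copy(w, w, x)
    return g1 + g2  # sum over both copies, equal to 2 * w * x

def tied_update(w1, w2, x, lr=0.1):
    """Apply the same summed gradient to both copies (a gradient step on y itself)."""
    g = sum(grads_per_copy(w1, w2, x))
    return w1 - lr * g, w2 - lr * g

print(shared_grad(3.0, 2.0))  # 12.0
```

If both copies start from the same value and always receive the same update, they remain equal after every step.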

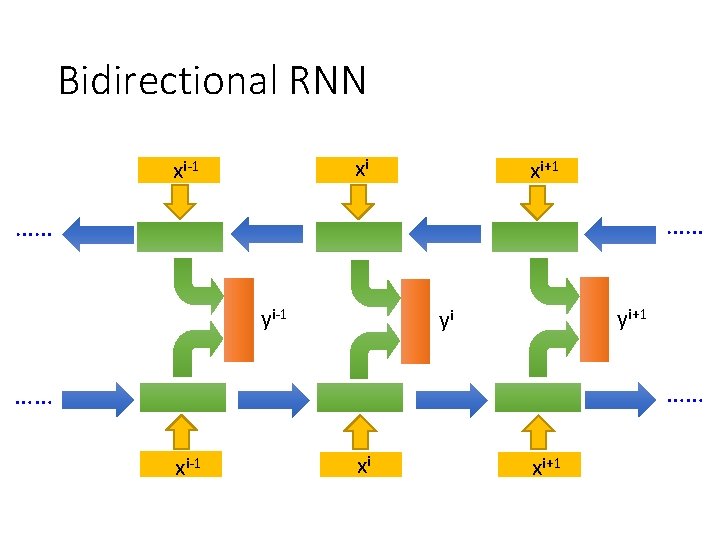

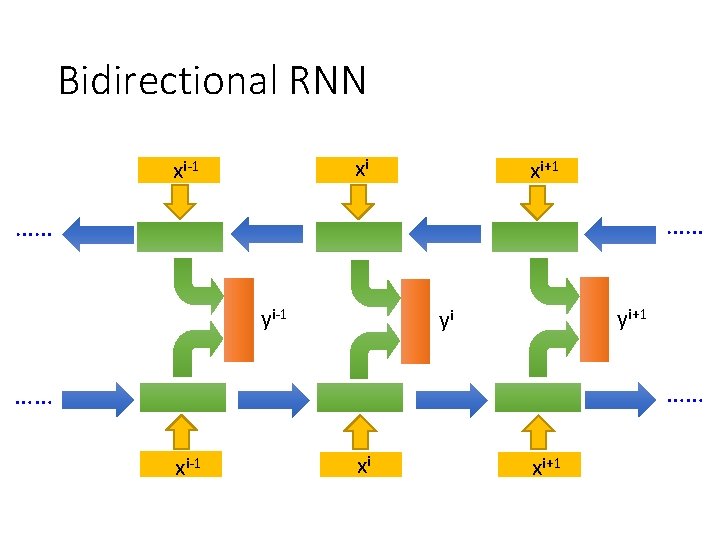

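Bidirectional RNN: the sequence $\dots, x^{i-1}, x^i, x^{i+1}, \dots$ is read in both directions, and each output $\dots, y^{i-1}, y^i, y^{i+1}, \dots$ is computed from both the forward and the backward hidden states.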
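A bidirectional RNN can be sketched as two independent recurrences whose hidden states are combined position by position. Concatenation is assumed here as the way of combining them, and the names `run_rnn` and `bidirectional_states` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def run_rnn(xs, Wi, Wh):
    """Return the hidden state after each input, reading xs left to right."""
    a = np.zeros(Wh.shape[0])
    states = []
    for x in xs:
        a = sigmoid(Wi @ x + Wh @ a)
        states.append(a)
    return states

def bidirectional_states(xs, fwd, bwd):
    """fwd and bwd are (Wi, Wh) pairs for the forward and backward RNNs."""
    f = run_rnn(xs, *fwd)
    b = run_rnn(xs[::-1], *bwd)[::-1]  # read right to left, then restore the original order
    # Position i now sees x^1..x^i through f[i] and x^i..x^N through b[i].
    return [np.concatenate([fi, bi]) for fi, bi in zip(f, b)]
```

An output layer applied to each concatenated state can therefore use context from both before and after position $i$.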

Difficulty of RNN Training: unfortunately, it is not easy to train an RNN.

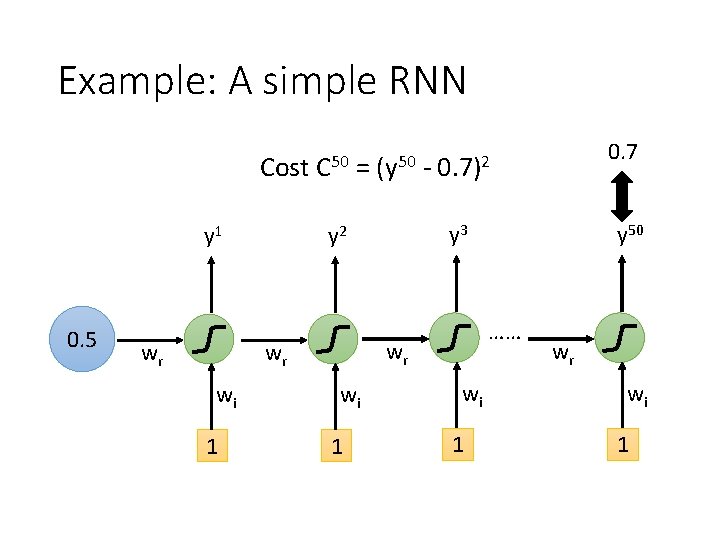

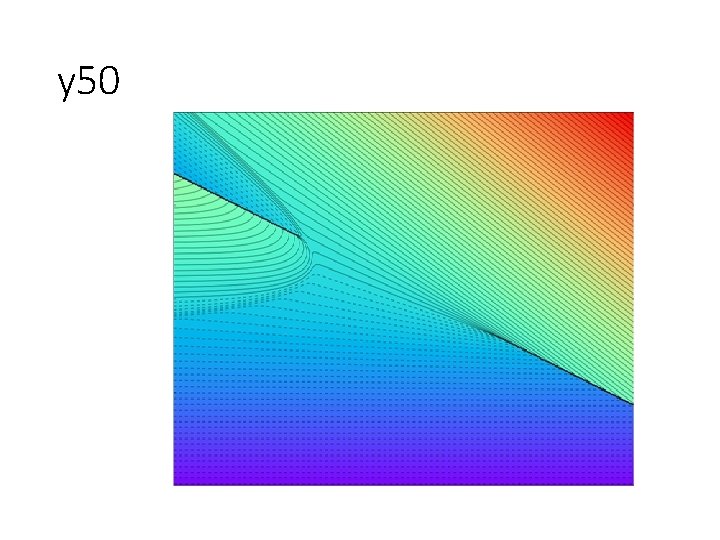

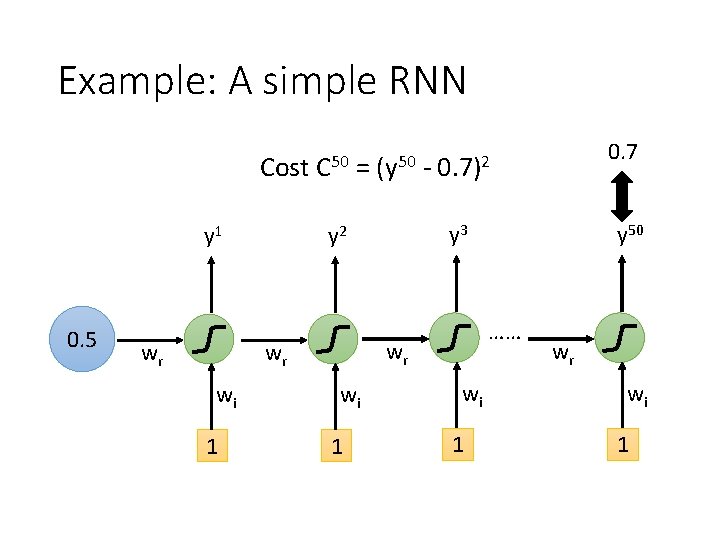

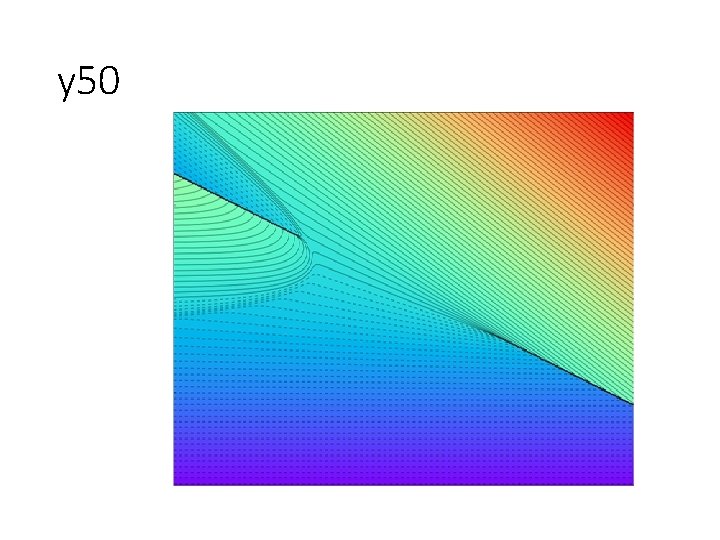

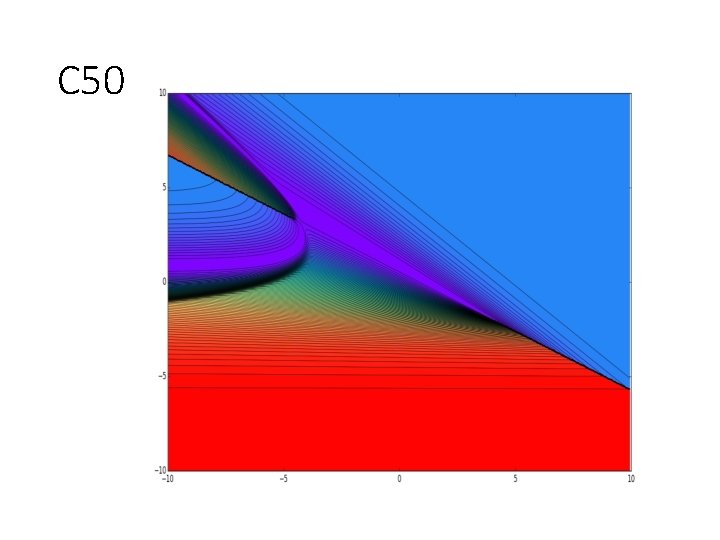

Example: a simple RNN with an input weight $w_i$ and a recurrent weight $w_r$, run for 50 steps to produce $y^1, y^2, y^3, \dots, y^{50}$. Cost: $C^{50} = (y^{50} - 0.7)^2$.

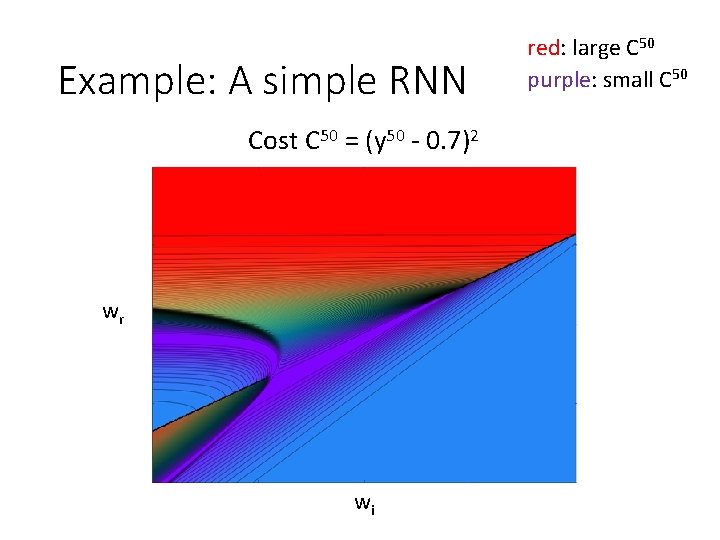

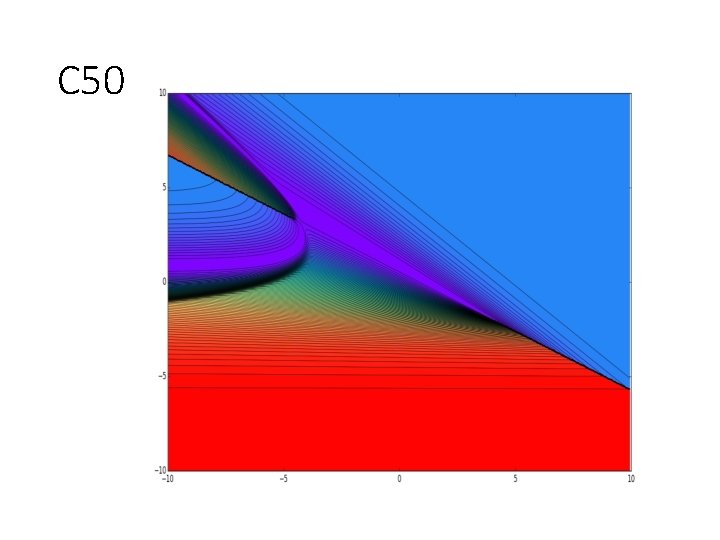

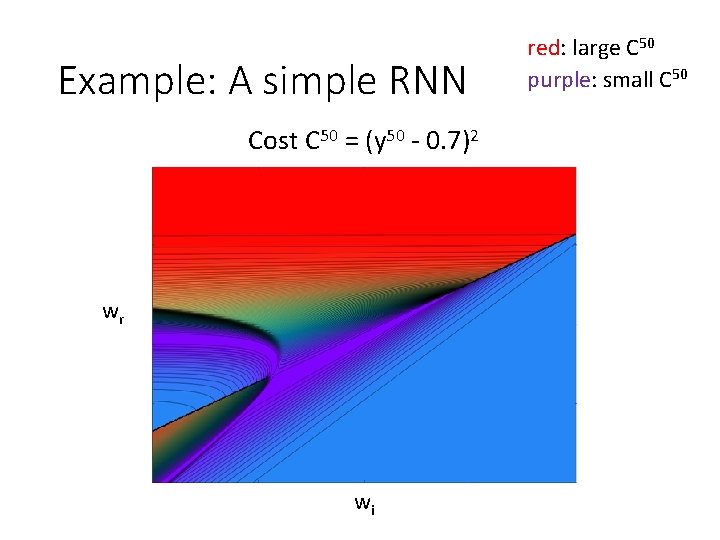

Example: a simple RNN. The cost $C^{50} = (y^{50} - 0.7)^2$ plotted over $w_r$ and $w_i$ (red: large $C^{50}$; purple: small $C^{50}$).

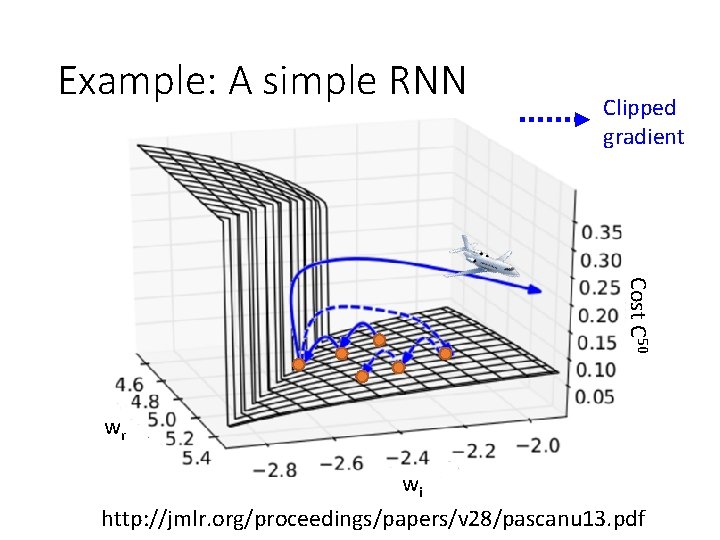

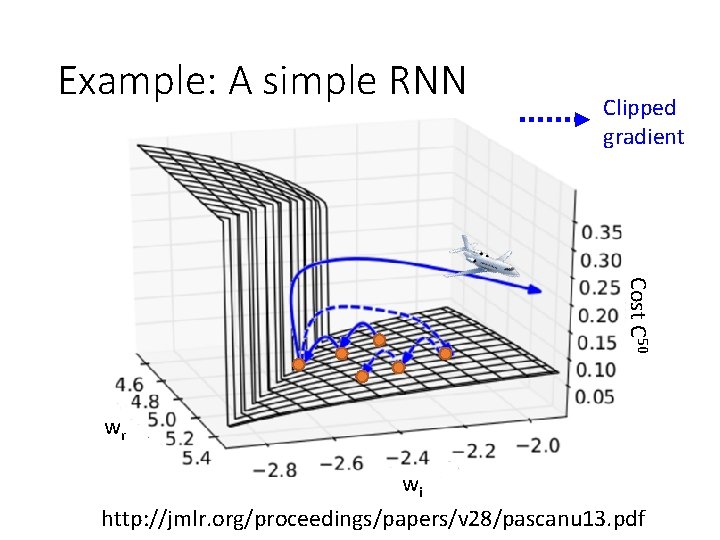

Example: a simple RNN with clipped gradient. The cost $C^{50}$ plotted over $w_r$ and $w_i$. Source: http://jmlr.org/proceedings/papers/v28/pascanu13.pdf
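Gradient clipping can be sketched as rescaling the gradient whenever its norm exceeds a threshold, so that one step on a steep part of the cost surface cannot throw the parameters far away. The function name and the threshold value below are assumptions chosen for illustration.

```python
import numpy as np

def clip_by_norm(grad, threshold):
    """Rescale grad to have norm `threshold` if its norm is larger; otherwise return it unchanged."""
    norm = np.linalg.norm(grad)
    if norm > threshold:
        return grad * (threshold / norm)  # keeps the direction, shrinks the length
    return grad

g = np.array([3.0, 4.0])       # norm 5
print(clip_by_norm(g, 1.0))    # direction kept, norm reduced to 1
```

Clipping changes only the step length, not its direction, so the update still moves downhill along the same gradient direction.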

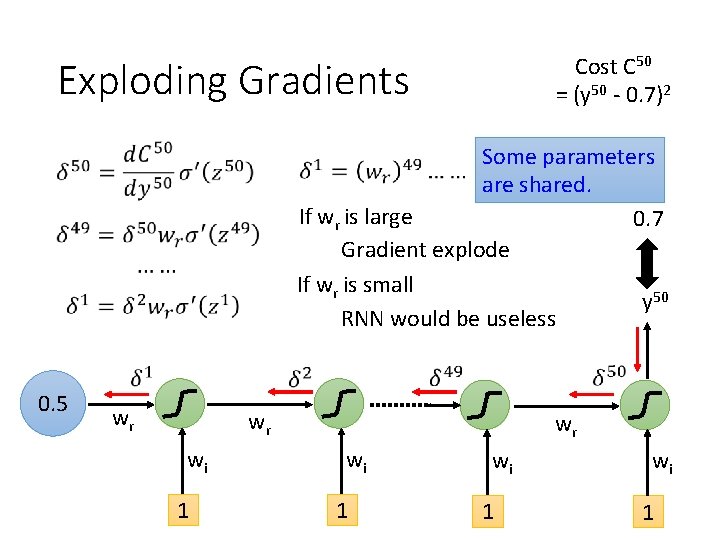

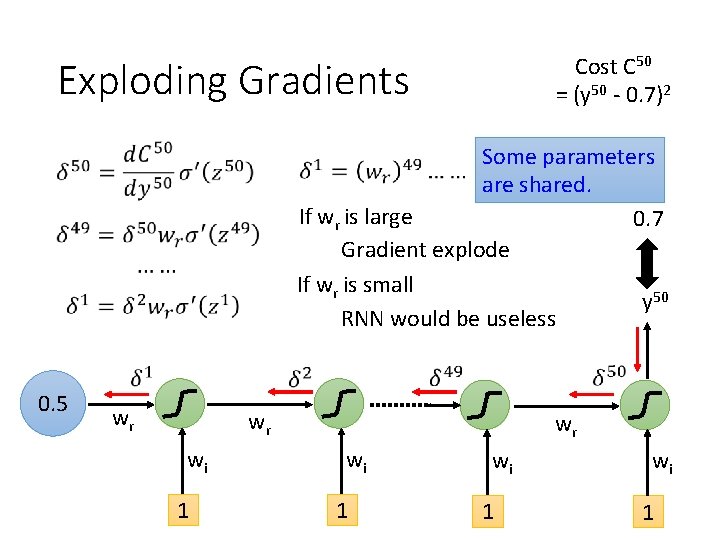

Exploding Gradients: some parameters are shared. With cost $C^{50} = (y^{50} - 0.7)^2$: if $w_r$ is large, the gradient explodes; if $w_r$ is small, the RNN would be useless.
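The effect of reusing $w_r$ at every step can be illustrated with a simplified linear recurrence. This is an assumption made for the sketch (no activation function, an input of 1 at the first step and 0 afterwards, zero initial memory), not necessarily the exact network drawn on the slide. Under these assumptions $y^{50} = w_i \, w_r^{49}$.

```python
def y50(w_r, w_i=1.0, steps=50):
    """Linear toy RNN: y_t = w_r * y_{t-1} + w_i * x_t, with x_1 = 1 and x_t = 0 afterwards."""
    y = 0.0
    for t in range(steps):
        x = 1.0 if t == 0 else 0.0
        y = w_r * y + w_i * x
    return y

def cost(w_r, w_i=1.0):
    return (y50(w_r, w_i) - 0.7) ** 2

def dcost_dwr(w_r, w_i=1.0):
    """Analytic gradient: 2 * (y50 - 0.7) * d(w_i * w_r**49)/dw_r."""
    return 2.0 * (y50(w_r, w_i) - 0.7) * 49.0 * w_i * w_r ** 48

for w_r in (0.5, 0.99, 1.0, 1.01, 1.5):
    print(w_r, y50(w_r), dcost_dwr(w_r))
```

A recurrent weight slightly above 1 already gives a large gradient, and one well above 1 gives an enormous one. With a weight well below 1 the first input has almost no influence on $y^{50}$, so the network cannot use what it saw early in the sequence.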

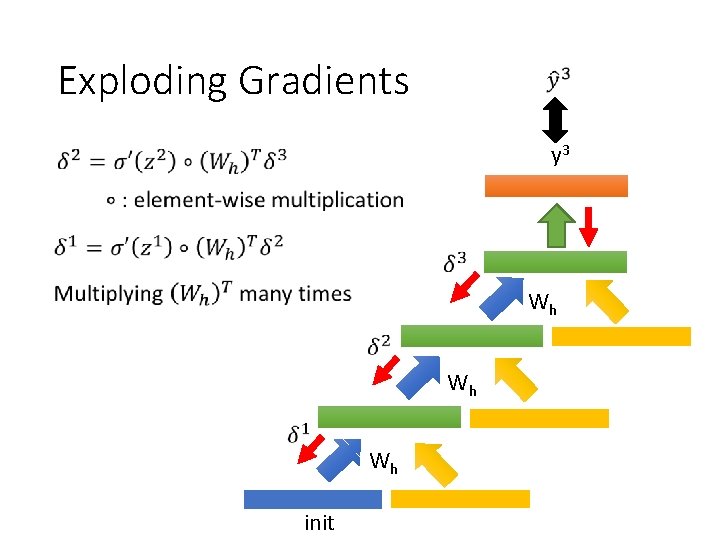

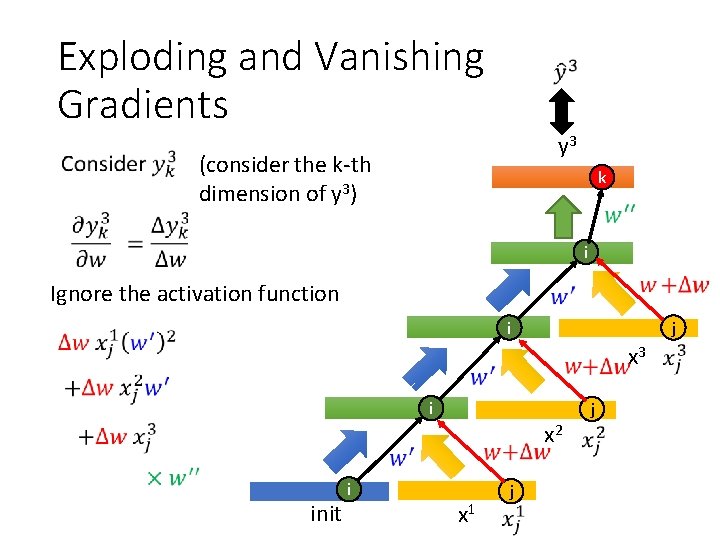

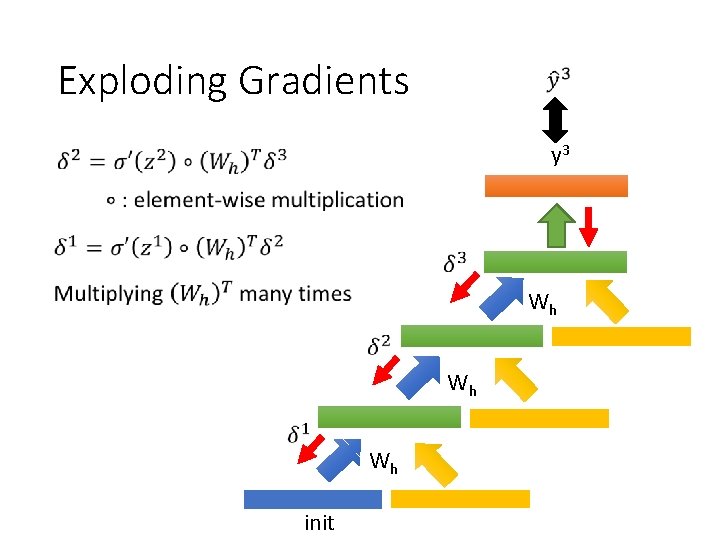

Exploding Gradients: in the unfolded network, the path from init to $y^3$ passes through $W_h$ at every step.

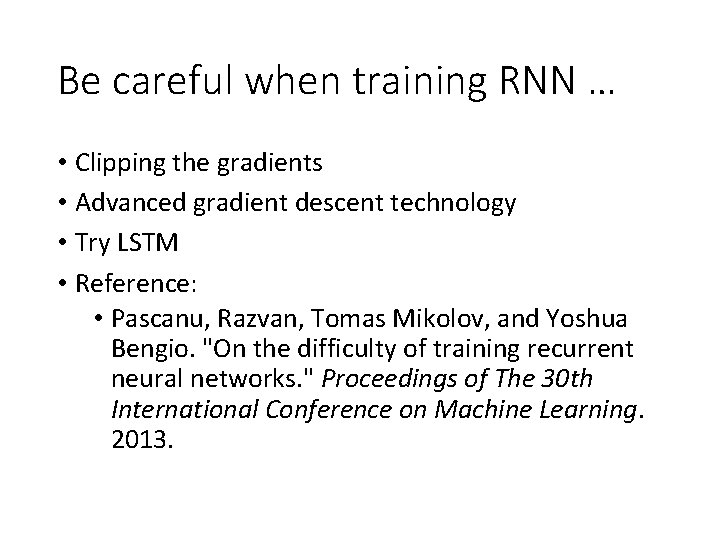

Be careful when training RNNs:

- Clipping the gradients
- Advanced gradient descent techniques
- Try LSTM
- Reference: Pascanu, Razvan, Tomas Mikolov, and Yoshua Bengio. "On the difficulty of training recurrent neural networks." Proceedings of the 30th International Conference on Machine Learning. 2013.

Thanks for your attention!

Appendix

$y^{50}$

$C^{50}$

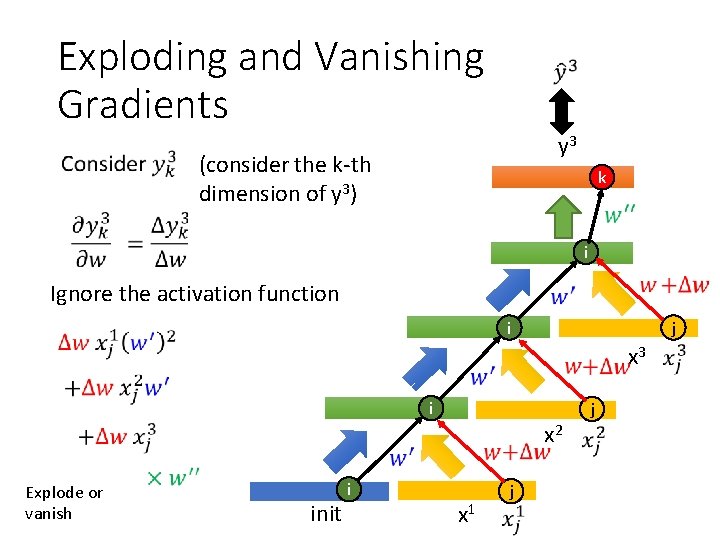

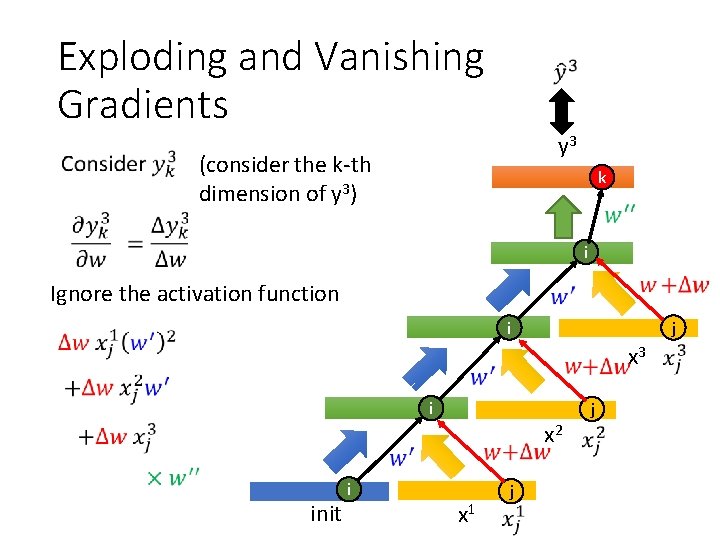

Exploding and Vanishing Gradients: consider the k-th dimension of $y^3$ and ignore the activation function.

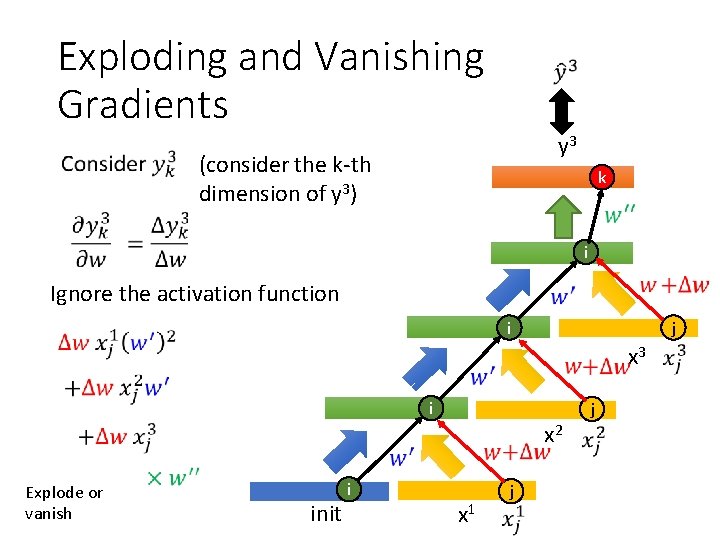

Exploding and Vanishing Gradients: consider the k-th dimension of $y^3$ and ignore the activation function. The contribution that reaches $y^3$ from init and the early inputs can then explode or vanish.
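The reason can be worked out directly once the activation function is ignored. The derivation below is a sketch under that simplification; the assumption that $W_h$ is diagonalizable, and the symbols $Q$ and $\Lambda$, are introduced here only for illustration.

$$
\begin{aligned}
a^t &= W_i x^t + W_h a^{t-1}, \qquad a^0 = \text{init} \\
a^3 &= W_h^3\,\text{init} + W_h^2 W_i x^1 + W_h W_i x^2 + W_i x^3 \\
\frac{\partial a^n}{\partial\,\text{init}} &= W_h^n = Q \Lambda^n Q^{-1} \quad \text{if } W_h = Q \Lambda Q^{-1}
\end{aligned}
$$

Each eigenvalue $\lambda$ of $W_h$ enters as $\lambda^n$. If some $|\lambda| > 1$, the corresponding component grows exponentially with the number of steps $n$ (explode); if all $|\lambda| < 1$, every component shrinks exponentially (vanish).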