Deep Learning for Speech Recognition, Hung-yi Lee


Outline

- Conventional Speech Recognition
- How to use Deep Learning in acoustic modeling?
- Why Deep Learning?
- Speaker Adaptation
- Multi-task Deep Learning
- New acoustic features
- Convolutional Neural Network (CNN)
- Applications in Acoustic Signal Processing

Conventional Speech Recognition

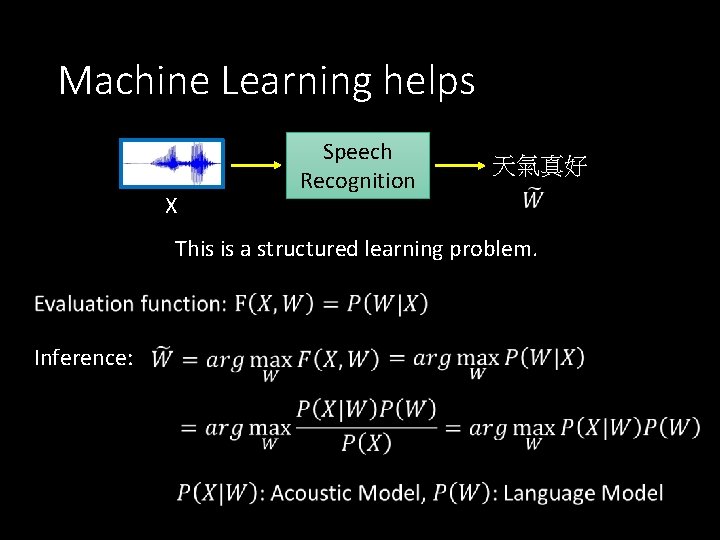

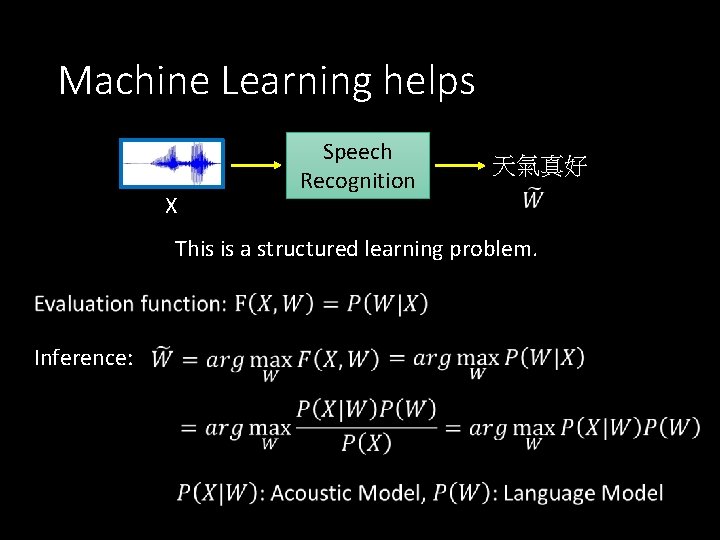

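Machine Learning helps: the speech X goes into speech recognition, which outputs the text 天氣真好 ("The weather is really nice"). This is a structured learning problem. Inference:

$$W^* = \arg\max_W P(W \mid X) = \arg\max_W \frac{P(X \mid W)\,P(W)}{P(X)} = \arg\max_W P(X \mid W)\,P(W)$$

where P(X|W) is the acoustic model and P(W) is the language model.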
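The decision rule can be illustrated with a minimal Python sketch. It assumes the acoustic-model score log P(X|W) and language-model score log P(W) have already been computed for a small, hypothetical list of candidate sentences; the function name `decode` and all scores are made up for illustration, and a real recognizer searches a vast hypothesis space rather than enumerating it.

```python
def decode(candidates):
    """Return the word sequence W maximizing log P(X|W) + log P(W).

    candidates: dict mapping a word sequence (str) to a pair
    (acoustic_log_prob, language_log_prob). P(X) is the same for every
    candidate, so it is dropped from the comparison.
    """
    return max(candidates, key=lambda w: sum(candidates[w]))


# Hypothetical log-probability scores for one utterance X.
scores = {
    "the weather is nice": (-120.0, -8.0),
    "the whether is nice": (-119.5, -12.0),
    "they weather is nice": (-121.0, -15.0),
}
print(decode(scores))  # -> the weather is nice
```

Working with log probabilities turns the product P(X|W)P(W) into a sum and avoids numerical underflow on long utterances.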

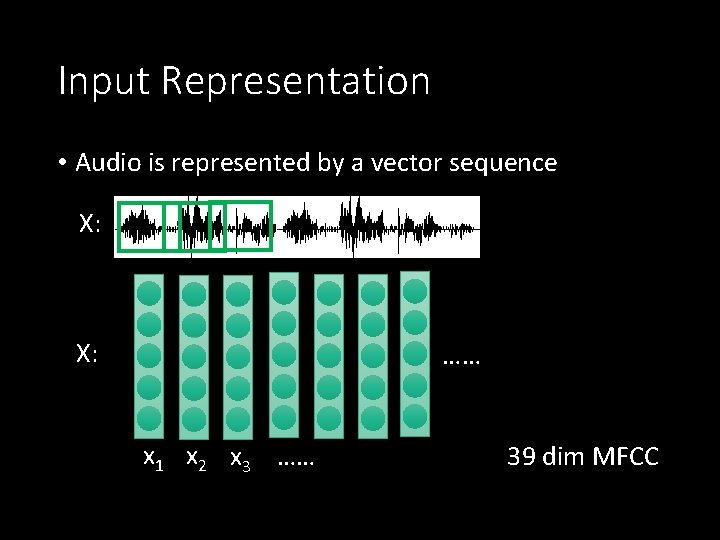

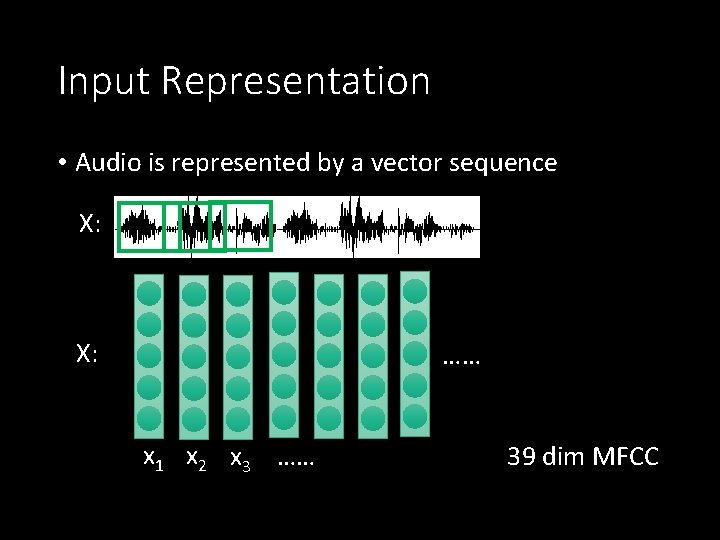

Input Representation • Audio is represented by a vector sequence X: x1, x2, x3, ……, where each xi is a 39-dim MFCC vector.

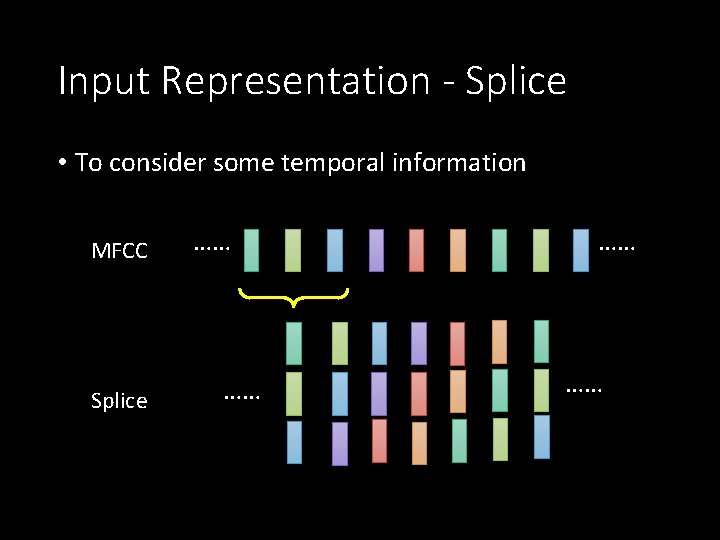

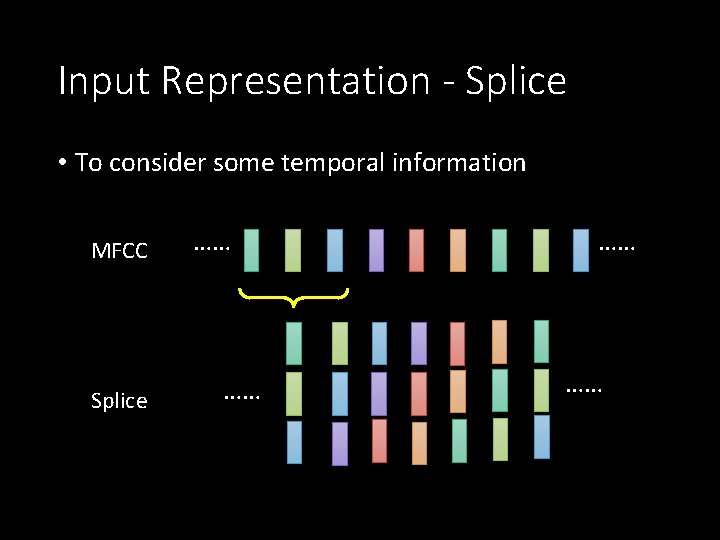

Input Representation - Splice • To take some temporal information into account, each MFCC frame is spliced (concatenated) with its neighboring frames before it is used as input.
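Splicing can be sketched with NumPy as follows. This is a minimal illustration, assuming `context` frames on each side and edge padding by repeating the first and last frame; the function name `splice` and the padding rule are assumptions, not taken from the slides.

```python
import numpy as np


def splice(frames, context):
    """Concatenate each frame with `context` neighbors on each side.

    frames: array of shape (T, D), e.g. D = 39 MFCC dimensions.
    Returns an array of shape (T, (2 * context + 1) * D).
    Edge frames are padded by repeating the first/last frame (an assumption).
    """
    T = frames.shape[0]
    padded = np.concatenate(
        [np.repeat(frames[:1], context, axis=0),
         frames,
         np.repeat(frames[-1:], context, axis=0)],
        axis=0,
    )
    # Row t of the output covers original frames t-context .. t+context.
    return np.stack([padded[t:t + 2 * context + 1].reshape(-1) for t in range(T)])


x = np.random.randn(100, 39)        # 100 frames of 39-dim MFCC
print(splice(x, context=4).shape)   # -> (100, 351)
```

With 4 frames of context on each side, each 39-dim MFCC frame becomes a 351-dim DNN input.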

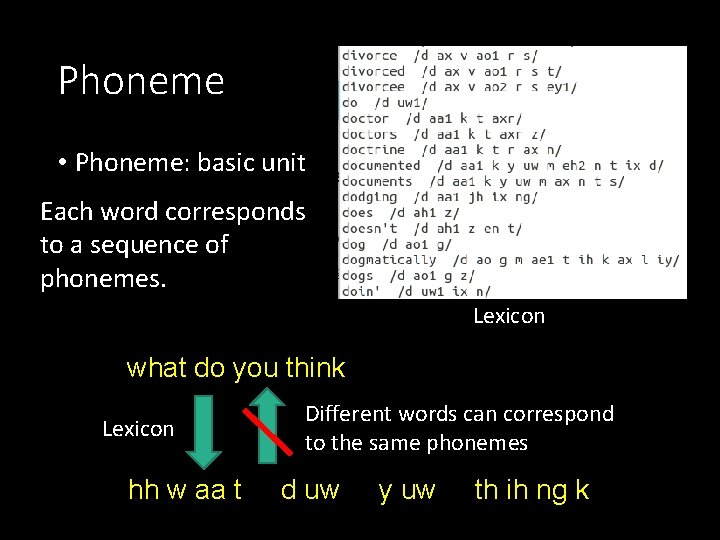

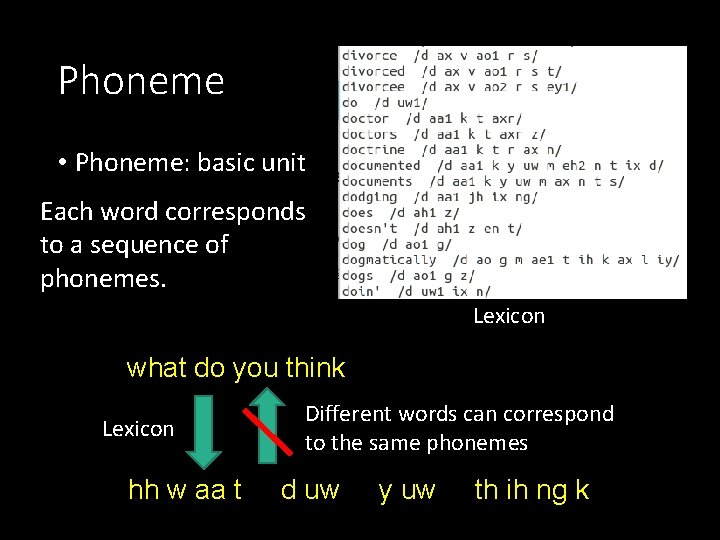

Phoneme • Phoneme: the basic unit. Each word corresponds to a sequence of phonemes, as given by a lexicon. For example, "what do you think" → hh w aa t | d uw | y uw | th ih ng k. Different words can correspond to the same phonemes.
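A lexicon is essentially a lookup table from words to phoneme sequences. The toy Python sketch below hard-codes only the four words of the example; `LEXICON` and `words_to_phonemes` are hypothetical names, and a real pronunciation dictionary holds many thousands of entries, sometimes with several pronunciations per word.

```python
# A toy lexicon: word -> phoneme sequence (symbols as on the slide).
LEXICON = {
    "what": ["hh", "w", "aa", "t"],
    "do": ["d", "uw"],
    "you": ["y", "uw"],
    "think": ["th", "ih", "ng", "k"],
}


def words_to_phonemes(sentence, lexicon=LEXICON):
    """Look up each word in the lexicon and concatenate the phonemes."""
    phonemes = []
    for word in sentence.lower().split():
        phonemes.extend(lexicon[word])  # raises KeyError for out-of-vocabulary words
    return phonemes


print(" ".join(words_to_phonemes("what do you think")))
# -> hh w aa t d uw y uw th ih ng k
```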

State • Each phoneme corresponds to a sequence of states. For "what do you think": • Phone: hh w aa t d uw y uw th ih ng k • Tri-phone: …… t-d+uw d-uw+y uw-y+uw y-uw+th …… (l-c+r denotes phone c with left context l and right context r) • State: …… t-d+uw 1, t-d+uw 2, t-d+uw 3, d-uw+y 1, d-uw+y 2, d-uw+y 3 ……
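The phone to tri-phone to state expansion can be sketched in Python. This assumes three states per tri-phone, as on the slide, and pads the first and last phones with a hypothetical `sil` context; the function names are illustrative only.

```python
def to_triphones(phones, boundary="sil"):
    """Map each phone c to a context-dependent tri-phone "l-c+r".

    The left/right context of the first/last phone is a hypothetical
    `boundary` symbol; real systems handle utterance edges in various ways.
    """
    padded = [boundary] + list(phones) + [boundary]
    return [f"{padded[i - 1]}-{padded[i]}+{padded[i + 1]}"
            for i in range(1, len(padded) - 1)]


def to_states(triphones, states_per_phone=3):
    """Expand each tri-phone into a left-to-right sequence of HMM states."""
    return [f"{tp} {k}" for tp in triphones for k in range(1, states_per_phone + 1)]


phones = "hh w aa t d uw y uw th ih ng k".split()
tri = to_triphones(phones)
print(tri[4:8])  # -> ['t-d+uw', 'd-uw+y', 'uw-y+uw', 'y-uw+th']
print(to_states(tri[4:6]))
# -> ['t-d+uw 1', 't-d+uw 2', 't-d+uw 3', 'd-uw+y 1', 'd-uw+y 2', 'd-uw+y 3']
```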

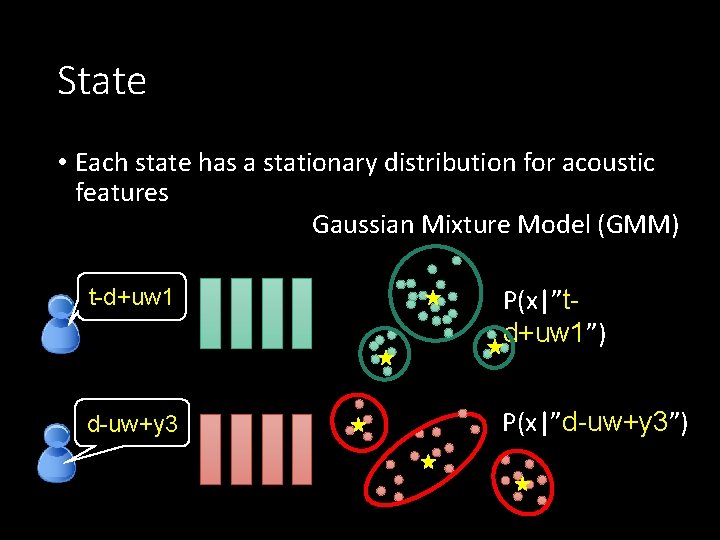

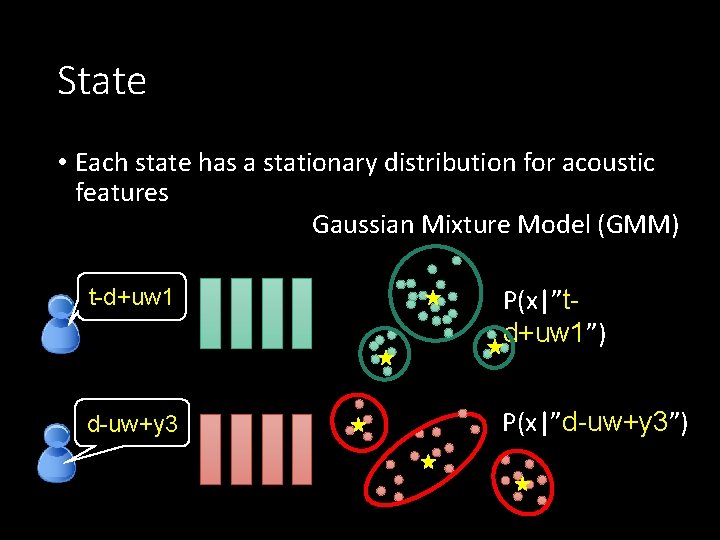

State • Each state has a stationary distribution for acoustic features, modeled by a Gaussian Mixture Model (GMM). For example, state t-d+uw 1 has P(x|"t-d+uw 1") and state d-uw+y 3 has P(x|"d-uw+y 3").
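As a minimal sketch of one state's emission model, the function below evaluates log P(x|state) for a GMM. It assumes diagonal covariance matrices, a common choice that the slide does not specify; the function name and argument layout are hypothetical.

```python
import numpy as np


def gmm_log_likelihood(x, weights, means, variances):
    """log P(x | state) for a GMM with diagonal covariances.

    x: (D,) feature vector; weights: (K,) mixture weights summing to 1;
    means, variances: (K, D), one row per Gaussian component.
    """
    D = x.shape[0]
    # Log density of x under each diagonal Gaussian component.
    log_norm = -0.5 * (D * np.log(2 * np.pi) + np.sum(np.log(variances), axis=1))
    log_exp = -0.5 * np.sum((x - means) ** 2 / variances, axis=1)
    log_components = np.log(weights) + log_norm + log_exp
    # Log-sum-exp over components, for numerical stability.
    m = np.max(log_components)
    return m + np.log(np.sum(np.exp(log_components - m)))
```

Each tied or untied state owns one such set of weights, means, and variances.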

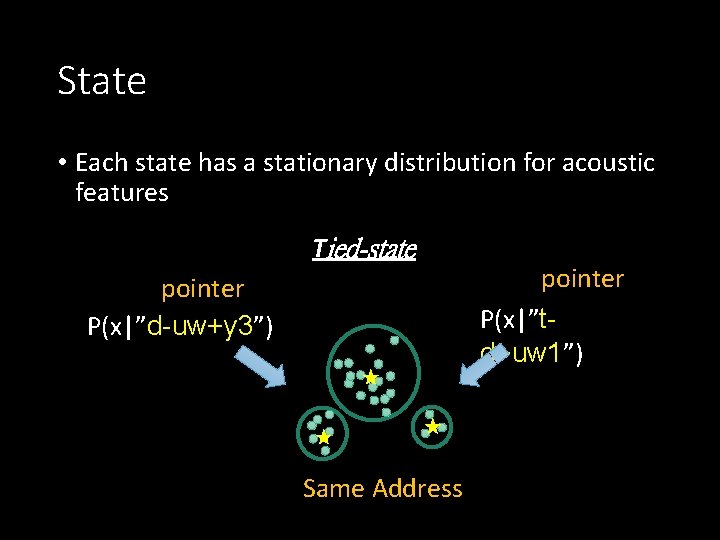

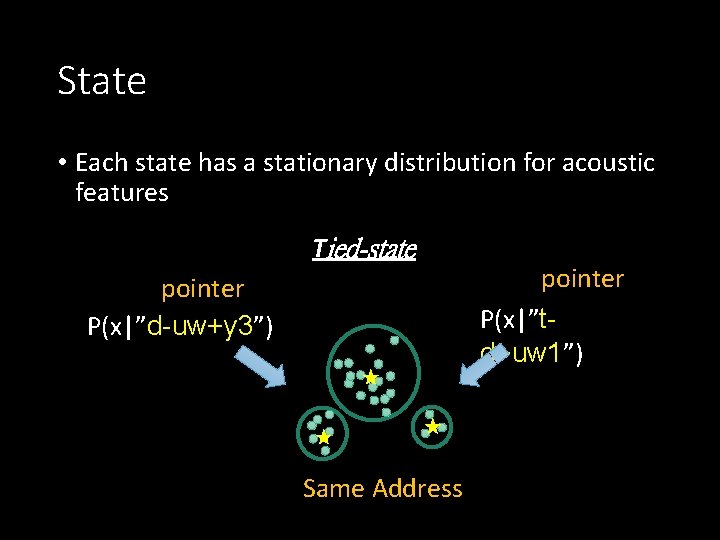

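State • Each state has a stationary distribution for acoustic features. • Tied states: different states can share one distribution. For example, the pointers of "d-uw+y 3" and "t-d+uw 1" can point to the same address, so P(x|"d-uw+y 3") and P(x|"t-d+uw 1") are the same model.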

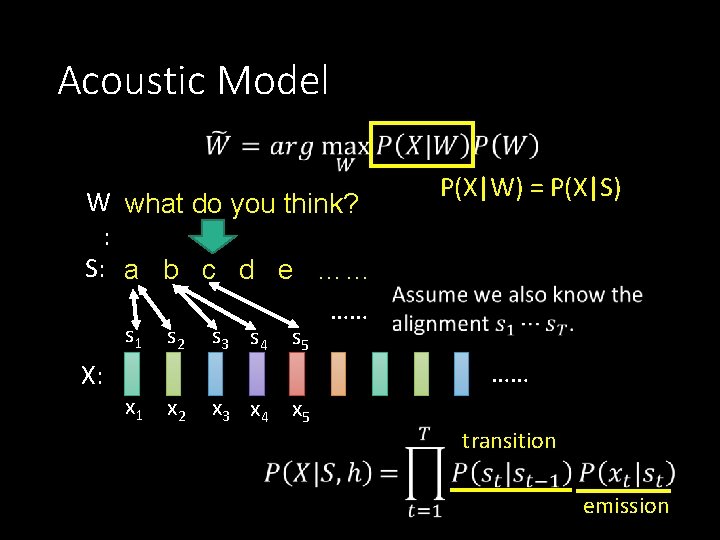

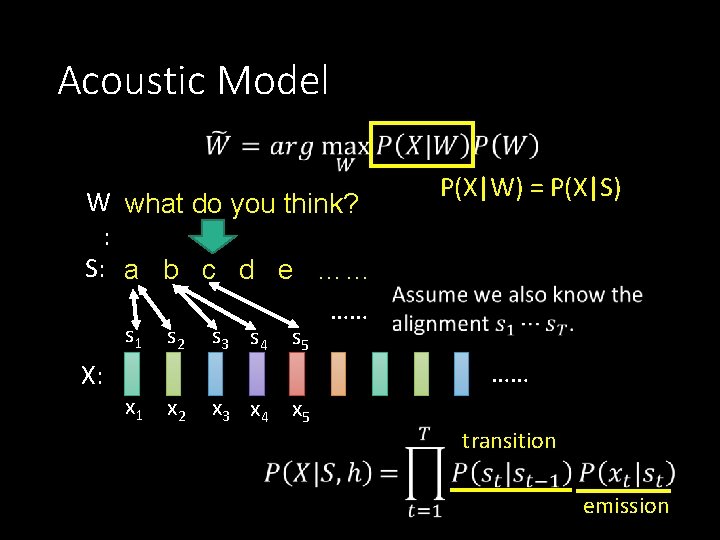

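Acoustic Model • The word sequence W ("what do you think?") is converted into a state sequence S: a b c d e ……, so P(X|W) = P(X|S). If each frame x1, x2, x3, x4, x5, …… is aligned to a state s1, s2, s3, s4, s5, ……, P(X|S) is the product of transition and emission probabilities:

$$P(X \mid S) = \prod_{t=1}^{T} P(s_t \mid s_{t-1})\, P(x_t \mid s_t)$$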

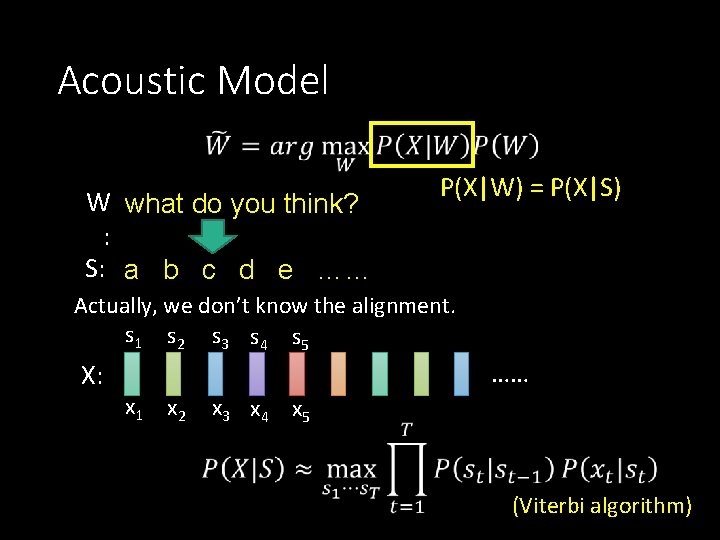

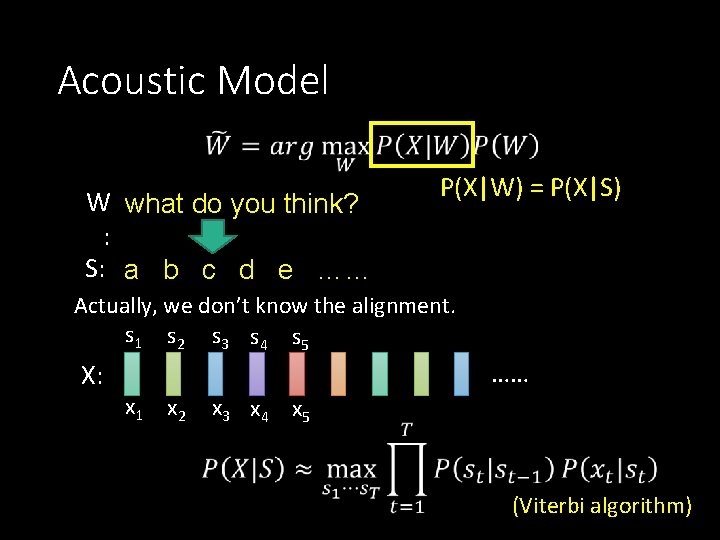

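Acoustic Model • W ("what do you think?") → S: a b c d e ……, and P(X|W) = P(X|S). Actually, we don't know the alignment between the states and the frames x1, x2, x3, x4, x5, ……. In practice the best alignment is used, found with the Viterbi algorithm:

$$P(X \mid S) \approx \max_{s_1 \cdots s_T} \prod_{t=1}^{T} P(s_t \mid s_{t-1})\, P(x_t \mid s_t)$$

where the maximization runs over the frame-level state sequences consistent with S.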
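The Viterbi search for the best alignment can be sketched in NumPy. This assumes a strictly left-to-right HMM in which, at every frame, the path either stays in the current state of S or moves to the next one, and that log P(x_t|s) is already available for every frame and state; `viterbi_align` and its arguments are hypothetical names.

```python
import numpy as np


def viterbi_align(log_emit, log_stay, log_move):
    """Best alignment of a left-to-right state sequence S to frames X.

    log_emit: (T, N) array, log P(x_t | s) for the N states of S in order.
    log_stay, log_move: (N,) arrays, log probabilities of staying in
    state n or moving on to state n + 1.
    The path starts in state 0, ends in state N-1, and never skips a state.
    Returns (best log score, list of state indices, one per frame).
    """
    T, N = log_emit.shape
    score = np.full((T, N), -np.inf)
    back = np.zeros((T, N), dtype=int)
    score[0, 0] = log_emit[0, 0]
    for t in range(1, T):
        for n in range(N):
            stay = score[t - 1, n] + log_stay[n]
            move = score[t - 1, n - 1] + log_move[n - 1] if n > 0 else -np.inf
            back[t, n] = n if stay >= move else n - 1
            score[t, n] = max(stay, move) + log_emit[t, n]
    # Trace back from the last state at the last frame.
    path = [N - 1]
    for t in range(T - 1, 0, -1):
        path.append(int(back[t, path[-1]]))
    return score[T - 1, N - 1], path[::-1]


emit = np.array([[0.9, 0.05, 0.05],
                 [0.8, 0.1, 0.1],
                 [0.1, 0.8, 0.1],
                 [0.1, 0.1, 0.8],
                 [0.05, 0.05, 0.9]])
half = np.log(np.full(3, 0.5))
best, path = viterbi_align(np.log(emit), half, half)
print(path)  # -> [0, 0, 1, 2, 2]
```

Here frames 0 and 1 go to the first state, frame 2 to the second, and frames 3 and 4 to the third; `best` is the log probability of that single best alignment.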

How to use Deep Learning?

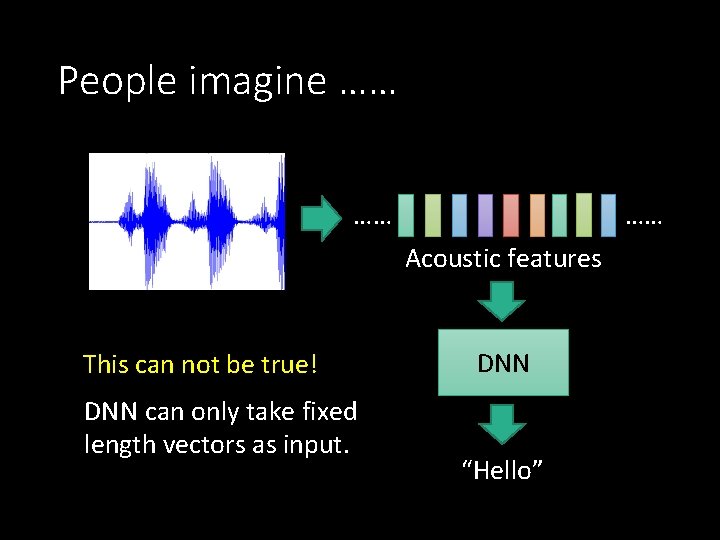

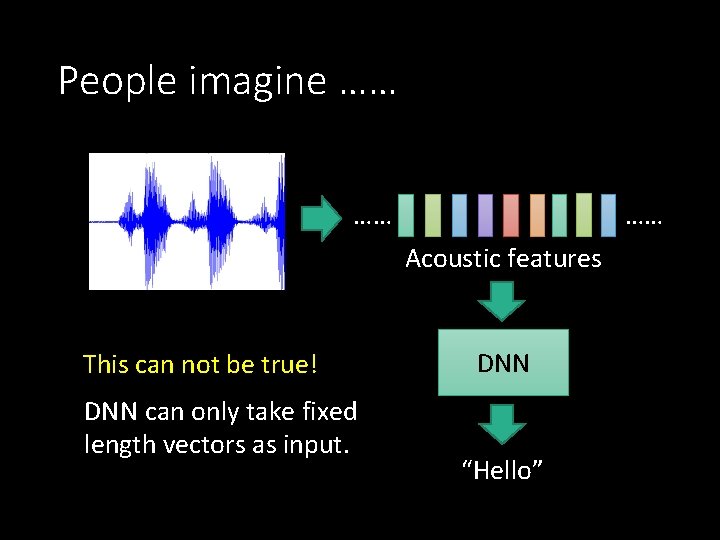

People imagine …… that a DNN takes the whole sequence of acoustic features as input and directly outputs "Hello". This cannot be true! A DNN can only take fixed-length vectors as input.

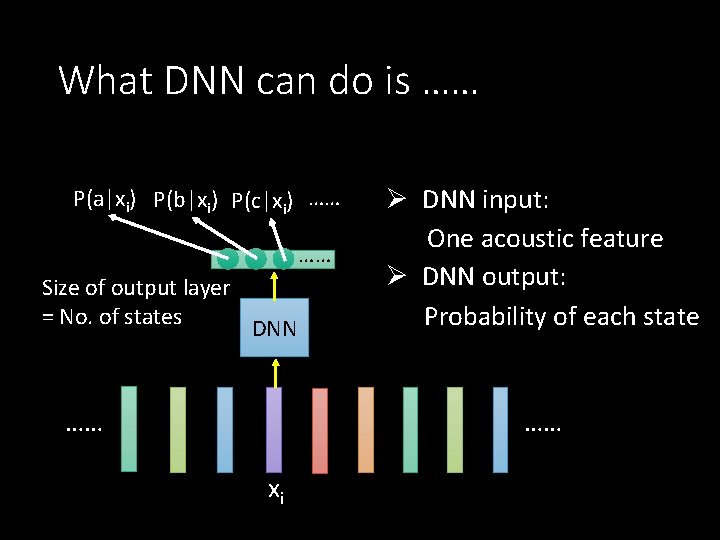

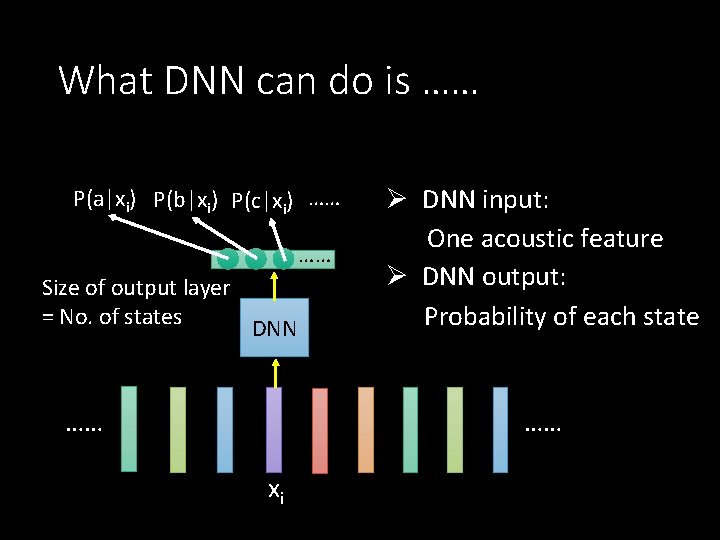

What a DNN can do is …… Ø DNN input: one acoustic feature xi. Ø DNN output: the probability of each state, P(a|xi), P(b|xi), P(c|xi), ……. Ø Size of output layer = number of states.
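A forward pass that maps one frame to state probabilities can be sketched as below. The sigmoid hidden layers, random weights, and tiny layer sizes are assumptions made only to keep the example small; `dnn_state_posteriors` is a hypothetical name.

```python
import numpy as np


def softmax(z):
    z = z - np.max(z, axis=-1, keepdims=True)  # for numerical stability
    e = np.exp(z)
    return e / np.sum(e, axis=-1, keepdims=True)


def dnn_state_posteriors(x, layers):
    """Forward one acoustic feature x through a DNN.

    layers: list of (W, b) pairs; hidden layers use a sigmoid (an
    assumption), the output layer uses a softmax over the states.
    Returns P(state | x); its length equals the number of states.
    """
    h = x
    for W, b in layers[:-1]:
        h = 1.0 / (1.0 + np.exp(-(W @ h + b)))
    W_out, b_out = layers[-1]
    return softmax(W_out @ h + b_out)


rng = np.random.default_rng(0)
num_states = 5  # hypothetical; tri-phone systems have thousands of states
dims = [39, 64, 64, num_states]
layers = [(rng.standard_normal((dims[i + 1], dims[i])) * 0.1, np.zeros(dims[i + 1]))
          for i in range(len(dims) - 1)]
p = dnn_state_posteriors(rng.standard_normal(39), layers)
print(p.shape)  # -> (5,)
```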

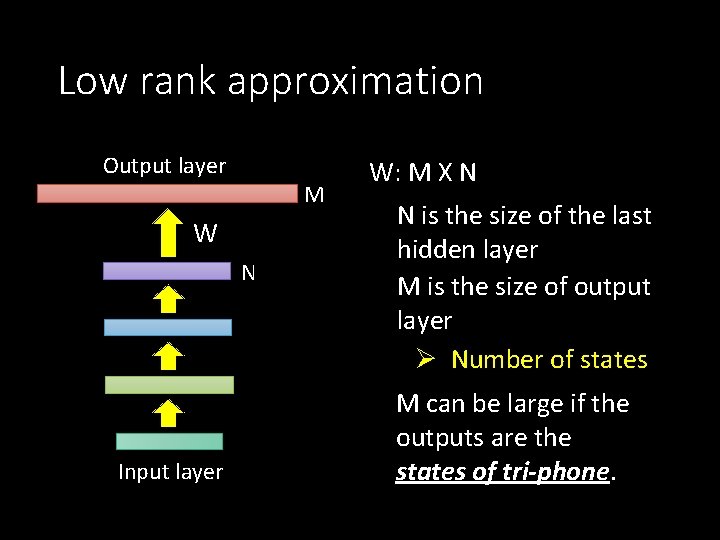

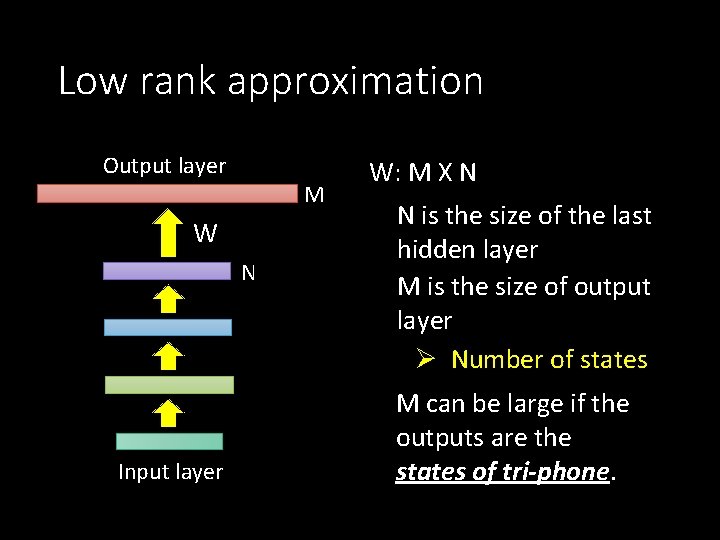

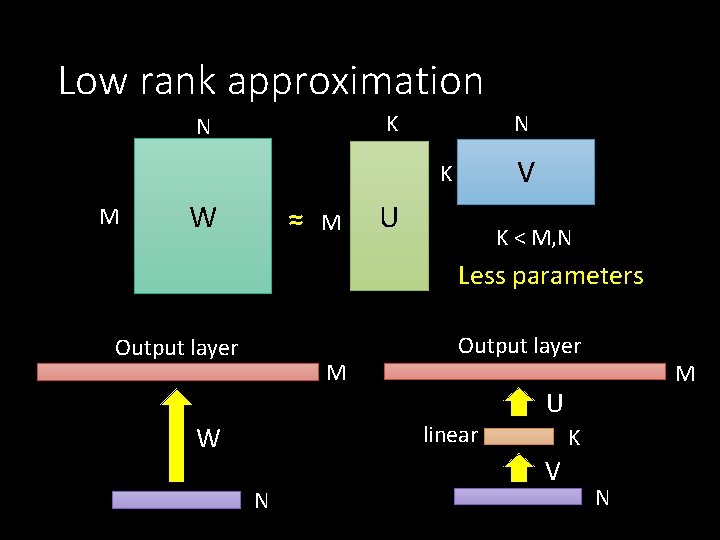

Low rank approximation • W is the M × N weight matrix from the last hidden layer to the output layer, where N is the size of the last hidden layer and M is the size of the output layer. Ø The number of states M can be large if the outputs are the states of tri-phones.

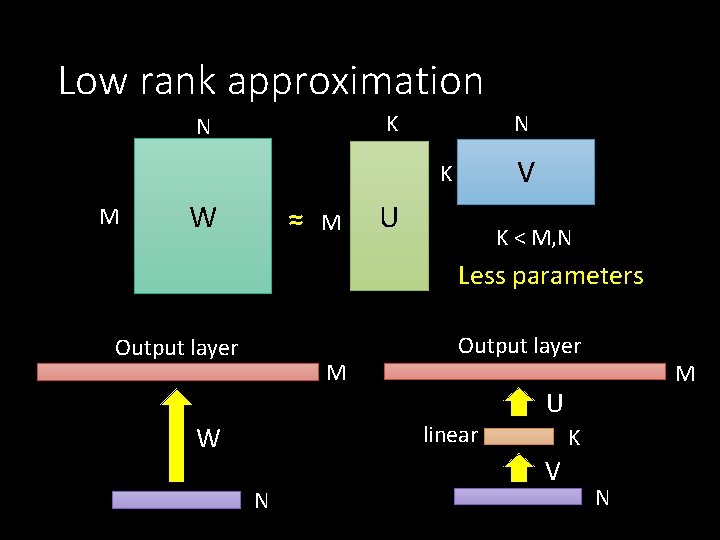

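Low rank approximation • Approximate W (M × N) as W ≈ UV, where U is M × K and V is K × N, with K < M, N. • Equivalently, insert a linear layer of size K between the last hidden layer and the output layer: V maps N → K and U maps K → M. • Fewer parameters: MK + KN instead of MN.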
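One way to obtain U and V from a trained W is a truncated singular value decomposition (SVD). The NumPy sketch below assumes that approach; `low_rank_factorize` and the example sizes are hypothetical, and in practice U and V may be fine-tuned afterwards.

```python
import numpy as np


def low_rank_factorize(W, K):
    """Approximate W (M x N) by U (M x K) @ V (K x N) using truncated SVD."""
    u, s, vt = np.linalg.svd(W, full_matrices=False)
    U = u[:, :K] * s[:K]  # fold the top-K singular values into U
    V = vt[:K, :]
    return U, V


# Parameter count for hypothetical sizes.
M, N, K = 3000, 1024, 256
print(M * N, M * K + K * N)  # -> 3072000 1030144

# A small matrix of rank 8 is recovered exactly with K = 8.
rng = np.random.default_rng(0)
W = rng.standard_normal((50, 8)) @ rng.standard_normal((8, 40))
U, V = low_rank_factorize(W, 8)
```

For a full-rank W the truncation discards the smallest singular values, so the approximation error grows as K shrinks.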

How we use deep learning • There are three ways to use a DNN for acoustic modeling, in order of increasing effort to exploit deep learning: • Way 1. Tandem • Way 2. DNN-HMM hybrid • Way 3. End-to-end

How to use Deep Learning? Way 1: Tandem

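Way 1: Tandem system • A DNN takes one acoustic feature xi and outputs the probability of each state, P(a|xi), P(b|xi), P(c|xi), …… (size of output layer = number of states). • This output vector is used as the input of your original speech recognition system. • The last hidden layer or a bottleneck layer can also be used instead of the output layer.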

How to use Deep Learning? Way 2: DNN-HMM hybrid

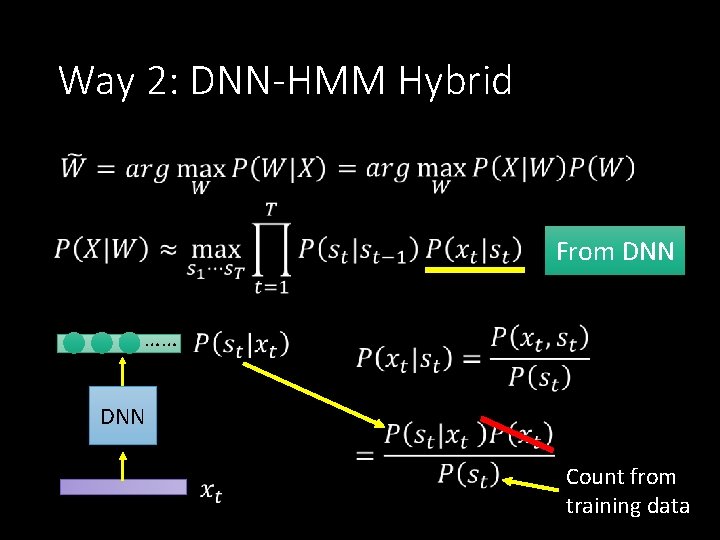

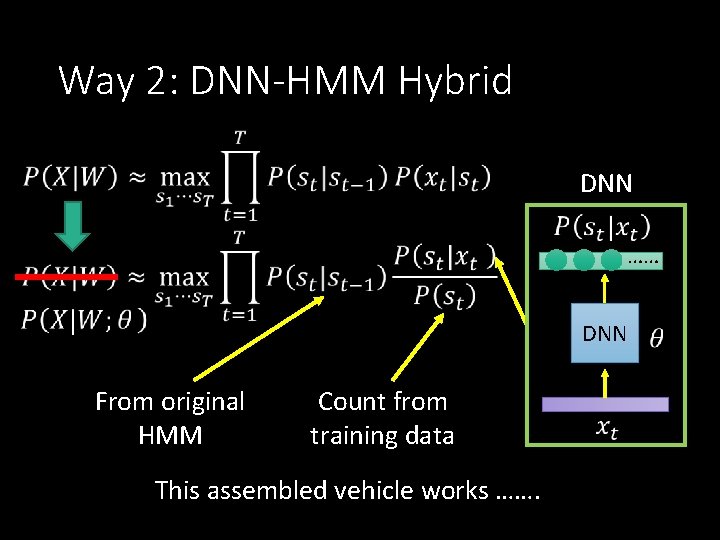

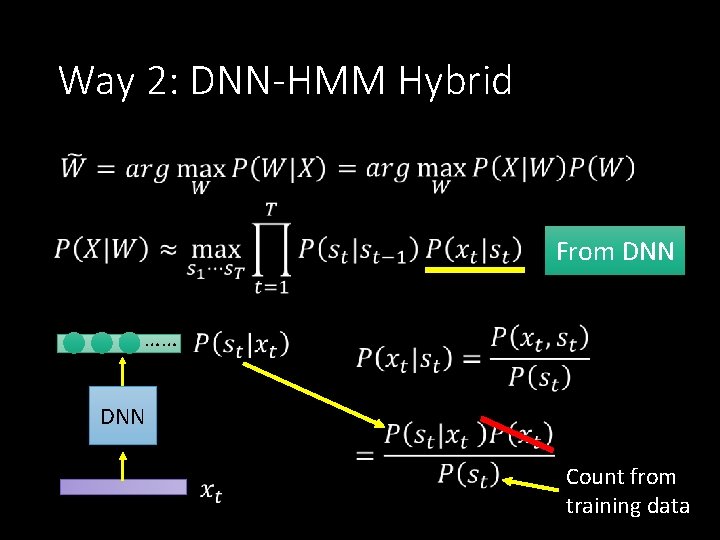

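Way 2: DNN-HMM Hybrid • The HMM needs the emission probability P(x|a), but the DNN gives the posterior P(a|x). Bayes' rule converts one into the other:

$$P(x \mid a) = \frac{P(a \mid x)\, P(x)}{P(a)}$$

P(a|x) comes from the DNN, and P(a) is counted from the training data. P(x) is the same for every state, so it can be ignored when comparing states.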

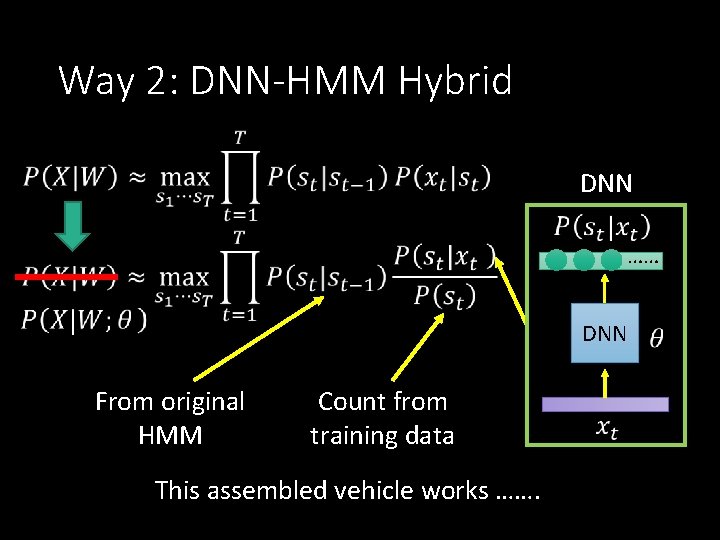

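Way 2: DNN-HMM Hybrid • Substituting P(x_t|s_t) = P(s_t|x_t)P(x_t)/P(s_t) into the acoustic model:

$$P(X \mid W) \approx \max_{s_1 \cdots s_T} \prod_{t=1}^{T} P(s_t \mid s_{t-1})\, \frac{P(s_t \mid x_t)\, P(x_t)}{P(s_t)}$$

The transition P(s_t|s_{t-1}) comes from the original HMM, P(s_t|x_t) comes from the DNN, and P(s_t) is counted from the training data; the factor P(x_t) is the same for every W and can be dropped. This assembled vehicle works …….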
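The conversion from DNN posteriors to HMM emission scores can be sketched in NumPy. It assumes frame-level state alignments of the training data are available for counting P(s), and it works in the log domain; the function names and the probability floor are hypothetical choices for this sketch.

```python
import numpy as np


def state_priors(alignment, num_states):
    """P(s): relative frequency of each state in the training alignments."""
    counts = np.bincount(alignment, minlength=num_states).astype(float)
    return counts / counts.sum()


def scaled_log_likelihoods(posteriors, priors, floor=1e-10):
    """log P(x_t|s) up to a per-frame constant: log P(s|x_t) - log P(s).

    posteriors: (T, S) DNN outputs P(s | x_t); priors: (S,).
    log P(x_t) is omitted because it is the same for every state.
    The floor avoids log(0) (an implementation choice).
    """
    return np.log(np.maximum(posteriors, floor)) - np.log(np.maximum(priors, floor))


priors = state_priors(np.array([0, 0, 0, 1]), 2)          # -> [0.75, 0.25]
scores = scaled_log_likelihoods(np.array([[0.6, 0.4]]), priors)
```

In this toy example the DNN prefers state 0 (0.6 vs 0.4), but after dividing by the priors state 1 scores higher, because state 1 is rarer in the training data. These scores can then replace log P(x_t|s) in the Viterbi search.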

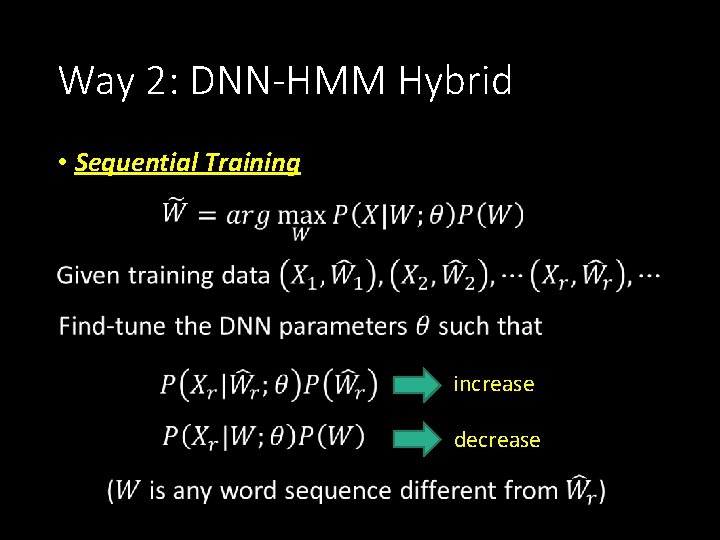

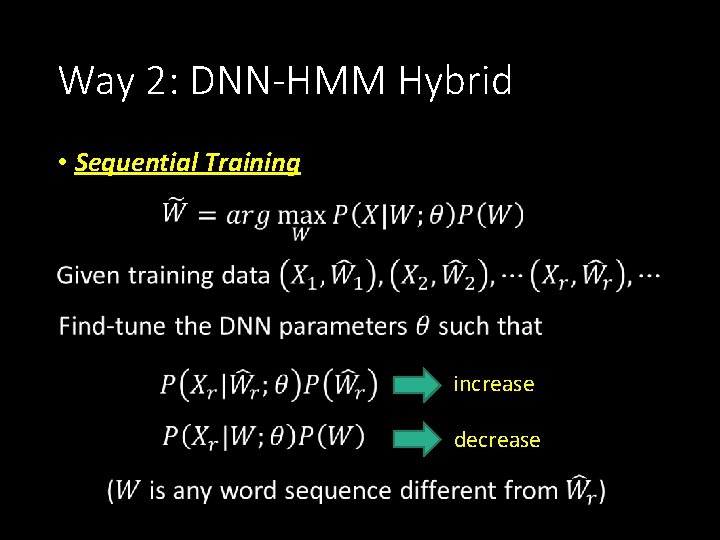

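Way 2: DNN-HMM Hybrid • Sequential Training: fine-tune the DNN on whole utterances, so that for each training utterance X^r with correct transcription Ŵ^r, P(X^r|Ŵ^r)P(Ŵ^r) increases, while P(X^r|W)P(W) for every other word sequence W ≠ Ŵ^r decreases.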

How to use Deep Learning? Way 3: End-to-end

Way 3: End-to-end - Character • Input: acoustic features (spectrograms). • Output: characters (and space) + null (~). • No phonemes and no lexicon, so there is no OOV (out-of-vocabulary) problem. Ref: A. Hannun, C. Case, J. Casper, B. Catanzaro, G. Diamos, E. Elsen, R. Prenger, S. Satheesh, S. Sengupta, A. Coates, A. Ng, "Deep Speech: Scaling up end-to-end speech recognition", arXiv:1412.5567v2, 2014.

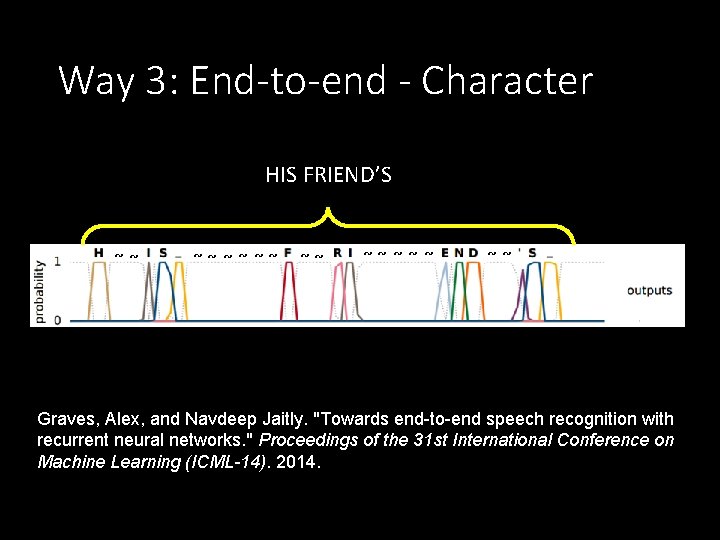

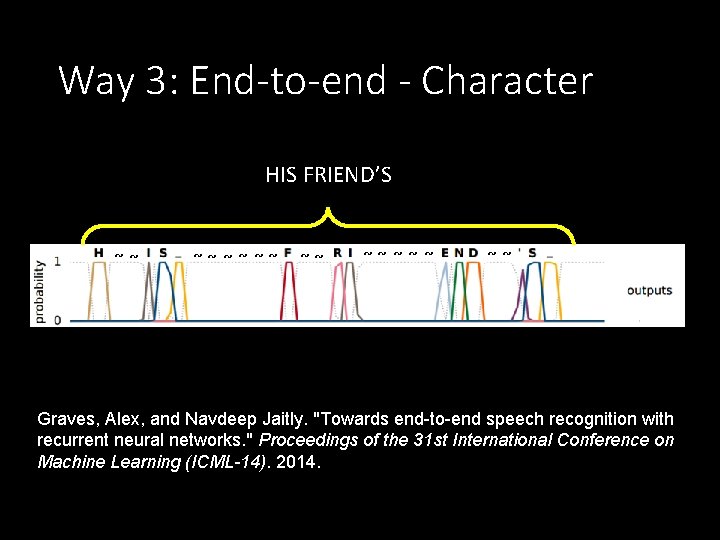

Way 3: End-to-end - Character • Example output: HIS FRIEND’S, with the null symbol (~) emitted on many of the frames between characters. Ref: Graves, Alex, and Navdeep Jaitly. "Towards end-to-end speech recognition with recurrent neural networks." Proceedings of the 31st International Conference on Machine Learning (ICML-14), 2014.
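Both cited systems are trained with connectionist temporal classification (CTC), where the network emits one character or null (~) per frame. The simplest (greedy) way to read out a transcription is to merge consecutive repeated symbols and then drop the nulls, as in the Python sketch below; the function name and the per-frame string are hypothetical, and practical decoders usually use beam search instead.

```python
def ctc_greedy_collapse(frame_labels, blank="~"):
    """Turn per-frame outputs into a transcription, CTC style.

    1) merge consecutive repeated symbols, 2) drop the null symbol.
    """
    out = []
    prev = None
    for c in frame_labels:
        if c != prev and c != blank:
            out.append(c)
        prev = c
    return "".join(out)


frames = "HH~II~SS~ ~FRR~II~EE~NN~DD~''~SS"
print(ctc_greedy_collapse(frames))  # -> HIS FRIEND'S
```

A null between two identical characters is what makes doubled letters possible: "LL~L" collapses to "LL", while "LLL" collapses to "L".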

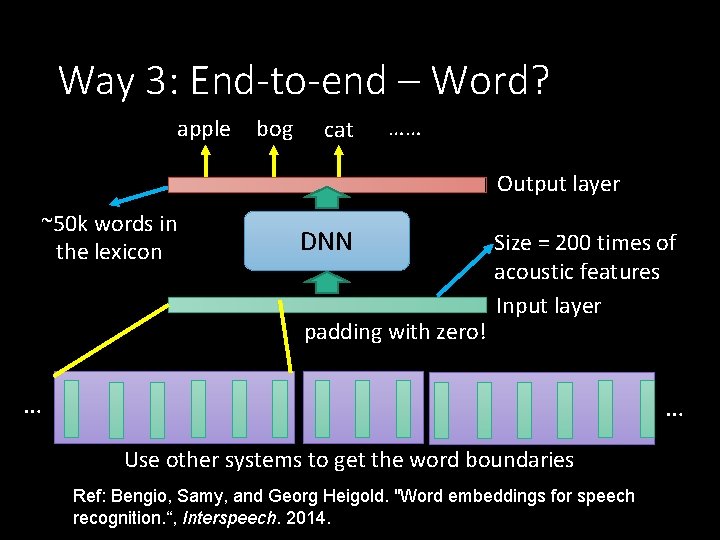

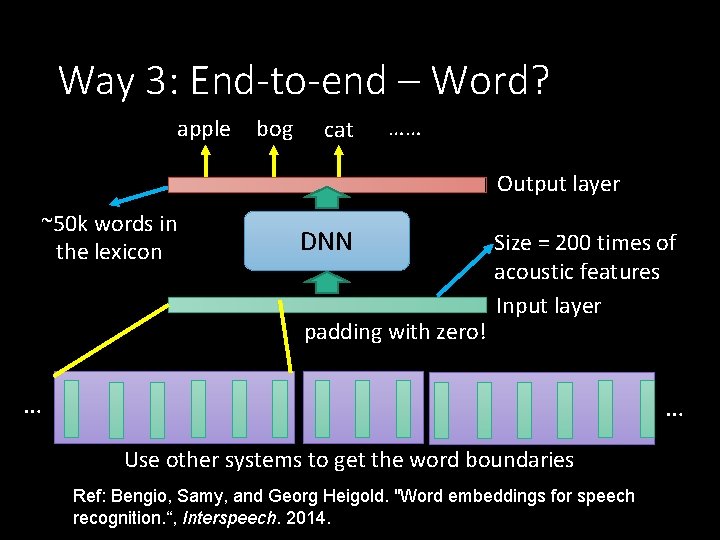

Way 3: End-to-end – Word? • Output layer: one unit per word in the lexicon, ~50k words (apple, bog, cat, ……). • Input layer: the acoustic features of one word segment, padded with zeros to a fixed size of 200 acoustic feature vectors. • Other systems are used to get the word boundaries. Ref: Bengio, Samy, and Georg Heigold. "Word embeddings for speech recognition." Interspeech, 2014.
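Turning a variable-length word segment into a fixed-size DNN input can be sketched as below. The sketch assumes zeros are appended after the segment and that longer segments are truncated; neither detail is stated on the slide, and the function name and feature size are hypothetical.

```python
import numpy as np


def word_segment_to_input(segment, max_frames=200):
    """Zero-pad a (num_frames, D) word segment to max_frames and flatten it.

    The DNN input size is then max_frames * D. Segments longer than
    max_frames are truncated (an assumption of this sketch).
    """
    num_frames, D = segment.shape
    padded = np.zeros((max_frames, D), dtype=segment.dtype)
    n = min(num_frames, max_frames)
    padded[:n] = segment[:n]
    return padded.reshape(-1)


seg = np.ones((37, 40))  # a 37-frame word with 40-dim features (hypothetical)
v = word_segment_to_input(seg)
print(v.shape)  # -> (8000,)
```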

Why Deep Learning?

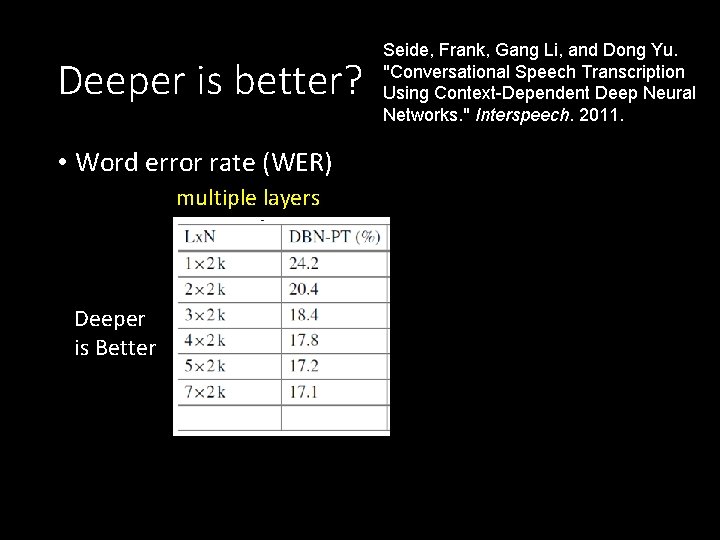

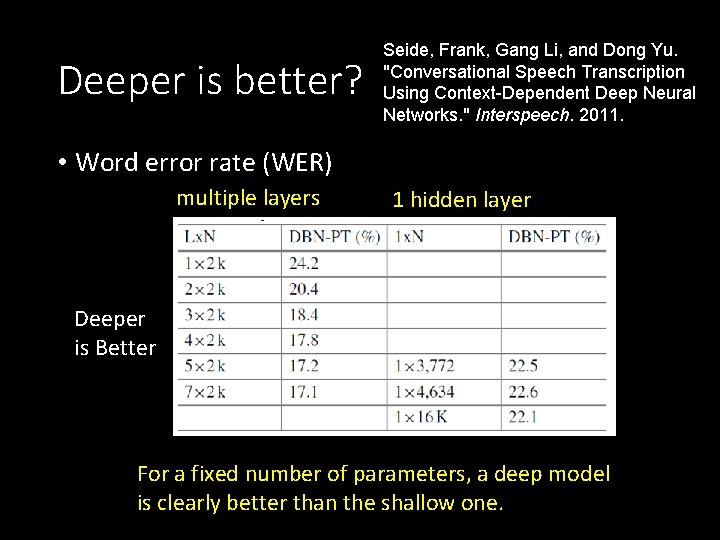

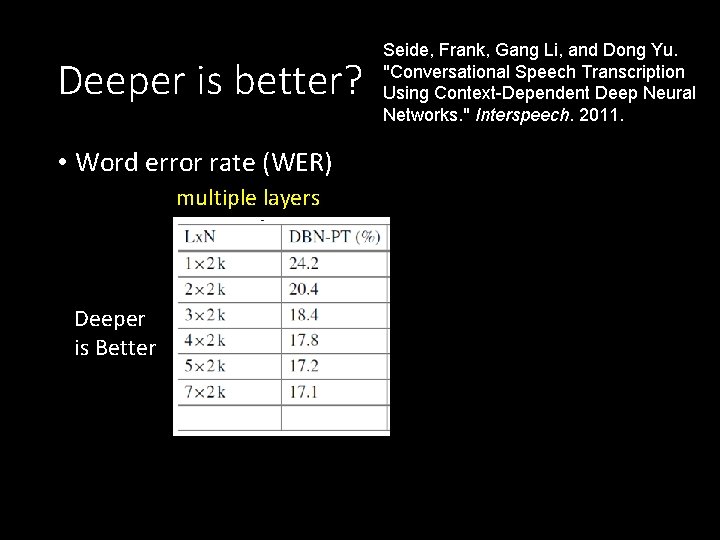

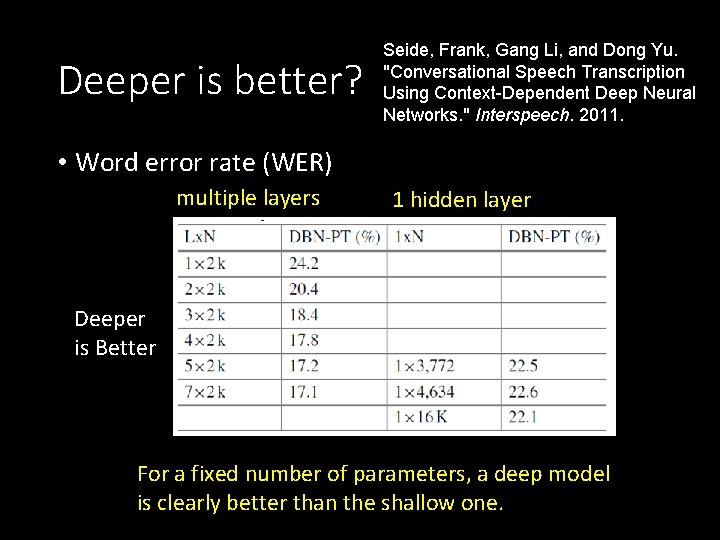


Deeper is better? Seide, Frank, Gang Li, and Dong Yu. "Conversational Speech Transcription Using Context-Dependent Deep Neural Networks." Interspeech, 2011. • Word error rate (WER) of models with multiple hidden layers versus 1 hidden layer: deeper is better. • For a fixed number of parameters, a deep model is clearly better than a shallow one.

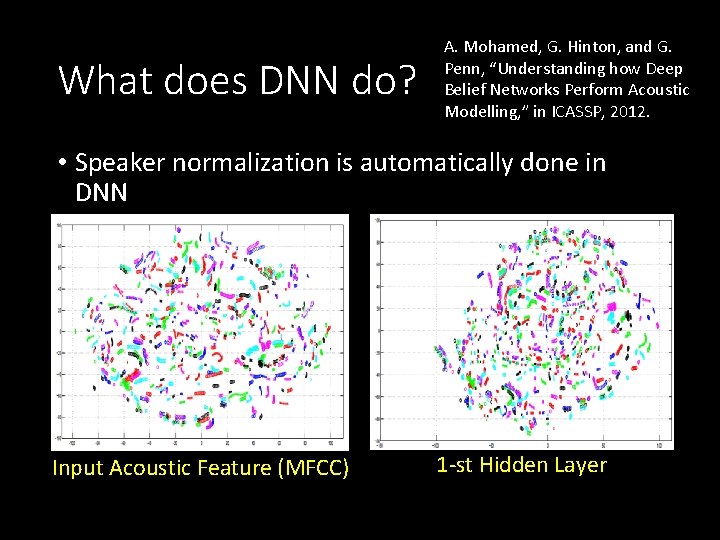

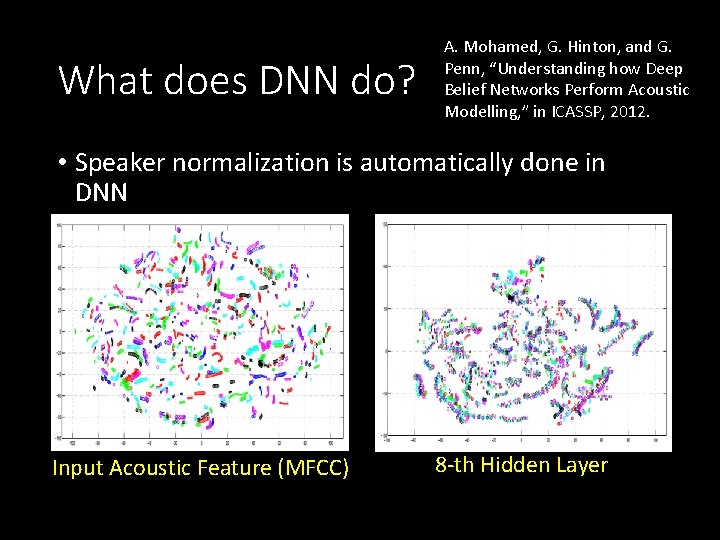

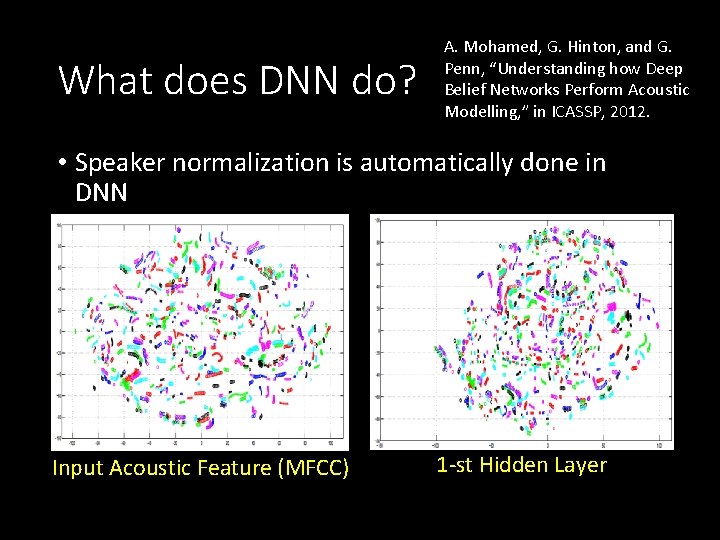

What does DNN do? A. Mohamed, G. Hinton, and G. Penn, "Understanding how Deep Belief Networks Perform Acoustic Modelling," in ICASSP, 2012. • Speaker normalization is automatically done in the DNN. (Figure: input acoustic features (MFCC) compared with the 1st hidden layer.)

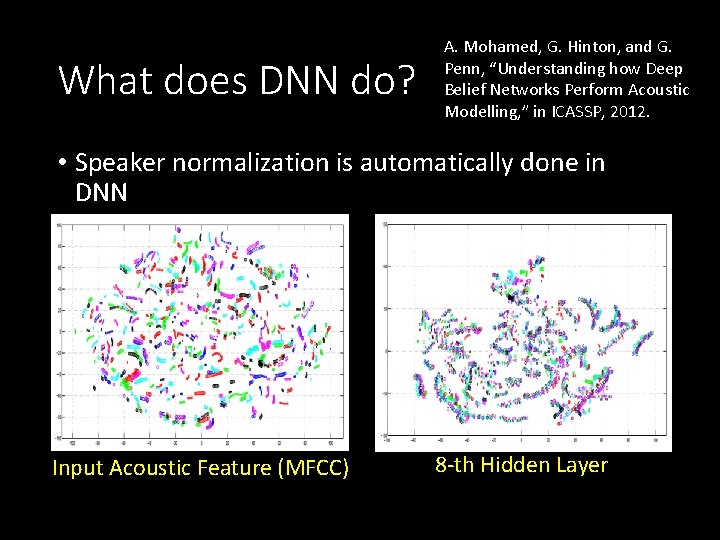

What does DNN do? (continued) • Speaker normalization is automatically done in the DNN. (Figure: input acoustic features (MFCC) compared with the 8th hidden layer.)

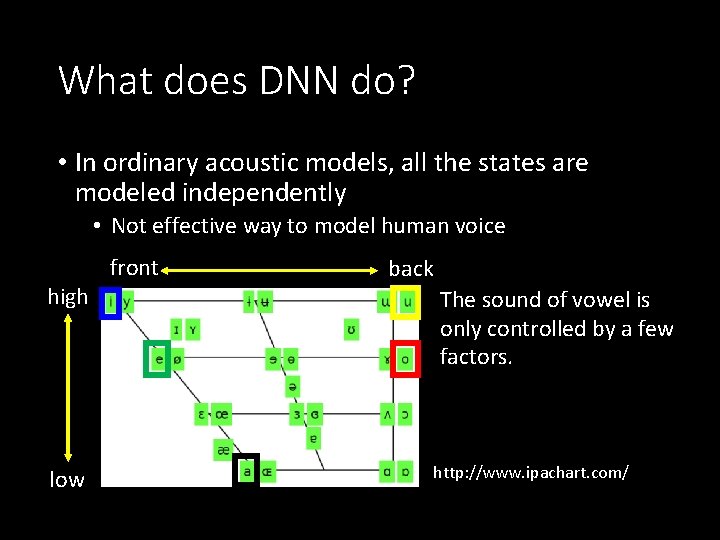

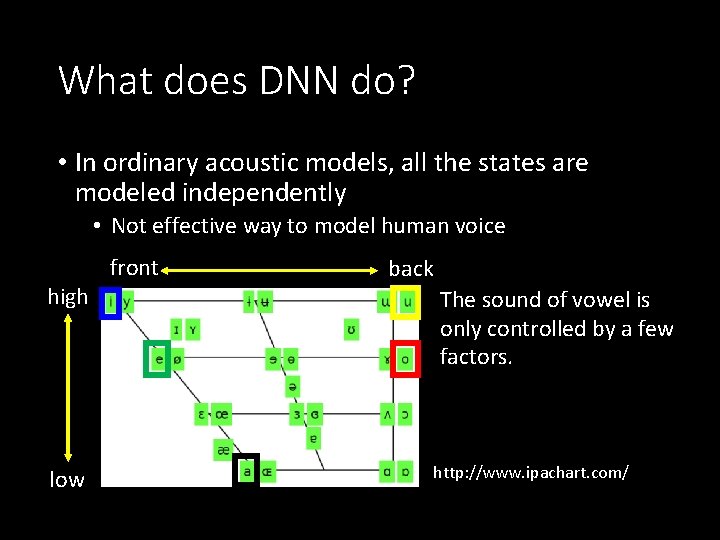

What does DNN do? • In ordinary acoustic models, all the states are modeled independently. • This is not an effective way to model the human voice: the sound of a vowel is controlled by only a few factors, such as tongue position (high/low, front/back). Source: http://www.ipachart.com/

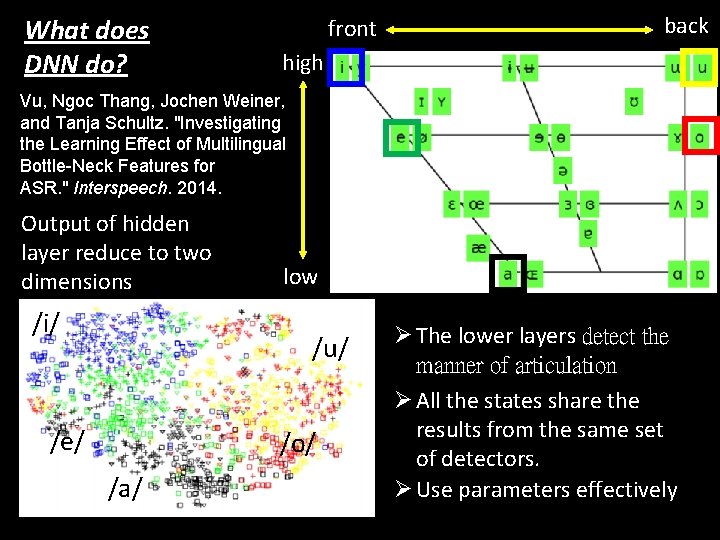

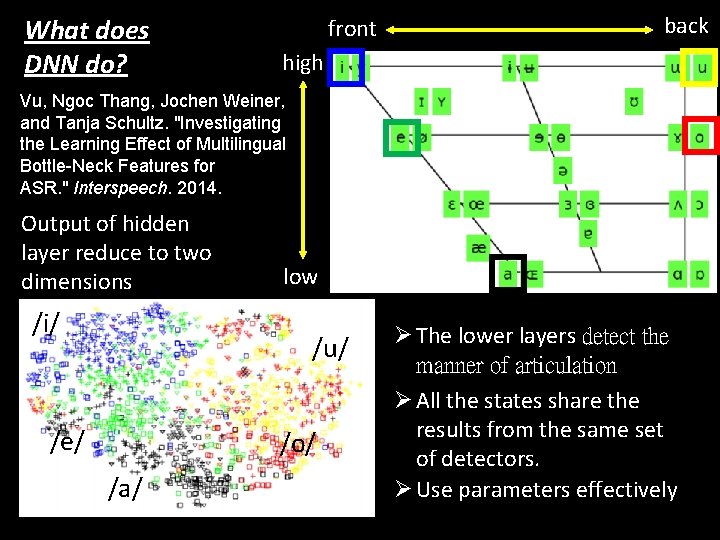

What does DNN do? • Output of a hidden layer reduced to two dimensions: the vowels /i/, /e/, /a/, /o/, /u/ are laid out along front/back and high/low axes, like the vowel chart. (Vu, Ngoc Thang, Jochen Weiner, and Tanja Schultz. "Investigating the Learning Effect of Multilingual Bottle-Neck Features for ASR." Interspeech, 2014.) Ø The lower layers detect the manner of articulation. Ø All the states share the results from the same set of detectors. Ø The parameters are used effectively.

Speaker Adaptation

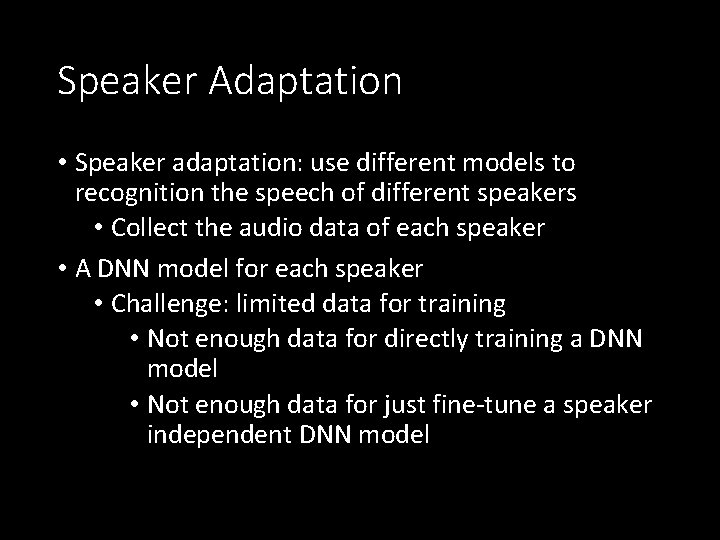

Speaker Adaptation • Speaker adaptation: use different models to recognize the speech of different speakers. • Collect the audio data of each speaker. • Build a DNN model for each speaker. • Challenge: limited data for training. • Not enough data to train a DNN model directly. • Not enough data even to just fine-tune a speaker-independent DNN model.

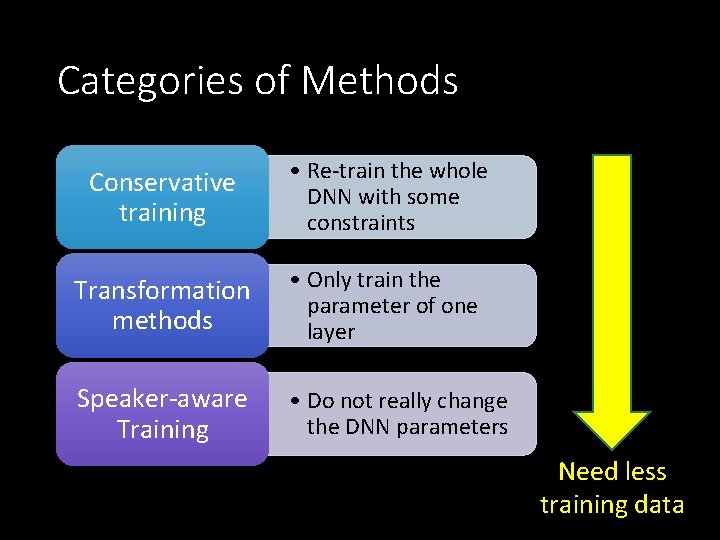

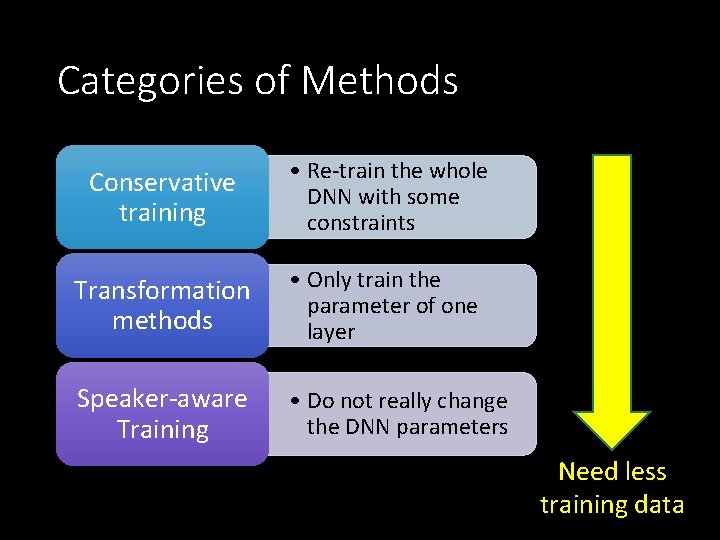

Categories of Methods • Conservative training: re-train the whole DNN with some constraints. • Transformation methods: only train the parameters of one layer. • Speaker-aware training: do not really change the DNN parameters. Going down this list, the methods need less training data.

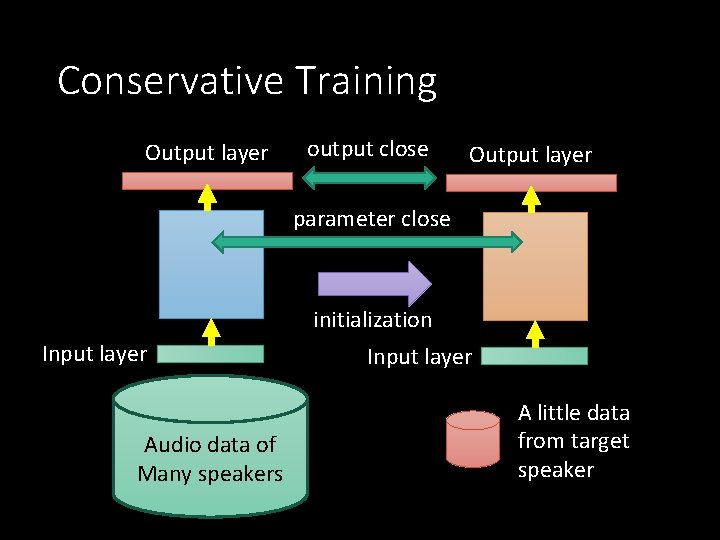

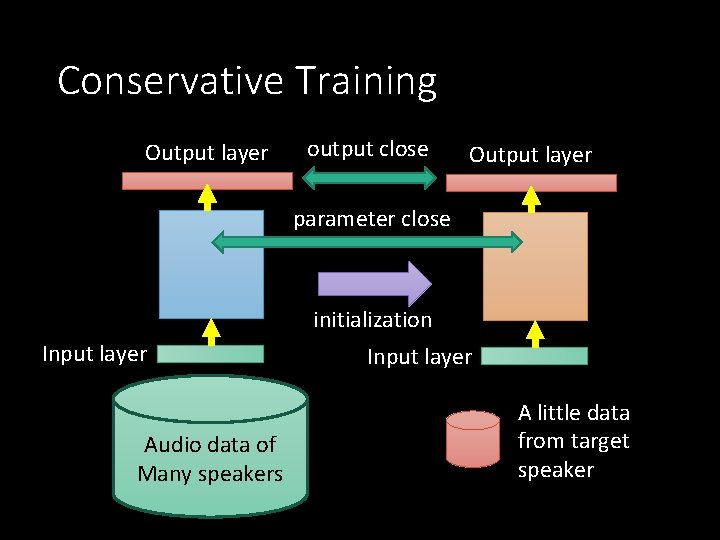

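Conservative Training • First train a DNN on audio data of many speakers and use it as the initialization; then re-train it with a little data from the target speaker. • Constraints keep the re-training conservative: the output of the adapted model should stay close to that of the original model, and/or its parameters should stay close to the original parameters.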

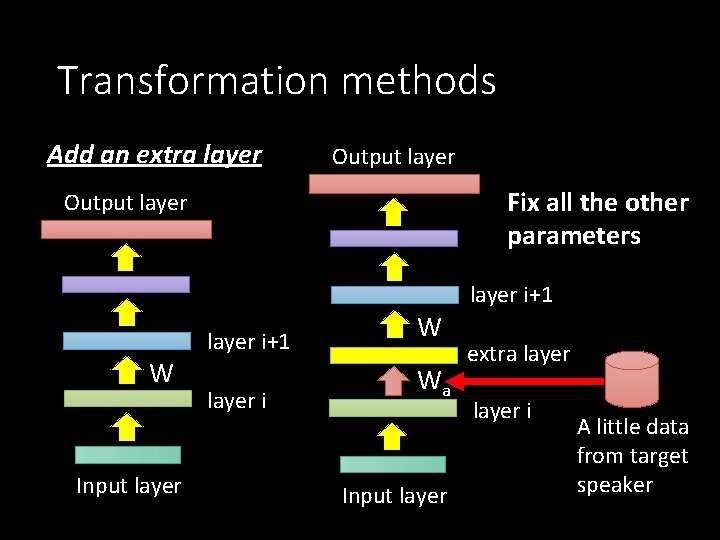

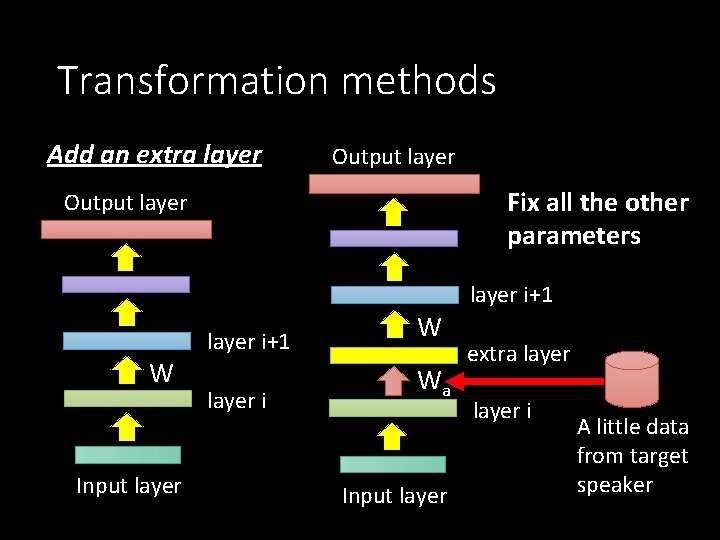

Transformation methods • Add an extra layer with weights Wa between layer i and layer i+1 (originally connected by W). • Fix all the other parameters and train only Wa with a little data from the target speaker.

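Transformation methods • Add the extra layer between the input and the first layer. • With splicing, there are two options: a larger Wa applied to the whole spliced input, when there is more data; or a smaller Wa applied to each frame separately and shared across the frames, when there is less data.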
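The two options can be compared in NumPy. The sketch assumes a spliced input of (2c+1) frames of dimension D; `full_transform` and `shared_transform` are hypothetical names. Sharing a small D × D matrix across frames is the same as using a block-diagonal large Wa, which is why it needs far fewer parameters.

```python
import numpy as np


def full_transform(spliced, Wa_big):
    """One large Wa applied to the whole spliced vector: ((2c+1)D)^2 parameters."""
    return Wa_big @ spliced


def shared_transform(spliced, Wa_small, D):
    """One small D x D Wa applied to every frame in the splice window (shared)."""
    frames = spliced.reshape(-1, D)            # (2c+1, D)
    return (frames @ Wa_small.T).reshape(-1)   # Wa_small @ frame, for each frame


rng = np.random.default_rng(0)
D, num_frames = 3, 5
A = rng.standard_normal((D, D))
v = rng.standard_normal(D * num_frames)
# The shared version equals a block-diagonal large Wa built from A.
same = np.allclose(shared_transform(v, A, D), full_transform(v, np.kron(np.eye(num_frames), A)))
print(same)  # -> True
```

For D = 39 and 4 context frames on each side, the full Wa has 351 × 351 = 123,201 parameters, while the shared one has 39 × 39 = 1,521.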

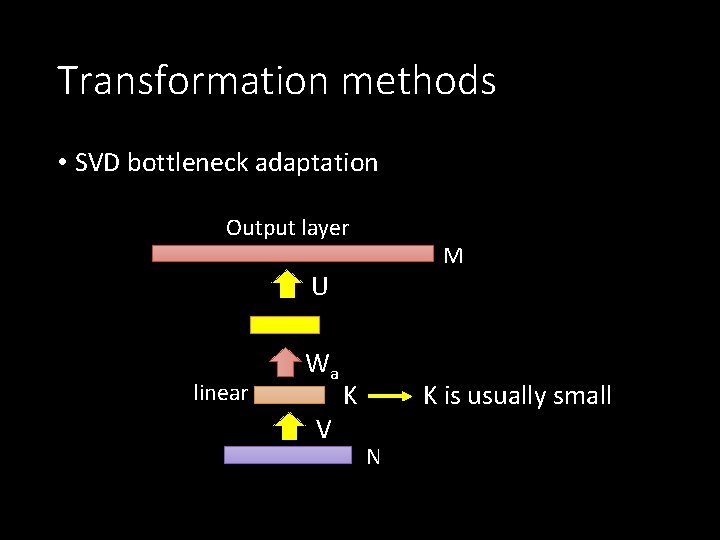

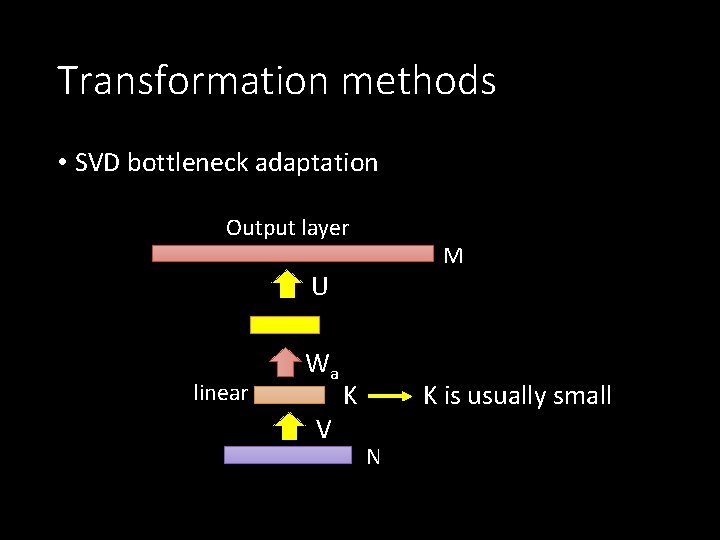

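Transformation methods • SVD bottleneck adaptation: factorize the output weights as W ≈ UV (U: M × K, V: K × N) with a linear bottleneck of size K, and insert Wa inside the bottleneck, between V and U. Since K is usually small, Wa (K × K) has few parameters and can be trained with little data.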

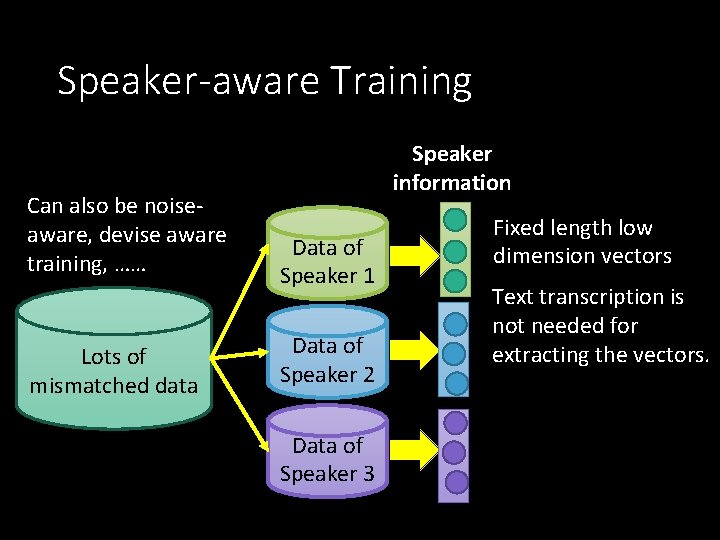

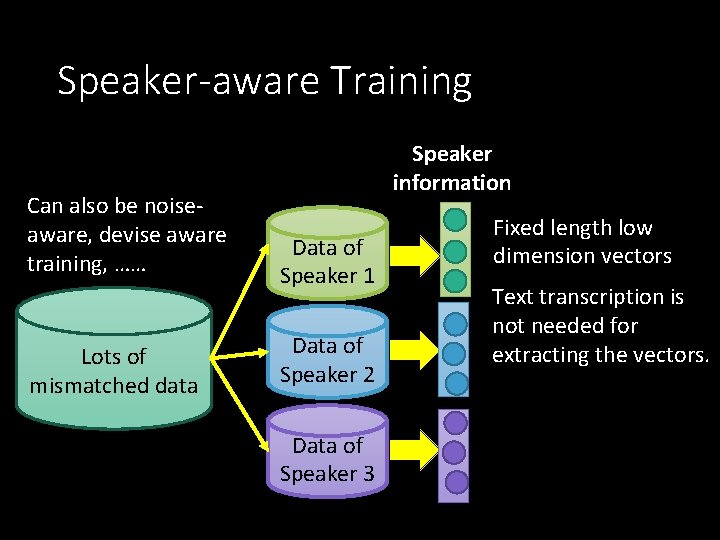

Speaker-aware Training • From lots of mismatched data (data of speaker 1, speaker 2, speaker 3, ……), extract speaker information as fixed-length, low-dimension vectors. • Text transcription is not needed for extracting the vectors. • The same idea can also be used for noise-aware training, device-aware training, ……

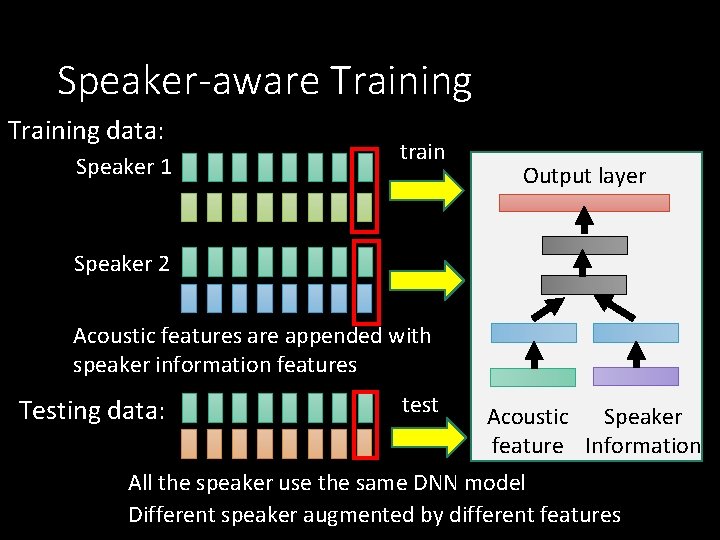

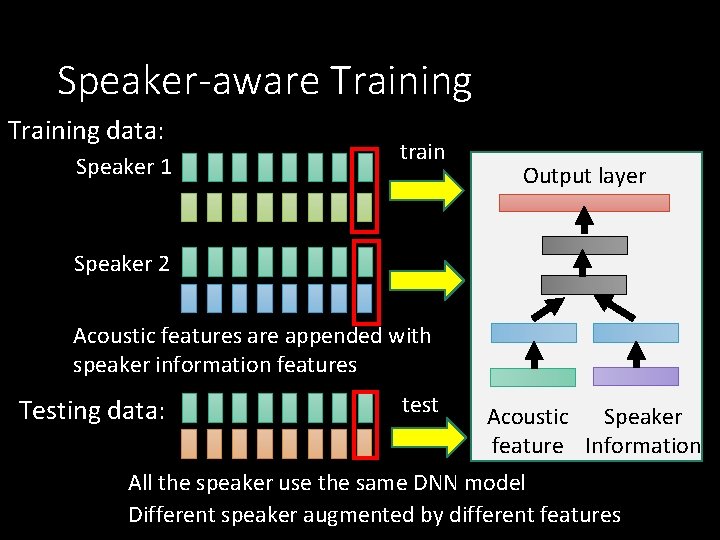

Speaker-aware Training • For both the training data (speaker 1, speaker 2, ……) and the testing data, the acoustic features are appended with speaker-information features before entering the DNN. • All the speakers use the same DNN model; different speakers are augmented by different features.
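Appending the speaker information to every frame is a simple concatenation, sketched below. It assumes one fixed-length vector per speaker has already been extracted (i-vectors are a common choice, assumed here rather than taken from the slides); the function name is hypothetical.

```python
import numpy as np


def append_speaker_vector(frames, speaker_vec):
    """Append the same fixed-length speaker vector to every frame.

    frames: (T, D) acoustic features of one utterance;
    speaker_vec: (S,) speaker-information features of its speaker.
    Returns a (T, D + S) array that is fed to the shared DNN.
    """
    T = frames.shape[0]
    return np.concatenate([frames, np.tile(speaker_vec, (T, 1))], axis=1)


x = np.zeros((4, 39))             # 4 frames of 39-dim features
spk = np.array([0.5, -1.0, 2.0])  # hypothetical 3-dim speaker vector
print(append_speaker_vector(x, spk).shape)  # -> (4, 42)
```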

Multi-task Learning

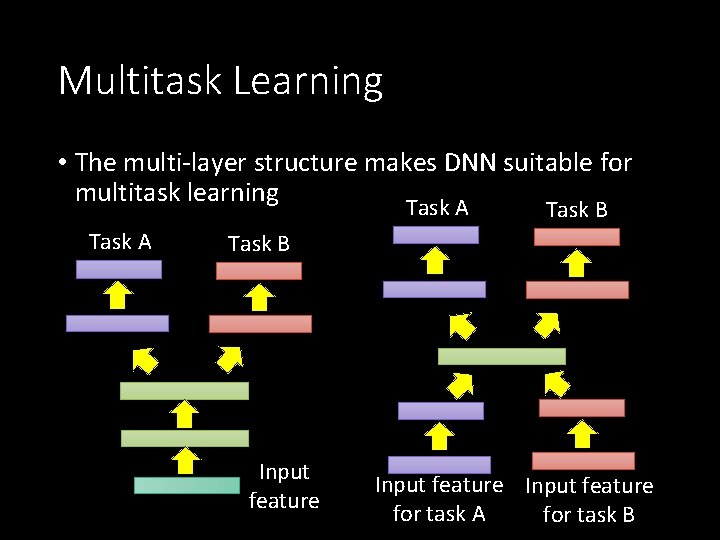

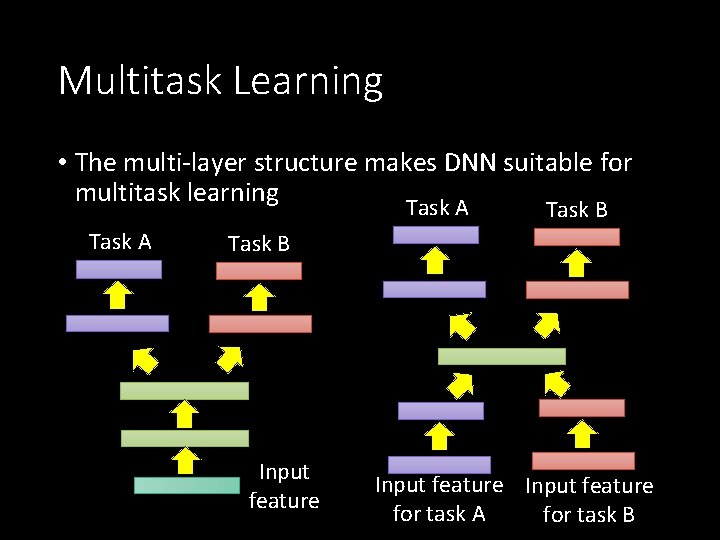

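Multitask Learning • The multi-layer structure makes the DNN suitable for multitask learning: the lower layers are shared, and task A and task B each have their own output layers. The inputs can also differ, with separate input features for task A and for task B feeding the shared layers.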
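A shared-hidden-layer network with one output layer per task can be sketched in NumPy. The single shared ReLU layer, the missing biases, and the task names and sizes are simplifying assumptions; `MultitaskNet` is a hypothetical class showing the forward pass only.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()


class MultitaskNet:
    """Shared hidden layer with one softmax output layer per task."""

    def __init__(self, in_dim, hidden_dim, task_sizes, seed=0):
        rng = np.random.default_rng(seed)
        self.W_shared = rng.standard_normal((hidden_dim, in_dim)) * 0.1
        self.heads = {task: rng.standard_normal((n, hidden_dim)) * 0.1
                      for task, n in task_sizes.items()}

    def forward(self, x, task):
        h = relu(self.W_shared @ x)           # shared by every task
        return softmax(self.heads[task] @ h)  # task-specific output layer


net = MultitaskNet(39, 128, {"task_A": 6, "task_B": 4})  # hypothetical sizes
```

During training, the losses of both tasks backpropagate into `W_shared`, which is how data for one task can help the other.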

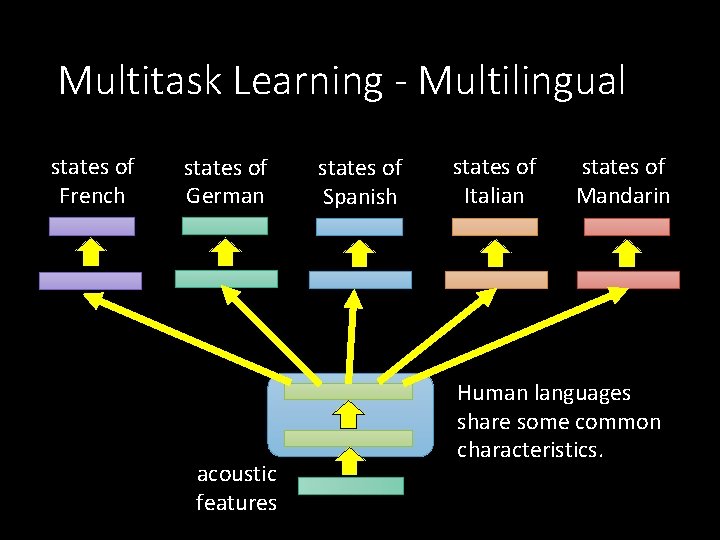

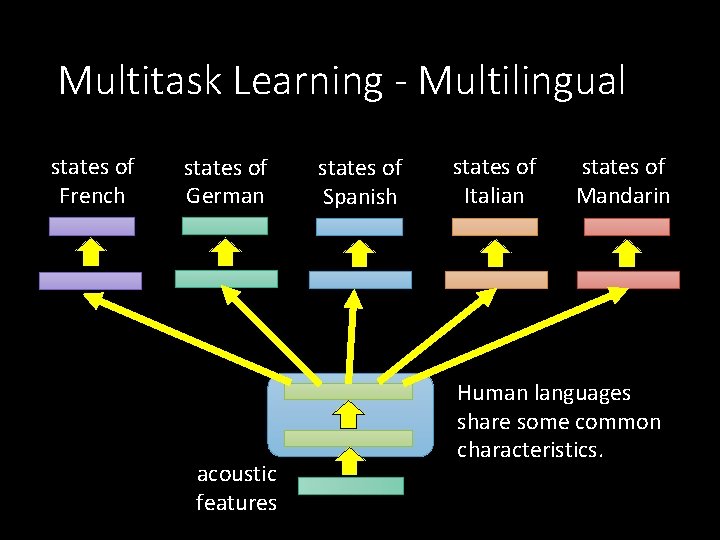

Multitask Learning - Multilingual • The acoustic features go through shared hidden layers, followed by separate output layers for the states of French, German, Spanish, Italian, and Mandarin. • Human languages share some common characteristics.

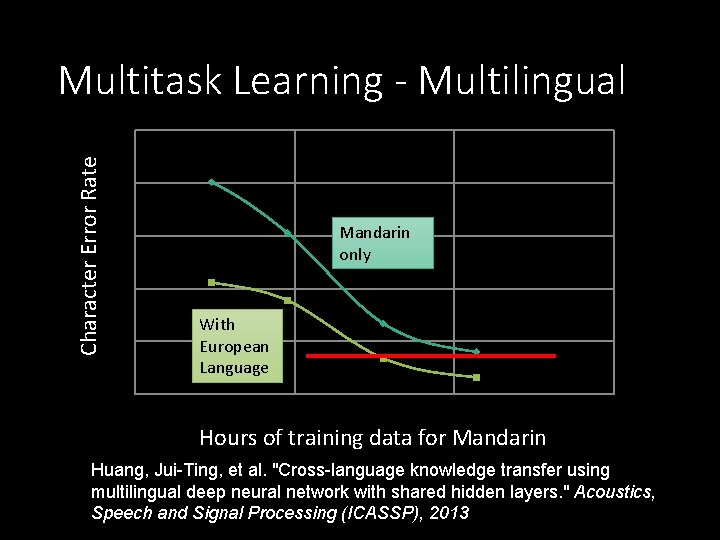

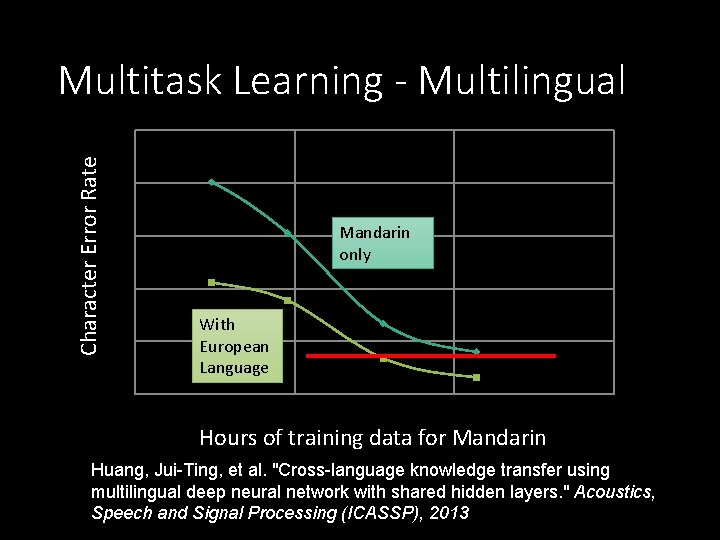

Multitask Learning - Multilingual (Figure: character error rate, from 25 to 50, versus hours of training data for Mandarin, from 1 to 1000; curves for "Mandarin only" and "With European Language".) Huang, Jui-Ting, et al. "Cross-language knowledge transfer using multilingual deep neural network with shared hidden layers." Acoustics, Speech and Signal Processing (ICASSP), 2013.

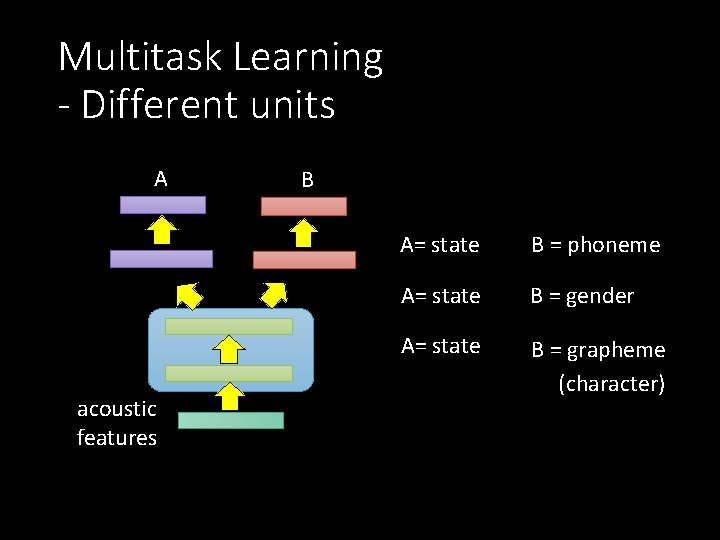

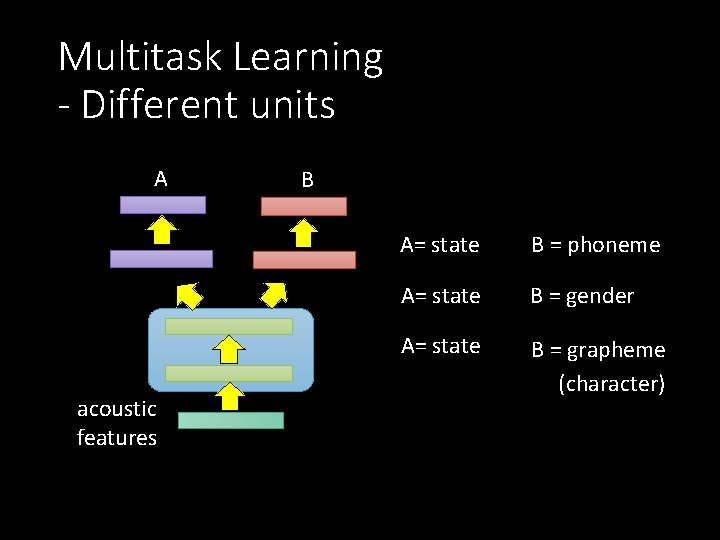

Multitask Learning - Different units • The acoustic features feed a shared network with two outputs, A and B, for example: A = state and B = phoneme; A = state and B = gender; A = state and B = grapheme (character).

Deep Learning for Acoustic Modeling: New acoustic features

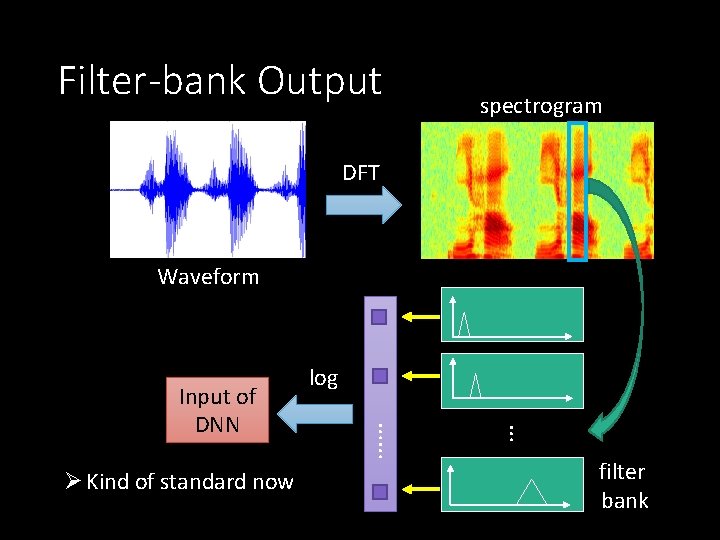

MFCC • Waveform → DFT → spectrogram → log filter bank → DCT → MFCC → input of DNN

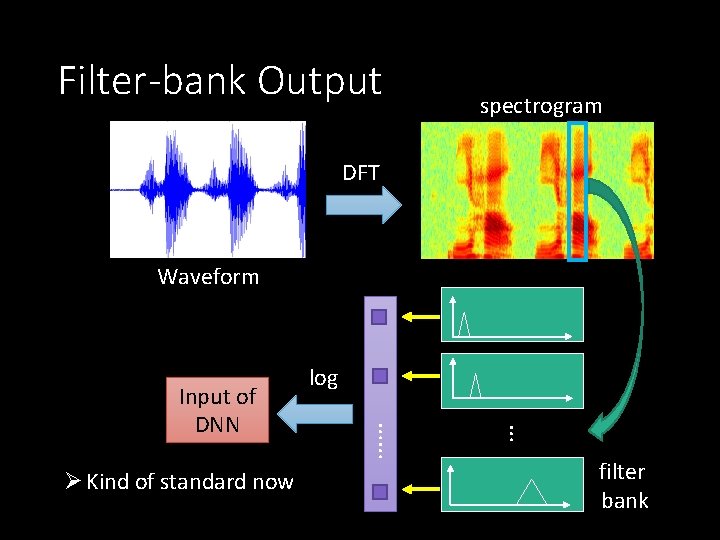

Filter-bank Output • Waveform → DFT → spectrogram → log filter bank → input of DNN (no DCT). Ø Kind of standard now.
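The waveform-to-feature pipelines can be sketched with NumPy. The frame length (25 ms), hop (10 ms), 512-point FFT, 40 mel filters, and 13 cepstral coefficients are common settings assumed here, not values from the slides, and all function names are hypothetical; libraries used in practice add details such as pre-emphasis and liftering. A 39-dim MFCC is commonly these 13 coefficients plus their first and second time derivatives.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(num_filters, n_fft, sample_rate):
    """Triangular filters equally spaced on the mel scale: (num_filters, n_fft//2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), num_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fb = np.zeros((num_filters, n_fft // 2 + 1))
    for i in range(1, num_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):
            fb[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):
            fb[i - 1, k] = (right - k) / max(right - center, 1)
    return fb


def features(signal, sample_rate=16000, frame_len=400, hop=160, n_fft=512,
             num_filters=40, num_ceps=13):
    """Return (power spectrogram, log filter bank, MFCC), one row per frame."""
    # Split the waveform into overlapping, Hamming-windowed frames.
    num_frames = 1 + (len(signal) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(num_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)
    # DFT -> power spectrogram.
    spectrogram = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    # Mel filter bank, then log (small constant avoids log(0)).
    fb = mel_filterbank(num_filters, n_fft, sample_rate)
    log_fbank = np.log(spectrogram @ fb.T + 1e-10)
    # Unnormalized DCT-II over the filter axis -> MFCC (first num_ceps kept).
    n = np.arange(num_filters)
    dct = np.cos(np.pi / num_filters * (n[None, :] + 0.5) * np.arange(num_ceps)[:, None])
    mfcc = log_fbank @ dct.T
    return spectrogram, log_fbank, mfcc


wave = np.random.default_rng(0).standard_normal(16000)  # 1 s of noise at 16 kHz
spec, fbank, mfcc = features(wave)
print(spec.shape, fbank.shape, mfcc.shape)  # -> (98, 257) (98, 40) (98, 13)
```

Each stage's output is one of the DNN input options: `spec` (spectrogram), `fbank` (log filter bank), or `mfcc`.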

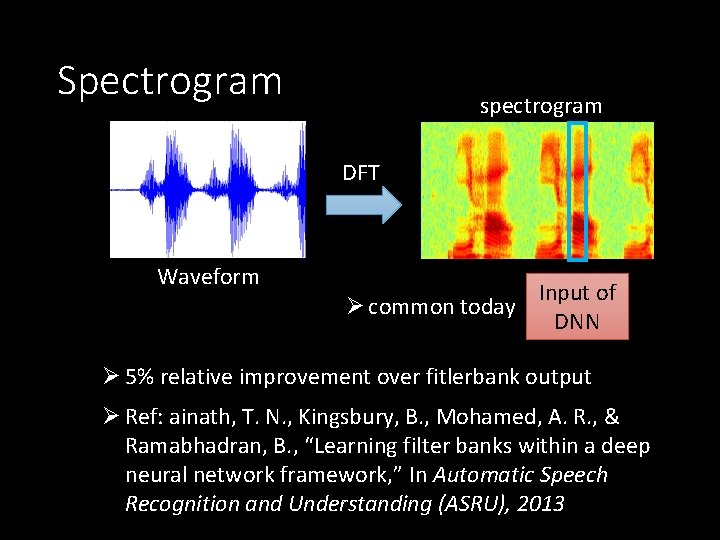

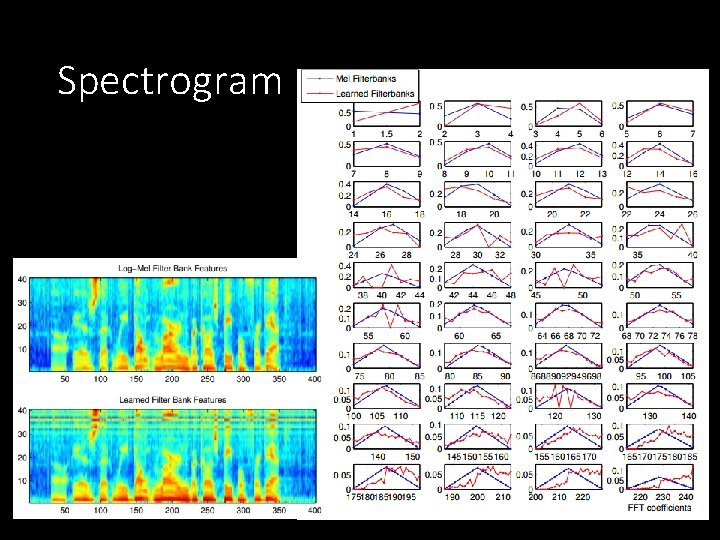

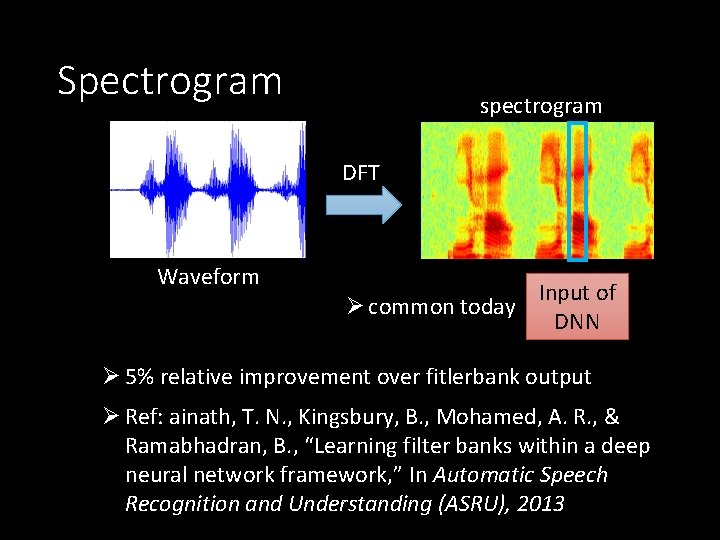

Spectrogram • Waveform → DFT → spectrogram → input of DNN. Ø Common today. Ø 5% relative improvement over filter-bank output. Ø Ref: Sainath, T. N., Kingsbury, B., Mohamed, A. R., & Ramabhadran, B., "Learning filter banks within a deep neural network framework," in Automatic Speech Recognition and Understanding (ASRU), 2013.

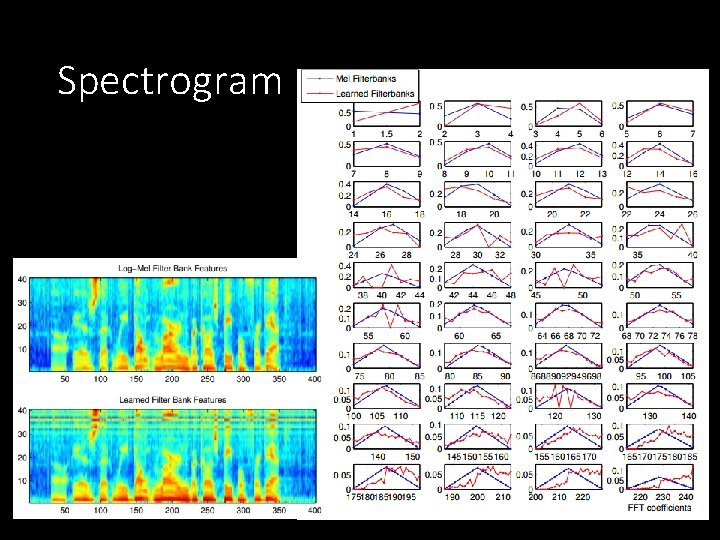

Spectrogram

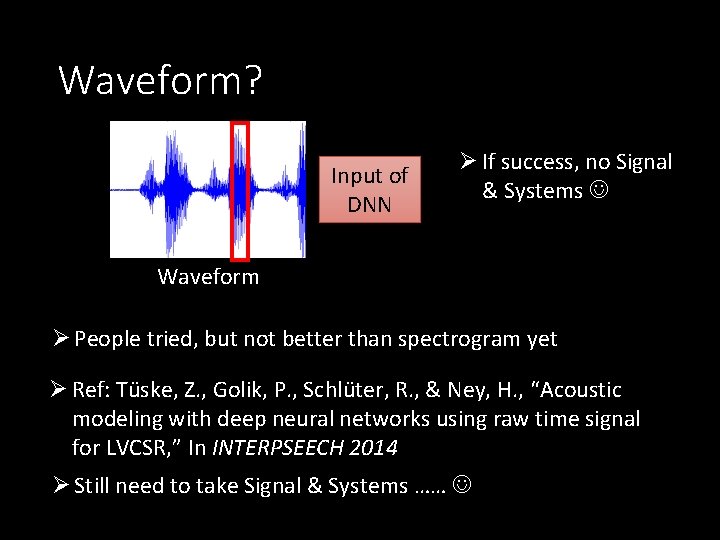

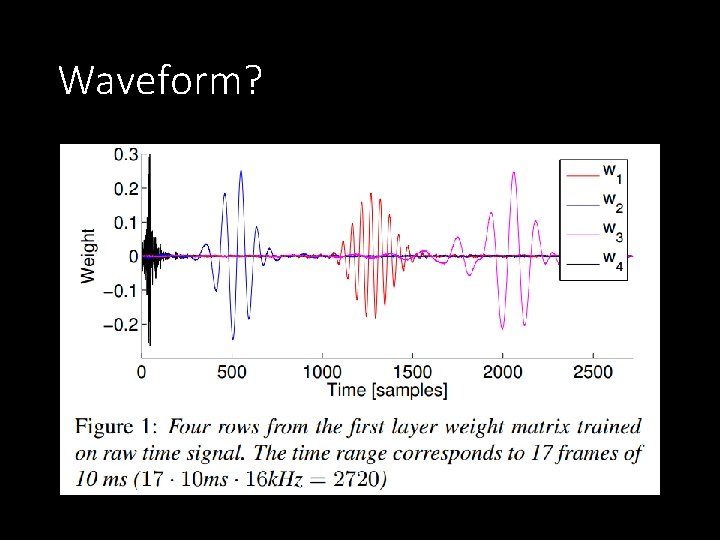

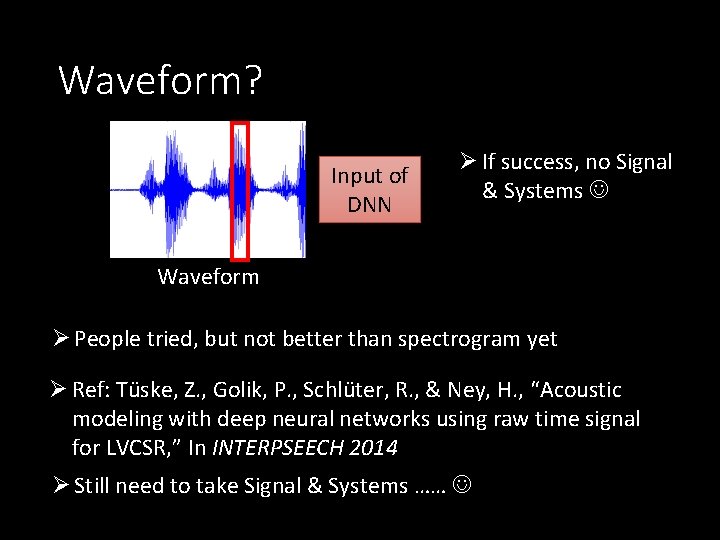

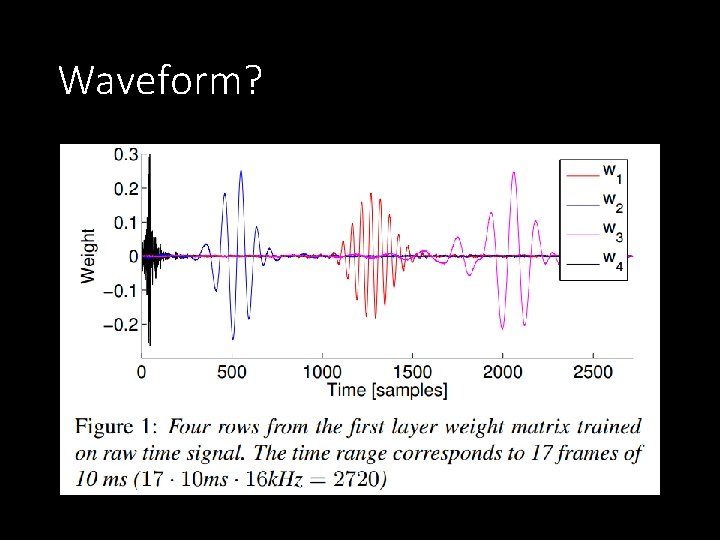

Waveform? • Waveform → input of DNN. Ø If this succeeds, there is no need for Signal & Systems. Ø People have tried, but it is not better than the spectrogram yet. Ø Ref: Tüske, Z., Golik, P., Schlüter, R., & Ney, H., "Acoustic modeling with deep neural networks using raw time signal for LVCSR," in INTERSPEECH 2014. Ø Still need to take Signal & Systems ……


Convolutional Neural Network (CNN)

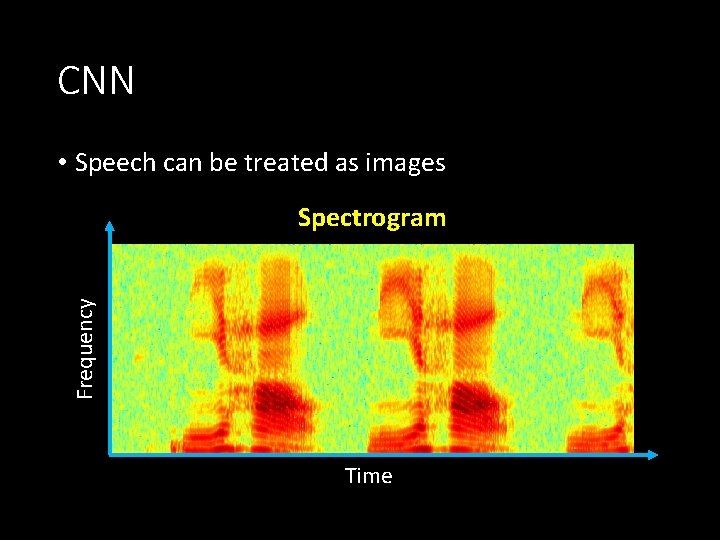

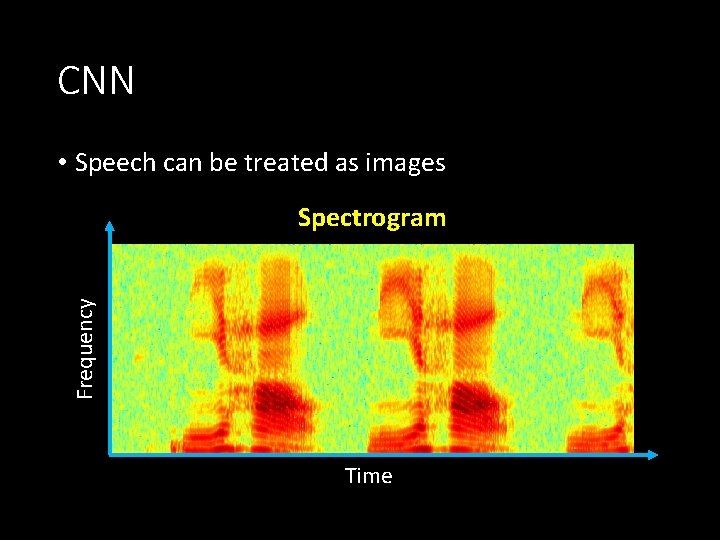

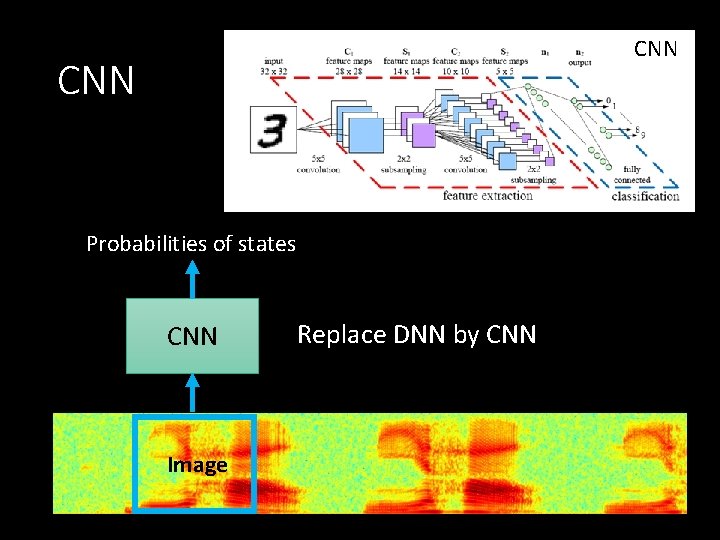

CNN • Speech can be treated as images: the spectrogram is an image with time on one axis and frequency on the other.

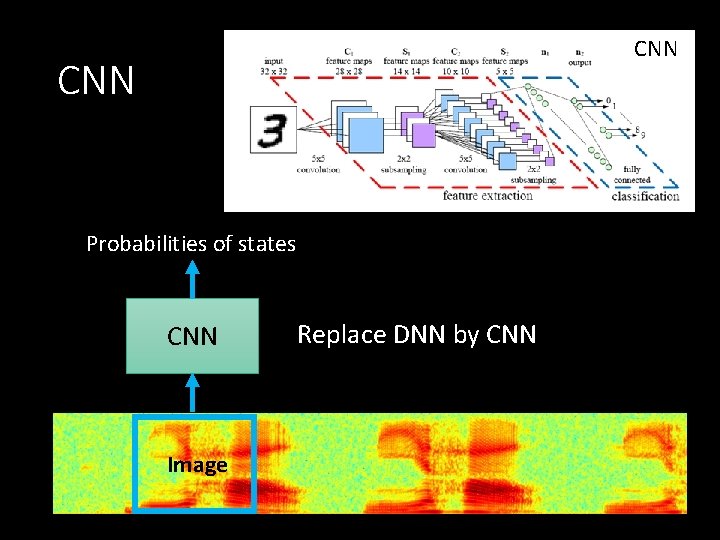

CNN • Replace the DNN by a CNN: the CNN takes the spectrogram as an image and outputs the probabilities of states.

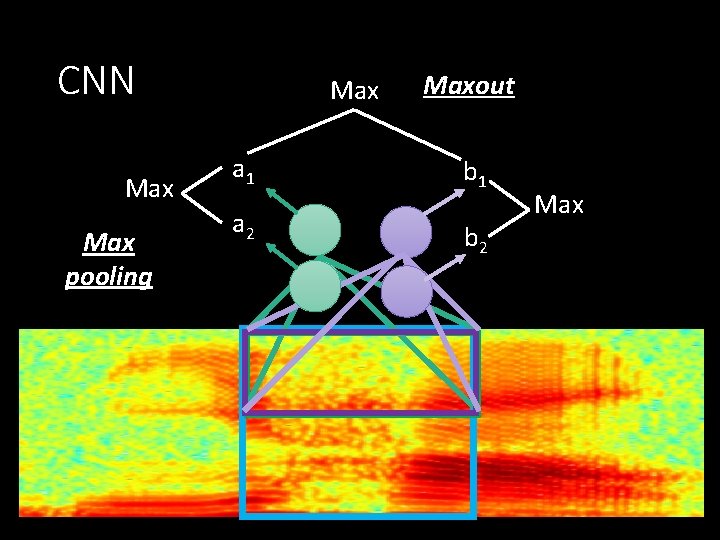

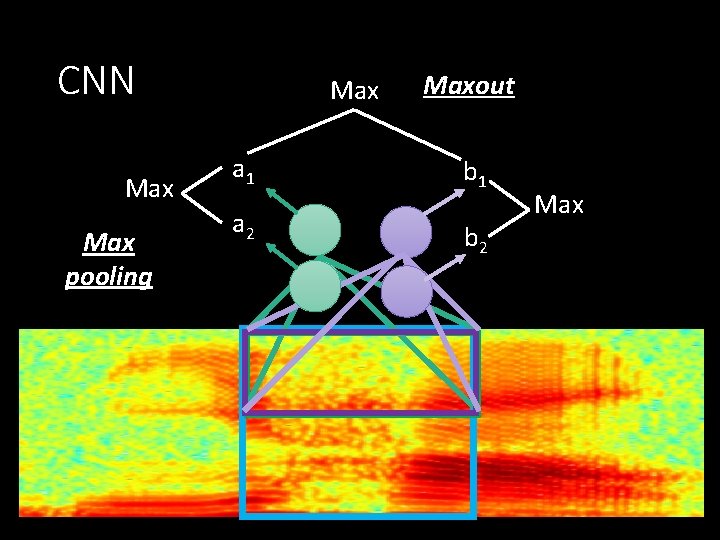

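CNN • Max pooling: take the max of a1 and a2, outputs of the same filter at neighboring positions. • Maxout: take the max of b1 and b2, a group of units in the same layer.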
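Both operations can be sketched in a few lines of NumPy, assuming non-overlapping groups; the function names and example values are hypothetical.

```python
import numpy as np


def max_pool(feature_map, size):
    """Non-overlapping max pooling along the last axis (e.g. frequency)."""
    n = feature_map.shape[-1] // size * size  # drop a ragged tail, if any
    trimmed = feature_map[..., :n]
    return trimmed.reshape(*trimmed.shape[:-1], n // size, size).max(axis=-1)


def maxout(z, group_size):
    """Maxout: split a layer's units into groups and keep each group's max."""
    return z.reshape(-1, group_size).max(axis=-1)


print(max_pool(np.array([1, 5, 2, 3]), 2))         # -> [5 3]
print(maxout(np.array([0.2, -1.0, 3.0, 0.5]), 2))  # -> [0.2 3. ]
```

Max pooling reduces resolution along the feature map; maxout acts as a learned activation function, since the units in each group have their own weights.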

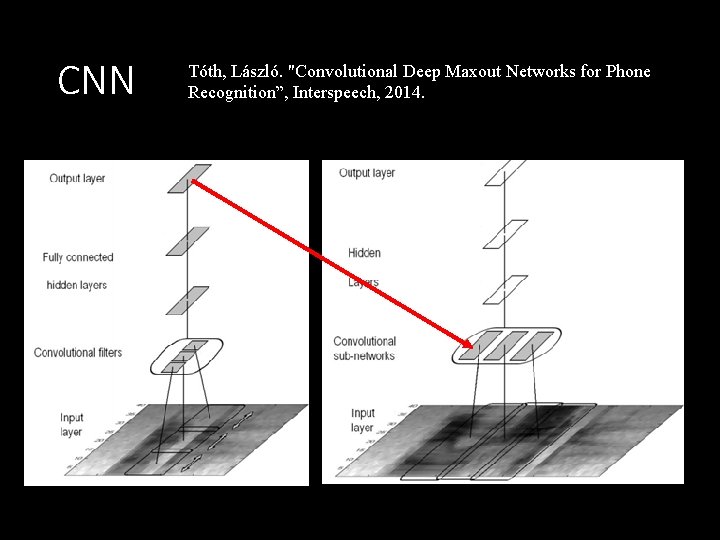

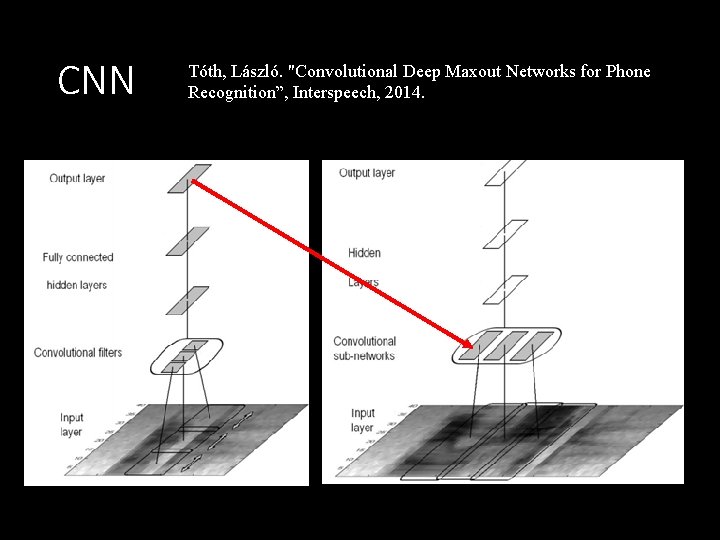

CNN • Ref: Tóth, László. "Convolutional Deep Maxout Networks for Phone Recognition." Interspeech, 2014.

Applications in Acoustic Signal Processing

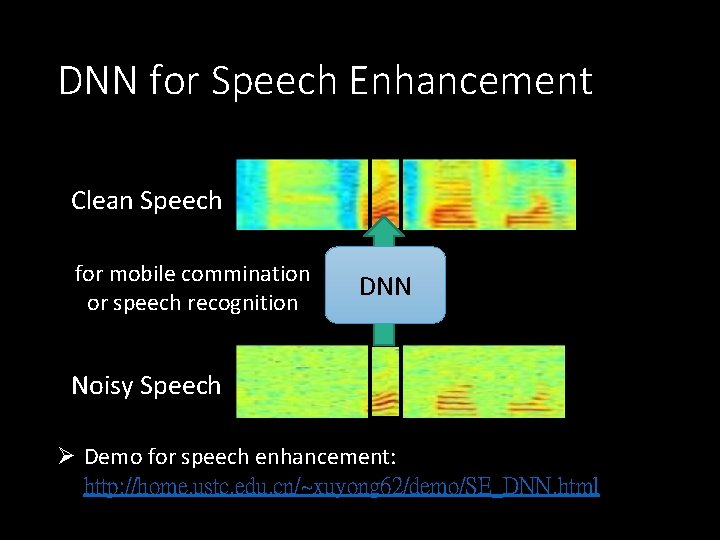

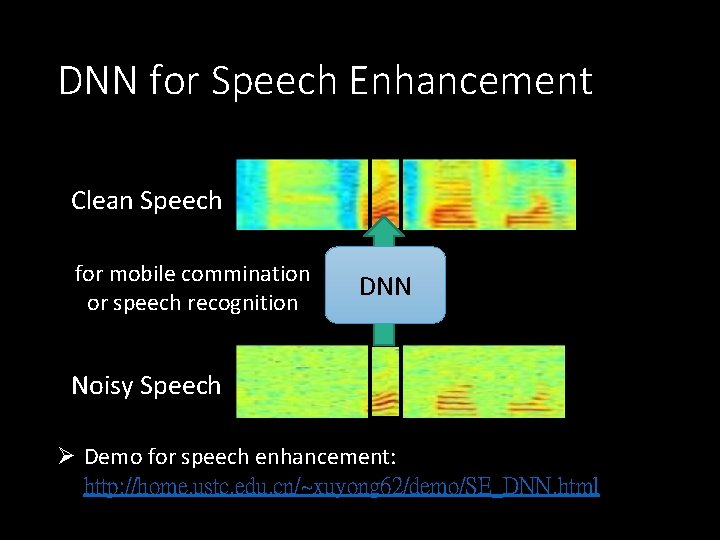

DNN for Speech Enhancement • Noisy speech → DNN → clean speech, for mobile communication or speech recognition. Ø Demo for speech enhancement: http://home.ustc.edu.cn/~xuyong62/demo/SE_DNN.html

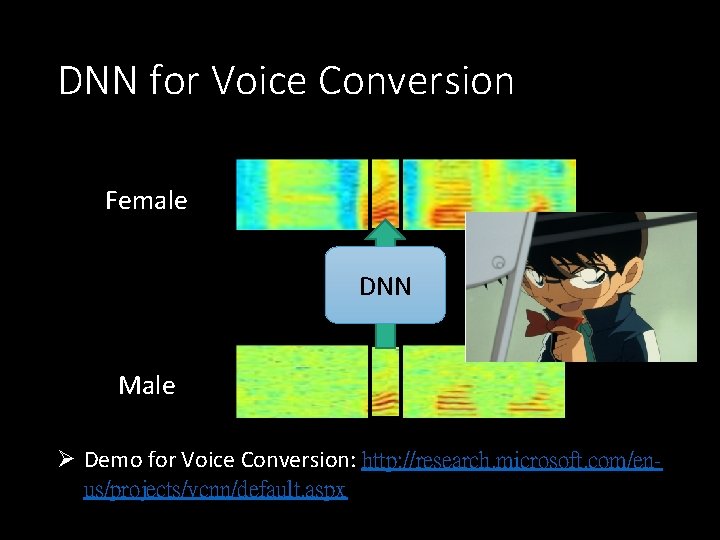

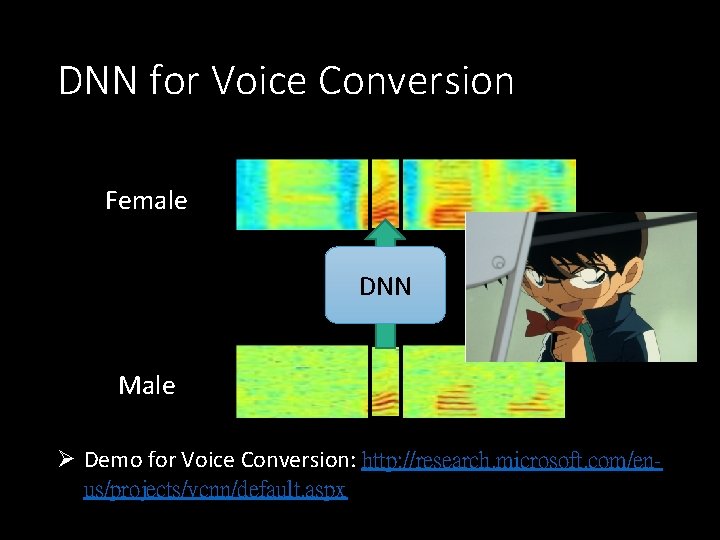

DNN for Voice Conversion • Female voice → DNN → male voice. Ø Demo for voice conversion: http://research.microsoft.com/en-us/projects/vcnn/default.aspx

Concluding Remarks

- Conventional Speech Recognition
- How to use Deep Learning in acoustic modeling?
- Why Deep Learning?
- Speaker Adaptation
- Multi-task Deep Learning
- New acoustic features
- Convolutional Neural Network (CNN)
- Applications in Acoustic Signal Processing

Thank you for your attention!

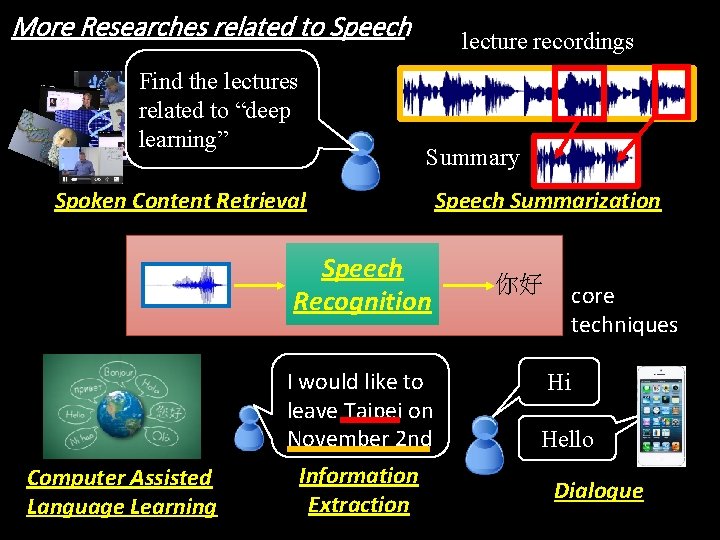

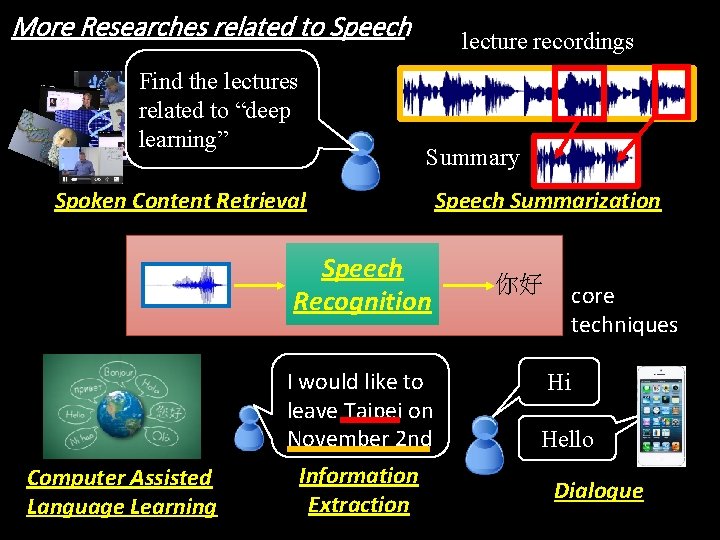

More Research Related to Speech (figure: speech recognition as the core technique, surrounded by related topics) • Spoken Content Retrieval: e.g. find the lectures related to "deep learning" among lecture recordings. • Speech Summarization: e.g. a summary of lecture recordings. • Information Extraction: e.g. from "I would like to leave Taipei on November 2nd". • Computer Assisted Language Learning: e.g. 你好 ("hello"). • Dialogue: e.g. "Hi" → "Hello".