Decoupled Pipelines: Rationale, Analysis, and Evaluation

- Slides: 32

Frederick A. Koopmans, Sanjay J. Patel

Department of Computer Engineering, University of Illinois at Urbana-Champaign

Outline

- Introduction & Motivation
- Background
- DSEP Design
- Average-Case Optimizations
- Experimental Results

Motivation

Why asynchronous?

- No clock skew
- No clock distribution circuitry
- Lower power (potentially)
- Increased modularity

But what about performance?

- What is the architectural benefit of removing the clock?
  - Decoupled pipelines!

Motivation

Advantages of a decoupled pipeline:

- The pipeline achieves average-case performance
- Rarely taken critical paths no longer affect performance
- New potential for average-case optimizations

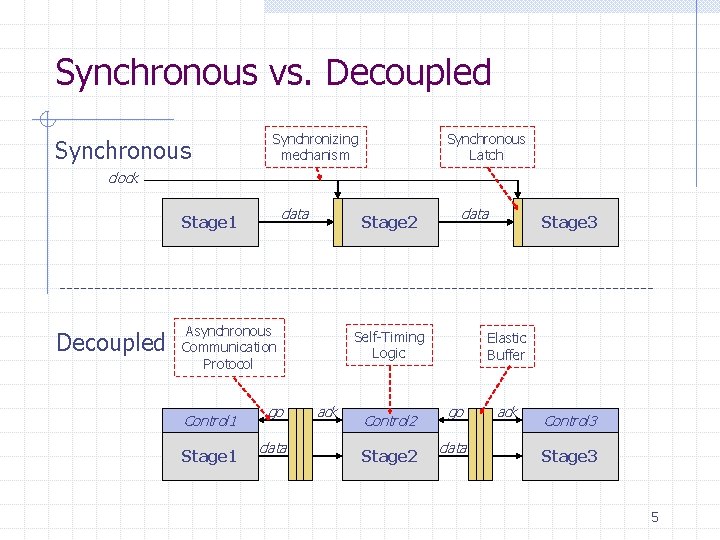

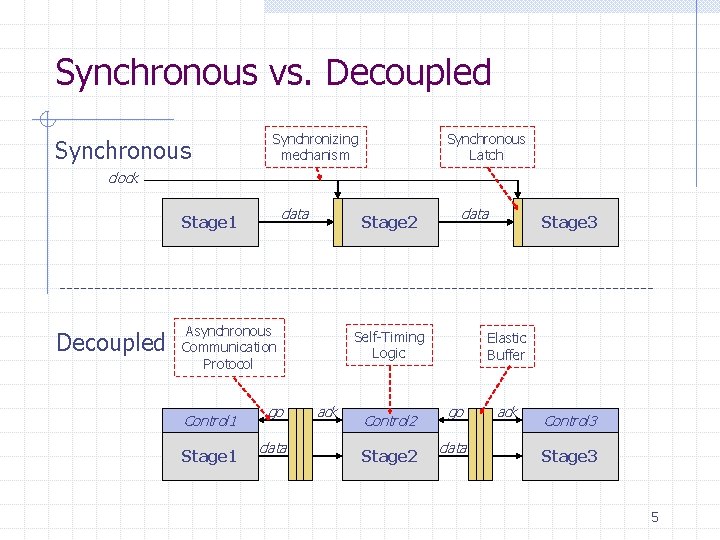

Synchronous vs. Decoupled

[Figure: in the synchronous pipeline, the synchronizing mechanism is a clocked latch between stages (Stage 1, latch, Stage 2); in the decoupled pipeline, each stage has its own control block (Control 1, 2, 3) with self-timing logic, and neighboring stages exchange data through an asynchronous communication protocol (go/ack events) and an elastic buffer]

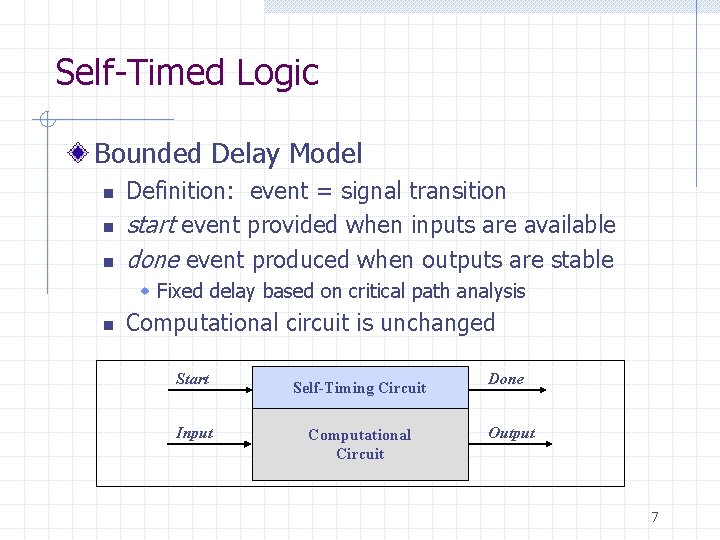

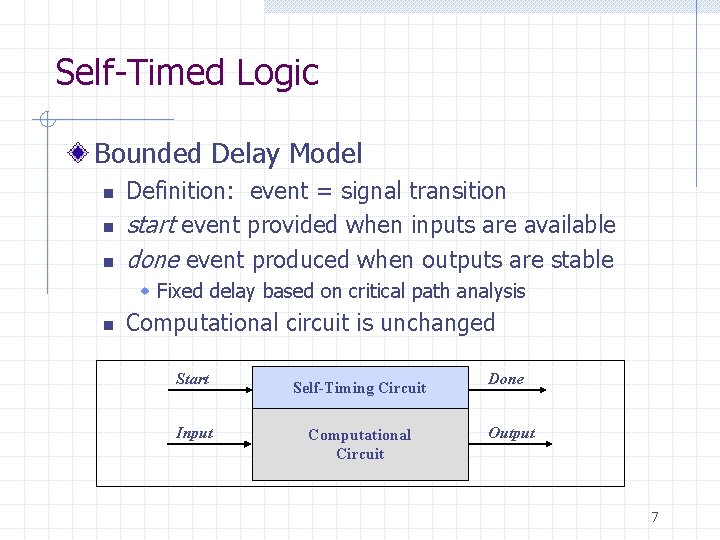

Self-Timed Logic

Bounded delay model:

- Definition: event = signal transition
- A start event is provided when the inputs are available
- A done event is produced when the outputs are stable
  - Fixed delay, based on critical path analysis
- The computational circuit is unchanged

[Figure: Start, self-timing circuit, Done, running alongside Input, computational circuit, Output]
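To make the bounded delay model concrete, here is a minimal Python sketch. The `SelfTimedStage` class and the 100-unit delay are hypothetical: the computational circuit is modeled as an unchanged pure function, and the self-timing side simply emits the done event a fixed delay after the start event.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

@dataclass
class SelfTimedStage:
    """Bounded-delay self-timed stage (hypothetical model).

    compute: the unchanged computational circuit, modeled as a pure function.
    delay:   fixed delay taken from critical-path analysis of that circuit.
    """
    compute: Callable[[int, int], int]
    delay: int

    def fire(self, start_time: int, a: int, b: int) -> Tuple[int, int]:
        """Handle a start event; return (time of the done event, output)."""
        output = self.compute(a, b)           # the computational circuit itself
        done_time = start_time + self.delay   # done only once outputs are stable
        return done_time, output

adder = SelfTimedStage(compute=lambda a, b: a + b, delay=100)
print(adder.fire(start_time=40, a=3, b=4))  # (140, 7)
```

The delay is a property of the stage, not of the data: a bounded-delay stage always waits its full critical-path time, which is what the average-case optimizations later refine.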

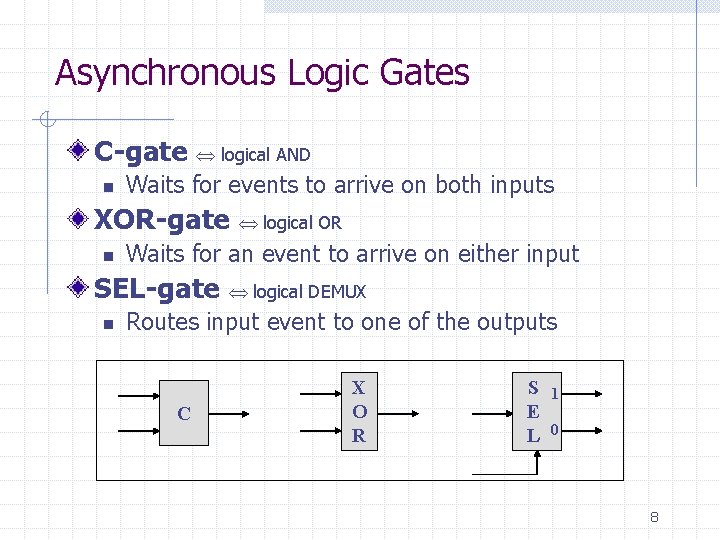

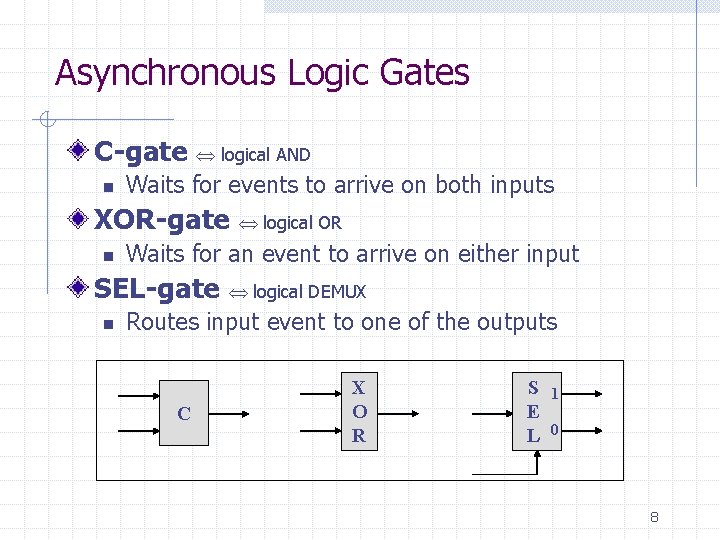

Asynchronous Logic Gates

- C-gate: logical AND for events
  - Waits for events to arrive on both inputs
- XOR-gate: logical OR for events
  - Waits for an event to arrive on either input
- SEL-gate: logical DEMUX for events
  - Routes the input event to one of its outputs (0 or 1)
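The event semantics of these gates can be sketched in Python under a two-phase (transition) signaling assumption, where each wire's level flips once per event. The class and function names are illustrative; the C-gate is modeled as a Muller C-element, whose output changes only once both inputs agree.

```python
from typing import List

class CGate:
    """C-gate (Muller C-element): an event-level AND.

    Inputs and output are wire levels; an event is a transition (0->1 or 1->0).
    The output changes only once both inputs have changed to the same level.
    """
    def __init__(self) -> None:
        self.out = 0

    def update(self, a: int, b: int) -> int:
        if a == b:          # events have arrived on both inputs
            self.out = a
        return self.out     # otherwise hold the previous level


def xor_gate(a: int, b: int) -> int:
    """XOR-gate: an event-level OR (merge); every input event toggles the output."""
    return a ^ b


class SelGate:
    """SEL-gate: an event-level DEMUX that steers each input event to output 0 or 1."""
    def __init__(self) -> None:
        self.outs: List[int] = [0, 0]
        self.last_in = 0

    def update(self, inp: int, select: int) -> List[int]:
        if inp != self.last_in:       # a new event arrived on the input
            self.outs[select] ^= 1    # emit it on the selected output
            self.last_in = inp
        return list(self.outs)
```

Under this encoding, a SEL gate followed by one delay element per output and an XOR gate that merges them gives a start-to-done path whose delay depends on the select input.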

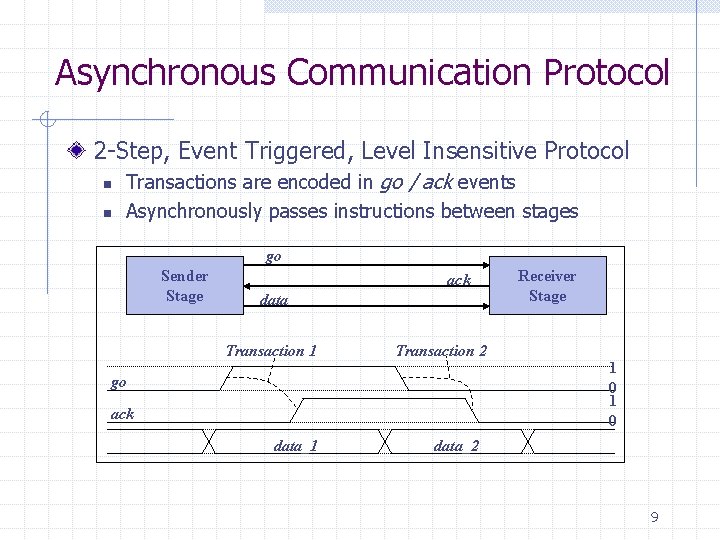

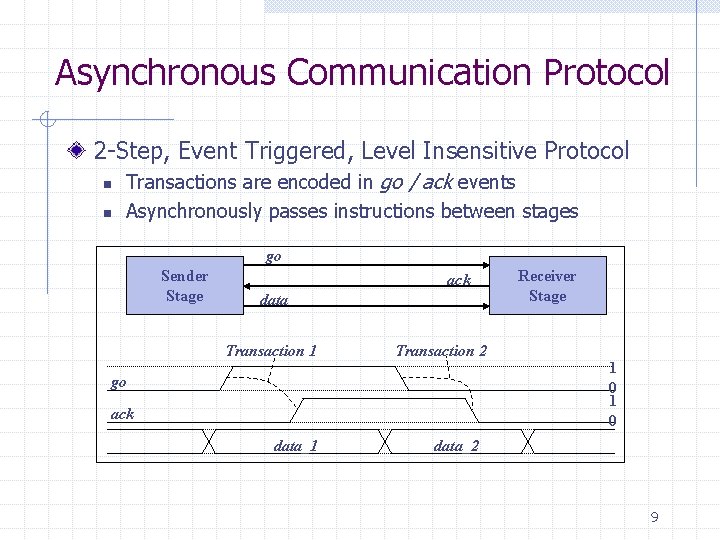

Asynchronous Communication Protocol

2-step, event-triggered, level-insensitive protocol:

- Transactions are encoded in go/ack events
- Instructions are passed asynchronously between stages

[Figure: sender stage and receiver stage connected by go, ack, and data wires; timing diagram of Transaction 1 (data_1) and Transaction 2 (data_2), with go/ack at levels 1 and 0]
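A minimal sketch of the 2-step protocol, assuming two-phase (transition) signaling with bundled data: the sender flips `go` after placing data on the wire, and the receiver flips `ack` after latching it. Because only transitions matter, Transaction 2 uses the opposite edges (1 to 0) from Transaction 1. The `Channel`, `send`, and `receive` names are hypothetical, and the model is sequential rather than truly concurrent.

```python
from dataclasses import dataclass
from typing import Any, Optional

@dataclass
class Channel:
    """Bundled-data channel between a sender and a receiver stage (hypothetical model)."""
    go: int = 0
    ack: int = 0
    data: Any = None

def send(ch: Channel, value: Any) -> bool:
    """Start a transaction; refuse if the previous one has not been acknowledged."""
    if ch.go != ch.ack:        # a go event is still outstanding
        return False
    ch.data = value            # data must be valid before the go event
    ch.go ^= 1                 # go event: a transition, regardless of level
    return True

def receive(ch: Channel) -> Optional[Any]:
    """Complete a pending transaction by latching data and issuing an ack event."""
    if ch.go == ch.ack:        # no unacknowledged go event
        return None
    value = ch.data
    ch.ack ^= 1                # ack event: also just a transition
    return value

ch = Channel()
assert send(ch, "data_1")          # Transaction 1: go 0 -> 1
assert not send(ch, "data_2")      # blocked until the receiver acknowledges
assert receive(ch) == "data_1"     # ack 0 -> 1
assert send(ch, "data_2")          # Transaction 2: go 1 -> 0
assert receive(ch) == "data_2"     # ack 1 -> 0
```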

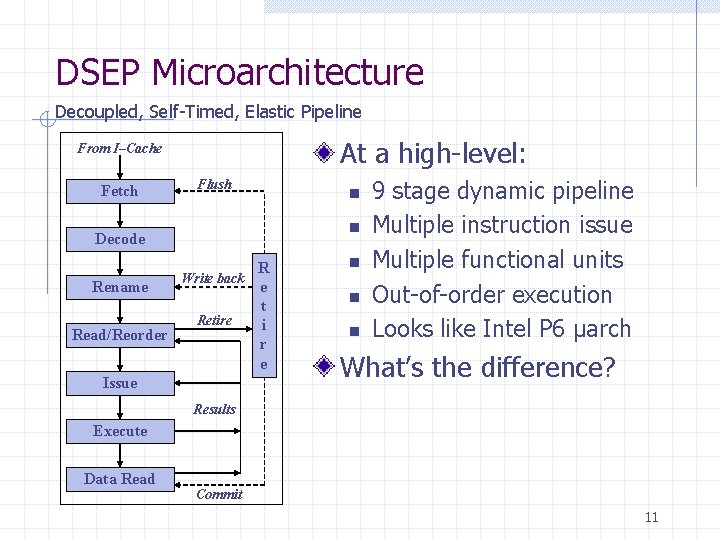

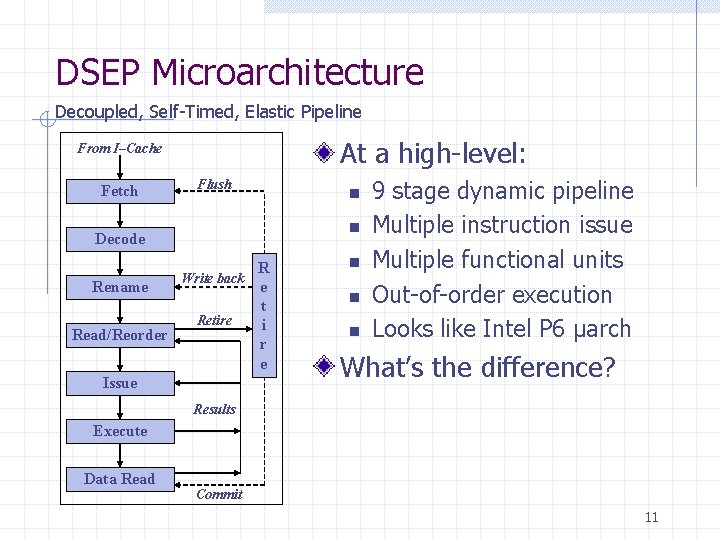

DSEP Microarchitecture

Decoupled, Self-Timed, Elastic Pipeline. At a high level:

- 9-stage dynamic pipeline
- Multiple instruction issue
- Multiple functional units
- Out-of-order execution
- Looks like the Intel P6 microarchitecture

What's the difference?

[Figure: DSEP pipeline with Fetch (from I-cache), Decode, Rename, Read/Reorder, Issue, Execute, Data Read, Write back, and Retire stages, plus Flush, Results, and Commit paths]

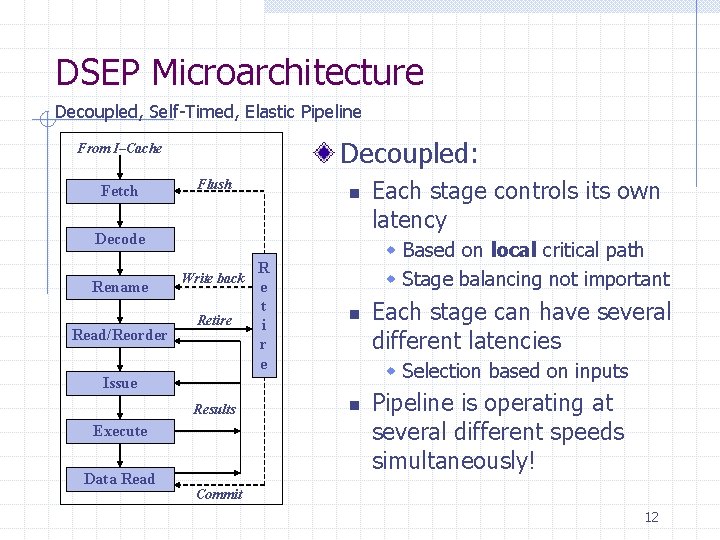

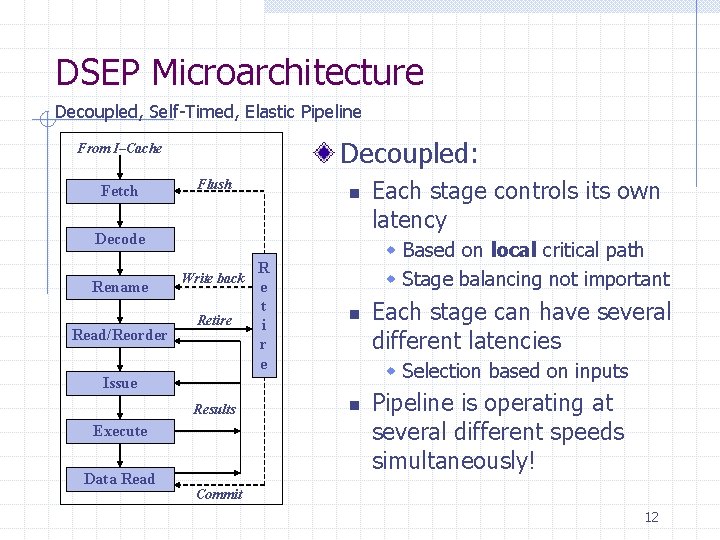

DSEP Microarchitecture

Decoupled, Self-Timed, Elastic Pipeline. Decoupled:

- Each stage controls its own latency
  - Based on its local critical path
  - Stage balancing is not important
- Each stage can have several different latencies
  - Selection is based on the inputs
- The pipeline operates at several different speeds simultaneously!

[Figure: DSEP pipeline diagram (Fetch, Decode, Rename, Read/Reorder, Issue, Execute, Data Read, Write back, Retire)]

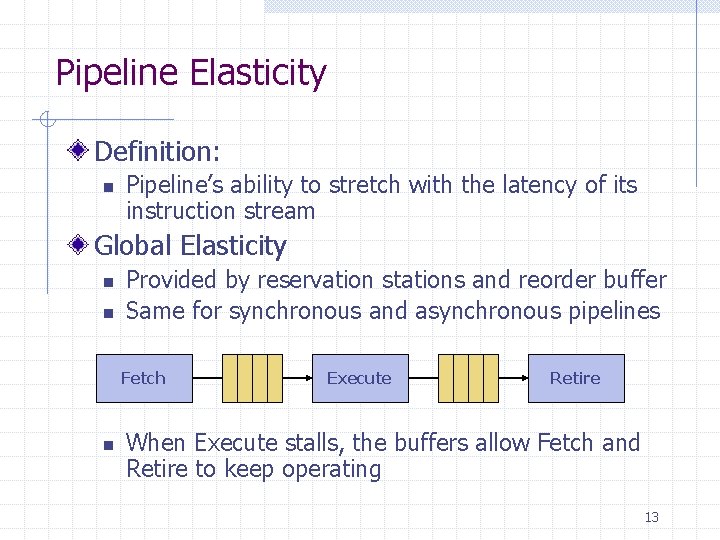

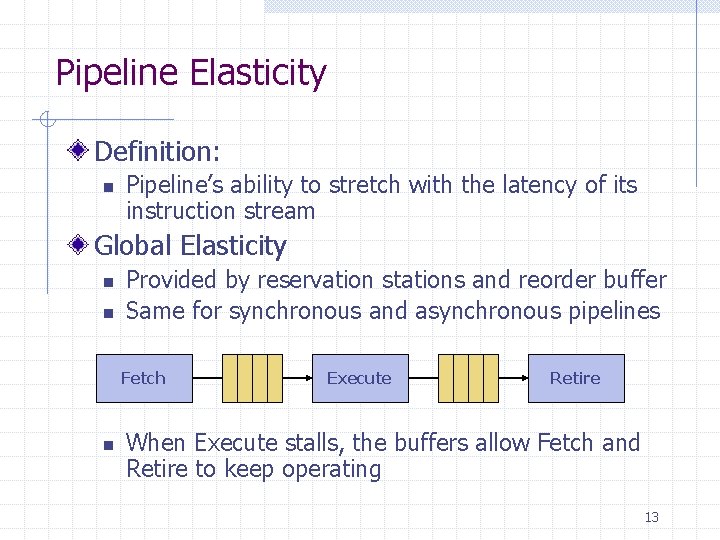

Pipeline Elasticity

Definition:

- The pipeline's ability to stretch with the latency of its instruction stream

Global elasticity:

- Provided by reservation stations and the reorder buffer
- Same for synchronous and asynchronous pipelines
- When Execute stalls, the buffers allow Fetch and Retire to keep operating

[Figure: Fetch, Execute, Retire]

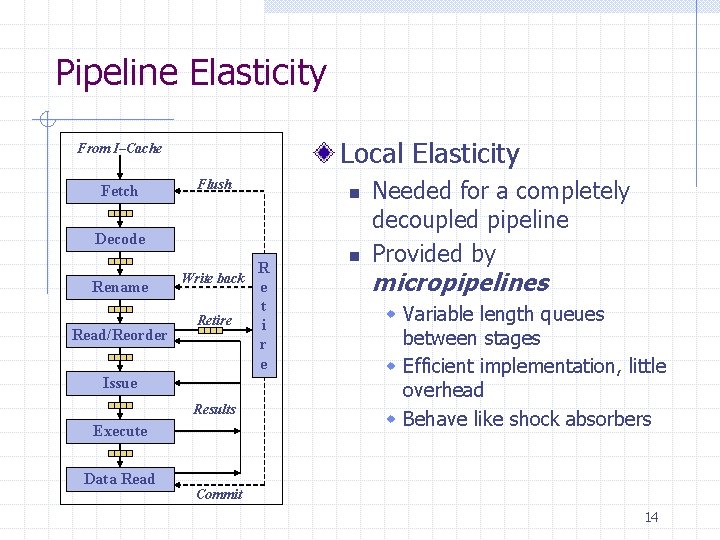

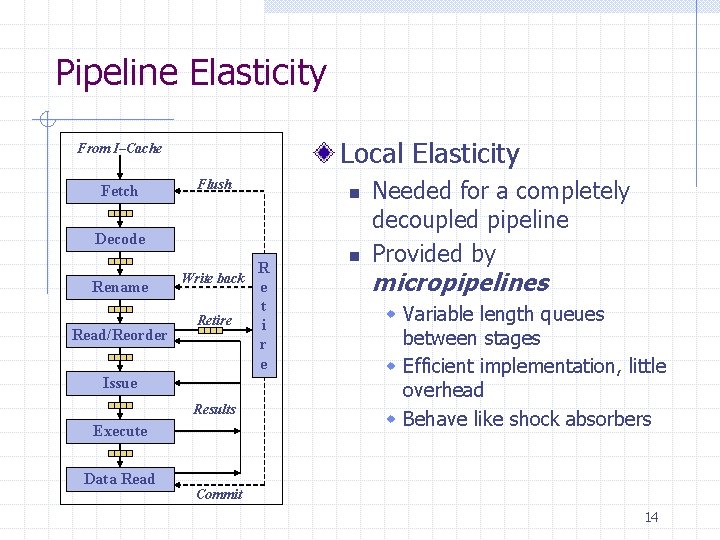

Pipeline Elasticity

Local elasticity:

- Needed for a completely decoupled pipeline
- Provided by micropipelines
  - Variable-length queues between stages
  - Efficient implementation, little overhead
  - Behave like shock absorbers

[Figure: DSEP pipeline diagram]
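The shock-absorber effect can be illustrated with a small timing model. This is a hypothetical sketch, not the DSEP simulator: each instruction has an input-dependent latency in each stage, a stage blocks when the queue ahead of it is full, and handshake and register overheads are ignored. `slots` is the number of micropipeline slots between adjacent stages.

```python
from typing import List

def simulate(latency: List[List[int]], slots: int) -> int:
    """Completion time of the last instruction in a linear decoupled pipeline.

    latency[s][i]: input-dependent latency of stage s for instruction i.
    slots: number of micropipeline (queue) slots between adjacent stages.
    """
    n_stages, n_insts = len(latency), len(latency[0])
    depart = [[0] * n_insts for _ in range(n_stages)]

    def dep(s: int, i: int) -> int:
        # Instructions before the start of the stream impose no constraint.
        return depart[s][i] if i >= 0 else 0

    for i in range(n_insts):
        ready = 0  # stage 0 can always start (instruction supply is not modeled)
        for s in range(n_stages):
            # Start when the instruction has arrived and the stage is free.
            start = max(ready, dep(s, i - 1))
            finish = start + latency[s][i]
            if s + 1 < n_stages:
                # Blocking: the instruction leaves stage s only when the queue ahead
                # has room, i.e. instruction i-1-slots has already left stage s+1.
                depart[s][i] = max(finish, dep(s + 1, i - 1 - slots))
            else:
                depart[s][i] = finish  # the last stage never blocks
            ready = depart[s][i]
    return depart[-1][-1]

# Stage 1 alternates between a slow and a fast operation.
lat = [[2, 2, 2, 2], [3, 1, 3, 1]]
print(simulate(lat, slots=0), simulate(lat, slots=1))  # 11 10
```

With no slots, stage 0 must hold each finished instruction while stage 1 works through a slow one; a single slot lets stage 0 keep going, and the fast instructions in stage 1 then make up the difference.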

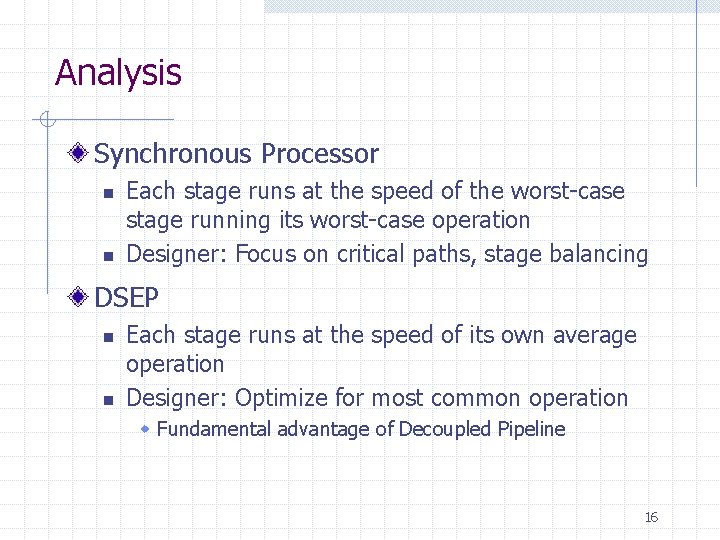

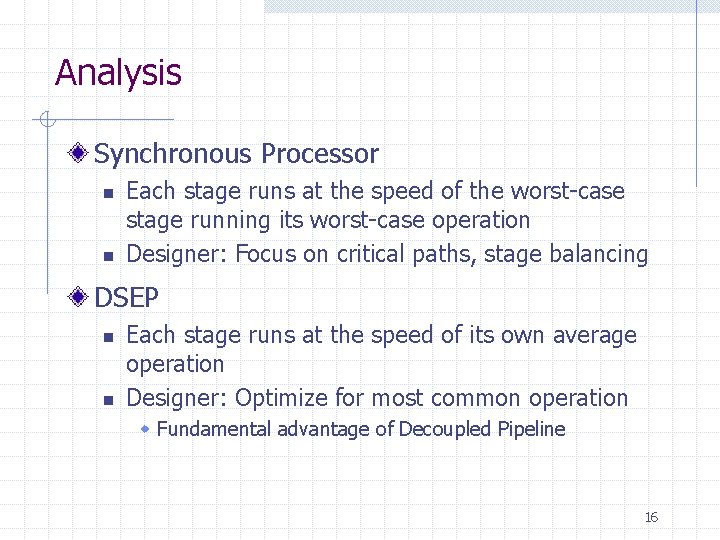

Analysis

Synchronous processor:

- Each stage runs at the speed of the worst-case stage running its worst-case operation
- Designer: focus on critical paths and stage balancing

DSEP:

- Each stage runs at the speed of its own average operation
- Designer: optimize for the most common operation
  - Fundamental advantage of a decoupled pipeline
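The difference can be put in numbers with a small Python sketch. The per-stage operation mixes are hypothetical, and the decoupled figure is an idealized bound that assumes enough elasticity to hide variation between stages: a synchronous clock must cover the slowest operation of the slowest stage, whereas a decoupled pipeline is limited by the slowest stage's average.

```python
from typing import Dict

# Hypothetical operation mix per stage: {latency: number of operations with that latency}.
StageMix = Dict[int, int]

def synchronous_period(stages: Dict[str, StageMix]) -> int:
    """Clock period: the worst-case stage running its worst-case operation."""
    return max(max(mix) for mix in stages.values())

def average_latency(mix: StageMix) -> float:
    """Frequency-weighted average latency of one stage."""
    return sum(lat * count for lat, count in mix.items()) / sum(mix.values())

def decoupled_bound(stages: Dict[str, StageMix]) -> float:
    """Idealized per-instruction time of a decoupled pipeline: the slowest stage's
    average operation (assumes elasticity hides all variation between stages)."""
    return max(average_latency(mix) for mix in stages.values())

# Stage A: 9 fast operations (10 units) for every slow one (30 units); stage B: always 20.
stages = {"A": {10: 9, 30: 1}, "B": {20: 10}}
print(synchronous_period(stages), decoupled_bound(stages))  # 30 20.0
```

Stage A's rare 30-unit operation sets the synchronous clock, while in the decoupled case it only nudges A's average to 12, leaving B as the limit.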

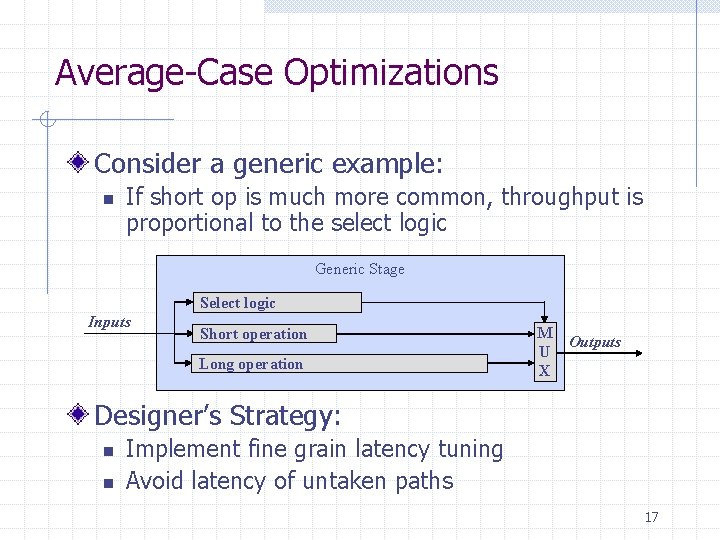

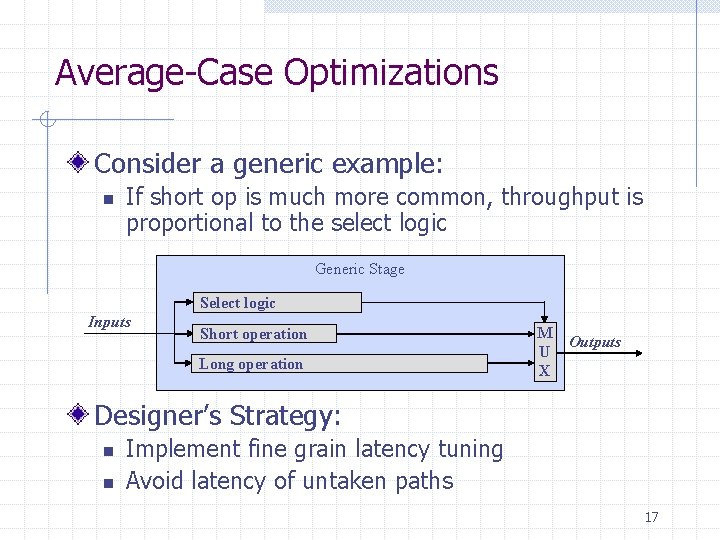

Average-Case Optimizations

Consider a generic example:

- If the short operation is much more common, throughput depends mainly on the speed of the select logic

Designer's strategy:

- Implement fine-grain latency tuning
- Avoid the latency of untaken paths

[Figure: generic stage in which the inputs feed select logic, a short operation, and a long operation; a MUX picks the outputs]
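Under a simple additive model (an assumption for illustration, not taken from the slides), let the short operation occur with probability $p$, let $T_{\text{select}}$ be the delay of the select logic that runs ahead of the chosen path, and ignore the output MUX, which both designs share. The average latency of the tuned stage is then

$$
\bar{T}_{\text{stage}} = T_{\text{select}} + p\,T_{\text{short}} + (1-p)\,T_{\text{long}}
$$

while a fixed-latency stage always pays $T_{\text{fixed}} = T_{\text{long}}$. The tuned stage wins when

$$
\bar{T}_{\text{stage}} < T_{\text{fixed}} \iff T_{\text{select}} < p\,(T_{\text{long}} - T_{\text{short}})
$$

and as $p \to 1$ its latency approaches $T_{\text{select}} + T_{\text{short}}$, so the select logic becomes a large share of what remains.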

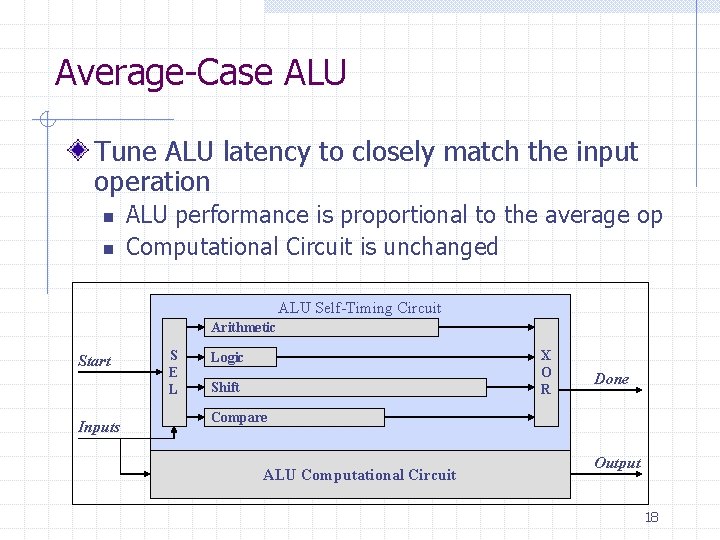

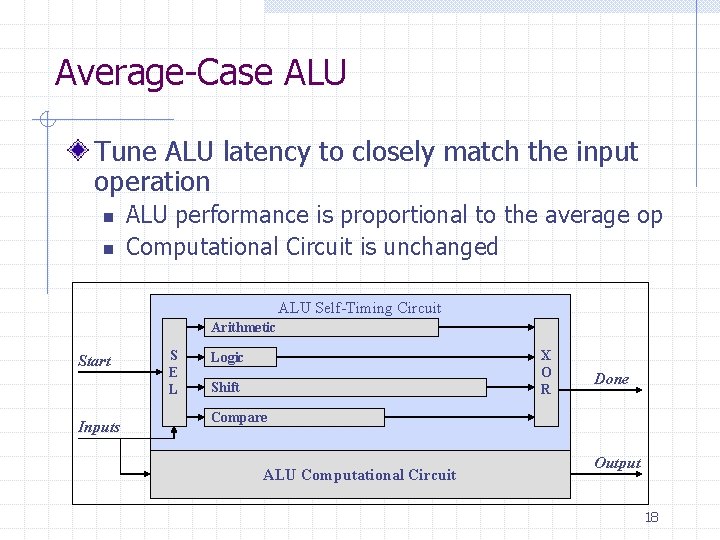

Average-Case ALU

Tune the ALU latency to closely match the input operation:

- ALU performance is proportional to the average operation
- The computational circuit is unchanged

[Figure: ALU self-timing circuit (Start, SEL gate, arithmetic/logic/shift/compare delay paths, XOR gate, Done) alongside the ALU computational circuit (Inputs, Output)]
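A minimal Python sketch of the idea, with hypothetical operation classes and delays chosen only for illustration (they are not the values used in the design): the result is computed exactly as in a fixed ALU, while the done-event delay is selected by the operation, as the SEL gate does in the self-timing circuit.

```python
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical operation classes and self-timing delays (time units), for illustration only.
# Each entry: (delay path selected by the SEL gate, unchanged computational function).
ALU_OPS: Dict[str, Tuple[int, Callable[[int, int], int]]] = {
    "and": (20, lambda a, b: a & b),                          # logic
    "or": (20, lambda a, b: a | b),                           # logic
    "slt": (30, lambda a, b: int(a < b)),                     # compare (unsigned here)
    "sll": (50, lambda a, b: (a << (b & 31)) & 0xFFFFFFFF),   # shift
    "add": (90, lambda a, b: (a + b) & 0xFFFFFFFF),           # arithmetic
}

def alu(op: str, a: int, b: int) -> Tuple[int, int]:
    """Return (result, delay): same result as a fixed ALU, operation-dependent delay."""
    delay, fn = ALU_OPS[op]
    return fn(a, b), delay

def average_delay(trace: Sequence[str]) -> float:
    """Mean ALU latency over a trace of operations."""
    return sum(ALU_OPS[op][0] for op in trace) / len(trace)

worst_case = max(delay for delay, _ in ALU_OPS.values())
print(average_delay(["add", "add", "and", "slt"]), worst_case)  # 57.5 90
```

For this trace the average is 57.5 time units, against 90 for an ALU that always waits for its slowest operation.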

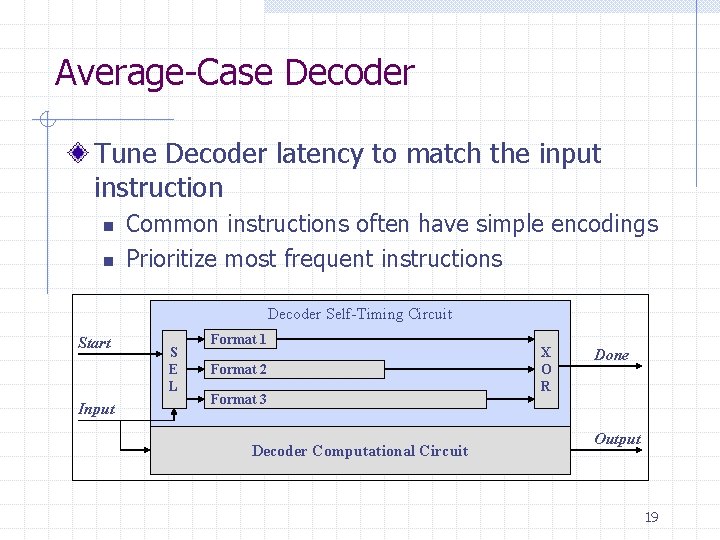

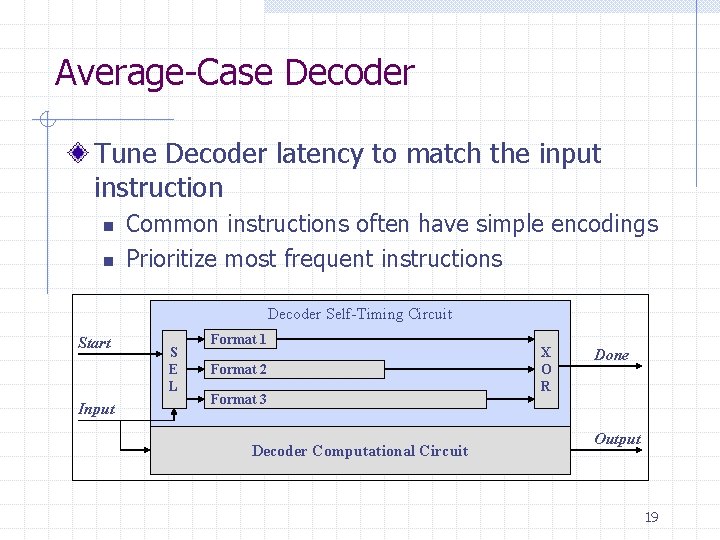

Average-Case Decoder

Tune the decoder latency to match the input instruction:

- Common instructions often have simple encodings
- Prioritize the most frequent instructions

[Figure: decoder self-timing circuit (Start, SEL gate, Format 1/2/3 delay paths, XOR gate, Done) alongside the decoder computational circuit (Input, Output)]
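A sketch of format-based latency selection for MIPS I, the instruction set used in the evaluation. Mapping the three decoder formats to the R, I, and J encodings is an assumption, and the delays in the hypothetical `DECODE_DELAY` table are illustrative.

```python
from typing import Dict

# Hypothetical decode delays (time units) per instruction format; illustrative only.
DECODE_DELAY: Dict[str, int] = {"I": 40, "R": 60, "J": 30}

def instruction_format(word: int) -> str:
    """Classify a 32-bit MIPS I instruction by its primary opcode (bits 31..26).

    Coprocessor and other special encodings are folded into I-type for simplicity.
    """
    opcode = (word >> 26) & 0x3F
    if opcode == 0x00:
        return "R"   # SPECIAL: register-register ALU ops, shifts, jr, ...
    if opcode in (0x02, 0x03):
        return "J"   # j, jal
    return "I"       # loads, stores, branches, immediate ALU ops

def decode_delay(word: int) -> int:
    """Self-timing delay selected by the instruction's format."""
    return DECODE_DELAY[instruction_format(word)]

add_t0 = 0x012A4020    # add   $t0, $t1, $t2
addiu_t0 = 0x25080001  # addiu $t0, $t0, 1
jump = 0x08100000      # j     0x00400000
print([decode_delay(w) for w in (add_t0, addiu_t0, jump)])  # [60, 40, 30]
```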

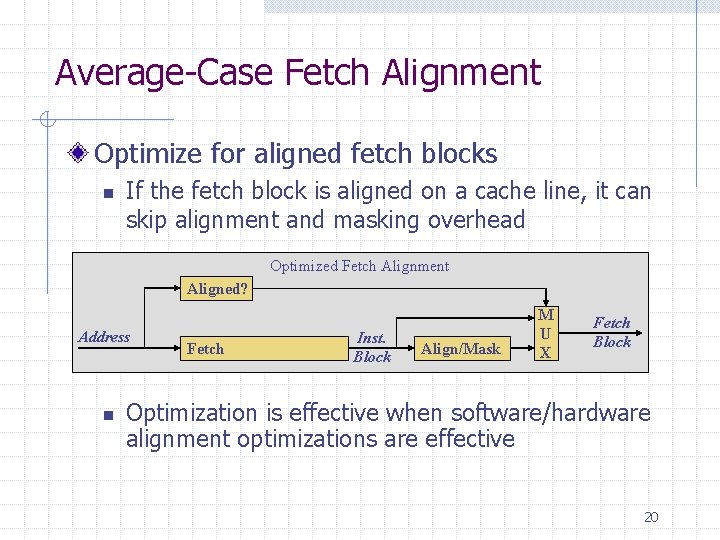

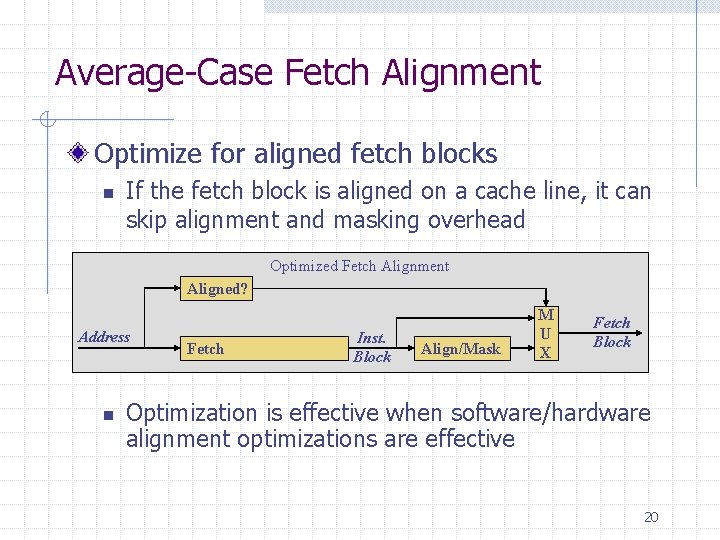

Average-Case Fetch Alignment

Optimize for aligned fetch blocks:

- If the fetch block is aligned on a cache line, it can skip the alignment and masking overhead
- The optimization is effective when software/hardware alignment optimizations are effective

[Figure: optimized fetch alignment: the address drives an "Aligned?" check; the fetched instruction block goes through Align/Mask; a MUX selects the fetch block]
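A Python sketch of the fast and slow fetch paths, assuming a hypothetical 32-byte line, a 4-instruction fetch block, and word-indexed instruction memory. Masking is modeled as filling slots past the end of the line with zeros (the MIPS `nop` encoding); the design's actual align/mask logic is not described in the slides.

```python
from typing import List, Tuple

LINE_BYTES = 32   # hypothetical cache-line size
INST_BYTES = 4    # MIPS instructions are 4 bytes
BLOCK_INSTS = 4   # hypothetical fetch-block width, in instructions

def fetch_block(memory: List[int], address: int) -> Tuple[List[int], bool]:
    """Return (fetch block, took_fast_path).

    memory holds instruction words indexed by byte address // INST_BYTES.
    """
    line_start = address - address % LINE_BYTES
    line = memory[line_start // INST_BYTES:(line_start + LINE_BYTES) // INST_BYTES]
    if address % LINE_BYTES == 0:
        # Aligned: the block is simply the start of the line; skip align/mask.
        return line[:BLOCK_INSTS], True
    # Unaligned: shift so the requested instruction comes first, then mask the
    # slots that would fall past the end of the line (0 encodes a MIPS nop).
    offset = (address % LINE_BYTES) // INST_BYTES
    block = line[offset:offset + BLOCK_INSTS]
    return block + [0] * (BLOCK_INSTS - len(block)), False

memory = list(range(100, 116))  # two 32-byte lines of dummy instruction words
print(fetch_block(memory, 0))   # ([100, 101, 102, 103], True)
print(fetch_block(memory, 24))  # ([106, 107, 0, 0], False)
```

Both paths return the same block for an aligned address; the fast path only avoids waiting on the shift and mask.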

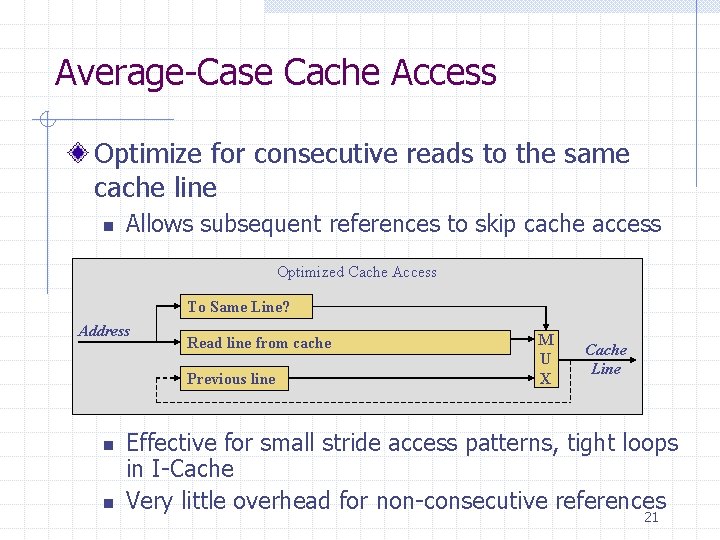

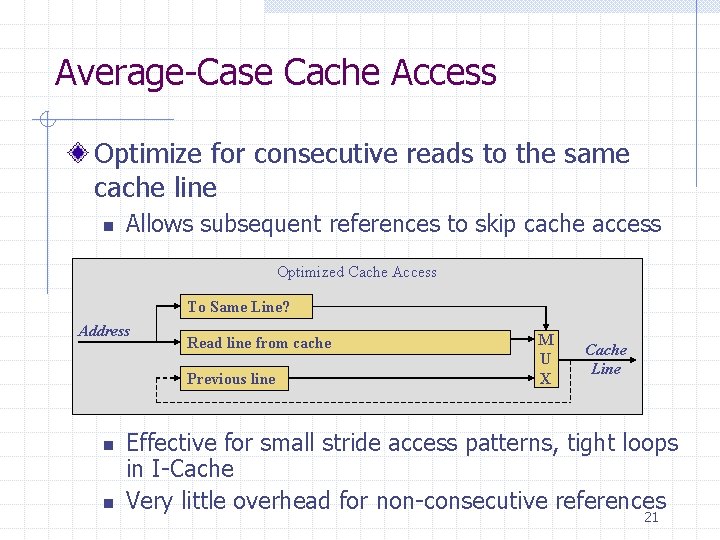

Average-Case Cache Access

Optimize for consecutive reads to the same cache line:

- Allows subsequent references to skip the cache access
- Effective for small-stride access patterns and for tight loops in the I-cache
- Very little overhead for non-consecutive references

[Figure: optimized cache access: the address drives a "To same line?" check; a MUX selects between the line read from the cache and the previous line]
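A sketch of the same-line check, assuming a hypothetical 32-byte line and a dictionary standing in for the cache array; `array_reads` counts the references that actually read the array.

```python
from typing import Dict, List, Optional

class LineBufferedCache:
    """Keeps the most recently read line so consecutive references to it
    skip the cache access. The dict stands in for the cache array (hypothetical)."""

    def __init__(self, lines: Dict[int, List[int]], line_bytes: int = 32) -> None:
        self.lines = lines
        self.line_bytes = line_bytes
        self.prev_line_no: Optional[int] = None
        self.prev_line: List[int] = []
        self.array_reads = 0  # references that actually read the cache array

    def read_line(self, address: int) -> List[int]:
        line_no = address // self.line_bytes
        if line_no != self.prev_line_no:          # "To same line?" no: read the cache
            self.prev_line = self.lines[line_no]
            self.prev_line_no = line_no
            self.array_reads += 1
        return self.prev_line                     # yes: reuse the previous line

cache = LineBufferedCache({0: [0] * 8, 1: [1] * 8})
for addr in range(0, 64, 4):   # 16 sequential word references over two lines
    cache.read_line(addr)
print(cache.array_reads)       # 2
```

Sixteen sequential word references touch the array only twice, once per line; a reference to a different line costs just the failed comparison.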

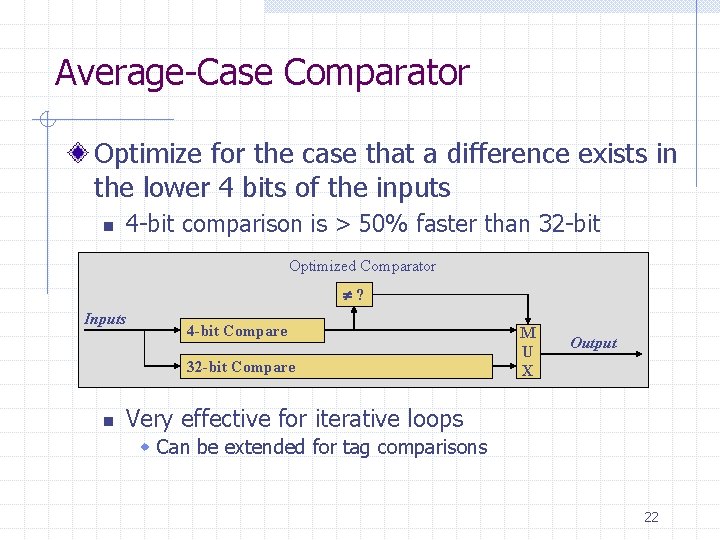

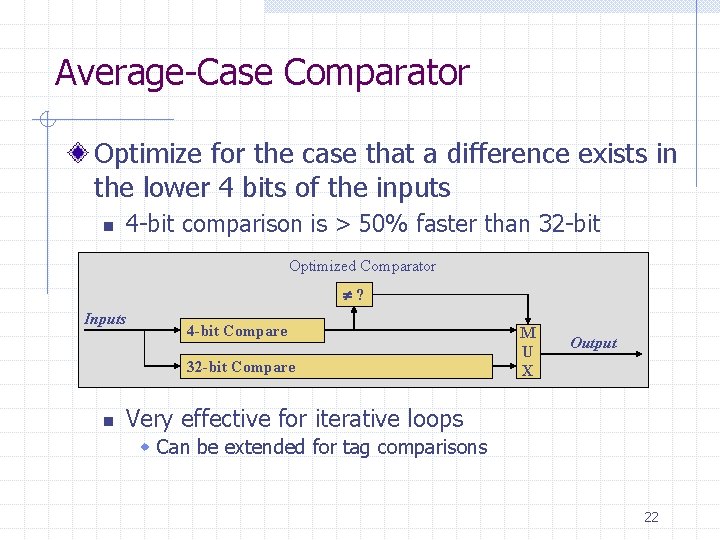

Average-Case Comparator

Optimize for the case where the inputs differ in their lower 4 bits:

- A 4-bit comparison is more than 50% faster than a 32-bit comparison
- Very effective for iterative loops
  - Can be extended to tag comparisons

[Figure: optimized comparator: the inputs feed a 4-bit compare and a 32-bit compare; a MUX selects the output]
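A Python sketch of the comparator's two paths for an equality test, assuming 32-bit operands. If the low 4 bits differ, the inputs cannot be equal, so the 4-bit result is final; only otherwise is the full 32-bit comparison needed. Function and constant names are illustrative.

```python
from typing import Tuple

LOW4 = 0xF
WORD = 0xFFFFFFFF

def compare_equal(a: int, b: int) -> Tuple[bool, bool]:
    """Return (equal, used_fast_path) for two 32-bit operands."""
    if (a ^ b) & LOW4:
        # Low 4 bits differ, so the operands cannot be equal: the fast
        # 4-bit result is final and the 32-bit comparison is not awaited.
        return False, True
    # Low bits match: fall back to the full 32-bit comparison.
    return (a & WORD) == (b & WORD), False

# Loop "for i in 0..n-1" exiting when i == n, with n = 10.
fast = sum(compare_equal(i, 10)[1] for i in range(10))
print(fast, compare_equal(10, 10))  # 10 (True, False)
```

For a loop that compares a counter `i` against a bound `n`, the slow path is needed only when `i` and `n` agree modulo 16, roughly once every 16 iterations.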

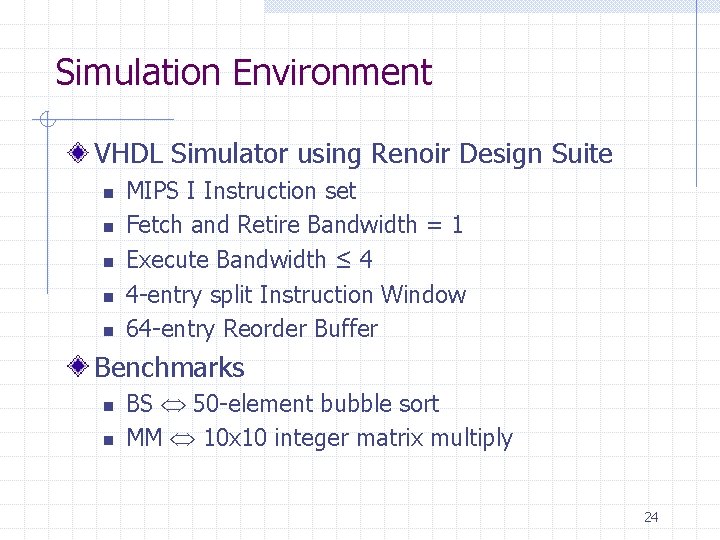

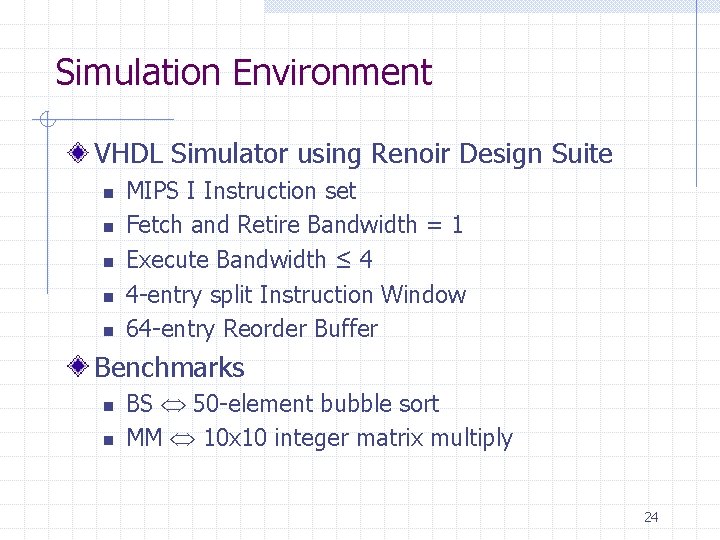

Simulation Environment

VHDL simulator using the Renoir Design Suite:

- MIPS I instruction set
- Fetch and Retire bandwidth = 1
- Execute bandwidth ≤ 4
- 4-entry split instruction window
- 64-entry reorder buffer

Benchmarks:

- BS: 50-element bubble sort
- MM: 10x10 integer matrix multiply

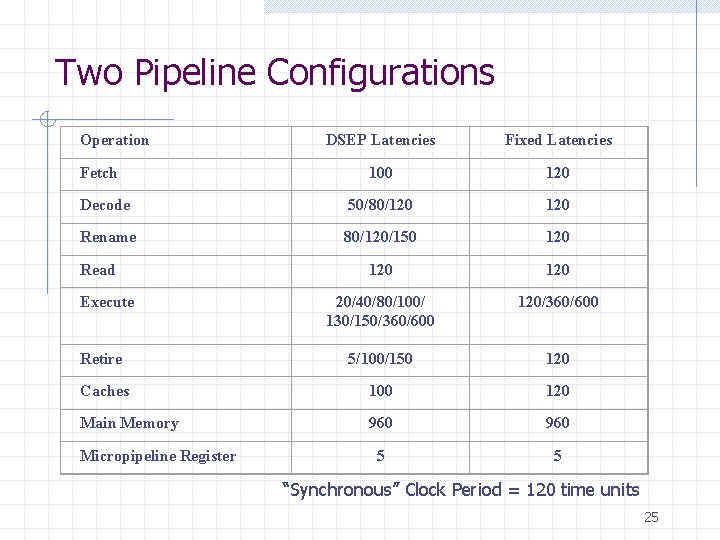

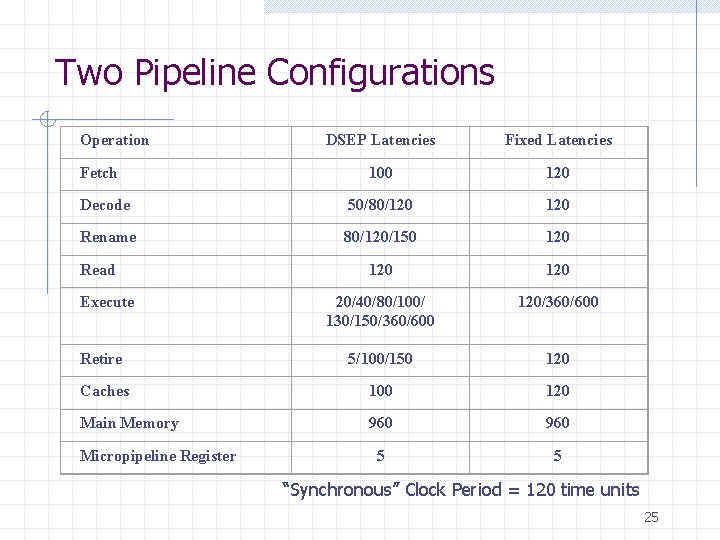

Two Pipeline Configurations

| Operation | DSEP latencies | Fixed latencies |
|---|---|---|
| Fetch | 100 | 120 |
| Decode | 50/80/120 | 120 |
| Rename | 80/120/150 | 120 |
| Read | 120 | 120 |
| Execute | 20/40/80/100/130/150/360/600 | 120/360/600 |
| Retire | 5/100/150 | 120 |
| Caches | 100 | 120 |
| Main Memory | 960 | 960 |
| Micropipeline Register | 5 | 5 |

"Synchronous" clock period = 120 time units; all latencies are in time units.

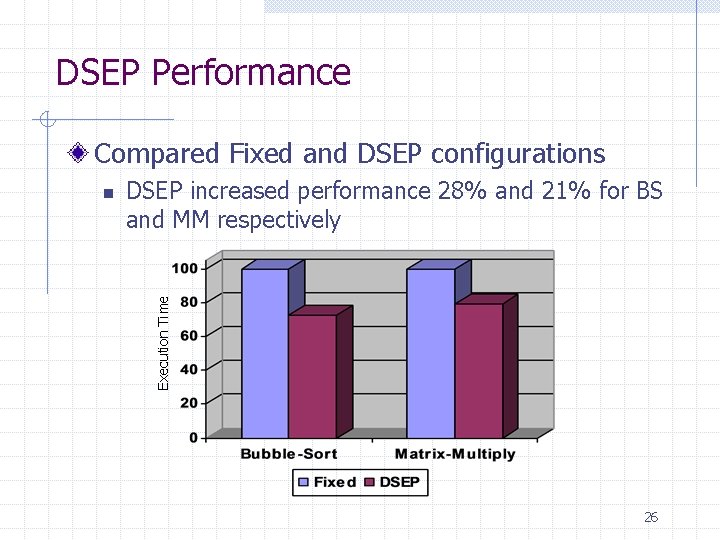

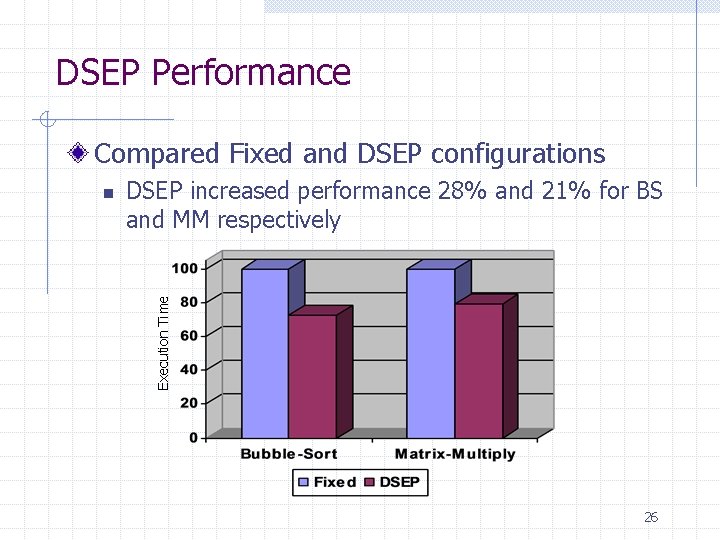

DSEP Performance

- Compared the Fixed and DSEP configurations
- DSEP increased performance by 28% for BS and 21% for MM

[Figure: execution time of the Fixed and DSEP configurations]

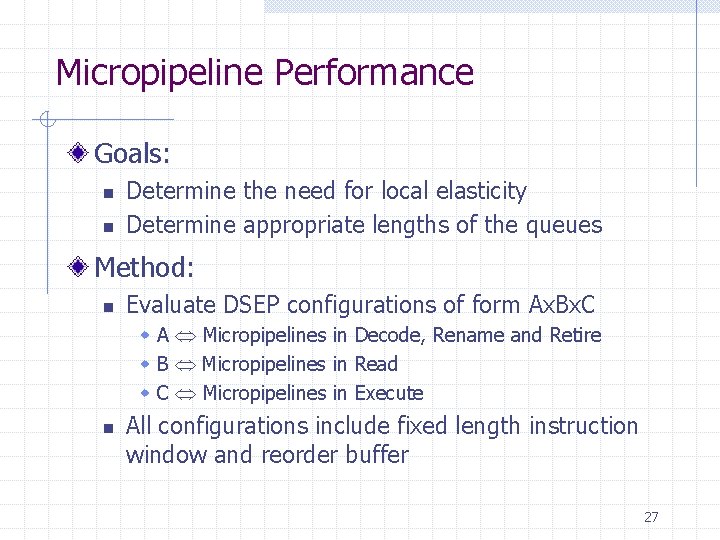

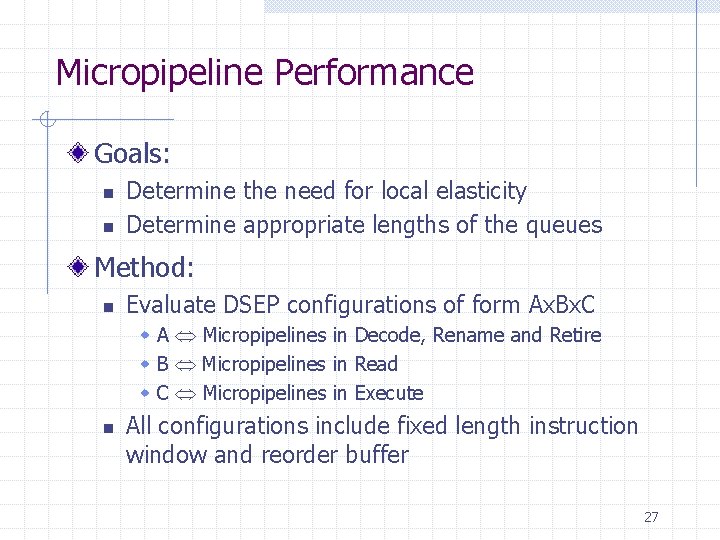

Micropipeline Performance

Goals:

- Determine the need for local elasticity
- Determine appropriate queue lengths

Method:

- Evaluate DSEP configurations of the form AxBxC
  - A: micropipelines in Decode, Rename, and Retire
  - B: micropipelines in Read
  - C: micropipelines in Execute
- All configurations include a fixed-length instruction window and reorder buffer

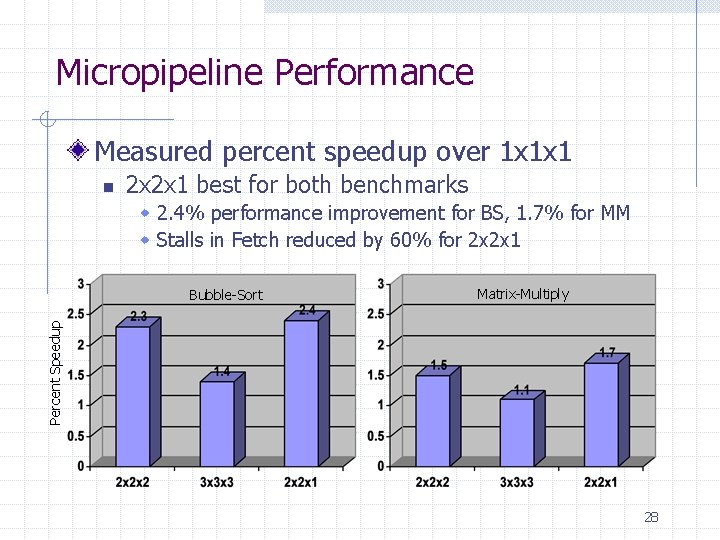

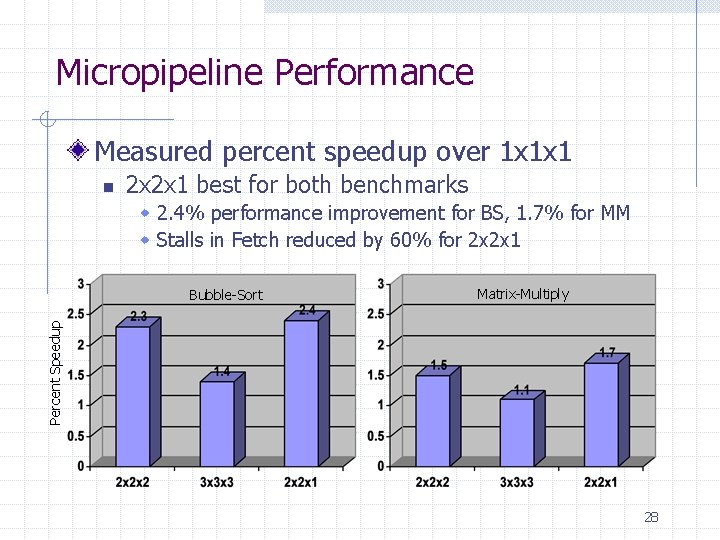

Micropipeline Performance

Measured percent speedup over 1x1x1:

- 2x2x1 is best for both benchmarks
  - 2.4% performance improvement for BS, 1.7% for MM
  - Stalls in Fetch reduced by 60% for 2x2x1

[Figure: percent speedup per configuration for Bubble-Sort and Matrix-Multiply]

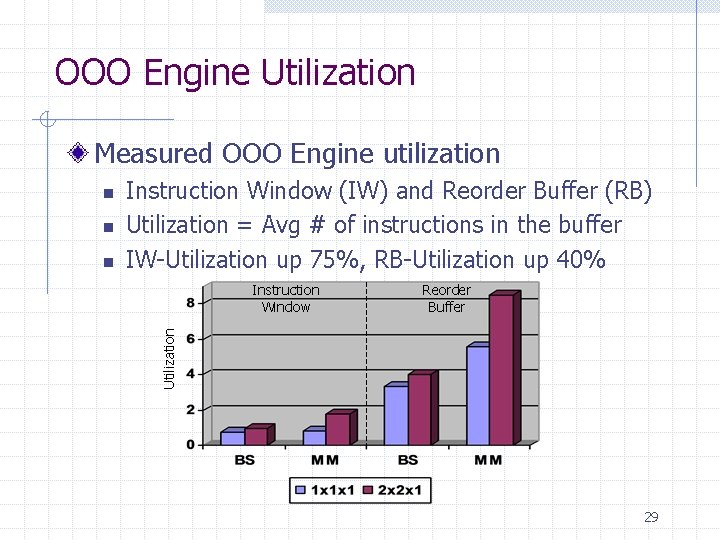

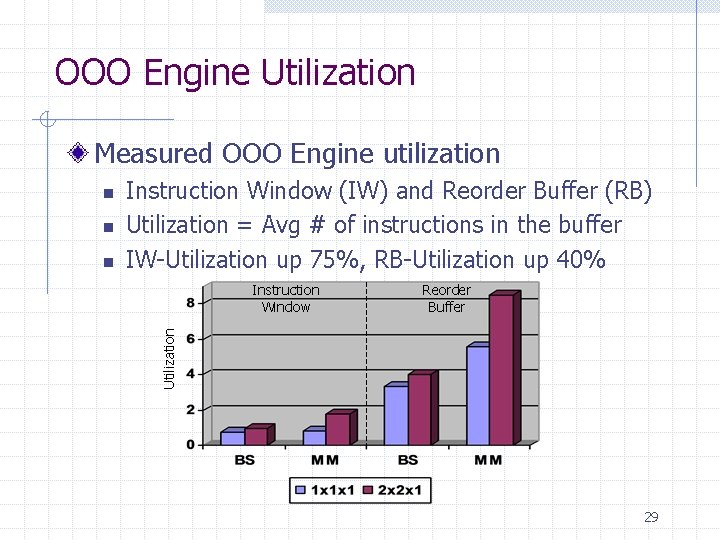

OOO Engine Utilization

Measured OOO engine utilization:

- Instruction Window (IW) and Reorder Buffer (RB)
- Utilization = average number of instructions in the buffer
- IW utilization up 75%, RB utilization up 40%

[Figure: utilization of the instruction window and reorder buffer]
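The slides define utilization as the average number of instructions in the buffer without saying how the average is taken. Since an asynchronous pipeline has no clock to sample on, a time-weighted average is one natural reading; the sketch below assumes that definition and a hypothetical list of (time, occupancy) samples.

```python
from typing import List, Tuple

def utilization(samples: List[Tuple[int, int]], end_time: int) -> float:
    """Time-weighted average buffer occupancy.

    samples: (time, occupancy) pairs in increasing time order; each occupancy
    holds until the next sample, and the last one holds until end_time.
    """
    boundaries = [t for t, _ in samples[1:]] + [end_time]
    total = sum(occ * (t_next - t) for (t, occ), t_next in zip(samples, boundaries))
    return total / (end_time - samples[0][0])

# Hypothetical instruction-window trace: empty, then 2, then 4 instructions.
print(utilization([(0, 0), (10, 2), (20, 4)], end_time=30))  # 2.0
```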

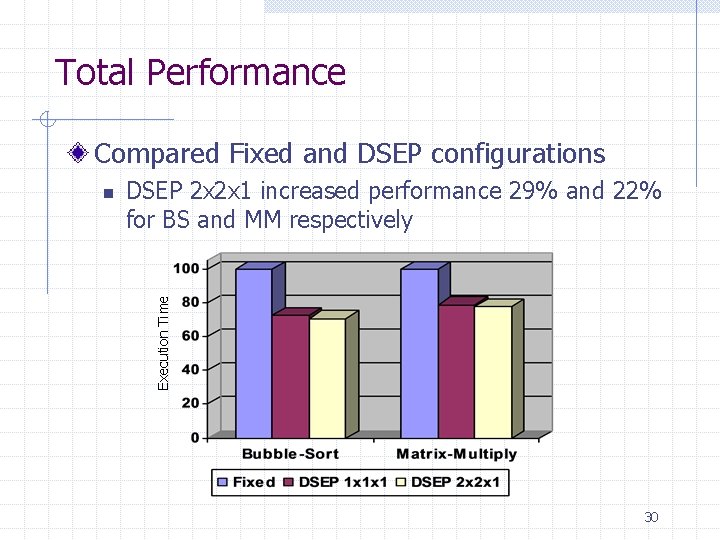

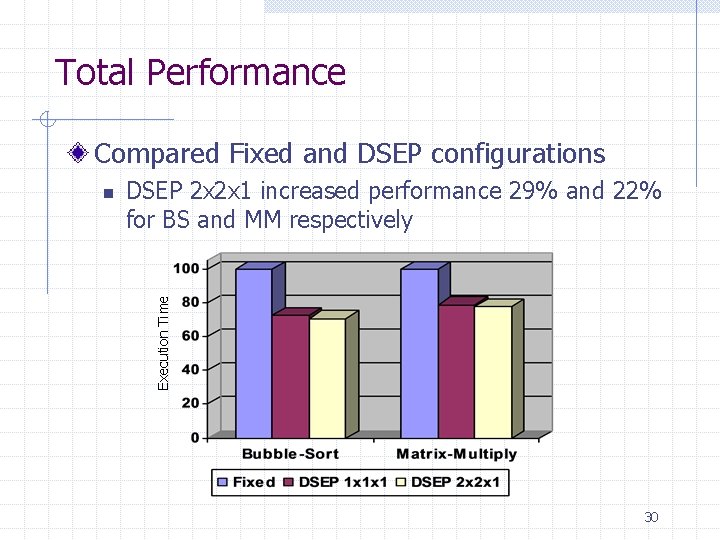

Total Performance

- Compared the Fixed and DSEP configurations
- DSEP 2x2x1 increased performance by 29% for BS and 22% for MM

[Figure: execution time of the Fixed and DSEP 2x2x1 configurations]

Conclusions

Decoupled, self-timing:

- Average-case optimizations significantly increase performance
- Rarely taken critical paths no longer matter

Elasticity:

- Removes pipeline jitter from decoupled operation
- Increases utilization of existing resources
- Not as important as average-case optimizations (at least for our experiments)

Questions?