Evaluation of Training

- Slides: 45

Evaluation of Training • Rationale for Evaluation • Types of Evaluation Data • Validity Issue • Evaluation Design

Rationale for Evaluation • Organizational activities should be regularly examined to ensure they are occurring as planned and producing the intended results • To correct things (people, processes, products or services) that deviate from their objectives

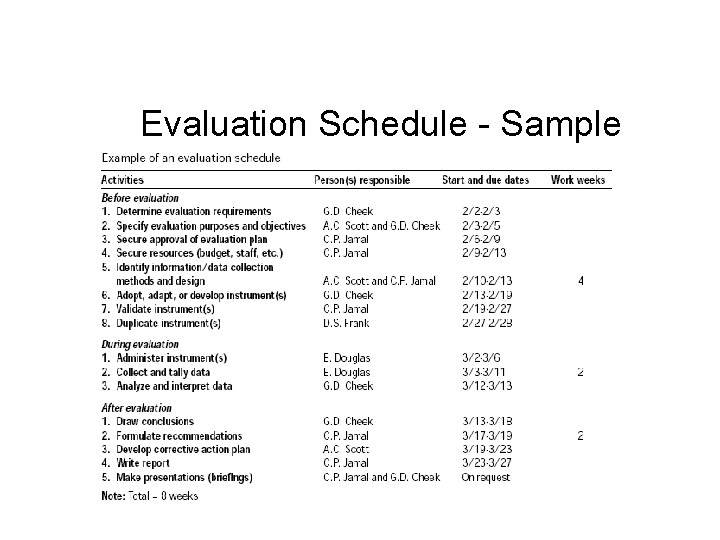

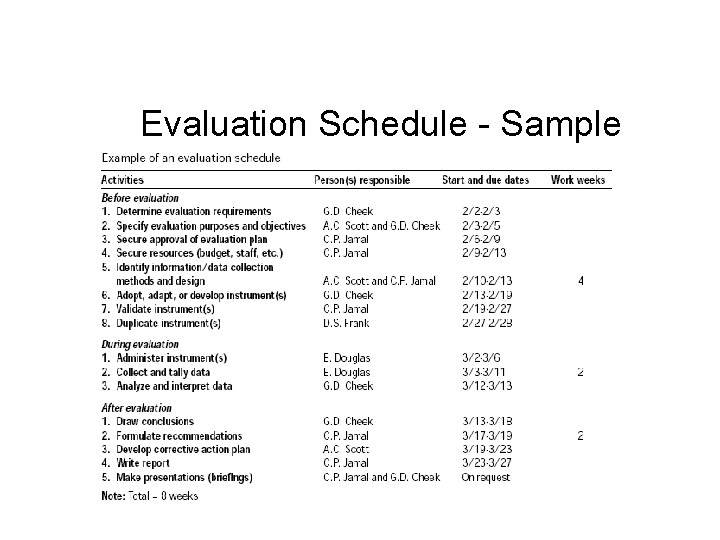

Evaluation Schedule - Sample

Types of Evaluation Data • Two areas of evaluation: – Process evaluation: how well the training was designed, developed, and implemented – Outcome evaluation: how well the training achieves its objectives

Process Data Before Training • Analyzing the processes used to develop training – effectiveness of needs analysis – assessment of the training objectives – evaluation of the design of the training – assessment of evaluation tools – examination of the training package • Pre-training evaluation enables correction of errors or omissions

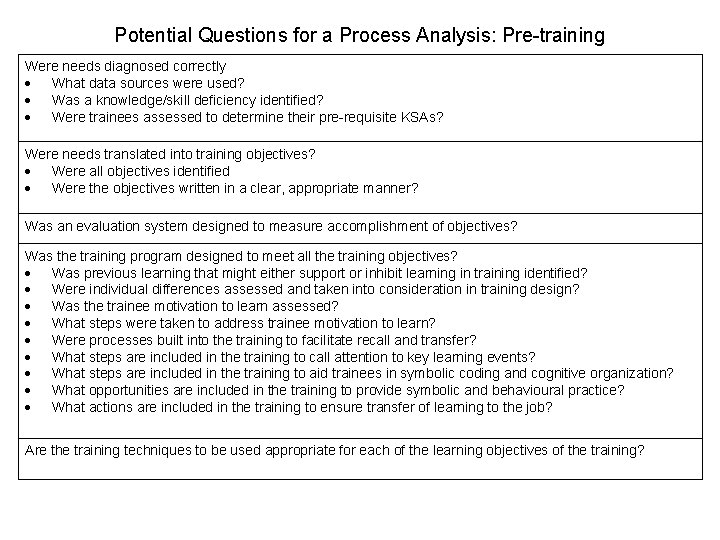

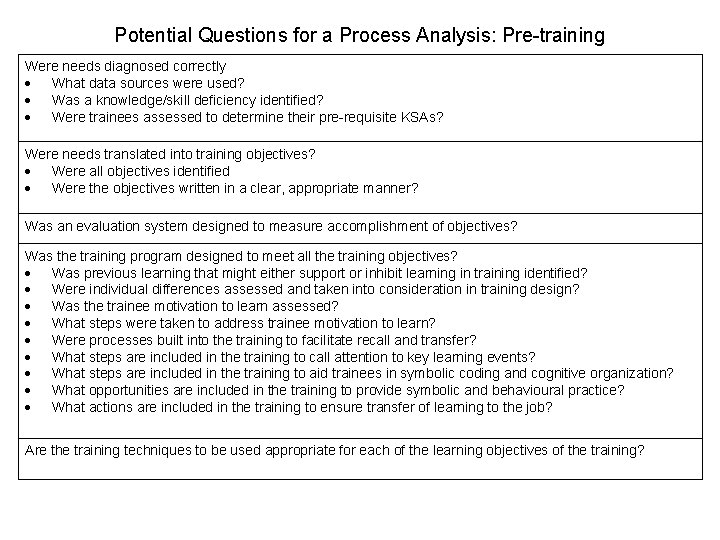

Potential Questions for a Process Analysis: Pre-training • Were needs diagnosed correctly? • What data sources were used? • Was a knowledge/skill deficiency identified? • Were trainees assessed to determine their pre-requisite KSAs? • Were needs translated into training objectives? • Were all objectives identified? • Were the objectives written in a clear, appropriate manner? • Was an evaluation system designed to measure accomplishment of objectives? • Was the training program designed to meet all the training objectives? • Was previous learning that might either support or inhibit learning in training identified? • Were individual differences assessed and taken into consideration in training design? • Was the trainee motivation to learn assessed? • What steps were taken to address trainee motivation to learn? • Were processes built into the training to facilitate recall and transfer? • What steps are included in the training to call attention to key learning events? • What steps are included in the training to aid trainees in symbolic coding and cognitive organization? • What opportunities are included in the training to provide symbolic and behavioural practice? • What actions are included in the training to ensure transfer of learning to the job? • Are the training techniques to be used appropriate for each of the learning objectives of the training?

Process Data: During Training • Examining whether the implementation of the training program reflects what was proposed, designed and included in the training manual • Assessing the appropriateness of training techniques and methodologies for achieving training objectives

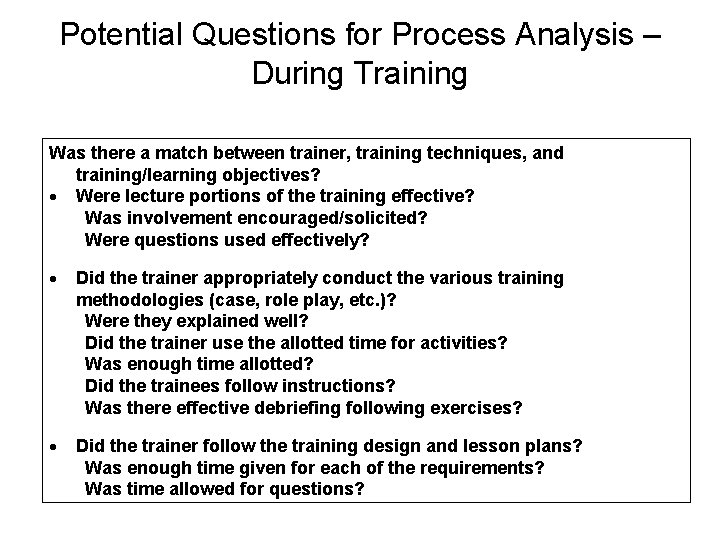

Potential Questions for Process Analysis – During Training • Was there a match between trainer, training techniques, and training/learning objectives? • Were lecture portions of the training effective? • Was involvement encouraged/solicited? • Were questions used effectively? • Did the trainer appropriately conduct the various training methodologies (case, role play, etc.)? • Were they explained well? • Did the trainer use the allotted time for activities? • Was enough time allotted? • Did the trainees follow instructions? • Was there effective debriefing following exercises? • Did the trainer follow the training design and lesson plans? • Was enough time given for each of the requirements? • Was time allowed for questions?

Uses of Process Data • Trainer – Helps to determine what works well and what does not • Other trainers – May be able to apply the findings if the process is generalizable • Training manager – Supports decision making when the training fails or when there is a problem with a particular trainer

Outcome Data • To determine how well training has met its goals • Four types of outcomes that are normally used: – reaction – learning – behaviour – organizational results

Reaction Outcomes • measures of trainees’: – perceptions – emotions – subjective evaluations of the training experience • the first level of evaluation • favourable reactions are important in creating motivation to learn

Reaction Outcomes (cont’d) • the data are used to determine what the trainees thought about the training • usually it is the only type of evaluation undertaken • reaction questionnaires are divided into two types: – affective : measures general feelings – utility : beliefs about the value of training

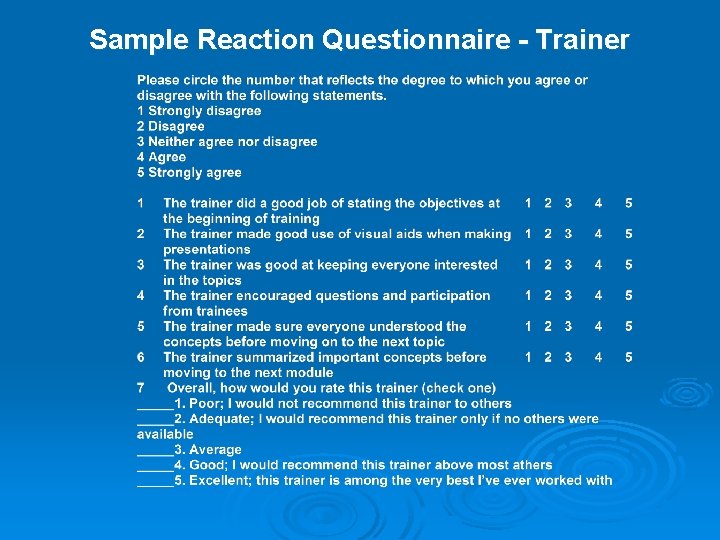

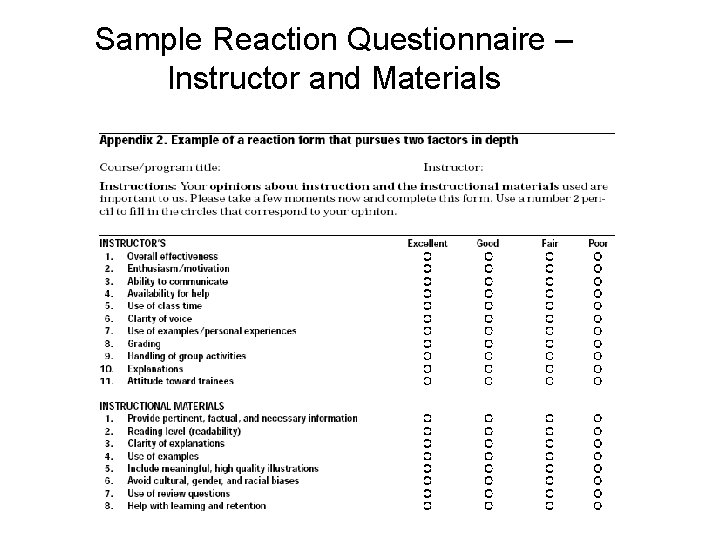

Reaction Outcomes (cont’d) • do not measure learning – only opinions and attitudes of trainees about the training • categories normally included in developing a reaction questionnaire: – relevance of training – content, materials and exercises – trainer’s behaviour – facilities

Reaction Outcomes (cont’d) • Training Relevance – provides an indication of the value of training – perceived value influences interest • Training Content, Materials and Exercises – includes written materials, videos, exercises and other tools of instruction – based on trainees’ feedback, modifications could be made

Reaction Outcomes (cont’d) • Reactions to the Trainer(s) – evaluations on the trainer’s actions – how well the trainer conducted the training programme • Facilities – items related to the facilities – noise, temperature, seating arrangements etc. – helps in determining whether the facilities are to be used for future training programmes

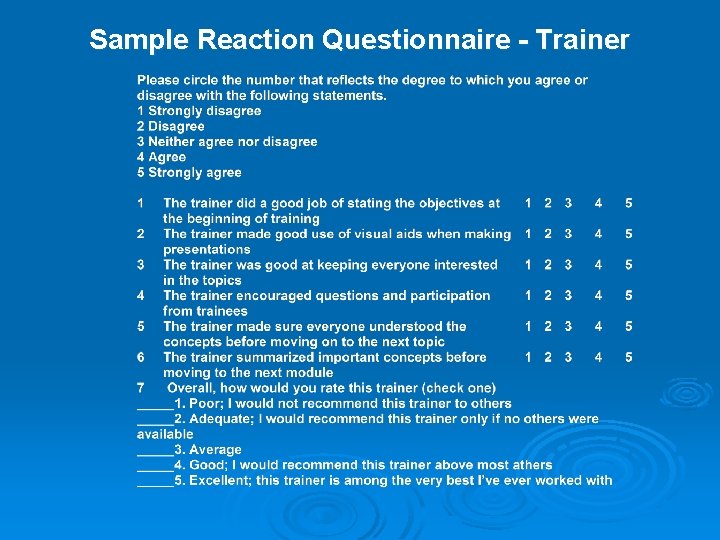

Sample Reaction Questionnaire - Trainer

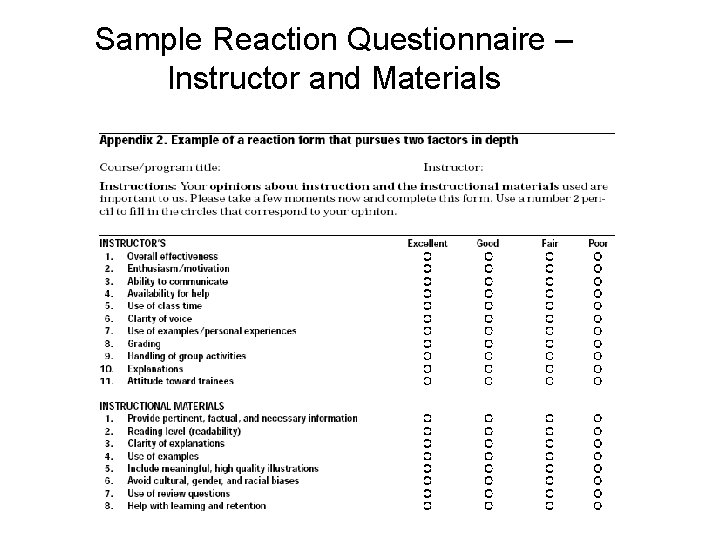

Sample Reaction Questionnaire – Instructor and Materials

Learning Outcomes • Learning objectives are developed from the training needs analysis (TNA) • The gap between trainees’ KSAs and the required KSAs defines the learning that must occur • Three types of learning outcomes: – knowledge – skills – attitudes

Learning Outcomes Knowledge • There are three types of knowledge: – declarative – procedural – strategic

Knowledge Outcome Declarative • factual knowledge • paper-and-pencil tests are often used to determine whether trainees have learned the knowledge – easier to administer and score – if properly developed, they accurately measure most declarative knowledge • the multiple-choice test is the most common – reliable – covers a broader range of content

Knowledge Outcome Procedural • organizing information into mental models • one method commonly used is “paired comparisons” – trainee’s answers are compared to an expert’s answers

Knowledge Outcome - Strategic • deals with the ability to develop and apply cognitive strategies in problem solving • assesses the level of understanding the trainee has about the decisions and choices he/she makes • trainees are required to provide the rationale for decisions/choices made

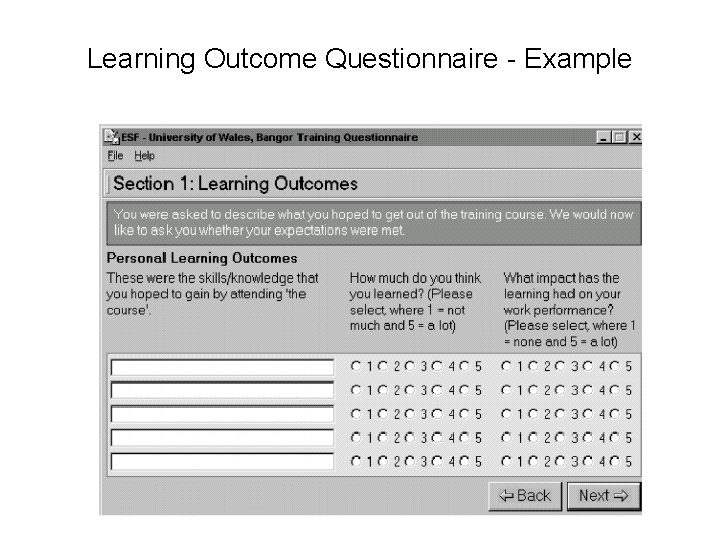

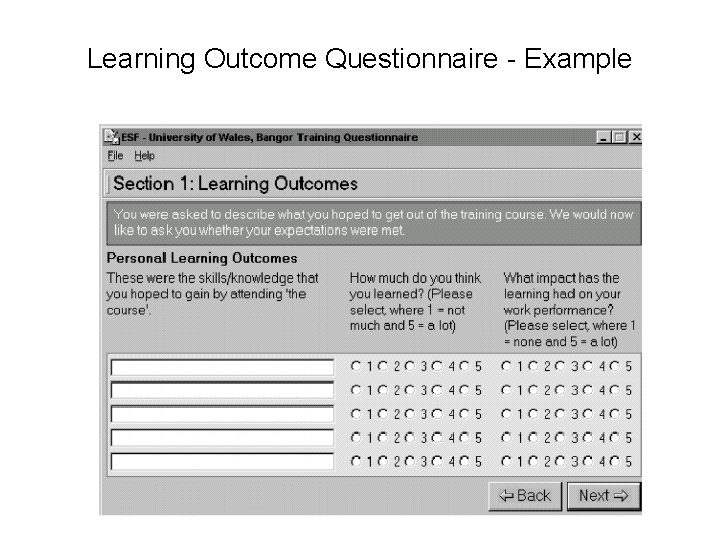

Learning Outcome Questionnaire - Example

Skill-Based Outcomes • to determine if a skill or set of behaviours has been learned • measures the level of learning, not whether the skills are used on the job • two levels of skill acquisition: – compilation – automaticity

Skill-based Outcome Compilation • to determine the additional skills acquired by the trainee from training • various methods may be used: – structured scenario – multiple raters using standardized methods

Skill-based Outcome Automaticity • the speed at which a skill is performed • one method is a speed test – performance has to be completed within a certain time • e.g. emergency procedures for pilots

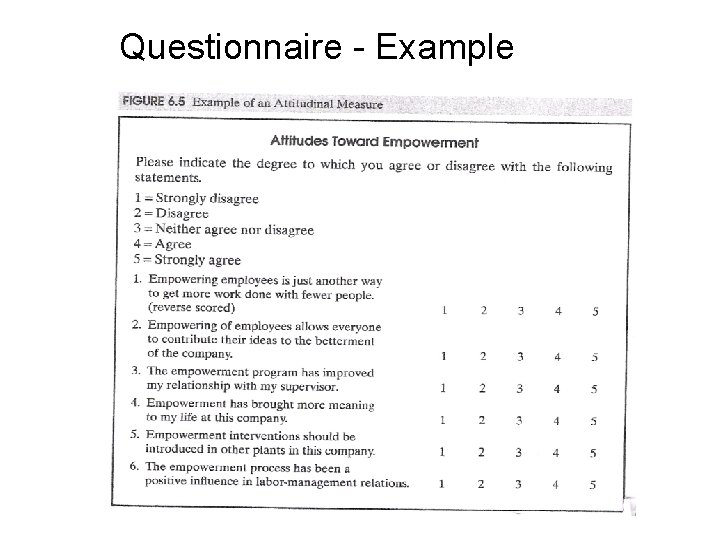

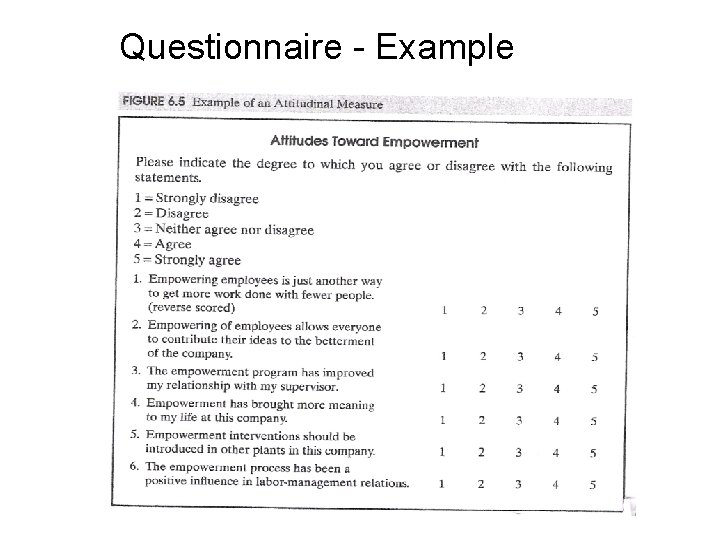

Attitudinal Outcomes • assessment of changes in attitudes • attitudinal scales are often used • to measure changes, evaluation should use pre/post measure of responses on a scale • caution: weaknesses of self-report
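The pre/post measurement described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `attitude_change`, the 1 to 5 agreement scale, and the sample ratings are all hypothetical, and a real evaluation would still need to account for the weaknesses of self-report.

```python
from statistics import mean

def attitude_change(pre, post):
    """Return per-trainee change scores and their mean for paired ratings."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain one rating per trainee")
    # Positive values mean the rating moved up the scale after training.
    changes = [after - before for before, after in zip(pre, post)]
    return changes, mean(changes)

# Hypothetical ratings from five trainees on a 1-5 agreement scale.
pre_scores = [2, 3, 3, 4, 2]
post_scores = [4, 3, 4, 5, 3]
changes, mean_change = attitude_change(pre_scores, post_scores)
```

Keeping the ratings paired by trainee, rather than comparing two group averages, makes it possible to see which individuals changed and by how much.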

Questionnaire - Example

Ambiguity?

Behaviour Outcomes • to determine whether the training has transferred to the job • primary sources of data: – interviews – questionnaires – direct observation – performance records

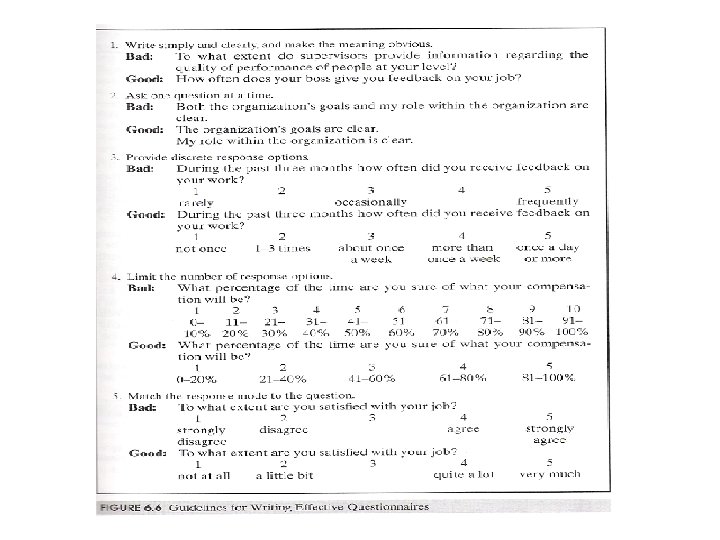

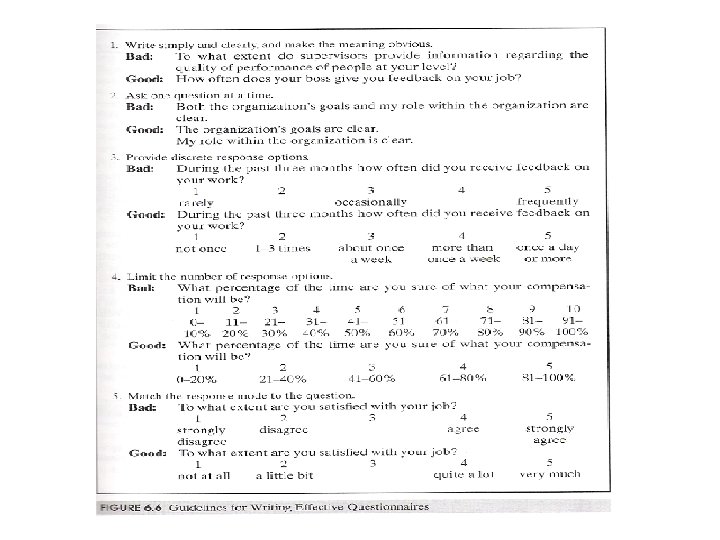

Behaviour Outcomes (cont’d) • questionnaires are often used, because: – opinions can be obtained from a large number of employees – the information can be tabulated to yield a numerical response – respondents are anonymous – short amount of time is required
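As a rough illustration of how questionnaire answers can be tabulated to yield a numerical response, the sketch below counts the ratings for a single item and averages them. The function name `tabulate_item`, the 1 to 5 scale, and the sample ratings are assumptions made for the example, not part of the original material.

```python
from collections import Counter
from statistics import mean

def tabulate_item(responses, scale=(1, 2, 3, 4, 5)):
    """Count how often each scale point was chosen and average the ratings."""
    counts = Counter(responses)
    # Include scale points nobody chose so the table is always complete.
    table = {point: counts.get(point, 0) for point in scale}
    return table, mean(responses)

# Hypothetical anonymous ratings for one behaviour item.
ratings = [5, 4, 4, 3, 5, 2, 4]
table, average = tabulate_item(ratings)
```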

Organizational Results • The objectives of training are developed to solve organizational problems • Evaluation is conducted to assess whether the training has solved the problems • Some aspects of evaluation may include: – reduction in defects – increase in productivity – reduction in grievances – increase in quality

Validity Issue in Evaluation • Internal validity: – whether the change was a function of training – the confidence that the results of the evaluations are in fact correct • External validity – whether the same results are generalisable to other groups of trainees

Threats to Internal Validity • History – other events that take place concurrently with training • Maturation – changes that occur because of the passage of time • Testing – present in pretest/post-test designs that use the same test

Threats to Internal Validity (cont’d) • Instrumentation – two different but equivalent tests – are they really equivalent? – any difference between them could cause differences in the two scores • Statistical regression – tendency for those who score either very high or very low on a test to regress to the middle when taking the test again (“regression to the mean”)
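Statistical regression can be demonstrated with a small simulation. The sketch below is only an illustration: it assumes each observed score is a stable ability plus independent random noise, and every name and parameter value in it (`simulate_retest`, `extreme_group_means`, the mean of 50, the spread of 10) is hypothetical. No training takes place between the two tests, yet the extreme groups still drift toward the middle.

```python
import random
from statistics import mean

def simulate_retest(n=2000, seed=0):
    """Simulate two test sittings with no training in between."""
    rng = random.Random(seed)
    ability = [rng.gauss(50, 10) for _ in range(n)]
    # Each observed score is true ability plus independent measurement noise.
    pre = [a + rng.gauss(0, 10) for a in ability]
    post = [a + rng.gauss(0, 10) for a in ability]
    return pre, post

def extreme_group_means(pre, post, fraction=0.1):
    """Mean pre and post scores for the lowest and highest pretest scorers."""
    order = sorted(range(len(pre)), key=lambda i: pre[i])
    k = max(1, int(len(pre) * fraction))
    low, high = order[:k], order[-k:]
    return {
        "low": (mean(pre[i] for i in low), mean(post[i] for i in low)),
        "high": (mean(pre[i] for i in high), mean(post[i] for i in high)),
    }

pre, post = simulate_retest()
groups = extreme_group_means(pre, post)
```

In this setup the lowest pretest scorers average higher on the retest and the highest scorers average lower, which is why selecting trainees on extreme pretest scores can make training look more (or less) effective than it is.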

Threats to Internal Validity (cont’d) • Initial Group Differences – comparison between trainees and a similar group of employees (control group who have not been trained) – it is important that the control group be similar in every way to the training group • Loss of Group Members – poor scorers in pretest may be demoralised and drop out of the training
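One simple way to look for initial group differences is to compare the pretest averages of the two groups before comparing their gains. The sketch below assumes pretest and post-test scores are available for both groups; the function name `compare_groups` and all scores are hypothetical, and a real evaluation would also check similarity on characteristics other than the pretest.

```python
from statistics import mean

def compare_groups(trained_pre, trained_post, control_pre, control_post):
    """Summarise the baseline gap and the gain of each group."""
    trained_gain = mean(trained_post) - mean(trained_pre)
    control_gain = mean(control_post) - mean(control_pre)
    return {
        # A large baseline gap suggests the groups were not comparable.
        "baseline_gap": mean(trained_pre) - mean(control_pre),
        "trained_gain": trained_gain,
        "control_gain": control_gain,
        # Gain beyond what the untrained group achieved over the same period.
        "gain_difference": trained_gain - control_gain,
    }

# Hypothetical test scores for four employees in each group.
summary = compare_groups(
    trained_pre=[60, 65, 70, 55],
    trained_post=[75, 80, 82, 71],
    control_pre=[61, 64, 69, 56],
    control_post=[63, 66, 70, 59],
)
```

Running the same function again after removing dropouts from both lists gives a quick indication of whether loss of group members has shifted the baseline.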

Threats to Internal Validity (cont’d) • Diffusion of Training – trainees may share the knowledge with the control group – post-test scores may not show differences between the training group and the control group • Compensating Treatment – the control group may get special assistance because they are not given training

Threats to Internal Validity (cont’d) • Compensatory Rivalry – the control group may see the situation as a challenge and compete for better performance – post-test scores may not show a difference in performance between the two groups

Threats to Internal Validity (cont’d) • Demoralised Control Group – the control group may perceive that they were placed in the control group because they are not as good as the training group – they may give up and actually reduce productivity – post-test scores would show a difference, but not one due to training

External Validity • The evaluation has to be internally valid before it can be externally valid • If training is effective for a group, will it also be effective for other groups? i.e. is the evaluation generalizable?

Threats to External Validity • Testing – subsequent training groups may not have pre-tests – difficult to conclude that the training would be as effective for them – those who took the pre-test may have focused on certain materials highlighted in the test • Selection – a training program with identical design may produce different results for different categories of employees

Threats to External Validity (cont’d) • Reaction to Evaluation – success from a group may make further evaluation unnecessary – those who are evaluated may get more attention (“Hawthorne effect”): • novelty • feeling special • received feedback • know they are being observed • inspired by the trainer

Threats to External Validity (cont’d) • Multiple Techniques – effectiveness could come from a combination of techniques – example: the evaluation suggests the lecture had no effect and the video instruction was effective – video instruction alone is then used for future training, but proves ineffective – the effectiveness of training in the first group was the result of both lecture and video instruction