Course 09: Speech. Cristian Miron, Continental AG

- Slides: 39


Summary
- Why use speech in a car?
- Speech synthesis
- Speech recognition

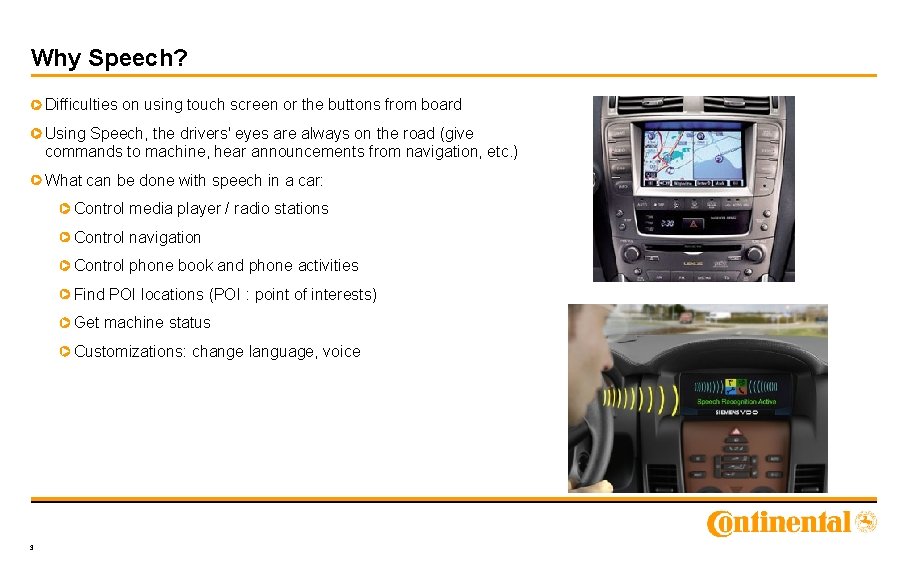

Why Speech?
- Touch screens and dashboard buttons are difficult to use while driving
- With speech, the driver's eyes stay on the road (giving commands to the car, hearing navigation announcements, etc.)
- What can be done with speech in a car:
  - Control the media player and radio stations
  - Control navigation
  - Control the phone book and phone activities
  - Find POI locations (POI: point of interest)
  - Get the car status
  - Customizations: change language, voice

Speech overview
- Difficulties with speech, scenarios and solutions:
  - If the driver is listening to music or the radio, how will speech recognition work? (barge-in)
  - The same problem arises while TTS output is playing (barge-in)
  - Multiple people speaking: "personal space" ideas
- Types of grammars for recognition:
  - Static (for command and control)
  - Text enrollment (for dynamic data such as media player artists, song names, etc.)
  - Voice tags (for dynamic data such as radio station names, phone book contacts)
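A static command grammar can be pictured as a fixed set of phrase patterns mapped to actions. The sketch below is only an illustration: the action names, the phrases and the `parse_command` helper are hypothetical, and it works on already recognized text rather than on audio.

```python
import re

# Hypothetical static grammar: each action has one fixed phrase pattern.
# The "item" slot stands in for dynamic data (artist, contact, destination).
STATIC_GRAMMAR = {
    "play_media": re.compile(r"^play (?P<item>.+)$"),
    "call_contact": re.compile(r"^call (?P<item>.+)$"),
    "navigate_to": re.compile(r"^navigate to (?P<item>.+)$"),
    "next_track": re.compile(r"^next track$"),
}

def parse_command(utterance):
    """Return (action, item) for a recognized utterance, or None if no rule matches."""
    text = " ".join(utterance.lower().split())  # lowercase and collapse whitespace
    for action, pattern in STATIC_GRAMMAR.items():
        match = pattern.match(text)
        if match:
            return action, match.groupdict().get("item")
    return None
```

In a real system the slot values would come from enrolled dynamic data (text enrollment or voice tags) rather than from free text.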

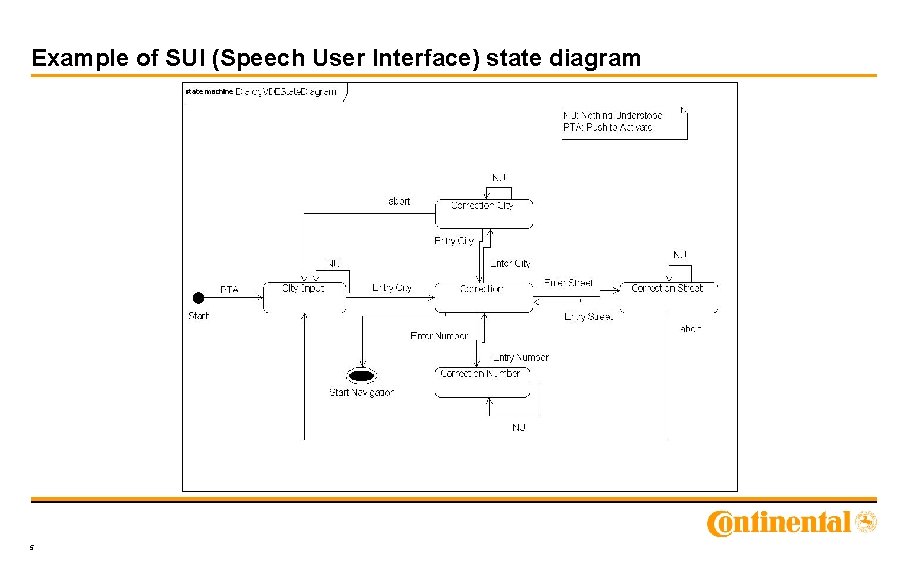

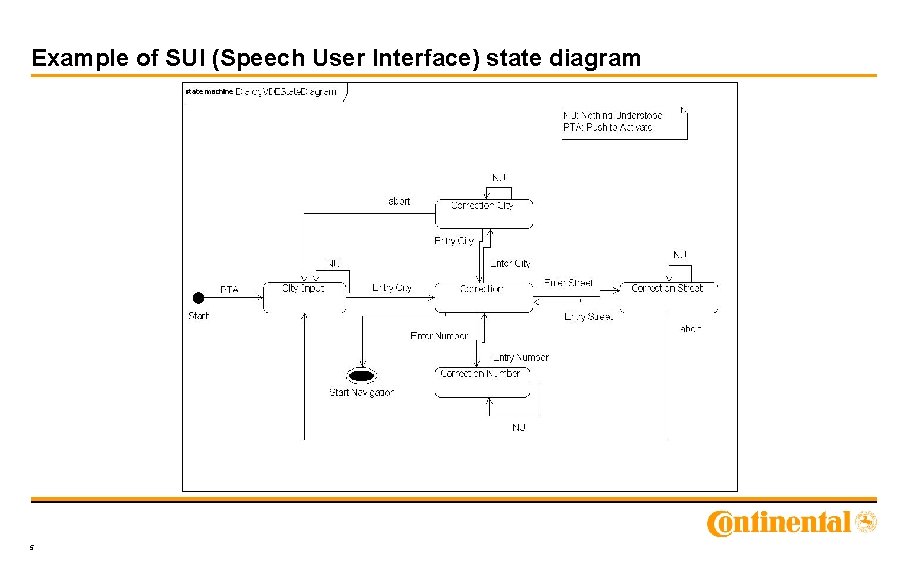

Example of an SUI (Speech User Interface) state diagram

Speech synthesis
- What is speech synthesis?
- History
- How does it work?
- Synthesizer technologies
- Challenges
- Voice synthesizers
- Applications

What is speech synthesis?
- Speech synthesis is the artificial production of human speech
- It can be implemented in software or hardware
- A text-to-speech (TTS) system converts normal language text into speech
- Synthesized speech can be created by concatenating pieces of recorded speech that are stored in a database

What is speech synthesis?
- The quality of a speech synthesizer is judged by its similarity to the human voice (naturalness) and by its ability to be understood (intelligibility)
- Naturalness describes how closely the output sounds like human speech, while intelligibility is the ease with which the output is understood
- An intelligible text-to-speech program allows people with visual impairments or reading disabilities to listen to written works on a home computer
- The ideal speech synthesizer is both natural and intelligible

History
- 10th-12th centuries: long before electronic signal processing was invented, there were attempts to build machines that create human speech (early legends of "speaking heads")
- 1779: the Danish scientist Christian Kratzenstein, working at the Russian Academy of Sciences, built models of the human vocal tract that could produce the five long vowel sounds
- 1930s: Bell Labs developed the vocoder, an electronic speech analyzer and synthesizer that was said to be clearly intelligible; the keyboard-operated Voder derived from it was demonstrated in 1939
- 1968: first complete text-to-speech system
- Early electronic speech synthesizers sounded robotic and were often barely intelligible. However, the quality of synthesized speech has steadily improved, and output from contemporary speech synthesis systems is sometimes indistinguishable from actual human speech.

How does it work?
- The two primary technologies for generating synthetic speech waveforms are concatenative synthesis and formant synthesis
- A text-to-speech system (or "engine") is composed of two parts:
  - a front-end
  - a back-end
- The front-end has two major tasks:
  - Text normalization: it converts raw text containing symbols such as numbers and abbreviations into the equivalent written-out words
  - Grapheme-to-phoneme conversion: it assigns a phonetic transcription to each word, and divides and marks the text into prosodic units such as phrases, clauses and sentences
- The back-end (synthesizer) converts the symbolic linguistic representation into sound
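The text normalization task of the front-end can be sketched as follows. This is a minimal illustration, assuming English output, whole numbers below 1000 and a tiny hypothetical abbreviation table; the names `ABBREVIATIONS`, `number_to_words` and `normalize` are not taken from any particular TTS engine.

```python
import re

# Hypothetical abbreviation table; a real front-end would use a much larger one.
ABBREVIATIONS = {"km": "kilometers", "min": "minutes", "approx.": "approximately"}

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]

def number_to_words(n):
    """Spell out an integer in the range 0..999."""
    if not 0 <= n < 1000:
        raise ValueError("this sketch only handles 0..999")
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + ("-" + ONES[ones] if ones else "")
    hundreds, rest = divmod(n, 100)
    return ONES[hundreds] + " hundred" + (" " + number_to_words(rest) if rest else "")

def normalize(text):
    """Expand digits and known abbreviations into written-out words."""
    words = []
    for token in text.split():
        if re.fullmatch(r"[0-9]{1,3}", token):
            words.append(number_to_words(int(token)))
        else:
            words.append(ABBREVIATIONS.get(token.lower(), token))
    return " ".join(words)
```

For example, `normalize("Drive 42 km")` yields `"Drive forty-two kilometers"`. The output of this step would then be passed to grapheme-to-phoneme conversion.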

Overview of a typical TTS system

Synthesizer technologies
- Concatenative synthesis (based on the concatenation of segments of recorded speech):
  - Unit selection synthesis
  - Diphone synthesis
  - Domain-specific synthesis
- Formant synthesis (does not use human speech samples at runtime)
- Articulatory synthesis (computational techniques for synthesizing speech based on models of the human vocal tract)
- HMM-based synthesis (based on hidden Markov models)

Concatenative synthesis
- Generally, concatenative synthesis produces the most natural-sounding synthesized speech
- Unit selection synthesis:
  - uses large databases of recorded speech
  - provides the greatest naturalness, because it applies only a small amount of digital signal processing (DSP) to the recorded speech
  - maximum naturalness typically requires very large unit-selection speech databases, in some systems ranging into the gigabytes of recorded data and representing dozens of hours of speech
- Diphone synthesis:
  - uses a minimal speech database containing all the diphones (sound-to-sound transitions) occurring in a language
  - the number of diphones depends on the phonotactics of the language: for example, Spanish has about 800 diphones and German about 2500
  - the quality of the resulting speech is generally worse than that of unit-selection systems, but more natural-sounding than the output of formant synthesizers
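The idea behind diphone synthesis can be sketched in a few lines: a phoneme sequence is turned into its sound-to-sound transitions, and the recorded unit for each transition is looked up and concatenated. The function names, the `_` silence marker and the sample lists used as "recordings" are assumptions for illustration; a real system would also smooth the joins and adjust prosody.

```python
def to_diphones(phonemes, silence="_"):
    """Return the diphone (transition) names for a phoneme sequence.

    The sequence is padded with silence so that the first and last
    phonemes also get a transition.
    """
    padded = [silence] + list(phonemes) + [silence]
    return [f"{left}-{right}" for left, right in zip(padded, padded[1:])]

def synthesize(phonemes, database):
    """Concatenate the recorded samples of each diphone (no smoothing)."""
    samples = []
    for unit in to_diphones(phonemes):
        samples.extend(database[unit])  # KeyError if the unit was never recorded
    return samples
```

For the phonemes `["h", "e", "l", "o"]` the units are `_-h`, `h-e`, `e-l`, `l-o` and `o-_`, which shows why the database size depends on which transitions the language allows.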

Concatenative synthesis
- Domain-specific synthesis:
  - concatenates prerecorded words and phrases to create complete utterances
  - is used in applications where the variety of texts the system will output is limited to a particular domain, such as transit schedule announcements or weather reports
- Formant synthesis:
  - does not use human speech samples at runtime
  - the synthesized speech output is created using an acoustic model
  - parameters such as fundamental frequency, voicing and noise levels are varied over time to create a waveform of artificial speech
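A rough sketch of the formant approach, under simplifying assumptions: the source is a plain impulse train at the fundamental frequency (voiced sound only, no noise component), and each formant is modeled by a second-order digital resonator applied in cascade. The function names and the formant and bandwidth values in the usage note are illustrative assumptions, not parameters of any particular synthesizer.

```python
import math

def resonator(signal, freq_hz, bandwidth_hz, sample_rate):
    """Second-order digital resonator: y[n] = a*x[n] + b*y[n-1] + c*y[n-2]."""
    t = 1.0 / sample_rate
    c = -math.exp(-2.0 * math.pi * bandwidth_hz * t)
    b = 2.0 * math.exp(-math.pi * bandwidth_hz * t) * math.cos(2.0 * math.pi * freq_hz * t)
    a = 1.0 - b - c  # unity gain at 0 Hz
    out = []
    y1 = y2 = 0.0
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y2, y1 = y1, y
    return out

def synthesize_vowel(f0_hz, formants, duration_s=0.2, sample_rate=16000):
    """Generate a static vowel-like waveform from (frequency, bandwidth) formant pairs."""
    n = int(round(duration_s * sample_rate))
    period = max(1, int(round(sample_rate / f0_hz)))
    # Voiced source: one impulse per pitch period.
    signal = [1.0 if i % period == 0 else 0.0 for i in range(n)]
    for freq_hz, bandwidth_hz in formants:
        signal = resonator(signal, freq_hz, bandwidth_hz, sample_rate)
    peak = max(abs(s) for s in signal) or 1.0
    return [s / peak for s in signal]  # normalize to the range -1..1
```

Calling `synthesize_vowel(120.0, [(730.0, 90.0), (1090.0, 110.0), (2440.0, 170.0)])` produces samples for a roughly "a"-like sound. Varying `f0_hz` and the formant values over time, and mixing in noise, is what turns this into speech.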

Challenges
- Text normalization challenges
- Text-to-phoneme challenges
- Evaluation challenges
- Prosodics and emotional content

Text normalization challenges
- Texts are full of heteronyms, numbers and abbreviations that all require expansion into a phonetic representation
- Most text-to-speech (TTS) systems do not generate semantic representations of their input texts
- Various heuristic techniques are used to guess the proper way to disambiguate homographs, such as examining neighboring words and using statistics about frequency of occurrence
- Deciding how to convert numbers is another problem that TTS systems have to address
- Abbreviations can be ambiguous
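The "examine neighboring words" heuristic can be illustrated with the ambiguous abbreviation "St.", which may stand for "Saint" or "Street". The rule below is a deliberately naive assumption (a following capitalized word suggests "Saint"), and `expand_st` is a hypothetical helper, not part of any TTS product.

```python
def expand_st(tokens):
    """Expand "St." to "Saint" or "Street" by looking at the next token."""
    expanded = []
    for i, token in enumerate(tokens):
        if token == "St.":
            following = tokens[i + 1] if i + 1 < len(tokens) else ""
            # Naive rule: "St. John" -> Saint, "Main St." -> Street.
            expanded.append("Saint" if following[:1].isupper() else "Street")
        else:
            expanded.append(token)
    return expanded
```

The rule already fails on input like "Main St. Turn left", which is why real systems combine several cues with frequency statistics.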

Text-to-phoneme challenges
- Dictionary-based approach:
  - a large dictionary containing all the words of a language and their correct pronunciations is stored by the program
  - determining the correct pronunciation of a word is a matter of looking it up in the dictionary and replacing the spelling with the pronunciation specified there
  - it is quick and accurate, but fails completely if it is given a word that is not in its dictionary
- Rule-based approach:
  - pronunciation rules are applied to words to determine their pronunciations based on their spellings
  - it works on any input, but the complexity of the rules grows substantially as the system takes irregular spellings or pronunciations into account
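The two approaches are often combined: look the word up first and fall back to rules for unknown words. The sketch below assumes a toy lexicon and one naive rule per letter; the phoneme symbols are ARPAbet-like, and both tables are made up for illustration.

```python
# Toy pronunciation dictionary (hypothetical entries).
LEXICON = {
    "speech": ["S", "P", "IY", "CH"],
    "car": ["K", "AA", "R"],
}

# Naive fallback: one phoneme per letter. Real rule sets are context dependent.
LETTER_RULES = {
    "a": "AE", "b": "B", "c": "K", "d": "D", "e": "EH", "f": "F", "g": "G",
    "h": "HH", "i": "IH", "j": "JH", "k": "K", "l": "L", "m": "M", "n": "N",
    "o": "AA", "p": "P", "q": "K", "r": "R", "s": "S", "t": "T", "u": "AH",
    "v": "V", "w": "W", "x": "K", "y": "Y", "z": "Z",
}

def pronounce(word):
    """Dictionary lookup first, letter-to-sound rules for unknown words."""
    key = word.lower()
    if key in LEXICON:
        return LEXICON[key]
    return [LETTER_RULES[ch] for ch in key if ch in LETTER_RULES]
```

Here `pronounce("Car")` comes from the dictionary, while an unknown word such as "nav" is handled by the rules and gets `["N", "AE", "V"]`.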

Evaluation challenges
- The consistent evaluation of speech synthesis systems may be difficult because of a lack of universally agreed objective evaluation criteria
- The quality of speech synthesis systems also depends to a large degree on the quality of the production technique and on the facilities used to replay the speech

Voice synthesizers and APIs
- Microsoft Speech API
- SoftVoice TTS
- SVOX TTS
- Apple PlainTalk
- FreeTTS
- Nuance Vocalizer
- Vocoder
- eSpeak

Applications
- Accessibility (speech synthesis has long been a vital assistive technology tool, and its application in this area is significant and widespread)
- News services (some news sites use speech synthesis to convert written news into audio content, which can be used in mobile applications)
- Entertainment (speech synthesis techniques are also used in entertainment productions such as games and animations)
- Navigation

Speech recognition
Agenda
- What is speech recognition?
- History
- How does it work?
- Applications
- Speech recognition engines
- Challenges

What is speech recognition?
- Speech recognition converts spoken words to machine-readable input

What is speech recognition?
- The performance of speech recognition systems is usually specified in terms of accuracy and speed
- Accuracy is usually rated with the word error rate (WER)
- Speed is measured with the real time factor: if it takes time P to process an input of duration I, the real time factor is defined as RTF = P / I
- Optimal conditions usually assume that users:
  - have speech characteristics which match the training data,
  - can achieve proper speaker adaptation, and
  - work in a clean noise environment (e.g. a quiet office or laboratory space)
- Limited-vocabulary systems, requiring no training, can recognize a small number of words (for instance, the ten digits) as spoken by most speakers
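Both metrics are easy to compute. The sketch below assumes the usual definition of WER as the word-level edit distance (substitutions, deletions and insertions) divided by the number of words in the reference transcript; the function names are illustrative.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Word-level Levenshtein distance, computed one row at a time.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)

def real_time_factor(processing_time, input_duration):
    """RTF = P / I; values below 1 mean faster than real time."""
    return processing_time / input_duration
```

For the reference "call john smith now" and the hypothesis "call jon smith" there is one substitution and one deletion over four reference words, so the WER is 0.5. A recognizer that needs 2 seconds for 4 seconds of audio has an RTF of 0.5.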

History
- 1984: first speech recognition system based on a supercomputer. Each computation takes a few minutes. Only 5000 English words are recognized.
- 1986: Tangora 4 prototype. Using a special microprocessor, it processes a human voice in real time on a desktop PC.
- 1993: IBM Personal Dictation, the first solution for the PC, at 1000 USD.
- 1998: IBM, Dragon, Lernout & Hauspie and Philips launch the first consumer versions of their products

How does it work?
- Modern statistically based speech recognition algorithms use:
  - an acoustic model
  - a language model
- Hidden Markov model (HMM)-based speech recognition (widely used in many systems)
- Dynamic time warping (DTW)-based speech recognition

Hidden Markov model (HMM)-based speech recognition
- Modern general-purpose speech recognition systems are generally based on hidden Markov models
- These are statistical models which output a sequence of symbols or quantities
- One possible reason why HMMs are used in speech recognition is that a speech signal can be viewed as a piecewise stationary or short-time stationary signal
- Another reason why HMMs are popular is that they can be trained automatically and are simple and computationally feasible to use
- In speech recognition, the hidden Markov model would output a sequence of n-dimensional real-valued vectors (with n being a small integer, such as 10), outputting one of these every 10 milliseconds
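Decoding with an HMM means finding the most likely hidden state sequence for a series of observations, which is commonly done with the Viterbi algorithm. The sketch below is simplified on purpose: it uses discrete observation symbols instead of real-valued feature vectors, and the two-state model in the usage note is invented for illustration.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (most likely state path, its probability) for a discrete HMM."""
    # best[t][s]: probability of the best path that ends in state s at time t
    best = [{s: start_p[s] * emit_p[s][observations[0]] for s in states}]
    back = [{}]  # back[t][s]: predecessor of s on that best path
    for obs in observations[1:]:
        scores, pointers = {}, {}
        for s in states:
            prev = max(states, key=lambda p: best[-1][p] * trans_p[p][s])
            scores[s] = best[-1][prev] * trans_p[prev][s] * emit_p[s][obs]
            pointers[s] = prev
        best.append(scores)
        back.append(pointers)
    # Backtrack from the most likely final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for pointers in reversed(back[1:]):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[-1][last]

# Invented two-state model: frame energy ("low"/"high") emitted by silence or speech.
STATES = ["silence", "speech"]
START = {"silence": 0.8, "speech": 0.2}
TRANS = {"silence": {"silence": 0.7, "speech": 0.3},
         "speech": {"silence": 0.2, "speech": 0.8}}
EMIT = {"silence": {"low": 0.9, "high": 0.1},
        "speech": {"low": 0.3, "high": 0.7}}
```

With these numbers, `viterbi(["low", "high", "high", "low"], STATES, START, TRANS, EMIT)` decodes the frames as silence, speech, speech, speech. A production recognizer would work with log probabilities to avoid underflow on long inputs.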

Dynamic time warping (DTW)-based speech recognition
- Dynamic time warping is an approach that was historically used for speech recognition but has now largely been displaced by the more successful HMM-based approach
- Dynamic time warping is an algorithm for measuring similarity between two sequences which may vary in time or speed
- For instance, similarities in walking patterns would be detected even if in one video the person was walking slowly and in another they were walking more quickly, or even if there were accelerations and decelerations during the course of one observation
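A minimal sketch of DTW on one-dimensional sequences, assuming the absolute difference as the local cost. In speech recognition the sequence elements would be feature vectors and the local cost a vector distance; `dtw_distance` is an illustrative name.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two numeric sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j]: best alignment cost of a[:i] against b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],      # a advances alone
                                     cost[i][j - 1],      # b advances alone
                                     cost[i - 1][j - 1])  # both advance
    return cost[n][m]
```

The same pattern spoken at half speed, `dtw_distance([1, 2, 3], [1, 1, 2, 2, 3, 3])`, gives a distance of 0, while a plain element-by-element comparison would not even be defined for sequences of different length.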

Applications
- Health care
- Military:
  - High-performance fighter aircraft
  - Helicopters
  - Battle management
  - Training air traffic controllers
- Telephony and other domains
- People with disabilities
- Mobile telephony, including mobile email
- Robotics
- Video games
- Home automation
- Automotive speech recognition
- Hands-free computing: voice command recognition as a computer user interface

Speech Recognition engines
- Microsoft Speech Server
- IBM: WebSphere Voice Server
- Nuance: VoCon
- VoiceBox

Speech Recognition in Linux
- There is currently no open-source equivalent of proprietary speech recognition software for Linux
- However, there are several incomplete open-source projects and solutions that could be used to attain some elements of speech recognition in the free operating system:
  - CVoiceControl
  - DynaSpeak
  - VoxForge
  - Julius

Challenges
- Variability of speech patterns
- Processing power
- Extracting meaning
- Background noise
- Continuous speech recognition

Challenges of speech recognition in the automotive industry
- Noisy environment: the noise level of the signal is between 20 dB and 5 dB
- Cheap (low-cost) microphones placed 30-100 cm from the speaker
- Embedded platforms with restrictions on CPU, memory and storage space

Speech recognition in the automotive industry
- Ford SYNC (developed by Ford and Microsoft)
- Continental MMP (developed by Continental/Siemens)

Speech Application Programming Interface (SAPI)
- The Speech Application Programming Interface, or SAPI, is an API developed by Microsoft to allow the use of speech recognition and speech synthesis within Windows applications
- In general, all versions of the API have been designed so that a software developer can write an application that performs speech recognition and synthesis using a standard set of interfaces, accessible from a variety of programming languages
- In addition, a third-party company can produce its own speech recognition and TTS engines, or adapt existing engines, to work with SAPI

SAPI 5 API
Features included:
- Shared recognizer
- In-proc recognizer
- Grammar objects
- Voice object
- Audio interfaces
- User lexicon object
- Object tokens

Nice things
- Faster chip-based speech recognition will enable video players to search rapidly for Arnold Schwarzenegger saying "Hasta la vista, baby!" in the movie
- Lower power consumption will enable a cell phone to take dictated notes

Voice Recognition Funny Errors
- While typing an operating report: "The patient was prepped and raped in the usual fashion" instead of "The patient was prepped and draped in the usual fashion."
- "she is buried" instead of "she is married"

Speech: Questions?

References
- Speech synthesis: http://en.wikipedia.org/wiki/Speech_synthesis
- Speech recognition: http://en.wikipedia.org/wiki/Speech_recognition
- SAPI: http://en.wikipedia.org/wiki/Speech_Application_Programming_Interface
