CSE 6339 3.0 Introduction to Computational Linguistics

- Slides: 28

CSE 6339 3.0 Introduction to Computational Linguistics
Tuesdays, Thursdays 14:30-16:00, South Ross 101, Fall Semester, 2011

HPSGs: Unification Review, HPSG Introduction, Principles, Rules, Examples, Modularity

Instructor: Nick Cercone, 3050 CSEB, nick@cse.yorku.ca

Unification Review

Robinson (1965): all formulae are represented in clausal form, i.e., as disjunctions of literals. Namely, $p_1 \wedge \dots \wedge p_k \rightarrow q_1 \vee \dots \vee q_t$ is equivalent to $\neg p_1 \vee \dots \vee \neg p_k \vee q_1 \vee \dots \vee q_t$.

Three inference rules:
1. resolution
2. substitution
3. simplification

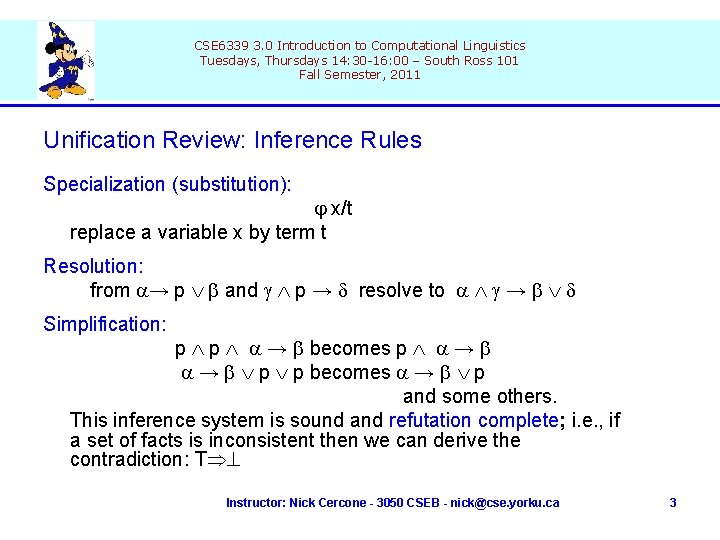

Unification Review: Inference Rules

- Specialization (substitution): $x/t$ replaces a variable $x$ by a term $t$.
- Resolution: from $\alpha \rightarrow p$ and $p \rightarrow \beta$, resolve to $\alpha \rightarrow \beta$.
- Simplification: $p \wedge p \rightarrow \alpha$ becomes $p \rightarrow \alpha$, and $\alpha \rightarrow p \vee p$ becomes $\alpha \rightarrow p$.
- And some others.

This inference system is sound and refutation complete; i.e., if a set of facts is inconsistent, then we can derive the contradiction $\bot$.
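The resolution and simplification rules can be sketched for the ground (propositional) case in Python. This is a minimal illustration under stated assumptions, not Robinson's full procedure: it leaves out substitution and unification, and it assumes clauses are sets of literal strings in which a leading `~` marks negation. The helper names `negate`, `resolve`, and `refutes` are hypothetical. Because a clause is a set, simplification ($p \vee p$ becomes $p$) happens automatically, and deriving the empty clause stands in for deriving the contradiction.

```python
from itertools import combinations


def negate(lit: str) -> str:
    """Complement of a literal: 'p' <-> '~p' (assumed encoding)."""
    return lit[1:] if lit.startswith("~") else "~" + lit


def resolve(c1: frozenset, c2: frozenset) -> list:
    """All resolvents of two clauses; each clause is a frozenset of literals (a disjunction)."""
    resolvents = []
    for lit in c1:
        if negate(lit) in c2:
            # Cancel the complementary pair; set union also merges duplicates (simplification).
            resolvents.append((c1 - {lit}) | (c2 - {negate(lit)}))
    return resolvents


def refutes(clauses) -> bool:
    """Saturate under resolution; True iff the empty clause (a contradiction) is derived."""
    known = {frozenset(c) for c in clauses}
    if frozenset() in known:
        return True
    while True:
        new = set()
        for c1, c2 in combinations(known, 2):
            for r in resolve(c1, c2):
                if not r:
                    return True
                new.add(r)
        if new <= known:
            return False  # nothing new is derivable: the clause set is satisfiable
        known |= new
```

For instance, $\alpha \rightarrow p$ and $p \rightarrow \beta$ become the clauses `{"~a", "p"}` and `{"~p", "b"}`, and `resolve` yields the single clause `{"~a", "b"}`, i.e. $\alpha \rightarrow \beta$.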

Unification Review: Examples

As usual, x and y are variables and a and b are constants.

- UNIF(on(a, x), on(a, b)) = {x → b}
- UNIF(on(a, x), on(y, b)) = {x → b, y → a}
- UNIF(on(a, x), on(y, f(y))) = {y → a, x → f(a)}
- UNIF(on(x, y), on(y, f(y))) = fail (after x → y, y would have to unify with f(y), which the occurs check forbids)
- UNIF(on(a, x), on(x, b)) = fail (a ≠ b)
- UNIF(f(x, g(y, y), x), f(z, z, g(w, f(T)))) = ?
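A compact sketch of syntactic unification with an occurs check, in Python, may help when working through these examples. It assumes a hypothetical term encoding: variables are instances of a small `Var` class, constants are plain strings, and a compound term such as on(a, x) is the tuple `("on", "a", Var("x"))`. The returned substitution is a dict from variables to terms, corresponding to the {x → b} notation above, and `None` stands for fail.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Var:
    """A logic variable such as x or y (hypothetical encoding)."""
    name: str


# Constants are plain strings ("a", "b"); compound terms are tuples (functor, arg1, ..., argN).


def walk(t, s: dict):
    """Follow bindings in s until reaching a non-variable or an unbound variable."""
    while isinstance(t, Var) and t in s:
        t = s[t]
    return t


def occurs(v: Var, t, s: dict) -> bool:
    """Occurs check: does variable v appear inside term t (under s)?"""
    t = walk(t, s)
    if t == v:
        return True
    return isinstance(t, tuple) and any(occurs(v, arg, s) for arg in t[1:])


def unify(t1, t2, s: Optional[dict] = None) -> Optional[dict]:
    """Return a substitution unifying t1 and t2 (extending s), or None for 'fail'."""
    s = {} if s is None else s
    t1, t2 = walk(t1, s), walk(t2, s)
    if t1 == t2:
        return s
    if isinstance(t1, Var):
        return None if occurs(t1, t2, s) else {**s, t1: t2}
    if isinstance(t2, Var):
        return None if occurs(t2, t1, s) else {**s, t2: t1}
    if isinstance(t1, tuple) and isinstance(t2, tuple) and t1[0] == t2[0] and len(t1) == len(t2):
        for a1, a2 in zip(t1[1:], t2[1:]):
            s = unify(a1, a2, s)
            if s is None:
                return None
        return s
    return None  # clash between distinct constants or functors


def apply_subst(t, s: dict):
    """Apply substitution s to term t throughout."""
    t = walk(t, s)
    if isinstance(t, tuple):
        return (t[0],) + tuple(apply_subst(arg, s) for arg in t[1:])
    return t
```

With `x, y = Var("x"), Var("y")`, `unify(("on", "a", x), ("on", y, "b"))` returns `{y: "a", x: "b"}`, and the fourth example returns `None` because of the occurs check.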

Head-Driven Phrase Structure Grammars (HPSGs)

HPSG has been developed by Carl Pollard and Ivan Sag since 1987, initially as a refinement and extension of Generalized Phrase Structure Grammar (Gazdar, 1981). It belongs to a family of phrase-structure-theoretic approaches in which a rich set of lexical specifications, coupled with a few very general combinatorial constraints and restrictions on information sharing, interact monotonically to give rise to sets of complex objects called feature structures, which model the properties of linguistic signs.

Head-Driven Phrase Structure Grammars (HPSGs)

Consider, for example, an auxiliary verb in English. Such verbs select a certain class of subjects and appear either in canonically ordered sentences (Robin has left) or in inverted sentences (Has Robin left?). In HPSG such a linguistic expression is modeled by a feature structure that includes a specification for the feature CAT, providing relevant syntactic information, including a specification of the HEAD properties of the expression: those which are invariably shared between mother and head daughter. The feature HEAD is then taken to be a function which maps a particular node labeled by the sort category to a particular node of sort noun, verb, and so on; for verbs, this latter node is itself mapped by a function VFORM to a node labeled by one of a set of sorts fin(ite), inf(initive), …, and by a function AUX to one of the sorts …
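The view of features as functions from node to node can be illustrated with nested Python dicts. The structure below is a hypothetical, partial entry for the finite auxiliary has: it records only the CAT, HEAD, and VFORM features named above, stores each node's sort under an assumed `sort` key, and omits AUX because its values are elided here. The helper `follow` is likewise illustrative.

```python
# Hypothetical, partial feature structure for the finite auxiliary "has".
# Each node records its sort under "sort"; AUX is omitted because its values are elided on the slide.
HAS = {
    "sort": "sign",
    "CAT": {
        "sort": "category",
        "HEAD": {
            "sort": "verb",
            "VFORM": {"sort": "fin"},
        },
    },
}


def follow(fs: dict, path: list) -> dict:
    """Treat each feature as a function from node to node and compose them along the path."""
    node = fs
    for feature in path:
        if feature not in node:
            raise KeyError(f"feature {feature} is not defined on a node of sort {node['sort']}")
        node = node[feature]
    return node
```

For example, `follow(HAS, ["CAT", "HEAD", "VFORM"])` reaches the node of sort `fin`, mirroring the composition CAT, then HEAD, then VFORM described above.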

Head-Driven Phrase Structure Grammars (HPSGs)

There is a long-standing, near-universal, and erroneous practice of teaching syntax in a void, as if the communicative function of language had nothing to do with syntax. And semantics has customarily been taught in sequence after syntax, or else not at all. … [HPSG] seeks to redress this situation by building up syntactic and semantic aspects of grammatical theory in an integrated way from the start, under the assumption that neither is of linguistic interest divorced from the other.

Head-Driven Phrase Structure Grammars (HPSGs)

The theory presented, head-driven phrase structure grammar (so called because of its central notion of the grammatical head), is an information-based (or unification-based) theory that has roots in different research programs within linguistics and neighboring disciplines (philosophy and computer science). HPSG draws upon and attempts to synthesize theories such as categorial grammar, lexical-functional grammar, generalized phrase structure grammar, and government-binding theory; but many key ideas arise from semantic theories like situation semantics and discourse representation theory, and from computational work in knowledge representation, data type theory, and formalisms based on the unification of partial information. (Carl Pollard, 1987)

Head-Driven Phrase Structure Grammars (HPSGs)

HPSG is not a theory of syntax. Researchers into GB, GPSG, and LFG have focused on syntax, relying mainly on a Montague-style system of model-theoretic interpretation. In contrast, HPSG theory inextricably intertwines syntax and semantics; that is, syntactic and semantic aspects of grammatical theory are built up in an integrated way from the start. Thus HPSG is closer in spirit to situation semantics, and this closeness is reflected in the choice of ontological categories in terms of which the semantic contents of signs are analyzed: individuals, relations, roles, situations, and circumstances.

Head-Driven Phrase Structure Grammars (HPSGs)

HPSG is an information-based theory of natural language syntax and semantics. It was developed by synthesizing a number of the theories mentioned above. In these theories, syntactic features are classified as head features, binding features, and the subcategorization feature; accordingly, HPSG uses three principles of universal grammar:

- Head Feature Principle
- Binding Inheritance Principle
- Subcategorization Principle

Head Feature Principle

Similar to GPSG's Head Feature Convention. It states that the head features (e.g., part of speech, the case of nouns, verb inflection) of a phrasal sign are shared with its head daughter; e.g., the case of a noun phrase is determined by the case of its head noun.
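One way to picture the sharing the Head Feature Principle demands is to make the mother and head daughter literally hold the same HEAD object. The Python sketch below assumes flat head-feature dicts (no nested values) and a hypothetical helper `share_head`; a real implementation would unify full feature structures.

```python
from typing import Optional


def share_head(mother: dict, head_dtr: dict) -> Optional[dict]:
    """Head Feature Principle sketch: unify the HEAD values of a phrase and its head daughter,
    then make both signs point at the one resulting object (structure sharing, not copying)."""
    merged = dict(mother["HEAD"])
    for feat, val in head_dtr["HEAD"].items():
        if feat in merged and merged[feat] != val:
            return None  # clash, e.g. the phrase is required to be accusative but the head noun is nominative
        merged[feat] = val
    mother["HEAD"] = head_dtr["HEAD"] = merged
    return merged
```

For example, with `np = {"HEAD": {"pos": "noun"}}` and `she = {"PHON": "she", "HEAD": {"pos": "noun", "case": "nom"}}`, the NP's case is never stated on the NP itself; it becomes `nom` because `share_head(np, she)` leaves both signs holding the same HEAD object.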

Binding Inheritance Principle

Similar to GPSG's Foot Feature Principle. Binding features encode syntactic dependencies of signs that are essentially nonlocal, such as the presence of gaps, relative pronouns, etc. This principle states that dependency information is transmitted up the sign's constituent structure until the dependency can become "bound" (saturated).
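The upward flow of nonlocal dependency information can be sketched as a recursive pass over a tree. In this Python sketch the `Node` fields `gaps` (dependencies introduced at a leaf) and `binds` (dependencies saturated at a node, e.g. by a filler) are hypothetical simplifications; HPSG states this with dedicated nonlocal features on signs rather than with tree annotations.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str
    gaps: frozenset = frozenset()   # dependencies introduced here, e.g. {"NP"} for an empty NP
    binds: frozenset = frozenset()  # dependencies bound (saturated) at this node
    children: list = field(default_factory=list)


def inherit(node: Node) -> frozenset:
    """Pass unbound dependencies up the tree until some node binds them."""
    pending = set(node.gaps)
    for child in node.children:
        pending |= inherit(child)
    return frozenset(pending - node.binds)


# Example: "who Kim likes __", with the gap bound by the filler "who" at the top node.
gap = Node("NP", gaps=frozenset({"NP"}))
vp = Node("VP", children=[Node("V"), gap])
s = Node("S", children=[Node("NP"), vp])
top = Node("S'", binds=frozenset({"NP"}), children=[Node("NP"), s])
```

Here `inherit(vp)` and `inherit(s)` both still carry the NP dependency, while `inherit(top)` is empty: the dependency travels up from the gap until the node with the filler binds it.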

Subcategorization Principle

A generalization of categorial grammar's "argument cancellation". Subcategorization is described by a SUBCAT feature, whose value is a list of signs with which the sign in question must combine to be saturated. For example, the SUBCAT value of the past-tense intransitive verb walked is the list ⟨NP[NOM]⟩, since walked must combine with a single nominative-case NP (the subject) to be saturated; the past-tense transitive verb liked has the SUBCAT value ⟨NP[ACC], NP[NOM]⟩, since liked requires an accusative-case NP (the direct object) and a nominative-case NP (the subject).

- Dowty, D. (1982a). Grammatical Relations and Montague Grammar. In P. Jacobson and G. K. Pullum (eds.), The Nature of Syntactic Representation. Dordrecht: Reidel.
- Dowty, D. (1982b). More on the Categorial Analysis of Grammatical Relations. In A. Zaenen (ed.), Subjects and Other Subjects: Proceedings of the Harvard Conference on Grammatical Relations. Bloomington: Indiana University Linguistics Club.
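Argument cancellation can be sketched with SUBCAT lists of category labels. This Python sketch assumes that a head always combines with the first sign on its list (matching the ordering above, with the subject last) and compares categories by string equality, whereas HPSG proper unifies full signs; the names `cancel`, `WALKED`, and `LIKED` are hypothetical.

```python
def cancel(subcat: list, complement: str) -> list:
    """Argument cancellation: combine with the first sign on the SUBCAT list;
    the result still needs everything after it."""
    if not subcat:
        raise ValueError("sign is already saturated")
    if subcat[0] != complement:
        raise ValueError(f"expected {subcat[0]}, got {complement}")
    return subcat[1:]


WALKED = ["NP[NOM]"]             # intransitive: needs only its subject
LIKED = ["NP[ACC]", "NP[NOM]"]   # transitive: direct object first, subject last
```

`cancel(LIKED, "NP[ACC]")` leaves `["NP[NOM]"]` (a VP still needing its subject), and cancelling `"NP[NOM]"` from that leaves the empty list, i.e. a saturated sentence.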

HPSG

Because words (lexical signs) in HPSG are highly structured, they, together with the principles above, constrain the sharing of information between lexical signs and the phrasal signs they head (their "projections", in the sense of the Projection Principle of GB theory).

HPSG

HPSG principles are formulated more explicitly, so implementations are more likely to be faithful to the theory. There is also less work for language-specific rules of grammar: in Pollard & Sag (1987), only four highly schematic HPSG rules accounted for a substantial fragment of English. One rule, informally written as

[SUBCAT ⟨⟩] → H[LEX −], C

subsumes a number of conventional phrase structure rules, such as those below:

- S → NP VP
- NP → DET NOM
- NP → NP's NOM

HPSG

Under the rule above, one option for an English phrase is to be a saturated sign [SUBCAT ⟨⟩], where ⟨⟩ denotes the empty list, whose constituents are a phrasal head (H[LEX −]) and a single complement (C). Another HPSG rule, expressed informally as

[SUBCAT ⟨[ ]⟩] → H[LEX +], C*

says that another option for English phrases is to be a sign subcategorizing for exactly one complement, [SUBCAT ⟨[ ]⟩], where ⟨[ ]⟩ stands for any list of length one, and whose daughters are a lexical head (H[LEX +]) and any number of complement daughters.

HPSG

This rule subsumes a number of conventional phrase structure rules, such as VP → V; VP → V S'; AP → A; VP → V NP; AP → A PP; PP → P NP; VP → V PP; VP → V VP; VP → V AP; VP → V NP NP; VP → V NP PP; etc. HPSG rules determine constituency only. This follows GPSG, where generalizations about the relative order of constituents are factored out of the phrase structure rules and expressed in independent, language-specific linear precedence (LP) constraints. Unlike in GPSG, however, some LP constraints may refer not only to syntactic categories but also to their grammatical relations.
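The two schematic rules can be sketched as functions that either license a local tree and return its mother, or return `None`. In this Python sketch, `Sign`, `schema1`, and `schema2` are hypothetical; categories are plain labels, the mother simply inherits the head's category (as the Head Feature Principle would require), and complements are matched as a multiset because ordering is left to LP constraints.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Sign:
    cat: str             # category label; a head and its projections share it
    lex: bool            # True for LEX + (a word), False for LEX - (a phrase)
    subcat: tuple = ()   # categories still required, subject (least oblique) last


def schema1(head: Sign, comps: list) -> Optional[Sign]:
    """[SUBCAT <>] -> H[LEX -], C: a phrasal head plus its one remaining complement."""
    if head.lex or len(comps) != 1 or head.subcat != (comps[0].cat,):
        return None
    return Sign(head.cat, False, ())


def schema2(head: Sign, comps: list) -> Optional[Sign]:
    """[SUBCAT <[ ]>] -> H[LEX +], C*: a lexical head plus all complements but the last."""
    if not head.lex or not head.subcat:
        return None
    # ID rules fix constituency only, so complements are compared as a multiset;
    # their surface order would be decided separately by LP constraints.
    if sorted(c.cat for c in comps) != sorted(head.subcat[:-1]):
        return None
    return Sign(head.cat, False, head.subcat[-1:])
```

With a ditransitive head such as `Sign("V", True, ("NP", "NP", "NP"))`, `schema2` licenses VP → V NP NP; an intransitive head with SUBCAT `("NP",)` and no complements gives VP → V; and `schema1` then combines any such VP with its subject, as in S → NP VP.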

HPSG

Additional lexicalization of linguistic information, and further simplification of the grammar, is achieved in HPSG by lexical rules (similar to those of LFG). Lexical rules operate on lexical signs of a given input class, systematically affecting their phonology, semantics, and syntax to produce lexical signs of a certain output class.
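A lexical rule can be modelled as a function from lexical signs of its input class to signs of its output class. The passive rule below is a hypothetical illustration, not one stated in these slides: it takes transitive past participles, relinks the object's index to a nominative subject, and leaves CONTENT unchanged so that the semantic roles follow the indices. It changes only syntax and role linking; a rule that also alters phonology would compute a new `phon` value as well. The VFORM labels `psp` and `pas` are assumed for illustration.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class LexSign:
    phon: str
    vform: str      # hypothetical VFORM labels: "psp" (past participle), "pas" (passive)
    subcat: tuple   # (category, index) pairs, least oblique (subject) last
    content: tuple  # (relation, (role, index), ...)


def passive(sign: LexSign) -> Optional[LexSign]:
    """Hypothetical passive lexical rule: transitive past participle in, passive participle out."""
    if sign.vform != "psp" or len(sign.subcat) != 2:
        return None  # not in the rule's input class
    (obj_cat, obj_idx), (subj_cat, _subj_idx) = sign.subcat
    if (obj_cat, subj_cat) != ("NP[ACC]", "NP[NOM]"):
        return None
    # The object's index is now carried by a nominative subject; the logical subject is dropped
    # (an optional by-phrase is ignored here). CONTENT is untouched, so roles follow the indices.
    return replace(sign, vform="pas", subcat=(("NP[NOM]", obj_idx),))


ADMIRED = LexSign(
    phon="admired",
    vform="psp",
    subcat=(("NP[ACC]", "i"), ("NP[NOM]", "j")),
    content=("ADMIRE", ("ADMIRER", "j"), ("ADMIREE", "i")),
)
```

Applied to `ADMIRED`, the rule yields a sign whose only SUBCAT element is a nominative NP indexed `i`, so that subject fills the ADMIREE role.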


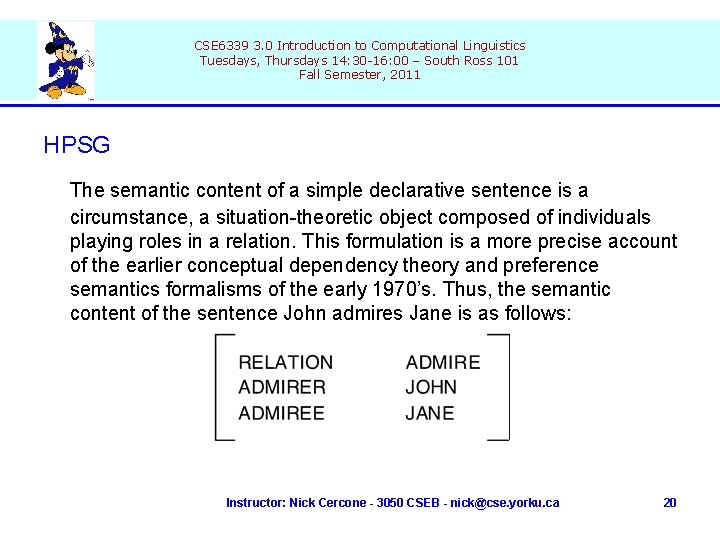

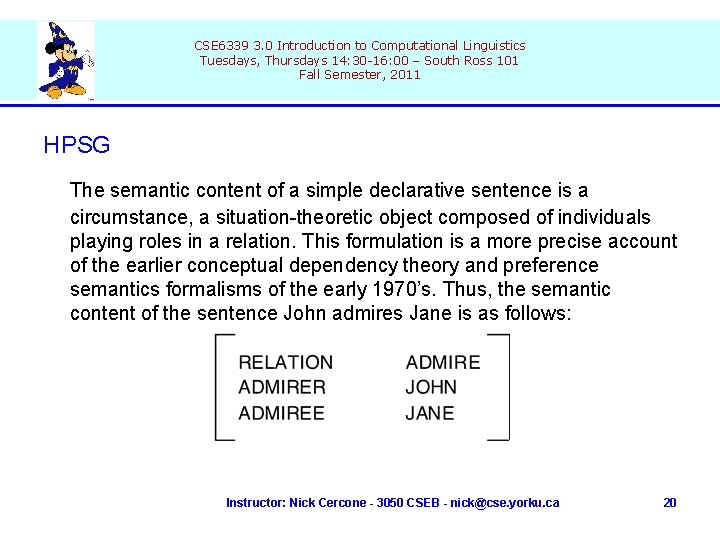

HPSG

The semantic content of a simple declarative sentence is a circumstance, a situation-theoretic object composed of individuals playing roles in a relation. This formulation is a more precise account of the earlier conceptual dependency theory and preference semantics formalisms of the early 1970s. Thus, the semantic content of the sentence John admires Jane is as follows:

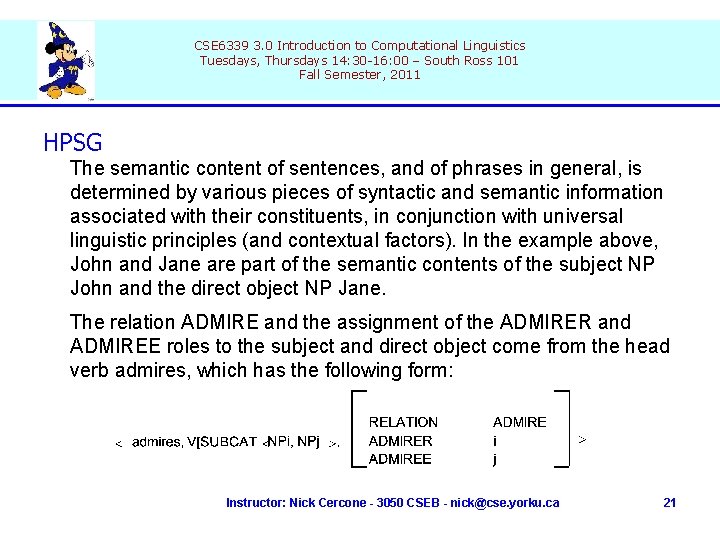

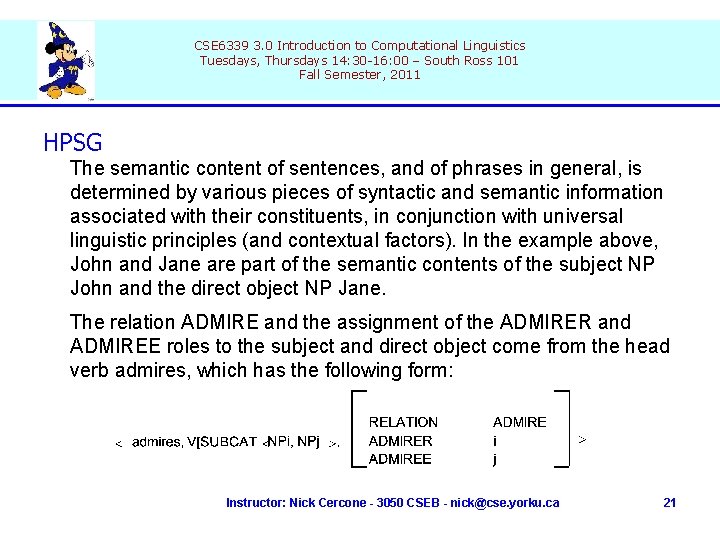

HPSG

The semantic content of sentences, and of phrases in general, is determined by various pieces of syntactic and semantic information associated with their constituents, in conjunction with universal linguistic principles (and contextual factors). In the example above, John and Jane are part of the semantic contents of the subject NP John and the direct object NP Jane. The relation ADMIRE and the assignment of the ADMIRER and ADMIREE roles to the subject and direct object come from the head verb admires, which has the following form:

HPSG

The lexical sign consists of phonological, syntactic, and semantic information. The crucial assignment of semantic roles to grammatical relations is mediated by the SUBCAT feature; i and j are variables. The specification $\mathrm{NP}_i$ calls for an NP whose variable is to be unified with the ADMIREE role filler. The Subcategorization Principle ensures that variables j and i are then unified with John and Jane, respectively. The semantic content of the whole sentence follows from an additional universal Semantic Principle, which requires that the semantic content of a phrase be unified with that of its head daughter. Whereas Montague-style semantics is determined by syntax-directed model-theoretic interpretation, in HPSG the semantic content of a sentence "falls out" of its lexical constituents by virtue of linguistic constraints requiring that pieces of information associated with signs be unified with other pieces.
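The linking described above can be sketched by substituting each complement's content for the variable on the SUBCAT element it cancels. This Python sketch assumes the entry for admires has SUBCAT $\langle \mathrm{NP}_i, \mathrm{NP}_j \rangle$ (object first, subject last, as in the liked example) with ADMIRER j and ADMIREE i; it uses plain substitution instead of full unification, and the helper `saturate` is hypothetical. The Semantic Principle appears as the phrase simply taking the head's (now instantiated) content.

```python
# Hypothetical reconstruction of the lexical sign for "admires"; only features described in the text.
ADMIRES = {
    "PHON": "admires",
    "SUBCAT": [("NP", "i"), ("NP", "j")],  # direct object NP_i first, subject NP_j last
    "CONTENT": {"RELN": "ADMIRE", "ADMIRER": "j", "ADMIREE": "i"},
}


def saturate(head: dict, complement_contents: list) -> dict:
    """Cancel SUBCAT elements in order, binding each element's variable to the complement's
    content, then give the phrase the head's content with those bindings applied."""
    subcat = list(head["SUBCAT"])
    if len(complement_contents) > len(subcat):
        raise ValueError("too many complements")
    bindings = {}
    for content in complement_contents:
        _category, variable = subcat.pop(0)
        bindings[variable] = content
    phrase_content = {feat: bindings.get(val, val) for feat, val in head["CONTENT"].items()}
    return {"SUBCAT": subcat, "CONTENT": phrase_content}
```

Combining with Jane alone gives a VP whose content already has ADMIREE filled but ADMIRER still `j`; adding John as well yields the content ADMIRE with ADMIRER JOHN and ADMIREE JANE and an empty SUBCAT list.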

HPSG

- tuple (Atom, Feat, Var, Type, Init, Rule):
  - Atom: set of atoms
  - Feat: set of features or attributes
  - Type = (T, subtype): type hierarchy
  - Init: set of initial AVMs (attribute-value matrices)
  - Rule: set of rules
- HPSG principles are defined and used to define HPSG modules
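The Type = (T, subtype) component can be illustrated with a small hierarchy in which unifying two types means finding their most general common subtype. The types in this Python sketch are a hypothetical fragment chosen for illustration, and `ancestors`, `subsumes`, and `type_unify` are illustrative names.

```python
# Hypothetical fragment of a type hierarchy: each type lists its immediate supertypes.
SUPERTYPES = {
    "top": [],
    "sign": ["top"],
    "word": ["sign"],
    "phrase": ["sign"],
    "head": ["top"],
    "noun": ["head"],
    "verb": ["head"],
}


def ancestors(t: str) -> set:
    """All supertypes of t, including t itself (reflexive-transitive closure of 'subtype')."""
    seen, stack = {t}, [t]
    while stack:
        for sup in SUPERTYPES[stack.pop()]:
            if sup not in seen:
                seen.add(sup)
                stack.append(sup)
    return seen


def subsumes(general: str, specific: str) -> bool:
    """True if 'general' is 'specific' or one of its supertypes."""
    return general in ancestors(specific)


def type_unify(t1: str, t2: str):
    """Most general type that is a subtype of both t1 and t2, or None if there is no unique one."""
    common = [t for t in SUPERTYPES if subsumes(t1, t) and subsumes(t2, t)]
    most_general = [t for t in common if not any(u != t and subsumes(u, t) for u in common)]
    return most_general[0] if len(most_general) == 1 else None
```

In this fragment `type_unify("sign", "word")` gives `"word"`, while `word` and `phrase` have no common subtype, so their unification fails.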

HPSG Mechanism

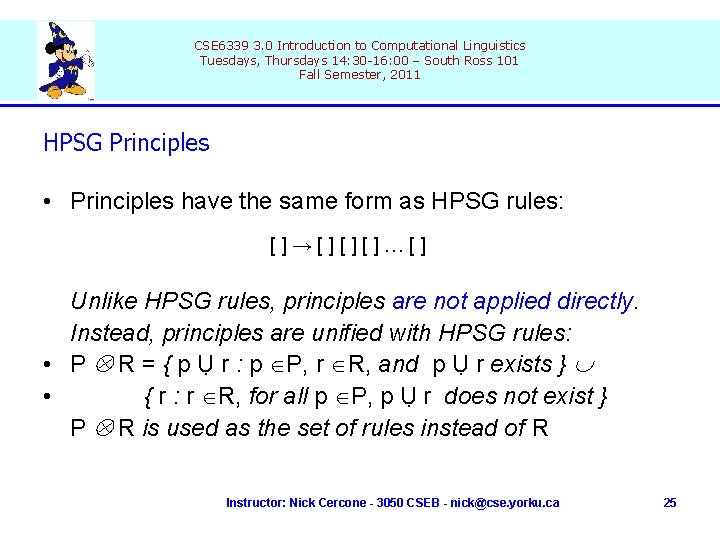

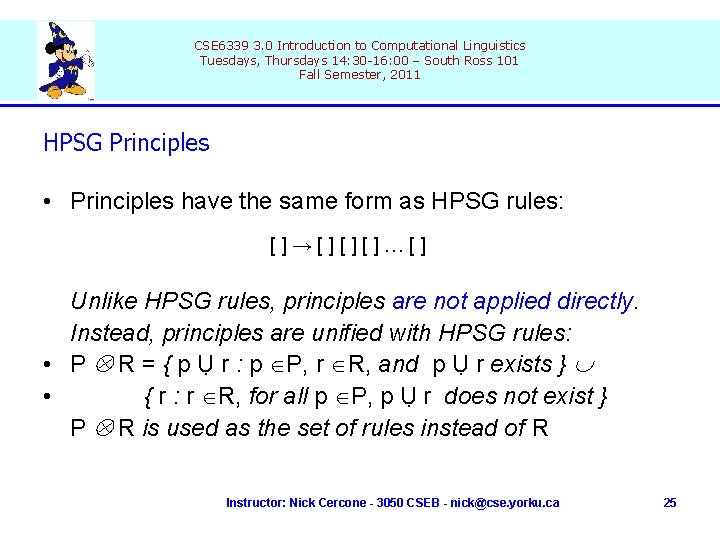

HPSG Principles

- Principles have the same form as HPSG rules: [ ] → [ ] [ ] … [ ]
- Unlike HPSG rules, principles are not applied directly. Instead, principles are unified with HPSG rules:

$$P \otimes R = \{\, p \sqcup r : p \in P,\ r \in R,\ p \sqcup r \text{ exists} \,\} \cup \{\, r : r \in R,\ \forall p \in P,\ p \sqcup r \text{ does not exist} \,\}$$

- $P \otimes R$ is used as the set of rules instead of $R$.
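The definition of $P \otimes R$ translates almost directly into code. This Python sketch assumes AVMs are nested dicts with atomic leaves and no reentrancy, that a rule or principle is a tuple (mother, daughter1, …, daughterN), and that a principle and a rule of different lengths do not unify; `unify_avm`, `unify_rule`, and `apply_principles` are hypothetical names. Without reentrancy it cannot express sharing principles such as the Head Feature Principle, so it only shows how principles are folded into rules.

```python
def unify_avm(a, b):
    """Unify two AVMs (nested dicts with atomic leaves, no reentrancy); None on clash."""
    if isinstance(a, dict) and isinstance(b, dict):
        out = dict(a)
        for feat, val in b.items():
            if feat in out:
                sub = unify_avm(out[feat], val)
                if sub is None:
                    return None
                out[feat] = sub
            else:
                out[feat] = val
        return out
    return a if a == b else None


def unify_rule(p: tuple, r: tuple):
    """Unify a principle and a rule position by position: mother with mother, daughter with daughter."""
    if len(p) != len(r):
        return None
    out = []
    for x, y in zip(p, r):
        z = unify_avm(x, y)
        if z is None:
            return None
        out.append(z)
    return tuple(out)


def apply_principles(P: list, R: list) -> list:
    """P (x) R: every successful p unified with r, plus each rule r that unifies with no principle."""
    result = []
    for r in R:
        unified = []
        for p in P:
            u = unify_rule(p, r)
            if u is not None:
                unified.append(u)
        result.extend(unified or [r])
    return result
```

For example, with one principle `({"A": 1}, {})` and rules `({"B": 2}, {})` and `({"A": 2}, {})`, the first rule is specialized to `({"A": 1, "B": 2}, {})`, while the second clashes with the principle and is therefore kept unchanged.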

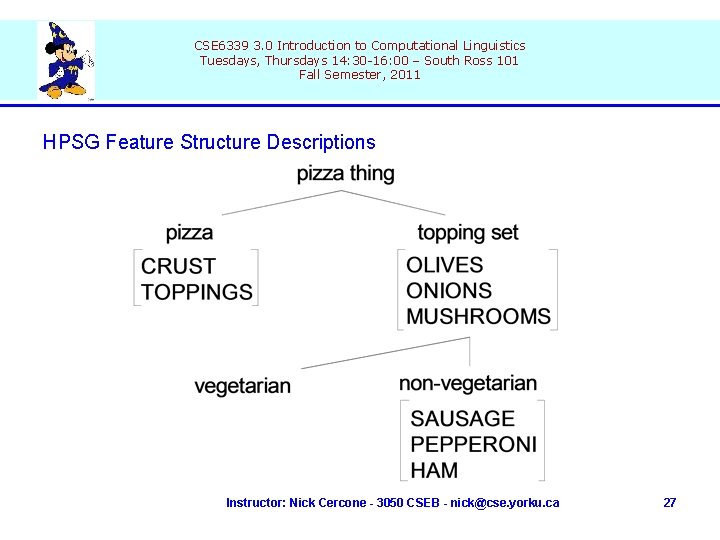

HPSG Feature Structure Descriptions

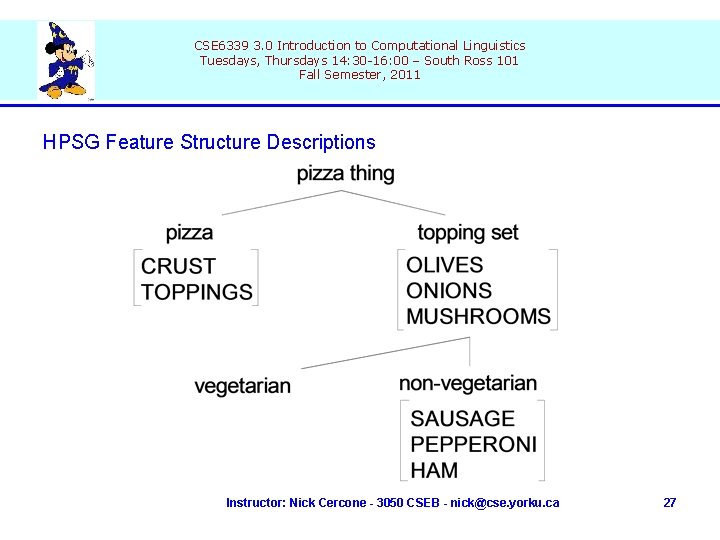

HPSG Feature Structure Descriptions

Concluding Remarks

If you know what I mean: A poet should be of the old-fashioned meaningless brand: obscure, esoteric, symbolic, -- the critics demand it; so if there's a poem of mine that you do understand I'll gladly explain what it means till you don't understand it.
