Mahashweta Das (mahashweta.das@mavs.uta.edu), CSE 6339 (Dr. Chengkai Li), Feb 9, 2010

“A Comparison of Document Clustering Techniques”, Michael Steinbach, George Karypis and Vipin Kumar (Technical Report, CSE, UMN, 2000)

Document Clustering

- Clustering: the act of grouping similar objects into sets
- Document clustering: the act of collecting similar documents into bins, where similarity is some function on a document
- Uses of document clustering
  - Browsing a large collection of documents (document organization, automatic topic extraction, fast information retrieval)
  - Organizing results returned by a search engine (efficient web search, automatic generation of a taxonomy of web documents, effective document classifiers)
  - Improving precision and recall in information retrieval systems

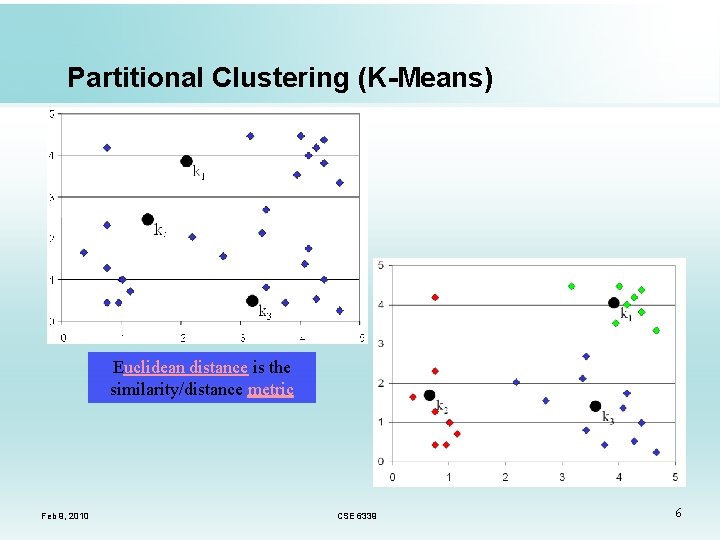

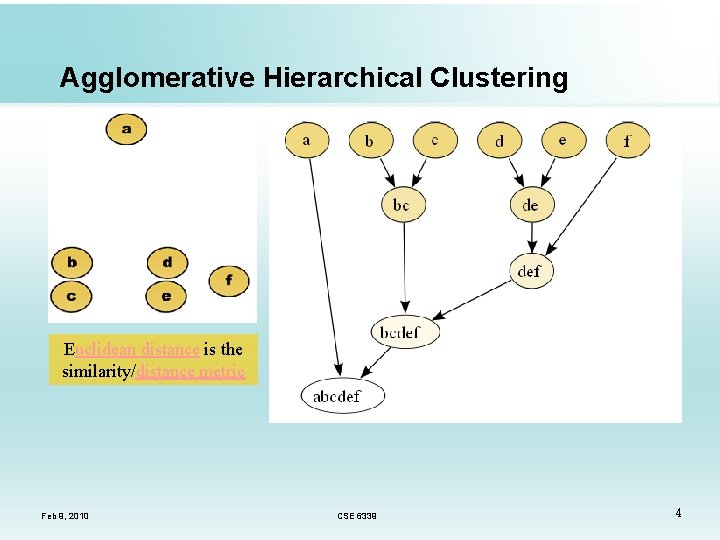

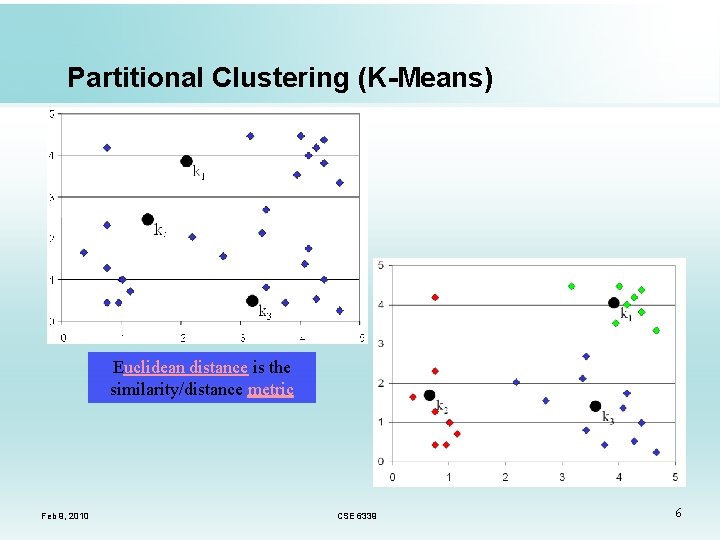

Types of Clustering

- Agglomerative hierarchical clustering
  - Begin with as many clusters as objects; the most similar clusters are successively merged until only one cluster remains
  - Superior cluster quality, but $O(n^2)$ complexity
- Partitional clustering
  - Begin with $k$ initial centroids and assign all $n$ objects to the closest centroid; recompute the centroid of each cluster and repeat until the centroids don't change
  - Efficient $O(knt)$ complexity (for $t$ iterations), but often poor cluster quality

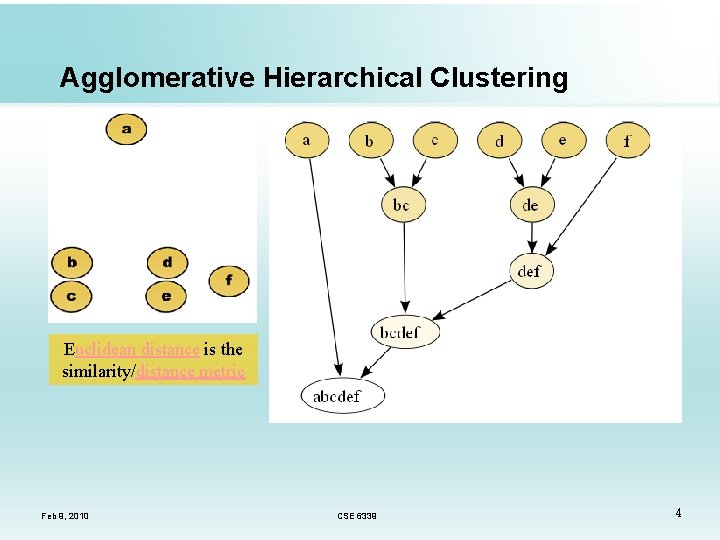

Agglomerative Hierarchical Clustering

In this illustration, Euclidean distance is the similarity/distance metric.

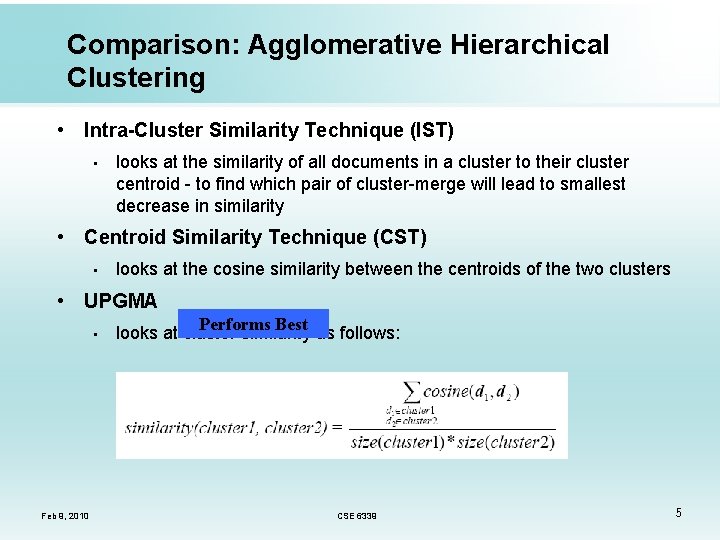

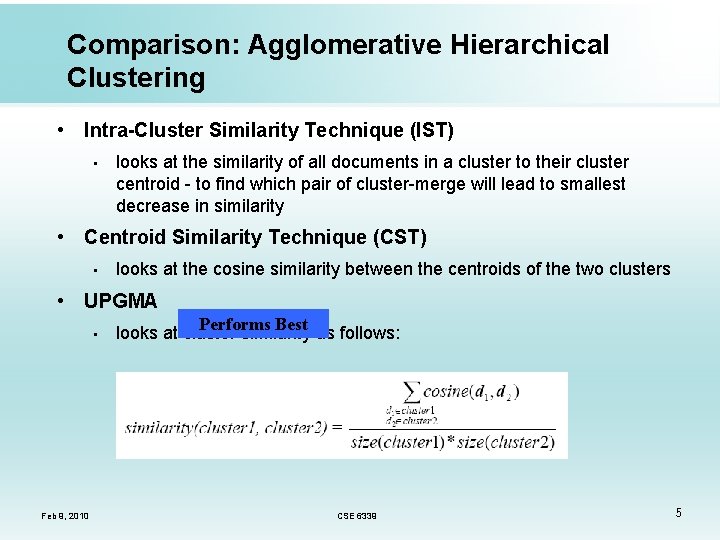

Comparison: Agglomerative Hierarchical Clustering

- Intra-Cluster Similarity Technique (IST)
  - Looks at the similarity of all documents in a cluster to their cluster centroid, to find which pair of clusters, when merged, leads to the smallest decrease in similarity
- Centroid Similarity Technique (CST)
  - Looks at the cosine similarity between the centroids of the two clusters
- UPGMA (performs best)
  - Defines cluster similarity as the average pairwise cosine similarity between the documents of the two clusters: $\text{similarity}(C_1, C_2) = \frac{1}{|C_1|\,|C_2|} \sum_{d_1 \in C_1} \sum_{d_2 \in C_2} \cos(d_1, d_2)$
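
UPGMA-style merging can be sketched as a naive agglomerative loop. This is a minimal illustration, not the report's implementation: it assumes documents are already dense term-weight vectors stored as plain Python lists, the names `cosine`, `upgma_similarity` and `upgma_cluster` are hypothetical, and it recomputes every similarity at each merge, so it is only suitable for tiny inputs.

```python
import math
from itertools import combinations


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def upgma_similarity(c1, c2, docs):
    """Average pairwise cosine similarity between the documents of two clusters.

    c1 and c2 are lists of indices into docs.
    """
    total = sum(cosine(docs[i], docs[j]) for i in c1 for j in c2)
    return total / (len(c1) * len(c2))


def upgma_cluster(docs, k):
    """Start with one cluster per document; merge the most similar pair until k remain."""
    clusters = [[i] for i in range(len(docs))]
    while len(clusters) > k:
        # combinations yields index pairs (a, b) with a < b
        a, b = max(
            combinations(range(len(clusters)), 2),
            key=lambda p: upgma_similarity(clusters[p[0]], clusters[p[1]], docs),
        )
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]  # b > a, so index a is unaffected
    return clusters
```

For example, `upgma_cluster([[1, 0, 0], [0.9, 0.1, 0], [0, 1, 0], [0, 0.9, 0.1]], 2)` puts documents 0 and 1 in one cluster and documents 2 and 3 in the other.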

Partitional Clustering (K-Means)

In this illustration, Euclidean distance is the similarity/distance metric.

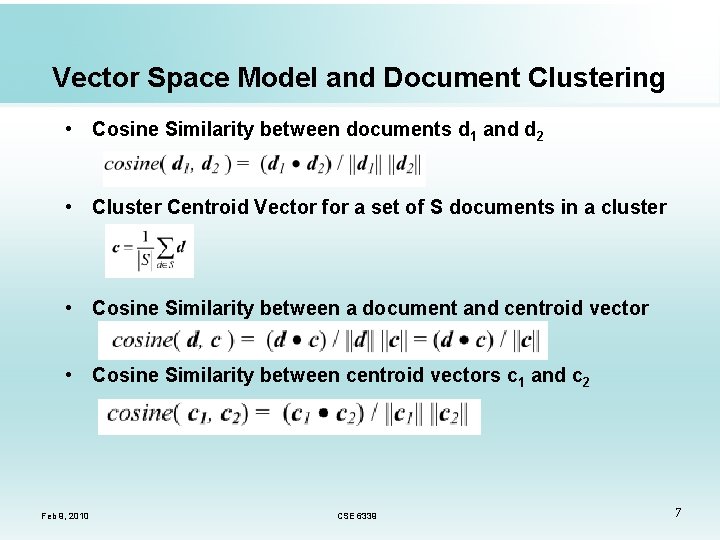

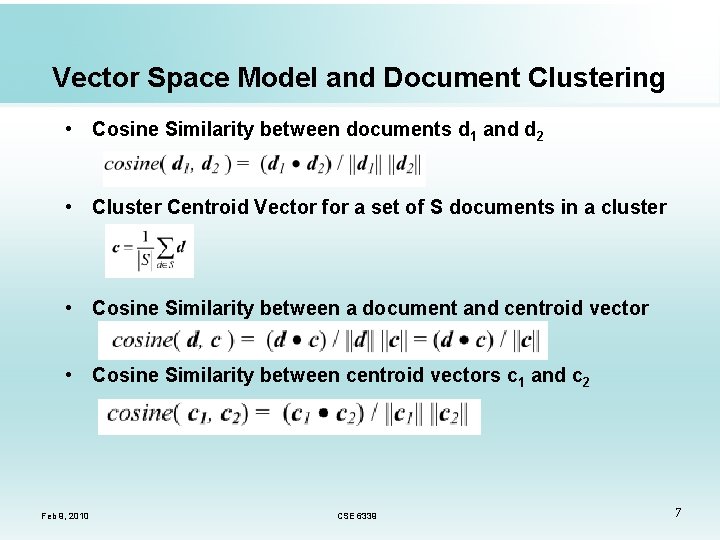
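
The basic K-Means loop (assign every object to its closest centroid, recompute centroids, stop when they no longer change) can be sketched as follows, using Euclidean distance as in this illustration. Drawing the k initial centroids at random from the data with a fixed seed is an assumption, as are the names `kmeans`, `max_iter` and `seed`. Each iteration performs k·n distance computations, which is where the O(knt) cost for t iterations comes from.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Basic K-Means with Euclidean distance (math.dist needs Python 3.8+).

    Returns (labels, centroids), where labels[i] is the cluster index of points[i].
    """
    rng = random.Random(seed)
    # Assumption: the k initial centroids are k distinct data points chosen at random.
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point goes to its closest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: recompute each centroid as the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append([sum(xs) / len(members) for xs in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # keep the old centroid of an empty cluster
        if new_centroids == centroids:  # stop when the centroids don't change
            break
        centroids = new_centroids
    return labels, centroids
```

Because the result depends on the initial centroids, different seeds can give different clusterings on harder data; this sensitivity is one source of the "often poor cluster quality" noted above.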

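Vector Space Model and Document Clustering

- Cosine similarity between documents $d_1$ and $d_2$: $\cos(d_1, d_2) = \frac{d_1 \cdot d_2}{\|d_1\|\,\|d_2\|}$
- Cluster centroid vector for a set $S$ of documents in a cluster: $c = \frac{1}{|S|} \sum_{d \in S} d$
- Cosine similarity between a document $d$ and a centroid vector $c$: $\cos(d, c) = \frac{d \cdot c}{\|d\|\,\|c\|}$
- Cosine similarity between centroid vectors $c_1$ and $c_2$: $\cos(c_1, c_2) = \frac{c_1 \cdot c_2}{\|c_1\|\,\|c_2\|}$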

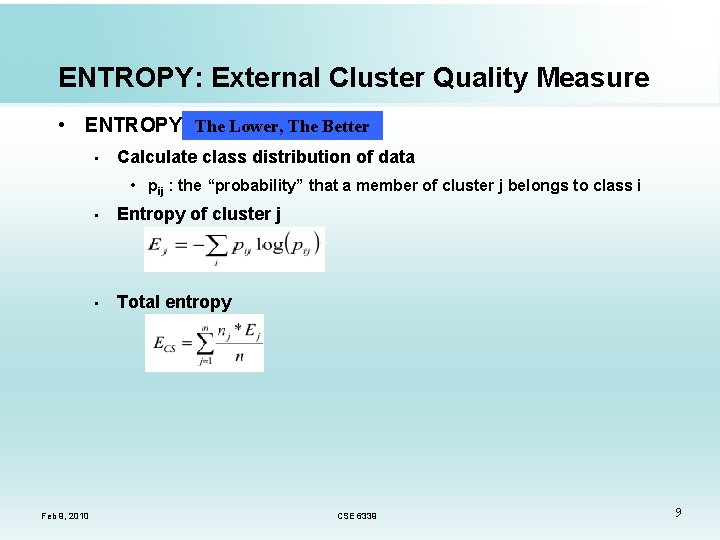

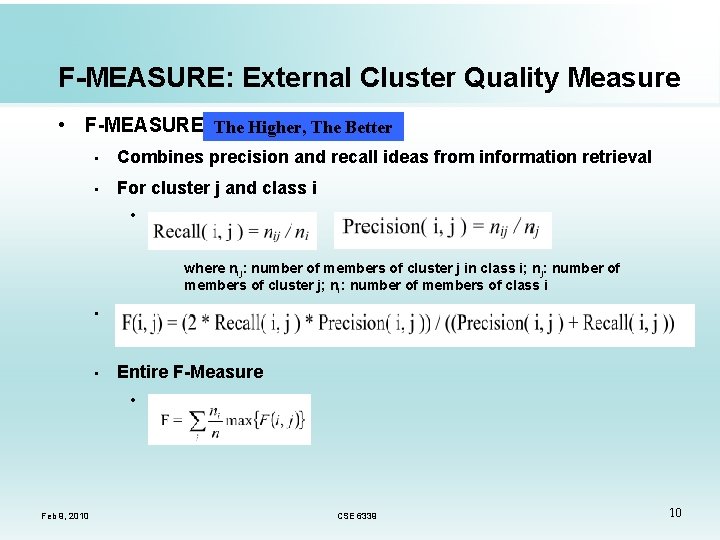
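
The vector-space quantities on this slide translate into a few small helpers. This is a sketch that assumes dense document vectors stored as plain Python lists (real term vectors are usually sparse), and the helper names are hypothetical.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    """Euclidean length ||a||."""
    return math.sqrt(dot(a, a))


def cosine(a, b):
    """cos(a, b) = (a . b) / (||a|| ||b||)."""
    return dot(a, b) / (norm(a) * norm(b))


def centroid(docs):
    """c = (1/|S|) * (sum of the document vectors in S)."""
    return [sum(xs) / len(docs) for xs in zip(*docs)]


def unit(a):
    """Scale a vector to unit length, as is common for document vectors."""
    n = norm(a)
    return [x / n for x in a]
```

A single `cosine` function covers the document/document, document/centroid and centroid/centroid cases; only the arguments differ.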

Cluster Quality Evaluation Measures

- Internal quality measure
  - Uses the cohesiveness of a cluster as a measure of cluster quality
  - OVERALL SIMILARITY (the higher, the better)
    - Based on the pairwise similarity of documents in a cluster; for a set $S$ of documents in a cluster: $\frac{1}{|S|^2} \sum_{d \in S} \sum_{d' \in S} \cos(d, d') = \|c\|^2$ (for unit-length document vectors, with $c$ the cluster centroid)
- External quality measure
  - Compares the groups produced by clustering techniques to known classes
  - ENTROPY
  - F-MEASURE

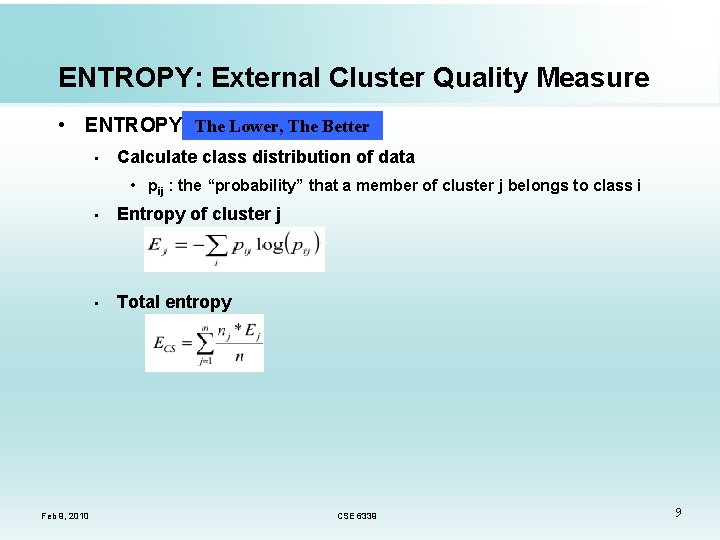
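
As a quick numeric check of overall similarity: for unit-length document vectors, the average of all pairwise cosine similarities in S (self-pairs included) equals the squared length of the cluster centroid. This sketch assumes the documents are already normalized; the function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def overall_similarity(docs):
    """(1/|S|^2) * sum of cos(d, d') over all ordered pairs in S x S, self-pairs included."""
    n = len(docs)
    return sum(cosine(d, e) for d in docs for e in docs) / n ** 2


def centroid_norm_squared(docs):
    """||c||^2 for the centroid c; equals overall_similarity when docs are unit length."""
    c = [sum(xs) / len(docs) for xs in zip(*docs)]
    return sum(x * x for x in c)
```

For the unit vectors `[[0.6, 0.8], [1, 0], [0, 1]]`, both functions give 5.8 / 9 ≈ 0.644, which is why overall similarity can be computed from the centroid alone in linear time.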

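ENTROPY: External Cluster Quality Measure

- ENTROPY: the lower, the better
- Calculate the class distribution of the data
  - $p_{ij}$: the “probability” that a member of cluster $j$ belongs to class $i$
- Entropy of cluster $j$: $E_j = -\sum_i p_{ij} \log p_{ij}$
- Total entropy: $E = \sum_{j=1}^{m} \frac{n_j}{n} E_j$, where $n_j$ is the size of cluster $j$, $m$ is the number of clusters, and $n$ is the total number of documents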

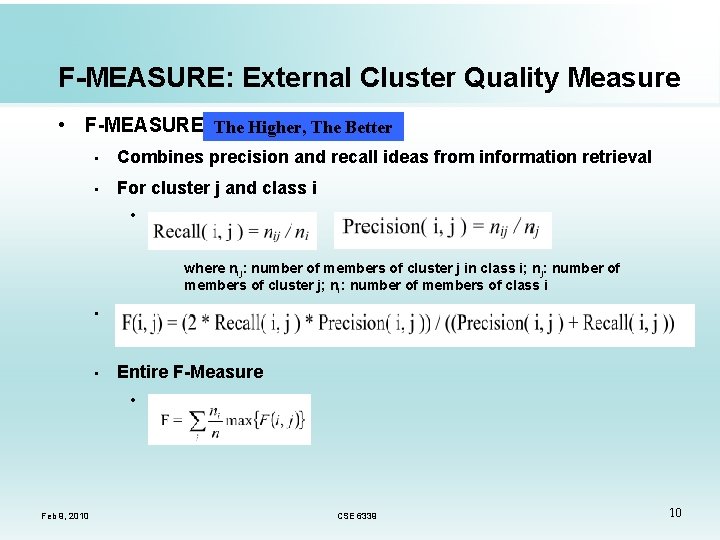
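
The entropy computation can be sketched as follows, representing each cluster by the list of true class labels of its members (a hypothetical input format). The probability p_ij is estimated as n_ij / n_j, and each cluster's entropy is weighted by its share of the documents. The base-2 logarithm is an assumption; a different base only rescales the values.

```python
import math
from collections import Counter


def cluster_entropy(labels):
    """E_j = -sum_i p_ij * log2(p_ij), with p_ij = n_ij / n_j."""
    n_j = len(labels)
    return -sum((n_ij / n_j) * math.log2(n_ij / n_j) for n_ij in Counter(labels).values())


def total_entropy(clusters):
    """E = sum_j (n_j / n) * E_j, weighting each cluster by its size."""
    n = sum(len(c) for c in clusters)
    return sum(len(c) / n * cluster_entropy(c) for c in clusters)
```

For example, a pure cluster of three "sports" documents has entropy 0, a cluster with one "politics" and one "sports" document has entropy 1, and the size-weighted total for these two clusters is 2/5 × 1 = 0.4.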

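F-MEASURE: External Cluster Quality Measure

- F-MEASURE: the higher, the better
- Combines the precision and recall ideas from information retrieval
- For cluster $j$ and class $i$: $\text{Recall}(i, j) = \frac{n_{ij}}{n_i}$, $\text{Precision}(i, j) = \frac{n_{ij}}{n_j}$, and $F(i, j) = \frac{2 \cdot \text{Precision}(i, j) \cdot \text{Recall}(i, j)}{\text{Precision}(i, j) + \text{Recall}(i, j)}$
  - where $n_{ij}$: number of members of cluster $j$ in class $i$; $n_j$: number of members of cluster $j$; $n_i$: number of members of class $i$
- Entire F-measure: $F = \sum_i \frac{n_i}{n} \max_j F(i, j)$, where $n$ is the total number of documents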

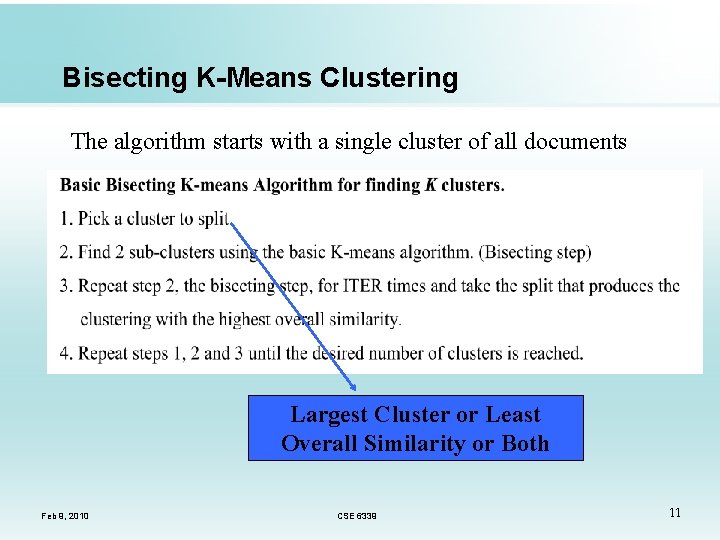

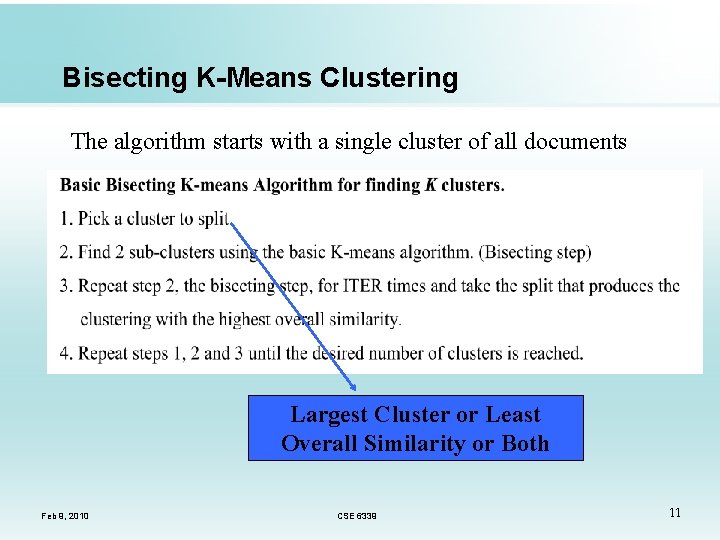
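
A sketch of the F-measure, again assuming a hypothetical input format in which each cluster is given as the list of its members' class labels. Recall is n_ij / n_i, precision is n_ij / n_j, F(i, j) is their harmonic mean, and each class contributes its best F(i, j) over all clusters, weighted by n_i / n.

```python
from collections import Counter


def f_measure(clusters):
    """Overall F = sum_i (n_i / n) * max_j F(i, j).

    `clusters` is a list of clusters, each a list of the true class labels of its members.
    """
    n = sum(len(c) for c in clusters)
    class_sizes = Counter(label for c in clusters for label in c)  # n_i for each class
    total = 0.0
    for cls, n_i in class_sizes.items():
        best = 0.0
        for c in clusters:
            n_ij = c.count(cls)  # members of cluster j that belong to class i
            if n_ij == 0:
                continue
            recall = n_ij / n_i
            precision = n_ij / len(c)
            best = max(best, 2 * precision * recall / (precision + recall))
        total += n_i / n * best
    return total
```

With clusters `[["a", "a", "a"], ["b", "a"]]`, class a matches the first cluster best (F = 6/7) and class b the second (F = 2/3), giving 4/5 · 6/7 + 1/5 · 2/3 = 86/105 ≈ 0.819.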

Bisecting K-Means Clustering

The algorithm starts with a single cluster of all documents. The cluster to split next can be chosen as the largest cluster, the cluster with the least overall similarity, or by a combination of both.
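
In the usual formulation (as we read the Steinbach et al. report), the algorithm repeatedly picks a cluster to split, bisects it with basic K-Means (k = 2), repeats that bisecting step several times keeping the split with the highest overall similarity, and stops once the desired number of clusters exists. The sketch below follows those steps under stated assumptions: documents are unit-length vectors in plain lists, the bisecting step assigns by cosine similarity, the largest cluster is always split, a split is scored by the size-weighted overall similarity of its two halves, and all names and defaults (`trials`, `seed`, `max_iter`) are hypothetical.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def centroid(docs):
    return [sum(xs) / len(docs) for xs in zip(*docs)]


def overall_similarity(docs):
    """For unit-length document vectors this equals ||centroid||^2."""
    return sum(x * x for x in centroid(docs))


def two_means(docs, rng, max_iter=50):
    """Bisecting step: basic K-Means with k = 2, assigning by cosine similarity."""
    centroids = rng.sample(docs, 2)
    halves = [list(docs), []]
    for _ in range(max_iter):
        halves = [[], []]
        for d in docs:
            # ties go to sub-cluster 0
            best = max((0, 1), key=lambda c: cosine(d, centroids[c]))
            halves[best].append(d)
        if not halves[0] or not halves[1]:
            break  # degenerate split; the caller discards it
        new_centroids = [centroid(h) for h in halves]
        if new_centroids == centroids:
            break  # converged: the centroids no longer change
        centroids = new_centroids
    return halves


def bisecting_kmeans(docs, k, trials=5, seed=0):
    rng = random.Random(seed)
    clusters = [list(docs)]  # start with a single cluster of all documents
    while len(clusters) < k:
        # assumption: always split the largest cluster (one criterion from the slide)
        i = max(range(len(clusters)), key=lambda j: len(clusters[j]))
        target = clusters[i]
        if len(target) < 2:
            break
        best = None
        for _ in range(trials):  # repeat the bisecting step, keep the best split
            a, b = two_means(target, rng)
            if not a or not b:
                continue
            # assumption: score a split by the size-weighted overall similarity
            score = len(a) * overall_similarity(a) + len(b) * overall_similarity(b)
            if best is None or score > best[0]:
                best = (score, a, b)
        if best is None:
            break  # no non-degenerate split was found
        clusters.pop(i)
        clusters.extend(best[1:])
    return clusters
```

Each bisection only touches the documents of one cluster, which is consistent with the linear-in-documents running time noted in the observations.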

Bisecting K-Means Example

(Figure: Bisecting K-Means clustering, document cluster hierarchy)

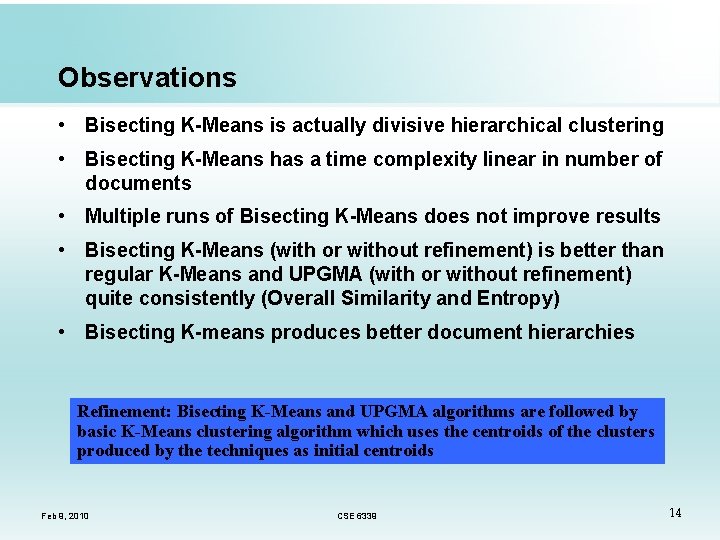

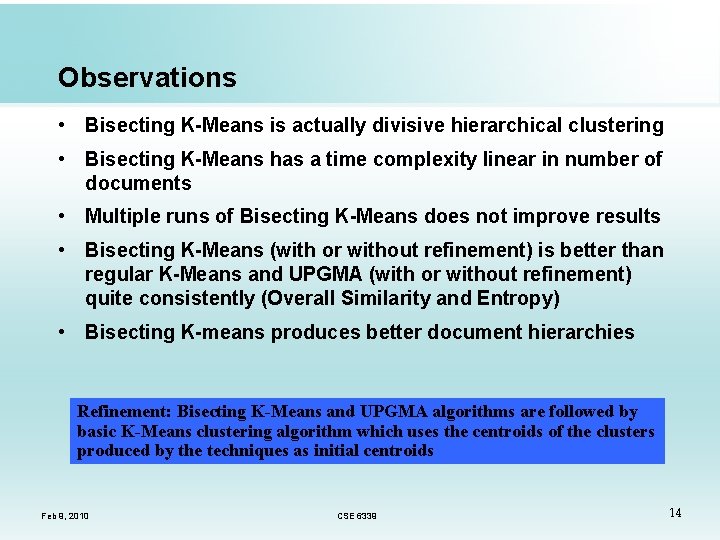

Observations

- Bisecting K-Means is actually a divisive hierarchical clustering algorithm
- Bisecting K-Means has a time complexity that is linear in the number of documents
- Multiple runs of Bisecting K-Means do not improve the results
- Bisecting K-Means (with or without refinement) quite consistently outperforms regular K-Means and UPGMA (with or without refinement) in terms of overall similarity and entropy
- Bisecting K-Means produces better document hierarchies

Refinement: the clusters produced by Bisecting K-Means and UPGMA are refined by running the basic K-Means algorithm, which uses the centroids of those clusters as its initial centroids.

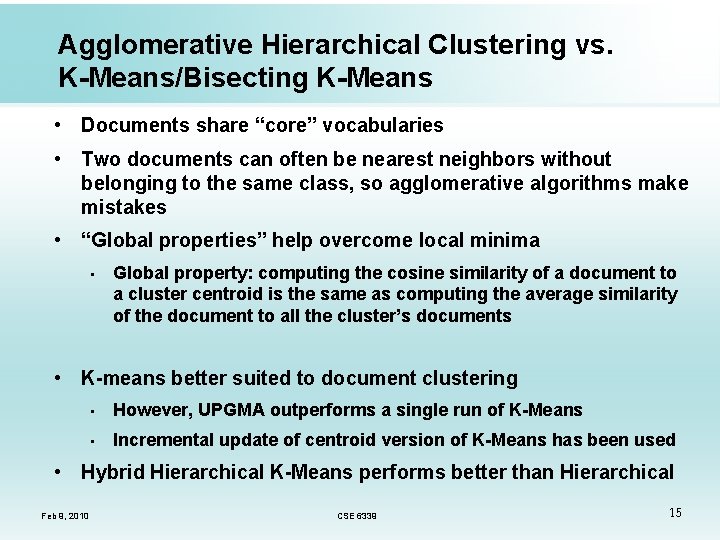

Agglomerative Hierarchical Clustering vs. K-Means/Bisecting K-Means

- Documents share “core” vocabularies
- Two documents can often be nearest neighbors without belonging to the same class, so agglomerative algorithms make mistakes
- “Global properties” help overcome local minima
- Global property: for unit-length document vectors, the dot product of a document with a cluster centroid equals the average cosine similarity of the document to all of the cluster's documents
- K-Means is better suited to document clustering
- However, UPGMA outperforms a single run of K-Means
- A version of K-Means that incrementally updates the centroids has been used
- Hybrid hierarchical K-Means performs better than hierarchical clustering alone
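
The global property can be checked numerically. Assuming unit-length document vectors, one dot product with the centroid c gives the same value as averaging the cosine similarity of d to each of the |S| documents in the cluster; the cosine to the centroid itself is that value divided by ||c||. The vectors and function names below are made up for illustration.

```python
import math


def unit(v):
    """Scale a vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def centroid_vs_average(d, cluster):
    """Return (d . c, average of cos(d, d') over d' in the cluster) for unit vectors."""
    c = [sum(xs) / len(cluster) for xs in zip(*cluster)]  # cluster centroid
    via_centroid = dot(d, c)  # one dot product
    via_pairs = sum(dot(d, e) for e in cluster) / len(cluster)  # |S| cosines, averaged
    return via_centroid, via_pairs


cluster = [unit(v) for v in ([3, 1, 0], [1, 2, 2], [0, 1, 4])]
d = unit([2, 2, 1])
via_centroid, via_pairs = centroid_vs_average(d, cluster)
```

The two values agree up to floating-point rounding because the dot product is linear: d · (1/|S|) Σ d' = (1/|S|) Σ (d · d'), and each d · d' is a cosine when all vectors have unit length.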

Bisecting K-Means vs. K-Means

- Bisecting K-Means tends to produce clusters of relatively uniform size
- Regular K-Means tends to produce clusters of widely different sizes, which affects the overall cluster quality measure
- Bisecting K-Means beats regular K-Means in the entropy measurement
- Is this explanation/intuition sufficient? What is the scope of the algorithm outside document clustering?

Thank You!

