CS 430 INFO 430 Information Retrieval Lecture 14


Usability 2

Course Administration

Evaluation
• The process of determining the worth of, or assigning a value to, the user interface on the basis of careful examination and judgment.
• Making sure that a system is usable before launching it!
• Two categories of evaluation methods:
  – Empirical evaluation: with users
  – Analytical evaluation: without users

Evaluation
But what is usability? According to ISO 9241-11, usability comprises the following aspects:
• Effectiveness – the accuracy and completeness with which users achieve specified goals
  Measures: quality of solution, error rates
• Efficiency – the relation between effectiveness and the resources expended in achieving it
  Measures: task completion time, learning time, number of clicks
• Satisfaction – the users’ comfort with, and positive attitudes towards, the use of the system
  Measures: attitude rating scales
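As an illustration of how the three aspects turn into numbers, here is a minimal sketch that summarizes test sessions with one or two measures per aspect. The `TaskAttempt` record, its field names, and the 1 to 5 rating scale are assumptions made for this example, not part of ISO 9241-11 or of any particular tool.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical record format)."""
    completed: bool     # did the user achieve the task goal?
    errors: int         # errors observed during the attempt
    seconds: float      # task completion time
    clicks: int         # number of clicks used
    satisfaction: int   # attitude rating, assumed 1 (worst) to 5 (best)


def usability_summary(attempts: list[TaskAttempt]) -> dict[str, float]:
    """Summarize at least one measure for each ISO 9241-11 aspect."""
    done = [a for a in attempts if a.completed]
    nan = float("nan")
    return {
        # Effectiveness: completeness (completion rate) and accuracy (errors)
        "completion_rate": len(done) / len(attempts),
        "errors_per_attempt": mean(a.errors for a in attempts),
        # Efficiency: resources spent, counted only on successful attempts
        "mean_seconds_completed": mean(a.seconds for a in done) if done else nan,
        "mean_clicks_completed": mean(a.clicks for a in done) if done else nan,
        # Satisfaction: average attitude rating
        "mean_satisfaction": mean(a.satisfaction for a in attempts),
    }


attempts = [
    TaskAttempt(completed=True, errors=0, seconds=80.0, clicks=6, satisfaction=4),
    TaskAttempt(completed=True, errors=1, seconds=100.0, clicks=10, satisfaction=3),
    TaskAttempt(completed=False, errors=3, seconds=180.0, clicks=25, satisfaction=2),
    TaskAttempt(completed=True, errors=0, seconds=60.0, clicks=5, satisfaction=5),
]
print(usability_summary(attempts))
```

Counting time and clicks only on completed attempts is a design choice: including abandoned attempts would mix efficiency with effectiveness.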

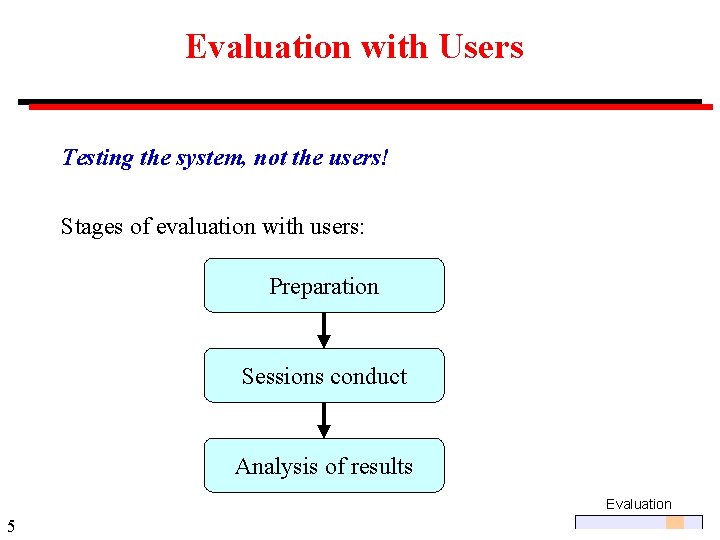

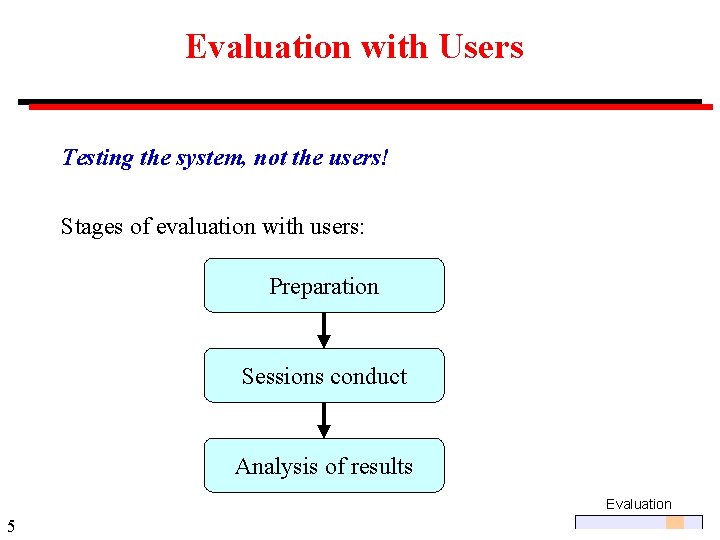

Evaluation with Users
Testing the system, not the users!
Stages of evaluation with users:
• Preparation
• Conducting sessions
• Analysis of results

Evaluation with Users: Preparation
• Determine the goals of the usability testing
  “The user can find the required information in no more than 2 minutes”
• Write the user tasks
  “Answer the question: how hot is the sun?”
• Recruit participants
  Use the descriptions of users from the requirements phase to identify potential participants

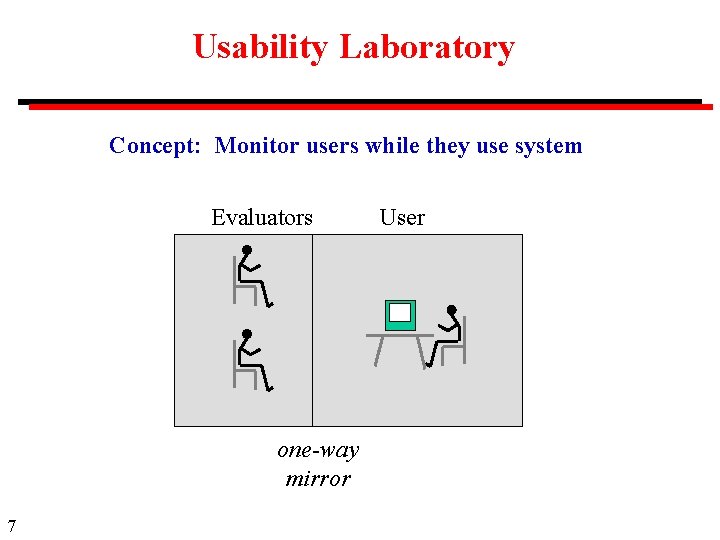

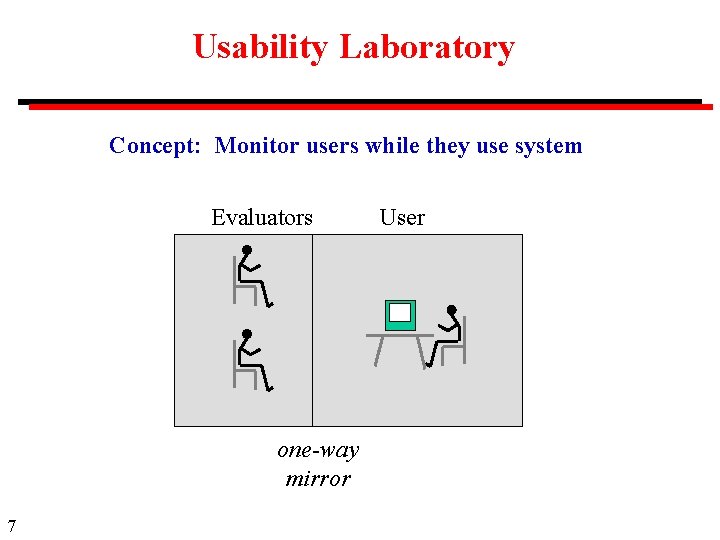

Usability Laboratory
Concept: monitor users while they use the system.
[Figure: evaluators observe the user through a one-way mirror]

Evaluation with Users: Conducting Sessions
• Conduct the session
  – Usability lab
  – Simulated working environment
• Observe the user
  – Human observer(s)
  – Video camera
  – Audio recording
• Collect satisfaction data

Evaluation with Users: Analysis of Results
• If possible, use statistical summaries
• Pay close attention to areas where users
  – were frustrated
  – took a long time
  – couldn't complete tasks
• Respect the data and users' responses; don't make excuses for designs that failed
• Note designs that worked and make sure they're incorporated in the final product
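A simple statistical summary can point the analysis at the problem areas listed above. The sketch below is hypothetical: it assumes each task's results are recorded as (completed, seconds) pairs and reuses the example goal of 2 minutes from the preparation stage. Frustration cannot be read from these numbers and still has to come from observation.

```python
from statistics import median

GOAL_SECONDS = 120  # the example goal: required information found in no more than 2 minutes


def flag_problem_tasks(
    results: dict[str, list[tuple[bool, float]]], goal_seconds: float = GOAL_SECONDS
) -> list[str]:
    """Return the tasks that need close attention, in alphabetical order.

    results maps each task to (completed, seconds) pairs, one per participant;
    for an incomplete attempt, seconds is the time at which the user gave up.
    A task is flagged if its median time exceeds the goal (users took a long
    time) or if any participant could not complete it.
    """
    flagged = []
    for task, attempts in results.items():
        median_time = median(seconds for _, seconds in attempts)
        failures = sum(1 for completed, _ in attempts if not completed)
        if median_time > goal_seconds or failures > 0:
            flagged.append(task)
    return sorted(flagged)


results = {  # hypothetical session data
    "how hot is the sun?": [(True, 45), (True, 70), (True, 95)],
    "find a court decision": [(True, 130), (False, 240), (True, 150)],
    "find the help page": [(True, 30), (False, 120), (True, 40)],
}
print(flag_problem_tasks(results))  # ['find a court decision', 'find the help page']
```

The median is used rather than the mean so that a single very slow participant does not by itself flag a task.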

Evaluation without Users
• Assessing systems using established theories and methods
Evaluation techniques:
• Heuristic Evaluation (Nielsen, 1994)
  – Evaluate the design using “rules of thumb”
• Cognitive Walkthrough (Wharton et al., 1994)
  – A formalized way of imagining people’s thoughts and actions when they use the interface for the first time
• Claims Analysis
  – Based on scenario-based analysis
  – Generating positive and negative claims about the effects of features on the user

Evaluation Example: Eye Tracking

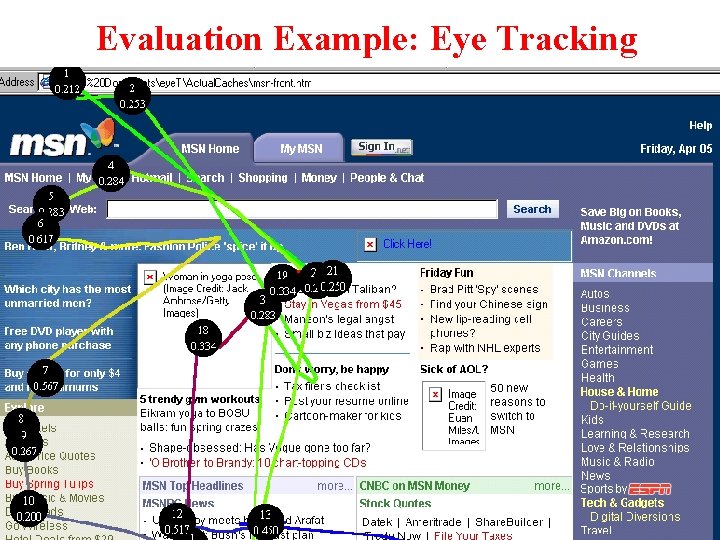

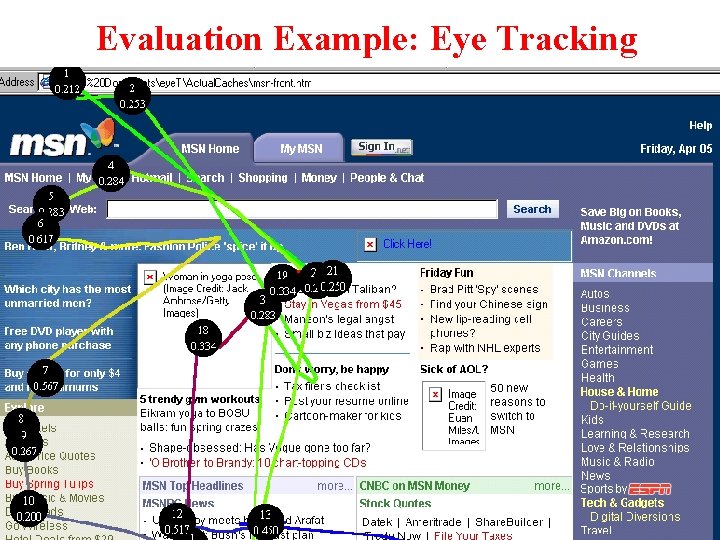

Evaluation Example: Eye Tracking (continued)

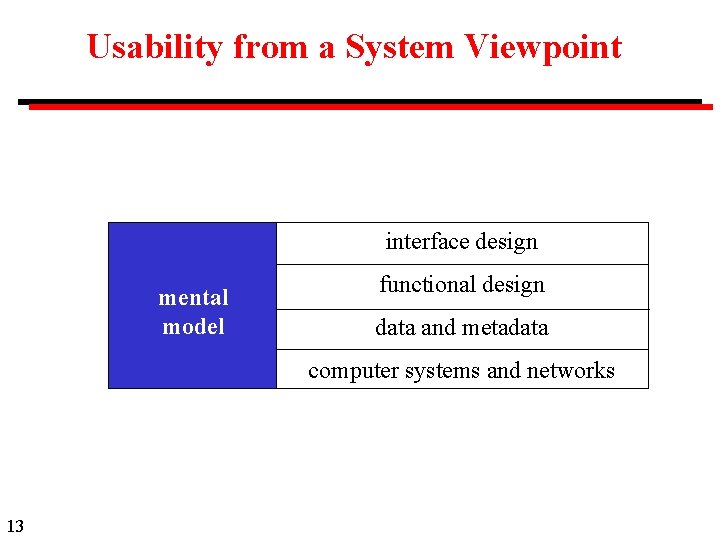

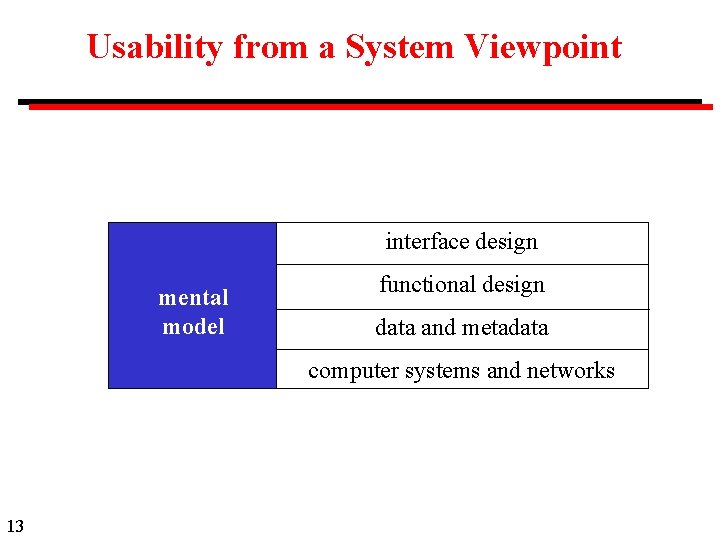

Usability from a System Viewpoint
• Mental model
• Interface design
• Functional design
• Data and metadata
• Computer systems and networks

Mental Model
The mental (conceptual) model is the user's internal model of what the system provides:
• The desktop metaphor -- files and folders
• The web model -- click on hyperlinks
• Library models -- search and retrieve; search, browse and retrieve

Interface Design
The interface design is the appearance on the screen and the actual manipulation by the user:
• Fonts, colors, logos, keyboard controls, menus, buttons
• Mouse control or keyboard control?
• Conventions (e.g., "back", "help")
Example: screen-space utilization in the Acrobat, American Memory and Mercury page turners.

Functional Design
The functional design determines the functions that are offered to the user:
• Selection of parts of a digital object
• Searching a list or sorting the results
• Help information
• Manipulation of objects on a screen
• Pan or zoom

Same functions, different interface
Example: the desktop metaphor
• Mouse -- 1 button (Macintosh), 2 buttons (Windows) or 3 buttons (Unix)
• Close button -- left of window (Macintosh), right of window (Windows)
Example: Boolean query
• Type terms and operators (and, or, ...) in a text box
• Type terms, but select operators from a structure editor
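To make "same functions, different interface" concrete for the Boolean query example, here is a minimal sketch in which two hypothetical front ends (a text box and a structure editor) build the same internal query, which a single function then evaluates against an inverted index. The tuple representation, the left-to-right operator rule, and all function names are assumptions for this illustration.

```python
from typing import Union

# A query is a term or a nested tuple (operator, left, right),
# e.g. ("or", ("and", "cat", "dog"), "fish").
Query = Union[str, tuple]
OPERATORS = ("and", "or")


def parse_text_box(text: str) -> Query:
    """Interface 1: terms and operators typed into one text box.
    Assumption: no parentheses; operators apply left to right."""
    tokens = text.lower().split()
    if len(tokens) % 2 == 0:
        raise ValueError("expected: term (operator term)*")
    query: Query = tokens[0]
    for i in range(1, len(tokens), 2):
        op, term = tokens[i], tokens[i + 1]
        if op not in OPERATORS:
            raise ValueError(f"unknown operator: {op}")
        query = (op, query, term)
    return query


def from_structure_editor(terms: list[str], operators: list[str]) -> Query:
    """Interface 2: terms typed into boxes, operators picked from a menu."""
    if not terms or len(operators) != len(terms) - 1:
        raise ValueError("need one operator between each pair of terms")
    query: Query = terms[0].lower()
    for op, term in zip(operators, terms[1:]):
        query = (op, query, term.lower())
    return query


def evaluate(query: Query, index: dict[str, set[int]]) -> set[int]:
    """The shared function: evaluate a query against an inverted index."""
    if isinstance(query, str):
        return index.get(query, set())
    op, left, right = query
    left_ids, right_ids = evaluate(left, index), evaluate(right, index)
    if op == "and":
        return left_ids & right_ids
    if op == "or":
        return left_ids | right_ids
    raise ValueError(f"unknown operator: {op}")


index = {"cat": {1, 2, 3}, "dog": {2, 3, 4}, "fish": {5}}
typed = parse_text_box("cat AND dog OR fish")
picked = from_structure_editor(["cat", "dog", "fish"], ["and", "or"])
print(typed == picked)                 # True: both build the same query
print(sorted(evaluate(typed, index)))  # [2, 3, 5]
```

The structure editor cannot produce an unknown operator, while the text box must validate and report one; that is a usability difference between the interfaces even though the function is identical.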

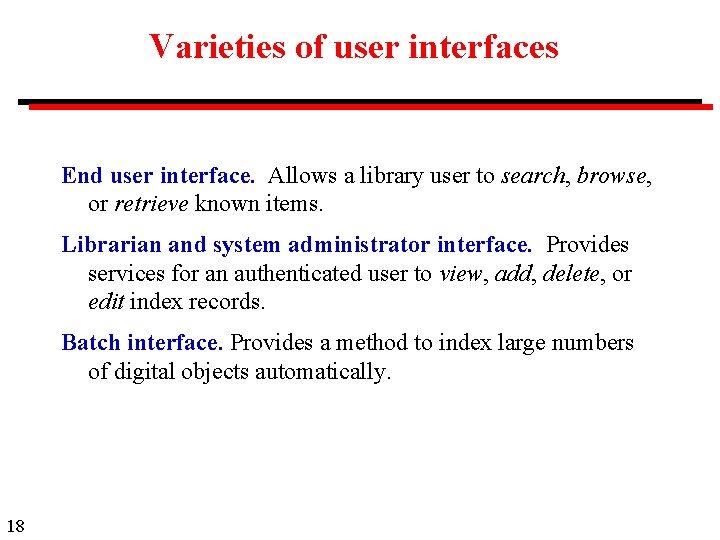

Varieties of user interfaces
• End user interface. Allows a library user to search, browse, or retrieve known items.
• Librarian and system administrator interface. Provides services for an authenticated user to view, add, delete, or edit index records.
• Batch interface. Provides a method to index large numbers of digital objects automatically.

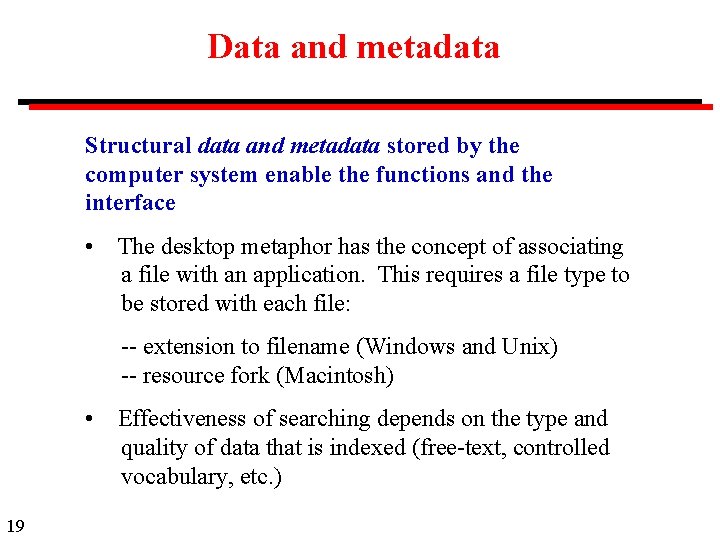

Data and metadata
Structural data and metadata stored by the computer system enable the functions and the interface:
• The desktop metaphor has the concept of associating a file with an application. This requires a file type to be stored with each file:
  -- extension to filename (Windows and Unix)
  -- resource fork (Macintosh)
• The effectiveness of searching depends on the type and quality of the data that is indexed (free text, controlled vocabulary, etc.)
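The filename-extension approach can be sketched in a few lines: the function "open with the right application" works only because a file type is stored with the file. The registry below and its application names are hypothetical, and the Macintosh resource-fork approach is not modeled.

```python
import os
from typing import Optional

# Hypothetical registry: file type (stored as the filename extension) -> application.
APPLICATIONS = {
    ".txt": "Text Editor",
    ".html": "Web Browser",
    ".pdf": "PDF Viewer",
}


def application_for(filename: str) -> Optional[str]:
    """Find the application associated with a file via its stored type."""
    _, extension = os.path.splitext(filename)
    return APPLICATIONS.get(extension.lower())  # None if the type is unknown or missing


print(application_for("lecture14.PDF"))  # PDF Viewer
print(application_for("README"))         # None
```

A file without an extension has no stored type, so the interface has no way to offer the association.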

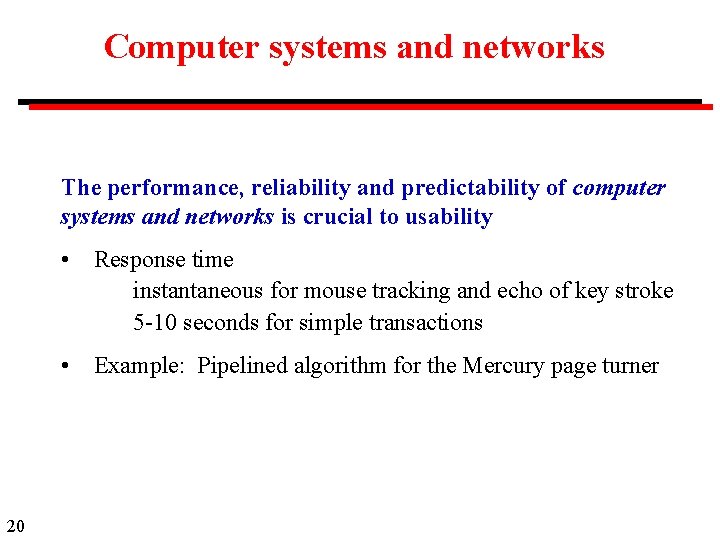

Computer systems and networks
The performance, reliability and predictability of computer systems and networks are crucial to usability.
• Response time
  – instantaneous for mouse tracking and echo of keystrokes
  – 5-10 seconds for simple transactions
• Example: pipelined algorithm for the Mercury page turner
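The idea behind pipelining in a page turner is to overlap network transfer with the user's reading time. The sketch below shows generic prefetching under that assumption; it is not the actual Mercury algorithm, and `fetch_page` is a hypothetical stand-in that simulates a network delay.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor


def fetch_page(n: int) -> str:
    """Hypothetical stand-in for retrieving page n over the network."""
    time.sleep(0.05)  # simulated network delay
    return f"page-{n}"


class PrefetchingPageTurner:
    """While the user reads page n, page n + 1 is already being fetched."""

    def __init__(self, num_pages: int):
        self.num_pages = num_pages
        self.pool = ThreadPoolExecutor(max_workers=2)
        self.requested: dict[int, Future] = {}  # page number -> pending or finished fetch

    def _request(self, n: int) -> None:
        """Start fetching page n in the background unless already requested."""
        if 1 <= n <= self.num_pages and n not in self.requested:
            self.requested[n] = self.pool.submit(fetch_page, n)

    def show(self, n: int) -> str:
        if not 1 <= n <= self.num_pages:
            raise IndexError(f"no page {n}")
        self._request(n)      # no-op if page n was prefetched
        self._request(n + 1)  # overlap the next fetch with the user's reading time
        return self.requested[n].result()  # waits only if page n is not ready yet

    def close(self) -> None:
        self.pool.shutdown()


turner = PrefetchingPageTurner(num_pages=3)
print(turner.show(1))  # waits for page 1 while page 2 is fetched in parallel
time.sleep(0.1)        # the user reads page 1; page 2 arrives meanwhile
start = time.perf_counter()
print(turner.show(2))  # already fetched, so no network wait
print(f"{time.perf_counter() - start:.3f} s")
turner.close()
```

Finished fetches stay in `requested`, so turning back a page is also instant; a real viewer would bound this cache.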

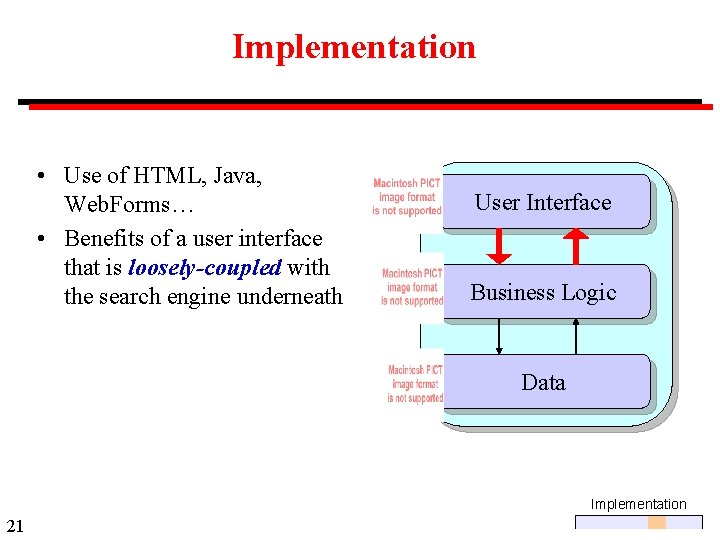

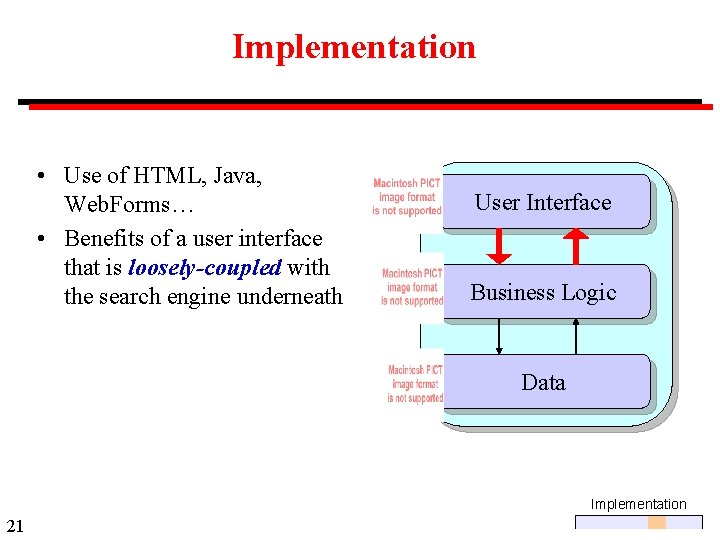

Implementation
• Use of HTML, Java, Web Forms…
• Benefits of a user interface that is loosely coupled with the search engine underneath
[Figure: User Interface / Business Logic / Data layers]
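One way to get the loose coupling described above is to let the user-interface layer depend only on a small interface to the search engine. In this sketch the `SearchEngine` protocol, the substring-matching engine, and the sample titles are all hypothetical; any engine with the same `search` method could be swapped in without touching `render_results`.

```python
from typing import Protocol


class SearchEngine(Protocol):
    """All that the user-interface layer knows about the engine underneath."""

    def search(self, query: str, max_hits: int) -> list[str]: ...


class TitleSubstringEngine:
    """Hypothetical engine: case-insensitive substring match over titles."""

    def __init__(self, titles: list[str]):
        self.titles = titles

    def search(self, query: str, max_hits: int) -> list[str]:
        q = query.lower()
        return [t for t in self.titles if q in t.lower()][:max_hits]


def render_results(engine: SearchEngine, query: str, max_hits: int = 10) -> str:
    """User-interface layer: formats hits without knowing how they were found."""
    hits = engine.search(query, max_hits)
    if not hits:
        return f'No results for "{query}"'
    return "\n".join(f"{rank}. {title}" for rank, title in enumerate(hits, start=1))


engine = TitleSubstringEngine(
    ["Brown v. Board of Education", "Marbury v. Madison", "School board meeting notes"]
)
print(render_results(engine, "board"))
```

Because `Protocol` uses structural typing, the engine does not need to inherit from anything; it only has to provide a compatible `search` method.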

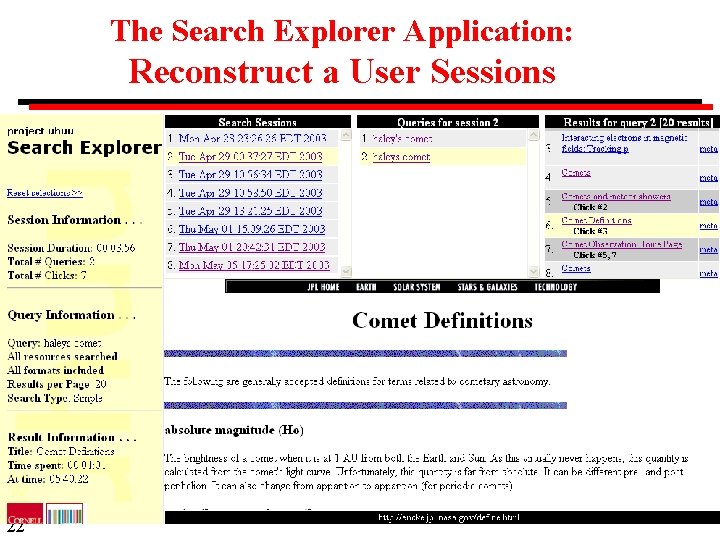

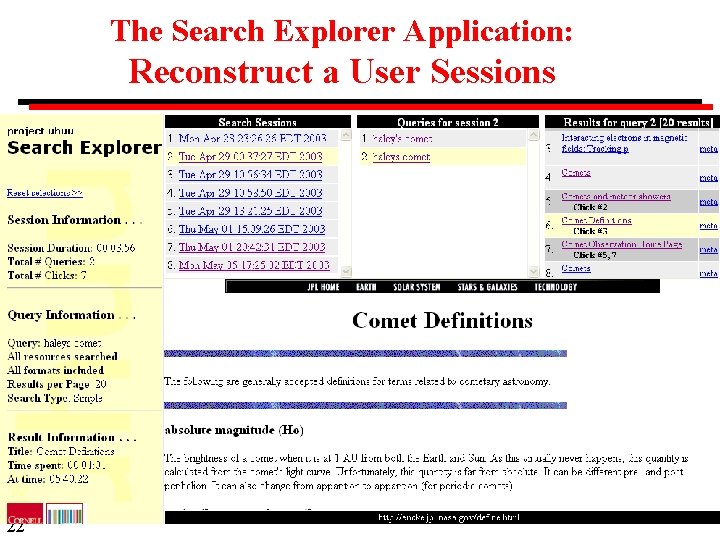

The Search Explorer Application: Reconstructing User Sessions

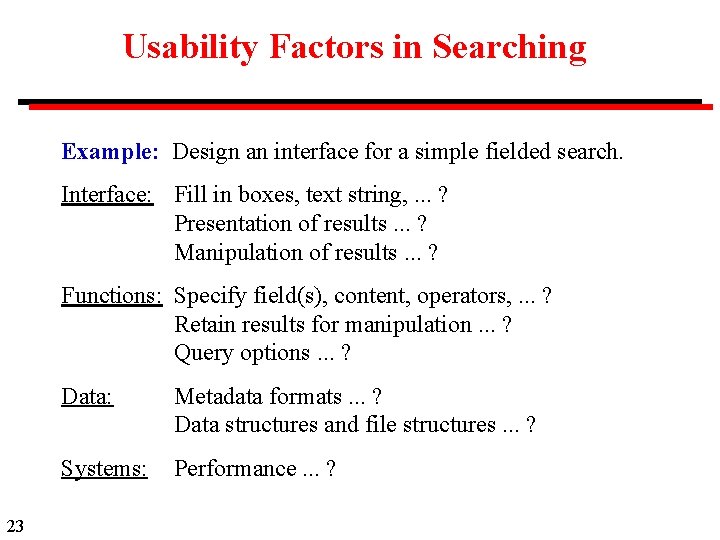

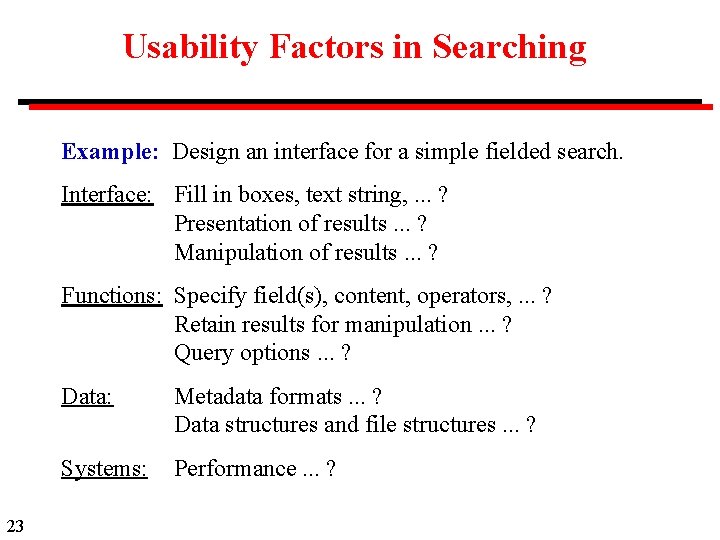

Usability Factors in Searching
Example: Design an interface for a simple fielded search.
Interface: Fill in boxes, text string, ...? Presentation of results...? Manipulation of results...?
Functions: Specify field(s), content, operators, ...? Retain results for manipulation...? Query options...?
Data: Metadata formats...? Data structures and file structures...?
Systems: Performance...?
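As one possible answer to the "Functions" question, here is a minimal sketch of a fielded search: the user specifies fields, content and an operator, and the system matches metadata records. The record format, the case-insensitive substring matching, and the sample records are assumptions; a real system would search an index rather than scan records.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldCondition:
    field: str  # which field to search, e.g. "title" or "date"
    value: str  # text the field must contain (case-insensitive)


def fielded_search(
    records: list[dict[str, str]], conditions: list[FieldCondition], operator: str = "and"
) -> list[dict[str, str]]:
    """Return the records that satisfy all ("and") or any ("or") of the conditions."""
    if operator not in ("and", "or"):
        raise ValueError(f"unknown operator: {operator}")
    combine = all if operator == "and" else any

    def matches(record: dict[str, str], cond: FieldCondition) -> bool:
        return cond.value.lower() in record.get(cond.field, "").lower()

    return [r for r in records if combine(matches(r, c) for c in conditions)]


records = [  # hypothetical metadata records
    {"title": "Brown v. Board of Education", "date": "1954"},
    {"title": "Brown family letters", "date": "1921"},
    {"title": "Kansas school census", "date": "1954"},
]
query = [FieldCondition("title", "brown"), FieldCondition("date", "1954")]
print([r["title"] for r in fielded_search(records, query)])  # ['Brown v. Board of Education']
print(len(fielded_search(records, query, operator="or")))    # 3
```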

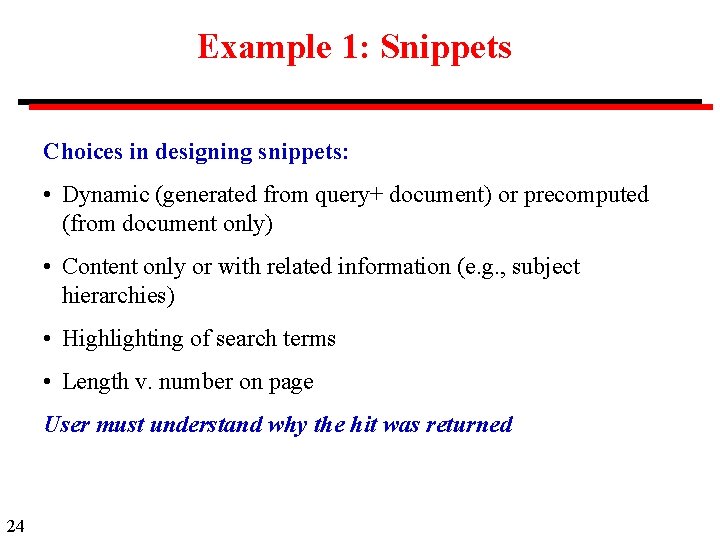

Example 1: Snippets
Choices in designing snippets:
• Dynamic (generated from query + document) or pre-computed (from document only)
• Content only or with related information (e.g., subject hierarchies)
• Highlighting of search terms
• Length vs. number on page
The user must understand why the hit was returned.
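The difference between pre-computed and dynamic snippets can be sketched directly. Below, a pre-computed snippet is simply the first words of the document (an assumption; real systems may use an abstract or a stored description), while a dynamic snippet is a window of words around the first query term, with matching terms highlighted. The window size and the `**term**` highlight markup are arbitrary choices for this example.

```python
import re


def _normalize(word: str) -> str:
    """Lower-case a word and strip punctuation so 'Topeka.' matches 'topeka'."""
    return re.sub(r"\W", "", word).lower()


def precomputed_snippet(document: str, max_words: int = 20) -> str:
    """Computed once per document, with no knowledge of the query: its first words."""
    words = document.split()
    snippet = " ".join(words[:max_words])
    return snippet + (" ..." if len(words) > max_words else "")


def dynamic_snippet(document: str, query: str, window: int = 4) -> str:
    """Computed for each query: the words around the first matching query term,
    with every matching term highlighted as **term**."""
    words = document.split()
    terms = {t for t in (_normalize(q) for q in query.split()) if t}
    first = next((i for i, w in enumerate(words) if _normalize(w) in terms), None)
    if first is None:
        return precomputed_snippet(document)  # no query term in the text
    start, end = max(0, first - window), min(len(words), first + window + 1)
    shown = [f"**{w}**" if _normalize(w) in terms else w for w in words[start:end]]
    return ("... " if start > 0 else "") + " ".join(shown) + (" ..." if end < len(words) else "")


doc = ("Case Information. Brown v. Board of Education of Topeka. Appeal from the "
       "United States District Court for the District of Kansas. Syllabus.")
print(precomputed_snippet(doc, max_words=5))    # Case Information. Brown v. Board ...
print(dynamic_snippet(doc, "topeka kansas"))
# ... Board of Education of **Topeka.** Appeal from the United ...
```

The dynamic version shows the user why the hit was returned, at the cost of extra work for every query; the pre-computed version can be stored with the record.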

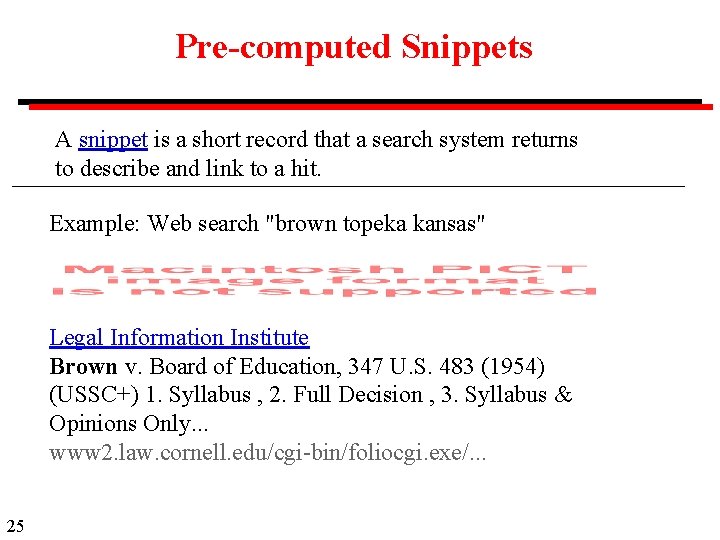

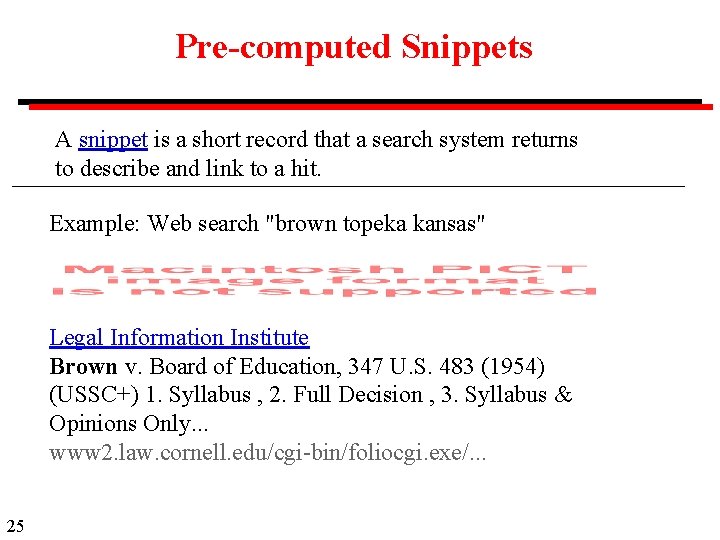

Pre-computed Snippets
A snippet is a short record that a search system returns to describe and link to a hit.
Example: Web search "brown topeka kansas"
    Legal Information Institute
    Brown v. Board of Education, 347 U.S. 483 (1954) (USSC+)
    1. Syllabus, 2. Full Decision, 3. Syllabus & Opinions Only...
    www2.law.cornell.edu/cgi-bin/foliocgi.exe/...

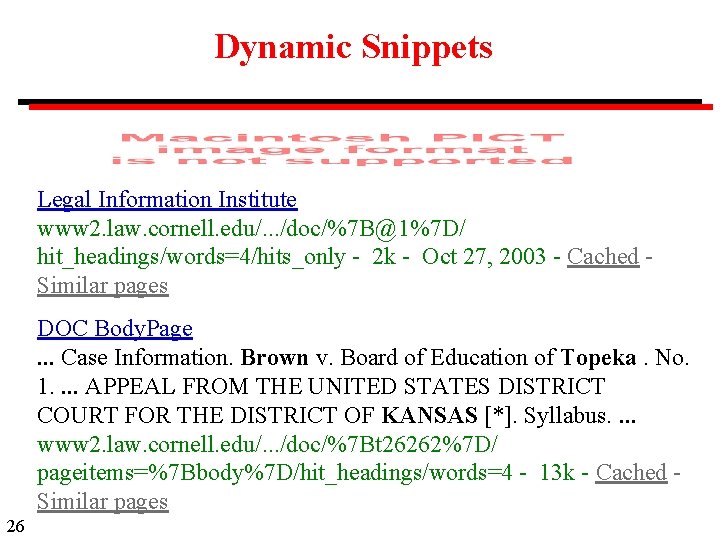

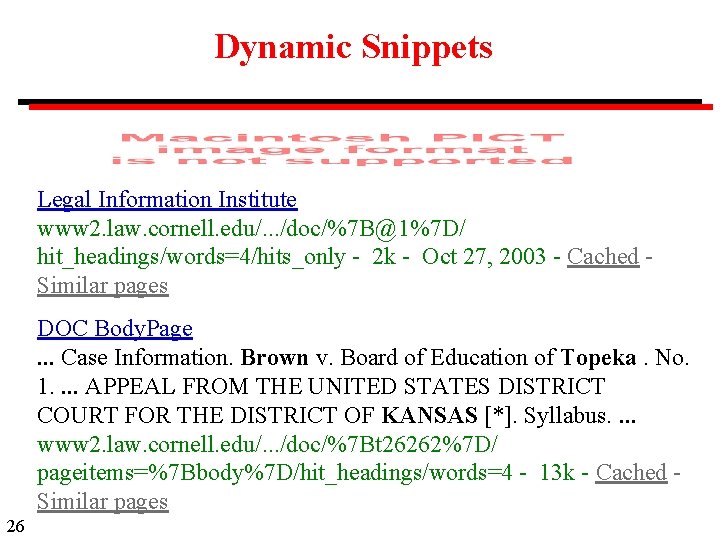

Dynamic Snippets
    Legal Information Institute
    www2.law.cornell.edu/.../doc/%7B@1%7D/hit_headings/words=4/hits_only - 2k - Oct 27, 2003 - Cached - Similar pages
    DOC Body.Page...
    Case Information. Brown v. Board of Education of Topeka. No. 1.. APPEAL FROM THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF KANSAS [*]. Syllabus..
    www2.law.cornell.edu/.../doc/%7Bt26262%7D/pageitems=%7Bbody%7D/hit_headings/words=4 - 13k - Cached - Similar pages

Pre-computed Snippets

Dynamic Snippets with Pre-computed Summary

Dynamic Snippets with Pre-computed Summary
Pre-computed summary, with space for a dynamic snippet

Dynamic Snippets with Pre-computed Summary
Complete record with dynamic snippet

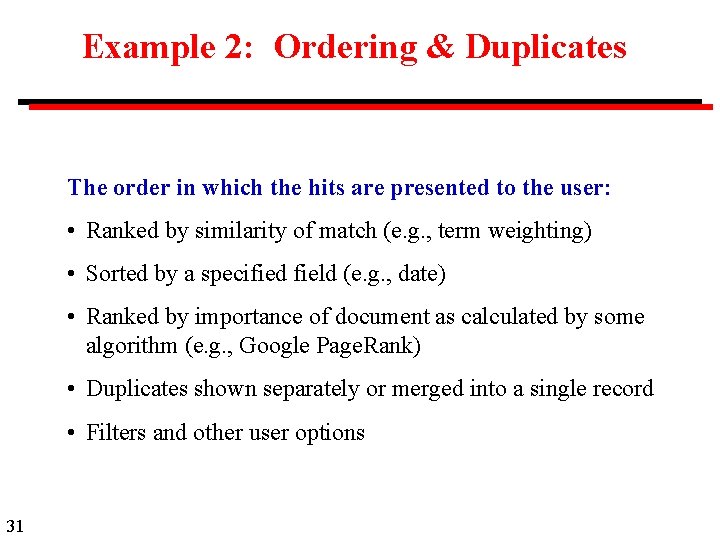

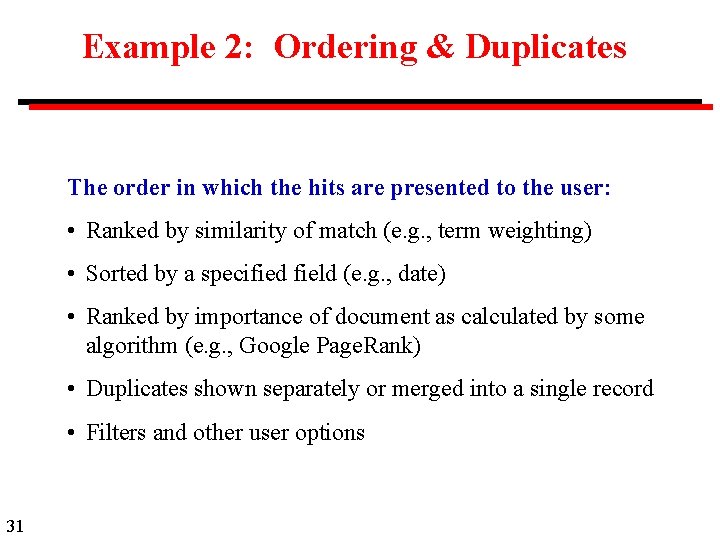

Example 2: Ordering & Duplicates
The order in which the hits are presented to the user:
• Ranked by similarity of match (e.g., term weighting)
• Sorted by a specified field (e.g., date)
• Ranked by importance of document as calculated by some algorithm (e.g., Google PageRank)
• Duplicates shown separately or merged into a single record
• Filters and other user options
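Two of these choices, the ordering and the handling of duplicates, can be sketched as follows. The `Hit` record, the URL-normalization rule used to detect duplicates, and the rule "keep the highest-scoring copy" are assumptions for this example; importance ranking such as PageRank is not shown.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    url: str
    title: str
    score: float  # similarity of match, e.g. from term weighting
    date: str     # ISO format "YYYY-MM-DD", so string order is date order


def normalize_url(url: str) -> str:
    """Hypothetical duplicate key: ignore scheme, a leading 'www.', and a trailing slash."""
    u = url.lower()
    for prefix in ("https://", "http://"):
        if u.startswith(prefix):
            u = u[len(prefix):]
    if u.startswith("www."):
        u = u[4:]
    return u.rstrip("/")


def merge_duplicates(hits: list[Hit]) -> list[Hit]:
    """Merge duplicates into one record: keep the highest-scoring copy of each."""
    best: dict[str, Hit] = {}
    for h in hits:
        key = normalize_url(h.url)
        if key not in best or h.score > best[key].score:
            best[key] = h
    return list(best.values())


def order_hits(hits: list[Hit], by: str = "score") -> list[Hit]:
    """Rank by similarity of match, or sort by a specified field (date, newest first)."""
    if by == "score":
        return sorted(hits, key=lambda h: h.score, reverse=True)
    if by == "date":
        return sorted(hits, key=lambda h: h.date, reverse=True)
    raise ValueError(f"unknown ordering: {by}")


hits = [  # hypothetical result list
    Hit("http://www.example.org/a", "A", 0.9, "2003-10-27"),
    Hit("https://example.org/a/", "A (mirror)", 0.7, "2003-11-01"),
    Hit("http://example.org/b", "B", 0.8, "2004-01-15"),
]
merged = merge_duplicates(hits)
print([h.title for h in order_hits(merged)])             # ['A', 'B']
print([h.title for h in order_hits(merged, by="date")])  # ['B', 'A']
```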

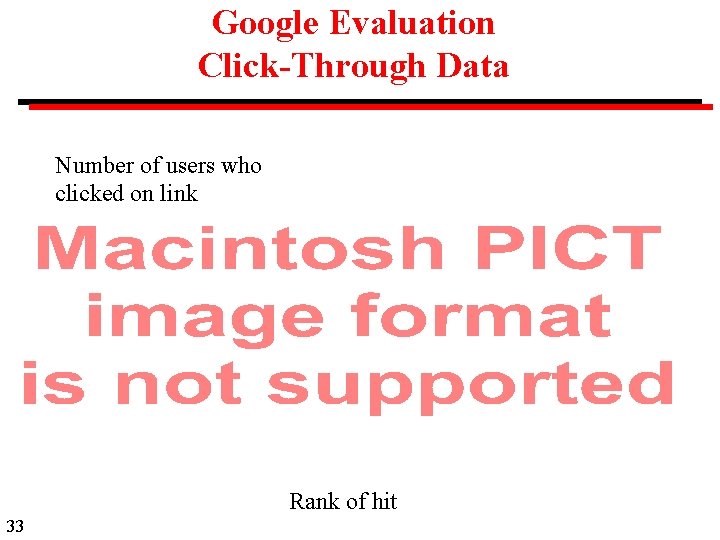

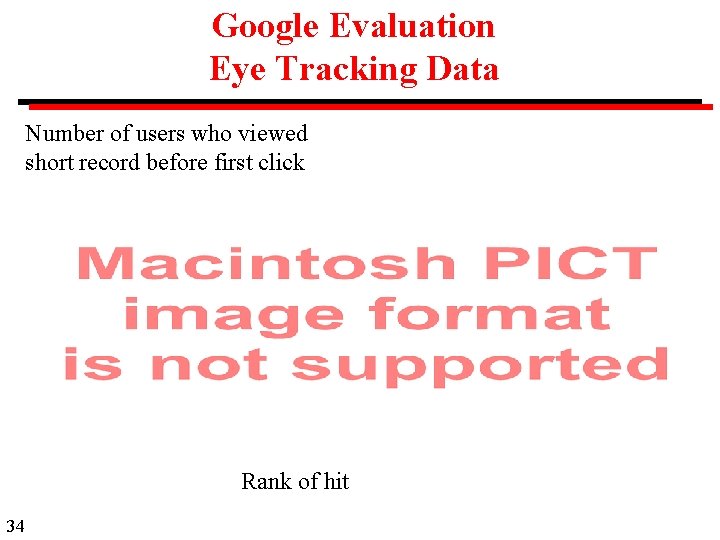

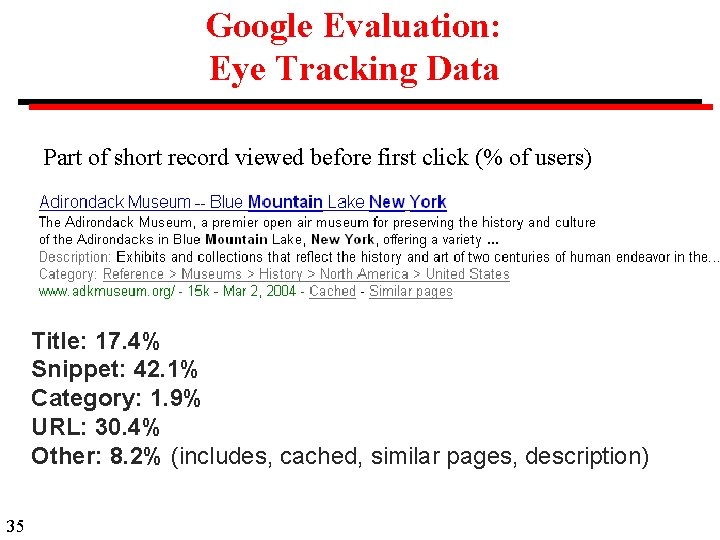

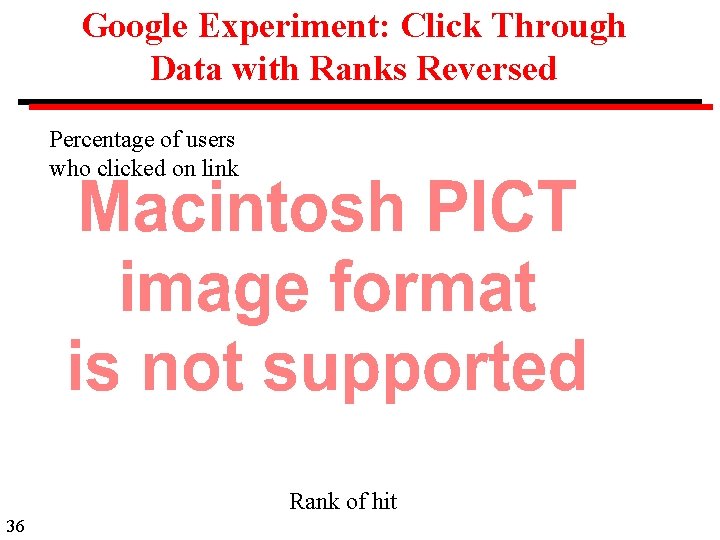

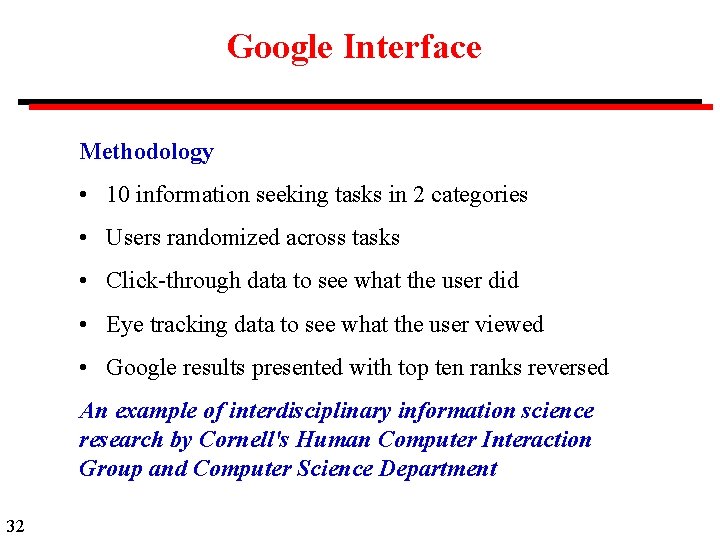

Google Interface Methodology
• 10 information-seeking tasks in 2 categories
• Users randomized across tasks
• Click-through data to see what the user did
• Eye-tracking data to see what the user viewed
• Google results presented with top ten ranks reversed
An example of interdisciplinary information science research by Cornell's Human Computer Interaction Group and Computer Science Department
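The mechanics of such a study can be sketched as three small steps: randomize the task order per user, present the top ten results either normally or reversed, and tally click-through by displayed rank. This is a hypothetical illustration; the slide does not say how randomization was done or how users were assigned to the reversed condition, and the function names are invented.

```python
import random
from collections import Counter


def task_order(tasks: list[str], user_id: str, seed: int = 0) -> list[str]:
    """Randomize the order of tasks per user (reproducible for a given seed)."""
    rng = random.Random(f"{seed}:{user_id}")
    return rng.sample(tasks, k=len(tasks))


def presented_results(results: list[str], reversed_condition: bool) -> list[str]:
    """What the user sees: in the reversed condition, the top ten in reverse order."""
    top, rest = results[:10], results[10:]
    return (top[::-1] if reversed_condition else top) + rest


def users_clicking_by_rank(click_log: list[tuple[str, int]]) -> Counter:
    """click_log holds (user, displayed rank) pairs; count each user once per rank."""
    return Counter(rank for _, rank in set(click_log))


results = [f"doc{i}" for i in range(1, 13)]
print(presented_results(results, reversed_condition=True)[:3])  # ['doc10', 'doc9', 'doc8']
log = [("u1", 1), ("u1", 1), ("u2", 1), ("u2", 3)]
print(users_clicking_by_rank(log))  # Counter({1: 2, 3: 1})
print(task_order(["task1", "task2", "task3"], user_id="u1"))
```

Comparing the click counts per rank between the normal and reversed conditions separates the effect of position from the effect of relevance.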

Google Evaluation: Click-Through Data
[Chart: number of users who clicked on link, by rank of hit]

Google Evaluation: Eye-Tracking Data
[Chart: number of users who viewed short record before first click, by rank of hit]

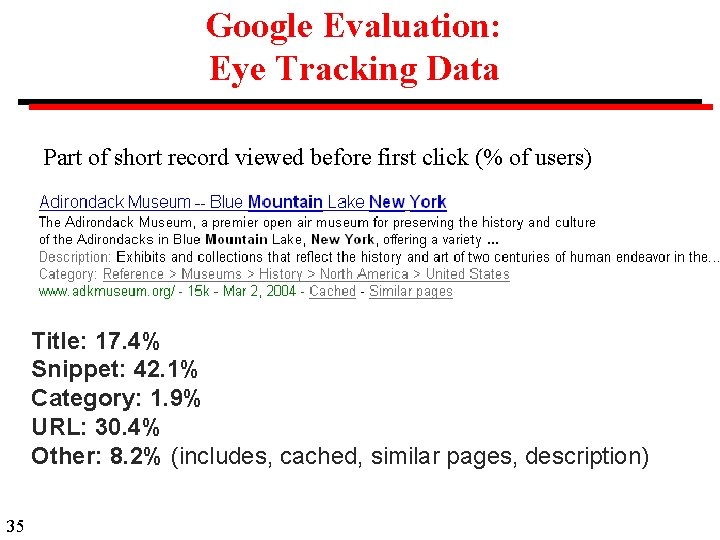

Google Evaluation: Eye-Tracking Data
Part of short record viewed before first click (% of users):
• Title: 17.4%
• Snippet: 42.1%
• Category: 1.9%
• URL: 30.4%
• Other: 8.2% (includes cached, similar pages, description)

Google Experiment: Click-Through Data with Ranks Reversed
[Chart: percentage of users who clicked on link, by rank of hit]

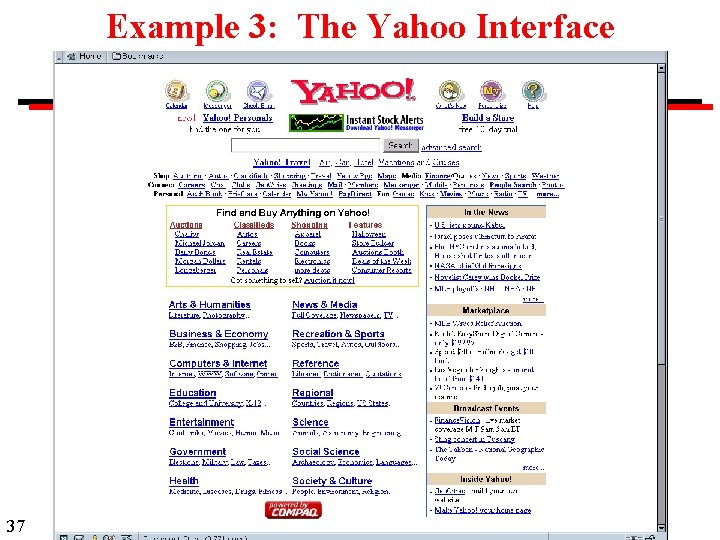

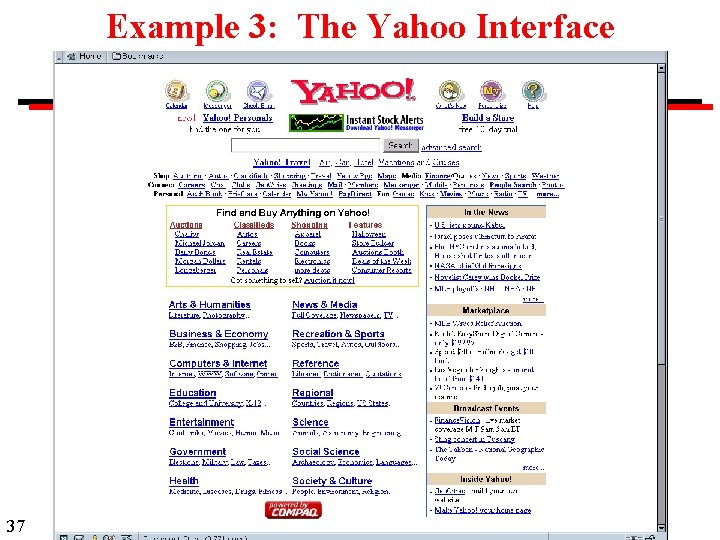

Example 3: The Yahoo Interface

The Yahoo Interface
The Yahoo interface is cluttered and unattractive, yet Yahoo is one of the most successful of all web sites. Why is this interface successful?
• Very many branches from a single web page save the need for a hierarchy of menus.
• Simple HTML markup ensures that the page renders quickly and accurately on all browsers.
• Slow changes over the years mean that users are familiar with it.

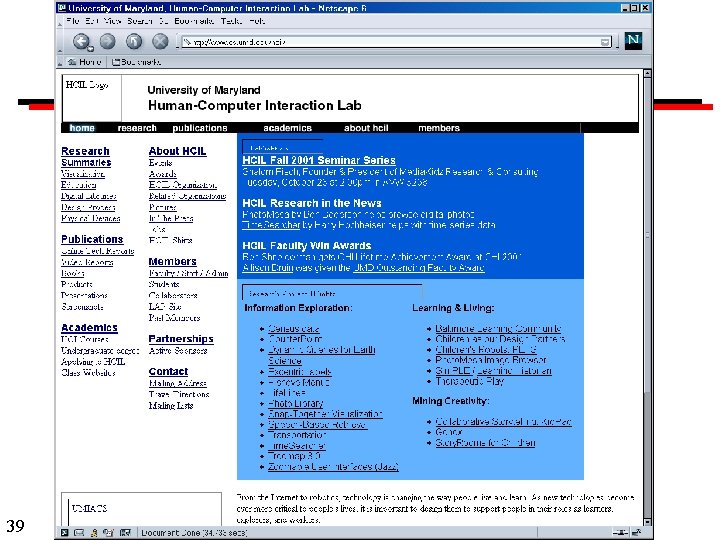

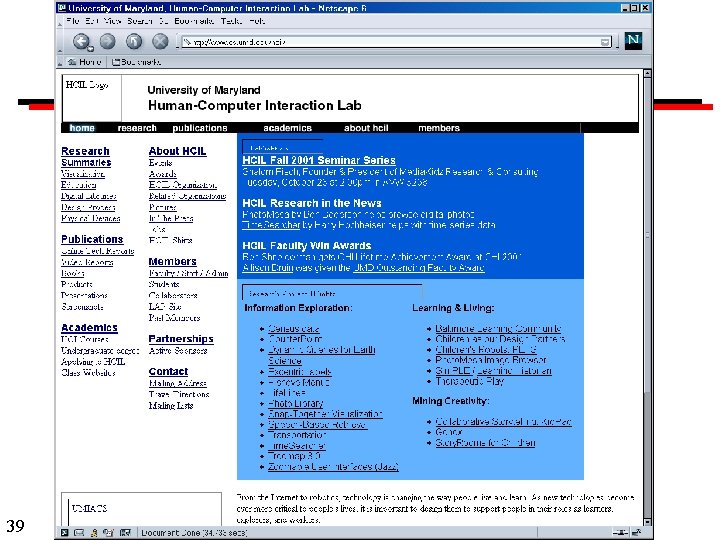
