CS 430 INFO 430 Information Retrieval Lecture 10


Evaluation of Retrieval Effectiveness

Course administration: Assignment 1

Everybody should have received an email message with their grades and comments. If you have not received a message, please contact cs430-l@lists.cornell.edu.

Course administration: Regrading requests

If you have a question about your grade, send a message to cs430-l@lists.cornell.edu. The original grader and I will review your query, and I will reply to you.
• If we clearly made a mistake, we will correct the grade.
• If we discover a mistake that was missed in the first grading, we will deduct points.
• If the program did not run for a simple reason (e.g., wrong files submitted), we will run it and regrade it, but deduct points for the faulty submission.
• When the grade is a matter of judgment, no changes will be made.

Course administration: Assignment 2
• We suggest the JAMA package for singular value decomposition in Java. If you wish to use C++, you will need to find a similar package.
• You will have to select a suitable value of k, the number of dimensions. In your report, explain how you selected k. With only 20 short files, the appropriate value of k for this corpus is almost certainly much smaller than the value of 100 used in the reading.

Course administration: Discussion Class 4
(a) Check the Web site for the sections to concentrate on.
(b) The PDF version of the file on the TREC site is damaged. Use the PostScript version, or the PDF version on the course Web site.

Retrieval Effectiveness

Designing an information retrieval system involves many decisions: Manual or automatic indexing? Natural language or controlled vocabulary? What stoplists? What stemming methods? What query syntax? etc. How do we know which of these methods are most effective? Is everything a matter of judgment?

Evaluation

To place information retrieval on a systematic basis, we need repeatable criteria to evaluate how effective a system is in meeting the information needs of its users. This proves to be very difficult with a human in the loop. It is hard to define:
• the task that the human is attempting
• the criteria to measure success

Studies of Retrieval Effectiveness
• The Cranfield Experiments, Cyril W. Cleverdon, Cranfield College of Aeronautics, 1957-1968
• SMART System, Gerald Salton, Cornell University, 1964-1988
• TREC, Donna Harman and Ellen Voorhees, National Institute of Standards and Technology (NIST), 1992-

Evaluation of Matching: Recall and Precision

If information retrieval were perfect... every hit would be relevant to the original query, and every relevant item in the body of information would be found.

Precision: percentage (or fraction) of the hits that are relevant, i.e., the extent to which the set of hits retrieved by a query satisfies the requirement that generated the query.

Recall: percentage (or fraction) of the relevant items that are found by the query, i.e., the extent to which the query found all the items that satisfy the requirement.

Recall and Precision with Exact Matching: Example
• Corpus of 10,000 documents, 50 on a specific topic
• Ideal search finds these 50 documents and rejects all others
• Actual search identifies 25 documents; 20 are relevant but 5 are on other topics
• Precision: 20/25 = 0.8 (80% of hits were relevant)
• Recall: 20/50 = 0.4 (40% of relevant documents were found)

Measuring Precision and Recall

Precision is easy to measure:
• A knowledgeable person looks at each document that is identified and decides whether it is relevant.
• In the example, only the 25 documents that are found need to be examined.

Recall is difficult to measure:
• To know all relevant items, a knowledgeable person must go through the entire collection, looking at every object to decide if it fits the criteria.
• In the example, all 10,000 documents must be examined.

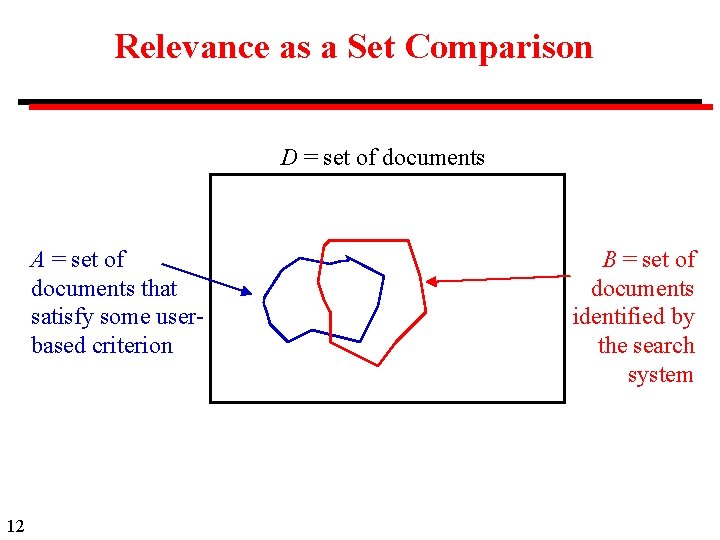

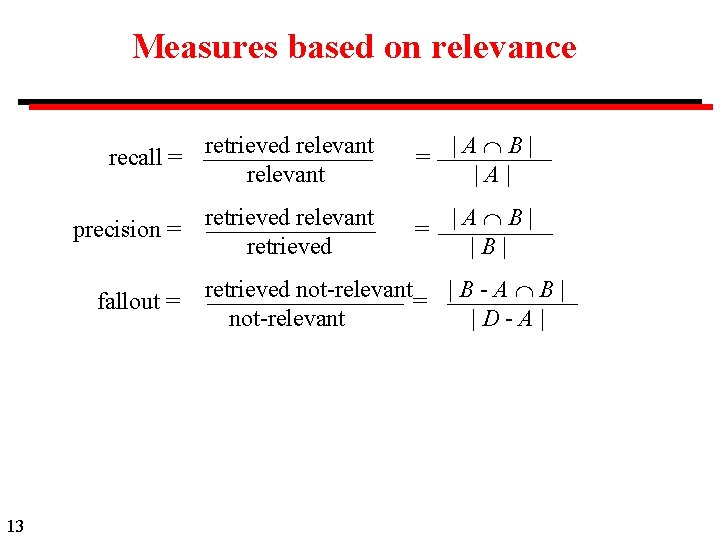

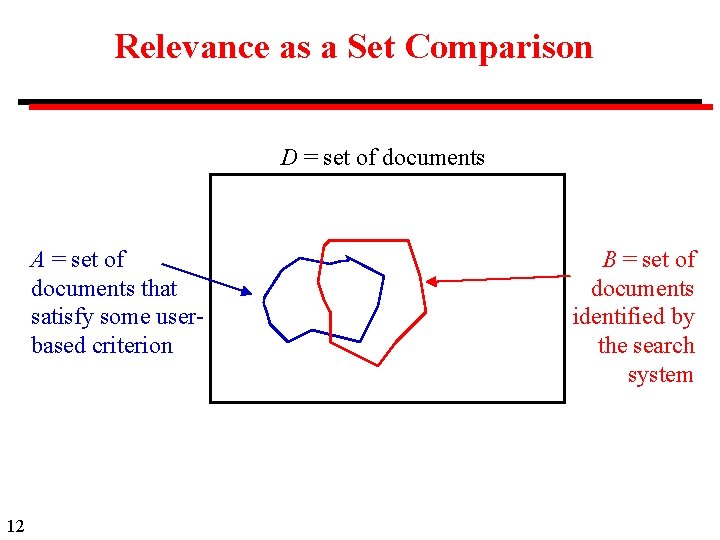

Relevance as a Set Comparison
D = set of documents
A = set of documents that satisfy some user-based criterion
B = set of documents identified by the search system

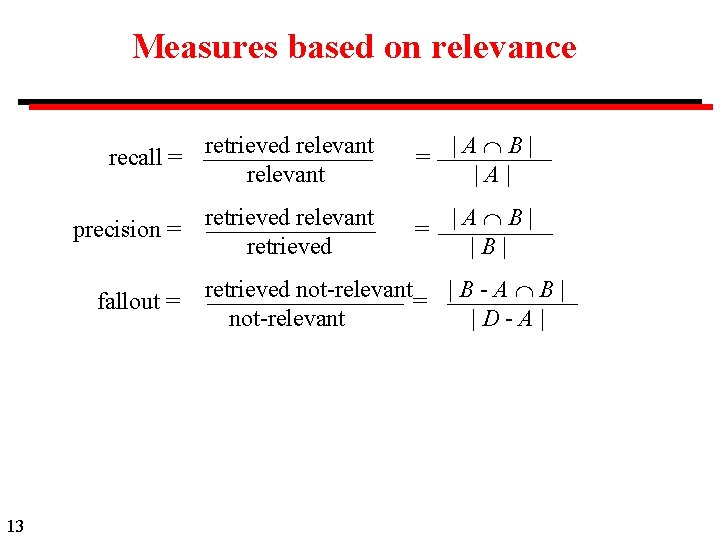

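Measures based on relevance

$$\text{recall} = \frac{\text{retrieved relevant}}{\text{relevant}} = \frac{|A \cap B|}{|A|}$$

$$\text{precision} = \frac{\text{retrieved relevant}}{\text{retrieved}} = \frac{|A \cap B|}{|B|}$$

$$\text{fallout} = \frac{\text{retrieved not-relevant}}{\text{not-relevant}} = \frac{|B - (A \cap B)|}{|D - A|}$$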
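A minimal Python sketch of the three set-based measures, written directly from the formulas above, with Python sets standing in for D, A, and B. The integer document IDs are hypothetical, chosen only to reproduce the counts of the exact-matching example (10,000 documents, 50 relevant, 25 retrieved, 20 of them relevant).

```python
def set_measures(collection, relevant, retrieved):
    """Return (recall, precision, fallout) for exact-match (set-based) retrieval.

    collection = D, relevant = A, retrieved = B, as in the slide notation.
    """
    hits = relevant & retrieved                # A ∩ B: relevant documents that were retrieved
    recall = len(hits) / len(relevant)         # |A ∩ B| / |A|
    precision = len(hits) / len(retrieved)     # |A ∩ B| / |B|
    false_hits = retrieved - hits              # B - (A ∩ B): retrieved but not relevant
    not_relevant = collection - relevant       # D - A
    fallout = len(false_hits) / len(not_relevant)
    return recall, precision, fallout


# Hypothetical IDs: documents 0..49 are relevant; the search returns 30..54,
# i.e. 20 relevant documents (30..49) and 5 non-relevant ones (50..54).
D = set(range(10_000))
A = set(range(50))
B = set(range(30, 55))

recall, precision, fallout = set_measures(D, A, B)
print(f"recall={recall:.2f} precision={precision:.2f} fallout={fallout:.5f}")
# recall=0.40 precision=0.80 fallout=0.00050
```

Note that fallout needs |D - A|, so, like recall, it requires knowing the relevance of every document in the collection.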

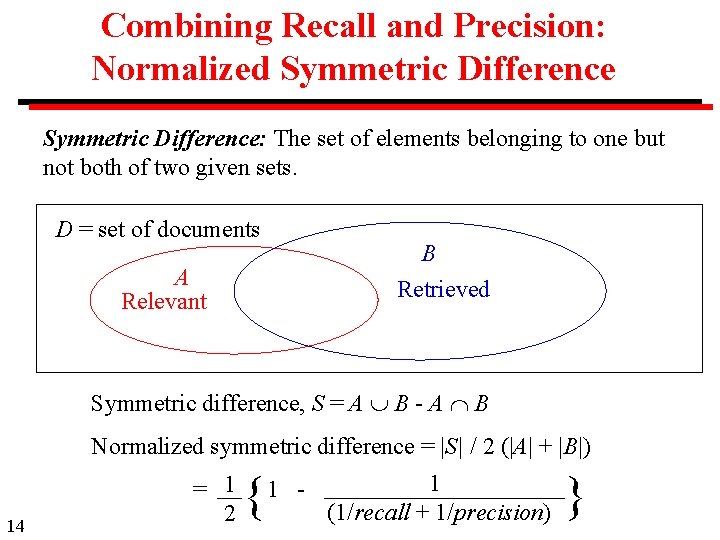

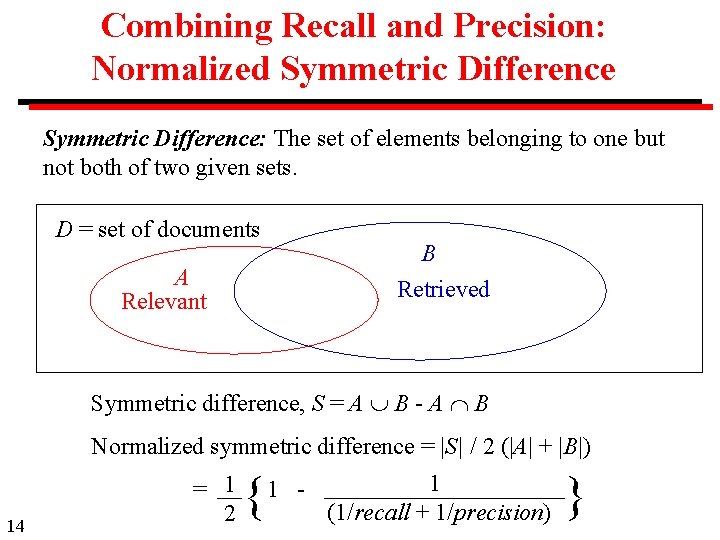

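Combining Recall and Precision: Normalized Symmetric Difference

Symmetric difference: the set of elements belonging to one but not both of two given sets. With D = set of documents, A = relevant documents, and B = retrieved documents:

$$S = (A \cup B) - (A \cap B)$$

Using $|S| = |A| + |B| - 2|A \cap B|$ and $\frac{1}{\text{recall}} + \frac{1}{\text{precision}} = \frac{|A| + |B|}{|A \cap B|}$:

$$\text{Normalized symmetric difference} = \frac{|S|}{\tfrac{1}{2}(|A| + |B|)} = 2\left\{1 - \frac{1}{\tfrac{1}{2}\left(\frac{1}{\text{recall}} + \frac{1}{\text{precision}}\right)}\right\}$$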
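A quick numerical check of this identity, as a Python sketch: compute the normalized symmetric difference directly from sets, then from recall and precision alone. The document IDs are hypothetical and reproduce the counts of the exact-matching example (50 relevant, 25 retrieved, 20 in common). The recall/precision form assumes both values are nonzero.

```python
def nsd_from_sets(relevant, retrieved):
    """|S| / (0.5 * (|A| + |B|)), where S is the symmetric difference of A and B."""
    s = relevant ^ retrieved                   # (A ∪ B) - (A ∩ B)
    return len(s) / (0.5 * (len(relevant) + len(retrieved)))


def nsd_from_recall_precision(recall, precision):
    """2 * (1 - H), where H is the harmonic mean of recall and precision.

    Both arguments must be nonzero (otherwise 1/recall or 1/precision is undefined).
    """
    harmonic_mean = 1 / (0.5 * (1 / recall + 1 / precision))
    return 2 * (1 - harmonic_mean)


A = set(range(50))        # hypothetical relevant documents
B = set(range(30, 55))    # hypothetical retrieved documents: 20 relevant + 5 not
direct = nsd_from_sets(A, B)
via_formula = nsd_from_recall_precision(recall=20 / 50, precision=20 / 25)
print(f"{direct:.4f} {via_formula:.4f}")  # 0.9333 0.9333
```

With this normalization the measure is 0 when A and B coincide and 2 when they are disjoint, so smaller is better.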

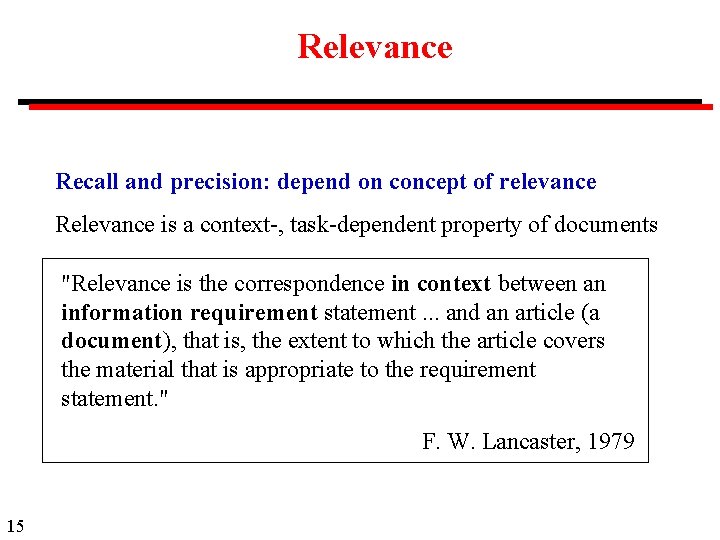

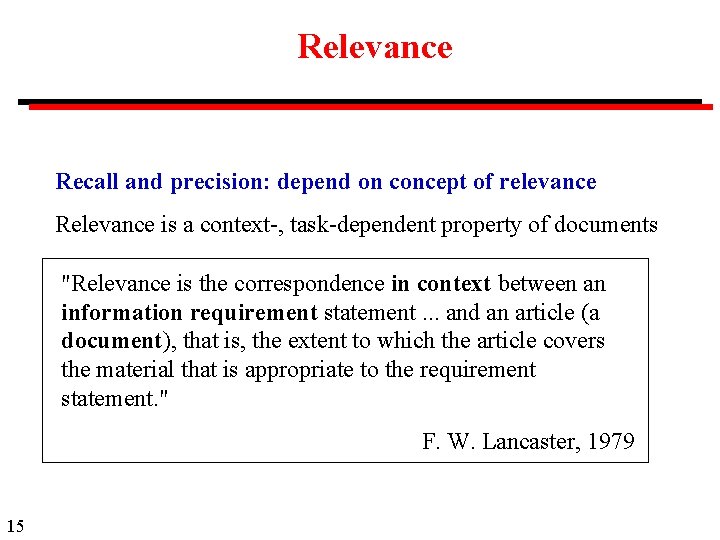

Relevance

Recall and precision depend on the concept of relevance. Relevance is a context-dependent and task-dependent property of documents.

"Relevance is the correspondence in context between an information requirement statement... and an article (a document), that is, the extent to which the article covers the material that is appropriate to the requirement statement." (F. W. Lancaster, 1979)

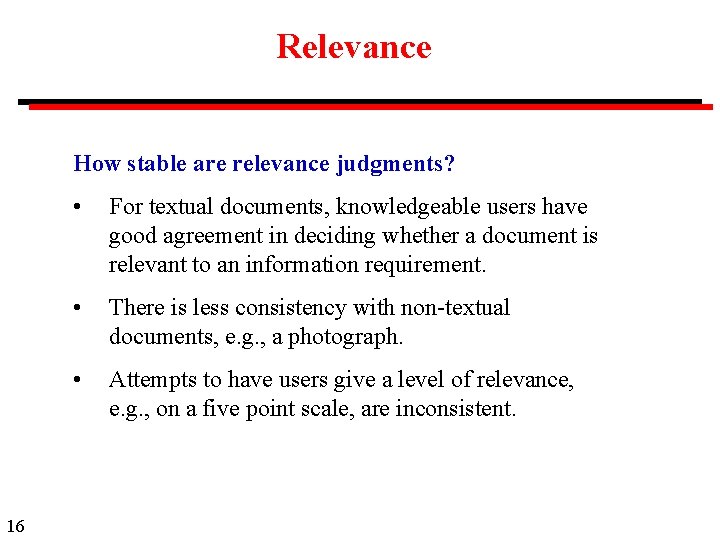

Relevance

How stable are relevance judgments?
• For textual documents, knowledgeable users have good agreement in deciding whether a document is relevant to an information requirement.
• There is less consistency with non-textual documents, e.g., a photograph.
• Attempts to have users give a level of relevance, e.g., on a five-point scale, produce inconsistent results.

Relevance judgments (TREC)

In the TREC experiments, each topic was judged by a single assessor, who also wrote the topic statement.
• In TREC-2, a sample of the topics and documents was rejudged by a second expert assessor. The average agreement was about 80%.
• In TREC-4, all topics were rejudged by two additional assessors, with 72% agreement among all three assessors.

Relevance judgments (TREC)

However:
• In the TREC-4 tests, most of the agreement was on documents that all assessors agreed were non-relevant.
• 30% of the documents judged relevant by the first assessor were judged non-relevant by both additional assessors.

Using data from TREC-4 and TREC-6, Voorhees estimates a practical upper bound of 65% precision at 65% recall, the level at which human experts agree with one another.

Cranfield Collection

The first information retrieval test collection.
• Test collection: 1,400 documents on aerodynamics
• Queries: 225 queries, with a list of the documents that should be retrieved for each query

Cranfield Second Experiment

Comparative efficiency of indexing systems (Universal Decimal Classification, alphabetical subject index, a special facet classification, Uniterm system of co-ordinate indexing).

Four indexes were prepared manually for each document, in three batches of 6,000 documents: 18,000 documents in total, each indexed four times. The documents were reports and papers in aeronautics. Indexes for testing were prepared on index cards and other cards. Indexing procedures were very carefully controlled.

Cranfield Second Experiment (continued)

Searching:
• 1,200 test questions, each satisfied by at least one document
• Reviewed by an expert panel
• Searches carried out by 3 expert librarians
• Two rounds of searching to develop the testing methodology
• Subsidiary experiments at English Electric Whetstone Laboratory and Western Reserve University

The Cranfield Data

The Cranfield data was made widely available and used by other researchers.
• Salton used the Cranfield data with the SMART system (a) to study the relationship between recall and precision, and (b) to compare automatic indexing with human indexing.
• Spärck Jones and van Rijsbergen used the Cranfield data for experiments in relevance weighting, clustering, definition of test corpora, etc.

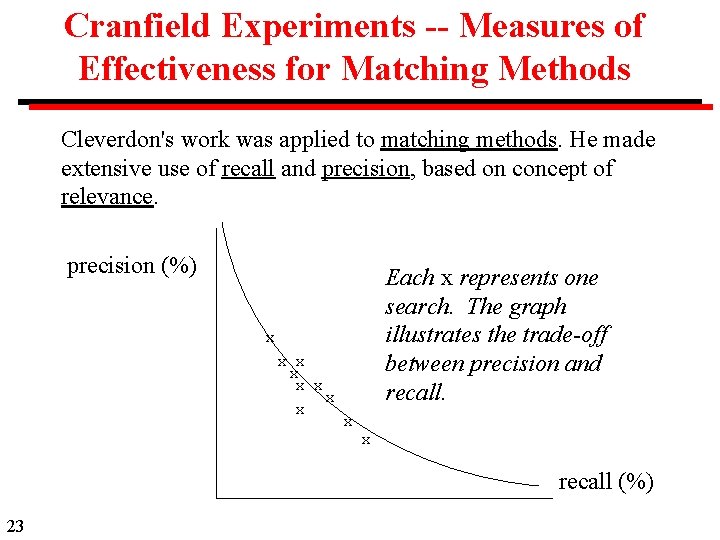

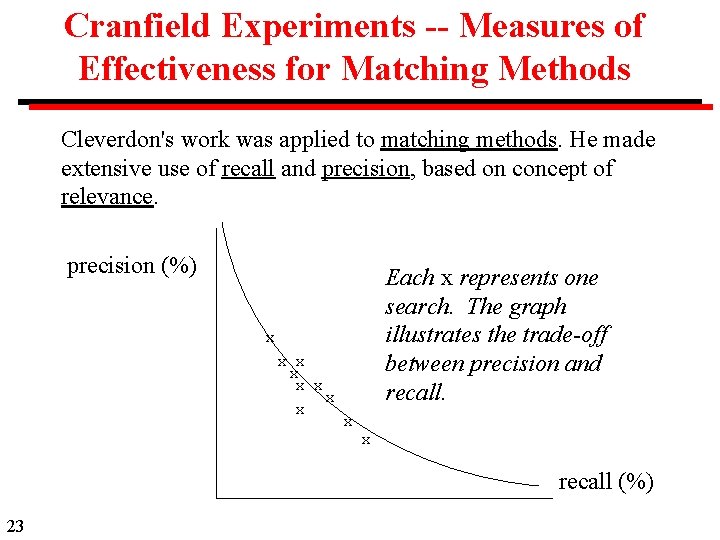

Cranfield Experiments: Measures of Effectiveness for Matching Methods

Cleverdon's work was applied to matching methods. He made extensive use of recall and precision, based on the concept of relevance.

[Figure: precision (%) plotted against recall (%); each x represents one search.]

The graph illustrates the trade-off between precision and recall.

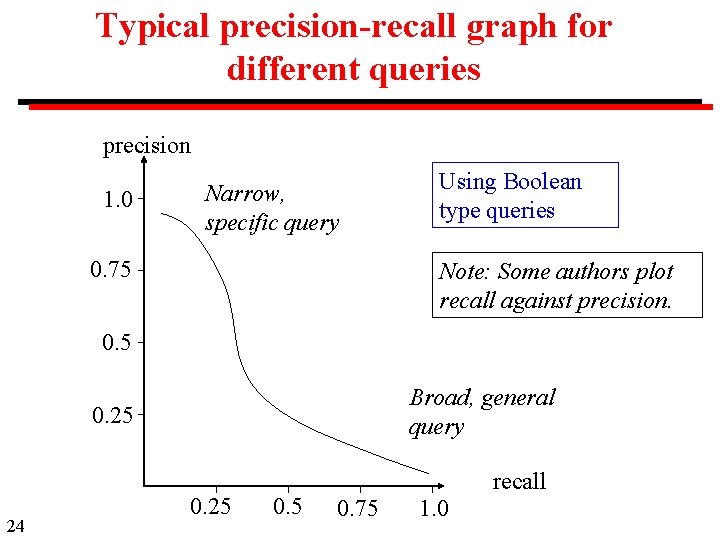

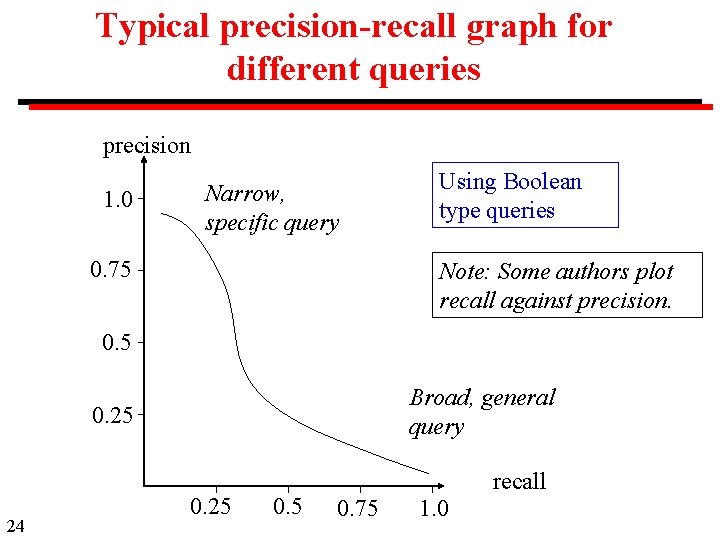

Typical precision-recall graph for different queries

[Figure: precision (0 to 1.0) plotted against recall (0 to 1.0) for Boolean-type queries. A narrow, specific query gives high precision and low recall; a broad, general query gives lower precision and higher recall.]

Note: Some authors plot recall against precision.

Crucial Cranfield Results
• The various manual indexing systems have similar retrieval efficiency.
• Automatic indexing can be at least as effective as manual indexing with controlled vocabularies.
-> original results from the Cranfield + SMART experiments (published in 1967)
-> considered counter-intuitive
-> other results since then have supported this conclusion

Precision and Recall with Ranked Results

Precision and recall are defined for a fixed set of hits, e.g., Boolean retrieval. Their use needs to be modified for a ranked list of results.

Evaluation: Ranking Methods

Precision and recall measure the results of a single query using a specific search system applied to a specific set of documents.
• Matching methods: precision and recall are single numbers.
• Ranking methods: precision and recall are functions of the rank order.

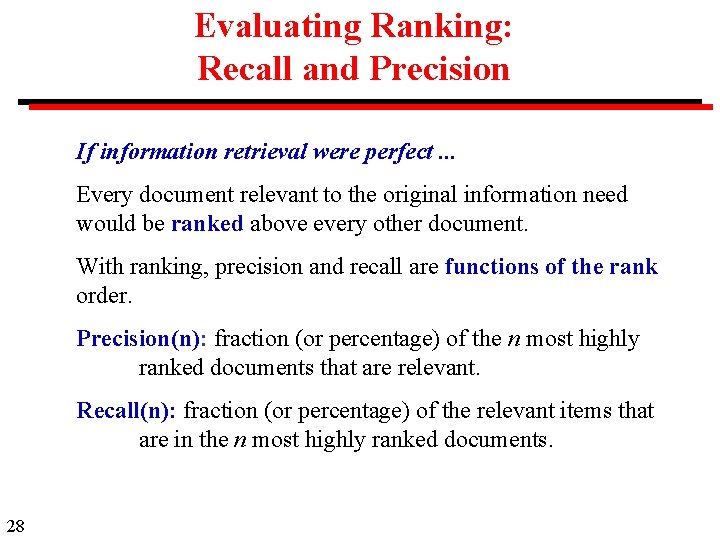

Evaluating Ranking: Recall and Precision

If information retrieval were perfect... every document relevant to the original information need would be ranked above every other document.

With ranking, precision and recall are functions of the rank order.
• Precision(n): fraction (or percentage) of the n most highly ranked documents that are relevant.
• Recall(n): fraction (or percentage) of the relevant items that are in the n most highly ranked documents.

Precision and Recall with Ranking

Example: "Your query found 349,871 possibly relevant documents. Here are the first eight." Examination of the first 8 finds that 5 of them are relevant.

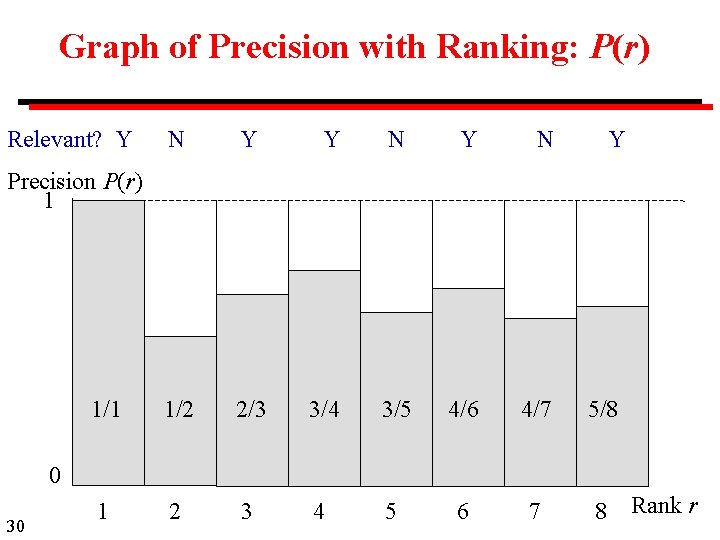

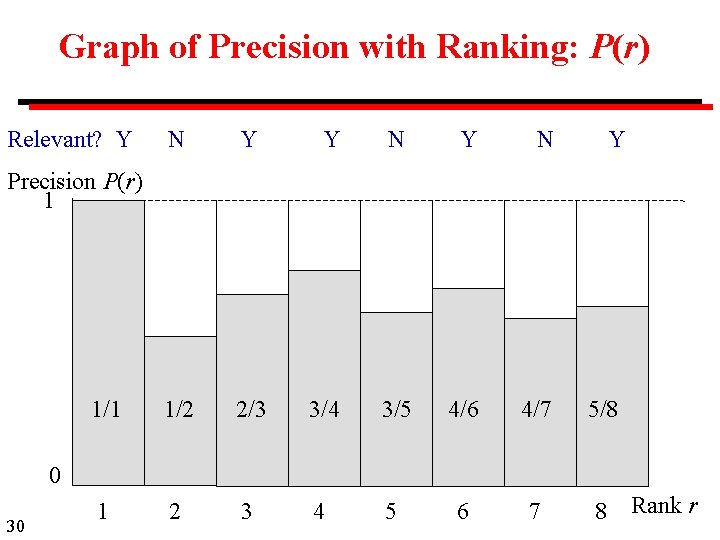

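Graph of Precision with Ranking: P(r)

| Rank r | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Relevant? | Y | N | Y | Y | N | Y | N | Y |
| Precision P(r) | 1/1 | 1/2 | 2/3 | 3/4 | 3/5 | 4/6 | 4/7 | 5/8 |

[Figure: precision P(r), from 0 to 1, plotted against rank r.]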
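A minimal Python sketch of Precision(n) and Recall(n) for a ranked list, using a list of booleans in rank order. The relevance pattern (relevant at ranks 1, 3, 4, 6, and 8) is the one implied by the precision values 1/1, 1/2, 2/3, ..., 5/8 above. The example does not say how many relevant documents the collection holds, so the total of 10 passed to `recall_at` is a hypothetical value.

```python
def precision_at(n, judgments):
    """Precision(n): fraction of the n top-ranked documents that are relevant.

    judgments is a list of booleans in rank order (True = relevant).
    """
    return sum(judgments[:n]) / n


def recall_at(n, judgments, total_relevant):
    """Recall(n): fraction of all relevant documents that appear in the top n."""
    return sum(judgments[:n]) / total_relevant


ranked = [True, False, True, True, False, True, False, True]   # Y N Y Y N Y N Y
print([f"{precision_at(n, ranked):.3f}" for n in range(1, 9)])
print(recall_at(8, ranked, total_relevant=10))  # hypothetical total; prints 0.5
```

Precision(n) needs only the judgments for the top n documents; Recall(n) also needs the total number of relevant documents, which is why it is the harder of the two to measure.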

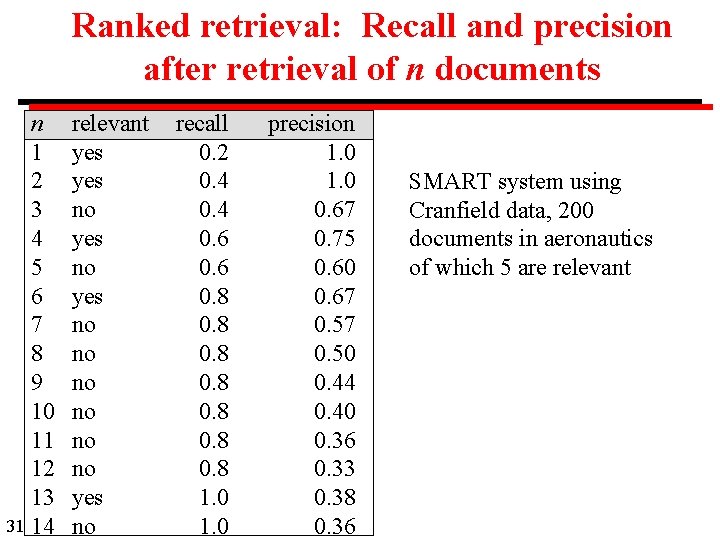

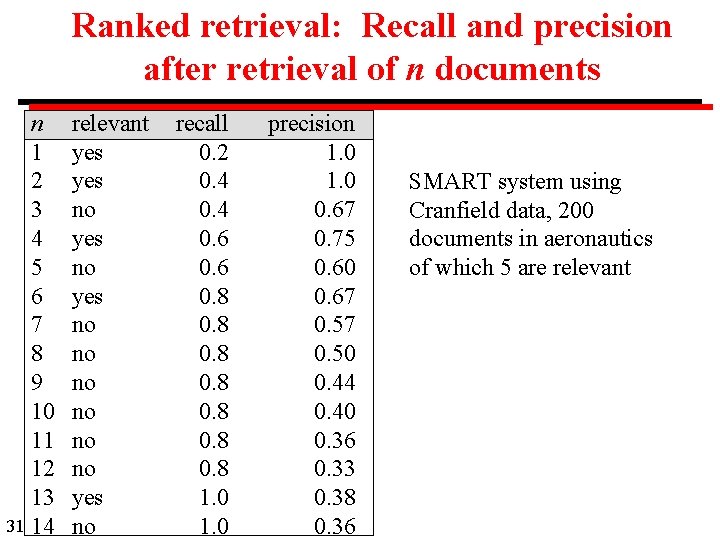

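Ranked retrieval: Recall and precision after retrieval of n documents

SMART system using Cranfield data: 200 documents in aeronautics, of which 5 are relevant.

| n | relevant | recall | precision |
|---|---|---|---|
| 1 | yes | 0.2 | 1.0 |
| 2 | yes | 0.4 | 1.0 |
| 3 | no | 0.4 | 0.67 |
| 4 | yes | 0.6 | 0.75 |
| 5 | no | 0.6 | 0.60 |
| 6 | yes | 0.8 | 0.67 |
| 7 | no | 0.8 | 0.57 |
| 8 | no | 0.8 | 0.50 |
| 9 | no | 0.8 | 0.44 |
| 10 | no | 0.8 | 0.40 |
| 11 | no | 0.8 | 0.36 |
| 12 | no | 0.8 | 0.33 |
| 13 | yes | 1.0 | 0.38 |
| 14 | no | 1.0 | 0.36 |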

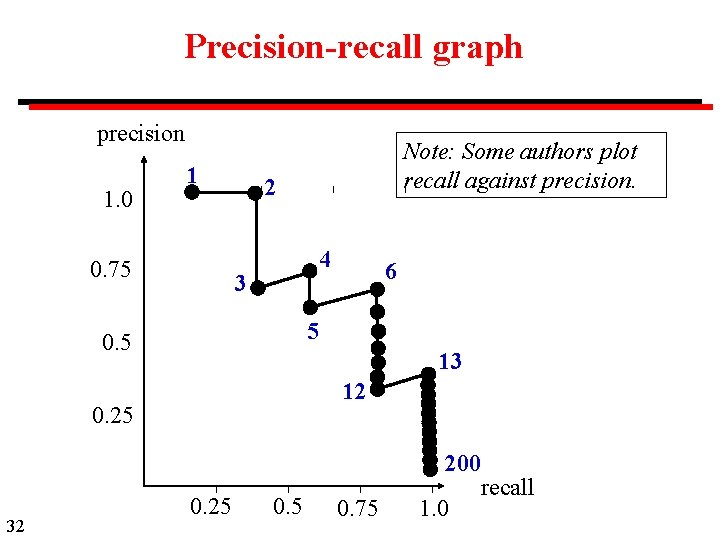

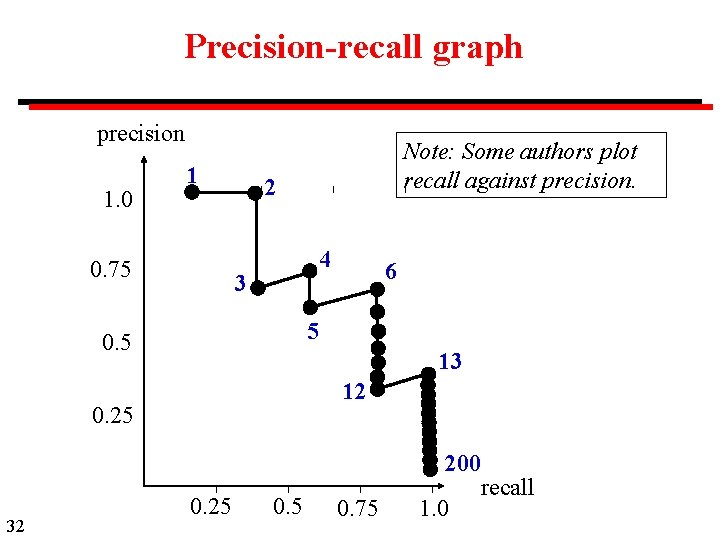

Precision-recall graph

[Figure: precision (y-axis) plotted against recall (x-axis) for the ranked results above, with each point labeled by the rank n at which it occurs: 1, 2, 3, 4, 5, 6, 12, 13, 200.]

Note: Some authors plot recall against precision.

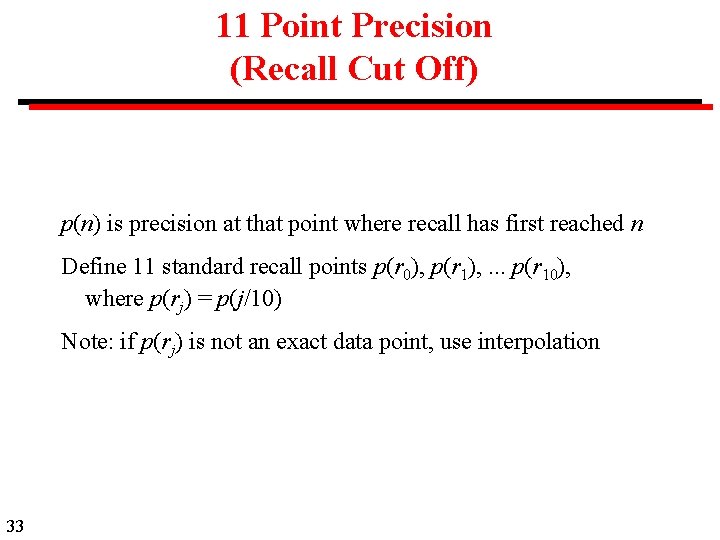

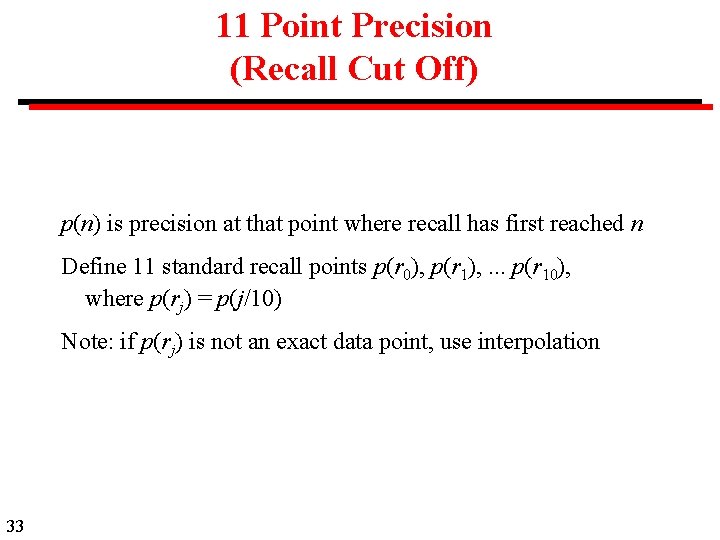

11 Point Precision (Recall Cut Off)

$p(n)$ is the precision at the point where recall has first reached $n$.

Define 11 standard recall points $p(r_0), p(r_1), \ldots, p(r_{10})$, where $p(r_j) = p(j/10)$.

Note: if $p(r_j)$ is not an exact data point, use interpolation.

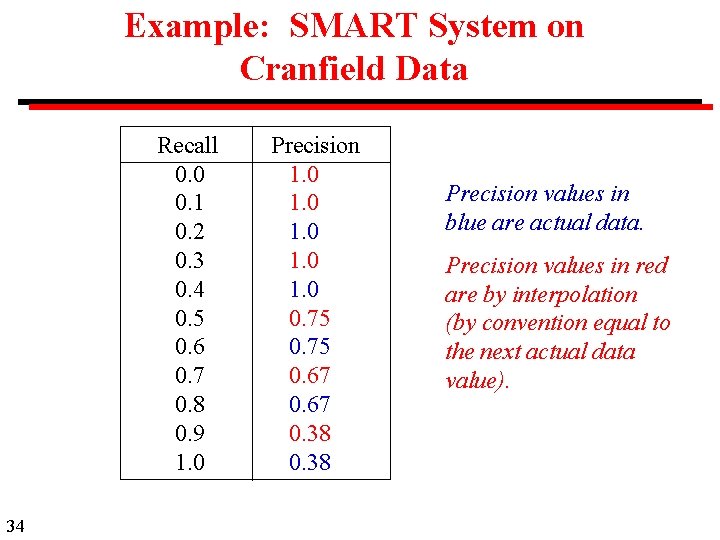

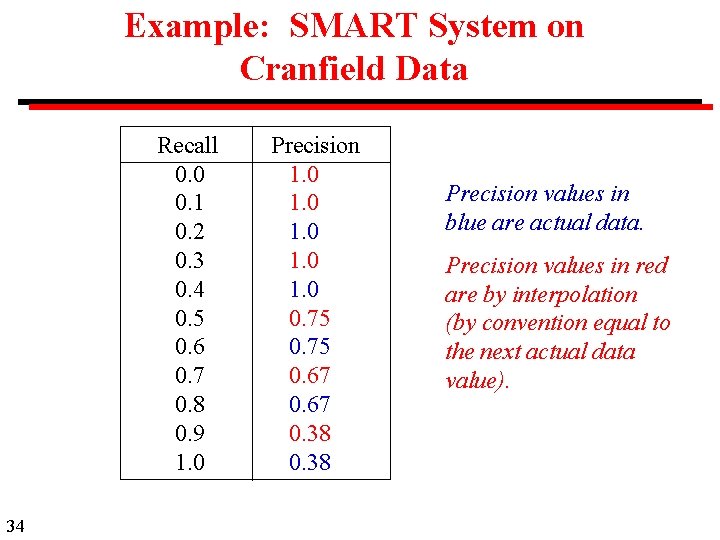

Example: SMART System on Cranfield Data

| Recall | 0.0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 1.0* | 1.0* | 1.0 | 1.0* | 1.0 | 0.75* | 0.75 | 0.67* | 0.67 | 0.38* | 0.38 |

Precision values without an asterisk are actual data. Values marked * are obtained by interpolation (by convention, equal to the next actual data value).
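A minimal Python sketch of the 11-point values under the convention stated here: the value at recall level j/10 is the precision at the first rank where recall reaches at least j/10, i.e., the next actual data value. The relevance list assumes the five relevant documents sit at ranks 1, 2, 4, 6, and 13 of 200, the pattern implied by the recall and precision values in the SMART/Cranfield ranking table. Returning 0.0 for a recall level that is never reached is an assumption of this sketch. (TREC-style interpolation instead takes the maximum precision at any recall at or above j/10; for this data the two agree.)

```python
def eleven_point_precision(judgments, total_relevant):
    """Precision at recall levels 0.0, 0.1, ..., 1.0 (the slide's convention).

    Each level j/10 takes the precision at the first rank where recall is at
    least j/10. Levels that are never reached get 0.0 (an assumption here).
    """
    # Actual data points: (relevant found so far, precision) at each relevant rank.
    points = []
    found = 0
    for rank, is_relevant in enumerate(judgments, start=1):
        if is_relevant:
            found += 1
            points.append((found, found / rank))

    levels = []
    for j in range(11):
        # recall >= j/10  <=>  10 * found >= j * total_relevant (integer test, no rounding)
        value = next((p for f, p in points if 10 * f >= j * total_relevant), 0.0)
        levels.append(value)
    return levels


# 200 ranked documents; relevant at ranks 1, 2, 4, 6, 13 (5 relevant in total).
cranfield = [rank in {1, 2, 4, 6, 13} for rank in range(1, 201)]
print([round(p, 2) for p in eleven_point_precision(cranfield, 5)])
# [1.0, 1.0, 1.0, 1.0, 1.0, 0.75, 0.75, 0.67, 0.67, 0.38, 0.38]
```

Comparing recall as an integer inequality avoids floating-point surprises when a recall value such as 0.3 must be matched exactly.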

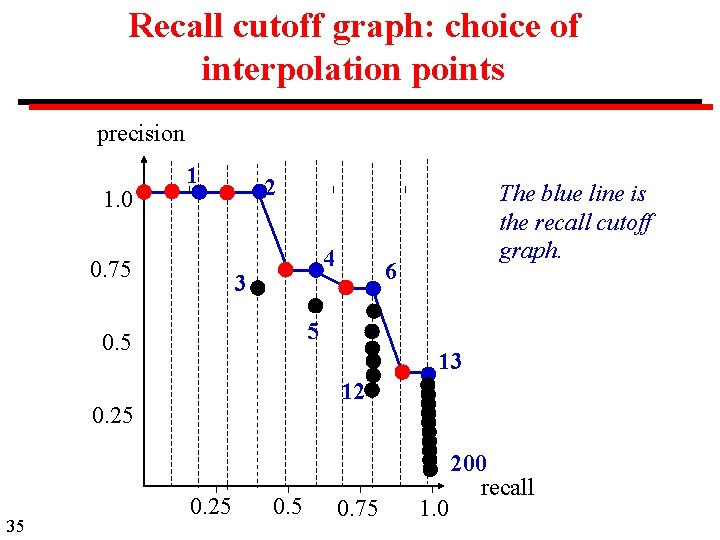

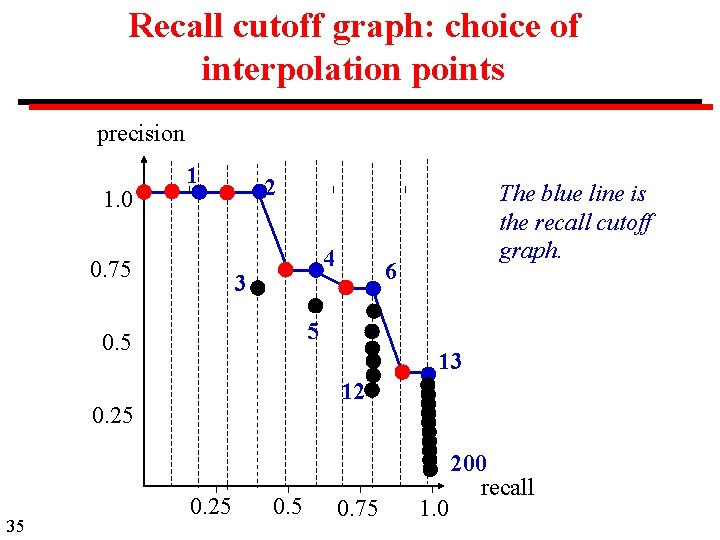

Recall cutoff graph: choice of interpolation points

[Figure: the precision-recall points labeled by rank (1, 2, 3, 4, 5, 6, 12, 13, 200), with the recall cutoff graph drawn as a line through the interpolation points.]

The blue line is the recall cutoff graph.

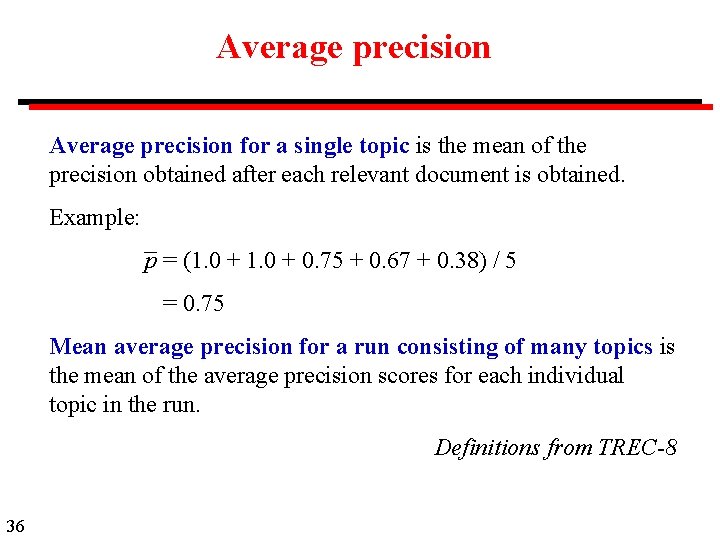

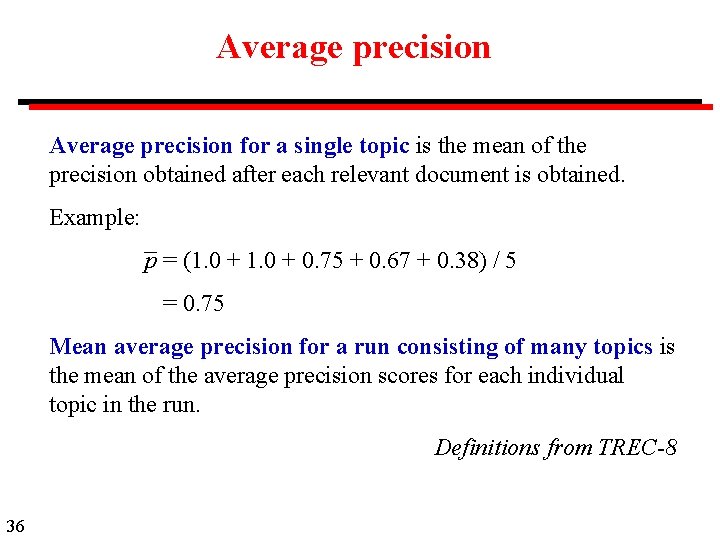

Average precision for a single topic is the mean of the precision values obtained after each relevant document is retrieved.

Example: p = (1.0 + 1.0 + 0.75 + 0.67 + 0.38) / 5 = 0.76

Mean average precision for a run consisting of many topics is the mean of the average precision scores for each individual topic in the run.

Definitions from TREC-8.
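A Python sketch of these definitions. Dividing by the total number of relevant documents means that a relevant document never retrieved contributes a precision of 0, which is how TREC scores it. The relevance list assumes the relevant documents are at ranks 1, 2, 4, 6, and 13 of 200, as implied by the SMART/Cranfield ranking table.

```python
def average_precision(judgments, total_relevant):
    """Mean of the precision values at the ranks where relevant documents appear.

    Dividing by total_relevant (not by the number found) makes every relevant
    document that was never retrieved contribute 0 to the mean.
    """
    found = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(judgments, start=1):
        if is_relevant:
            found += 1
            precision_sum += found / rank
    return precision_sum / total_relevant


def mean_average_precision(topics):
    """topics: list of (judgments, total_relevant) pairs, one per topic in the run."""
    return sum(average_precision(j, n) for j, n in topics) / len(topics)


cranfield = [rank in {1, 2, 4, 6, 13} for rank in range(1, 201)]
print(round(average_precision(cranfield, 5), 2))  # 0.76
```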

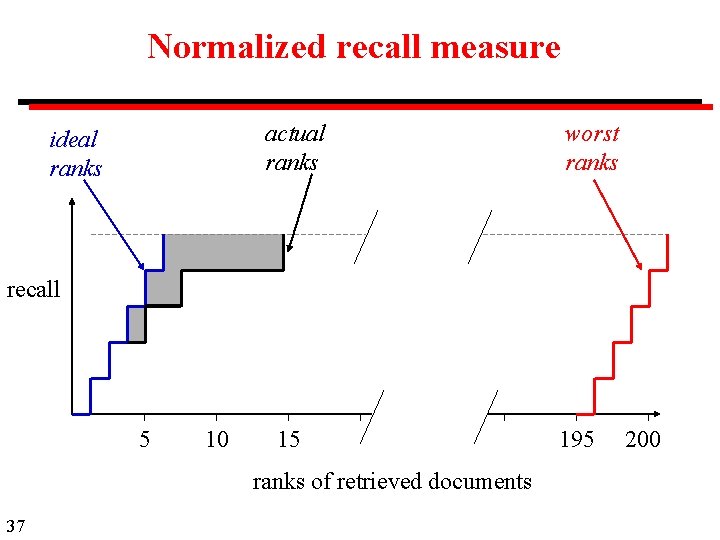

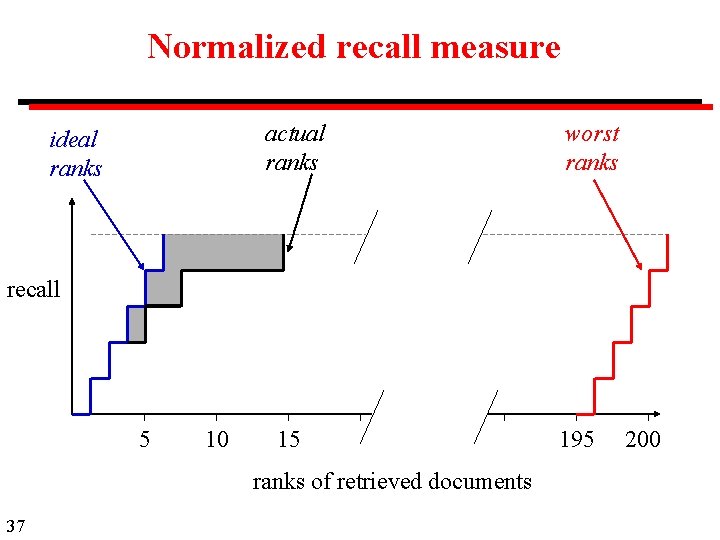

Normalized recall measure

[Figure: recall plotted against the ranks of retrieved documents (1 to 200), comparing the actual ranks, the ideal ranks, and the worst ranks.]

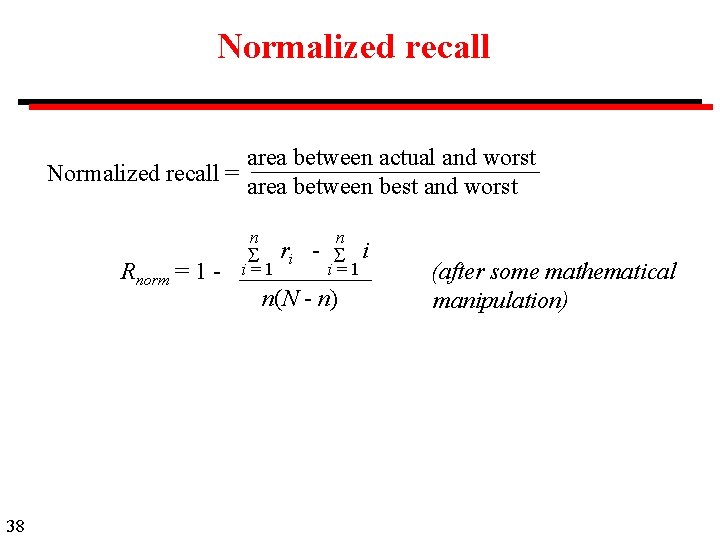

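Normalized recall

$$\text{Normalized recall} = \frac{\text{area between actual and worst}}{\text{area between best and worst}}$$

After some mathematical manipulation:

$$R_{\text{norm}} = 1 - \frac{\sum_{i=1}^{n} r_i - \sum_{i=1}^{n} i}{n(N - n)}$$

where $n$ is the number of relevant documents, $N$ is the number of documents in the collection, and $r_i$ is the rank of the $i$-th relevant document.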
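A Python sketch of the closed form, assuming, as the SMART/Cranfield ranking table implies, that the 5 relevant documents are ranked 1, 2, 4, 6, and 13 out of N = 200. The best and worst rankings give 1 and 0, as the area definition requires.

```python
def normalized_recall(relevant_ranks, collection_size):
    """R_norm = 1 - (sum of r_i - sum of i) / (n * (N - n)).

    relevant_ranks: 1-based ranks r_1..r_n of the n relevant documents.
    collection_size: N, the total number of ranked documents.
    """
    n = len(relevant_ranks)
    best = n * (n + 1) // 2                   # sum of i for i = 1..n: relevant docs ranked first
    actual = sum(relevant_ranks)
    return 1 - (actual - best) / (n * (collection_size - n))


print(round(normalized_recall([1, 2, 4, 6, 13], 200), 3))    # 0.989
print(normalized_recall([1, 2, 3, 4, 5], 200))                # best ranking: 1.0
print(normalized_recall([196, 197, 198, 199, 200], 200))      # worst ranking: 0.0
```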

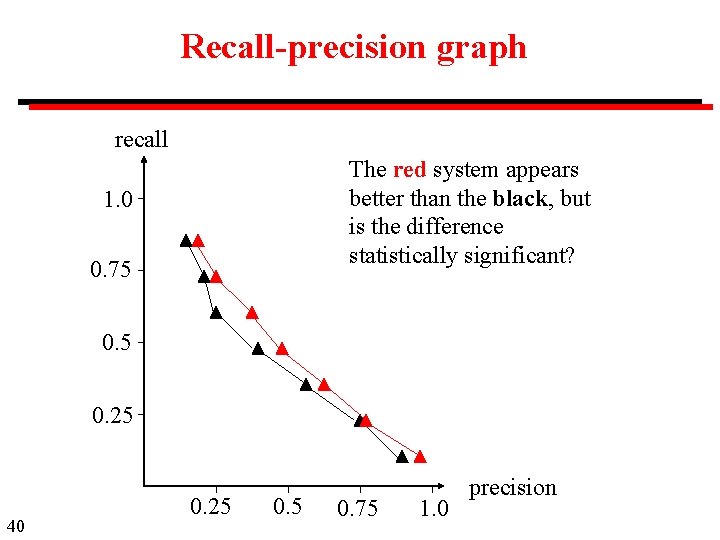

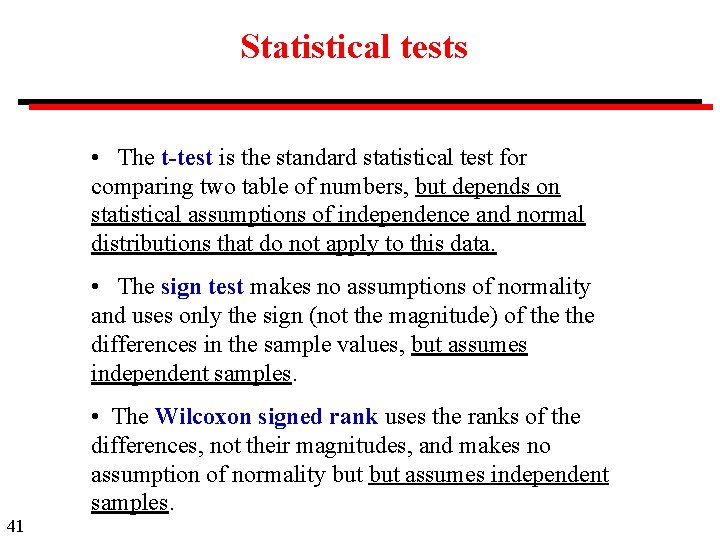

Statistical tests

Suppose that a search is carried out on systems i and j. System i is superior to system j if, for all test cases,
recall(i) >= recall(j) and precision(i) >= precision(j).

In practice, we have data from a limited number of test cases. What conclusions can we draw?

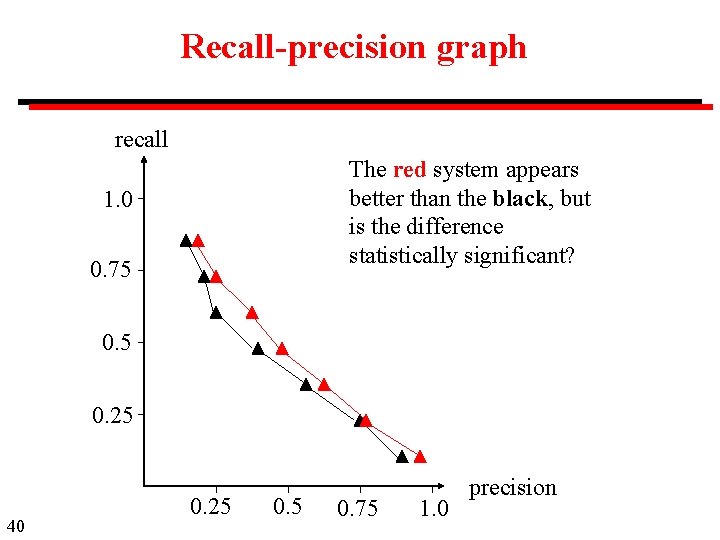

Recall-precision graph

[Figure: recall (y-axis) plotted against precision (x-axis) for two systems, one drawn in red and one in black.]

The red system appears better than the black, but is the difference statistically significant?

Statistical tests
• The t-test is the standard statistical test for comparing two tables of numbers, but it depends on statistical assumptions of independence and normal distributions that do not apply to this data.
• The sign test makes no assumption of normality and uses only the sign (not the magnitude) of the differences in the sample values, but assumes independent samples.
• The Wilcoxon signed-rank test uses the ranks of the differences, not their magnitudes, and makes no assumption of normality but assumes independent samples.
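A minimal Python sketch of the exact two-sided sign test on paired per-topic scores (for example, average precision for systems i and j on the same topics). Under the null hypothesis each non-tied difference is equally likely to be positive or negative, so the number of positive signs follows a Binomial(n, 1/2) distribution; ties are discarded. As noted above, it still assumes the topics are independent. The scores below are hypothetical, not results from any experiment. For the other two tests, SciPy provides `scipy.stats.ttest_rel` (paired t-test) and `scipy.stats.wilcoxon`.

```python
from math import comb


def sign_test(scores_i, scores_j):
    """Two-sided exact sign test for paired scores; returns the p-value."""
    plus = sum(1 for a, b in zip(scores_i, scores_j) if a > b)
    minus = sum(1 for a, b in zip(scores_i, scores_j) if a < b)
    n = plus + minus                       # ties are dropped
    if n == 0:
        return 1.0                         # all ties: no evidence of a difference
    k = min(plus, minus)
    # P(X <= k) for X ~ Binomial(n, 1/2), doubled for a two-sided test.
    tail = sum(comb(n, x) for x in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical per-topic average precision for two systems on 10 topics.
red = [0.62, 0.55, 0.71, 0.40, 0.58, 0.66, 0.49, 0.73, 0.52, 0.35]
black = [0.58, 0.50, 0.65, 0.42, 0.51, 0.60, 0.47, 0.70, 0.45, 0.30]
print(sign_test(red, black))  # red wins 9 of 10 topics: p = 22/1024 = 0.021484375
```

The test ignores how large each win is; the Wilcoxon signed-rank test uses that information through the ranks of the differences.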