CS 430 INFO 430 Information Retrieval Lecture 27

- Slides: 43

CS 430 / INFO 430 Information Retrieval

Lecture 27: Classification 2

Course Administration

Final Examination

• The final examination is on Tuesday, December 13, between 2:00 and 3:30, in Upson B17.
• Format and style of questions will be similar to the mid-term.
• A make-up examination will be available on another date, which has not yet been chosen. The proposed date is December 8. If you would like to take the make-up examination, send an email message to Susan Moskwa (moskwa@cs.cornell.edu).

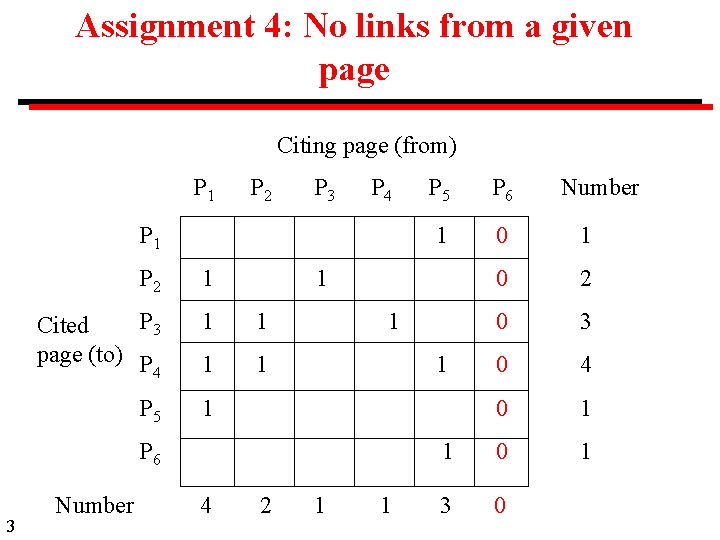

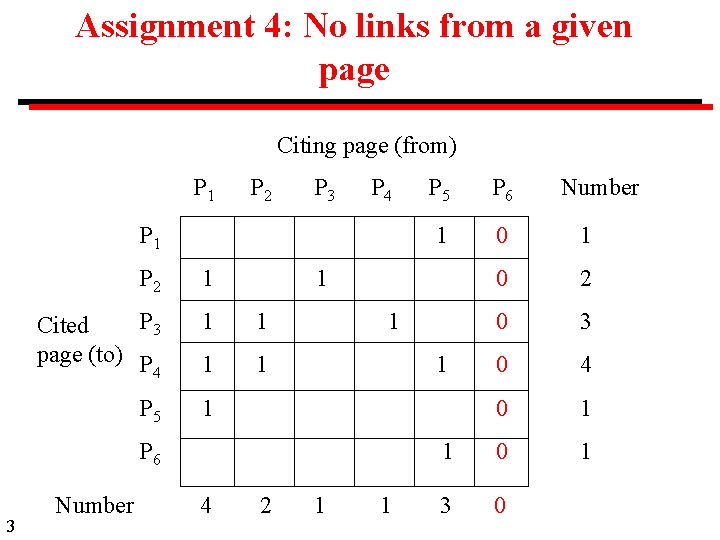

Assignment 4: No links from a given page

[Table: link matrix for pages P1 to P6, with citing pages (from) as columns and cited pages (to) as rows, plus the number of links for each row and column.]

Cluster Analysis

Methods that divide a set of n objects into m non-overlapping subsets.

For information discovery, cluster analysis is applied to:

• terms, for thesaurus construction
• documents, to divide them into categories (sometimes called automatic classification, but classification usually requires a predetermined set of categories).

Cluster Analysis Metrics

• Documents clustered on the basis of a similarity measure calculated from the terms that they contain.
• Documents clustered on the basis of co-occurring citations.
• Terms clustered on the basis of the documents in which they co-occur.

Non-hierarchical and Hierarchical Methods

Non-hierarchical methods: Elements are divided into m non-overlapping sets, where m is predetermined.

Hierarchical methods: m is varied progressively to create a hierarchy of solutions.

Agglomerative methods: m is initially equal to n, the total number of elements, where every element is considered to be a cluster with one element. The hierarchy is produced by incrementally combining clusters.

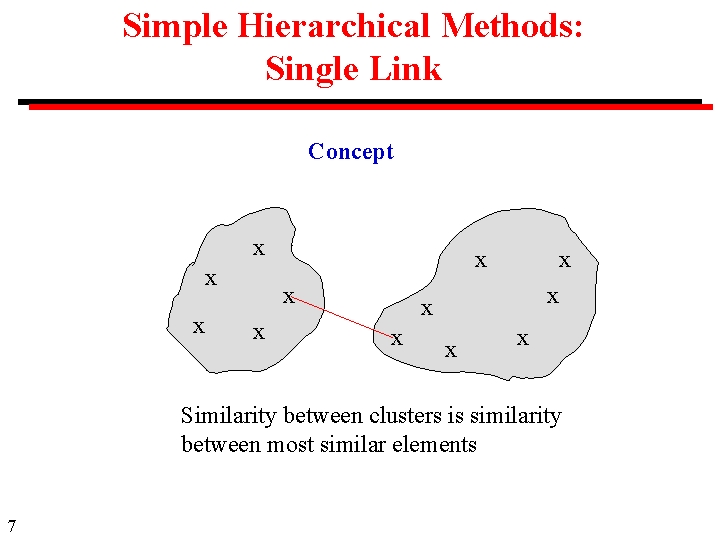

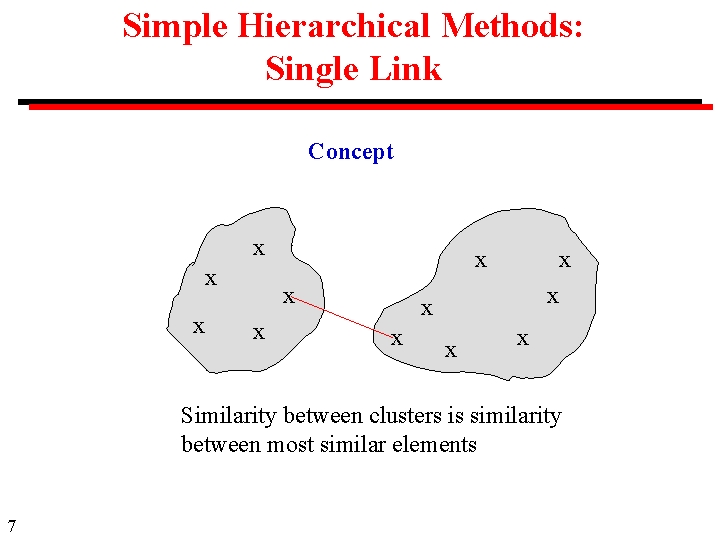

Simple Hierarchical Methods: Single Link

Concept: Similarity between clusters is the similarity between their most similar elements.

Single Link

A simple agglomerative method.

• Initially, each element is its own cluster with one element.
• At each step, calculate the similarity between each pair of clusters as the similarity between the most similar pair of elements, one taken from each cluster.
• Merge the two clusters that are most similar.

May lead to long, straggling clusters (chaining). Very simple computation.
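The single-link steps above can be sketched in a few lines of Python. This is a minimal illustration rather than an efficient implementation: the function name `single_link`, the toy points, and the choice of negative distance as the similarity are all assumptions made for the example. Ties are broken by scan order.

```python
from itertools import combinations

def single_link(sim, items):
    """Agglomerative single-link clustering.

    sim maps frozenset({x, y}) to the similarity of elements x and y.
    Returns the merges as (cluster_a, cluster_b, similarity) tuples.
    """
    clusters = [frozenset([x]) for x in items]
    merges = []
    while len(clusters) > 1:
        best = None
        for a, b in combinations(clusters, 2):
            # Single link: similarity of the most similar cross-cluster pair.
            s = max(sim[frozenset((x, y))] for x in a for y in b)
            if best is None or s > best[2]:
                best = (a, b, s)
        a, b, s = best
        clusters = [c for c in clusters if c not in (a, b)] + [a | b]
        merges.append((a, b, s))
    return merges

# Hypothetical toy data: five points on a line, similarity = -distance.
points = {"p": 0.0, "q": 1.0, "r": 2.0, "s": 3.0, "t": 10.0}
sim = {frozenset((x, y)): -abs(points[x] - points[y])
       for x, y in combinations(points, 2)}

merges = single_link(sim, list(points))
for a, b, s in merges:
    print(sorted(a), "+", sorted(b), "at similarity", s)
```

In this toy data the evenly spaced points p, q, r, s are absorbed into one cluster through nearest-neighbour links even though p and s are three units apart, which is the chaining behaviour mentioned above.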

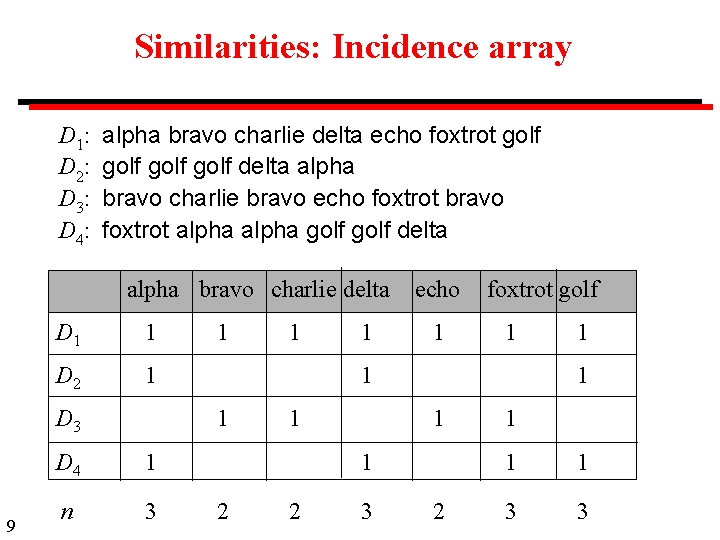

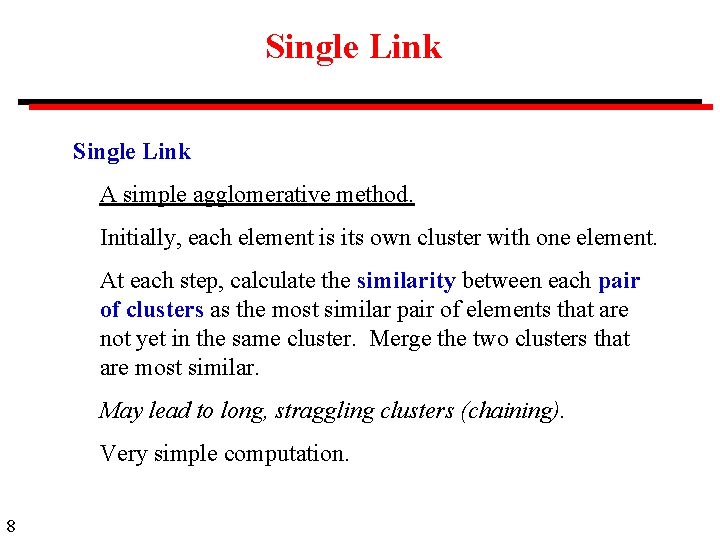

Similarities: Incidence array

| | alpha | bravo | charlie | delta | echo | foxtrot | golf |
|---|---|---|---|---|---|---|---|
| D1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| D2 | 1 | | | 1 | | | 1 |
| D3 | | 1 | 1 | | 1 | 1 | |
| D4 | 1 | | | 1 | | 1 | 1 |
| n | 3 | 2 | 2 | 3 | 2 | 3 | 3 |

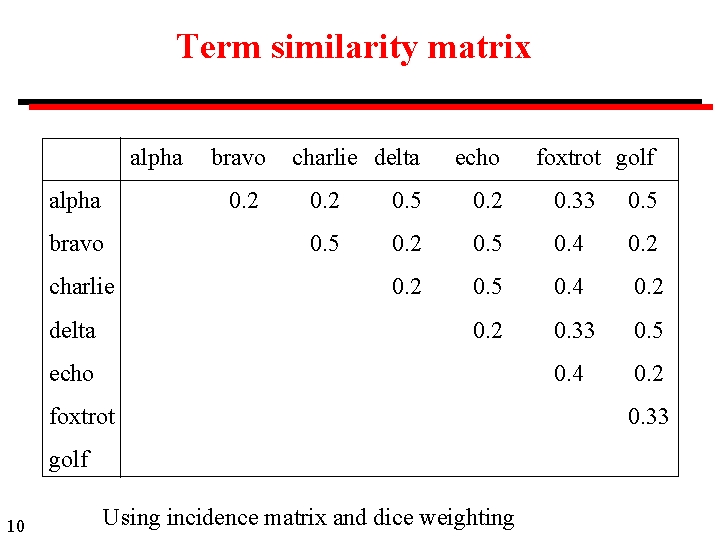

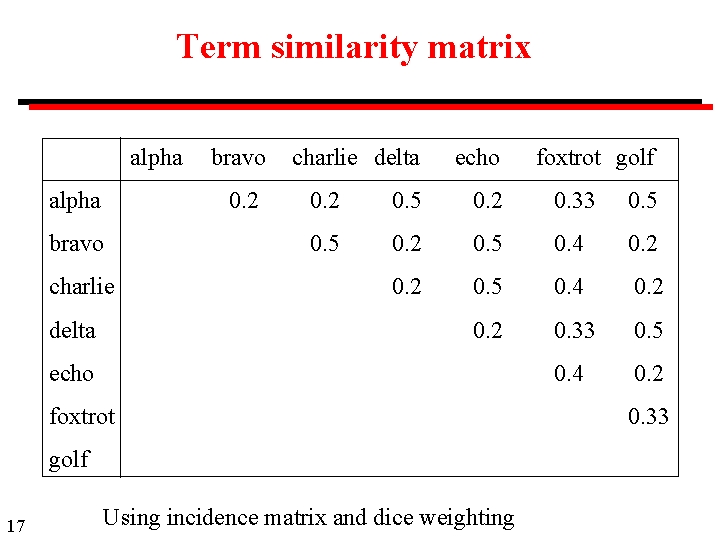

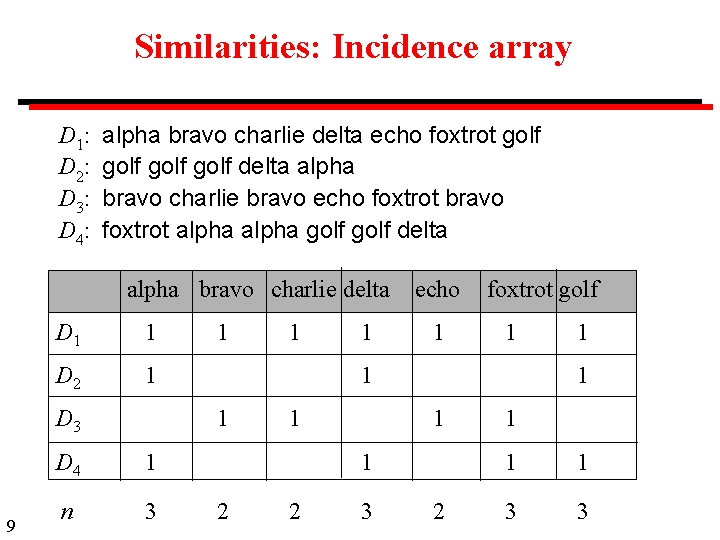

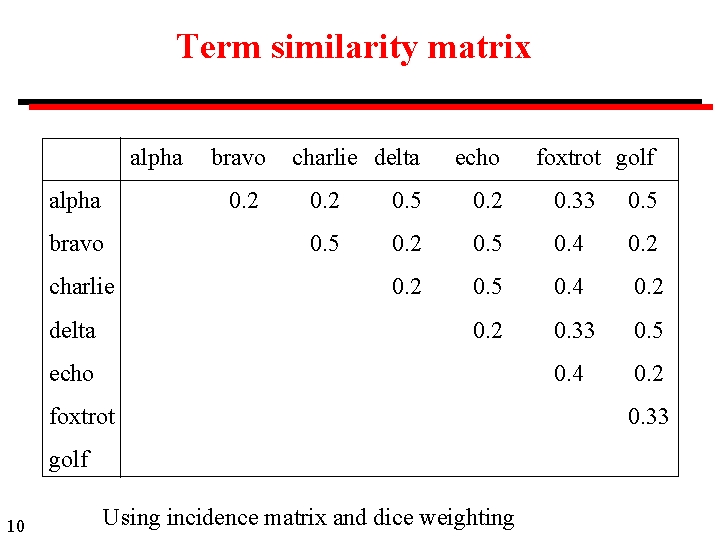

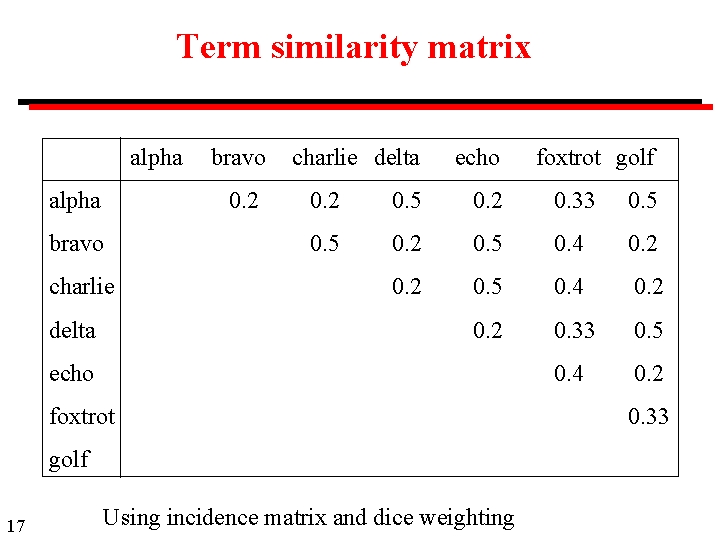

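Term similarity matrix

| | bravo | charlie | delta | echo | foxtrot | golf |
|---|---|---|---|---|---|---|
| alpha | 0.2 | 0.2 | 0.5 | 0.2 | 0.33 | 0.5 |
| bravo | | 0.5 | 0.2 | 0.5 | 0.4 | 0.2 |
| charlie | | | 0.2 | 0.5 | 0.4 | 0.2 |
| delta | | | | 0.2 | 0.33 | 0.5 |
| echo | | | | | 0.4 | 0.2 |
| foxtrot | | | | | | 0.33 |

Using incidence matrix and Dice weighting.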
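The sketch below shows one way the similarity values could be derived from a term incidence array. The incidence rows are an assumption, chosen because they reproduce the values on the slide. The weighting used is (documents containing both terms) / (n_i + n_j), which matches the slide's numbers; the usual textbook Dice coefficient is twice this value. The function name `term_similarity` is hypothetical.

```python
from itertools import combinations

terms = ["alpha", "bravo", "charlie", "delta", "echo", "foxtrot", "golf"]

# Assumed incidence rows (1 = term occurs in the document), chosen to be
# consistent with the similarity values shown on the slide.
incidence = {
    "D1": [1, 1, 1, 1, 1, 1, 1],
    "D2": [1, 0, 0, 1, 0, 0, 1],
    "D3": [0, 1, 1, 0, 1, 1, 0],
    "D4": [1, 0, 0, 1, 0, 1, 1],
}

def term_similarity(incidence, terms):
    """Return {(term_i, term_j): common / (n_i + n_j)} for every term pair."""
    docs = list(incidence.values())
    # n[i] = number of documents that contain term i.
    n = [sum(d[i] for d in docs) for i in range(len(terms))]
    sim = {}
    for i, j in combinations(range(len(terms)), 2):
        common = sum(d[i] and d[j] for d in docs)
        sim[(terms[i], terms[j])] = common / (n[i] + n[j])
    return sim

sim = term_similarity(incidence, terms)
for pair in [("alpha", "bravo"), ("alpha", "delta"), ("alpha", "foxtrot")]:
    print(pair, round(sim[pair], 2))
```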

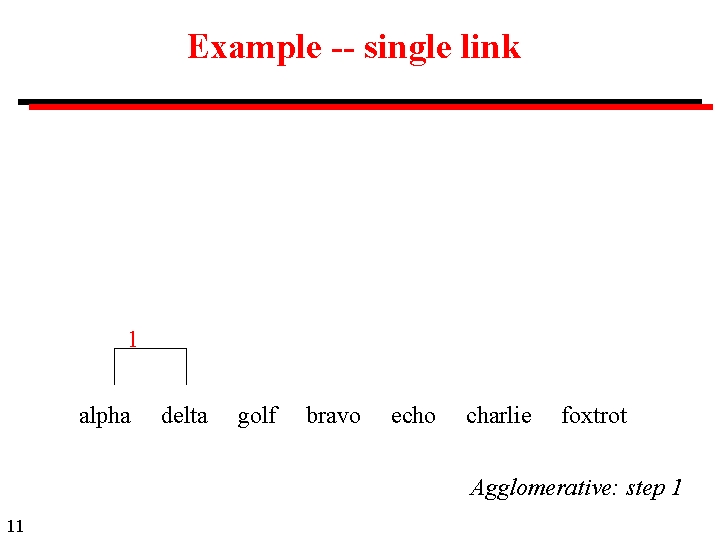

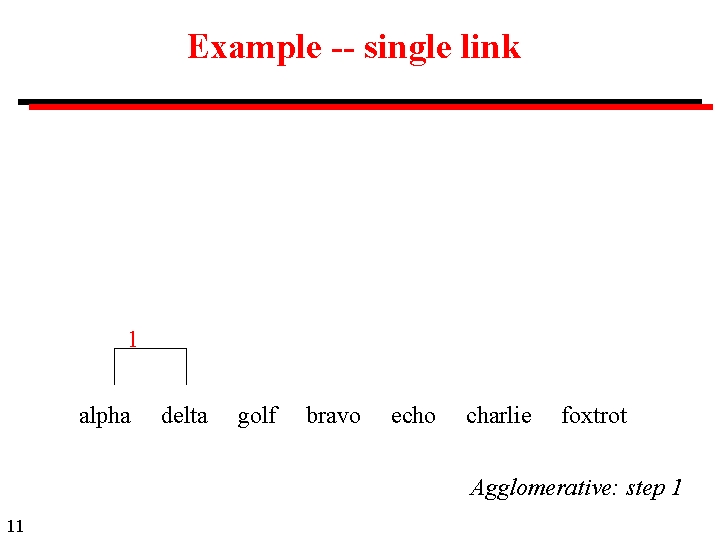

Example -- single link

Agglomerative: step 1

[Dendrogram after merge 1; leaf order: alpha, delta, golf, bravo, echo, charlie, foxtrot.]

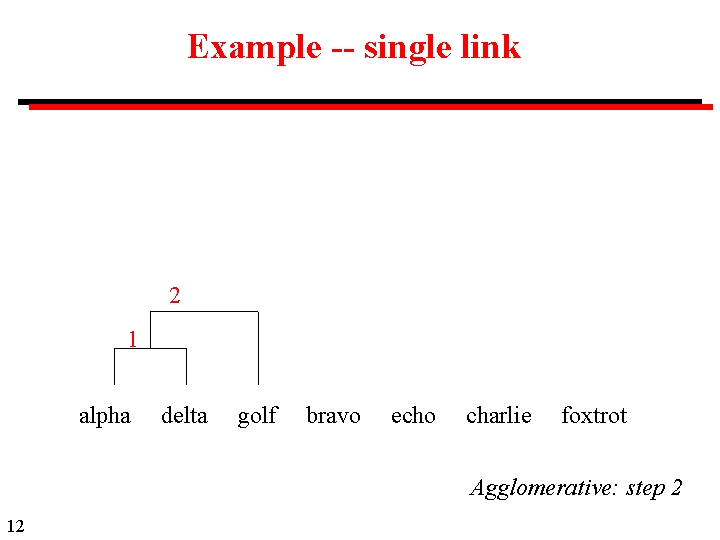

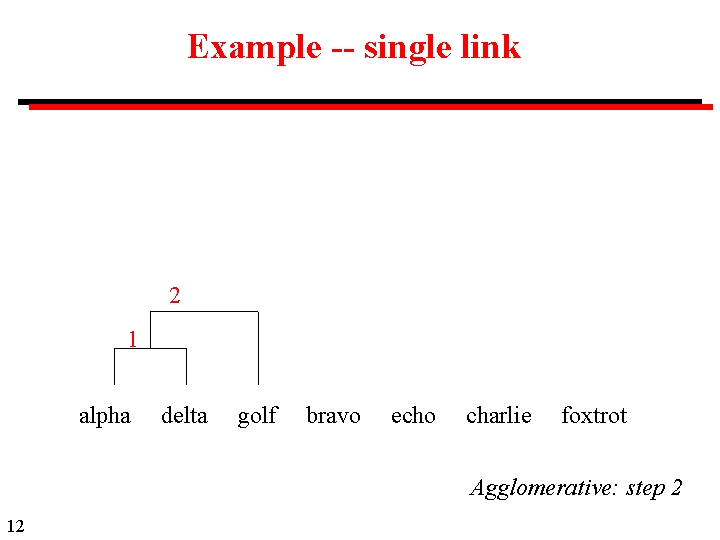

Example -- single link

Agglomerative: step 2

[Dendrogram after merges 1 and 2; leaf order: alpha, delta, golf, bravo, echo, charlie, foxtrot.]

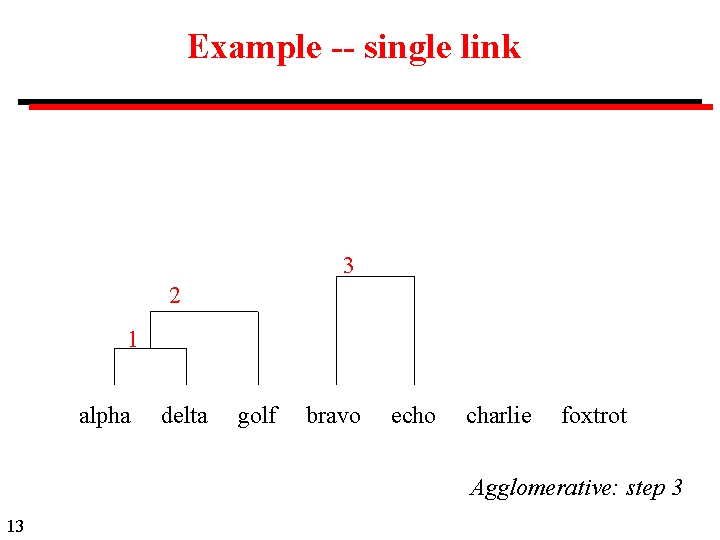

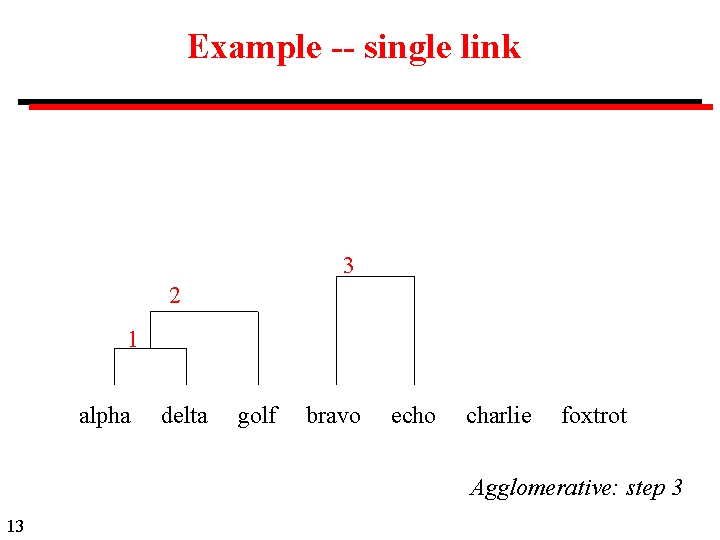

Example -- single link

Agglomerative: step 3

[Dendrogram after merges 1 to 3; leaf order: alpha, delta, golf, bravo, echo, charlie, foxtrot.]

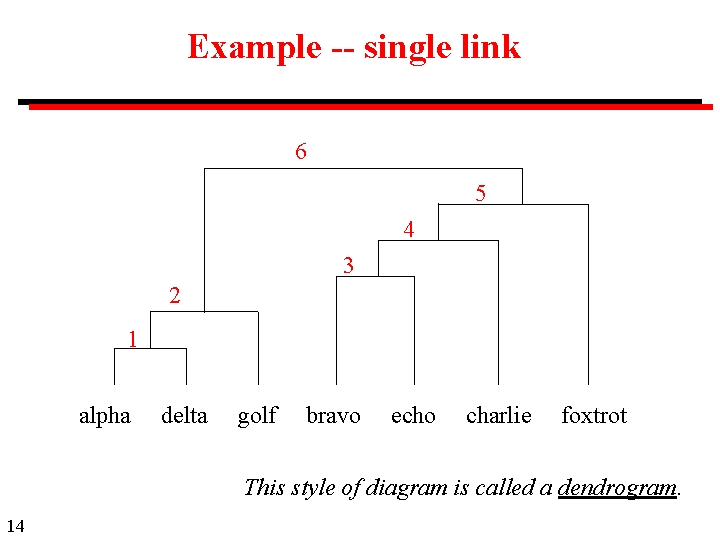

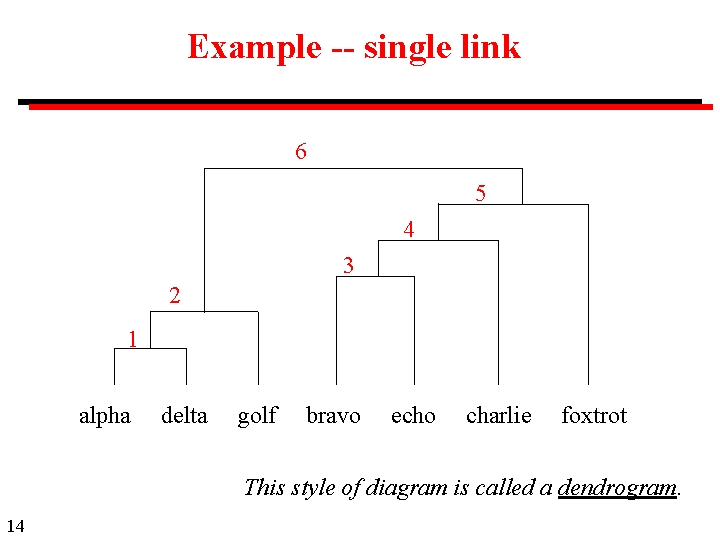

Example -- single link

[Complete dendrogram with merges 1 to 6; leaf order: alpha, delta, golf, bravo, echo, charlie, foxtrot.]

This style of diagram is called a dendrogram.

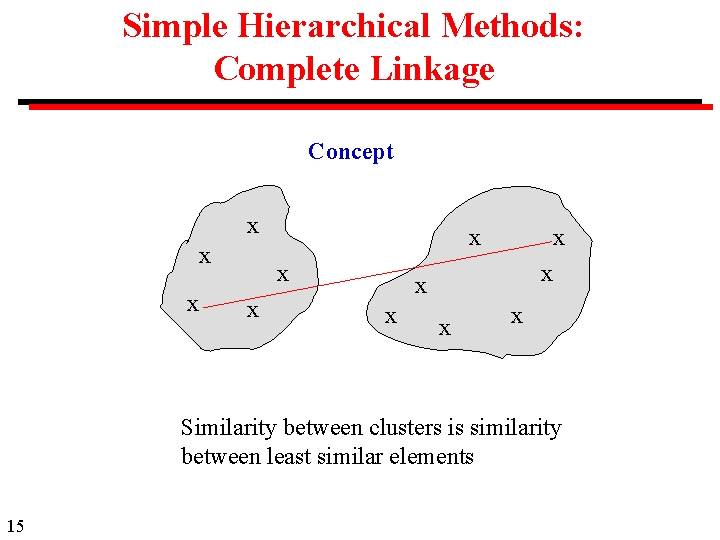

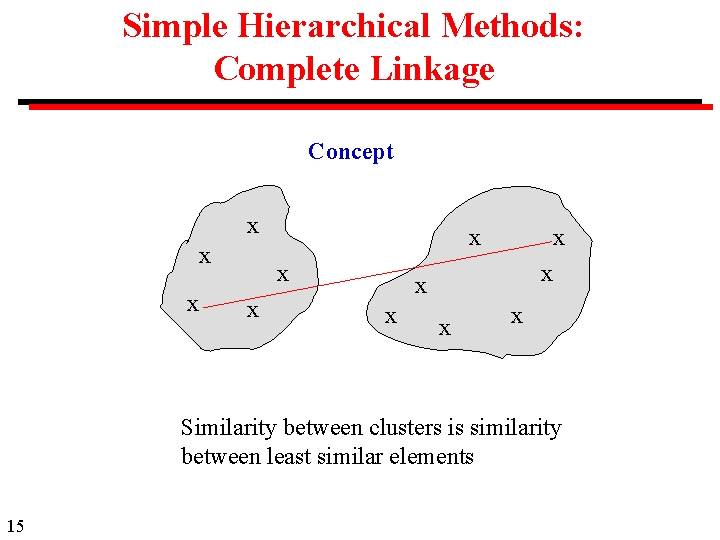

Simple Hierarchical Methods: Complete Linkage

Concept: Similarity between clusters is the similarity between their least similar elements.

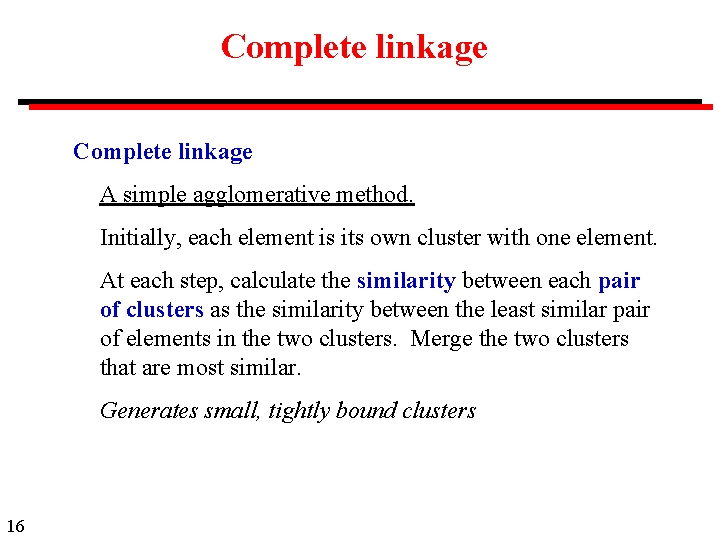

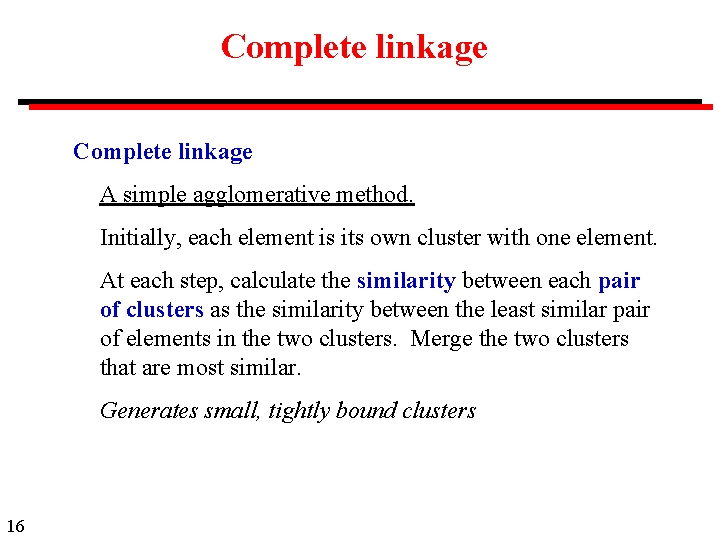

Complete linkage

A simple agglomerative method.

• Initially, each element is its own cluster with one element.
• At each step, calculate the similarity between each pair of clusters as the similarity between the least similar pair of elements in the two clusters.
• Merge the two clusters that are most similar.

Generates small, tightly bound clusters.
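As a rough sketch, complete linkage can be run on the seven example terms (a = alpha through g = golf) using the pairwise similarities from the term similarity matrix. The function names are hypothetical. Several pairs tie at 0.5, so the merge order depends on how ties are broken; here the scan order, with each merged cluster placed at the front of the list, is an arbitrary choice.

```python
from itertools import combinations

# Pairwise term similarities from the slides (a = alpha, ..., g = golf).
S = {"ab": .2, "ac": .2, "ad": .5, "ae": .2, "af": .33, "ag": .5,
     "bc": .5, "bd": .2, "be": .5, "bf": .4, "bg": .2,
     "cd": .2, "ce": .5, "cf": .4, "cg": .2,
     "de": .2, "df": .33, "dg": .5,
     "ef": .4, "eg": .2, "fg": .33}

def pair_sim(x, y):
    """Look up the similarity of two single-letter terms."""
    return S["".join(sorted(x + y))]

def complete_link(items):
    """Return the merges as (cluster_a, cluster_b, similarity) tuples."""
    clusters = [frozenset([x]) for x in items]
    merges = []
    while len(clusters) > 1:
        best = None
        for a, b in combinations(clusters, 2):
            # Complete linkage: similarity of the LEAST similar cross pair.
            s = min(pair_sim(x, y) for x in a for y in b)
            if best is None or s > best[2]:
                best = (a, b, s)
        a, b, s = best
        # Put the merged cluster first; this only affects tie-breaking.
        clusters = [a | b] + [c for c in clusters if c not in (a, b)]
        merges.append(("".join(sorted(a)), "".join(sorted(b)), s))
    return merges

merges = complete_link("abcdefg")
for step, (a, b, s) in enumerate(merges, 1):
    print(f"Step {step}: merge {{{a}}} and {{{b}}} at similarity {s}")
```

With this tie-breaking the first four merges are {a}+{d}, {a,d}+{g}, {b}+{c}, and {b,c}+{e}, all at similarity 0.5. A different tie-breaking rule could visit them in another order but would reach the same clusters {a,d,g}, {b,c,e}, {f} after four merges.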


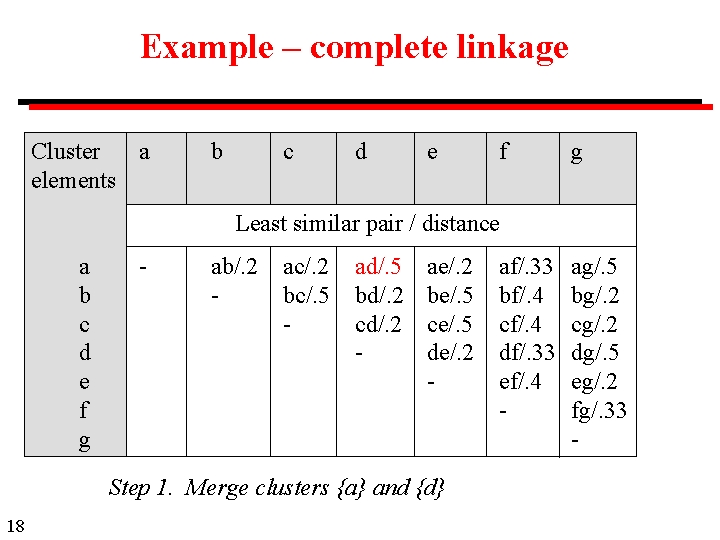

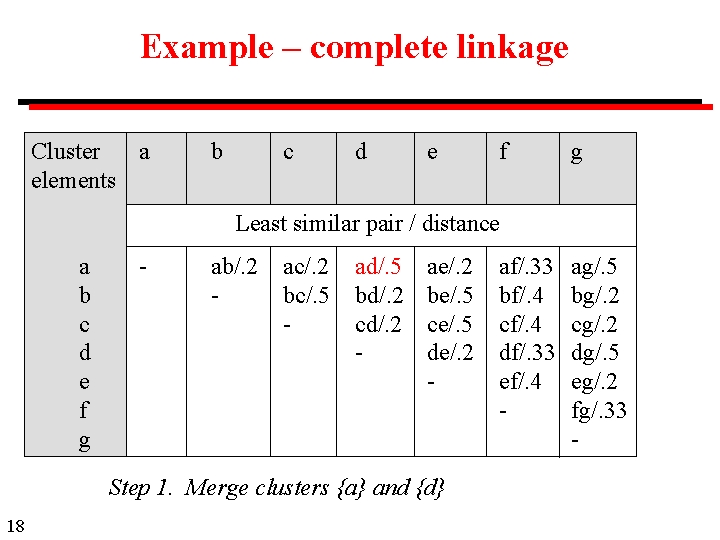

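Example – complete linkage

Clusters: {a}, {b}, {c}, {d}, {e}, {f}, {g}

Least similar pair / similarity:

| | a | b | c | d | e | f | g |
|---|---|---|---|---|---|---|---|
| a | - | | | | | | |
| b | ab/.2 | - | | | | | |
| c | ac/.2 | bc/.5 | - | | | | |
| d | ad/.5 | bd/.2 | cd/.2 | - | | | |
| e | ae/.2 | be/.5 | ce/.5 | de/.2 | - | | |
| f | af/.33 | bf/.4 | cf/.4 | df/.33 | ef/.4 | - | |
| g | ag/.5 | bg/.2 | cg/.2 | dg/.5 | eg/.2 | fg/.33 | - |

Step 1. Merge clusters {a} and {d}.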

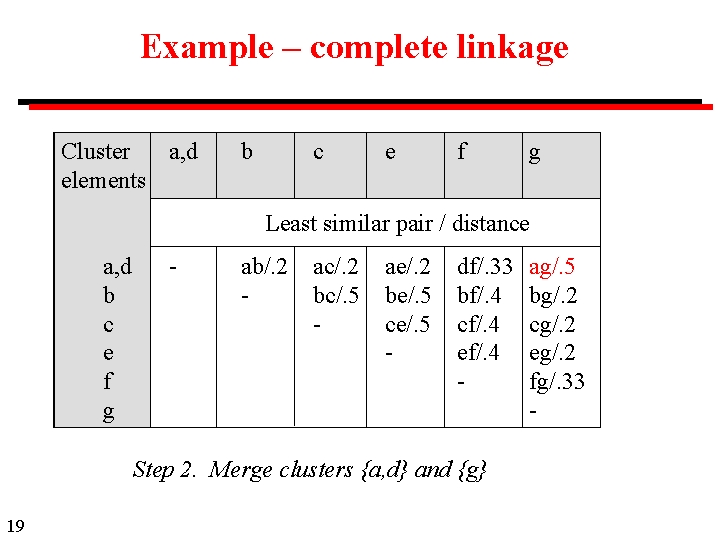

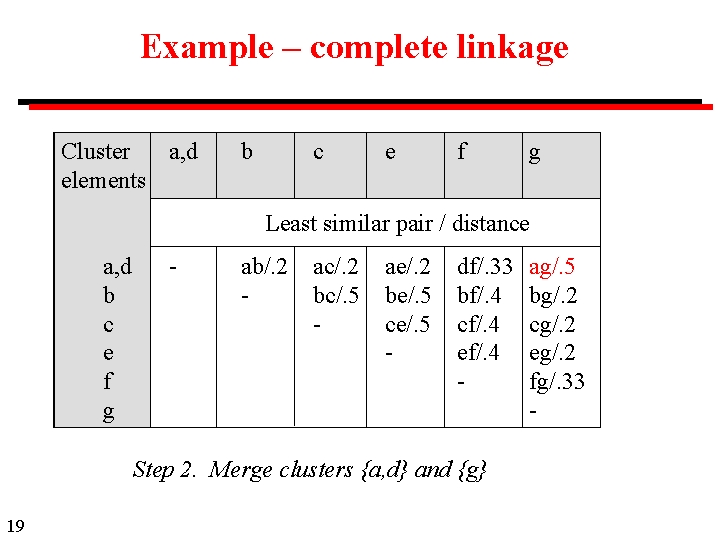

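Example – complete linkage

Clusters: {a, d}, {b}, {c}, {e}, {f}, {g}

Least similar pair / similarity:

| | a, d | b | c | e | f | g |
|---|---|---|---|---|---|---|
| a, d | - | | | | | |
| b | ab/.2 | - | | | | |
| c | ac/.2 | bc/.5 | - | | | |
| e | ae/.2 | be/.5 | ce/.5 | - | | |
| f | df/.33 | bf/.4 | cf/.4 | ef/.4 | - | |
| g | ag/.5 | bg/.2 | cg/.2 | eg/.2 | fg/.33 | - |

Step 2. Merge clusters {a, d} and {g}.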

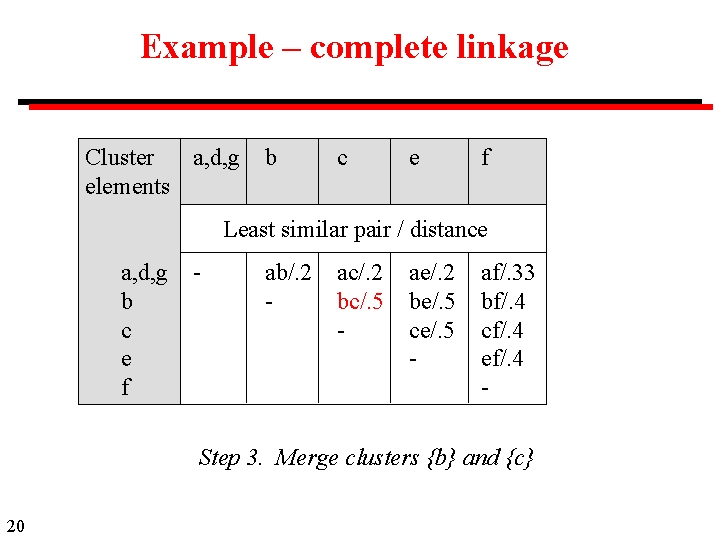

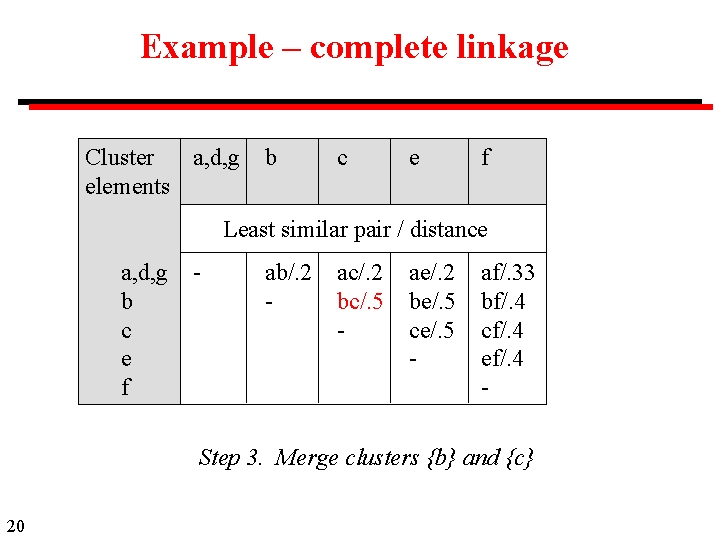

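Example – complete linkage

Clusters: {a, d, g}, {b}, {c}, {e}, {f}

Least similar pair / similarity:

| | a, d, g | b | c | e | f |
|---|---|---|---|---|---|
| a, d, g | - | | | | |
| b | ab/.2 | - | | | |
| c | ac/.2 | bc/.5 | - | | |
| e | ae/.2 | be/.5 | ce/.5 | - | |
| f | af/.33 | bf/.4 | cf/.4 | ef/.4 | - |

Step 3. Merge clusters {b} and {c}.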

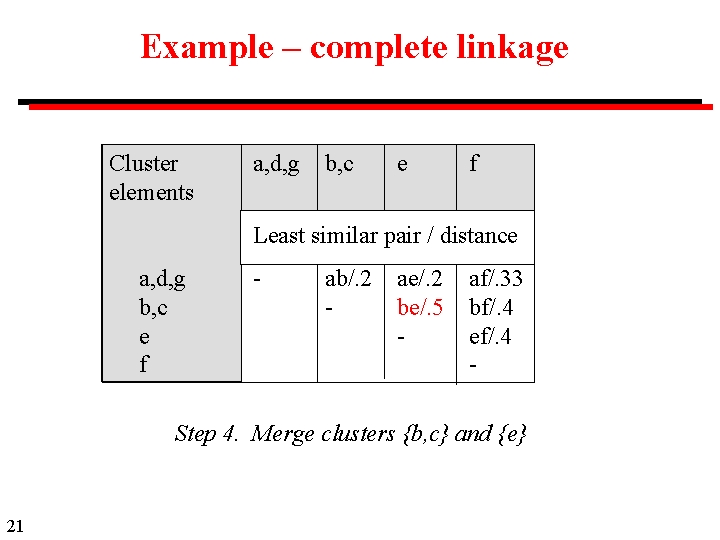

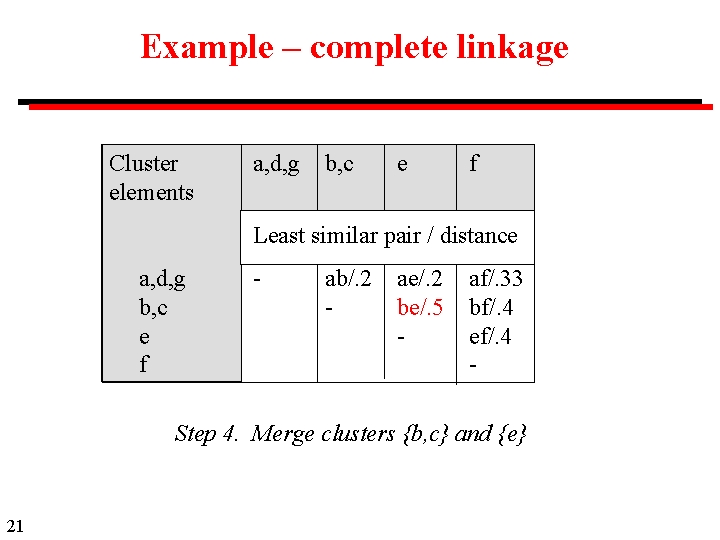

Example – complete linkage

Clusters: {a, d, g}, {b, c}, {e}, {f}

Least similar pair / similarity:

| | a, d, g | b, c | e | f |
|---|---|---|---|---|
| a, d, g | - | | | |
| b, c | ab/.2 | - | | |
| e | ae/.2 | be/.5 | - | |
| f | af/.33 | bf/.4 | ef/.4 | - |

Step 4. Merge clusters {b, c} and {e}.

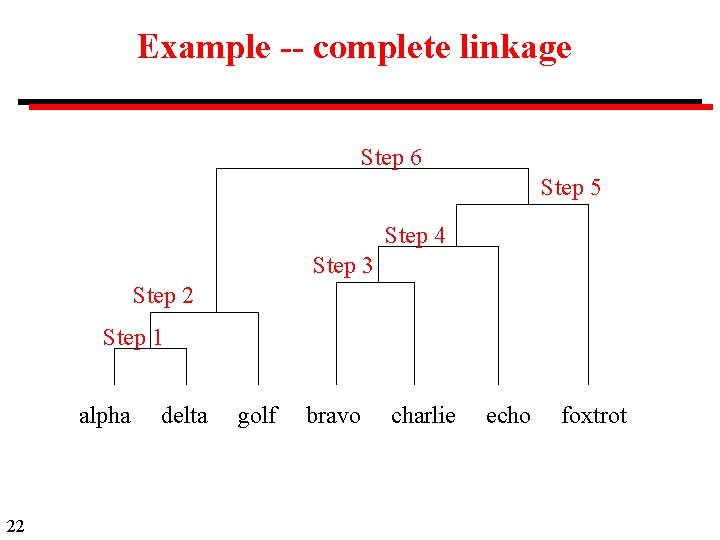

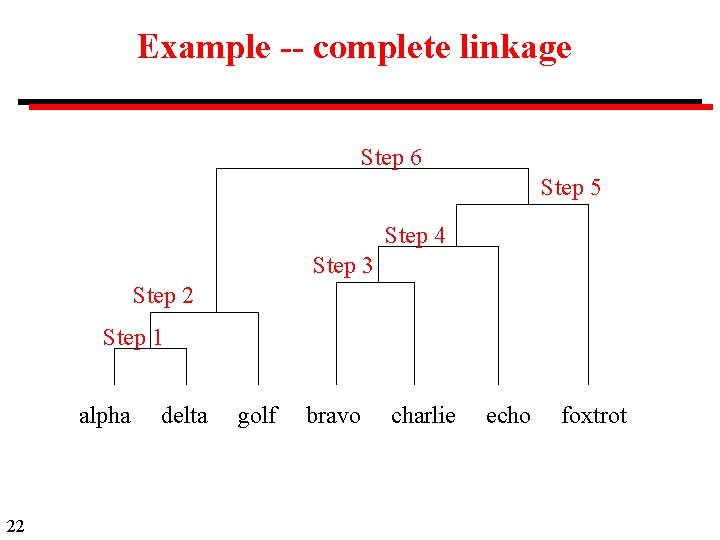

Example -- complete linkage

[Dendrogram with merge steps 1 to 6; leaf order: alpha, delta, golf, bravo, charlie, echo, foxtrot.]

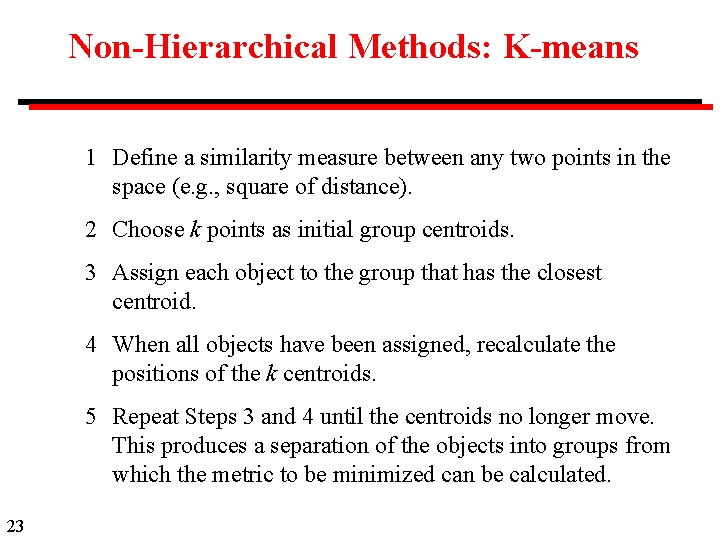

Non-Hierarchical Methods: K-means

1. Define a similarity measure between any two points in the space (e.g., square of distance).
2. Choose k points as initial group centroids.
3. Assign each object to the group that has the closest centroid.
4. When all objects have been assigned, recalculate the positions of the k centroids.
5. Repeat Steps 3 and 4 until the centroids no longer move.

This produces a separation of the objects into groups from which the metric to be minimized can be calculated.
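The five steps can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions: squared Euclidean distance as the measure, caller-supplied initial centroids, and made-up two-dimensional points. The function name `kmeans` and the `max_iter` safeguard are not from the lecture.

```python
def kmeans(points, centroids, max_iter=100):
    """Basic k-means on tuples of floats using squared Euclidean distance."""
    def sqdist(p, q):
        # Step 1: the measure between two points (square of distance).
        return sum((a - b) ** 2 for a, b in zip(p, q))

    for _ in range(max_iter):
        # Step 3: assign each point to the group with the closest centroid.
        groups = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: sqdist(p, centroids[i]))
            groups[nearest].append(p)
        # Step 4: recompute each centroid as the mean of its group
        # (an empty group keeps its old centroid).
        new = [tuple(sum(c) / len(g) for c in zip(*g)) if g else old
               for g, old in zip(groups, centroids)]
        # Step 5: stop when the centroids no longer move.
        if new == centroids:
            break
        centroids = new
    return centroids, groups

# Hypothetical data: two visibly separate groups of three points.
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0),
          (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
# Step 2: choose k = 2 initial centroids (here, two of the data points).
centroids, groups = kmeans(points, centroids=[(1.0, 1.0), (9.0, 8.0)])
print(centroids)
print(groups)
```

Different initial centroids can lead to a different final grouping, so in practice the procedure is often run from several starting points.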

K-means

• Iteration converges under a very general set of conditions.
• Results depend on the choice of the k initial centroids.
• Methods can be used to generate a sequence of solutions for k increasing from 1 to n. Note that, in general, the results will not be hierarchical.

Problems with cluster analysis in information retrieval

• Selection of attributes on which items are clustered
• Choice of similarity measure and algorithm
• Computational resources
• Assessing validity and stability of clusters
• Updating clusters as data changes
• Method for using the clusters in information retrieval

Example 1: Concept Spaces for Scientific Terms

Large-scale searches can only match terms specified by the user to terms appearing in documents. Cluster analysis can be used to provide information retrieval by concepts, rather than by terms.

Bruce Schatz, William H. Mischo, Timothy W. Cole, Joseph B. Hardin, Ann P. Bishop (University of Illinois), Hsinchun Chen (University of Arizona), Federating Diverse Collections of Scientific Literature, IEEE Computer, May 1996.

Concept Spaces: Methodology

Concept space: A similarity matrix based on co-occurrence of terms.

Approach: Use cluster analysis to generate "concept spaces" automatically, i.e., clusters of terms that embrace a single semantic concept.

Arrange concepts in a hierarchical classification.

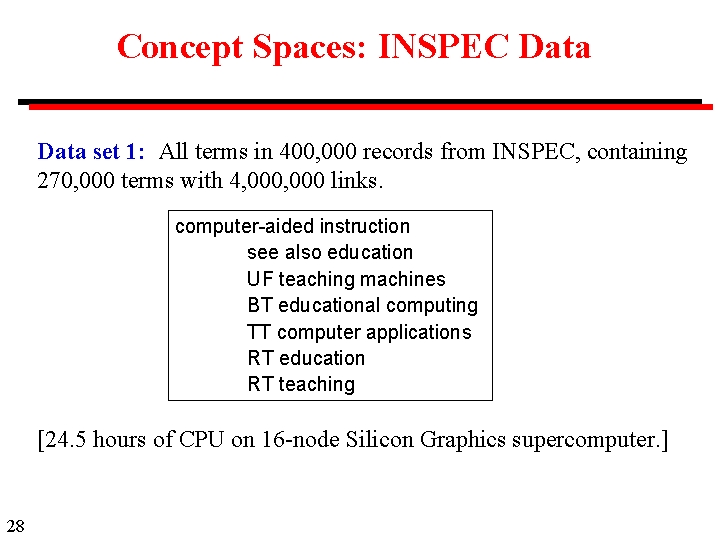

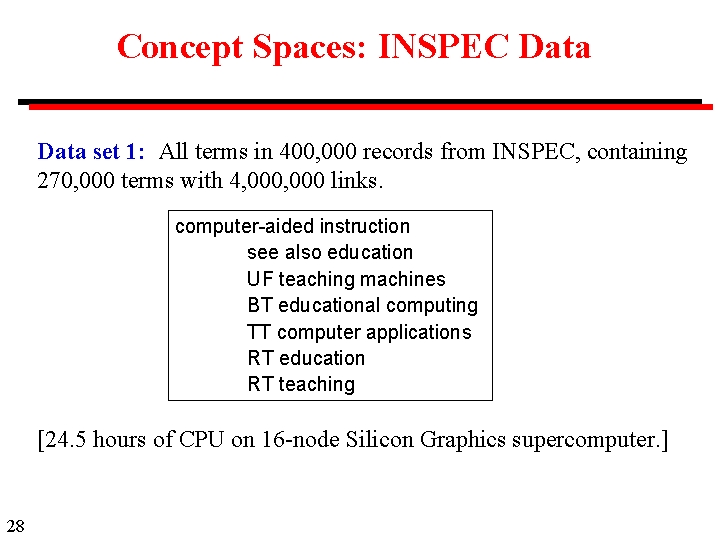

Concept Spaces: INSPEC

Data set 1: All terms in 400,000 records from INSPEC, containing 270,000 terms with 4,000 links.

computer-aided instruction
  see also education
  UF teaching machines
  BT educational computing
  TT computer applications
  RT education
  RT teaching

[24.5 hours of CPU on 16-node Silicon Graphics supercomputer.]

Concept Space: Compendex

Data set 2:

(a) 4,000 abstracts from the Compendex database covering all of engineering as the collection, partitioned along classification code lines into some 600 community repositories. [Four days of CPU on 64-processor Convex Exemplar.]

(b) In the largest experiment, 10,000 abstracts were divided into sets of 100,000 and the concept space for each set generated separately. The sets were selected by the existing classification scheme.

Objectives

• Semantic retrieval (using concept spaces for term suggestion)
• Semantic interoperability (vocabulary switching across subject domains)
• Semantic indexing (concept identification of document content)
• Information representation (information units for uniform manipulation)

Use of Concept Space: Term Suggestion

Future Use of Concept Space: Vocabulary Switching

"I'm a civil engineer who designs bridges. I'm interested in using fluid dynamics to compute the structural effects of wind currents on long structures. Ocean engineers who design undersea cables probably do similar computations for the structural effects of water currents on long structures. I want you [the system] to change my civil engineering fluid dynamics terms into the ocean engineering terms and search the undersea cable literature."

Example 2: Visual thesaurus for geographic images

Methodology:

• Divide images into small regions.
• Create a similarity measure based on properties of these images.
• Use cluster analysis tools to generate clusters of similar images.
• Provide alternative representations of clusters.

Marshall Ramsey, Hsinchun Chen, Bin Zhu, A Collection of Visual Thesauri for Browsing Large Collections of Geographic Images, May 1997. http://ai.bpa.arizona.edu/~mramsey/papers/visualThesaurus/visualThesaurus.html


Example 3: Cluster Analysis of Social Science Journals

In the social sciences, subject boundaries are unclear. Can citation patterns be used to develop criteria for matching information services to the interests of users?

W. Y. Arms and C. R. Arms, Cluster analysis used on social science citations, Journal of Documentation, 34(1), pp. 1-11, March 1978.

Methodology

Assumption: Two journals are close to each other if they are cited by the same source journals, with similar relative frequencies.

Sources of citations: Select a sample of n social science journals.

Citation matrix: Construct an m x n matrix in which the ijth element is the number of citations to journal i from journal j.

Normalization: All data was normalized so that the sum of the elements in each row is 1.
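A small sketch may make the normalization step concrete. The citation counts below are invented for illustration, and the helper names are hypothetical. Euclidean distance between the normalized rows is used as the closeness measure, which is an assumption here.

```python
import math

def normalize_rows(matrix):
    """Scale each row so its elements sum to 1 (all-zero rows are unchanged)."""
    out = []
    for row in matrix:
        total = sum(row)
        out.append([x / total for x in row] if total else list(row))
    return out

def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

# Hypothetical counts: rows are cited journals, columns are source journals.
citations = [
    [30, 10, 0],   # journal A
    [60, 20, 0],   # journal B: same citation profile as A, twice the volume
    [0, 5, 45],    # journal C: cited mostly by the third source
]

profiles = normalize_rows(citations)
print(euclidean(profiles[0], profiles[1]))  # A vs B
print(euclidean(profiles[0], profiles[2]))  # A vs C
```

After normalization, journals A and B have identical rows, so they are treated as close even though B is cited twice as often. This is the effect of comparing relative rather than absolute frequencies.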

Data

Pilot study: 5,000 citations from the 1970 volumes of 17 major journals from across the social sciences.

Criminology citations: Every fifth citation from a set of criminology journals (3 sets of data for 1950, 1960, 1970).

Main file (52,000 citations):

(a) Every citation from the 1970 volumes of the 48 most cited source journals in the pilot study.

(b) Every citation from the 1970 volumes of 47 randomly selected journals.

Sample sizes

| Sample | Source journals | Target journals |
|---|---|---|
| Pilot | 17 | 115 |
| Criminology: 1950 | 10 | 18 |
| Criminology: 1960 | 13 | 49 |
| Criminology: 1970 | 27 | 108 |
| Main file: ranked | 48 | 495 |
| Main file: random | 47 | 254 |

Excludes journals that are cited by only one source. These were assumed to cluster with the source.

Algorithms

The main analysis used a non-hierarchical method of E. M. L. Beale and M. G. Kendall based on Euclidean distance.

For comparison, 36 psychology journals were clustered using:

• single-linkage
• complete-linkage
• van Rijsbergen's algorithm

The Beale/Kendall algorithm and complete-linkage produced similar results. Single-linkage suffered from chaining. Van Rijsbergen's algorithm seeks very clear-cut clusters, which were not found in the data.

Non-hierarchical clusters

[Figure: Economics clusters in the pilot study.]

Non-hierarchical dendrogram

[Figure: Part of a dendrogram showing non-hierarchical structure.]

Conclusion

"The overall conclusion must be that cluster analysis is not a practical method of designing secondary services in the social sciences."

• Because of skewed distributions, very large amounts of data are required.
• Results are complex and difficult to interpret.
• Overlap between social sciences leads to results that are sensitive to the precise data and algorithms chosen.

The End

[Figure labels: Return objects, Return hits, Browse content, Scan results, Search index.]