CS 430 INFO 430 Information Retrieval Lecture 11

- Slides: 34

CS 430 / INFO 430 Information Retrieval Lecture 11 Evaluation of Retrieval Effectiveness 2

Course administration

Assignment 2: A minor revision of wording was made on Wednesday. For this assignment, submit a single program.

CS 430 / INFO 430 Information Retrieval Completion of Lecture 10

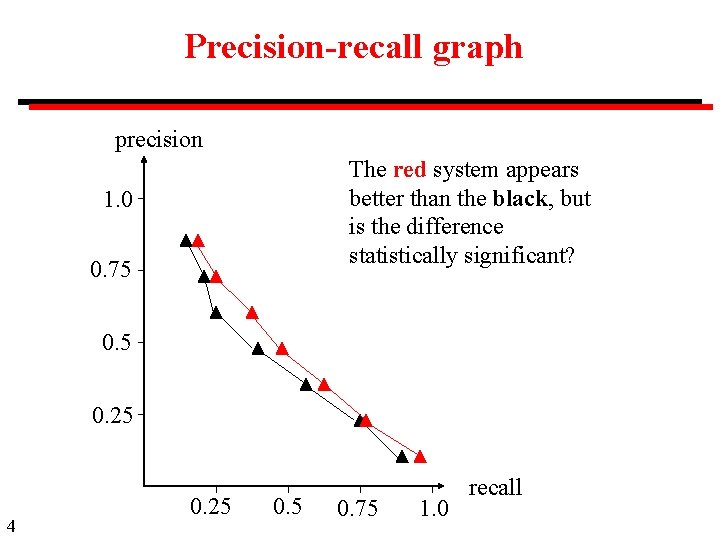

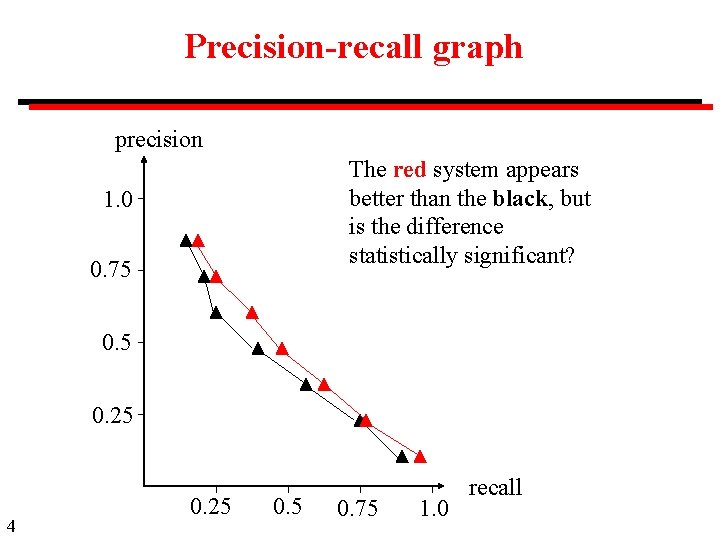

Precision-recall graph

[Figure: precision (vertical axis) plotted against recall (horizontal axis), both from 0 to 1.0, for a red system and a black system.]

The red system appears better than the black, but is the difference statistically significant?

Statistical tests

Suppose that a search is carried out on systems i and j. System i is superior to system j if, for all test cases,

recall(i) >= recall(j)
precision(i) >= precision(j)

In practice, we have data from a limited number of test cases. What conclusions can we draw?
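The superiority condition above can be written as a short check. This is a minimal sketch, assuming each test case yields one (recall, precision) pair per system; the name `is_superior` and the data layout are illustrative and do not come from the lecture.

```python
from typing import Sequence, Tuple

# One (recall, precision) pair per test case; this layout is an assumption.
Result = Tuple[float, float]


def is_superior(results_i: Sequence[Result], results_j: Sequence[Result]) -> bool:
    """Return True if system i is at least as good as system j on both
    recall and precision in every test case."""
    if len(results_i) != len(results_j):
        raise ValueError("both systems must be run on the same test cases")
    return all(
        recall_i >= recall_j and precision_i >= precision_j
        for (recall_i, precision_i), (recall_j, precision_j)
        in zip(results_i, results_j)
    )


# Hypothetical results for two test cases.
system_i = [(0.5, 0.5), (0.8, 0.6)]
system_j = [(0.4, 0.5), (0.8, 0.3)]
print(is_superior(system_i, system_j))
```

With only a limited number of test cases the condition rarely holds in every case, which is why statistical tests are needed.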

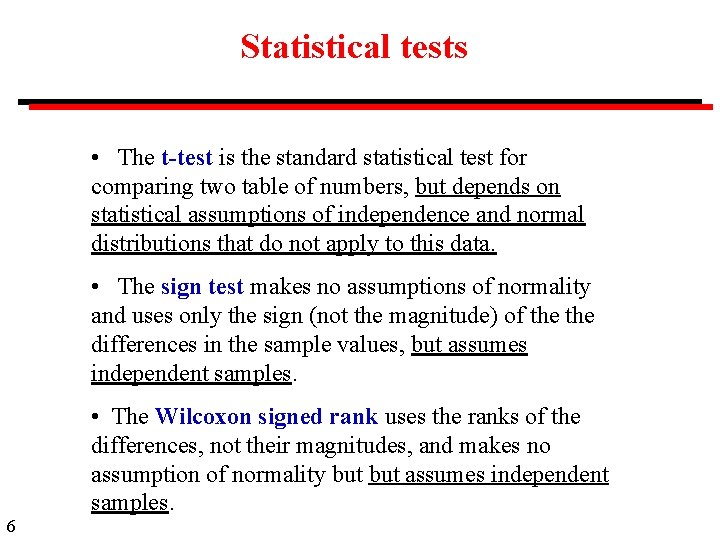

Statistical tests

• The t-test is the standard statistical test for comparing two tables of numbers, but depends on statistical assumptions of independence and normal distributions that do not apply to this data.
• The sign test makes no assumptions of normality and uses only the sign (not the magnitude) of the differences in the sample values, but assumes independent samples.
• The Wilcoxon signed-rank test uses the ranks of the differences, not their magnitudes, and makes no assumption of normality but assumes independent samples.
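The two non-parametric tests can be sketched for paired per-topic scores. This is an illustrative sketch, not the procedure used in the lecture or by TREC. The function names are hypothetical, zero differences are dropped, and the Wilcoxon function returns only the rank sums, which would then be compared against a table of critical values.

```python
from math import comb


def sign_test(a, b):
    """Two-sided sign test p-value for paired scores a[k], b[k].
    Only the sign of each difference is used; zero differences are dropped."""
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        return 1.0
    positives = sum(1 for d in diffs if d > 0)
    tail = min(positives, n - positives)
    # Binomial(n, 1/2) probability of a split at least this lopsided, doubled.
    p = 2 * sum(comb(n, i) for i in range(tail + 1)) / 2 ** n
    return min(1.0, p)


def wilcoxon_rank_sums(a, b):
    """Return (W_plus, W_minus): the sums of the ranks of the positive and
    negative differences, ranked by absolute value with tied values sharing
    their average rank. Ties are detected by exact equality."""
    diffs = sorted((x - y for x, y in zip(a, b) if x != y), key=abs)
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(diffs):
        j = i
        while j + 1 < len(diffs) and abs(diffs[j + 1]) == abs(diffs[i]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return w_plus, w_minus


# Hypothetical per-topic scores (scaled to integers) for two systems.
scores_i = [5, 7, 6, 9]
scores_j = [4, 4, 7, 5]
print(sign_test(scores_i, scores_j))
print(wilcoxon_rank_sums(scores_i, scores_j))
```

The sign test treats a difference of 1 and a difference of 4 identically. The rank sums give larger differences more weight without assuming they are normally distributed.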

CS 430 / INFO 430 Information Retrieval Lecture 11 Evaluation of Retrieval Effectiveness 2

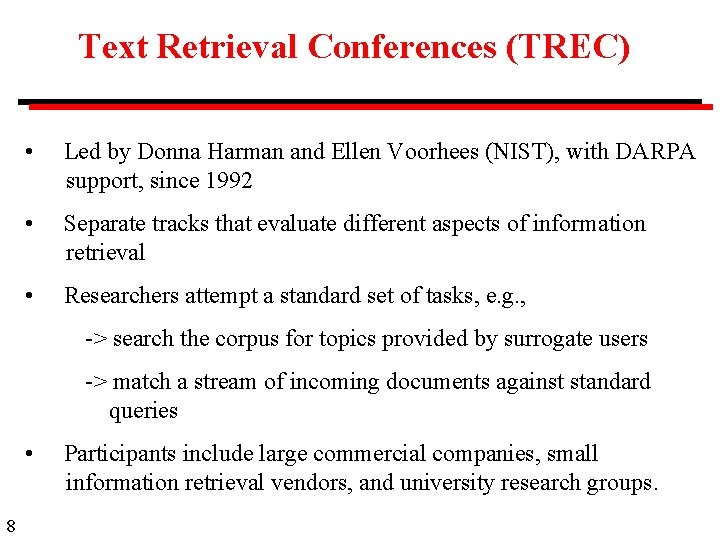

Text Retrieval Conferences (TREC)

• Led by Donna Harman and Ellen Voorhees (NIST), with DARPA support, since 1992
• Separate tracks that evaluate different aspects of information retrieval
• Researchers attempt a standard set of tasks, e.g.,
-> search the corpus for topics provided by surrogate users
-> match a stream of incoming documents against standard queries
• Participants include large commercial companies, small information retrieval vendors, and university research groups.

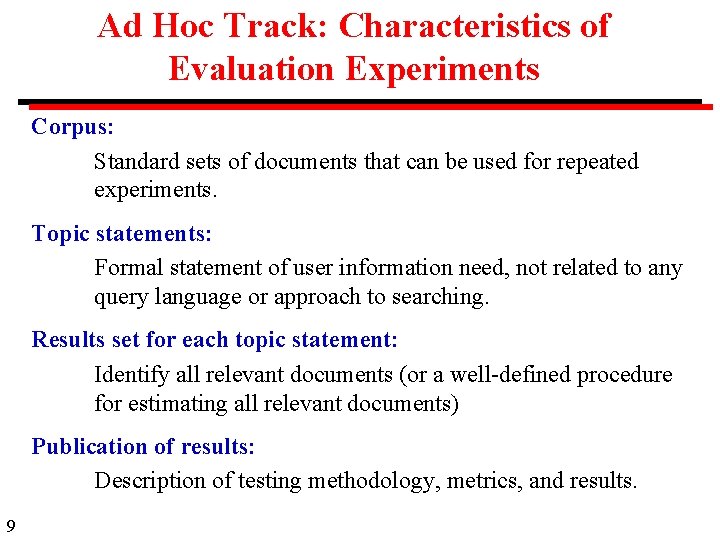

Ad Hoc Track: Characteristics of Evaluation Experiments

Corpus: Standard sets of documents that can be used for repeated experiments.

Topic statements: Formal statement of user information need, not related to any query language or approach to searching.

Results set for each topic statement: Identify all relevant documents (or a well-defined procedure for estimating all relevant documents).

Publication of results: Description of testing methodology, metrics, and results.

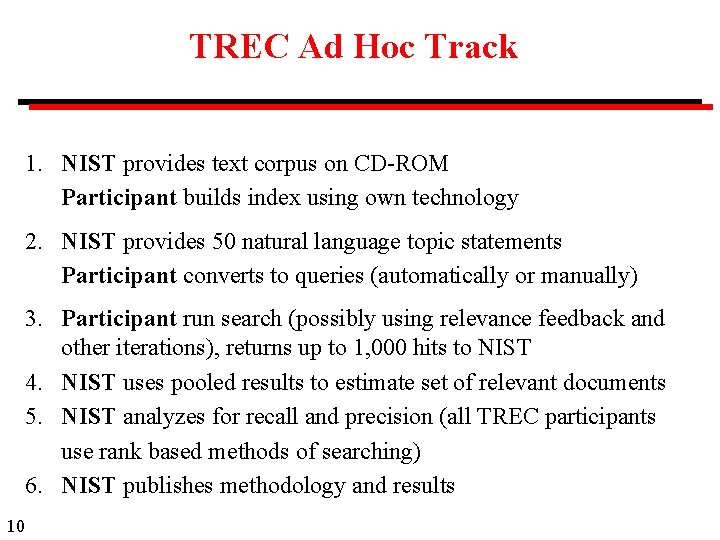

TREC Ad Hoc Track

1. NIST provides text corpus on CD-ROM. Participant builds index using own technology.
2. NIST provides 50 natural language topic statements. Participant converts to queries (automatically or manually).
3. Participant runs search (possibly using relevance feedback and other iterations), returns up to 1,000 hits to NIST.
4. NIST uses pooled results to estimate set of relevant documents.
5. NIST analyzes for recall and precision (all TREC participants use rank-based methods of searching).
6. NIST publishes methodology and results.
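Step 5 depends on computing recall and precision from a ranked list of hits and a set of documents judged relevant. The sketch below shows that calculation at a rank cutoff. The function name, the document identifiers, and the cutoff values are assumptions for illustration, and this is not the analysis program that NIST uses.

```python
def precision_recall_at(ranked, relevant, k):
    """Precision and recall over the top k documents of a ranked list.

    ranked   -- document ids in rank order, best first
    relevant -- set of document ids judged relevant for the topic
    k        -- rank cutoff
    """
    top = ranked[:k]
    hits = sum(1 for doc in top if doc in relevant)
    precision = hits / len(top) if top else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical ranked hits for one topic and its relevance judgments.
ranked_hits = ["d3", "d1", "d7", "d2"]
judged_relevant = {"d1", "d2", "d9"}

for cutoff in (1, 2, 4):
    print(cutoff, precision_recall_at(ranked_hits, judged_relevant, cutoff))
```

Evaluating the pair at successive cutoffs traces out the points of a precision-recall graph for the topic.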

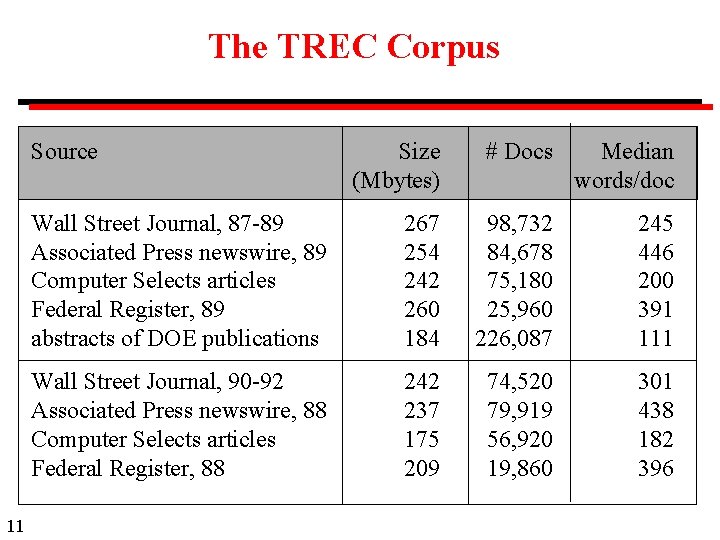

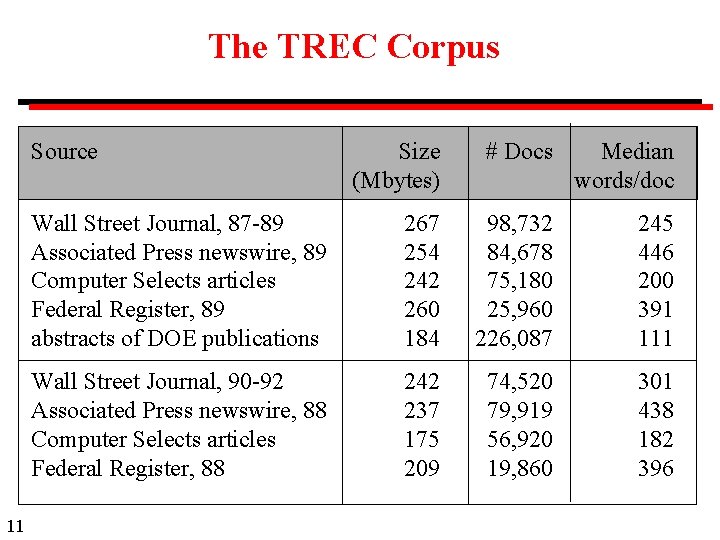

The TREC Corpus

| Source | Size (Mbytes) | # Docs | Median words/doc |
|---|---|---|---|
| Wall Street Journal, 87-89 | 267 | 98,732 | 245 |
| Associated Press newswire, 89 | 254 | 84,678 | 446 |
| Computer Selects articles | 242 | 75,180 | 200 |
| Federal Register, 89 | 260 | 25,960 | 391 |
| abstracts of DOE publications | 184 | 226,087 | 111 |

| Source | Size (Mbytes) | # Docs | Median words/doc |
|---|---|---|---|
| Wall Street Journal, 90-92 | 242 | 74,520 | 301 |
| Associated Press newswire, 88 | 237 | 79,919 | 438 |
| Computer Selects articles | 175 | 56,920 | 182 |
| Federal Register, 88 | 209 | 19,860 | 396 |

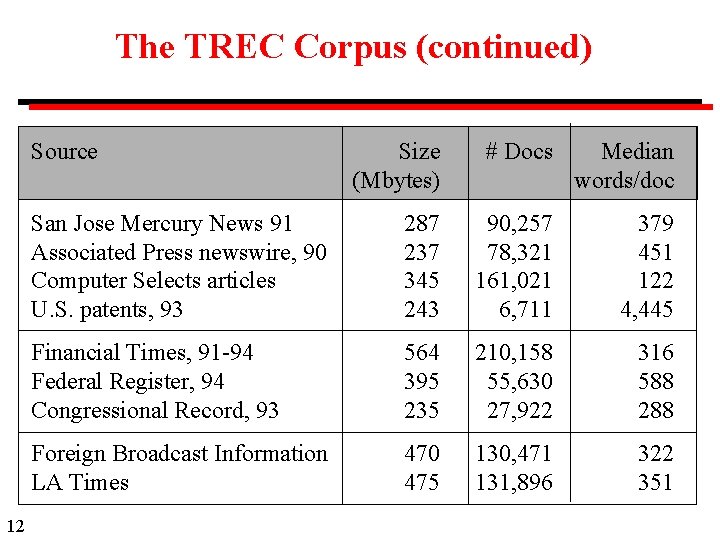

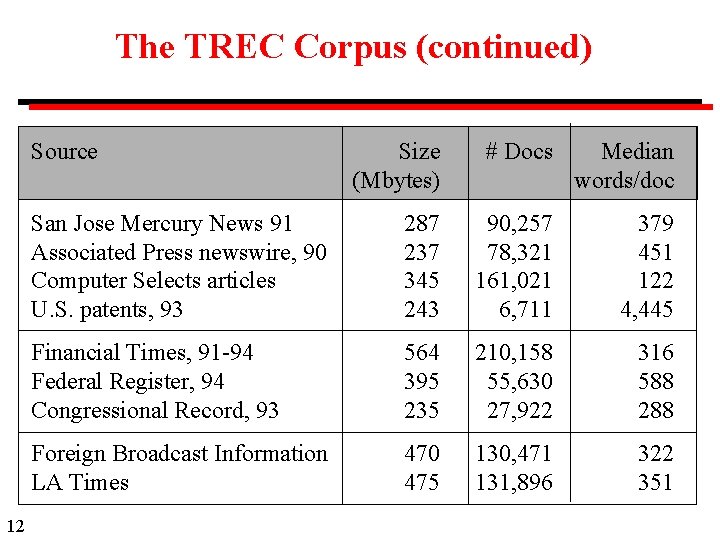

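The TREC Corpus (continued)

| Source | Size (Mbytes) | # Docs | Median words/doc |
|---|---|---|---|
| San Jose Mercury News 91 | 287 | 90,257 | 379 |
| Associated Press newswire, 90 | 237 | 78,321 | 451 |
| Computer Selects articles | 345 | 161,021 | 122 |
| U.S. patents, 93 | 243 | 6,711 | 4,445 |

| Source | Size (Mbytes) | # Docs | Median words/doc |
|---|---|---|---|
| Financial Times, 91-94 | 564 | 210,158 | 316 |
| Federal Register, 94 | 395 | 55,630 | 588 |
| Congressional Record, 93 | 235 | 27,922 | 288 |

| Source | Size (Mbytes) | # Docs | Median words/doc |
|---|---|---|---|
| Foreign Broadcast Information | 470 | 130,471 | 322 |
| LA Times | 475 | 131,896 | 351 |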

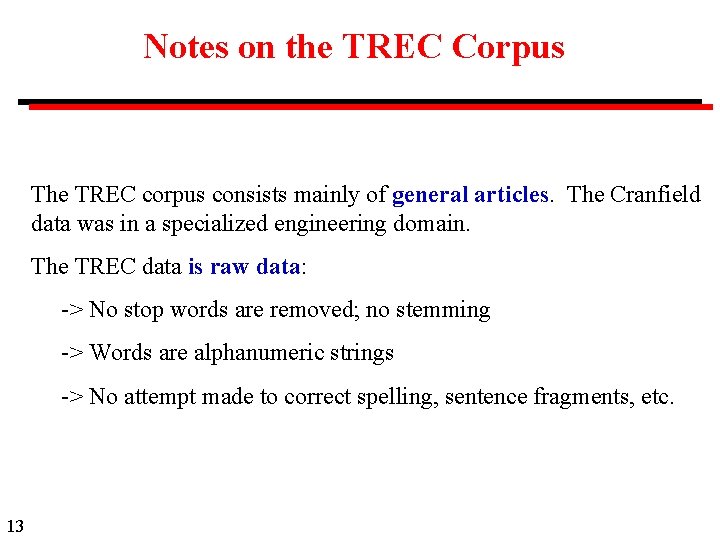

Notes on the TREC Corpus

The TREC corpus consists mainly of general articles. The Cranfield data was in a specialized engineering domain.

The TREC data is raw data:
-> No stop words are removed; no stemming
-> Words are alphanumeric strings
-> No attempt made to correct spelling, sentence fragments, etc.

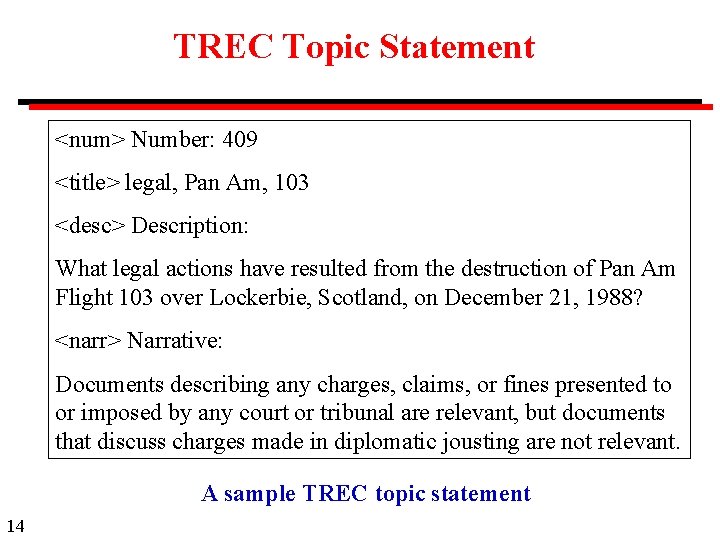

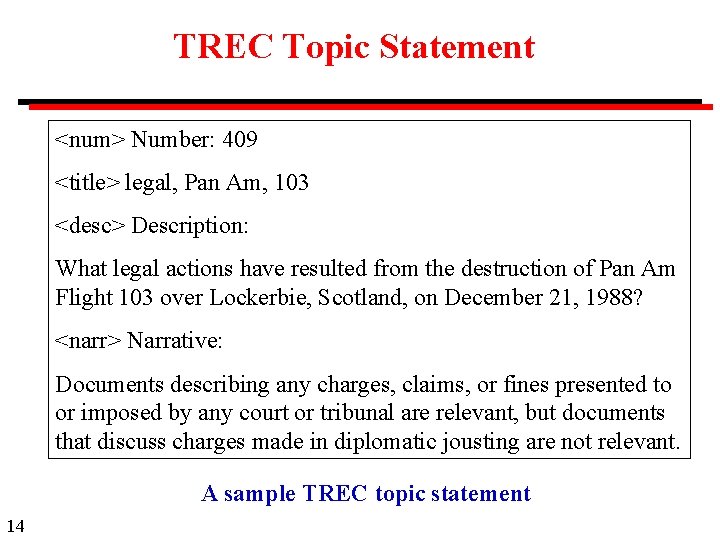

TREC Topic Statement

<num> Number: 409
<title> legal, Pan Am, 103
<desc> Description: What legal actions have resulted from the destruction of Pan Am Flight 103 over Lockerbie, Scotland, on December 21, 1988?
<narr> Narrative: Documents describing any charges, claims, or fines presented to or imposed by any court or tribunal are relevant, but documents that discuss charges made in diplomatic jousting are not relevant.

A sample TREC topic statement
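A participant who converts topic statements to queries automatically first has to split a statement into its fields. The sketch below does that for the four tags shown in the sample. It assumes each field runs from its tag to the next tag, and `parse_topic` is a hypothetical helper, not part of any TREC software.

```python
import re

SAMPLE_TOPIC = """<num> Number: 409
<title> legal, Pan Am, 103
<desc> Description: What legal actions have resulted from the destruction of
Pan Am Flight 103 over Lockerbie, Scotland, on December 21, 1988?
<narr> Narrative: Documents describing any charges, claims, or fines presented
to or imposed by any court or tribunal are relevant, but documents that discuss
charges made in diplomatic jousting are not relevant."""

TAGS = "num|title|desc|narr"


def parse_topic(text):
    """Split a topic statement into a dict keyed by tag name."""
    fields = {}
    # A field body runs from its tag up to the next tag or the end of the text.
    pattern = rf"<({TAGS})>(.*?)(?=<(?:{TAGS})>|\Z)"
    for tag, body in re.findall(pattern, text, flags=re.S):
        # Drop the label that repeats the tag, e.g. "Number:" or "Narrative:".
        body = re.sub(r"^\s*(?:Number|Description|Narrative):", "", body)
        fields[tag] = " ".join(body.split())  # collapse line breaks and spaces
    return fields


topic = parse_topic(SAMPLE_TOPIC)
print(topic["num"], "|", topic["title"])
```

A simple automatic query could then be built from the `title` words alone, or from `title` and `desc` together. The narrative is written mainly to guide the human relevance assessor.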

Relevance Assessment: TREC

Problem: Too many documents to inspect each one for relevance.

Solution: For each topic statement, a pool of potentially relevant documents is assembled, using the top 100 ranked documents from each participant.

The human expert who set the query looks at every document in the pool and determines whether it is relevant.

Documents outside the pool are not examined.

In a TREC-8 example, with 71 participants:
7,100 documents in the pool
1,736 unique documents (eliminating duplicates)
94 judged relevant
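The pooling procedure amounts to taking a set union over the top-ranked documents of every participant. The sketch below is illustrative only. The `build_pool` name and the `runs` mapping from participant to ranked document ids are assumptions, and the tiny example uses a depth of 2 rather than 100.

```python
def build_pool(runs, depth=100):
    """Union of the top `depth` documents from each participant's ranked run.

    runs -- dict mapping a participant name to a ranked list of document ids
    """
    pool = set()
    for ranked in runs.values():
        pool.update(ranked[:depth])  # duplicates across runs collapse in the set
    return pool


# Hypothetical runs from three participants for one topic.
runs = {
    "site_a": ["d1", "d2", "d3"],
    "site_b": ["d2", "d4", "d1"],
    "site_c": ["d1", "d4", "d5"],
}
pool = build_pool(runs, depth=2)
print(sorted(pool))
```

In the TREC-8 example, 71 participants contributing 100 documents each give 71 × 100 = 7,100 pool entries, which collapse to 1,736 unique documents for the assessor to judge.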

Some other TREC tracks (not all tracks offered every year)

Cross-Language Track: Retrieve documents written in different languages using topics that are in one language.

Filtering Track: In a stream of incoming documents, retrieve those documents that match the user's interest as represented by a query. Adaptive filtering modifies the query based on relevance feedback.

Genome Track: Study the retrieval of genomic data: gene sequences and supporting documentation, e.g., research papers, lab reports, etc.

Some Other TREC Tracks (continued)

HARD Track: High accuracy retrieval, leveraging additional information about the searcher and/or the search context.

Question Answering Track: Systems that answer questions, rather than return documents.

Video Track: Content-based retrieval of digital video.

Web Track: Search techniques and repeatable experiments on Web documents.

A Cornell Footnote

The TREC analysis uses a program developed by Chris Buckley, who spent 17 years at Cornell before completing his Ph.D. in 1995.

Buckley has continued to maintain the SMART software and has been a participant at every TREC conference. SMART has been used as the basis against which other systems are compared.

During the early TREC conferences, the tuning of SMART with the TREC corpus led to steady improvements in retrieval efficiency, but after about TREC-5 a plateau was reached.

TREC-8, in 1999, was the final year for the ad hoc experiment.

Reading

Ellen M. Voorhees and Donna Harman, TREC: Experiment and Evaluation in Information Retrieval. MIT Press, 2005.

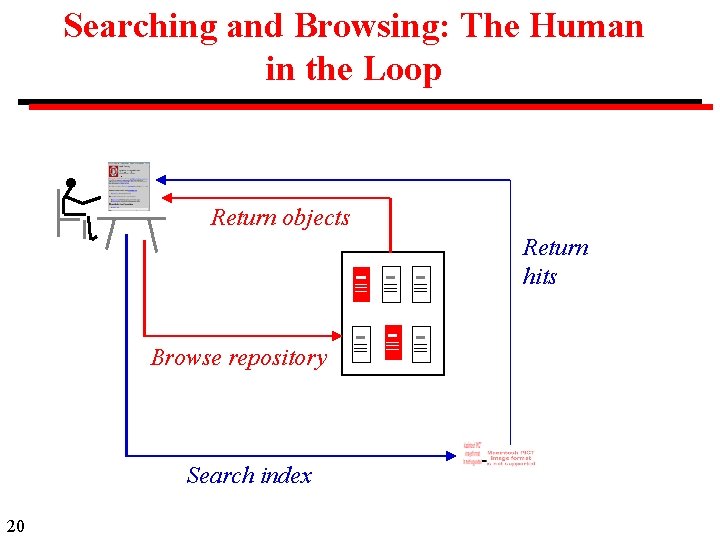

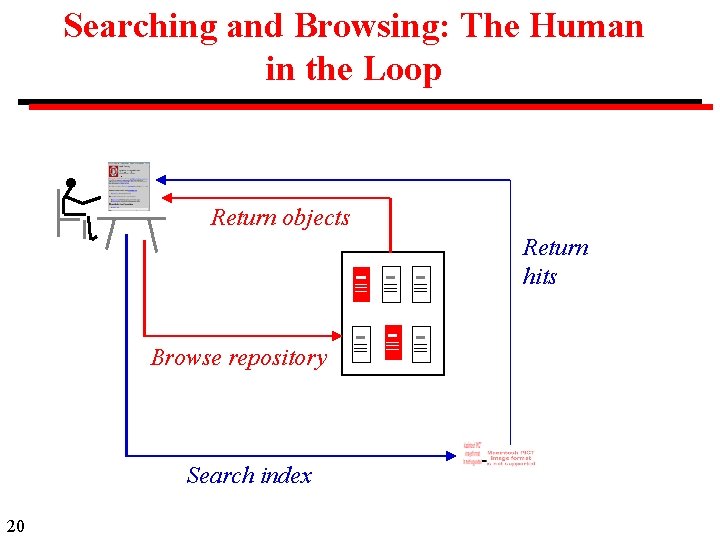

Searching and Browsing: The Human in the Loop

[Diagram labels: Search index, Return hits, Browse repository, Return objects]

Information Discovery: Examples and Measures of Success

People have many reasons to look for information:

• Known item
Where will I find the wording of the US Copyright Act?
Success: A document from a reliable source that has the current wording of the act.

• Fact
What is the capital of Barbados?
Success: The name of the capital from an up-to-date reliable source.

Information Discovery: Examples and Measures of Success (continued)

People have many reasons to look for information:

• Introduction or overview
How do diesel engines work?
Success: A document that is technically correct, of the appropriate length and technical depth for the audience.

• Related information (annotation)
Is there a review of this item?
Success: A review, if one exists, written by a competent author.

Information Discovery: Examples and Measures of Success (continued)

People have many reasons to look for information:

• Comprehensive search
What is known of the effects of global warming on hurricanes?
Success: A list of all research papers on this topic.

Historically, comprehensive search was the application that motivated information retrieval. It is important in such areas as medicine, law, and academic research.

The standard methods for evaluating search services are appropriate only for comprehensive search.

Evaluation: User criteria

System-centered and user-centered evaluation
-> Is user satisfied?
-> Is user successful?

System efficiency
-> What efforts are involved in carrying out the search?

Suggested criteria (none very satisfactory)
• recall and precision
• response time
• user effort
• form of presentation
• content coverage

The TREC Interactive Track has tried several experimental approaches:

• Manual query construction with interactive feedback and query modification, with routing (TREC-1, 2, and 3) and ad hoc (TREC-4)
• Aspectual recall with inter-system comparison (TREC-5 and 6)
• Aspectual recall without inter-system comparison (TREC-7 and 8)
• Fact-finding without inter-system comparison (TREC-9 and later)

TREC-6 Interactive Track

Aspectual recall: Retrieve as many relevant documents as possible in 20 minutes, so that taken together they cover as many different aspects of the task as possible.

Topics: Six topics from the ad hoc track.

Assessment: Documents from all participants pooled and aspects matrix of participant success created by NIST staff.

Experimental design: Order of searching and system used followed standard Latin square block design.

Control system: A baseline system, ZPRISE, used by all participants.
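One way to score the aspectual recall task is the fraction of a topic's known aspects that are covered by at least one document the searcher saved. The sketch below assumes that definition and a simple representation of the aspects matrix as a mapping from document id to the set of aspects it covers. The names are hypothetical, and the exact NIST scoring procedure is not given in these slides.

```python
def aspectual_recall(saved_docs, doc_aspects, all_aspects):
    """Fraction of known aspects covered by the saved documents.

    saved_docs  -- document ids the searcher saved within the time limit
    doc_aspects -- dict: document id -> set of aspects that document covers
    all_aspects -- set of all aspects identified for the topic
    """
    if not all_aspects:
        return 0.0
    covered = set()
    for doc in saved_docs:
        # Documents with no judged aspects contribute nothing.
        covered |= doc_aspects.get(doc, set())
    return len(covered & all_aspects) / len(all_aspects)


# Hypothetical aspects matrix for one topic with four aspects.
doc_aspects = {"d1": {"a", "b"}, "d2": {"b"}, "d3": {"c"}}
all_aspects = {"a", "b", "c", "d"}
print(aspectual_recall(["d1", "d2"], doc_aspects, all_aspects))
```

Saving a second document that repeats an already covered aspect does not raise the score. The measure rewards breadth of coverage, not the number of relevant documents found.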

TREC-6 Interactive Track

Analysis: Use of a standard statistical experimental design allowed analysis of results using analysis of variance. Topic and searcher are considered random effects and the system a fixed effect.

Results: Significant effects of topic, searcher, and system within site. Results between sites were not significant.

Observations on methodology: Even a small study (six topics) was a major commitment, including training of subjects, questionnaires, etc.

D-Lib Working Group on Metrics

DARPA-funded attempt to develop a TREC-like approach to digital libraries (1997) with a human in the loop.

"This Working Group is aimed at developing a consensus on an appropriate set of metrics to evaluate and compare the effectiveness of digital libraries and component technologies in a distributed environment. Initial emphasis will be on (a) information discovery with a human in the loop, and (b) retrieval in a heterogeneous world."

Very little progress made.

See: http://www.dlib.org/metrics/public/index.html

MIRA

Evaluation Frameworks for Interactive Multimedia Information Retrieval Applications

European study, 1996-99

Chair: Keith Van Rijsbergen, Glasgow University

Expertise:
-> Multi Media Information Retrieval
-> Human Computer Interaction
-> Case Based Reasoning
-> Natural Language Processing

Some MIRA Aims

• Bring the user back into the evaluation process.
• Understand the changing nature of Information Retrieval tasks and their evaluation.
• Evaluate traditional evaluation methodologies.
• Understand how interaction affects evaluation.
• Understand how new media affects evaluation.
• Make evaluation methods more practical for smaller groups.

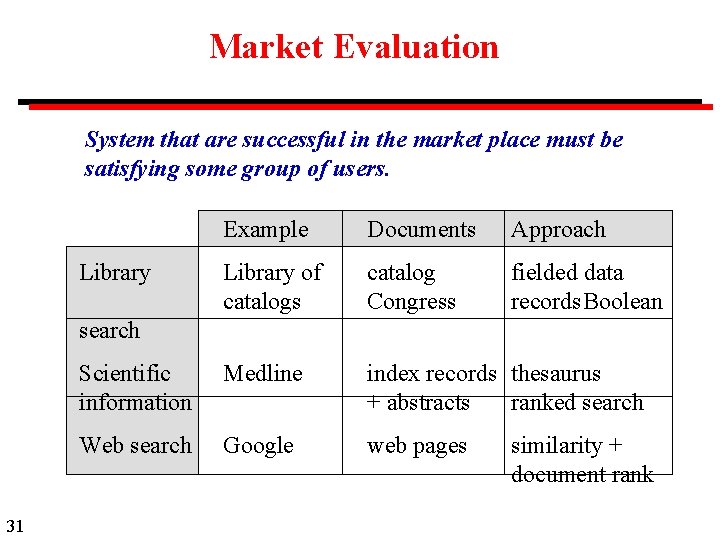

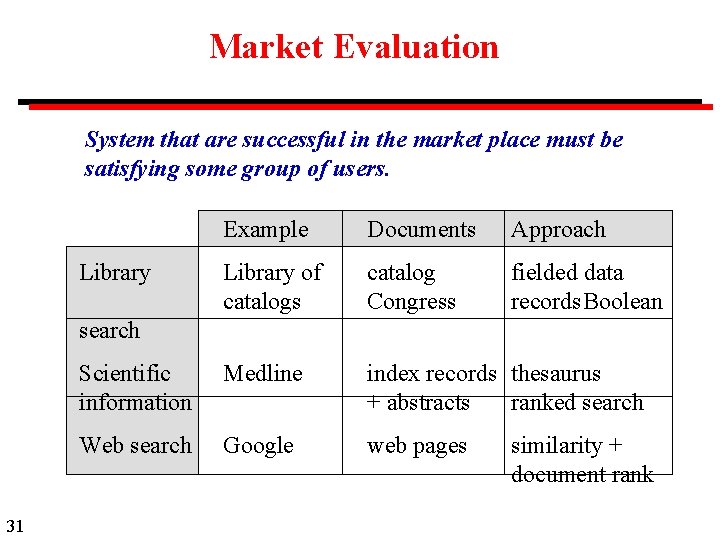

Market Evaluation

Systems that are successful in the marketplace must be satisfying some group of users.

| | Example | Documents | Approach |
|---|---|---|---|
| Library catalogs | Library of Congress | catalog records | fielded data, Boolean search |
| Scientific information | Medline | index records + abstracts | thesaurus, ranked search |
| Web search | Google | web pages | similarity + document rank |

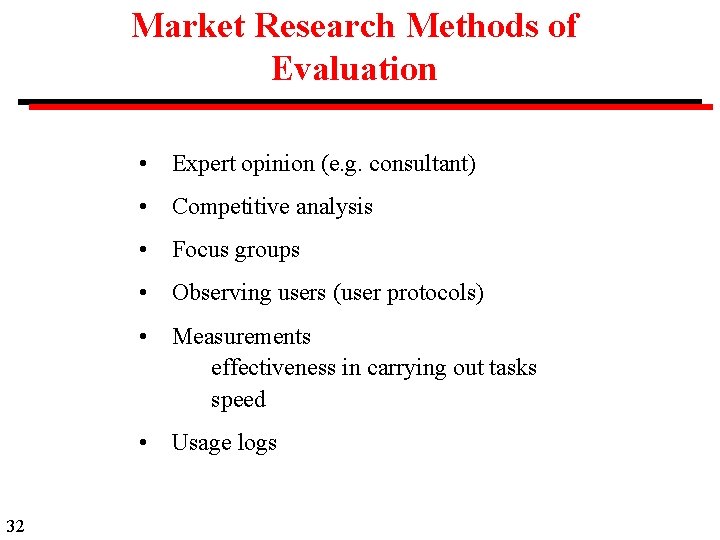

Market Research Methods of Evaluation

• Expert opinion (e.g., consultant)
• Competitive analysis
• Focus groups
• Observing users (user protocols)
• Measurements
-> effectiveness in carrying out tasks
-> speed
• Usage logs

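Market Research Methods

[Table: the methods Expert opinions, Competitive analysis, Focus groups, Observing users, Measurements, and Usage logs (rows) against the development stages Initial, Mock-up, Prototype, and Production (columns). The cell entries are not recoverable.]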

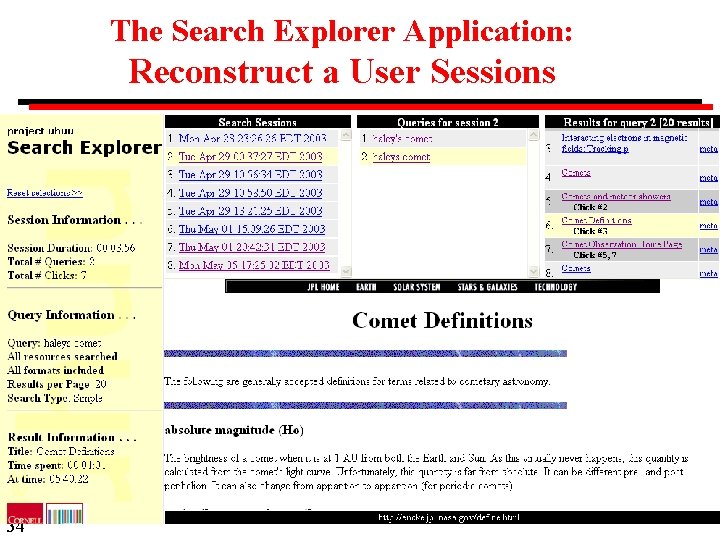

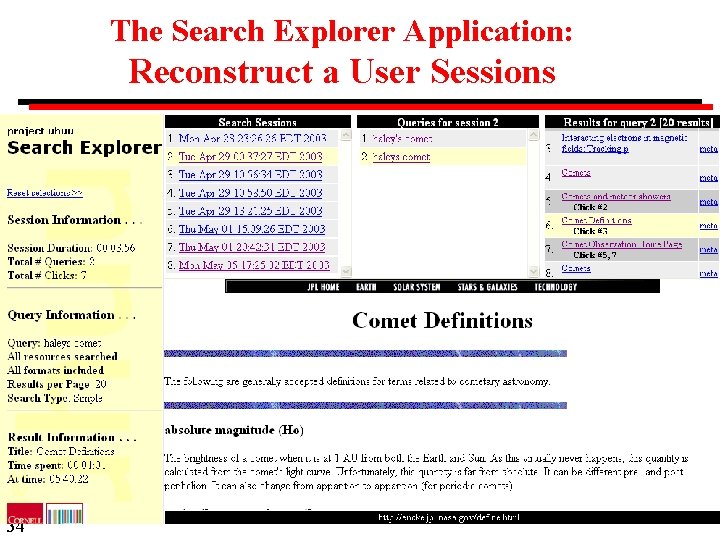

The Search Explorer Application: Reconstruct a User Session