CS 430 INFO 430 Information Retrieval Lecture 6

- Slides: 21

CS 430 / INFO 430 Information Retrieval, Lecture 6: Vector Methods 2

Course Administration

New Teaching Assistant
• Veselin (Ves) Stoyanov <ves@cs.cornell.edu>

Wednesday Classes
• The grading for participation allows you to miss a couple of classes.
• The material in the discussion classes will be part of the examinations.

Course Administration

Assignment 1
• Programs must run from the command prompt in CSUGLAB.
• Assignment 1 is an individual assignment. Discuss the concepts and the choice of methods with your colleagues, but the actual programs and report must be individual work.
• Since the volume of data is small, data structures that are implemented entirely within memory are expected.

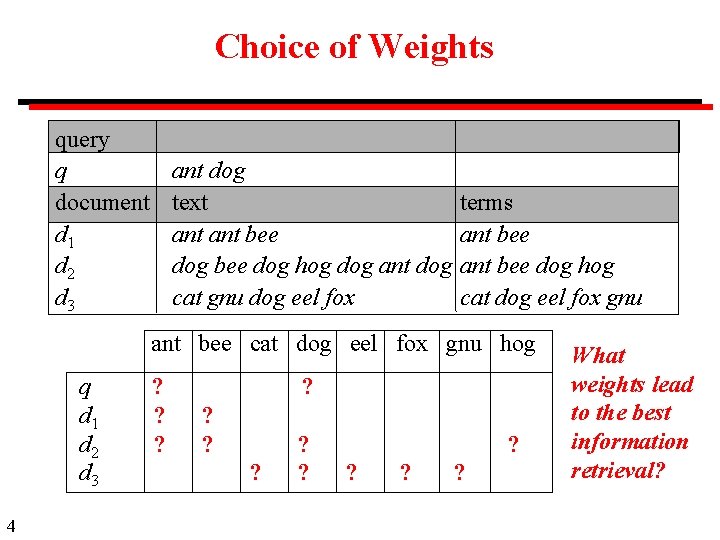

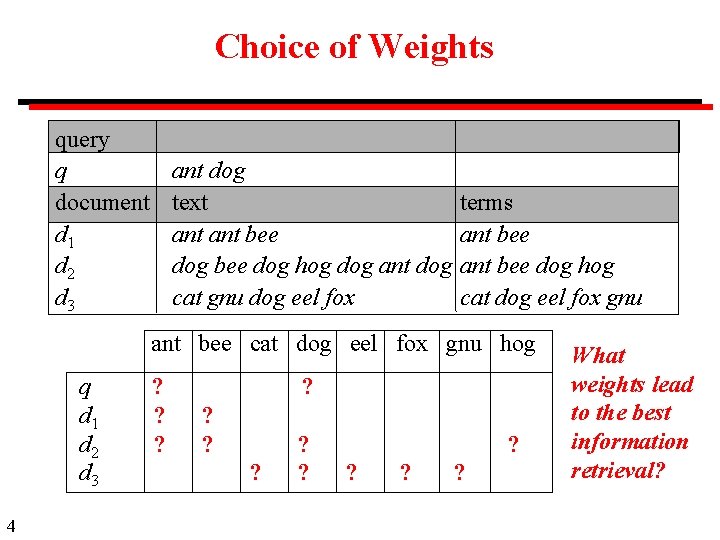

Choice of Weights

Query q: ant dog
Documents d1, d2, d3, text: ant bee dog hog dog ant dog; cat gnu dog eel fox
Documents d1, d2, d3, terms: ant bee dog hog; cat dog eel fox gnu

Weights: for q, d1, d2 and d3, every term it contains (from ant, bee, cat, dog, eel, fox, gnu, hog) gets an unknown weight "?".
What weights lead to the best information retrieval?

Methods for Selecting Weights

Empirical
Test a large number of possible weighting schemes with actual data. (This lecture, based on the work of Salton et al.)

Model based
Develop a mathematical model of word distribution and derive a weighting scheme theoretically. (Probabilistic model of information retrieval.)

Weighting: Term Frequency (tf)

Suppose term $j$ appears $f_{ij}$ times in document $i$. What weighting should be given to term $j$?

Term Frequency: Concept
A term that appears many times within a document is likely to be more important than a term that appears only once.

Term Frequency: Free-text Document

Length of document
A simple method (as illustrated in Lecture 3) is to use $f_{ij}$ as the term frequency. But in free-text documents, terms are likely to appear more often in long documents, so $f_{ij}$ should be scaled by some variable related to document length.

Term Frequency: Free-text Document

A standard method for free-text documents
Scale $f_{ij}$ relative to the frequency of other terms in the document. This partially corrects for variations in the length of the documents.

Let $m_i = \max_j f_{ij}$, i.e., $m_i$ is the maximum frequency of any term in document $i$.

Term frequency (tf):
$$tf_{ij} = f_{ij} / m_i \quad \text{when } f_{ij} > 0$$

Note: There is no special justification for taking this form of term frequency except that it works well in practice and is easy to calculate.
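A minimal Python sketch of this max-normalized term frequency, assuming each document has already been tokenized into a list of terms (tokenization is not covered here). The function name `max_normalized_tf` and the sample tokens are hypothetical illustrations, not part of the lecture.

```python
from collections import Counter

def max_normalized_tf(tokens):
    """Return {term: f_ij / m_i} for one document given as a list of tokens.

    Only terms with f_ij > 0 appear, matching the "when f_ij > 0" condition.
    """
    counts = Counter(tokens)          # f_ij for every term j in document i
    if not counts:
        return {}                     # empty document: no terms, no weights
    m_i = max(counts.values())        # m_i: highest frequency of any term in the document
    return {term: f / m_i for term, f in counts.items()}

# Hypothetical sample document: cat -> 1.0, dog -> 2/3, eel -> 1/3
print(max_normalized_tf("cat dog cat eel cat dog".split()))
```

Here cat occurs 3 times, so $m_i = 3$. Because every count is divided by the document's own maximum, the most frequent term always gets $tf_{ij} = 1$, however long the document is.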

Weighting: Inverse Document Frequency (idf)

Suppose term $j$ appears $f_{ij}$ times in document $i$. What weighting should be given to term $j$?

Inverse Document Frequency: Concept
A term that occurs in only a few documents is likely to be a better discriminator than a term that appears in most or all documents.

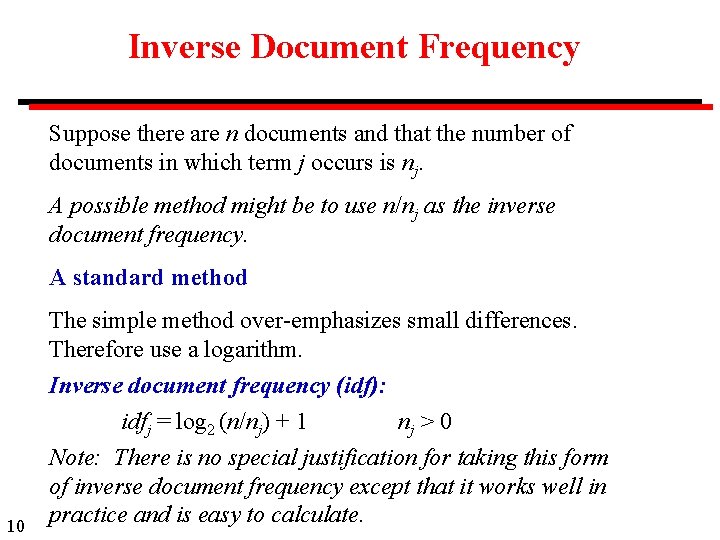

Inverse Document Frequency

Suppose there are $n$ documents and that the number of documents in which term $j$ occurs is $n_j$.

A possible method
Use $n/n_j$ as the inverse document frequency.

A standard method
The simple method over-emphasizes small differences. Therefore use a logarithm.

Inverse document frequency (idf):
$$idf_j = \log_2(n/n_j) + 1 \quad \text{when } n_j > 0$$

Note: There is no special justification for taking this form of inverse document frequency except that it works well in practice and is easy to calculate.

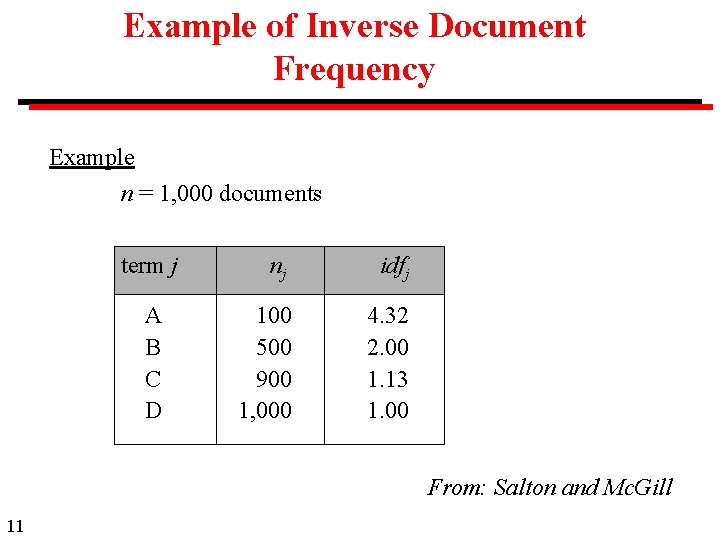

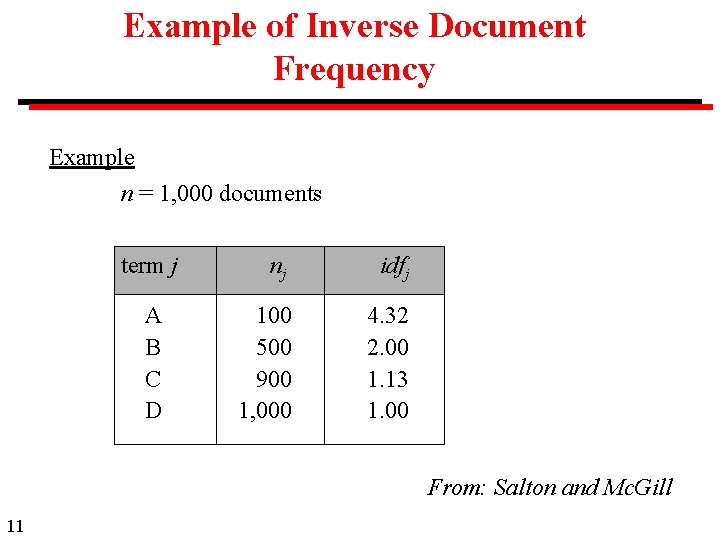

Example of Inverse Document Frequency

Example: $n$ = 1,000 documents

| term $j$ | $n_j$ | $idf_j$ |
|---|---|---|
| A | 100 | 4.32 |
| B | 500 | 2.00 |
| C | 900 | 1.15 |
| D | 1,000 | 1.00 |

From: Salton and McGill
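The idf values in this example can be checked with a short Python sketch of $idf_j = \log_2(n/n_j) + 1$; the helper name `idf` is a hypothetical choice. For term C the formula gives $\log_2(1000/900) + 1 \approx 1.15$.

```python
import math

def idf(n, n_j):
    """idf_j = log2(n / n_j) + 1, defined only when n_j > 0."""
    if n_j <= 0:
        raise ValueError("idf is undefined for a term that occurs in no document")
    return math.log2(n / n_j) + 1

# Recompute the worked example with n = 1,000 documents.
n = 1000
for term, n_j in [("A", 100), ("B", 500), ("C", 900), ("D", 1000)]:
    print(term, n_j, f"{idf(n, n_j):.2f}")
```

The logarithm compresses the range: a tenfold drop in $n_j$ (from 1,000 to 100) raises the weight from 1.00 to only 4.32, where the plain ratio $n/n_j$ would raise it from 1 to 10.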

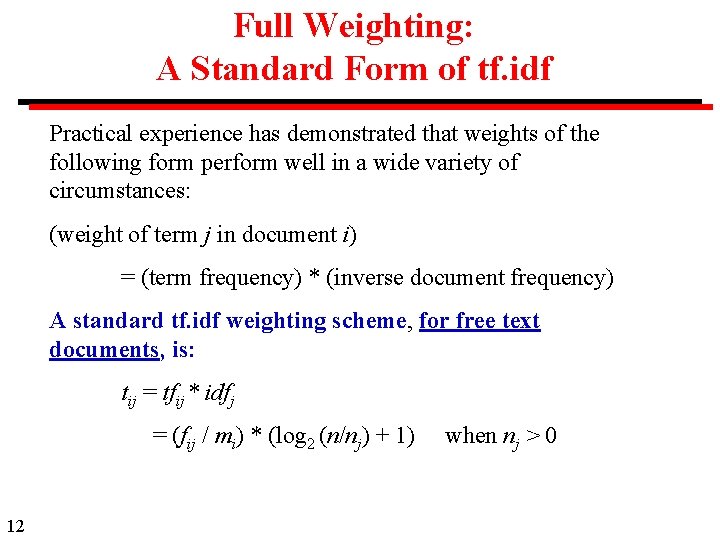

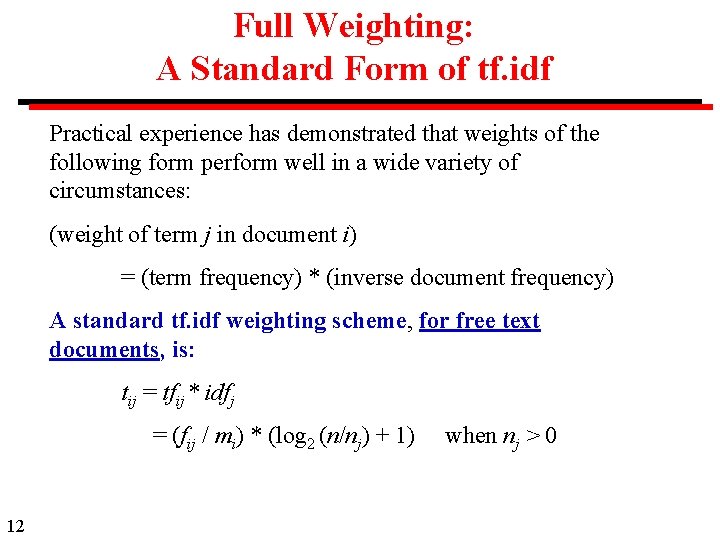

Full Weighting: A Standard Form of tf.idf

Practical experience has demonstrated that weights of the following form perform well in a wide variety of circumstances:

(weight of term j in document i) = (term frequency) * (inverse document frequency)

A standard tf.idf weighting scheme, for free-text documents, is:
$$t_{ij} = tf_{ij} \cdot idf_j = \frac{f_{ij}}{m_i} \cdot \left(\log_2(n/n_j) + 1\right) \quad \text{when } n_j > 0$$
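Combining the two factors, here is a minimal sketch that computes $t_{ij}$ for every term of every document in a small in-memory collection. The function name `tfidf_vectors` and the three toy documents are hypothetical; each document is assumed to be a pre-tokenized list of terms.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return one {term: t_ij} dict per document, with
    t_ij = (f_ij / m_i) * (log2(n / n_j) + 1).

    docs: list of token lists, one per document.
    """
    n = len(docs)
    counts = [Counter(d) for d in docs]                     # f_ij per document
    doc_freq = Counter(term for c in counts for term in c)  # n_j: documents containing term j
    vectors = []
    for c in counts:
        m_i = max(c.values(), default=1)                    # empty document -> empty vector
        vectors.append({
            term: (f / m_i) * (math.log2(n / doc_freq[term]) + 1)
            for term, f in c.items()
        })
    return vectors

# Hypothetical toy collection: "dog" is in every document, so its idf is exactly 1.
docs = [["cat", "dog", "cat"], ["dog", "eel"], ["dog", "fox", "fox"]]
for vec in tfidf_vectors(docs):
    print(vec)
```

Only terms that actually occur in a document get an entry, so the condition $n_j > 0$ always holds for the weights computed here.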

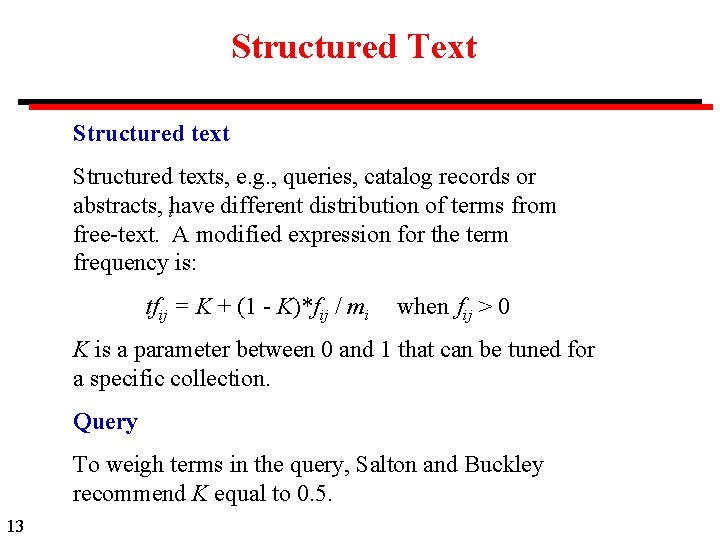

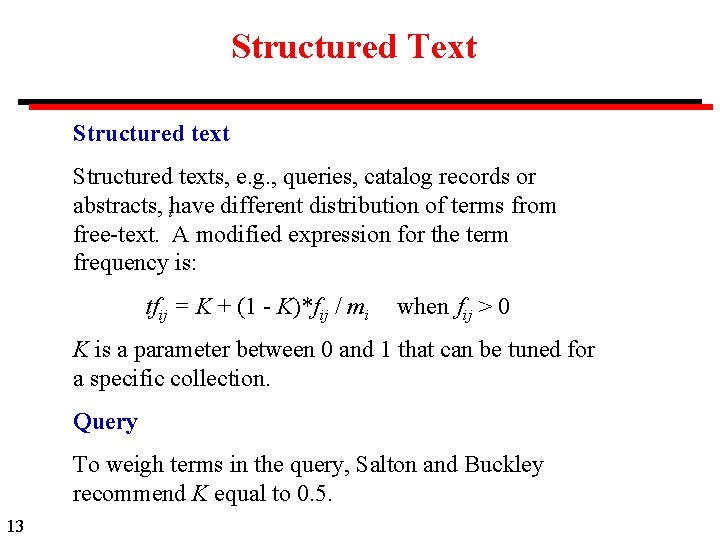

Structured Text

Structured texts, e.g., queries, catalog records or abstracts, have a different distribution of terms from free text. A modified expression for the term frequency is:
$$tf_{ij} = K + (1 - K) \cdot f_{ij} / m_i \quad \text{when } f_{ij} > 0$$
$K$ is a parameter between 0 and 1 that can be tuned for a specific collection.

Query
To weight terms in the query, Salton and Buckley recommend $K$ equal to 0.5.
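A sketch of this modified term frequency in Python, with `K` as a parameter; the function name `augmented_tf` and the sample query are hypothetical.

```python
from collections import Counter

def augmented_tf(tokens, K=0.5):
    """tf_ij = K + (1 - K) * f_ij / m_i for each term with f_ij > 0.

    K = 0.5 is the value Salton and Buckley recommend for queries;
    for other structured text K is a tunable parameter in [0, 1].
    """
    if not 0.0 <= K <= 1.0:
        raise ValueError("K must lie between 0 and 1")
    counts = Counter(tokens)
    if not counts:
        return {}
    m = max(counts.values())                     # m_i: largest term count
    return {term: K + (1 - K) * f / m for term, f in counts.items()}

print(augmented_tf(["ant", "dog", "dog"]))           # query weighting, K = 0.5
print(augmented_tf(["ant", "dog", "dog"], K=0.0))    # K = 0 reduces to f_ij / m_i
```

The two ends of the range are easy to read off: $K = 0$ gives back the free-text form $f_{ij}/m_i$, and $K = 1$ gives every term present the same weight, 1. With $K = 0.5$ a term that occurs once in a short query still gets at least half the weight of the most frequent term.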

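Summary: Similarity Calculation

The similarity between query $q$ and document $i$ is given by:
$$\cos(d_q, d_i) = \frac{\sum_{k} t_{qk}\, t_{ik}}{|d_q|\,|d_i|}$$
where the sum runs over all terms $k$, and $d_q$ and $d_i$ are the corresponding weighted term vectors, with components in dimension $k$ (corresponding to term $k$) given by:
$$t_{qk} = \left(0.5 + 0.5 \cdot f_{qk} / m_q\right) \cdot \left(\log_2(n/n_k) + 1\right) \quad \text{when } f_{qk} > 0$$
$$t_{ik} = \left(f_{ik} / m_i\right) \cdot \left(\log_2(n/n_k) + 1\right) \quad \text{when } f_{ik} > 0$$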
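The full calculation can be sketched end to end in Python: query weights use $K = 0.5$, document weights use $K = 0$, and documents are ranked by the cosine of the angle between vectors. All function names and the toy collection are hypothetical, and skipping query terms that occur in no document (where $\log_2(n/n_k)$ is undefined) is an assumption of this sketch.

```python
import math
from collections import Counter

def idf(n, n_k):
    """idf_k = log2(n / n_k) + 1, for n_k > 0."""
    return math.log2(n / n_k) + 1

def weights(tokens, doc_freq, n, K):
    """t_k = (K + (1 - K) * f_k / m) * idf_k for each term present.

    K = 0.5 gives the query weights t_qk; K = 0 gives the document weights t_ik.
    Terms that occur in no document (n_k = 0) are skipped, since idf is undefined.
    """
    counts = Counter(tokens)
    if not counts:
        return {}
    m = max(counts.values())
    return {t: (K + (1 - K) * f / m) * idf(n, doc_freq[t])
            for t, f in counts.items() if doc_freq[t] > 0}

def cosine(a, b):
    """Cosine of the angle between two sparse vectors stored as {term: weight}."""
    dot = sum(w * b[t] for t, w in a.items() if t in b)
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def rank(query_tokens, docs):
    """Return (document index, similarity) pairs, best match first."""
    n = len(docs)
    doc_freq = Counter(t for d in docs for t in set(d))    # n_k
    q_vec = weights(query_tokens, doc_freq, n, K=0.5)
    scores = [cosine(q_vec, weights(d, doc_freq, n, K=0.0)) for d in docs]
    return sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)

docs = [["cat", "dog", "cat"], ["dog", "eel"], ["dog", "fox", "fox"]]   # hypothetical
print(rank(["cat", "dog"], docs))
```

The first document ranks highest because it contains "cat", the query term with the larger idf. The other two share only "dog", which occurs everywhere and so contributes only its tf.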

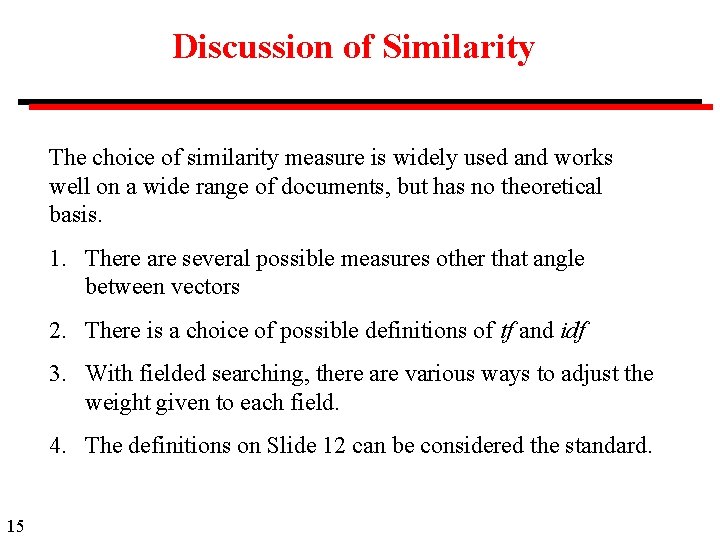

Discussion of Similarity

This similarity measure is widely used and works well on a wide range of documents, but it has no theoretical basis.
1. There are several possible measures other than the angle between vectors.
2. There is a choice of possible definitions of tf and idf.
3. With fielded searching, there are various ways to adjust the weight given to each field.
4. The tf.idf definitions on the "Full Weighting" slide can be considered the standard.


Jakarta Lucene is a high-performance, full-featured text search engine library written entirely in Java. The technology is suitable for nearly any application that requires full-text search, especially cross-platform.

Jakarta Lucene is an open source project available for free download from Apache Jakarta. Versions are also available in several other languages, including C++. The original author was Doug Cutting.

http://jakarta.apache.org/lucene/docs/


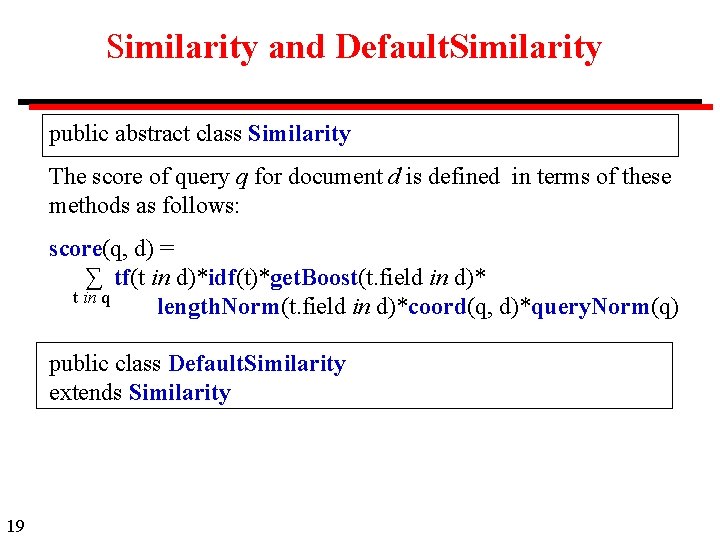

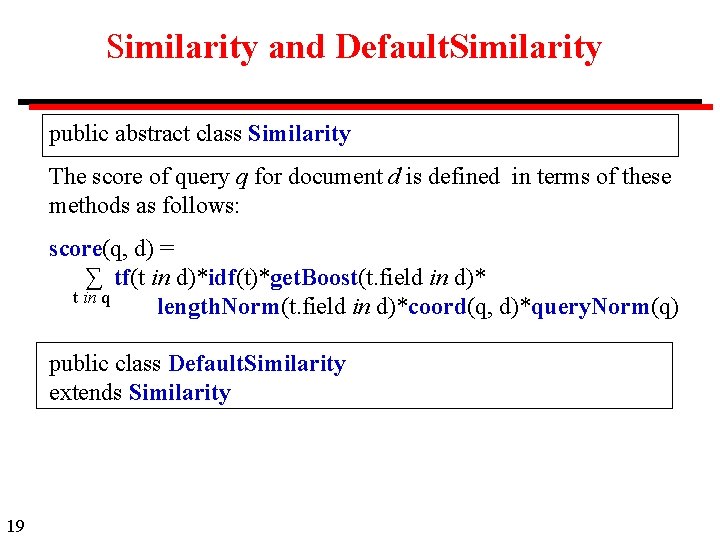

Similarity and DefaultSimilarity

public abstract class Similarity

The score of query q for document d is defined in terms of these methods as follows:
$$\text{score}(q, d) = \sum_{t \in q} \text{tf}(t \text{ in } d) \cdot \text{idf}(t) \cdot \text{getBoost}(t.\text{field in } d) \cdot \text{lengthNorm}(t.\text{field in } d) \cdot \text{coord}(q, d) \cdot \text{queryNorm}(q)$$

public class DefaultSimilarity extends Similarity

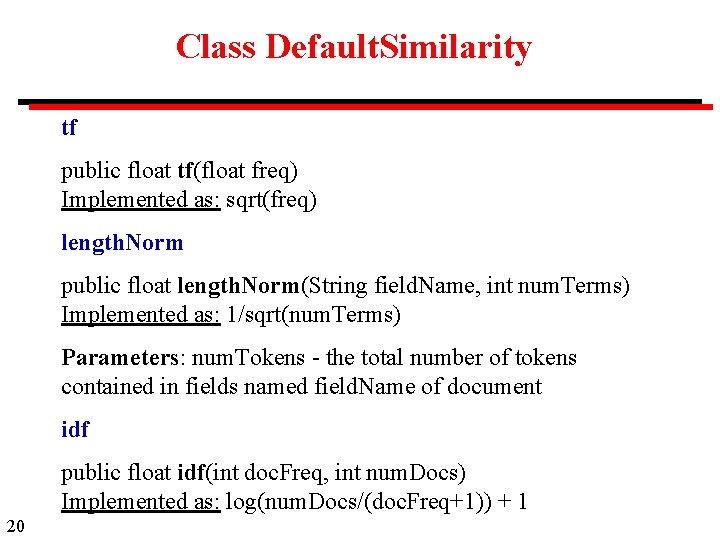

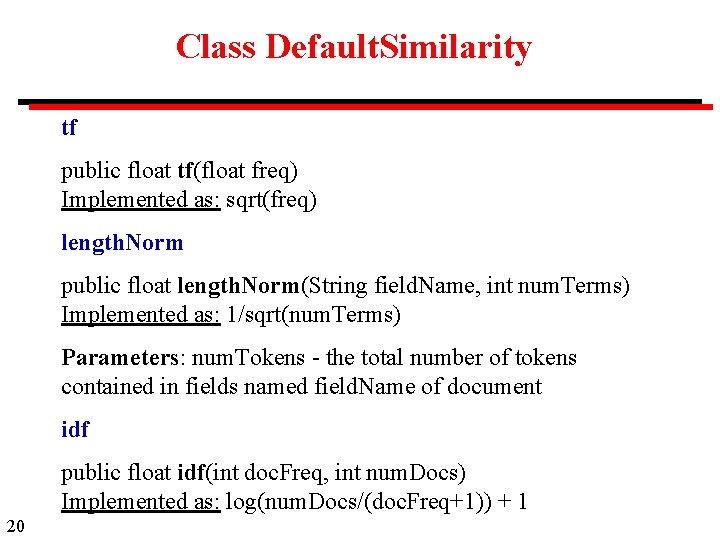

Class DefaultSimilarity

tf
public float tf(float freq)
Implemented as: sqrt(freq)

lengthNorm
public float lengthNorm(String fieldName, int numTerms)
Implemented as: 1/sqrt(numTerms)
Parameters: numTerms - the total number of tokens contained in fields named fieldName of the document

idf
public float idf(int docFreq, int numDocs)
Implemented as: log(numDocs/(docFreq+1)) + 1
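To compare these defaults with the standard tf.idf form, here is a sketch that transcribes the three formulas into Python. These functions are hypothetical stand-ins, not calls into Lucene. The sketch assumes that "log" means the natural logarithm (as with Java's `Math.log`) and that the division is floating-point.

```python
import math

# Formulas as quoted for DefaultSimilarity, transcribed to Python (not Lucene's own code).
# Assumption: "log" is the natural logarithm, as with Java's Math.log.
def lucene_tf(freq):
    return math.sqrt(freq)                          # tf(freq) = sqrt(freq)

def lucene_length_norm(num_terms):
    return 1.0 / math.sqrt(num_terms)               # lengthNorm = 1/sqrt(numTerms)

def lucene_idf(doc_freq, num_docs):
    return math.log(num_docs / (doc_freq + 1)) + 1  # log(numDocs/(docFreq+1)) + 1

def standard_idf(n_j, n):
    return math.log2(n / n_j) + 1                   # log2(n/n_j) + 1, for comparison

# Compare the two idf forms on the n = 1,000 example from Salton and McGill.
for df in (100, 500, 900, 1000):
    print(df, round(lucene_idf(df, 1000), 3), round(standard_idf(df, 1000), 3))
```

Two differences stand out. The default tf grows as the square root of the raw count instead of dividing by the document's maximum count, and document length is handled separately by lengthNorm. The +1 in the idf denominator also means that a term occurring in every document gets an idf slightly below 1 ($\ln(1000/1001) + 1 \approx 0.999$), where $\log_2(n/n_j) + 1$ gives exactly 1.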

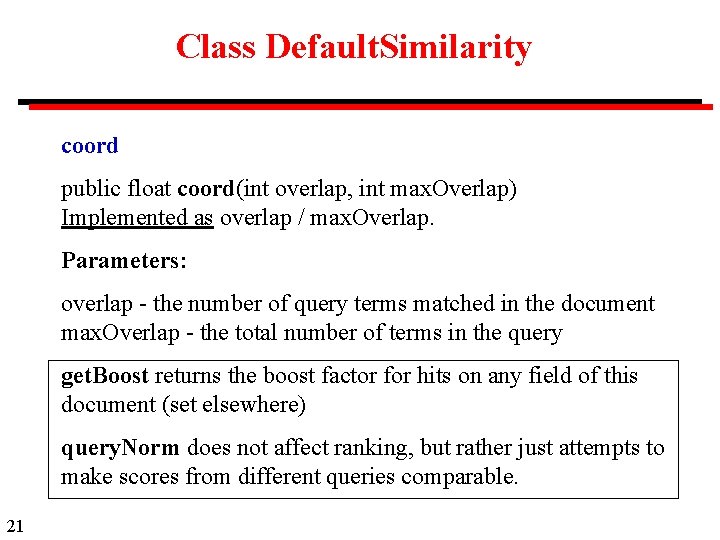

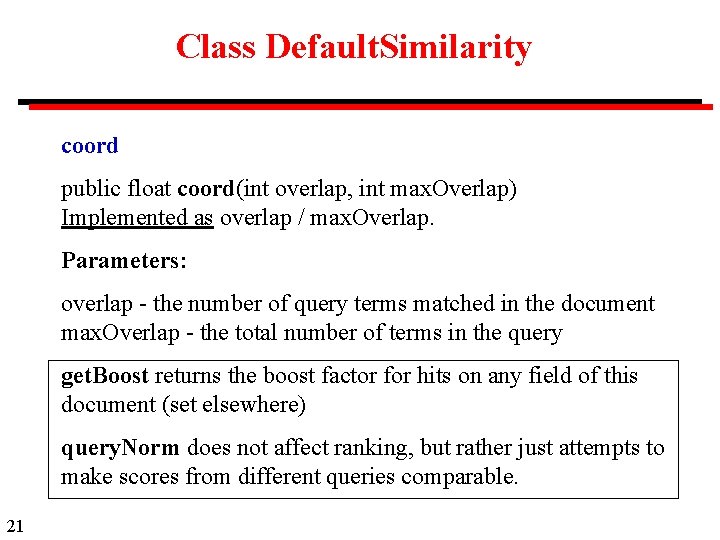

Class DefaultSimilarity

coord
public float coord(int overlap, int maxOverlap)
Implemented as: overlap / maxOverlap
Parameters: overlap - the number of query terms matched in the document; maxOverlap - the total number of terms in the query

getBoost
Returns the boost factor for hits on any field of this document (set elsewhere).

queryNorm
Does not affect ranking, but rather just attempts to make scores from different queries comparable.
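Putting the pieces of the score formula together, here is a simplified Python sketch of how one document's score is assembled from tf, idf, getBoost, lengthNorm, coord and queryNorm. It follows the formula as written, not Lucene's actual code path. The function `default_score`, its parameters and the statistics in the usage line are hypothetical. Boost and queryNorm are passed in as plain numbers, and the sketch assumes a single field and distinct query terms.

```python
import math

def default_score(query_terms, doc_tf, doc_len, doc_freq, num_docs,
                  boost=1.0, query_norm=1.0):
    """Score one document d for query q, following the formula:

    score(q, d) = sum over t in q of tf * idf * getBoost * lengthNorm, times coord * queryNorm

    Simplifications (assumptions): one field, distinct query terms, and boost and
    queryNorm given as plain numbers (queryNorm does not change the ranking).
    doc_tf: term -> raw frequency in d; doc_freq: term -> number of documents containing it.
    """
    if not query_terms or doc_len == 0:
        return 0.0
    matched = [t for t in query_terms if doc_tf.get(t, 0) > 0]
    coord = len(matched) / len(query_terms)          # overlap / maxOverlap
    length_norm = 1.0 / math.sqrt(doc_len)           # 1/sqrt(numTerms)
    total = 0.0
    for t in matched:                                # unmatched terms have tf = sqrt(0) = 0
        tf = math.sqrt(doc_tf[t])
        idf = math.log(num_docs / (doc_freq[t] + 1)) + 1
        total += tf * idf * boost * length_norm
    return total * coord * query_norm

# Hypothetical statistics: 1,000 documents; d has 16 tokens and matches 2 of 3 query terms.
print(default_score(["ant", "dog", "cat"], {"ant": 4, "dog": 1}, 16,
                    {"ant": 9, "dog": 99, "cat": 4}, 1000))
```

coord rewards documents that match more of the query: here d matches 2 of the 3 query terms, so its summed term scores are multiplied by 2/3.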