CS 3214 Project 2 ForkJoin Threadpool Help Session


CS 3214: Project 2 Fork-Join Threadpool Help Session
Wednesday, February 27th
Arnab Kumar Paul <akpaul@vt.edu>
Ashish Baghudana <ashishb@vt.edu>

Topics (REVISED)
- Basics / Getting Started
- Threadpool Design
  - Work Stealing
  - Work Helping
- Implementation Decisions
- Logistics
  - Grades
  - Test driver / scoreboard
- FAQ / Debugging Tips

Basics / Getting Started

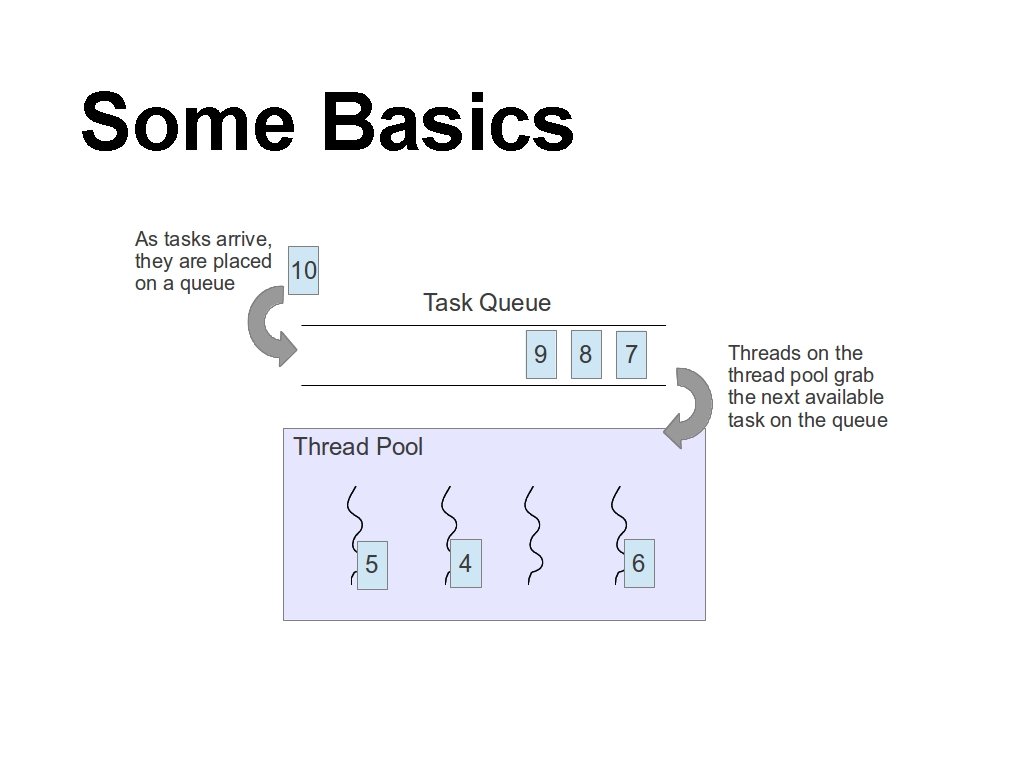

Some Basics
- Threads
  - Different from processes? How?
- Threadpools
  - Automatic dependency management of tasks
  - Easy to quickly add concurrency without locking semantics: just call the threadpool API

Getting Started
- https://git.cs.vt.edu/cs3214-staff/threadlab
- Fork / clone the repo
- Set it to private
- As with the shell project, git usage is graded

    $ git clone https://git.cs.vt.edu/ashishb/threadlab

Getting Started
- What do we write?
  - Only threadpool.c
  - Forward declarations of structs and prototypes of functions are given in threadpool.h
  - Provide implementations of the functions and definitions of the structs
  - Any other functions/variables should be static

Futures
- What is a future?
- They’re becoming a more common concept in today’s world of parallel computers

    fetch('https://s1.jamestaylr.net/status', { method: 'get' })
      .then((response) => { return response.json(); })
      .then((json) => { console.log(json); })
      .catch((err) => { console.error(err); });

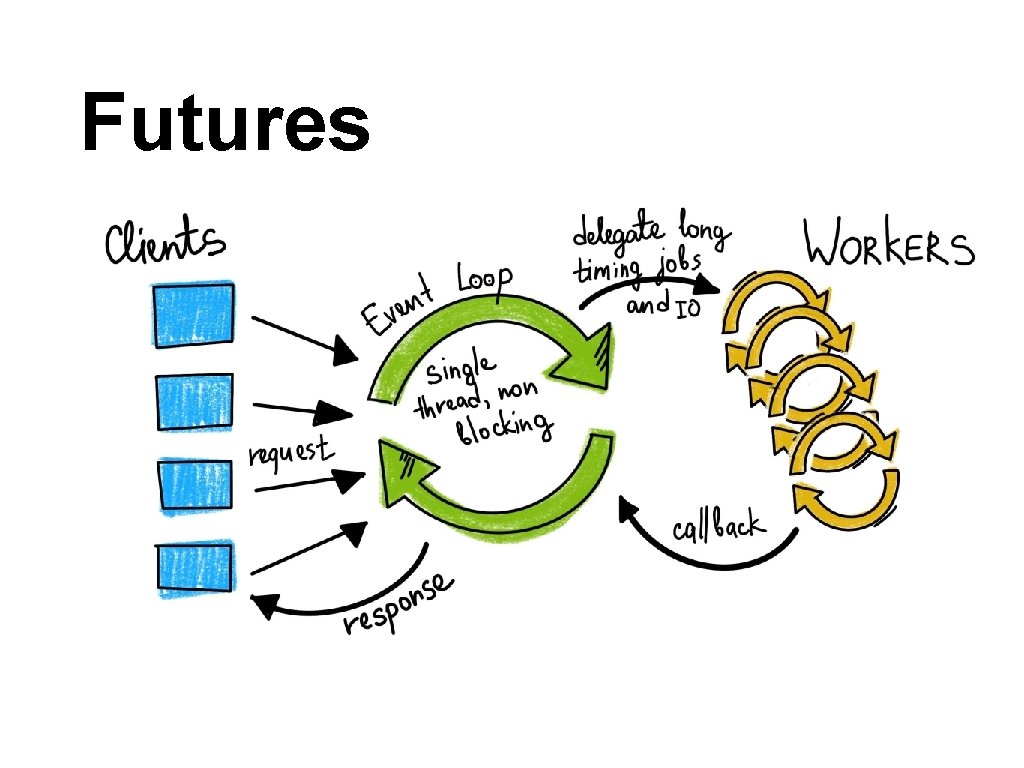

Futures

Futures
- For this project, a future is an instance of a task that you must execute
- To the user, a future is an opaque object: they can only use it to get the result later
- The result can be retrieved via future_get()
- The job of the threadpool is to execute these futures in parallel (as much as possible)

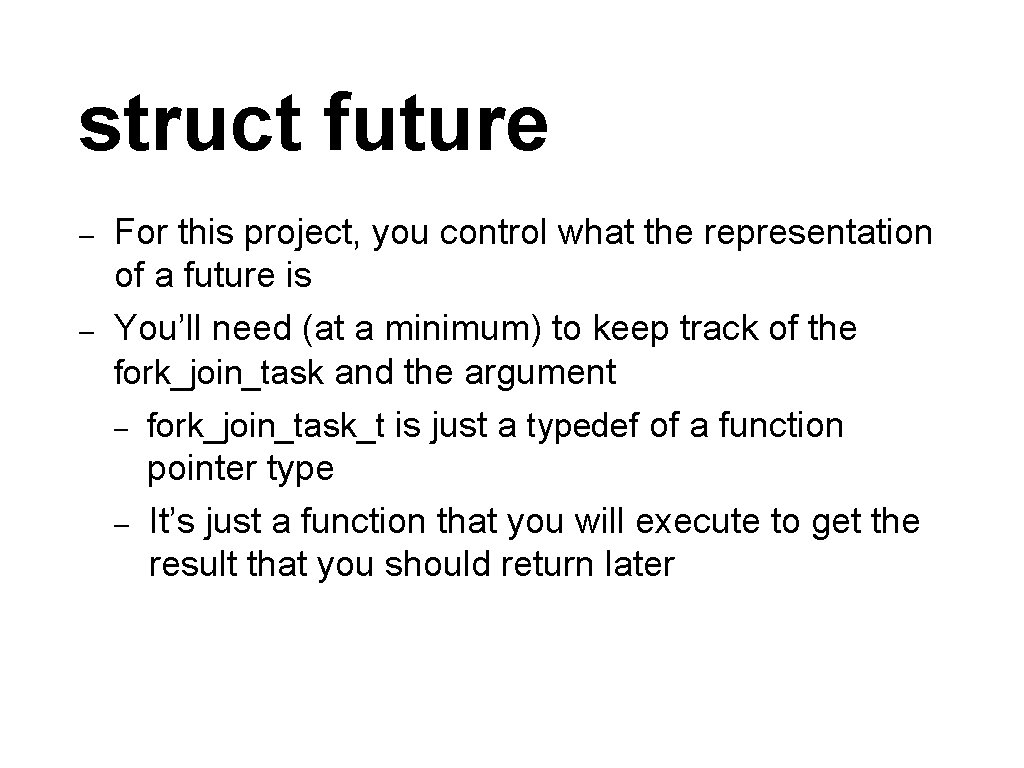

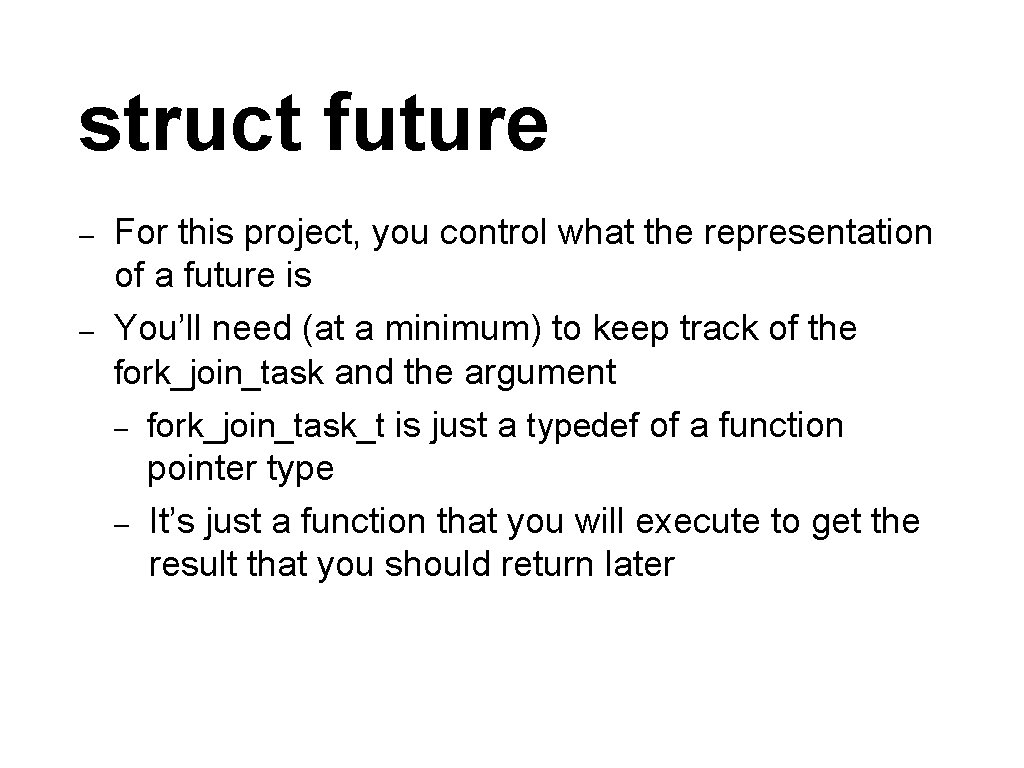

struct future
- For this project, you control what the representation of a future is
- You’ll need (at a minimum) to keep track of the fork_join_task and the argument
  - fork_join_task_t is just a typedef of a function pointer type
  - It’s just a function that you will execute to get the result that you should return later

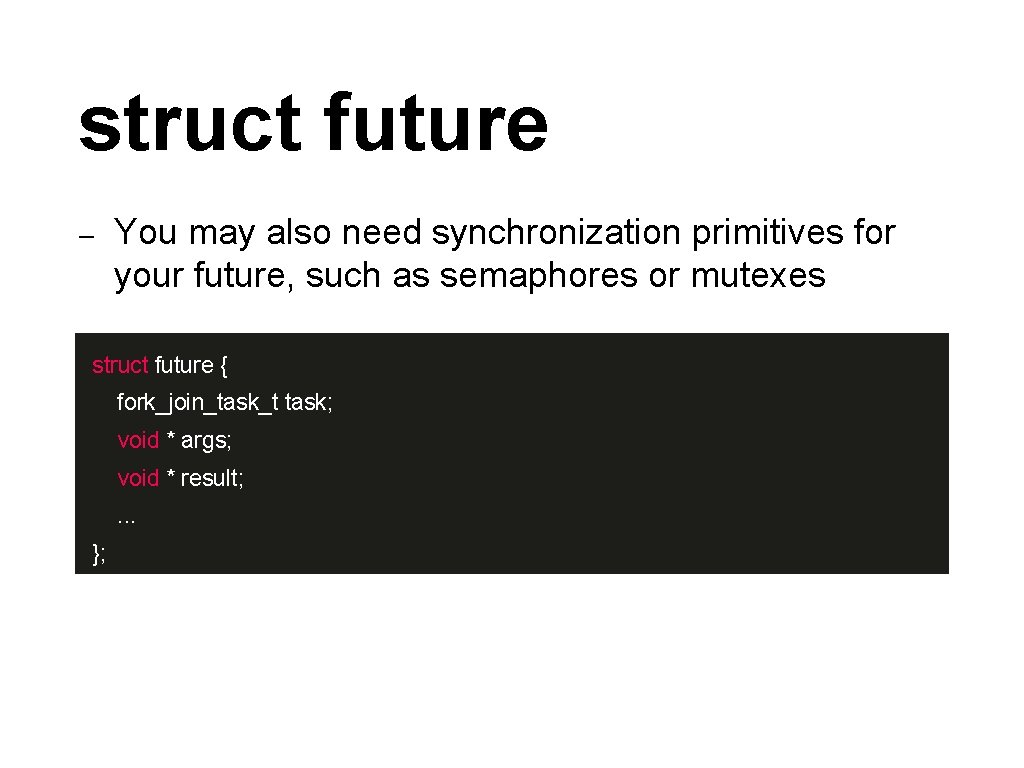

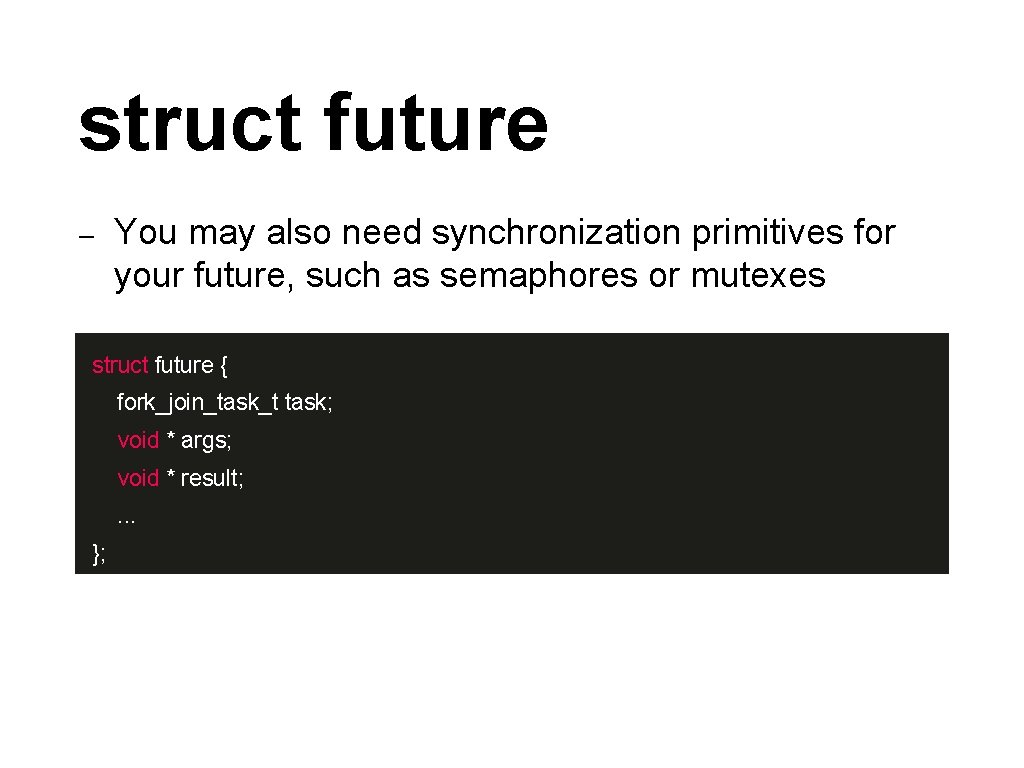

struct future
- You may also need synchronization primitives for your future, such as semaphores or mutexes

    struct future {
        fork_join_task_t task;
        void *args;
        void *result;
        ...
    };
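One way to fill in the `...` above is sketched below. It is only an illustration, not the required layout. The `fork_join_task_t` signature is assumed here (check threadpool.h for the real typedef). The `pool`, `done`, `lock`, and `finished` fields and the helpers `future_create`, `future_execute`, `future_destroy`, and `add_one` are hypothetical names introduced for this example.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stdlib.h>

struct thread_pool;   /* opaque in this sketch */

/* Assumed signature; check threadpool.h for the real typedef. */
typedef void *(*fork_join_task_t)(struct thread_pool *pool, void *data);

struct future {
    fork_join_task_t task;     /* function to run */
    void *args;                /* argument handed to the task */
    void *result;              /* valid once done is true */
    struct thread_pool *pool;  /* hypothetical: pool passed to the task */
    bool done;                 /* hypothetical: set after the task returns */
    pthread_mutex_t lock;      /* protects done and result */
    pthread_cond_t finished;   /* signaled when done becomes true */
};

/* Hypothetical helper: allocate and initialize a future. */
static struct future *future_create(struct thread_pool *pool,
                                    fork_join_task_t task, void *args)
{
    struct future *f = malloc(sizeof *f);
    if (f == NULL)
        return NULL;
    f->task = task;
    f->args = args;
    f->result = NULL;
    f->pool = pool;
    f->done = false;
    pthread_mutex_init(&f->lock, NULL);
    pthread_cond_init(&f->finished, NULL);
    return f;
}

/* Hypothetical helper: run the task, then publish the result. */
static void future_execute(struct future *f)
{
    void *r = f->task(f->pool, f->args);   /* run without holding the lock */
    pthread_mutex_lock(&f->lock);
    f->result = r;
    f->done = true;
    pthread_cond_broadcast(&f->finished);  /* wake anyone waiting on it */
    pthread_mutex_unlock(&f->lock);
}

/* Hypothetical helper: release everything future_create set up. */
static void future_destroy(struct future *f)
{
    pthread_cond_destroy(&f->finished);
    pthread_mutex_destroy(&f->lock);
    free(f);
}

/* Sample task for trying the sketch: increments the int it is given. */
static void *add_one(struct thread_pool *pool, void *data)
{
    (void)pool;
    int *n = data;
    *n += 1;
    return n;
}
```

A semaphore per future would work just as well as the mutex/condition-variable pair; the slide leaves that choice to you.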

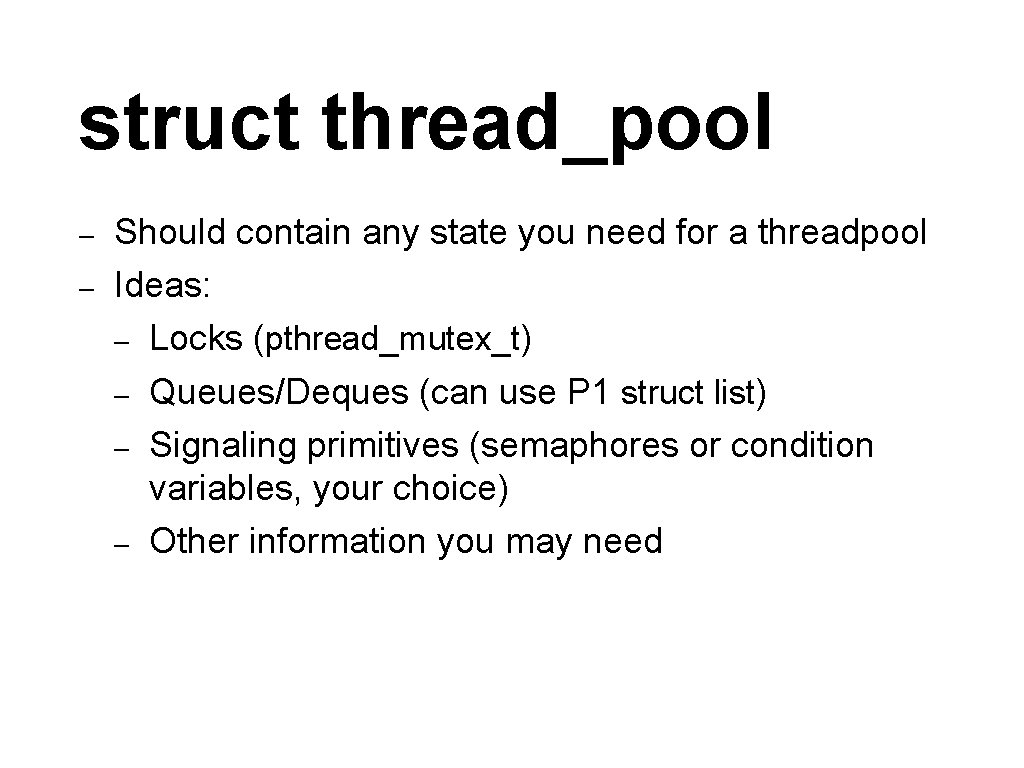

struct thread_pool
- Should contain any state you need for a threadpool
- Ideas:
  - Locks (pthread_mutex_t)
  - Queues/Deques (can use the struct list from P1)
  - Signaling primitives (semaphores or condition variables, your choice)
  - Other information you may need

Functions to Implement

    struct thread_pool * thread_pool_new(int nthreads);
    void thread_pool_shutdown_and_destroy(struct thread_pool *);
    struct future * thread_pool_submit(
        struct thread_pool *pool,
        fork_join_task_t task,
        void * data);
    void * future_get(struct future *);
    void future_free(struct future *);

Threadpool Design

Threadpool Design
- Methodologies
  - Split up tasks among n workers
  - Work sharing
  - Work stealing
- Differences? Advantages, disadvantages?
  - Read section 2.1 thoroughly
- No global variables!

![Example mergesortthreadpool tp array A future f threadpoolsubmittp mergesort A left Example mergesort(threadpool tp, array A) { future* f = threadpool_submit(tp, mergesort, A[. . left]);](https://slidetodoc.com/presentation_image_h2/a8a4dff3e0b61314f5ebd2655724f64c/image-17.jpg)

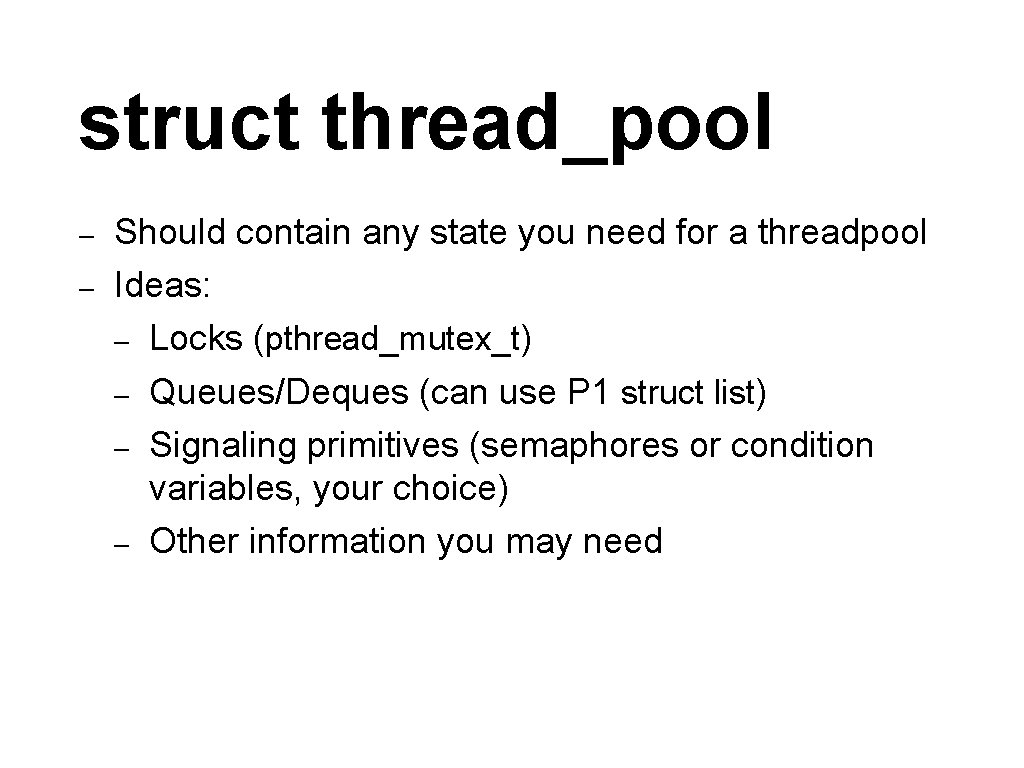

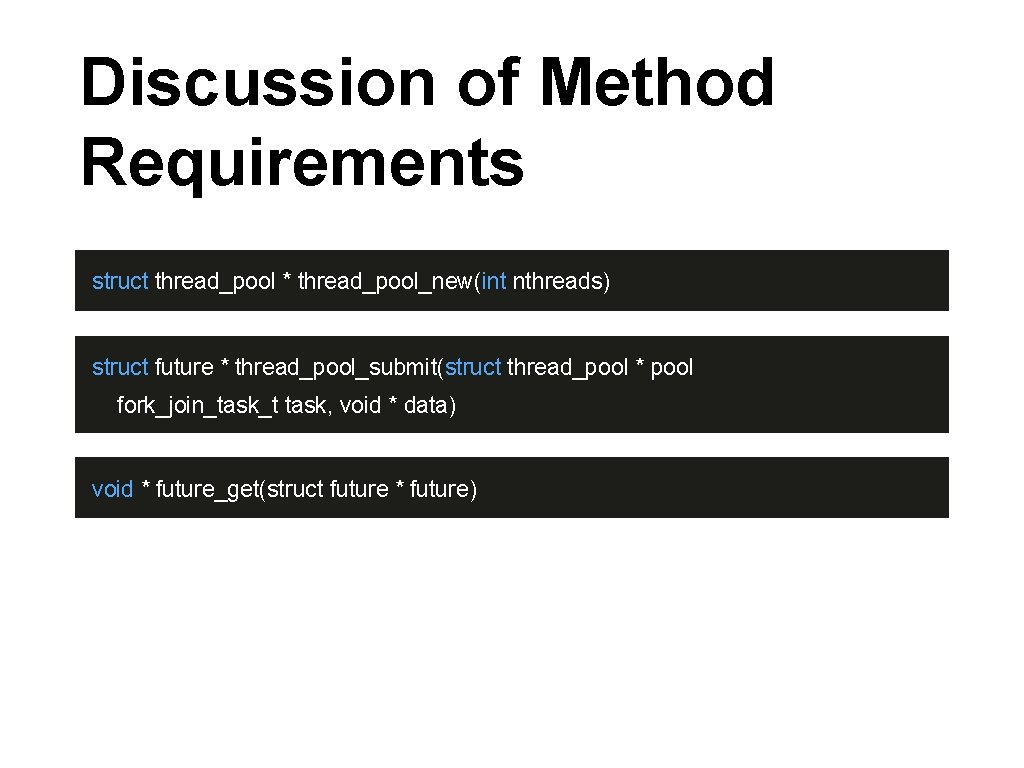

Example (pseudocode)

    mergesort(threadpool tp, array A) {
        future* f = threadpool_submit(tp, mergesort, A[..left]);
        mergesort(tp, A[right..]);
        return merge(future_get(f), A[right..]);
    }

![Task Tree sortA0 64 sortA0 32 sortA0 16 sortA32 64 Task Tree sort(A[0. . 64]) sort(A[0. . 32]) sort(A[0. . 16]) sort(A[32. . 64])](https://slidetodoc.com/presentation_image_h2/a8a4dff3e0b61314f5ebd2655724f64c/image-18.jpg)

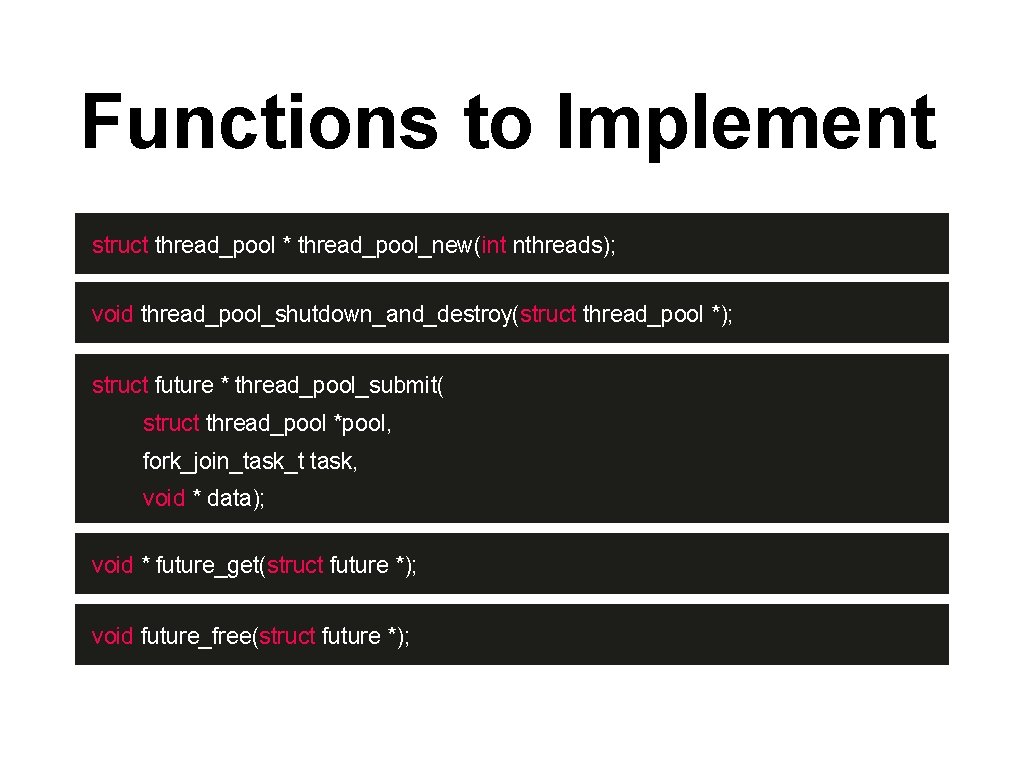

Task Tree
- sort(A[0..64])
  - sort(A[0..32])
    - sort(A[0..16])
    - sort(A[16..32])
  - sort(A[32..64])
    - sort(A[32..48])
    - sort(A[48

![Illustrated TP API Global sortA0 16 sortA16 32 sortA32 48 sortA0 Illustrated TP API Global sort(A[0. . 16]) sort(A[16. . 32]) sort(A[32. . 48]) sort(A[0.](https://slidetodoc.com/presentation_image_h2/a8a4dff3e0b61314f5ebd2655724f64c/image-19.jpg)

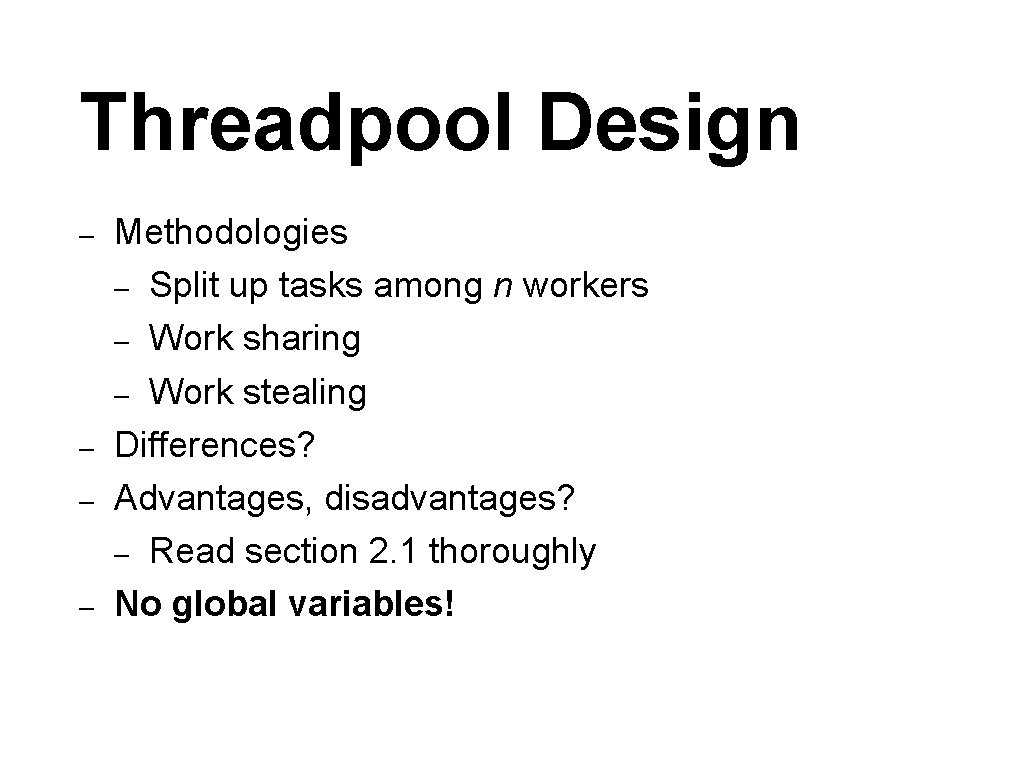

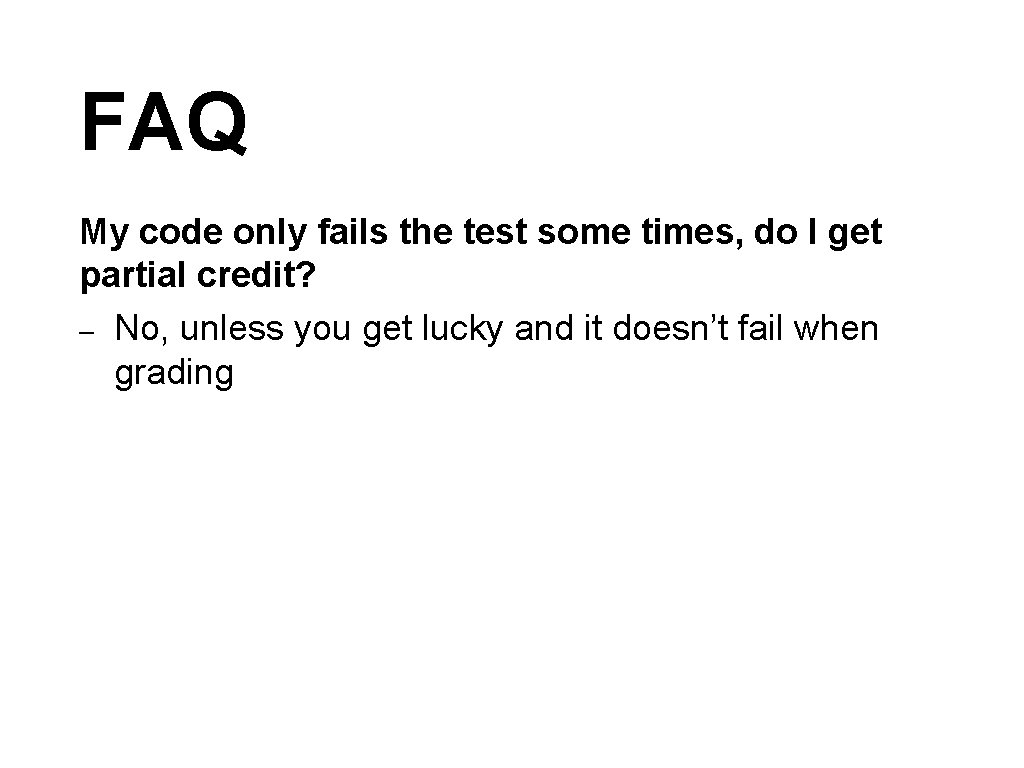

Illustrated TP API (diagram: a Global queue and two workers, A and B)
Tasks shown: sort(A[0..16]), sort(A[16..32]), sort(A[32..48]), sort(A[0..32]), sort(A[32..64]), sort(A[48..64]), sort(A[0..64])

![Illustrated Work Stealing Global A sortA0 16 sortA0 32 B Illustrated Work Stealing Global A �� sort(A[0. . 16]) sort(A[0. . 32]) B ��](https://slidetodoc.com/presentation_image_h2/a8a4dff3e0b61314f5ebd2655724f64c/image-20.jpg)

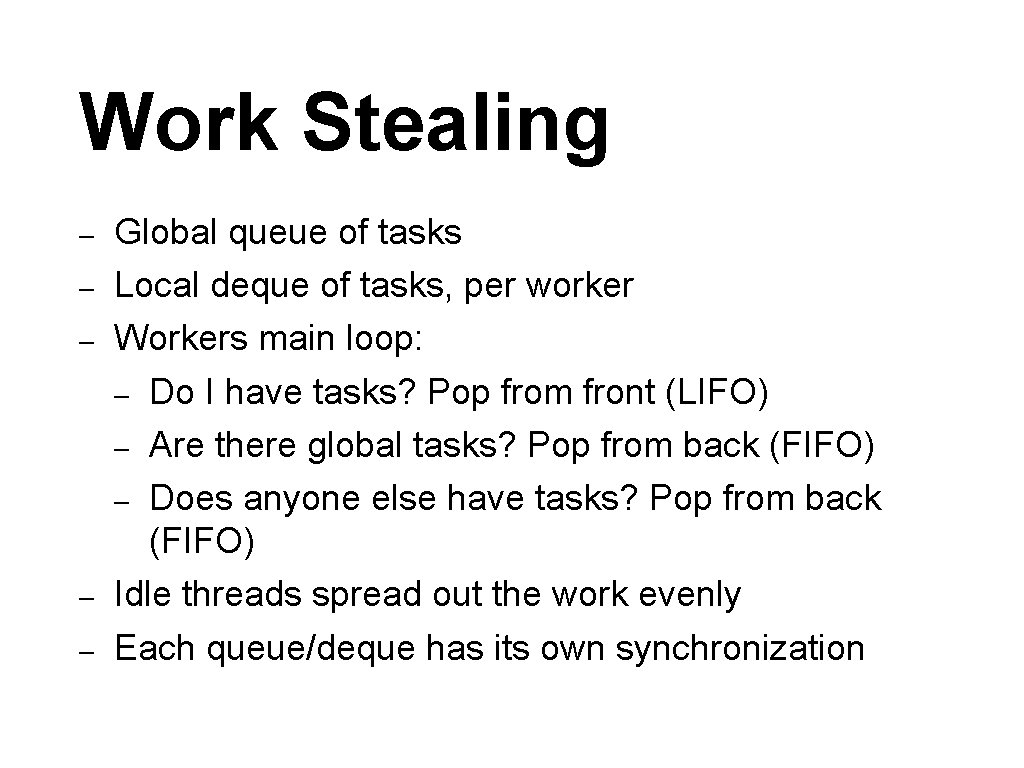

Illustrated Work Stealing (diagram: a Global queue and two workers, A and B)
Tasks shown: sort(A[0..16]), sort(A[0..32]), sort(A[32..48]), sort(A[16..32]), sort(A[0..64]), sort(A[32..64]), sort(A[48..64])

Work Stealing
- Global queue of tasks
- Local deque of tasks, per worker
- Worker’s main loop:
  - Do I have tasks? Pop from the front of my own deque (LIFO)
  - Are there global tasks? Pop from the back of the global queue (FIFO)
  - Does anyone else have tasks? Pop from the back of their deque (FIFO)
- Idle threads spread out the work evenly
- Each queue/deque has its own synchronization
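The search order in the worker's main loop can be sketched as below. This is a simplified illustration under stated assumptions, not the project's data structures. Tasks are plain `int` ids instead of futures, the deque is a small fixed-size ring buffer rather than the P1 list, and `struct deque`, `struct pool`, `DEQUE_CAP`, `NO_TASK`, and `find_task` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define DEQUE_CAP 16    /* hypothetical fixed capacity, for the sketch only */
#define NO_TASK   (-1)

/* A tiny deque of task ids; a real pool would hold futures. */
struct deque {
    pthread_mutex_t lock;   /* each queue/deque has its own lock */
    int items[DEQUE_CAP];
    int head;               /* index of the front element */
    int count;              /* number of stored elements */
};

static void deque_init(struct deque *d)
{
    pthread_mutex_init(&d->lock, NULL);
    d->head = 0;
    d->count = 0;
}

/* New tasks go on the front. Returns 0 on success, -1 if full. */
static int deque_push_front(struct deque *d, int task)
{
    int rc = -1;
    pthread_mutex_lock(&d->lock);
    if (d->count < DEQUE_CAP) {
        d->head = (d->head + DEQUE_CAP - 1) % DEQUE_CAP;
        d->items[d->head] = task;
        d->count++;
        rc = 0;
    }
    pthread_mutex_unlock(&d->lock);
    return rc;
}

/* Newest task first (LIFO): used by a worker on its own deque. */
static int deque_pop_front(struct deque *d)
{
    int task = NO_TASK;
    pthread_mutex_lock(&d->lock);
    if (d->count > 0) {
        task = d->items[d->head];
        d->head = (d->head + 1) % DEQUE_CAP;
        d->count--;
    }
    pthread_mutex_unlock(&d->lock);
    return task;
}

/* Oldest task first (FIFO): used on the global queue and when stealing. */
static int deque_pop_back(struct deque *d)
{
    int task = NO_TASK;
    pthread_mutex_lock(&d->lock);
    if (d->count > 0) {
        task = d->items[(d->head + d->count - 1) % DEQUE_CAP];
        d->count--;
    }
    pthread_mutex_unlock(&d->lock);
    return task;
}

struct pool {
    struct deque global;
    struct deque local[4];   /* hypothetical: one deque per worker */
    int nworkers;
};

/* One pass of the search order for worker `self`. */
static int find_task(struct pool *p, int self)
{
    int task = deque_pop_front(&p->local[self]);   /* 1. my own deque */
    if (task != NO_TASK)
        return task;
    task = deque_pop_back(&p->global);             /* 2. the global queue */
    if (task != NO_TASK)
        return task;
    for (int i = 0; i < p->nworkers; i++) {        /* 3. steal from others */
        if (i == self)
            continue;
        task = deque_pop_back(&p->local[i]);
        if (task != NO_TASK)
            return task;
    }
    return NO_TASK;   /* nothing anywhere; a real worker would sleep here */
}
```

Because each deque takes only its own lock, a thief and the owner contend only when they touch the same deque, which is the point of the last bullet above.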

Work Helping
- In future_get, you can only return the result once the future is done executing
- The task might not be completed when future_get is called
- It might not even be running yet!

Example (pseudocode)

    fib(int n) {
        future* f = submit(fib, n - 1);
        int y = fib(n - 2);
        return future_get(f) + y;
    }

Work Helping
- Consider what needs to happen for you to get the result from future_get in all cases
- If the future is already executed, you’re done, you have the result!
  - Woo!
- If the future is not done?

Work Helping
- Naively, you could try to block on a semaphore/condition variable until the future is completed, then return the result
- What if you only have one thread in the threadpool?

Work Helping
- You want to minimize threads sleeping, and maximize the time threads are executing tasks
- If no threads are executing the task you depend on, you might as well do it yourself
- What if a thread is executing the task you depend on?
  - May be beneficial to execute other tasks instead of sleeping until that task is done
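A minimal sketch of the "do it yourself" case follows. It assumes a three-state future and shows only the helping decision. The `fork_join_task_t` signature is assumed (check threadpool.h), and `enum future_state`, `future_init`, `future_try_claim`, `future_run_claimed`, `future_get_helping`, and `square` are hypothetical names. A real implementation would also have to take the claimed future off whatever queue it sits on (or have workers skip futures that are already claimed), and could run other tasks instead of waiting.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

struct thread_pool;   /* opaque in this sketch */

/* Assumed signature; check threadpool.h for the real typedef. */
typedef void *(*fork_join_task_t)(struct thread_pool *pool, void *data);

enum future_state { FUTURE_PENDING, FUTURE_RUNNING, FUTURE_DONE };

struct future {
    fork_join_task_t task;
    void *args;
    void *result;
    struct thread_pool *pool;
    enum future_state state;   /* hypothetical: who, if anyone, has started it */
    pthread_mutex_t lock;      /* protects state and result */
    pthread_cond_t finished;   /* signaled when state becomes FUTURE_DONE */
};

static void future_init(struct future *f, struct thread_pool *pool,
                        fork_join_task_t task, void *args)
{
    f->task = task;
    f->args = args;
    f->result = NULL;
    f->pool = pool;
    f->state = FUTURE_PENDING;
    pthread_mutex_init(&f->lock, NULL);
    pthread_cond_init(&f->finished, NULL);
}

/* Returns 1 if the caller just took ownership of a not-yet-started future. */
static int future_try_claim(struct future *f)
{
    int claimed = 0;
    pthread_mutex_lock(&f->lock);
    if (f->state == FUTURE_PENDING) {
        f->state = FUTURE_RUNNING;
        claimed = 1;
    }
    pthread_mutex_unlock(&f->lock);
    return claimed;
}

/* Run a future this thread has claimed, then publish the result. */
static void future_run_claimed(struct future *f)
{
    void *r = f->task(f->pool, f->args);   /* run without holding the lock */
    pthread_mutex_lock(&f->lock);
    f->result = r;
    f->state = FUTURE_DONE;
    pthread_cond_broadcast(&f->finished);
    pthread_mutex_unlock(&f->lock);
}

/* Sketch of future_get with helping: run the task if nobody has started it. */
static void *future_get_helping(struct future *f)
{
    if (future_try_claim(f))
        future_run_claimed(f);             /* nobody started it: do it ourselves */

    pthread_mutex_lock(&f->lock);
    while (f->state != FUTURE_DONE)        /* someone else is running it: wait */
        pthread_cond_wait(&f->finished, &f->lock);
    void *r = f->result;
    pthread_mutex_unlock(&f->lock);
    return r;
}

/* Sample task for trying the sketch: squares the int it is given. */
static void *square(struct thread_pool *pool, void *data)
{
    (void)pool;
    int *n = data;
    *n = *n * *n;
    return n;
}
```

With this shape, a pool with a single thread cannot deadlock on a future that was never started, because the thread that calls get runs the task itself.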

Implementation Decisions

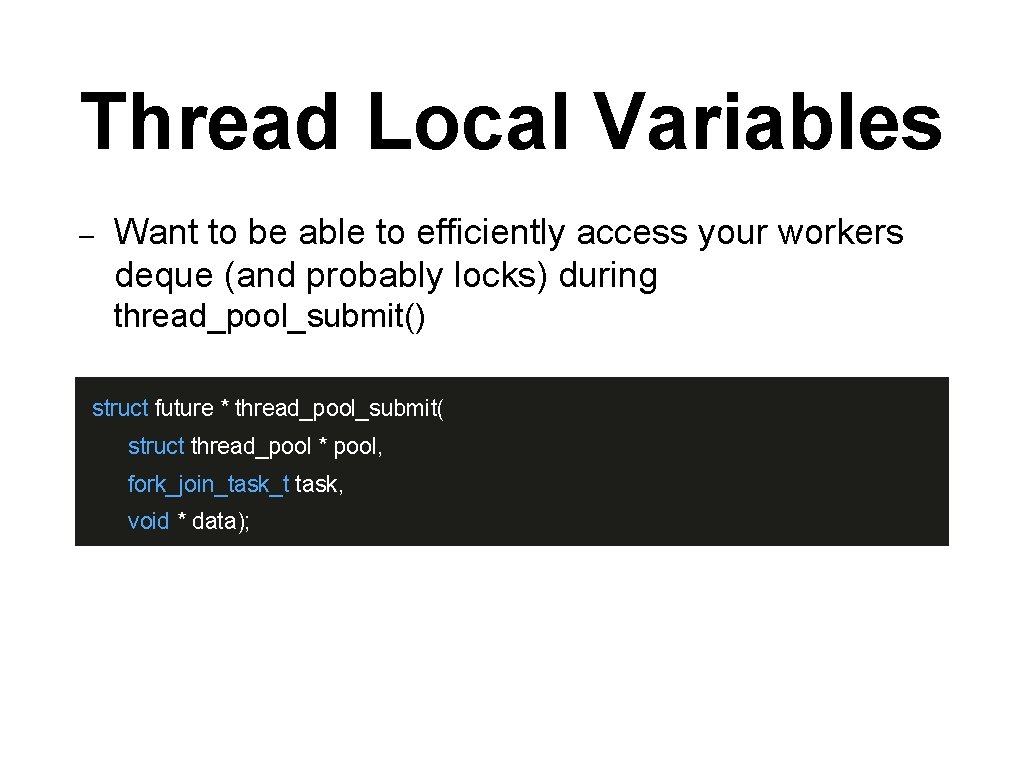

Thread Local Variables
- Want to be able to efficiently access your worker’s deque (and probably locks) during thread_pool_submit()

    struct future * thread_pool_submit(
        struct thread_pool * pool,
        fork_join_task_t task,
        void * data);

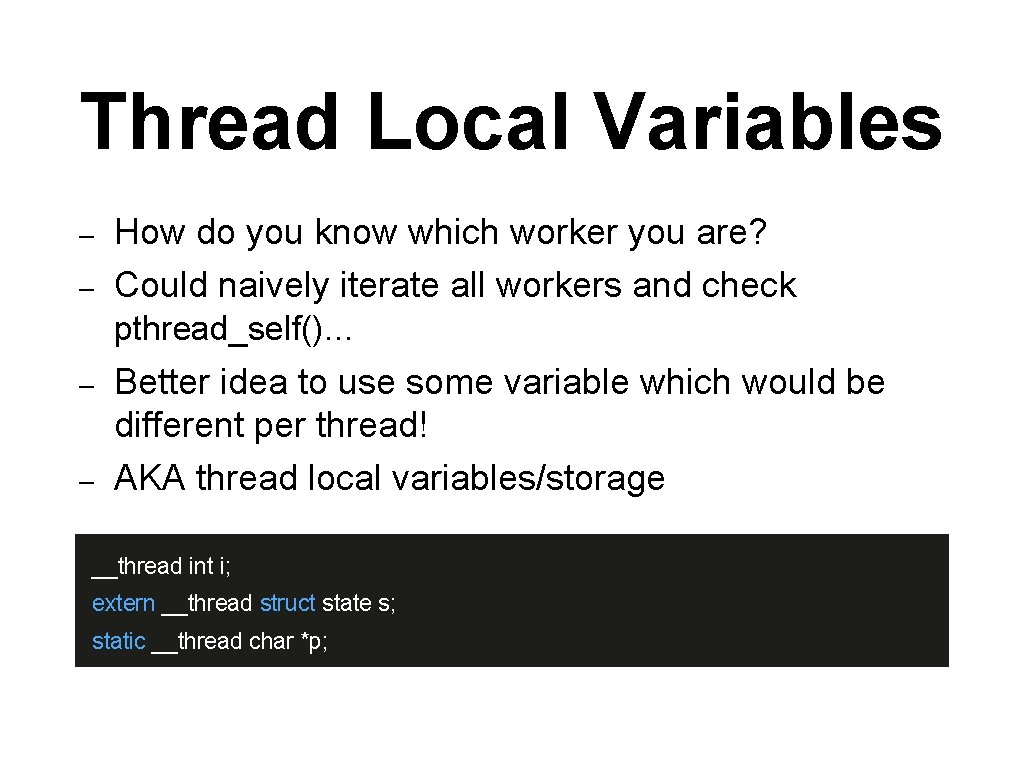

Thread Local Variables
- How do you know which worker you are?
  - Could naively iterate all workers and check pthread_self()...
- Better idea to use some variable which would be different per thread!
  - AKA thread local variables/storage

    __thread int i;
    extern __thread struct state s;
    static __thread char *p;
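As a small illustration of the idea, each worker thread below stores a pointer to its own state in a `__thread` variable when it starts, so later code can tell a worker from an outside thread without any search. `struct worker`, `current_worker`, `submit_target`, `worker_main`, and `run_workers` are hypothetical names for this sketch. `__thread` is a GCC/Clang extension (C11 spells it `_Thread_local`).

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

/* Hypothetical per-worker state; a real worker would also own a deque. */
struct worker {
    int id;
    int observed_target;   /* what submit_target() returned on this thread */
    pthread_t thread;
};

/* One copy per thread; stays NULL in threads that are not pool workers. */
static __thread struct worker *current_worker = NULL;

/* Where would a task submitted by the calling thread go?
 * Returns the worker's id for an internal submit, -1 for the global queue. */
static int submit_target(void)
{
    return current_worker != NULL ? current_worker->id : -1;
}

static void *worker_main(void *arg)
{
    struct worker *w = arg;
    current_worker = w;                    /* remember who we are, once */
    w->observed_target = submit_target();  /* later lookups need no search */
    return NULL;
}

/* Start n workers and wait for them. Returns 0 if all were started. */
static int run_workers(struct worker *workers, int n)
{
    int started = 0;
    for (; started < n; started++) {
        workers[started].id = started;
        workers[started].observed_target = -2;   /* "not set yet" marker */
        if (pthread_create(&workers[started].thread, NULL,
                           worker_main, &workers[started]) != 0)
            break;
    }
    for (int i = 0; i < started; i++)
        pthread_join(workers[i].thread, NULL);
    return started == n ? 0 : -1;
}
```

The thread that calls `run_workers` never sets `current_worker`, so `submit_target()` returns -1 there. That is one way to tell an external submission from one made inside a running task.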

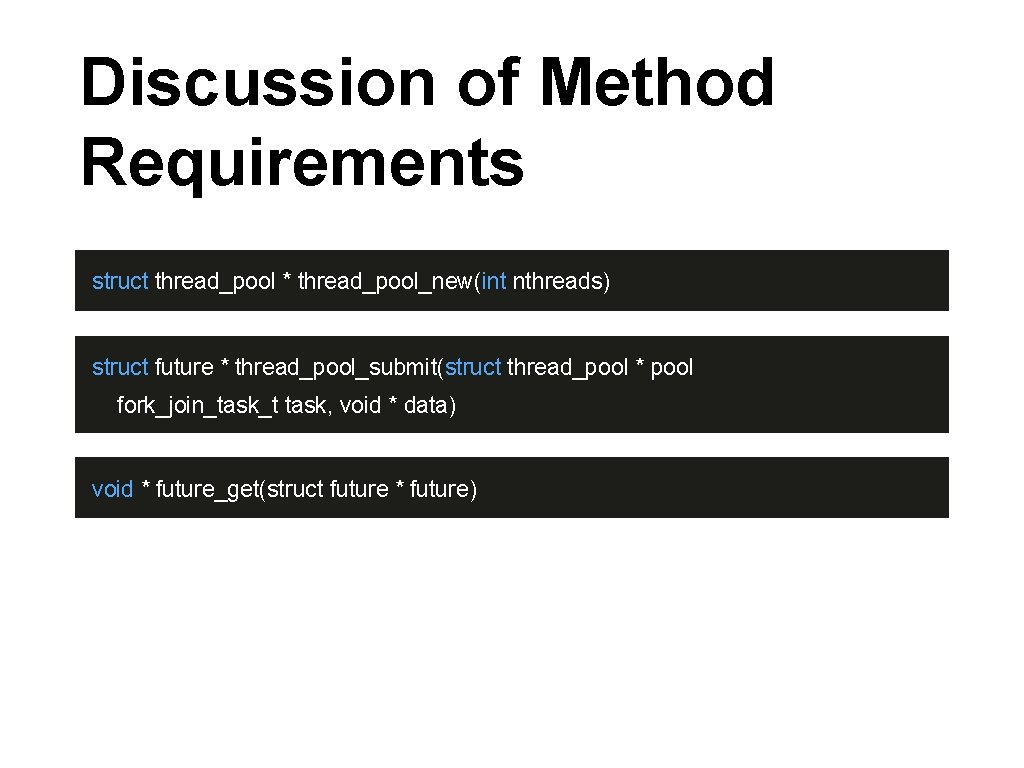

Discussion of Method Requirements

    struct thread_pool * thread_pool_new(int nthreads)
    struct future * thread_pool_submit(struct thread_pool * pool,
                                       fork_join_task_t task, void * data)
    void * future_get(struct future * future)

Logistics / Grading

Logistics
- Please submit code that compiles
- Test using the driver before submitting!
  - Don’t just run the tests individually
- “Passing” a test means that you get the correct result without crashing, within the time limit
- When grading, these tests will be run 3-5 times, and if you crash a single time, it’s considered failing
- Benchmarked times will be the average of the 3-5 runs, assuming you pass all of them

Logistics: Grading
- Grade breakdown:
  - 9 points for logistics (git, documentation, etc.)
  - 18 points for basic tests
  - 28 points for advanced tests
  - 45 points for performance
- New dimension to systems assignments: performance

Logistics: Test Points
- Test points breakdown:
  - 6 points per basic test, 2 per thread count for passing
  - 2 points per advanced test/size; only counts if you pass all thread counts for a test
  - That is, if you pass Mergesort Large with 5 threads but don’t pass with 20 threads, you won’t get any points for Mergesort Large

Logistics: Performance
- Relative to peers and sample implementations
- No multithread performance increase = none of the performance points (you may still get correctness points)
- Points only for the tests on the scoreboard (N queens, mergesort, quicksort, all the largest size)
- 5 points per thread count per test
- Total of 45 points

Logistics: Performance
- Pay attention to how your peers are doing on the scoreboard
- Aim for less than 5 seconds on the sorts (with 20 threads) and less than 2 seconds on N queens (with 20 threads) as a very loose guideline based on previous results

![Test Driver cs 3214binfjdriver py options Run with r for only Test Driver $ ~cs 3214/bin/fjdriver. py [options] – – Run with -r for only](https://slidetodoc.com/presentation_image_h2/a8a4dff3e0b61314f5ebd2655724f64c/image-37.jpg)

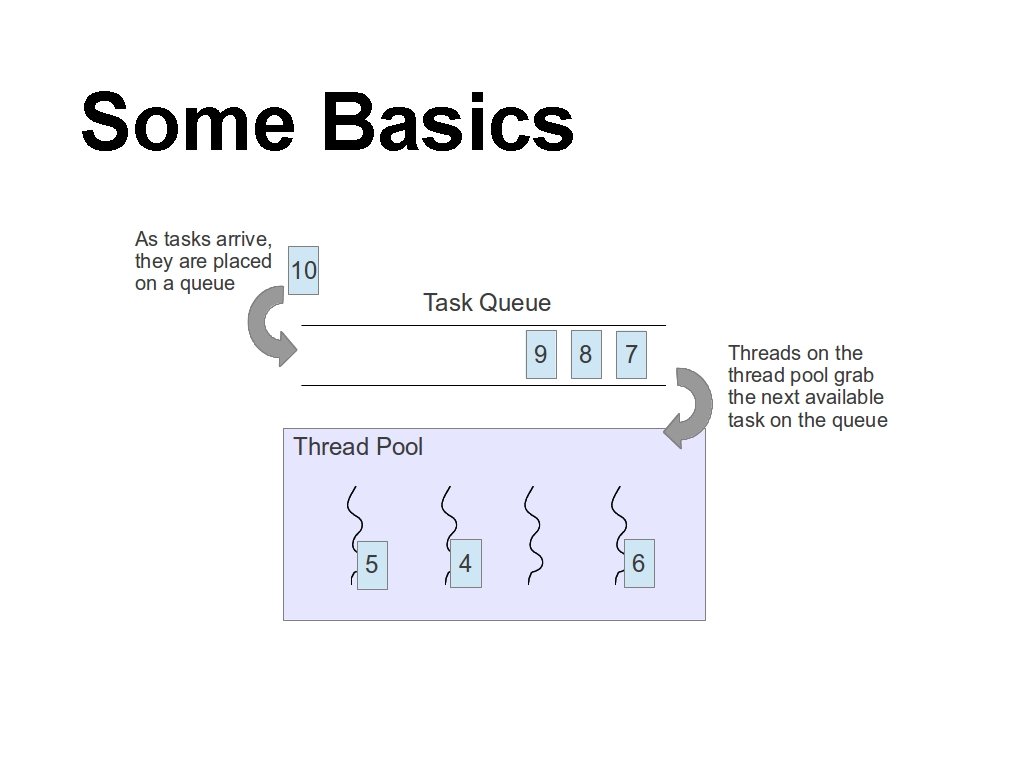

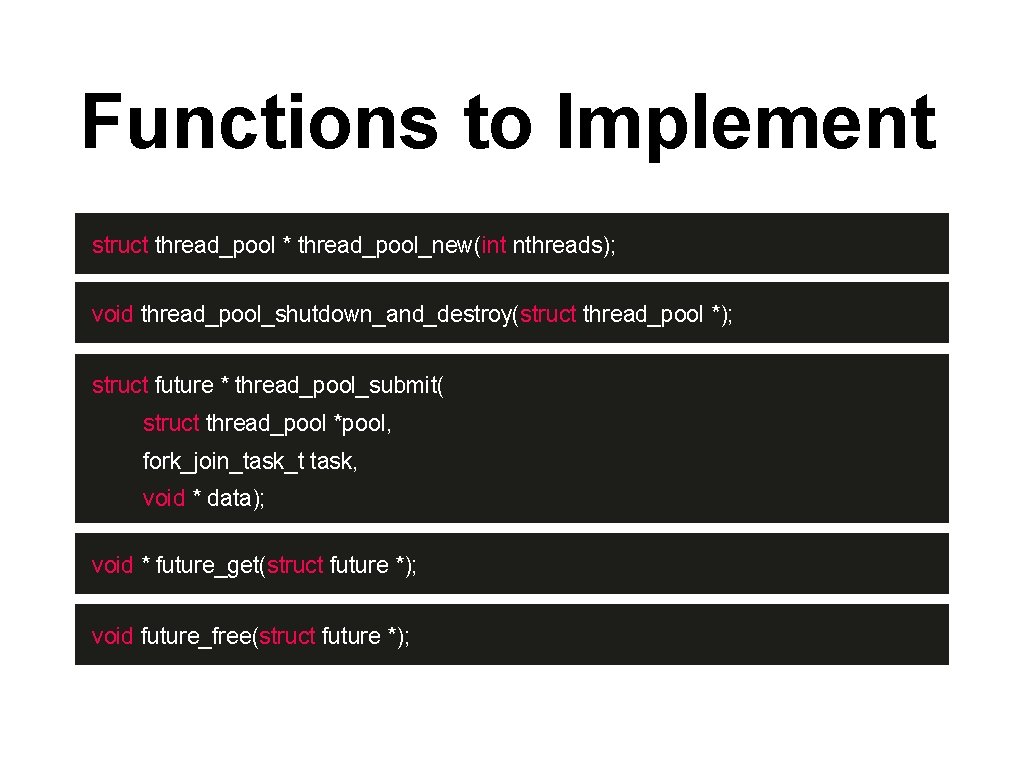

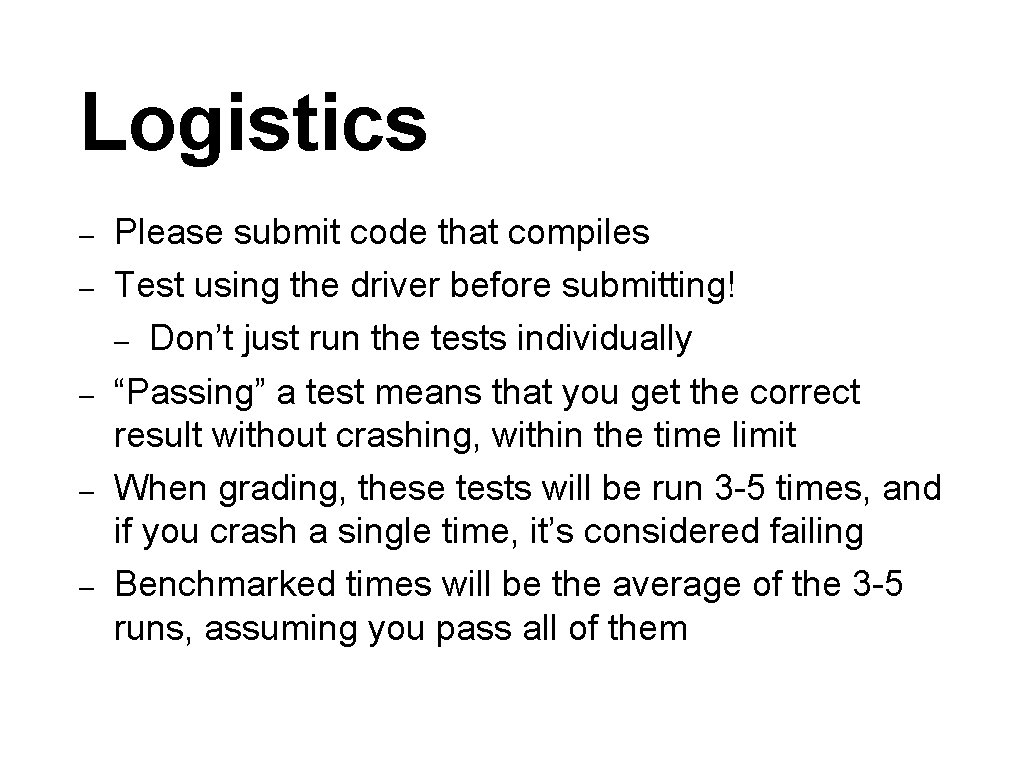

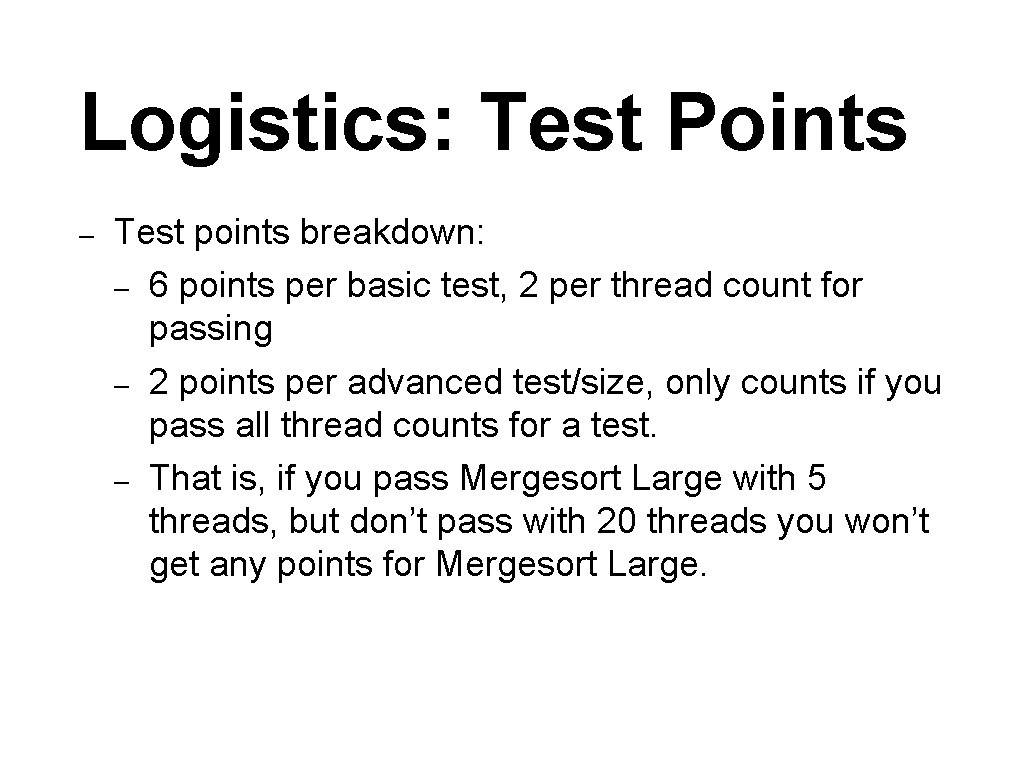

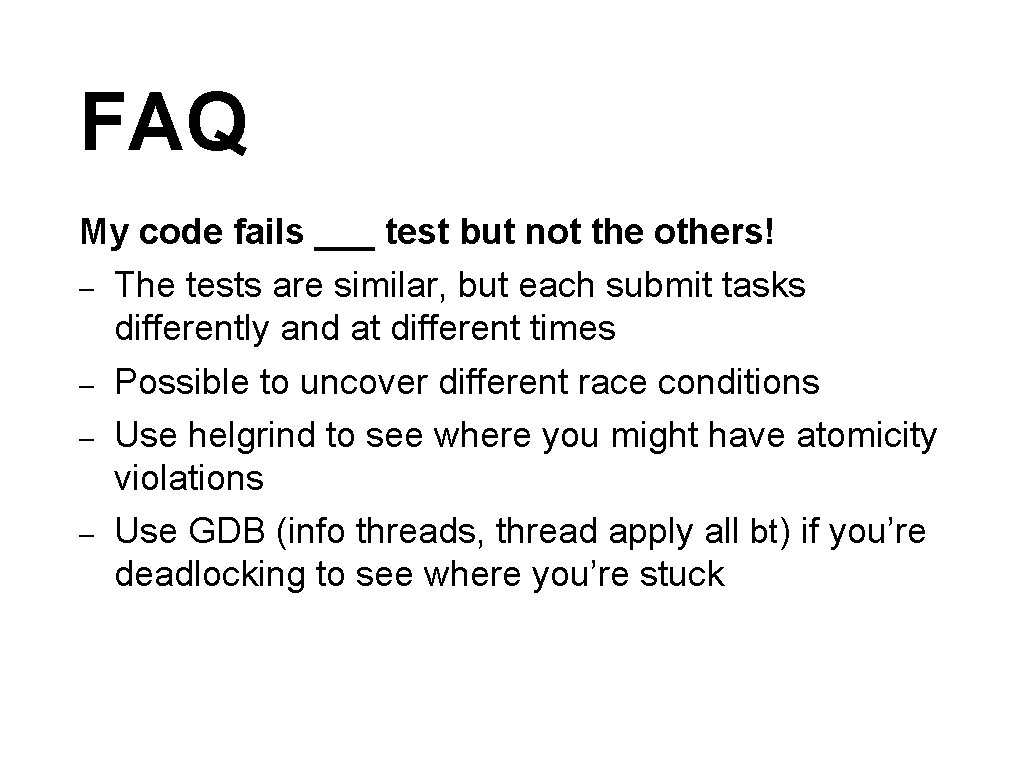

Test Driver

    $ ~cs3214/bin/fjdriver.py [options]

- Run with -r for only basic tests
- Run with -t basic1 to select one test
- Can take a long time to run all tests
- Reports if you passed each test, and times for the benchmarked ones

Test Driver
- Make sure you run multiple times; race conditions can cause you to crash only 20% of the time
- Will run multiple times to ensure consistency when grading (and get a good average for times)
- All of the tests are C programs, compiled against your threadpool
- Threadpool acts as a library
  - Some toy tests (parallel fibonacci)
  - Some more practical tests that are benchmarked

Test Driver
- Simulate grading environment:

    $ ~cs3214/bin/fjdriver.py -g -B 5

- Runs the tests 5 times and averages results

Scoreboard
- https://courses.cs.vt.edu/~cs3214/spring2019/#/fjpoolstats
- You can post your results to the scoreboard by using the fjpostresults.py script
- Remove your old submissions to not clog it up

FAQ / Discussion

FAQ
How long does this take?
- Writing, not so long
  - Implementations are roughly 250 lines
- Can spend a lot of time debugging
  - GDB, Helgrind, and regular Valgrind are friends
- Start early, as with all the projects
- Don’t underestimate creating prototypes or trying out different strategies

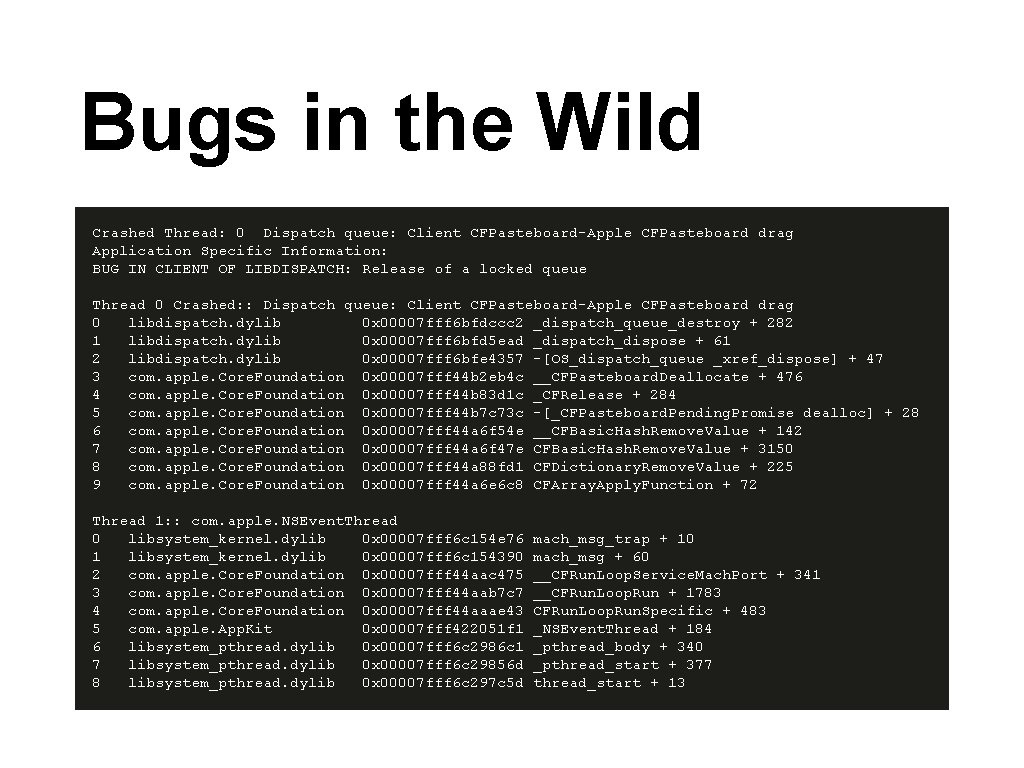

FAQ
My code only fails the test sometimes, do I get partial credit?
- No, unless you get lucky and it doesn’t fail when grading

FAQ
My code fails ___ test but not the others!
- The tests are similar, but each submits tasks differently and at different times
- Possible to uncover different race conditions
- Use Helgrind to see where you might have atomicity violations
- Use GDB (info threads, thread apply all bt) if you’re deadlocking to see where you’re stuck

Debugging
- Multi-threading is difficult
- Sometimes GDB will be useful, other times you may want to try Helgrind instead
- Unless you’re doing something very fancy, your code should run quietly under Helgrind
  - However, running quietly under Helgrind gives no guarantees

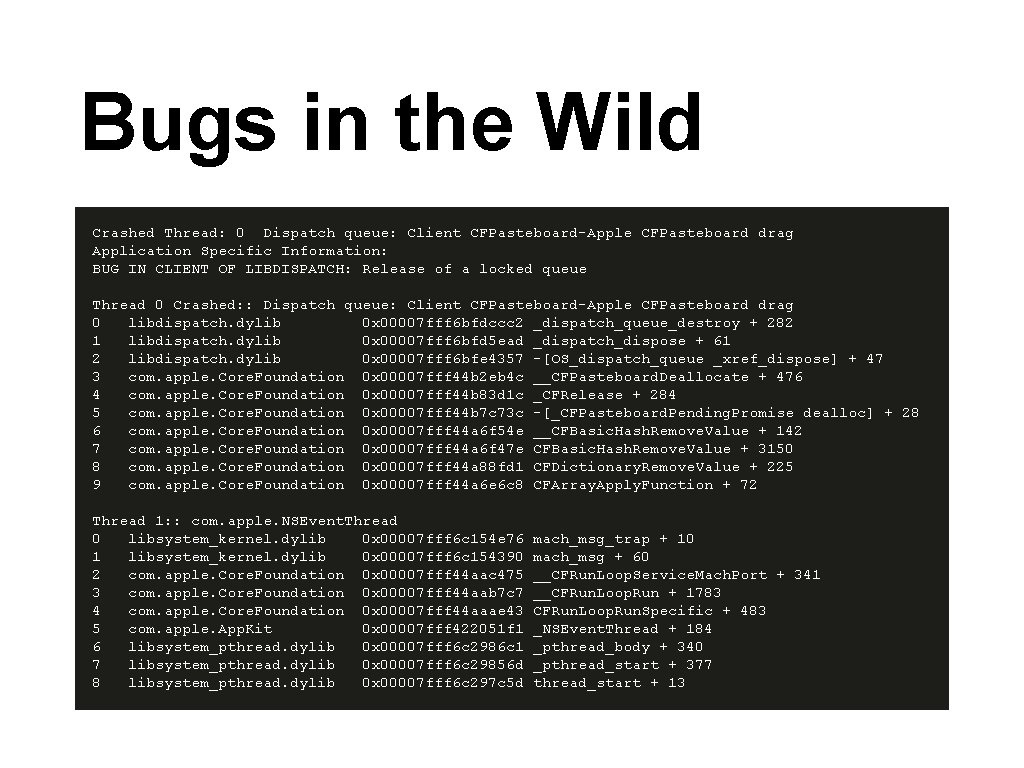

Bugs in the Wild

    Crashed Thread:        0  Dispatch queue: Client CFPasteboard-Apple CFPasteboard drag

    Application Specific Information:
    BUG IN CLIENT OF LIBDISPATCH: Release of a locked queue

    Thread 0 Crashed:: Dispatch queue: Client CFPasteboard-Apple CFPasteboard drag
    0   libdispatch.dylib          0x00007fff6bfdccc2 _dispatch_queue_destroy + 282
    1   libdispatch.dylib          0x00007fff6bfd5ead _dispatch_dispose + 61
    2   libdispatch.dylib          0x00007fff6bfe4357 -[OS_dispatch_queue _xref_dispose] + 47
    3   com.apple.CoreFoundation   0x00007fff44b2eb4c __CFPasteboardDeallocate + 476
    4   com.apple.CoreFoundation   0x00007fff44b83d1c _CFRelease + 284
    5   com.apple.CoreFoundation   0x00007fff44b7c73c -[_CFPasteboardPendingPromise dealloc] + 28
    6   com.apple.CoreFoundation   0x00007fff44a6f54e __CFBasicHashRemoveValue + 142
    7   com.apple.CoreFoundation   0x00007fff44a6f47e CFBasicHashRemoveValue + 3150
    8   com.apple.CoreFoundation   0x00007fff44a88fd1 CFDictionaryRemoveValue + 225
    9   com.apple.CoreFoundation   0x00007fff44a6e6c8 CFArrayApplyFunction + 72

    Thread 1:: com.apple.NSEventThread
    0   libsystem_kernel.dylib     0x00007fff6c154e76 mach_msg_trap + 10
    1   libsystem_kernel.dylib     0x00007fff6c154390 mach_msg + 60
    2   com.apple.CoreFoundation   0x00007fff44aac475 __CFRunLoopServiceMachPort + 341
    3   com.apple.CoreFoundation   0x00007fff44aab7c7 __CFRunLoopRun + 1783
    4   com.apple.CoreFoundation   0x00007fff44aaae43 CFRunLoopRunSpecific + 483
    5   com.apple.AppKit           0x00007fff422051f1 _NSEventThread + 184
    6   libsystem_pthread.dylib    0x00007fff6c2986c1 _pthread_body + 340
    7   libsystem_pthread.dylib    0x00007fff6c29856d _pthread_start + 377
    8   libsystem_pthread.dylib    0x00007fff6c297c5d thread_start + 13

Debugging
- Also try out Willgrind, a tool developed specifically for this project
- Find race conditions, deadlock, and even profile how balanced the tasks are between your threads

Questions? Thank you for attending!