COS 318 Project 4 Preemptive Scheduling Fall 2002

- Slides: 23

COS 318 - Project #4 Preemptive Scheduling Fall 2002 3/5/2021

Overview

- Implement a preemptive OS with:
  - All the functionality of the previous project's OS
  - Additional thread synchronization:
    - Mesa-style monitors (condition variables)
  - Support for preemptive scheduling:
    - Implement the timer interrupt handler (irq0)
    - Enforce all necessary atomicity

Review: Processes and Threads

- Threads (trusted)
  - Linked with the kernel
  - Can share address space, variables, etc.
  - Access kernel services (yield, exit, lock_*, condition_*, getpid(), *priority()) with direct calls to kernel functions
  - Use a single stack while running thread code and kernel code

*Differences from previous project in italics

Review: Processes and Threads, cont'd

- Processes (untrusted)
  - Linked separately from the kernel
  - Appear after the kernel in the image file
  - Cannot share address space, variables, etc.
  - Access kernel services (yield, exit, getpid, *priority) via a unified system call mechanism
  - Use a user stack while running process code and a kernel stack while running kernel-level code

*Differences from previous project in italics

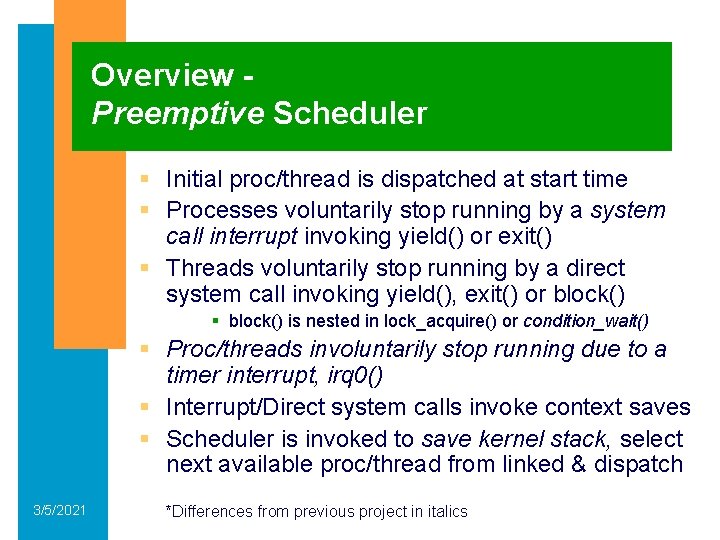

Overview: Preemptive Scheduler

- The initial proc/thread is dispatched at start time
- Processes voluntarily stop running via a system call interrupt that invokes yield() or exit()
- Threads voluntarily stop running via a direct system call to yield(), exit(), or block()
  - block() is nested in lock_acquire() or condition_wait()
- Procs/threads involuntarily stop running due to a timer interrupt, irq0()
- Interrupts and direct system calls invoke context saves
- The scheduler is invoked to save the kernel stack, select the next available proc/thread from the linked list, and dispatch it

*Differences from previous project in italics
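The "select next available proc/thread" step can be pictured as a walk around a circular linked list of PCBs. The following is only a minimal sketch under that assumption: the `pcb_t` fields, `ready_insert()`, and `schedule_next()` are hypothetical names for illustration, and the project's real scheduler also saves the kernel stack and dispatches.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, heavily simplified PCB; the project's real PCB has more fields. */
typedef struct pcb {
    int pid;
    struct pcb *next;   /* next PCB in the circular ready list */
    struct pcb *prev;   /* previous PCB in the circular ready list */
} pcb_t;

static pcb_t *current_running = NULL;

/* Insert p just before current_running, i.e. at the end of the round-robin order. */
static void ready_insert(pcb_t *p) {
    if (current_running == NULL) {
        p->next = p->prev = p;
        current_running = p;
        return;
    }
    p->next = current_running;
    p->prev = current_running->prev;
    current_running->prev->next = p;
    current_running->prev = p;
}

/* Round-robin selection: advance to the next PCB and return its pid.
   A real schedule() would save the kernel stack first and then dispatch. */
static int schedule_next(void) {
    assert(current_running != NULL);
    current_running = current_running->next;
    return current_running->pid;
}
```

With three PCBs inserted in order, repeated calls to `schedule_next()` cycle through them and wrap around to the first.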

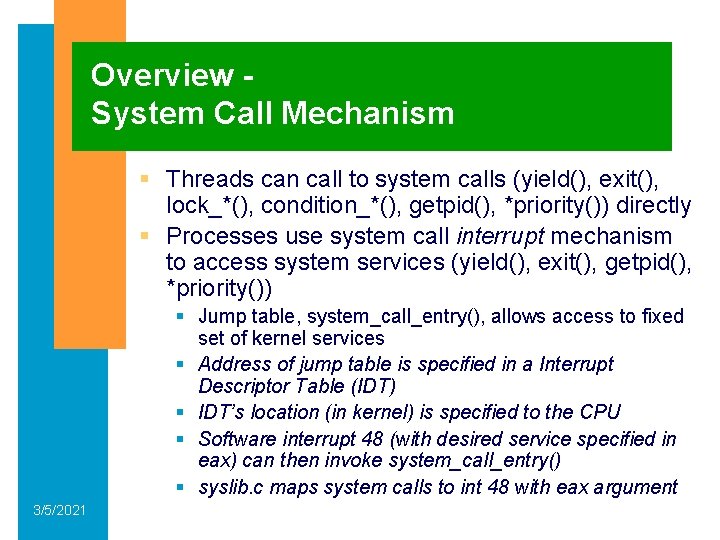

Overview: System Call Mechanism

- Threads can invoke system calls (yield(), exit(), lock_*(), condition_*(), getpid(), *priority()) directly
- Processes use the system call interrupt mechanism to access system services (yield(), exit(), getpid(), *priority())
  - A jump table, system_call_entry(), allows access to a fixed set of kernel services
  - The address of the jump table is specified in an Interrupt Descriptor Table (IDT)
  - The IDT's location (in the kernel) is specified to the CPU
  - Software interrupt 48 (with the desired service specified in eax) can then invoke system_call_entry()
  - syslib.c maps system calls to int 48 with the eax argument
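The jump-table idea can be sketched in plain C as an array of function pointers indexed by the value a process places in eax. This is an illustration only: the service numbers, the `k_*` stubs, and `dispatch_syscall()` are assumptions made for the example, and the real system_call_entry() also saves context and switches stacks around the call.

```c
#include <assert.h>

/* Hypothetical service numbers; the real values are defined by the project code. */
enum { SYS_YIELD = 0, SYS_EXIT, SYS_GETPID, SYS_COUNT };

static int yield_calls = 0;
static int k_yield(void)  { yield_calls++; return 0; }  /* stub kernel service */
static int k_exit(void)   { return 0; }                 /* stub kernel service */
static int k_getpid(void) { return 7; }                 /* stub: fixed pid */

typedef int (*syscall_fn)(void);

/* Fixed set of kernel services reachable from processes. */
static const syscall_fn syscall_table[SYS_COUNT] = {
    [SYS_YIELD]  = k_yield,
    [SYS_EXIT]   = k_exit,
    [SYS_GETPID] = k_getpid,
};

/* Stand-in for the table lookup inside system_call_entry():
   eax selects the service, and out-of-range values are rejected. */
static int dispatch_syscall(int eax) {
    if (eax < 0 || eax >= SYS_COUNT) {
        return -1;
    }
    return syscall_table[eax]();
}
```

Because the table is fixed, a process can reach only the listed services, which is what keeps untrusted code away from the rest of the kernel.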

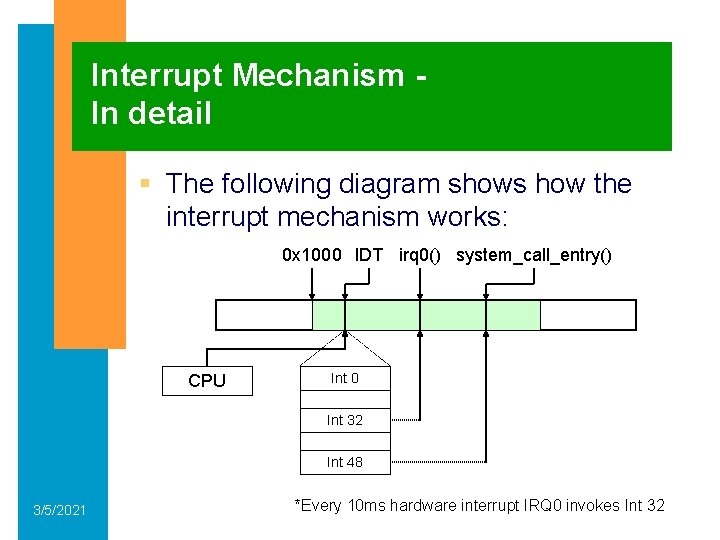

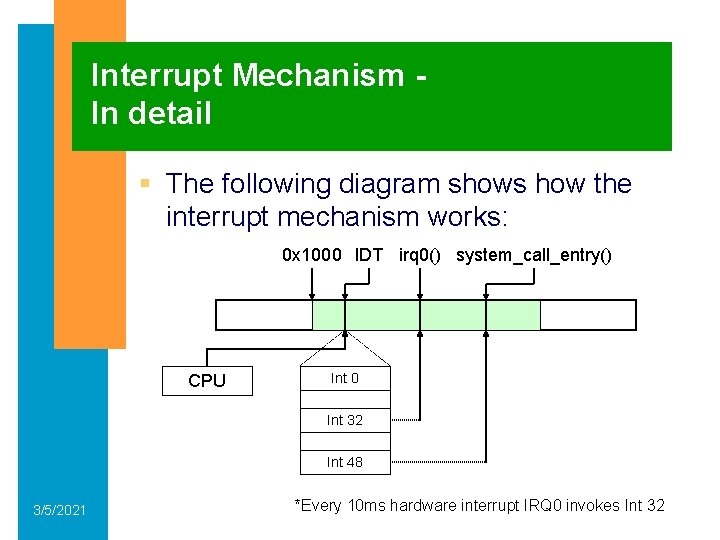

Interrupt Mechanism in Detail

- The following diagram shows how the interrupt mechanism works:

[Diagram labels: CPU; Int 0, Int 32, Int 48; IDT at 0x1000; irq0(); system_call_entry()]

*Every 10 ms, hardware interrupt IRQ 0 invokes Int 32
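For readers who want to see what an IDT entry looks like, here is a sketch of the 8-byte 32-bit x86 interrupt gate and a helper that fills one in. The project's kernel code already sets up the IDT, so this is background only. The names `idt_gate_t` and `set_gate()`, the selector value 0x08, and the handler addresses used in any example call are assumptions, not taken from the project source.

```c
#include <assert.h>
#include <stdint.h>

/* Layout of one 32-bit x86 interrupt gate (8 bytes). */
typedef struct {
    uint16_t offset_low;   /* handler address, bits 0..15 */
    uint16_t selector;     /* code segment selector */
    uint8_t  zero;         /* reserved, always 0 */
    uint8_t  type_attr;    /* present bit, privilege level, gate type */
    uint16_t offset_high;  /* handler address, bits 16..31 */
} idt_gate_t;

enum { IRQ0_VECTOR = 32, SYSCALL_VECTOR = 48, IDT_SIZE = 49 };

/* KERNEL_CS = 0x08 is an assumed, commonly used selector value.
   0x8E = present, DPL 0, 32-bit interrupt gate.
   0xEE = present, DPL 3, 32-bit interrupt gate (usable from user code via int). */
enum { KERNEL_CS = 0x08, GATE_KERNEL = 0x8E, GATE_USER = 0xEE };

static idt_gate_t idt[IDT_SIZE];

/* Point the given vector at a handler address. */
static void set_gate(int vector, uint32_t handler, uint8_t type_attr) {
    assert(vector >= 0 && vector < IDT_SIZE);
    idt[vector].offset_low  = (uint16_t)(handler & 0xFFFF);
    idt[vector].selector    = KERNEL_CS;
    idt[vector].zero        = 0;
    idt[vector].type_attr   = type_attr;
    idt[vector].offset_high = (uint16_t)(handler >> 16);
}
```

In this picture, vector 32 would hold the address of irq0() and vector 48 the address of system_call_entry(); the latter needs a privilege level that lets processes raise it with a software interrupt.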

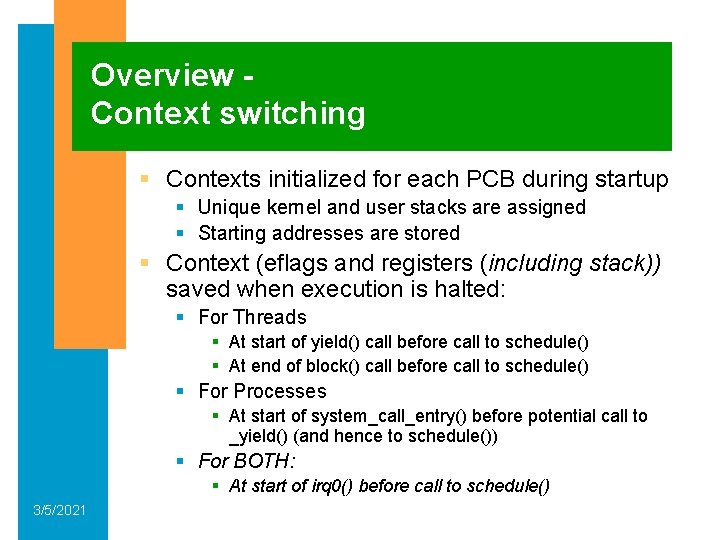

Overview: Context Switching

- Contexts are initialized for each PCB during startup
  - Unique kernel and user stacks are assigned
  - Starting addresses are stored
- Context (eflags and registers, including the stack pointer) is saved when execution is halted:
  - For threads
    - At the start of the yield() call, before the call to schedule()
    - At the end of the block() call, before the call to schedule()
  - For processes
    - At the start of system_call_entry(), before a potential call to _yield() (and hence to schedule())
  - For both
    - At the start of irq0(), before the call to schedule()

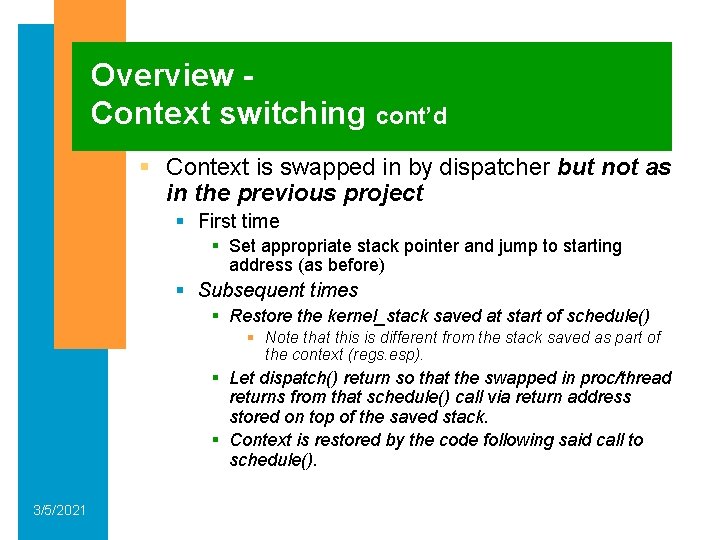

Overview: Context Switching, cont'd

- Context is swapped in by the dispatcher, but not as in the previous project
  - First time
    - Set the appropriate stack pointer and jump to the starting address (as before)
  - Subsequent times
    - Restore the kernel_stack saved at the start of schedule()
      - Note that this is different from the stack pointer saved as part of the context (regs.esp)
    - Let dispatch() return so that the swapped-in proc/thread returns from that schedule() call via the return address stored on top of the saved stack
    - Context is restored by the code following that call to schedule()

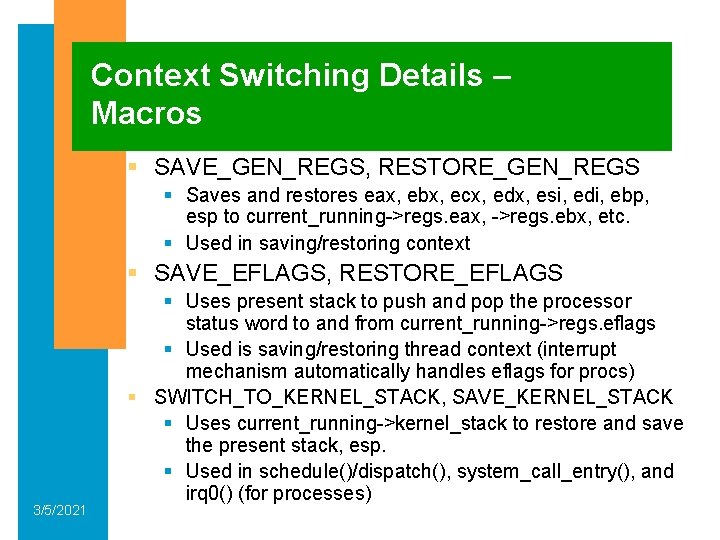

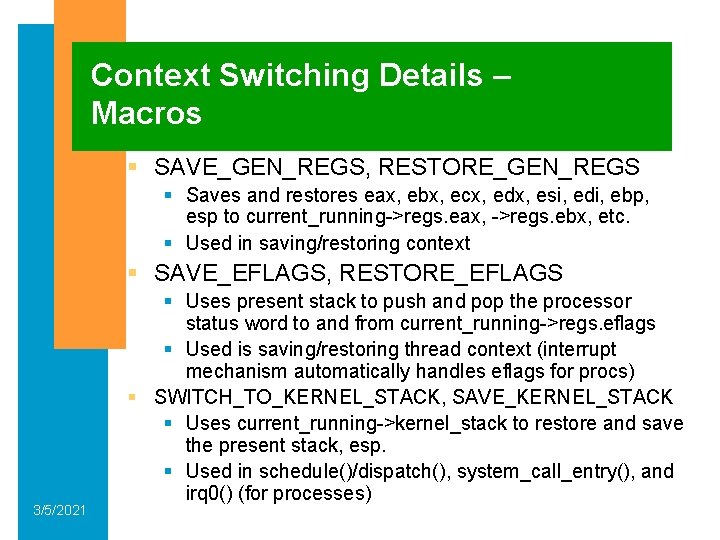

Context Switching Details: Macros

- SAVE_GEN_REGS, RESTORE_GEN_REGS
  - Save and restore eax, ebx, ecx, edx, esi, edi, ebp, esp to and from current_running->regs.eax, ->regs.ebx, etc.
  - Used in saving/restoring context
- SAVE_EFLAGS, RESTORE_EFLAGS
  - Use the present stack to push and pop the processor status word to and from current_running->regs.eflags
  - Used in saving/restoring thread context (the interrupt mechanism automatically handles eflags for procs)
- SWITCH_TO_KERNEL_STACK, SAVE_KERNEL_STACK
  - Use current_running->kernel_stack to restore and save the present stack pointer, esp
  - Used in schedule()/dispatch(), system_call_entry(), and irq0() (for processes)

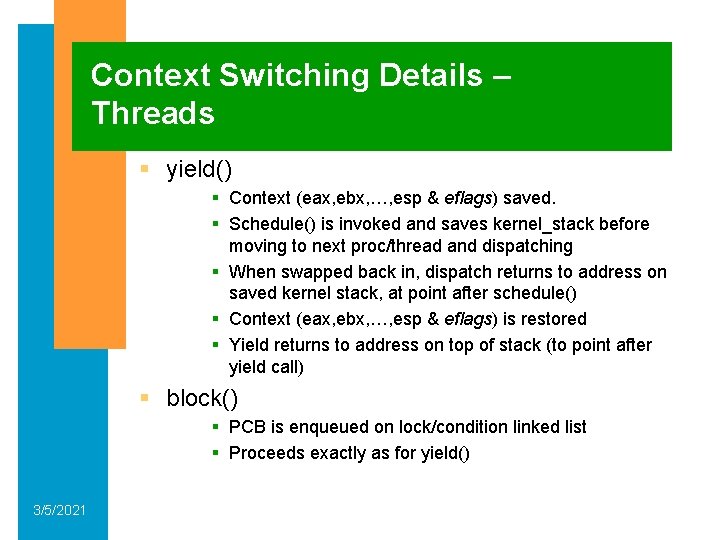

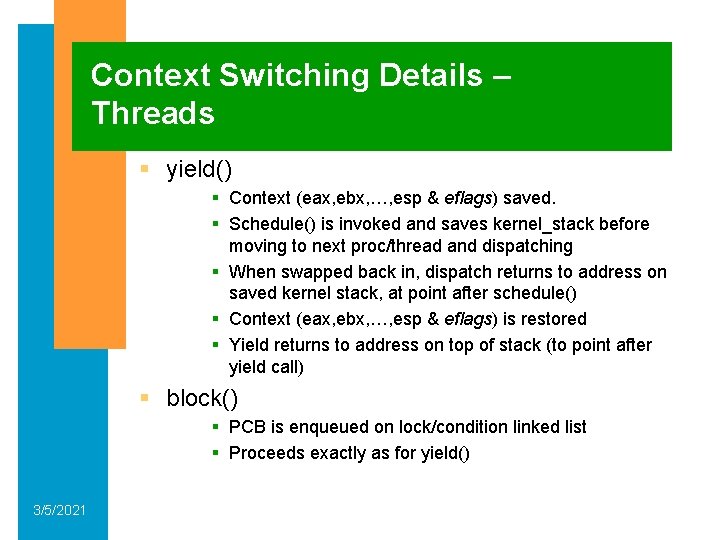

Context Switching Details: Threads

- yield()
  - Context (eax, ebx, …, esp and eflags) is saved
  - schedule() is invoked and saves kernel_stack before moving to the next proc/thread and dispatching
  - When swapped back in, dispatch returns to the address on the saved kernel stack, at the point after schedule()
  - Context (eax, ebx, …, esp and eflags) is restored
  - yield() returns to the address on top of the stack (to the point after the yield call)
- block()
  - The PCB is enqueued on the lock/condition linked list
  - Proceeds exactly as for yield()

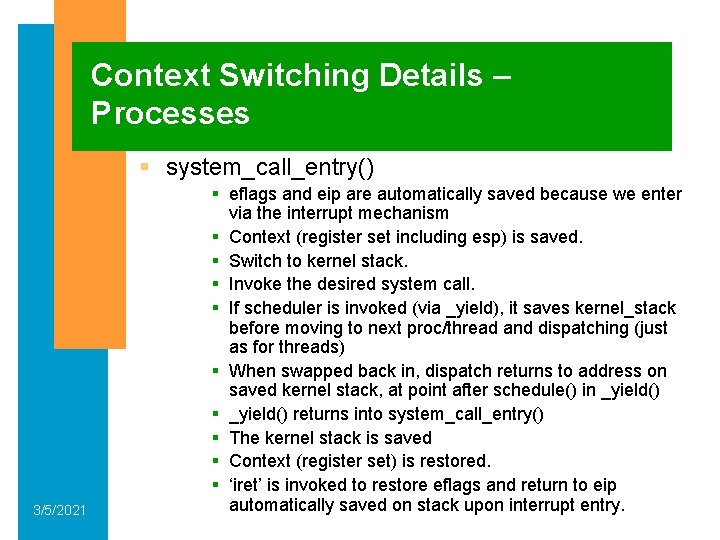

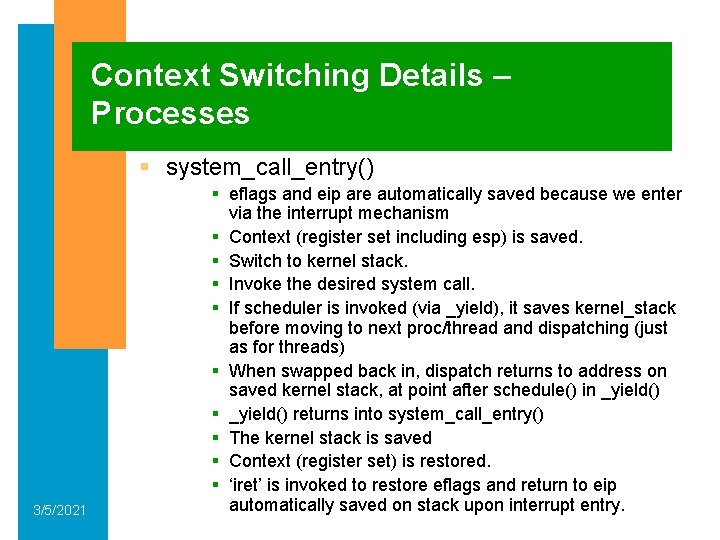

Context Switching Details: Processes

- system_call_entry()
  - eflags and eip are automatically saved because we enter via the interrupt mechanism
  - Context (register set including esp) is saved
  - Switch to the kernel stack
  - Invoke the desired system call
  - If the scheduler is invoked (via _yield()), it saves kernel_stack before moving to the next proc/thread and dispatching (just as for threads)
  - When swapped back in, dispatch returns to the address on the saved kernel stack, at the point after schedule() in _yield()
  - _yield() returns into system_call_entry()
  - The kernel stack is saved
  - Context (register set) is restored
  - 'iret' is invoked to restore eflags and return to the eip automatically saved on the stack upon interrupt entry

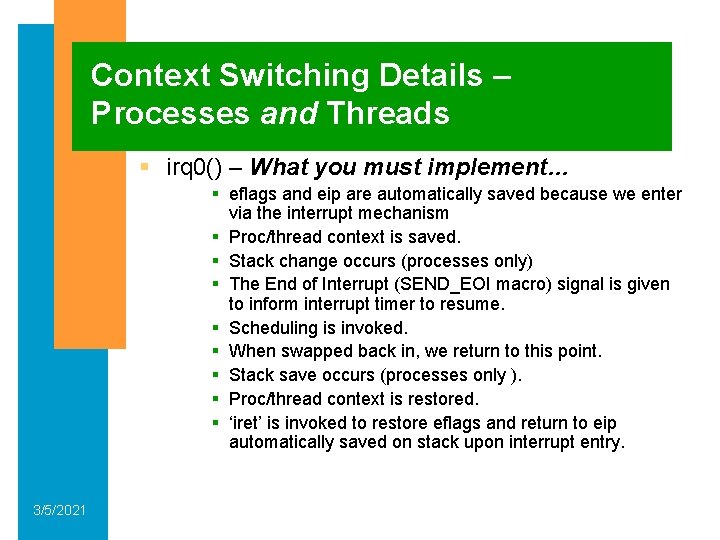

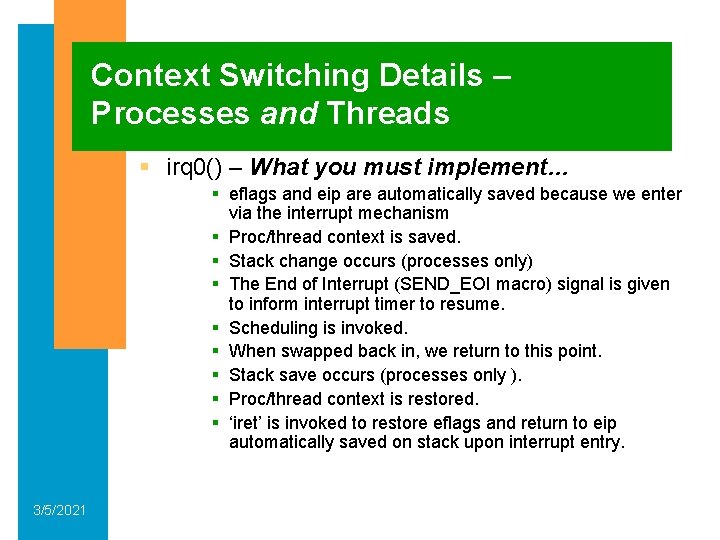

Context Switching Details: Processes and Threads

- irq0(): what you must implement
  - eflags and eip are automatically saved because we enter via the interrupt mechanism
  - Proc/thread context is saved
  - Stack change occurs (processes only)
  - The End of Interrupt signal (SEND_EOI macro) is given to inform the interrupt timer to resume
  - Scheduling is invoked
  - When swapped back in, we return to this point
  - Stack save occurs (processes only)
  - Proc/thread context is restored
  - 'iret' is invoked to restore eflags and return to the eip automatically saved on the stack upon interrupt entry
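The ordering of the irq0() steps matters more than any single step, so it may help to see the sequence as a trace. The sketch below is not a handler: every step is replaced by a stub that appends its name to a string, `is_process` is a hypothetical flag standing in for however the PCB distinguishes processes from threads, and the placement of the critical-section macros is deliberately left out.

```c
#include <assert.h>
#include <string.h>

static char trace[256];   /* records the order in which the stub steps run */
static int is_process;    /* hypothetical flag: 1 for a process, 0 for a thread */

/* Append one step name to the trace. */
static void step(const char *name) {
    strcat(trace, name);
    strcat(trace, ";");
}

/* Order of operations only; the real handler uses the project's macros
   (context save/restore, stack switch, SEND_EOI) and ends with iret. */
static void irq0_sketch(void) {
    step("save_context");
    if (is_process) {
        step("switch_to_kernel_stack");
    }
    step("send_eoi");
    step("schedule");            /* execution resumes here when swapped back in */
    if (is_process) {
        step("save_kernel_stack");
    }
    step("restore_context");
    step("iret");
}
```

Running the sketch once with `is_process` set and once with it cleared shows that the two stack steps are the only difference between the process path and the thread path.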

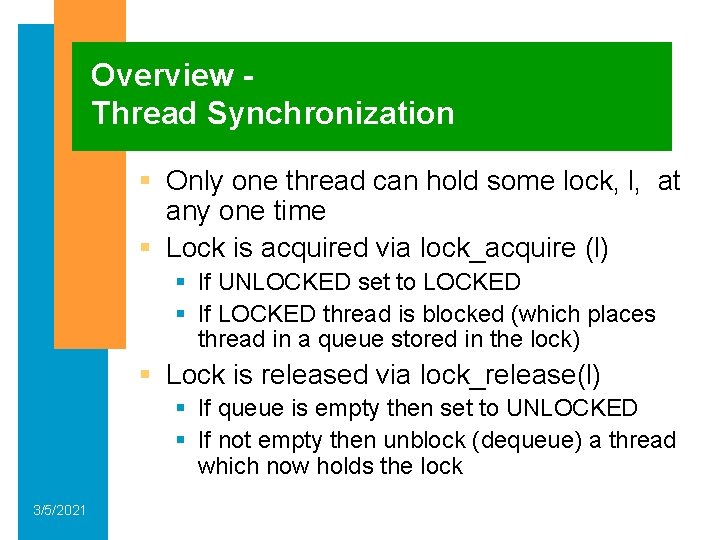

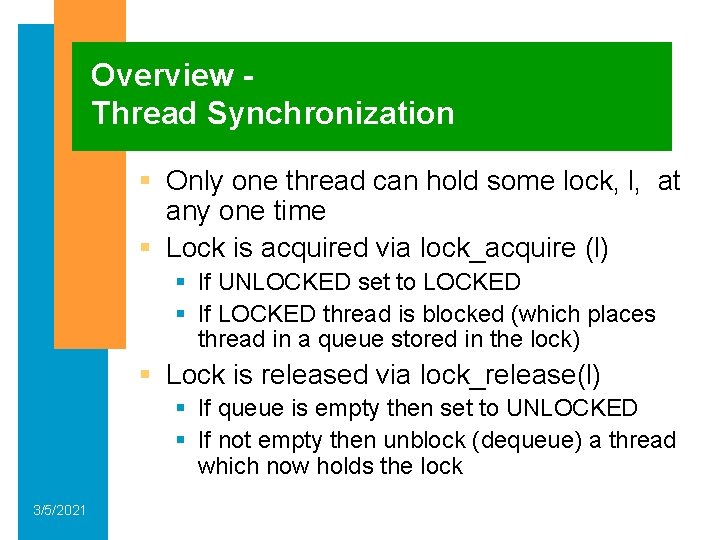

Overview: Thread Synchronization

- Only one thread can hold a given lock, l, at any one time
- A lock is acquired via lock_acquire(l)
  - If UNLOCKED, set it to LOCKED
  - If LOCKED, the thread is blocked (which places the thread in a queue stored in the lock)
- A lock is released via lock_release(l)
  - If the queue is empty, set the lock to UNLOCKED
  - If it is not empty, unblock (dequeue) a thread, which now holds the lock
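The lock rules above can be sketched as a small state machine with a FIFO wait queue. This is a simulation under stated assumptions: the `lock_t` and `pcb_t` layouts, `block_on()`, `unblock_from()`, and the `_sketch` function names are all hypothetical, the stand-in for block() only enqueues (the real one also calls schedule()), and the interrupt disabling that the real routines need is omitted.

```c
#include <assert.h>
#include <stddef.h>

typedef struct pcb { int pid; struct pcb *next; } pcb_t;   /* simplified PCB */
typedef enum { UNLOCKED, LOCKED } lock_status_t;
typedef struct {
    lock_status_t status;
    pcb_t *head, *tail;   /* FIFO queue of blocked threads */
} lock_t;

static pcb_t *current_running;

/* Stand-in for block(): enqueue the current thread on the lock. */
static void block_on(lock_t *l) {
    current_running->next = NULL;
    if (l->tail != NULL) l->tail->next = current_running;
    else l->head = current_running;
    l->tail = current_running;
}

/* Stand-in for unblock(): dequeue the longest-waiting thread. */
static pcb_t *unblock_from(lock_t *l) {
    pcb_t *p = l->head;
    l->head = p->next;
    if (l->head == NULL) l->tail = NULL;
    return p;
}

static void lock_acquire_sketch(lock_t *l) {
    if (l->status == UNLOCKED) l->status = LOCKED;
    else block_on(l);
}

/* Returns the thread that now holds the lock, or NULL if nobody was waiting. */
static pcb_t *lock_release_sketch(lock_t *l) {
    if (l->head == NULL) {
        l->status = UNLOCKED;
        return NULL;
    }
    return unblock_from(l);   /* lock stays LOCKED: ownership passes to the waiter */
}
```

Note that on release with a non-empty queue the status never becomes UNLOCKED; the lock is handed directly to the dequeued thread, as the last bullet describes.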

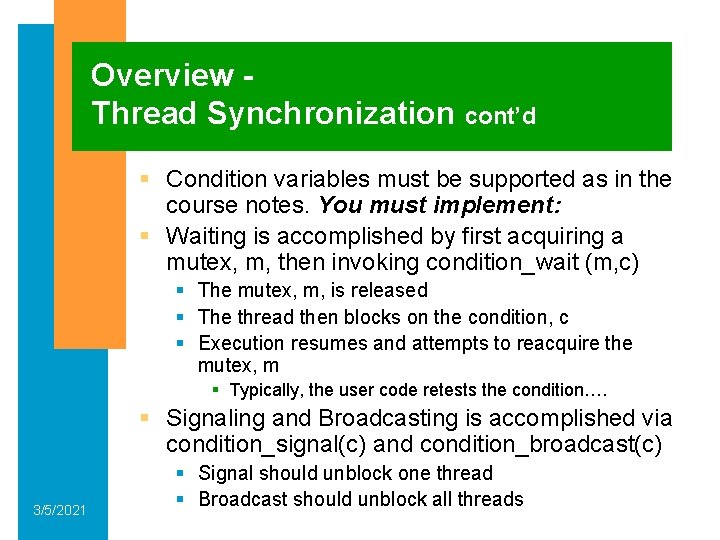

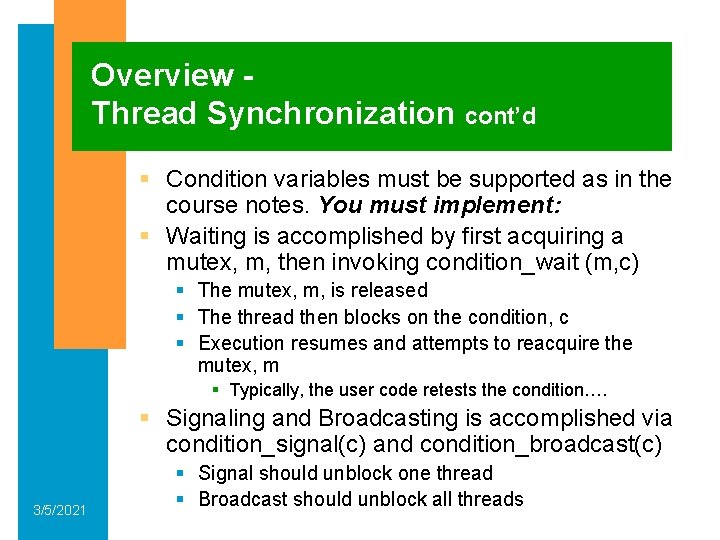

Overview: Thread Synchronization, cont'd

- Condition variables must be supported as in the course notes. You must implement:
  - Waiting is accomplished by first acquiring a mutex, m, then invoking condition_wait(m, c)
    - The mutex, m, is released
    - The thread then blocks on the condition, c
    - Execution resumes and attempts to reacquire the mutex, m
    - Typically, the user code retests the condition
  - Signaling and broadcasting are accomplished via condition_signal(c) and condition_broadcast(c)
    - Signal should unblock one thread
    - Broadcast should unblock all threads
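The wait, signal, and broadcast steps can be sketched as a single-threaded simulation. Everything here is an assumption made for illustration: `mutex_t`, the array-based `condition_t`, `current_pid`, the `runnable` list, and the `_sketch` names. In particular, the stub `block_on()` returns immediately, whereas the real block() does not return until the thread has been unblocked.

```c
#include <assert.h>

#define MAX_WAITERS 8

typedef struct { int locked; } mutex_t;                           /* stand-in for a lock */
typedef struct { int pids[MAX_WAITERS]; int count; } condition_t; /* FIFO of waiting pids */

static int current_pid;             /* stand-in for current_running */
static int runnable[MAX_WAITERS];   /* pids that have been unblocked */
static int n_runnable;

static void mutex_acquire(mutex_t *m) { assert(!m->locked); m->locked = 1; }
static void mutex_release(mutex_t *m) { assert(m->locked);  m->locked = 0; }

/* Stub for block(): only records the waiter. */
static void block_on(condition_t *c) {
    assert(c->count < MAX_WAITERS);
    c->pids[c->count++] = current_pid;
}

static void condition_wait_sketch(mutex_t *m, condition_t *c) {
    mutex_release(m);   /* 1. release the mutex */
    block_on(c);        /* 2. block on the condition */
    mutex_acquire(m);   /* 3. on resumption, reacquire the mutex */
}

static void condition_signal_sketch(condition_t *c) {
    if (c->count == 0) return;              /* no waiter: the signal has no effect */
    runnable[n_runnable++] = c->pids[0];    /* unblock the longest waiter */
    for (int i = 1; i < c->count; i++) c->pids[i - 1] = c->pids[i];
    c->count--;
}

static void condition_broadcast_sketch(condition_t *c) {
    while (c->count > 0) condition_signal_sketch(c);
}
```

Because a woken thread only attempts to reacquire the mutex and may run after other threads have changed the shared state, callers normally wrap the wait in a loop that retests the condition.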

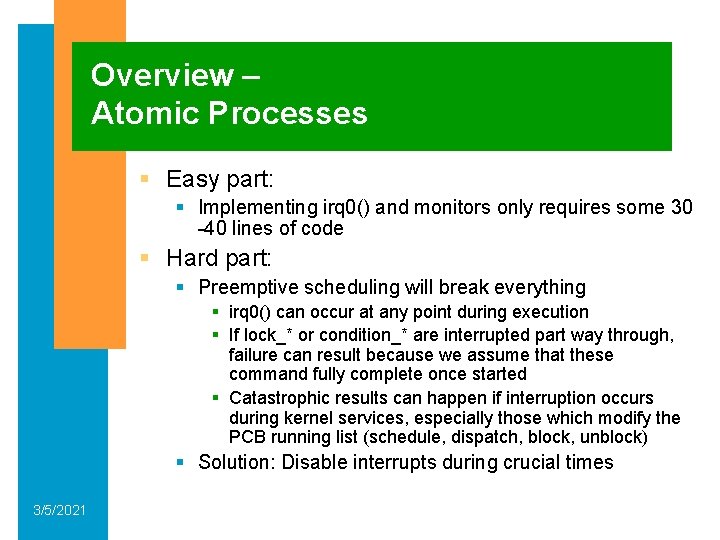

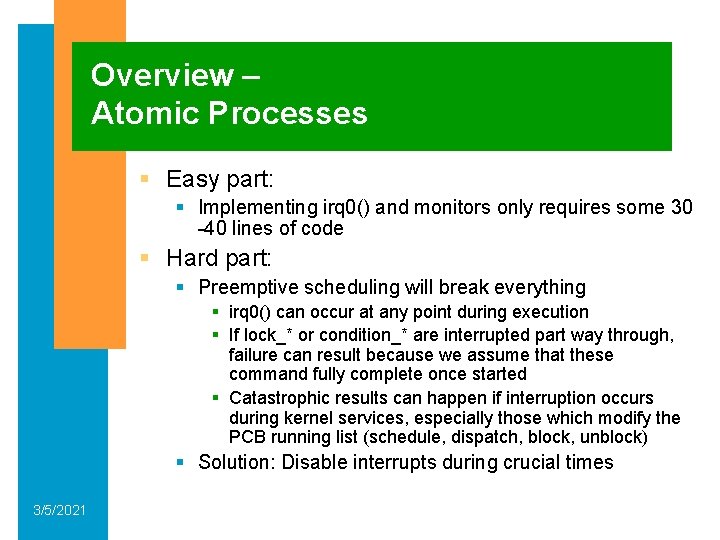

Overview: Atomic Processes

- Easy part:
  - Implementing irq0() and monitors requires only some 30-40 lines of code
- Hard part:
  - Preemptive scheduling will break everything
  - irq0() can occur at any point during execution
  - If lock_* or condition_* are interrupted partway through, failure can result because we assume that these commands complete fully once started
  - Catastrophic results can happen if an interruption occurs during kernel services, especially those that modify the PCB running list (schedule, dispatch, block, unblock)
- Solution: disable interrupts during crucial times

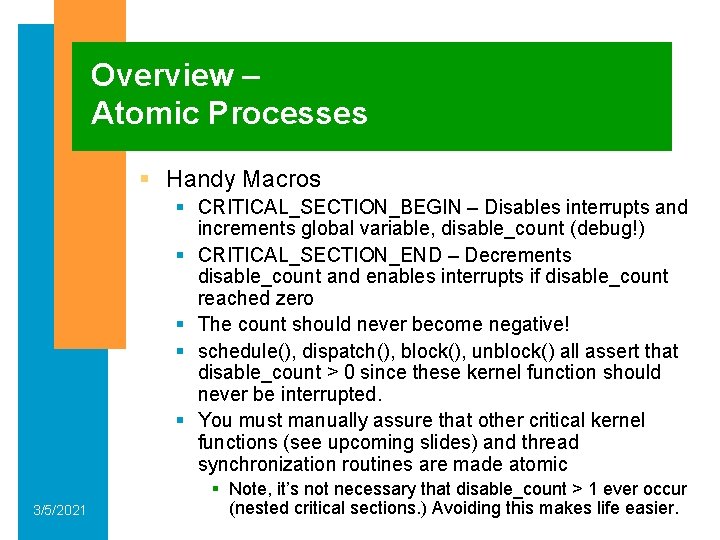

Overview: Atomic Processes, cont'd

- Handy macros
  - CRITICAL_SECTION_BEGIN: disables interrupts and increments the global variable disable_count (debug!)
  - CRITICAL_SECTION_END: decrements disable_count and enables interrupts if disable_count has reached zero
  - The count should never become negative!
- schedule(), dispatch(), block(), and unblock() all assert that disable_count > 0, since these kernel functions should never be interrupted
- You must manually ensure that other critical kernel functions (see upcoming slides) and thread synchronization routines are made atomic
- Note that it is never necessary for disable_count > 1 to occur (nested critical sections). Avoiding this makes life easier.
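The counting behavior of the two macros can be modeled without touching real hardware. In this sketch the variable `interrupts_enabled` is a stand-in for the CPU interrupt flag (the real macros would use cli/sti), the macro bodies are a guess at the described behavior rather than the project's definitions, and `unblock_stub()` is hypothetical.

```c
#include <assert.h>

static int interrupts_enabled = 1;   /* stand-in for the CPU interrupt flag */
static int disable_count = 0;        /* nesting depth of critical sections */

/* Disable interrupts, then count the entry. */
#define CRITICAL_SECTION_BEGIN          \
    do {                                \
        interrupts_enabled = 0;         \
        disable_count++;                \
    } while (0)

/* Count the exit; re-enable interrupts only when the outermost section ends. */
#define CRITICAL_SECTION_END            \
    do {                                \
        disable_count--;                \
        assert(disable_count >= 0);     \
        if (disable_count == 0) {       \
            interrupts_enabled = 1;     \
        }                               \
    } while (0)

/* Kernel functions that must never be interrupted check the count on entry. */
static void unblock_stub(void) {
    assert(disable_count > 0);
}
```

The assertion inside the END macro catches an unmatched END immediately, which is the debugging aid the count is meant to provide.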

Atomicity: Details

- schedule(), dispatch(), block(), and unblock() all assert that interrupts are disabled
- All entry points to these functions must incorporate CRITICAL_SECTION_BEGIN:
  - system_call_entry()
    - A process system call could lead to _yield() or exit(), which call schedule()
  - yield()
    - A thread's direct system call will lead to schedule()
  - exit()
    - But only for a thread's direct call, since processes only get here via system_call_entry() (test current_running->in_kernel)
  - irq0()
    - This results in a call to schedule() for threads and processes
  - lock_*, condition_*
    - To ensure atomicity, and because they can call block() and unblock()

Atomicity: Details, cont'd

- When any block() or unblock() call completes, interrupts are disabled (they were disabled before the call, or before dispatch swapped in a newly unblocked thread)
  - CRITICAL_SECTION_END must ultimately appear after such calls
    - At the end of lock_*, condition_*
- FIRST_TIME procs/threads launch directly from the dispatcher, which assumes interrupts are disabled
  - CRITICAL_SECTION_END must appear before jumping to the start address
- Non-FIRST_TIME dispatch() calls swap in the saved kernel stack and return to begin execution after the schedule() call that originally caused the context to swap out
  - CRITICAL_SECTION_END must ultimately appear at the end of all eventual context restorations
    - At the end of system_call_entry(), yield(), or irq0()

Atomicity: Thread Synchronization Functions

- As mentioned, lock_* and condition_* must execute atomically
- Furthermore, lock_acquire(), lock_release(), and condition_*() must disable interrupts while calling block() and unblock()
- All code within these functions should be bracketed within CRITICAL_SECTION_* macros
  - Difficulties can arise if critical sections become nested when condition_wait() calls lock_acquire() and lock_release(), leading to a non-zero disable_count when context restoration completes (bad)
  - You may simplify life by defining _lock_acquire() and _lock_release(), which are seen only by condition_wait() and which do not invoke the critical section macros
  - Since you disable interrupts at the start of condition_wait() and are assured that block() returns with interrupts disabled, everything should work safely
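One way to picture the suggested split is a pair of inner helpers that assume interrupts are already off, wrapped by public versions that add the critical section. The sketch below is a simulation, not the project's code: the function bodies, the simplified `lock_t` and `condition_t`, the `critical_begin()`/`critical_end()` helpers, and the `max_depth` counter are all assumptions, and blocking is reduced to incrementing a counter.

```c
#include <assert.h>

static int disable_count = 0;   /* models the critical-section nesting depth */
static int max_depth = 0;       /* deepest nesting seen, for checking */

static void critical_begin(void) {
    disable_count++;
    if (disable_count > max_depth) max_depth = disable_count;
}
static void critical_end(void) {
    disable_count--;
    assert(disable_count >= 0);
}

typedef struct { int locked; } lock_t;        /* simplified lock */
typedef struct { int waiting; } condition_t;  /* simplified condition */

/* Inner helpers: callers must already be inside a critical section. */
static void _lock_acquire(lock_t *l) { assert(disable_count > 0); assert(!l->locked); l->locked = 1; }
static void _lock_release(lock_t *l) { assert(disable_count > 0); l->locked = 0; }

/* Public versions add the critical section around the inner helpers. */
static void lock_acquire(lock_t *l) { critical_begin(); _lock_acquire(l); critical_end(); }
static void lock_release(lock_t *l) { critical_begin(); _lock_release(l); critical_end(); }

/* condition_wait opens one critical section and uses only the inner helpers,
   so the nesting depth never exceeds 1. */
static void condition_wait(lock_t *m, condition_t *c) {
    critical_begin();
    _lock_release(m);
    c->waiting++;        /* stand-in for block() on the condition */
    _lock_acquire(m);
    critical_end();
}
```

Had condition_wait() called the public lock_acquire() and lock_release() instead, the depth would reach 2 inside the wait, which is the nesting the slide warns about.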

Implementation Details: Code Files

- You are responsible for:
  - scheduler.c
    - The preemptive interrupt handler should be implemented in irq0() as described previously
    - All other functions are already implemented
    - Atomicity must be ensured by enabling and disabling interrupts appropriately in system_call_entry(), yield(), dispatch(), exit(), and irq0()
  - thread.c
    - Condition variables must be implemented by manipulating locks and the condition wait queue as described
    - Atomicity must be ensured by enabling and disabling interrupts appropriately as described

Implementation Details: Code Files, cont'd

- You are NOT responsible for:
  - common.h
    - Some general global definitions
  - kernel.h, kernel.c, syslib.h, syslib.c
    - Code to set up the OS and process the system call mechanism
  - util.h, util.c
    - Useful utilities (no standard libraries are available)
  - th.h, th1.c, th2.c, process1.c, process2.c
    - Some procs/threads that run in this project
  - thread.h, scheduler.h, creatimage, bootblock
    - The given utils/interfaces/definitions

Implementation Details: Extra Credit

- Prioritized Scheduling…