Computing Resources for the AIACE Experiment, Raffaella De Vita

- Slides: 22

Computing Resources for the AIACE Experiment
Raffaella De Vita, INFN – Sezione di Genova

Outline:
- The AIACE experiment
- On-site computing (JLab)
- Off-site computing (Genova, LNF)
- Future perspectives and summary
- Computing models/needs for other nuclear physics experiments

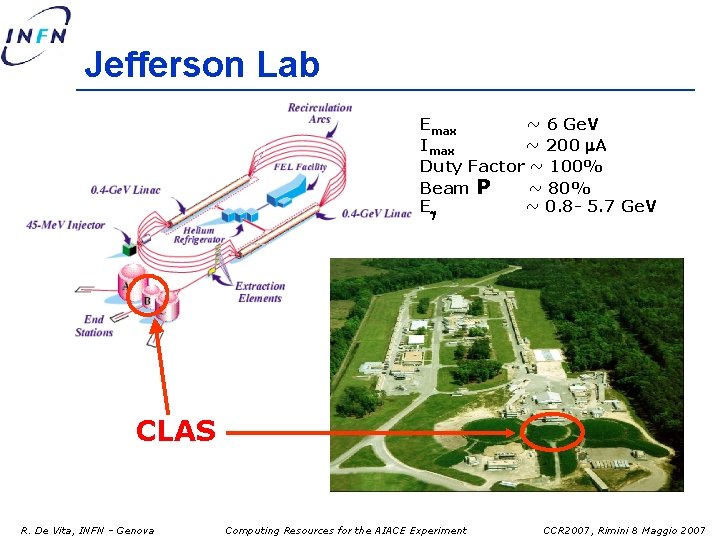

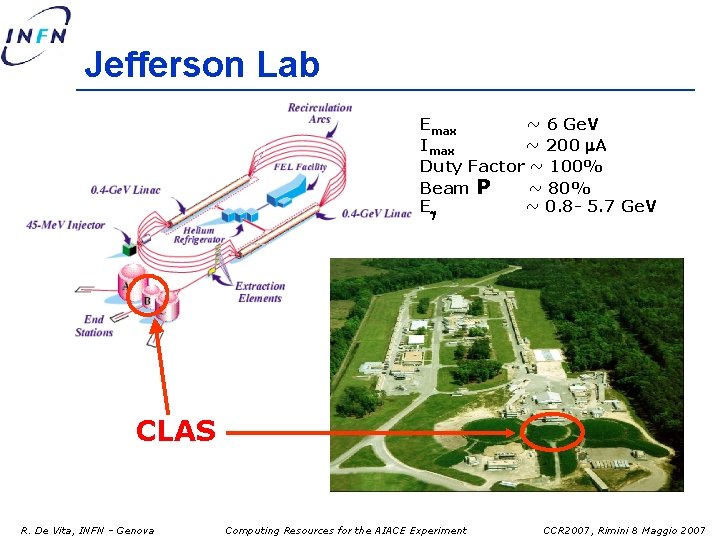

The AIACE Experiment
AIACE (Attività Italiana A CEBAF, "Italian Activity at CEBAF"): Laboratori Nazionali di Frascati and INFN / Università di Genova

- Physics goal: study of hadron structure and of the properties of the strong interaction in terms of quarks and gluons, using electromagnetic probes
- Experimental setup: Jefferson Laboratory (Newport News, VA, USA), with the CEBAF accelerator (electrons and photons up to 6 GeV) and the CLAS detector
- International collaboration (CLAS): 35 institutes from 7 countries, ~150 members (~9% from AIACE)
- Italian collaboration: scientists 9.4 FTE, technical staff 2.6 FTE
- Activity: data taking started in 1998
- Publications:
  - 55 physics papers in refereed journals (PRL, PRC, PRD)
  - 20 detector-related papers (NIM, IEEE)
  - 21 papers (28%) with AIACE leading authors

R. De Vita, INFN – Genova, Computing Resources for the AIACE Experiment, CCR 2007, Rimini, 8 May 2007

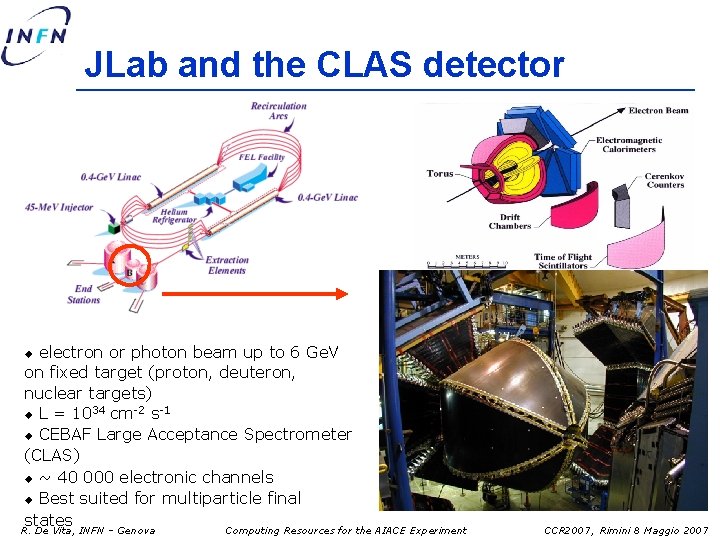

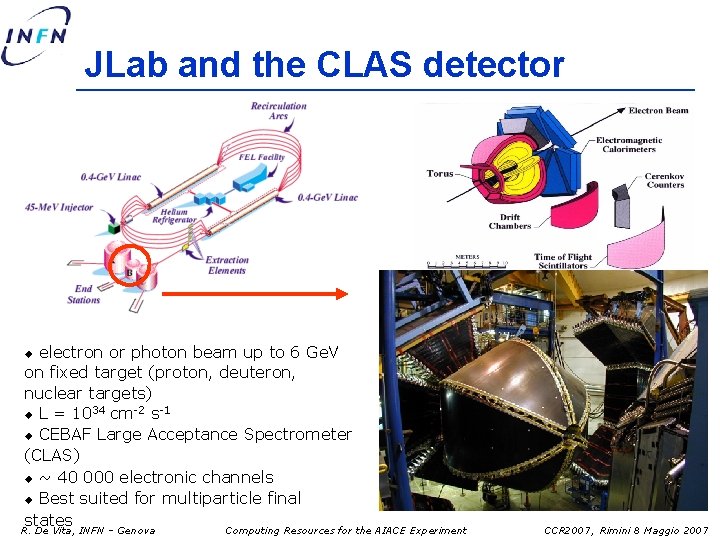

JLab and the CLAS detector
- Electron or photon beam up to 6 GeV on fixed targets (proton, deuteron, nuclear targets)
- Luminosity $\mathcal{L} = 10^{34}\ \mathrm{cm^{-2}\,s^{-1}}$
- CEBAF Large Acceptance Spectrometer (CLAS)
- ~40 000 electronic channels
- Best suited for multiparticle final states
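To put the luminosity figure in perspective, the interaction rate for a process with cross-section $\sigma$ is $R = \mathcal{L}\sigma$. The value $\sigma = 1\ \mu\mathrm{b} = 10^{-30}\ \mathrm{cm^{2}}$ used below is an arbitrary illustrative choice, not a CLAS cross-section:

$$
R = \mathcal{L}\,\sigma = 10^{34}\ \mathrm{cm^{-2}\,s^{-1}} \times 10^{-30}\ \mathrm{cm^{2}} = 10^{4}\ \mathrm{s^{-1}} = 10\ \mathrm{kHz}
$$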

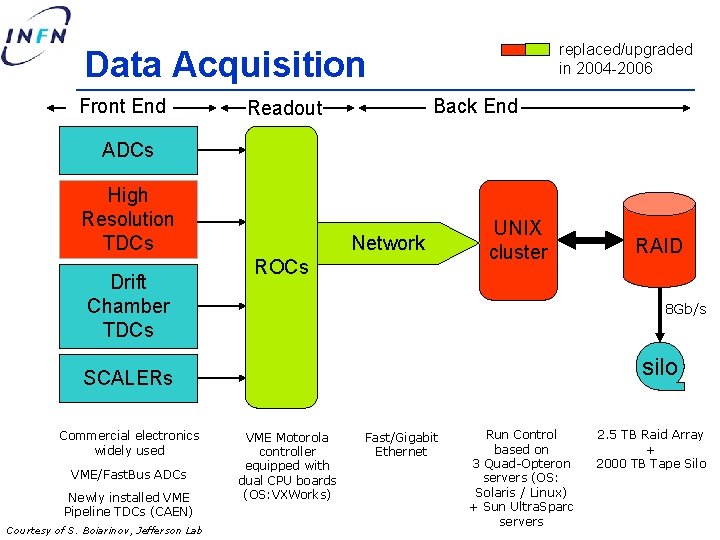

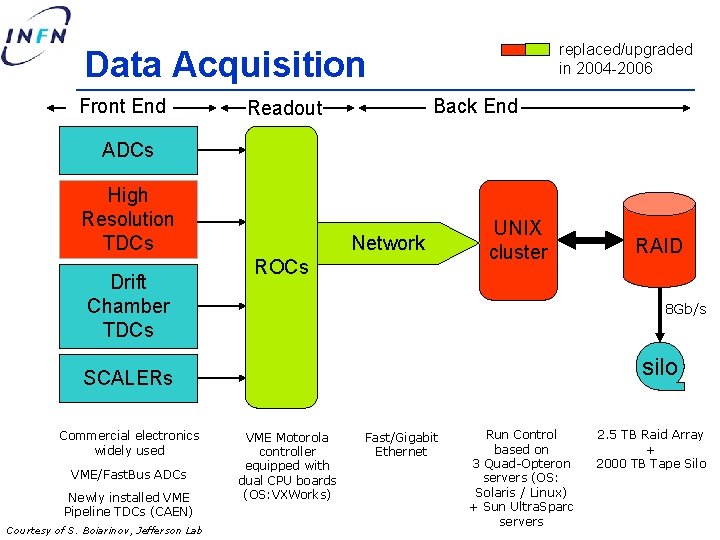

Data Acquisition (replaced/upgraded in 2004-2006)
- Front end:
  - Readout: ADCs, high-resolution TDCs, drift chamber TDCs, scalers
  - Commercial electronics widely used: VME/FastBus ADCs, newly installed VME pipeline TDCs (CAEN)
  - Readout controllers (ROCs): VME Motorola controllers equipped with dual CPU boards (OS: VxWorks)
- Network: Fast/Gigabit Ethernet
- Back end:
  - UNIX cluster: Run Control based on 3 quad-Opteron servers (OS: Solaris / Linux) + Sun UltraSparc servers
  - 2.5 TB RAID array + 2000 TB tape silo (8 Gb/s quoted on the back-end diagram)

Courtesy of S. Boiarinov, Jefferson Lab

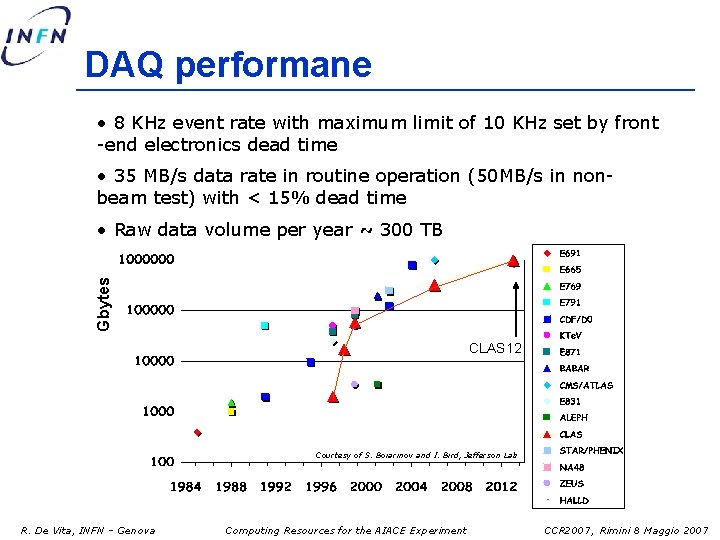

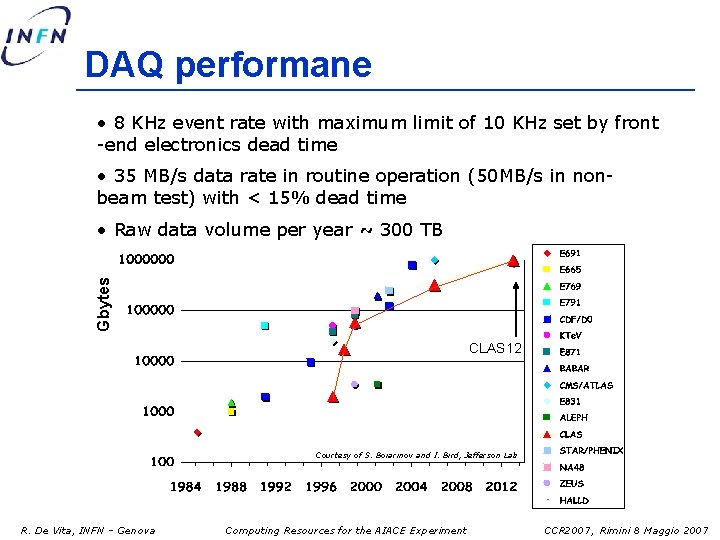

DAQ performance
- 8 kHz event rate, with a maximum of 10 kHz set by the front-end electronics dead time
- 35 MB/s data rate in routine operation (50 MB/s in non-beam tests) with < 15% dead time
- Raw data volume per year: ~300 TB

(Figure: data volume in Gbytes, including CLAS12.)

Courtesy of S. Boiarinov and I. Bird, Jefferson Lab
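The DAQ figures can be cross-checked with a little arithmetic. The Python sketch below assumes decimal units (1 MB = 10^6 bytes, 1 TB = 10^12 bytes) and that the routine 35 MB/s rate is sustained whenever beam is on; neither assumption is stated on the slide.

```python
# Back-of-envelope DAQ arithmetic using the figures quoted on the slide.
# Assumptions: decimal units and a sustained 35 MB/s during all beam time.

EVENT_RATE_HZ = 8_000          # routine trigger rate (8 kHz)
DATA_RATE_BPS = 35e6           # routine data rate in bytes per second (35 MB/s)
RAW_VOLUME_PER_YEAR = 300e12   # ~300 TB of raw data per year


def mean_event_size_bytes(data_rate: float, event_rate: float) -> float:
    """Average event size implied by the data rate and the event rate."""
    return data_rate / event_rate


def implied_beam_days(volume: float, data_rate: float) -> float:
    """Days of continuous data taking needed to write `volume` bytes."""
    return volume / data_rate / 86_400


if __name__ == "__main__":
    size = mean_event_size_bytes(DATA_RATE_BPS, EVENT_RATE_HZ)
    days = implied_beam_days(RAW_VOLUME_PER_YEAR, DATA_RATE_BPS)
    print(f"mean event size ~ {size / 1e3:.1f} kB")
    print(f"~{days:.0f} days of continuous running per year")
```

Under these assumptions the average event is about 4.4 kB, and ~300 TB per year corresponds to roughly 99 days of continuous running at the routine rate.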

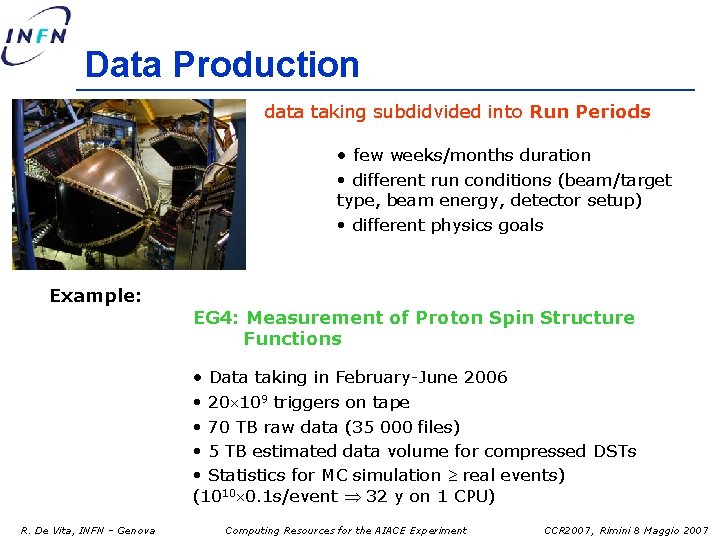

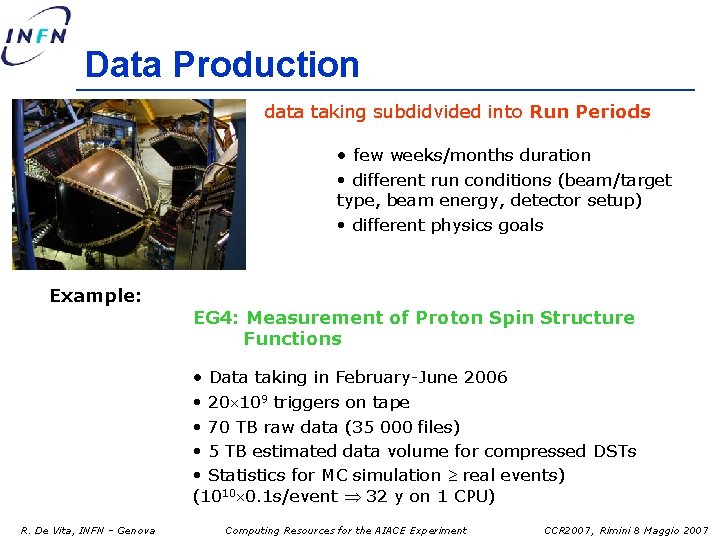

Data production
Data taking is subdivided into run periods:
- a few weeks/months in duration
- different run conditions (beam/target type, beam energy, detector setup)
- different physics goals

Example: EG4, measurement of the proton spin structure functions
- Data taking in February-June 2006
- $20 \times 10^{9}$ triggers on tape
- 70 TB of raw data (35 000 files)
- 5 TB estimated data volume for compressed DSTs
- MC simulation statistics comparable to the real events ($10^{10}$ events): at 0.1 s/event, this is ~32 years on 1 CPU
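A short Python sketch reproduces the EG4 numbers. It assumes decimal units, a 365.25-day year, and that "0.1 s/event" is the single-CPU time per Monte Carlo event; the helper names are illustrative only.

```python
# Consistency checks on the EG4 run-period figures quoted on the slide.
# Assumptions: decimal units; 1 year = 365.25 days; 0.1 s per MC event on 1 CPU.

SECONDS_PER_YEAR = 365.25 * 86_400


def cpu_years(n_events: float, seconds_per_event: float, n_cpus: int = 1) -> float:
    """Wall-clock years needed to process n_events spread over n_cpus CPUs."""
    return n_events * seconds_per_event / n_cpus / SECONDS_PER_YEAR


def mean_file_size_gb(total_bytes: float, n_files: int) -> float:
    """Average file size in GB (1 GB = 1e9 bytes)."""
    return total_bytes / n_files / 1e9


if __name__ == "__main__":
    print(f"MC: {cpu_years(1e10, 0.1):.1f} years on 1 CPU")
    print(f"raw files: {mean_file_size_gb(70e12, 35_000):.1f} GB each")
    print(f"raw event size: {70e12 / 20e9 / 1e3:.1f} kB per trigger")
```

The result (about 31.7 CPU-years) matches the slide's "32 y on 1 CPU"; the raw files average 2 GB, and 70 TB over $20 \times 10^{9}$ triggers gives 3.5 kB per trigger.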

On-site data processing
- Detector calibration and raw data processing, including DST production, are performed using the on-site computing facilities, i.e. the JLab farm:
  - 166 dual-processor systems
  - ~360 000 SPECint2000
  - software: Red Hat Enterprise Linux 3, LSF 6.0
- 80% of the computing resources are available for CLAS data processing:
  - 70% reserved for processing of raw data
  - 30% available to all CLAS users (80 CPUs for 150 people)
- Limited resources are left for physics analysis and MC simulations, so these tasks are performed off-site with alternative computing facilities
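The "80 CPUs for 150 people" figure follows from the farm size and the two percentages. This Python sketch assumes each of the 166 dual-processor systems counts as 2 CPUs and that the percentages apply to CPU count rather than to SPECint2000 capacity.

```python
# How the JLab farm share quoted on the slide breaks down into CPUs.
# Assumptions: 2 CPUs per system; percentages apply to CPU count.

N_SYSTEMS = 166
CPUS_PER_SYSTEM = 2
CLAS_FRACTION = 0.80      # share of the farm available to CLAS
RAW_FRACTION = 0.70       # share of the CLAS quota reserved for raw data
USER_FRACTION = 0.30      # share of the CLAS quota open to all CLAS users
N_USERS = 150
FARM_SI2K = 360_000       # total farm capacity in SPECint2000

total_cpus = N_SYSTEMS * CPUS_PER_SYSTEM
clas_cpus = total_cpus * CLAS_FRACTION
raw_cpus = clas_cpus * RAW_FRACTION
user_cpus = clas_cpus * USER_FRACTION
si2k_per_cpu = FARM_SI2K / total_cpus

print(f"total CPUs: {total_cpus}")
print(f"raw-data processing: {raw_cpus:.0f} CPUs")
print(f"CLAS user pool: {user_cpus:.0f} CPUs, {user_cpus / N_USERS:.2f} CPU per user")
print(f"~{si2k_per_cpu:.0f} SPECint2000 per CPU")
```

With 332 CPUs in total, the user pool comes out at about 80 CPUs, i.e. roughly half a CPU per CLAS user, which is why analysis and simulation move off-site.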

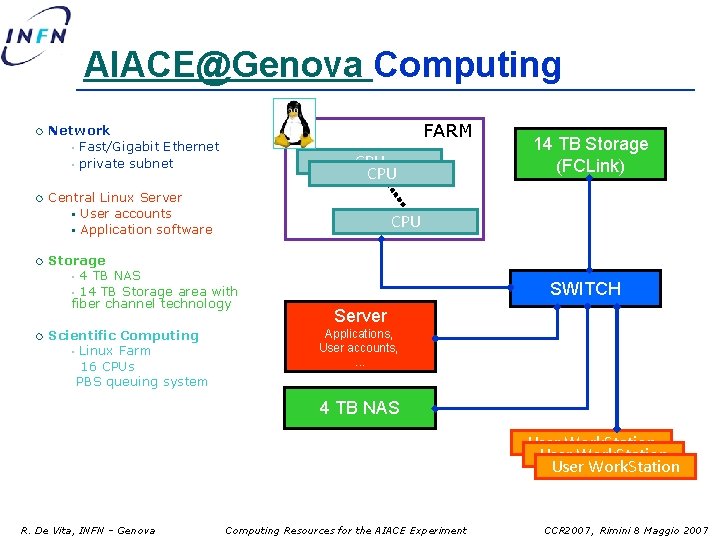

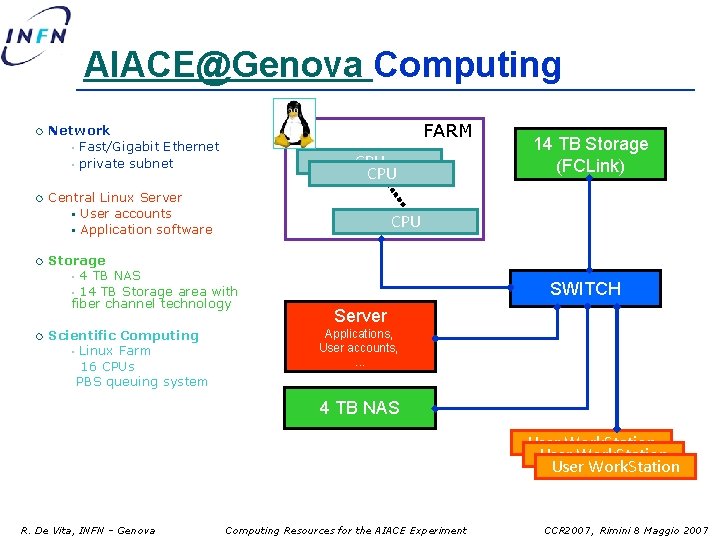

AIACE@Genova computing
- Network: Fast/Gigabit Ethernet, private subnet, central switch
- Central Linux server: user accounts, application software
- Storage:
  - 4 TB NAS
  - 14 TB storage area with Fibre Channel technology (FC link)
- Scientific computing: Linux farm with 16 CPUs, PBS queuing system
- User workstations
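On a PBS farm like this, a Monte Carlo production is typically split into many independent batch jobs. The Python sketch below only illustrates that idea: the executable `run_mc` and its `--seed`/`--events` options are hypothetical placeholders, not the collaboration's actual simulation command, and the `nodes=1:ppn=1` resource syntax is the Torque/OpenPBS style (PBS Pro sites use `-l select=...` instead).

```python
# Sketch: split a Monte Carlo production into independent PBS batch jobs.
# Hypothetical: "run_mc" and its --seed/--events options are placeholders.

import math


def split_events(total: int, per_job: int) -> list[int]:
    """Return the number of events for each job (the last job may be smaller)."""
    n_jobs = math.ceil(total / per_job)
    return [min(per_job, total - i * per_job) for i in range(n_jobs)]


def pbs_script(job_index: int, n_events: int, walltime: str = "24:00:00") -> str:
    """Build a PBS job script; each job gets a distinct random seed."""
    return "\n".join([
        "#!/bin/sh",
        f"#PBS -N mc_{job_index:04d}",
        "#PBS -l nodes=1:ppn=1",
        f"#PBS -l walltime={walltime}",
        "#PBS -j oe",  # merge stderr into stdout
        f"run_mc --seed {1000 + job_index} --events {n_events}",
        "",
    ])


if __name__ == "__main__":
    chunks = split_events(total=1_050_000, per_job=100_000)
    print(f"{len(chunks)} jobs, last one with {chunks[-1]} events")
    print(pbs_script(0, chunks[0]))
```

Each generated script would then be written to a file and submitted with `qsub`; giving every job its own seed keeps the simulated samples statistically independent.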

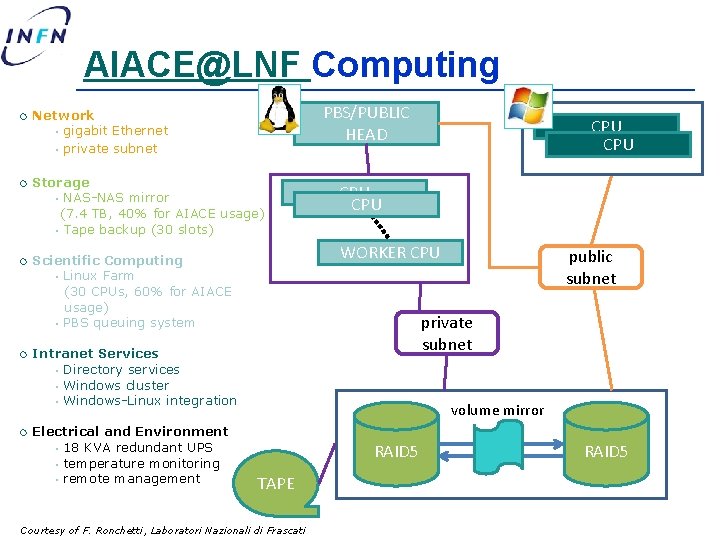

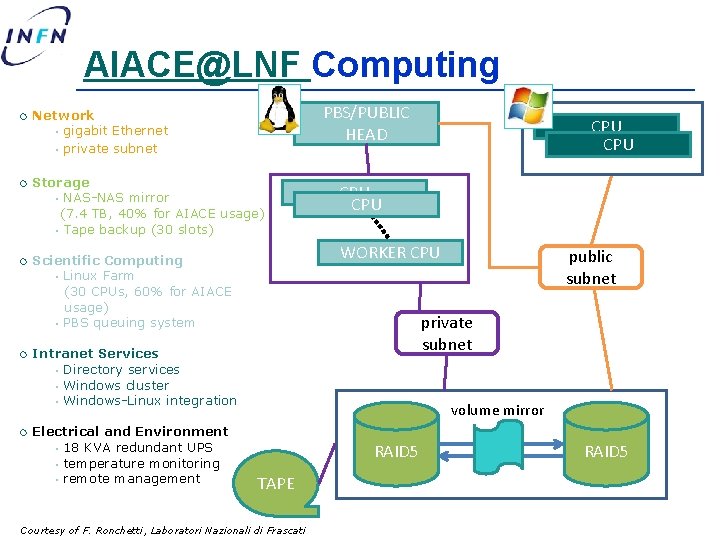

AIACE@LNF computing
- Network: Gigabit Ethernet; public and private subnets, with a PBS/public head node and worker nodes
- Storage:
  - NAS-NAS volume mirror (7.4 TB, RAID 5, 40% for AIACE usage)
  - Tape backup (30 slots)
- Scientific computing:
  - Linux farm (30 CPUs, 60% for AIACE usage)
  - PBS queuing system
- Intranet services: directory services, Windows cluster, Windows-Linux integration
- Electrical and environment: 18 kVA redundant UPS, temperature monitoring, remote management

Courtesy of F. Ronchetti, Laboratori Nazionali di Frascati

Off-site data processing
- Ongoing analysis projects:
  - LNF: 4 physics analyses of hadronic reactions; 1 data set (2 TB of DSTs); 4 different sets of MC simulations
  - Genova: 5 analyses; 3 data sets (15 TB of DSTs); 5 different sets of MC simulations
- Data are transferred from JLab through the network connection
- Present storage resources seem adequate for local computing
- Available computing power (Genova: 20 CPUs + LNF: 18 CPUs) is not sufficient!

Possible solutions:
- Usage of Tier-1 computing facilities (preferably through the Grid): 50 000 SPECint2000 + local storage requested, currently not available
- Other solutions: Tier-2?
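The size of the shortfall can be estimated from numbers elsewhere in the talk. This Python sketch assumes the LNF share is 60% of its 30 CPUs, counts Genova as 20 CPUs, reuses the EG4 simulation cost (10^10 events at 0.1 s/event), and converts SPECint2000 to CPUs using the JLab farm average, which is only a rough proxy for the Italian machines.

```python
# Rough sizing of the off-site CPU shortfall using only figures from the slides.
# Assumptions: LNF share = 60% of 30 CPUs; Genova = 20 CPUs; SPECint2000 per CPU
# taken from the JLab farm average (360,000 SI2k over 166 dual-CPU systems).

LNF_CPUS = 30 * 0.60
GENOVA_CPUS = 20
SI2K_PER_CPU = 360_000 / (166 * 2)
SECONDS_PER_YEAR = 365.25 * 86_400


def years_for_mc(n_events: float, s_per_event: float, n_cpus: float) -> float:
    """Wall-clock years to simulate n_events with n_cpus fully dedicated CPUs."""
    return n_events * s_per_event / n_cpus / SECONDS_PER_YEAR


local_cpus = LNF_CPUS + GENOVA_CPUS
print(f"local CPUs: {local_cpus:.0f}")
print(f"EG4-sized MC (1e10 events): {years_for_mc(1e10, 0.1, local_cpus):.2f} years")
print(f"50,000 SI2k request ~ {50_000 / SI2K_PER_CPU:.0f} JLab-equivalent CPUs")
```

Even with all 38 local CPUs dedicated to it, one EG4-sized simulation would take about 0.83 years, while the requested 50 000 SPECint2000 corresponds to roughly 46 CPUs of the JLab type.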

Summary
- The AIACE collaboration's activity continues at JLab with intensive data collection and analysis efforts
- The data volume is almost comparable to that of high-energy experiments (LHC)
- The current model relies on small local storage and farms for physics analysis and simulations
- Presently available computing resources are not sufficient
- Using the Grid and related computing resources may solve the problem; this possibility is being considered (not easy!)

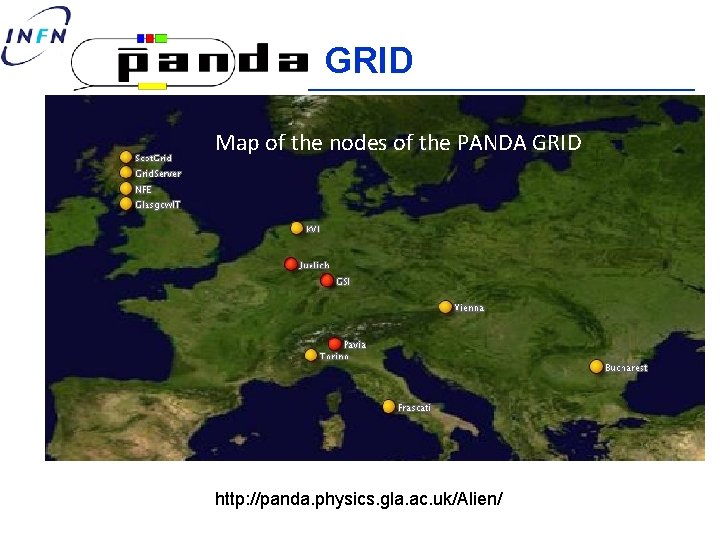

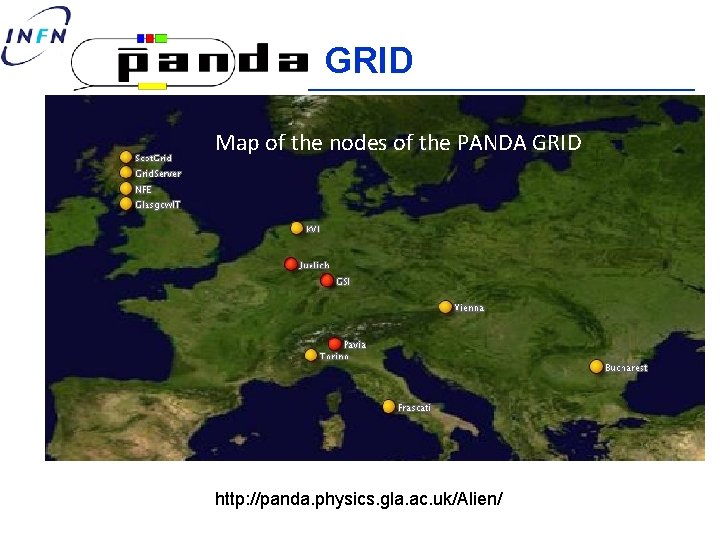

GRID: map of the nodes of the PANDA Grid (http://panda.physics.gla.ac.uk/Alien/)

PANDA Grid
- PANDA Grid management is done by the Glasgow group
- Grid infrastructure and know-how are expanding inside PANDA
- Latest additions: Pavia, ScotGrid
- PandaRoot (the PANDA simulation software) installs and runs well on all platforms
- The only errors encountered were due to user misconfiguration or to access restrictions (firewalls)
- PandaRoot/CBM developers are now familiar with the gridware
- The PANDA Grid is already in use and always ready for simulations!

Latest work
- Improved PandaRoot installation scripts, retested on x86_64
- Debugged PBS and LSF problems
- Established the full simulation/reconstruction chain with catalogue triggers
- ~15 000 jobs ran in a one-week test
- Need to link to other Grid activities

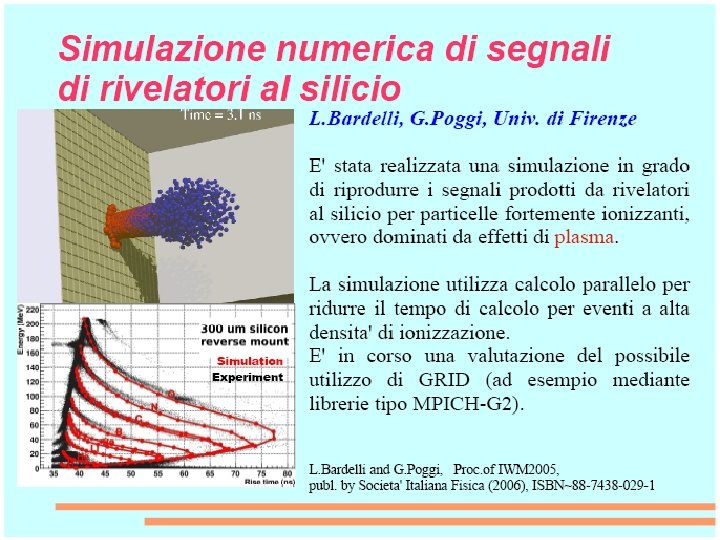

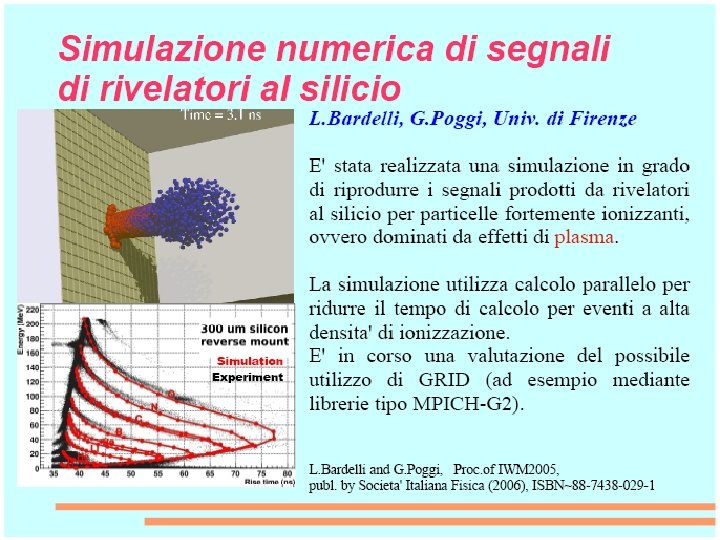

Fazia

Jefferson Lab
- Emax ≈ 6 GeV
- Imax ≈ 200 μA
- Duty factor ≈ 100%
- Beam polarization P ≈ 80%
- Eγ ≈ 0.8-5.7 GeV

(Figure: CEBAF and CLAS.)

JLab Linux farm
- 1000 jobs running simultaneously
- Full usage of the available resources
- 50% of the resources used by CLAS for raw data reconstruction
- Average number of jobs per user: 10-20

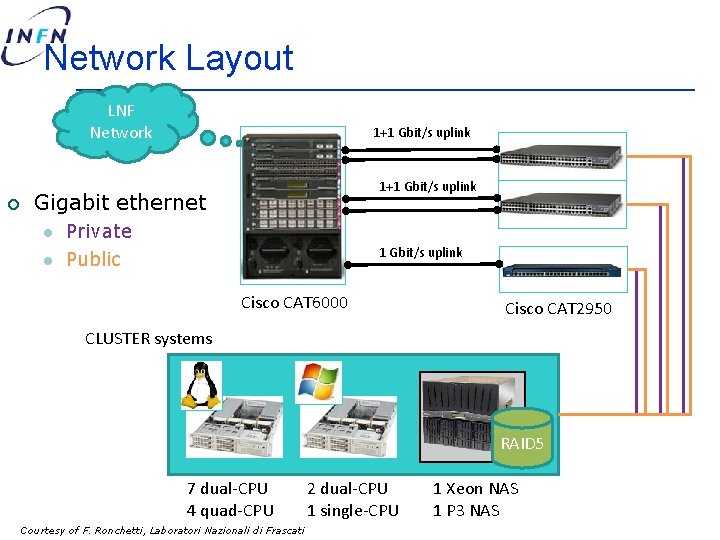

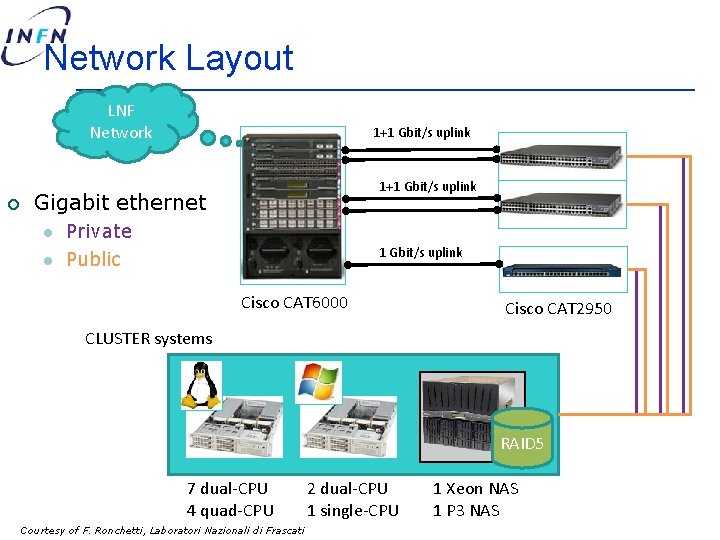

Network layout (LNF network)
- 1+1 Gbit/s uplink; Gigabit Ethernet with private and public subnets
- Switches: Cisco CAT 6000 (1 Gbit/s uplink) and Cisco CAT 2950
- Cluster systems: 7 dual-CPU, 4 quad-CPU, 2 dual-CPU, 1 single-CPU
- Storage: 1 Xeon NAS, 1 P3 NAS (RAID 5)

Courtesy of F. Ronchetti, Laboratori Nazionali di Frascati
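Because DSTs are moved from JLab over the network, link speed sets a floor on how fast a new data set can reach the local farms. This Python sketch computes idealized transfer times, assuming the 1 Gbit/s link is the only bottleneck and runs at a fixed fraction of its nominal rate; real transatlantic transfers are usually slower.

```python
# Idealized transfer time for moving DST samples over a single link.
# Assumptions: the link is the only bottleneck and runs at a fixed efficiency.


def transfer_hours(n_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours to move n_bytes over a link of link_gbps (1e9 bit/s) nominal speed."""
    bits = n_bytes * 8
    return bits / (link_gbps * 1e9 * efficiency) / 3600


if __name__ == "__main__":
    for label, size in [("LNF DSTs (2 TB)", 2e12), ("Genova DSTs (15 TB)", 15e12)]:
        print(f"{label}: {transfer_hours(size, 1.0):.1f} h at 1 Gbit/s, "
              f"{transfer_hours(size, 1.0, 0.5):.1f} h at 50% efficiency")
```

At full line rate the 2 TB LNF sample needs about 4.4 hours and the 15 TB Genova samples about 33 hours; halving the efficiency doubles both.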

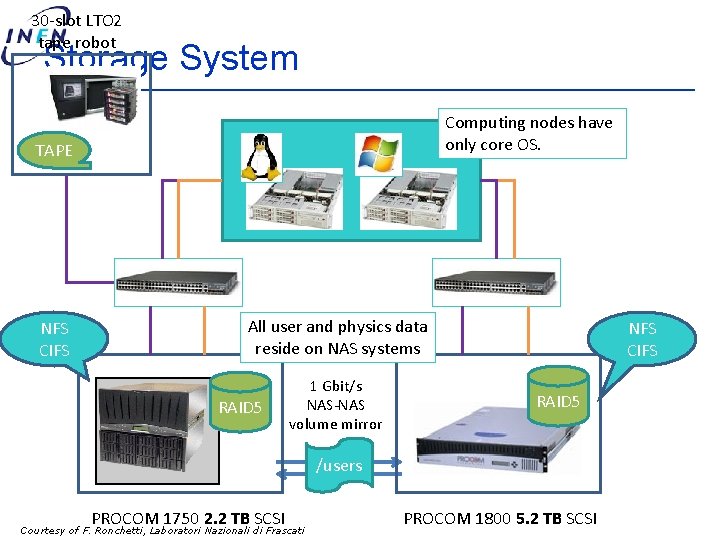

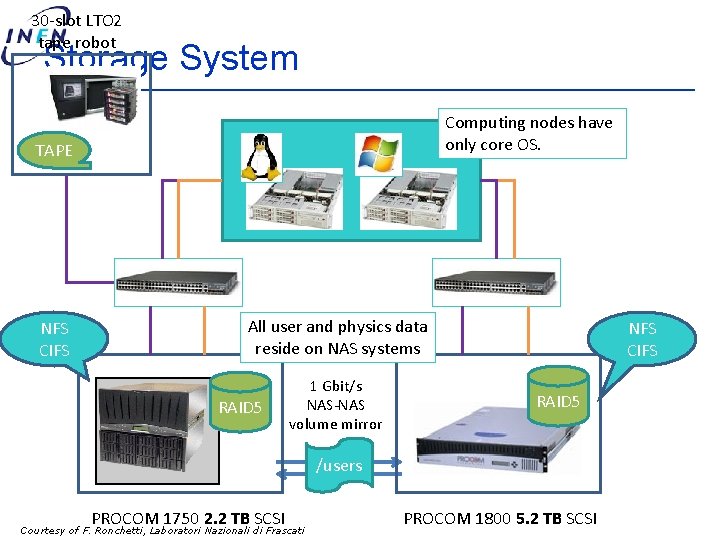

Storage system
- Computing nodes have only the core OS; all user and physics data reside on NAS systems
- Two NAS units with RAID 5, exported via NFS and CIFS:
  - PROCOM 1750, 2.2 TB SCSI (/users)
  - PROCOM 1800, 5.2 TB SCSI
- 1 Gbit/s NAS-NAS volume mirror
- 30-slot LTO-2 tape robot

Courtesy of F. Ronchetti, Laboratori Nazionali di Frascati
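Both NAS units rely on RAID 5, which survives the loss of one disk by storing an XOR parity block per stripe. The toy Python sketch below shows only that parity rule; real controllers rotate the parity block across disks and work on fixed-size sectors, which this sketch ignores.

```python
# Toy model of RAID 5 parity: one stripe = N data blocks + 1 XOR parity block.
# Simplification: no parity rotation, arbitrary equal-length blocks.

from __future__ import annotations

from functools import reduce
from typing import Optional


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks (the RAID 5 parity rule)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks: list[bytes]) -> list[Optional[bytes]]:
    """Return one stripe: the data blocks followed by their parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe: list[Optional[bytes]]) -> list[bytes]:
    """Recover a single missing block (marked None) by XOR-ing the survivors."""
    missing = [i for i, blk in enumerate(stripe) if blk is None]
    if len(missing) != 1:
        raise ValueError("this sketch handles exactly one missing block per stripe")
    survivors = [blk for blk in stripe if blk is not None]
    restored = list(stripe)
    restored[missing[0]] = xor_blocks(survivors)
    return restored


def usable_capacity_tb(n_disks: int, disk_tb: float) -> float:
    """RAID 5 spends one disk's worth of space on parity."""
    if n_disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return (n_disks - 1) * disk_tb


if __name__ == "__main__":
    stripe = make_stripe([b"AIAC", b"E-LN", b"F-GE"])
    stripe[1] = None                      # simulate one failed disk
    print(rebuild(stripe)[1])             # b'E-LN'
```

A second failure in the same stripe cannot be recovered, which is one reason the LNF setup adds a NAS-NAS mirror and tape backup on top of RAID 5.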

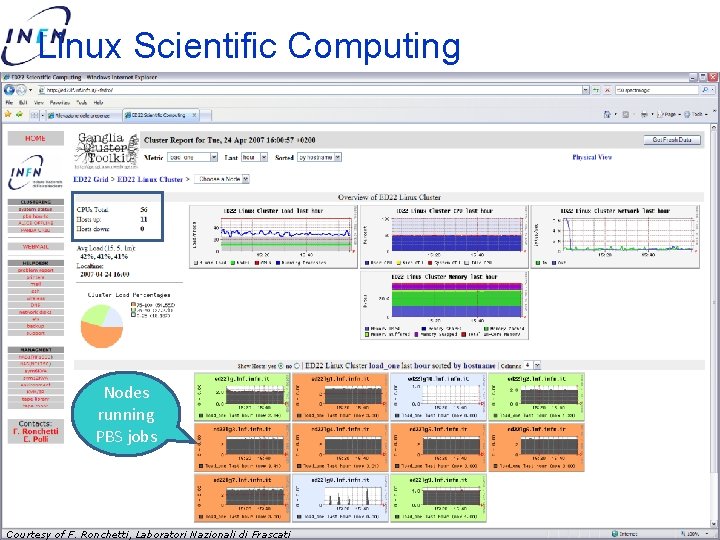

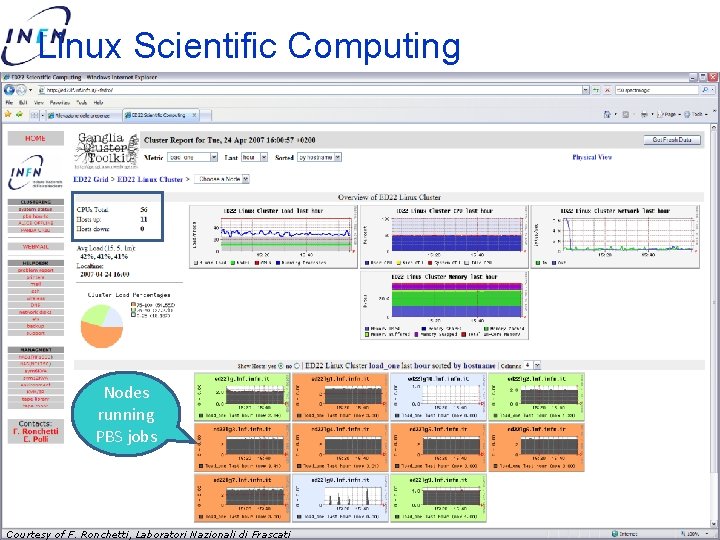

Linux scientific computing nodes running PBS jobs

Courtesy of F. Ronchetti, Laboratori Nazionali di Frascati

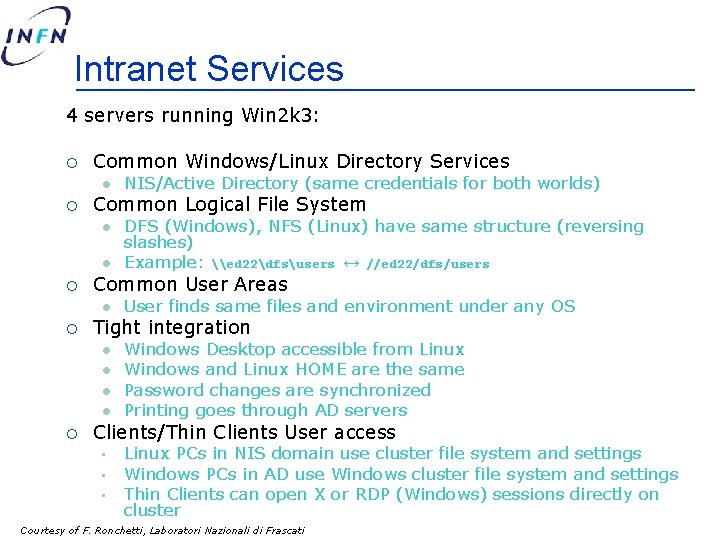

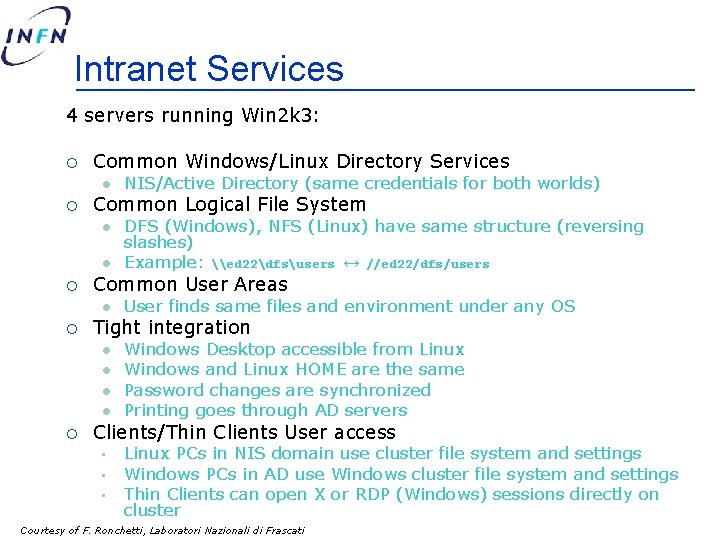

Intranet services
4 servers running Windows Server 2003 (Win2k3):
- Common Windows/Linux directory services
  - NIS/Active Directory (same credentials for both worlds)
  - Password changes are synchronized
- Common logical file system
  - DFS (Windows) and NFS (Linux) have the same structure (with slashes reversed), e.g. `\\ed22\dfs\users` ↔ `//ed22/dfs/users`
  - Users find the same files and environment under any OS
- Common user areas
  - Windows and Linux HOME are the same
- Tight integration
  - Windows desktop accessible from Linux
  - Printing goes through the AD servers
- Clients/thin clients user access
  - Linux PCs in the NIS domain use the cluster file system and settings
  - Windows PCs in AD use the Windows cluster file system and settings
  - Thin clients can open X or RDP (Windows) sessions directly on the cluster

Courtesy of F. Ronchetti, Laboratori Nazionali di Frascati
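The "same structure, reversed slashes" convention between the DFS and NFS namespaces amounts to a simple path translation. This Python sketch infers the rule from the single example on the slide (`\\ed22\dfs\users` ↔ `//ed22/dfs/users`); the actual LNF setup may involve more than slash reversal.

```python
# Sketch of the "reverse the slashes" convention between the Windows DFS
# namespace and the Linux NFS view. Rule inferred from one example only.


def dfs_to_nfs(path: str) -> str:
    """Windows UNC/DFS path -> Linux-style path with forward slashes."""
    return path.replace("\\", "/")


def nfs_to_dfs(path: str) -> str:
    """Linux-style path -> Windows UNC/DFS path with backslashes."""
    return path.replace("/", "\\")


if __name__ == "__main__":
    unc = r"\\ed22\dfs\users"
    print(dfs_to_nfs(unc))                          # //ed22/dfs/users
    print(nfs_to_dfs("//ed22/dfs/users") == unc)    # True
```

Because the two namespaces mirror each other, a user's files keep the same logical location whether accessed from a Windows or a Linux client.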