Computer Organization and Design Pipelining Montek Singh Dec

- Slides: 21

Computer Organization and Design: Pipelining
Montek Singh, Dec 2, 2015
Lecture 16 (SELF STUDY – not covered on the final exam)

Pipelining
- “Between 411 problem sets, I haven’t had a minute to do laundry.”
- “Now that’s what I call dirty laundry.”

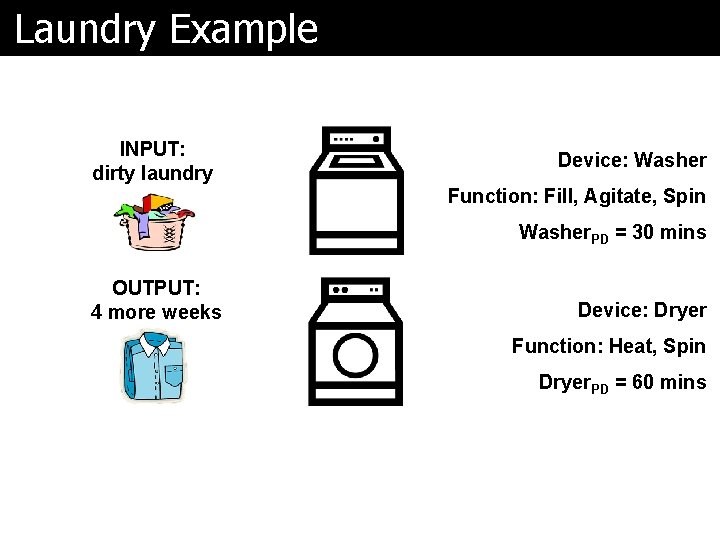

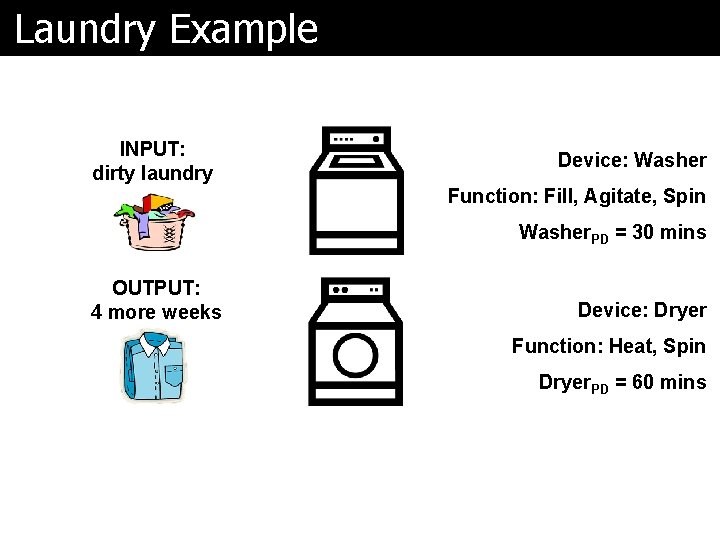

Laundry Example
- INPUT: dirty laundry
- Device: Washer. Function: Fill, Agitate, Spin. WasherPD = 30 mins
- Device: Dryer. Function: Heat, Spin. DryerPD = 60 mins
- OUTPUT: 4 more weeks

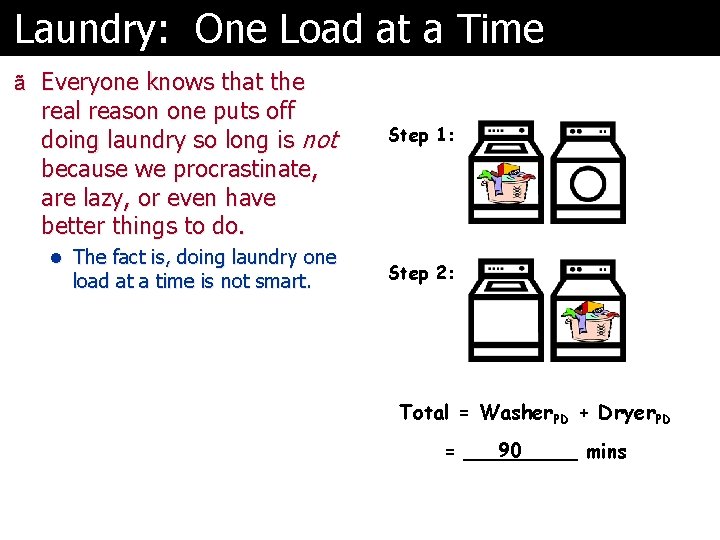

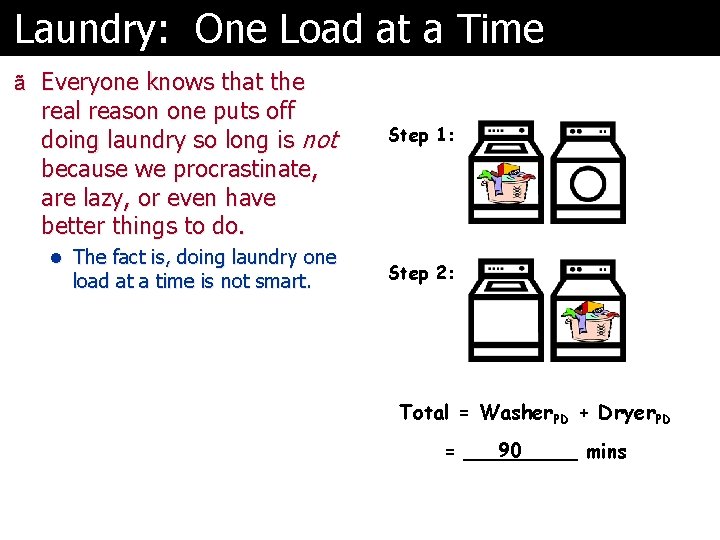

Laundry: One Load at a Time
- Everyone knows that the real reason one puts off doing laundry so long is not because we procrastinate, are lazy, or even have better things to do.
  - The fact is, doing laundry one load at a time is not smart.
- Step 1: wash. Step 2: dry.
- Total = WasherPD + DryerPD = 90 mins

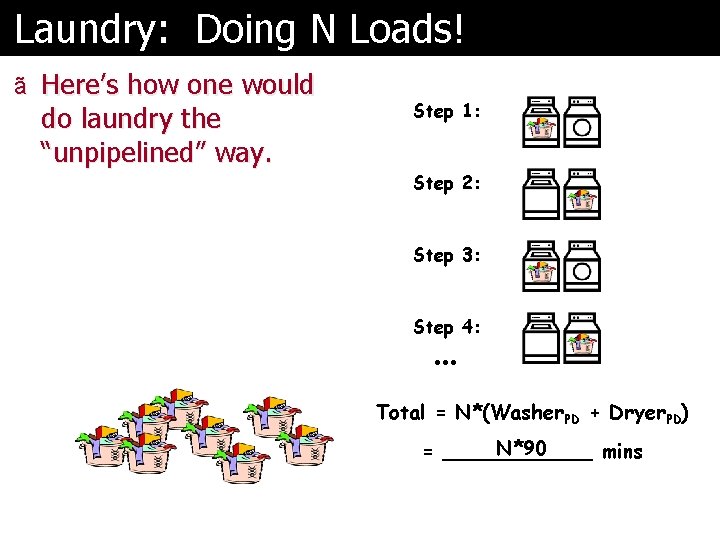

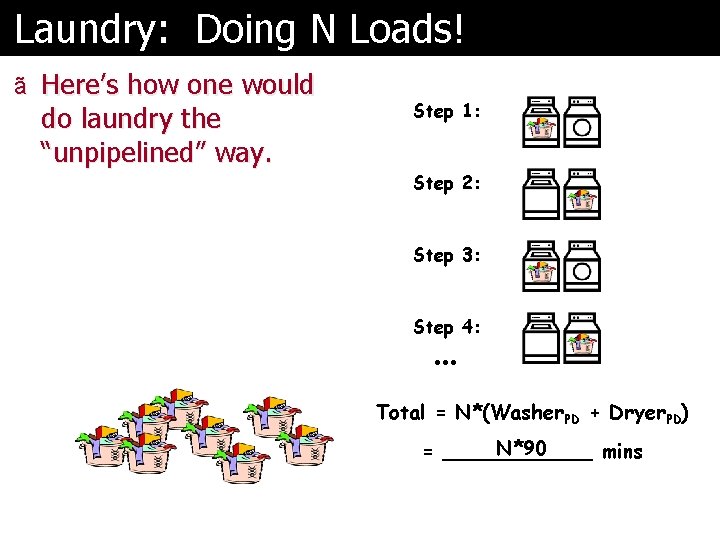

Laundry: Doing N Loads!
- Here’s how one would do laundry the “unpipelined” way.
- Step 1, Step 2, Step 3, Step 4, …: each load is washed and dried completely before the next load starts.
- Total = N × (WasherPD + DryerPD) = N × 90 mins

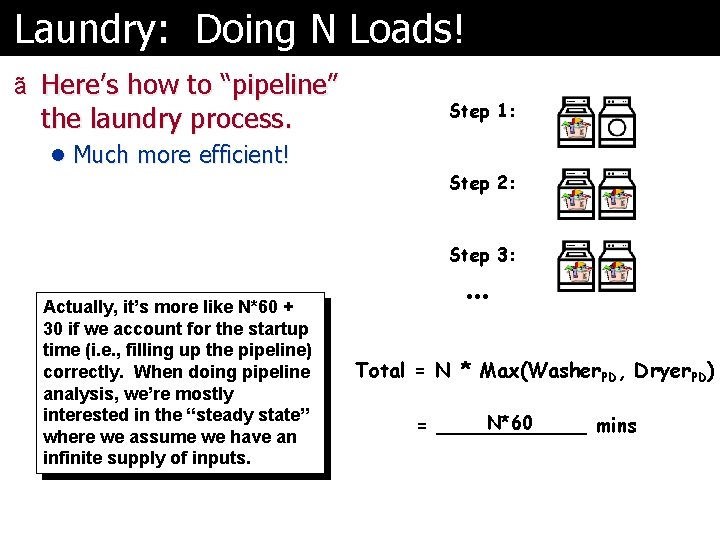

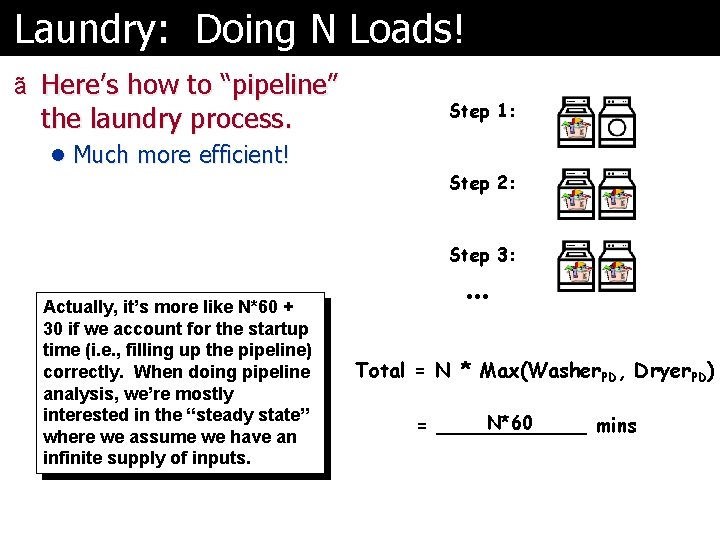

Laundry: Doing N Loads!
- Here’s how to “pipeline” the laundry process.
  - Much more efficient!
- Step 1, Step 2, Step 3, …: while one load is in the dryer, the next load is in the washer.
- Total = N × Max(WasherPD, DryerPD) = N × 60 mins
- Actually, it’s more like N × 60 + 30 if we account for the startup time (i.e., filling up the pipeline) correctly. When doing pipeline analysis, we’re mostly interested in the “steady state” where we assume we have an infinite supply of inputs.
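The two totals can be compared with a few lines of Python. This is only a sketch of the arithmetic on the slide; the function names are made up for illustration, and the 30 and 60 minute delays are the washer and dryer figures from the laundry example.

```python
WASHER_PD = 30  # minutes, from the laundry example
DRYER_PD = 60   # minutes, from the laundry example

def unpipelined_total(n_loads):
    """Each load is washed and dried before the next one starts."""
    return n_loads * (WASHER_PD + DRYER_PD)

def pipelined_total(n_loads, include_startup=True):
    """Washer and dryer overlap, so the slower device sets the pace."""
    if n_loads == 0:
        return 0
    steady_state = n_loads * max(WASHER_PD, DRYER_PD)
    # The first wash runs before the dryer has anything to do.
    startup = WASHER_PD if include_startup else 0
    return steady_state + startup

for n in (1, 4, 10):
    print(n, unpipelined_total(n), pipelined_total(n))
```

For a single load the two schemes tie at 90 minutes; the gap opens up as N grows, which is why the steady-state figure of 60 minutes per load is the one that matters.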

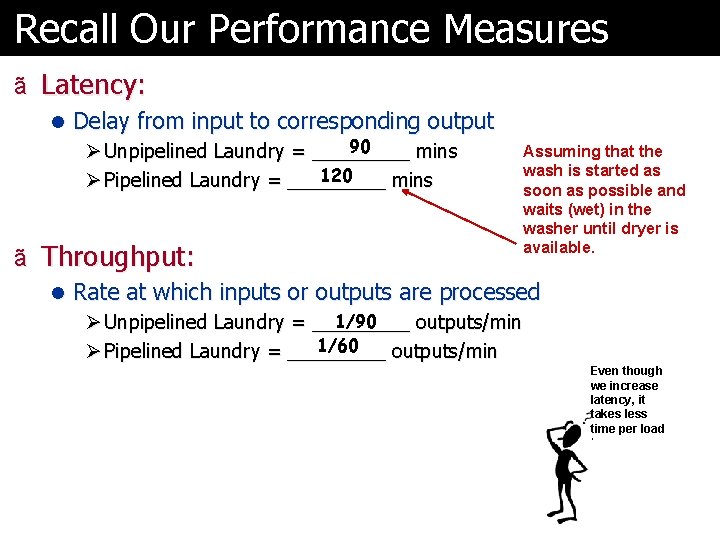

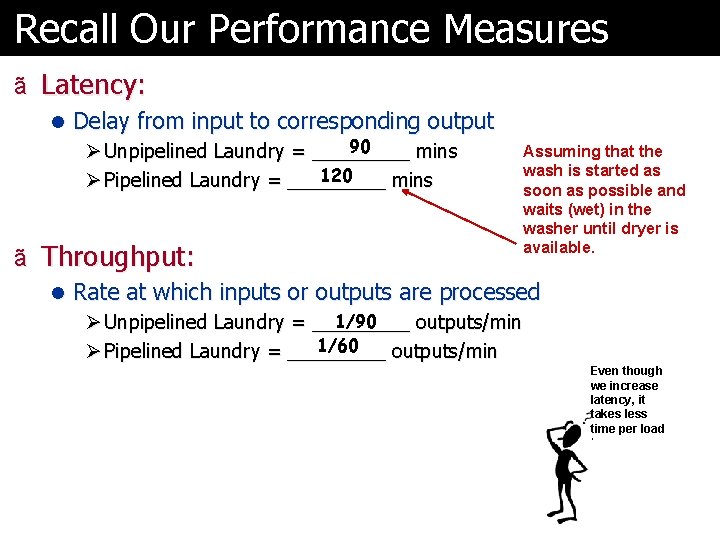

Recall Our Performance Measures
- Latency: delay from input to corresponding output
  - Unpipelined Laundry = 90 mins
  - Pipelined Laundry = 120 mins (assuming that the wash is started as soon as possible and waits, wet, in the washer until the dryer is available)
- Throughput: rate at which inputs or outputs are processed
  - Unpipelined Laundry = 1/90 outputs/min
  - Pipelined Laundry = 1/60 outputs/min
- Even though we increase latency, it takes less time per load.
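The 120-minute pipelined latency is easy to miss, so here is a small scheduling sketch that reproduces it. It assumes, as the slide does, that each wash starts as soon as the washer is free and that wet laundry stays in the washer until the dryer is available; the `simulate` function and its variable names are illustrative only.

```python
WASHER_PD = 30  # minutes
DRYER_PD = 60   # minutes

def simulate(n_loads):
    """Return (wash start, dry finish) times in minutes for each load."""
    washer_free = 0  # time at which the washer can accept a new load
    dryer_free = 0   # time at which the dryer can accept a new load
    schedule = []
    for _ in range(n_loads):
        wash_start = washer_free
        wash_done = wash_start + WASHER_PD
        # Wet laundry waits in the washer until the dryer is available.
        dry_start = max(wash_done, dryer_free)
        dry_done = dry_start + DRYER_PD
        washer_free = dry_start  # washer empties when the load moves on
        dryer_free = dry_done
        schedule.append((wash_start, dry_done))
    return schedule

schedule = simulate(5)
latencies = [finish - start for start, finish in schedule]
gaps = [b[1] - a[1] for a, b in zip(schedule, schedule[1:])]
print(latencies)  # [90, 120, 120, 120, 120]
print(gaps)       # [60, 60, 60, 60]
```

Only the first load sees the 90-minute latency. Once the pipeline is full, every load spends 120 minutes in the system, yet a finished load comes out every 60 minutes.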

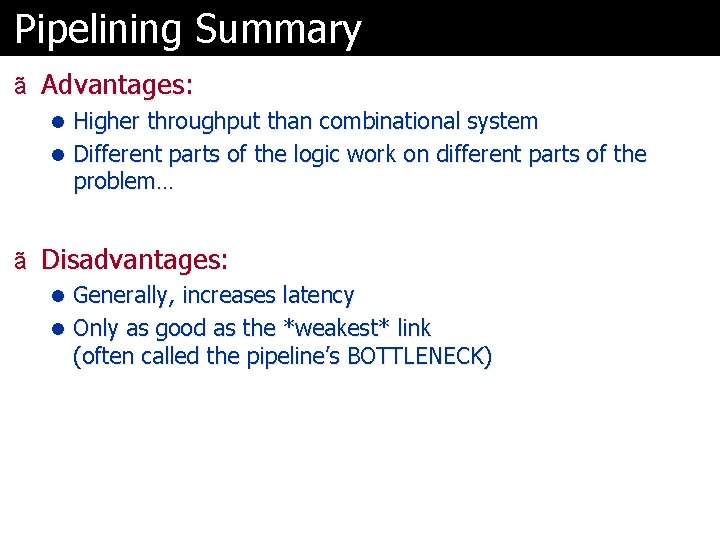

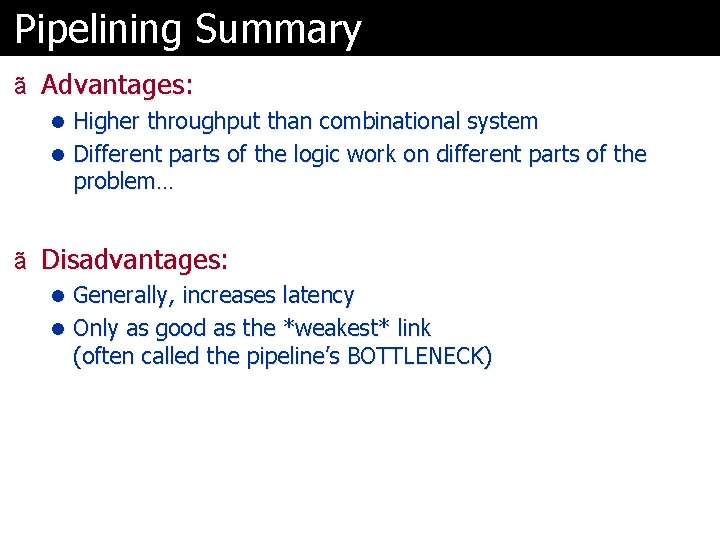

Pipelining Summary
- Advantages:
  - Higher throughput than combinational system
  - Different parts of the logic work on different parts of the problem…
- Disadvantages:
  - Generally, increases latency
  - Only as good as the *weakest* link (often called the pipeline’s BOTTLENECK)

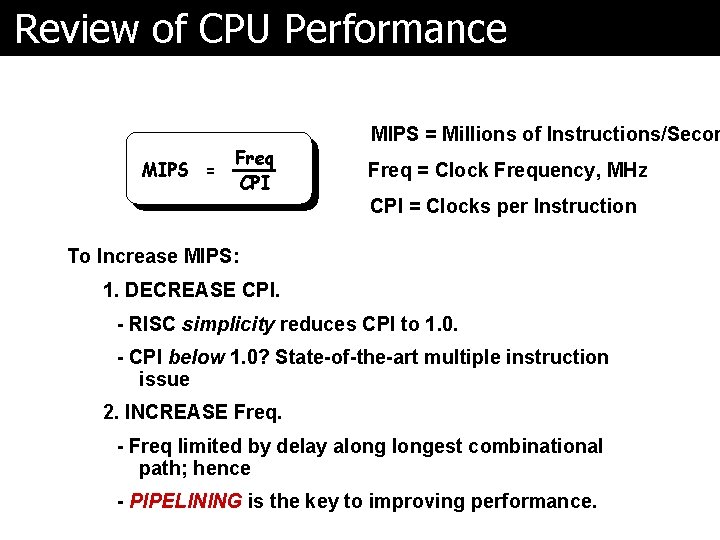

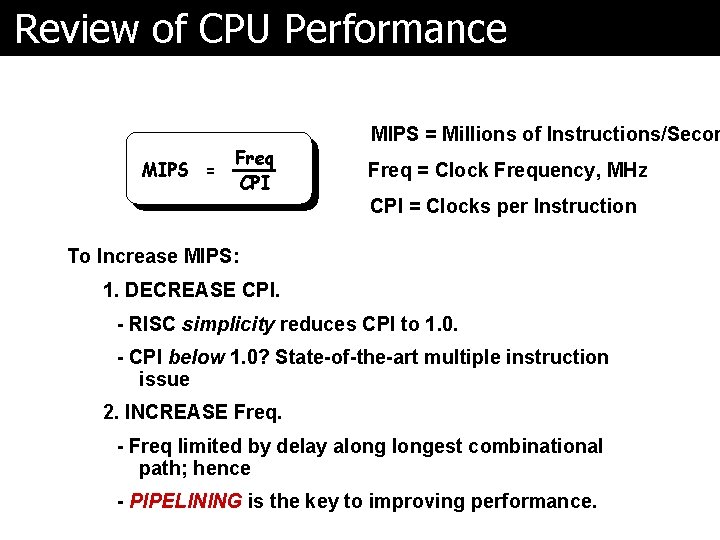

Review of CPU Performance
MIPS = Freq / CPI
- MIPS = Millions of Instructions/Second
- Freq = Clock Frequency, MHz
- CPI = Clocks per Instruction

To increase MIPS:
1. DECREASE CPI.
   - RISC simplicity reduces CPI to 1.0.
   - CPI below 1.0? State-of-the-art multiple instruction issue.
2. INCREASE Freq.
   - Freq is limited by the delay along the longest combinational path; hence
   - PIPELINING is the key to improving performance.
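A quick worked example of the MIPS relation, with frequency in MHz and CPI in clocks per instruction. The clock rates and CPI values below are hypothetical numbers chosen for easy arithmetic, not figures from the lecture.

```python
def mips(freq_mhz, cpi):
    """Millions of instructions per second for a given clock and CPI."""
    return freq_mhz / cpi

# Hypothetical machines, for illustration only.
print(mips(100, 4.0))  # 25.0  : multi-cycle design, 4 clocks per instruction
print(mips(100, 1.0))  # 100.0 : same clock, CPI reduced to 1.0
print(mips(400, 1.0))  # 400.0 : CPI of 1.0 with a 4x faster clock
```

The last two lines correspond to the two levers on the slide: lowering CPI, and raising the clock frequency by shortening the longest combinational path.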

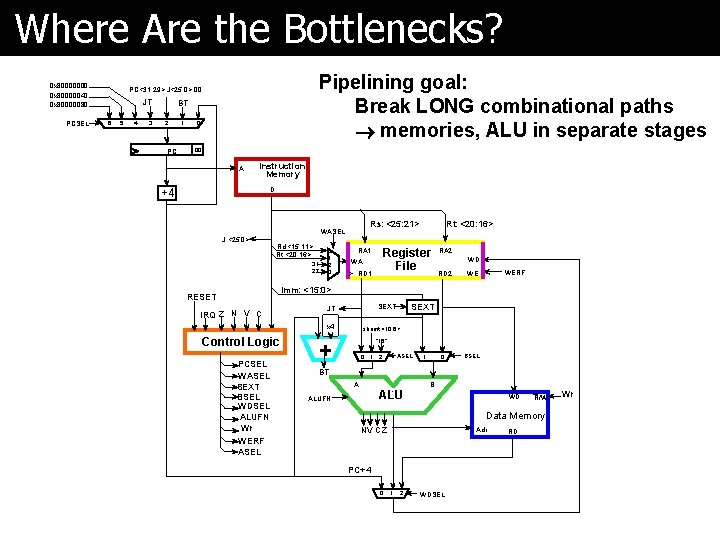

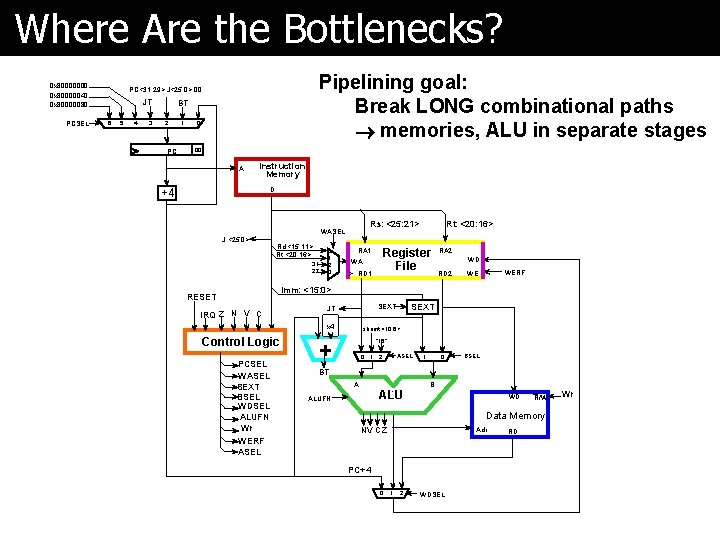

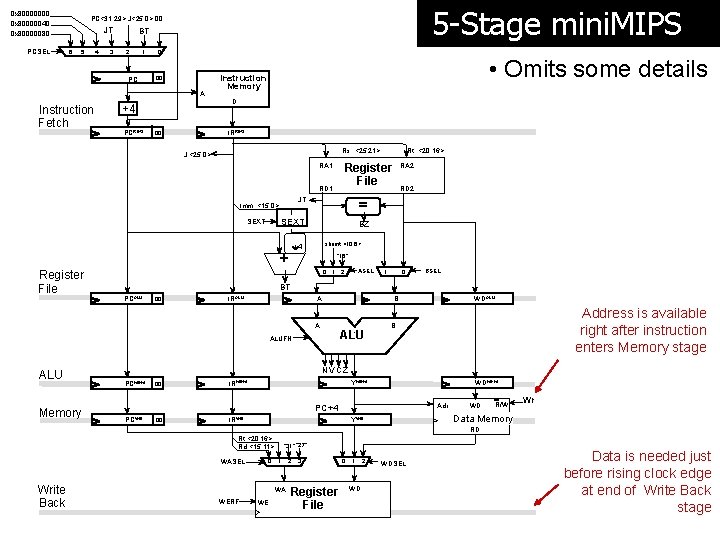

Where Are the Bottlenecks?
- Pipelining goal: break LONG combinational paths by placing the memories and the ALU in separate stages.

[Figure: datapath diagram showing the PC, Instruction Memory, Register File, Control Logic, ALU, and Data Memory]

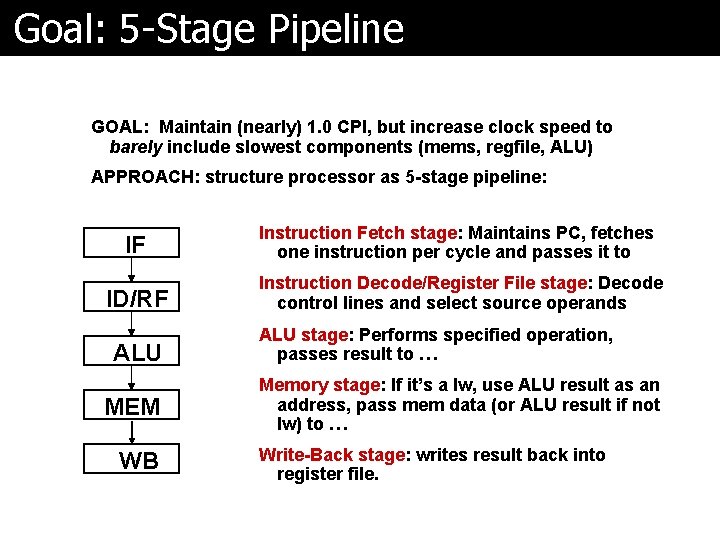

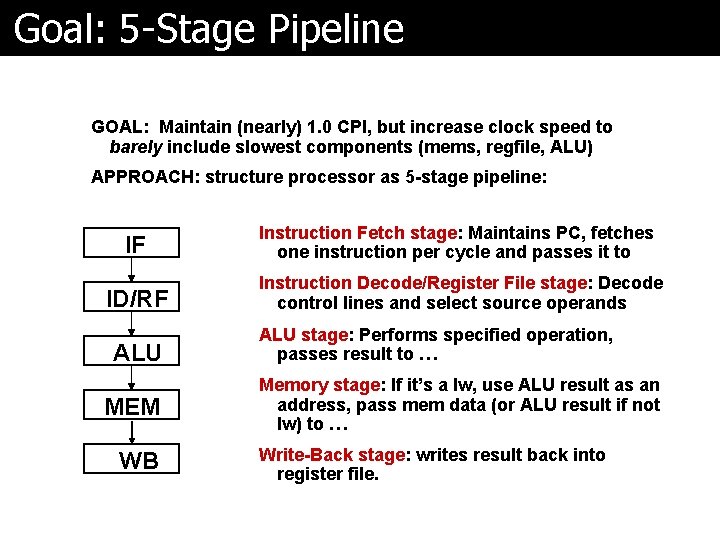

Goal: 5-Stage Pipeline
- GOAL: Maintain (nearly) 1.0 CPI, but increase clock speed to barely include slowest components (mems, regfile, ALU)
- APPROACH: structure processor as 5-stage pipeline:
  - IF (Instruction Fetch stage): maintains PC, fetches one instruction per cycle and passes it to …
  - ID/RF (Instruction Decode/Register File stage): decodes control lines and selects source operands
  - ALU (ALU stage): performs specified operation, passes result to …
  - MEM (Memory stage): if it’s a lw, uses ALU result as an address, passes mem data (or ALU result if not lw) to …
  - WB (Write-Back stage): writes result back into register file.

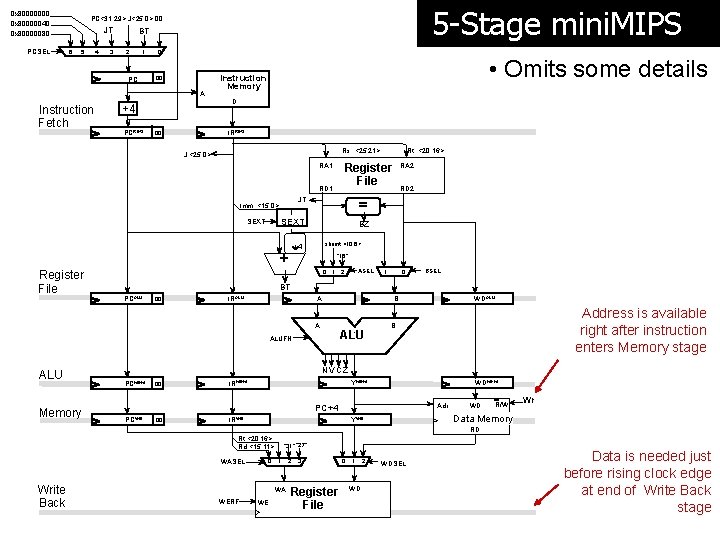

5-Stage miniMIPS
- Omits some details
- Address is available right after instruction enters Memory stage
- Data is needed just before rising clock edge at end of Write Back stage

[Figure: pipelined miniMIPS datapath with stages Instruction Fetch, Register File, ALU, Memory, and Write Back, separated by pipeline registers (PCREG, IRREG; PCALU, IRALU, A, B, WDALU; PCMEM, IRMEM, YMEM, WDMEM; PCWB, IRWB, YWB)]

Pipelining
- Improve performance by increasing instruction throughput
- Ideal speedup is number of stages in the pipeline. Do we achieve this?
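One way to see where the ideal speedup comes from is to count cycles. The sketch below assumes equal-length stages, one cycle per stage, and no hazards or stalls; those are simplifying assumptions for illustration, and the function names are hypothetical.

```python
def unpipelined_cycles(n_instr, n_stages):
    """Each instruction passes through every stage before the next begins."""
    return n_instr * n_stages

def pipelined_cycles(n_instr, n_stages):
    """Fill the pipeline once, then finish one instruction per cycle."""
    if n_instr == 0:
        return 0
    return n_stages + (n_instr - 1)

def speedup(n_instr, n_stages):
    return unpipelined_cycles(n_instr, n_stages) / pipelined_cycles(n_instr, n_stages)

for n in (1, 10, 1000):
    print(n, round(speedup(n, 5), 2))
```

The speedup approaches the number of stages only as the instruction count grows, and only under the no-stall assumption. Unequal stage delays and hazards both pull the real figure below the ideal.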

Pipelining
- What makes it easy?
  - all instructions are the same length
  - just a few instruction formats
  - memory operands appear only in loads and stores
- What makes it hard?
  - structural hazards: suppose we had only one memory
  - control hazards: need to worry about branch instructions
  - data hazards: an instruction depends on a previous instruction
- Net effect:
  - Individual instructions still take the same number of cycles
  - But improved throughput by increasing the number of simultaneously executing instructions

Data Hazards
- Problem with starting next instruction before first is finished
  - dependencies that “go backward in time” are data hazards

Software Solution
- Have compiler guarantee no hazards
  - Where do we insert the “nops”?
    - Between “producing” and “consuming” instructions!

    sub $2, $1, $3
    and $12, $2, $5
    or  $13, $6, $2
    add $14, $2, $2
    sw  $15, 100($2)

- Problem: this really slows us down!
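A minimal sketch of what such a compiler pass might look like. Instructions are modeled as `(op, dest, sources)` tuples, and `min_gap` is the number of instruction slots that must separate a producer from its consumer. The default of 2 is an assumption (it fits a register file that writes in the first half of a cycle and reads in the second half); a design that writes only at the end of Write Back would need 3. The program uses a `sub` that writes `$2` followed by instructions that read `$2`; all names here are illustrative.

```python
NOP = ("nop", None, ())

def insert_nops(program, min_gap=2):
    """Pad the program so no consumer is within min_gap slots of its producer."""
    out = []
    for op, dest, srcs in program:
        needed = 0
        # Check the most recent min_gap slots, nearest first.
        recent = out[-min_gap:] if min_gap > 0 else []
        for distance, (_, prev_dest, _) in enumerate(reversed(recent), start=1):
            if prev_dest is not None and prev_dest in srcs:
                needed = max(needed, min_gap - distance + 1)
        out.extend([NOP] * needed)
        out.append((op, dest, srcs))
    return out

program = [
    ("sub", "$2", ("$1", "$3")),
    ("and", "$12", ("$2", "$5")),
    ("or", "$13", ("$6", "$2")),
    ("add", "$14", ("$2", "$2")),
    ("sw", None, ("$15", "$2")),  # sw reads both registers, writes none
]

ops = [instr[0] for instr in insert_nops(program)]
print(ops)  # ['sub', 'nop', 'nop', 'and', 'or', 'add', 'sw']
```

Two wasted slots for a five-instruction sequence is a 40% overhead, which is the slowdown the slide complains about.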

Forwarding
- Bypass/forward results as soon as they are produced/needed. Don’t wait for them to be written back into registers!

Can't always forward
- Load word can still cause a hazard:
  - an instruction tries to read a register following a load instruction that writes to the same register. STALL!

Stalling
- When needed, stall the pipeline by keeping an instruction in the same stage for an extra clock cycle.
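The load-use case can be detected by comparing adjacent instructions. The sketch below assumes forwarding handles every other dependency and that each load-use pair costs one stall cycle; instructions are modeled as hypothetical `(op, dest, sources)` tuples, and the register numbers are arbitrary.

```python
def needs_stall(prev, curr):
    """True if curr reads the register that the preceding lw is loading."""
    prev_op, prev_dest, _ = prev
    _, _, curr_srcs = curr
    return prev_op == "lw" and prev_dest in curr_srcs

def count_stalls(program):
    """Count load-use pairs among adjacent instructions."""
    return sum(needs_stall(a, b) for a, b in zip(program, program[1:]))

# Illustrative sequence: the `and` reads $2 right after lw loads it.
program = [
    ("lw", "$2", ("$1",)),
    ("and", "$4", ("$2", "$5")),
    ("or", "$8", ("$2", "$6")),
]

print(count_stalls(program))  # 1
```

Only the `and` stalls. By the time the `or` needs `$2`, the loaded value can be forwarded without holding anything back.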

Branch Hazards
- When branching, other instructions are in the pipeline!
  - need to add hardware for flushing instructions if we are wrong

Pipeline Summary
- A very common technique to improve throughput of any circuit
  - used in all modern processors!
- Fallacies:
  - “Pipelining is easy.” No, smart people get it wrong all of the time!
  - “Pipelining is independent of ISA.” No, many ISA decisions impact how easy/costly it is to implement pipelining (i.e., branch semantics, addressing modes).
  - “Increasing pipeline stages improves performance.” No, returns diminish because of increasing complexity.