
CGW 2007: How Trustworthy is Crafty's Analysis of Chess Champions?

Matej Guid, Aritz Pérez, and Ivan Bratko
University of Ljubljana, Slovenia

Introduction

High-quality chess programs provide an opportunity for a more objective comparison between chess champions.
- Guid and Bratko carried out a computer analysis of games played by World Chess Champions (ICGA Journal, Vol. 29, No. 2, June 2006).
- It was an attempt at an objective assessment of the playing strength of chess players from different eras.

Crafty's Analysis of Chess Champions (2006)
- The idea was to determine the players' quality of play, regardless of the game score.
- The individual moves made by each player were analysed by computer.
- The open-source chess program Crafty was used for the analysis.

I Wilhelm Steinitz, 1886 - 1894

II Emanuel Lasker, 1894 - 1921

Readers' feedback
- A frequent comment by the readers could be summarised as: "A very interesting study, but it has a flaw in that the program Crafty, whose rating is only about 2620, was used to analyse the performance of players stronger than this. For this reason the results cannot be useful."
- Some readers speculated further that the program would give a better ranking to players whose strength is similar to its own.

Reservations by the readers
- The three main objections to the methodology used:
  - the program used for the analysis was too weak;
  - the search depth performed by the program was too shallow;
  - the number of analysed positions was too low (at least for some players).

III Jose Raul Capablanca, 1921 - 1927


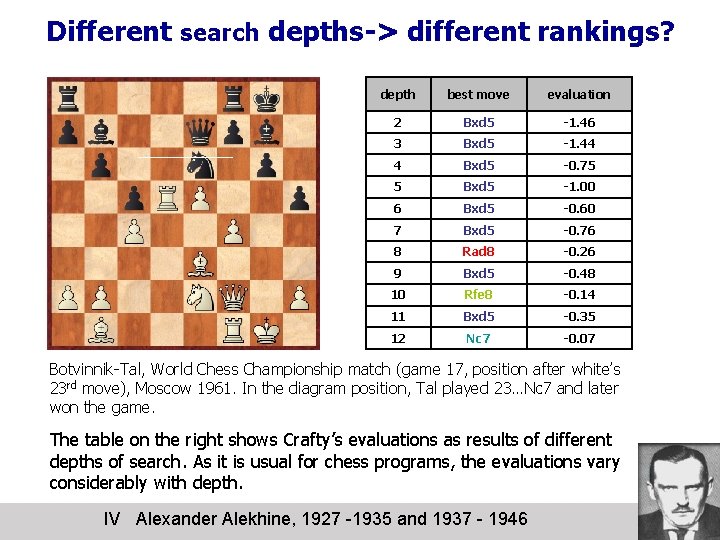

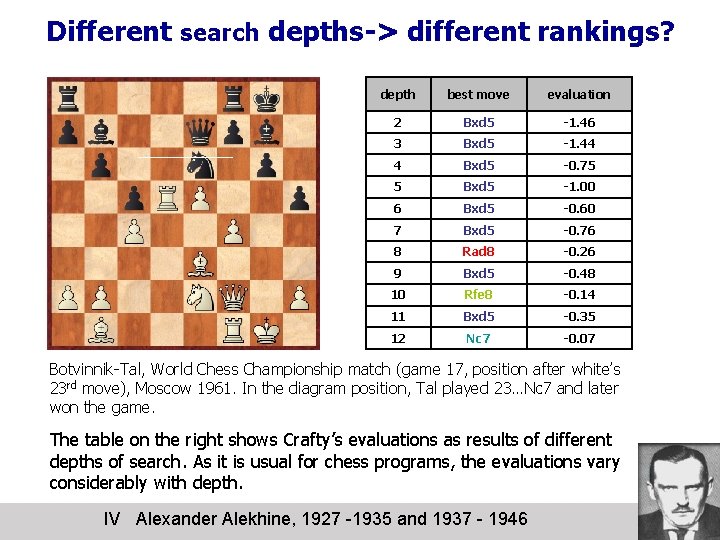

Different search depths -> different rankings?

| Depth | Best move | Evaluation |
|---|---|---|
| 2 | Bxd5 | -1.46 |
| 3 | Bxd5 | -1.44 |
| 4 | Bxd5 | -0.75 |
| 5 | Bxd5 | -1.00 |
| 6 | Bxd5 | -0.60 |
| 7 | Bxd5 | -0.76 |
| 8 | Rad8 | -0.26 |
| 9 | Bxd5 | -0.48 |
| 10 | Rfe8 | -0.14 |
| 11 | Bxd5 | -0.35 |
| 12 | Nc7 | -0.07 |

Botvinnik-Tal, World Chess Championship match (game 17, position after White's 23rd move), Moscow 1961. In the diagram position, Tal played 23...Nc7 and later won the game. The table shows Crafty's evaluations at different search depths. As is usual for chess programs, the evaluations vary considerably with depth.

IV Alexander Alekhine, 1927 - 1935 and 1937 - 1946

Ranking of the players at different depths
- In particular, we were interested in observing to what extent the ranking of the players is preserved at different depths of search.

Searching deeper
- The strength of computer chess programs increases with search depth.
- K. Thompson (1982): searching just one ply deeper results in a performance more than 200 rating points stronger.
- The gains in strength diminish with additional search, but are nevertheless significant at search depths of up to 20 plies (J. R. Steenhuisen, 2005).

Preservation of the rankings at different search depths would therefore suggest that:
- the same rankings would have been obtained by searching deeper;
- using a stronger chess program would NOT affect the results significantly.

V Max Euwe, 1935 - 1937

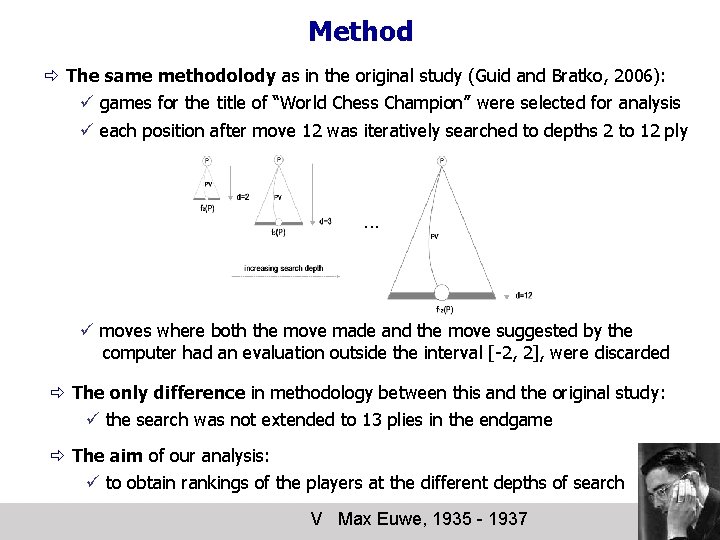

Method
- The same methodology as in the original study (Guid and Bratko, 2006):
  - games for the title of "World Chess Champion" were selected for analysis;
  - each position after move 12 was iteratively searched to depths of 2 to 12 plies;
  - moves where both the move made and the move suggested by the computer had an evaluation outside the interval [-2, 2] were discarded.
- The only difference in methodology between this study and the original one:
  - the search was not extended to 13 plies in the endgame.
- The aim of our analysis:
  - to obtain rankings of the players at the different depths of search.
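The discard rule in the method can be sketched as a small filter. This is a minimal sketch. It assumes that each analysed move is stored as a pair of evaluations in pawns, one for the computer's best move and one for the move actually played. The `AnalysedMove` type and the `keep_move` function are hypothetical names for illustration, not part of Crafty or the original study's code.

```python
from dataclasses import dataclass

# Hypothetical record for one analysed position at one search depth.
@dataclass
class AnalysedMove:
    best_eval: float    # evaluation (pawns) of the computer's best move
    played_eval: float  # evaluation (pawns) of the move actually played

def keep_move(m: AnalysedMove, bound: float = 2.0) -> bool:
    """Discard a move only when BOTH evaluations lie outside [-bound, bound]."""
    best_outside = not (-bound <= m.best_eval <= bound)
    played_outside = not (-bound <= m.played_eval <= bound)
    return not (best_outside and played_outside)

moves = [AnalysedMove(0.35, 0.10), AnalysedMove(3.1, 2.6), AnalysedMove(2.4, 1.2)]
kept = [m for m in moves if keep_move(m)]
print(len(kept))  # 2: only the (3.1, 2.6) move is discarded
```

Note that a move is kept when just one of the two evaluations is outside the interval. This matches the "both ... outside" wording of the rule.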

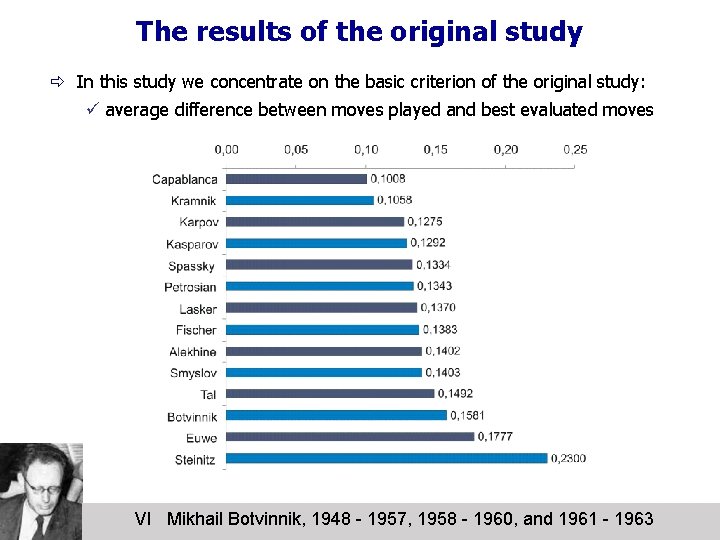

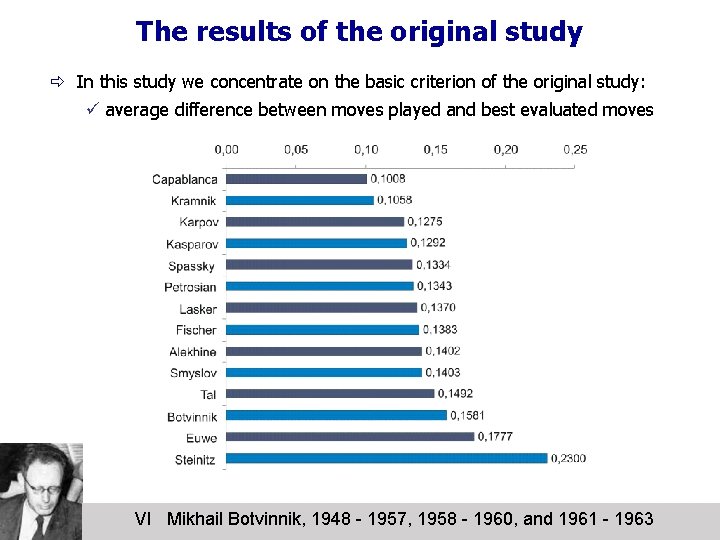

The results of the original study
- In this study we concentrate on the basic criterion of the original study:
  - the average difference between the evaluations of the moves played and of the best-evaluated moves.

VI Mikhail Botvinnik, 1948 - 1957, 1958 - 1960, and 1961 - 1963
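The basic criterion can be illustrated as a mean over positions of the gap between the best-evaluated move and the move played. This is a sketch under assumptions. Evaluations are taken to be in pawns, from the perspective of the player to move. The input is assumed to be already filtered by the [-2, 2] rule. The name `average_deviation` is hypothetical. A lower score means play closer to the computer's preferred moves.

```python
from statistics import fmean

def average_deviation(pairs):
    """Mean of (best-move evaluation - played-move evaluation) over positions.

    `pairs` is a list of (best_eval, played_eval) tuples in pawns,
    assumed to be already filtered by the [-2, 2] rule. Lower is better.
    """
    if not pairs:
        raise ValueError("no positions to score")
    return fmean(best - played for best, played in pairs)

# Three positions: one perfect move and two 0.3-pawn inaccuracies.
score = average_deviation([(0.30, 0.30), (0.50, 0.20), (-0.10, -0.40)])
print(round(score, 3))  # 0.2
```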

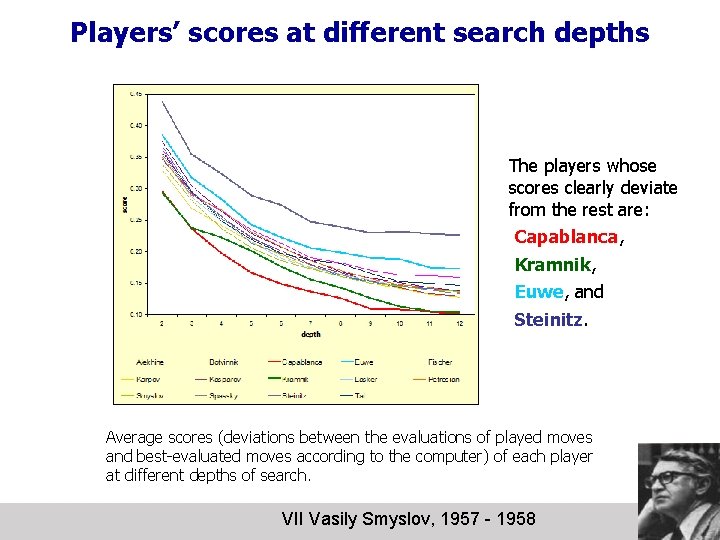

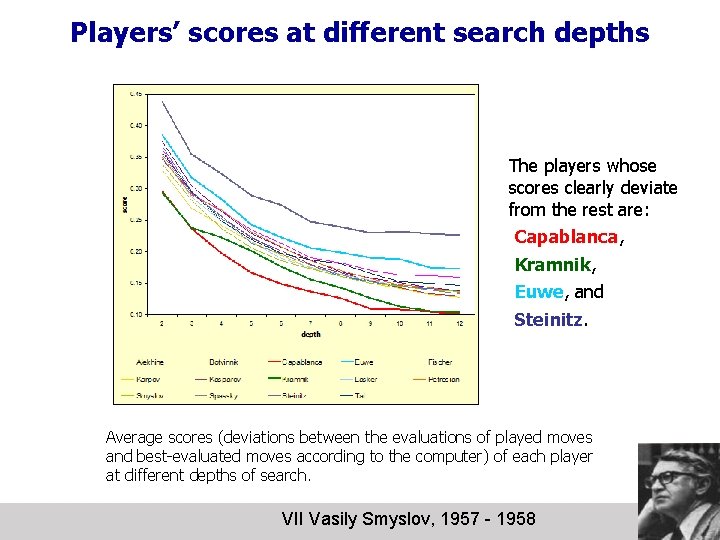

Players' scores at different search depths

Average scores (deviations between the evaluations of played moves and best-evaluated moves, according to the computer) of each player at different depths of search. The players whose scores clearly deviate from the rest are: Capablanca, Kramnik, Euwe, and Steinitz.

VII Vasily Smyslov, 1957 - 1958

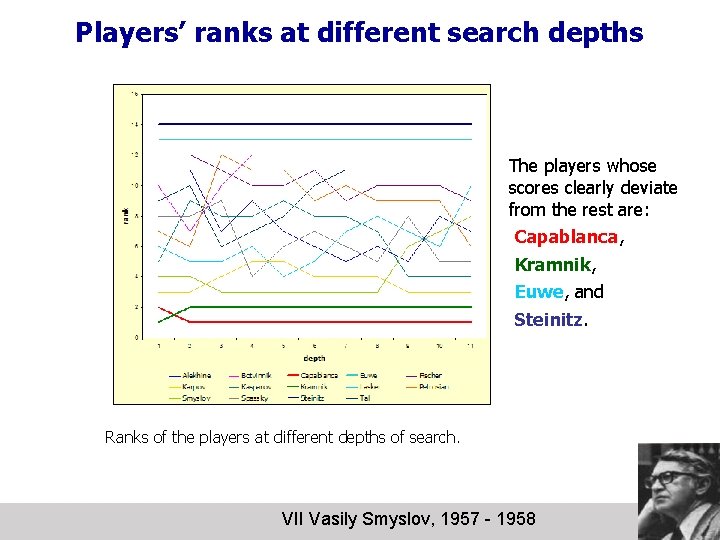

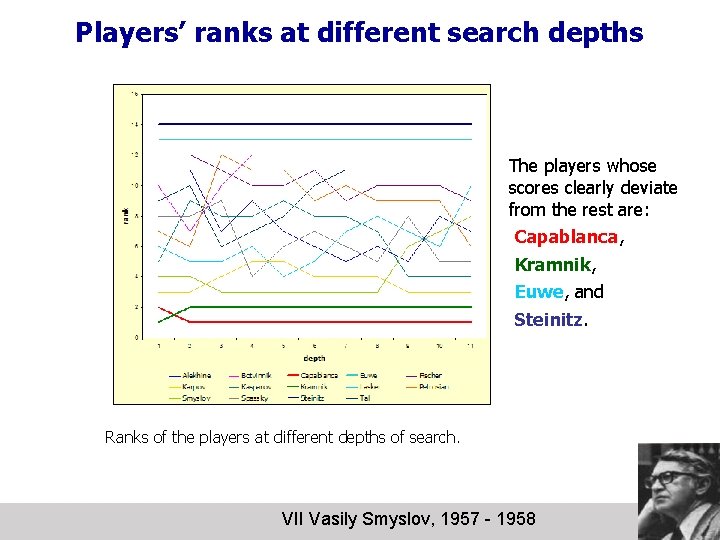

Players' ranks at different search depths

Ranks of the players at different depths of search. The players whose scores clearly deviate from the rest are: Capablanca, Kramnik, Euwe, and Steinitz.
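Turning the per-depth scores into per-depth ranks can be sketched as sorting players by score, with the lowest score ranked first. This is a minimal sketch. The function name `ranks_from_scores` and the example scores are hypothetical and are not the study's data. Breaking ties by name is an assumption added only to make the result deterministic.

```python
def ranks_from_scores(scores):
    """Map player -> rank (1 = best) at one search depth; lower score is better.

    Ties are broken alphabetically purely for determinism (an assumption).
    """
    ordered = sorted(scores, key=lambda player: (scores[player], player))
    return {player: i + 1 for i, player in enumerate(ordered)}

# Hypothetical scores for three players at two depths (illustrative only).
scores_by_depth = {
    2: {"Player A": 0.10, "Player B": 0.14, "Player C": 0.12},
    12: {"Player A": 0.09, "Player B": 0.13, "Player C": 0.11},
}
for depth, scores in scores_by_depth.items():
    print(depth, ranks_from_scores(scores))
```

In this toy data the ordering is identical at both depths. That is the kind of preservation the slides examine.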

Stability of the rankings

To check the reliability of the program as a tool for ranking chess players, our goal was to determine the stability of the obtained rankings:
- in different subsets of analysed positions;
- with increasing search depth.

Subsets of analysed positions

For each player:
- 100 subsets were generated from the original dataset;
- each subset contained 500 randomly chosen positions (without replacement).

VIII Mikhail Tal, 1960 - 1961
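Generating the subsets can be sketched with Python's `random.sample`, which draws without replacement within each subset. Separate subsets may still overlap. This is a sketch only. The function name `make_subsets` and the fixed seed are assumptions added for illustration and reproducibility. The integers stand in for one player's analysed positions.

```python
import random

def make_subsets(positions, n_subsets=100, size=500, seed=0):
    """Draw n_subsets random subsets of `size` positions each.

    Sampling is without replacement within a subset; different subsets
    may share positions. The seed only makes the sketch reproducible.
    """
    if size > len(positions):
        raise ValueError("not enough positions for the requested subset size")
    rng = random.Random(seed)
    return [rng.sample(positions, size) for _ in range(n_subsets)]

positions = list(range(5000))  # stand-ins for one player's analysed positions
subsets = make_subsets(positions)
print(len(subsets), len(subsets[0]))  # 100 500
```

A player with only about 600 positions (Fischer, according to the slides) can still supply 500-position subsets, but those subsets overlap heavily.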

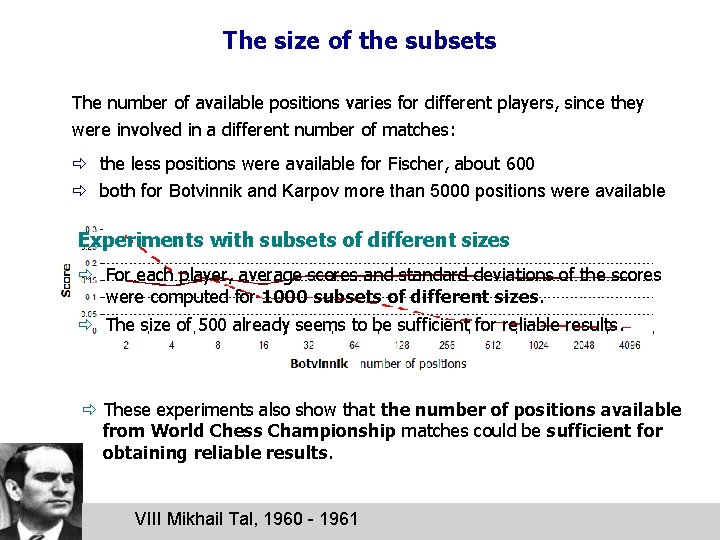

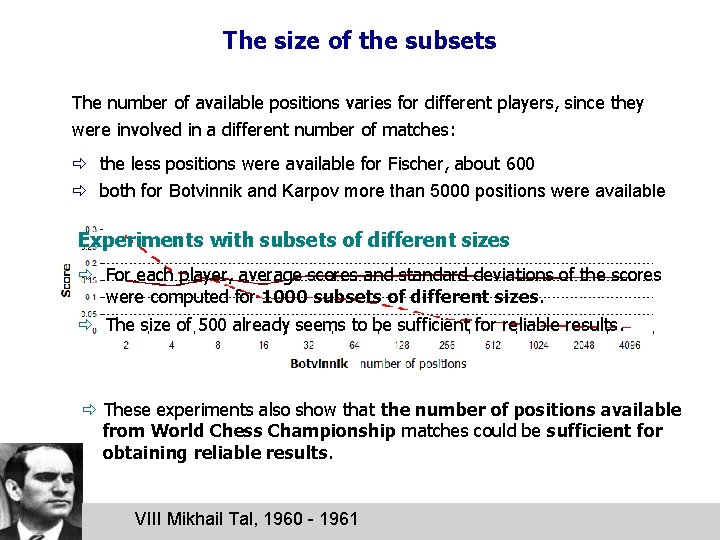

The size of the subsets

The number of available positions varies between players, since they were involved in different numbers of matches:
- the fewest positions were available for Fischer, about 600;
- for both Botvinnik and Karpov, more than 5000 positions were available.

Experiments with subsets of different sizes
- For each player, average scores and standard deviations of the scores were computed for 1000 subsets of different sizes.
- A subset size of 500 already seems to be sufficient for reliable results.
- These experiments also show that the number of positions available from World Chess Championship matches could be sufficient for obtaining reliable results.
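The subset-size experiment can be sketched as follows. For each size, draw many random subsets, score each subset by its average deviation, and report the mean and standard deviation of those scores. This is a sketch on synthetic data, not the study's data or code. The name `subset_score_stats` and the half-normal deviations are assumptions for illustration. With such data, the spread of the subset scores shrinks as the subset size grows.

```python
import random
from statistics import fmean, stdev

def subset_score_stats(deviations, size, n_subsets=1000, seed=0):
    """Mean and standard deviation of a player's score over random subsets.

    deviations: one (best-move eval - played-move eval) value per position.
    Each subset score is the average deviation over `size` positions drawn
    without replacement; the fixed seed is only for reproducibility.
    """
    rng = random.Random(seed)
    scores = [fmean(rng.sample(deviations, size)) for _ in range(n_subsets)]
    return fmean(scores), stdev(scores)

# Synthetic per-position deviations, for illustration only (not the study's data).
gen = random.Random(1)
deviations = [abs(gen.gauss(0.0, 0.3)) for _ in range(5000)]

for size in (50, 100, 500):
    avg, sd = subset_score_stats(deviations, size)
    print(f"size={size:4d}  mean={avg:.4f}  sd={sd:.4f}")
```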

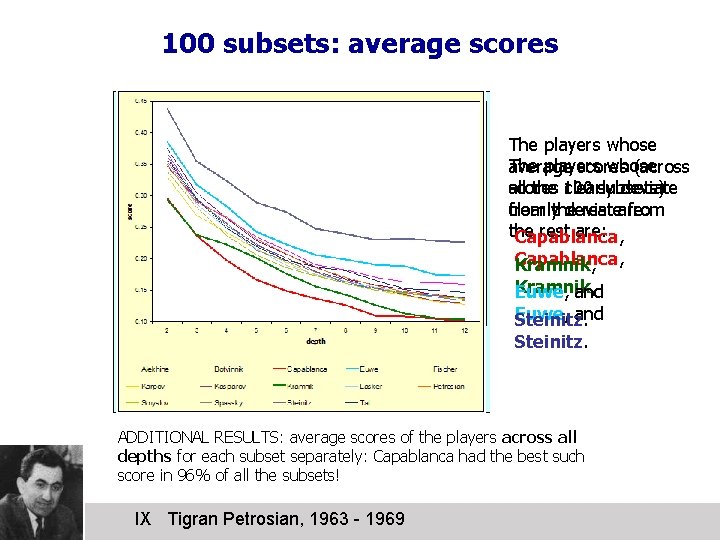

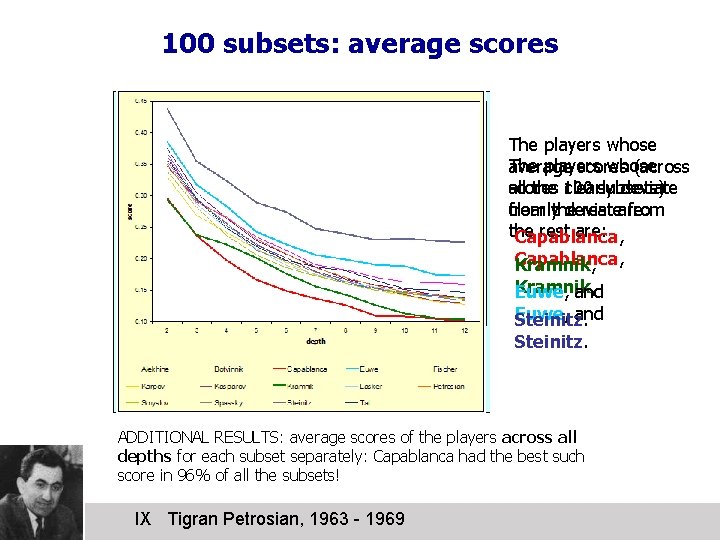

100 subsets: average scores

The players whose average scores (across all the 100 subsets) clearly deviate from the rest are: Capablanca, Kramnik, Euwe, and Steinitz.

Additional results: when each player's average score across all depths was computed for each subset separately, Capablanca had the best such score in 96% of all the subsets!

IX Tigran Petrosian, 1963 - 1969
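The "additional results" statistic can be sketched as follows. For each subset, average every player's scores over all depths, then count how often a given player has the lowest (best) such average. The nested layout `scores[player][subset][depth]`, the function name `best_player_share`, and the numbers are hypothetical. They are chosen only to illustrate the computation.

```python
from statistics import fmean

def best_player_share(scores, player):
    """Fraction of subsets in which `player` has the lowest across-depth average.

    scores[p][s][d] is player p's score on subset s at depth index d
    (a hypothetical layout); every player must have the same number of subsets.
    """
    n_subsets = len(scores[player])
    wins = 0
    for s in range(n_subsets):
        averages = {p: fmean(scores[p][s]) for p in scores}
        if min(averages, key=averages.get) == player:
            wins += 1
    return wins / n_subsets

# Two subsets, three depths each; illustrative numbers only.
scores = {
    "Player A": [[0.10, 0.09, 0.09], [0.12, 0.11, 0.10]],
    "Player B": [[0.11, 0.10, 0.10], [0.10, 0.10, 0.09]],
}
print(best_player_share(scores, "Player A"))  # 0.5
```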

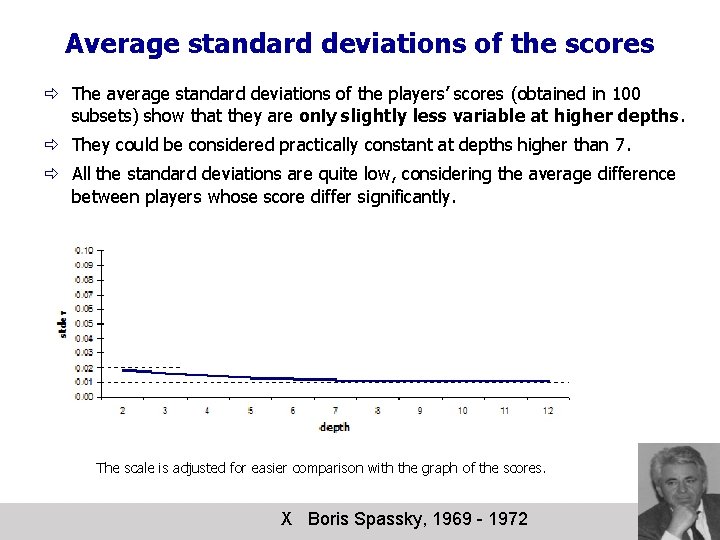

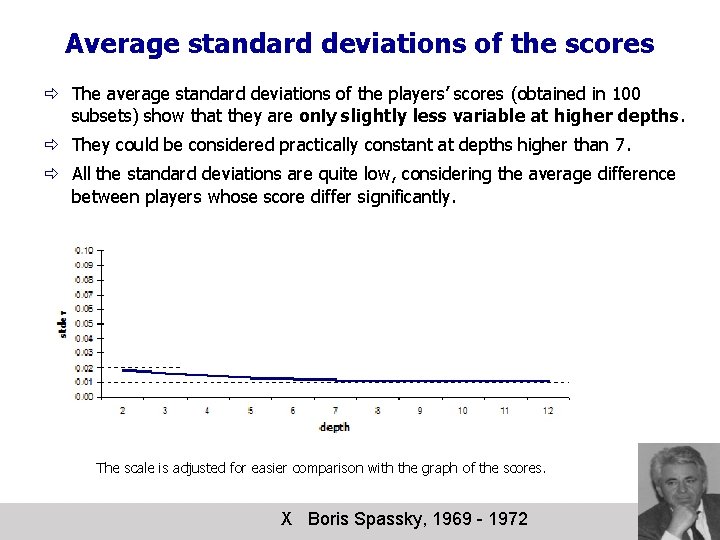

Average standard deviations of the scores
- The average standard deviations of the players' scores (obtained in 100 subsets) show that the scores are only slightly less variable at higher depths.
- They could be considered practically constant at depths higher than 7.
- All the standard deviations are quite low, considering the average difference between players whose scores differ significantly.

The scale is adjusted for easier comparison with the graph of the scores.

X Boris Spassky, 1969 - 1972
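The averaged standard deviation per depth can be sketched in two steps. First, for each player, take the standard deviation of that player's score across the subsets at a given depth. Second, average those values over players. This is a sketch under assumptions. The data layout `scores[player][subset][depth]` and the name `average_sd_by_depth` are hypothetical. Using the sample standard deviation (`statistics.stdev`) is also an assumption, since the slides do not say which estimator was used.

```python
from statistics import fmean, stdev

def average_sd_by_depth(scores):
    """Per depth: std of each player's score across subsets, averaged over players.

    scores[p][s][d]: player p, subset s, depth index d (hypothetical layout).
    Needs at least two subsets per player for the sample standard deviation.
    """
    players = list(scores)
    n_depths = len(scores[players[0]][0])
    result = []
    for d in range(n_depths):
        per_player = [stdev([subset[d] for subset in scores[p]]) for p in players]
        result.append(fmean(per_player))
    return result

# Two players, two subsets, two depths; illustrative numbers only.
scores = {
    "Player A": [[0.10, 0.09], [0.12, 0.09]],
    "Player B": [[0.20, 0.15], [0.22, 0.15]],
}
print(average_sd_by_depth(scores))
```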

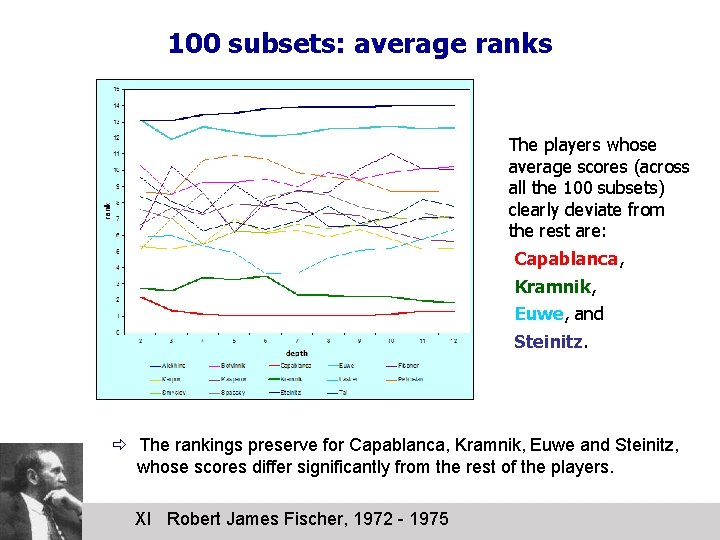

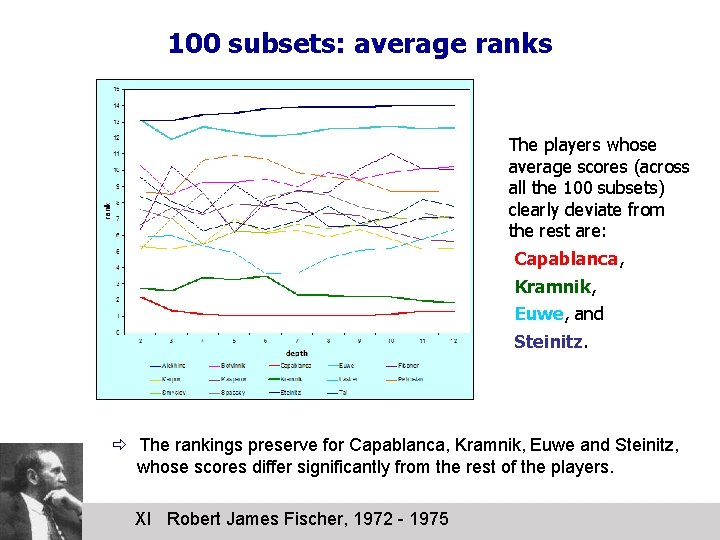

100 subsets: average ranks

The players whose average scores (across all the 100 subsets) clearly deviate from the rest are: Capablanca, Kramnik, Euwe, and Steinitz.
- The rankings are preserved for Capablanca, Kramnik, Euwe, and Steinitz, whose scores differ significantly from those of the other players.

XI Robert James Fischer, 1972 - 1975

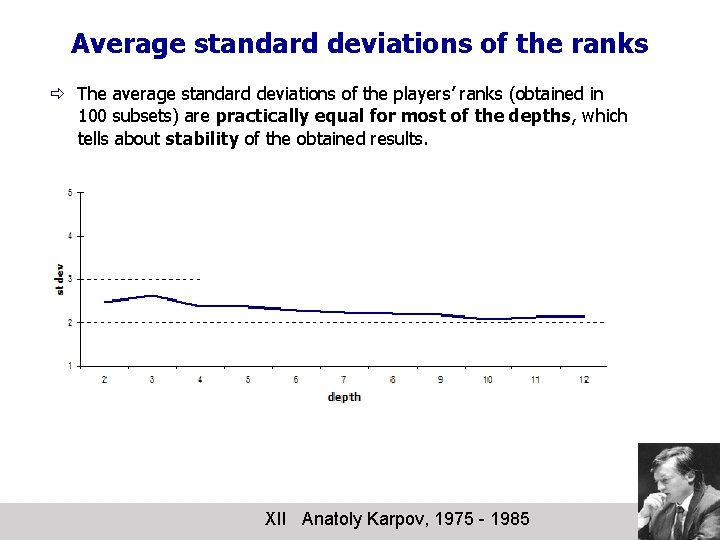

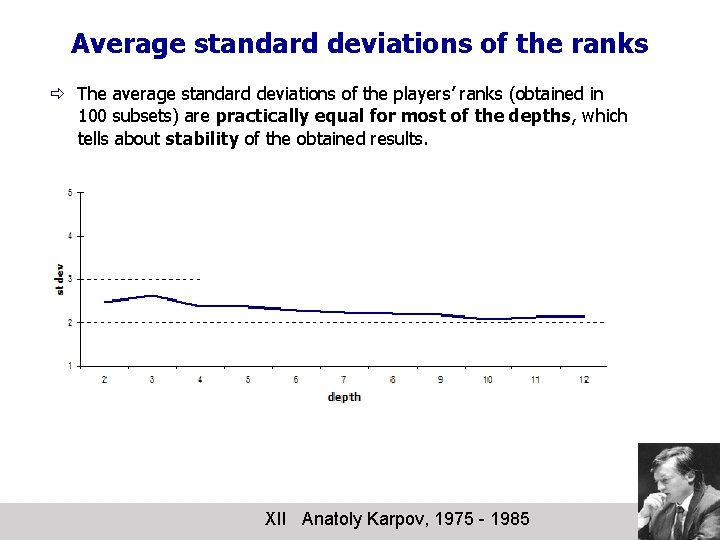

Average standard deviations of the ranks
- The average standard deviations of the players' ranks (obtained in 100 subsets) are practically equal for most of the depths, which indicates that the obtained results are stable.

XII Anatoly Karpov, 1975 - 1985
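The rank-based counterpart works the same way, but on ranks instead of scores. At each depth, rank the players within every subset, take each player's standard deviation of rank across subsets, and average over players. This is a minimal sketch. The layout `scores[player][subset][depth]`, the helper names `ranks` and `average_rank_sd_by_depth`, and the toy data are hypothetical. Breaking ties by name is an assumption.

```python
from statistics import fmean, stdev

def ranks(scores):
    """Rank players by score (1 = lowest score = best); ties broken by name."""
    ordered = sorted(scores, key=lambda p: (scores[p], p))
    return {p: i + 1 for i, p in enumerate(ordered)}

def average_rank_sd_by_depth(scores):
    """scores[p][s][d] -> per depth, the players' rank std across subsets, averaged."""
    players = list(scores)
    n_subsets = len(scores[players[0]])
    n_depths = len(scores[players[0]][0])
    out = []
    for d in range(n_depths):
        # Ranking of all players within each subset at depth d.
        per_subset = [ranks({p: scores[p][s][d] for p in players}) for s in range(n_subsets)]
        sds = [stdev([r[p] for r in per_subset]) for p in players]
        out.append(fmean(sds))
    return out

# Players swap places between subsets at depth index 0 but not at index 1.
scores = {
    "Player A": [[0.10, 0.09], [0.12, 0.09]],
    "Player B": [[0.11, 0.15], [0.11, 0.15]],
}
print(average_rank_sd_by_depth(scores))
```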

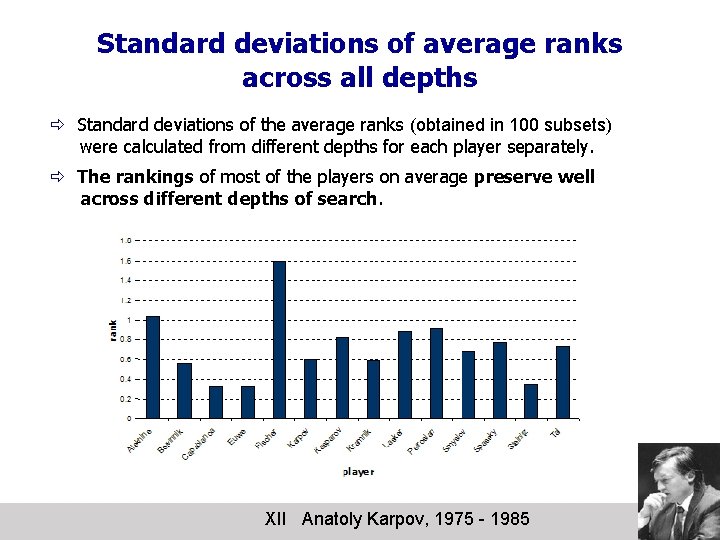

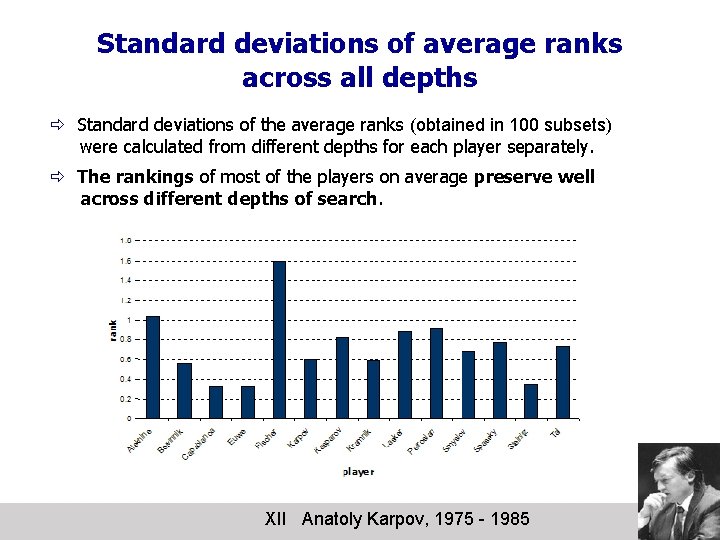

Standard deviations of average ranks across all depths
- For each player separately, the standard deviation of the average ranks (obtained in 100 subsets) was calculated across the different depths.
- On average, the rankings of most of the players are well preserved across different depths of search.
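The per-player spread across depths can be sketched as the standard deviation of each player's subset-averaged rank over the depth indices. A small value means the player's place in the ranking barely moves with depth. The input layout `avg_rank[player][depth]`, the function name `rank_spread_across_depths`, the use of the sample standard deviation, and the numbers are all assumptions for illustration.

```python
from statistics import stdev

def rank_spread_across_depths(avg_rank):
    """avg_rank[p][d]: player p's rank at depth index d, averaged over the subsets.

    Returns, per player, the sample standard deviation of those averages
    across depths; small values mean a stable position in the ranking.
    """
    return {p: stdev(ranks_by_depth) for p, ranks_by_depth in avg_rank.items()}

# Illustrative averages over four depths (not the study's data).
avg_rank = {"Player A": [1.2, 1.1, 1.0, 1.0], "Player B": [3.0, 2.4, 2.9, 3.5]}
spread = rank_spread_across_depths(avg_rank)
print(spread)
```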

Different strengths of the program, yet such similar rankings!?
- At very shallow depths, Crafty is much weaker than an ordinary club player, yet the obtained rankings are very similar at all depths...
- What are the conditions under which the program gives reliable rankings?
- Is a stronger program necessarily a better one for ranking chess players?

The obtained results already speak for themselves... however, we also provide:
- a simple probabilistic model of ranking by an imperfect referee;
- a more sophisticated mathematical explanation.

XIII Garry Kasparov, 1985 - 2000

A Simple Probabilistic Model of Ranking by an Imperfect Referee

This model shows the following:
- To obtain a sensible ranking of players, it is not necessary to use a computer that is stronger than the players themselves.
- The (fallible) computer will NOT exhibit a preference for players of similar strength to the computer.

(See the paper for details.)

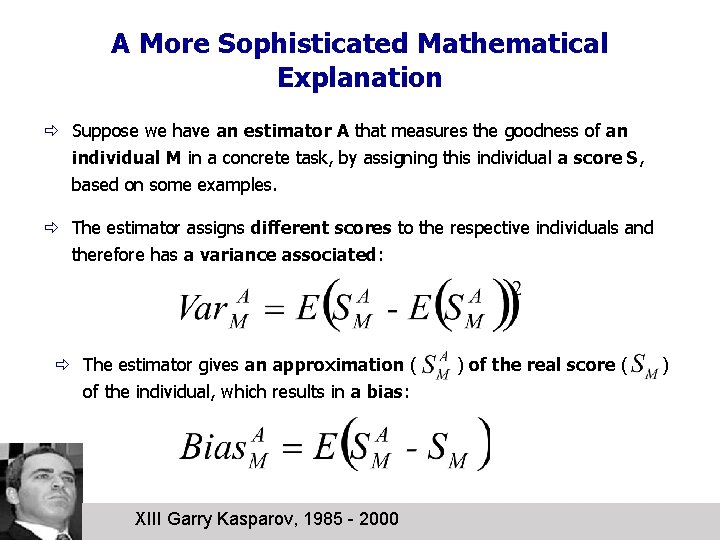

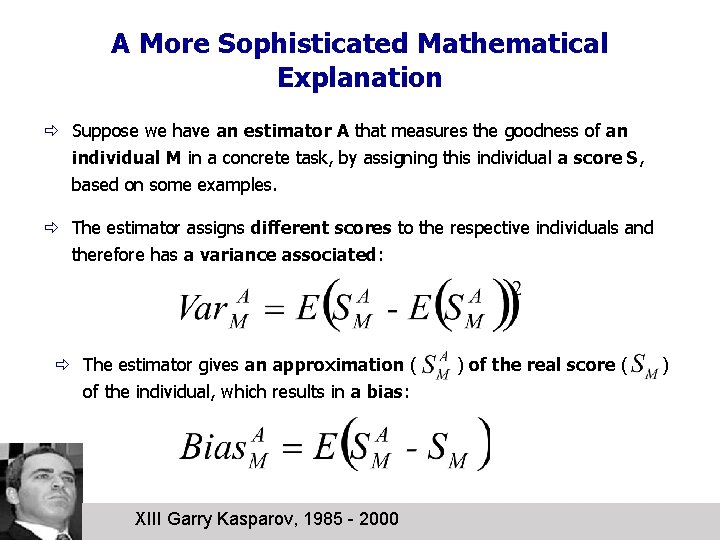

A More Sophisticated Mathematical Explanation
- Suppose we have an estimator A that measures the goodness of an individual M in a concrete task by assigning this individual a score $S_M^A$, based on some examples.
- The estimator assigns different scores to the respective individuals and therefore has an associated variance:

  $$\mathrm{Var}_M^A = E\left[\left(S_M^A - E\left[S_M^A\right]\right)^2\right]$$

- The estimator gives an approximation ($S_M^A$) of the real score ($S_M$) of the individual, which results in a bias:

  $$\mathrm{Bias}_M^A = E\left[S_M^A\right] - S_M$$

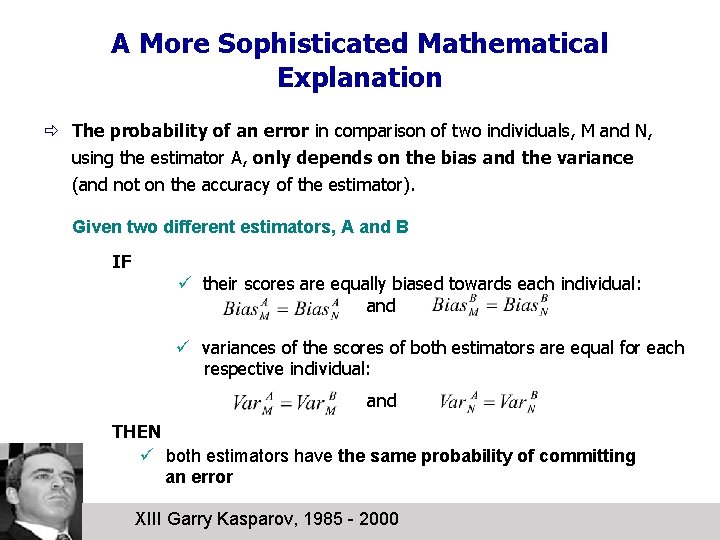

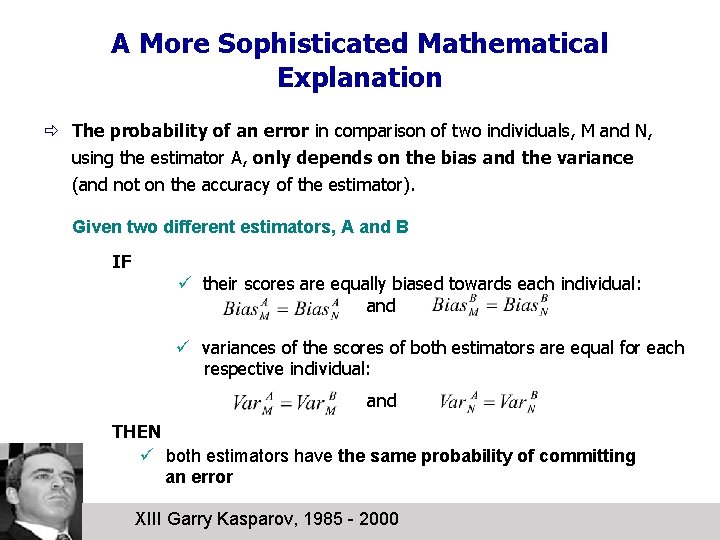

A More Sophisticated Mathematical Explanation
- The probability of an error in the comparison of two individuals, M and N, using the estimator A, depends only on the bias and the variance (and not on the accuracy of the estimator).

Given two different estimators, A and B, IF
- their scores are equally biased towards each individual: $\mathrm{Bias}_M^A = \mathrm{Bias}_N^A$ and $\mathrm{Bias}_M^B = \mathrm{Bias}_N^B$, and
- the variances of the scores of both estimators are equal for each respective individual: $\mathrm{Var}_M^A = \mathrm{Var}_M^B$ and $\mathrm{Var}_N^A = \mathrm{Var}_N^B$,

THEN
- both estimators have the same probability of committing an error.
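The claim can be made concrete with one extra assumption that is not stated on the slides: the estimated scores of the two individuals are independent and normally distributed. Each has mean equal to the real score plus the estimator's bias, and each has the estimator's variance. If M is truly better (lower real score), the estimator errs when M's estimated score exceeds N's. The sketch below computes that probability with `statistics.NormalDist`. The function name is hypothetical, and this is an illustration rather than the derivation in the paper. When the bias is the same for both individuals, it cancels. Two estimators with equal variances therefore give the same error probability, however large their common bias is.

```python
from statistics import NormalDist

def comparison_error_probability(true_m, true_n, bias_m, bias_n, var_m, var_n):
    """P(the estimator ranks N ahead of M) when M is truly better (true_m < true_n).

    Assumes independent, normally distributed estimated scores with
    mean = real score + bias and the given variances (lower score = better).
    An error means M's estimated score exceeds N's.
    """
    mu = (true_m + bias_m) - (true_n + bias_n)  # mean of (estimate_M - estimate_N)
    sigma = (var_m + var_n) ** 0.5              # its standard deviation
    return 1.0 - NormalDist(mu, sigma).cdf(0.0)

# A "deep" and a "shallow" estimator: different but player-independent biases.
p_deep = comparison_error_probability(0.10, 0.12, 0.02, 0.02, 1e-4, 1e-4)
p_shallow = comparison_error_probability(0.10, 0.12, 0.10, 0.10, 1e-4, 1e-4)
print(p_deep, p_shallow)  # equal up to rounding, about 0.079
```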

An additional experiment: Crafty, biases, and variances
- In our study, the subscript and the superscript of $S_M^A$ refer to:
  - M: a player;
  - A: a depth of search.
- The real score $S_M$ could not be determined.
- For each player, the average score at depth 12, obtained from all available positions of that player, served as the best possible approximation of $S_M$.
- The biases and the variances of each player were observed at each search depth up to 11.
- Once again, the 100 subsets were used.

XIV Vladimir Kramnik, 2000 -
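The empirical estimates described in this experiment can be sketched for one player at one depth. The bias is the mean subset score minus the reference score, where the reference is the depth-12 score on all of the player's positions. The variance is the sample variance of the subset scores. A second helper measures how much the biases differ across players at one depth. The function names, the use of `variance` and `pstdev`, and the numbers are assumptions for illustration, not the study's code or data.

```python
from statistics import fmean, pstdev, variance

def bias_and_variance(subset_scores, reference):
    """Empirical bias and variance of one player's score at one depth.

    subset_scores: the player's scores at that depth on the subsets.
    reference: the player's depth-12 score on all available positions,
               standing in for the unknown real score.
    """
    bias = fmean(subset_scores) - reference
    return bias, variance(subset_scores)

def bias_spread_over_players(biases):
    """Std of the per-player biases at one depth; near zero means the depth
    is (approximately) equally biased for every player."""
    return pstdev(biases)

# Two hypothetical players with the same bias but different score levels.
b1, v1 = bias_and_variance([0.12, 0.14, 0.13], 0.10)
b2, v2 = bias_and_variance([0.20, 0.22, 0.21], 0.18)
print(b1, v1, bias_spread_over_players([b1, b2]))
```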

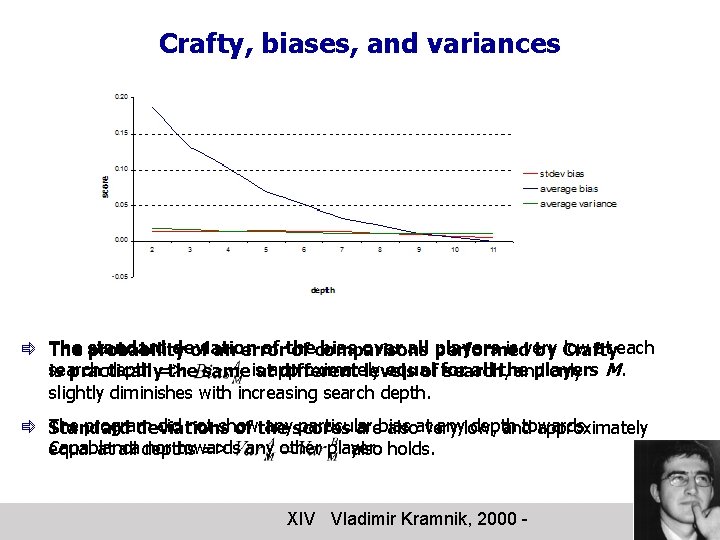

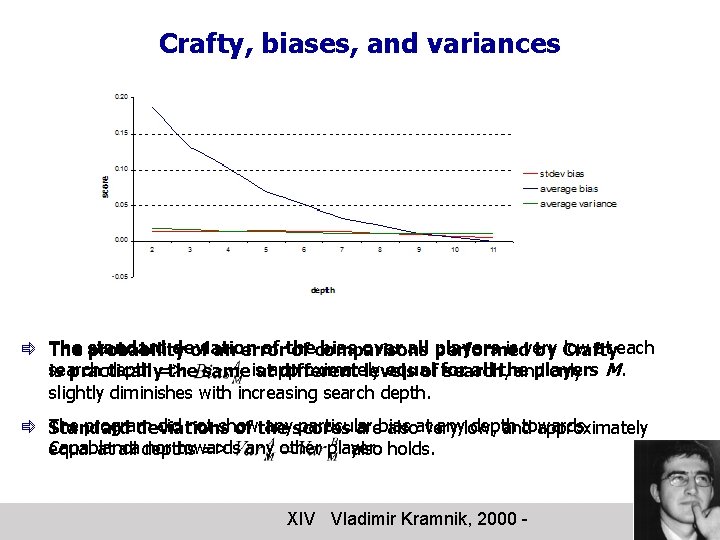

Crafty, biases, and variances
- The standard deviation of the bias over all players is very low at each search depth => $\mathrm{Bias}_M^A$ is approximately equal for all the players M.
- The program did not show any particular bias at any depth towards Capablanca, nor towards any other player.
- The standard deviations of the scores are also very low and approximately equal at all depths => $\mathrm{Var}_M^A \approx \mathrm{Var}_M^B$ also holds.
- The probability of an error in comparisons performed by Crafty is practically the same at different levels of search, and only slightly diminishes with increasing search depth.

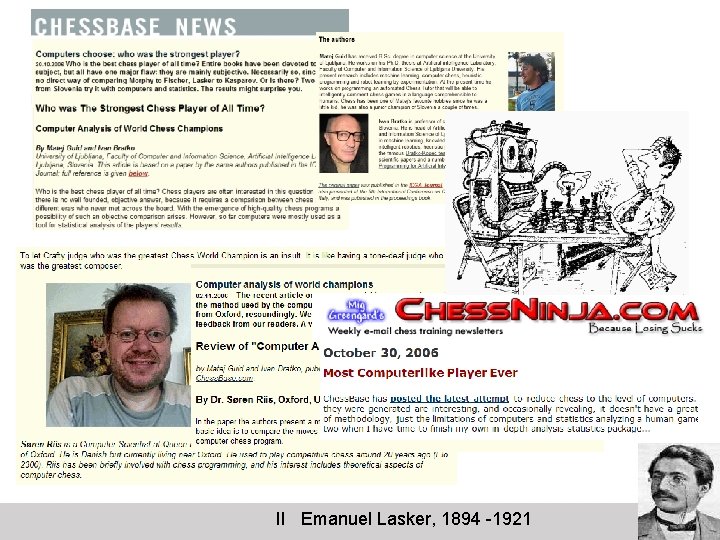

Conclusion
- In this study we analysed how trustworthy the rankings of chess champions produced by computer analysis with the program Crafty are.
- Our study shows that, at least for the players whose scores differ enough from the others, the ranking is not very likely to change if:
  - a stronger chess program were used,
  - the program searched deeper, or
  - larger sets of positions were available for the analysis.
- The rankings are surprisingly stable:
  - over a large interval of search depths, and
  - over a large variation of sample positions.
- It is particularly surprising that even an extremely shallow search of just two or three plies enables reasonable rankings.
