ATLAS Data Challenge on NorduGrid, CHEP 2003

- Slides: 23

ATLAS Data Challenge on NorduGrid
CHEP 2003 – UCSD
Anders Wäänänen, waananen@nbi.dk

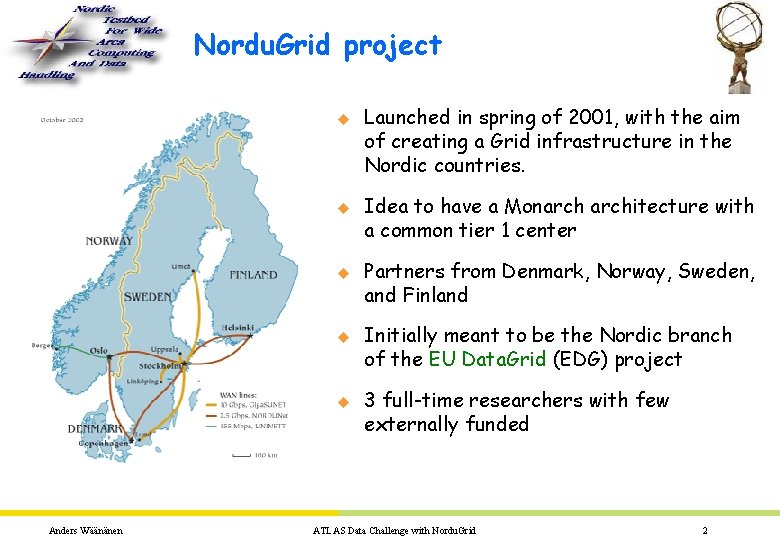

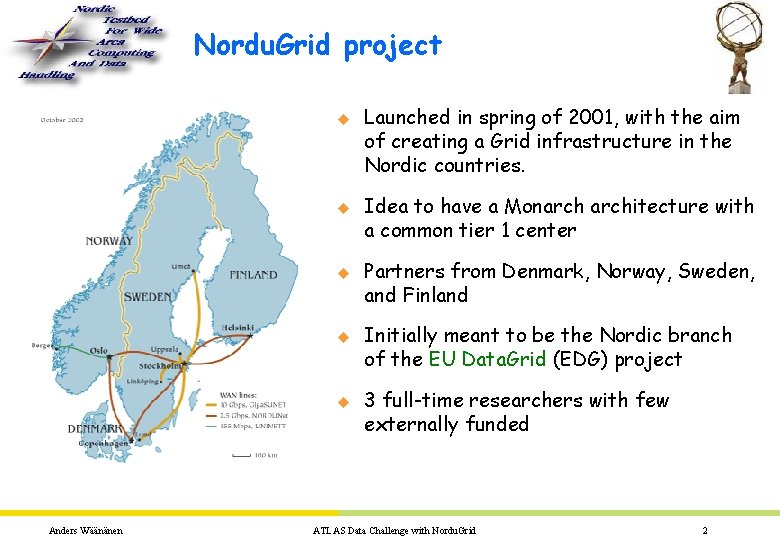

NorduGrid project

- Launched in spring 2001 with the aim of creating a Grid infrastructure in the Nordic countries
- Idea: a MONARC architecture with a common Tier-1 center
- Partners from Denmark, Norway, Sweden, and Finland
- Initially meant to be the Nordic branch of the EU DataGrid (EDG) project
- 3 full-time researchers, with a few externally funded

Anders Wäänänen, ATLAS Data Challenge with NorduGrid

Motivations

- NorduGrid was initially meant to be a pure deployment project
- One goal was to have the ATLAS Data Challenge run by May 2002
- Should be based on the Globus Toolkit™
- Available Grid middleware:
  - The Globus Toolkit™
    - A toolbox, not a complete solution
  - European DataGrid software
    - Not mature for production at the beginning of 2002
    - Architecture problems

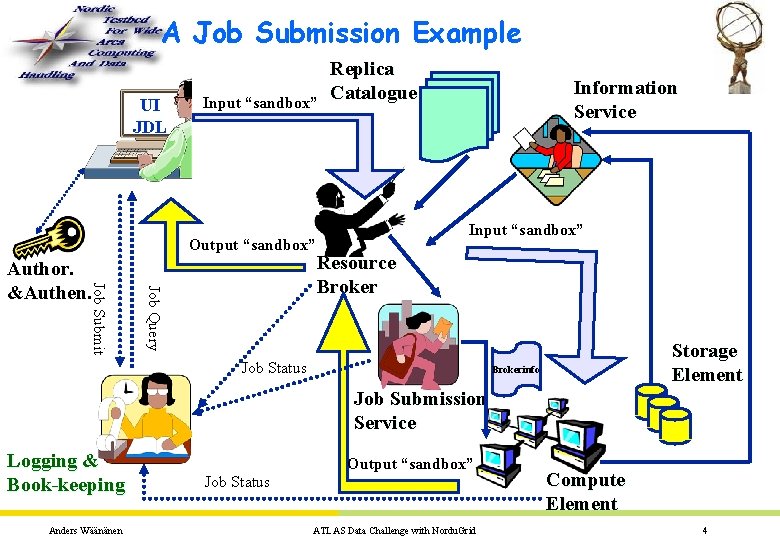

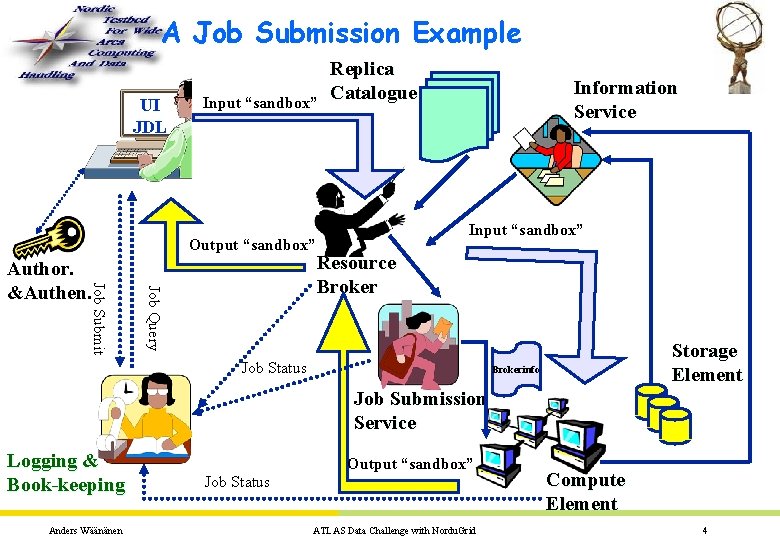

A Job Submission Example

(Figure: job flow from the UI, which submits a JDL job description with an input "sandbox", through Authorization & Authentication, the Resource Broker (consulting the Information Service and Replica Catalogue), the Job Submission Service and Logging & Book-keeping, to a Compute Element with Brokerinfo and a Storage Element; job status and the output "sandbox" are returned to the UI.)

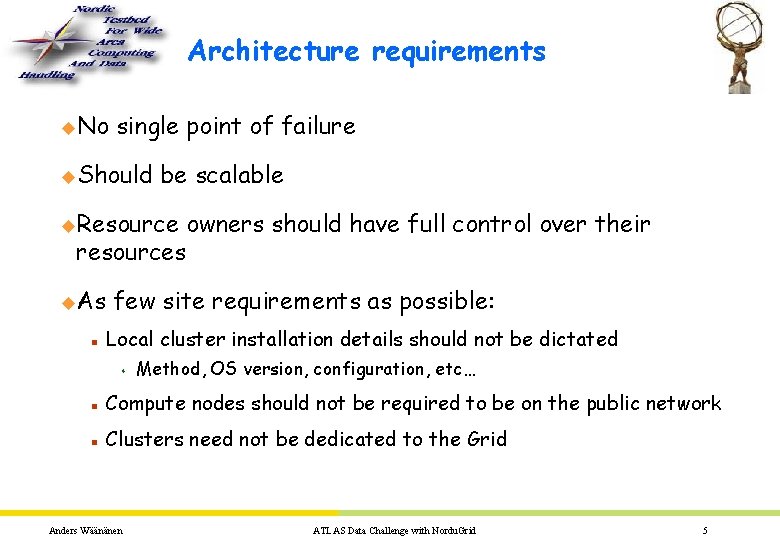

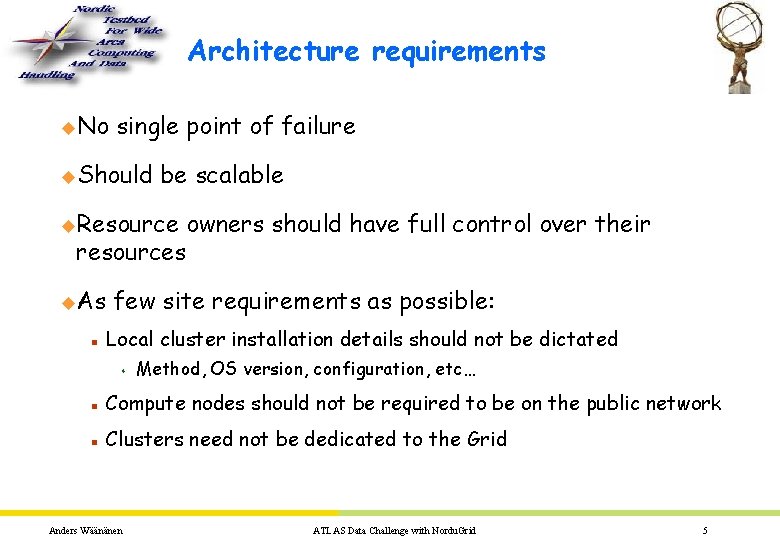

Architecture requirements

- No single point of failure
- Should be scalable
- Resource owners should have full control over their resources
- As few site requirements as possible:
  - Local cluster installation details should not be dictated (method, OS version, configuration, etc.)
  - Compute nodes should not be required to be on the public network
  - Clusters need not be dedicated to the Grid

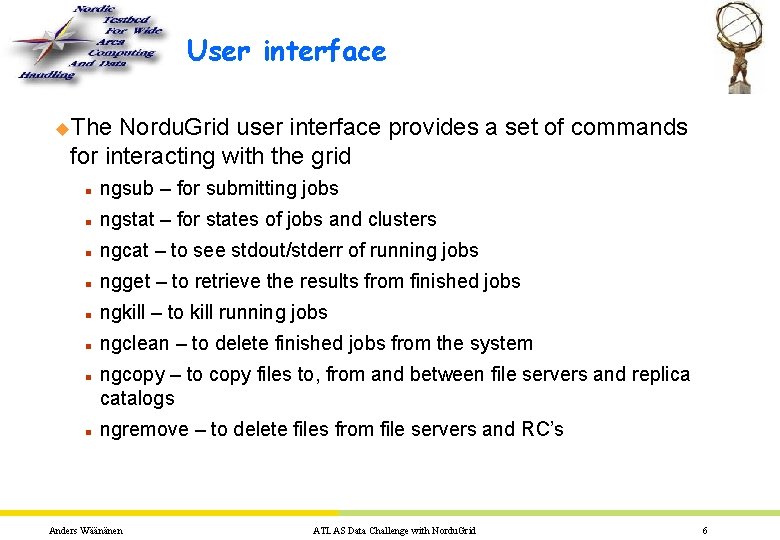

User interface

The NorduGrid user interface provides a set of commands for interacting with the grid:

- ngsub: submit jobs
- ngstat: show the states of jobs and clusters
- ngcat: see stdout/stderr of running jobs
- ngget: retrieve the results of finished jobs
- ngkill: kill running jobs
- ngclean: delete finished jobs from the system
- ngcopy: copy files to, from and between file servers and replica catalogs
- ngremove: delete files from file servers and RCs
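A typical job lifecycle with these commands is: submit with ngsub, poll with ngstat, fetch results with ngget, then ngclean. The Python sketch below only assembles command lines and runs nothing. The dictionary keys, helper names and the example job ID are hypothetical, and the assumption that the job-level commands accept job IDs as positional arguments is ours, not the slide's. ngcopy and ngremove work on files rather than jobs, so they are left out.

```python
import shlex

# Job-level commands from the user-interface slide. That each one takes job
# IDs as positional arguments is an assumption for this sketch.
JOB_COMMANDS = {
    "status": "ngstat",  # states of jobs (and clusters)
    "cat": "ngcat",      # stdout/stderr of a running job
    "get": "ngget",      # retrieve results of a finished job
    "kill": "ngkill",    # kill a running job
    "clean": "ngclean",  # delete a finished job from the system
}


def submit_command(xrsl: str) -> list:
    """Argv for submitting an inline xRSL job description with ngsub."""
    return ["ngsub", xrsl]


def job_command(action: str, *job_ids: str) -> list:
    """Argv for a job-level command; builds the list but does not run it."""
    if action not in JOB_COMMANDS:
        raise ValueError(f"unknown action: {action!r}")
    if not job_ids:
        raise ValueError("at least one job ID is required")
    return [JOB_COMMANDS[action], *job_ids]


if __name__ == "__main__":
    # Hypothetical job ID, for illustration only.
    job_id = "gsiftp://cluster.example.org:2811/jobs/1234567890"
    print(shlex.join(submit_command(
        '&(executable=/bin/echo)(arguments="Hello World")')))
    for action in ("status", "get", "clean"):
        print(shlex.join(job_command(action, job_id)))
```

Printing through `shlex.join` gives shell-safe quoting, so the submit line comes out in the same single-quoted form used in the quick-start example.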

ATLAS Data Challenges

- A series of computing challenges within ATLAS of increasing size and complexity
- Preparing for data-taking and analysis at the LHC
- Thorough validation of the complete ATLAS software suite
- Introduction and use of Grid middleware as fast and as much as possible

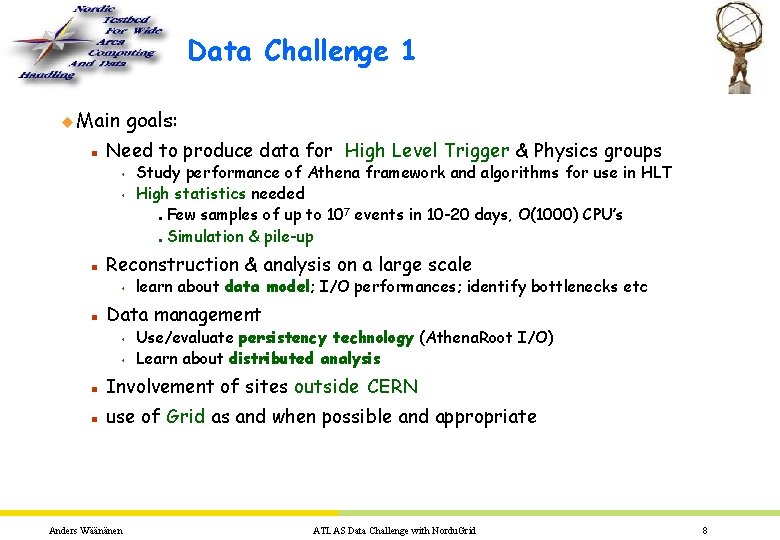

Data Challenge 1

- Main goals:
  - Need to produce data for High Level Trigger & Physics groups
    - Study performance of the Athena framework and algorithms for use in HLT
    - High statistics needed
    - Few samples of up to 10^7 events in 10-20 days, O(1000) CPUs
  - Simulation & pile-up
  - Reconstruction & analysis on a large scale
    - Learn about the data model and I/O performance; identify bottlenecks, etc.
  - Data management
    - Use/evaluate persistency technology (AthenaRoot I/O)
    - Learn about distributed analysis
  - Involvement of sites outside CERN
  - Use of Grid as and when possible and appropriate

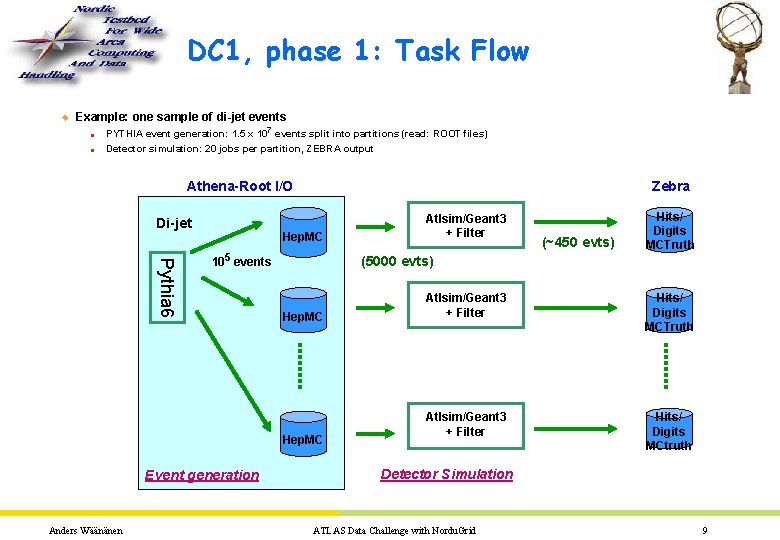

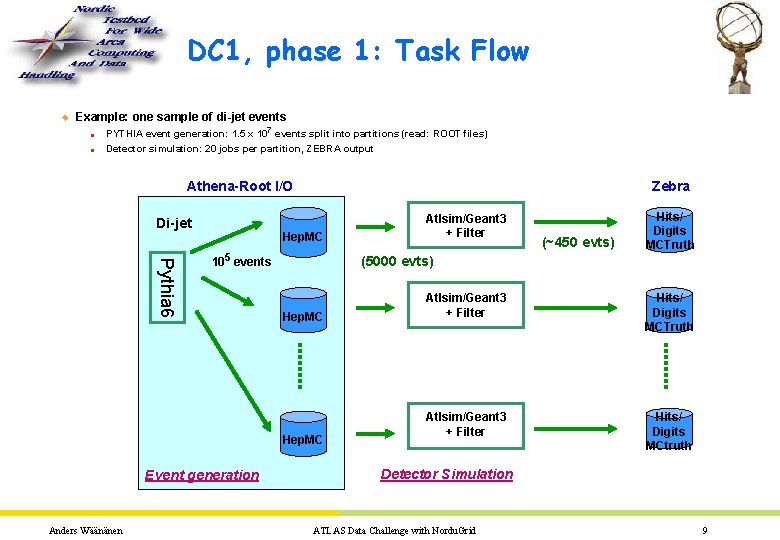

DC1, phase 1: Task Flow

- Example: one sample of di-jet events
  - PYTHIA event generation: 1.5 × 10^7 events split into partitions (read: ROOT files)
  - Detector simulation: 20 jobs per partition, ZEBRA output

(Figure: Event generation with Pythia 6, 10^5 di-jet events per partition, written as HepMC via Athena-Root I/O; Detector Simulation with Atlsim/Geant3 + Filter, each job reading 5000 events and writing ~450 events of Hits/Digits and MCTruth in Zebra format.)
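The task-flow numbers can be checked against each other with a little arithmetic. This sketch reads the figure as 10^5 events per partition, 5000 events read per simulation job and about 450 kept after the filter; it assumes every partition is full and every job behaves alike, so the simulated-event total is only an estimate.

```python
# Worked arithmetic for one DC1 phase 1 di-jet sample, using the figures on
# the task-flow slide. Assumes every partition is full and every simulation
# job reads 5000 events and keeps about 450 after the filter.
TOTAL_GENERATED = 15_000_000    # 1.5 x 10^7 PYTHIA events
EVENTS_PER_PARTITION = 100_000  # 10^5 events per partition (one ROOT file)
EVENTS_PER_SIM_JOB = 5_000      # events read by one Atlsim/Geant3 job
EVENTS_KEPT_PER_JOB = 450       # "~450 evts" written after the filter

partitions = TOTAL_GENERATED // EVENTS_PER_PARTITION              # 150
jobs_per_partition = EVENTS_PER_PARTITION // EVENTS_PER_SIM_JOB   # 20, as on the slide
total_sim_jobs = partitions * jobs_per_partition                  # 3000
filter_efficiency = EVENTS_KEPT_PER_JOB / EVENTS_PER_SIM_JOB      # 0.09
simulated_events = total_sim_jobs * EVENTS_KEPT_PER_JOB           # 1,350,000

if __name__ == "__main__":
    print(f"partitions:          {partitions}")
    print(f"jobs per partition:  {jobs_per_partition}")
    print(f"simulation jobs:     {total_sim_jobs}")
    print(f"filter efficiency:   {filter_efficiency:.0%}")
    print(f"simulated events:    {simulated_events:,}")
```

The 20 jobs per partition quoted on the slide falls out directly from 10^5 / 5000, which is why the figure is read this way.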

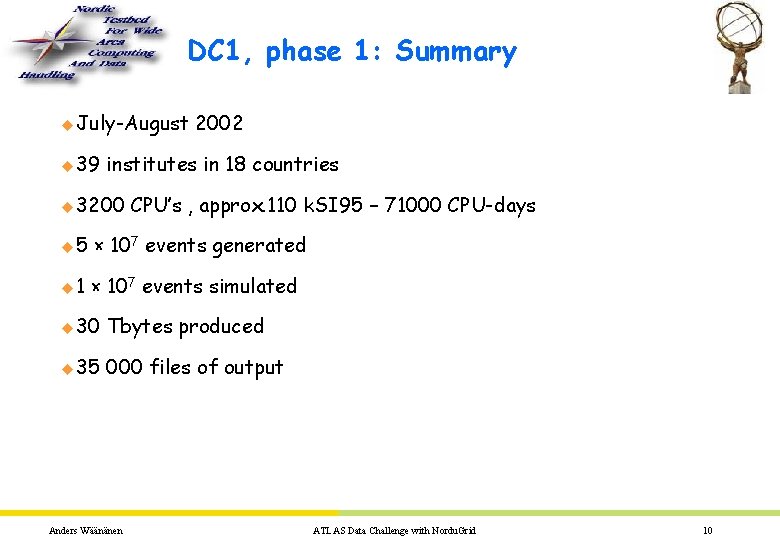

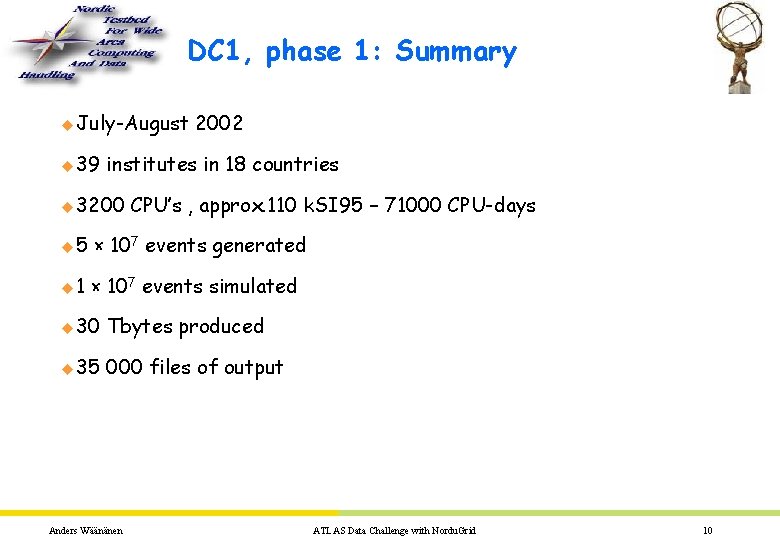

DC1, phase 1: Summary

- July-August 2002
- 39 institutes in 18 countries
- 3200 CPUs, approx. 110 kSI95, 71 000 CPU-days
- 5 × 10^7 events generated
- 1 × 10^7 events simulated
- 30 TB produced
- 35 000 output files
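Dividing the summary totals gives a rough sense of scale. This sketch assumes decimal units (1 TB = 1000 GB) and treats the approximate CPU-day figure as exact; the results are averages, not measured per-file or per-day values.

```python
# Average figures derived from the DC1 phase 1 summary slide.
# Assumes decimal units (1 TB = 1000 GB) and that the quoted (approximate)
# CPU-days cover all of phase 1.
CPUS = 3200
CPU_DAYS = 71_000
OUTPUT_TB = 30
OUTPUT_FILES = 35_000

# Days of work if all CPUs had been busy continuously.
full_occupancy_days = CPU_DAYS / CPUS                  # 22.1875
# Average size of one output file in GB.
avg_file_gb = OUTPUT_TB * 1000 / OUTPUT_FILES          # about 0.86

if __name__ == "__main__":
    print(f"full-occupancy days: {full_occupancy_days:.1f}")
    print(f"average file size:   {avg_file_gb:.2f} GB")
```

About three weeks of full occupancy, spread over the July-August period, and output files averaging a little under 1 GB.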

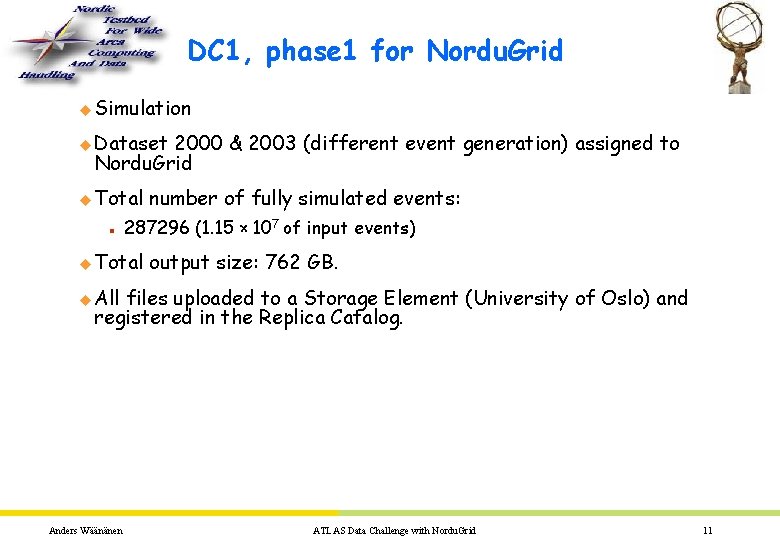

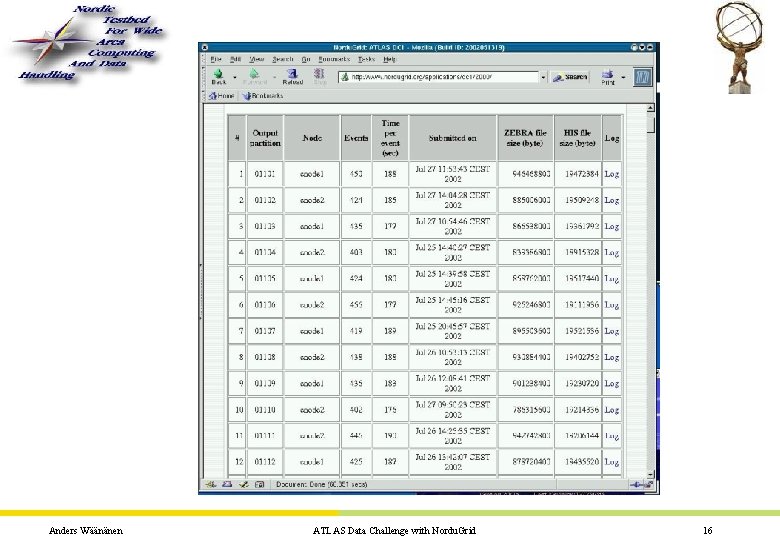

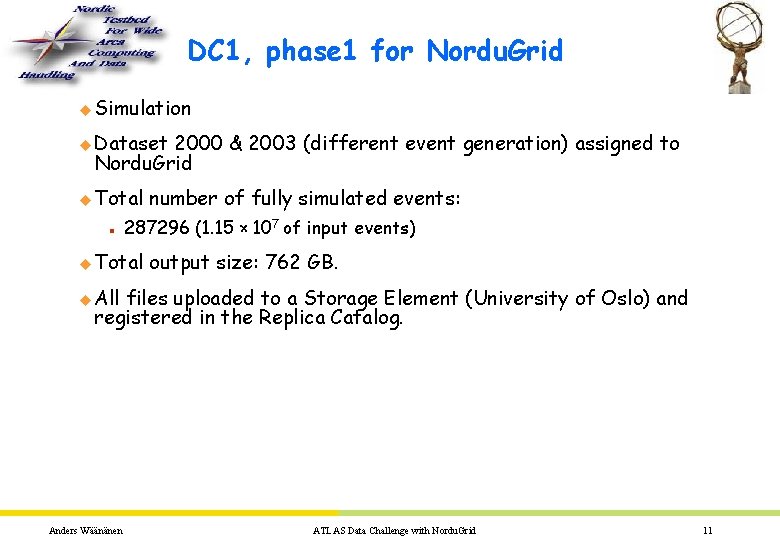

DC1, phase 1 for NorduGrid

- Simulation
  - Datasets 2000 & 2003 (different event generation) assigned to NorduGrid
  - Total number of fully simulated events: 287 296 (1.15 × 10^7 input events)
  - Total output size: 762 GB
  - All files uploaded to a Storage Element (University of Oslo) and registered in the Replica Catalog

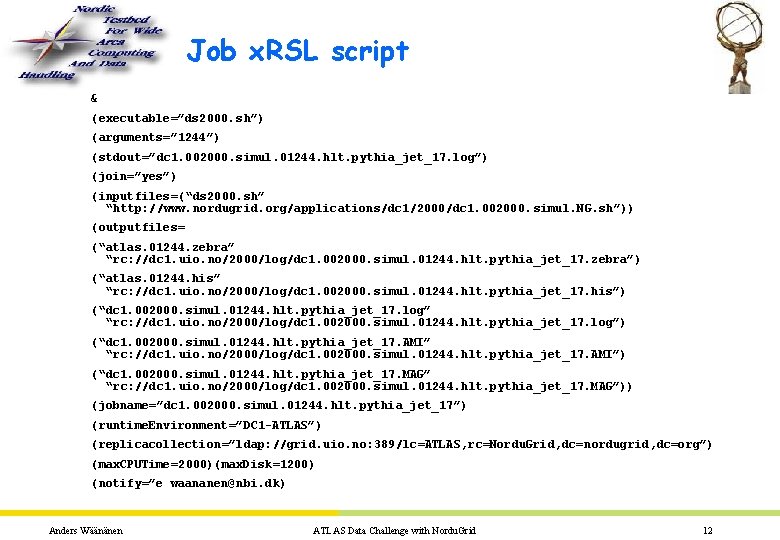

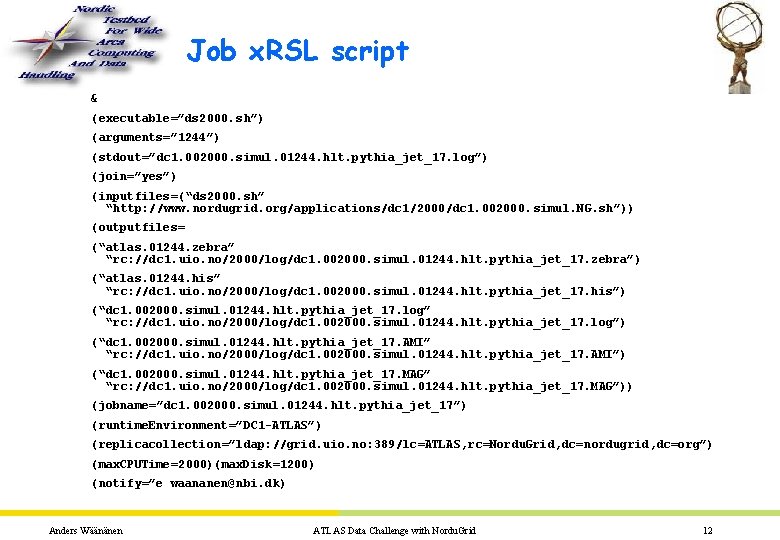

Job xRSL script

```
&
(executable="ds2000.sh")
(arguments="1244")
(stdout="dc1.002000.simul.01244.hlt.pythia_jet_17.log")
(join="yes")
(inputfiles=
  ("ds2000.sh" "http://www.nordugrid.org/applications/dc1/2000/dc1.002000.simul.NG.sh"))
(outputfiles=
  ("atlas.01244.zebra" "rc://dc1.uio.no/2000/log/dc1.002000.simul.01244.hlt.pythia_jet_17.zebra")
  ("atlas.01244.his" "rc://dc1.uio.no/2000/log/dc1.002000.simul.01244.hlt.pythia_jet_17.his")
  ("dc1.002000.simul.01244.hlt.pythia_jet_17.log" "rc://dc1.uio.no/2000/log/dc1.002000.simul.01244.hlt.pythia_jet_17.log")
  ("dc1.002000.simul.01244.hlt.pythia_jet_17.AMI" "rc://dc1.uio.no/2000/log/dc1.002000.simul.01244.hlt.pythia_jet_17.AMI")
  ("dc1.002000.simul.01244.hlt.pythia_jet_17.MAG" "rc://dc1.uio.no/2000/log/dc1.002000.simul.01244.hlt.pythia_jet_17.MAG"))
(jobname="dc1.002000.simul.01244.hlt.pythia_jet_17")
(runTimeEnvironment="DC1-ATLAS")
(replicaCollection="ldap://grid.uio.no:389/lc=ATLAS, rc=NorduGrid, dc=nordugrid, dc=org")
(maxCPUTime=2000)(maxDisk=1200)
(notify="e waananen@nbi.dk")
```
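Since only the partition number changes between jobs of a dataset, the xRSL lends itself to being generated. The following Python sketch is hypothetical (the function names are ours) and is modelled on the single partition-1244 example; the padding rule (5 digits in file names, none in `arguments`) is inferred from that one case, and the destination prefix is copied exactly as shown.

```python
# Hypothetical generator for DC1 dataset 2000 job descriptions, modelled on
# the single xRSL example. The zero-padding rule (5 digits in file names,
# none in "arguments") is inferred from partition 1244 only.
DEST = "rc://dc1.uio.no/2000/log/"  # destination prefix exactly as on the slide
SCRIPT_URL = ("http://www.nordugrid.org/applications/dc1/2000/"
              "dc1.002000.simul.NG.sh")
REPLICA_COLLECTION = ("ldap://grid.uio.no:389/"
                      "lc=ATLAS, rc=NorduGrid, dc=nordugrid, dc=org")


def q(value: str) -> str:
    """Quote an xRSL string value (the values used here contain no quotes)."""
    return f'"{value}"'


def dc1_xrsl(partition: int, email: str = "waananen@nbi.dk") -> str:
    """Build the xRSL text for one dataset-2000 simulation partition."""
    base = f"dc1.002000.simul.{partition:05d}.hlt.pythia_jet_17"
    # (local file name, remote name under DEST) for each output file
    outputs = [
        (f"atlas.{partition:05d}.zebra", f"{base}.zebra"),
        (f"atlas.{partition:05d}.his", f"{base}.his"),
        (f"{base}.log", f"{base}.log"),
        (f"{base}.AMI", f"{base}.AMI"),
        (f"{base}.MAG", f"{base}.MAG"),
    ]
    output_pairs = "".join(f"({q(local)} {q(DEST + remote)})"
                           for local, remote in outputs)
    return "".join([
        "&",
        '(executable="ds2000.sh")',
        f"(arguments={q(str(partition))})",
        f"(stdout={q(base + '.log')})",
        '(join="yes")',
        f'(inputfiles=("ds2000.sh" {q(SCRIPT_URL)}))',
        f"(outputfiles={output_pairs})",
        f"(jobname={q(base)})",
        '(runTimeEnvironment="DC1-ATLAS")',
        f"(replicaCollection={q(REPLICA_COLLECTION)})",
        "(maxCPUTime=2000)(maxDisk=1200)",
        f"(notify={q('e ' + email)})",
    ])


if __name__ == "__main__":
    print(dc1_xrsl(1244))
```

Generating the text from one function keeps the five output-file names consistent with the job name, which is the part most prone to copy-and-paste errors when written by hand.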

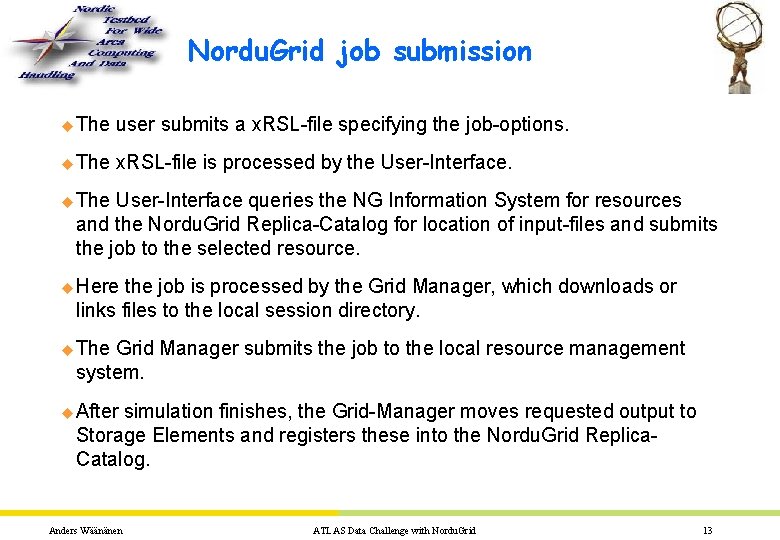

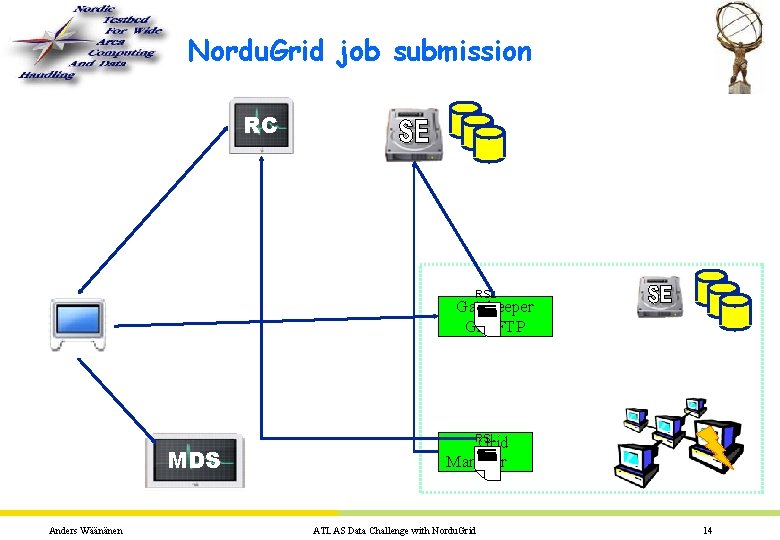

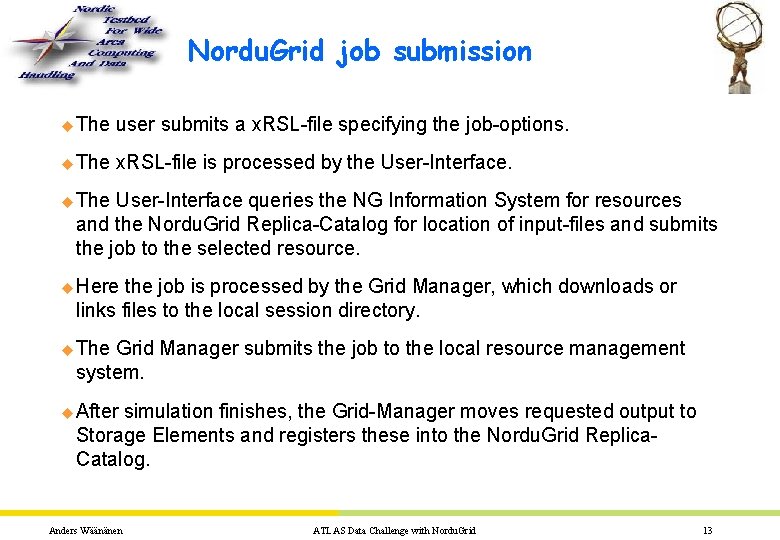

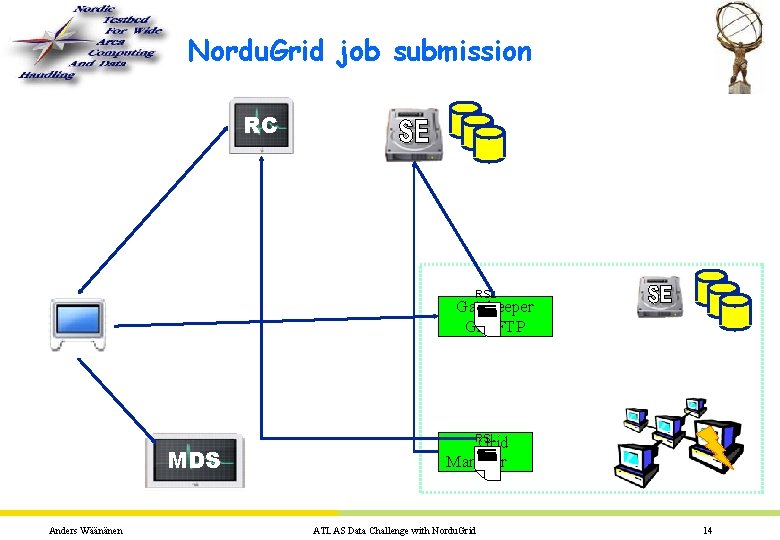

NorduGrid job submission

- The user submits an xRSL file specifying the job options.
- The xRSL file is processed by the User Interface.
- The User Interface queries the NorduGrid Information System for resources and the NorduGrid Replica Catalog for the location of input files, and submits the job to the selected resource.
- There the job is processed by the Grid Manager, which downloads or links files to the local session directory.
- The Grid Manager submits the job to the local resource management system.
- After the simulation finishes, the Grid Manager moves the requested output to Storage Elements and registers it in the NorduGrid Replica Catalog.
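The User Interface step (query the information system for resources, query the Replica Catalog for inputs, then pick a target) can be sketched in Python. This is an illustrative model only: the `Cluster` fields, the "most free CPUs" heuristic and the dictionary standing in for the Replica Catalog are assumptions, not the actual NorduGrid brokering algorithm, and the host names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    """Hypothetical summary of one cluster as seen in the information system."""
    name: str
    free_cpus: int
    runtime_envs: frozenset


def select_cluster(clusters, required_env):
    """Return the cluster with the most free CPUs that offers required_env, or None.

    Illustrative heuristic only, not the User Interface's real algorithm.
    """
    candidates = [
        c for c in clusters
        if required_env in c.runtime_envs and c.free_cpus > 0
    ]
    return max(candidates, key=lambda c: c.free_cpus, default=None)


def resolve_inputs(catalog, logical_names):
    """Look up one physical URL per logical file name in a replica-catalog dict.

    catalog maps a logical name to a list of replica URLs; the first replica
    is taken. Raises LookupError if any input has no replica.
    """
    missing = [name for name in logical_names if not catalog.get(name)]
    if missing:
        raise LookupError(f"no replica registered for: {', '.join(missing)}")
    return {name: catalog[name][0] for name in logical_names}


def plan_submission(clusters, catalog, required_env, logical_inputs):
    """Combine the two queries: pick a target cluster and locate the inputs."""
    target = select_cluster(clusters, required_env)
    if target is None:
        raise LookupError(f"no cluster offers {required_env!r}")
    return target.name, resolve_inputs(catalog, logical_inputs)


if __name__ == "__main__":
    clusters = [
        Cluster("cluster-a.example.org", 12, frozenset({"DC1-ATLAS"})),
        Cluster("cluster-b.example.org", 40, frozenset()),
        Cluster("cluster-c.example.org", 25, frozenset({"DC1-ATLAS"})),
    ]
    catalog = {"input.zebra": ["gsiftp://se.example.org/data/input.zebra"]}
    print(plan_submission(clusters, catalog, "DC1-ATLAS", ["input.zebra"]))
```

Filtering on the runtime environment first mirrors the `runTimeEnvironment="DC1-ATLAS"` requirement in the job description: a cluster without the ATLAS software installed is never a candidate, however idle it is.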

NorduGrid job submission

(Figure: job submission path showing the RSL job description, RC, MDS, Gatekeeper, GridFTP and Grid Manager.)

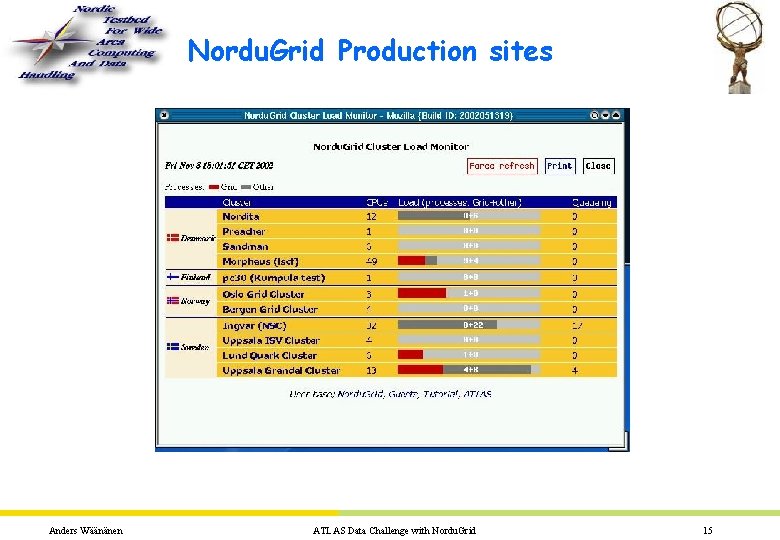

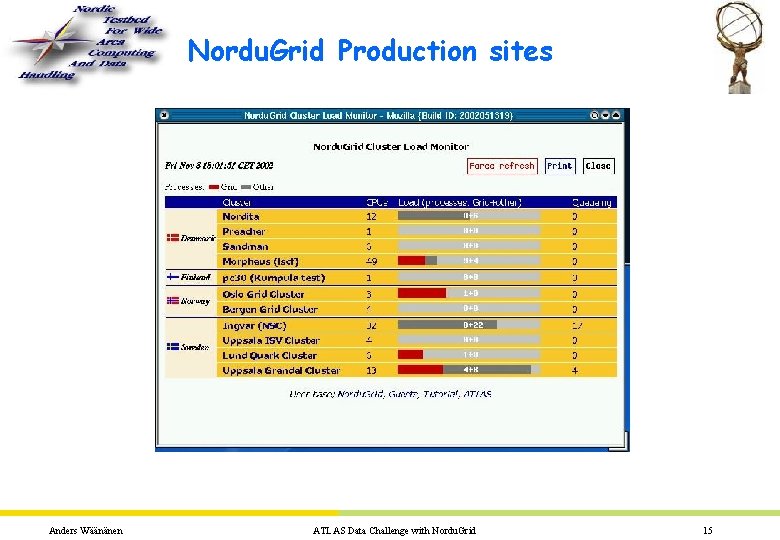

NorduGrid production sites

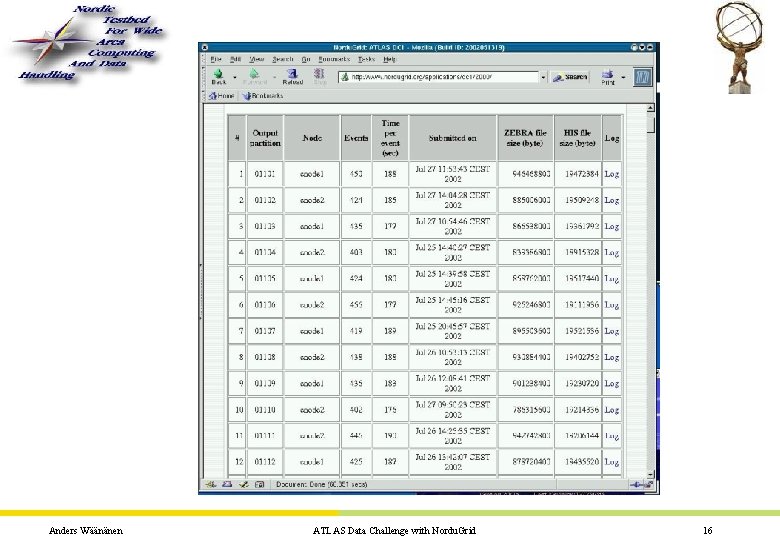


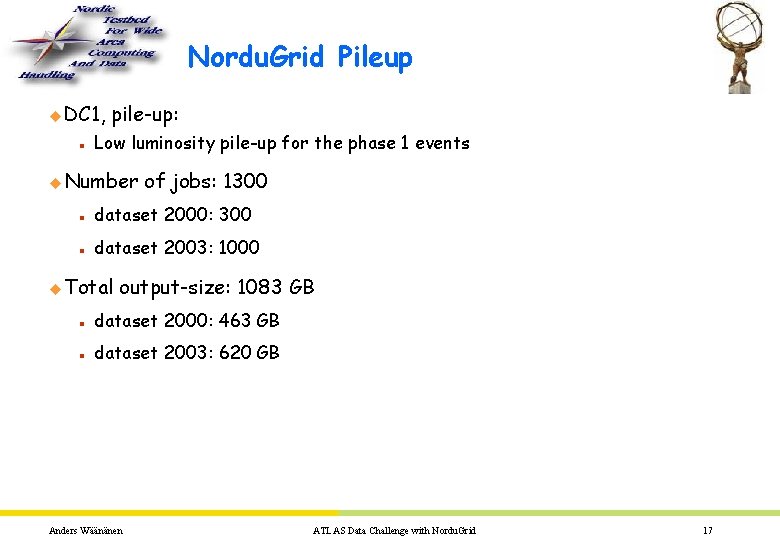

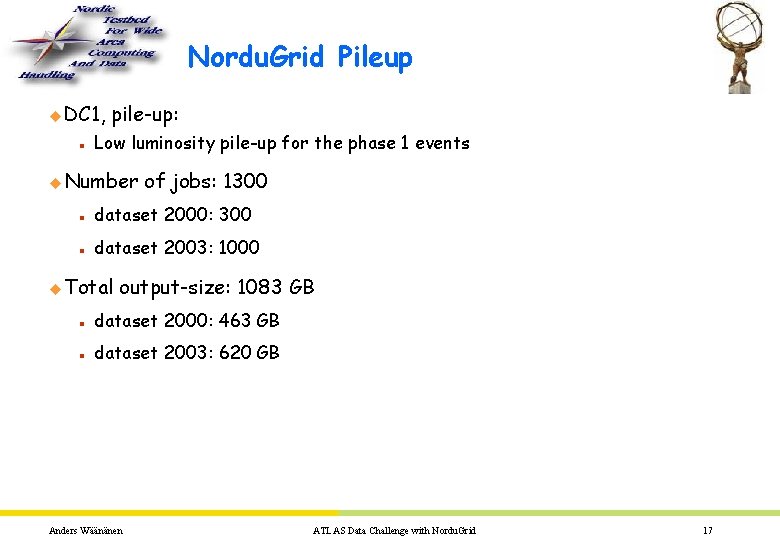

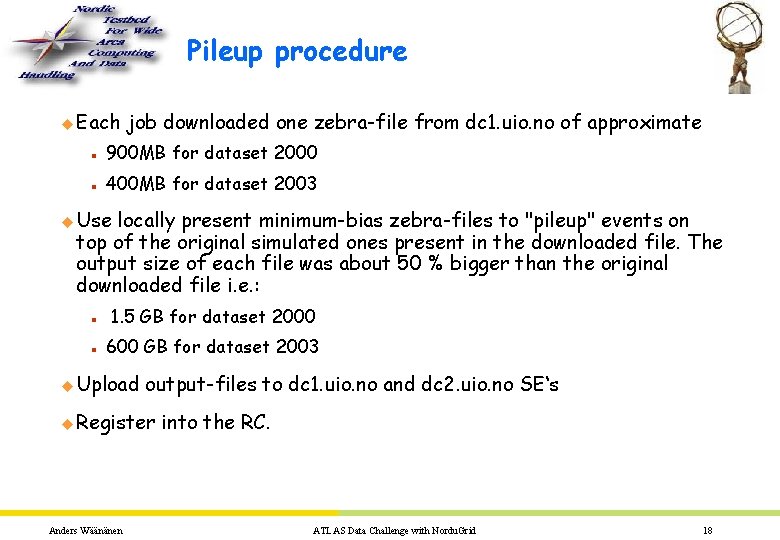

NorduGrid pile-up

- DC1 pile-up: low-luminosity pile-up for the phase 1 events
- Number of jobs: 1300
  - dataset 2000: 300
  - dataset 2003: 1000
- Total output size: 1083 GB
  - dataset 2000: 463 GB
  - dataset 2003: 620 GB

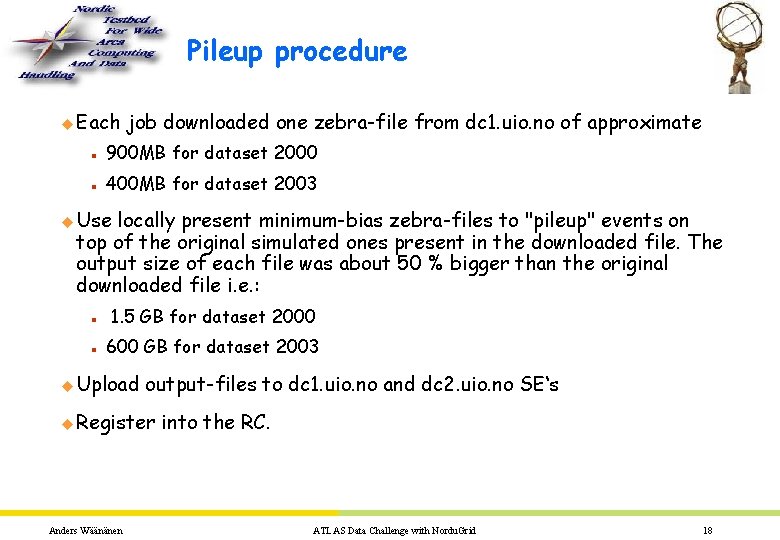

Pile-up procedure

- Each job downloaded one Zebra file from dc1.uio.no of approximately:
  - 900 MB for dataset 2000
  - 400 MB for dataset 2003
- Use locally present minimum-bias Zebra files to "pile up" events on top of the original simulated ones in the downloaded file. The output file was about 50% bigger than the original downloaded file, i.e.:
  - 1.5 GB for dataset 2000
  - 600 MB for dataset 2003
- Upload the output files to the dc1.uio.no and dc2.uio.no SEs
- Register them in the RC
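The per-job sizes on this slide can be cross-checked against the dataset totals from the pile-up summary. This sketch assumes decimal units (1 GB = 1000 MB), one output file per job, and models "about 50% bigger" as a factor of 1.5.

```python
# Cross-check of the pile-up sizes quoted on the two pile-up slides.
# Assumes decimal units (1 GB = 1000 MB) and that each job produced one
# output file; "about 50 % bigger" is modelled as a factor of 1.5.
INPUT_MB = {"2000": 900, "2003": 400}        # downloaded Zebra file per job
GROWTH = 1.5                                  # output about 50 % bigger
JOBS = {"2000": 300, "2003": 1000}            # pile-up jobs per dataset
TOTAL_OUTPUT_GB = {"2000": 463, "2003": 620}  # total pile-up output


def expected_output_mb(dataset: str) -> float:
    """Per-job output size predicted from the input size and growth factor."""
    return INPUT_MB[dataset] * GROWTH


def average_output_mb(dataset: str) -> float:
    """Per-job output size implied by the dataset totals."""
    return TOTAL_OUTPUT_GB[dataset] * 1000 / JOBS[dataset]


if __name__ == "__main__":
    for ds in ("2000", "2003"):
        print(f"dataset {ds}: expected {expected_output_mb(ds):.0f} MB, "
              f"average {average_output_mb(ds):.0f} MB")
```

Dataset 2003 comes out at roughly 600 MB per job both ways, while dataset 2000 averaged about 1.5 GB per job, i.e. growth closer to 70% than 50% for that dataset.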

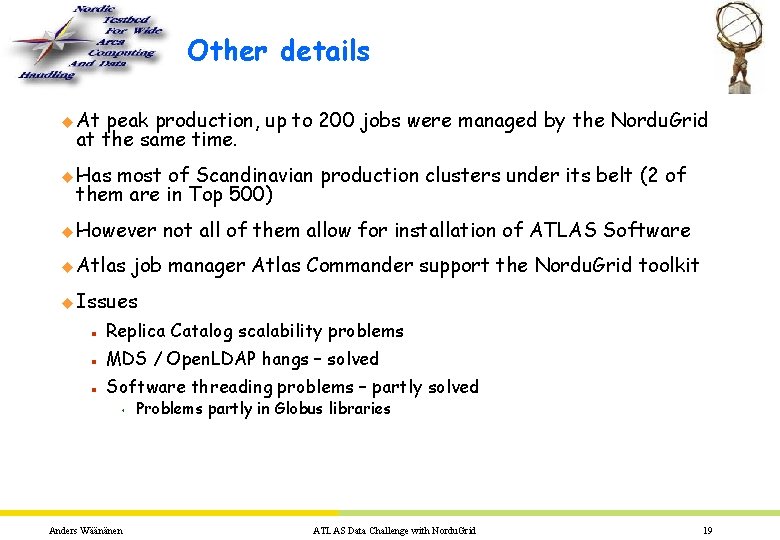

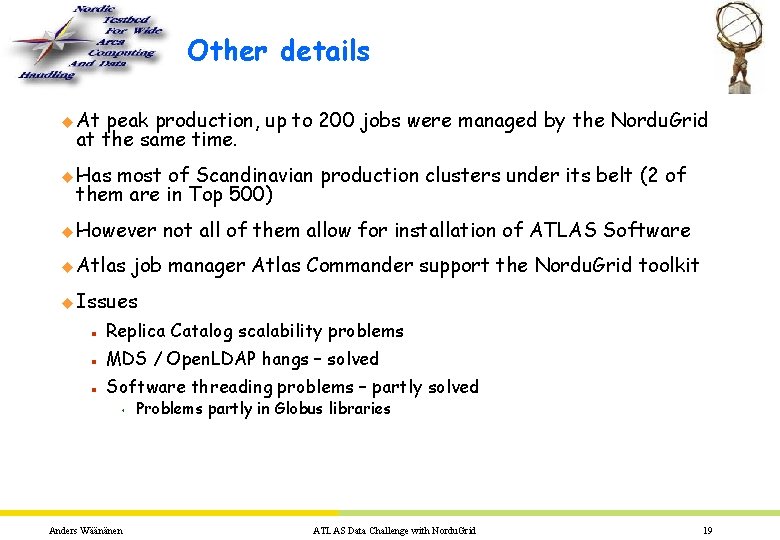

Other details

- At peak production, up to 200 jobs were managed by NorduGrid at the same time.
- NorduGrid has most of the Scandinavian production clusters under its belt (2 of them are in the Top 500)
  - However, not all of them allow for installation of ATLAS software
- The ATLAS job manager Atlas Commander supports the NorduGrid toolkit
- Issues:
  - Replica Catalog scalability problems
  - MDS / OpenLDAP hangs: solved
  - Software threading problems: partly solved
    - Problems partly in Globus libraries

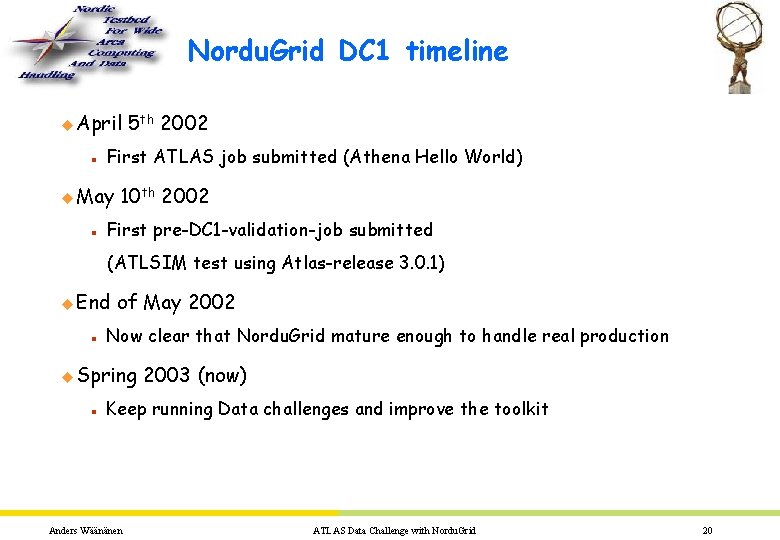

NorduGrid DC1 timeline

- April 5th, 2002: first ATLAS job submitted (Athena Hello World)
- May 10th, 2002: first pre-DC1 validation job submitted (ATLSIM test using ATLAS release 3.0.1)
- End of May 2002: now clear that NorduGrid is mature enough to handle real production
- Spring 2003 (now): keep running Data Challenges and improve the toolkit

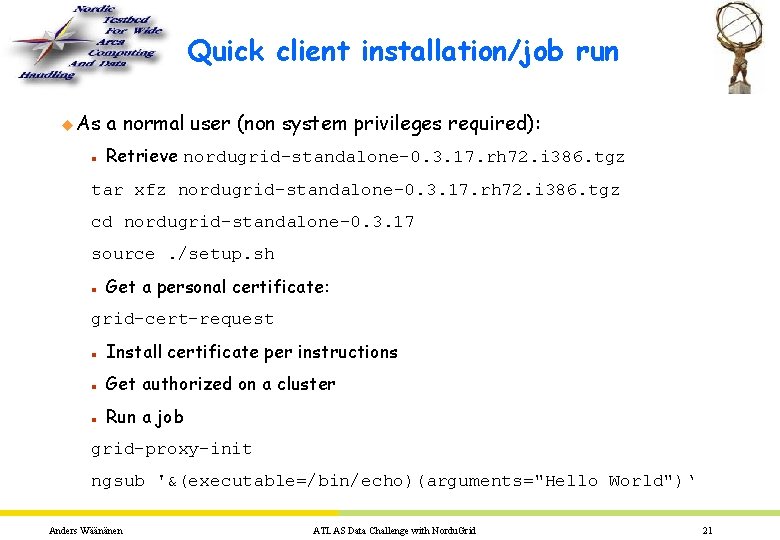

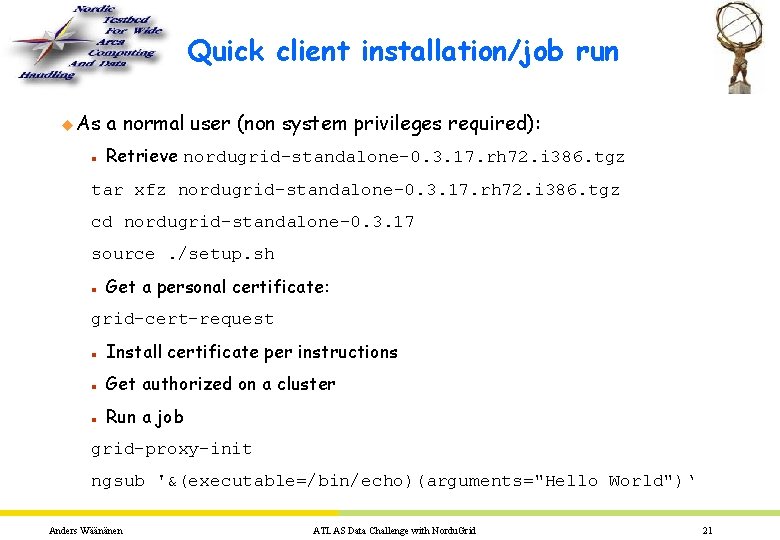

Quick client installation/job run

- As a normal user (no system privileges required), retrieve nordugrid-standalone-0.3.17.rh72.i386.tgz and set up the client:

```sh
tar xfz nordugrid-standalone-0.3.17.rh72.i386.tgz
cd nordugrid-standalone-0.3.17
source ./setup.sh
```

- Get a personal certificate: grid-cert-request
- Install the certificate per the instructions
- Get authorized on a cluster
- Run a job:

```sh
grid-proxy-init
ngsub '&(executable=/bin/echo)(arguments="Hello World")'
```

Resources

- Documentation
  - Main web site: http://www.nordugrid.org/
  - ATLAS DC1 with NorduGrid: http://www.nordugrid.org/applications/dc1/
- Software repository
  - Software and source code are available for download: ftp://ftp.nordugrid.org/pub/nordugrid/

The NorduGrid core group

- Александр Константинов
- Balázs Kónya
- Mattias Ellert
- Оксана Смирнова
- Jakob Langgaard Nielsen
- Trond Myklebust
- Anders Wäänänen