18-742 Spring 2011 Parallel Computer Architecture Lecture


18-742 Spring 2011 Parallel Computer Architecture
Lecture 2: Basics and Intro to Multiprocessors
Prof. Onur Mutlu
Carnegie Mellon University

Announcements
- Quiz 0
- Project topics
  - Some ideas will be out
  - Talk with Chris Craik and me
  - However, you should do the literature search and come up with a concrete research project to advance the state of the art
    - You have already read many papers in 740/741, 447

Last Lecture
- Logistics
- Static vs. Dynamic Scheduling (brief overview)
- Dynamic Tasking and Task Queues

Readings for This Week
- Required: Enter reviews in the online system
  - Hill, Jouppi, Sohi, “Multiprocessors and Multicomputers,” pp. 551-560 in Readings in Computer Architecture.
  - Hill, Jouppi, Sohi, “Dataflow and Multithreading,” pp. 309-314 in Readings in Computer Architecture.
  - Levin and Redell, “How (and how not) to write a good systems paper,” OSR 1983.
- Recommended
  - Blumofe et al., “Cilk: an efficient multithreaded runtime system,” PPoPP 1995.
  - Historical: Mike Flynn, “Very High-Speed Computing Systems,” Proc. of IEEE, 1966.
  - Barroso et al., “Piranha: A Scalable Architecture Based on Single-Chip Multiprocessing,” ISCA 2000.
  - Culler & Singh, Chapter 1

How to Do the Paper Reviews
- Brief summary
  - What is the problem the paper is trying to solve?
  - What are the key ideas of the paper? Key insights?
  - What is the key contribution to literature at the time it was written?
  - What are the most important things you take out from it?
- Strengths (most important ones)
  - Does the paper solve the problem well?
- Weaknesses (most important ones)
  - This is where you should think critically. Every paper/idea has a weakness. This does not mean the paper is necessarily bad. It means there is room for improvement and future research can accomplish this.
- Can you do (much) better? Present your thoughts/ideas.
- What have you learned/enjoyed most in the paper? Why?
- Review should be short and concise (~half a page or shorter)

Research Project
- Goals:
  - Develop novel ideas to solve an important problem
  - Rigorously evaluate the benefits of the ideas
- The problem and ideas need to be concrete
- You should be doing problem-oriented research

Research Proposal Outline
- The Problem: What is the problem you are trying to solve?
  - Define clearly.
- Novelty: Why has previous research not solved this problem? What are its shortcomings?
  - Describe/cite all relevant works you know of and describe why these works are inadequate to solve the problem.
- Idea: What is your initial idea/insight? What new solution are you proposing to the problem? Why does it make sense? How does/could it solve the problem better?
- Hypothesis: What is the main hypothesis you will test?
- Methodology: How will you test the hypothesis/ideas? Describe what simulator or model you will use and what initial experiments you will do.
- Plan: Describe the steps you will take. What will you accomplish by Milestone 1, Milestone 2, and the Final Report? Give 75%, 100%, 125% and moonshot goals.

All research projects can and should be described in this fashion.

Heilmeier’s Catechism (version 1)
- What are you trying to do? Articulate your objectives using absolutely no jargon.
- How is it done today, and what are the limits of current practice?
- What's new in your approach and why do you think it will be successful?
- Who cares? If you're successful, what difference will it make?
- What are the risks and the payoffs?
- How much will it cost? How long will it take?
- What are the midterm and final "exams" to check for success?

Heilmeier’s Catechism (version 2)
- What is the problem?
- Why is it hard?
- How is it solved today?
- What is the new technical idea?
- Why can we succeed now?
- What is the impact if successful?

Readings for Next Lecture
- Required:
  - Hill and Marty, “Amdahl’s Law in the Multi-Core Era,” IEEE Computer 2008.
  - Annavaram et al., “Mitigating Amdahl’s Law Through EPI Throttling,” ISCA 2005.
  - Suleman et al., “Accelerating Critical Section Execution with Asymmetric Multi-Core Architectures,” ASPLOS 2009.
  - Ipek et al., “Core Fusion: Accommodating Software Diversity in Chip Multiprocessors,” ISCA 2007.
- Recommended:
  - Olukotun et al., “The Case for a Single-Chip Multiprocessor,” ASPLOS 1996.
  - Barroso et al., “Piranha: A Scalable Architecture Based on Single-Chip Multiprocessing,” ISCA 2000.
  - Kongetira et al., “Niagara: A 32-Way Multithreaded SPARC Processor,” IEEE Micro 2005.
  - Amdahl, “Validity of the single processor approach to achieving large scale computing capabilities,” AFIPS 1967.

Parallel Computer Architecture: Basics

What is a Parallel Computer?
- Definition of a “parallel computer” not really precise
- “A ‘parallel computer’ is a collection of processing elements that communicate and cooperate to solve large problems fast”
  - Almasi and Gottlieb, “Highly Parallel Computing,” 1989
- Is a superscalar processor a parallel computer?
- A processor that gives the illusion of executing a sequential ISA on a single thread at a time is a sequential machine
- Almost anything else is a parallel machine
- Examples of parallel machines:
  - Multiple program counters (PCs)
  - Multiple data being operated on simultaneously
  - Some combination

Flynn’s Taxonomy of Computers
- Mike Flynn, “Very High-Speed Computing Systems,” Proc. of IEEE, 1966
- SISD: Single instruction operates on single data element
- SIMD: Single instruction operates on multiple data elements
  - Array processor
  - Vector processor
- MISD: Multiple instructions operate on single data element
  - Closest form: systolic array processor, streaming processor
- MIMD: Multiple instructions operate on multiple data elements (multiple instruction streams)
  - Multiprocessor
  - Multithreaded processor

Why Parallel Computers?
- Parallelism: Doing multiple things at a time
  - Things: instructions, operations, tasks
- Main Goal
  - Improve performance (execution time or task throughput)
    - Execution time of a program governed by Amdahl’s Law
- Other Goals
  - Reduce power consumption
    - (4N units at freq F/4) consume less power than (N units at freq F)
    - Why?
  - Improve cost efficiency and scalability, reduce complexity
    - Harder to design a single unit that performs as well as N simpler units
  - Improve dependability: Redundant execution in space
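A first-order answer to the “Why?” about power can be sketched under simplifying assumptions that do not come from the slide. The sketch assumes dynamic power dominates ($P \propto C V^2 f$), that each unit has the same switched capacitance $C$, that supply voltage scales roughly linearly with frequency, and that the work divides perfectly across units:

```latex
\begin{aligned}
P_{\text{unit}} &\propto C V^2 F, \qquad V \propto F \;\Rightarrow\; P_{\text{unit}} \propto F^3 \\
P(N \text{ units at } F) &\propto N F^3 \\
P(4N \text{ units at } F/4) &\propto 4N \left(\tfrac{F}{4}\right)^3 = \tfrac{1}{16}\, N F^3 \\
\text{aggregate rate:}\quad 4N \cdot \tfrac{F}{4} &= N \cdot F
\end{aligned}
```

Under these assumptions the wider, slower design matches the peak throughput at roughly 1/16 of the dynamic power. In practice voltage cannot keep dropping with frequency, and leakage power does not follow this cubic scaling, so real savings are smaller.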

Types of Parallelism and How to Exploit Them
- Instruction Level Parallelism
  - Different instructions within a stream can be executed in parallel
  - Pipelining, out-of-order execution, speculative execution, VLIW
  - Dataflow
- Data Parallelism
  - Different pieces of data can be operated on in parallel
  - SIMD: Vector processing, array processing
  - Systolic arrays, streaming processors
- Task Level Parallelism
  - Different “tasks/threads” can be executed in parallel
  - Multithreading
  - Multiprocessing (multi-core)

Task-Level Parallelism: Creating Tasks
- Partition a single problem into multiple related tasks (threads)
  - Explicitly: Parallel programming
    - Easy when tasks are natural in the problem
      - Web/database queries
    - Difficult when natural task boundaries are unclear
  - Transparently/implicitly: Thread level speculation
    - Partition a single thread speculatively
- Run many independent tasks (processes) together
  - Easy when there are many processes
    - Batch simulations, different users, cloud computing workloads
  - Does not improve the performance of a single task

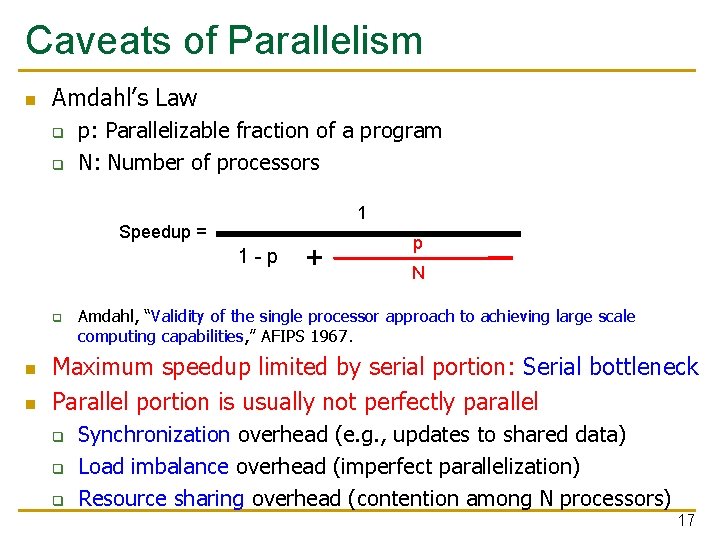

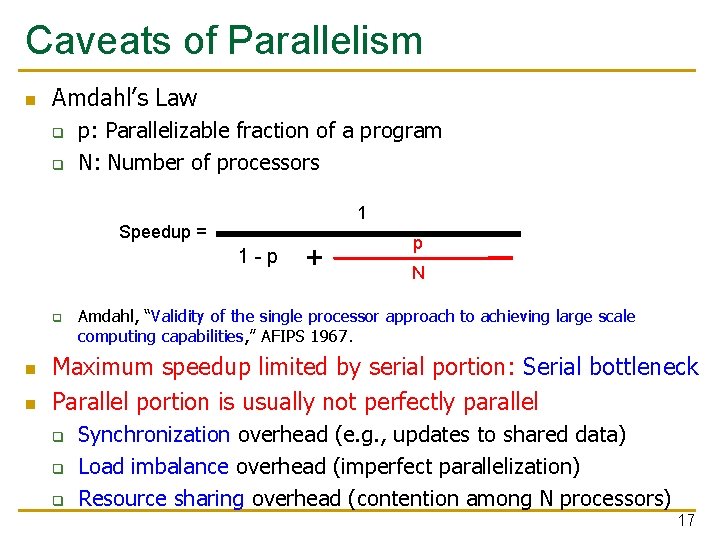
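Explicit task creation can be sketched as follows, assuming POSIX threads are available. One problem (summing an array) is partitioned into contiguous slices, and each slice becomes one task running on its own thread. The names `sum_task`, `sum_worker`, and `parallel_sum` are hypothetical, chosen only for illustration.

```c
#include <pthread.h>
#include <stddef.h>

/* Hypothetical task descriptor: one contiguous slice of the input per thread. */
typedef struct {
    const long *data;
    size_t begin, end;   /* half-open range [begin, end) */
    long partial;        /* written only by the thread that owns this task */
} sum_task;

static void *sum_worker(void *arg) {
    sum_task *t = arg;
    long s = 0;
    for (size_t i = t->begin; i < t->end; i++)
        s += t->data[i];
    t->partial = s;
    return NULL;
}

/* Explicitly partition the array into nthreads tasks, run them concurrently,
   then combine the partial sums on the calling thread (a sequential step). */
long parallel_sum(const long *data, size_t n, int nthreads) {
    enum { MAX_THREADS = 64 };
    pthread_t tid[MAX_THREADS];
    sum_task task[MAX_THREADS];
    int started[MAX_THREADS];

    if (nthreads < 1) nthreads = 1;
    if (nthreads > MAX_THREADS) nthreads = MAX_THREADS;
    size_t chunk = (n + (size_t)nthreads - 1) / (size_t)nthreads;

    for (int k = 0; k < nthreads; k++) {
        size_t b = (size_t)k * chunk, e = b + chunk;
        if (b > n) b = n;
        if (e > n) e = n;
        task[k] = (sum_task){ data, b, e, 0 };
        started[k] = pthread_create(&tid[k], NULL, sum_worker, &task[k]) == 0;
        if (!started[k])
            sum_worker(&task[k]);   /* fall back to running the task inline */
    }

    long total = 0;
    for (int k = 0; k < nthreads; k++) {
        if (started[k])
            pthread_join(tid[k], NULL);
        total += task[k].partial;
    }
    return total;
}
```

Summing is a case where task boundaries are natural. Even so, the final combine loop runs on one thread, so even this easy case keeps a small serial step.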

Caveats of Parallelism
- Amdahl’s Law
  - p: Parallelizable fraction of a program
  - N: Number of processors
  - $\text{Speedup} = \dfrac{1}{(1-p) + \dfrac{p}{N}}$
  - Amdahl, “Validity of the single processor approach to achieving large scale computing capabilities,” AFIPS 1967.
- Maximum speedup limited by serial portion: Serial bottleneck
- Parallel portion is usually not perfectly parallel
  - Synchronization overhead (e.g., updates to shared data)
  - Load imbalance overhead (imperfect parallelization)
  - Resource sharing overhead (contention among N processors)
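Amdahl’s Law translates directly into code. The sketch below is a minimal version; the function names are illustrative. `amdahl_speedup` evaluates the formula for a given p and N. `amdahl_limit` gives the ceiling as N grows without bound, which depends only on the serial fraction.

```c
/* Amdahl's Law: speedup when a fraction p of the program is perfectly
   parallelizable across n processors and the remaining (1 - p) runs serially. */
double amdahl_speedup(double p, int n) {
    return 1.0 / ((1.0 - p) + p / n);
}

/* Limit as n grows without bound: only the serial fraction remains.
   For p == 1.0 this divides by zero (infinite speedup under IEEE doubles). */
double amdahl_limit(double p) {
    return 1.0 / (1.0 - p);
}
```

For example, p = 0.9 on N = 8 processors gives 1 / (0.1 + 0.1125) ≈ 4.7. No number of processors can push it past 1 / 0.1 = 10.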

Parallel Speedup Example
- $a_4 x^4 + a_3 x^3 + a_2 x^2 + a_1 x + a_0$
- Assume each operation takes 1 cycle, there is no communication cost, and each operation can be executed on a different processor
- How fast is this with a single processor?
- How fast is this with 3 processors?
- Fair speedup comparison?
  - Need to compare the best algorithms for 1 and P processors

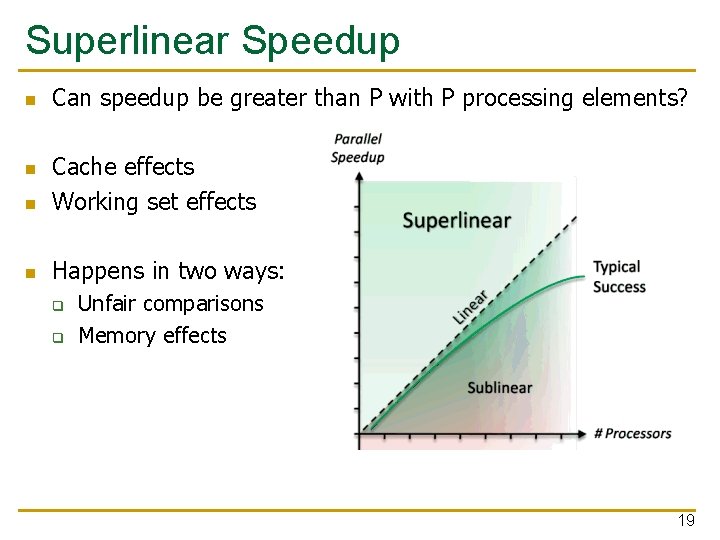

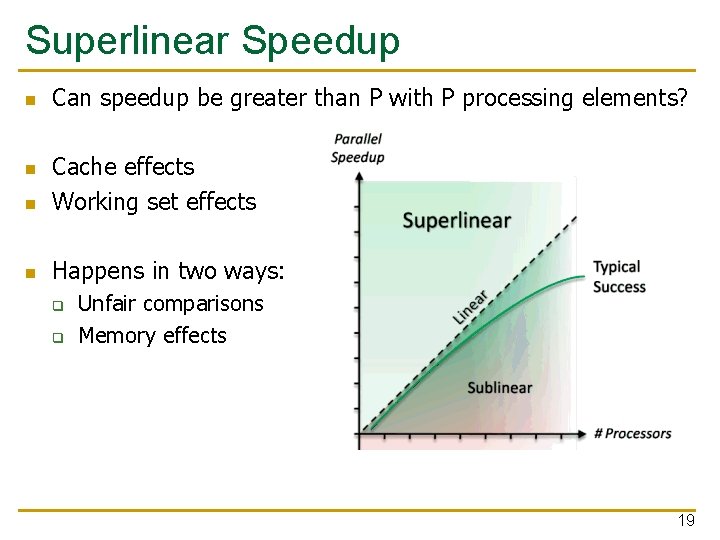
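The “fair comparison” point can be made concrete by counting operations. The straightforward form exposes independent multiplications that several processors could do at once. Horner’s rule uses fewer operations but forms one serial chain, which makes it a stronger single-processor baseline. The sketch below is a hypothetical helper: it assumes coefficients stored as `a[i]` for $x^i$, and each function returns both the value and its operation count.

```c
/* Hypothetical result type: polynomial value plus the number of arithmetic
   operations (multiplies + adds) used to compute it. */
typedef struct { double value; int ops; } poly_result;

/* Straightforward evaluation of a4*x^4 + a3*x^3 + a2*x^2 + a1*x + a0:
   3 multiplies for the powers, 4 for the coefficients, 4 adds = 11 ops.
   Many of these operations are independent of each other. */
poly_result eval_naive(const double a[5], double x) {
    poly_result r = { 0.0, 0 };
    double x2 = x * x;   r.ops++;
    double x3 = x2 * x;  r.ops++;
    double x4 = x3 * x;  r.ops++;
    double t4 = a[4] * x4, t3 = a[3] * x3, t2 = a[2] * x2, t1 = a[1] * x;
    r.ops += 4;
    r.value = t4 + t3 + t2 + t1 + a[0];
    r.ops += 4;
    return r;
}

/* Horner's rule: (((a4*x + a3)*x + a2)*x + a1)*x + a0
   4 multiplies + 4 adds = 8 ops, but each step depends on the previous one. */
poly_result eval_horner(const double a[5], double x) {
    poly_result r = { a[4], 0 };
    for (int i = 3; i >= 0; i--) {
        r.value = r.value * x + a[i];
        r.ops += 2;
    }
    return r;
}
```

Under the slide’s one-cycle-per-operation assumption, a parallel schedule of the 11-operation version should be compared against Horner’s 8 cycles on one processor, not against the naive version’s 11.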

Superlinear Speedup
- Can speedup be greater than P with P processing elements?
- Happens in two ways:
  - Unfair comparisons
  - Memory effects
    - Cache effects
    - Working set effects

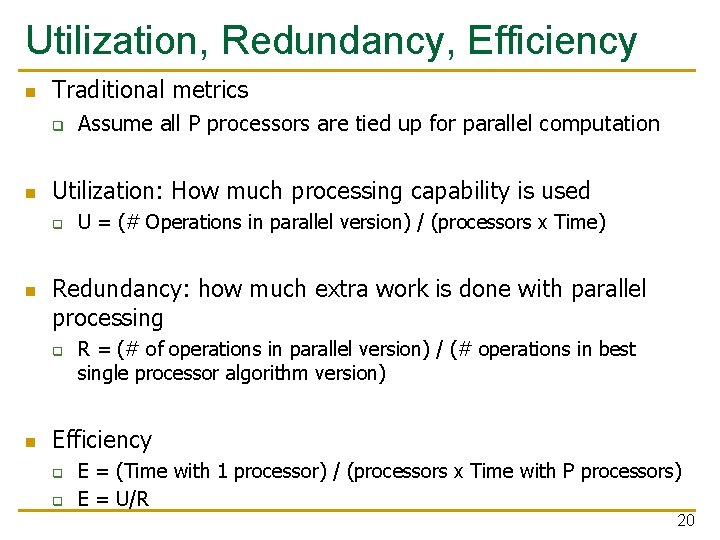

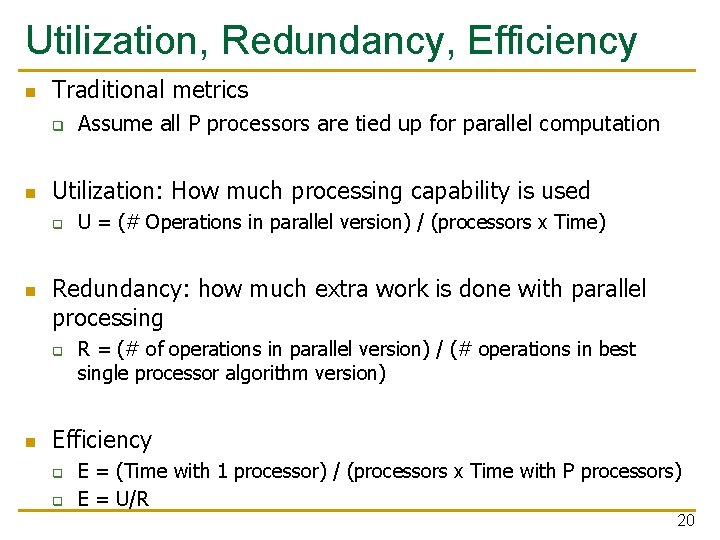

Utilization, Redundancy, Efficiency
- Traditional metrics
  - Assume all P processors are tied up for parallel computation
- Utilization: How much processing capability is used
  - U = (# operations in parallel version) / (processors × time)
- Redundancy: How much extra work is done with parallel processing
  - R = (# operations in parallel version) / (# operations in best single-processor algorithm version)
- Efficiency
  - E = (time with 1 processor) / (processors × time with P processors)
  - E = U/R
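The three metrics are simple ratios. The identity E = U/R holds only when the single-processor time equals the operation count of the best sequential algorithm, i.e., one operation per cycle, as in the polynomial example. The function names below are hypothetical.

```c
/* ops_par:  operations executed by the parallel version
   ops_best: operations in the best single-processor algorithm
   t1, tp:   execution time with 1 and with p processors
   All p processors are assumed busy (tied up) for the whole parallel run. */
double utilization(double ops_par, int p, double tp) {
    return ops_par / (p * tp);
}

double redundancy(double ops_par, double ops_best) {
    return ops_par / ops_best;
}

double efficiency(double t1, int p, double tp) {
    return t1 / (p * tp);
}
```

As an illustration, take ops_par = 11, p = 3, tp = 5, and ops_best = t1 = 8. These values are chosen to match the polynomial example’s one-cycle-per-operation assumption. They give U = 11/15 ≈ 0.73, R = 11/8 = 1.375, and E = 8/15 ≈ 0.53, which equals U/R.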

[Figure: Sequential Bottleneck. Speedup (0 to 200) vs. p (parallel fraction, 0 to 0.99) for N = 1000]

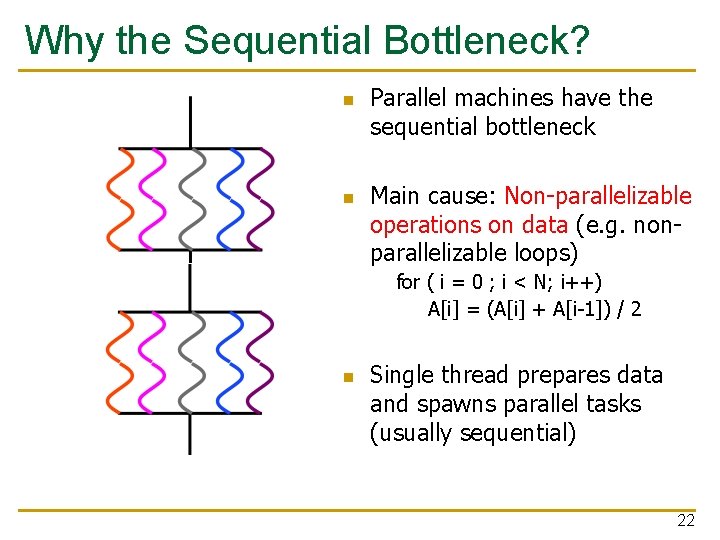

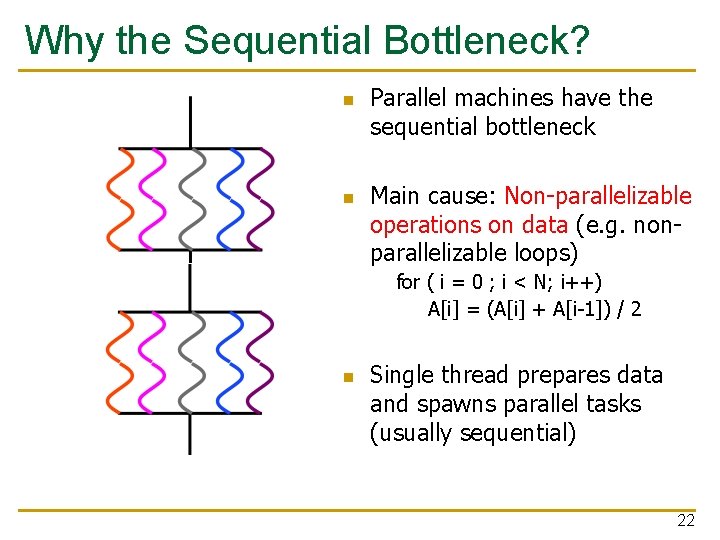
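Plugging a few points into Amdahl’s Law with N = 1000 shows why the curve stays low until p is very close to 1:

```latex
\begin{aligned}
S(p, N) &= \frac{1}{(1-p) + \frac{p}{N}} \\
S(0.50,\ 1000) &= \frac{1}{0.5 + 0.0005} \approx 2.0 \\
S(0.90,\ 1000) &= \frac{1}{0.1 + 0.0009} \approx 9.9 \\
S(0.99,\ 1000) &= \frac{1}{0.01 + 0.00099} \approx 91 \\
\lim_{N \to \infty} S(p, N) &= \frac{1}{1-p}
\end{aligned}
```

Even with 99% of the work parallelized, 1000 processors yield only about 91× because the remaining 1% runs serially.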

Why the Sequential Bottleneck?
- Parallel machines have the sequential bottleneck
- Main cause: Non-parallelizable operations on data (e.g., non-parallelizable loops)
  - `for (i = 1; i < N; i++) A[i] = (A[i] + A[i-1]) / 2;`
- Single thread prepares data and spawns parallel tasks (usually sequential)

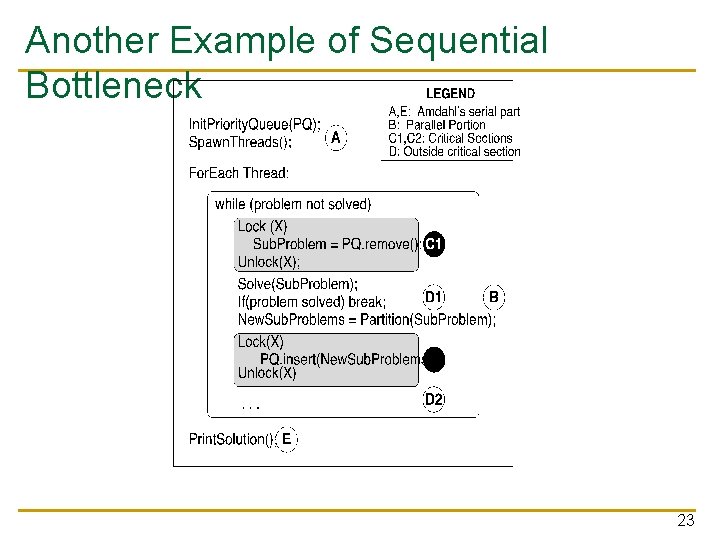

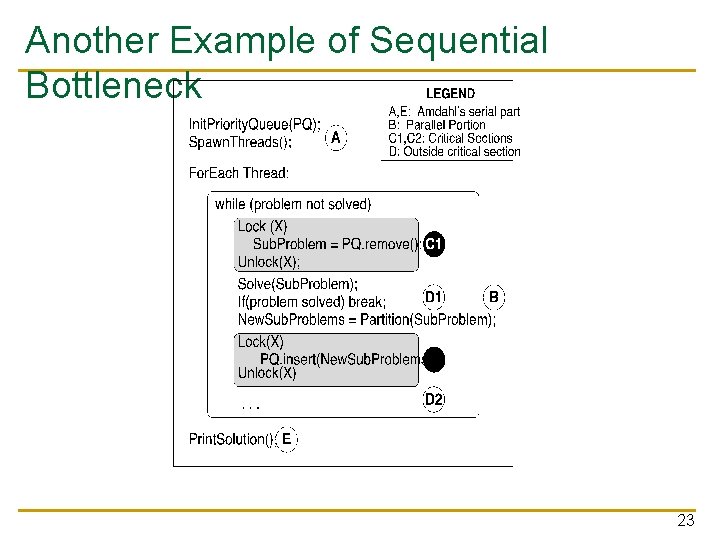
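The loop on this slide cannot simply be split across processors because each iteration reads a value the previous iteration just wrote. The sketch below makes the loop runnable; it starts at i = 1 so `A[i-1]` stays in bounds. It contrasts the loop with a hypothetical variant that reads only the original values. That variant has independent iterations and could be parallelized, but it computes a different result.

```c
#include <stddef.h>

/* The slide's loop. Iteration i reads A[i-1], which iteration i-1 just
   wrote: a loop-carried dependence, so iterations must run in order. */
void smooth_sequential(double *A, size_t n) {
    for (size_t i = 1; i < n; i++)
        A[i] = (A[i] + A[i - 1]) / 2;
}

/* Hypothetical "all iterations at once" variant: every iteration reads the
   ORIGINAL neighbor from `in`, so iterations are independent of each other
   and could be split among processors. `out` must not alias `in`. */
void smooth_independent(const double *in, double *out, size_t n) {
    if (n == 0) return;
    out[0] = in[0];
    for (size_t i = 1; i < n; i++)
        out[i] = (in[i] + in[i - 1]) / 2;
}
```

Starting from {2, 4, 6}, the sequential loop produces {2, 3, 4.5}, while the independent variant produces {2, 3, 5}. The dependence changes the answer, so the loop stays serial unless it is restructured algorithmically.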

Another Example of Sequential Bottleneck

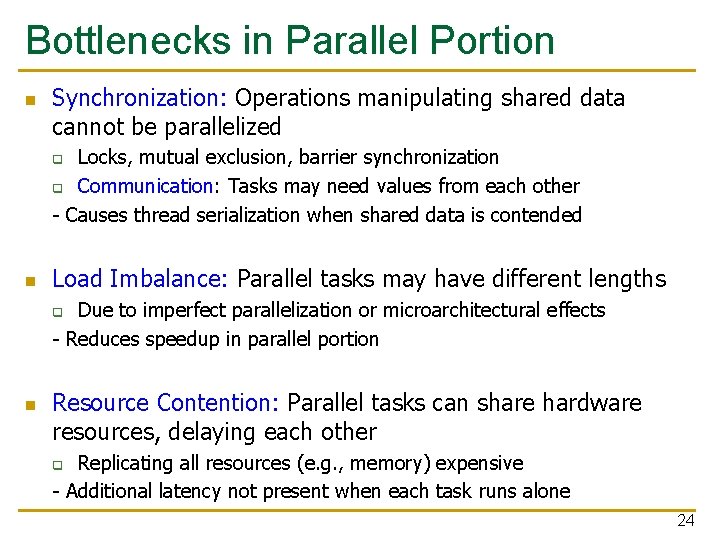

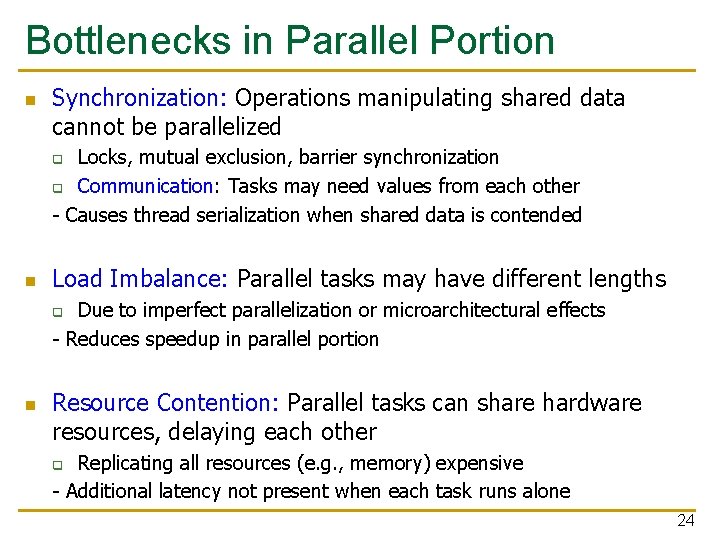

Bottlenecks in Parallel Portion
- Synchronization: Operations manipulating shared data cannot be parallelized
  - Locks, mutual exclusion, barrier synchronization
  - Communication: Tasks may need values from each other
  - Causes thread serialization when shared data is contended
- Load Imbalance: Parallel tasks may have different lengths
  - Due to imperfect parallelization or microarchitectural effects
  - Reduces speedup in parallel portion
- Resource Contention: Parallel tasks can share hardware resources, delaying each other
  - Replicating all resources (e.g., memory) is expensive
  - Additional latency not present when each task runs alone

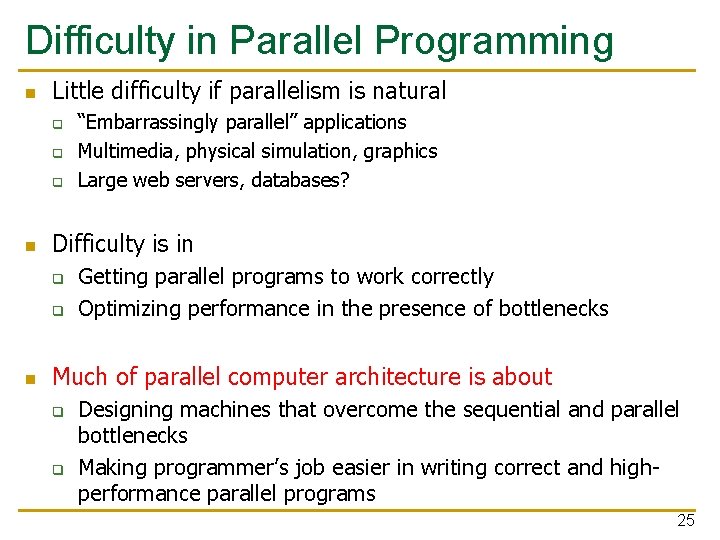
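Load imbalance alone can be captured by a very small model. The sketch assumes a hypothetical setup with one task per processor and no synchronization or contention overhead. Under those assumptions the parallel phase ends when the longest task finishes, so speedup is the total work divided by the longest task.

```c
#include <stddef.h>

/* Hypothetical model: one task per processor, no other overheads.
   Speedup of the parallel phase = (sum of task lengths) / (longest task). */
double imbalance_speedup(const double *task_len, size_t ntasks) {
    double total = 0.0, longest = 0.0;
    for (size_t i = 0; i < ntasks; i++) {
        total += task_len[i];
        if (task_len[i] > longest)
            longest = task_len[i];
    }
    return longest > 0.0 ? total / longest : 1.0;  /* no work: report 1x */
}
```

Four equal tasks of length 10 give a speedup of 4 on four processors. Lengths 10, 10, 10, and 30 give only 60 / 30 = 2, even though every task runs in parallel.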

Difficulty in Parallel Programming
- Little difficulty if parallelism is natural
  - “Embarrassingly parallel” applications
  - Multimedia, physical simulation, graphics
  - Large web servers, databases?
- Difficulty is in
  - Getting parallel programs to work correctly
  - Optimizing performance in the presence of bottlenecks
- Much of parallel computer architecture is about
  - Designing machines that overcome the sequential and parallel bottlenecks
  - Making the programmer’s job easier in writing correct and high-performance parallel programs

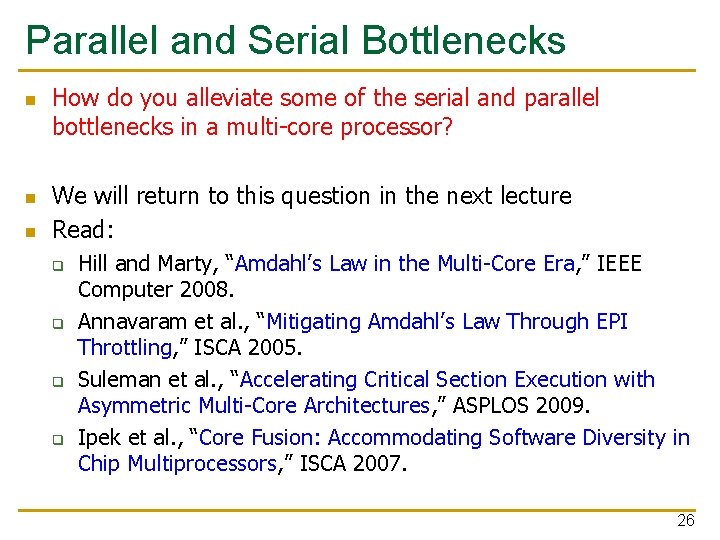

Parallel and Serial Bottlenecks
- How do you alleviate some of the serial and parallel bottlenecks in a multi-core processor?
- We will return to this question in the next lecture
- Read:
  - Hill and Marty, “Amdahl’s Law in the Multi-Core Era,” IEEE Computer 2008.
  - Annavaram et al., “Mitigating Amdahl’s Law Through EPI Throttling,” ISCA 2005.
  - Suleman et al., “Accelerating Critical Section Execution with Asymmetric Multi-Core Architectures,” ASPLOS 2009.
  - Ipek et al., “Core Fusion: Accommodating Software Diversity in Chip Multiprocessors,” ISCA 2007.

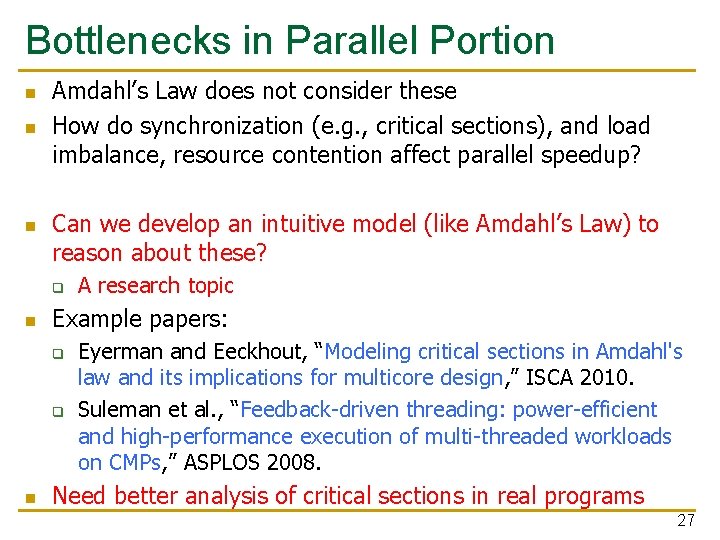

Bottlenecks in Parallel Portion
- Amdahl’s Law does not consider these
- How do synchronization (e.g., critical sections), load imbalance, and resource contention affect parallel speedup?
- Can we develop an intuitive model (like Amdahl’s Law) to reason about these?
  - A research topic
- Example papers:
  - Eyerman and Eeckhout, “Modeling critical sections in Amdahl's law and its implications for multicore design,” ISCA 2010.
  - Suleman et al., “Feedback-driven threading: power-efficient and high-performance execution of multi-threaded workloads on CMPs,” ASPLOS 2008.
- Need better analysis of critical sections in real programs