16.482 / 16.561 Computer Architecture and Design


16.482 / 16.561 Computer Architecture and Design
Instructor: Dr. Michael Geiger
Fall 2013
Lecture 7: Dynamic scheduling

Lecture outline
- Announcements/reminders
  - HW 5 to be posted; due 11/4
- Today’s lecture
  - Dependences
  - Determining stalls in a realistic pipeline
  - Dynamic scheduling with Tomasulo’s Algorithm

9/25/2020, Computer Architecture Lecture 6

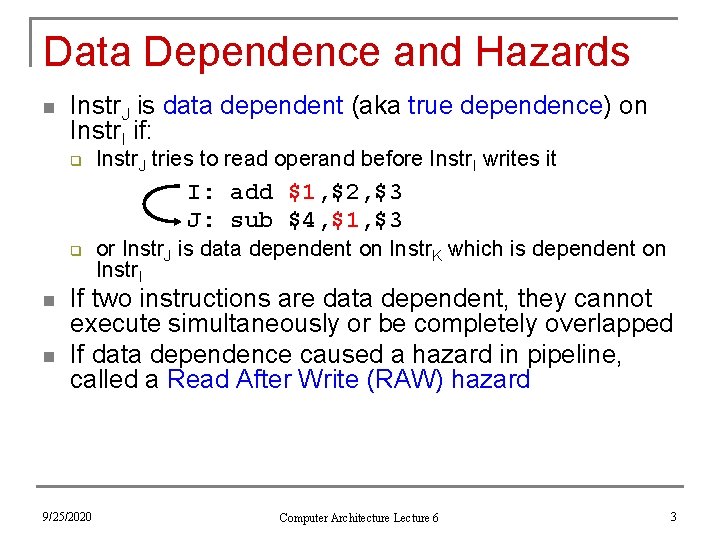

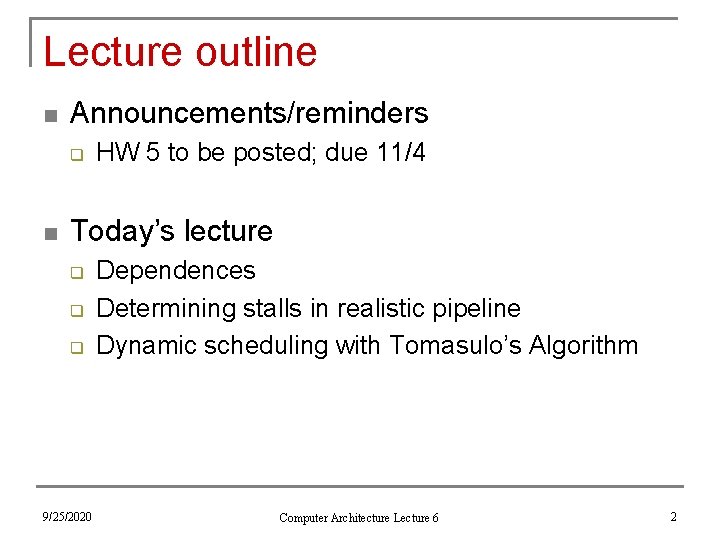

Data Dependence and Hazards
- Instr. J is data dependent (aka true dependence) on Instr. I if:
  - Instr. J tries to read an operand before Instr. I writes it
    - I: `add $1, $2, $3`
    - J: `sub $4, $1, $3`
  - or Instr. J is data dependent on Instr. K, which is dependent on Instr. I
- If two instructions are data dependent, they cannot execute simultaneously or be completely overlapped
- If a data dependence causes a hazard in the pipeline, it is called a Read After Write (RAW) hazard

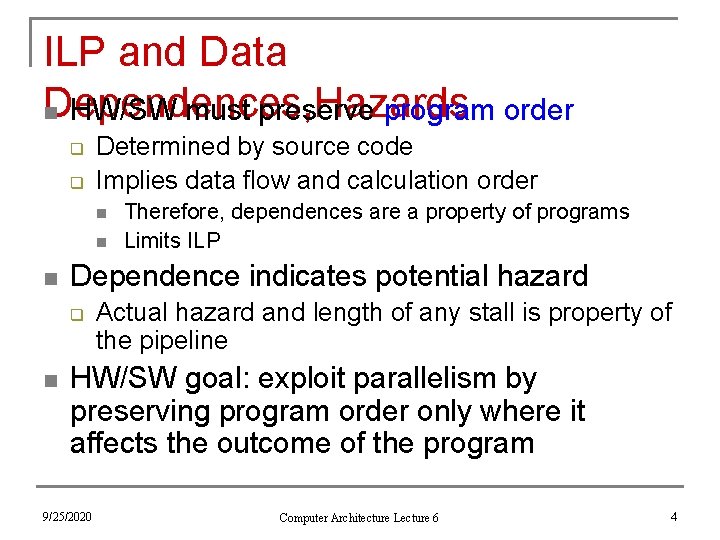

ILP and Data Dependences, Hazards
- HW/SW must preserve program order
  - Determined by source code
  - Implies data flow and calculation order
- Therefore, dependences are a property of programs
- A dependence indicates a potential hazard
  - Limits ILP
- The actual hazard and the length of any stall are properties of the pipeline
- HW/SW goal: exploit parallelism by preserving program order only where it affects the outcome of the program

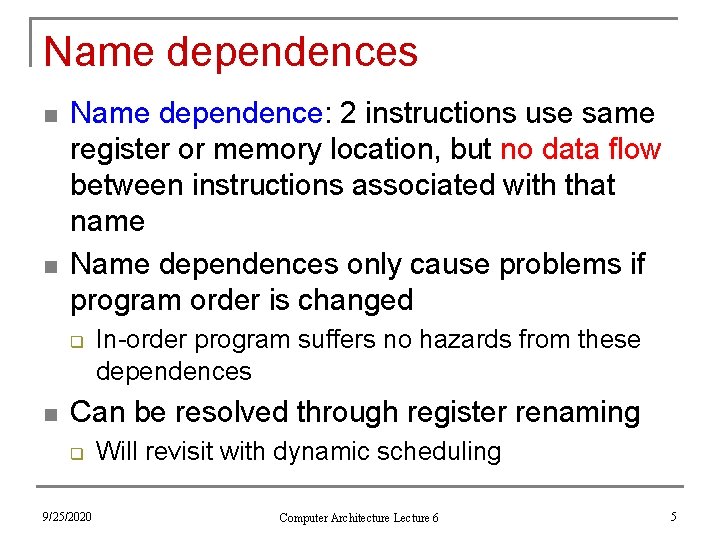

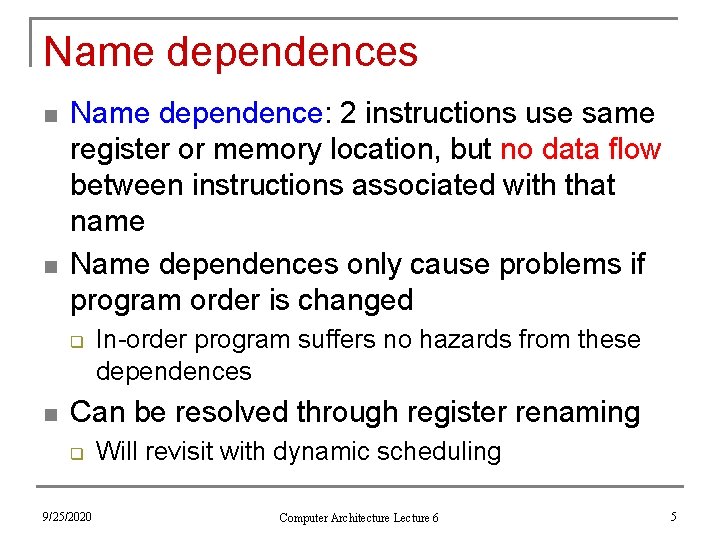

Name dependences
- Name dependence: 2 instructions use the same register or memory location, but there is no data flow between the instructions associated with that name
- Name dependences only cause problems if program order is changed
  - In-order execution suffers no hazards from these dependences
- Can be resolved through register renaming
  - Will revisit with dynamic scheduling

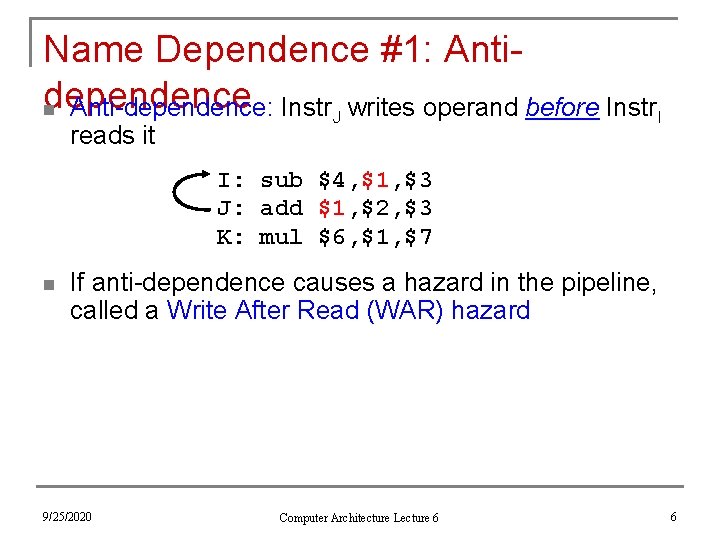

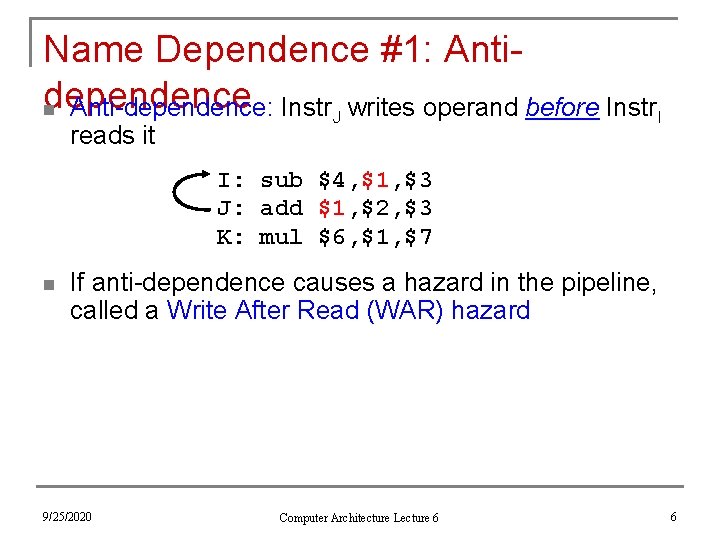

Name Dependence #1: Antidependence
- Antidependence: Instr. J (later in program order) writes an operand before Instr. I reads it
  - I: `sub $4, $1, $3`
  - J: `add $1, $2, $3`
  - K: `mul $6, $1, $7`
- If an antidependence causes a hazard in the pipeline, it is called a Write After Read (WAR) hazard

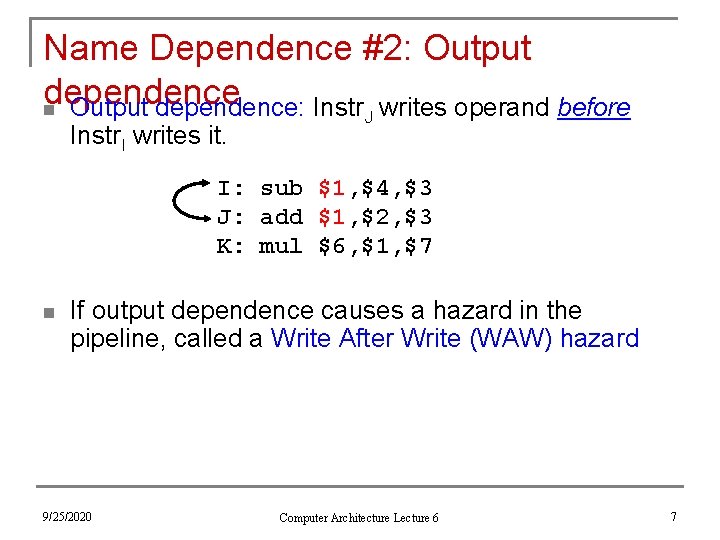

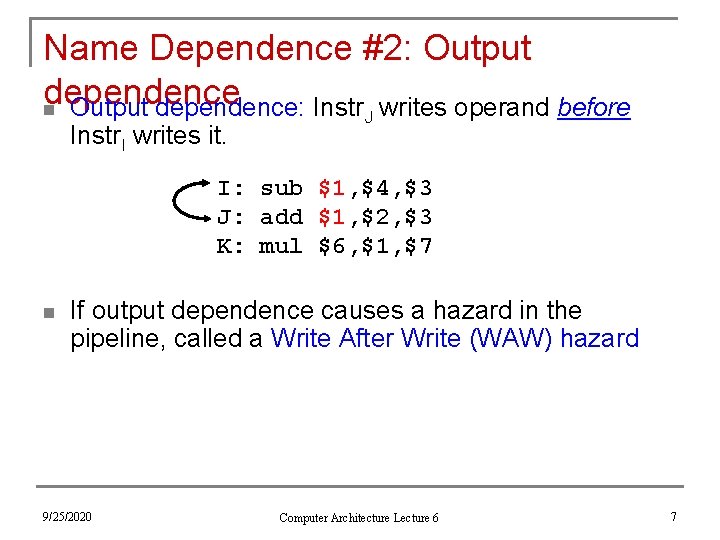

Name Dependence #2: Output dependence
- Output dependence: Instr. J (later in program order) writes an operand before Instr. I writes it
  - I: `sub $1, $4, $3`
  - J: `add $1, $2, $3`
  - K: `mul $6, $1, $7`
- If an output dependence causes a hazard in the pipeline, it is called a Write After Write (WAW) hazard
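The three definitions (RAW, WAR, WAW) can be checked mechanically for any pair of instructions. Below is a minimal Python sketch, assuming each instruction writes at most one register and considering register operands only (memory locations are ignored); `Instr` and `dependences` are illustrative names, not part of the lecture. It reports whether a dependence exists; whether it becomes a hazard still depends on the pipeline.

```python
from typing import NamedTuple, Optional, Tuple

class Instr(NamedTuple):
    name: str
    dest: Optional[str]      # register written (None for stores and branches)
    srcs: Tuple[str, ...]    # registers read

def dependences(i: Instr, j: Instr) -> set:
    """Classify how instruction j depends on instruction i (i comes first in program order)."""
    found = set()
    if i.dest is not None and i.dest in j.srcs:
        found.add("RAW")     # true (data) dependence: j reads what i writes
    if j.dest is not None and j.dest in i.srcs:
        found.add("WAR")     # antidependence: j overwrites a register that i reads
    if i.dest is not None and i.dest == j.dest:
        found.add("WAW")     # output dependence: both write the same register
    return found

# The I/J pairs from the three dependence slides
print(dependences(Instr("add", "$1", ("$2", "$3")), Instr("sub", "$4", ("$1", "$3"))))  # {'RAW'}
print(dependences(Instr("sub", "$4", ("$1", "$3")), Instr("add", "$1", ("$2", "$3"))))  # {'WAR'}
print(dependences(Instr("sub", "$1", ("$4", "$3")), Instr("add", "$1", ("$2", "$3"))))  # {'WAW'}
```

In both name-dependence examples, K (`mul $6, $1, $7`) is truly dependent on J, which is why J's write to `$1` must not be lost or reordered.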

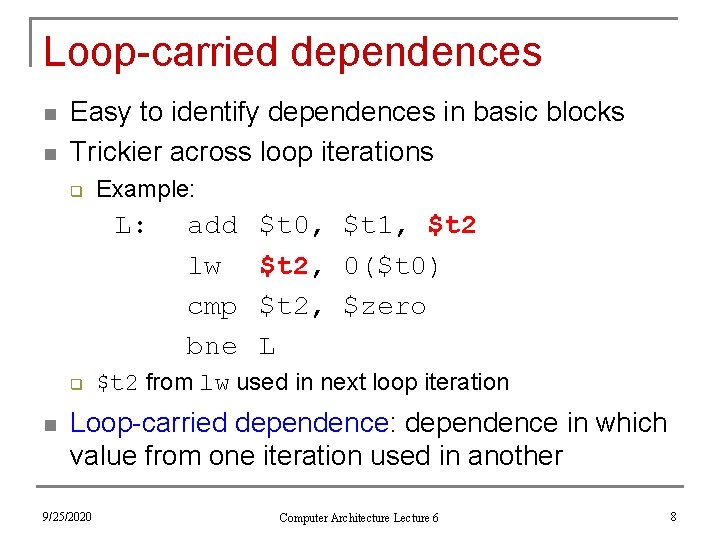

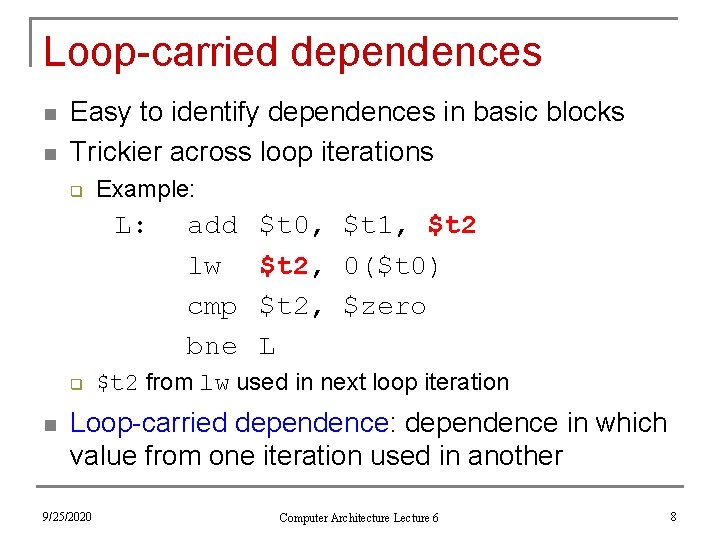

Loop-carried dependences
- Easy to identify dependences in basic blocks
- Trickier across loop iterations
  - Example:
    - `L: add $t0, $t1, $t2`
    - `lw $t2, 0($t0)`
    - `cmp $t2, $zero`
    - `bne L`
  - $t2 from lw is used in the next loop iteration
- Loop-carried dependence: a dependence in which a value from one iteration is used in another
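A loop-carried RAW dependence shows up as a register that an instruction reads before anything earlier in the body has written it, while some instruction at or after that point in the body does write it. A minimal Python sketch of that check, assuming one destination register per instruction and treating `cmp` as setting condition flags rather than a register (our assumption); `loop_carried` is a hypothetical helper name:

```python
from typing import List, Optional, Tuple

Instr = Tuple[str, Optional[str], Tuple[str, ...]]   # (name, dest, sources)

def loop_carried(body: List[Instr]) -> List[Tuple[str, str, str]]:
    """Return (register, writer, reader) triples for values carried into the next iteration."""
    carried = []
    for k, (reader, _, srcs) in enumerate(body):
        for reg in srcs:
            if any(dest == reg for _, dest, _ in body[:k]):
                continue   # produced earlier in this same iteration
            writers = [name for name, dest, _ in body[k:] if dest == reg]
            if writers:
                # The last write in the body is the value live at the top of the next iteration
                carried.append((reg, writers[-1], reader))
    return carried

# The slide's loop, as we read its operands
body = [
    ("add", "$t0", ("$t1", "$t2")),    # L: add $t0, $t1, $t2
    ("lw",  "$t2", ("$t0",)),          #    lw  $t2, 0($t0)
    ("cmp", None,  ("$t2", "$zero")),  #    cmp $t2, $zero
    ("bne", None,  ()),                #    bne L
]
print(loop_carried(body))   # [('$t2', 'lw', 'add')]
```

The `$t0` dependence from `add` to `lw` is not reported because it stays inside one iteration.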

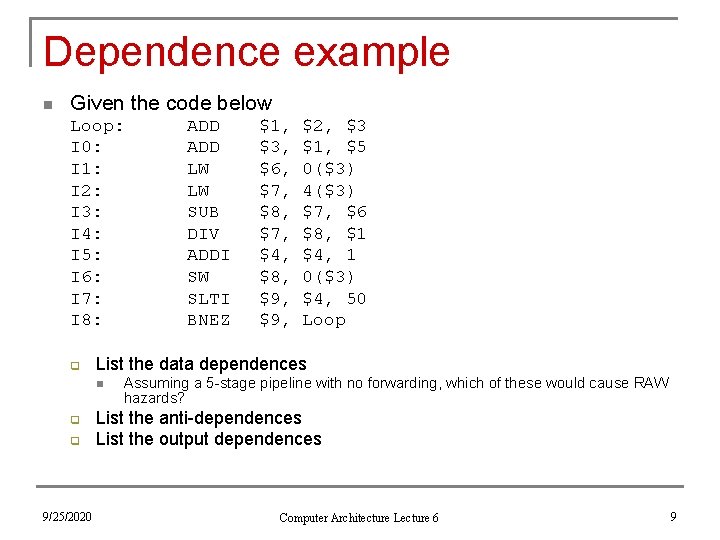

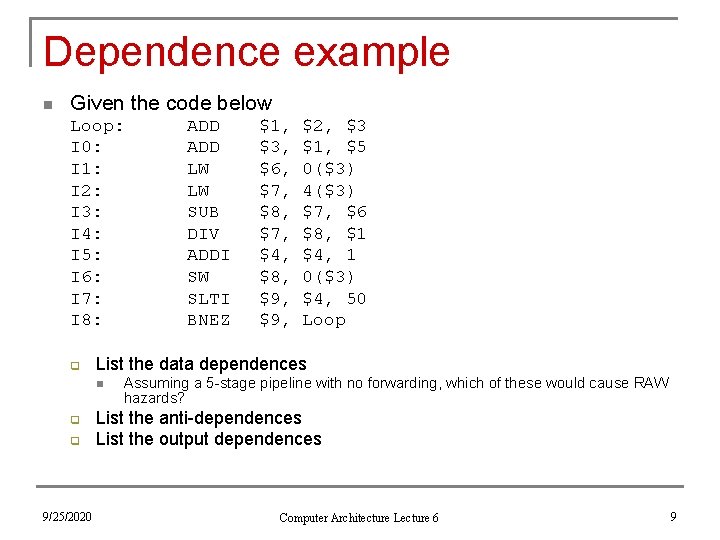

Dependence example
- Given the code below:
  - Loop: `ADD $1, $2, $3`
  - I0: `ADD $3, $1, $5`
  - I1: `LW $6, 0($3)`
  - I2: `LW $7, 4($3)`
  - I3: `SUB $8, $7, $6`
  - I4: `DIV $7, $8, $1`
  - I5: `ADDI $4, $4, 1`
  - I6: `SW $8, 0($3)`
  - I7: `SLTI $9, $4, 50`
  - I8: `BNEZ $9, Loop`
- List the data dependences
  - Assuming a 5-stage pipeline with no forwarding, which of these would cause RAW hazards?
- List the antidependences
- List the output dependences

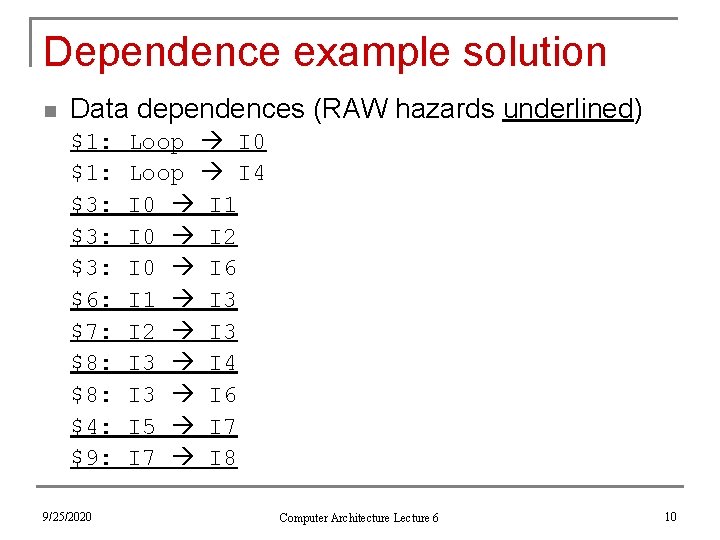

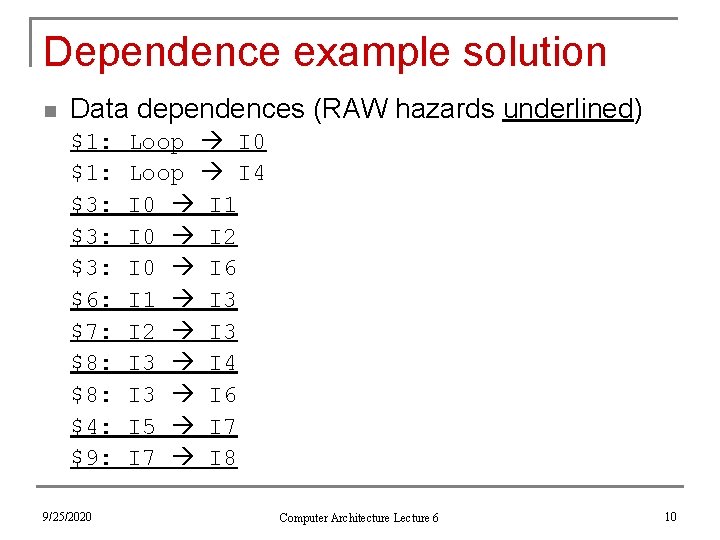

Dependence example solution
- Data dependences
  - $1: Loop → I0, Loop → I4
  - $3: I0 → I1, I0 → I2, I0 → I6
  - $6: I1 → I3
  - $7: I2 → I3
  - $8: I3 → I4, I3 → I6
  - $4: I5 → I7
  - $9: I7 → I8

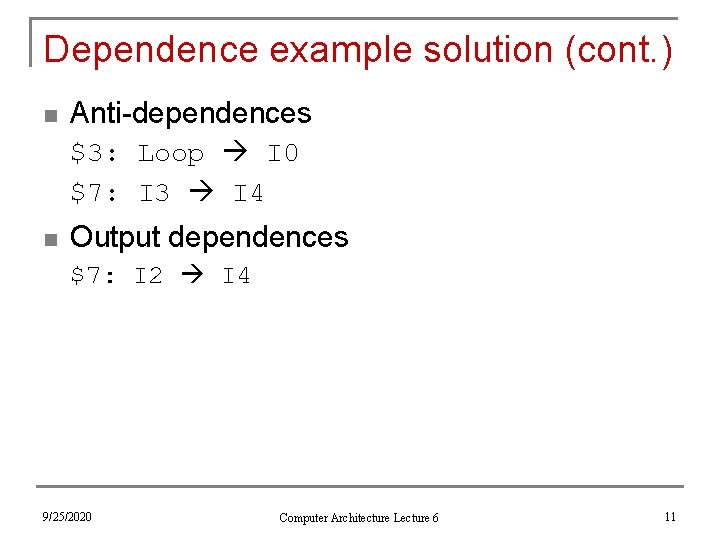

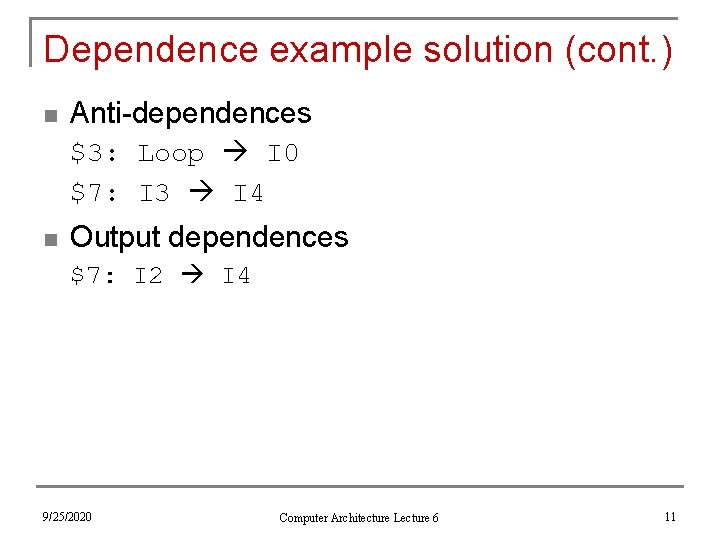

Dependence example solution (cont.)
- Antidependences
  - $3: Loop → I0
  - $7: I3 → I4
- Output dependences
  - $7: I2 → I4
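The three lists can be produced by one in-order pass that tracks, per register, its most recent writer and the instructions that have read it since. A minimal Python sketch, with the loop encoded from the example code (destination first, sources after); `analyze` and `raw_hazards` are hypothetical names. For the hazard question it assumes a 5-stage pipeline with no forwarding whose register file is written in the first half of a cycle and read in the second half, so a consumer stalls only if it is one or two instructions behind its producer.

```python
from typing import Dict, List

# The example loop: (label, destination register, source registers)
CODE = [
    ("Loop", "$1", ("$2", "$3")),   # ADD  $1, $2, $3
    ("I0",   "$3", ("$1", "$5")),   # ADD  $3, $1, $5
    ("I1",   "$6", ("$3",)),        # LW   $6, 0($3)
    ("I2",   "$7", ("$3",)),        # LW   $7, 4($3)
    ("I3",   "$8", ("$7", "$6")),   # SUB  $8, $7, $6
    ("I4",   "$7", ("$8", "$1")),   # DIV  $7, $8, $1
    ("I5",   "$4", ("$4",)),        # ADDI $4, $4, 1
    ("I6",   None, ("$8", "$3")),   # SW   $8, 0($3)
    ("I7",   "$9", ("$4",)),        # SLTI $9, $4, 50
    ("I8",   None, ("$9",)),        # BNEZ $9, Loop
]

def analyze(code):
    """List RAW, WAR and WAW dependences within one iteration as (reg, from, to)."""
    raw, war, waw = [], [], []
    last_writer: Dict[str, str] = {}     # reg -> label of its most recent writer
    readers: Dict[str, List[str]] = {}   # reg -> labels that read it since that write
    for label, dest, srcs in code:
        for reg in srcs:
            if reg in last_writer:
                raw.append((reg, last_writer[reg], label))
            readers.setdefault(reg, []).append(label)
        if dest is not None:
            if dest in last_writer:
                waw.append((dest, last_writer[dest], label))
            # An instruction that reads and writes the same register (ADDI $4, $4, 1) is not a WAR with itself
            war.extend((dest, r, label) for r in readers.get(dest, []) if r != label)
            last_writer[dest] = label
            readers[dest] = []
    return raw, war, waw

def raw_hazards(code, raw, window=2):
    """RAW dependences whose consumer is at most `window` instructions after its producer."""
    pos = {label: k for k, (label, _, _) in enumerate(code)}
    return [(reg, p, c) for reg, p, c in raw if pos[c] - pos[p] <= window]

raw, war, waw = analyze(CODE)
print("WAR:", war)   # [('$3', 'Loop', 'I0'), ('$7', 'I3', 'I4')]
print("WAW:", waw)   # [('$7', 'I2', 'I4')]
print("RAW hazards:", raw_hazards(CODE, raw))
```

The pass reproduces the antidependence and output-dependence lists above; under the stated pipeline assumption it flags 8 of the 11 data dependences as hazards (all except Loop → I4, I3 → I6 and I0 → I6).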

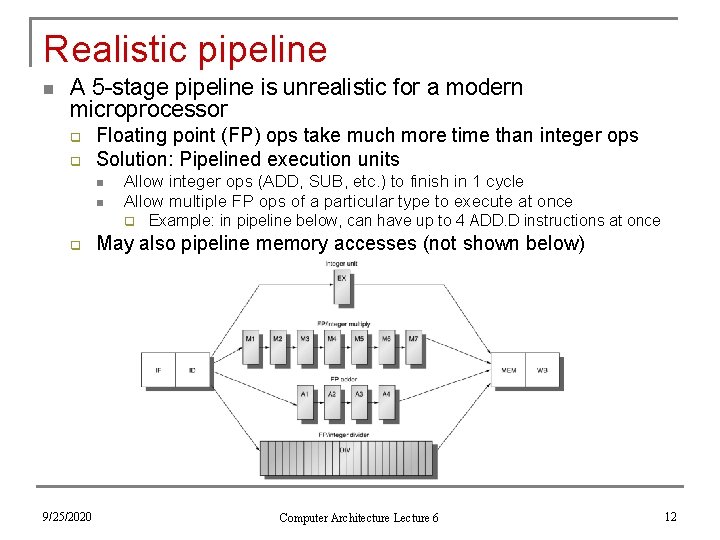

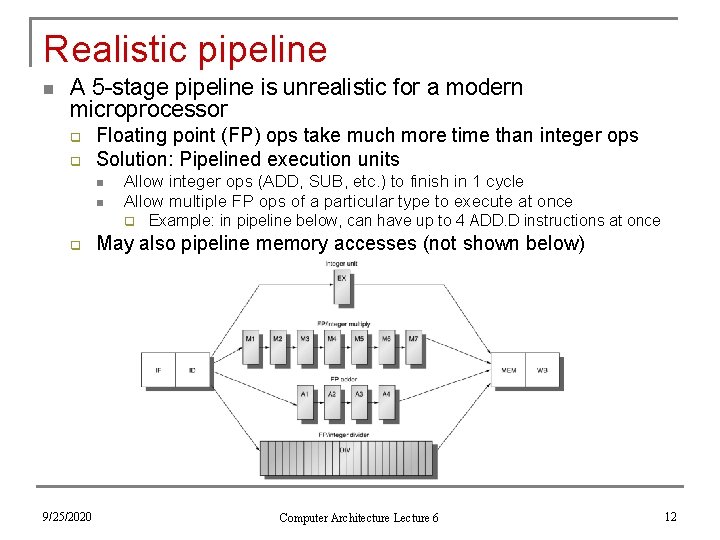

Realistic pipeline
- A 5-stage pipeline is unrealistic for a modern microprocessor
  - Floating-point (FP) ops take much more time than integer ops
  - Solution: pipelined execution units
    - Allow integer ops (ADD, SUB, etc.) to finish in 1 cycle
    - Allow multiple FP ops of a particular type to execute at once
      - Example: in the pipeline below, up to 4 ADD.D instructions can be in execution at once
      - Memory accesses may also be pipelined (not shown below)

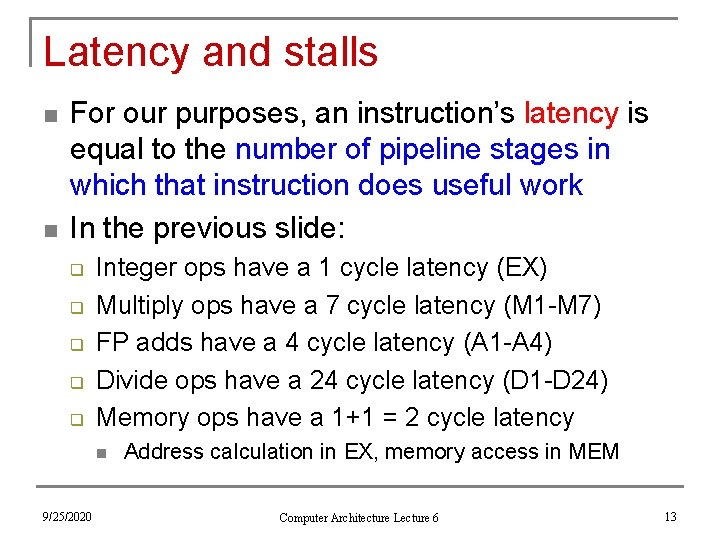

Latency and stalls
- For our purposes, an instruction’s latency is equal to the number of pipeline stages in which that instruction does useful work
- In the previous slide:
  - Integer ops have a 1-cycle latency (EX)
  - Multiply ops have a 7-cycle latency (M1-M7)
  - FP adds have a 4-cycle latency (A1-A4)
  - Divide ops have a 24-cycle latency (D1-D24)
  - Memory ops have a 1 + 1 = 2-cycle latency
    - Address calculation in EX, memory access in MEM

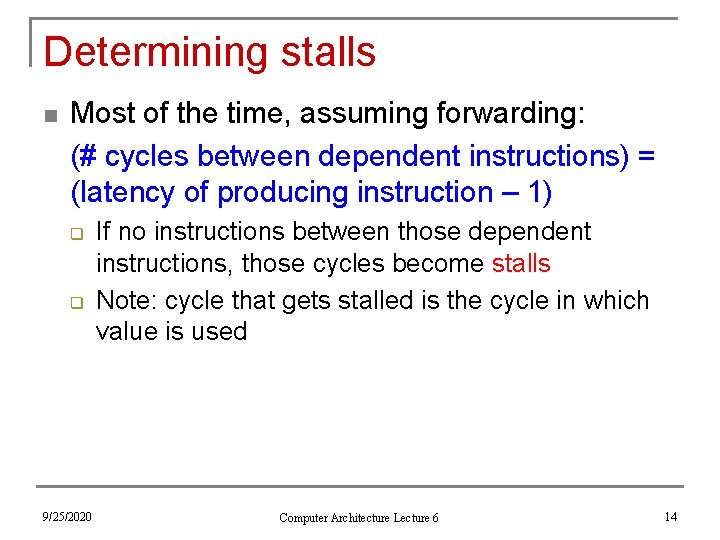

Determining stalls
- Most of the time, assuming forwarding: (# cycles between dependent instructions) = (latency of producing instruction - 1)
  - If there are no instructions between those dependent instructions, those cycles become stalls
  - Note: the cycle that gets stalled is the cycle in which the value is used
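The rule reduces to one line of arithmetic: the producer’s (latency - 1) cycles must separate the two instructions, and any independent instructions placed between them fill some of those cycles. A minimal Python sketch using the latencies from the previous slide; `LATENCY` and `stalls` are illustrative names. It covers the usual ALU cases only, not the store cases discussed next.

```python
# Latencies from the "Latency and stalls" slide (cycles of useful work)
LATENCY = {"int": 1, "fp_add": 4, "fp_mul": 7, "fp_div": 24, "mem": 2}

def stalls(producer_latency: int, independent_between: int = 0) -> int:
    """Stall cycles before a dependent instruction, assuming full forwarding.

    (latency - 1) cycles must separate producer and consumer; independent
    instructions scheduled in between fill some of those cycles.
    """
    return max(0, (producer_latency - 1) - independent_between)

print(stalls(LATENCY["fp_add"]))      # 3: FP add feeding the very next instruction
print(stalls(LATENCY["fp_mul"], 2))   # 4: two independent instructions hide 2 cycles
print(stalls(LATENCY["mem"]))         # 1: load feeding an ALU op
```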

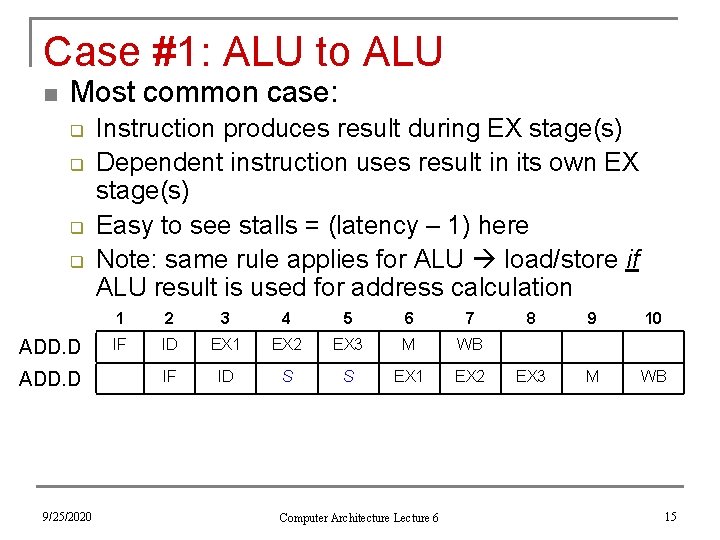

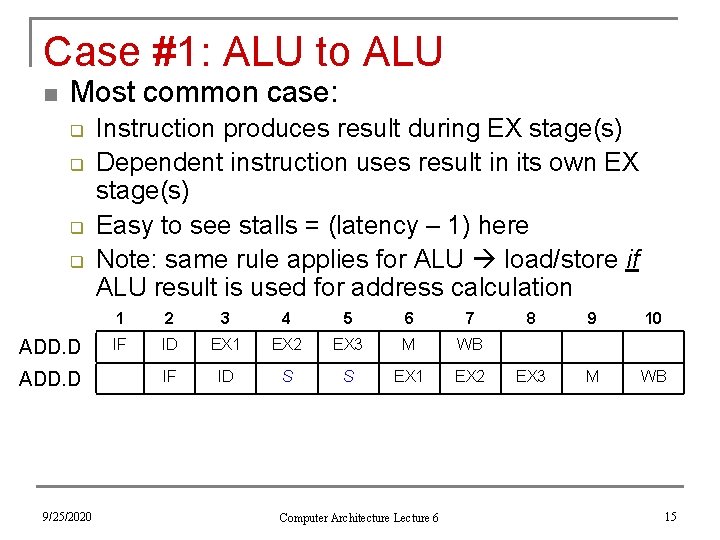

Case #1: ALU to ALU
- Most common case:
  - Instruction produces result during EX stage(s)
  - Dependent instruction uses result in its own EX stage(s)
- Easy to see stalls = (latency - 1) here
- Note: the same rule applies for ALU → load/store if the ALU result is used for address calculation

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| ADD.D | IF | ID | EX1 | EX2 | EX3 | M | WB | | | |
| ADD.D | | IF | ID | S | S | EX1 | EX2 | EX3 | M | WB |

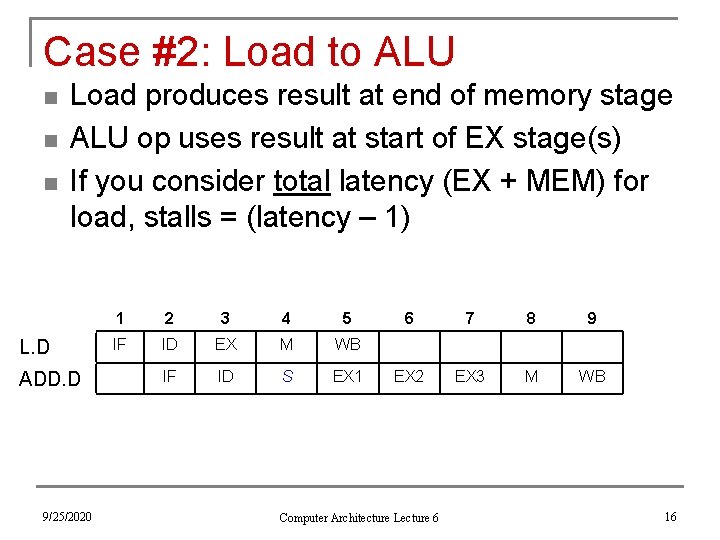

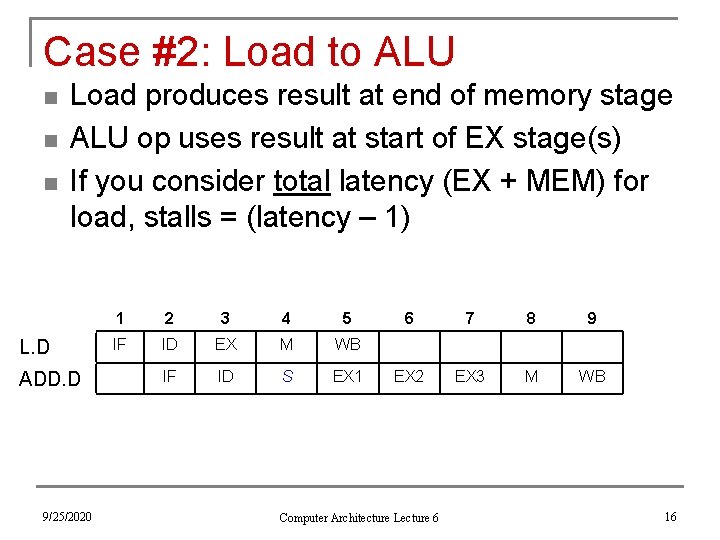

Case #2: Load to ALU
- Load produces result at the end of the memory stage
- ALU op uses result at the start of its EX stage(s)
- If you consider the total latency (EX + MEM) for the load, stalls = (latency - 1)

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| L.D | IF | ID | EX | M | WB | | | | |
| ADD.D | | IF | ID | S | EX1 | EX2 | EX3 | M | WB |

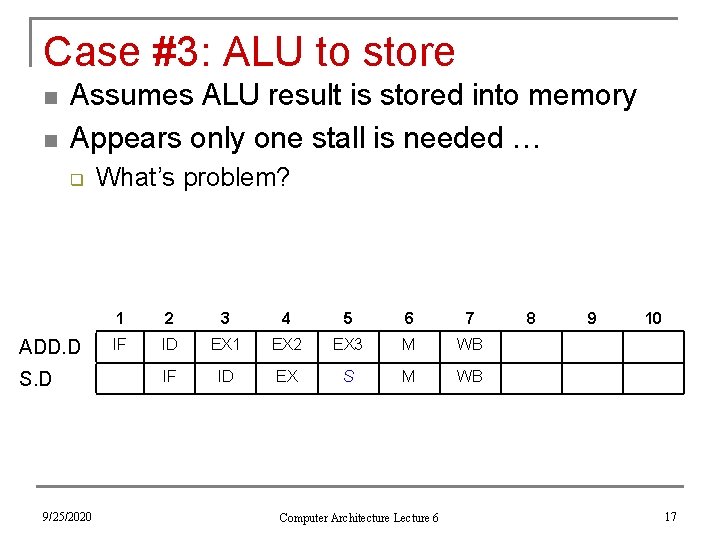

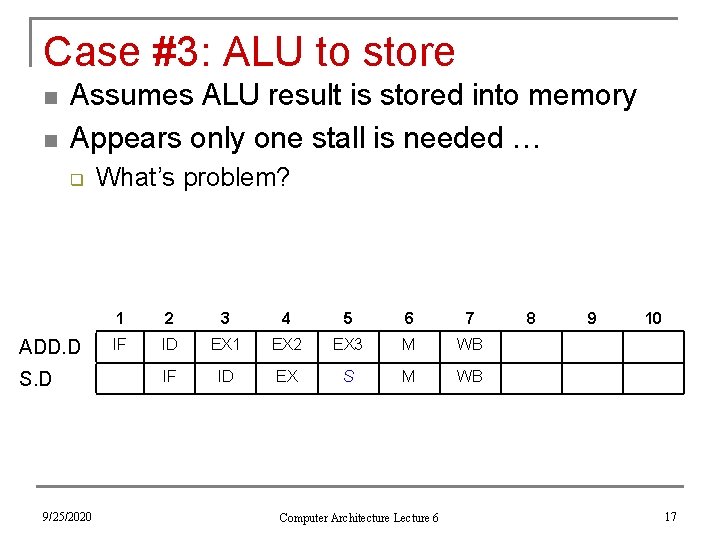

Case #3: ALU to store
- Assumes the ALU result is stored into memory
- Appears only one stall is needed …
  - What’s the problem?

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| ADD.D | IF | ID | EX1 | EX2 | EX3 | M | WB | | | |
| S.D | | IF | ID | EX | S | M | WB | | | |

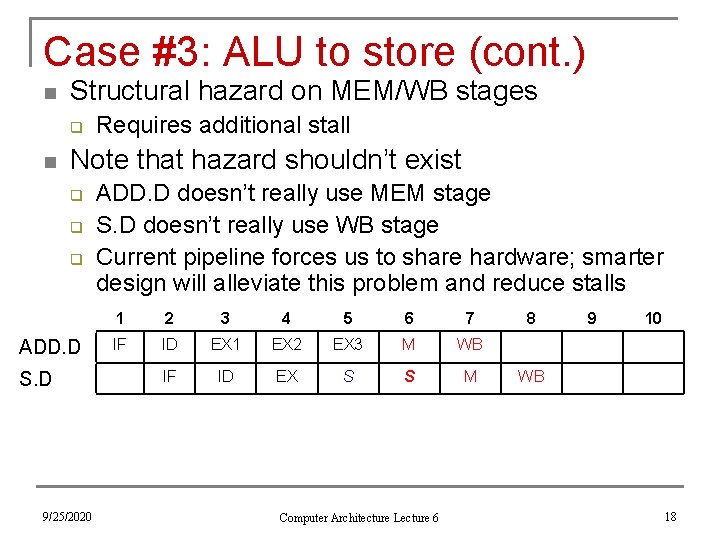

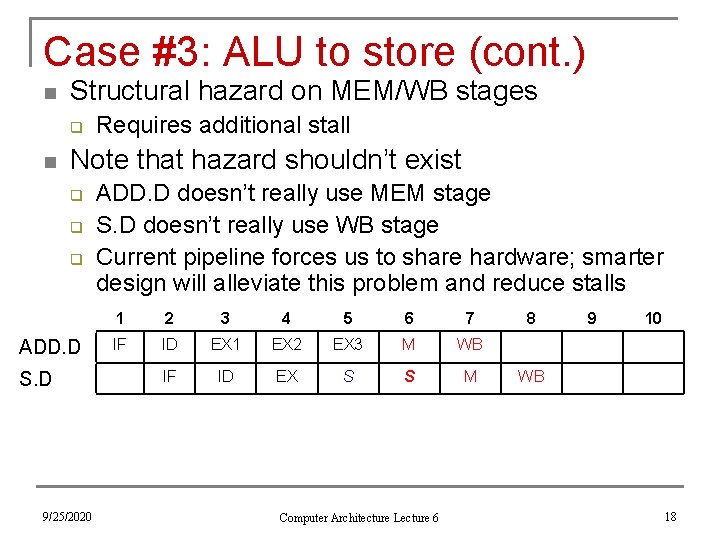

Case #3: ALU to store (cont.)
- Structural hazard on the MEM/WB stages
  - Requires an additional stall
- Note that this hazard shouldn’t exist
  - ADD.D doesn’t really use the MEM stage
  - S.D doesn’t really use the WB stage
  - The current pipeline forces us to share hardware; a smarter design will alleviate this problem and reduce stalls

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| ADD.D | IF | ID | EX1 | EX2 | EX3 | M | WB | | | |
| S.D | | IF | ID | EX | S | S | M | WB | | |

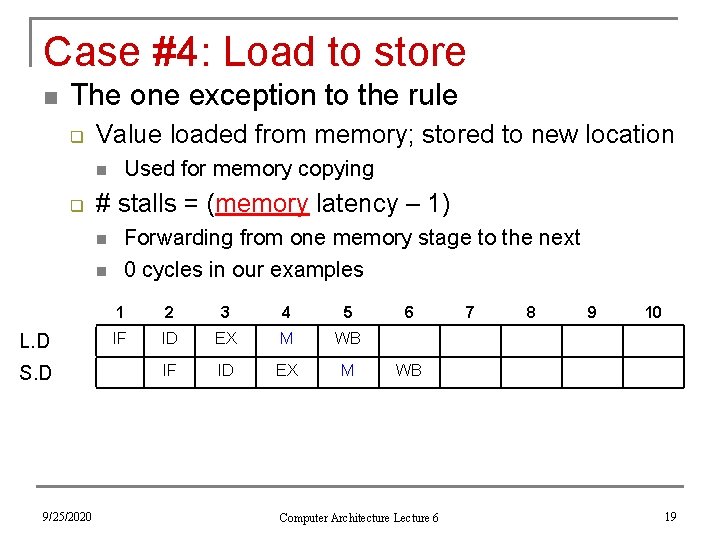

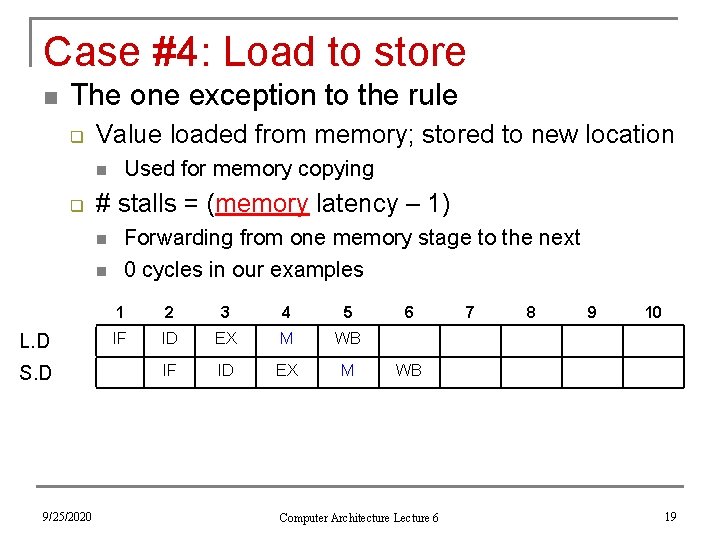

Case #4: Load to store
- The one exception to the rule
  - Value loaded from memory, then stored to a new location
    - Used for memory copying
  - # stalls = (memory latency - 1)
    - Forwarding from one memory stage to the next
    - 0 cycles in our examples

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| L.D | IF | ID | EX | M | WB | | | | | |
| S.D | | IF | ID | EX | M | WB | | | | |
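The four cases differ only in which producer stage makes the value available, which consumer stage needs it, and whether the two instructions collide on the single MEM unit and WB port. A minimal Python sketch that places a dependent instruction one cycle behind its producer under those three constraints; the stage lists and the `schedule` function are our own modeling assumptions (forwarding delivers a value at the end of `produce_stage`; stage names are unique within an instruction).

```python
from typing import Dict, List, Sequence, Tuple

FP_ALU = ["IF", "ID", "EX1", "EX2", "EX3", "M", "WB"]   # 3-cycle EX, like ADD.D in Cases #1-#3
MEM_OP = ["IF", "ID", "EX", "M", "WB"]                  # L.D / S.D

def schedule(producer: List[str], consumer: List[str], produce_stage: str,
             use_stage: str, shared: Sequence[str] = ("M", "WB")) -> Tuple[Dict[str, int], int]:
    """Place a dependent consumer one cycle behind its producer; return (stage -> cycle, stalls)."""
    prod = {stage: cycle for cycle, stage in enumerate(producer, start=1)}
    cycles: Dict[str, int] = {}
    stalls, prev = 0, 1                          # consumer is fetched in cycle 2
    for stage in consumer:
        earliest = prev + 1
        cycle = earliest
        if stage == use_stage:                   # RAW: value forwarded at end of produce_stage
            cycle = max(cycle, prod[produce_stage] + 1)
        if stage in shared and stage in prod:    # structural: one MEM unit, one WB port
            cycle = max(cycle, prod[stage] + 1)
        stalls += cycle - earliest
        cycles[stage] = cycle
        prev = cycle
    return cycles, stalls

cases = {
    "ALU to ALU":    schedule(FP_ALU, FP_ALU, "EX3", "EX1"),
    "Load to ALU":   schedule(MEM_OP, FP_ALU, "M", "EX1"),
    "ALU to store":  schedule(FP_ALU, MEM_OP, "EX3", "M"),
    "Load to store": schedule(MEM_OP, MEM_OP, "M", "M"),
}
for name, (cycles, n) in cases.items():
    print(f"{name}: {n} stall(s), consumer WB in cycle {cycles['WB']}")
```

This yields 2, 1, 2 and 0 stalls, with the consumer's WB in cycles 10, 9, 8 and 6, matching the four diagrams. Calling `schedule(FP_ALU, MEM_OP, "EX3", "M", shared=())` removes the structural constraint and gives the single stall that Case #3 first appears to need.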

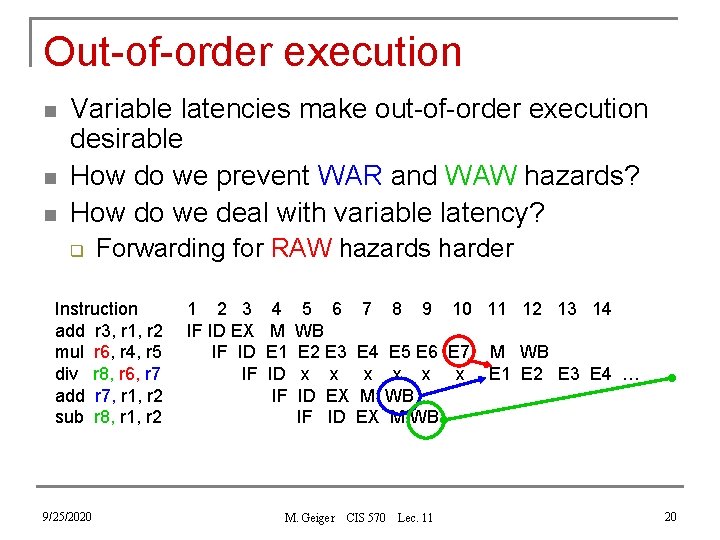

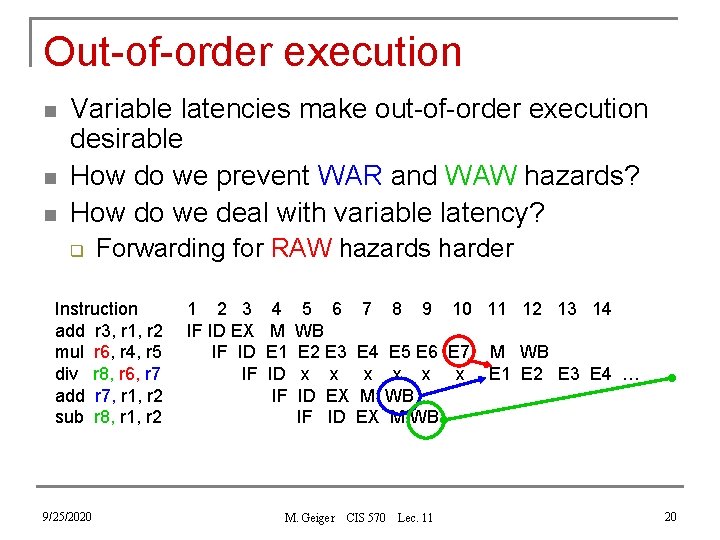

Out-of-order execution
- Variable latencies make out-of-order execution desirable
- How do we prevent WAR and WAW hazards?
- How do we deal with variable latency?
  - Forwarding for RAW hazards is harder

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| add r3, r1, r2 | IF | ID | EX | M | WB | | | | | | | | | |
| mul r6, r4, r5 | | IF | ID | E1 | E2 | E3 | E4 | E5 | E6 | E7 | M | WB | | |
| div r8, r6, r7 | | | IF | ID | x | x | x | x | x | x | E1 | E2 | E3 | E4 … |
| add r7, r1, r2 | | | | IF | ID | EX | M | WB | | | | | | |
| sub r8, r1, r2 | | | | | IF | ID | EX | M | WB | | | | | |

M. Geiger, CIS 570 Lec. 11

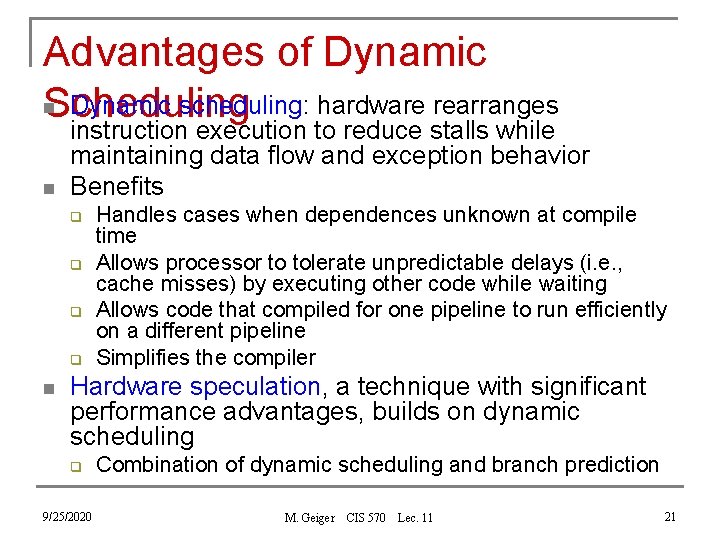

Advantages of Dynamic Scheduling
- Dynamic scheduling: hardware rearranges instruction execution to reduce stalls while maintaining data flow and exception behavior
- Benefits
  - Handles cases when dependences are unknown at compile time
  - Allows the processor to tolerate unpredictable delays (e.g., cache misses) by executing other code while waiting
  - Allows code that was compiled for one pipeline to run efficiently on a different pipeline
  - Simplifies the compiler
- Hardware speculation, a technique with significant performance advantages, builds on dynamic scheduling
  - Combination of dynamic scheduling and branch prediction

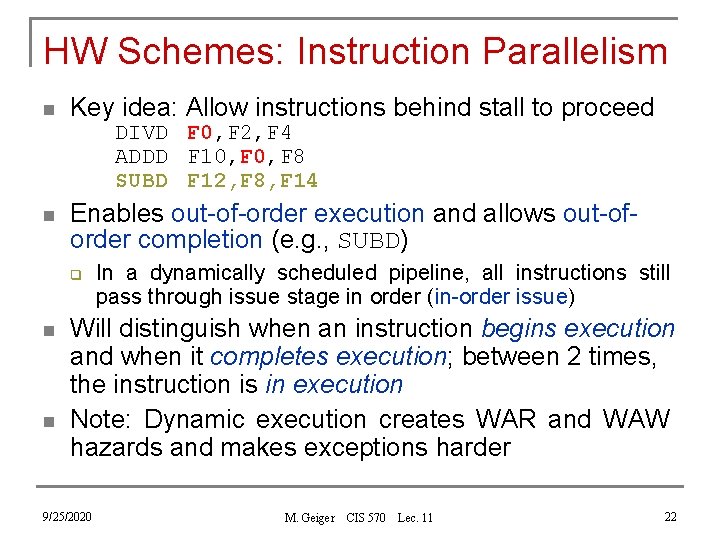

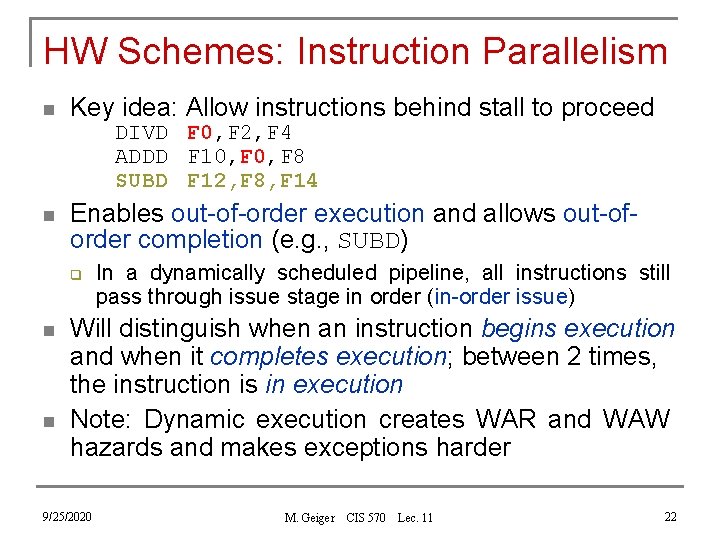

HW Schemes: Instruction Parallelism
- Key idea: allow instructions behind a stall to proceed
  - `DIVD F0, F2, F4`
  - `ADDD F10, F0, F8`
  - `SUBD F12, F8, F14`
- Enables out-of-order execution and allows out-of-order completion (e.g., SUBD)
  - In a dynamically scheduled pipeline, all instructions still pass through the issue stage in order (in-order issue)
- Will distinguish when an instruction begins execution and when it completes execution; between these two times, the instruction is in execution
- Note: dynamic execution creates WAR and WAW hazards and makes exceptions harder
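The key idea can be stated as a readiness test: an instruction may begin execution once no earlier, still-executing instruction produces one of its sources, regardless of whether older instructions are stalled. A minimal Python sketch for the three-instruction example (with ADDD reading DIVD's result F0); `can_start` is a hypothetical name, and it deliberately checks RAW only, which is exactly why WAR and WAW hazards become a new concern.

```python
# (name, destination, sources) for the three-instruction example
PROGRAM = [
    ("DIVD", "F0",  ("F2", "F4")),
    ("ADDD", "F10", ("F0", "F8")),
    ("SUBD", "F12", ("F8", "F14")),
]

def can_start(k, program, completed):
    """True if instruction k has no RAW dependence on an earlier instruction still executing."""
    _, _, srcs = program[k]
    return all(name in completed or dest not in srcs
               for name, dest, _ in program[:k])

completed = set()   # DIVD has issued but not finished its long divide
print([can_start(k, PROGRAM, completed) for k in range(3)])   # [True, False, True]
```

ADDD must wait for F0, but SUBD can start (and finish) before both of them: out-of-order execution and completion.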

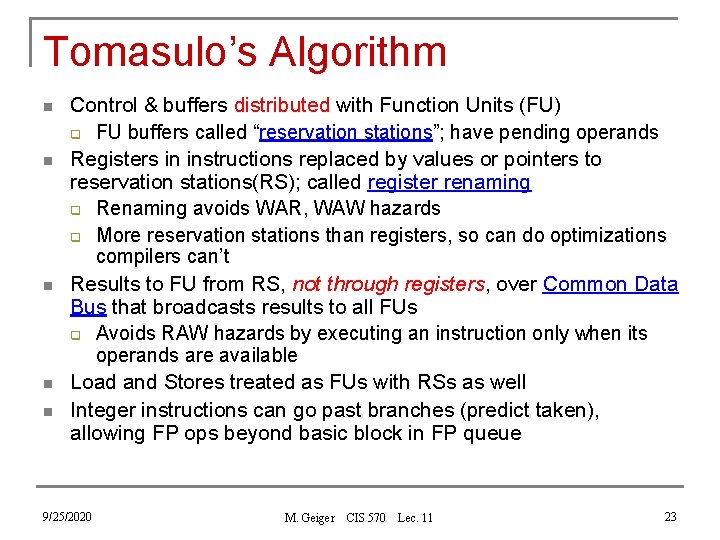

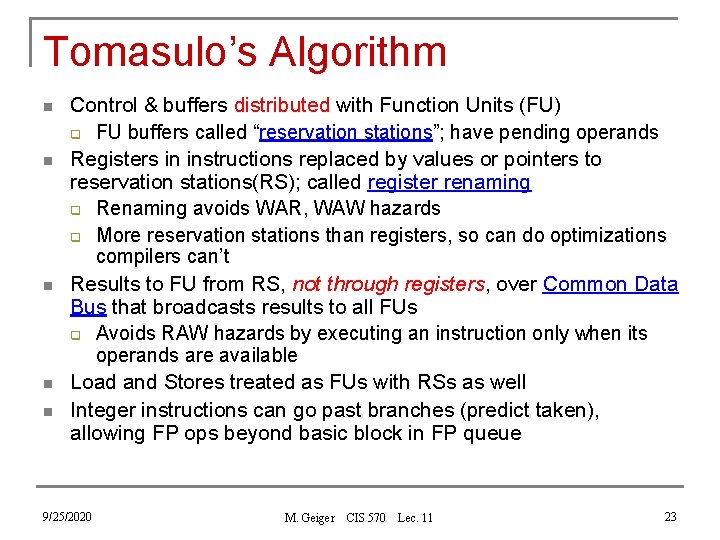

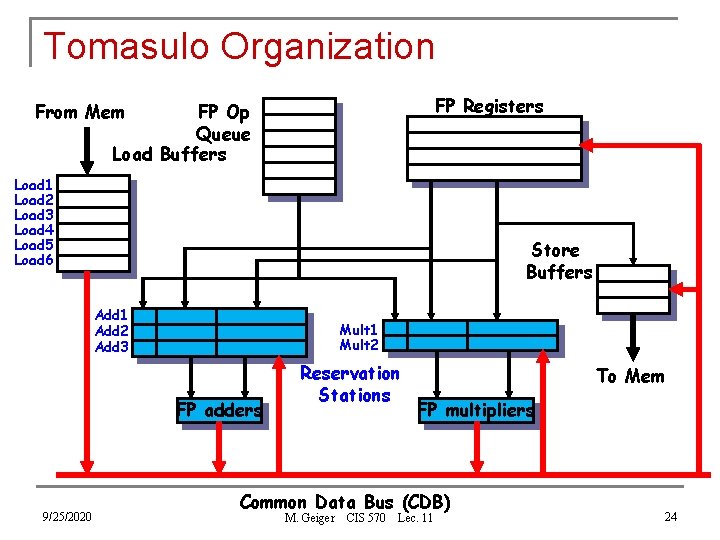

Tomasulo’s Algorithm
- Control & buffers distributed with Function Units (FU)
  - FU buffers called “reservation stations”; have pending operands
- Registers in instructions replaced by values or pointers to reservation stations (RS); called register renaming
  - Renaming avoids WAR, WAW hazards
  - More reservation stations than registers, so can do optimizations compilers can’t
- Results to FU from RS, not through registers, over Common Data Bus that broadcasts results to all FUs
  - Avoids RAW hazards by executing an instruction only when its operands are available
- Loads and stores treated as FUs with RSs as well
- Integer instructions can go past branches (predict taken), allowing FP ops beyond basic block in FP queue

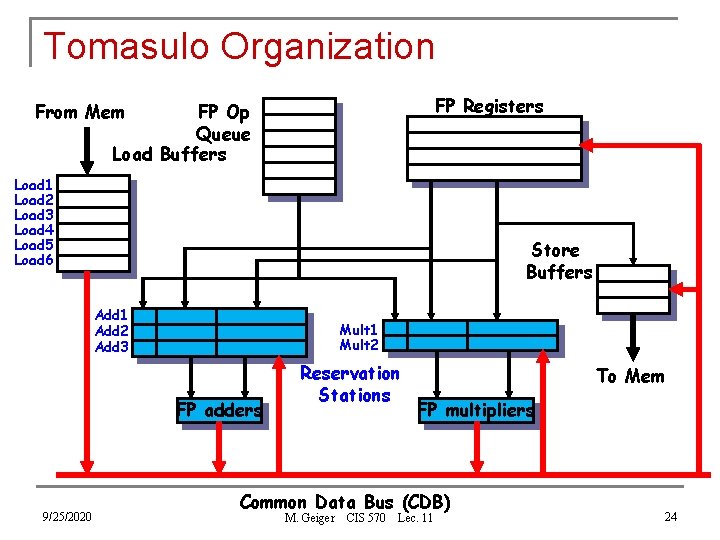

Tomasulo Organization (figure): FP Op Queue, FP Registers, Load Buffers (Load1-Load6, from memory), Store Buffers (to memory), reservation stations Add1-Add3 for the FP adders and Mult1-Mult2 for the FP multipliers, and the Common Data Bus (CDB)

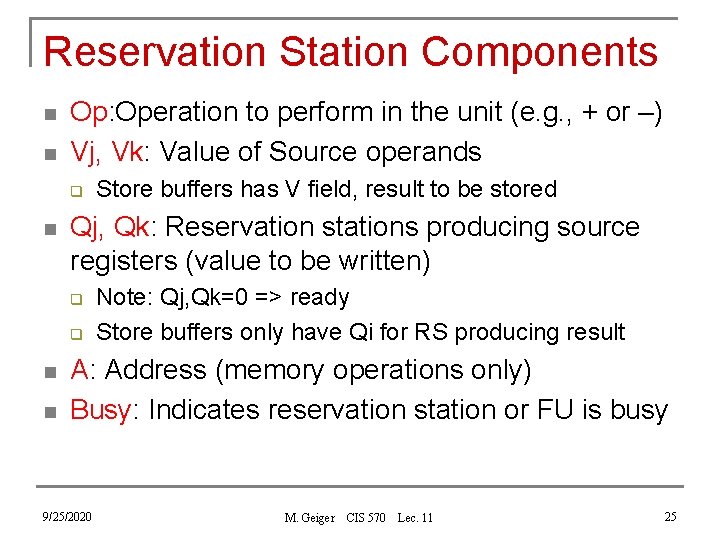

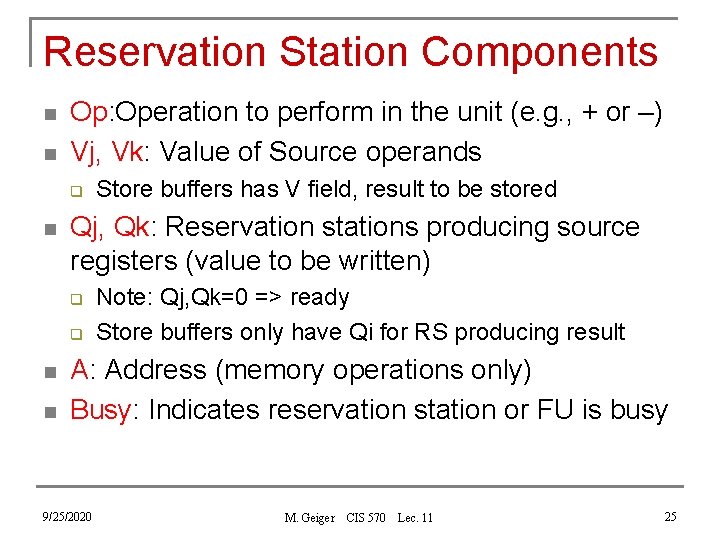

Reservation Station Components
- Op: operation to perform in the unit (e.g., + or -)
- Vj, Vk: values of the source operands
  - Store buffers have a V field, the result to be stored
- Qj, Qk: reservation stations producing the source registers (value to be written)
  - Note: Qj, Qk = 0 => ready
  - Store buffers only have Qi, for the RS producing the result
- A: address (memory operations only)
- Busy: indicates reservation station or FU is busy
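The fields map directly onto a small record. A minimal Python sketch, assuming `None` stands in for the slide's "Qj, Qk = 0" (no pending producer); the class name and the `ready` method are illustrative, and the store-buffer variant (V and Qi only) is not modeled.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ReservationStation:
    name: str                    # e.g. "Add1", "Mult1", "Load2"
    busy: bool = False           # Busy: station (and its FU) in use
    op: Optional[str] = None     # Op: operation to perform, e.g. "+" or "-"
    vj: Optional[float] = None   # Vj, Vk: source operand values
    vk: Optional[float] = None
    qj: Optional[str] = None     # Qj, Qk: producing stations; None plays the role of 0
    qk: Optional[str] = None
    a: Optional[int] = None      # A: address, memory operations only

    def ready(self) -> bool:
        """Can begin execution: busy, and no source still waits on another station."""
        return self.busy and self.qj is None and self.qk is None

rs = ReservationStation("Add1", busy=True, op="-", vj=2.0, qk="Mult1")
print(rs.ready())            # False: Vk still waits on Mult1
rs.vk, rs.qk = 1.5, None     # Mult1's result arrives
print(rs.ready())            # True
```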

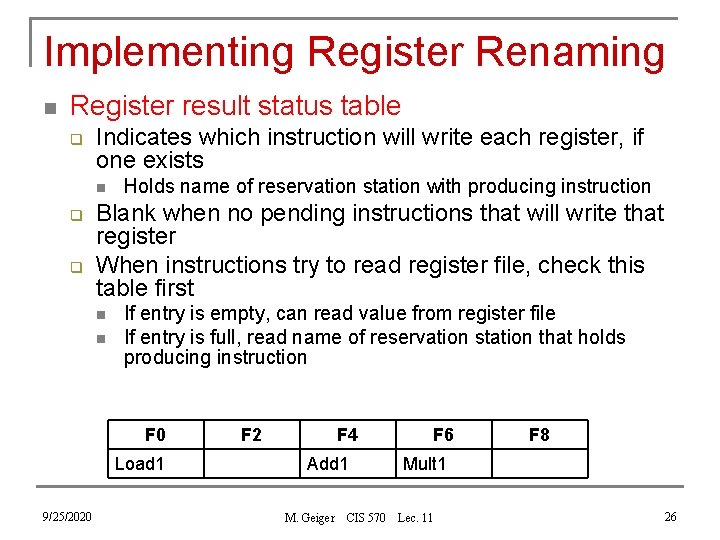

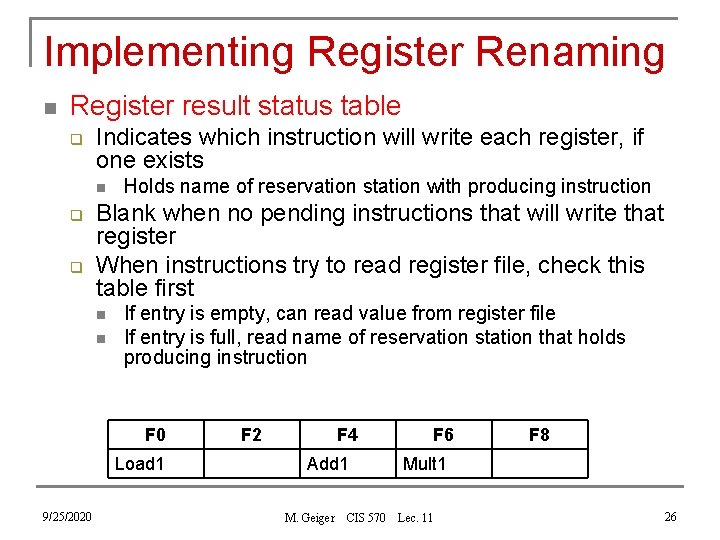

Implementing Register Renaming
- Register result status table
  - Indicates which instruction will write each register, if one exists
    - Holds name of reservation station with producing instruction
    - Blank when no pending instructions will write that register
  - When instructions try to read the register file, check this table first
    - If entry is empty, can read value from register file
    - If entry is full, read name of reservation station that holds producing instruction

| F0 | F2 | F4 | F6 | F8 |
|---|---|---|---|---|
| Load1 | | Add1 | | Mult1 |
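The table lookup at issue time returns either a value or a reservation-station name, never both. A minimal Python sketch using the example status from this slide (F0 → Load1, F4 → Add1, F8 → Mult1); `read_operand` is a hypothetical name and the register-file contents are made-up placeholder values.

```python
from typing import Dict, Optional, Tuple

def read_operand(reg: str, status: Dict[str, str],
                 regfile: Dict[str, float]) -> Tuple[Optional[float], Optional[str]]:
    """Issue-time operand read with renaming: returns (V, Q), exactly one of which is None."""
    tag = status.get(reg)
    if tag is None:
        return regfile[reg], None   # empty entry: value comes from the register file
    return None, tag                # full entry: wait for this RS to broadcast on the CDB

status = {"F0": "Load1", "F4": "Add1", "F8": "Mult1"}              # register result status
regfile = {"F0": 0.0, "F2": 2.0, "F4": 4.0, "F6": 6.0, "F8": 8.0}  # hypothetical contents
print(read_operand("F2", status, regfile))   # (2.0, None)
print(read_operand("F4", status, regfile))   # (None, 'Add1')
```

The first element would go into Vj/Vk and the second into Qj/Qk of the issuing instruction's reservation station.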

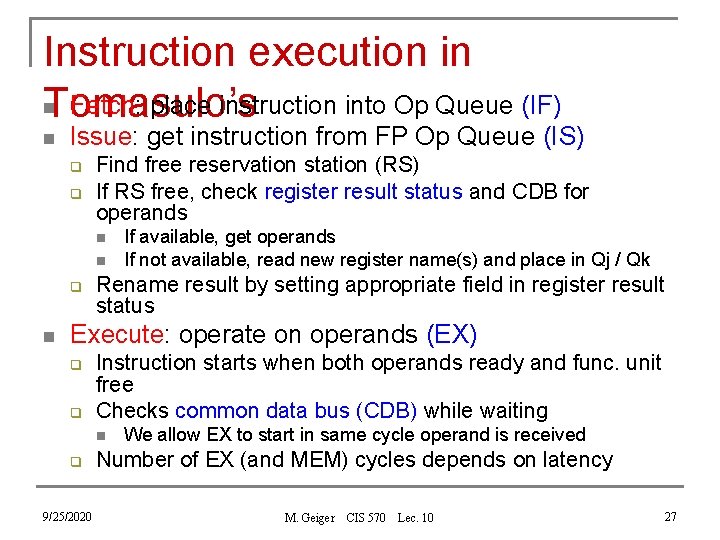

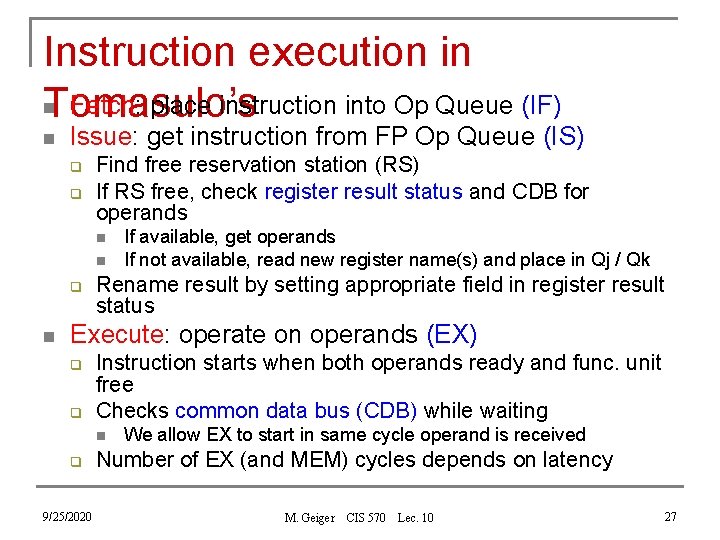

Instruction execution in Tomasulo’s
- Fetch (IF): place instruction into Op Queue
- Issue (IS): get instruction from FP Op Queue
  - Find a free reservation station (RS)
  - If an RS is free, check register result status and CDB for operands
    - If available, get operands
    - If not available, read the new register name(s) (RS names) and place them in Qj/Qk
  - Rename the result by setting the appropriate field in register result status
- Execute (EX): operate on operands
  - Instruction starts when both operands are ready and the functional unit is free
  - Checks common data bus (CDB) while waiting
    - We allow EX to start in the same cycle the operand is received
  - Number of EX (and MEM) cycles depends on latency

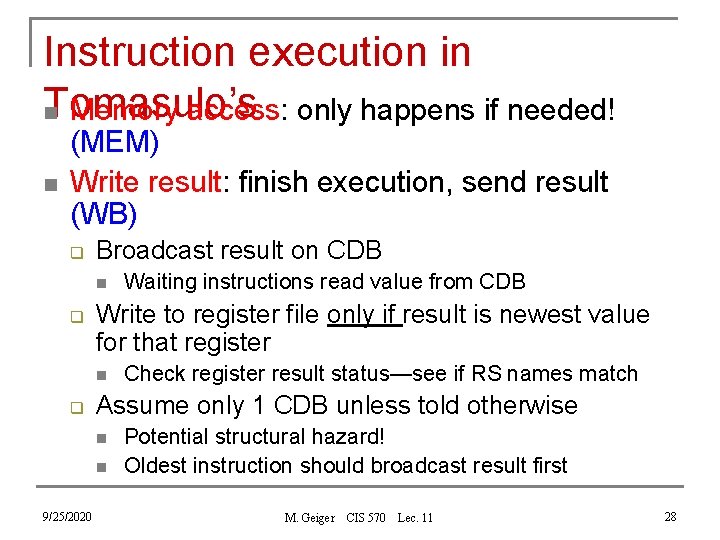

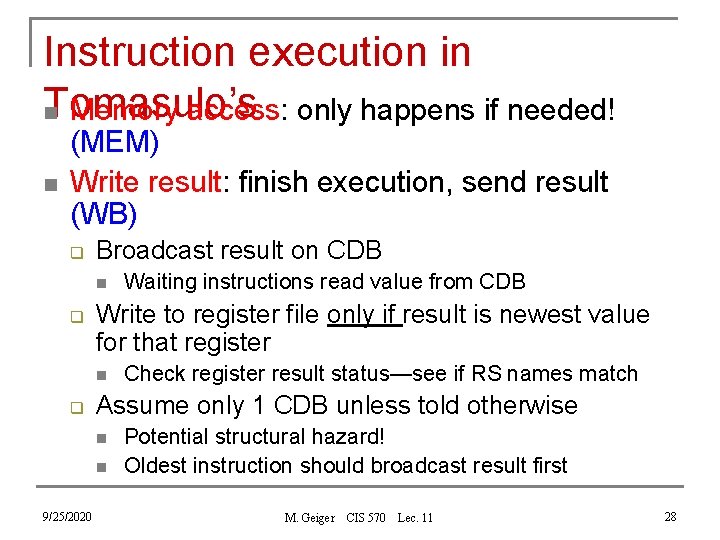

Instruction execution in Tomasulo’s (cont.)
- Memory access (MEM): only happens if needed!
- Write result (WB): finish execution, send result
  - Broadcast result on CDB
    - Waiting instructions read value from CDB
  - Write to register file only if result is the newest value for that register
    - Check register result status; see if RS names match
  - Assume only 1 CDB unless told otherwise
    - Potential structural hazard!
    - Oldest instruction should broadcast its result first
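The write-result step is a broadcast: every station waiting on the finishing station's name captures the value, and the register file is updated only where the register result status still names that station. A minimal Python sketch of one CDB transfer; `Station` and `write_result` are hypothetical names, and arbitration among several instructions finishing in the same cycle (oldest first) is not modeled.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Station:
    name: str
    qj: Optional[str] = None    # station producing the first operand (None = ready)
    qk: Optional[str] = None
    vj: Optional[float] = None
    vk: Optional[float] = None

def write_result(tag: str, value: float, stations: List[Station],
                 status: Dict[str, str], regfile: Dict[str, float]) -> None:
    """Broadcast (tag, value) on the single CDB."""
    for rs in stations:                          # every waiting station snoops the CDB
        if rs.qj == tag:
            rs.vj, rs.qj = value, None
        if rs.qk == tag:
            rs.vk, rs.qk = value, None
    for reg in [r for r, t in status.items() if t == tag]:
        regfile[reg] = value                     # RS names match: this is the newest value
        del status[reg]                          # no pending writer any more

stations = [Station("Add1", qj="Mult1", vk=1.0), Station("Mult2", qj="Mult1", qk="Load2")]
status = {"F0": "Add2", "F2": "Load2"}   # hypothetical: F0 renamed again after Mult1 issued
regfile = {"F0": 0.0, "F2": 0.0}
write_result("Mult1", 6.0, stations, status, regfile)   # both waiters get 6.0; F0 untouched
write_result("Load2", 3.0, stations, status, regfile)   # Mult2 now ready; F2 updated
print(regfile, status)
```

Mult1's result reaches both waiting stations but is not written to F0, because a newer instruction (Add2) will produce F0.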

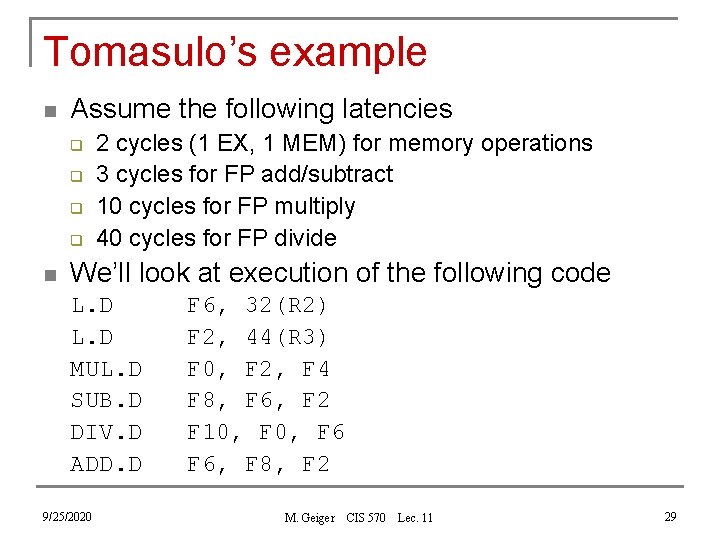

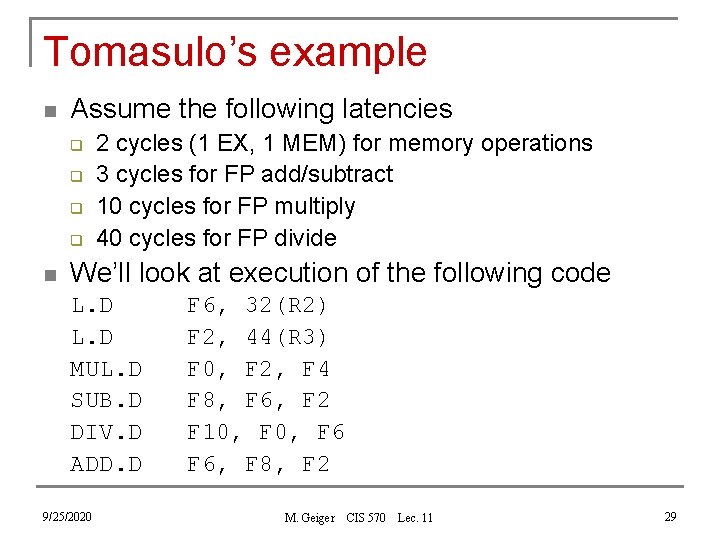

Tomasulo’s example
- Assume the following latencies
  - 2 cycles (1 EX, 1 MEM) for memory operations
  - 3 cycles for FP add/subtract
  - 10 cycles for FP multiply
  - 40 cycles for FP divide
- We’ll look at execution of the following code
  - `L.D F6, 32(R2)`
  - `L.D F2, 44(R3)`
  - `MUL.D F0, F2, F4`
  - `SUB.D F8, F6, F2`
  - `DIV.D F10, F0, F6`
  - `ADD.D F6, F8, F2`
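The renaming done during issue can be traced for this code without simulating timing. A minimal Python sketch, assuming enough reservation stations of each type and assuming no instruction writes its result before all six have issued (in a real run the first L.D may already have finished, in which case DIV.D reads F6's value rather than a name); `issue_all`, `UNIT` and the "regs" marker are our own illustrative choices, and the integer base registers R2/R3 are treated as always ready.

```python
from typing import Dict, List, Tuple

# (op, destination, FP sources)
CODE = [
    ("L.D",   "F6",  ()),            # L.D   F6, 32(R2)
    ("L.D",   "F2",  ()),            # L.D   F2, 44(R3)
    ("MUL.D", "F0",  ("F2", "F4")),  # MUL.D F0, F2, F4
    ("SUB.D", "F8",  ("F6", "F2")),  # SUB.D F8, F6, F2
    ("DIV.D", "F10", ("F0", "F6")),  # DIV.D F10, F0, F6
    ("ADD.D", "F6",  ("F8", "F2")),  # ADD.D F6, F8, F2
]
UNIT = {"L.D": "Load", "MUL.D": "Mult", "DIV.D": "Mult", "SUB.D": "Add", "ADD.D": "Add"}

def issue_all(code):
    """Issue every instruction in order; return ([(rs, op, sources)], register result status)."""
    status: Dict[str, str] = {}      # register result status: reg -> producing RS
    taken: Dict[str, int] = {}       # stations already allocated per unit type
    issued: List[Tuple[str, str, List[str]]] = []
    for op, dest, srcs in code:
        kind = UNIT[op]
        taken[kind] = taken.get(kind, 0) + 1
        rs = f"{kind}{taken[kind]}"  # next free station, e.g. "Mult2"
        # Each source is a pending producer's RS name or "regs" (read the register file)
        sources = [status.get(r, "regs") for r in srcs]
        status[dest] = rs            # rename: later readers of dest wait on this station
        issued.append((rs, op, sources))
    return issued, status

issued, status = issue_all(CODE)
for rs, op, sources in issued:
    print(rs, op, sources)
```

DIV.D (in Mult2) records that its F6 operand comes from Load1, so when ADD.D later renames F6 to Add2 there is no WAR hazard: the two F6 values live under different names.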

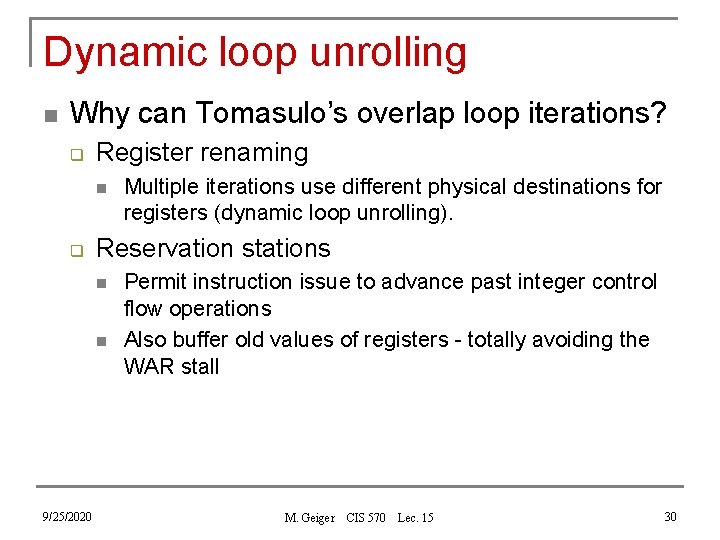

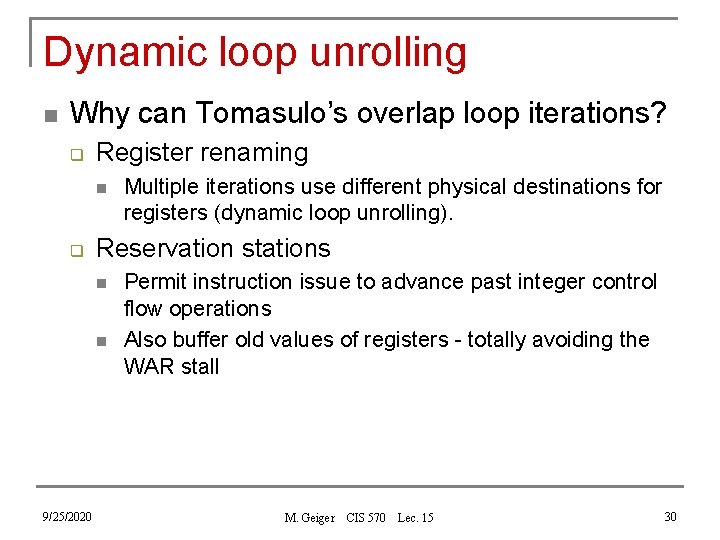

Dynamic loop unrolling
- Why can Tomasulo’s overlap loop iterations?
  - Register renaming
    - Multiple iterations use different physical destinations for registers (dynamic loop unrolling)
  - Reservation stations
    - Permit instruction issue to advance past integer control flow operations
    - Also buffer old values of registers, totally avoiding the WAR stall

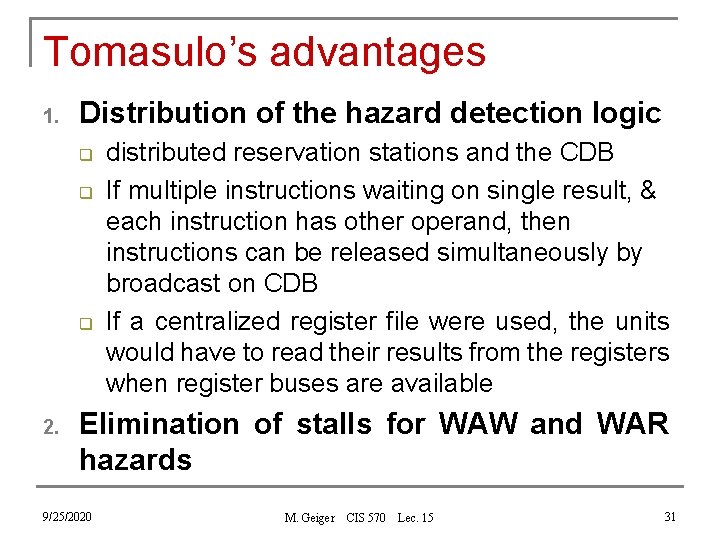

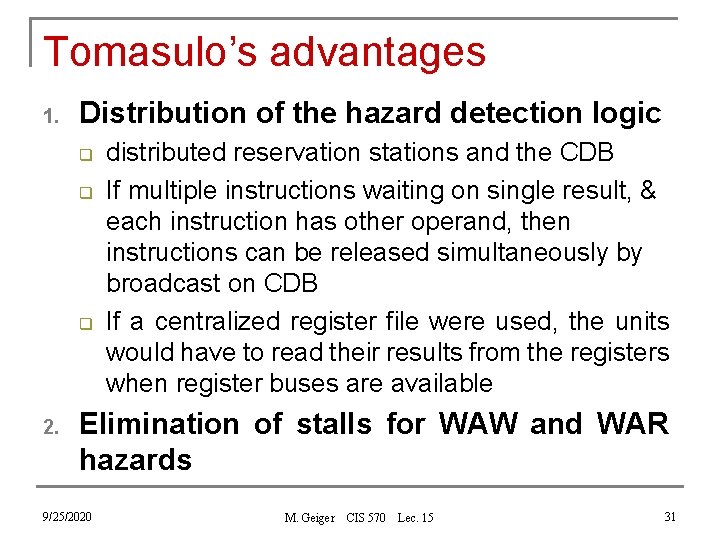

Tomasulo’s advantages
1. Distribution of the hazard detection logic
   - Distributed reservation stations and the CDB
   - If multiple instructions are waiting on a single result, and each instruction already has its other operand, then the instructions can be released simultaneously by the broadcast on the CDB
   - If a centralized register file were used, the units would have to read their results from the registers when register buses are available
2. Elimination of stalls for WAW and WAR hazards

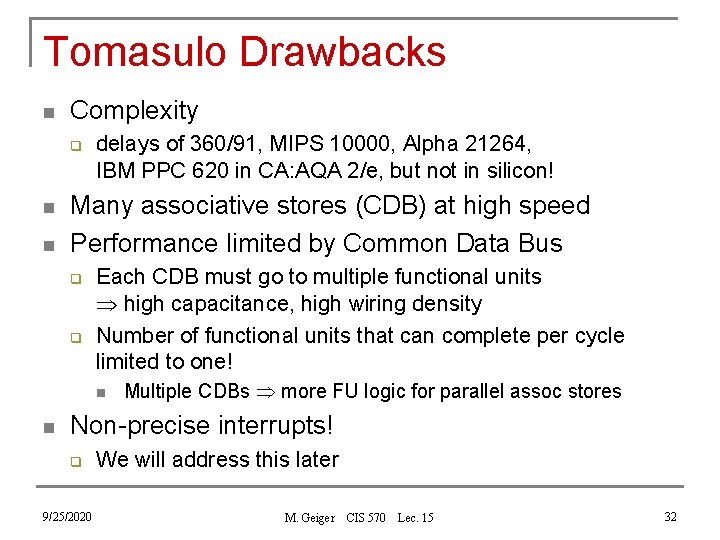

Tomasulo Drawbacks
- Complexity
  - delays of 360/91, MIPS 10000, Alpha 21264, IBM PPC 620 in CA:AQA 2/e, but not in silicon!
- Many associative stores (CDB) at high speed
- Performance limited by Common Data Bus
  - Each CDB must go to multiple functional units → high capacitance, high wiring density
  - Number of functional units that can complete per cycle limited to one!
    - Multiple CDBs → more FU logic for parallel associative stores
- Non-precise interrupts!
  - We will address this later

Final notes
- Next time
  - Speculation
  - Multiple issue
  - Multithreading
- Reminders
  - HW 5 to be posted; due 11/4