
- Slides: 23

Virtual Memory

Adapted from lecture notes of Dr. Patterson and Dr. Kubiatowicz of UC Berkeley

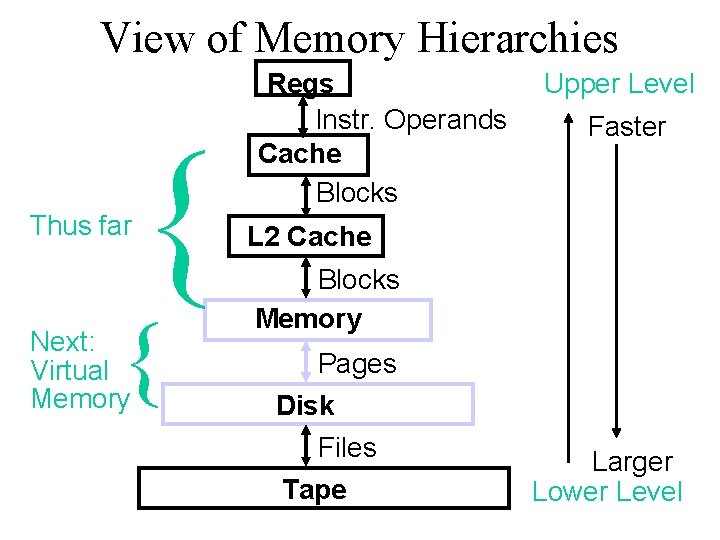

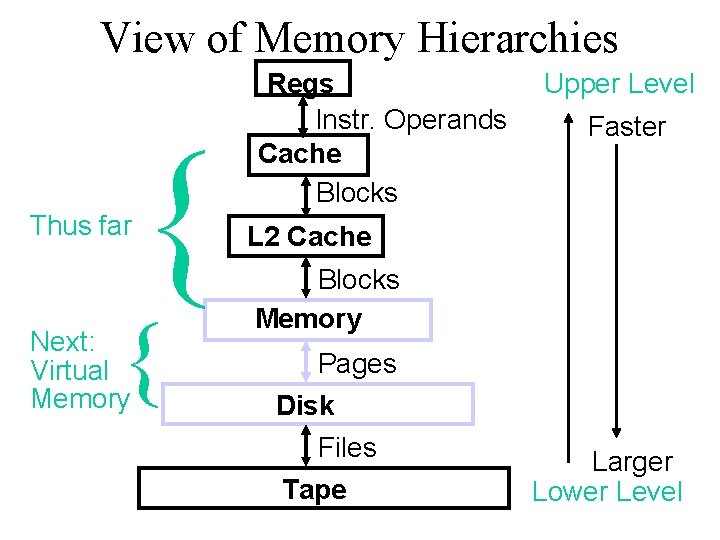

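View of Memory Hierarchies

- Levels, from the upper level (faster) to the lower level (larger): Registers, Cache, L2 Cache, Memory, Disk, Tape.
- Units moved between adjacent levels: instruction operands (registers and cache), blocks (cache, L2 cache, and memory), pages (memory and disk), files (disk and tape).
- Thus far: registers down to memory. Next: virtual memory (memory and disk).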

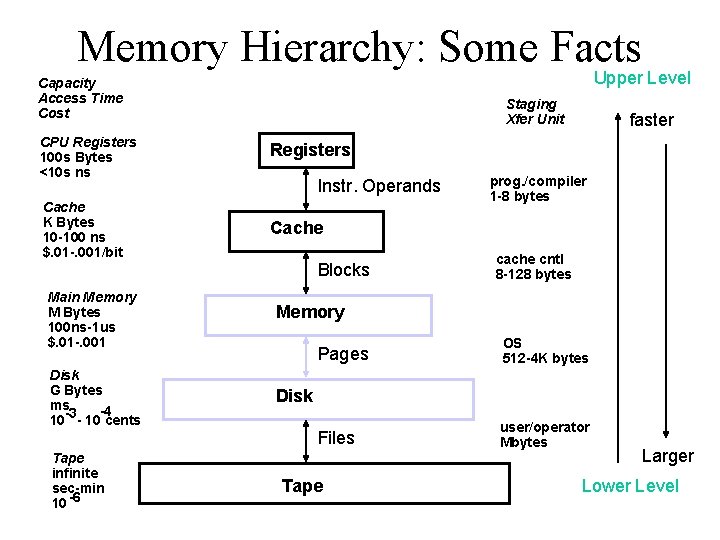

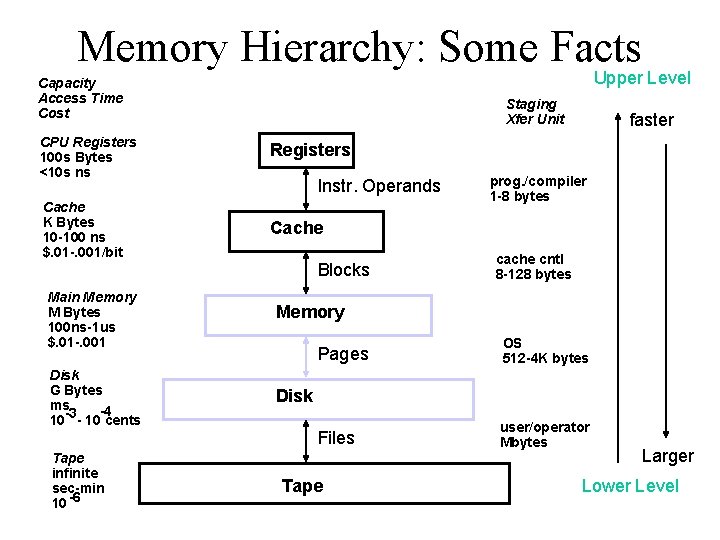

Memory Hierarchy: Some Facts

| Level | Capacity | Access time | Cost | Staging transfer unit (size, managed by) |
|---|---|---|---|---|
| CPU registers | 100s of bytes | < 10s ns | | Instruction operands (1-8 bytes, program/compiler) |
| Cache | KBytes | 10-100 ns | $.01-.001/bit | Blocks (8-128 bytes, cache controller) |
| Main memory | MBytes | 100 ns-1 µs | $.01-.001 | Pages (512-4K bytes, OS) |
| Disk | GBytes | ms | 10^-4 to 10^-3 cents | Files (MBytes, user/operator) |
| Tape | infinite | sec-min | 10^-6 | |

The upper level is faster; the lower level is larger.

Virtual Memory: Motivation

- If the Principle of Locality allows caches to offer (usually) the speed of cache memory with the size of DRAM memory, then why not apply it recursively at the next level to give the speed of DRAM memory with the size of disk memory?
- Treat memory as a "cache" for disk!

- Share memory between multiple processes but still provide protection: don't let one program read or write the memory of another.
- Address space: give each program the illusion that it has its own private memory.
  - Suppose code starts at address 0x40000000. Different processes have different code, both at the same address!
  - So each program has a different view of memory.
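To make the "different view of memory" point concrete, here is a minimal Python sketch, assuming 4 KB pages and two hypothetical per-process page tables (`page_tables`, `translate`, and the physical page numbers 5 and 12 are illustrative, not from the slides). The same virtual address 0x40000000 resolves to a different physical address in each process.

```python
PAGE_SIZE = 4096  # assumed page size: 4 KB

# Hypothetical per-process page tables: virtual page number -> physical page number.
# Both processes map the page holding address 0x40000000, but to different physical pages.
page_tables = {
    "process_A": {0x40000000 // PAGE_SIZE: 5},
    "process_B": {0x40000000 // PAGE_SIZE: 12},
}


def translate(process: str, vaddr: int) -> int:
    """Translate a virtual address using the page table of the given process."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    ppn = page_tables[process][vpn]  # KeyError here means the page is unmapped
    return ppn * PAGE_SIZE + offset


print(hex(translate("process_A", 0x40000000)))  # 0x5000
print(hex(translate("process_B", 0x40000000)))  # 0xc000
```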

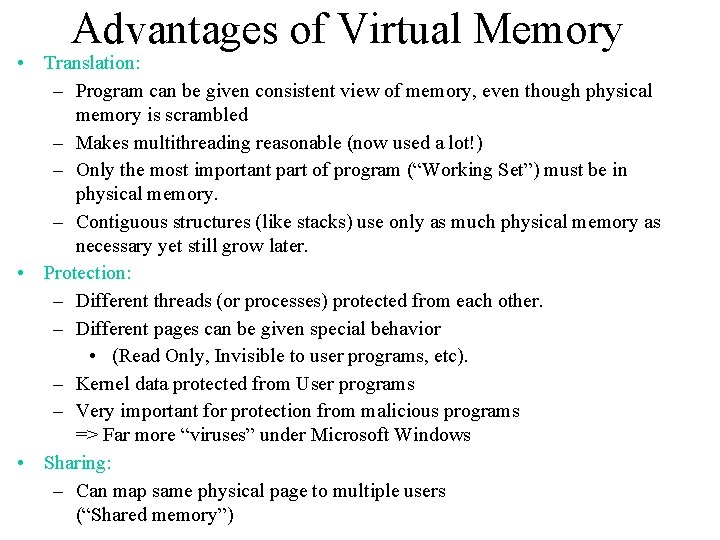

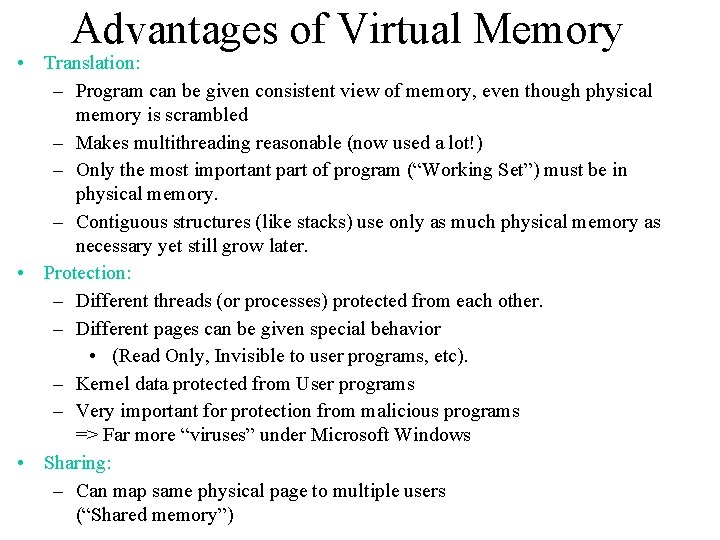

Advantages of Virtual Memory

- Translation:
  - A program can be given a consistent view of memory, even though physical memory is scrambled.
  - Makes multithreading reasonable (now used a lot!).
  - Only the most important part of the program (the "Working Set") must be in physical memory.
  - Contiguous structures (like stacks) use only as much physical memory as necessary, yet can still grow later.
- Protection:
  - Different threads (or processes) are protected from each other.
  - Different pages can be given special behavior (read only, invisible to user programs, etc.).
  - Kernel data is protected from user programs.
  - Very important for protection from malicious programs => far more "viruses" under Microsoft Windows.
- Sharing:
  - Can map the same physical page to multiple users ("shared memory").
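A minimal sketch of the protection and sharing points above, under assumed names: the `PTE` fields `writable` and `user` stand in for access rights such as "read only" and "invisible to user programs", and all page numbers are made up. One physical page is shared by two processes with different rights.

```python
from dataclasses import dataclass


@dataclass
class PTE:
    ppn: int        # physical page number
    writable: bool  # False = read-only page
    user: bool      # False = invisible to user programs (kernel only)


# Hypothetical tables: physical page 7 is shared by both processes,
# read-only for process 1 and read/write for process 2.
# Physical page 3 holds kernel data mapped into process 1.
proc1 = {0: PTE(ppn=7, writable=False, user=True), 1: PTE(ppn=3, writable=True, user=False)}
proc2 = {4: PTE(ppn=7, writable=True, user=True)}


def check_access(table: dict, vpn: int, write: bool, user_mode: bool) -> int:
    """Return the physical page number, or raise PermissionError on a protection violation."""
    pte = table[vpn]
    if user_mode and not pte.user:
        raise PermissionError("user program touched a kernel-only page")
    if write and not pte.writable:
        raise PermissionError("write to a read-only page")
    return pte.ppn


# Two different virtual pages in two processes reach the same physical page.
print(check_access(proc1, 0, write=False, user_mode=True))  # 7
print(check_access(proc2, 4, write=True, user_mode=True))   # 7
```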

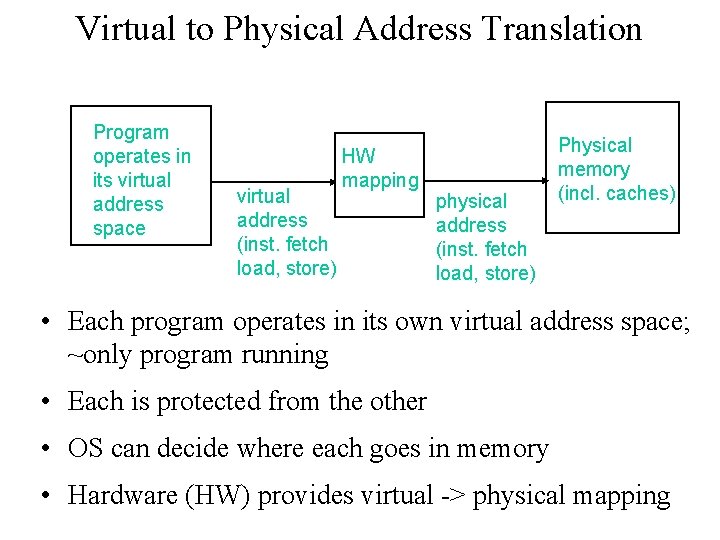

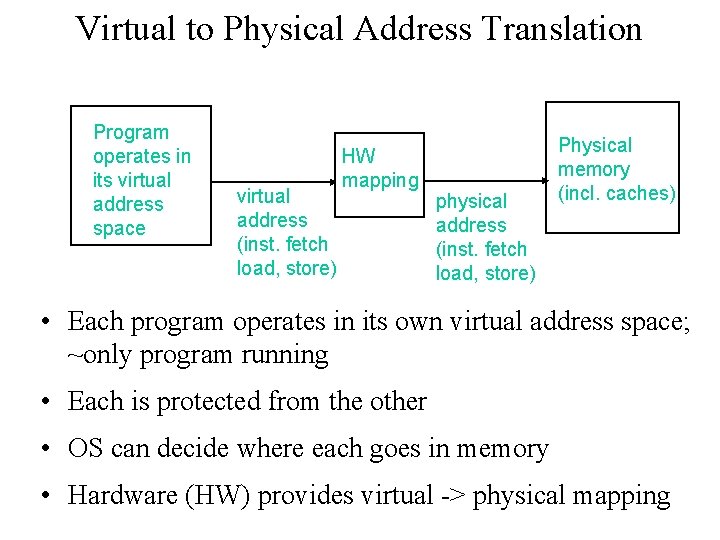

Virtual to Physical Address Translation

(Figure: a program operates in its virtual address space; each instruction fetch, load, or store issues a virtual address, which a hardware mapping converts into a physical address in physical memory, including caches.)

- Each program operates in its own virtual address space, as if it were the only program running.
- Each is protected from the others.
- The OS can decide where each goes in memory.
- Hardware (HW) provides the virtual -> physical mapping.

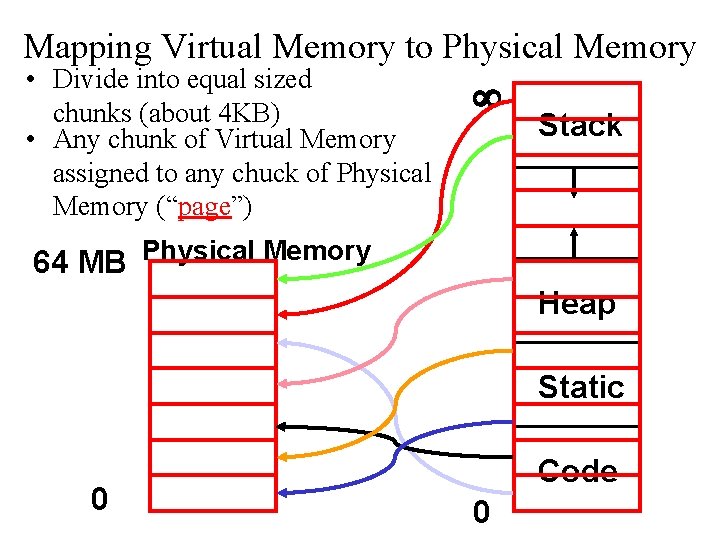

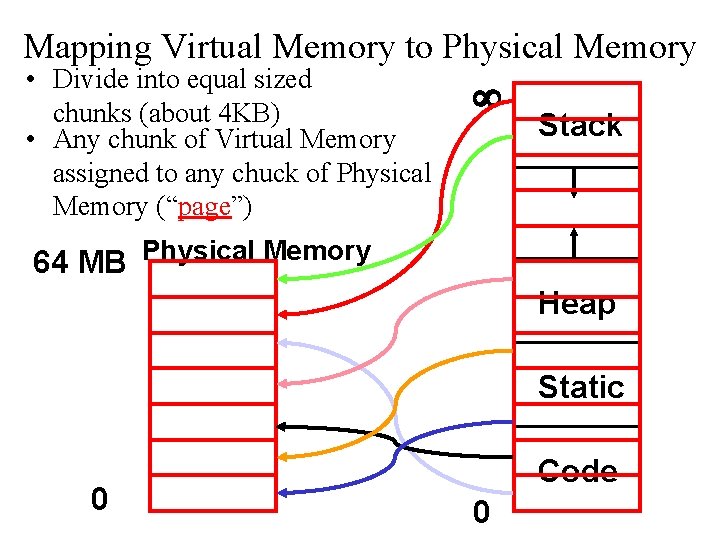

Mapping Virtual Memory to Physical Memory

- Divide memory into equal-sized chunks (about 4 KB).
- Any chunk of virtual memory can be assigned to any chunk of physical memory (a "page").

(Figure: a virtual address space from 0 to ∞ holding Code, Static, Heap, and Stack regions, mapped onto a 64 MB physical memory.)

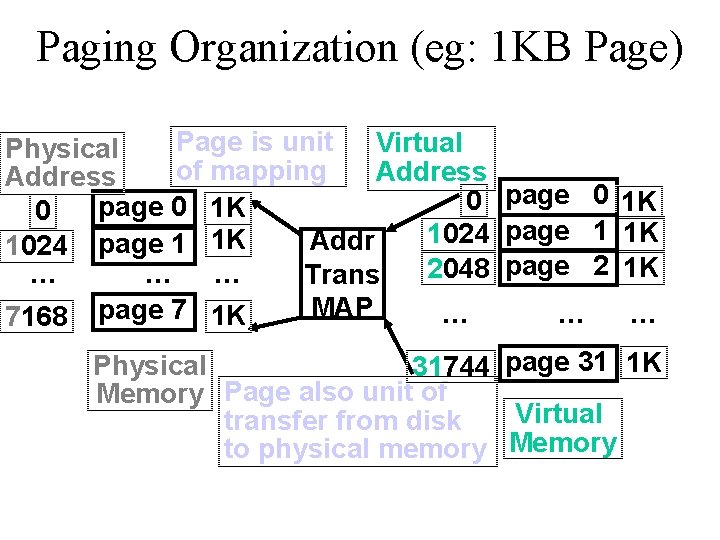

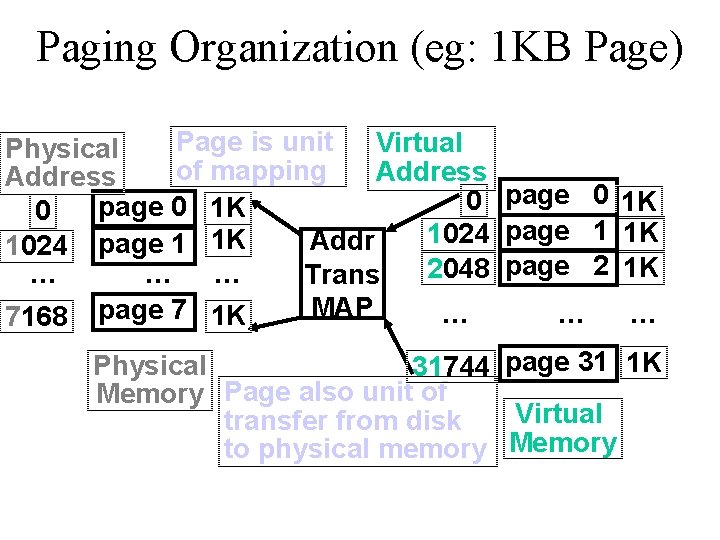

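Paging Organization (e.g., 1 KB pages)

- Virtual memory: 32 pages of 1 KB each; page 0 starts at address 0, page 1 at 1024, page 2 at 2048, ..., page 31 at 31744.
- Physical memory: 8 pages of 1 KB each; page 0 starts at address 0, page 1 at 1024, ..., page 7 at 7168.
- An address translation map sends each virtual page to a physical page.
- The page is the unit of mapping, and also the unit of transfer from disk to physical memory.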
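The 1 KB example can be sketched in Python: a virtual address splits into a page number (address // 1024) and an offset (address % 1024), and only the page number goes through the translation map. The contents of `trans_map` are hypothetical.

```python
PAGE_SIZE = 1024         # 1 KB pages, as in the example
NUM_VIRTUAL_PAGES = 32   # virtual pages 0..31 (addresses 0..32767)
NUM_PHYSICAL_PAGES = 8   # physical pages 0..7 (addresses 0..8191)

# Hypothetical address translation map: virtual page -> physical page.
trans_map = {0: 2, 1: 0, 2: 5, 31: 7}


def split(vaddr: int) -> tuple[int, int]:
    """Split a virtual address into (virtual page number, offset within the page)."""
    if not 0 <= vaddr < NUM_VIRTUAL_PAGES * PAGE_SIZE:
        raise ValueError(f"virtual address {vaddr} out of range")
    return divmod(vaddr, PAGE_SIZE)


def translate(vaddr: int) -> int:
    """Map a virtual address to a physical address; the offset is unchanged."""
    vpn, offset = split(vaddr)
    ppn = trans_map[vpn]  # KeyError here means the page is not in physical memory
    return ppn * PAGE_SIZE + offset


print(split(31744))            # (31, 0): start of virtual page 31
print(translate(31744 + 100))  # 7268: offset 100 within physical page 7 (starts at 7168)
```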

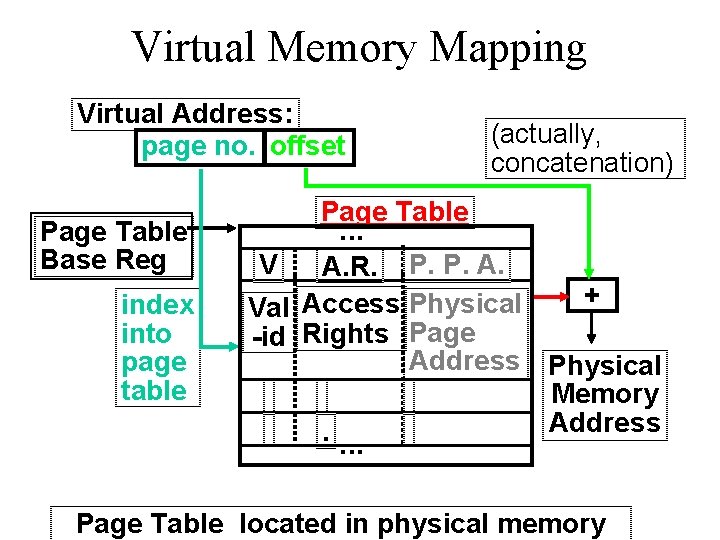

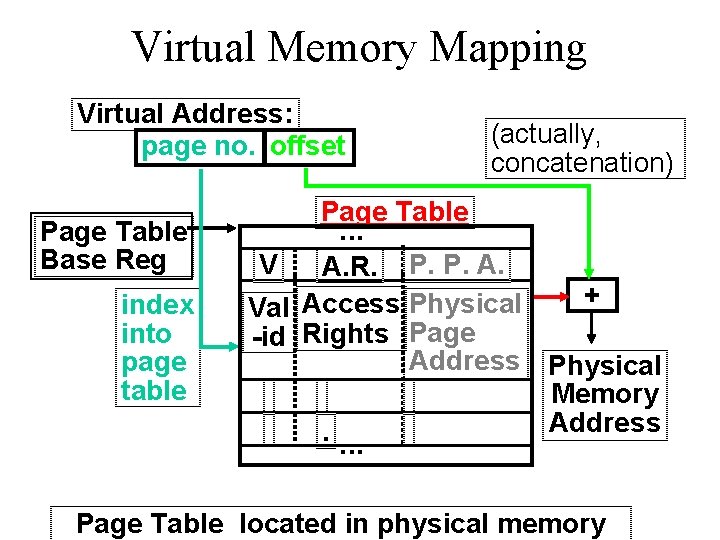

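Virtual Memory Mapping

- The virtual address is split into two fields: page number and offset.
- The page number is an index into the page table, which starts at the address held in the Page Table Base Register.
- Each page table entry holds a Valid bit (V), Access Rights (A.R.), and a Physical Page Address (P.P.A.).
- The physical page address is combined with the offset to form the physical memory address (drawn as "+", but actually a concatenation).
- The page table is located in physical memory.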
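A sketch of the single-level lookup described above, assuming 4 KB pages, 4-byte PTEs, and a PTE encoding of `(valid, rights, physical_page)` chosen only for illustration; `set_pte`, `page_table_base_reg = 0x8000`, and `PageFault` are hypothetical names. The read of the PTE from `physical_memory` is the extra memory access that every translation costs.

```python
PAGE_BITS = 12              # assumed 4 KB pages, so a 12-bit offset
PAGE_SIZE = 1 << PAGE_BITS
PTE_SIZE = 4                # assumed bytes per page table entry

# Physical memory modeled as a dict: address -> stored page table entry.
# A PTE is a tuple (valid, access_rights, physical_page_address); this encoding is hypothetical.
physical_memory = {}
page_table_base_reg = 0x8000  # hypothetical address of this process's page table


class PageFault(Exception):
    pass


def set_pte(vpn: int, valid: int, rights: str, ppn: int) -> None:
    """OS helper (hypothetical): write the PTE for virtual page vpn into the page table."""
    physical_memory[page_table_base_reg + vpn * PTE_SIZE] = (valid, rights, ppn)


def translate(vaddr: int, access: str = "r") -> int:
    vpn = vaddr >> PAGE_BITS            # page number field
    offset = vaddr & (PAGE_SIZE - 1)    # offset field
    # The extra memory access: fetch the PTE from the page table in physical memory.
    valid, rights, ppn = physical_memory.get(page_table_base_reg + vpn * PTE_SIZE, (0, "", 0))
    if not valid:
        raise PageFault(f"page fault at {vaddr:#x}")
    if access not in rights:
        raise PermissionError(f"{access!r} access not allowed at {vaddr:#x}")
    # Physical address = physical page address concatenated with the offset.
    return (ppn << PAGE_BITS) | offset


set_pte(3, valid=1, rights="r", ppn=0x25)
print(hex(translate(0x3ABC)))  # 0x25abc
```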

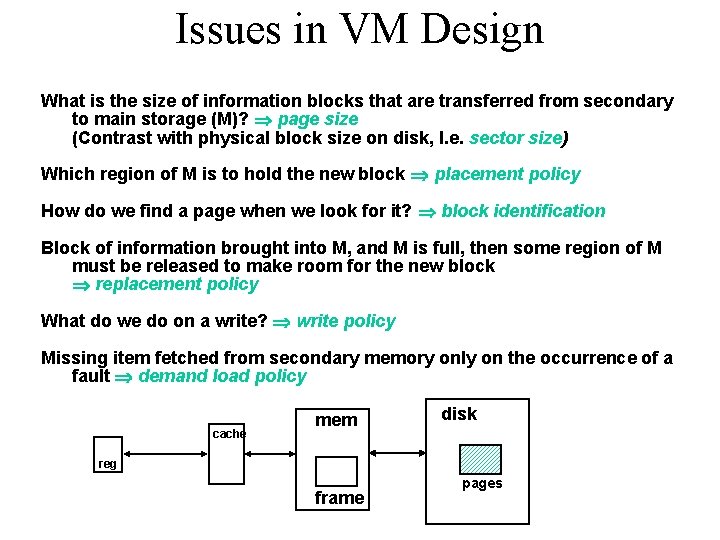

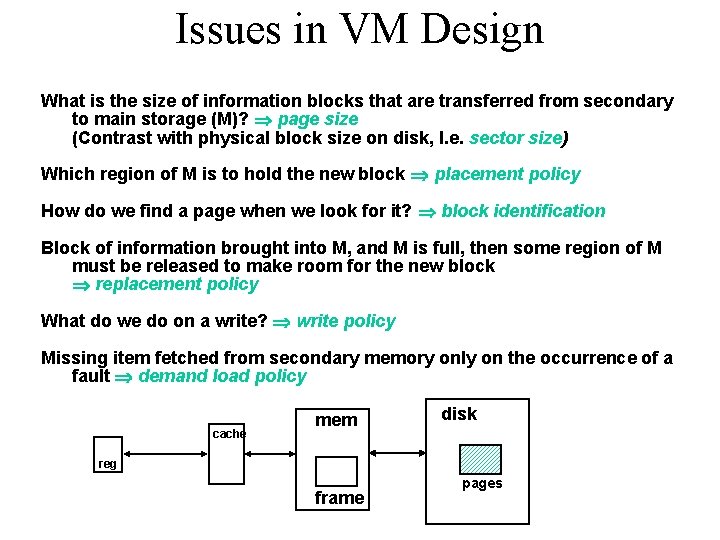

Issues in VM Design

- What is the size of the information blocks that are transferred from secondary to main storage (M)? → page size (contrast with the physical block size on disk, i.e., the sector size)
- Which region of M is to hold the new block? → placement policy
- How do we find a page when we look for it? → block identification
- When a block of information is brought into M and M is full, some region of M must be released to make room for the new block. → replacement policy
- What do we do on a write? → write policy
- A missing item is fetched from secondary memory only on the occurrence of a fault. → demand load policy

(Figure: reg, cache, mem, and disk levels, with memory frames and disk pages.)

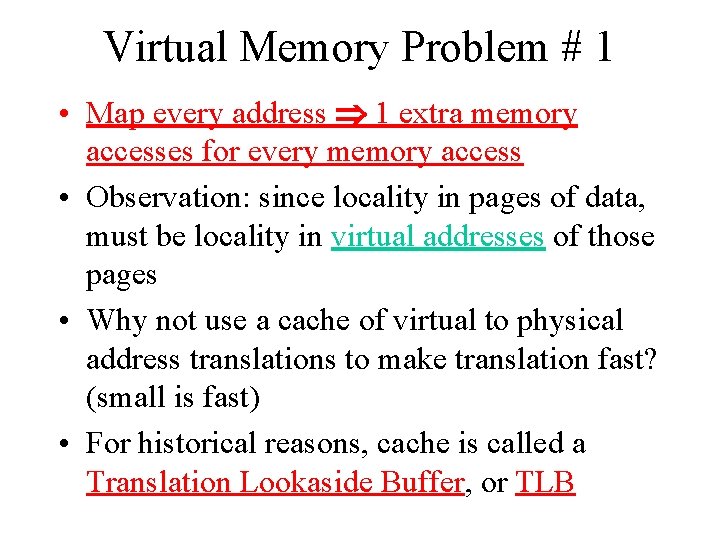

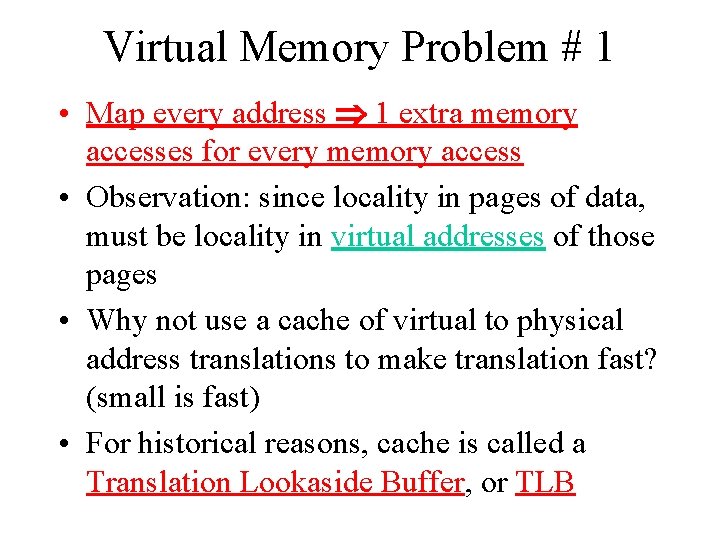

Virtual Memory Problem #1

- Mapping every address costs 1 extra memory access for every memory access.
- Observation: since there is locality in pages of data, there must be locality in the virtual addresses of those pages.
- Why not use a cache of virtual-to-physical address translations to make translation fast? (Small is fast.)
- For historical reasons, this cache is called a Translation Lookaside Buffer, or TLB.

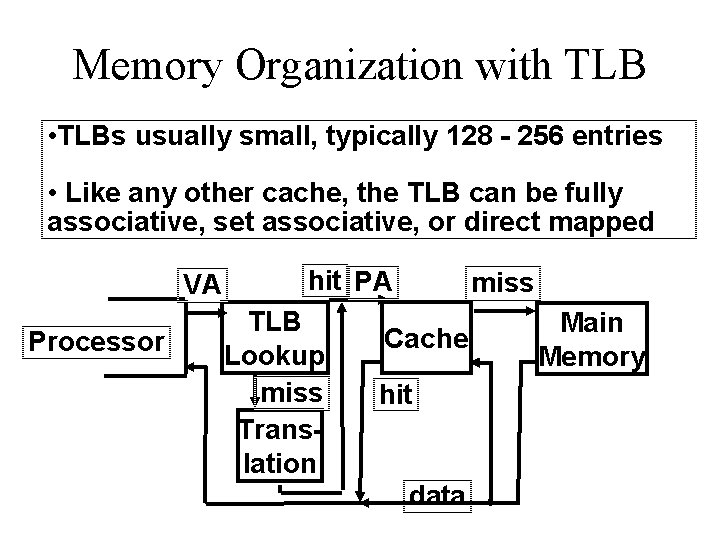

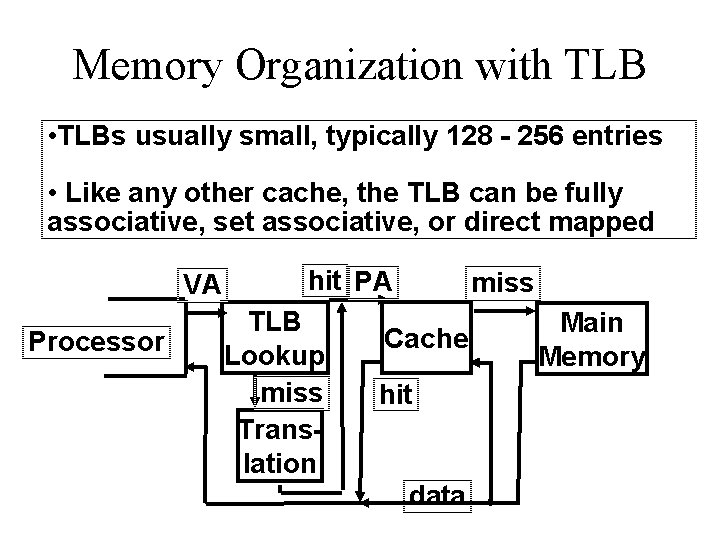

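Memory Organization with TLB

- TLBs are usually small, typically 128-256 entries.
- Like any other cache, the TLB can be fully associative, set associative, or direct mapped.

(Figure: the processor sends a virtual address (VA) to the TLB lookup; on a TLB hit, the physical address (PA) goes to the cache, and on a TLB miss the translation is done first. A cache hit returns data; a cache miss goes to main memory.)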
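A Python sketch of a TLB in front of the page table. The slide allows fully associative, set associative, or direct-mapped organizations; this sketch assumes fully associative with LRU replacement (via `collections.OrderedDict`), 4 KB pages, and a hypothetical dict `page_table`. The demo uses 4 entries instead of the 128-256 typical of real TLBs so the numbers stay small.

```python
from collections import OrderedDict

PAGE_BITS = 12  # assumed 4 KB pages


class TLB:
    """Fully associative TLB with LRU replacement, caching virtual page -> physical page."""

    def __init__(self, entries: int = 128):
        self.entries = entries
        self.map = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr: int, page_table: dict) -> int:
        vpn = vaddr >> PAGE_BITS
        offset = vaddr & ((1 << PAGE_BITS) - 1)
        if vpn in self.map:
            self.hits += 1
            self.map.move_to_end(vpn)          # now the most recently used entry
        else:
            self.misses += 1
            ppn = page_table[vpn]              # slow path: extra memory access to the page table
            if len(self.map) >= self.entries:
                self.map.popitem(last=False)   # evict the least recently used entry
            self.map[vpn] = ppn
        return (self.map[vpn] << PAGE_BITS) | offset


# Usage: sweep 4 pages word by word; only the first touch of each page misses.
page_table = {vpn: vpn + 100 for vpn in range(16)}  # hypothetical mappings
tlb = TLB(entries=4)
for addr in range(0, 4 * 4096, 4):
    tlb.translate(addr, page_table)
print(tlb.hits, tlb.misses)  # 4092 4
```

Sweeping 4096 words touches only 4 pages, so locality turns all but 4 translations into TLB hits.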

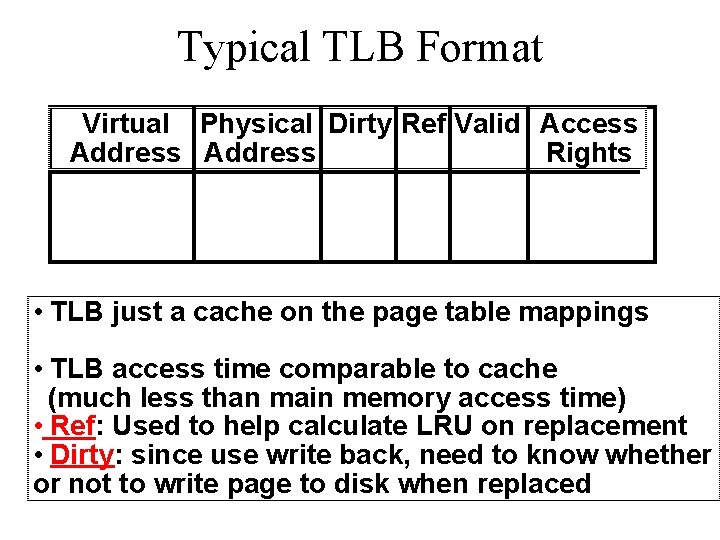

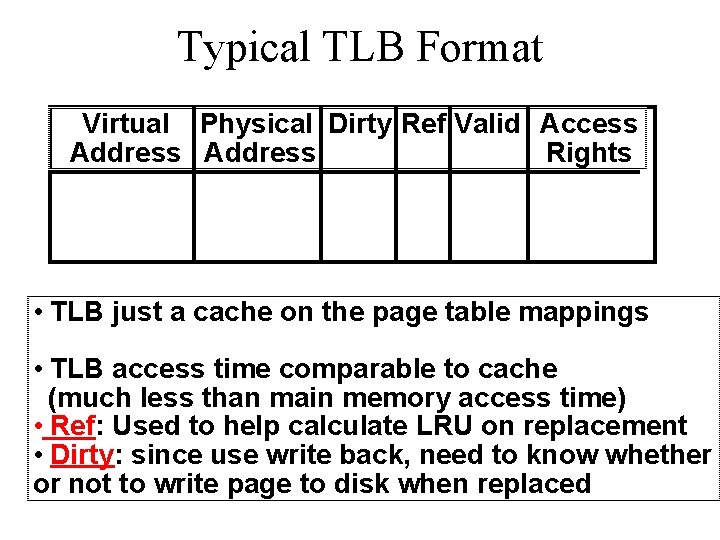

Typical TLB Format

Each entry holds: Virtual Address, Physical Address, Dirty, Ref, Valid, Access Rights.

- The TLB is just a cache on the page table mappings.
- TLB access time is comparable to cache access time (much less than main memory access time).
- Ref: used to help calculate LRU on replacement.
- Dirty: since VM uses write back, we need to know whether or not to write the page to disk when it is replaced.
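The entry format above, sketched as a Python dataclass. How the Ref bit feeds replacement is not specified on the slide; this sketch assumes a simplified second-chance (clock-style) sweep, a common LRU approximation, and the `access_rights` string encoding is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TLBEntry:
    virtual_page: int
    physical_page: int
    dirty: bool = False        # page written since loaded: write it to disk on replacement
    ref: bool = False          # referenced recently: input to the LRU approximation
    valid: bool = True
    access_rights: str = "rw"  # hypothetical encoding: "r" = read-only, "rw" = read/write


def touch(entry: TLBEntry, write: bool) -> None:
    """Record one access: check rights, set Ref, and set Dirty on a write."""
    if write and "w" not in entry.access_rights:
        raise PermissionError("write to a read-only page")
    entry.ref = True
    if write:
        entry.dirty = True


def choose_victim(entries: list) -> TLBEntry:
    """Simplified second-chance sweep: prefer an invalid entry, else clear Ref bits
    until an entry with Ref == False is found."""
    for e in entries:
        if not e.valid:
            return e
    while True:
        for e in entries:
            if not e.ref:
                return e
            e.ref = False  # give this entry a second chance


entries = [TLBEntry(1, 10), TLBEntry(2, 20), TLBEntry(3, 30, access_rights="r")]
touch(entries[0], write=True)
touch(entries[2], write=False)
victim = choose_victim(entries)
print(victim.virtual_page, victim.dirty)  # 2 False: clean, so no disk write needed
```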

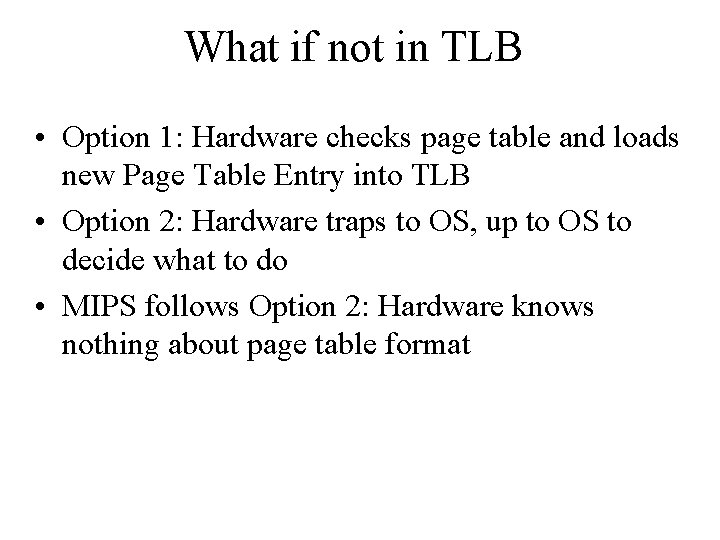

What if not in TLB?

- Option 1: Hardware checks the page table and loads the new Page Table Entry into the TLB.
- Option 2: Hardware traps to the OS; it is up to the OS to decide what to do.
- MIPS follows Option 2: the hardware knows nothing about the page table format.

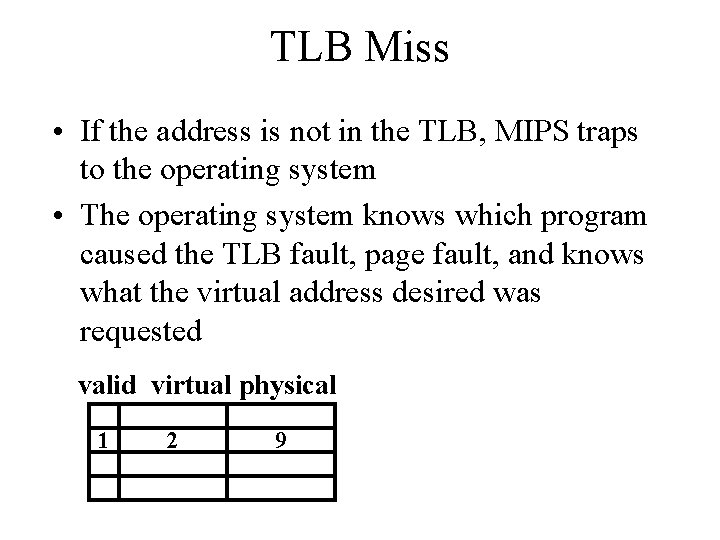

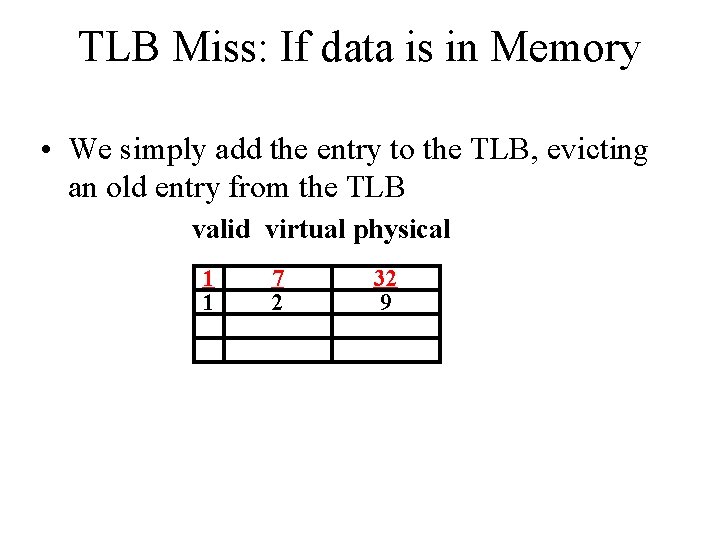

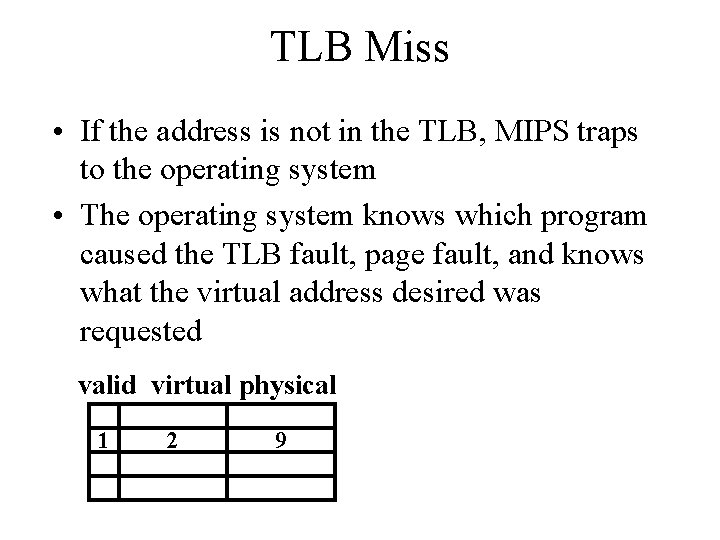

TLB Miss

- If the address is not in the TLB, MIPS traps to the operating system.
- The operating system knows which program caused the TLB fault or page fault, and knows which virtual address was requested.

| valid | virtual | physical |
|---|---|---|
| 1 | 2 | 9 |

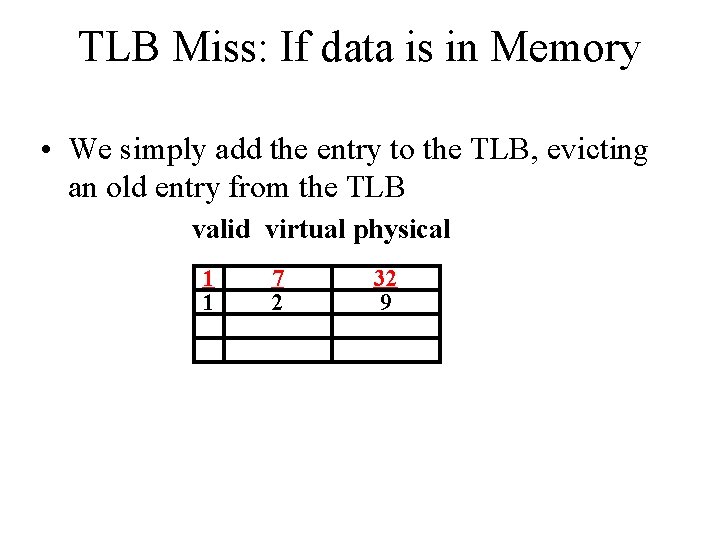

TLB Miss: If Data Is in Memory

- We simply add the entry to the TLB, evicting an old entry from the TLB.

| valid | virtual | physical |
|---|---|---|
| 1 | 7 | 32 |
| 1 | 2 | 9 |
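A sketch of the MIPS-style division of labor (Option 2): the "hardware" `lookup` only compares TLB entries and raises `TLBMiss`, while the "OS" handler in `access` reads the page table and refills the TLB. All class and function names are hypothetical, and round-robin eviction is an assumption; the usage reproduces the slide's values (an entry mapping virtual page 2 to physical page 9, then a refill adding 7 to 32).

```python
class TLBMiss(Exception):
    """Raised by the 'hardware' when no valid TLB entry matches (trap to the OS)."""


class PageFault(Exception):
    """Raised by the OS handler when the page is not in physical memory."""


class SoftwareTLB:
    def __init__(self, slots: int):
        # Each slot is [valid, virtual page, physical page], like the slide's table.
        self.slots = [[0, 0, 0] for _ in range(slots)]
        self.next_victim = 0  # round-robin replacement pointer (an assumption)

    def lookup(self, vpn: int) -> int:
        """Hardware side: matches entries only; knows nothing about the page table format."""
        for valid, virtual, physical in self.slots:
            if valid and virtual == vpn:
                return physical
        raise TLBMiss(vpn)

    def refill(self, vpn: int, ppn: int) -> None:
        """OS side: write a new entry into a free slot, else evict an old entry."""
        for slot in self.slots:
            if not slot[0]:
                slot[:] = [1, vpn, ppn]
                return
        self.slots[self.next_victim][:] = [1, vpn, ppn]
        self.next_victim = (self.next_victim + 1) % len(self.slots)


def access(tlb: SoftwareTLB, page_table: dict, vpn: int) -> int:
    """Translate vpn; on a TLB miss, run the OS handler and retry."""
    try:
        return tlb.lookup(vpn)
    except TLBMiss:
        in_memory, ppn = page_table.get(vpn, (False, None))
        if not in_memory:
            raise PageFault(vpn)  # data is on disk: handled separately
        tlb.refill(vpn, ppn)
        return tlb.lookup(vpn)


tlb = SoftwareTLB(slots=2)
tlb.refill(2, 9)                            # existing entry: virtual page 2 -> physical page 9
page_table = {2: (True, 9), 7: (True, 32)}  # hypothetical page table: vpn -> (in memory?, ppn)
print(access(tlb, page_table, 7))           # 32, after the OS refill
print(tlb.slots)                            # [[1, 2, 9], [1, 7, 32]]
```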

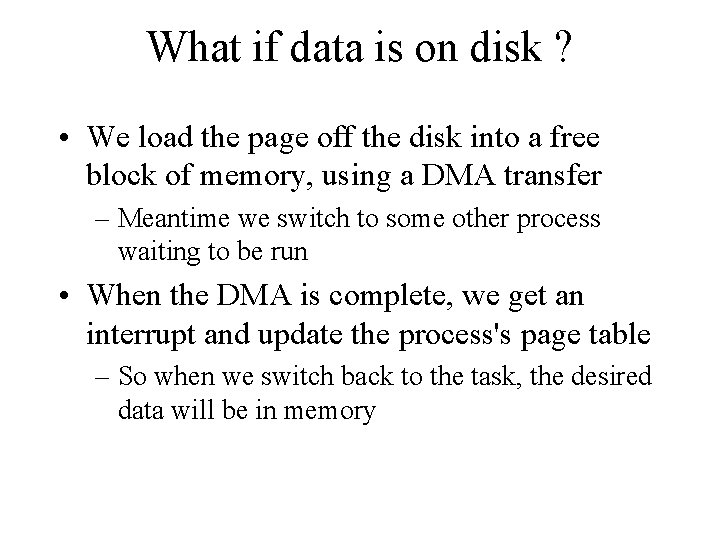

What if data is on disk?

- We load the page off the disk into a free block of memory, using a DMA transfer.
  - Meanwhile, we switch to some other process waiting to be run.
- When the DMA is complete, we get an interrupt and update the process's page table.
  - So when we switch back to the task, the desired data will be in memory.

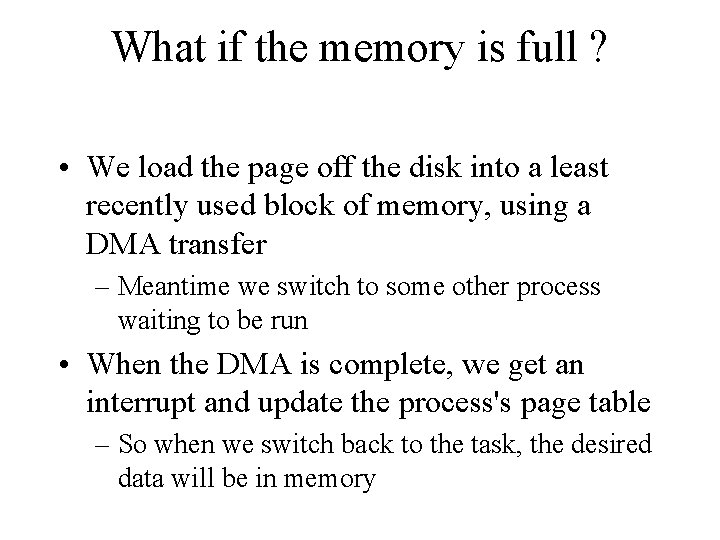

What if the memory is full?

- We load the page off the disk into the least recently used block of memory (first writing that block back to disk if it is dirty), using a DMA transfer.
  - Meanwhile, we switch to some other process waiting to be run.
- When the DMA is complete, we get an interrupt and update the process's page table.
  - So when we switch back to the task, the desired data will be in memory.
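Demand loading with LRU replacement can be simulated in a few lines. This sketch counts page faults and the dirty pages that must be written back when evicted; the DMA transfer and the switch to another process are not modeled, and the function name and reference-string format are assumptions.

```python
from collections import OrderedDict


def simulate_demand_paging(references, num_frames):
    """Count page faults and dirty write-backs for a reference string under LRU replacement.

    references: iterable of (virtual_page, is_write) pairs.
    Returns (page_faults, write_backs).
    """
    resident = OrderedDict()  # virtual page -> dirty flag, least to most recently used
    faults = write_backs = 0
    for vpn, is_write in references:
        if vpn in resident:
            resident.move_to_end(vpn)          # hit: now the most recently used page
        else:
            faults += 1                        # page fault: load the page from disk
            if len(resident) >= num_frames:    # memory full: evict the LRU page
                _, dirty = resident.popitem(last=False)
                if dirty:
                    write_backs += 1           # a dirty page must be written to disk first
            resident[vpn] = False
        if is_write:
            resident[vpn] = True               # mark dirty (position in the order is unchanged)
    return faults, write_backs


refs = [(0, True), (1, False), (2, False), (3, False), (0, False)]
print(simulate_demand_paging(refs, num_frames=3))  # (5, 1): page 0 was dirty when evicted
```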

Virtual Memory Problem #2

- Page table too big!
  - 4 GB virtual memory ÷ 4 KB page ≈ 1 million page table entries, i.e., 4 MB (with 4-byte entries) just for the page table of 1 process; 25 processes need 100 MB for page tables!
- A variety of solutions trade off the memory size of the mapping function against slower handling of TLB misses:
  - Make the TLB large enough and highly associative so that address translation rarely misses.
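The arithmetic behind these numbers, assuming 4-byte page table entries (the size implied by 1 million entries taking 4 MB); `page_table_bytes` is a hypothetical helper:

```python
GB, MB, KB = 2**30, 2**20, 2**10


def page_table_bytes(virtual_bytes: int, page_bytes: int, pte_bytes: int = 4) -> int:
    """Size of a simple one-level page table: one PTE per virtual page."""
    num_entries = virtual_bytes // page_bytes
    return num_entries * pte_bytes


per_process = page_table_bytes(4 * GB, 4 * KB)        # 2**20 entries * 4 bytes
print(per_process // MB, "MB per process")             # 4 MB per process
print(25 * per_process // MB, "MB for 25 processes")   # 100 MB for 25 processes
```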

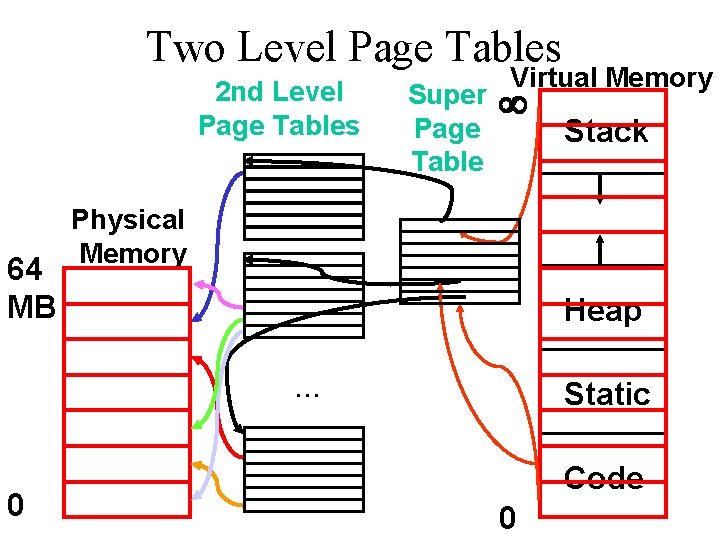

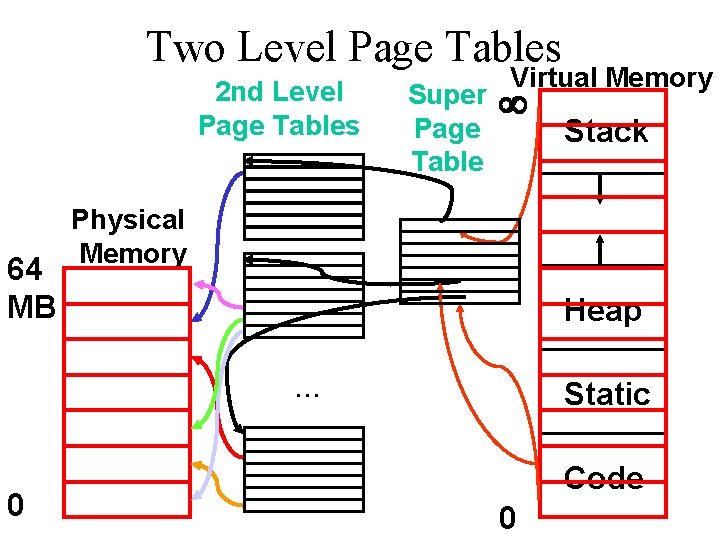

Two Level Page Tables

(Figure: a super page table points to 2nd-level page tables, which map the Code, Static, Heap, and Stack regions of virtual memory (0 to ∞) onto a 64 MB physical memory.)
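A minimal two-level sketch, assuming a 32-bit address split into a 10-bit super-page-table index, a 10-bit 2nd-level index, and a 12-bit offset (4 KB pages). The class name and the layout addresses are hypothetical. Second-level tables are allocated only for regions in use, which is how the scheme saves space compared with one flat table.

```python
PAGE_BITS = 12  # 4 KB pages (assumed)
L2_BITS = 10    # 1024 entries per 2nd-level table (assumed)
L1_BITS = 10    # 1024 entries in the super page table (assumed): 10 + 10 + 12 = 32 bits


class TwoLevelPageTable:
    def __init__(self):
        # Super page table: each slot is None or a 2nd-level table (list of physical page numbers).
        self.super_table = [None] * (1 << L1_BITS)

    @staticmethod
    def _indices(vaddr: int) -> tuple[int, int, int]:
        top = vaddr >> (PAGE_BITS + L2_BITS)               # index into the super page table
        mid = (vaddr >> PAGE_BITS) & ((1 << L2_BITS) - 1)  # index into a 2nd-level table
        offset = vaddr & ((1 << PAGE_BITS) - 1)
        return top, mid, offset

    def map_page(self, vaddr: int, ppn: int) -> None:
        top, mid, _ = self._indices(vaddr)
        if self.super_table[top] is None:  # allocate a 2nd-level table only when first needed
            self.super_table[top] = [None] * (1 << L2_BITS)
        self.super_table[top][mid] = ppn

    def translate(self, vaddr: int) -> int:
        top, mid, offset = self._indices(vaddr)
        second = self.super_table[top]
        if second is None or second[mid] is None:
            raise LookupError(f"page fault at {vaddr:#x}")
        return (second[mid] << PAGE_BITS) | offset

    def allocated_tables(self) -> int:
        return sum(t is not None for t in self.super_table)


# Hypothetical layout: one page each of code, static data, and stack.
pt = TwoLevelPageTable()
pt.map_page(0x00400000, 1)  # code
pt.map_page(0x10000000, 2)  # static data / heap
pt.map_page(0x7FFFF000, 3)  # stack
print(pt.allocated_tables())          # 3
print(hex(pt.translate(0x00400123)))  # 0x1123
```

Here the tables hold 1024 + 3 × 1024 = 4096 entries, versus 2^20 for a flat table covering the same 32-bit space.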

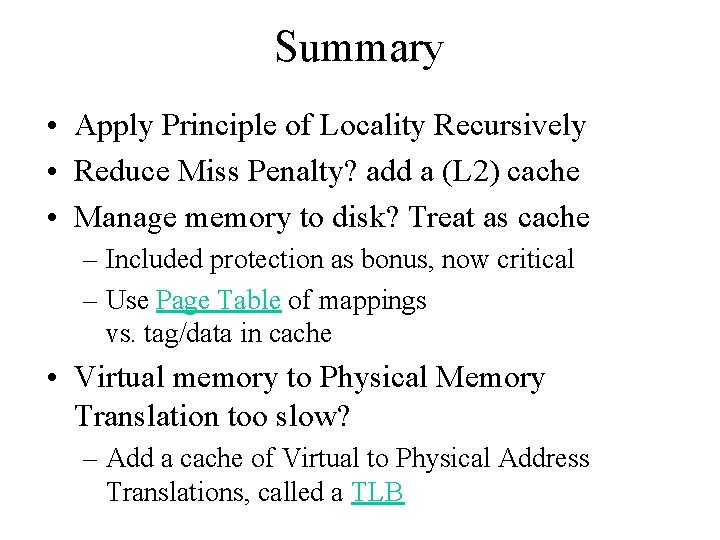

Summary

- Apply the Principle of Locality recursively.
- Reduce miss penalty? Add an (L2) cache.
- Manage memory to disk? Treat it as a cache.
  - Included protection as a bonus, now critical.
  - Use a page table of mappings vs. tag/data in a cache.
- Virtual memory to physical memory translation too slow?
  - Add a cache of virtual-to-physical address translations, called a TLB.

Summary

- Virtual memory allows protected sharing of memory between processes, with less swapping to disk and less fragmentation than always swapping or base/bound.
- Spatial locality means the working set of pages is all that must be in memory for a process to run fairly well.
- The TLB reduces the performance cost of VM.
- A more compact representation is needed to reduce the memory cost of a simple 1-level page table (especially with 32- to 64-bit addresses).