User-Centered Design and Development, Instructor: Franz J. Kurfess


User-Centered Design and Development Instructor: Franz J. Kurfess Computer Science Dept. Cal Poly San Luis Obispo FJK 2005

Copyright Notice • These slides are a revised version of the originals provided with the book “Interaction Design” by Jennifer Preece, Yvonne Rogers, and Helen Sharp, Wiley, 2002. I added some material, made some minor modifications, and created a custom show to select a subset. – Slides added or modified by me are marked with my initials (FJK), unless I forgot it … FJK 2005

484-W09 Quarter • The slides I use in class are in the Custom Show “484-W09”. It is a subset of the whole collection in this file. • Week 6 contains slides from Chapters 10 and 11 of the textbook. • The original slides for this chapter were in much better shape than the ones for previous chapters, and I mainly made changes to better match our emphasis. FJK 2005

Chapter 10 Usability Evaluation Overview FJK 2005

Chapter Overview • What to evaluate • Why evaluate • When to evaluate • HutchWorld case study FJK 2005

Motivation • provide an overview of usability evaluation • introduce the context, terms, and concepts used FJK 2005

Objectives • Discuss how developers cope with real-world constraints. • Explain the concepts and terms used to discuss evaluation. • Examine how different techniques are used at different stages of development. FJK 2005

Introducing evaluation

Usability Evaluation Aims • Discuss how developers cope with real-world constraints. • Explain the concepts and terms used to discuss evaluation. • Examine how different techniques are used at different stages of development.

Two main types of evaluation • Formative evaluation is done at different stages of development to check that the product meets users’ needs. • Summative evaluation assesses the quality of a finished product. Our focus is on formative evaluation.

What to evaluate • Iterative design & evaluation is a continuous process that examines: – Early ideas for the conceptual model – Early prototypes of the new system – Later, more complete prototypes • Designers need to check that they understand users’ requirements.

Why evaluate? Bruce Tognazzini (“Tog”): “Iterative design, with its repeating cycle of design and testing, is the only validated methodology in existence that will consistently produce successful results. If you don’t have user-testing as an integral part of your design process you are going to throw buckets of money down the drain.” See AskTog.com for topical discussions about design and evaluation.

Activity: Why Evaluate • In your experience, have you encountered situations that confirm or refute Tog’s take on evaluation? – critical aspects – constraints FJK 2005

When to evaluate • Throughout design – From the first descriptions, sketches, etc. of users’ needs through to the final product • Design proceeds through iterative cycles of ‘design-test-redesign’ • Evaluation is a key ingredient for a successful design.

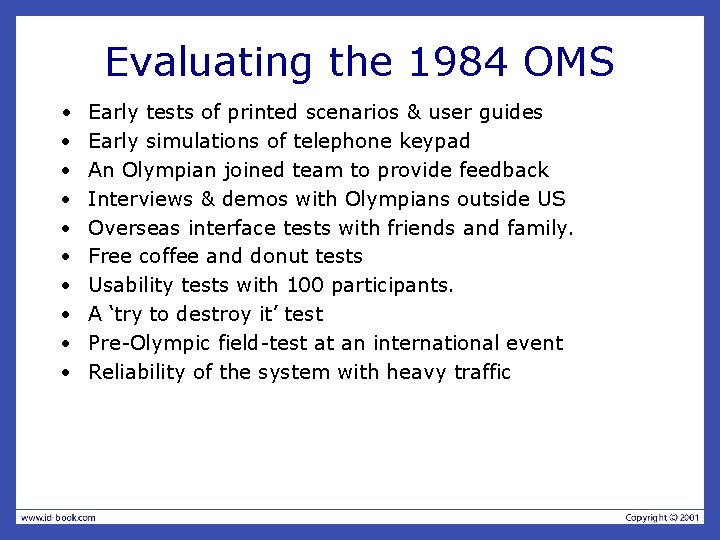

Evaluating the 1984 Olympic Message System (OMS) • Early tests of printed scenarios & user guides • Early simulations of the telephone keypad • An Olympian joined the team to provide feedback • Interviews & demos with Olympians outside the US • Overseas interface tests with friends and family • Free coffee and donut tests • Usability tests with 100 participants • A ‘try to destroy it’ test • Pre-Olympic field test at an international event • Reliability testing of the system under heavy traffic

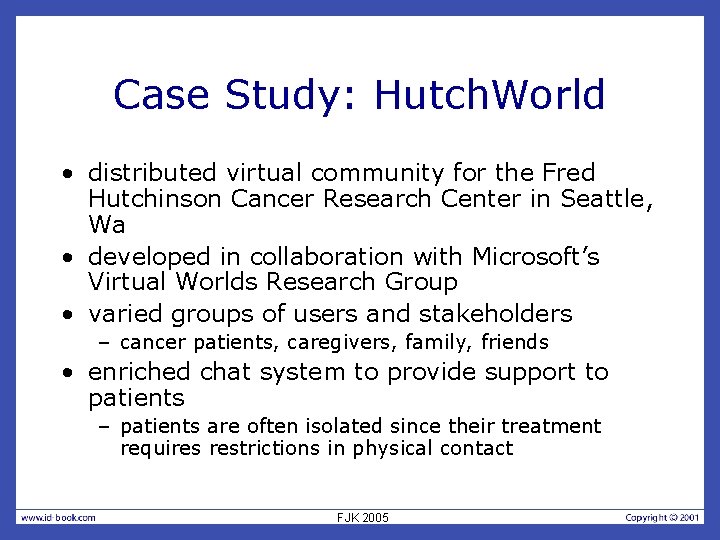

Case Study: HutchWorld • distributed virtual community for the Fred Hutchinson Cancer Research Center in Seattle, WA • developed in collaboration with Microsoft’s Virtual Worlds Research Group • varied groups of users and stakeholders – cancer patients, caregivers, family, friends • enriched chat system to provide support to patients – patients are often isolated because their treatment restricts physical contact FJK 2005

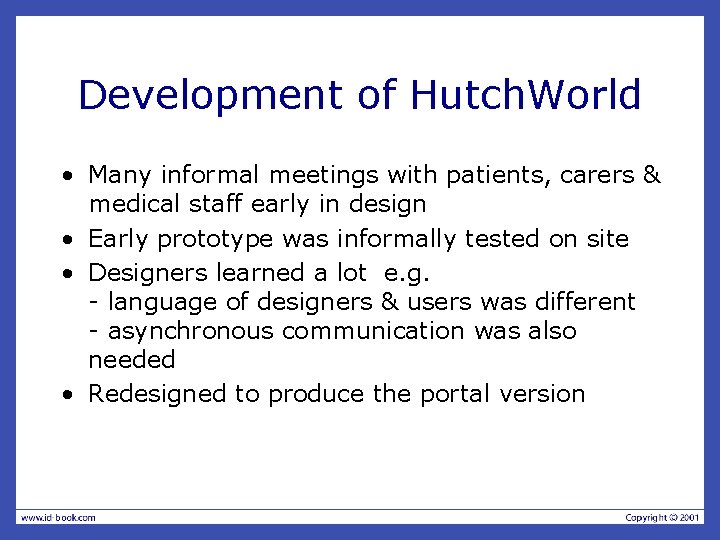

Development of HutchWorld • Many informal meetings with patients, carers & medical staff early in design • Early prototype was informally tested on site • Designers learned a lot, e.g.: - the language of designers & users was different - asynchronous communication was also needed • Redesigned to produce the portal version

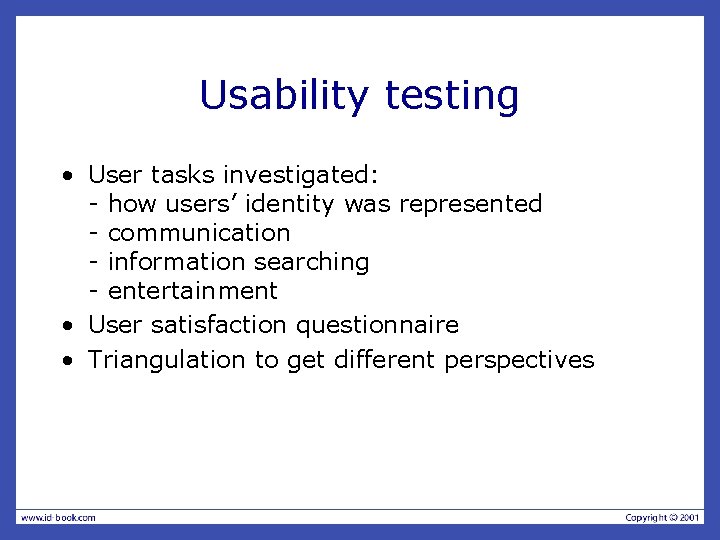

Usability testing • User tasks investigated: - how users’ identity was represented - communication - information searching - entertainment • User satisfaction questionnaire • Triangulation to get different perspectives

Findings from the usability test • The back button didn’t always work. • Users didn’t pay attention to navigation buttons. • Users expected all objects in the 3-D view to be clickable. • Users did not realize that there could be others in the 3-D world with whom to chat. • Users tried to chat to the participant list.

Key points • Evaluation & design are closely integrated in user-centered design. • Some of the same techniques are used in evaluation & requirements, but they are used differently – e.g., interviews, questionnaires • Triangulation involves using a combination of techniques to gain different perspectives. • Dealing with constraints is an important skill for evaluators to develop.

Butterfly Ballot Revisited • “The Butterfly Ballot: Anatomy of disaster” – a very interesting account written by Bruce Tognazzini – go to AskTog.com and look through the 2001 columns – Alternatively go directly to: http://www.asktog.com/columns/042ButterflyBallot.html

Activity: Butterfly Ballot for Class Elections • Read Tog’s account and look at the picture of the ballot card. • Make a similar ballot card for a class election – ask 10 of your friends to vote using the card – after each one has voted ask who they intended to vote for and whether the card was confusing – note their comments • Redesign the card and perform the same test with 10 different people. • Report your findings.

Activity: OpenMail Usability Evaluation Plan • develop a plan for a usability evaluation of the OpenMail Web client – tasks and users • tasks to be examined, user groups, constraints – data collection • observation, questionnaire, interview, focus group, … – evaluation • important findings, common themes, recommendations for redesign – report • stakeholders • designers and developers

Activity: OpenMail Usability Evaluation • based on your plan, perform a “quick and dirty” usability evaluation – split your team into users and observers – identify a core set of tasks – if you don’t have a formal questionnaire, use a less formal observation or interview method for data collection – write down the main outcome of the evaluation • problems noted – develop recommendations for improvement • for your recommendations, you can also use general observations – based on your own or anecdotal experience – these should be identified as such