Thermal-aware Task Placement in Data Centers (part 4)



Thermal-aware Task Placement in Data Centers (part 4)
Sandeep Gupta
Department of Computer Science and Engineering, School of Computing and Informatics, Ira A. Fulton School of Engineering, Arizona State University, Tempe, Arizona, USA
sandeep.gupta@asu.edu

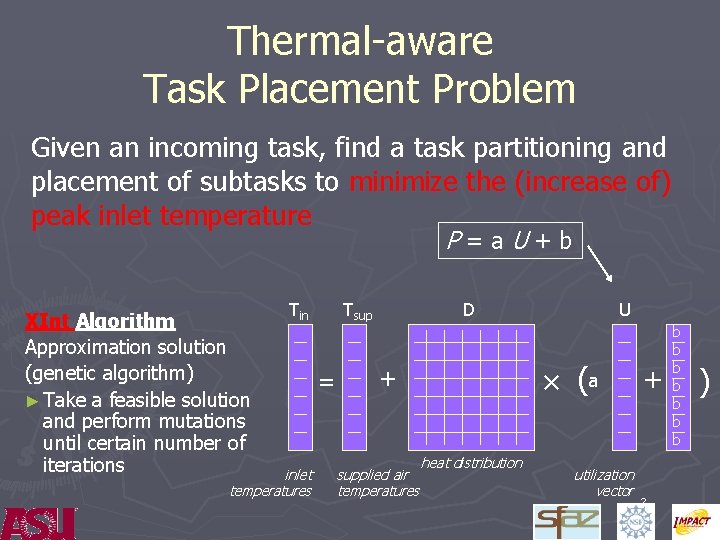

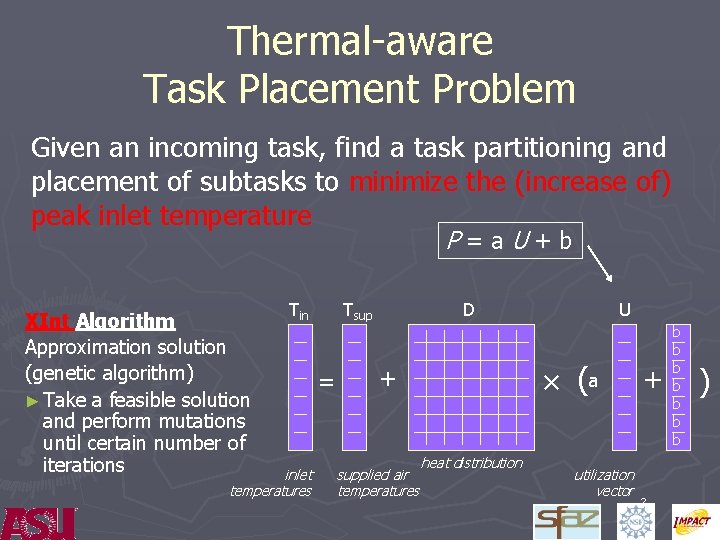

Thermal-aware Task Placement Problem
► Given an incoming task, find a task partitioning and a placement of subtasks that minimizes the (increase of the) peak inlet temperature
► Power model: $P = aU + b$
► Thermal model: $T_{in} = T_{sup} + D \times (aU + \mathbf{b})$, where $\mathbf{b} = [b, b, \ldots, b]^T$
§ $T_{in}$: inlet temperatures; $T_{sup}$: supplied air temperatures; $D$: heat distribution matrix; $U$: utilization vector
► XInt algorithm: approximate solution (genetic algorithm)
§ Take a feasible solution and perform mutations until a certain number of iterations is reached
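The search idea can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' XInt implementation. It assumes:

- every chassis shares the scalar power model `a*u + b`;
- the heat distribution matrix `D` is known;
- utilization can be shifted between chassis in fixed steps.

In place of a full genetic algorithm, it uses a simpler loop: mutate, and keep the mutation only if the peak inlet temperature does not rise. The function names, step size, toy `D`, and the values of `a`, `b`, and `T_sup` are all hypothetical.

```python
import random

def inlet_temperatures(D, U, a, b, t_sup):
    """T_in = T_sup + D x (a*U + b); row i of D maps chassis powers to inlet i."""
    power = [a * u + b for u in U]
    return [t_sup + sum(d_ij * p_j for d_ij, p_j in zip(row, power)) for row in D]

def peak_inlet(D, U, a, b, t_sup):
    return max(inlet_temperatures(D, U, a, b, t_sup))

def xint_search(D, U0, a, b, t_sup, iterations=2000, step=0.05, seed=0):
    """Mutation loop (hypothetical stand-in for the genetic algorithm).

    A mutation moves `step` utilization from one chassis to another. It is
    kept only if both chassis stay within [0, 1] and the peak inlet
    temperature does not increase. Total utilization is preserved.
    """
    rng = random.Random(seed)
    U = list(U0)
    best = peak_inlet(D, U, a, b, t_sup)
    for _ in range(iterations):
        src, dst = rng.sample(range(len(U)), 2)
        if U[src] < step or U[dst] + step > 1.0:
            continue  # mutation would make the placement infeasible
        U[src] -= step
        U[dst] += step
        peak = peak_inlet(D, U, a, b, t_sup)
        if peak <= best:
            best = peak
        else:
            U[src] += step  # undo a mutation that raised the peak
            U[dst] -= step
    return U, best

# Hypothetical 3-chassis example (D in K/W, powers in W, temperatures in deg C).
D = [[0.0010, 0.0005, 0.0000],
     [0.0020, 0.0010, 0.0005],
     [0.0030, 0.0015, 0.0005]]
U0 = [0.6, 0.6, 0.6]
U, peak = xint_search(D, U0, a=2000.0, b=2000.0, t_sup=15.0)
```

Keeping only non-worsening mutations makes the result never worse than the starting placement. A real genetic algorithm would also keep a population and use crossover.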

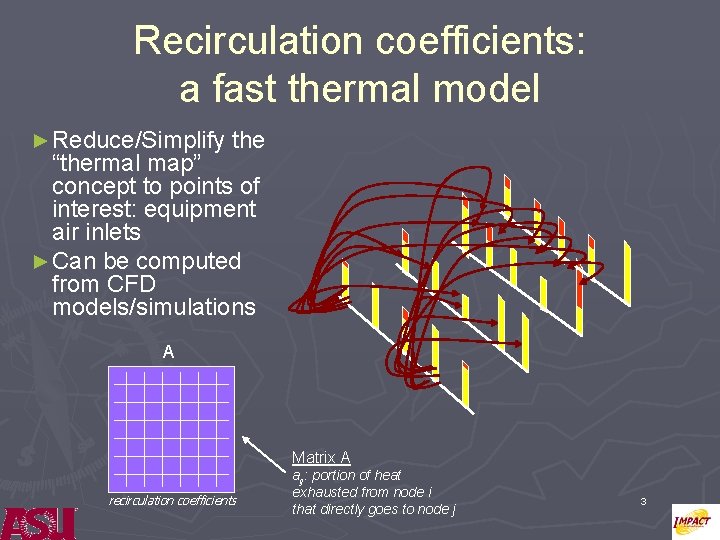

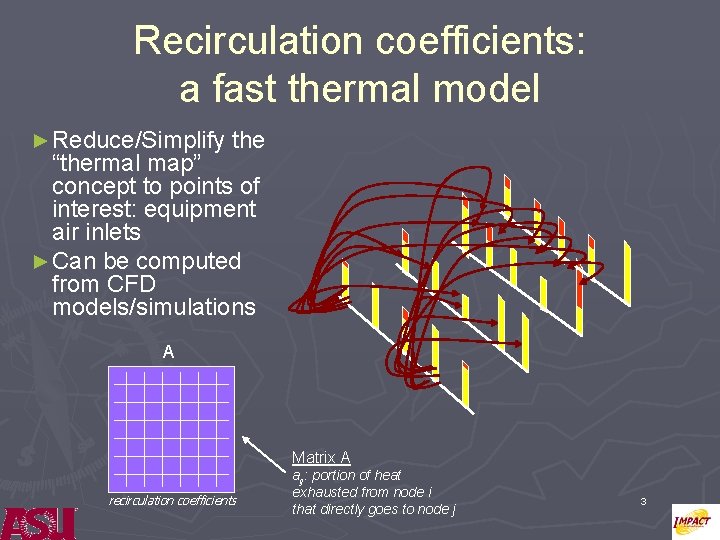

Recirculation coefficients: a fast thermal model
► Reduce/Simplify the “thermal map” concept to the points of interest: equipment air inlets
► Can be computed from CFD models/simulations
► Matrix $A$ of recirculation coefficients $a_{ij}$: the portion of heat exhausted from node $i$ that goes directly to node $j$
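The definition of $a_{ij}$ can be made concrete with a short sketch. It assumes:

- each node $i$ exhausts heat $Q_i$;
- the heat arriving directly at inlet $j$ is $\sum_i a_{ij} Q_i$;
- the share of a node's exhaust not sent to any inlet ($1 - \sum_j a_{ij}$) returns to the CRAC.

The sketch covers only direct, first-pass recirculation. The matrix values and function names are hypothetical.

```python
def direct_recirculation(A, Q):
    """Heat (W) reaching each inlet j directly: sum over i of A[i][j] * Q[i]."""
    n = len(Q)
    return [sum(A[i][j] * Q[i] for i in range(n)) for j in range(n)]

def fraction_to_crac(A):
    """Assumed share of each node's exhaust that goes back to the cooling unit."""
    return [1.0 - sum(row) for row in A]

# Hypothetical 3-node example: row i says where node i's exhaust goes.
A = [[0.10, 0.05, 0.00],
     [0.05, 0.10, 0.05],
     [0.20, 0.10, 0.10]]  # node 2 sends a large share of its exhaust to node 0
Q = [4000.0, 4000.0, 4000.0]  # exhausted heat per node (W)

inbound = direct_recirculation(A, Q)   # approximately [1400.0, 1000.0, 600.0]
crac_share = fraction_to_crac(A)       # approximately [0.85, 0.80, 0.60]
```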

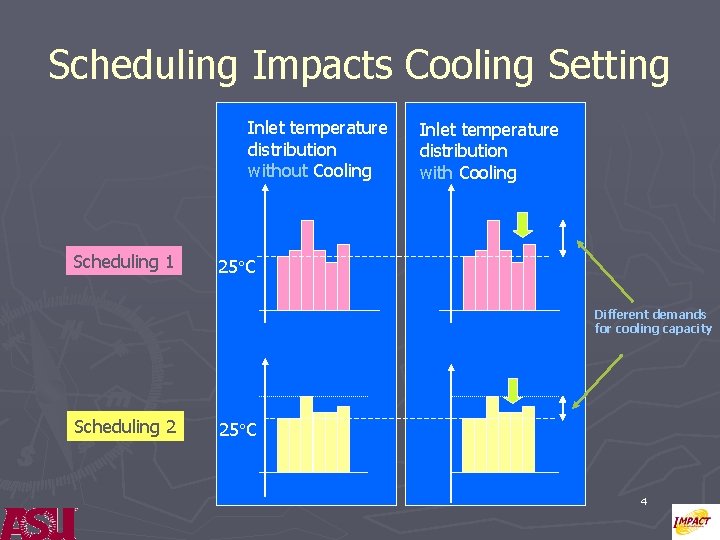

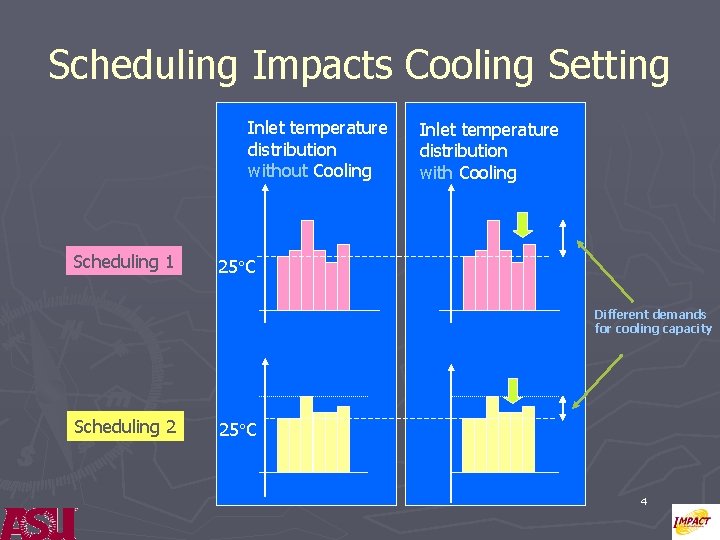

Scheduling Impacts Cooling Setting
(Figure: inlet temperature distributions for Scheduling 1 and Scheduling 2, each shown without cooling and with cooling; 25 °C marked on both.)
► Different schedules create different demands for cooling capacity
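Why a schedule dictates the cooling setting can be shown with the linear model $T_{in} = T_{sup} + D \times P$. The supply air must be cool enough that the hottest inlet stays at or below a red-line temperature. The warmest admissible supply temperature is therefore $T_{red} - \max_i (D \times P)_i$.

The sketch compares two hypothetical schedules with the same total power. The 25 °C red line is an assumption chosen to echo the figure label, and `D` and the power vectors are invented for illustration.

```python
def max_supply_temperature(D, P, t_red):
    """Warmest supply temperature that keeps every inlet at or below t_red.

    From T_in = T_sup + D x P, the hottest inlet is T_sup + max_i (D x P)_i.
    """
    rise = [sum(d * p for d, p in zip(row, P)) for row in D]
    return t_red - max(rise)

# Hypothetical D (K/W): node 0's exhaust recirculates more (larger column 0).
D = [[0.0010, 0.0005],
     [0.0020, 0.0010]]
schedule_1 = [2000.0, 6000.0]  # heavy load on node 1 (W)
schedule_2 = [6000.0, 2000.0]  # same total power, heavy load on node 0 (W)
T_RED = 25.0                   # assumed red-line inlet temperature (deg C)

t1 = max_supply_temperature(D, schedule_1, T_RED)  # about 15.0
t2 = max_supply_temperature(D, schedule_2, T_RED)  # about 11.0
```

With the same total power, `schedule_1` lets the CRAC supply warmer air (15 °C versus 11 °C), which means less cooling effort.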

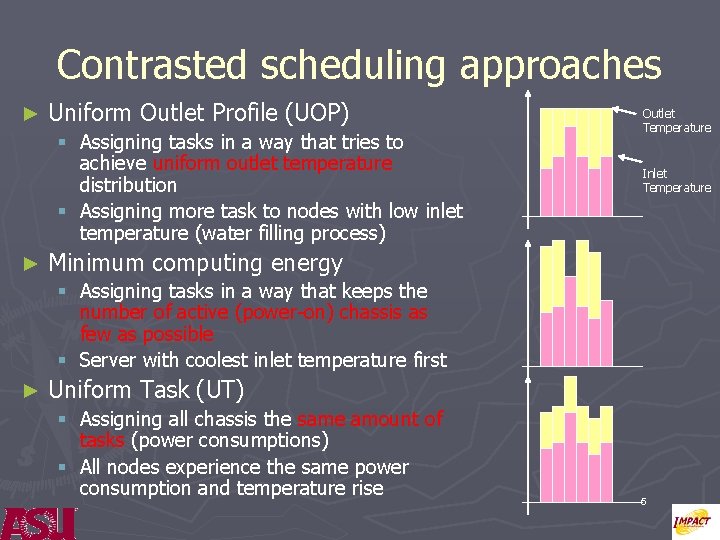

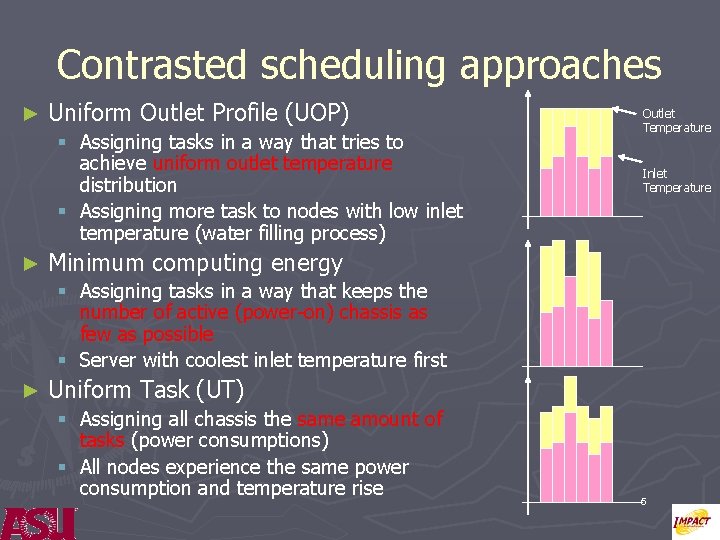

Contrasted scheduling approaches
► Uniform Outlet Profile (UOP)
§ Assign tasks so as to achieve a uniform outlet temperature distribution
§ Assign more tasks to nodes with low inlet temperature (water-filling process)
(Figure: water-filling illustration with outlet temperature and inlet temperature levels.)
► Minimum computing energy
§ Assign tasks so as to keep the number of active (powered-on) chassis as small as possible
§ Server with the coolest inlet temperature first
► Uniform Task (UT)
§ Assign all chassis the same amount of tasks (power consumption)
§ All nodes experience the same power consumption and temperature rise
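The three contrasted policies can be sketched for a pool of identical chassis. This is an illustrative reading of the slide, not the authors' code. It assumes:

- tasks come in whole units;
- each chassis has a hypothetical capacity;
- each task raises a chassis's outlet temperature by a fixed, hypothetical amount;
- the total task count does not exceed the total capacity.

UOP's water filling is approximated by giving each next task to the chassis with the coolest predicted outlet.

```python
def uniform_outlet(inlets, tasks, capacity, rise_per_task):
    """UOP: water-filling toward equal outlet temperatures (assumes enough capacity)."""
    load = [0] * len(inlets)
    for _ in range(tasks):
        candidates = [i for i in range(len(inlets)) if load[i] < capacity]
        # next task goes to the chassis whose predicted outlet is currently coolest
        i = min(candidates, key=lambda k: inlets[k] + rise_per_task * load[k])
        load[i] += 1
    return load

def min_computing_energy(inlets, tasks, capacity):
    """Fill chassis to capacity, coolest inlet first, so few chassis are active."""
    load = [0] * len(inlets)
    for i in sorted(range(len(inlets)), key=lambda k: inlets[k]):
        take = min(capacity, tasks)
        load[i] = take
        tasks -= take
    return load

def uniform_task(n_chassis, tasks):
    """UT: spread tasks as evenly as possible (same power on every chassis)."""
    base, extra = divmod(tasks, n_chassis)
    return [base + (1 if i < extra else 0) for i in range(n_chassis)]

inlets = [18.0, 20.0, 22.0, 24.0]  # hypothetical inlet temperatures (deg C)
uop = uniform_outlet(inlets, tasks=10, capacity=5, rise_per_task=1.0)
mce = min_computing_energy(inlets, tasks=10, capacity=5)
ut = uniform_task(len(inlets), tasks=10)
print(uop)  # [5, 4, 1, 0]
print(mce)  # [5, 5, 0, 0]
print(ut)   # [3, 3, 2, 2]
```

UOP and minimum computing energy both favor cool inlets. Only the second deliberately powers off chassis, and UT ignores temperature entirely.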

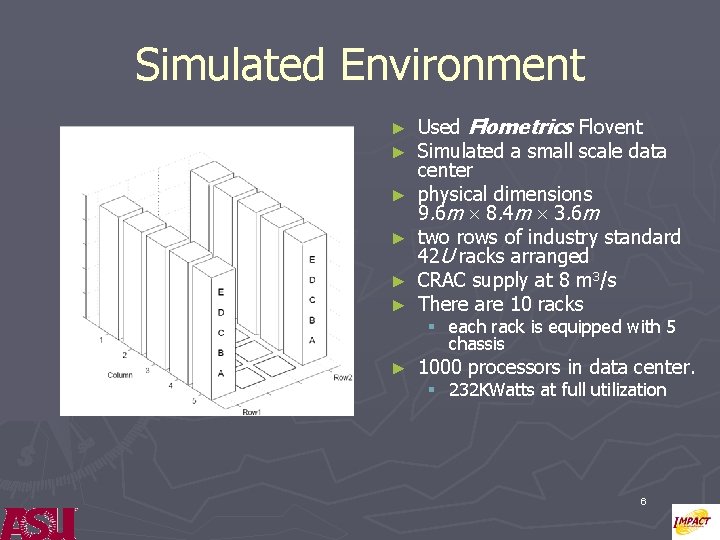

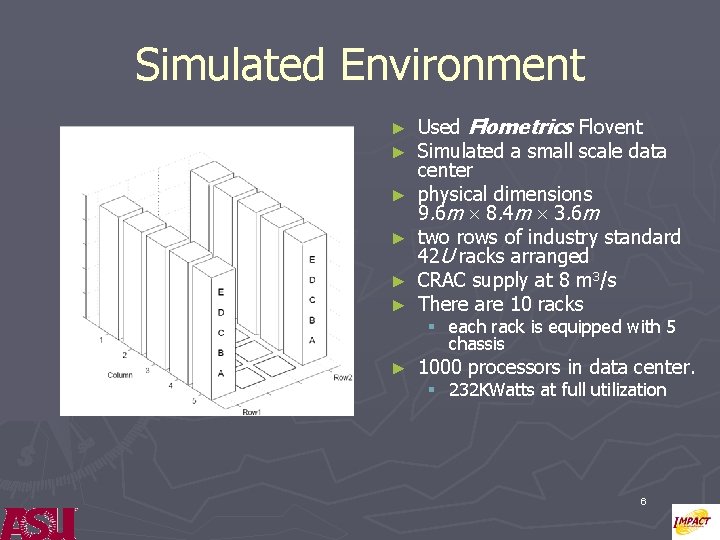

Simulated Environment
► Used Flomerics FloVENT
► Simulated a small-scale data center
§ Physical dimensions 9.6 m × 8.4 m × 3.6 m
§ Two rows of industry-standard 42U racks
§ CRAC supply at 8 m³/s
► There are 10 racks
§ Each rack is equipped with 5 chassis
► 1000 processors in the data center
§ 232 kW at full utilization
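The simulated configuration implies the per-unit figures below. This is simple arithmetic on the numbers above, assuming processors and power are spread evenly over the chassis.

```latex
\begin{aligned}
\text{chassis} &= 10\ \text{racks} \times 5 = 50\\
\text{processors per chassis} &= 1000 / 50 = 20\\
\text{power per chassis (full load)} &= 232\ \text{kW} / 50 = 4.64\ \text{kW}\\
\text{power per processor (full load)} &= 232\ \text{kW} / 1000 = 232\ \text{W}
\end{aligned}
```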

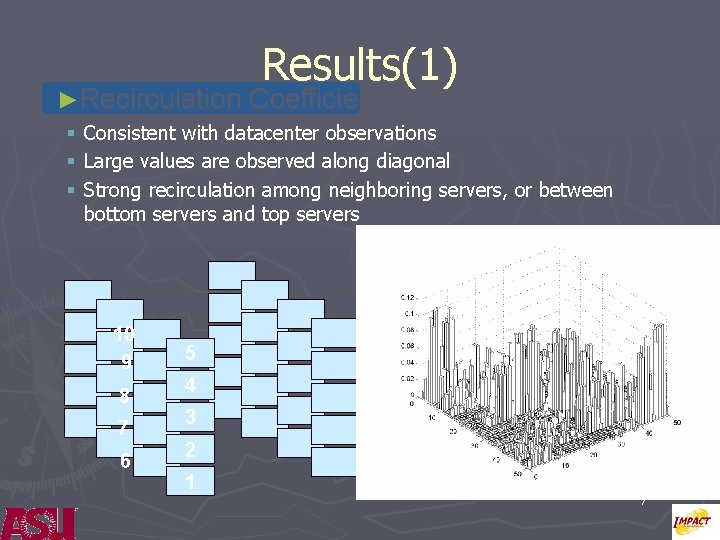

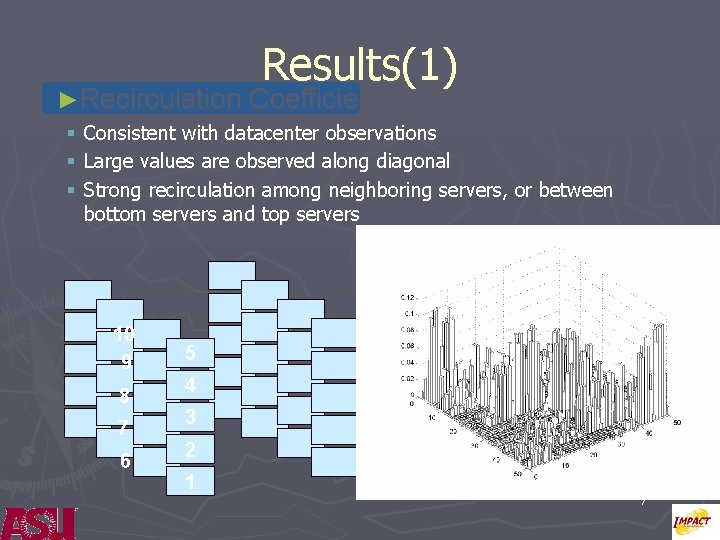

Results (1)
► Recirculation coefficients
§ Consistent with data center observations
§ Large values are observed along the diagonal
§ Strong recirculation among neighboring servers, or between bottom servers and top servers
(Figure: recirculation coefficient matrix, axes labeled 1 to 10.)

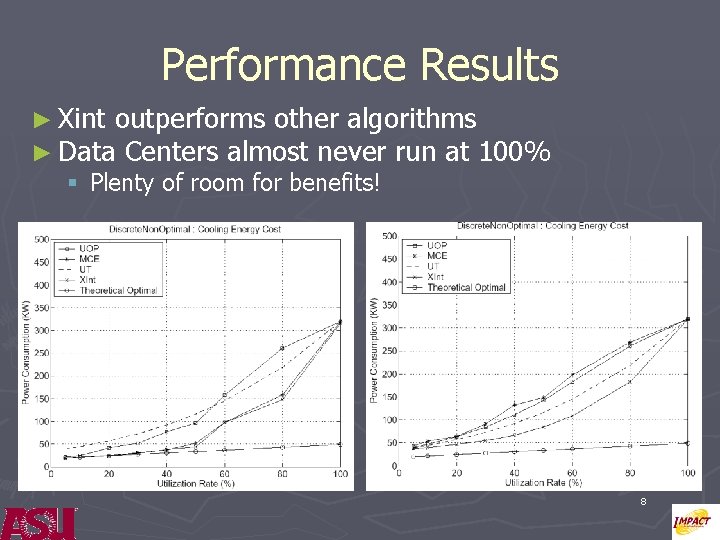

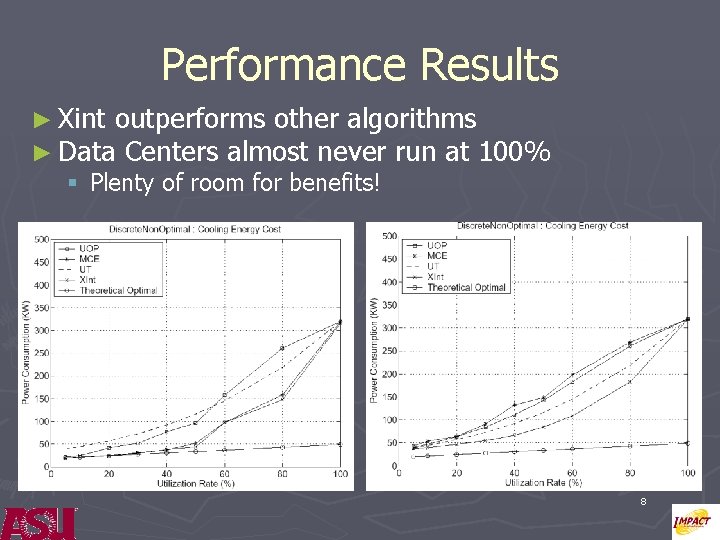

Performance Results
► XInt outperforms the other algorithms
► Data centers almost never run at 100% utilization
§ Plenty of room for benefits!

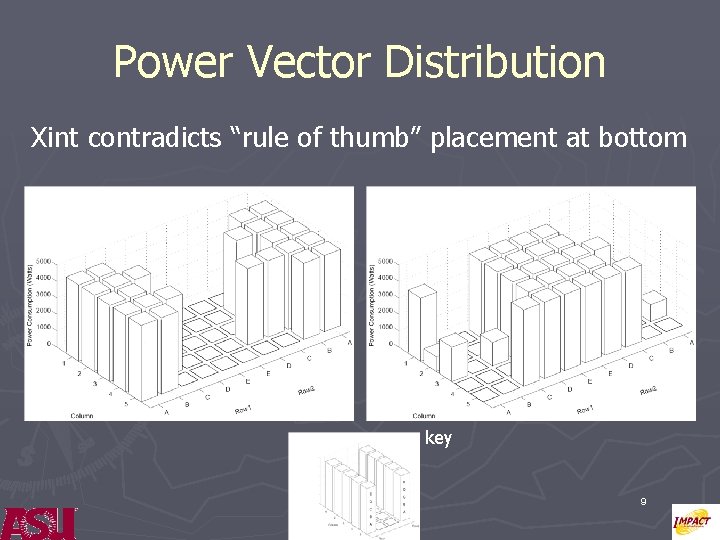

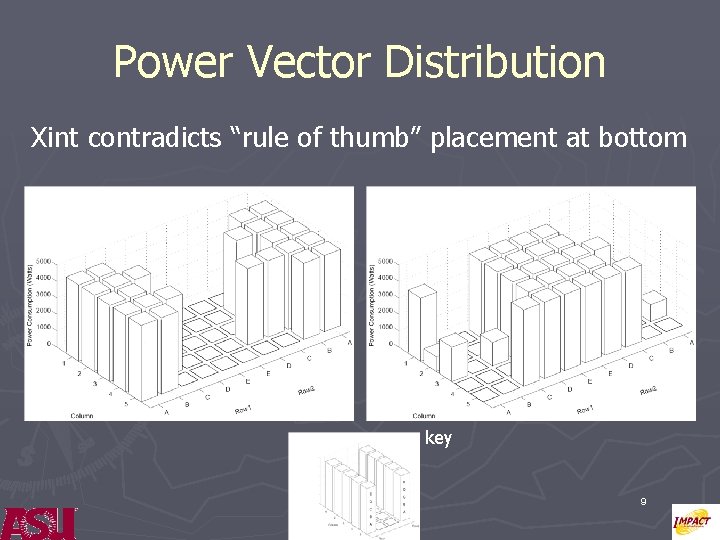

Power Vector Distribution
► XInt contradicts the “rule of thumb” of placing load at the bottom of the racks

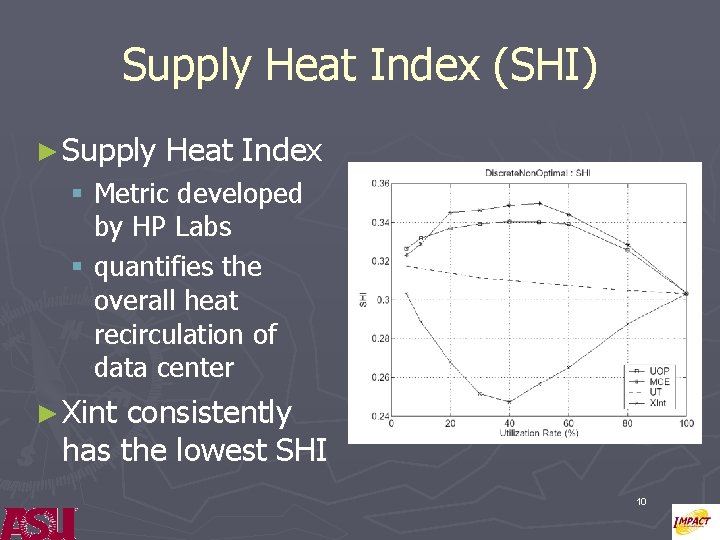

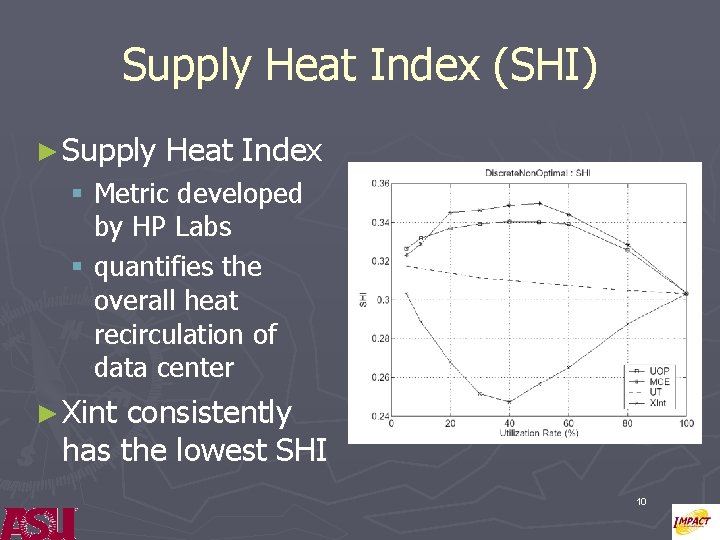

Supply Heat Index (SHI)
► Supply Heat Index
§ Metric developed by HP Labs
§ Quantifies the overall heat recirculation of a data center
► XInt consistently has the lowest SHI
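As commonly defined, SHI is the ratio $\delta Q / (Q + \delta Q)$:

- $\delta Q$ is the heat the cold supply air picks up before it enters the racks;
- $Q$ is the heat the racks dissipate.

With equal air flow through every rack, this reduces to a ratio of temperature rises above the supply temperature. The sketch below assumes that equal-flow form and a single supply temperature, and the sample temperatures are hypothetical. Lower values mean less recirculation.

```python
def supply_heat_index(t_in, t_out, t_sup):
    """SHI = sum(T_in - T_sup) / sum(T_out - T_sup), assuming equal flow per rack.

    0 means every inlet sees pure supply air (no recirculation); values near 1
    mean inlets are almost as hot as outlets.
    """
    gained_before_inlet = sum(t - t_sup for t in t_in)
    total_rise = sum(t - t_sup for t in t_out)
    return gained_before_inlet / total_rise

t_sup = 15.0
t_in = [16.0, 18.0, 20.0]   # hypothetical rack inlet temperatures (deg C)
t_out = [26.0, 28.0, 30.0]  # hypothetical rack outlet temperatures (deg C)
shi = supply_heat_index(t_in, t_out, t_sup)  # 9 / 39, about 0.23
```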

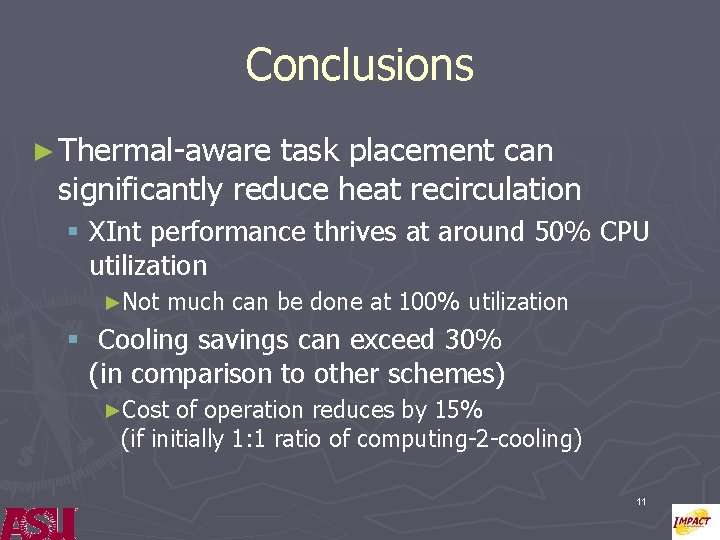

Conclusions
► Thermal-aware task placement can significantly reduce heat recirculation
§ XInt's benefit is largest at around 50% CPU utilization; not much can be done at 100% utilization
§ Cooling savings can exceed 30% (compared with the other schemes), reducing the cost of operation by 15% (assuming an initial 1:1 ratio of computing to cooling cost)
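The 15% figure follows from a 30% cooling saving under the stated 1:1 assumption. Write the total operating cost $C$ as computing plus cooling, each initially half of $C$:

```latex
C = C_{comp} + C_{cool}, \qquad C_{comp} = C_{cool} = \tfrac{C}{2}
\\
\Delta C = 0.30 \times C_{cool} = 0.30 \times \tfrac{C}{2} = 0.15\,C
```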

![Related Work in Progress](https://slidetodoc.com/presentation_image/e6197329fa02bed5398188876f63f2e0/image-12.jpg)

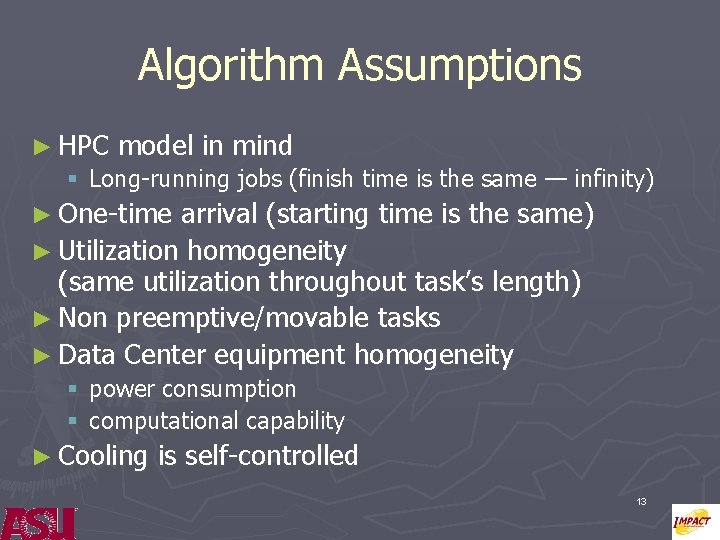

Related Work in Progress
► Waiving simplifying assumptions
§ Equipment heterogeneity [INFOCOM 2008]
§ Stochastic task arrival
► Thermal maps through machine learning
§ Automated, non-invasive, cost-effective [GreenCom 2007]
► Implementations
§ Thermal-aware Moab scheduler
§ Thermal-aware SLURM
§ SiCortex product thermal management

Algorithm Assumptions
► HPC model in mind
§ Long-running jobs (finish time is the same: infinity)
► One-time arrival (starting time is the same)
► Utilization homogeneity (same utilization throughout a task's length)
► Non-preemptive, non-movable tasks
► Data center equipment homogeneity
§ Power consumption
§ Computational capability
► Cooling is self-controlled

Thank You ► Questions? ► Comments? ► Suggestions? http://impact.asu.edu/


Additional Slides

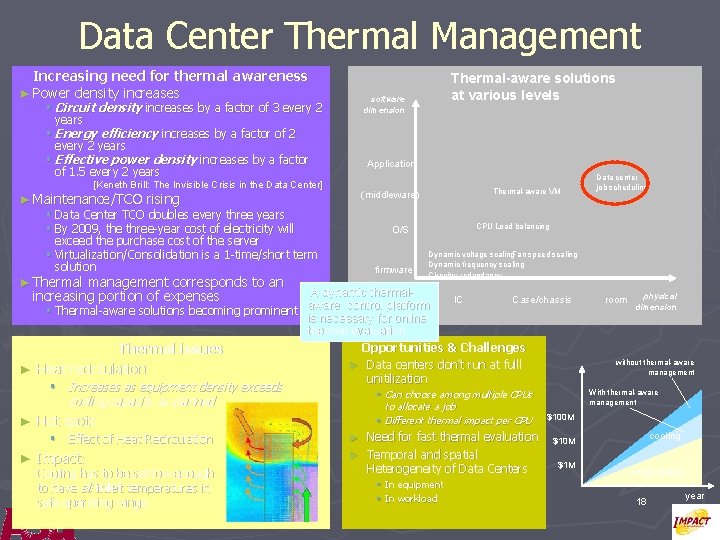

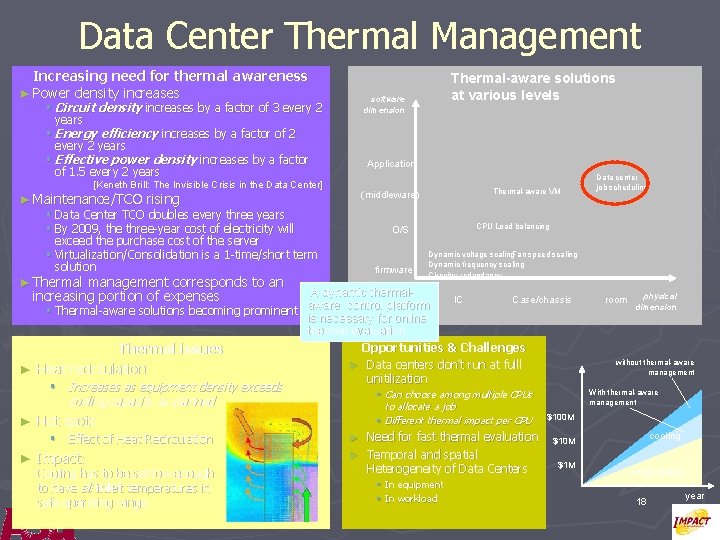

Contributions
► Developed thermal models of data centers
► Developed analytical thermal models using theoretical thermodynamic formulations
► Developed online thermal models using machine learning techniques
► Designed thermal-aware task placement algorithms
► Designed a genetic-algorithm-based task placement algorithm that minimizes the heat recirculation among the servers and the peak inlet temperature
► Created a software architecture for dynamic thermal management of data centers
► Developed CFD models of real-world data centers for testing and validating thermal models and task placement algorithms

Data Center Thermal Management
Increasing need for thermal awareness
► Power density increases
§ Circuit density increases by a factor of 3 every 2 years
§ Energy efficiency increases by a factor of 2 every 2 years
§ Effective power density increases by a factor of 1.5 every 2 years
[Kenneth Brill: The Invisible Crisis in the Data Center]
► Maintenance/TCO rising
§ Data center TCO doubles every three years
§ By 2009, the three-year cost of electricity will exceed the purchase cost of the server
§ Virtualization/consolidation is a one-time, short-term solution
► Thermal management corresponds to an increasing portion of expenses
§ Thermal-aware solutions are becoming prominent
Thermal-aware solutions at various levels
(Figure: thermal-aware techniques placed along a software dimension (application, middleware, O/S, firmware) and a physical dimension (IC, case/chassis, room, data center): thermal-aware VMs, CPU load balancing, dynamic voltage scaling, dynamic frequency scaling, fan speed scaling, circuitry redundancy, and data center job scheduling.)
► A dynamic thermal-aware control platform is necessary for online thermal evaluation
Opportunities & Challenges
► Data centers don't run at full utilization
§ Can choose among multiple CPUs to allocate a job
§ Different thermal impact per CPU
Thermal issues
► Heat recirculation
§ Increases as equipment density exceeds the planned cooling capacity
► Hot spots
§ Effect of heat recirculation
► Impact: cooling has to be set low enough to keep all inlet temperatures in the safe operating range
Need for fast thermal evaluation
► Temporal and spatial heterogeneity of data centers
§ In equipment
§ In workload
(Figure: cost over years on a $1M to $100M scale for computation and cooling, without vs. with thermal-aware management.)
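The power-density trend follows from the two growth rates above. If circuit density triples while energy efficiency doubles every two years, power per unit area grows by their ratio. Compounding that ratio gives the growth over $t$ years; this is simple arithmetic on the slide's figures, e.g. six years gives $1.5^3 = 3.375$.

```latex
\frac{3}{2} = 1.5 \ \text{per 2 years}, \qquad
\text{growth after } t \text{ years} = 1.5^{\,t/2}, \qquad
1.5^{6/2} = 3.375
```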