The Energy Data Collection Project, Eduard Hovy, USC/ISI

- Slides: 24

The Energy Data Collection Project Eduard Hovy, USC/ISI Judith Klavans, Columbia University …and the whole EDC team

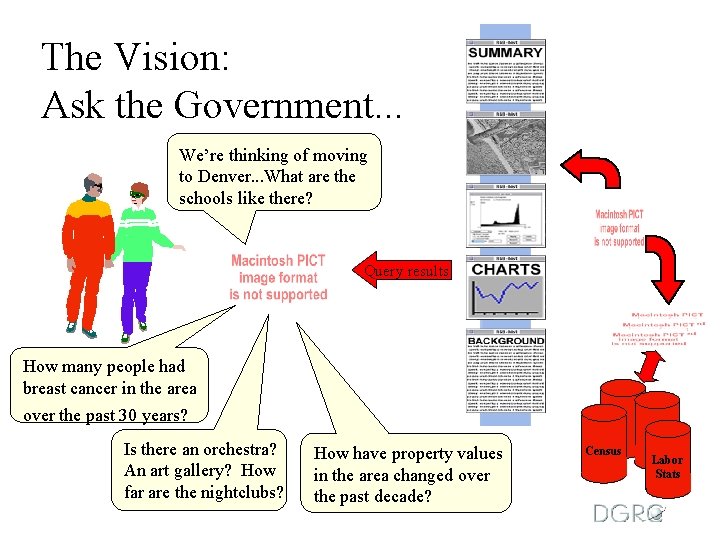

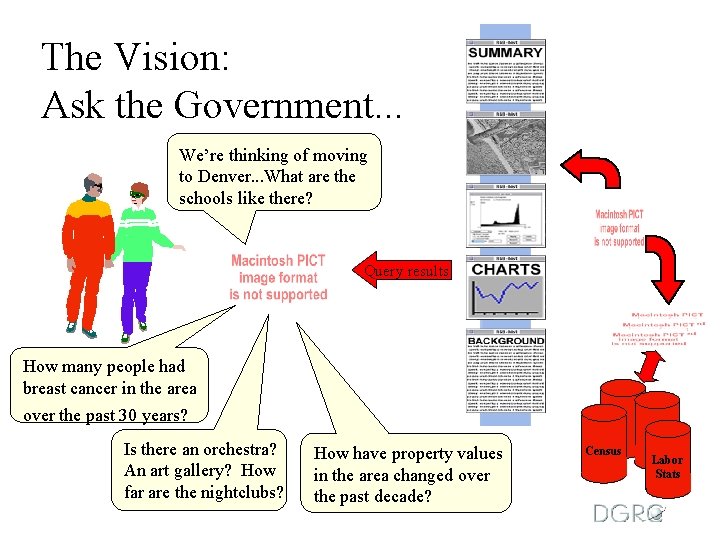

The Vision: Ask the Government...

We’re thinking of moving to Denver...

- What are the schools like there?
- How many people had breast cancer in the area over the past 30 years?
- Is there an orchestra? An art gallery? How far are the nightclubs?
- How have property values in the area changed over the past decade?

(Diagram labels: Query results; Census; Labor Stats)

The problem and the solution

- Problem: FedStats has thousands of databases in over seventy Government agencies:
  - data is duplicated and near-duplicated,
  - even Government officials cannot find it!
- Solution: Create a system to provide easy standardized access:
  - need multi-database access engine,
  - need powerful user interface,
  - need terminology standardization mechanism.

The purpose of DGRC

Make Digital Government Happen!

- Advance information systems research
- Bring the benefits of cutting-edge IS research to government systems
- Help educate government and the community

- Conduct and support research in key areas of information systems
- Develop standards/interfaces
- Develop infrastructure
- Build pilot systems
- Collaborate closely with Government service/information providers and users

Research challenges

- How do you scale to incorporate many databases?
  - …you try to build data models automatically
- How do you integrate their data models, to allow querying across sources and agencies?
  - …you take a large ontology, link the models into it automatically, and provide a query reformulator
- How do you incorporate additional information that is available from text sources?
  - …you use language processing tools to extract it
- How do you handle footnotes in the databases?
  - …you extract them from the tables automatically

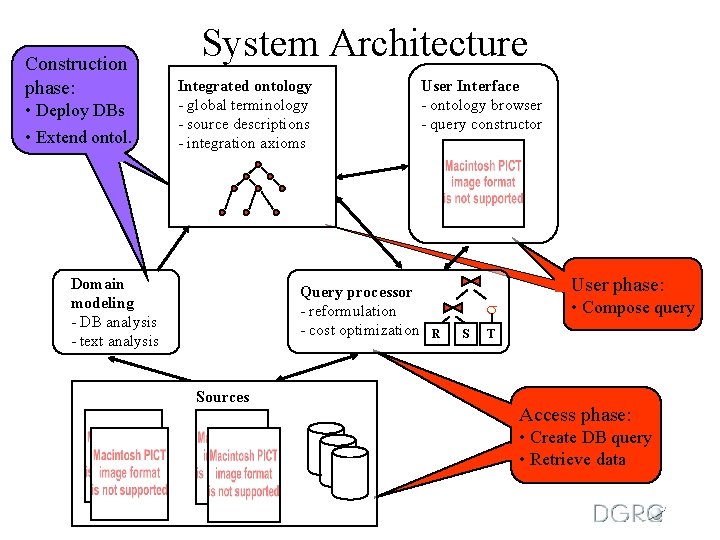

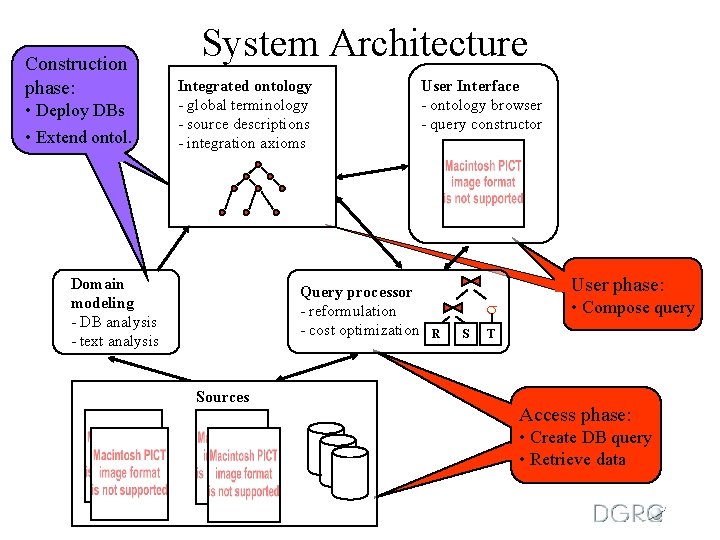

System Architecture (diagram)

Phases:

- Construction phase: deploy DBs, extend ontology
- User phase: compose query
- Access phase: create DB query, retrieve data

Components:

- Integrated ontology: global terminology, source descriptions, integration axioms
- Domain modeling: DB analysis, text analysis
- Query processor: reformulation, cost optimization
- User interface: ontology browser, query constructor
- Sources

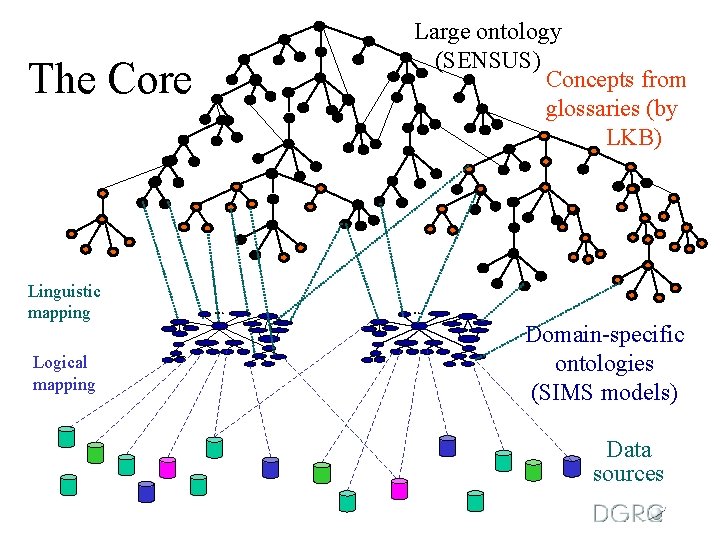

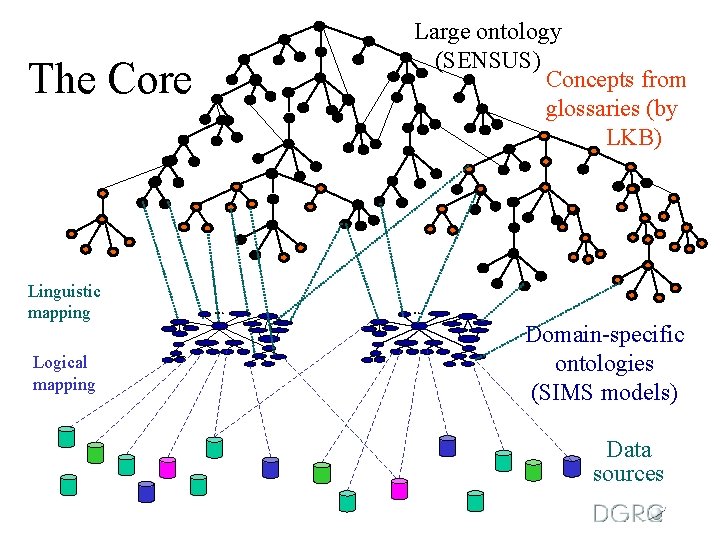

The Core (diagram)

- Layers: large ontology (SENSUS); concepts from glossaries (by LKB); domain-specific ontologies (SIMS models); data sources
- Mappings: linguistic mapping; logical mapping

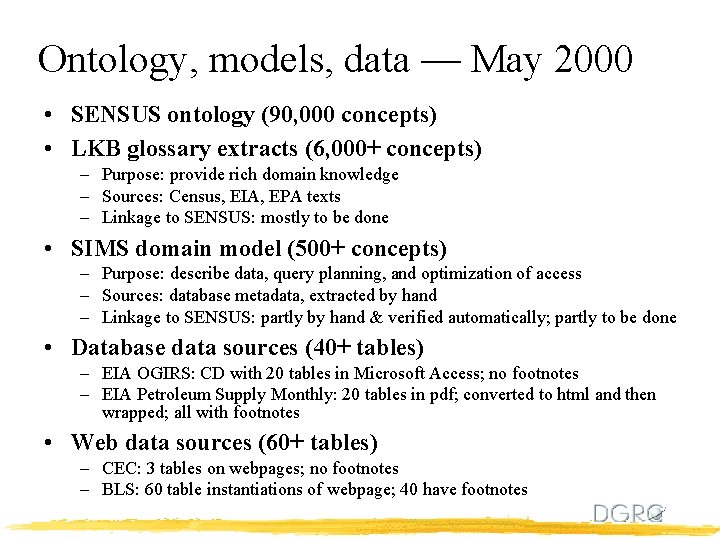

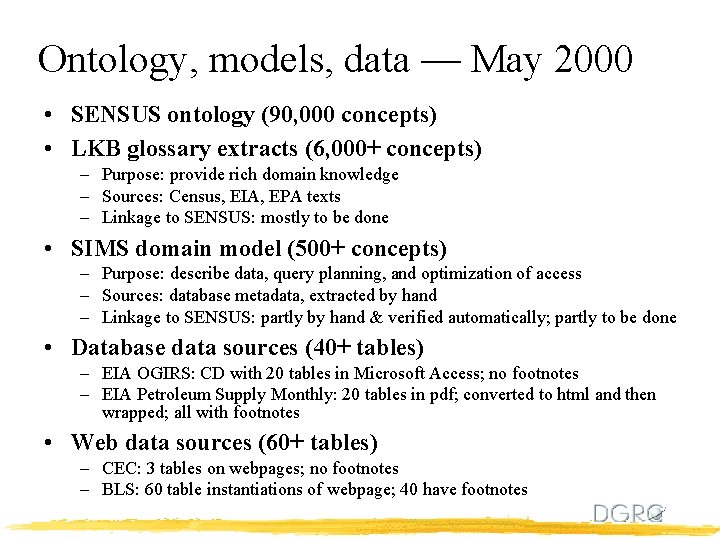

Ontology, models, data (May 2000)

- SENSUS ontology (90,000 concepts)
- LKB glossary extracts (6,000+ concepts)
  - Purpose: provide rich domain knowledge
  - Sources: Census, EIA, EPA texts
  - Linkage to SENSUS: mostly to be done
- SIMS domain model (500+ concepts)
  - Purpose: describe data, query planning, and optimization of access
  - Sources: database metadata, extracted by hand
  - Linkage to SENSUS: partly by hand & verified automatically; partly to be done
- Database data sources (40+ tables)
  - EIA OGIRS: CD with 20 tables in Microsoft Access; no footnotes
  - EIA Petroleum Supply Monthly: 20 tables in PDF; converted to HTML and then wrapped; all with footnotes
- Web data sources (60+ tables)
  - CEC: 3 tables on webpages; no footnotes
  - BLS: 60 table instantiations of webpage; 40 have footnotes

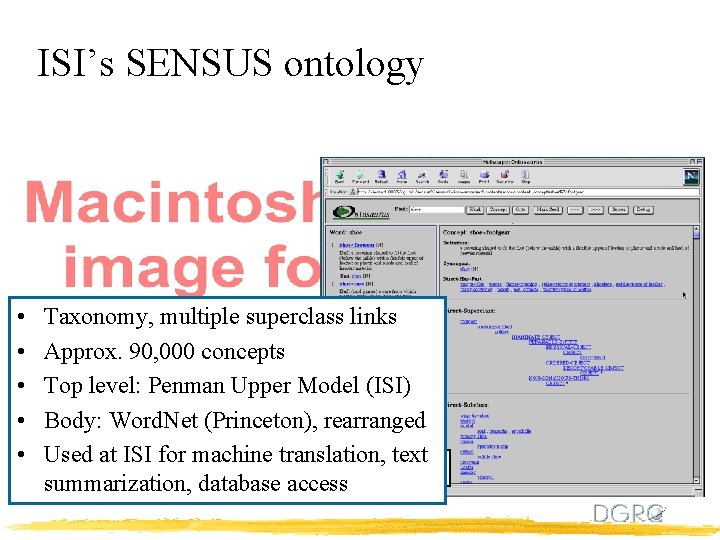

ISI’s SENSUS ontology

- Taxonomy, multiple superclass links
- Approx. 90,000 concepts
- Top level: Penman Upper Model (ISI)
- Body: WordNet (Princeton), rearranged
- Used at ISI for machine translation, text summarization, database access

http://ariadne.isi.edu:8005/sensus-edc/

Ontologizing data models: plan

Step 1: starting up

- for each database, create SIMS domain model(s) by hand
- link domain models to ontology by hand

Step 2: automate linkage

- create (semi-)automated linkage procedures

Step 3: induce domain model

- (semi-)automatically extract family of related features from data and metadata, with reference to data values, glossaries, and ontology itself where possible
- extract info from text and glossaries and add to features
- coalesce features into concepts and taxonomize under ontology
- extract/induce inter-concept relationships
- validate model, automatically and by hand

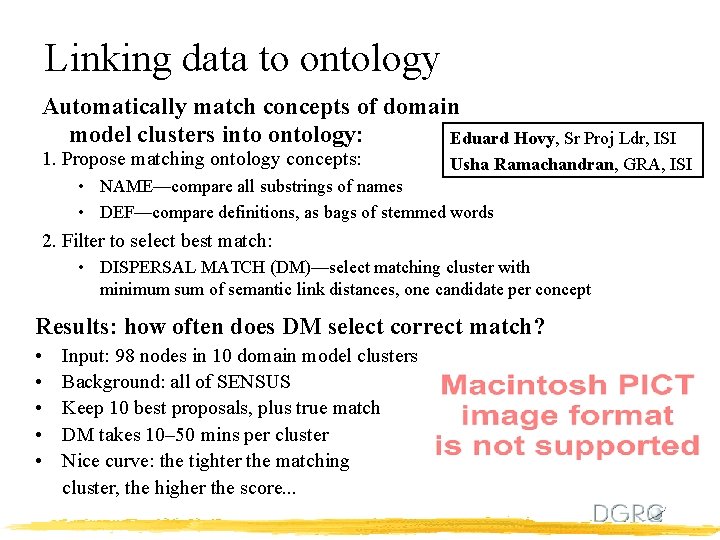

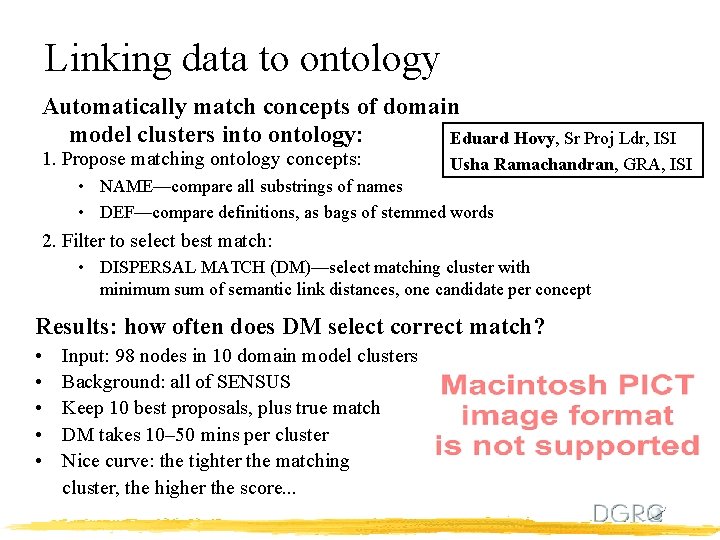

Linking data to ontology

Eduard Hovy, Sr Proj Ldr, ISI; Usha Ramachandran, GRA, ISI

Automatically match concepts of domain model clusters into ontology:

1. Propose matching ontology concepts:
   - NAME: compare all substrings of names
   - DEF: compare definitions, as bags of stemmed words
2. Filter to select best match:
   - DISPERSAL MATCH (DM): select matching cluster with minimum sum of semantic link distances, one candidate per concept

Results: how often does DM select correct match?

- Input: 98 nodes in 10 domain model clusters
- Background: all of SENSUS
- Keep 10 best proposals, plus true match
- DM takes 10–50 mins per cluster

Nice curve: the tighter the matching cluster, the higher the score...
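The propose-then-filter matching described on this slide can be illustrated with a minimal Python sketch. It is not the EDC implementation: the stemmer, the Jaccard overlap used for DEF, the brute-force search, and every name here (`crude_stem`, `def_score`, `dispersal_match`, the toy links) are assumptions made for illustration. Semantic link distance is approximated as the shortest path length between ontology nodes.

```python
from collections import deque
from itertools import combinations, product


def crude_stem(word):
    """Very rough suffix stripping; a stand-in for a real stemmer (assumption)."""
    for suffix in ("ing", "es", "ed", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def def_score(definition_a, definition_b):
    """DEF-style score: overlap of stemmed word sets (Jaccard, an assumption)."""
    bag_a = {crude_stem(w) for w in definition_a.lower().split()}
    bag_b = {crude_stem(w) for w in definition_b.lower().split()}
    union = bag_a | bag_b
    return len(bag_a & bag_b) / len(union) if union else 0.0


def build_graph(edges):
    """Turn a list of (node, node) links into an undirected adjacency map."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def link_distance(graph, start, goal):
    """Number of links on the shortest path between two ontology nodes (BFS)."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt == goal:
                return dist + 1
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return float("inf")


def dispersal_match(graph, candidates):
    """Pick one candidate per concept so the summed pairwise distance is minimal.

    Brute force over all combinations; only suitable for tiny examples.
    """
    concepts = list(candidates)
    best_choice, best_cost = None, float("inf")
    for choice in product(*(candidates[c] for c in concepts)):
        cost = sum(link_distance(graph, a, b) for a, b in combinations(choice, 2))
        if cost < best_cost:
            best_choice, best_cost = choice, cost
    return dict(zip(concepts, best_choice)), best_cost


# Toy ontology fragment and candidate proposals (hypothetical data).
TOY_GRAPH = build_graph([
    ("entity", "fuel"), ("fuel", "gasoline"), ("fuel", "diesel"),
    ("entity", "animal"),
])
TOY_CANDIDATES = {"Gasoline": ["gasoline", "animal"], "Diesel": ["diesel"]}
mapping, cost = dispersal_match(TOY_GRAPH, TOY_CANDIDATES)
```

In the toy data the tight cluster (gasoline and diesel, both under fuel) wins over the dispersed one. The exhaustive search grows exponentially with cluster size, which fits the slide's remark that DM takes tens of minutes per cluster.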

Extracting metadata from text

Judith Klavans, Dir of CRIA, Columbia; Brian Whitman, GRA, Columbia

Problems:

- Proliferation of terms in domain
- Agencies define terms differently
- Many refer to the same or related entity
- Lengthy term definitions often contain important information which is buried

Example input:

Motor Gasoline Blending Components: Naphthas (e.g., straight-run gasoline, alkylate, reformate, benzene, toluene, xylene) used for blending or compounding into finished motor gasoline. These components include reformulated gasoline blendstock for oxygenate blending (RBOB) but exclude oxygenates (alcohols, ethers), butane, and pentanes plus. Note: Oxygenates are reported as individual components and are included in the total for other hydrocarbons, hydrogens, and oxygenates.
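To make "buried" information concrete, here is a small Python sketch that pulls a head term and the include/exclude phrases out of a glossary entry like the example above. It relies on hand-written regular expressions and is only an illustration; it is not the Columbia tooling, and the function name `parse_definition` and the output keys are hypothetical.

```python
import re


def parse_definition(entry):
    """Split 'Term: definition' and pick out a few cues with simple patterns."""
    term, _, body = entry.partition(":")
    # Head (genus) term: leading words of the definition up to "(" or ",".
    genus = re.match(r"\s*([A-Za-z][A-Za-z -]*?)\s*(?:\(|,|\.)", body)
    # Phrase after "include", stopping at "but exclude" or the sentence end.
    includes = re.search(r"\binclude\s+(.+?)(?=\s+but\s+exclude\b|\.)", body)
    # Phrase after "exclude", up to the sentence end.
    excludes = re.search(r"\bexclude\s+(.+?)\.", body)
    return {
        "term": term.strip(),
        "genus": genus.group(1) if genus else None,
        "includes": includes.group(1) if includes else None,
        "excludes": excludes.group(1) if excludes else None,
    }


ENTRY = (
    "Motor Gasoline Blending Components: Naphthas (e.g., straight-run gasoline, "
    "alkylate, reformate, benzene, toluene, xylene) used for blending or "
    "compounding into finished motor gasoline. These components include "
    "reformulated gasoline blendstock for oxygenate blending (RBOB) but exclude "
    "oxygenates (alcohols, ethers), butane, and pentanes plus."
)
parsed = parse_definition(ENTRY)
```

Patterns this brittle would not survive the variety of agency glossaries, which is presumably why the project combines statistical and linguistic analysis rather than fixed rules.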

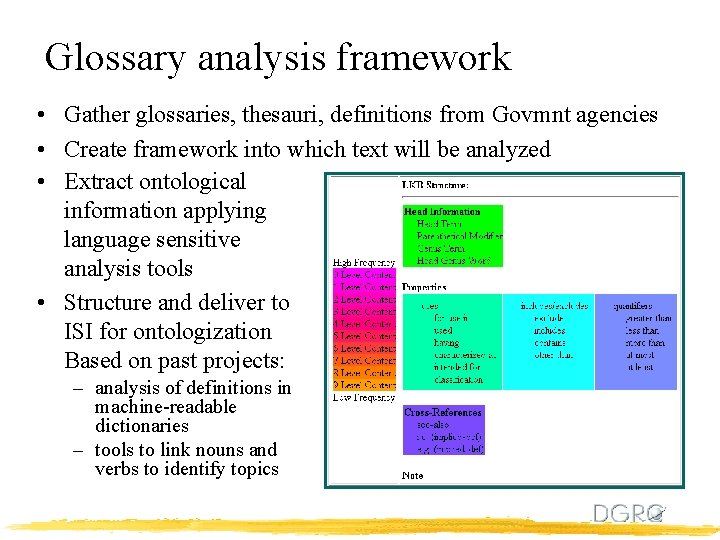

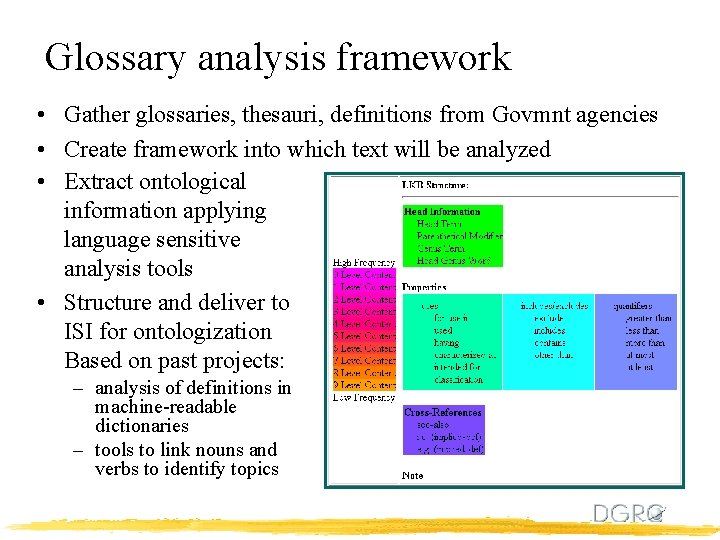

Glossary analysis framework

- Gather glossaries, thesauri, definitions from Government agencies
- Create framework into which text will be analyzed
- Extract ontological information applying language-sensitive analysis tools
- Structure and deliver to ISI for ontologization

Based on past projects:

- analysis of definitions in machine-readable dictionaries
- tools to link nouns and verbs to identify topics

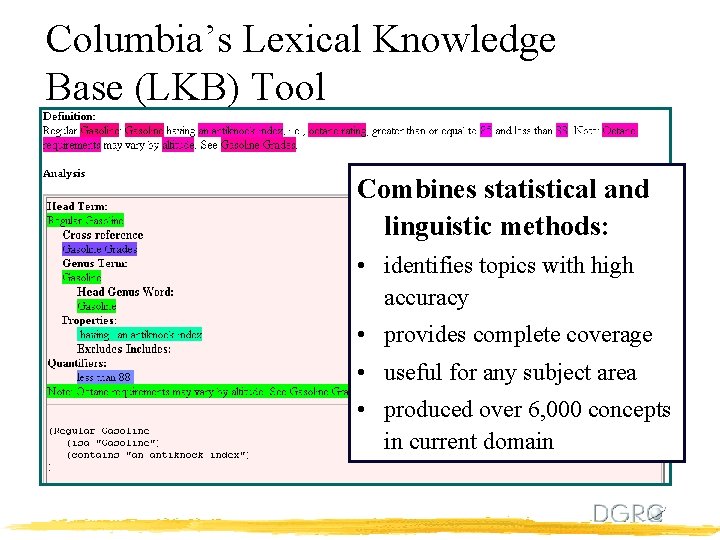

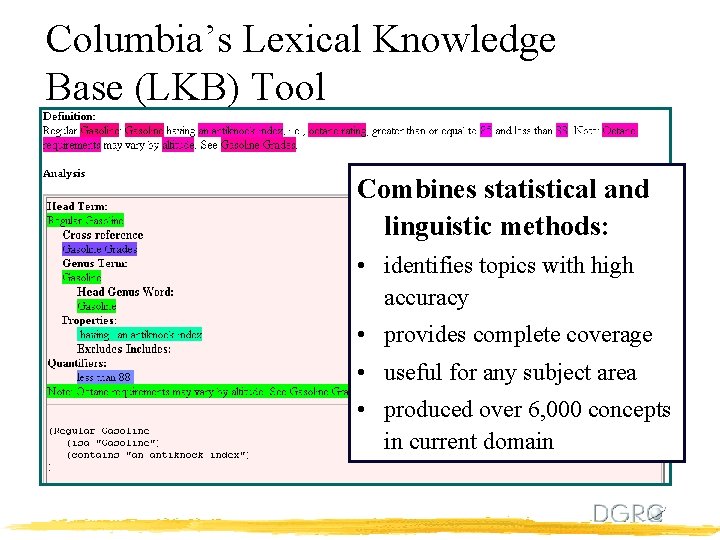

Columbia’s Lexical Knowledge Base (LKB) Tool

Combines statistical and linguistic methods:

- identifies topics with high accuracy
- provides complete coverage
- useful for any subject area
- produced over 6,000 concepts in current domain

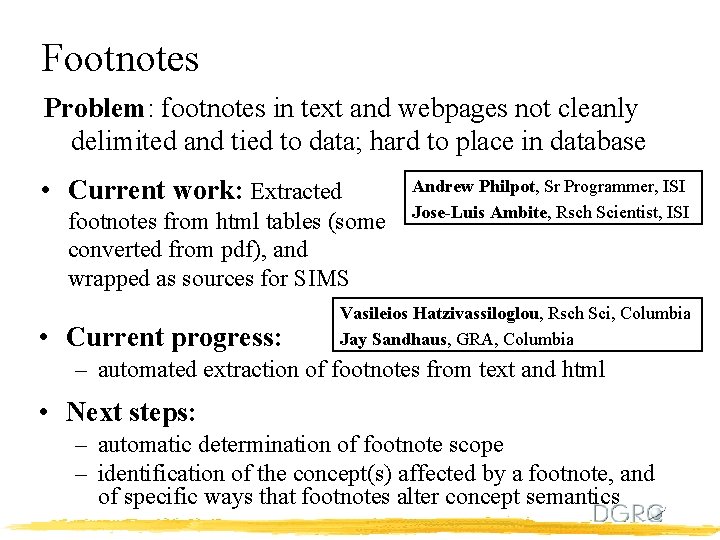

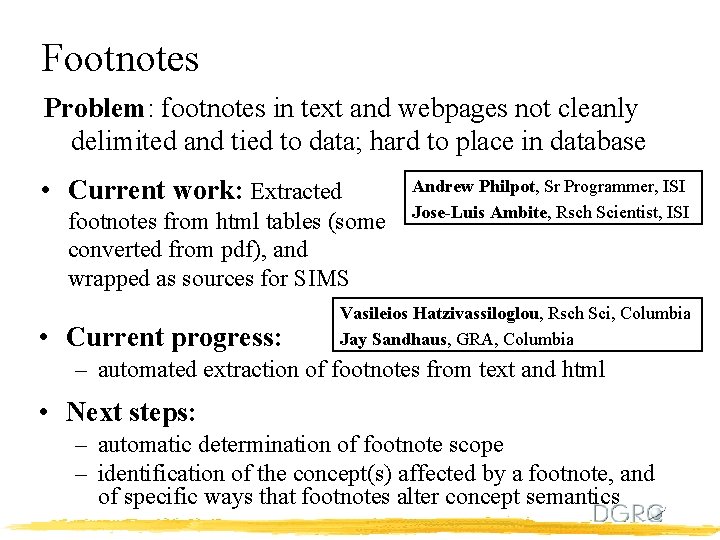

Footnotes

Andrew Philpot, Sr Programmer, ISI; Jose-Luis Ambite, Rsch Scientist, ISI; Vasileios Hatzivassiloglou, Rsch Sci, Columbia; Jay Sandhaus, GRA, Columbia

Problem: footnotes in text and webpages are not cleanly delimited and tied to data; hard to place in database

- Current work: extracted footnotes from HTML tables (some converted from PDF), and wrapped as sources for SIMS
- Current progress:
  - automated extraction of footnotes from text and HTML
- Next steps:
  - automatic determination of footnote scope
  - identification of the concept(s) affected by a footnote, and of specific ways that footnotes alter concept semantics
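As a rough illustration of tying footnotes to table cells, the sketch below parses a small HTML table with Python's standard `html.parser`. It assumes a much cleaner layout than the slide describes: markers appear as `<sup>` inside cells, and footnote texts follow the table as lines beginning with the marker number. The class and function names and the sample markup are hypothetical.

```python
import re
from html.parser import HTMLParser


class FootnoteTableParser(HTMLParser):
    """Collect cell text, per-cell footnote markers, and text after the table."""

    def __init__(self):
        super().__init__()
        self.rows = []           # list of rows, each a list of cell strings
        self.markers = {}        # (row, col) -> list of marker strings
        self.trailing_text = []  # text chunks found outside any table
        self._in_table = False
        self._in_cell = False
        self._in_sup = False

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self._in_table = True
        elif tag == "tr" and self._in_table:
            self.rows.append([])
        elif tag in ("td", "th") and self._in_table and self.rows:
            self.rows[-1].append("")
            self._in_cell = True
        elif tag == "sup" and self._in_cell:
            self._in_sup = True

    def handle_endtag(self, tag):
        if tag == "table":
            self._in_table = False
        elif tag in ("td", "th"):
            self._in_cell = False
        elif tag == "sup":
            self._in_sup = False

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        if self._in_cell:
            if self._in_sup:
                key = (len(self.rows) - 1, len(self.rows[-1]) - 1)
                self.markers.setdefault(key, []).append(text)
            else:
                self.rows[-1][-1] += text
        elif not self._in_table:
            self.trailing_text.append(text)


def extract_footnotes(html):
    """Return (rows, {cell position: [footnote texts]})."""
    parser = FootnoteTableParser()
    parser.feed(html)
    parser.close()
    definitions = {}
    for line in parser.trailing_text:
        match = re.match(r"^(\d+)[.)]?\s+(.+)$", line)
        if match:
            definitions[match.group(1)] = match.group(2)
    linked = {
        cell: [definitions.get(marker, "") for marker in cell_markers]
        for cell, cell_markers in parser.markers.items()
    }
    return parser.rows, linked


SAMPLE = """
<table>
<tr><th>Month</th><th>Price</th></tr>
<tr><td>Jan</td><td>1.15<sup>1</sup></td></tr>
</table>
<p>1 Preliminary estimate.</p>
"""
rows, footnotes = extract_footnotes(SAMPLE)
```

The sketch links each footnote to a single cell only. Deciding whether a footnote applies to a cell, a column, or the whole table is the scope problem the slide lists under next steps.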

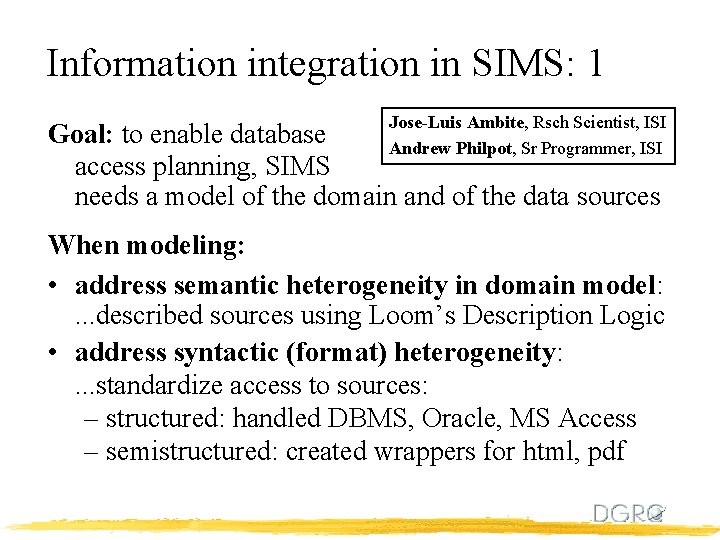

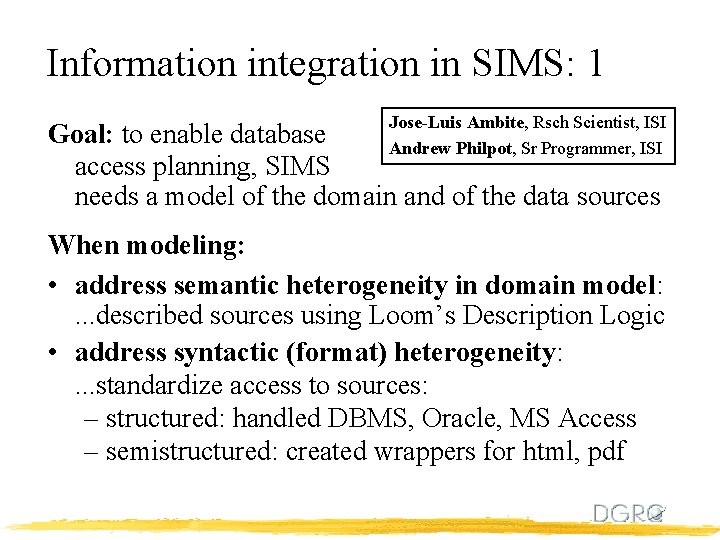

Information integration in SIMS: 1

Jose-Luis Ambite, Rsch Scientist, ISI; Andrew Philpot, Sr Programmer, ISI

Goal: to enable database access planning, SIMS needs a model of the domain and of the data sources

When modeling:

- address semantic heterogeneity in domain model: described sources using Loom’s Description Logic
- address syntactic (format) heterogeneity: standardize access to sources:
  - structured: handled DBMS, Oracle, MS Access
  - semistructured: created wrappers for HTML, PDF

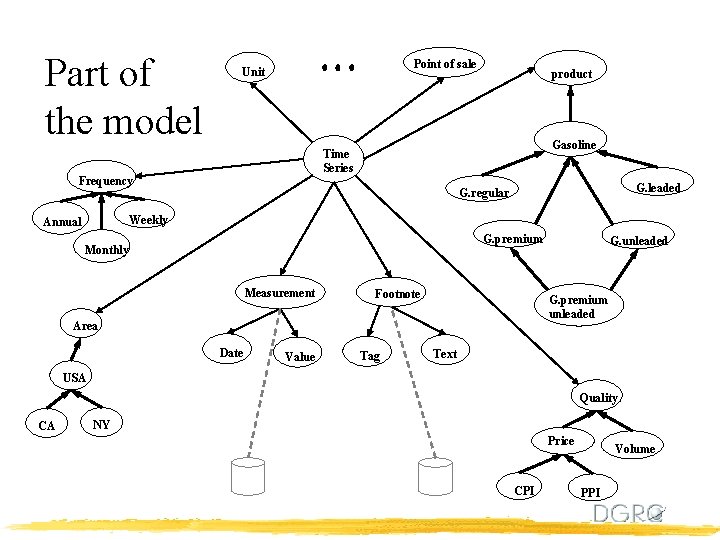

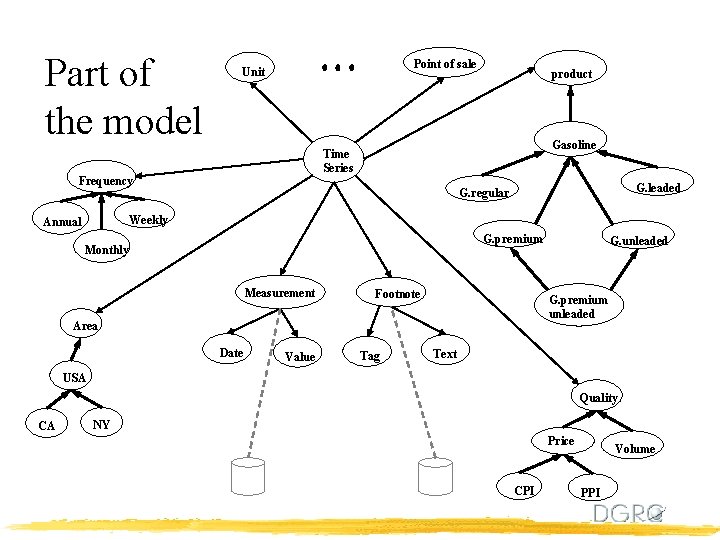

Part of the model (diagram)

Node labels: Point of sale; Unit product; Gasoline (G. leaded, G. unleaded, G. regular, G. premium, G. premium unleaded); Time Series; Frequency (Weekly, Monthly, Annual); Measurement; Footnote; Area (USA, CA, NY); Date; Value; Tag; Text; Quality; Price; CPI; Volume; PPI

Information integration in SIMS: 2

How to wrap sources?

- Manually, for PDF, plain text, footnotes
- Automatically, using parsing rules based on landmarks in HTML pages (Ariadne project)
- Automated work will be incorporated and extended...

SIMS query processing:

- Give user equal access to data, metadata, and footnotes
- Support for source combination and query optimization:
  - Join, Union, Selection, interpreted predicates
- Challenges:
  - approximation: handle similar domain model concepts
  - aggregation: reformulate data of various granularities
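The idea of landmark-based parsing rules can be shown with a very small Python sketch: skip forward past a sequence of landmark strings, then take everything up to an end landmark. This is a simplified illustration and not the Ariadne rule language; `extract_between` and the sample page are hypothetical.

```python
def extract_between(page, start_landmarks, end_landmark):
    """Skip past each start landmark in order, then return text up to the end landmark.

    Returns None when any landmark is missing from the page.
    """
    pos = 0
    for mark in start_landmarks:
        idx = page.find(mark, pos)
        if idx == -1:
            return None
        pos = idx + len(mark)
    end = page.find(end_landmark, pos)
    if end == -1:
        return None
    return page[pos:end].strip()


PAGE = "<h2>Regular gasoline</h2><b>Price:</b> 1.29 <br><b>Unit:</b> USD/gal <br>"
price = extract_between(PAGE, ["Regular gasoline", "<b>Price:</b>"], "<br>")
unit = extract_between(PAGE, ["Regular gasoline", "<b>Unit:</b>"], "<br>")
```

Rules of this kind depend only on stable markup near the data, so they tolerate changes elsewhere on the page but break when the landmarks themselves change.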

Information integration: Aggregation

Luis Gravano, Asst Prof, Columbia; Anurag Singla, GRA, Columbia

Problem: Data is not in exactly the form the user needs (monthly, not annually; actual values, not averaged)

Solution: Attempt to provide unified view of data of various granularities:

- time period
- geographical region
- product
- …

Example over BLS data:

- View: monthly data available for all geographical regions
- Query: monthly prices for LA in 1979
- Answer: yearly price for LA in 1979

Aggregation challenges

- Different coverage along these dimensions across data sets
- Users see a simple, unified view of the data; if a query cannot be answered, we answer the closest query that we have data for
- Answers are always exact
- Key challenges:
  - defining query proximity (default vs. user-specific)
  - communicating ‘query relaxation’ to users
  - defining and navigating the space of ‘answerable’ queries efficiently
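One way to picture "answer the closest query that we have data for" is the Python sketch below. It assumes a two-dimensional query (region, time granularity), a fixed coarsening order for each dimension, and a proximity measure equal to the number of coarsening steps, with ties broken in favor of keeping the requested region. All of these choices, and the names `REGION_PARENT`, `TIME_LEVELS`, and `relax`, are assumptions; the slide lists defining proximity as an open challenge.

```python
from itertools import product

# Hypothetical hierarchies: each region rolls up to its parent,
# and time granularity can only be coarsened left to right.
REGION_PARENT = {"LA": "CA", "CA": "USA"}
TIME_LEVELS = ["monthly", "yearly"]


def region_chain(region):
    """The region followed by its ancestors, nearest first."""
    chain = [region]
    while chain[-1] in REGION_PARENT:
        chain.append(REGION_PARENT[chain[-1]])
    return chain


def relax(query, available):
    """Return (closest answerable query, number of relaxation steps), or None."""
    region, granularity = query
    regions = region_chain(region)
    times = TIME_LEVELS[TIME_LEVELS.index(granularity):]
    candidates = []
    for (r_steps, r), (t_steps, t) in product(enumerate(regions), enumerate(times)):
        if (r, t) in available:
            # Sort key: total steps first, then fewest region steps.
            candidates.append((r_steps + t_steps, r_steps, (r, t)))
    if not candidates:
        return None
    steps, _, answer = min(candidates)
    return answer, steps


AVAILABLE = {("LA", "yearly"), ("CA", "monthly"), ("USA", "monthly")}
result = relax(("LA", "monthly"), AVAILABLE)
```

With this toy coverage, a request for monthly LA data is relaxed to yearly LA data, mirroring the BLS example. The returned step count is one simple way to tell the user that the query was relaxed.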

System interface

Vasileios Hatzivassiloglou, Rsch Sci, Columbia; Jay Sandhaus, GRA, Columbia

Components:

1. Query formation
2. Ontology/glossary browsing for concept navigation
3. Answer display, interaction history

GUI incorporates key technologies for facilitating user access to diverse databases:

- Context-sensitive menu-based input mechanism
- Visualization and navigation of results and the ontology
- Lightweight client runs on multiple platforms without downloads
- Java/Swing implementation allows client-side processing

Demonstration

Conclusion

Major research challenges:

- Ontology structure: recognizing and modeling closely similar domain concepts across agencies
- Semi-automated domain modeling and info extraction, and linkage to ontology
- Automated data aggregation
- Footnote extraction and display

Major practical challenges:

- Getting a lot of data into the system
- Understanding users’ needs: interface, information...

Thank you! Any questions?

Eduard hovy

Eduard hovy Eduard hovy

Eduard hovy Pivne motto

Pivne motto Eduard slavoljub penkala thea penkala

Eduard slavoljub penkala thea penkala Eduard spranger dominant values theory

Eduard spranger dominant values theory Eduard bass prezentace

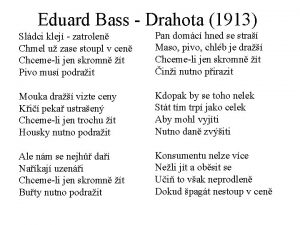

Eduard bass prezentace Eduard bass drahota

Eduard bass drahota Eduard zyuss

Eduard zyuss Eduard baltzer

Eduard baltzer Eduard mörike lebenslauf

Eduard mörike lebenslauf Jean marc itard special education

Jean marc itard special education Eduard aleksanyan

Eduard aleksanyan Nedbank classic credit card travel medical insurance

Nedbank classic credit card travel medical insurance Eduard degas

Eduard degas Eduard baco

Eduard baco Eduard heindl

Eduard heindl Eduard kontar

Eduard kontar Ludwig eduard boltzmann

Ludwig eduard boltzmann Odinets

Odinets Kterou knihu nenapsal eduard štorch

Kterou knihu nenapsal eduard štorch Kristi carr

Kristi carr Netograf.ro

Netograf.ro Eduard porosnicu

Eduard porosnicu Eduard porosnicu

Eduard porosnicu Landsat collection 1 vs collection 2

Landsat collection 1 vs collection 2