The Architecture of the ZEUS Micro Vertex Detector

- Slides: 20

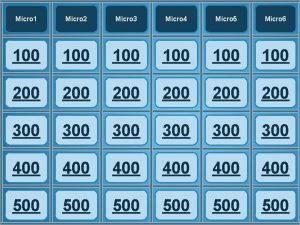

The Architecture of the ZEUS Micro Vertex Detector DAQ and Second Level Global Track Trigger

Alessandro Polini, DESY/Bonn

ZEUS MVD and GTT Group: ANL, Bonn Univ., DESY (Hamburg and Zeuthen), Hamburg Univ., KEK-Japan, NIKHEF, Oxford Univ., Bologna, Firenze, Padova, Torino Univ. and INFN, UCL, Yale, York.

CHEP 2003, La Jolla, 23-28 March 2003

Outline

- The ZEUS Silicon Micro Vertex Detector
- ZEUS Experiment Environment and Requirements
- DAQ and Control Description
- The Global Track Trigger
- Performance and first experience with real data
- Summary and Outlook

Detector Layout

[Figure: MVD layout. Forward section 410 mm, barrel section 622 mm; beams: e± at 27.5 GeV, p at 920 GeV.]

- The forward section consists of 4 wheels with 28 wedge-shaped silicon sensors per layer, providing r-φ information.
- The barrel section provides 3 layers of support frames (ladders) which hold 5 full modules, 600 square sensors in total, providing r-φ and r-z space points.

The ZEUS Detector

[Figure: ZEUS three-level trigger and data flow. Beams: e± at 27.5 GeV, p at 920 GeV; bunch crossing interval 96 ns (10^7 Hz). Component front ends (CTD, CAL, other components) with pipelines feed the component first level triggers (CTD FLT, CAL FLT) and the Global First Level Trigger (500 Hz). Event buffers feed the component second level triggers (CTD SLT, CAL SLT) and the Global Second Level Trigger (40 Hz). The GSLT accept/reject steers the Event Builder, followed by the Third Level Trigger CPU farm (5 Hz) and offline tape. Timing labels in the figure: 5 μs pipeline, ~0.7 μs, ~10 ms.]

ZEUS: 3-level trigger system (rate 500 Hz → 40 Hz → 5 Hz)
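The rate figures on this slide can be turned into per-level rejection factors with a few lines of arithmetic. The following is a minimal sketch, not part of the talk; the function names are illustrative, and the only inputs taken from the slide are the 96 ns crossing interval and the 500 Hz, 40 Hz and 5 Hz output rates.

```c
#include <assert.h>

/* Bunch-crossing rate in Hz for a crossing interval given in nanoseconds. */
double crossing_rate_hz(double interval_ns)
{
    assert(interval_ns > 0.0);
    return 1.0e9 / interval_ns;
}

/* Rejection factor of one trigger level: input rate divided by output rate. */
double rejection_factor(double rate_in_hz, double rate_out_hz)
{
    assert(rate_out_hz > 0.0);
    return rate_in_hz / rate_out_hz;
}
```

With these helpers, `crossing_rate_hz(96.0)` gives roughly 1.04 × 10^7 Hz, `rejection_factor(500.0, 40.0)` gives 12.5 for the second level and `rejection_factor(40.0, 5.0)` gives 8 for the third level.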

MVD DAQ and Trigger Design

- ZEUS experiment designed by the end of the '80s
  - First high rate (96 ns) pipelined system
  - With a flexible 3-level trigger
  - Main building blocks were transputers (20 Mbit/s)
- 10 years later, the MVD:
  - 208,000 analog channels
  - MVD available for triggering from the 2nd level trigger on
- DAQ design choice:
  - Use off-the-shelf products whenever possible
  - VME embedded systems for readout: priority scheduling absolutely needed, hence LynxOS (real-time OS)
  - Commercial Fast Ethernet/Gigabit network
  - Linux PCs for data processing

Detector Front-end Chip HELIX 3.0\*

- 128-channel analog pipelined programmable readout system specifically designed for the HERA environment
- Highly programmable for wide and flexible usage
- ENC [e] ≈ 400 + 40·C [pF] (no radiation damage included, S/N ~13)
- Data read out and multiplexed (96 ns) over the analog output
- Internal test pulse and failsafe token ring (8 chips) capability

[Figure: front-end hybrid and silicon sensors; dimensions 125 mm and 64 mm.]

\* Uni. Heidelberg, NIM A 447, 89 (2000)

The ADC Modules and the Readout

Read-out: custom-made ADC modules\*

- 9U VME board + private bus extensions
- 8 detector modules per board (~8000 channels)
- 10-bit resolution
- Common mode, pedestal and noise subtraction
- Strip clustering
- 2 separate data buffers:
  - cluster data (for trigger purposes)
  - raw/strip data for accepted events
- Design event data sizes:
  - Max. raw data size: 1.5 MB/event (~208,000 channels)
  - Strip data: noise threshold 3 sigma (~15 KB)
  - Cluster data: ~8 KB

\* KEK Tokyo, NIM A 436, 281 (1999)
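The processing chain listed above (pedestal subtraction, common mode subtraction, a 3 sigma noise threshold, strip clustering) can be illustrated in software. This is a minimal sketch under stated assumptions, not the ADC module firmware: the common mode is taken as the plain mean of the pedestal-subtracted strips, a cluster is any run of adjacent strips above threshold, and all names (`cluster_t`, `correct_strips`, `find_clusters`) are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    size_t first;   /* index of the first strip in the cluster */
    size_t width;   /* number of adjacent strips above threshold */
    double charge;  /* summed corrected signal, in ADC counts */
} cluster_t;

/* Subtract per-strip pedestals, then the common mode.
 * Assumption: common mode = plain mean of pedestal-subtracted values. */
void correct_strips(const int *raw, const double *ped, double *sig, size_t n)
{
    assert(n > 0);
    double cm = 0.0;
    for (size_t i = 0; i < n; i++) {
        sig[i] = raw[i] - ped[i];
        cm += sig[i];
    }
    cm /= (double)n;
    for (size_t i = 0; i < n; i++)
        sig[i] -= cm;
}

/* Group adjacent strips with sig > nsigma * noise into clusters.
 * Returns the number of clusters written to out (at most max_out). */
size_t find_clusters(const double *sig, const double *noise, size_t n,
                     double nsigma, cluster_t *out, size_t max_out)
{
    size_t count = 0;
    size_t i = 0;
    while (i < n && count < max_out) {
        if (sig[i] <= nsigma * noise[i]) {
            i++;
            continue;
        }
        cluster_t c = { i, 0, 0.0 };
        while (i < n && sig[i] > nsigma * noise[i]) {
            c.width++;
            c.charge += sig[i];
            i++;
        }
        out[count++] = c;
    }
    return count;
}
```

Calling `find_clusters` with `nsigma = 3.0` corresponds to the 3 sigma noise threshold quoted for the strip data. A production version would typically exclude signal strips from the common mode estimate.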

VME Data Gathering and Control

- Data gathering and readout control using LynxOS 3.01 real-time OS on network-booted Motorola MVME2400/MVME2700 PPC VME computers
- VME functionality provided by a purpose-developed VME driver/library, uvmelib\*: multiuser VME access, contiguous memory mapping and DMA transfers, VME interrupt handling and process synchronization
- System is interrupt driven (data transfer on ADCM data ready via DMA)
- Custom-designed VME "all-purpose latency clock + interrupt board"
- Full DAQ-wide latency measurement system
- Data transfer over Fast Ethernet/Gigabit network using TCP/IP connections
- Data transfer as a binary stream with an XDR header
- Data file playback capability (Monte Carlo or dumped data)

[Figure: MVD VME readout crates (analog links, ADCM modules, NIM + latency, clock + control, LynxOS CPU), GSLT VME interface crate (GSLT 2TP modules, NIM + latency, LynxOS CPU), slow control + latency clock modules, and the CPU boot server and control, connected through the F/E network.]

\* http://mvddaq.desy.de
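To make the "binary stream with an XDR header" idea concrete, here is a minimal sketch of writing and reading a fixed header in XDR style, where every unsigned integer occupies 4 bytes in big-endian order. The header fields (`source_id`, `event_number`, `payload_bytes`) are hypothetical and chosen only for illustration; the talk does not specify the actual header layout.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical header layout; field names are illustrative only. */
typedef struct {
    uint32_t source_id;
    uint32_t event_number;
    uint32_t payload_bytes;
} stream_header_t;

enum { HEADER_WIRE_BYTES = 12 };

/* Store one 32-bit value in big-endian (XDR) byte order. */
void put_u32(unsigned char *p, uint32_t v)
{
    p[0] = (unsigned char)(v >> 24);
    p[1] = (unsigned char)(v >> 16);
    p[2] = (unsigned char)(v >> 8);
    p[3] = (unsigned char)v;
}

/* Load one 32-bit value from big-endian (XDR) byte order. */
uint32_t get_u32(const unsigned char *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Encode the header into buf; returns the number of bytes written. */
size_t encode_header(const stream_header_t *h, unsigned char *buf, size_t buflen)
{
    assert(buflen >= HEADER_WIRE_BYTES);
    put_u32(buf, h->source_id);
    put_u32(buf + 4, h->event_number);
    put_u32(buf + 8, h->payload_bytes);
    return HEADER_WIRE_BYTES;
}

/* Decode a header previously written by encode_header. */
void decode_header(const unsigned char *buf, stream_header_t *h)
{
    h->source_id = get_u32(buf);
    h->event_number = get_u32(buf + 4);
    h->payload_bytes = get_u32(buf + 8);
}
```

Because the byte order is fixed on the wire, the same header can be decoded on a big-endian PowerPC VME CPU and on a little-endian Linux PC, which is the usual motivation for an XDR-encoded header in front of an otherwise opaque payload.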

UVMElib\* Software Package

- Exploit the Tundra Universe II VME bridge features
  - 8 independent windows for R/W access to the VME
  - Flexible interrupt and DMA capabilities
- Library layered on an enhanced uvmedriver
  - Mapping of VME addresses and contiguous PCI RAM segments
  - Each window (8 VME, N PCI) addressed by a kernel uvme_smem_t structure
- Performance
  - 18 MB/s DMA transfer on standard VMEbus
  - Less than 50 μs response on VME IRQ
- Additional features
  - Flexible interrupt usage via global system semaphores
  - Additional semaphores for process synchronization
  - DMA PCI-VME transfer queuing
  - Easy system monitoring via semaphore status and counters

The uvme_smem_t structure, with the slide's annotations as comments:

    typedef struct {
        int id;
        unsigned mode;      /* addressing mode: A32D32, A24D32, A16D16...; SYS, USR... */
        int size;
        unsigned physical;  /* for DMA transfer */
        unsigned virtual;   /* for R/W operations */
        char name[20];      /* symbolic mapping */
    } uvme_smem_t;

\* http://mvddaq.desy.de/

Interfaces to the existing ZEUS environment

- The ZEUS experiment is based on transputers
- Interfaces for data gathering from other detectors and the connection to the existing Global Second Level Trigger use ZEUS 2TP\* modules
- All newer components interface using Fast/Gbit Ethernet
- VME TP connections are planned to be upgraded to a Linux PC + PCI-TP interface

\* NIKHEF, NIM A 332, 263 (1993)

The Global Tracking Trigger

Requirements

- Higher quality track reconstruction and rate reduction
- Z vertex resolution: from 9 cm (CTD only) to 400 μm (MVD+CTD+GTT)
- Decision required within existing SLT (<15 ms)

Development path

- MVD participation in GFLT not feasible: readout latency too large.
- Participation at GSLT possible:
  - Tests of pushing ADC data over Fast Ethernet give acceptable rate/latency performance.
  - But track and vertex information poor due to low number of planes.
- Expand scope to interface data from other tracking detectors:
  - Initially Central Tracking Detector (CTD): overlap with barrel detectors
  - Later Straw Tube Tracker (STT): overlap with wheel detectors
- Implement GTT as a PC farm with TCP data and control path

[Figure: dijet MC event.]

The MVD Data Acquisition System and GTT

[Figure: block diagram of the MVD DAQ and GTT, with the following elements.]

- MVD HELIX front-end and patch boxes, sending analog data to the MVD VME readout; HELIX driver crate (LynxOS CPU, HELIX driver front-end)
- MVD VME readout: Crate 0 (MVD top, C+C master), Crate 1 (MVD bottom, C+C slave) and Crate 2 (MVD forward, C+C slave), each with analog links, ADCM modules, NIM + latency, clock + control and a LynxOS CPU
- Central Tracking Detector read-out: CTD 2TP modules, VME TP connection, data from CTD, LynxOS CPU
- Forward tracking, Straw Tube Tracker read-out: STT 2TP module, VME TP connection, data from STT, LynxOS CPU
- Global First Level Trigger, busy, error (GFLT rate 800 Hz)
- Global Tracking Trigger processors; GTT control + fan-out
- GSLT 2TP modules: TP connection to the Global Second Level Trigger; Global Second Level Trigger decision
- Fast Ethernet/Gigabit network
- Slow control + latency clock modules; VME CPU boot server and control
- Main MVDDAQ server: local control, Event-Builder interface; network connection to the ZEUS Event Builder (~100 Hz)
- ZEUS Run Control and Online Monitoring Environment

GTT hardware implementation

- MVD readout: 3 Motorola MVME2400 450 MHz
- CTD/STT interfaces:
  - NIKHEF-2TP VME-Transputer
  - Motorola MVME2400 450 MHz
- PC farm: 12 DELL PowerEdge 4400 Dual 1 GHz
- GTT/GSLT result interface: Motorola MVME2700 367 MHz
- GSLT/EVB trigger result interface:
  - DELL PowerEdge 4400 Dual 1 GHz
  - DELL PowerEdge 6450 Quad 700 MHz
- Network switches: 3 Intel Express 480T Fast/Giga 16 ports

[Figure: photographs labelled PC farm and switches, CTD/STT interface, MVD readout, GTT/GSLT interface, EVB/GSLT result interface.]

Thanks to Intel Corp., who provided high-performance switch and PowerEdge hardware via a Yale grant.

GTT Algorithm Description

- Modular algorithm design
  - Two concurrent algorithms (barrel/forward) foreseen
  - Process one event per host
  - Multithreaded event processing:
    - data unpacking
    - concurrent algorithms
    - time-out
- Test and simulation results:
  - 10 computing hosts required
  - "Control Credit" distribution, not round-robin
- At present the barrel algorithm is implemented
- The forward algorithm is in the development phase
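The difference between credit-based and round-robin distribution can be sketched as follows. This is an illustration under assumptions, not the GTT implementation: each host holds a number of credits (free processing slots), the dispatcher sends the next event to the host with the most credits (lowest index on ties), and a host hands its credit back when it finishes. The names `credit_pool_t`, `pool_init`, `pool_dispatch` and `pool_return_credit` are hypothetical; only the figure of 10 hosts comes from the slide.

```c
#include <assert.h>

enum { NUM_HOSTS = 10 };  /* 10 computing hosts, as quoted on the slide */

typedef struct {
    int credits[NUM_HOSTS];  /* free event slots per host */
} credit_pool_t;

/* Give every host the same initial number of credits. */
void pool_init(credit_pool_t *p, int credits_per_host)
{
    assert(credits_per_host >= 0);
    for (int h = 0; h < NUM_HOSTS; h++)
        p->credits[h] = credits_per_host;
}

/* Pick the host with the most credits (lowest index on ties), consume
 * one credit and return the host index; return -1 if no host is free. */
int pool_dispatch(credit_pool_t *p)
{
    int best = -1;
    for (int h = 0; h < NUM_HOSTS; h++) {
        if (p->credits[h] > 0 &&
            (best < 0 || p->credits[h] > p->credits[best]))
            best = h;
    }
    if (best >= 0)
        p->credits[best]--;
    return best;
}

/* Called when a host has finished an event and can accept another. */
void pool_return_credit(credit_pool_t *p, int host)
{
    assert(host >= 0 && host < NUM_HOSTS);
    p->credits[host]++;
}
```

With one credit per host (one event per host), a host that finishes early is reused immediately, whereas a round-robin scheme would move on to the next host regardless of whether it is still busy. When every host is busy the dispatcher returns -1 and the event has to wait.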

Barrel algorithm description

Find tracks in the CTD, extrapolate into the MVD to resolve pattern recognition ambiguity:

- Find segments in axial and stereo layers of the CTD
- Match axial segments to get r-φ tracks
- Match MVD r-φ hits
- Refit r-φ track including MVD r-φ hits

After finding 2-D tracks in r-φ, look for 3-D tracks in z versus axial track length, s:

- Match stereo segments to track in r-φ to get position for z-s fit
- Extrapolation to inner CTD layers
- If available, use coarse MVD wafer position to guide extrapolation
- Match MVD z hits
- Refit z-s track including z hits

Constrained or unconstrained fit:

- Pattern recognition better with constrained tracks
- Secondary vertices require unconstrained tracks
- Unconstrained track refit after MVD hits have been matched
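The z-s fit step can be illustrated with an ordinary straight-line fit, z = z0 + slope · s, where s is the axial track length. The sketch below is an unweighted least-squares fit under that assumption; the actual GTT fit (hit weighting, constraints, outlier handling) is not described on the slide, and the names `zs_fit_t` and `fit_zs` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    double z0;     /* z at s = 0 */
    double slope;  /* dz/ds */
} zs_fit_t;

/* Unweighted least-squares fit of z = z0 + slope * s.
 * Returns 0 on success, -1 if fewer than 2 points are given or
 * all s values coincide. */
int fit_zs(const double *s, const double *z, size_t n, zs_fit_t *out)
{
    assert(out != NULL);
    if (n < 2)
        return -1;
    double sum_s = 0.0, sum_z = 0.0, sum_ss = 0.0, sum_sz = 0.0;
    for (size_t i = 0; i < n; i++) {
        sum_s += s[i];
        sum_z += z[i];
        sum_ss += s[i] * s[i];
        sum_sz += s[i] * z[i];
    }
    double det = (double)n * sum_ss - sum_s * sum_s;
    if (det == 0.0)
        return -1;
    out->slope = ((double)n * sum_sz - sum_s * sum_z) / det;
    out->z0 = (sum_z * sum_ss - sum_s * sum_sz) / det;
    return 0;
}
```

In this picture, adding the matched MVD z hits to the arrays and calling the fit again is the "refit" step: points at small s close to the beam line tighten the extrapolated intercept z0.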

First tracking results

[Figures: GTT event display (Run 42314, Event 938); physics data vertex distribution (Run 44569, axis in mm) with the nominal vertex, proton beam-gas interactions and collimator C5 marked; dijet Monte Carlo vertex resolution.]

Resolution including MVD from MC: ~400 μm

First tracking results

[Figures: GTT event display (yet another background event); physics data vertex distribution (Run 44569, axis in mm) with the nominal vertex, proton beam-gas interactions and collimator C5 marked; dijet Monte Carlo vertex resolution.]

Resolution including MVD from MC: ~400 μm

First GTT latency results

[Figures, latency axes in ms: MVD VME SLT readout latency; CTD VME readout latency with respect to MVD; GTT latency after complete trigger processing; MVD-GTT latency as measured by the GSLT; mean GTT latency vs GFLT rate (Hz) per run, with curves labelled low data occupancy rate tests, Monte Carlo and HERA.]

- 2002 HERA running, after the luminosity upgrade, was compromised by high background rates
  - Mean data sizes from CTD and MVD much larger than the design values
- Sept 2002 runs used to tune data size cuts
  - Allowed the GTT to run with acceptable mean latency and tails at the GSLT
  - Design rate of 500 Hz appears possible
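"Acceptable mean latency and tails" can be quantified by two numbers per run: the mean latency and the fraction of events exceeding a latency budget. The following is a minimal sketch with hypothetical names (`latency_summary_t`, `summarize_latency`); the budget is a parameter, and the 15 ms SLT limit quoted earlier in the talk would be one natural choice.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    double mean_ms;        /* arithmetic mean of the latencies */
    double tail_fraction;  /* fraction of events above the budget */
} latency_summary_t;

/* Summarize n latency measurements (in ms) against a budget (in ms). */
latency_summary_t summarize_latency(const double *lat_ms, size_t n,
                                    double budget_ms)
{
    assert(n > 0);
    double sum = 0.0;
    size_t over = 0;
    for (size_t i = 0; i < n; i++) {
        sum += lat_ms[i];
        if (lat_ms[i] > budget_ms)
            over++;
    }
    latency_summary_t r;
    r.mean_ms = sum / (double)n;
    r.tail_fraction = (double)over / (double)n;
    return r;
}
```

For example, latencies of 2, 4, 6 and 20 ms against a 15 ms budget give a mean of 8 ms and a tail fraction of 0.25.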

MVD Slow Control

CANbus is the principal fieldbus used: 2 ESD CAN-PCI/331\* dual CANbus adapters in 2 Linux PCs.

Each SC sub-system uses a dedicated CANbus:

- Silicon detector/radiation monitor bias voltage: 30 ISEG EHQ F0025p 16-channel supply boards\*\* + 4 ISEG ECH 238L UPS 6U EURO crates
- Front-end hybrid low voltage: custom implementation based on the ZEUS LPS detector supplies (INFN TO)
- Cooling and temperature monitor: custom NIKHEF SPICan Box\*\*\*
- Interlock system: Frenzel+Berg EASY-30 CAN/SPS\*\*\*\*

MVD slow control operation:

- Channel monitoring performed typically every 30 s
- CAN emergency messages are implemented
- An SC wrong state disables the experiment's trigger
- CERN ROOT based tools are used when operator control and monitoring is required

\* http://www.esd.electronic

\*\* http://www.iseg-hv.com

\*\*\* http://www.nikhef.nl/user/n48/zeus_doc.html

\*\*\*\* //www.frenzel-berg.de/produkte/easy.html

Summary and Outlook

- The MVD and GTT system have been successfully integrated into the ZEUS experiment
- 267 runs with 3.1 million events recorded between 31/10/02 and 18/02/03 with MVD on and DQM (~700 nb⁻¹)
- The MVD DAQ and GTT architecture, built as a synthesis of custom solutions and commercial off-the-shelf equipment (real-time OS + Linux PC + Gigabit network), works reliably
- The MVD DAQ and GTT performance (latency, throughput and stability) is satisfactory
- Next steps:
  - Enable utilization of the barrel algorithm result at the GSLT
  - Finalize development and integration of the forward algorithm

So far very encouraging results. Looking forward to routine high luminosity data taking. The shutdown ends in June 2003...

What is microprogram sequencer in computer architecture

What is microprogram sequencer in computer architecture Le micro-ordinateur et ses composants

Le micro-ordinateur et ses composants Architecture d'un micro ordinateur

Architecture d'un micro ordinateur Architecture business life cycle

Architecture business life cycle Data centered architecture

Data centered architecture Architecture

Architecture Integral product architecture example

Integral product architecture example Bus design in computer architecture

Bus design in computer architecture Detector building science olympiad cheat sheet

Detector building science olympiad cheat sheet Diffused junction

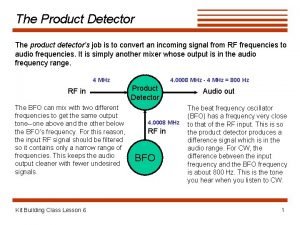

Diffused junction Product detector circuit

Product detector circuit Spaghetti detector

Spaghetti detector 011 sequence detector

011 sequence detector Walter jaeger smoke detector

Walter jaeger smoke detector Distinctive image features from scale invariant keypoints

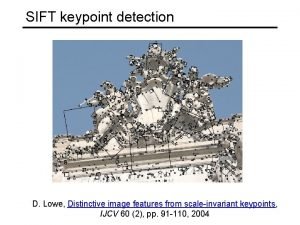

Distinctive image features from scale invariant keypoints Sequence detector 1101

Sequence detector 1101 Disadvantages of semiconductor detector

Disadvantages of semiconductor detector Cci controls lp gas detector

Cci controls lp gas detector Smoke detector use

Smoke detector use Alissa persike

Alissa persike Silicon drift detector explained

Silicon drift detector explained