Text-to-Speech: Text and Phonetic Analysis




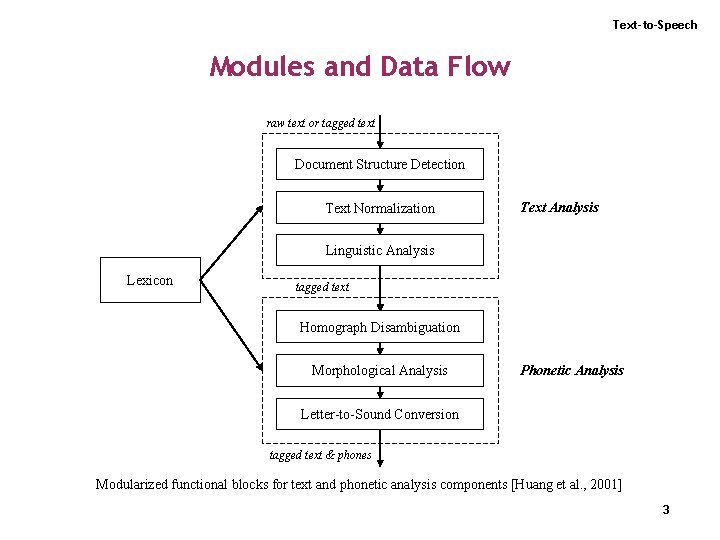

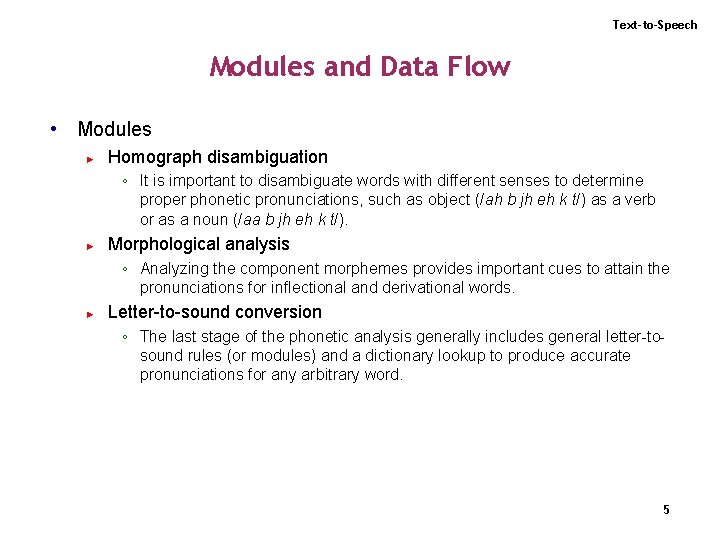

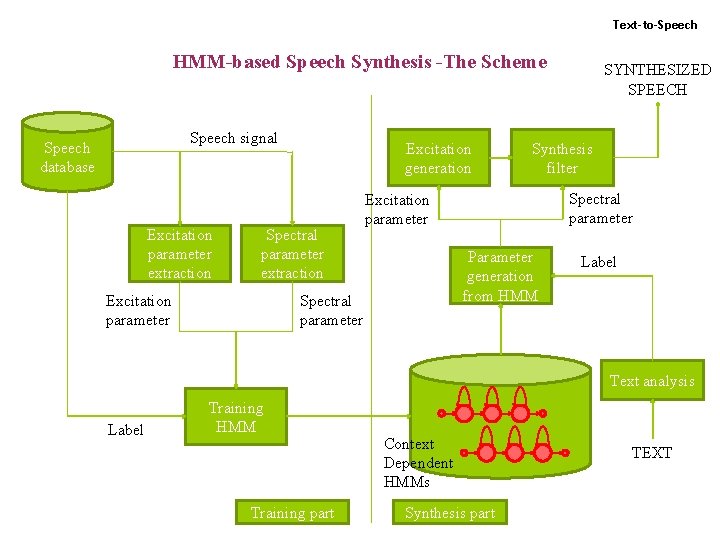

Text-to-Speech: Modules and Data Flow

Figure: Modularized functional blocks for text and phonetic analysis components [Huang et al., 2001].

- Input: raw text or tagged text
- Text analysis (output: tagged text): document structure detection, text normalization, linguistic analysis
- Phonetic analysis (output: tagged text and phones): homograph disambiguation, morphological analysis, letter-to-sound conversion
- Shared resource: lexicon

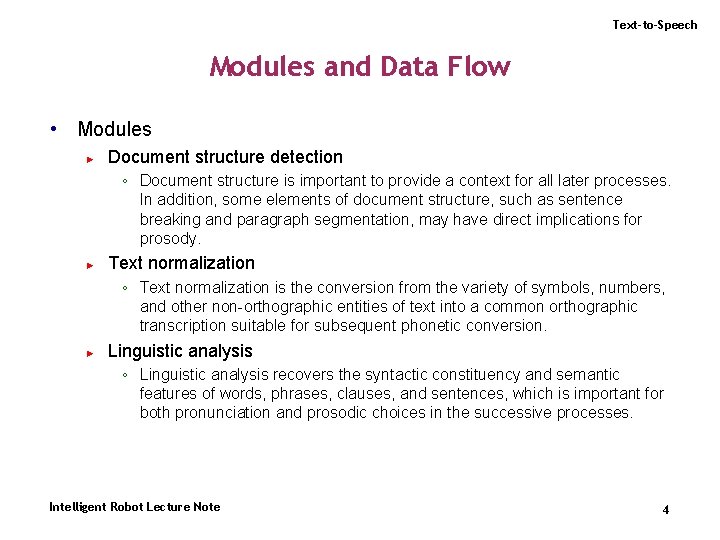

Text-to-Speech: Modules and Data Flow

- Modules
  - Document structure detection
    - Document structure provides context for all later processes. In addition, some elements of document structure, such as sentence breaking and paragraph segmentation, may have direct implications for prosody.
  - Text normalization
    - Text normalization converts the variety of symbols, numbers, and other non-orthographic entities in text into a common orthographic transcription suitable for subsequent phonetic conversion.
  - Linguistic analysis
    - Linguistic analysis recovers the syntactic constituency and semantic features of words, phrases, clauses, and sentences, which is important for both pronunciation and prosodic choices in later stages.

Intelligent Robot Lecture Note
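The text normalization step described above can be sketched in a few lines. This is only an illustration: the abbreviation table is hypothetical, integers are expanded only in the range 0 to 999, and real systems need context to resolve ambiguous abbreviations.

```python
import re

# Hypothetical abbreviation table (assumption, not from the lecture).
ABBREVIATIONS = {"Dr.": "Doctor", "St.": "Street"}

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven",
        "eight", "nine", "ten", "eleven", "twelve", "thirteen", "fourteen",
        "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]


def number_to_words(n: int) -> str:
    """Spell out an integer in the range 0..999."""
    if not 0 <= n <= 999:
        raise ValueError("only 0..999 is supported in this sketch")
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + ("" if ones == 0 else " " + ONES[ones])
    hundreds, rest = divmod(n, 100)
    tail = "" if rest == 0 else " " + number_to_words(rest)
    return ONES[hundreds] + " hundred" + tail


def normalize(text: str) -> str:
    """Expand known abbreviations and small numbers, token by token."""
    out = []
    for token in text.split():
        if token in ABBREVIATIONS:
            out.append(ABBREVIATIONS[token])
            continue
        # Digits, optional percent sign, optional trailing punctuation.
        match = re.fullmatch(r"(\d{1,3})(%?)([.,!?]?)", token)
        if match:
            words = number_to_words(int(match.group(1)))
            if match.group(2):
                words += " percent"
            out.append(words + match.group(3))
        else:
            out.append(token)
    return " ".join(out)


print(normalize("Dr. Smith owns 25% of 120 shares."))
```

The output is plain orthographic text, which is the form the later phonetic conversion stages expect.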

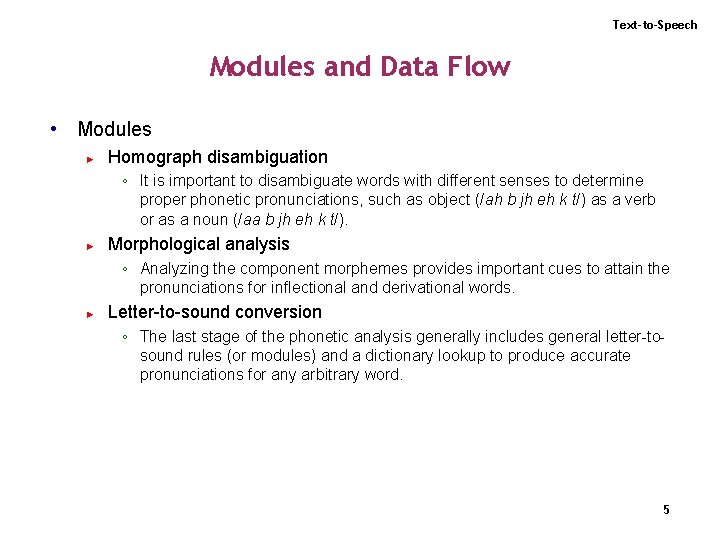

Text-to-Speech: Modules and Data Flow

- Modules
  - Homograph disambiguation
    - Words with different senses must be disambiguated to determine the proper pronunciation, such as object as a verb (/ah b jh eh k t/) or as a noun (/aa b jh eh k t/).
  - Morphological analysis
    - Analyzing the component morphemes provides important cues for pronouncing inflected and derived words.
  - Letter-to-sound conversion
    - The last stage of phonetic analysis generally combines general letter-to-sound rules (or modules) with a dictionary lookup to produce accurate pronunciations for any arbitrary word.

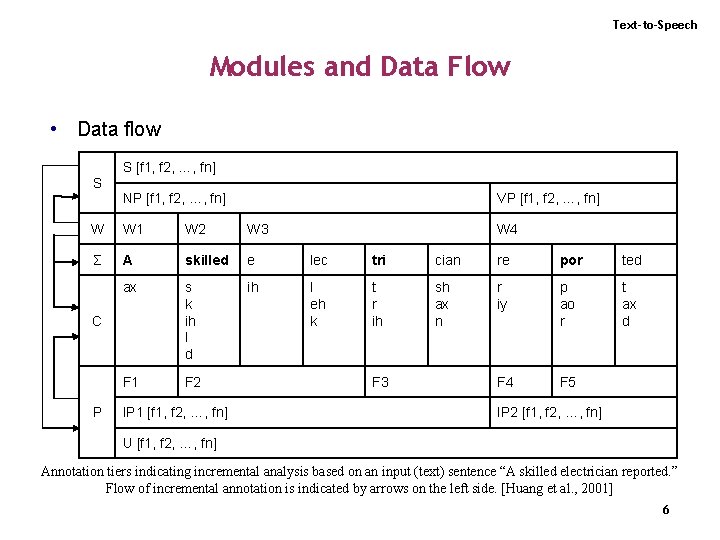

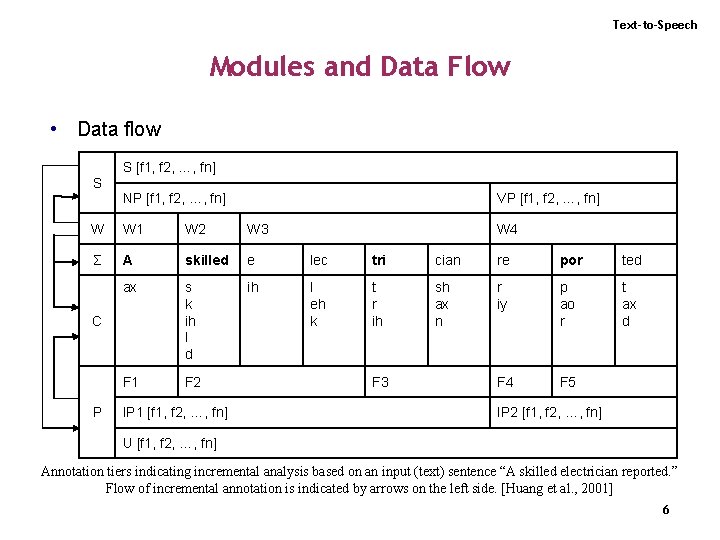

Text-to-Speech: Modules and Data Flow

- Data flow

Figure: Annotation tiers indicating incremental analysis based on an input (text) sentence “A skilled electrician reported.” Flow of incremental annotation is indicated by arrows on the left side. [Huang et al., 2001]

- S tier: S, NP, VP, each with features [f1, f2, …, fn]
- W tier: W1 to W4 (A, skilled, electrician, reported)
- Σ tier: A, skilled, e lec tri cian, re por ted
- C tier: ax, s k ih l d, ih l eh k t r ih sh ax n, r iy p ao r t ax d
- P tier: metrical feet F1 to F5, intonational phrases IP1 and IP2, and utterance U, each with features [f1, f2, …, fn]

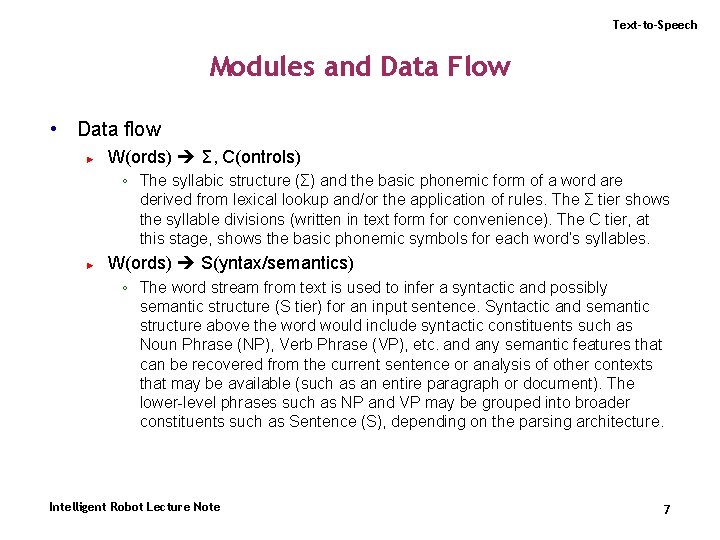

Text-to-Speech: Modules and Data Flow

- Data flow
  - W(ords) → Σ, C(ontrols)
    - The syllabic structure (Σ) and the basic phonemic form of a word are derived from lexical lookup and/or the application of rules. The Σ tier shows the syllable divisions (written in text form for convenience). The C tier, at this stage, shows the basic phonemic symbols for each word’s syllables.
  - W(ords) → S(yntax/semantics)
    - The word stream from text is used to infer a syntactic and possibly semantic structure (S tier) for an input sentence. Syntactic and semantic structure above the word includes syntactic constituents such as Noun Phrase (NP) and Verb Phrase (VP), and any semantic features that can be recovered from the current sentence or from analysis of other available contexts (such as an entire paragraph or document). The lower-level phrases such as NP and VP may be grouped into broader constituents such as Sentence (S), depending on the parsing architecture.

Text-to-Speech: Modules and Data Flow

- Data flow
  - S(yntax/semantics) → P(rosody)
    - The P(rosodic) tier is also called the symbolic prosodic module. If a word is semantically important in a sentence, that importance can be reflected in speech with a little extra phonetic prominence, called an accent. Some synthesizers begin building a prosodic structure by placing metrical foot boundaries to the left of every accented syllable. The resulting metrical foot structure is shown as F1, F2, etc. Over the metrical foot structure, higher-order prosodic constituents, with their own characteristic relative pitch ranges, boundary pitch movements, etc., can be constructed, shown as intonational phrases IP1 and IP2.
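The foot-building step (a boundary to the left of every accented syllable) can be sketched as below. The accent positions in the example are an assumption chosen for illustration and are not taken from the figure in [Huang et al., 2001].

```python
def group_into_feet(syllables, accented):
    """Start a new metrical foot at every accented syllable.

    `accented` is a set of syllable indices. Unaccented syllables before
    the first accent form a foot of their own.
    """
    feet = []
    for index, syllable in enumerate(syllables):
        if index in accented or not feet:
            feet.append([])
        feet[-1].append(syllable)
    return feet


syllables = ["A", "skilled", "e", "lec", "tri", "cian", "re", "por", "ted"]
# Hypothetical accents on "skilled", "tri", and "por".
for foot in group_into_feet(syllables, {1, 4, 7}):
    print(" ".join(foot))
```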

Text-to-Speech: Letter-to-Sound Conversion

- Phonological rules
  - Assumption
    - The pronunciation of a letter or letter substring can be found if enough is known about its context, i.e., the surrounding letters.
  - The form of the rules is A[B]C → D, which states that the letter substring B with left context A and right context C receives the pronunciation (i.e., phoneme substring) D.
  - Because of the complexities of letter-to-sound correspondence, more than one rule generally applies at each stage of transcription.
    - The resulting conflicts are resolved by maintaining the rules in a set of sublists, grouped by (initial) letter, with each sublist ordered by specificity.
    - The most specific rule is usually at the top and the most general at the bottom.
    - Transcription is usually a one-pass, left-to-right process.
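The rule scheme above (A[B]C → D rules, sublists grouped by initial letter, most specific rule first, one left-to-right pass) can be sketched as follows. The rule set is a tiny hypothetical example, and measuring specificity by the combined length of A, B, and C is an assumption made for this sketch.

```python
from collections import defaultdict

# Hypothetical toy rules: (left context A, target B, right context C, phonemes D).
# An empty context matches anything.
RULES = [
    ("", "ch", "", ["ch"]),
    ("", "c", "e", ["s"]),
    ("", "c", "i", ["s"]),
    ("", "c", "", ["k"]),
    ("", "a", "", ["ae"]),
    ("", "e", "", ["eh"]),
    ("", "n", "", ["n"]),
    ("", "t", "", ["t"]),
]


def build_sublists(rules):
    """Group rules by the first letter of B, most specific rule first."""
    sublists = defaultdict(list)
    for rule in rules:
        sublists[rule[1][0]].append(rule)
    for sublist in sublists.values():
        sublist.sort(key=lambda r: len(r[0]) + len(r[1]) + len(r[2]), reverse=True)
    return sublists


def transcribe(word, sublists):
    """One-pass, left-to-right transcription using the first matching rule."""
    word = word.lower()
    position, phonemes = 0, []
    while position < len(word):
        for left, target, right, output in sublists.get(word[position], []):
            end = position + len(target)
            if (word.startswith(target, position)
                    and word[:position].endswith(left)
                    and word.startswith(right, end)):
                phonemes.extend(output)
                position = end
                break
        else:
            position += 1  # no rule applies: skip the letter
    return phonemes


sublists = build_sublists(RULES)
for word in ("cent", "cat", "chat"):
    print(word, transcribe(word, sublists))
```

Because the context-specific rules for "c" sit above the general one, "cent" receives /s/ while "cat" falls through to /k/.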

![Text-to-Speech Letter-to-Sound Conversion: Hidden Markov models (Taylor, 2005)](https://slidetodoc.com/presentation_image/d603d4ea31874118f8228044f40d988c/image-10.jpg)

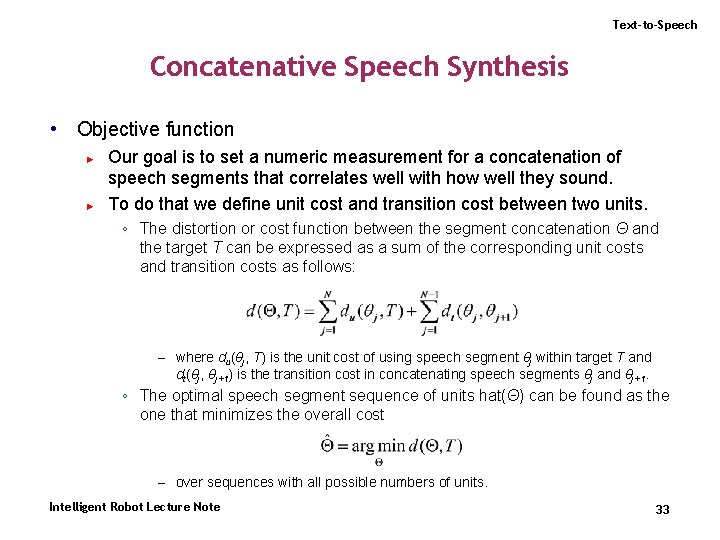

Text-to-Speech: Letter-to-Sound Conversion

- Hidden Markov models [Taylor, 2005]
  - Each phoneme is represented by one HMM, and letters are the emitted observations.
  - The probability of a transition between models equals the probability of the phoneme given the previous phoneme.
  - The objective is to find the most probable sequence of hidden models (phonemes) given the observations (letters), using the probability distributions found during model training:

    $$\hat{\varphi} = \arg\max_{\varphi} p(\varphi \mid g) = \arg\max_{\varphi} p(g \mid \varphi)\, p(\varphi)$$

    where p(φ) is the prior probability of a sequence of phonemes occurring, and p(g | φ) is the likelihood of grapheme sequence g given phoneme sequence φ.
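The search for the most probable phoneme sequence is typically done with Viterbi decoding. The sketch below makes a strong simplifying assumption that is not part of the method as described: every phoneme emits exactly one letter. All probabilities are invented toy values.

```python
import math


def log(p):
    """Log probability that tolerates zero."""
    return math.log(p) if p > 0 else float("-inf")


def viterbi(letters, phonemes, start_p, trans_p, emit_p):
    """Return the most probable phoneme sequence for a letter sequence."""
    if not letters:
        return []
    scores = {ph: log(start_p.get(ph, 0)) + log(emit_p[ph].get(letters[0], 0))
              for ph in phonemes}
    backpointers = []
    for letter in letters[1:]:
        new_scores, pointers = {}, {}
        for ph in phonemes:
            # Best predecessor under the phoneme bigram (transition) model.
            prev = max(phonemes,
                       key=lambda q: scores[q] + log(trans_p[q].get(ph, 0)))
            new_scores[ph] = (scores[prev] + log(trans_p[prev].get(ph, 0))
                              + log(emit_p[ph].get(letter, 0)))
            pointers[ph] = prev
        scores = new_scores
        backpointers.append(pointers)
    path = [max(phonemes, key=lambda ph: scores[ph])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    return path[::-1]


# Toy model (assumed values): p(first phoneme), p(phoneme | previous phoneme),
# and p(letter | phoneme).
PHONEMES = ["k", "s", "ae", "t"]
START = {"k": 0.4, "s": 0.3, "ae": 0.1, "t": 0.2}
TRANS = {
    "k": {"ae": 0.8, "t": 0.1, "s": 0.1},
    "s": {"ae": 0.5, "t": 0.4, "k": 0.1},
    "ae": {"t": 0.6, "k": 0.2, "s": 0.2},
    "t": {"ae": 0.7, "s": 0.3},
}
EMIT = {
    "k": {"c": 0.7, "k": 0.3},
    "s": {"s": 0.8, "c": 0.2},
    "ae": {"a": 1.0},
    "t": {"t": 1.0},
}

print(viterbi("cat", PHONEMES, START, TRANS, EMIT))
```

Here the transition table plays the role of p(φ) and the emission table the role of p(g | φ).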

Text-to-Speech: Prosody

Text-to-Speech: General Prosody

- It is not what you said; it is how you said it!
  - An important supporting role in guiding a listener’s recovery of the basic messages
  - A starring role in signaling connotation
  - The speaker’s attitude toward the message, toward the listener
- Prosody is often defined on two different levels
  - An abstract, phonological level
    - (phrase, accent, and tone structure)
  - A physical, phonetic level
    - (fundamental frequency, intensity or amplitude, and duration)

Text-to-Speech: General Prosody

- From the listener’s point of view, prosody consists of systematic perception and recovery of a speaker’s intentions based on:
  - Pauses
    - To indicate phrases and to avoid running out of air
  - Pitch
    - Rate of vocal-fold cycling (fundamental frequency, or F0) as a function of time
  - Rate/relative duration
    - Phoneme durations, timing, and rhythm
  - Loudness
    - Relative amplitude/volume

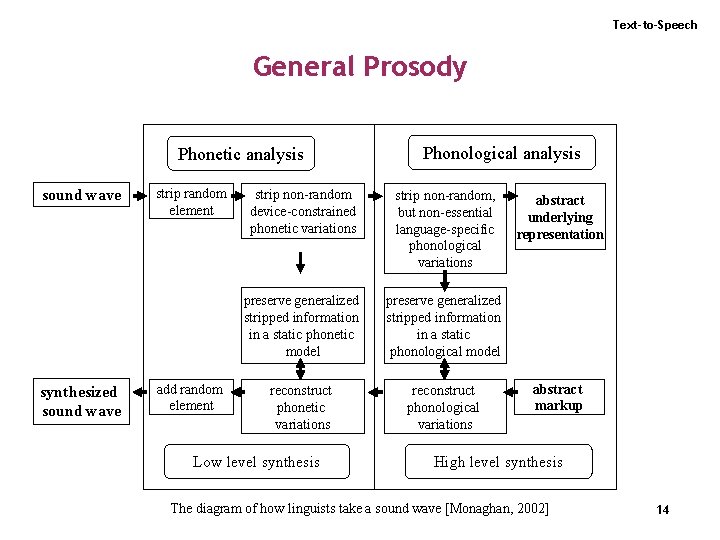

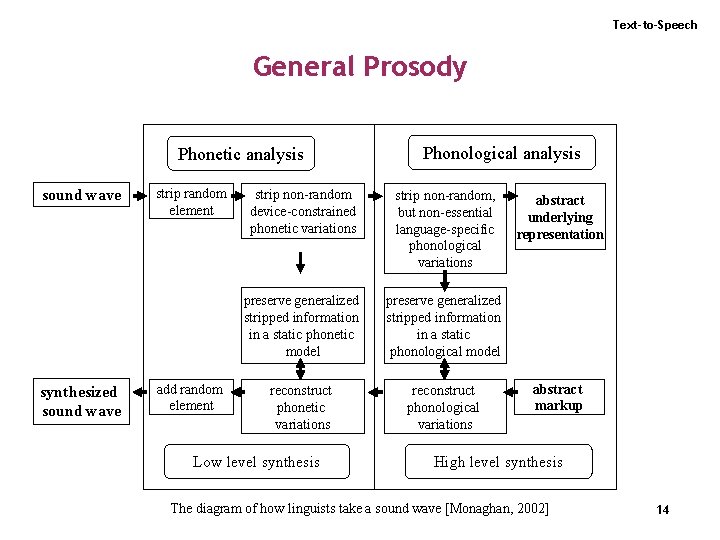

Text-to-Speech: General Prosody

Figure: The diagram of how linguists take a sound wave [Monaghan, 2002].

- Analysis: sound wave → strip random element → phonetic analysis (strip non-random, device-constrained phonetic variations; preserve generalized stripped information in a static phonetic model) → phonological analysis (strip non-random but non-essential language-specific phonological variations; preserve generalized stripped information in a static phonological model) → abstract underlying representation
- Synthesis: abstract markup → high-level synthesis (reconstruct phonological variations) → low-level synthesis (reconstruct phonetic variations) → add random element → synthesized sound wave

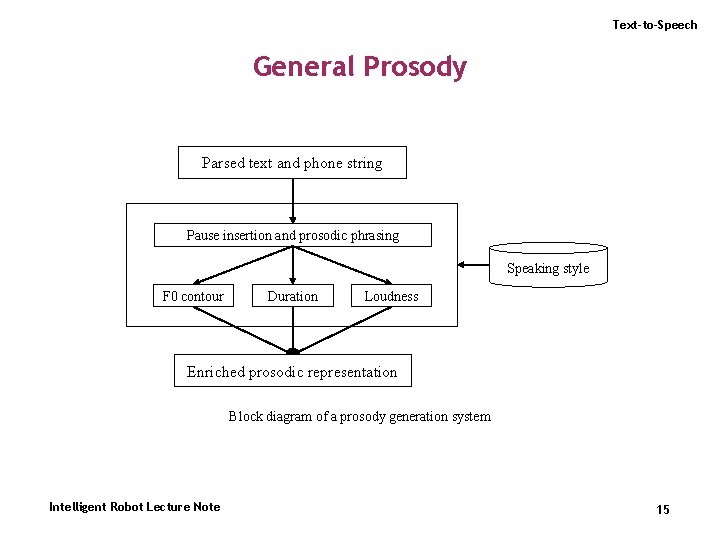

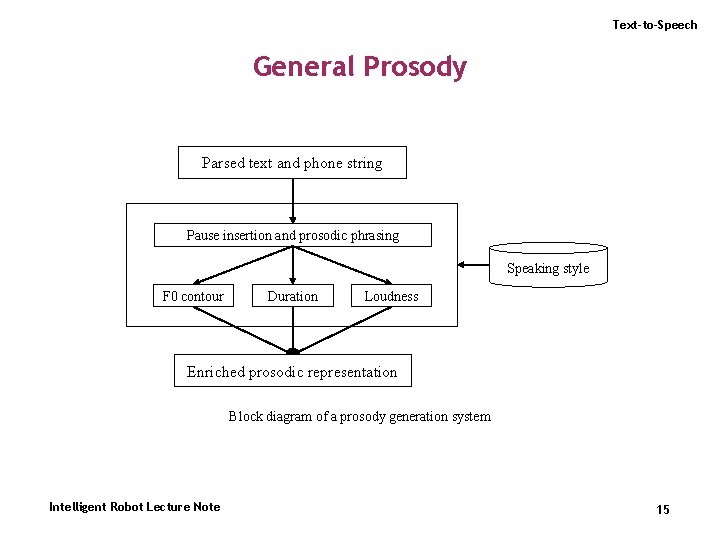

Text-to-Speech: General Prosody

Figure: Block diagram of a prosody generation system.

- Input: parsed text and phone string, plus speaking style
- Blocks: pause insertion and prosodic phrasing, duration, F0 contour, loudness
- Output: enriched prosodic representation

Text-to-Speech: Speaking Style

- Prosody depends not only on the linguistic content of a sentence.
- Different people generate different prosody for the same sentence.
- Even the same person generates different prosody depending on his or her mood.

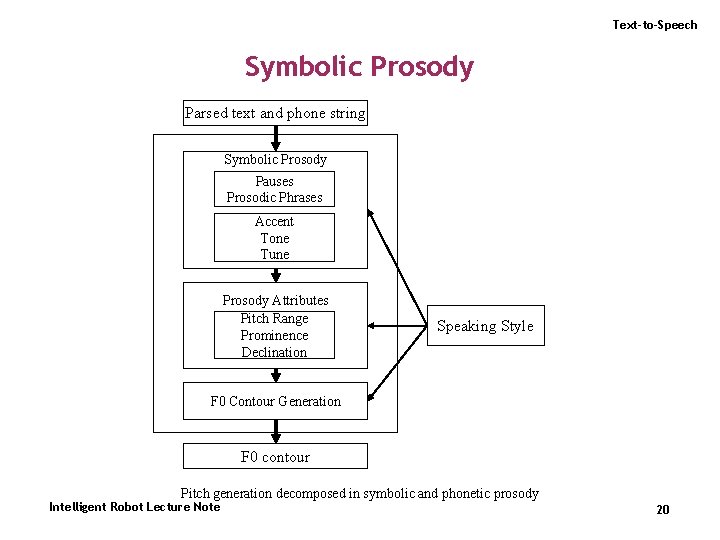

Text-to-Speech: Symbolic Prosody

- Abstract or symbolic prosodic structure is the link between the infinite multiplicity of pragmatic, semantic, and syntactic features of an utterance and the relatively limited set of acoustic parameters: F0, phone durations, energy, and voice quality.
- Symbolic prosody deals with:
  - Breaking the sentence into prosodic phrases, possibly separated by pauses
  - Assigning labels, such as emphasis, to different syllables or words within each prosodic phrase

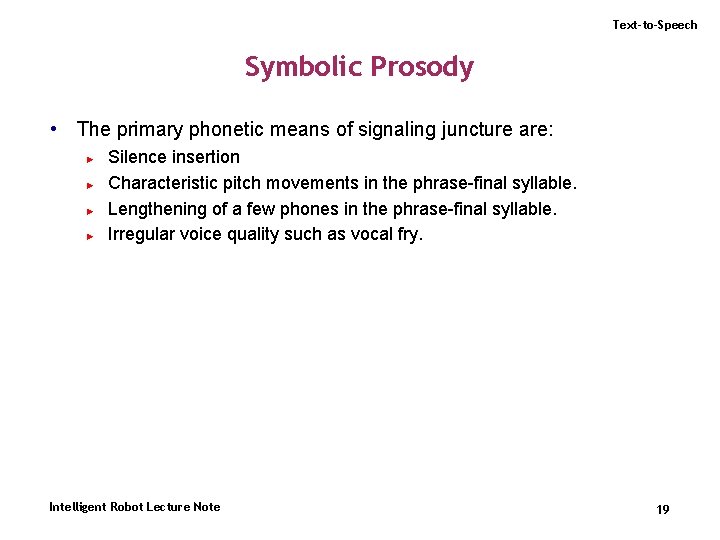

Text-to-Speech: Symbolic Prosody

- Words are normally spoken continuously, unless there are specific linguistic reasons to signal a discontinuity.
- The term juncture refers to prosodic phrasing, that is, where words cohere and where prosodic breaks (pauses and/or special pitch movements) occur.
- Juncture effects, expressing the degree of cohesion or discontinuity between adjacent words, are determined by physiology (running out of breath), phonetics, syntax, semantics, and pragmatics.

Text-to-Speech: Symbolic Prosody

- The primary phonetic means of signaling juncture are:
  - Silence insertion
  - Characteristic pitch movements in the phrase-final syllable
  - Lengthening of a few phones in the phrase-final syllable
  - Irregular voice quality, such as vocal fry

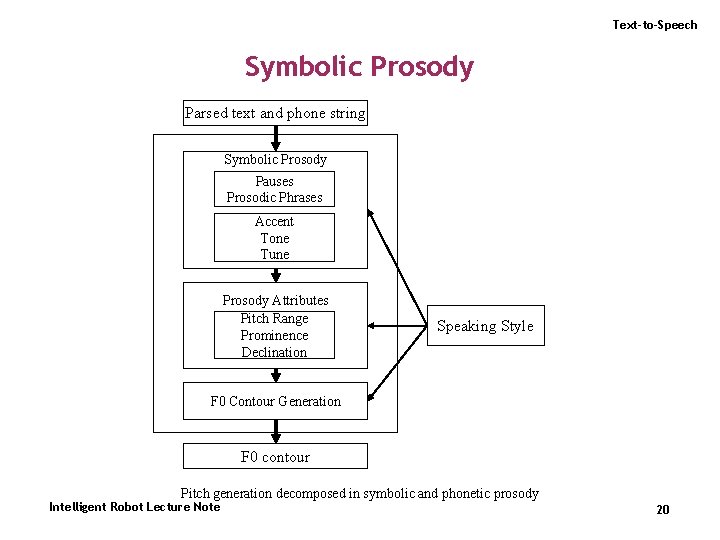

Text-to-Speech: Symbolic Prosody

Figure: Pitch generation decomposed into symbolic and phonetic prosody.

- Input: parsed text and phone string, plus speaking style
- Symbolic prosody: pauses, prosodic phrases, accent, tone, tune
- Prosody attributes: pitch range, prominence, declination
- F0 contour generation, which outputs the F0 contour

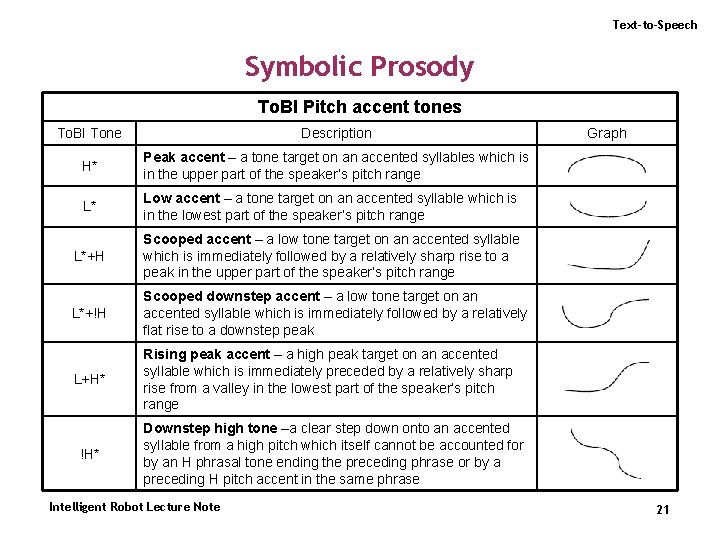

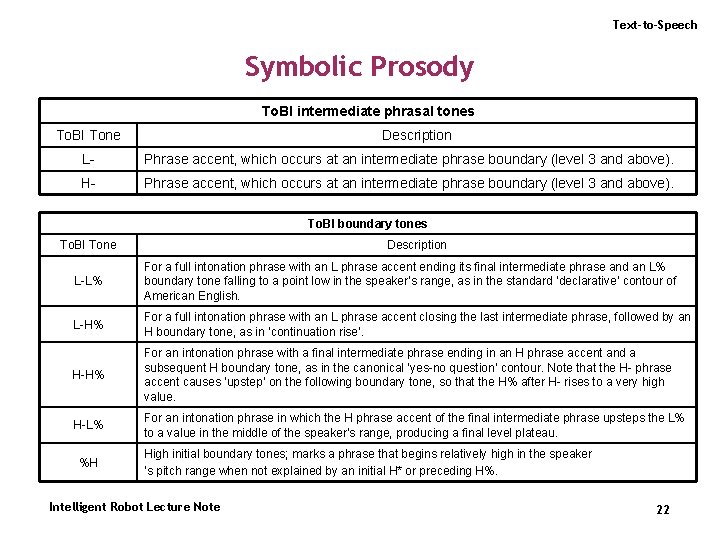

Text-to-Speech: Symbolic Prosody

ToBI pitch accent tones

| ToBI Tone | Description |
| --- | --- |
| H* | Peak accent: a tone target on an accented syllable which is in the upper part of the speaker’s pitch range |
| L* | Low accent: a tone target on an accented syllable which is in the lowest part of the speaker’s pitch range |
| L*+H | Scooped accent: a low tone target on an accented syllable which is immediately followed by a relatively sharp rise to a peak in the upper part of the speaker’s pitch range |
| L*+!H | Scooped downstep accent: a low tone target on an accented syllable which is immediately followed by a relatively flat rise to a downstep peak |
| L+H* | Rising peak accent: a high peak target on an accented syllable which is immediately preceded by a relatively sharp rise from a valley in the lowest part of the speaker’s pitch range |
| !H* | Downstep high tone: a clear step down onto an accented syllable from a high pitch which itself cannot be accounted for by an H phrasal tone ending the preceding phrase or by a preceding H pitch accent in the same phrase |

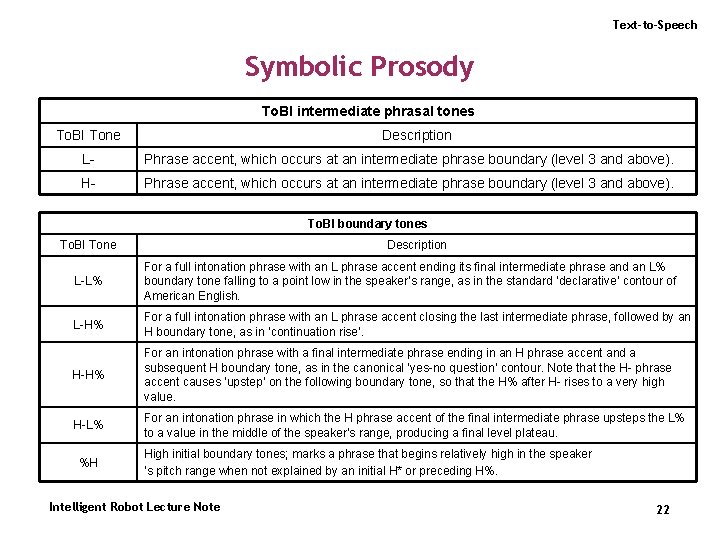

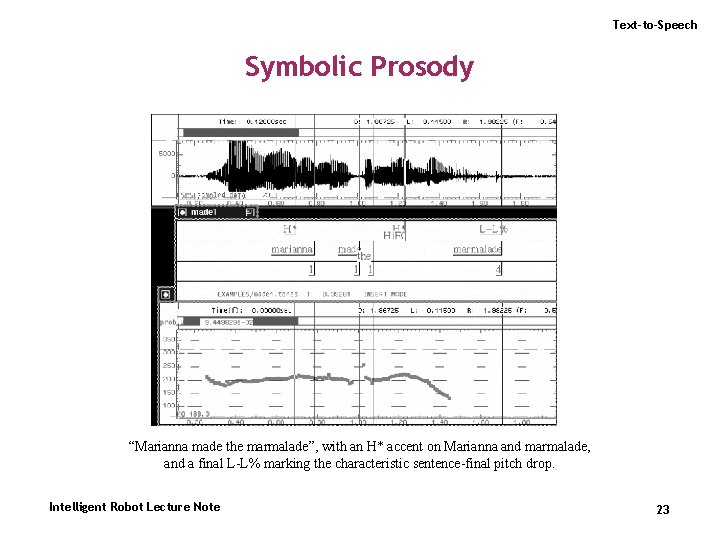

Text-to-Speech: Symbolic Prosody

ToBI intermediate phrasal tones

| ToBI Tone | Description |
| --- | --- |
| L- | Phrase accent, which occurs at an intermediate phrase boundary (level 3 and above). |
| H- | Phrase accent, which occurs at an intermediate phrase boundary (level 3 and above). |

ToBI boundary tones

| ToBI Tone | Description |
| --- | --- |
| L-L% | For a full intonation phrase with an L phrase accent ending its final intermediate phrase and an L% boundary tone falling to a point low in the speaker’s range, as in the standard ‘declarative’ contour of American English. |
| L-H% | For a full intonation phrase with an L phrase accent closing the last intermediate phrase, followed by an H boundary tone, as in ‘continuation rise’. |
| H-H% | For an intonation phrase with a final intermediate phrase ending in an H phrase accent and a subsequent H boundary tone, as in the canonical ‘yes-no question’ contour. Note that the H- phrase accent causes ‘upstep’ on the following boundary tone, so that the H% after H- rises to a very high value. |
| H-L% | For an intonation phrase in which the H phrase accent of the final intermediate phrase upsteps the L% to a value in the middle of the speaker’s range, producing a final level plateau. |
| %H | High initial boundary tone; marks a phrase that begins relatively high in the speaker’s pitch range when not explained by an initial H* or preceding H%. |

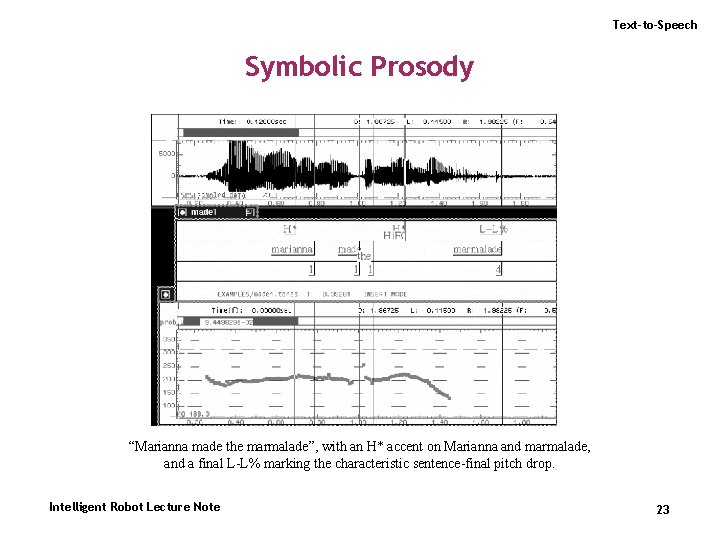

Text-to-Speech: Symbolic Prosody

Example: “Marianna made the marmalade”, with H* accents on Marianna and marmalade, and a final L-L% marking the characteristic sentence-final pitch drop.

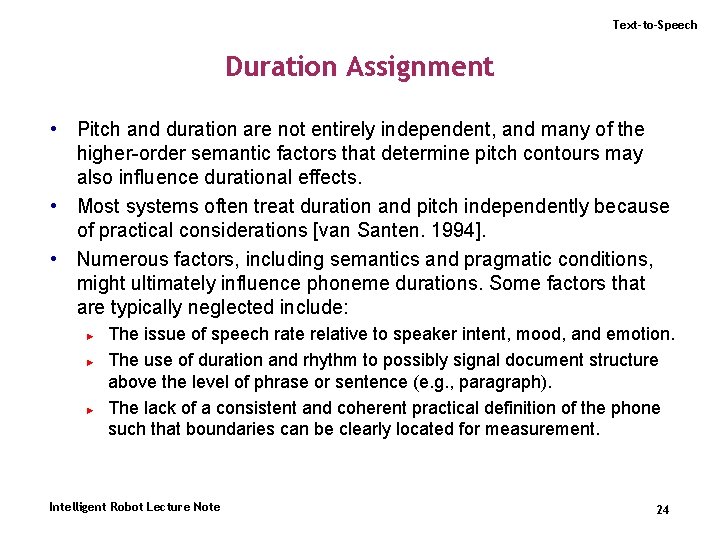

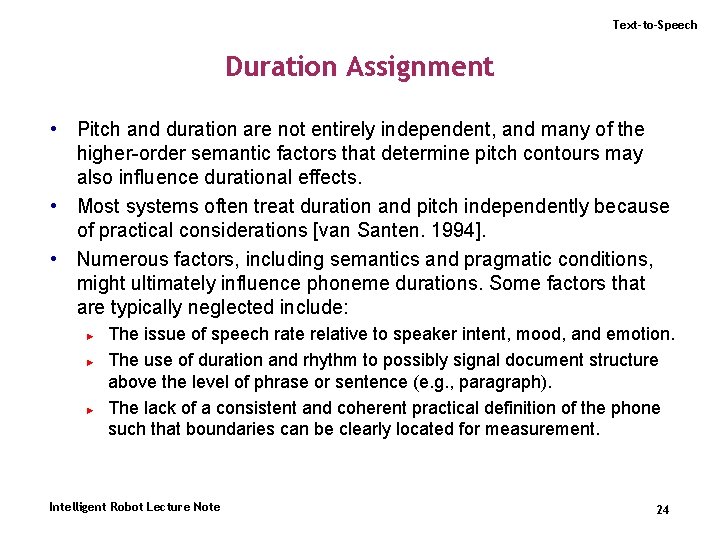

Text-to-Speech: Duration Assignment

- Pitch and duration are not entirely independent, and many of the higher-order semantic factors that determine pitch contours may also influence durational effects.
- Most systems nevertheless treat duration and pitch independently because of practical considerations [van Santen, 1994].
- Numerous factors, including semantic and pragmatic conditions, might ultimately influence phoneme durations. Some factors that are typically neglected include:
  - The issue of speech rate relative to speaker intent, mood, and emotion.
  - The use of duration and rhythm to possibly signal document structure above the level of phrase or sentence (e.g., paragraph).
  - The lack of a consistent and coherent practical definition of the phone such that boundaries can be clearly located for measurement.

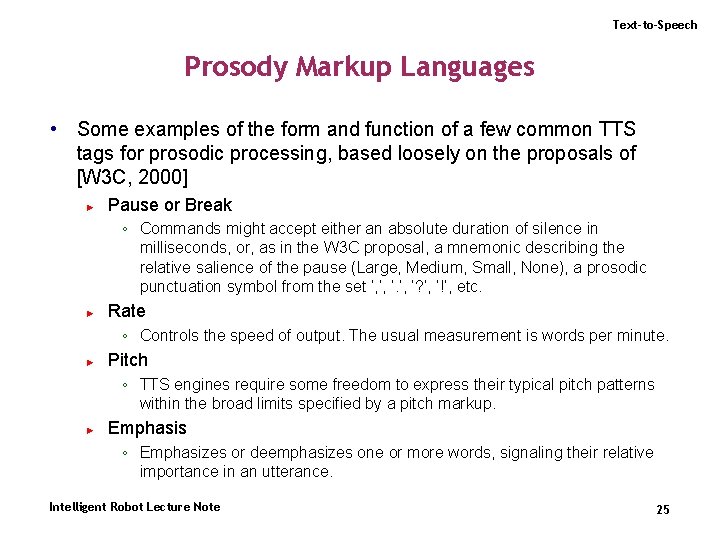

Text-to-Speech: Prosody Markup Languages

- Some examples of the form and function of a few common TTS tags for prosodic processing, based loosely on the proposals of [W3C, 2000]
  - Pause or Break
    - Commands might accept either an absolute duration of silence in milliseconds or, as in the W3C proposal, a mnemonic describing the relative salience of the pause (Large, Medium, Small, None), or a prosodic punctuation symbol from the set ‘,’, ‘?’, ‘!’, etc.
  - Rate
    - Controls the speed of output. The usual measurement is words per minute.
  - Pitch
    - TTS engines require some freedom to express their typical pitch patterns within the broad limits specified by a pitch markup.
  - Emphasis
    - Emphasizes or deemphasizes one or more words, signaling their relative importance in an utterance.
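As an illustration of such tags, the sketch below builds a small marked-up prompt with Python's standard `xml.etree.ElementTree`. The element and attribute names (`break`, `emphasis`, `prosody`, `time`, `level`, `rate`, `pitch`) follow the later W3C SSML recommendation rather than the 2000 proposal cited above, so treat the exact spelling as an assumption; the `version` and namespace attributes that a complete SSML document requires are omitted.

```python
import xml.etree.ElementTree as ET


def build_prompt() -> str:
    """Build a short prompt with pause, emphasis, rate, and pitch markup."""
    speak = ET.Element("speak")
    speak.text = "The meeting starts"

    # Pause given as an absolute duration of silence.
    pause = ET.SubElement(speak, "break", {"time": "300ms"})
    pause.tail = "at "

    # Emphasis on a single word.
    emphasis = ET.SubElement(speak, "emphasis", {"level": "strong"})
    emphasis.text = "nine"
    emphasis.tail = " "

    # Rate and pitch are hints; the engine keeps some freedom within them.
    prosody = ET.SubElement(speak, "prosody", {"rate": "slow", "pitch": "+10%"})
    prosody.text = "Please be on time."

    return ET.tostring(speak, encoding="unicode")


print(build_prompt())
```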

Text-to-Speech: Synthesis

Text-to-Speech: Synthesis

- Formant speech synthesis
- Concatenative speech synthesis
- HMM speech synthesis

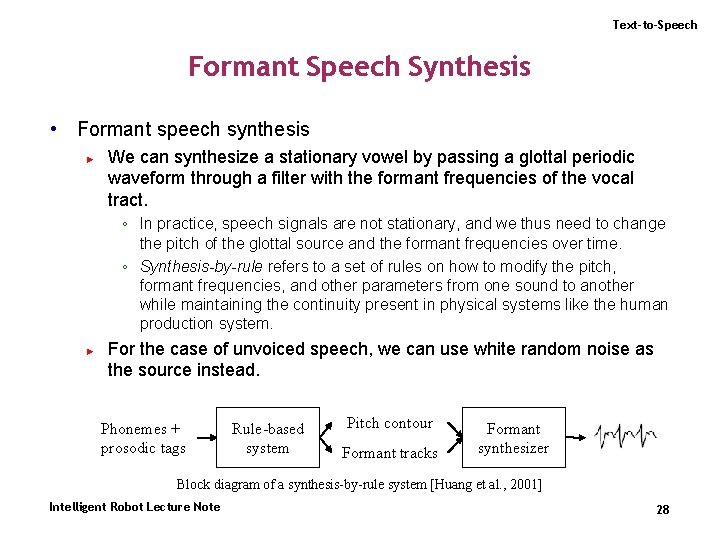

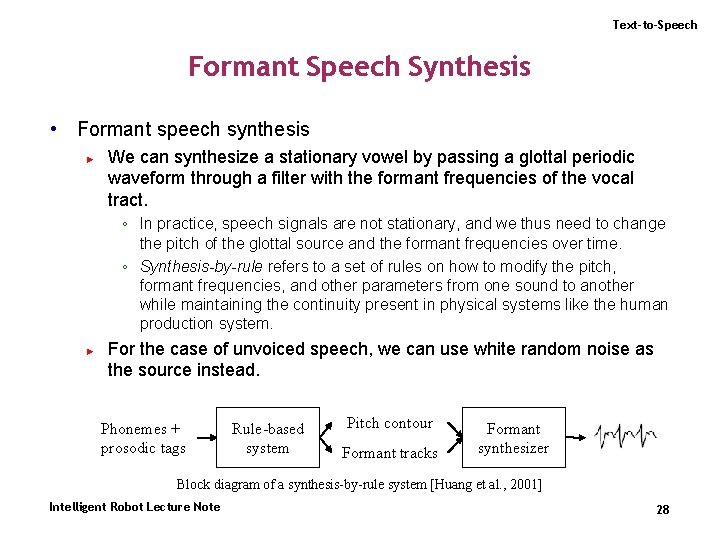

Text-to-Speech: Formant Speech Synthesis

- Formant speech synthesis
  - We can synthesize a stationary vowel by passing a periodic glottal waveform through a filter with the formant frequencies of the vocal tract.
    - In practice, speech signals are not stationary, so we need to change the pitch of the glottal source and the formant frequencies over time.
    - Synthesis-by-rule refers to a set of rules on how to modify the pitch, formant frequencies, and other parameters from one sound to another while maintaining the continuity present in physical systems like the human speech production system.
  - For unvoiced speech, we can use white random noise as the source instead.

Figure: Block diagram of a synthesis-by-rule system [Huang et al., 2001]. Phonemes and prosodic tags feed a rule-based system, which produces a pitch contour and formant tracks for the formant synthesizer.
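A minimal sketch of the stationary-vowel case: an impulse train stands in for the glottal source and is passed through a cascade of second-order digital resonators, one per formant. The resonator formula is the standard two-pole design used in Klatt-style synthesizers; the formant frequencies and bandwidths below are illustrative values for an /aa/-like vowel, not taken from the lecture.

```python
import math


def resonate(signal, freq_hz, bandwidth_hz, sample_rate):
    """Filter a signal with a two-pole resonator with unity gain at DC."""
    t = 1.0 / sample_rate
    c = -math.exp(-2.0 * math.pi * bandwidth_hz * t)
    b = 2.0 * math.exp(-math.pi * bandwidth_hz * t) * math.cos(2.0 * math.pi * freq_hz * t)
    a = 1.0 - b - c
    y1 = y2 = 0.0
    out = []
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def impulse_train(f0_hz, duration_s, sample_rate):
    """Crude glottal source: one unit impulse per pitch period."""
    period = round(sample_rate / f0_hz)
    count = int(duration_s * sample_rate)
    return [1.0 if i % period == 0 else 0.0 for i in range(count)]


def synthesize_vowel(f0_hz, formants, duration_s=0.05, sample_rate=16000):
    """Pass the source through one resonator per (frequency, bandwidth) pair."""
    signal = impulse_train(f0_hz, duration_s, sample_rate)
    for freq_hz, bandwidth_hz in formants:
        signal = resonate(signal, freq_hz, bandwidth_hz, sample_rate)
    return signal


# Illustrative formants (Hz) and bandwidths (Hz) for an /aa/-like vowel.
samples = synthesize_vowel(100.0, [(730.0, 60.0), (1090.0, 90.0), (2440.0, 150.0)])
print(len(samples), max(abs(s) for s in samples))
```

For unvoiced sounds, the impulse train would be replaced by white noise, for example from `random.gauss`. Time-varying pitch and formants would require updating the coefficients from frame to frame, which this sketch does not attempt.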

Text-to-Speech: Concatenative Speech Synthesis

- Concatenative speech synthesis
  - A speech segment is synthesized by simply playing back a waveform with a matching phoneme string.
  - An utterance is synthesized by concatenating several speech segments.
  - Issues
    - What type of speech segment to use?
    - How to design the acoustic inventory, or set of speech segments, from a set of recordings?
    - How to select the best string of speech segments from a given library of segments, given a phonetic string and its prosody?
    - How to alter the prosody of a speech segment to best match the desired output prosody?

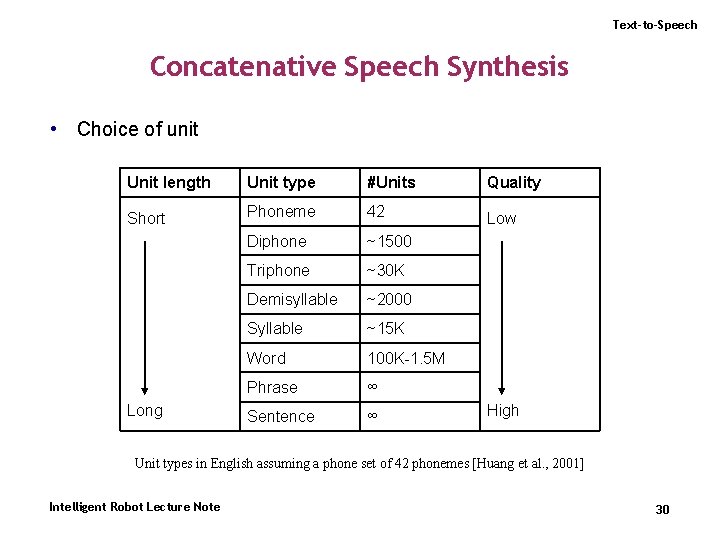

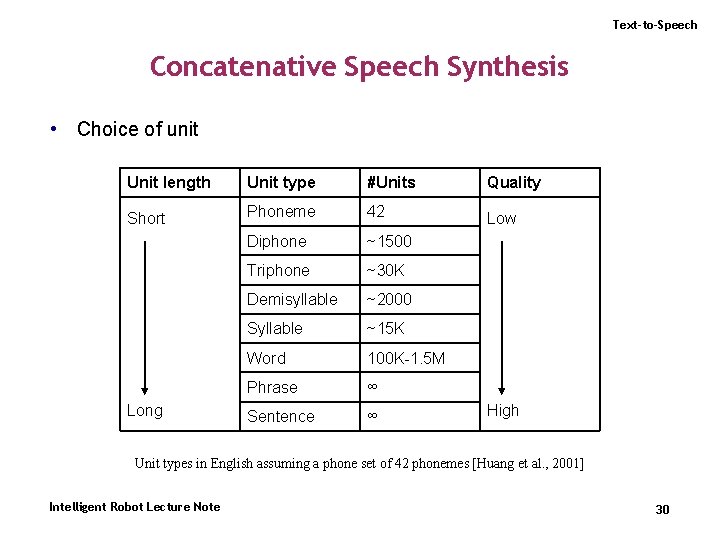

Text-to-Speech: Concatenative Speech Synthesis

- Choice of unit

| Unit length | Unit type | #Units | Quality |
| --- | --- | --- | --- |
| Short | Phoneme | 42 | Low |
| | Diphone | ~1500 | |
| | Triphone | ~30K | |
| | Demisyllable | ~2000 | |
| | Syllable | ~15K | |
| | Word | 100K-1.5M | |
| | Phrase | ∞ | |
| Long | Sentence | ∞ | High |

Unit types in English, assuming a phone set of 42 phonemes [Huang et al., 2001]

Text-to-Speech: Concatenative Speech Synthesis

- Diphone
  - A type of subword unit that has been used extensively is the dyad, or diphone.
    - A diphone s-ih extends from the middle of the s phoneme to the middle of the ih phoneme, so diphones are, on average, one phoneme long.
    - The word hello /hh ax l ow/ can be mapped into the diphone sequence /sil-hh/, /hh-ax/, /ax-l/, /l-ow/, /ow-sil/.
  - While diphones retain the transitional information, there can be large distortions due to the difference in spectra between the stationary parts of two units obtained from different contexts.
    - Many practical diphone systems are not purely diphone based.
    - They do not store transitions between fricatives, or between fricatives and stops, while they store longer units that have a high level of coarticulation [Sproat, 1998].
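The mapping from a phoneme string to its diphone sequence is mechanical: pad the string with silence on both sides and pair each phoneme with its successor. A short sketch (the function name is hypothetical):

```python
def to_diphones(phonemes, silence="sil"):
    """Map a phoneme list to the diphones that span adjacent phonemes."""
    padded = [silence, *phonemes, silence]
    return [f"{left}-{right}" for left, right in zip(padded, padded[1:])]


print(to_diphones("hh ax l ow".split()))
# ['sil-hh', 'hh-ax', 'ax-l', 'l-ow', 'ow-sil']
```

A word of n phonemes therefore needs n + 1 diphones when spoken in isolation.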

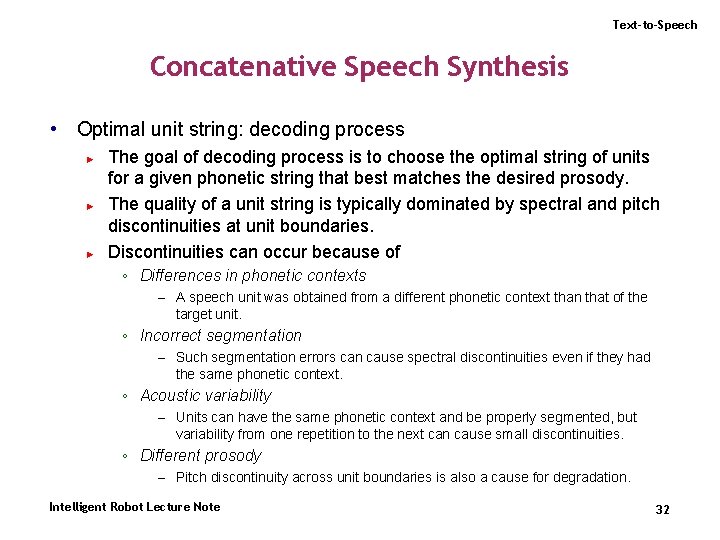

Text-to-Speech: Concatenative Speech Synthesis

- Optimal unit string: decoding process
  - The goal of the decoding process is to choose the optimal string of units for a given phonetic string that best matches the desired prosody.
  - The quality of a unit string is typically dominated by spectral and pitch discontinuities at unit boundaries.
  - Discontinuities can occur because of:
    - Differences in phonetic context: a speech unit was obtained from a different phonetic context than that of the target unit.
    - Incorrect segmentation: segmentation errors can cause spectral discontinuities even if the units had the same phonetic context.
    - Acoustic variability: units can have the same phonetic context and be properly segmented, but variability from one repetition to the next can cause small discontinuities.
    - Different prosody: pitch discontinuity across unit boundaries is also a cause of degradation.

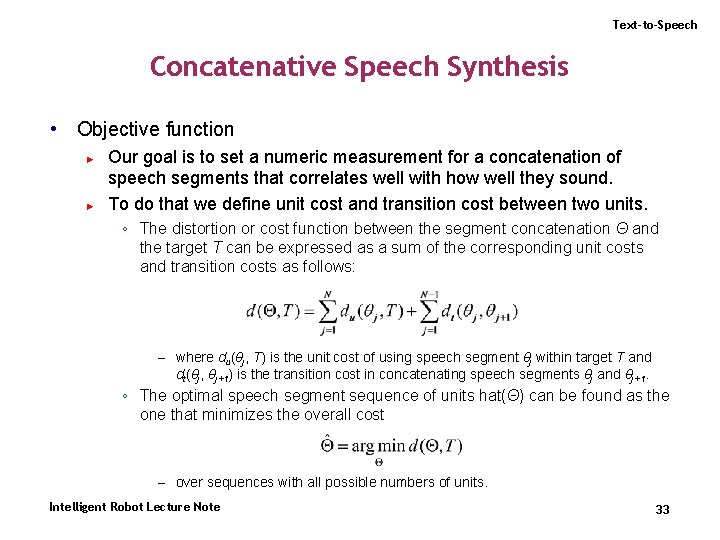

Text-to-Speech: Concatenative Speech Synthesis

- Objective function
  - Our goal is a numeric measure for a concatenation of speech segments that correlates well with how good it sounds.
  - To do that, we define a unit cost and a transition cost between two units.
    - The distortion or cost function between the segment concatenation Θ and the target T can be expressed as a sum of the corresponding unit costs and transition costs:

      $$d(\Theta, T) = \sum_{j=1}^{N} d_u(\theta_j, T) + \sum_{j=1}^{N-1} d_t(\theta_j, \theta_{j+1})$$

      where d_u(θ_j, T) is the unit cost of using speech segment θ_j within target T, d_t(θ_j, θ_{j+1}) is the transition cost of concatenating speech segments θ_j and θ_{j+1}, and N is the number of segments in Θ.
    - The optimal sequence of units can be found as the one that minimizes the overall cost

      $$\hat{\Theta} = \arg\min_{\Theta} d(\Theta, T)$$

      over sequences with all possible numbers of units.
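A sketch of the minimization by dynamic programming, under a simplifying assumption that departs from the general formulation: the number of units is fixed to one per target position, so the search does not range over sequences of different lengths. The pitch-only cost functions and the candidate inventory are hypothetical toy choices.

```python
def select_units(targets, candidates, unit_cost, transition_cost):
    """Return (total cost, units) minimizing unit plus transition costs.

    candidates[j] lists the inventory units that may fill target position j.
    """
    if not targets:
        return 0.0, []
    # best holds, for each candidate at the current position, the cheapest
    # (cost, path) ending in that candidate.
    best = [(unit_cost(u, targets[0]), [u]) for u in candidates[0]]
    for j in range(1, len(targets)):
        new_best = []
        for unit in candidates[j]:
            cost, path = min(
                ((c + transition_cost(p[-1], unit), p) for c, p in best),
                key=lambda item: item[0],
            )
            new_best.append((cost + unit_cost(unit, targets[j]), path + [unit]))
        best = new_best
    return min(best, key=lambda item: item[0])


# Toy setup: a unit or target is (diphone name, pitch in Hz).
def pitch_unit_cost(unit, target):
    return abs(unit[1] - target[1])


def pitch_transition_cost(left, right):
    return abs(left[1] - right[1])


targets = [("sil-hh", 100), ("hh-ax", 110), ("ax-l", 120)]
candidates = [
    [("sil-hh", 100), ("sil-hh", 130)],
    [("hh-ax", 90), ("hh-ax", 115)],
    [("ax-l", 120), ("ax-l", 150)],
]

cost, units = select_units(targets, candidates, pitch_unit_cost, pitch_transition_cost)
print(cost, [pitch for _, pitch in units])
```

In a real system the unit cost would also reflect phonetic context and duration, and the transition cost would measure spectral as well as pitch discontinuity at the join.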

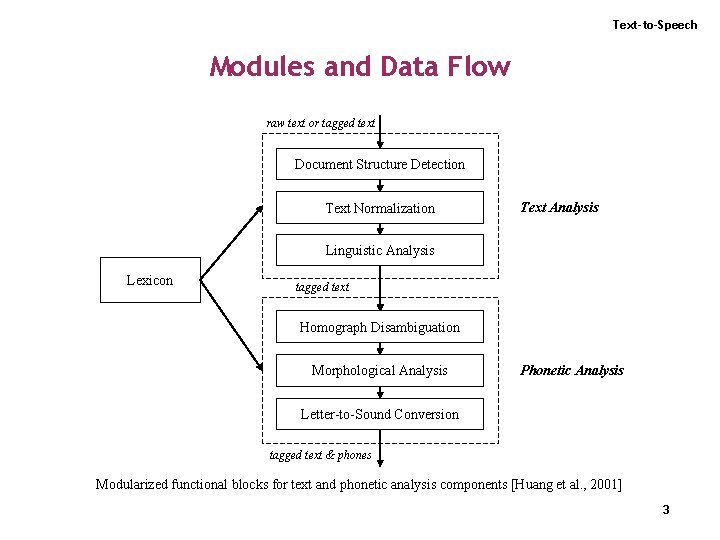

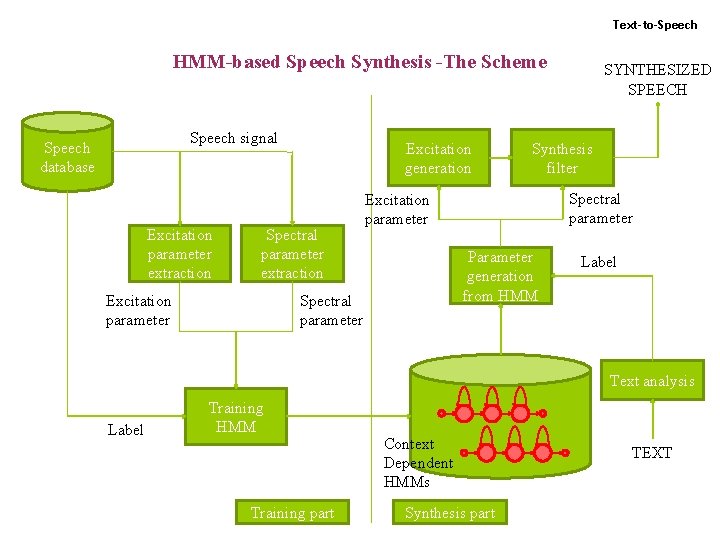

Text-to-Speech: HMM-based Speech Synthesis

Figure: The scheme of HMM-based speech synthesis.

- Training part: speech signals from the speech database go through excitation parameter extraction and spectral parameter extraction; the extracted parameters, together with labels, are used to train context-dependent HMMs.
- Synthesis part: TEXT goes through text analysis to produce labels; parameter generation from the HMMs yields excitation parameters (for excitation generation) and spectral parameters (for the synthesis filter), which together produce the SYNTHESIZED SPEECH.