- Slides: 43

Tetrad project: http://www.phil.cmu.edu/projects/tetrad/current.html

CAUSAL MODELS IN THE COGNITIVE SCIENCES

Two uses

Causal graphical models are used in:
- Practice/methodology: the focus in cognitive science is on neuroimaging, but there are lots of other uses
- A framework for expressing human causal knowledge: are human causal representations “just” these causal graphical models?

Also (but not today): are other cognitive representations “just” graphical models (perhaps causal, perhaps not)?

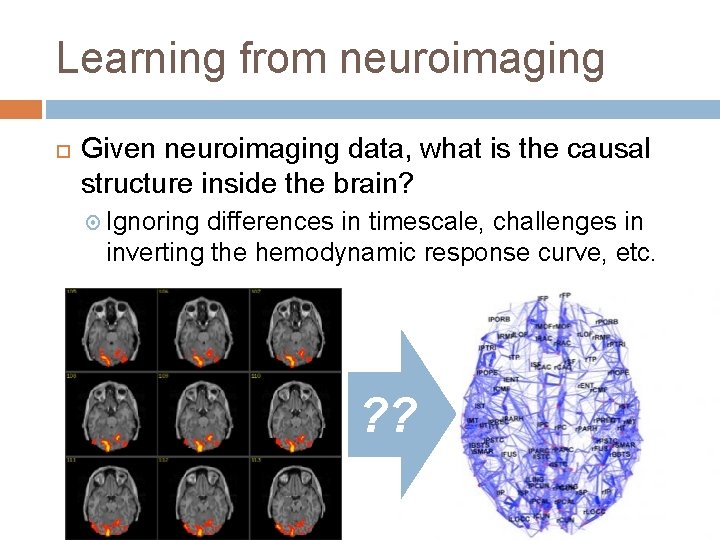

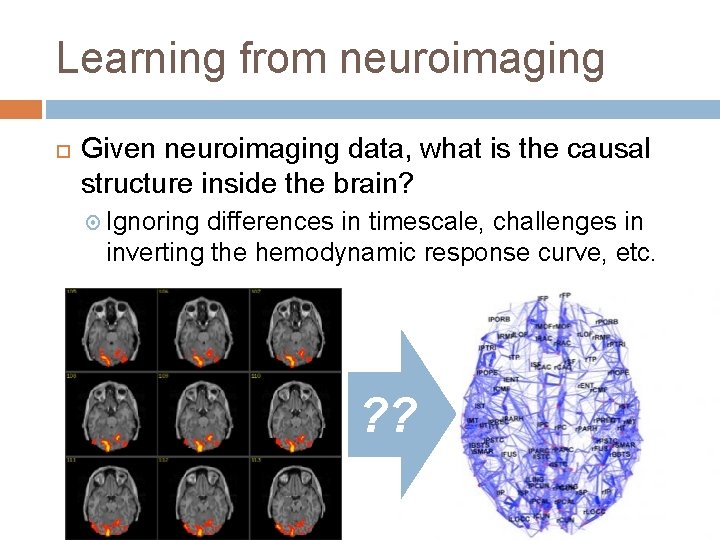

Learning from neuroimaging

Given neuroimaging data, what is the causal structure inside the brain? (This ignores differences in timescale, challenges in inverting the hemodynamic response curve, etc.)

Learning from neuroimaging

Big challenge: people likely have (slightly) different causal structures in their brains.
- The full dataset is really from a mixed population!
- ⇒ “Normal” causal search falls apart
- ⇒ Idea: perhaps the differences are mostly in parameters, not graphs (note that “no edge” ≡ “parameter = 0”)

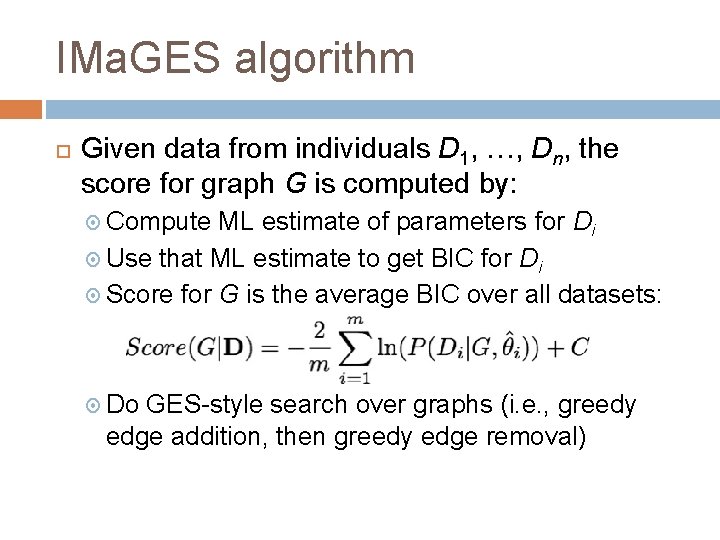

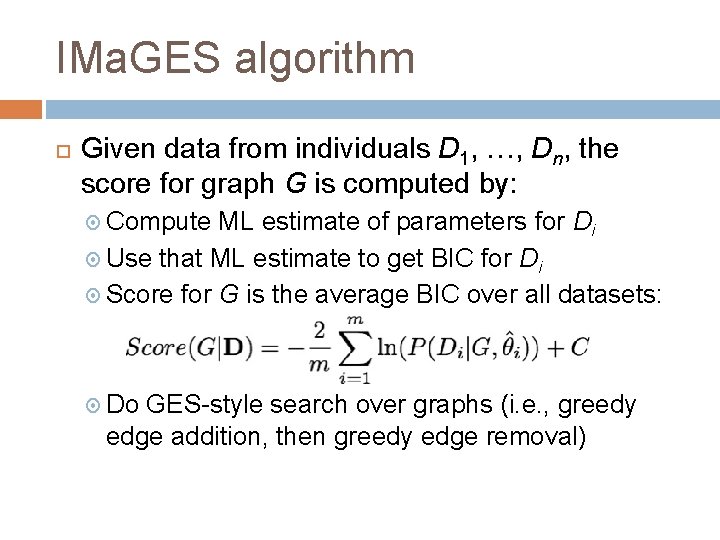

IMaGES algorithm

Given data from individuals $D_1, \ldots, D_n$, the score for graph $G$ is computed by:
- For each $D_i$, compute the ML estimate of the parameters of $G$
- Use that ML estimate to get the BIC score for $D_i$
- The score for $G$ is the average BIC over all datasets:

$$\mathrm{Score}(G) = \frac{1}{n} \sum_{i=1}^{n} \mathrm{BIC}(G, D_i)$$

Then do a GES-style search over graphs (i.e., greedy edge addition, then greedy edge removal).
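A minimal sketch of the averaged score in Python, assuming linear-Gaussian models, a graph given as a dict mapping each variable index to its list of parent indices, and BIC written as $2\log L - k\log m$ (higher is better, $m$ = sample size per individual). The names `node_bic`, `graph_bic`, and `images_score` are hypothetical; Tetrad's own implementation may scale the penalty differently, and the GES search that would call this score is omitted.

```python
import numpy as np

def node_bic(data, child, parents):
    """BIC (2*logL - k*log m) of one linear-Gaussian node given its parents."""
    m = data.shape[0]
    y = data[:, child]
    # Design matrix: intercept plus one column per parent.
    X = np.column_stack([np.ones(m)] + [data[:, p] for p in parents])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)  # ML (= least-squares) coefficients
    resid = y - X @ beta
    sigma2 = max(resid @ resid / m, 1e-12)  # ML residual variance
    loglik = -0.5 * m * (np.log(2 * np.pi * sigma2) + 1)
    k = X.shape[1] + 1  # regression coefficients + residual variance
    return 2 * loglik - k * np.log(m)

def graph_bic(data, graph):
    """BIC of a DAG = sum of node-wise BIC scores."""
    return sum(node_bic(data, child, parents) for child, parents in graph.items())

def images_score(datasets, graph):
    """Average BIC of one graph over all individuals' datasets.
    Parameters are re-estimated separately for each dataset."""
    return sum(graph_bic(d, graph) for d in datasets) / len(datasets)

# Hypothetical data: same graph X0 -> X1 in every person, different coefficient per person.
rng = np.random.default_rng(0)
datasets = []
for b in (0.8, 1.5, -1.0):
    x0 = rng.normal(size=500)
    x1 = b * x0 + rng.normal(size=500)
    datasets.append(np.column_stack([x0, x1]))

with_edge = {0: [], 1: [0]}
no_edge = {0: [], 1: []}
print(images_score(datasets, with_edge) > images_score(datasets, no_edge))  # True
```

Because parameters are fit per individual, people may differ in edge strengths (even in sign) while still sharing one graph, which is the “differences are mostly in parameters” idea above.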

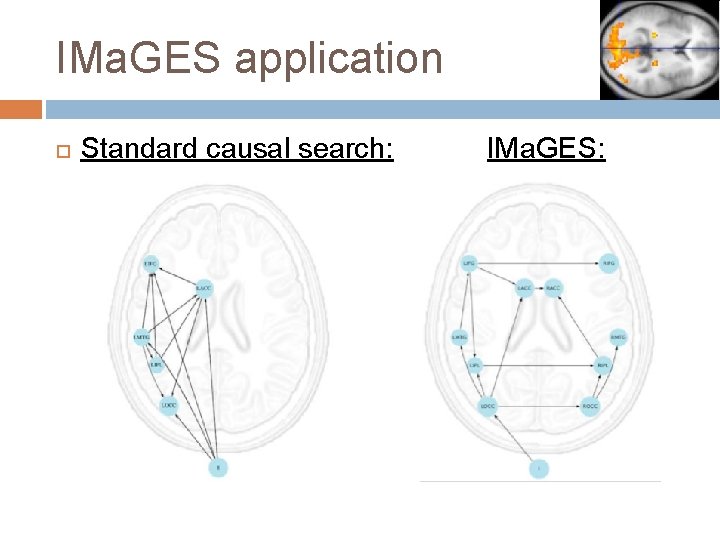

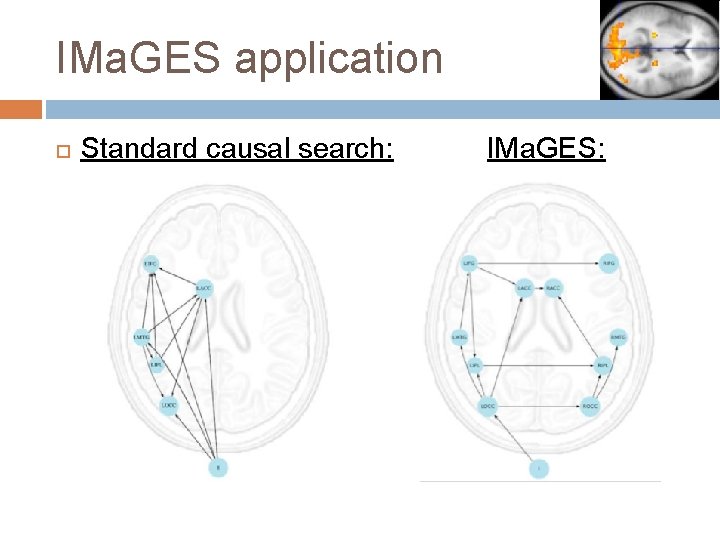

IMaGES application

[Figure: standard causal search vs. IMaGES]

Causal cognition
- Causal inference: learning causal structure from a sequence of cases (observations or interventions)
- Causal perception: learning causal connections through “direct” perception
- Causal reasoning: using prior causal knowledge to predict, explain, and control your world

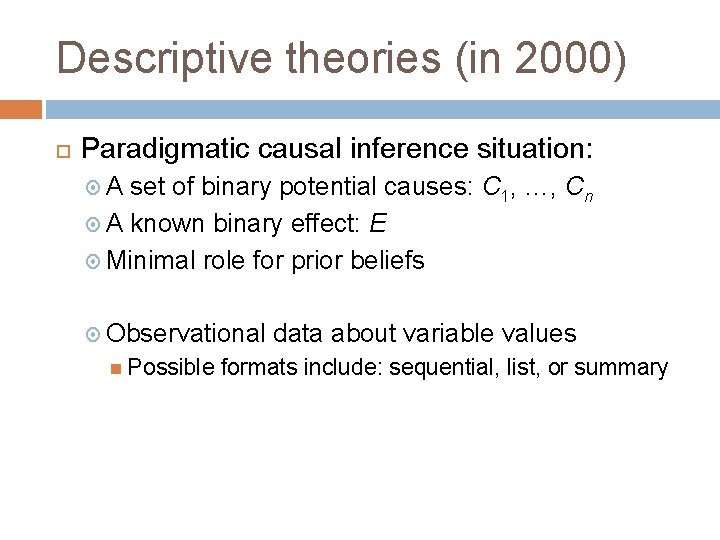

Descriptive theories (in 2000)

Paradigmatic causal inference situation:
- A set of binary potential causes: $C_1, \ldots, C_n$
- A known binary effect: $E$
- Minimal role for prior beliefs
- Observational data about variable values; possible data formats include sequential, list, or summary

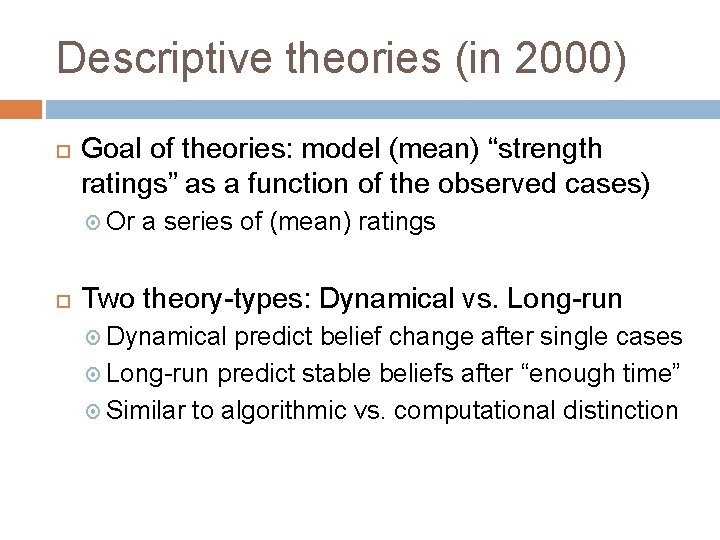

Descriptive theories (in 2000)

Goal of theories: model (mean) “strength ratings”, or a series of (mean) ratings, as a function of the observed cases.

Two theory types: dynamical vs. long-run
- Dynamical theories predict belief change after single cases
- Long-run theories predict stable beliefs after “enough time”
- Similar to the algorithmic vs. computational distinction

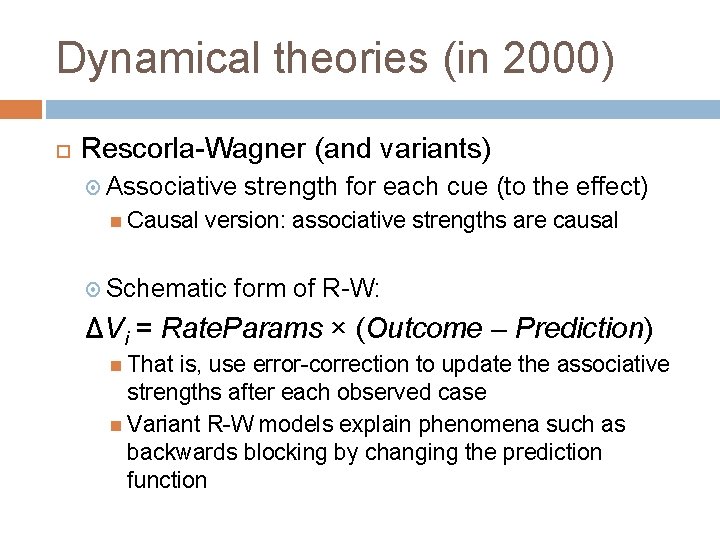

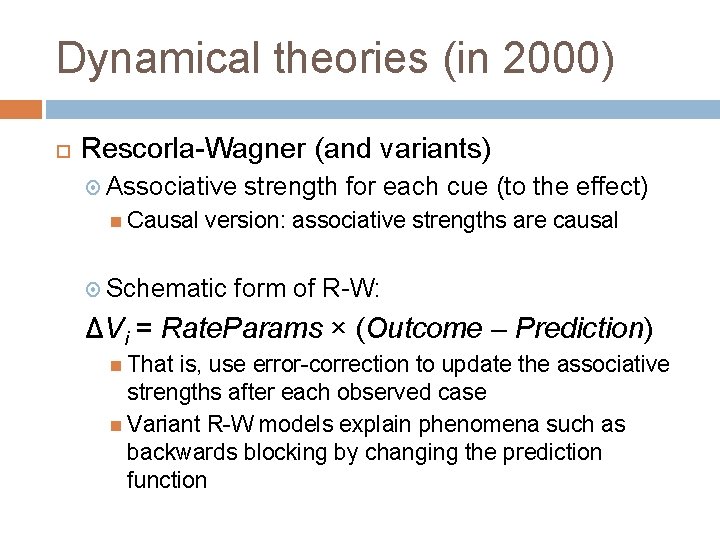

Dynamical theories (in 2000)

Rescorla-Wagner (and variants)
- Associative strength for each cue (to the effect)
- Causal version: associative strengths are causal strengths
- Schematic form of R-W: $\Delta V_i = \text{RateParams} \times (\text{Outcome} - \text{Prediction})$
- That is, use error-correction to update the associative strengths after each observed case
- Variant R-W models explain phenomena such as backwards blocking by changing the prediction function
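The schematic update can be sketched in Python as below. This is a minimal illustration, assuming a single combined learning rate `rate` standing in for RateParams, an outcome coded as 1 (effect present) or 0 (absent), and the standard R-W prediction (the summed strength of the cues present on the trial). `rw_update` is a hypothetical name; variant models would swap in a different prediction function.

```python
def rw_update(strengths, present, outcome, rate=0.1):
    """One Rescorla-Wagner trial.

    strengths: dict cue -> associative strength V
    present:   cues present on this trial
    outcome:   1 if the effect occurred, else 0
    rate:      combined learning-rate parameter (assumed single value)
    """
    prediction = sum(strengths[c] for c in present)  # summed strength of present cues
    error = outcome - prediction                     # Outcome - Prediction
    updated = dict(strengths)
    for c in present:                                # only present cues change
        updated[c] += rate * error
    return updated

V = {"A": 0.0, "B": 0.0}
V = rw_update(V, ["A"], 1)  # A: 0.0 + 0.1 * (1 - 0.0) = 0.1
V = rw_update(V, ["A"], 1)  # A: 0.1 + 0.1 * (1 - 0.1) = 0.19 (up to floating point)
```

Cue B is absent on both trials, so its strength stays at 0.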

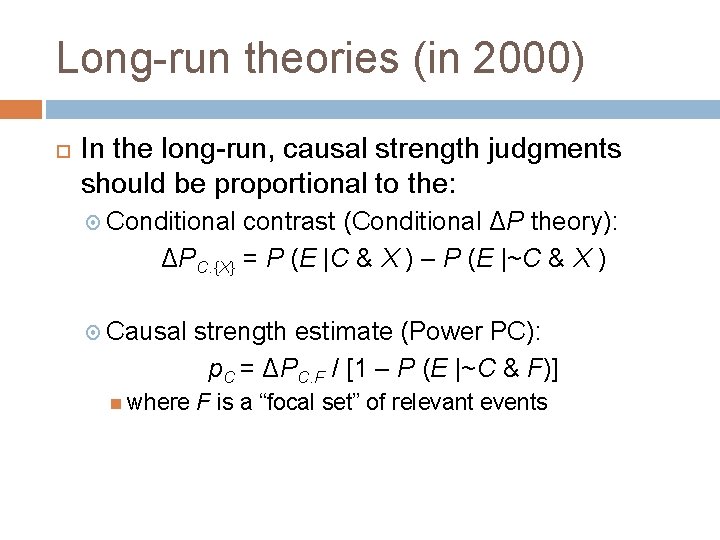

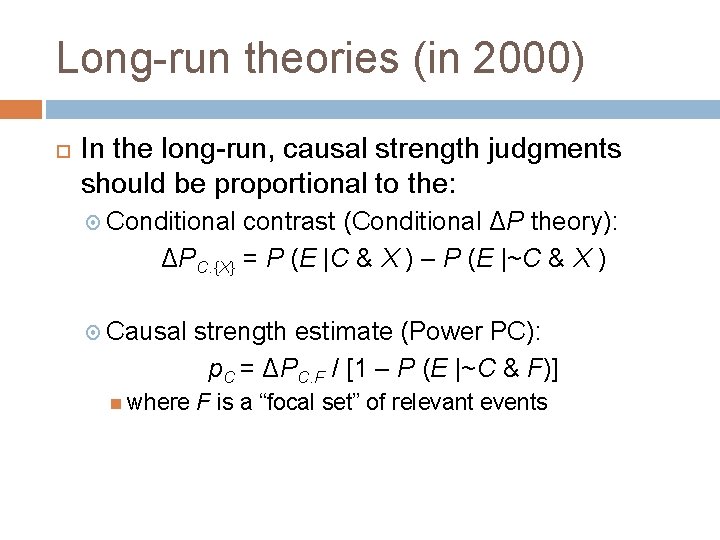

Long-run theories (in 2000)

In the long run, causal strength judgments should be proportional to:
- Conditional contrast (conditional ΔP theory): $\Delta P_{C.\{X\}} = P(E \mid C \,\&\, X) - P(E \mid \neg C \,\&\, X)$
- Causal strength estimate (power PC): $p_C = \Delta P_{C.F} \,/\, [1 - P(E \mid \neg C \,\&\, F)]$, where $F$ is a “focal set” of relevant events
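Both long-run quantities can be computed from a 2×2 contingency table. A minimal sketch, assuming the counts have already been restricted to cases inside the focal set $F$ (that restriction is how conditioning on $F$ is represented here) and using the generative power formula above; the function names and counts are hypothetical.

```python
def conditional_probs(c_e, c_not_e, not_c_e, not_c_not_e):
    """P(E | C & F) and P(E | ~C & F) from 2x2 counts within the focal set F."""
    return c_e / (c_e + c_not_e), not_c_e / (not_c_e + not_c_not_e)

def delta_p(*counts):
    """Conditional contrast: P(E | C & F) - P(E | ~C & F)."""
    p_e_c, p_e_not_c = conditional_probs(*counts)
    return p_e_c - p_e_not_c

def power_pc(*counts):
    """Generative causal power; undefined when P(E | ~C & F) = 1."""
    p_e_c, p_e_not_c = conditional_probs(*counts)
    return (p_e_c - p_e_not_c) / (1 - p_e_not_c)

counts = (15, 5, 5, 15)   # hypothetical: E on 15/20 C-cases and 5/20 ~C-cases
print(delta_p(*counts))   # 0.5
print(power_pc(*counts))  # about 0.667
```

The two theories agree on the sign of the contrast but differ in magnitude whenever the effect sometimes occurs without $C$.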

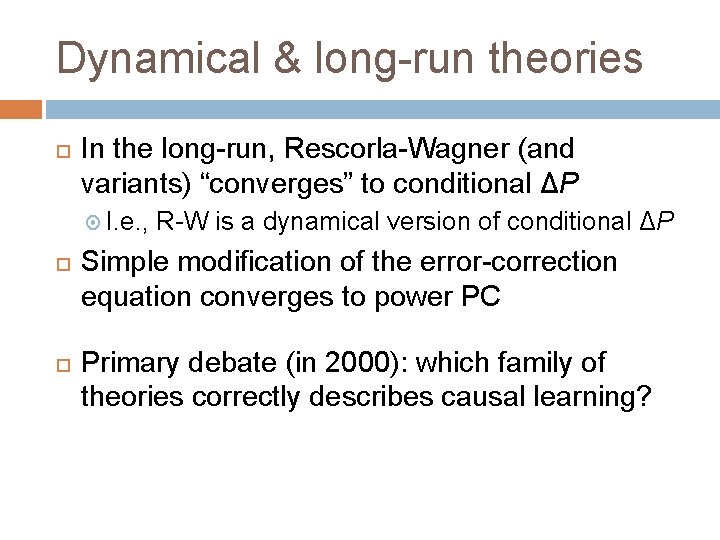

Dynamical & long-run theories
- In the long run, Rescorla-Wagner (and variants) “converges” to conditional ΔP; i.e., R-W is a dynamical version of conditional ΔP
- A simple modification of the error-correction equation converges to power PC
- Primary debate (in 2000): which family of theories correctly describes causal learning?
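The R-W/ΔP connection can be checked numerically. A minimal sketch, assuming one candidate cue C plus an always-present background cue B, a small fixed learning rate, and a hypothetical trial list replayed many times. With a fixed rate and a fixed trial order, the strengths hover near (not exactly at) the equilibrium, so the comparison is approximate.

```python
def train_rw(trials, rate=0.01, epochs=2000):
    """Rescorla-Wagner with an always-present background cue B and a cue C.

    trials: list of (c, e) pairs with c, e in {0, 1}; replayed `epochs` times.
    Returns the learned strengths (V_B, V_C).
    """
    v_b, v_c = 0.0, 0.0
    for _ in range(epochs):
        for c, e in trials:
            error = e - (v_b + c * v_c)  # prediction = V_B (+ V_C if C present)
            v_b += rate * error          # B is present on every trial
            v_c += rate * error * c      # C is updated only when present
    return v_b, v_c

def conditional_delta_p(trials):
    """Return (P(E|C) - P(E|~C), P(E|~C)) from the trial list."""
    with_c = [e for c, e in trials if c == 1]
    without_c = [e for c, e in trials if c == 0]
    p1, p0 = sum(with_c) / len(with_c), sum(without_c) / len(without_c)
    return p1 - p0, p0

# Hypothetical data: P(E|C) = 0.75, P(E|~C) = 0.25
TRIALS = [(1, 1), (0, 0), (1, 1), (0, 0), (1, 1), (0, 1), (1, 0), (0, 0)]
v_b, v_c = train_rw(TRIALS)
dp, base = conditional_delta_p(TRIALS)
print(round(v_c, 2), dp)    # V_C ends close to ΔP = 0.5
print(round(v_b, 2), base)  # V_B ends close to P(E|~C) = 0.25
```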

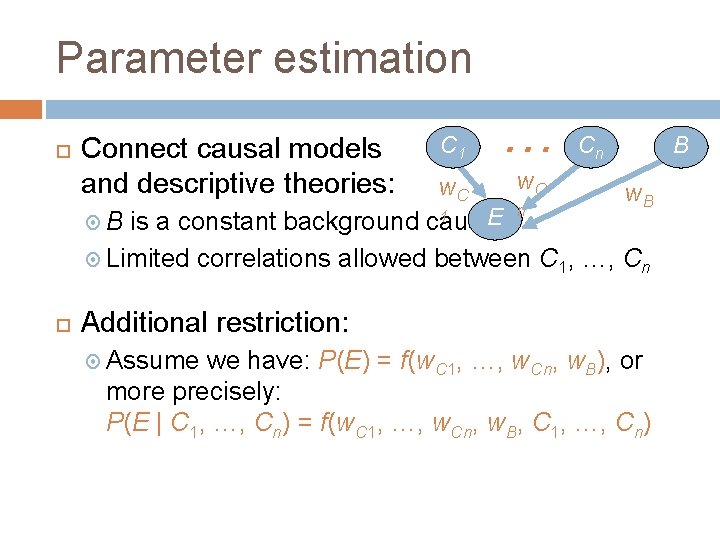

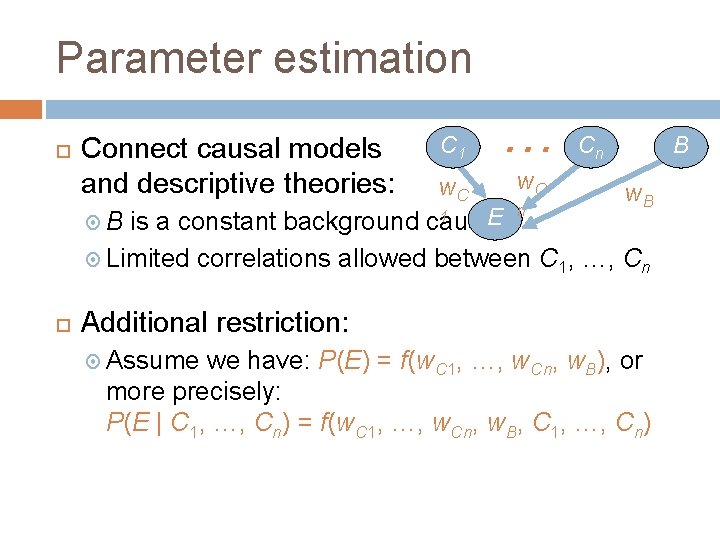

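Parameter estimation

Connect causal models and descriptive theories with a causal Bayes net in which each potential cause $C_1, \ldots, C_n$ has an edge into $E$ with strength $w_{C_1}, \ldots, w_{C_n}$, and $B \to E$ has strength $w_B$:
- $B$ is a constant background cause
- Limited correlations are allowed among $C_1, \ldots, C_n$
- Additional restriction: assume $P(E) = f(w_{C_1}, \ldots, w_{C_n}, w_B)$, or more precisely $P(E \mid C_1, \ldots, C_n) = f(w_{C_1}, \ldots, w_{C_n}, w_B, C_1, \ldots, C_n)$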

Parameter estimation
- Essentially every descriptive theory estimates the w-parameters in this causal Bayes net
- Different descriptive theories result from different functional forms for P(E)
- And all of the research on the descriptive theories implies that people can estimate parameters in this “simple” causal structure
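To see how the functional form for P(E) picks out a descriptive theory, the sketch below fits $w_B, w_C$ by maximum likelihood for a single cause $C$ under two assumed forms: a linear sum and a noisy-OR. On the hypothetical counts used here, the linear fit lands near ΔP and the noisy-OR fit near power PC. The crude grid search (step 0.01) and all names are illustrative choices, not a model from the slides.

```python
import math

def log_lik(p1, p0, counts):
    """Binomial log-likelihood of 2x2 counts given P(E|C) = p1 and P(E|~C) = p0."""
    c_e, c_not_e, not_c_e, not_c_not_e = counts
    return (c_e * math.log(p1) + c_not_e * math.log(1 - p1)
            + not_c_e * math.log(p0) + not_c_not_e * math.log(1 - p0))

def linear(w_b, w_c):
    """Linear-sum form: P(E|C) = w_B + w_C, P(E|~C) = w_B."""
    return w_b + w_c, w_b

def noisy_or(w_b, w_c):
    """Noisy-OR form: P(E|C) = 1 - (1 - w_B)(1 - w_C), P(E|~C) = w_B."""
    return 1 - (1 - w_b) * (1 - w_c), w_b

def ml_fit(form, counts, steps=100):
    """Grid-search maximum-likelihood estimate of (w_B, w_C) on (0, 1)^2."""
    best = None
    for i in range(1, steps):
        for j in range(1, steps):
            w_b, w_c = i / steps, j / steps
            p1, p0 = form(w_b, w_c)
            if not (0 < p1 < 1 and 0 < p0 < 1):
                continue  # parameter pair outside the valid probability range
            ll = log_lik(p1, p0, counts)
            if best is None or ll > best[0]:
                best = (ll, w_b, w_c)
    return best[1], best[2]

counts = (15, 5, 5, 15)          # hypothetical: P(E|C) = 0.75, P(E|~C) = 0.25
print(ml_fit(linear, counts))    # w_C near ΔP = 0.5
print(ml_fit(noisy_or, counts))  # w_C near power PC = 0.5 / 0.75, about 0.67
```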

Learning causal structure?

Additional queries:
- From a “rational analysis” point of view: can people learn structure from interventions? Or from patterns of correlations?
- From a “process model” point of view: is there a psychologically plausible process model of causal graphical model structure learning?

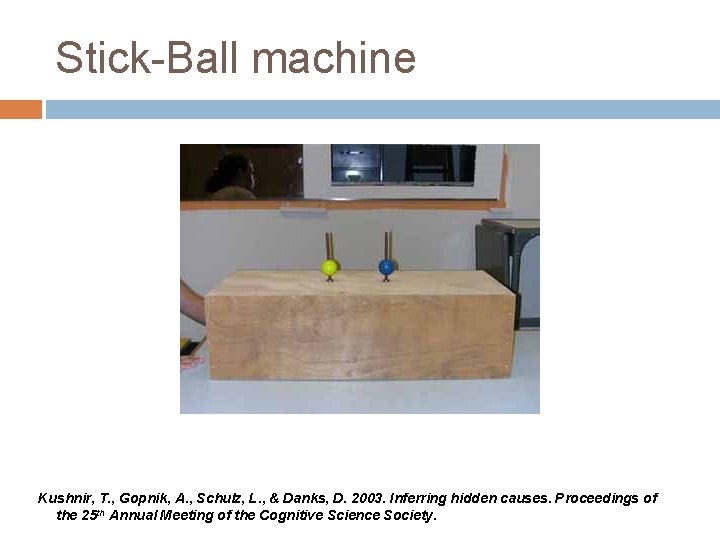

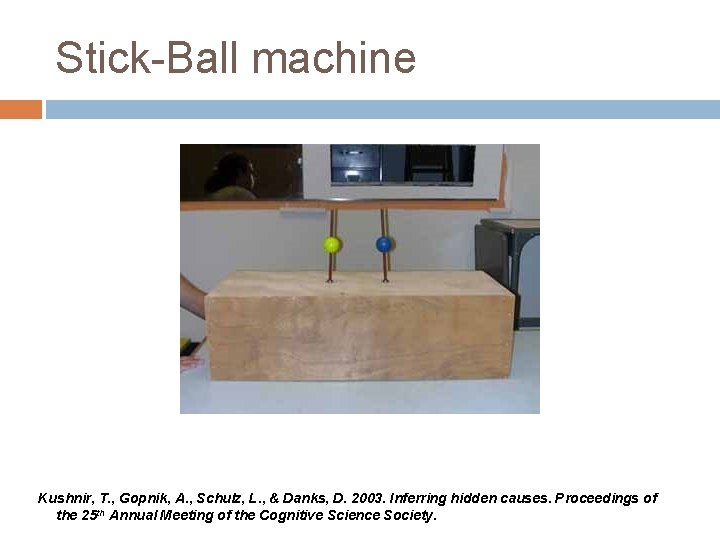

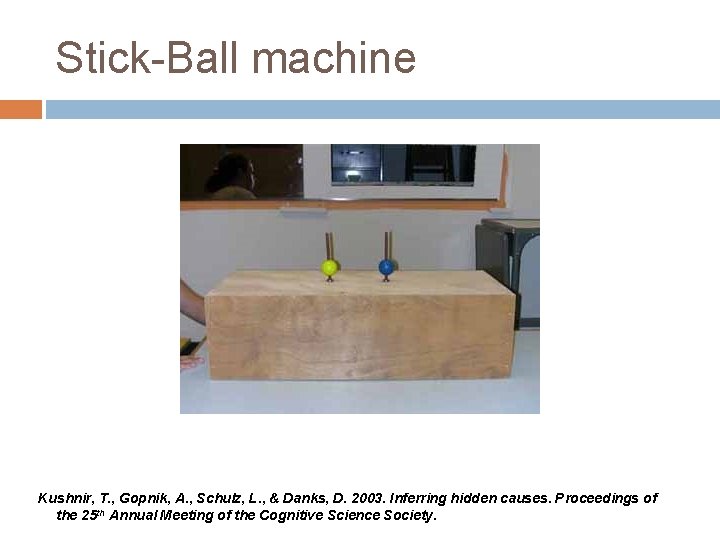

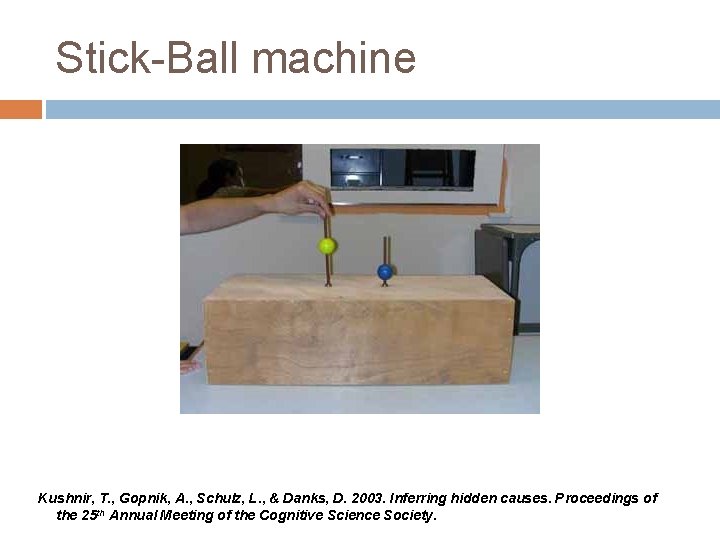

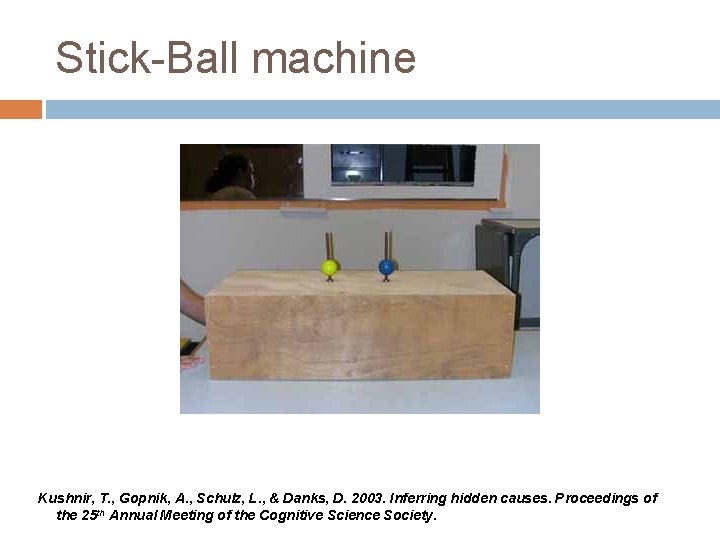

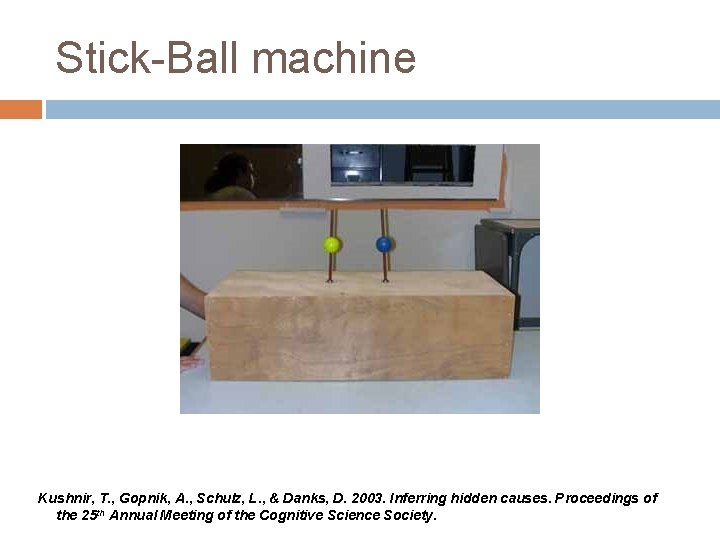

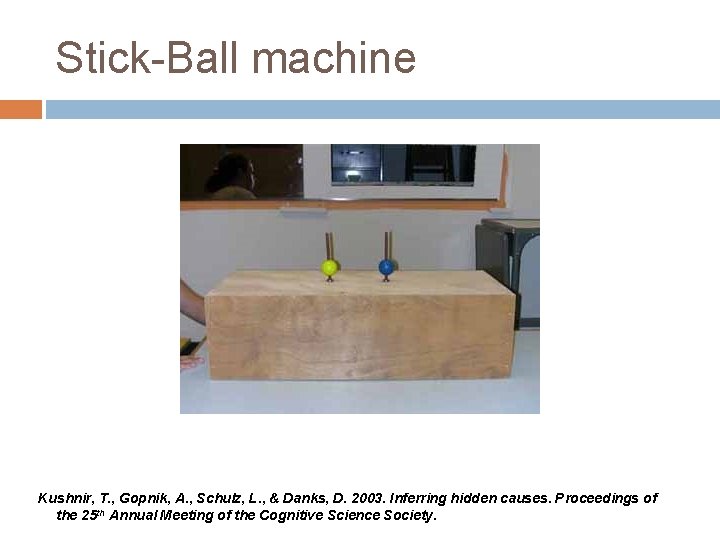

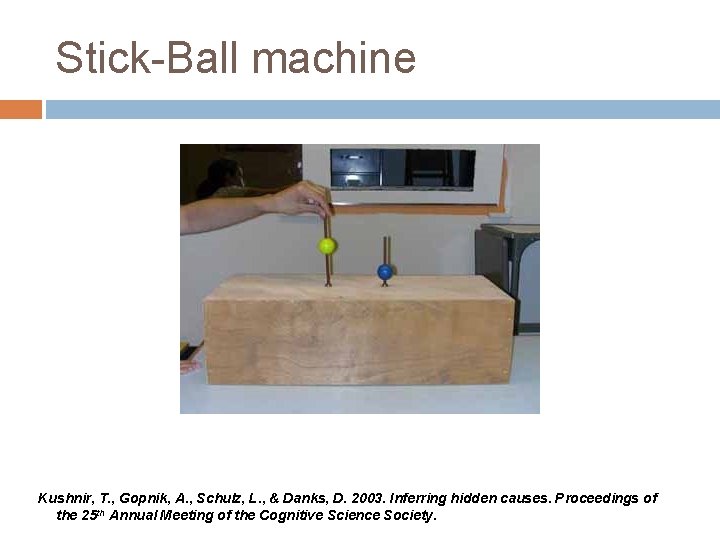

Stick-Ball machine

Kushnir, T., Gopnik, A., Schulz, L., & Danks, D. 2003. Inferring hidden causes. Proceedings of the 25th Annual Meeting of the Cognitive Science Society.

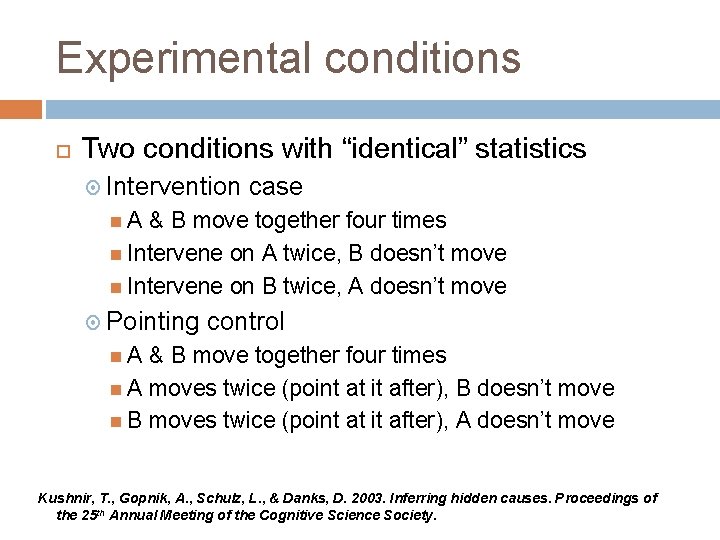

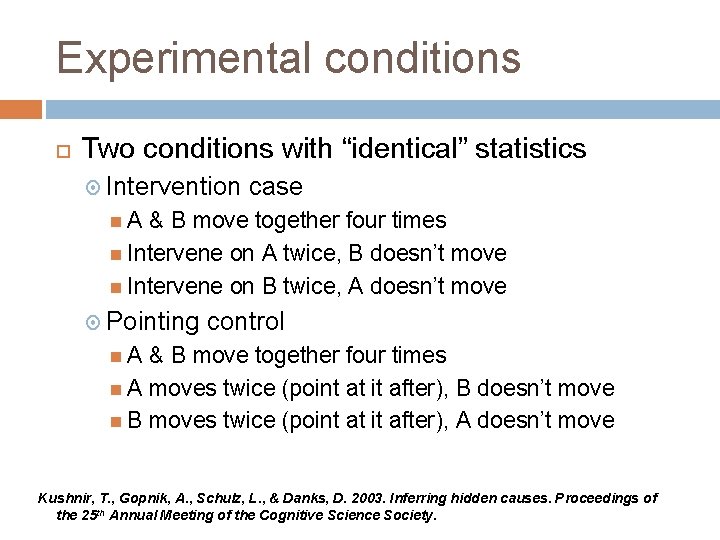

Experimental conditions

Two conditions with “identical” statistics:
- Intervention case
  - A & B move together four times
  - Intervene on A twice; B doesn’t move
  - Intervene on B twice; A doesn’t move
- Pointing control
  - A & B move together four times
  - A moves twice (point at it afterward); B doesn’t move
  - B moves twice (point at it afterward); A doesn’t move

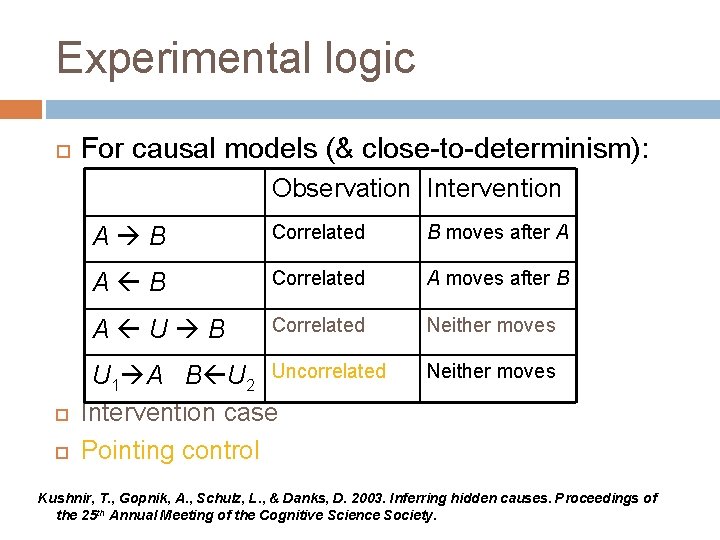

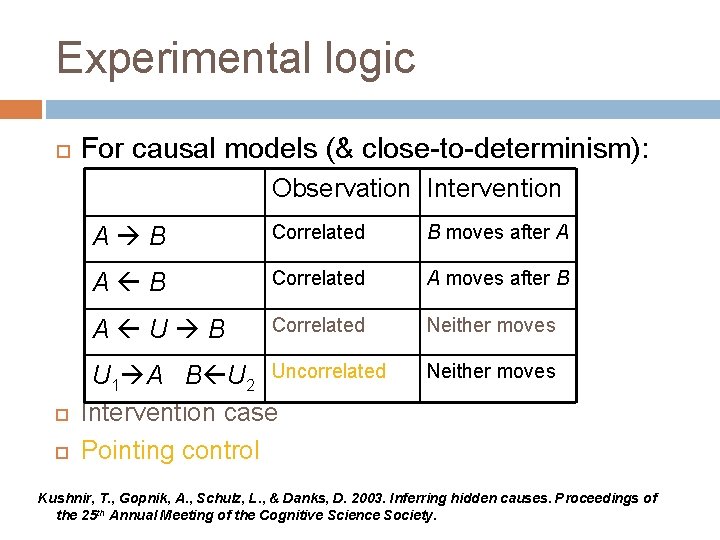

Experimental logic

For causal models (& close-to-determinism):

| Structure | Observation | Intervention |
|---|---|---|
| A → B | Correlated | B moves after A |
| B → A | Correlated | A moves after B |
| A ← U → B | Correlated | Neither moves |
| U1 → A, B ← U2 | Uncorrelated | Neither moves |

[Figure annotations: “Intervention case”, “Pointing control”]

Experimental logic
- Non-CGM causal inference theories make no prediction for this case, as there is no cause-effect division
- Plausible variants that do make a prediction predict no difference between the conditions

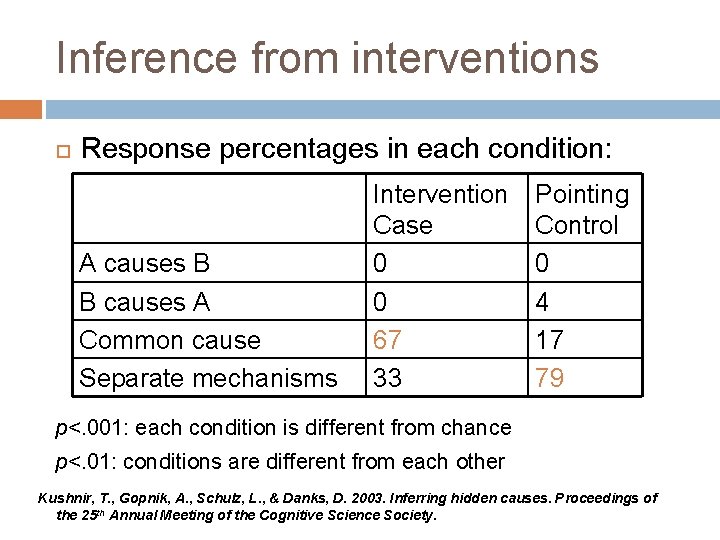

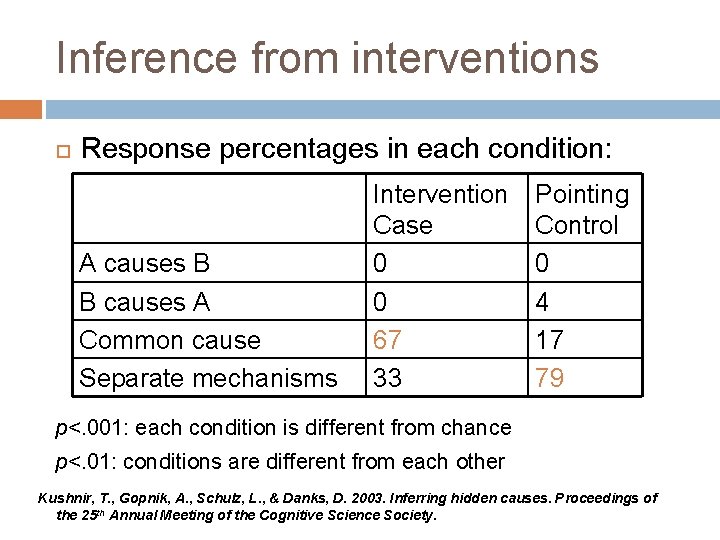

Inference from interventions

Response percentages in each condition:

| Condition | A causes B | B causes A | Common cause | Separate mechanisms |
|---|---|---|---|---|
| Intervention case | 0 | 0 | 67 | 33 |
| Pointing control | 0 | 4 | 17 | 79 |

- p < .001: each condition is different from chance
- p < .01: the conditions are different from each other

Other learning from interventions
- Learning from interventions: Gopnik et al. (2004); Griffiths et al. (2004); Sobel et al. (2004); Steyvers et al. (2003); and many more since 2005
- Planning/predicting your own interventions: Gopnik et al. (2004); Steyvers et al. (2003); Waldmann & Hagmayer (2005); and many more since 2005

Learning from correlations
- Lots of evidence that people (and even rats!) can extract causal structure from observed correlations
- And those structures are well modeled as causal graphical models
- ⇒ Lots of empirical evidence that we act “as if” we are learning causal DAGs (approximately rationally)

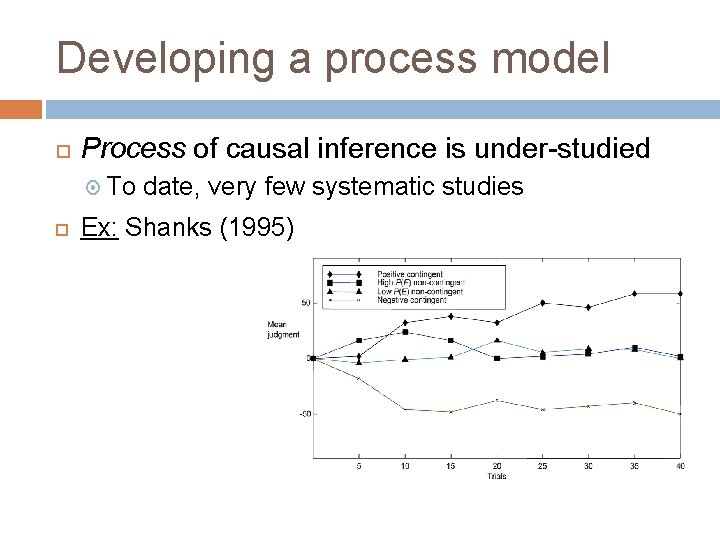

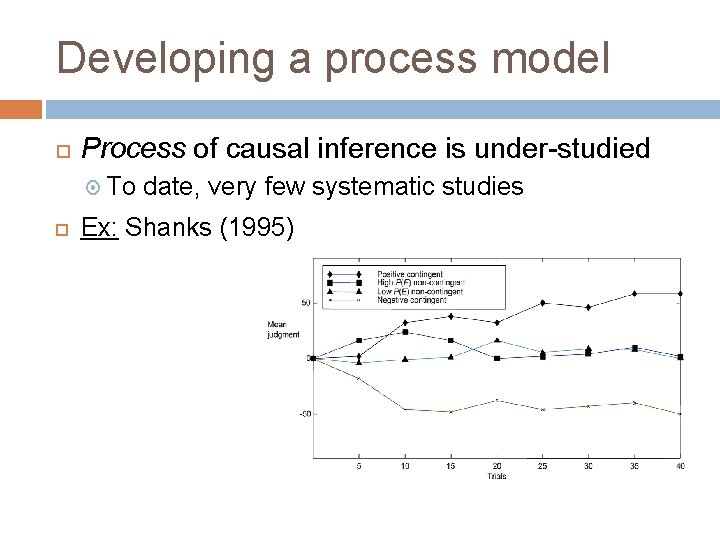

Developing a process model
- The process of causal inference is under-studied
- To date, very few systematic studies, e.g., Shanks (1995)

Developing a process model

Features of observed data:
- Slow convergence
- Pre-asymptotic “bump”

General considerations:
- People have memory/computation bounds
- Error-correction models (e.g., Rescorla-Wagner; dynamic power PC) work well for simple cases

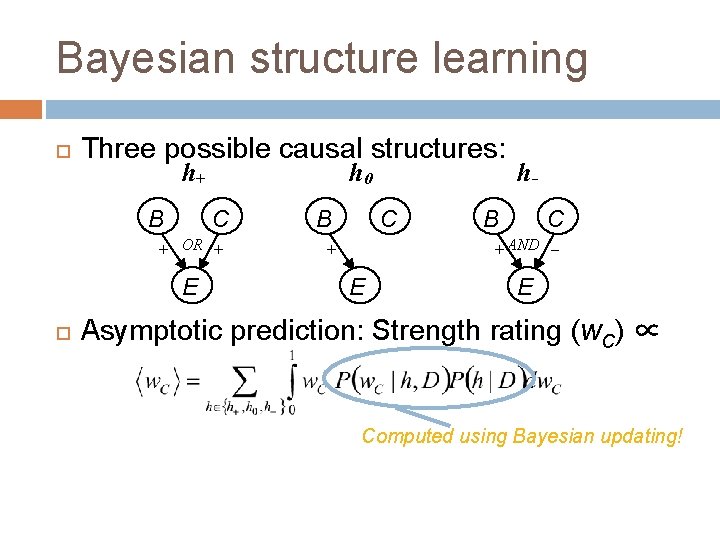

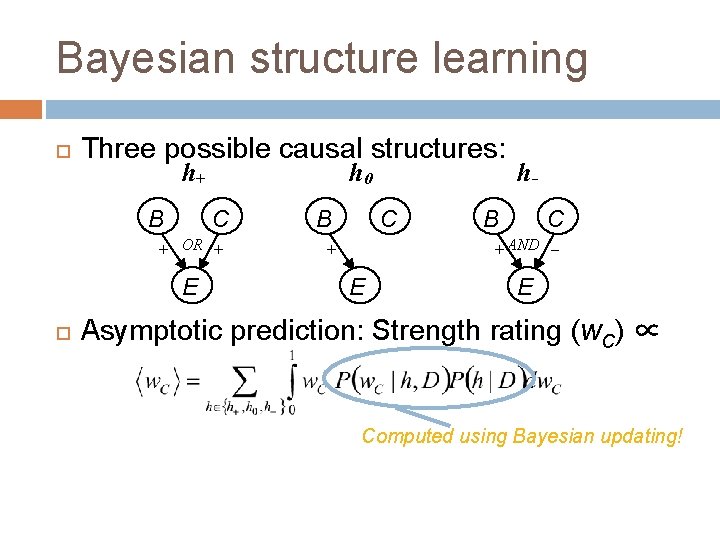

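Bayesian structure learning

Three possible causal structures:
- $h_+$: B → E and C → E, both generative (+), combined by OR
- $h_0$: B → E only; C has no edge to E
- $h_-$: B → E generative (+) and C → E preventive (−), combined by AND

Asymptotic prediction: strength rating ($w_C$) ∝ [formula from slide], computed using Bayesian updating!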

Bayesian dynamic learning

When presented with a sequence of data:
- After each datapoint, update the structure and parameter probability distributions (in the standard Bayesian manner)
- Then use those posteriors as the prior distribution for the next datapoint
- Repeat ad infinitum

Danks, D., Griffiths, T. L., & Tenenbaum, J. B. 2003. Dynamical causal learning. In Advances in Neural Information Processing Systems 15.
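The update loop can be sketched with a discretized parameter grid. This is a minimal illustration, not the model from the cited paper: it assumes $h_+$ is a noisy-OR of B and C, $h_-$ is a noisy-AND-NOT (B generative, C preventive), uniform priors over structures and over a 20-point grid for each weight, and hypothetical trial data. It tracks only the structure posterior; the slide's strength-rating formula is not reproduced.

```python
HYPOTHESES = ("h+", "h0", "h-")

def grid(n=20):
    """Midpoints of n equal bins on (0, 1); avoids probabilities of exactly 0 or 1."""
    return [(i + 0.5) / n for i in range(n)]

def p_effect(h, w_b, w_c, c):
    """P(E=1 | C=c) under each hypothesis (assumed parameterizations)."""
    if h == "h+":  # B and C both generative, noisy-OR
        return 1 - (1 - w_b) * (1 - w_c) ** c
    if h == "h0":  # only the background cause B
        return w_b
    if h == "h-":  # B generative, C preventive (noisy-AND-NOT)
        return w_b * (1 - w_c) ** c
    raise ValueError(h)

def initial_prior(n=20):
    """Uniform over structures, uniform over grid parameters within each."""
    points = []
    for h in HYPOTHESES:
        if h == "h0":
            cells = [(w_b, 0.0) for w_b in grid(n)]  # w_C plays no role
        else:
            cells = [(w_b, w_c) for w_b in grid(n) for w_c in grid(n)]
        for w_b, w_c in cells:
            points.append([h, w_b, w_c, (1 / 3) / len(cells)])
    return points

def update(points, c, e):
    """One Bayesian step: multiply by the likelihood of (c, e), then renormalize."""
    for pt in points:
        p = p_effect(pt[0], pt[1], pt[2], c)
        pt[3] *= p if e else 1 - p
    total = sum(pt[3] for pt in points)
    for pt in points:
        pt[3] /= total
    return points

def structure_posterior(points):
    """Marginal posterior probability of each structure."""
    post = {h: 0.0 for h in HYPOTHESES}
    for h, _, _, w in points:
        post[h] += w
    return post

# Hypothetical sequence: P(E|C) = 0.75, P(E|~C) = 0.25, 40 cases.
cases = [(1, 1), (0, 0), (1, 1), (0, 0), (1, 1), (0, 1), (1, 0), (0, 0)] * 5
points = initial_prior()
for c, e in cases:
    points = update(points, c, e)  # this posterior is the prior for the next case
print(structure_posterior(points))
```

Tracking the posterior after every case (rather than only at the end) is what turns this into a dynamical model whose trial-by-trial trajectory can be compared with human ratings.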

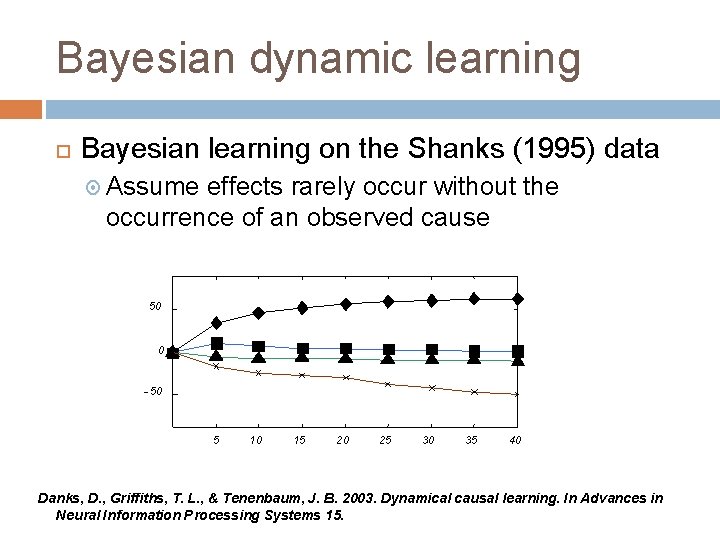

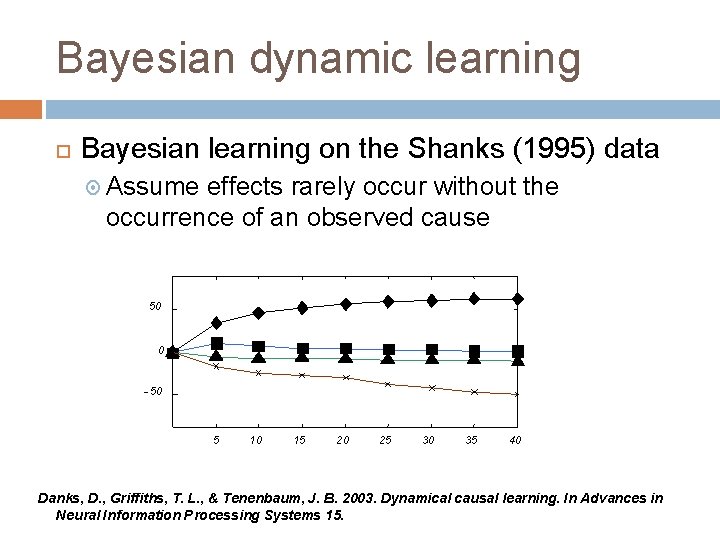

Bayesian dynamic learning

Bayesian learning on the Shanks (1995) data, assuming that effects rarely occur without the occurrence of an observed cause.

[Figure: model predictions on a −50 to 50 scale over 40 trials]

Side-by-side comparison

[Figure: Shanks (1995) human data vs. Bayesian model predictions, on a −50 to 50 scale over 40 trials]

Bayesian learning as process model

Challenges:
- All of the terms in the Bayesian updating equation are quite computationally intensive
- The number of hypotheses under consideration, and the information needed, grow exponentially with the number of potential causes
- No clear way to incorporate inference to unobserved causes

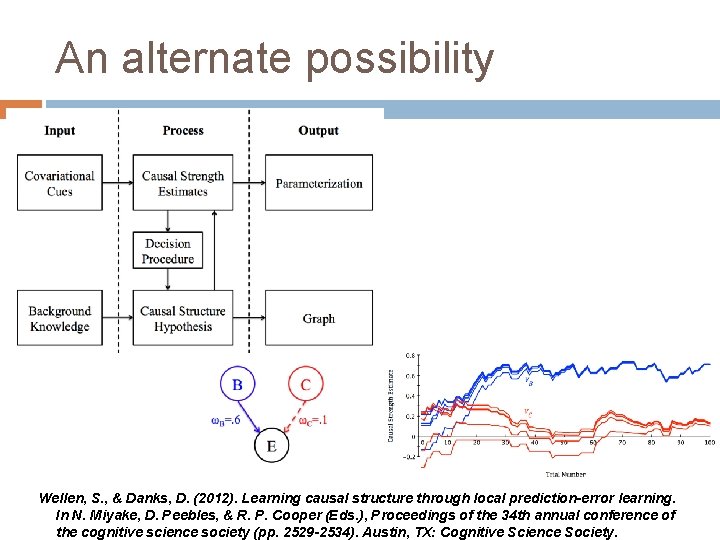

An alternate possibility

Constraint-based structure learning:
- Given a set of independencies, determine the causal Bayes nets that predict exactly those statistical relationships
- A range of algorithms for a range of assumptions
- Idea: use associationist models to make the necessary independence judgments

Danks, D. 2004. Constraint-based human causal learning. In Proceedings of the 6th International Conference on Cognitive Modeling (ICCM-2004).
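The core of constraint-based learning can be sketched as a generic SGS-style procedure: remove edges between variables judged independent given some conditioning set, then orient colliders. This is not the model from the cited paper. The independence judgments come from a hypothetical oracle function (standing in for whatever would supply them, e.g. associationist learning), and further orientation rules (such as Meek's rules) are omitted.

```python
from itertools import combinations

def sgs_pattern(variables, independent):
    """Constraint-based sketch: adjacency search, then collider orientation.

    variables:   list of variable names
    independent: function (x, y, cond) -> bool, where cond is a frozenset;
                 a hypothetical oracle standing in for independence judgments
    Returns (directed, undirected): directed is a set of (cause, effect) pairs;
    undirected is a set of frozenset pairs whose orientation is left open.
    """
    adj = {v: set(variables) - {v} for v in variables}
    sepset = {}
    # Step 1: drop x - y if x and y are independent given some subset of the others.
    for x, y in combinations(variables, 2):
        others = [v for v in variables if v not in (x, y)]
        found = None
        for k in range(len(others) + 1):
            for s in combinations(others, k):
                if independent(x, y, frozenset(s)):
                    found = frozenset(s)
                    break
            if found is not None:
                break
        if found is not None:
            adj[x].discard(y)
            adj[y].discard(x)
            sepset[frozenset((x, y))] = found
    # Step 2: orient x -> z <- y when x, y are non-adjacent and z is not in their sepset.
    directed = set()
    for z in variables:
        for x, y in combinations(sorted(adj[z]), 2):
            if y not in adj[x] and z not in sepset[frozenset((x, y))]:
                directed.update({(x, z), (y, z)})
    undirected = {
        frozenset((x, y))
        for x in variables
        for y in adj[x]
        if (x, y) not in directed and (y, x) not in directed
    }
    return directed, undirected

def oracle(facts):
    """Build a hypothetical independence oracle from a set of (pair, cond) facts."""
    return lambda x, y, s: (frozenset({x, y}), s) in facts

collider_facts = {(frozenset({"A", "B"}), frozenset())}  # only A ⊥ B
chain_facts = {(frozenset({"A", "C"}), frozenset({"B"}))}  # only A ⊥ C | B
print(sgs_pattern(["A", "B", "C"], oracle(collider_facts)))
print(sgs_pattern(["A", "B", "C"], oracle(chain_facts)))
```

With only A ⊥ B, the procedure returns A → C ← B; with only A ⊥ C | B, it returns the unoriented chain A - B - C, since observational independencies alone cannot orient it.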

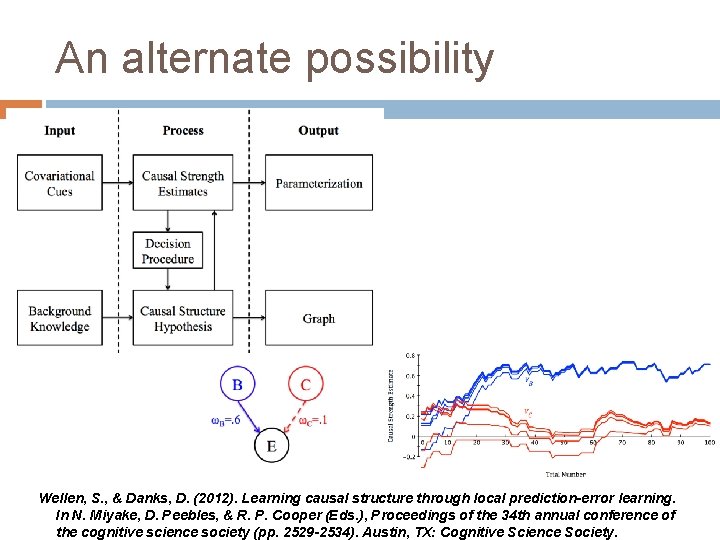

An alternate possibility

Wellen, S., & Danks, D. 2012. Learning causal structure through local prediction-error learning. In N. Miyake, D. Peebles, & R. P. Cooper (Eds.), Proceedings of the 34th Annual Conference of the Cognitive Science Society (pp. 2529–2534). Austin, TX: Cognitive Science Society.

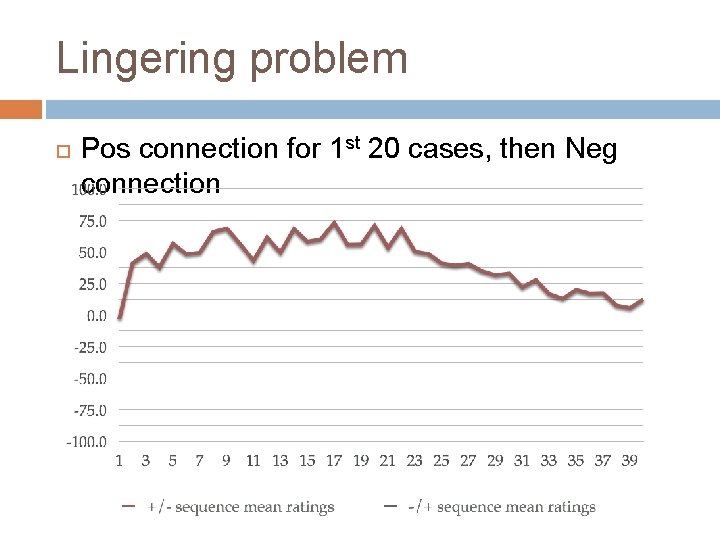

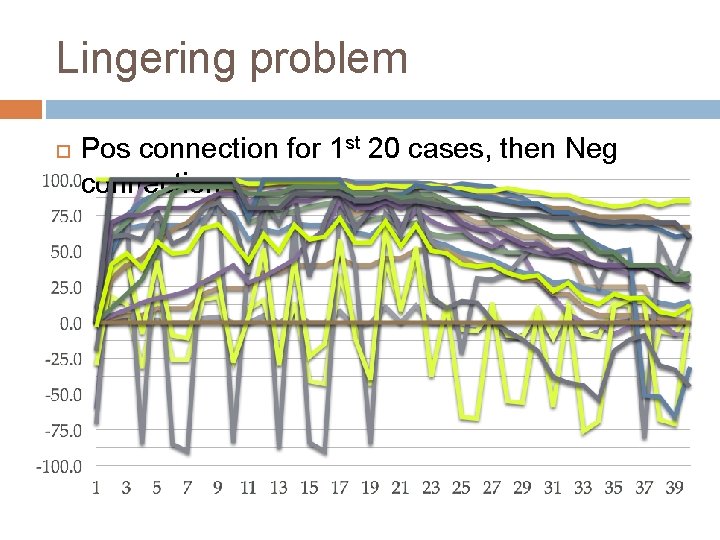

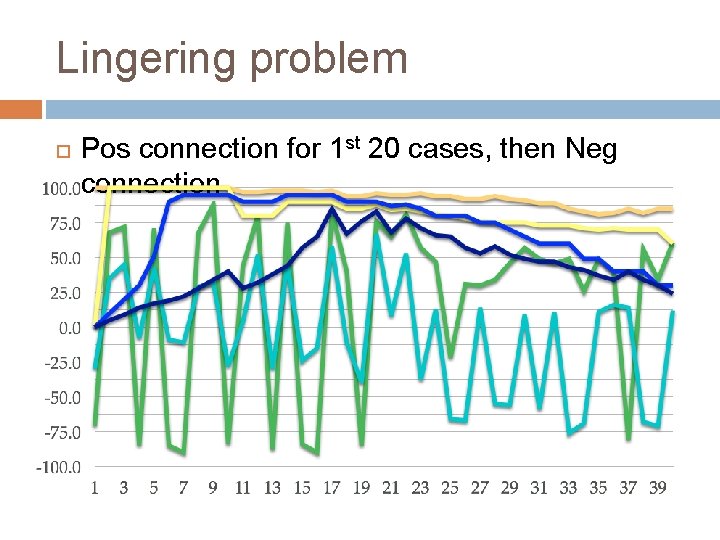

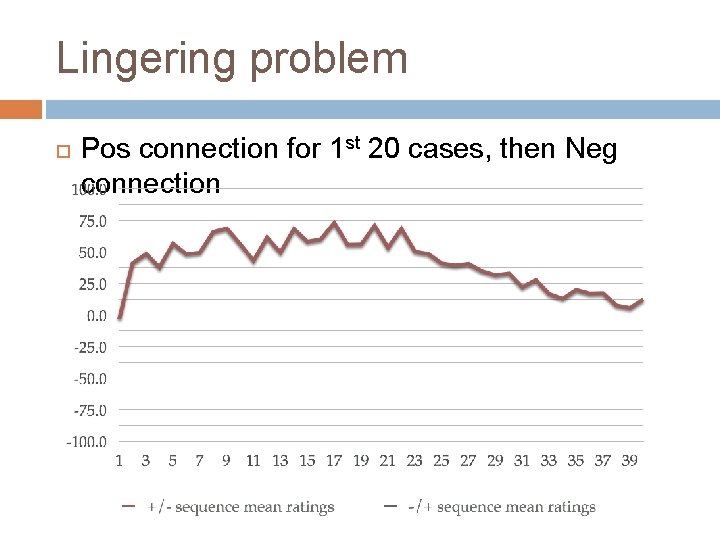

Lingering problem

Positive connection for the first 20 cases, then a negative connection

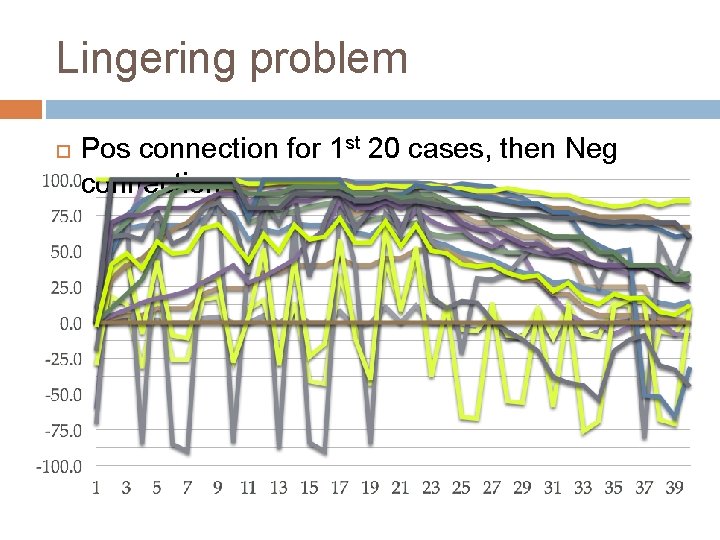

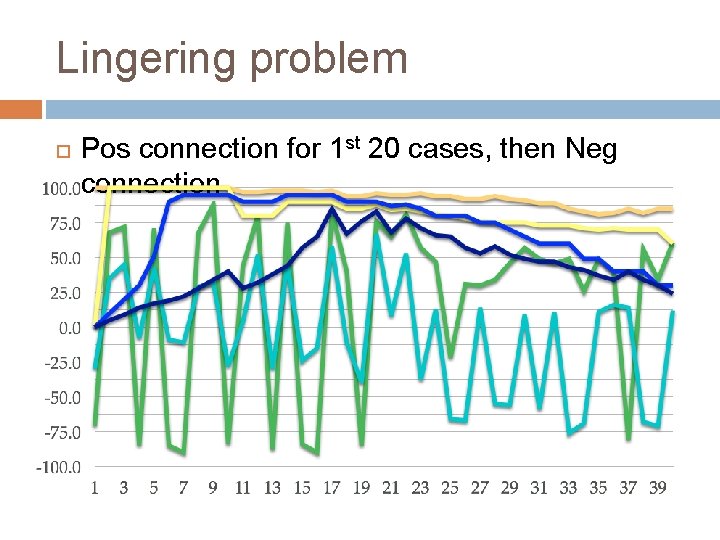

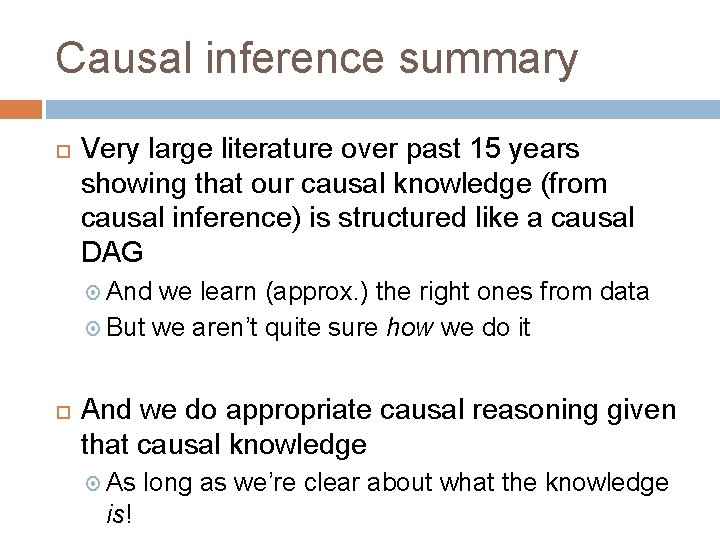

Causal inference summary
- A very large literature over the past 15 years shows that our causal knowledge (from causal inference) is structured like a causal DAG
- And we learn (approximately) the right ones from data
- But we aren’t quite sure how we do it
- And we do appropriate causal reasoning given that causal knowledge, as long as we’re clear about what the knowledge is!

Causal perception
- Paradigmatic case: the “launching effect”
- Similar perceptions/experiences for other causal events (e.g., “exploding”, “dragging”, etc.)
- Including social causal events (e.g., “fleeing”)

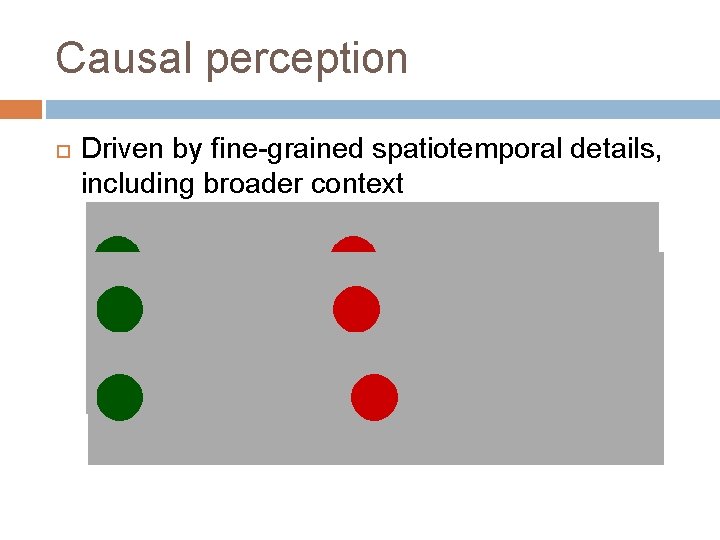

Causal perception

Driven by fine-grained spatiotemporal details, including broader context

Causal perception vs. inference
- Behavioral evidence that they are different: both in responses & phenomenology
- Neuroimaging evidence that they are different: different brain regions “light up” in the different types of experiments
- Theoretical evidence that they are different: the “best models” of the output representations differ