Technical Considerations in Alignment for Computerized Adaptive Testing

- Slides: 24

Liru Zhang, Delaware DOE
Shudong Wang, NWEA
2014 CCSSO NCSA, New Orleans, LA, June 25-27, 2014

Background of Alignment

- Alignment is an important attribute of standards-based educational reform. In addition to validity evidence, Peer Review requires states to submit evidence demonstrating alignment between the assessment and its content standards (Standards and Assessments Peer Review Guidance, 2009).
- For the Next Generation Assessment, alignment is defined as the degree to which the expectations specified in the Common Core State Standards and the assessment agree and work in conjunction with one another to guide the system toward students learning what they are supposed to know and be able to do.
- With new and innovative technology and the great potential of online tests, computerized adaptive testing (CAT) has been increasingly implemented in K-12 assessment systems.

Alignment for Linear Tests

Different approaches have been employed over the past decade to evaluate the alignment of state assessment programs. Their processes share four features:

1. They use approved content standards and implemented tests.
2. Items are reviewed one by one from a developed linear test form.
3. Alignment is judged by professionals.
4. Reviewers evaluate the degree to which each item matches the claim, standard, objective and/or topic, and the performance expectations in the standards (e.g., cognitive complexity and depth of knowledge).

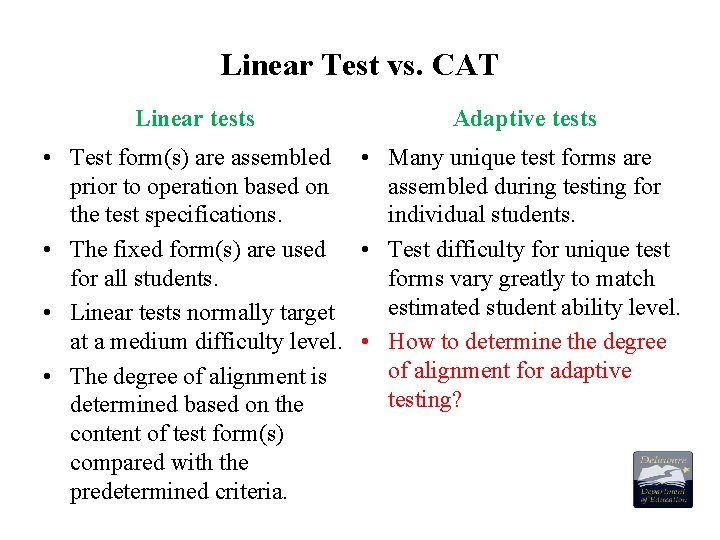

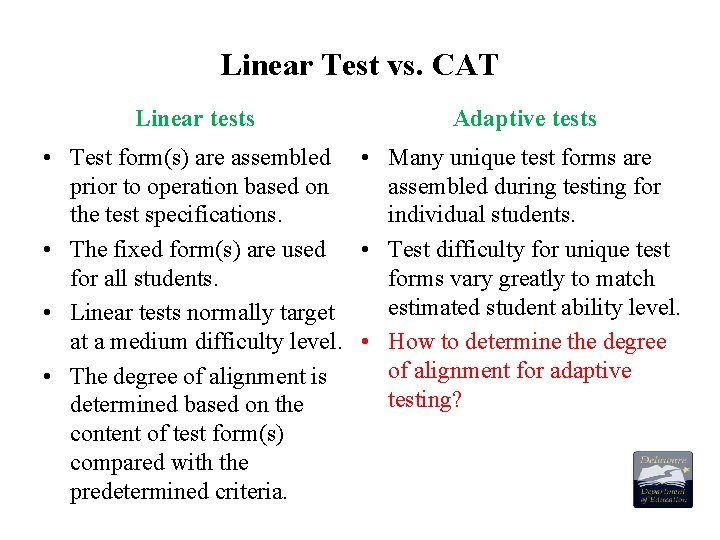

Linear Test vs. CAT

| Linear tests | Adaptive tests |
|---|---|
| Test form(s) are assembled prior to operation based on the test specifications. | Many unique test forms are assembled during testing for individual students. |
| The fixed form(s) are used for all students. | Test difficulty varies greatly across the unique forms to match each student's estimated ability level. |
| Linear tests normally target a medium difficulty level. | |
| The degree of alignment is determined by comparing the content of the test form(s) with predetermined criteria. | How should the degree of alignment be determined for adaptive testing? |

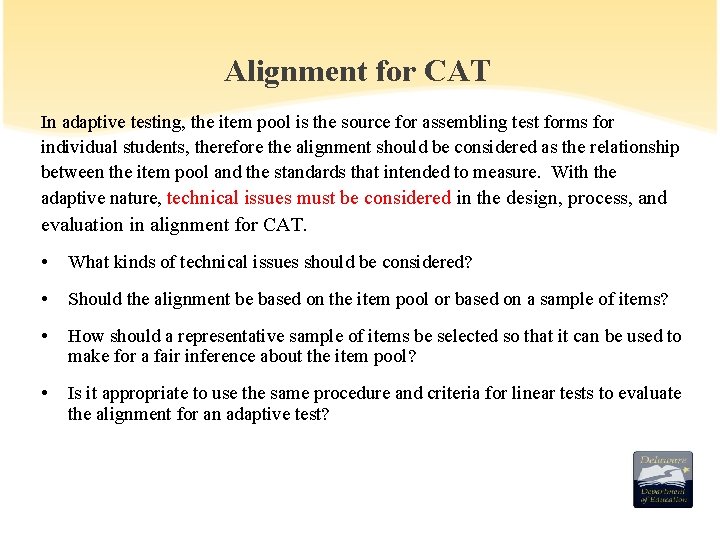

Alignment for CAT

In adaptive testing, the item pool is the source from which test forms are assembled for individual students. Alignment should therefore be considered as the relationship between the item pool and the standards it is intended to measure. Because of the adaptive nature of the test, technical issues must be considered in the design, process, and evaluation of alignment for CAT.

- What kinds of technical issues should be considered?
- Should the alignment be based on the entire item pool or on a sample of items?
- How should a representative sample of items be selected so that it supports a fair inference about the item pool?
- Is it appropriate to use the same procedures and criteria developed for linear tests to evaluate the alignment of an adaptive test?

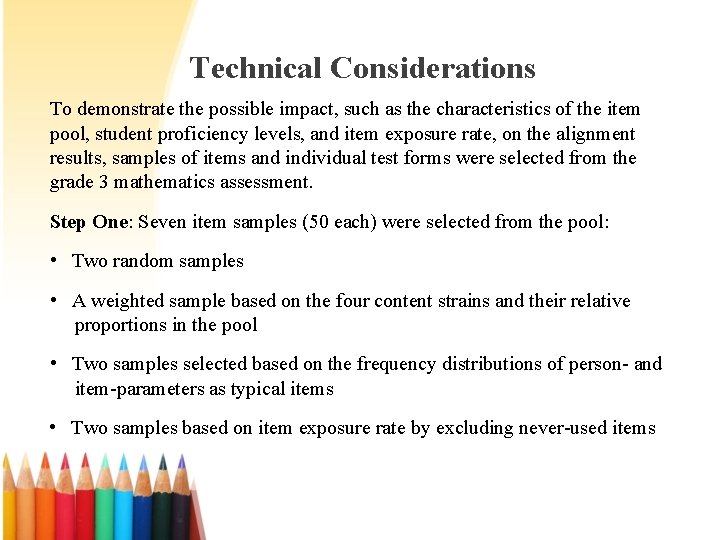

Technical Considerations

Samples of items and individual test forms were selected from the grade 3 mathematics assessment. The goal was to demonstrate how factors such as the characteristics of the item pool, student proficiency levels, and item exposure rate can affect the alignment results.

Step One: Seven item samples (50 items each) were selected from the pool:

- Two random samples
- One weighted sample based on the four content strands and their relative proportions in the pool
- Two samples of typical items, selected based on the frequency distributions of person and item parameters
- Two samples based on item exposure rate, excluding never-used items
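The slides do not say how the weighted sample was drawn. One common approach is proportional stratified sampling, with largest-remainder rounding so the strand allocations sum exactly to the sample size. The sketch below assumes that approach. The function names are hypothetical, and `random.sample` draws without replacement within each strand. Applied to the 364-item pool, it allocates 23, 11, 10, and 6 items to the four strands. These are the same strand counts reported for the Standards sample in Comparison 1.

```python
import math
import random

def proportional_allocation(pool_counts, sample_size):
    """Allocate sample_size draws across strata in proportion to pool_counts.

    Uses the largest-remainder method so the allocations sum exactly to sample_size."""
    total = sum(pool_counts.values())
    quotas = {k: sample_size * n / total for k, n in pool_counts.items()}
    alloc = {k: math.floor(q) for k, q in quotas.items()}
    leftover = sample_size - sum(alloc.values())
    # Hand the remaining draws to the strata with the largest fractional parts.
    for k in sorted(quotas, key=lambda k: quotas[k] - alloc[k], reverse=True)[:leftover]:
        alloc[k] += 1
    return alloc

def stratified_sample(items_by_strand, sample_size, seed=None):
    """Draw a weighted (proportional) sample of item IDs, without replacement per strand."""
    rng = random.Random(seed)
    counts = {k: len(v) for k, v in items_by_strand.items()}
    alloc = proportional_allocation(counts, sample_size)
    return {k: rng.sample(items_by_strand[k], alloc[k]) for k in items_by_strand}

# Pool composition by content strand (grade 3 mathematics pool).
POOL = {"Numeric": 166, "Algebraic": 82, "Geometric": 72, "Quantitative": 44}
print(proportional_allocation(POOL, 50))
# {'Numeric': 23, 'Algebraic': 11, 'Geometric': 10, 'Quantitative': 6}
```

Other rounding rules are possible. Simple rounding of each quota, for example, can produce allocations that do not sum to 50.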

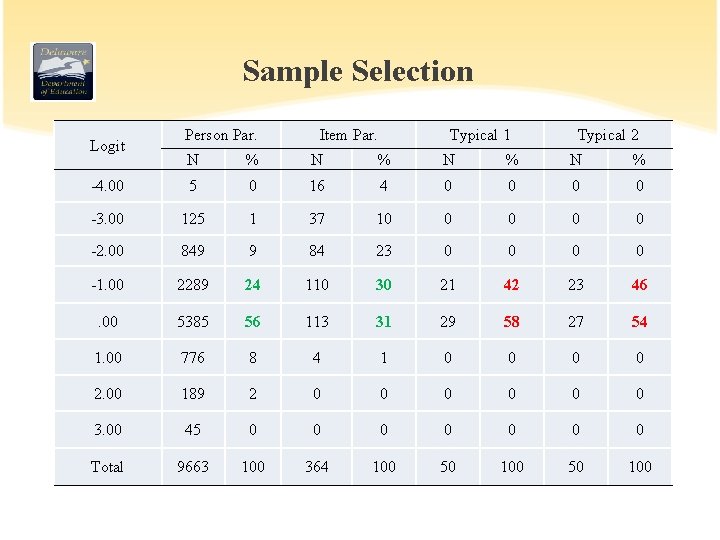

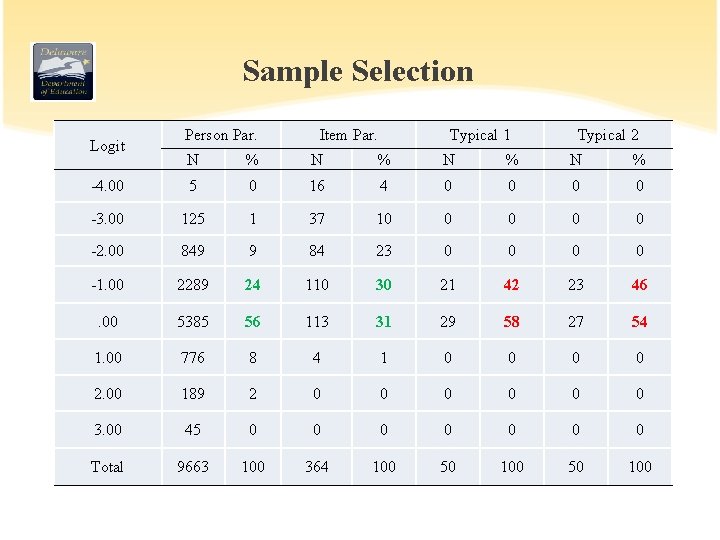

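Sample Selection

| Logit | Person par. N | Person par. % | Item par. N | Item par. % | Typical 1 N | Typical 1 % | Typical 2 N | Typical 2 % |
|---|---|---|---|---|---|---|---|---|
| -4.00 | 5 | 0 | 16 | 4 | 0 | 0 | 0 | 0 |
| -3.00 | 125 | 1 | 37 | 10 | 0 | 0 | 0 | 0 |
| -2.00 | 849 | 9 | 84 | 23 | 0 | 0 | 0 | 0 |
| -1.00 | 2289 | 24 | 110 | 30 | 21 | 42 | 23 | 46 |
| 0.00 | 5385 | 56 | 113 | 31 | 29 | 58 | 27 | 54 |
| 1.00 | 776 | 8 | 4 | 1 | 0 | 0 | 0 | 0 |
| 2.00 | 189 | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| 3.00 | 45 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Total | 9663 | 100 | 364 | 100 | 50 | 100 | 50 | 100 |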

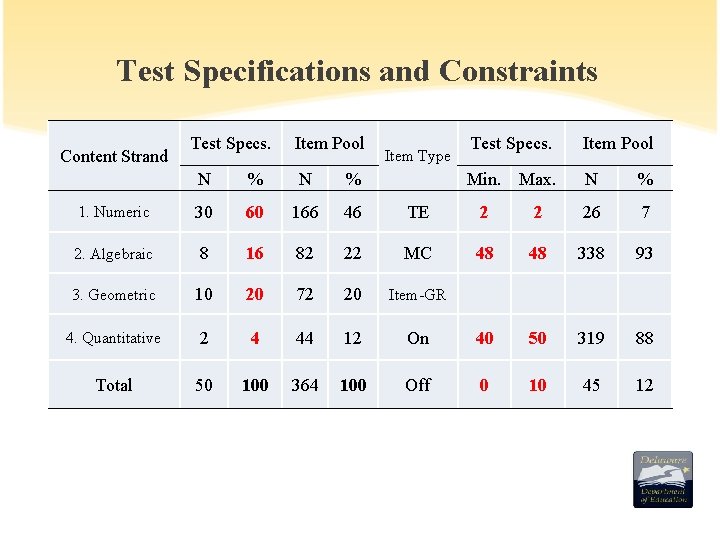

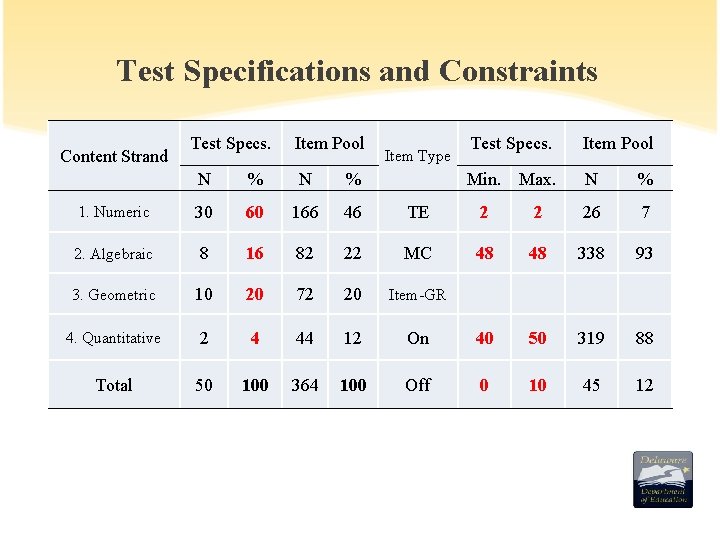

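Test Specifications and Constraints

| Content Strand | Test Specs N | Test Specs % | Item Pool N | Item Pool % |
|---|---|---|---|---|
| 1. Numeric | 30 | 60 | 166 | 46 |
| 2. Algebraic | 8 | 16 | 82 | 22 |
| 3. Geometric | 10 | 20 | 72 | 20 |
| 4. Quantitative | 2 | 4 | 44 | 12 |
| Total | 50 | 100 | 364 | 100 |

| Item Type / Grade | Test Specs Min. | Test Specs Max. | Item Pool N | Item Pool % |
|---|---|---|---|---|
| TE | 2 | 2 | 26 | 7 |
| MC | 48 | 48 | 338 | 93 |
| On-grade items | 40 | 50 | 319 | 88 |
| Off-grade items | 0 | 10 | 45 | 12 |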

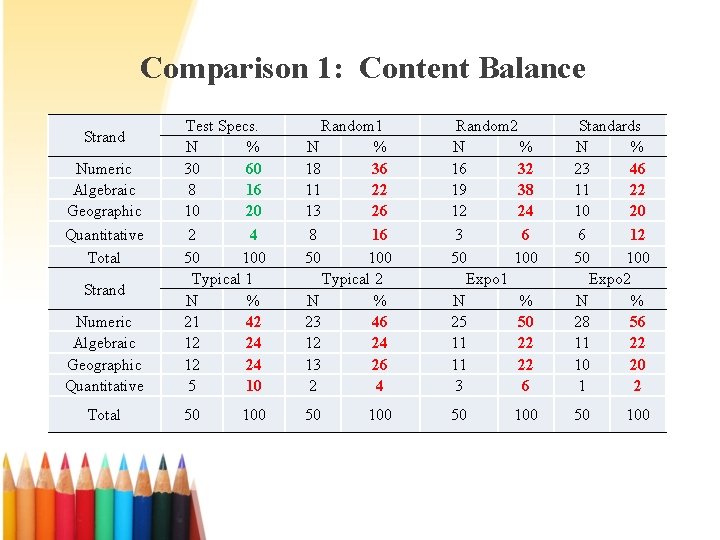

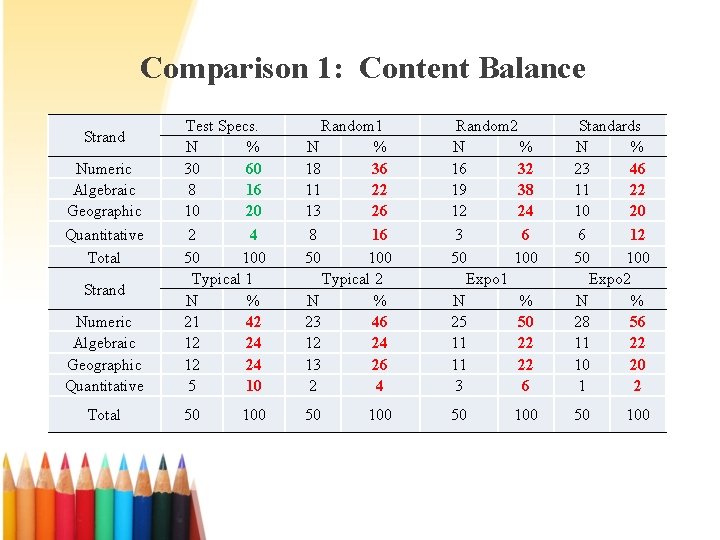

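Comparison 1: Content Balance

| Strand | Test Specs N (%) | Random 1 N (%) | Random 2 N (%) | Standards N (%) | Typical 1 N (%) | Typical 2 N (%) | Expo 1 N (%) | Expo 2 N (%) |
|---|---|---|---|---|---|---|---|---|
| Numeric | 30 (60) | 18 (36) | 16 (32) | 23 (46) | 21 (42) | 23 (46) | 25 (50) | 28 (56) |
| Algebraic | 8 (16) | 11 (22) | 19 (38) | 11 (22) | 12 (24) | 12 (24) | 11 (22) | 11 (22) |
| Geometric | 10 (20) | 13 (26) | 12 (24) | 10 (20) | 12 (24) | 13 (26) | 11 (22) | 10 (20) |
| Quantitative | 2 (4) | 8 (16) | 3 (6) | 6 (12) | 5 (10) | 2 (4) | 3 (6) | 1 (2) |
| Total | 50 (100) | 50 (100) | 50 (100) | 50 (100) | 50 (100) | 50 (100) | 50 (100) | 50 (100) |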

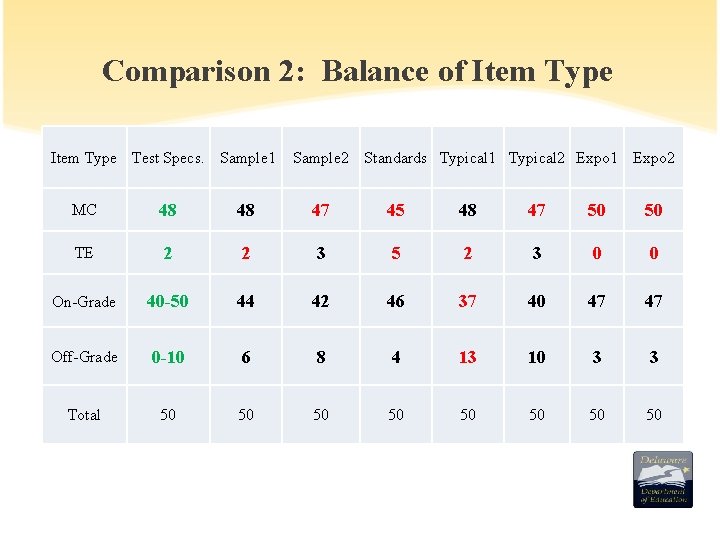

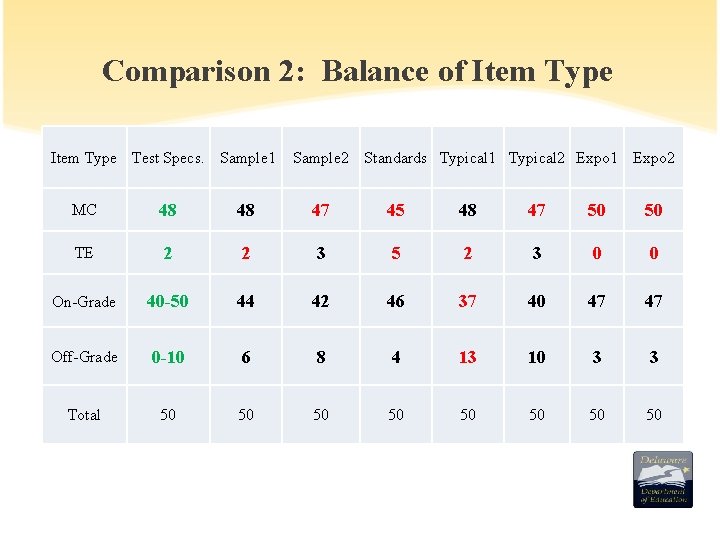

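Comparison 2: Balance of Item Type

| Category | Test Specs | Sample 1 | Sample 2 | Standards | Typical 1 | Typical 2 | Expo 1 | Expo 2 |
|---|---|---|---|---|---|---|---|---|
| MC | 48 | 48 | 47 | 45 | 48 | 47 | 50 | 50 |
| TE | 2 | 2 | 3 | 5 | 2 | 3 | 0 | 0 |
| On-grade | 40-50 | 44 | 42 | 46 | 37 | 40 | 47 | 47 |
| Off-grade | 0-10 | 6 | 8 | 4 | 13 | 10 | 3 | 3 |
| Total | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 |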
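A short script can check the counts in the Comparison 2 table against the item-type and grade-level constraints from the test specifications. The sketch below treats each specification as an inclusive (min, max) range. The dictionary and function names are illustrative only. Under these ranges, Sample 1 is the only sample that meets all four constraints. Typical 1 fails only the grade-level ranges, and every other sample misses the MC/TE targets.

```python
# Item-type and grade-level constraints from the test specifications, as (min, max).
CONSTRAINTS = {"MC": (48, 48), "TE": (2, 2), "On-Grade": (40, 50), "Off-Grade": (0, 10)}

# Counts per 50-item sample, transcribed from the Comparison 2 table.
SAMPLES = {
    "Sample 1":  {"MC": 48, "TE": 2, "On-Grade": 44, "Off-Grade": 6},
    "Sample 2":  {"MC": 47, "TE": 3, "On-Grade": 42, "Off-Grade": 8},
    "Standards": {"MC": 45, "TE": 5, "On-Grade": 46, "Off-Grade": 4},
    "Typical 1": {"MC": 48, "TE": 2, "On-Grade": 37, "Off-Grade": 13},
    "Typical 2": {"MC": 47, "TE": 3, "On-Grade": 40, "Off-Grade": 10},
    "Expo 1":    {"MC": 50, "TE": 0, "On-Grade": 47, "Off-Grade": 3},
    "Expo 2":    {"MC": 50, "TE": 0, "On-Grade": 47, "Off-Grade": 3},
}

def violations(counts, constraints=CONSTRAINTS):
    """Names of the constraints whose count falls outside [min, max]."""
    return [name for name, (lo, hi) in constraints.items() if not lo <= counts[name] <= hi]

for name, counts in SAMPLES.items():
    print(f"{name:<10} {violations(counts) or 'meets all constraints'}")
```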

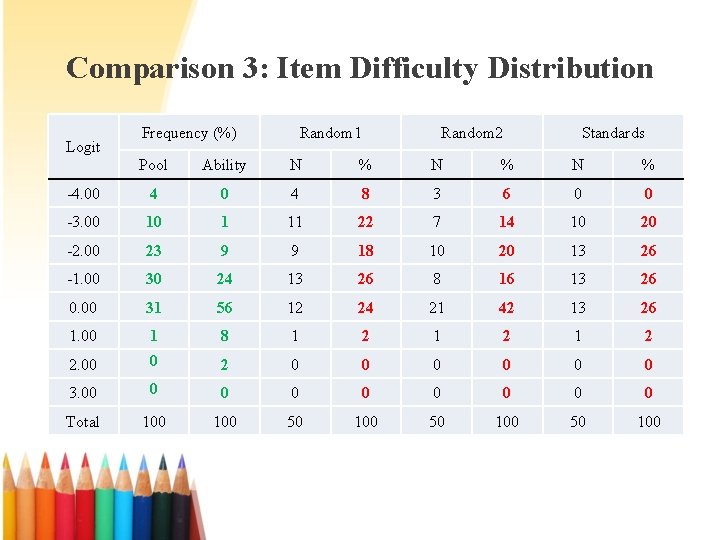

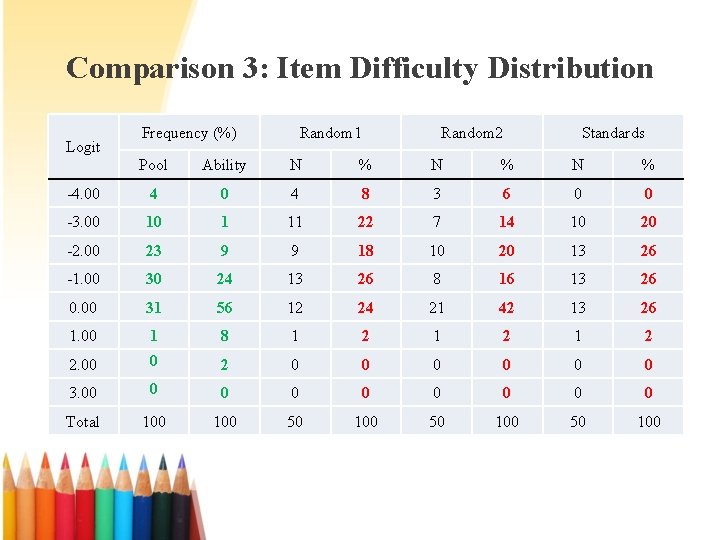

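Comparison 3: Item Difficulty Distribution

| Logit | Pool (%) | Ability (%) | Random 1 N (%) | Random 2 N (%) | Standards N (%) |
|---|---|---|---|---|---|
| -4.00 | 4 | 0 | 4 (8) | 3 (6) | 0 (0) |
| -3.00 | 10 | 1 | 11 (22) | 7 (14) | 10 (20) |
| -2.00 | 23 | 9 | 9 (18) | 10 (20) | 13 (26) |
| -1.00 | 30 | 24 | 13 (26) | 8 (16) | 13 (26) |
| 0.00 | 31 | 56 | 12 (24) | 21 (42) | 13 (26) |
| 1.00 | 1 | 8 | 1 (2) | 1 (2) | 1 (2) |
| 2.00 | 0 | 2 | 0 (0) | 0 (0) | 0 (0) |
| 3.00 | 0 | 0 | 0 (0) | 0 (0) | 0 (0) |
| Total | 100 | 100 | 50 (100) | 50 (100) | 50 (100) |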

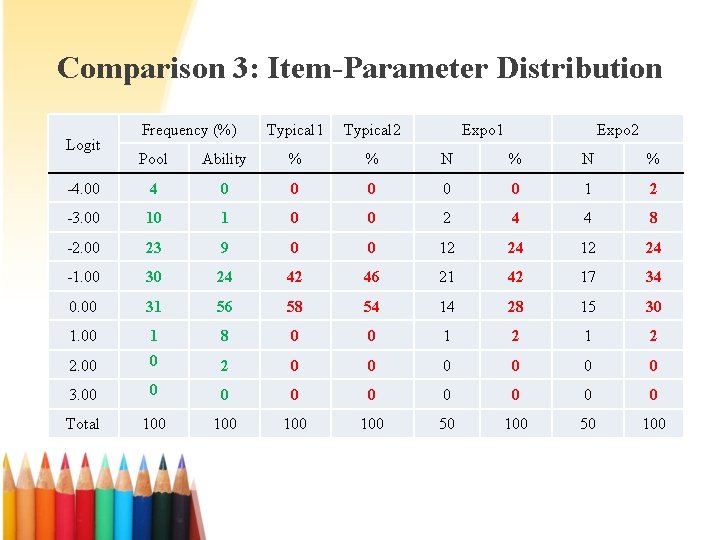

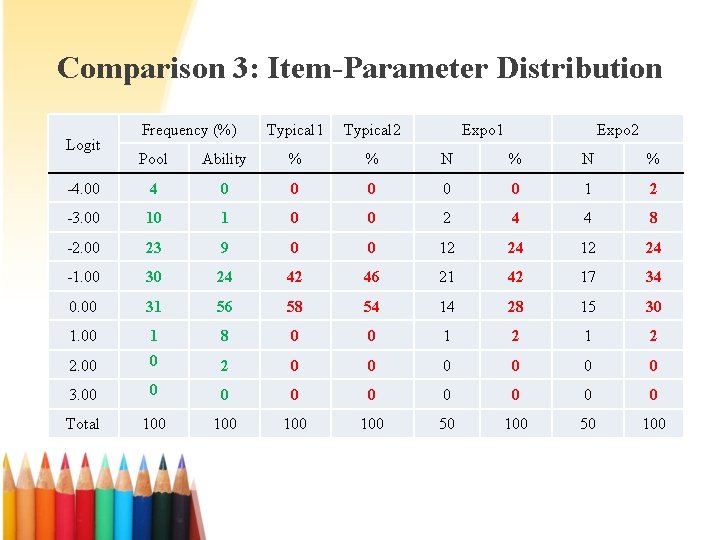

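Comparison 3: Item-Parameter Distribution

| Logit | Pool (%) | Ability (%) | Typical 1 (%) | Typical 2 (%) | Expo 1 N (%) | Expo 2 N (%) |
|---|---|---|---|---|---|---|
| -4.00 | 4 | 0 | 0 | 0 | | 1 (2) |
| -3.00 | 10 | 1 | 0 | 0 | 2 (4) | 4 (8) |
| -2.00 | 23 | 9 | 0 | 0 | 12 (24) | 12 (24) |
| -1.00 | 30 | 24 | 42 | 46 | 21 (42) | 17 (34) |
| 0.00 | 31 | 56 | 58 | 54 | 14 (28) | 15 (30) |
| 1.00 | 1 | 8 | 0 | 0 | | 1 (2) |
| 2.00 | 0 | 2 | 0 | 0 | 0 (0) | 0 (0) |
| 3.00 | 0 | 0 | 0 | 0 | 0 (0) | 0 (0) |
| Total | 100 | 100 | 100 | 100 | 50 (100) | 50 (100) |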

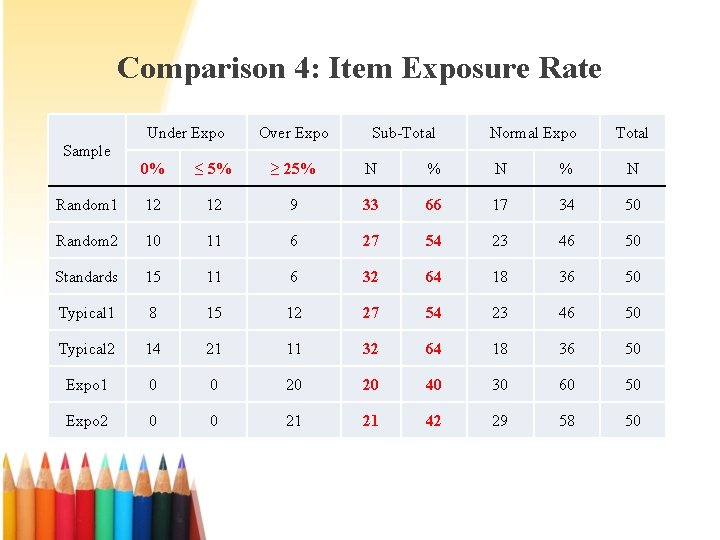

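Comparison 4: Item Exposure Rate

| Sample | Under-exposed: 0% | Under-exposed: ≤ 5% | Over-exposed: ≥ 25% | Sub-total N | Sub-total % | Normal exposure N | Normal exposure % | Total |
|---|---|---|---|---|---|---|---|---|
| Random 1 | 12 | 12 | 9 | 33 | 66 | 17 | 34 | 50 |
| Random 2 | 10 | 11 | 6 | 27 | 54 | 23 | 46 | 50 |
| Standards | 15 | 11 | 6 | 32 | 64 | 18 | 36 | 50 |
| Typical 1 | 8 | 15 | 12 | 27 | 54 | 23 | 46 | 50 |
| Typical 2 | 14 | 21 | 11 | 32 | 64 | 18 | 36 | 50 |
| Expo 1 | 0 | 0 | 20 | 20 | 40 | 30 | 60 | 50 |
| Expo 2 | 0 | 0 | 21 | 21 | 42 | 29 | 58 | 50 |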
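The table groups items by exposure rate: the share of administered tests on which an item appeared. The sketch below bins rates using the cut points in the table header: 0%, ≤ 5% and ≥ 25%. It assumes both cut points are inclusive and that everything in between counts as normal exposure. The "flagged" figure corresponds to the Sub-total column (never used + under + over). The helper names and example rates are hypothetical.

```python
from collections import Counter

def exposure_rate(times_administered, n_tests):
    """Share of administered tests on which the item appeared."""
    return times_administered / n_tests

def exposure_category(rate, under=0.05, over=0.25):
    """Bin a rate with the table's cut points (assumed inclusive):
    0 -> never used, (0, 5%] -> under-exposed, >= 25% -> over-exposed, else normal."""
    if rate == 0:
        return "never used"
    if rate <= under:
        return "under"
    if rate >= over:
        return "over"
    return "normal"

def exposure_profile(rates):
    """Counts per category, plus the flagged sub-total (never used + under + over)."""
    counts = Counter(exposure_category(r) for r in rates)
    flagged = counts["never used"] + counts["under"] + counts["over"]
    return counts, flagged

# Hypothetical exposure rates for seven items.
counts, flagged = exposure_profile([0.0, 0.03, 0.05, 0.10, 0.24, 0.25, 0.40])
print(dict(counts), flagged)
# {'never used': 1, 'under': 2, 'normal': 2, 'over': 2} 5
```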

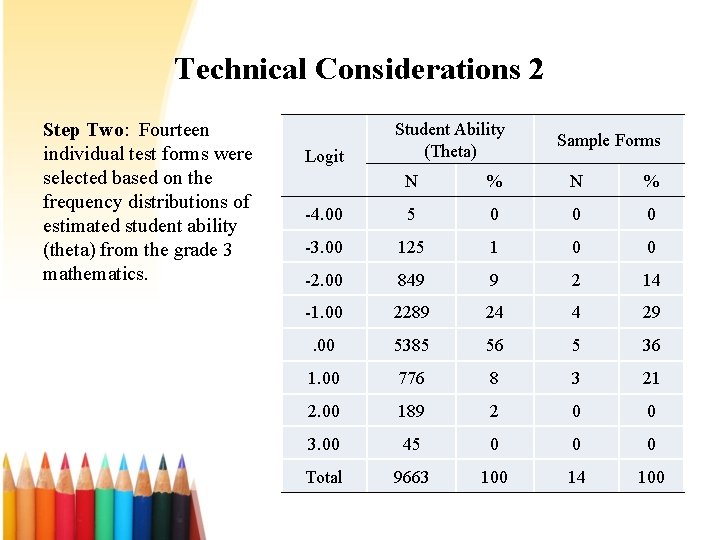

Technical Considerations 2

Step Two: Fourteen individual test forms were selected from the grade 3 mathematics assessment based on the frequency distribution of estimated student ability (theta).

| Logit | Student ability (theta) N | % | Sample forms N | % |
|---|---|---|---|---|
| -4.00 | 5 | 0 | 0 | 0 |
| -3.00 | 125 | 1 | 0 | 0 |
| -2.00 | 849 | 9 | 2 | 14 |
| -1.00 | 2289 | 24 | 4 | 29 |
| 0.00 | 5385 | 56 | 5 | 36 |
| 1.00 | 776 | 8 | 3 | 21 |
| 2.00 | 189 | 2 | 0 | 0 |
| 3.00 | 45 | 0 | 0 | 0 |
| Total | 9663 | 100 | 14 | 100 |

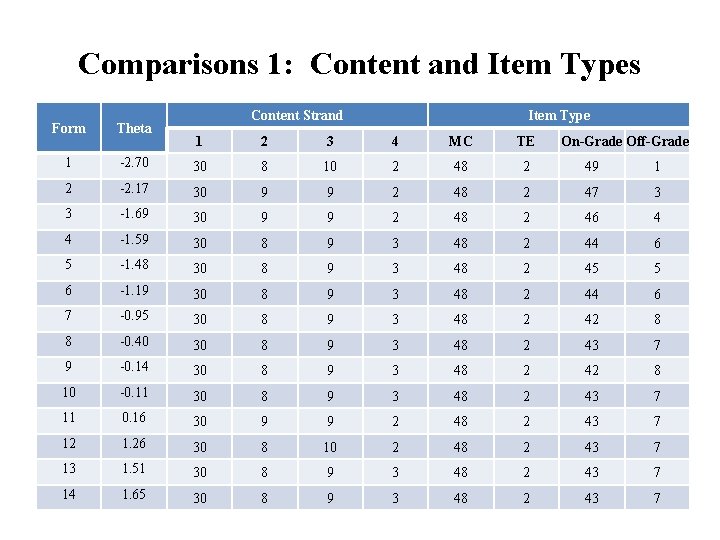

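Comparison 1: Content and Item Types

| Form | Theta | Strand 1 | Strand 2 | Strand 3 | Strand 4 | MC | TE | On-grade | Off-grade |
|---|---|---|---|---|---|---|---|---|---|
| 1 | -2.70 | 30 | 8 | 10 | 2 | 48 | 2 | 49 | 1 |
| 2 | -2.17 | 30 | 9 | 9 | 2 | 48 | 2 | 47 | 3 |
| 3 | -1.69 | 30 | 9 | 9 | 2 | 48 | 2 | 46 | 4 |
| 4 | -1.59 | 30 | 8 | 9 | 3 | 48 | 2 | 44 | 6 |
| 5 | -1.48 | 30 | 8 | 9 | 3 | 48 | 2 | 45 | 5 |
| 6 | -1.19 | 30 | 8 | 9 | 3 | 48 | 2 | 44 | 6 |
| 7 | -0.95 | 30 | 8 | 9 | 3 | 48 | 2 | 42 | 8 |
| 8 | -0.40 | 30 | 8 | 9 | 3 | 48 | 2 | 43 | 7 |
| 9 | -0.14 | 30 | 8 | 9 | 3 | 48 | 2 | 42 | 8 |
| 10 | -0.11 | 30 | 8 | 9 | 3 | 48 | 2 | 43 | 7 |
| 11 | 0.16 | 30 | 9 | 9 | 2 | 48 | 2 | 43 | 7 |
| 12 | 1.26 | 30 | 8 | 10 | 2 | 48 | 2 | 43 | 7 |
| 13 | 1.51 | 30 | 8 | 9 | 3 | 48 | 2 | 43 | 7 |
| 14 | 1.65 | 30 | 8 | 9 | 3 | 48 | 2 | 43 | 7 |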
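The adaptive forms can be compared with the strand targets (30, 8, 10, 2) and the off-grade maximum of 10 from the test specifications. The sketch below sums the absolute differences between a form's strand counts and the targets. This is a simple illustrative measure, not necessarily one the authors used. Applied to the 14 forms transcribed from the table, it gives deviations of at most two items. Every form also stays within the off-grade limit.

```python
SPEC = (30, 8, 10, 2)   # target items per content strand 1-4 (test specifications)
MAX_OFF_GRADE = 10      # maximum off-grade items per form

# (theta, strand counts, off-grade count) for forms 1-14, from the table above.
FORMS = [
    (-2.70, (30, 8, 10, 2), 1), (-2.17, (30, 9, 9, 2), 3), (-1.69, (30, 9, 9, 2), 4),
    (-1.59, (30, 8, 9, 3), 6), (-1.48, (30, 8, 9, 3), 5), (-1.19, (30, 8, 9, 3), 6),
    (-0.95, (30, 8, 9, 3), 8), (-0.40, (30, 8, 9, 3), 7), (-0.14, (30, 8, 9, 3), 8),
    (-0.11, (30, 8, 9, 3), 7), (0.16, (30, 9, 9, 2), 7), (1.26, (30, 8, 10, 2), 7),
    (1.51, (30, 8, 9, 3), 7), (1.65, (30, 8, 9, 3), 7),
]

def strand_deviation(counts, spec=SPEC):
    """Total number of items by which a form departs from the strand targets."""
    return sum(abs(c - s) for c, s in zip(counts, spec))

for form_no, (theta, counts, off) in enumerate(FORMS, start=1):
    status = "ok" if off <= MAX_OFF_GRADE else "too many off-grade items"
    print(form_no, theta, strand_deviation(counts), status)
```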

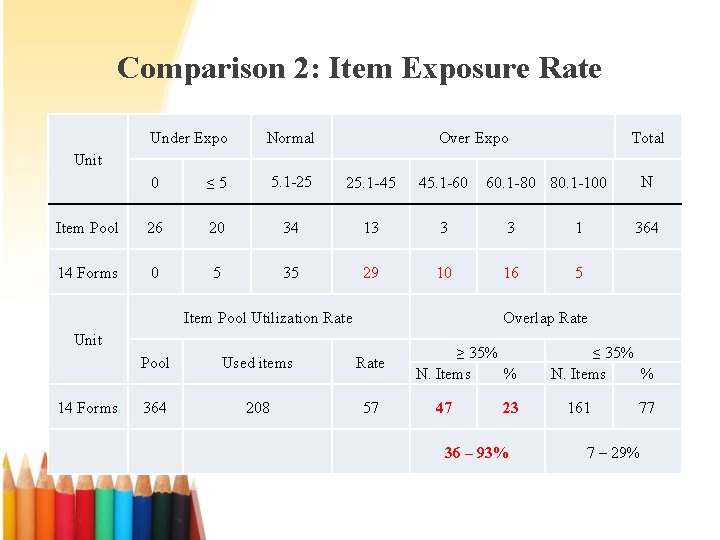

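Comparison 2: Item Exposure Rate

Distribution of item exposure rates (% of items):

| Unit | Under: 0 | Under: ≤ 5 | Normal: 5.1-25 | Over: 25.1-45 | Over: 45.1-60 | Over: 60.1-80 | Over: 80.1-100 | Total |
|---|---|---|---|---|---|---|---|---|
| Item pool | 26 | 20 | 34 | 13 | 3 | 3 | 1 | 100 |
| 14 forms | 0 | 5 | 35 | 29 | 10 | 16 | 5 | 100 |

Item pool utilization rate (unit: item pool)

| Pool size | Used items | Utilization rate |
|---|---|---|
| 364 | 208 | 57% |

Item overlap rate (unit: 14 forms)

| Overlap rate | N items | % of used items | Range of overlap rates |
|---|---|---|---|
| ≥ 35% | 47 | 23 | 36-93% |
| < 35% | 161 | 77 | 7-29% |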
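The two summary rates can be computed directly from the item lists of the administered forms. The sketch below assumes two definitions. Utilization is the number of distinct items used divided by the pool size (208/364 ≈ 57%). An item's overlap rate is the share of forms on which it appears. With 14 forms, overlap can only take values k/14. So 5 forms (36%) is the smallest rate at or above the 35% cut, and 4 forms (29%) the largest below it, which matches the ranges in the table. The toy forms and function names are hypothetical.

```python
from collections import Counter

def pool_utilization(forms, pool_size):
    """Number of distinct items used across forms, and that number as a share of the pool."""
    used = set().union(*forms)
    return len(used), len(used) / pool_size

def overlap_rates(forms):
    """For each item that appears on any form, the share of forms containing it."""
    counts = Counter(item for form in forms for item in set(form))
    return {item: n / len(forms) for item, n in counts.items()}

def split_by_overlap(rates, cut=0.35):
    """Split items into those at or above the cut and those below it."""
    high = sorted(item for item, r in rates.items() if r >= cut)
    low = sorted(item for item, r in rates.items() if r < cut)
    return high, low

# Toy example: three hypothetical forms drawn from a 10-item pool.
forms = [{1, 2, 3}, {1, 2, 4}, {1, 5, 6}]
print(pool_utilization(forms, 10))              # (6, 0.6)
print(split_by_overlap(overlap_rates(forms)))   # ([1, 2], [3, 4, 5, 6])
```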

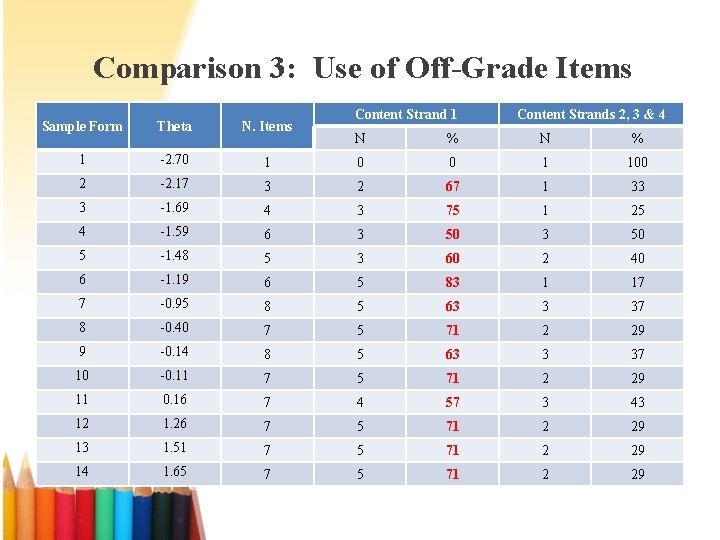

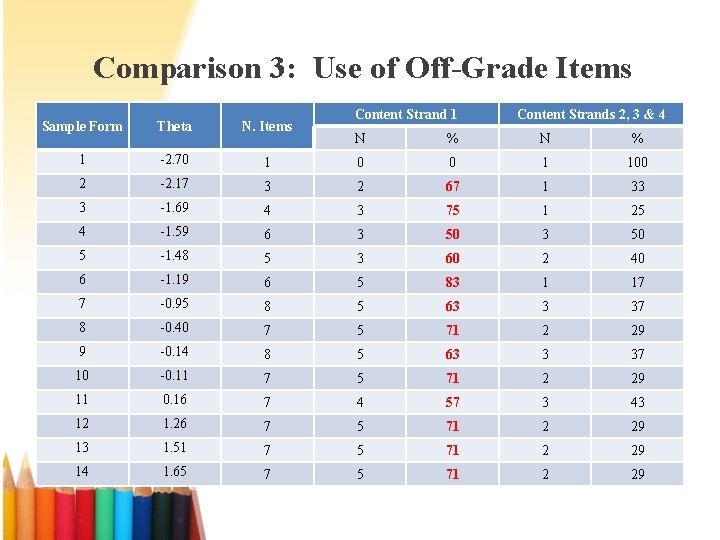

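Comparison 3: Use of Off-Grade Items

| Sample form | Theta | N off-grade items | Content Strand 1 N (%) | Content Strands 2, 3 & 4 N (%) |
|---|---|---|---|---|
| 1 | -2.70 | 1 | 0 (0) | 1 (100) |
| 2 | -2.17 | 3 | 2 (67) | 1 (33) |
| 3 | -1.69 | 4 | 3 (75) | 1 (25) |
| 4 | -1.59 | 6 | 3 (50) | 3 (50) |
| 5 | -1.48 | 5 | 3 (60) | 2 (40) |
| 6 | -1.19 | 6 | 5 (83) | 1 (17) |
| 7 | -0.95 | 8 | 5 (63) | 3 (37) |
| 8 | -0.40 | 7 | 5 (71) | 2 (29) |
| 9 | -0.14 | 8 | 5 (63) | 3 (37) |
| 10 | -0.11 | 7 | 5 (71) | 2 (29) |
| 11 | 0.16 | 7 | 4 (57) | 3 (43) |
| 12 | 1.26 | 7 | 5 (71) | 2 (29) |
| 13 | 1.51 | 7 | 5 (71) | 2 (29) |
| 14 | 1.65 | 7 | 5 (71) | 2 (29) |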

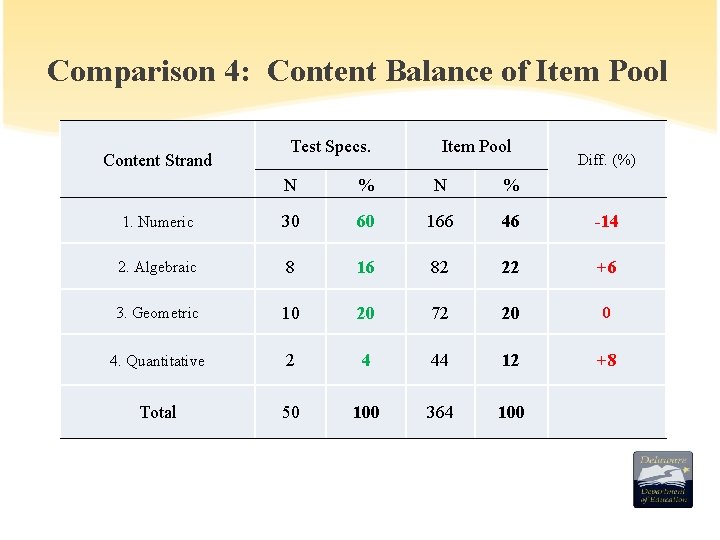

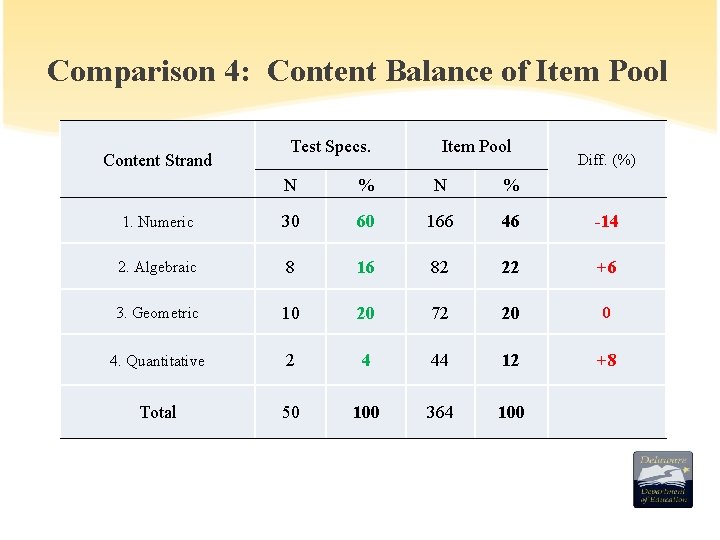

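Comparison 4: Content Balance of Item Pool

| Content Strand | Test Specs N | Test Specs % | Item Pool N | Item Pool % | Diff. (%) |
|---|---|---|---|---|---|
| 1. Numeric | 30 | 60 | 166 | 46 | -14 |
| 2. Algebraic | 8 | 16 | 82 | 22 | +6 |
| 3. Geometric | 10 | 20 | 72 | 20 | 0 |
| 4. Quantitative | 2 | 4 | 44 | 12 | +8 |
| Total | 50 | 100 | 364 | 100 | |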

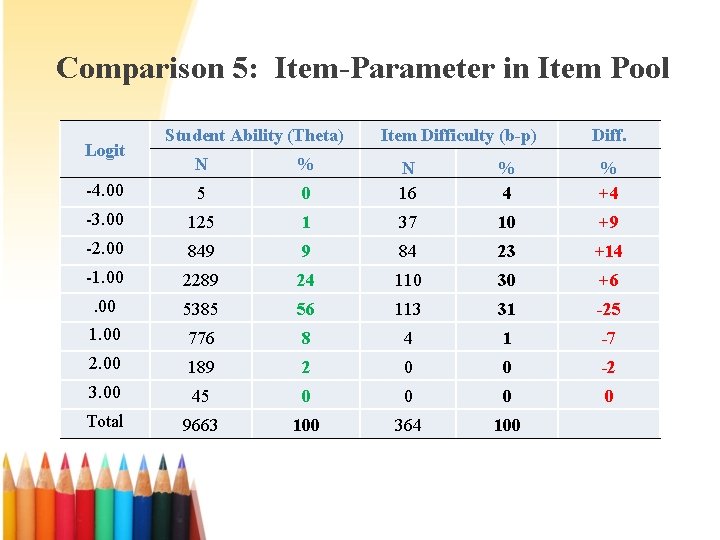

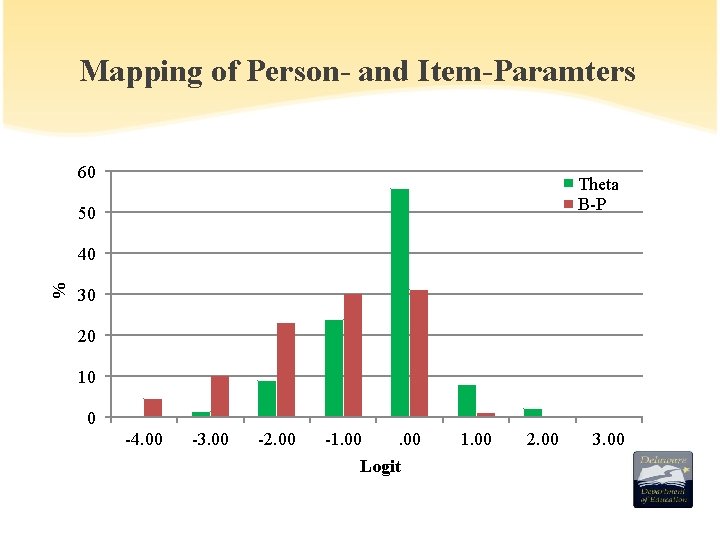

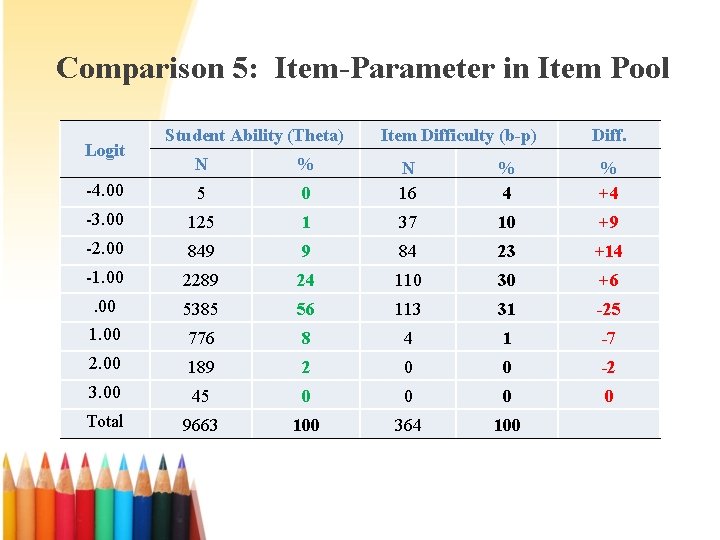

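Comparison 5: Item Parameters in the Item Pool

| Logit | Student ability (theta) N | % | Item difficulty (b-parameter) N | % | Diff. (%) |
|---|---|---|---|---|---|
| -4.00 | 5 | 0 | 16 | 4 | +4 |
| -3.00 | 125 | 1 | 37 | 10 | +9 |
| -2.00 | 849 | 9 | 84 | 23 | +14 |
| -1.00 | 2289 | 24 | 110 | 30 | +6 |
| 0.00 | 5385 | 56 | 113 | 31 | -25 |
| 1.00 | 776 | 8 | 4 | 1 | -7 |
| 2.00 | 189 | 2 | 0 | 0 | -2 |
| 3.00 | 45 | 0 | 0 | 0 | 0 |
| Total | 9663 | 100 | 364 | 100 | |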
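The Diff. column is the percentage of pool items in each logit bin minus the percentage of students in that bin, with both rounded to whole percents. A positive value means the pool holds proportionally more items than there are students at that level. The sketch below reproduces the reported percentages and differences from the raw counts. The variable names are illustrative.

```python
LOGITS = [-4, -3, -2, -1, 0, 1, 2, 3]
THETA_N = [5, 125, 849, 2289, 5385, 776, 189, 45]  # students per logit bin
B_N = [16, 37, 84, 110, 113, 4, 0, 0]              # pool items per logit bin (b-parameter)

def percentages(counts):
    """Whole-number percentage of the total in each bin."""
    total = sum(counts)
    return [round(100 * n / total) for n in counts]

theta_pct = percentages(THETA_N)
b_pct = percentages(B_N)
diff = [b - t for b, t in zip(b_pct, theta_pct)]  # items % minus students %

for logit, t, b, d in zip(LOGITS, theta_pct, b_pct, diff):
    print(f"{logit:+d}  students {t:3d}%  items {b:3d}%  diff {d:+d}")
```

The largest gap is at logit 0.00, where 56% of students but only 31% of items fall. This is the mismatch shown in the person/item mapping.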

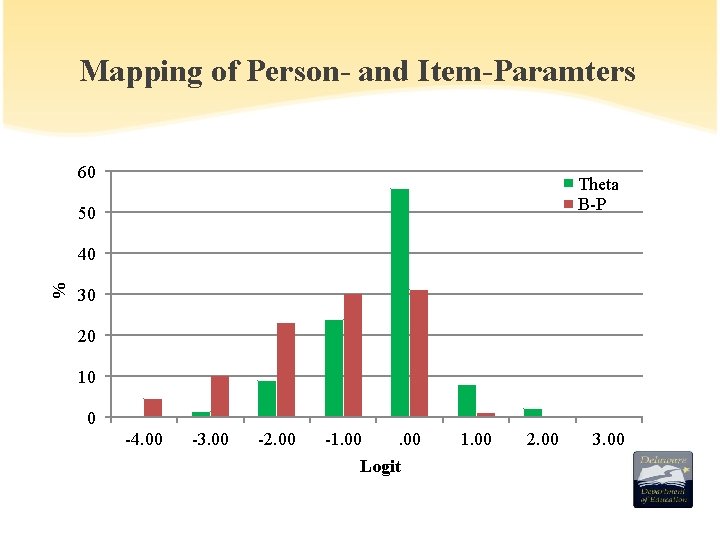

Figure: Mapping of Person- and Item-Parameters (percentage of students by theta and of items by b-parameter, plotted by logit from -4.00 to 3.00)

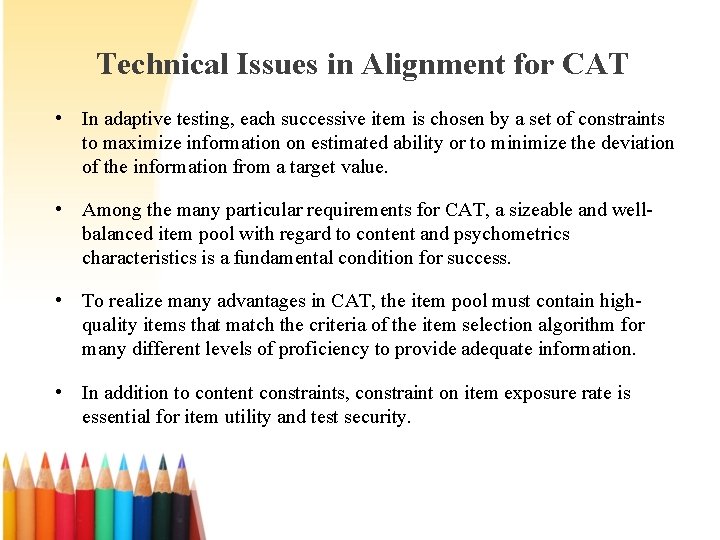

Technical Issues in Alignment for CAT

- In adaptive testing, each successive item is chosen, subject to a set of constraints, to maximize information at the current ability estimate or to minimize the deviation of the information from a target value.
- Among the many requirements for CAT, a sizeable item pool that is well balanced with regard to content and psychometric characteristics is a fundamental condition for success.
- To realize the advantages of CAT, the item pool must contain high-quality items that meet the criteria of the item-selection algorithm at many different proficiency levels, so that adequate information is provided across the ability range.
- In addition to content constraints, a constraint on item exposure rate is essential for item utility and test security.
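Constrained item selection of the kind described above can be sketched as maximum-information selection over the eligible items. The slides do not name the measurement model or the selection algorithm. The sketch assumes a Rasch model, where item information is P(1 - P). An item is eligible when it has not been given, its strand quota is not yet filled, and its exposure rate is below a cap. The 0.25 cap mirrors the 25% over-exposure cut used earlier. `Item`, `select_next_item`, and the four-item pool are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Item:
    item_id: str
    b: float          # difficulty in logits (Rasch model assumed)
    strand: int       # content strand 1-4
    exposure: float   # current exposure rate, 0.0-1.0

def rasch_information(theta: float, b: float) -> float:
    """Fisher information of a Rasch item at ability theta: P(1 - P)."""
    p = 1.0 / (1.0 + math.exp(-(theta - b)))
    return p * (1.0 - p)

def select_next_item(theta_hat, pool, administered, strand_counts, strand_max, exposure_cap=0.25):
    """Pick the eligible item with maximum information at theta_hat, or None.

    Eligible: not yet administered, strand quota not yet filled, and exposure
    rate below exposure_cap."""
    eligible = [
        item for item in pool
        if item.item_id not in administered
        and strand_counts.get(item.strand, 0) < strand_max[item.strand]
        and item.exposure < exposure_cap
    ]
    if not eligible:
        return None
    return max(eligible, key=lambda item: rasch_information(theta_hat, item.b))

# Hypothetical four-item pool; strand maxima taken from the test specifications.
POOL = [
    Item("A", -2.0, 1, 0.10),
    Item("B", 0.0, 2, 0.10),
    Item("C", 0.5, 1, 0.10),
    Item("D", 2.0, 3, 0.10),
]
STRAND_MAX = {1: 30, 2: 8, 3: 10, 4: 2}

print(select_next_item(0.4, POOL, set(), {}, STRAND_MAX).item_id)       # C
print(select_next_item(0.4, POOL, set(), {1: 30}, STRAND_MAX).item_id)  # B
```

Once strand 1 has reached its quota, the best-matching item (C) is skipped and the next most informative eligible item (B) is chosen. A pool that is short of items in some strand or ability range forces more of these second-best choices, which is how pool composition feeds into exposure and alignment.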

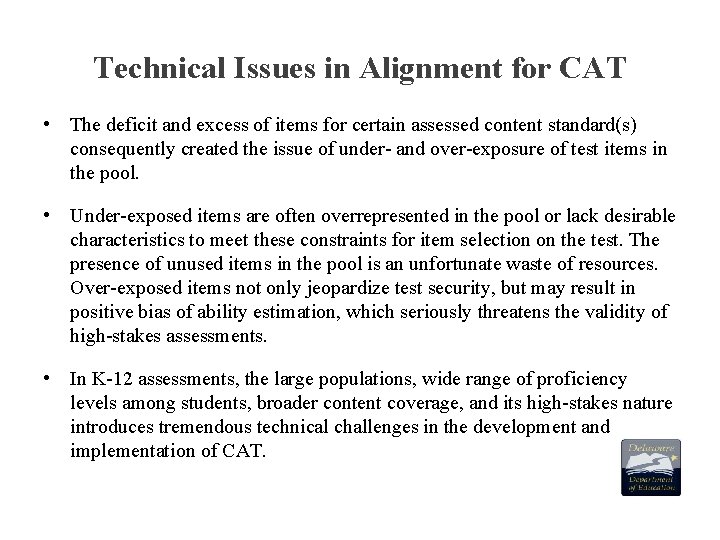

Technical Issues in Alignment for CAT

- A deficit or excess of items for particular content standards leads to under- and over-exposure of items in the pool.
- Under-exposed items often belong to content areas that are overrepresented in the pool, or they lack the characteristics needed to satisfy the item-selection constraints. Unused items in the pool are an unfortunate waste of resources. Over-exposed items not only jeopardize test security but may also positively bias ability estimates, which seriously threatens the validity of high-stakes assessments.
- In K-12 assessments, several factors introduce tremendous technical challenges for the development and implementation of CAT: large populations, a wide range of student proficiency levels, broad content coverage, and high stakes.

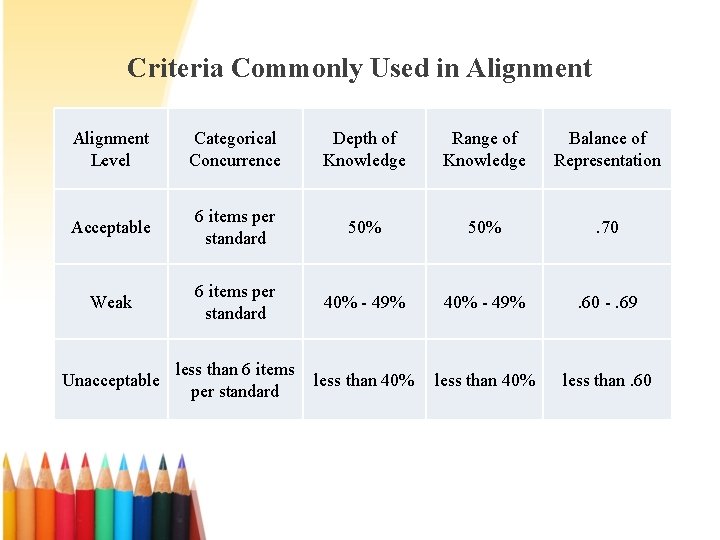

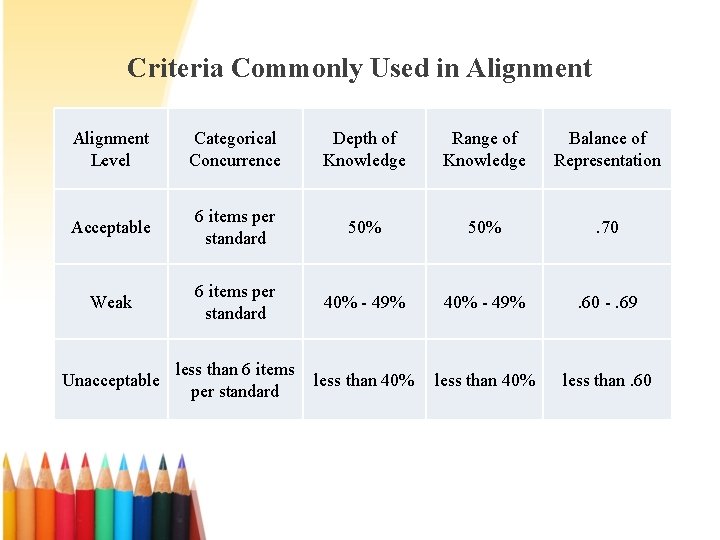

Criteria Commonly Used in Alignment

| Level | Categorical Concurrence | Depth of Knowledge | Range of Knowledge | Balance of Representation |
|---|---|---|---|---|
| Acceptable | At least 6 items per standard | 50% or more | 50% or more | .70 or higher |
| Weak | 6 items per standard | 40%-49% | 40%-49% | .60-.69 |
| Unacceptable | Fewer than 6 items per standard | Less than 40% | Less than 40% | Less than .60 |
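The slides list the cut points but not how the statistics behind them are computed. These thresholds match those used in Webb's alignment method. Assuming that method, the balance-of-representation index for one standard is B = 1 - (Σ_k |1/O - I_k/H|) / 2. Here O is the number of objectives hit by at least one item, I_k is the number of items hitting objective k, and H is the total number of items hitting the standard. The sketch computes B and maps any statistic to the three levels. The function names and example values are hypothetical.

```python
def balance_index(items_per_objective):
    """Balance-of-representation index for one standard (Webb's formula, assumed).

    items_per_objective: number of items matched to each objective under the
    standard. Only objectives hit by at least one item enter the index."""
    hits = [n for n in items_per_objective if n > 0]
    if not hits:
        raise ValueError("no items matched to this standard")
    o, h = len(hits), sum(hits)
    return 1 - sum(abs(1 / o - n / h) for n in hits) / 2

def classify(value, acceptable, weak):
    """Map a statistic to the Acceptable / Weak / Unacceptable levels."""
    if value >= acceptable:
        return "Acceptable"
    if value >= weak:
        return "Weak"
    return "Unacceptable"

# Hypothetical standard with three objectives hit by 4, 1 and 1 items.
b = balance_index([4, 1, 1])
print(round(b, 3), classify(b, 0.70, 0.60))   # 0.667 Weak
# Hypothetical depth-of-knowledge consistency of 45%.
print(classify(0.45, 0.50, 0.40))             # Weak
```

These cut points were developed for fixed forms. For an adaptive test, the same statistics could be computed on the pool, on a sample of items, or on individual forms, and the results may differ.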

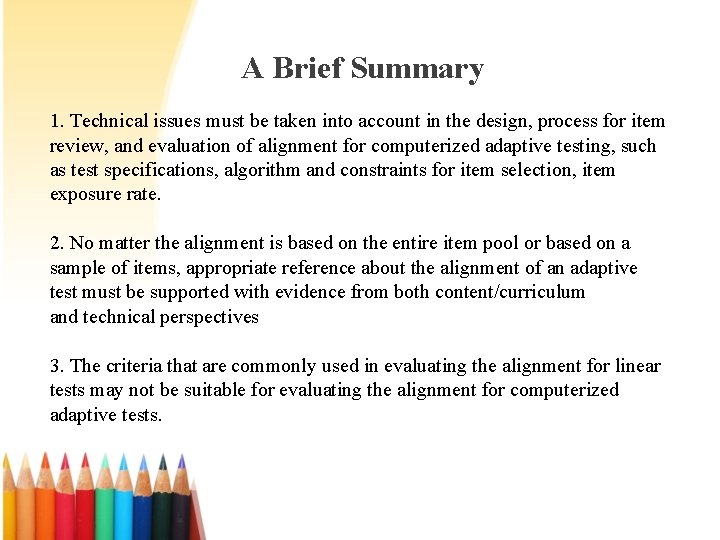

A Brief Summary

1. Technical issues such as test specifications, the item-selection algorithm and its constraints, and item exposure rate must be taken into account in the design, the item-review process, and the evaluation of alignment for computerized adaptive testing.
2. Whether the alignment is based on the entire item pool or on a sample of items, appropriate inferences about the alignment of an adaptive test must be supported with evidence from both content/curriculum and technical perspectives.
3. The criteria commonly used to evaluate the alignment of linear tests may not be suitable for evaluating the alignment of computerized adaptive tests.