TCP Veno: TCP Enhancement for Transmission Over Wireless Access Networks

- Slides: 55

TCP Veno: TCP Enhancement for Transmission Over Wireless Access Networks

IEEE Journal on Selected Areas in Communications, Vol. 21, No. 2, February 2003

Speaker: Chen Yo. Chuan

May 14, 2003

Outline

- Introduction
  - TCP Reno, TCP Vegas
- TCP Veno algorithm
- Performance evaluation of TCP Veno
- Concluding remarks and future work

Introduction

- Wireless communication has been making significant progress
  - It usually relies on wired backbone networks
  - Many applications on these networks run over TCP/IP
- Transmission Control Protocol (TCP)
  - A reliable, connection-oriented protocol
  - Implements flow control (sliding window)
- TCP Reno is the most popular variant in real networks
  - Includes slow start (SS), congestion avoidance (CA), fast retransmit, and related algorithms

Introduction

- Reno treats packet loss as a manifestation of network congestion
- In a wireless environment, packet loss is often induced by noise, link errors, and similar causes
- Tackling this problem takes a two-part solution
  - How to distinguish between random loss and congestion loss?
  - How to use that information to refine the congestion-window adjustment process in Reno?
- TCP Veno (a modification combining Vegas and Reno)

Related work

- TCP Reno
- TCP Vegas

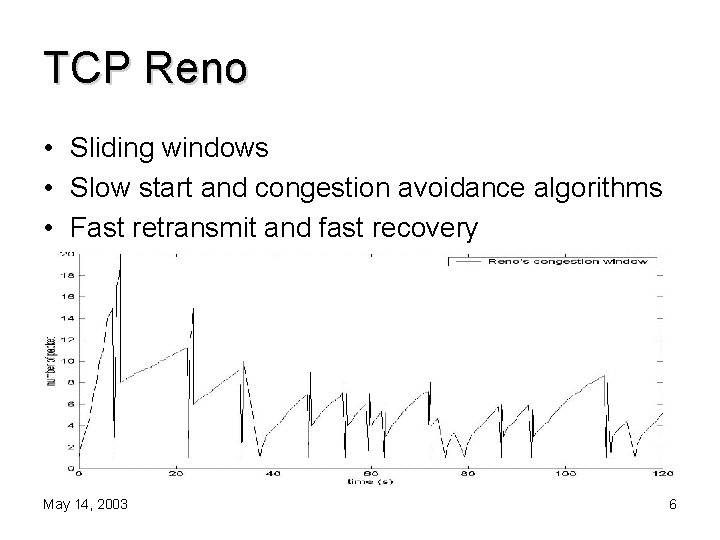

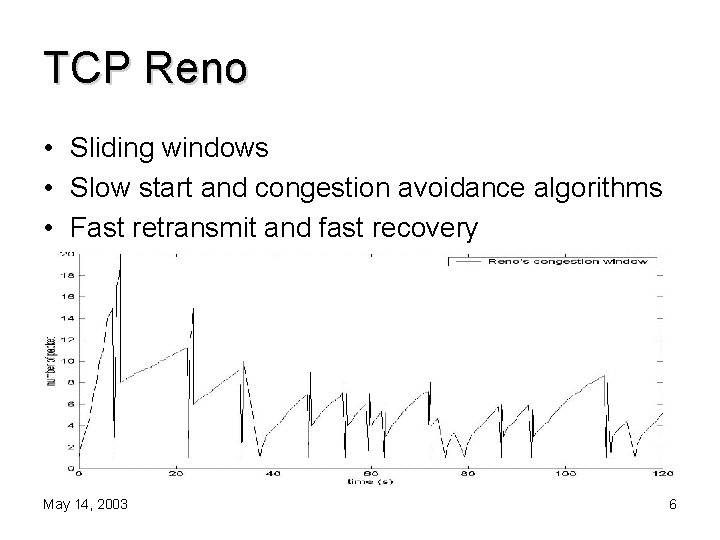

TCP Reno

- Sliding windows
- Slow start and congestion avoidance algorithms
- Fast retransmit and fast recovery

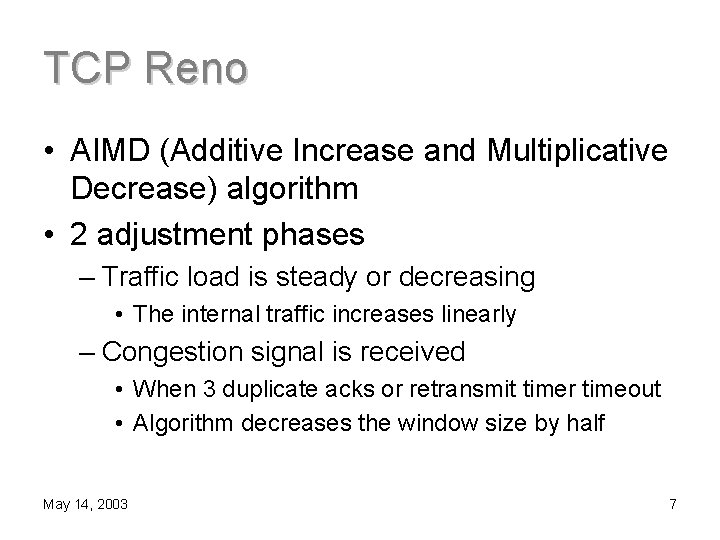

TCP Reno

- AIMD (Additive Increase, Multiplicative Decrease) algorithm
- Two adjustment phases
  - Traffic load is steady or decreasing
    - The window increases linearly
  - A congestion signal is received
    - Triggered by 3 duplicate ACKs or a retransmission timeout
    - The algorithm halves the window size
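The two AIMD phases above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the window is counted in whole segments, it is updated once per round trip, and the function names `aimd_update` and `simulate` are hypothetical rather than taken from the paper or from any TCP stack.

```python
def aimd_update(cwnd: float, congestion_signal: bool) -> float:
    """Return the congestion window (in segments) after one round trip."""
    if congestion_signal:
        # Multiplicative decrease: halve the window, never below one segment.
        return max(cwnd / 2.0, 1.0)
    # Additive increase: grow by one segment per round-trip time.
    return cwnd + 1.0


def simulate(rounds: int, loss_rounds: set, cwnd: float = 1.0) -> list:
    """Trace the window over `rounds` round trips; `loss_rounds` marks
    the (assumed) round indices in which a congestion signal arrives."""
    trace = []
    for r in range(rounds):
        cwnd = aimd_update(cwnd, r in loss_rounds)
        trace.append(cwnd)
    return trace


if __name__ == "__main__":
    # A signal in round 2 produces the familiar sawtooth.
    print(simulate(6, {2}, cwnd=4.0))
```

Running the sketch with a single signal shows the linear climb, the halving, and the linear climb again.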

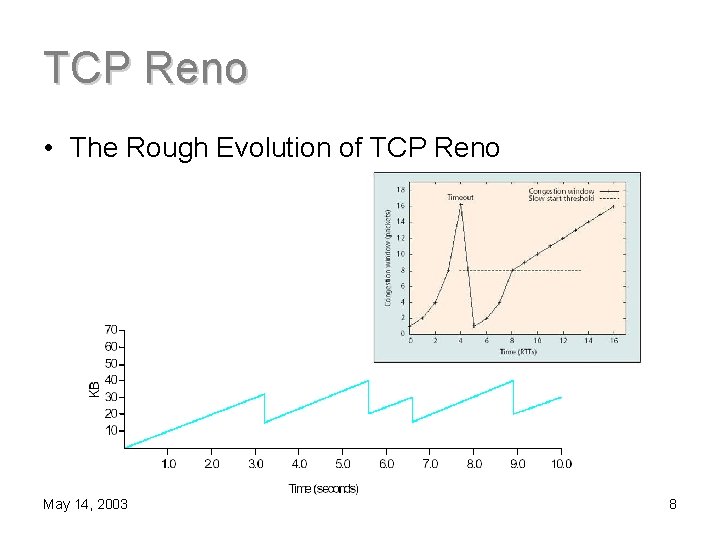

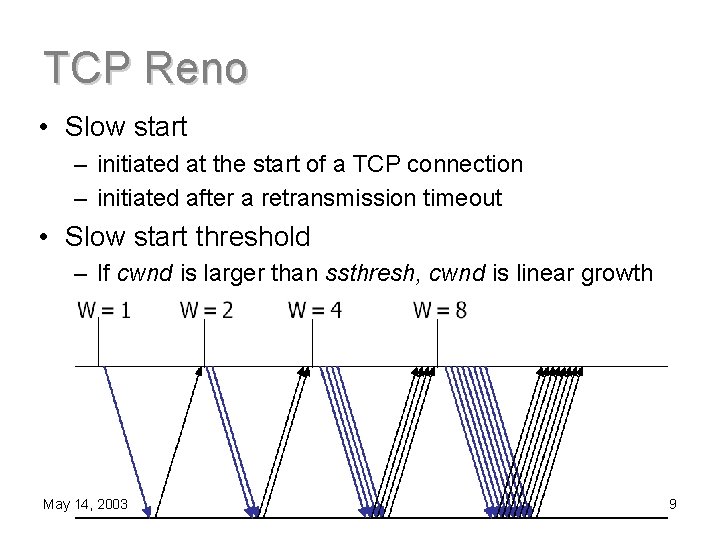

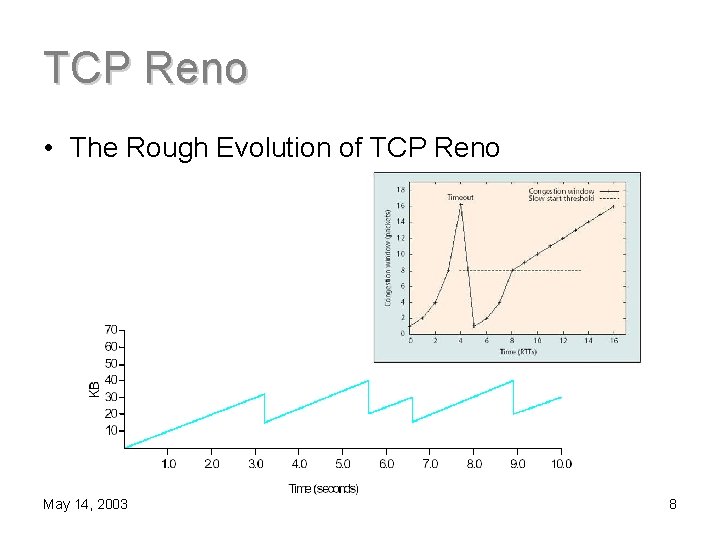

TCP Reno

- The rough evolution of TCP Reno

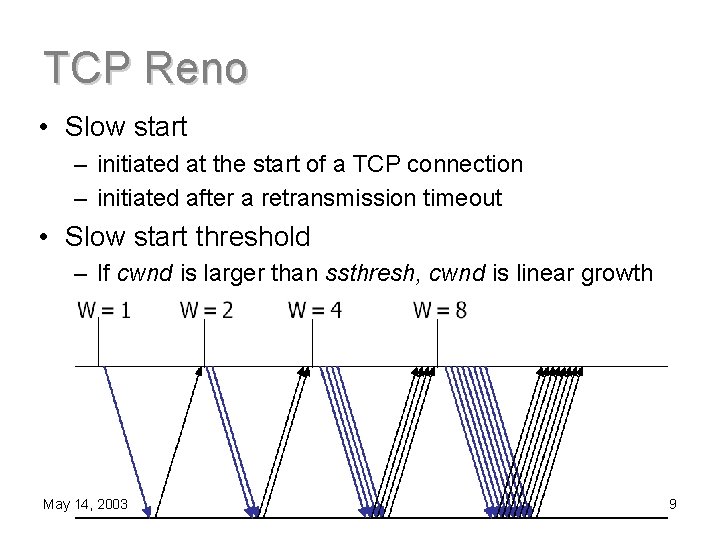

TCP Reno

- Slow start
  - Initiated at the start of a TCP connection
  - Initiated after a retransmission timeout
- Slow start threshold (ssthresh)
  - Once cwnd is larger than ssthresh, cwnd grows linearly
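The switch between slow start and linear growth can be sketched per ACK as follows. The function names are hypothetical, the window is counted in segments, and the timeout rule (ssthresh set to half the window, floored at 2 segments, with cwnd reset to 1) follows the common Reno description rather than anything stated on the slide.

```python
from typing import Tuple


def on_new_ack(cwnd: float, ssthresh: float) -> float:
    """Window growth for one ACK of new data (window in segments)."""
    if cwnd < ssthresh:
        # Slow start: +1 segment per ACK, so cwnd doubles every RTT.
        return cwnd + 1.0
    # Congestion avoidance: +1/cwnd per ACK, about +1 segment per RTT.
    return cwnd + 1.0 / cwnd


def on_timeout(cwnd: float) -> Tuple[float, float]:
    """Return (new_cwnd, new_ssthresh) after a retransmission timeout.

    Assumption: ssthresh becomes half the current window, at least 2
    segments, and slow start restarts from a window of 1 segment.
    """
    ssthresh = max(cwnd / 2.0, 2.0)
    return 1.0, ssthresh
```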

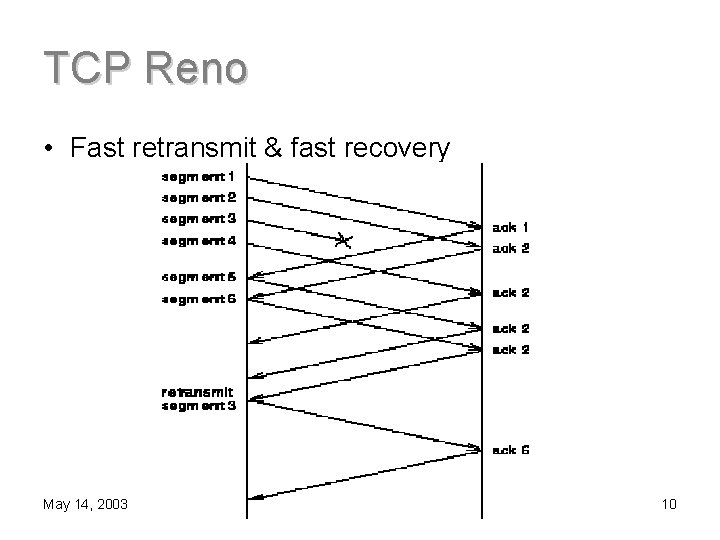

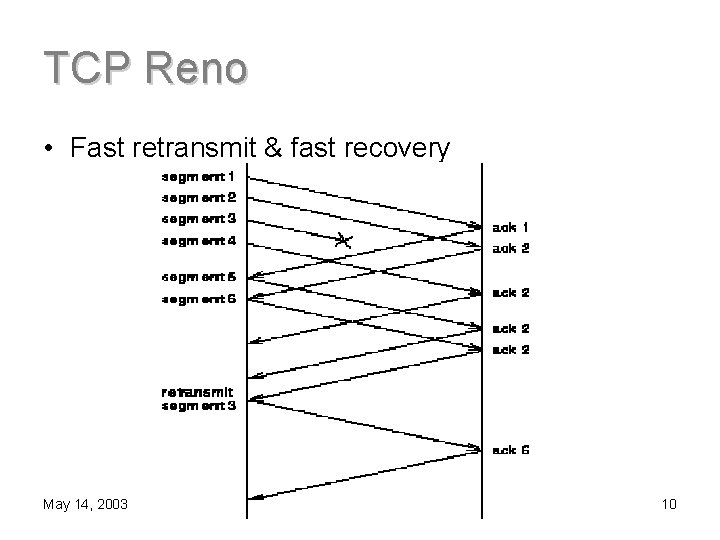

TCP Reno

- Fast retransmit and fast recovery

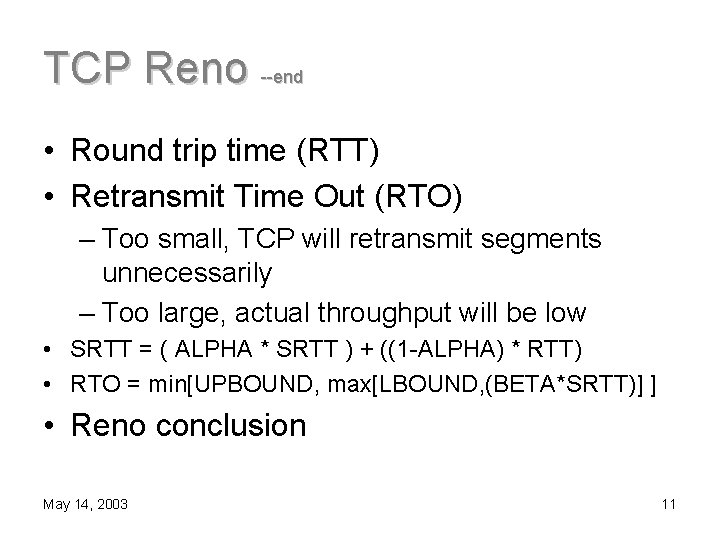

TCP Reno (end)

- Round-trip time (RTT)
- Retransmission timeout (RTO)
  - Too small: TCP retransmits segments unnecessarily
  - Too large: actual throughput is low
- SRTT = (ALPHA * SRTT) + ((1 - ALPHA) * RTT)
- RTO = min[UPBOUND, max[LBOUND, (BETA * SRTT)]]
- Reno conclusion
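The two formulas above translate directly into code. The sketch below is illustrative: the slide gives no values for ALPHA, BETA, LBOUND, or UPBOUND, so the defaults here (0.9, 2.0, 1 s, 60 s) are assumed example values, and the function names are hypothetical.

```python
def update_srtt(srtt: float, rtt_sample: float, alpha: float = 0.9) -> float:
    """Smoothed RTT: SRTT = ALPHA * SRTT + (1 - ALPHA) * RTT."""
    return alpha * srtt + (1.0 - alpha) * rtt_sample


def compute_rto(srtt: float, beta: float = 2.0,
                lbound: float = 1.0, upbound: float = 60.0) -> float:
    """RTO = min[UPBOUND, max[LBOUND, BETA * SRTT]], all in seconds."""
    return min(upbound, max(lbound, beta * srtt))


if __name__ == "__main__":
    srtt = 0.100                       # assumed initial estimate: 100 ms
    for sample in (0.120, 0.300, 0.110):
        srtt = update_srtt(srtt, sample)
        print(f"sample={sample:.3f}s srtt={srtt:.4f}s rto={compute_rto(srtt):.2f}s")
```

A larger ALPHA makes SRTT react more slowly to a single outlier sample, while the two bounds keep the RTO from becoming either too small or too large.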

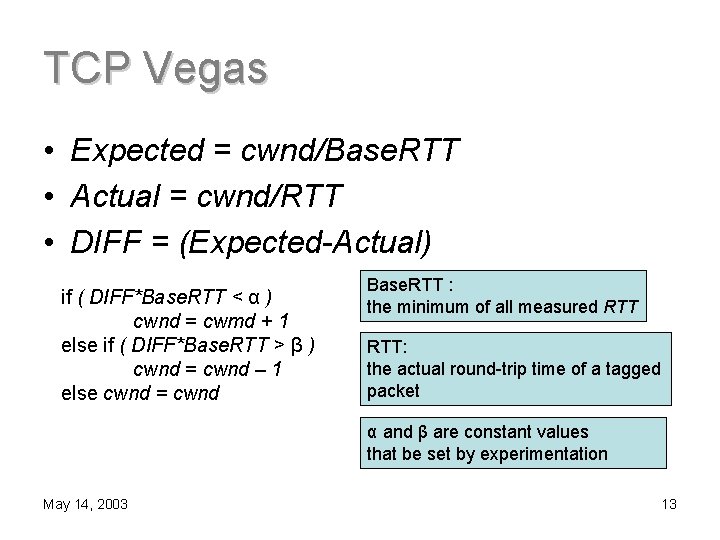

TCP Vegas

- Basic ideas
  - Before packet loss occurs, detect the early stage of congestion in the routers between source and destination
  - Additively decrease the sending rate when incipient congestion is detected

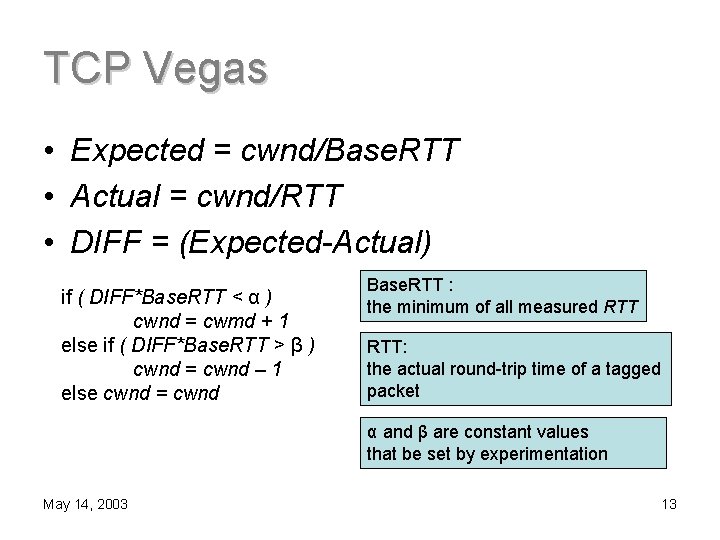

TCP Vegas

- Expected = cwnd / BaseRTT
- Actual = cwnd / RTT
- DIFF = Expected - Actual
- Window adjustment
  - if DIFF * BaseRTT < α: cwnd = cwnd + 1
  - else if DIFF * BaseRTT > β: cwnd = cwnd - 1
  - else: cwnd is left unchanged
- BaseRTT: the minimum of all measured round-trip times
- RTT: the actual round-trip time of a tagged packet
- α and β are constants set by experimentation
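A minimal sketch of the Vegas adjustment rule follows. The slide only says that α and β are set by experimentation, so the defaults α = 1 and β = 3 are assumed example values, and `vegas_update` is a hypothetical name.

```python
def vegas_update(cwnd: float, base_rtt: float, rtt: float,
                 alpha: float = 1.0, beta: float = 3.0) -> float:
    """Return the next cwnd (segments) from the Vegas DIFF estimate."""
    expected = cwnd / base_rtt      # rate if no queueing occurred
    actual = cwnd / rtt             # rate actually achieved
    diff = expected - actual
    backlog = diff * base_rtt       # estimated segments queued in the path
    if backlog < alpha:
        return cwnd + 1             # too little data in flight: speed up
    if backlog > beta:
        return cwnd - 1             # queue building up: back off
    return cwnd                     # inside the target band: hold


if __name__ == "__main__":
    print(vegas_update(10, base_rtt=0.100, rtt=0.100))
    print(vegas_update(10, base_rtt=0.100, rtt=0.125))
    print(vegas_update(20, base_rtt=0.100, rtt=0.200))
```

The three calls exercise the increase, hold, and decrease branches respectively.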

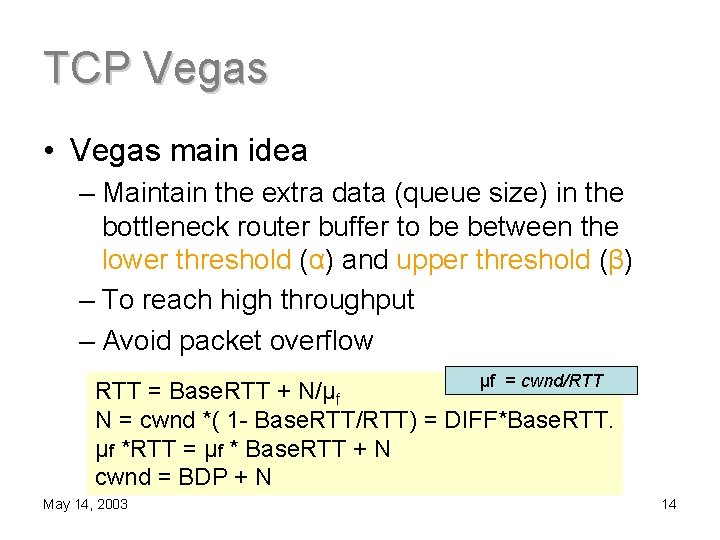

TCP Vegas

- Vegas main idea
  - Keep the extra data (queue size N) in the bottleneck router buffer between the lower threshold (α) and the upper threshold (β)
  - Reach high throughput
  - Avoid buffer overflow
- Backlog N, with BDP = μf · BaseRTT:

$$\mu_f = \frac{cwnd}{RTT}, \qquad RTT = BaseRTT + \frac{N}{\mu_f}$$

$$N = cwnd \left(1 - \frac{BaseRTT}{RTT}\right) = DIFF \cdot BaseRTT$$

$$\mu_f \cdot RTT = \mu_f \cdot BaseRTT + N \quad\Longrightarrow\quad cwnd = BDP + N$$

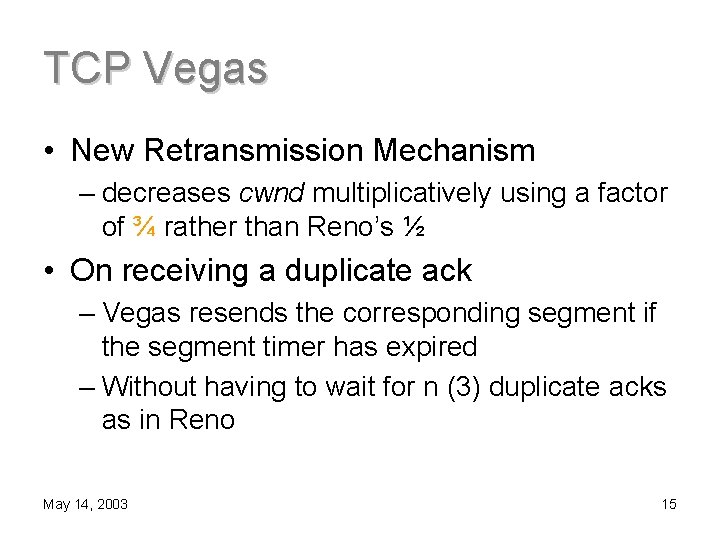

TCP Vegas

- New retransmission mechanism
  - Decreases cwnd multiplicatively by a factor of ¾ rather than Reno's ½
- On receiving a duplicate ACK
  - Vegas resends the corresponding segment if the segment timer has expired
  - It does not have to wait for n (3) duplicate ACKs as in Reno

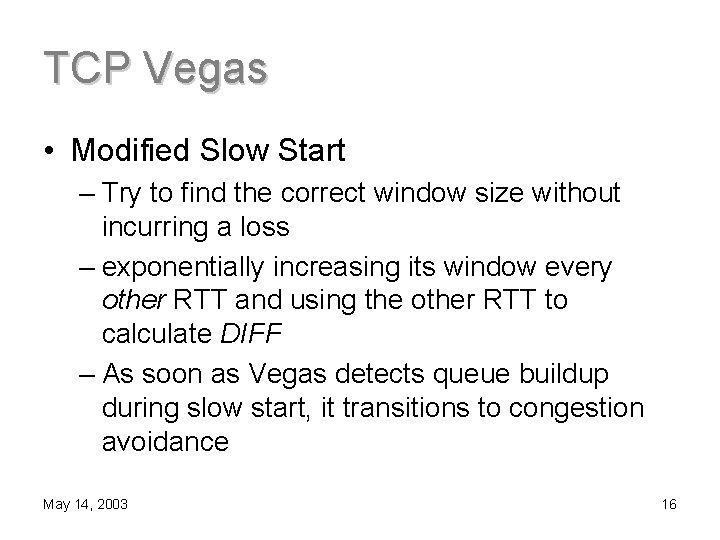

TCP Vegas

- Modified slow start
  - Tries to find the correct window size without incurring a loss
  - Increases the window exponentially every other RTT and uses the RTT in between to calculate DIFF
  - As soon as Vegas detects queue buildup during slow start, it transitions to congestion avoidance

TCP Vegas

- Problems with TCP Vegas
  - Asymmetry
  - Rerouting problem
  - Persistent congestion

TCP Veno algorithm

- Distinguishing between congestive and non-congestive (random) states
- N is used as an indication of whether the connection is in a congestive state
- N < β when packet loss is detected
  - Veno assumes the loss is random
- N ≥ β when packet loss is detected
  - Veno assumes the loss is due to congestion
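The classification rule above can be sketched as a small function. The backlog is computed as N = cwnd · (1 − BaseRTT/RTT), as in the Vegas slides. The slide does not give a value for β, so β = 3 is an assumed default, and the function names are hypothetical.

```python
def backlog(cwnd: float, base_rtt: float, rtt: float) -> float:
    """Estimated backlog N = cwnd * (1 - BaseRTT / RTT), in segments."""
    return cwnd * (1.0 - base_rtt / rtt)


def classify_loss(cwnd: float, base_rtt: float, rtt: float,
                  beta: float = 3.0) -> str:
    """Label a detected loss as 'random' (N < beta) or 'congestion'."""
    n = backlog(cwnd, base_rtt, rtt)
    return "random" if n < beta else "congestion"


if __name__ == "__main__":
    # RTT equal to BaseRTT: no queueing, so the loss is treated as random.
    print(classify_loss(10, base_rtt=0.060, rtt=0.060))
    # RTT twice BaseRTT: half the window is queued, so congestion is assumed.
    print(classify_loss(20, base_rtt=0.060, rtt=0.120))
```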

TCP Veno algorithm

- In Vegas, BaseRTT is continually updated throughout the TCP connection
  - It holds the minimum RTT collected so far
- In this paper, BaseRTT is reset whenever packet loss is detected
  - Either due to a timeout or duplicate ACKs
- Purpose: to cope with BaseRTT changing from time to time

TCP Veno algorithm

- Congestion control based on connection state
- Slow start algorithm (cwnd < ssthresh)
  - Employs the same algorithm as Reno
- Additive increase algorithm (cwnd > ssthresh)
  - In Reno, cwnd is increased by 1 after each RTT
  - That is, on each new ACK, cwnd is set to cwnd + 1/cwnd

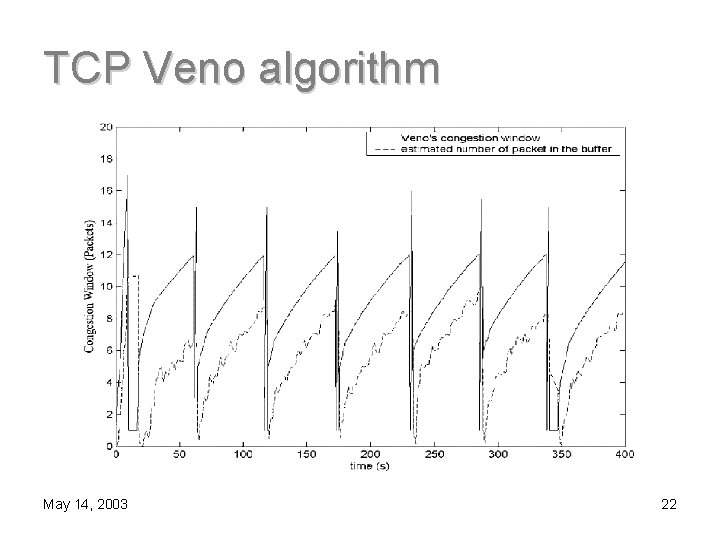

TCP Veno algorithm

- Additive increase algorithm
- Goal: let the connection stay in the operating region longer
  - if DIFF * BaseRTT < β (available bandwidth under-utilized): cwnd = cwnd + 1/cwnd on every new ACK received
  - else if DIFF * BaseRTT ≥ β (available bandwidth fully utilized): cwnd = cwnd + 1/cwnd on every other new ACK received
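One way to realize "every other new ACK" is a toggle that skips alternate increments while the backlog is at or above β. The class below is a sketch under that assumption: the class name, the toggle, and the default β = 3 are illustrative choices, not details given on the slide.

```python
class VenoIncrease:
    """Sketch of Veno's additive-increase phase (window in segments)."""

    def __init__(self, cwnd: float, beta: float = 3.0):
        self.cwnd = cwnd
        self.beta = beta
        self._skip_next = False   # True when the next ACK must not grow cwnd

    def on_new_ack(self, n: float) -> float:
        """Update cwnd for one new ACK given the backlog estimate n."""
        if n < self.beta:
            # Bandwidth under-utilized: grow on every ACK, as Reno does.
            self.cwnd += 1.0 / self.cwnd
            self._skip_next = False
        elif self._skip_next:
            # Bandwidth fully utilized: this ACK is the skipped one.
            self._skip_next = False
        else:
            # Bandwidth fully utilized: grow now, skip the following ACK.
            self.cwnd += 1.0 / self.cwnd
            self._skip_next = True
        return self.cwnd
```

With the backlog held at or above β the window grows at roughly half Reno's rate, which is what keeps the connection in the operating region longer.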

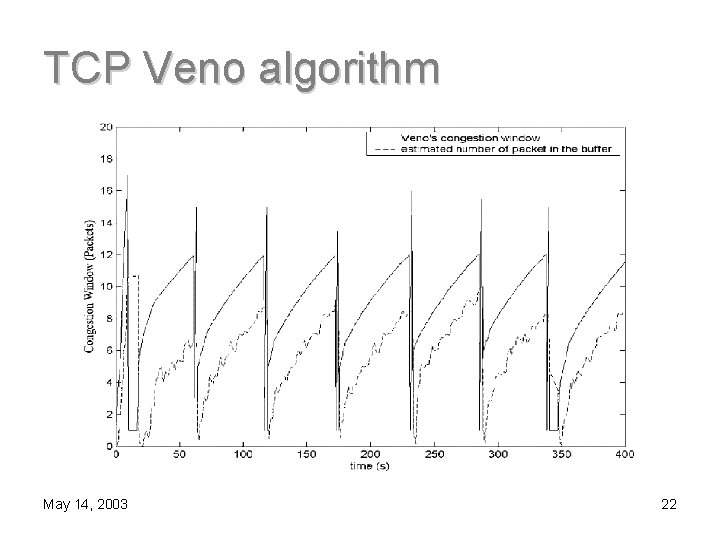

TCP Veno algorithm

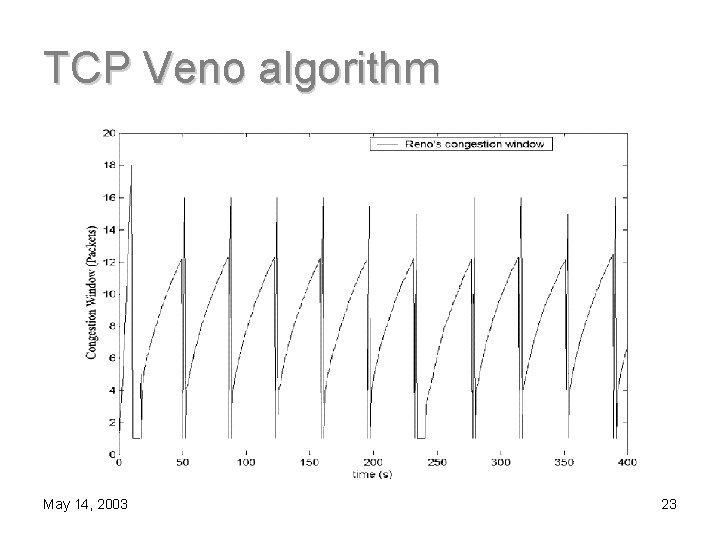

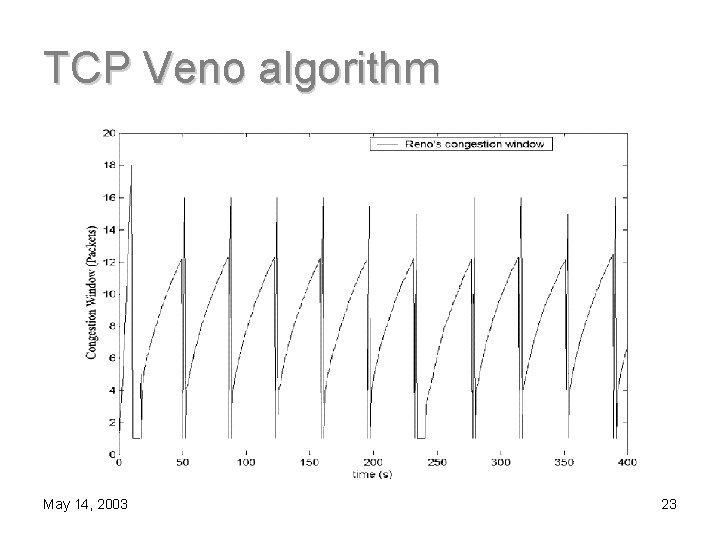

TCP Veno algorithm

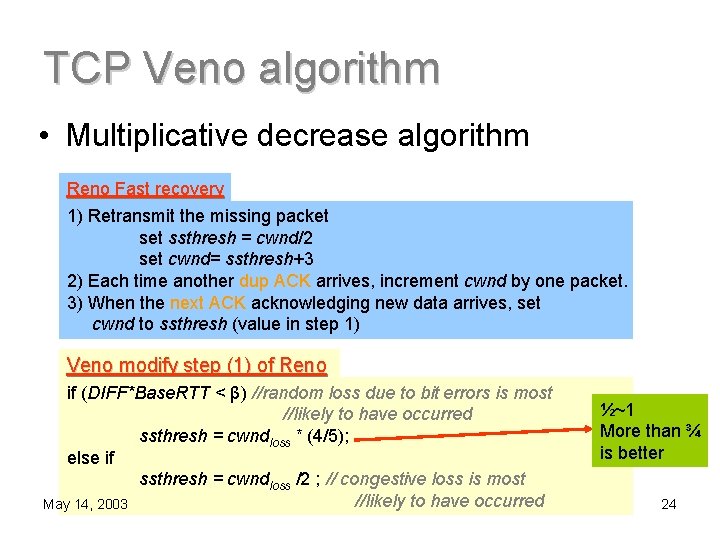

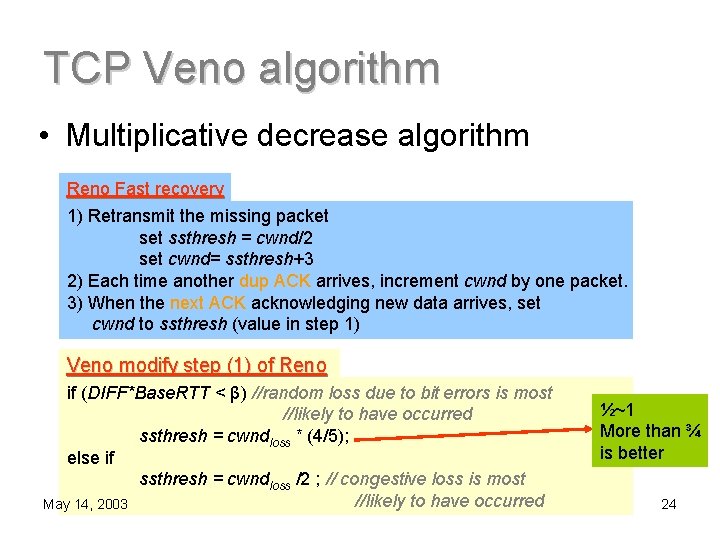

TCP Veno algorithm

- Multiplicative decrease algorithm
- Reno fast recovery
  1. Retransmit the missing packet; set ssthresh = cwnd/2 and cwnd = ssthresh + 3
  2. Each time another duplicate ACK arrives, increment cwnd by one packet
  3. When the next ACK acknowledging new data arrives, set cwnd to ssthresh (the value from step 1)
- Veno modifies step 1 of Reno
  - if DIFF * BaseRTT < β (random loss due to bit errors is most likely to have occurred): ssthresh = cwndloss * (4/5)
  - else (congestive loss is most likely to have occurred): ssthresh = cwndloss / 2
- The reduction factor can be anywhere from ½ to 1; more than ¾ is better
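Step 1 of fast recovery, with Veno's modification, can be sketched as follows. The function name and the default β = 3 are assumptions for illustration. `cwnd_loss` stands for the window at the moment the loss is detected (cwndloss on the slide), and `n` is the backlog estimate DIFF * BaseRTT.

```python
from typing import Tuple


def fast_recovery_entry(cwnd_loss: float, n: float,
                        beta: float = 3.0) -> Tuple[float, float]:
    """Return (ssthresh, cwnd) on entering fast recovery, in segments."""
    if n < beta:
        # Random loss is most likely: cut the window by only 1/5.
        ssthresh = cwnd_loss * 4.0 / 5.0
    else:
        # Congestive loss is most likely: halve the window, as Reno does.
        ssthresh = cwnd_loss / 2.0
    # As in Reno, inflate cwnd by the three duplicate ACKs already seen.
    cwnd = ssthresh + 3.0
    return ssthresh, cwnd


if __name__ == "__main__":
    print(fast_recovery_entry(20.0, n=1.0))   # treated as random loss
    print(fast_recovery_entry(20.0, n=5.0))   # treated as congestive loss
```

Steps 2 and 3 are unchanged from Reno, so only the choice of ssthresh differs between the two branches.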

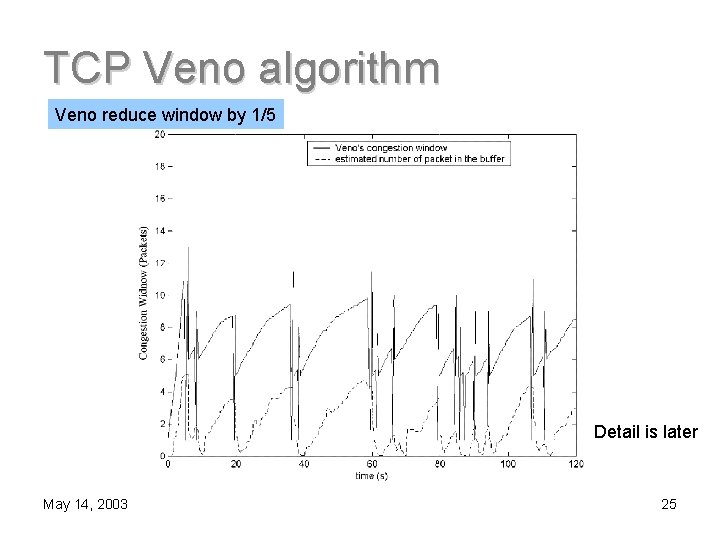

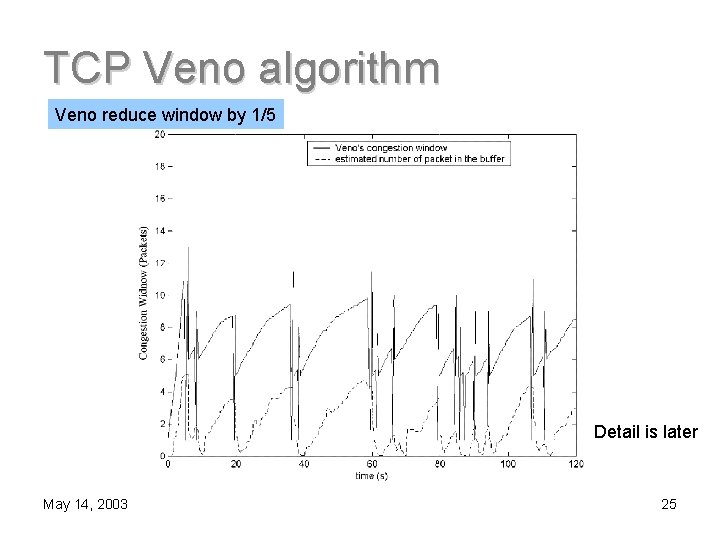

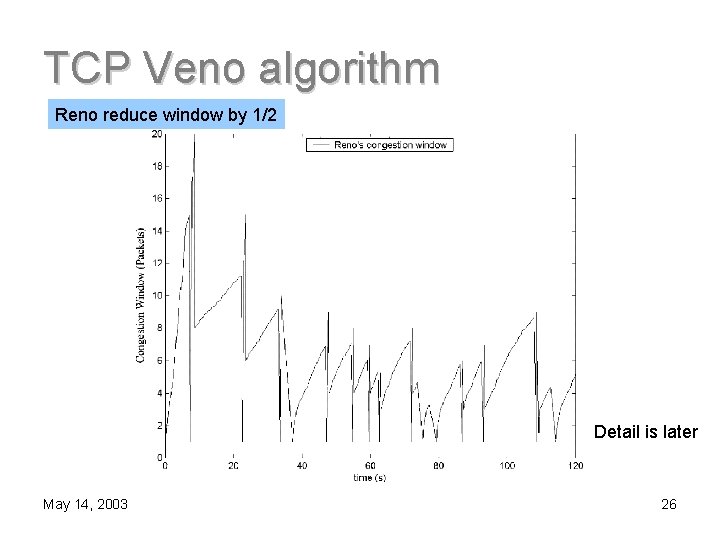

TCP Veno algorithm

- Veno reduces the window by 1/5 (details later)

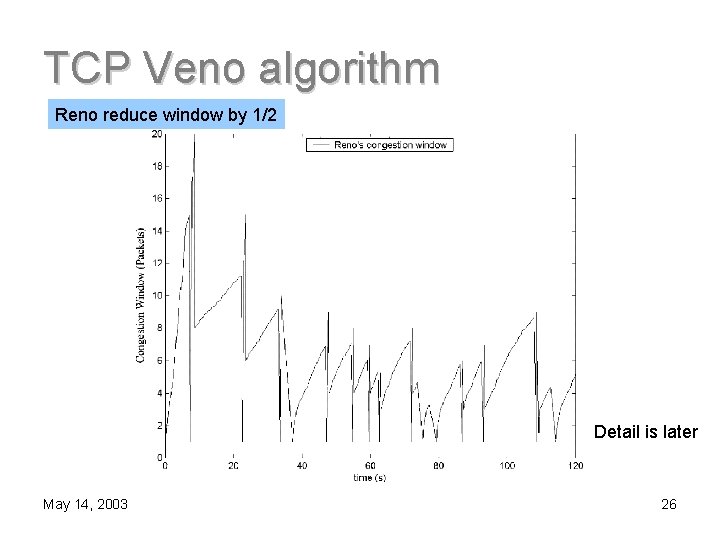

TCP Veno algorithm

- Reno reduces the window by 1/2 (details later)

TCP Veno algorithm (end)

- In summary, Veno only refines the additive increase, multiplicative decrease (AIMD) algorithm
- All other parts of Reno remain intact
  - Including slow start, fast retransmit, fast recovery, and the computation of the retransmission timeout

Performance evaluation of TCP Veno

Outline

1. Verification of the packet loss distinguishing scheme in Veno
   - Experimental network setup
   - Verification of the packet loss distinguishing scheme
2. Single connection experiments
3. Experiments involving multiple co-existing connections
4. Live Internet measurements

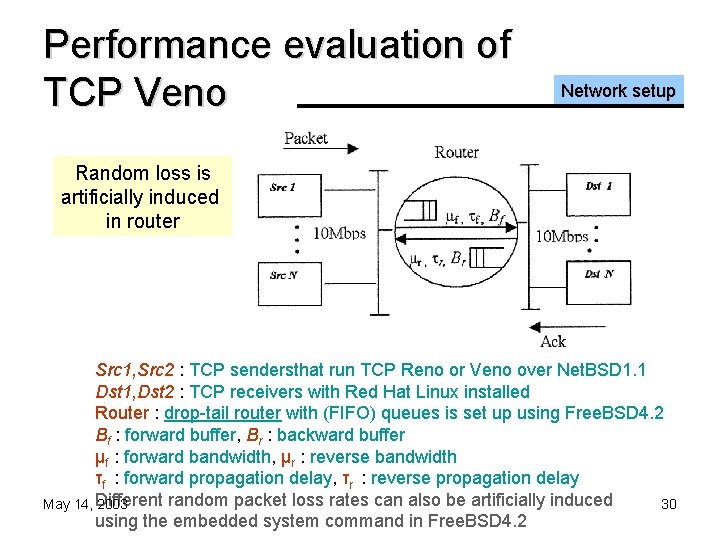

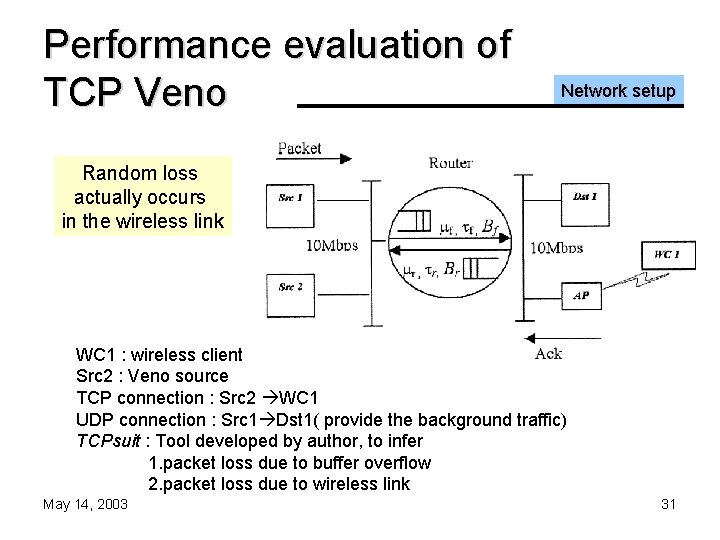

Performance evaluation of TCP Veno

- Network setup: two experiments
  1. Packet loss is artificially induced
  2. A real wireless network where random packet loss actually occurs

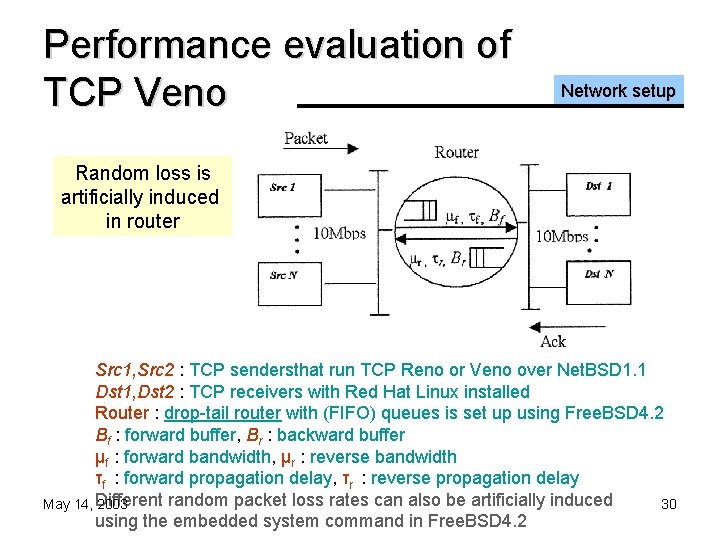

Performance evaluation of TCP Veno

Network setup: random loss is artificially induced in the router

- Src1, Src2: TCP senders that run TCP Reno or Veno over NetBSD 1.1
- Dst1, Dst2: TCP receivers with Red Hat Linux installed
- Router: drop-tail router with FIFO queues, set up using FreeBSD 4.2
- Bf: forward buffer, Br: backward buffer
- μf: forward bandwidth, μr: reverse bandwidth
- τf: forward propagation delay, τr: reverse propagation delay
- Different random packet loss rates can also be artificially induced using the embedded system command in FreeBSD 4.2

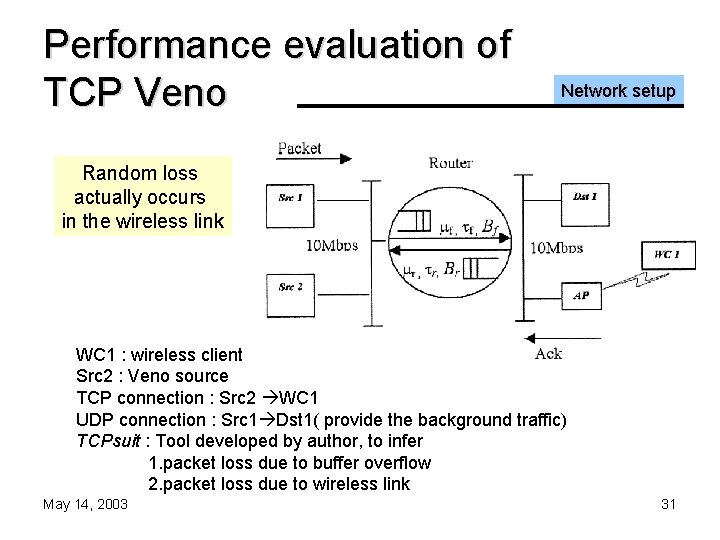

Performance evaluation of TCP Veno

Network setup: random loss actually occurs in the wireless link

- WC1: wireless client
- Src2: Veno source
- TCP connection: Src2 to WC1
- UDP connection: Src1 to Dst1 (provides the background traffic)
- TCPsuit: tool developed by the author to infer
  1. packet loss due to buffer overflow
  2. packet loss due to the wireless link

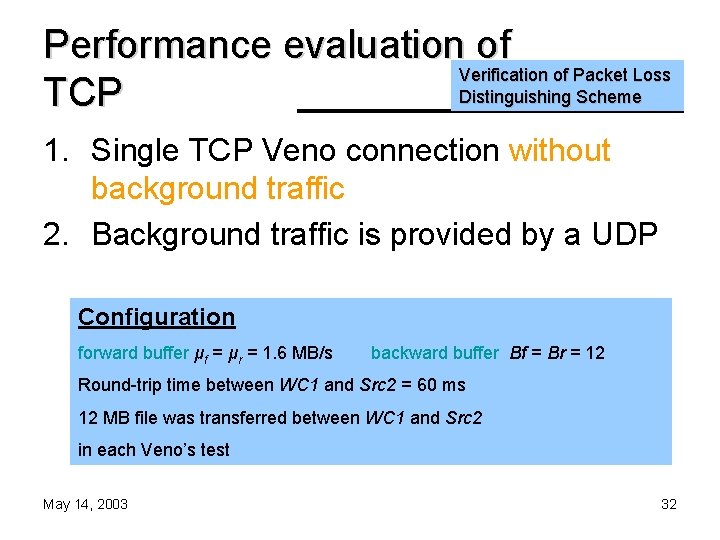

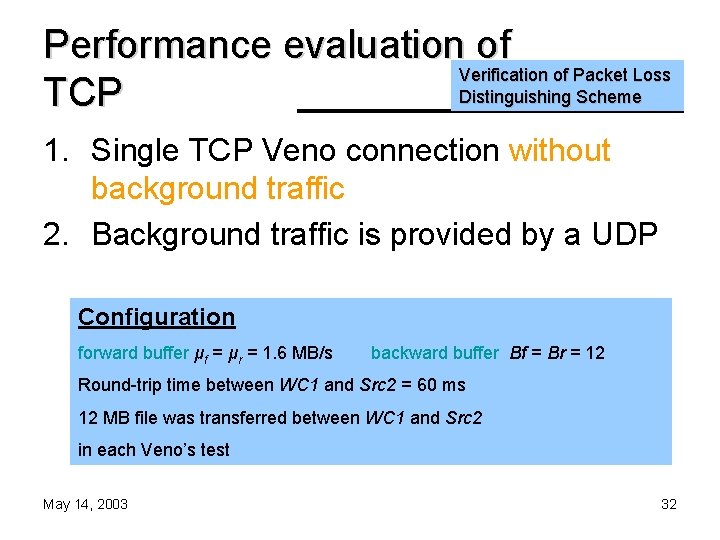

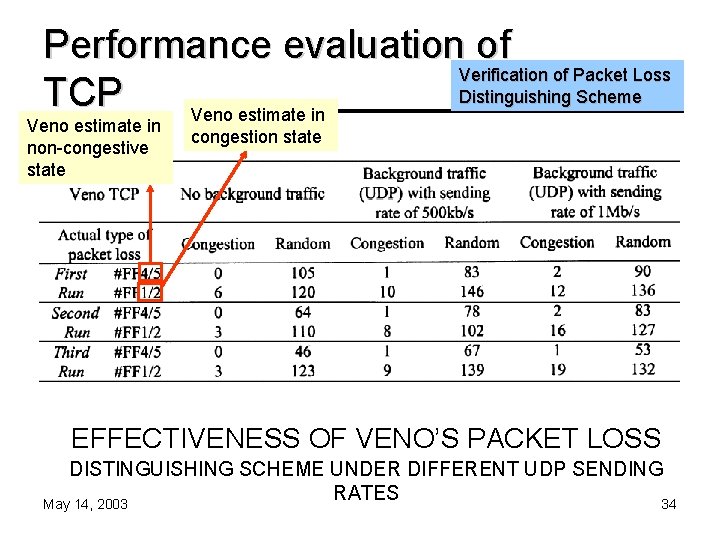

Performance evaluation of TCP Veno: verification of the packet loss distinguishing scheme

1. Single TCP Veno connection without background traffic
2. Background traffic is provided by a UDP connection

Configuration

- Bandwidth: μf = μr = 1.6 MB/s
- Forward and backward buffers: Bf = Br = 12
- Round-trip time between WC1 and Src2 = 60 ms
- A 12 MB file was transferred between WC1 and Src2 in each Veno test

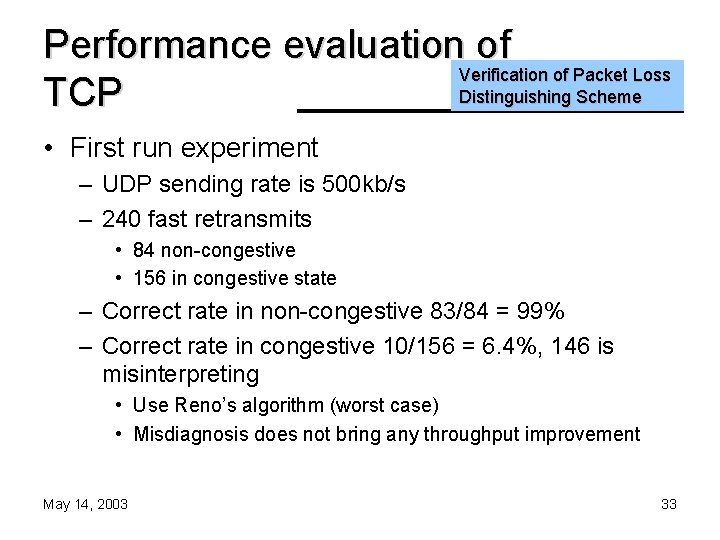

Performance evaluation of TCP Veno: verification of the packet loss distinguishing scheme

- First experiment run
  - UDP sending rate is 500 kb/s
  - 240 fast retransmits
    - 84 estimated to be in the non-congestive state
    - 156 estimated to be in the congestive state
  - Correct rate in the non-congestive state: 83/84 ≈ 99%
  - Correct rate in the congestive state: 10/156 ≈ 6.4%; the other 146 are misdiagnosed
- For a misdiagnosed loss, Veno uses Reno's algorithm (worst case)
- Misdiagnosis does not bring any throughput improvement

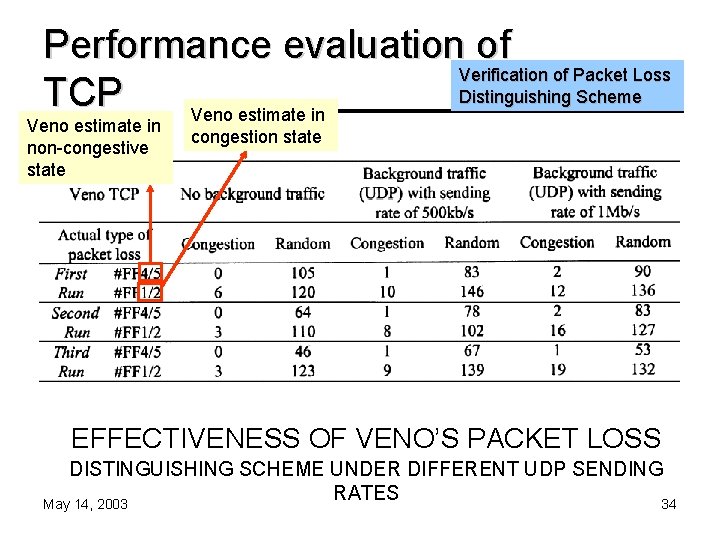

Performance evaluation of TCP Veno: verification of the packet loss distinguishing scheme

Table: Effectiveness of Veno's packet loss distinguishing scheme under different UDP sending rates (columns: Veno estimate in the non-congestive state, Veno estimate in the congestive state)

Performance evaluation of TCP Veno: verification of the packet loss distinguishing scheme

- Halving the window in response to these kinds of random loss may be the right action (really?)
  - Because the available bandwidth has been fully utilized in these situations

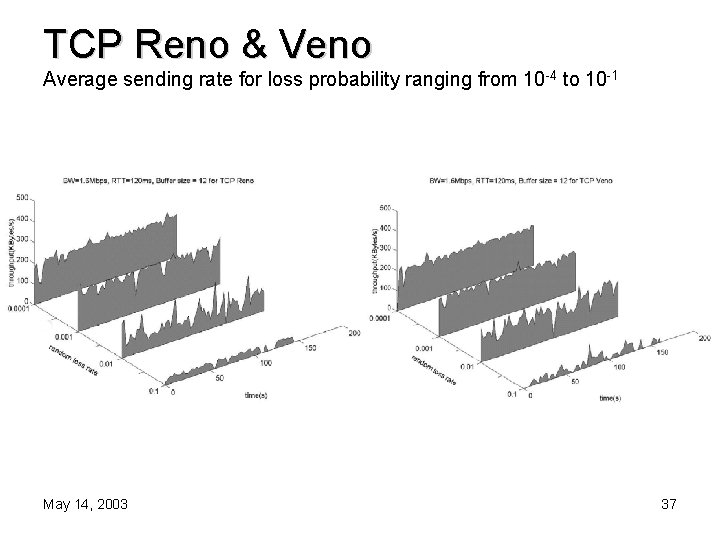

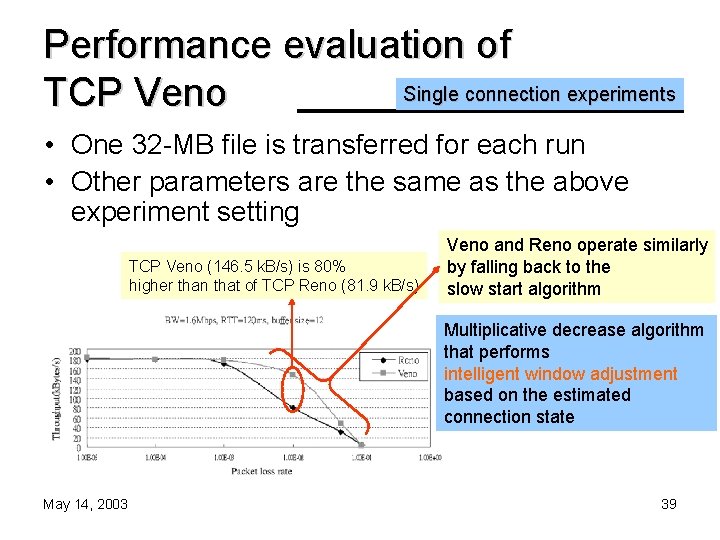

Performance evaluation of TCP Veno: single connection experiments

- Only one of the source-destination pairs is turned on
- The figure below shows the evolution of the average sending rate
  - For loss probabilities ranging from 10⁻⁴ to 10⁻¹
  - Buffer size at the router is set to 12
  - Link speed μf = μr = 1.6 MB/s
  - RTT = 120 ms
  - Maximum segment size is 1460 bytes
  - Each data point is the amount of data sent in a 160 ms interval divided by 160 ms

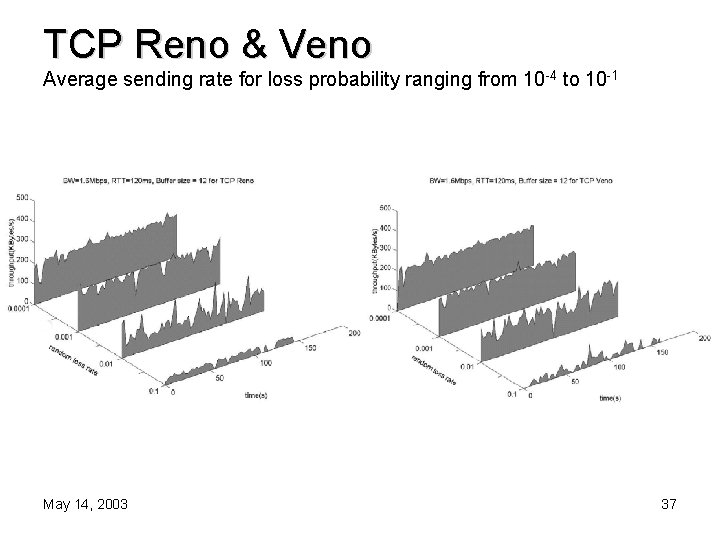

TCP Reno and Veno: average sending rate for loss probabilities ranging from 10⁻⁴ to 10⁻¹

Performance evaluation of TCP Veno: single connection experiments

- When the packet loss rate is relatively small
  - The evolutions of Veno and Reno are similar
- But when the loss rate is close to 10⁻²
  - Veno shows large improvements over Reno

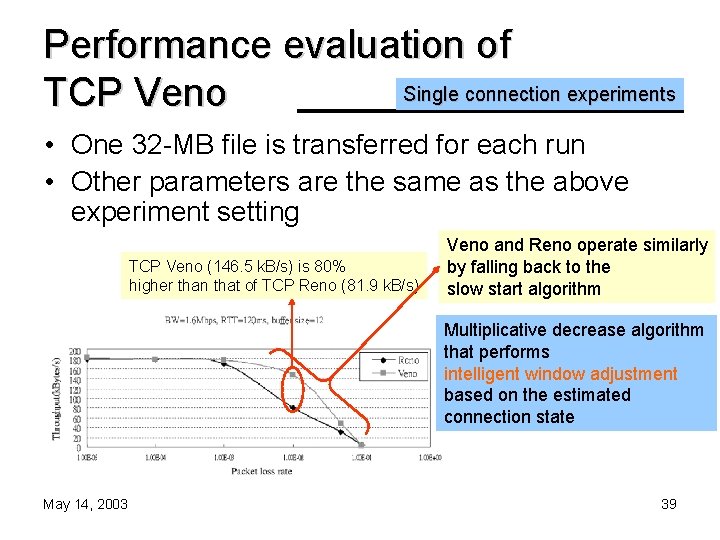

Performance evaluation of TCP Veno: single connection experiments

- One 32 MB file is transferred in each run
- Other parameters are the same as in the experiment setting above
- The throughput of TCP Veno (146.5 kB/s) is 80% higher than that of TCP Reno (81.9 kB/s)
- Veno and Reno operate similarly when they fall back to the slow start algorithm
- The improvement is attributed to the multiplicative decrease algorithm, which performs intelligent window adjustment based on the estimated connection state

Performance evaluation of TCP Veno: single connection experiments

- RFC 3002 shows that
  - a wireless channel with IS-95 CDMA-based data service has an error rate of 1% to 2%

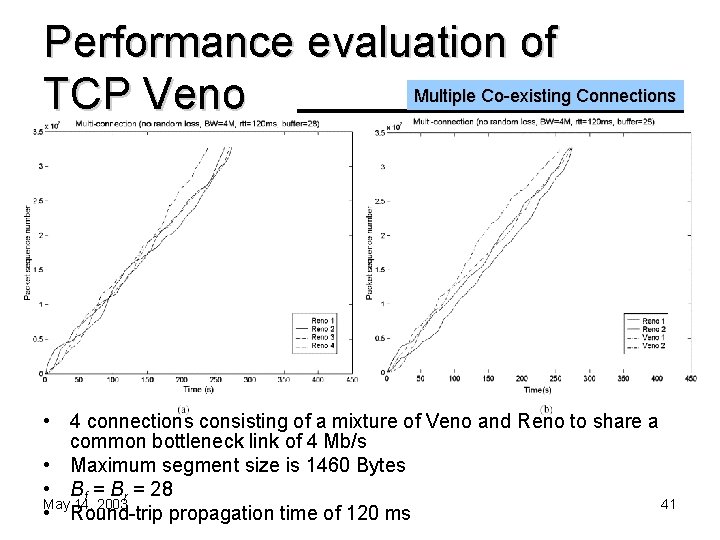

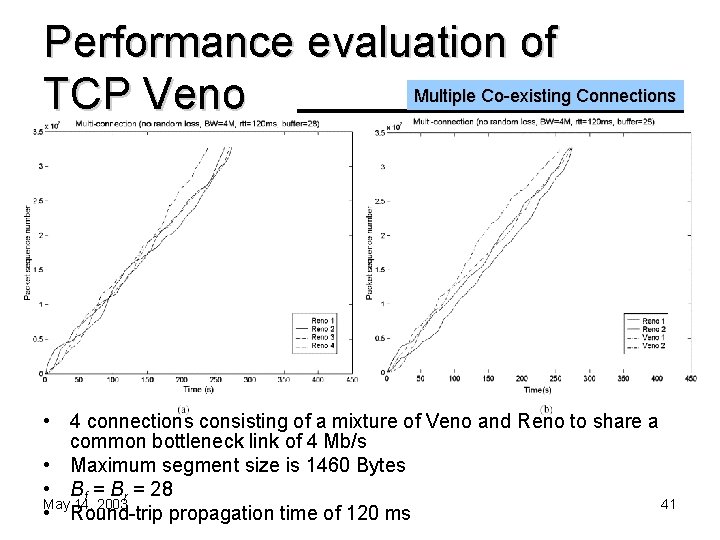

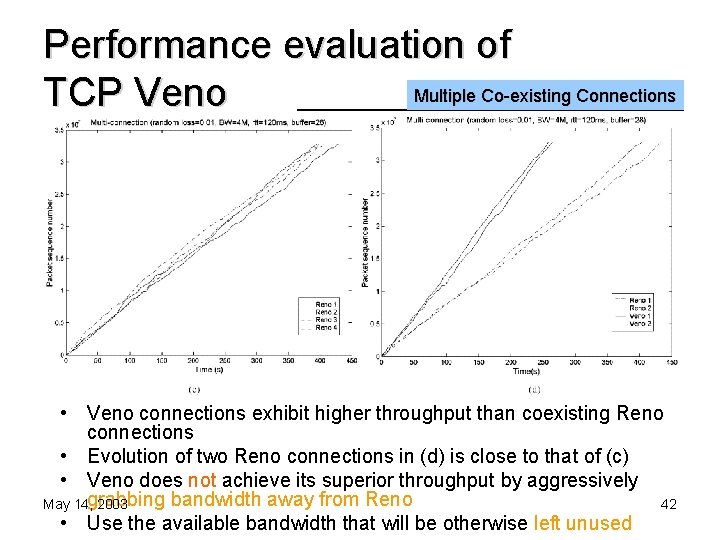

Performance evaluation of TCP Veno: multiple co-existing connections

- 4 connections, a mixture of Veno and Reno, share a common bottleneck link of 4 Mb/s
- Maximum segment size is 1460 bytes
- Bf = Br = 28
- Round-trip propagation time of 120 ms

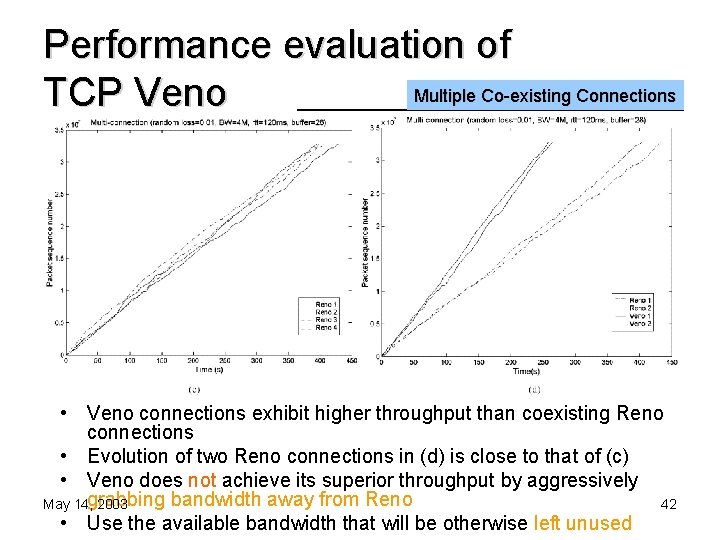

Performance evaluation of TCP Veno: multiple co-existing connections

- Veno connections exhibit higher throughput than coexisting Reno connections
- The evolution of the two Reno connections in (d) is close to that in (c)
- Veno does not achieve its superior throughput by aggressively grabbing bandwidth away from Reno
- It uses available bandwidth that would otherwise be left unused

Performance evaluation of TCP Veno: multiple co-existing connections

- Conclusion: Veno is compatible with Reno
- The results in (d) are roughly the same as those in (b)
  - Veno connections are not adversely affected by the random loss rate of 0.01

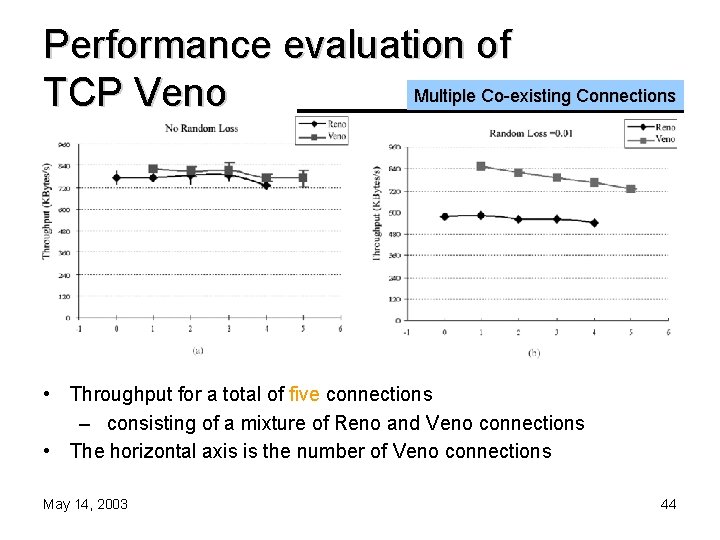

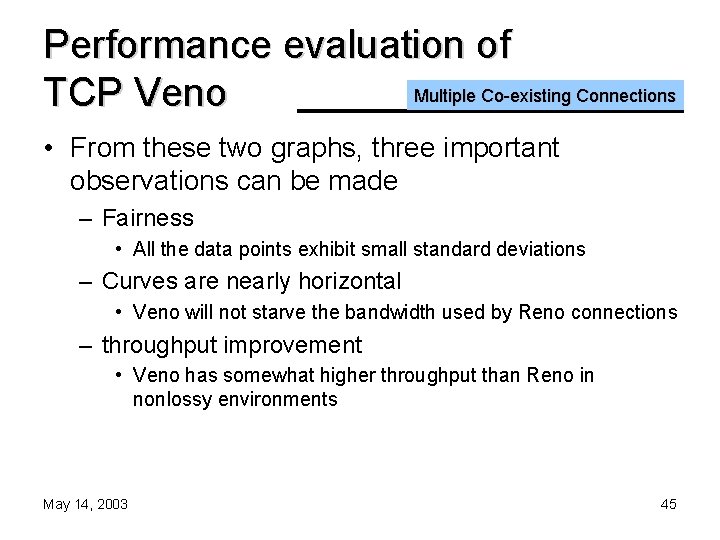

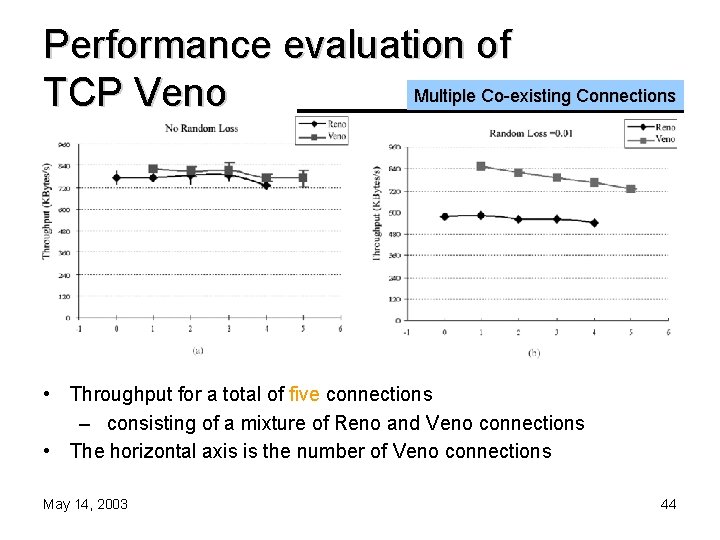

Performance evaluation of TCP Veno: multiple co-existing connections

- Throughput for a total of five connections
  - A mixture of Reno and Veno connections
- The horizontal axis is the number of Veno connections

Performance evaluation of TCP Veno: multiple co-existing connections

- From these two graphs, three important observations can be made
  - Fairness
    - All the data points exhibit small standard deviations
  - Curves are nearly horizontal
    - Veno will not starve the bandwidth used by Reno connections
  - Throughput improvement
    - Veno has somewhat higher throughput than Reno in nonlossy environments

Performance evaluation of TCP Veno: live Internet measurements

- Performance over a WLAN and a wide area network (WAN)

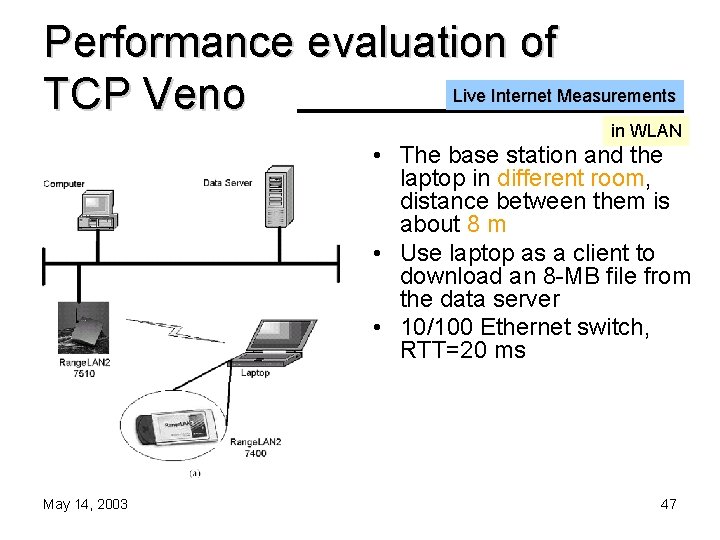

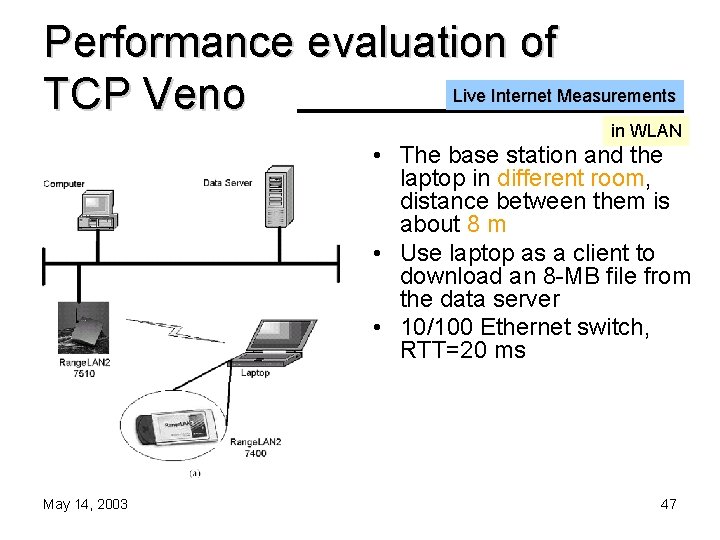

Performance evaluation of TCP Veno: live Internet measurements in WLAN

- The base station and the laptop are in different rooms, about 8 m apart
- The laptop is used as a client to download an 8 MB file from the data server
- 10/100 Ethernet switch, RTT = 20 ms

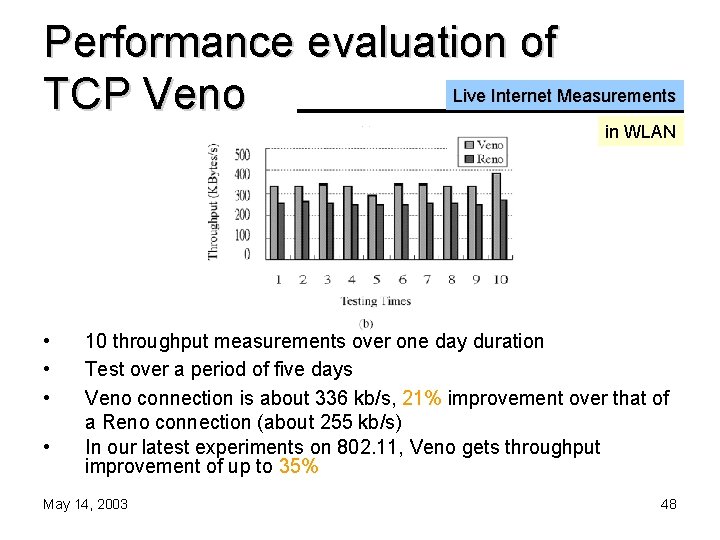

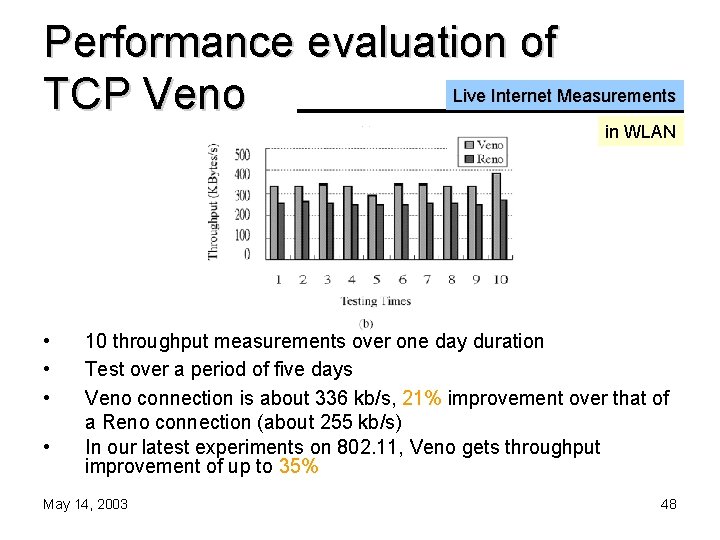

Performance evaluation of TCP Veno: live Internet measurements in WLAN

- 10 throughput measurements over a one-day duration
- Tests run over a period of five days
- The throughput of a Veno connection is about 336 kb/s, a 21% improvement over that of a Reno connection (about 255 kb/s)
- In our latest experiments on 802.11, Veno gets a throughput improvement of up to 35%

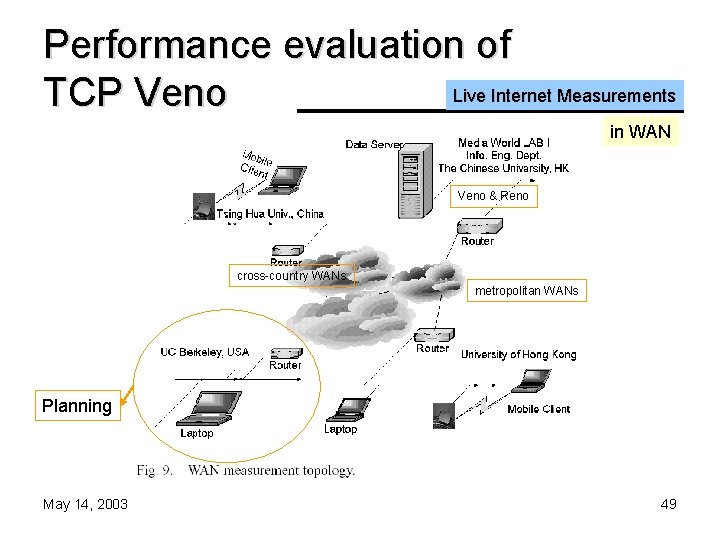

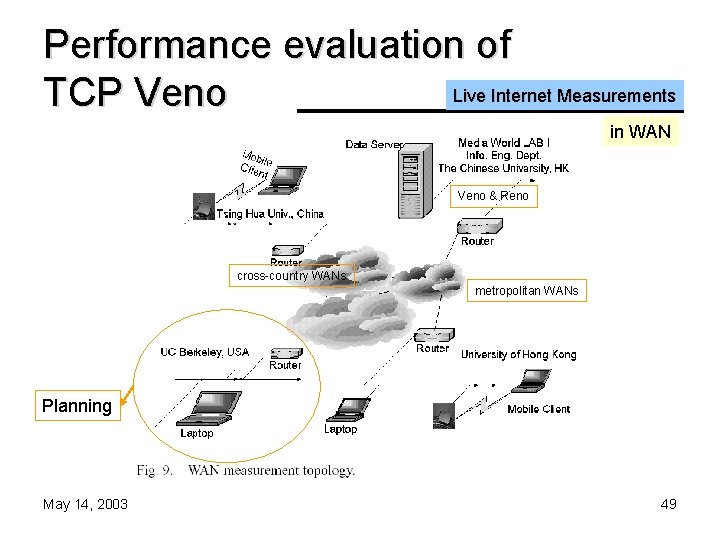

Performance evaluation of TCP Veno: live Internet measurements in WAN

- Planning: Veno and Reno over cross-country WANs and metropolitan WANs

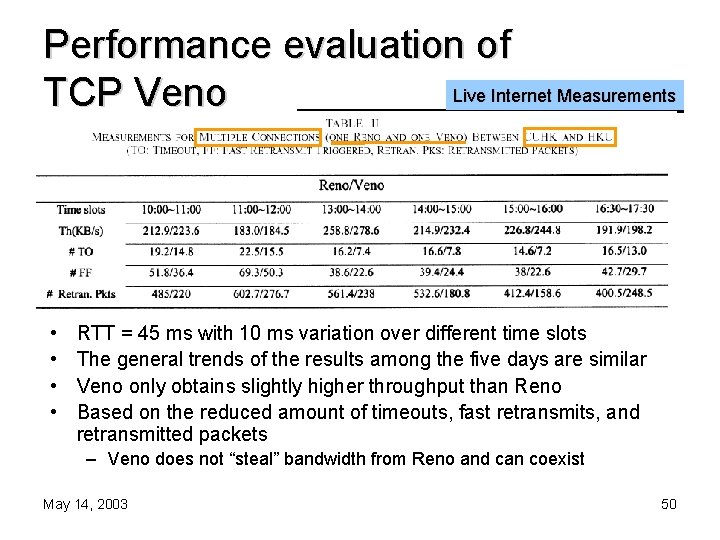

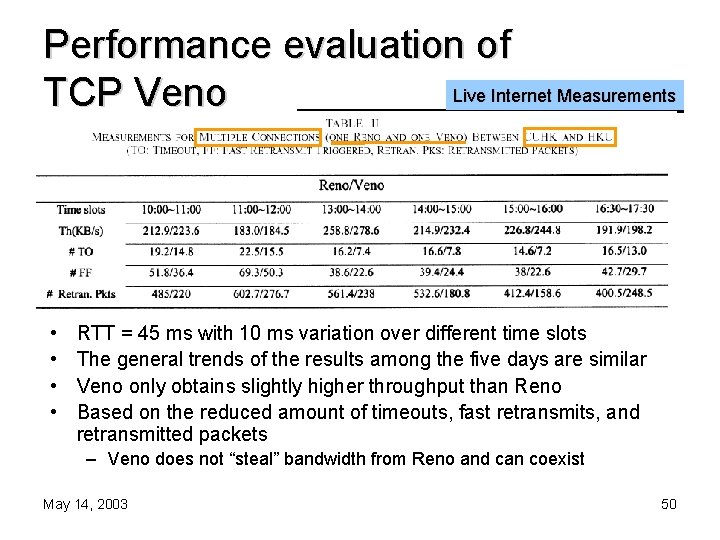

Performance evaluation of TCP Veno: live Internet measurements

- RTT = 45 ms with 10 ms variation over different time slots
- The general trends of the results over the five days are similar
- Veno obtains only slightly higher throughput than Reno
- Based on the reduced numbers of timeouts, fast retransmits, and retransmitted packets
  - Veno does not "steal" bandwidth from Reno and can coexist with it

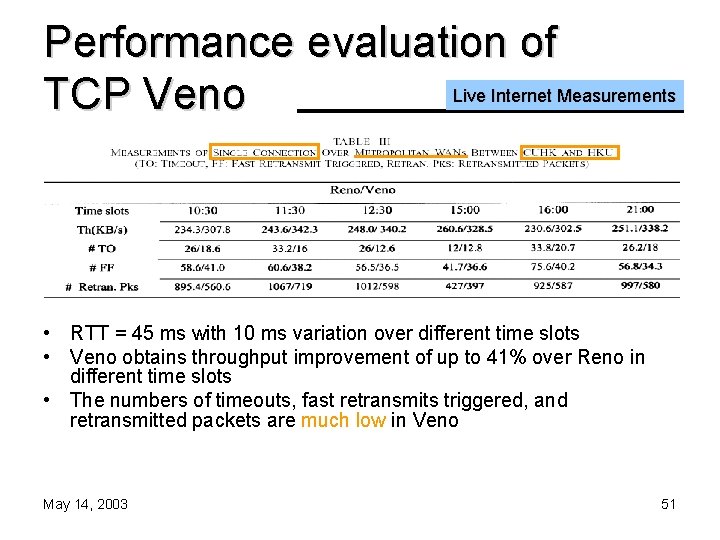

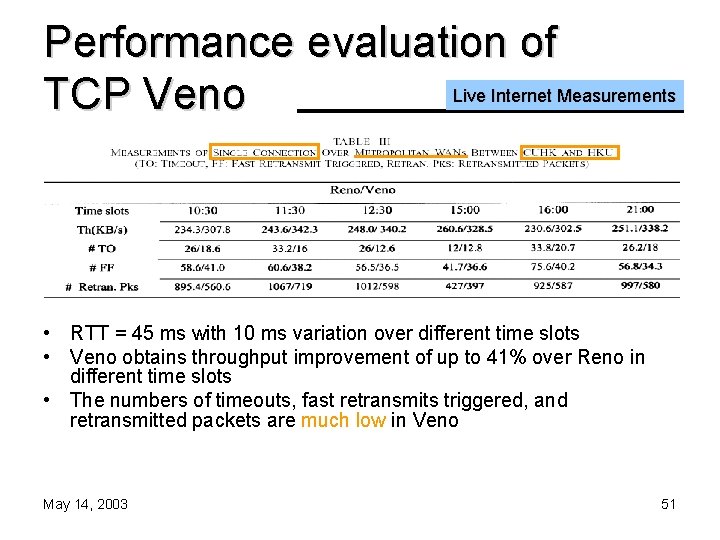

Performance evaluation of TCP Veno: live Internet measurements

- RTT = 45 ms with 10 ms variation over different time slots
- Veno obtains a throughput improvement of up to 41% over Reno in different time slots
- The numbers of timeouts, fast retransmits triggered, and retransmitted packets are much lower in Veno

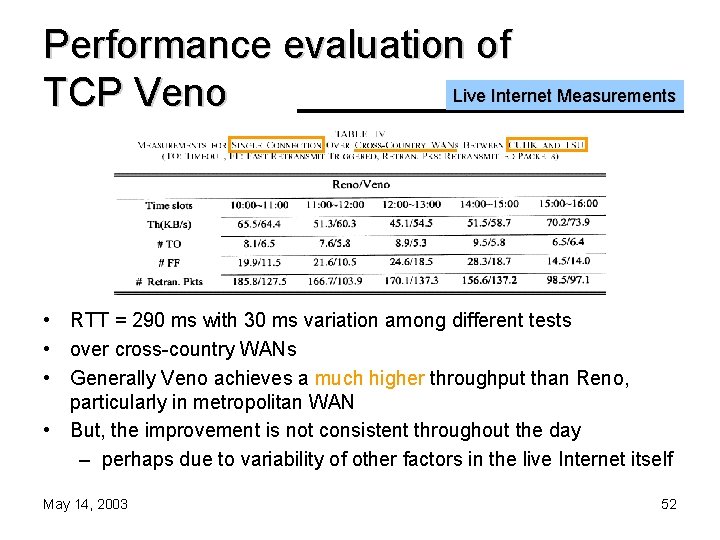

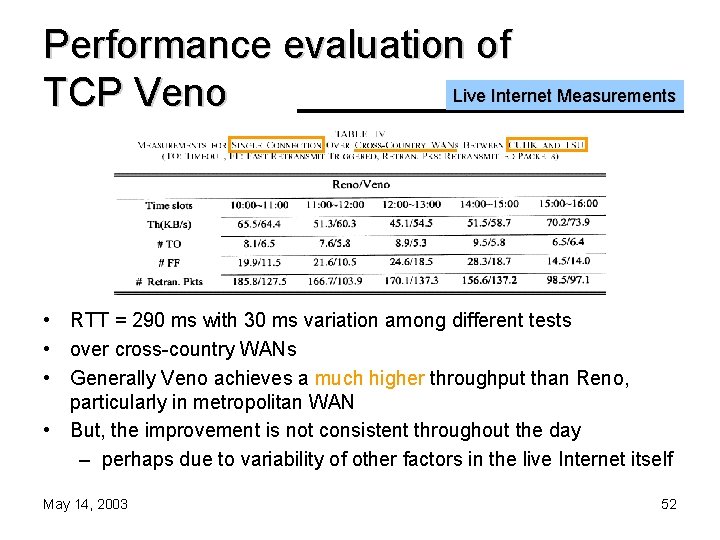

Performance evaluation of TCP Veno: live Internet measurements

- RTT = 290 ms with 30 ms variation among different tests
- Measured over cross-country WANs
- Generally Veno achieves a much higher throughput than Reno, particularly in the metropolitan WAN
- But the improvement is not consistent throughout the day
  - Perhaps due to variability of other factors in the live Internet itself

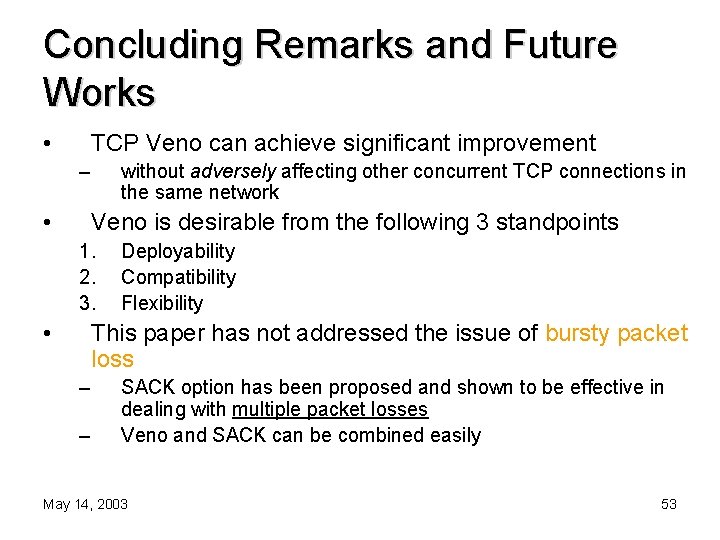

Concluding Remarks and Future Works

- TCP Veno can achieve significant improvement
  - Without adversely affecting other concurrent TCP connections in the same network
- Veno is desirable from the following 3 standpoints
  1. Deployability
  2. Compatibility
  3. Flexibility
- This paper has not addressed the issue of bursty packet loss
  - The SACK option has been proposed and shown to be effective in dealing with multiple packet losses
  - Veno and SACK can be combined easily

Concluding Remarks and Future Works

- Generally speaking, TCP Veno
  - Borrows the idea of the congestion detection scheme in Vegas
  - Intelligently integrates it into Reno's additive increase phase
- Future works
  - How to refine additive increase at the next step
  - How much the window should be reduced once fast retransmit is triggered
  - Other predictive congestion detection schemes could also be used

Ending

- Author: Cheng Peng Fu
- Ph.D. degree in information engineering, Chinese University of Hong Kong
- He is from Shanghai

Tcp veno

Tcp veno Tcp 601

Tcp 601 Telecommunications the internet and wireless technology

Telecommunications the internet and wireless technology Conclusion of wireless power transmission

Conclusion of wireless power transmission Air ionization in wireless power transmission

Air ionization in wireless power transmission Advantages of wireless communication

Advantages of wireless communication Wireless power transmission project report doc

Wireless power transmission project report doc Disadvantage of wireless communication

Disadvantage of wireless communication Wireless transmission media

Wireless transmission media Tcp (transmission control protocol) to protokół

Tcp (transmission control protocol) to protokół Over the mountains over the plains

Over the mountains over the plains Siach reciting the word over and over

Siach reciting the word over and over Handing over and taking over the watch

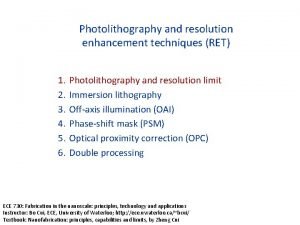

Handing over and taking over the watch Off-axis

Off-axis What is the proper procedure for applying one color monomer

What is the proper procedure for applying one color monomer Objective of image enhancement

Objective of image enhancement Environmental enhancement and mitigation program

Environmental enhancement and mitigation program Edge enhancement

Edge enhancement Combining spatial enhancement methods

Combining spatial enhancement methods Image enhancement by point processing

Image enhancement by point processing Coalition for physician enhancement

Coalition for physician enhancement Image enhancement in spatial domain

Image enhancement in spatial domain Paractactic

Paractactic Mitomycin c prk

Mitomycin c prk Combining spatial enhancement methods

Combining spatial enhancement methods Contrast enhancement

Contrast enhancement What is enhancement in the spatial domain?

What is enhancement in the spatial domain? Image quality repair

Image quality repair Academic enhancement

Academic enhancement Image negatives a gray level transformation is defined as

Image negatives a gray level transformation is defined as Image enhancement in spatial domain

Image enhancement in spatial domain Live enhancement

Live enhancement Mask mode radiography

Mask mode radiography Integrity enhancement features of sql

Integrity enhancement features of sql Nmos inverter with resistive load

Nmos inverter with resistive load Gonzalez

Gonzalez Www.dfps.state.tx.us/training/trauma informed care/

Www.dfps.state.tx.us/training/trauma informed care/ Revenue enhancement synergy

Revenue enhancement synergy Human performance enhancement

Human performance enhancement Enhancement activity

Enhancement activity Enhancement mosfet

Enhancement mosfet Mre

Mre Image enhancement in spatial domain

Image enhancement in spatial domain Input enhancement in string matching

Input enhancement in string matching It resembles the iterative enhancement model.

It resembles the iterative enhancement model. Nerolac paints price 20 liter

Nerolac paints price 20 liter Spatial filtering

Spatial filtering Image enhancement in night vision technology

Image enhancement in night vision technology Pusat bertauliah jpk

Pusat bertauliah jpk Listening and communication enhancement

Listening and communication enhancement Combining spatial enhancement methods

Combining spatial enhancement methods Image processing

Image processing Enhancement type mosfet symbol

Enhancement type mosfet symbol Credit enhancement

Credit enhancement Time interval difference subtraction

Time interval difference subtraction Digital print enhancement

Digital print enhancement