Support Vector Machines Stefano Cavuoti 11/27/2008

SVM

Support vector machines (SVM) are a group of supervised learning methods that can be applied to classification or regression. In a short period of time, SVM found numerous applications in many scientific fields, such as physics, biology and chemistry: drug design (discriminating between ligands and nonligands, inhibitors and noninhibitors, etc.), quantitative structure-activity relationships (QSAR, where SVM regression is used to predict various physical, chemical, or biological properties), chemometrics (for example, optimization of chromatographic separation or compound concentration prediction from spectral data), sensors (for qualitative and quantitative prediction from sensor data), chemical engineering (fault detection and modeling of industrial processes), text mining (automatic recognition of scientific information), etc.

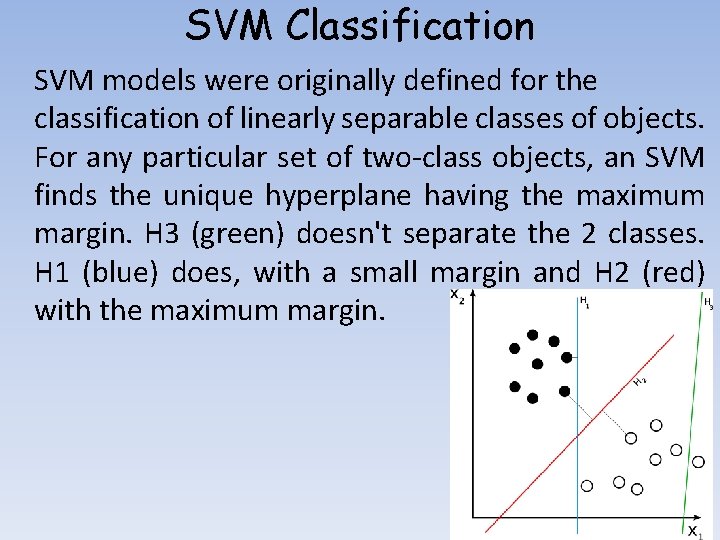

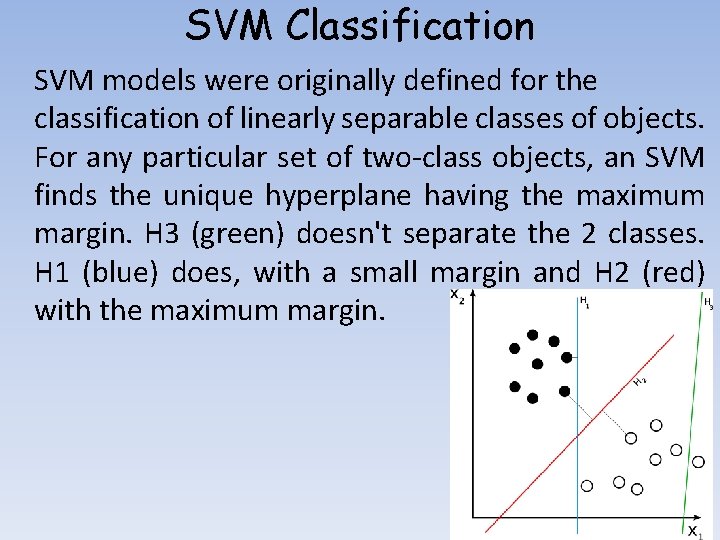

SVM Classification

SVM models were originally defined for the classification of linearly separable classes of objects. For any particular set of two-class objects, an SVM finds the unique hyperplane having the maximum margin. In the figure, H3 (green) does not separate the two classes; H1 (blue) does, but with a small margin; H2 (red) separates them with the maximum margin.

SVM Classification

The hyperplane H1 defines the border with class +1 objects, whereas the hyperplane H2 defines the border with class -1 objects. Two objects from class +1 define the hyperplane H1, and three objects from class -1 define the hyperplane H2. These objects, represented inside circles in the figure, are called support vectors. A special characteristic of SVM is that the solution to a classification problem is represented by the support vectors that determine the maximum margin hyperplane.
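The geometry described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the toy points and the hyperplane (w, b) are picked by hand, not taken from the slides and not produced by a trained SVM. In the usual canonical scaling the separating hyperplane is w·x + b = 0, the two border hyperplanes are w·x + b = +1 and w·x + b = -1, the margin width is 2/||w||, and the support vectors are the points lying exactly on a border.

```python
import math

# Hypothetical toy data: ((x1, x2), label) with labels +1 / -1.
points = [((3.0, 3.0), +1), ((4.0, 2.0), +1), ((5.0, 5.0), +1),
          ((1.0, 1.0), -1), ((0.0, 2.0), -1), ((2.0, 0.0), -1),
          ((0.0, 0.0), -1)]

# Hand-picked hyperplane w.x + b = 0 in canonical scaling (assumed, not trained).
w = (0.5, 0.5)
b = -2.0

def decision(x):
    """Signed score of a point; its sign is the predicted class."""
    return w[0] * x[0] + w[1] * x[1] + b

# Distance between the two border hyperplanes w.x + b = +1 and w.x + b = -1.
margin_width = 2.0 / math.hypot(*w)

# Support vectors sit exactly on a border: y * (w.x + b) == 1.
support_vectors = [(x, y) for x, y in points
                   if math.isclose(y * decision(x), 1.0)]

# Every point is on the correct side of its border.
all_outside_margin = all(y * decision(x) >= 1.0 - 1e-12 for x, y in points)
```

With these toy points, two objects of class +1 and three of class -1 lie on the borders, mirroring the situation described on the slide; all other points could be removed without changing the hyperplane.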

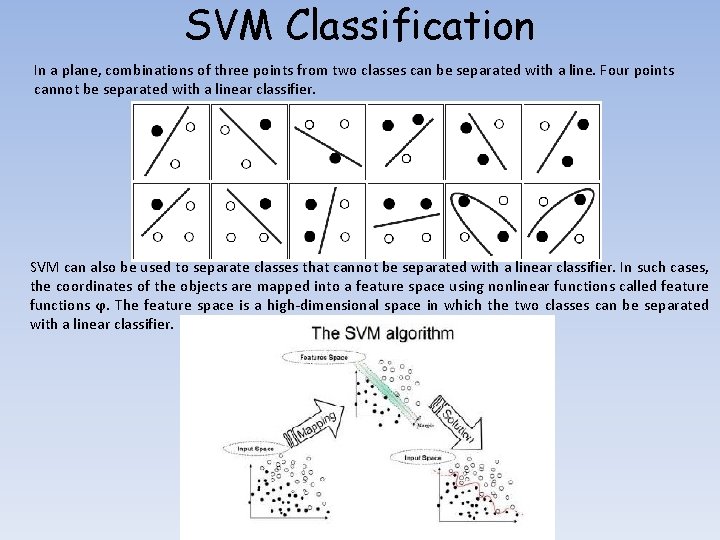

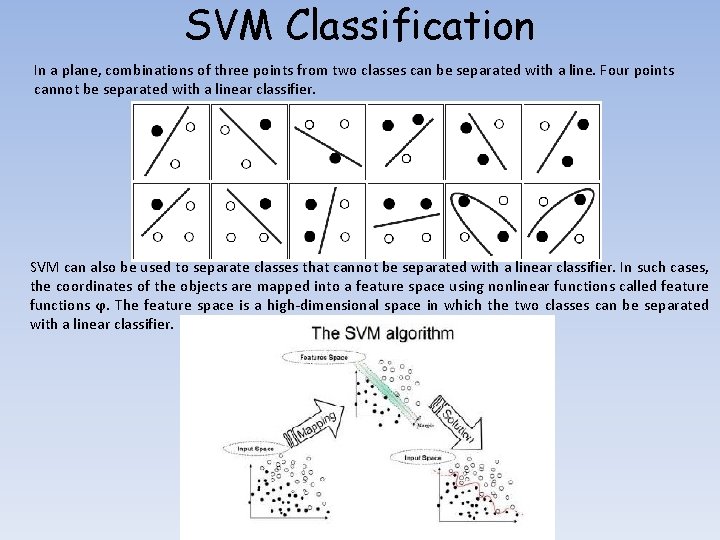

SVM Classification

In a plane, any assignment of three points (not lying on the same line) to two classes can be separated with a line. Four points cannot always be separated with a linear classifier, for example when each class occupies two opposite corners of a square. SVM can also be used to separate classes that cannot be separated with a linear classifier. In such cases, the coordinates of the objects are mapped into a feature space using nonlinear functions called feature functions ϕ. The feature space is a high-dimensional space in which the two classes can be separated with a linear classifier.
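A minimal sketch of this idea, assuming a hand-written feature function that is not from the slides: four points in the XOR arrangement cannot be split by any line in the plane, but after appending the product x1·x2 as a third coordinate a single plane separates them. The names `phi` and `linear_classifier` and the chosen weights are illustrative.

```python
# Four points in the XOR arrangement: each class occupies opposite corners.
xor_points = [((+1.0, +1.0), +1), ((-1.0, -1.0), +1),
              ((+1.0, -1.0), -1), ((-1.0, +1.0), -1)]

def phi(x):
    """Hypothetical feature function: map (x1, x2) to (x1, x2, x1*x2)."""
    return (x[0], x[1], x[0] * x[1])

def linear_classifier(z, w=(0.0, 0.0, 1.0), b=0.0):
    """Linear classifier in feature space: sign of w.z + b."""
    score = sum(wi * zi for wi, zi in zip(w, z)) + b
    return +1 if score > 0 else -1

labels = [y for _, y in xor_points]
predictions = [linear_classifier(phi(x)) for x, _ in xor_points]
```

Here the separating plane in feature space is simply "third coordinate = 0"; in the original plane this corresponds to the nonlinear boundary x1·x2 = 0.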

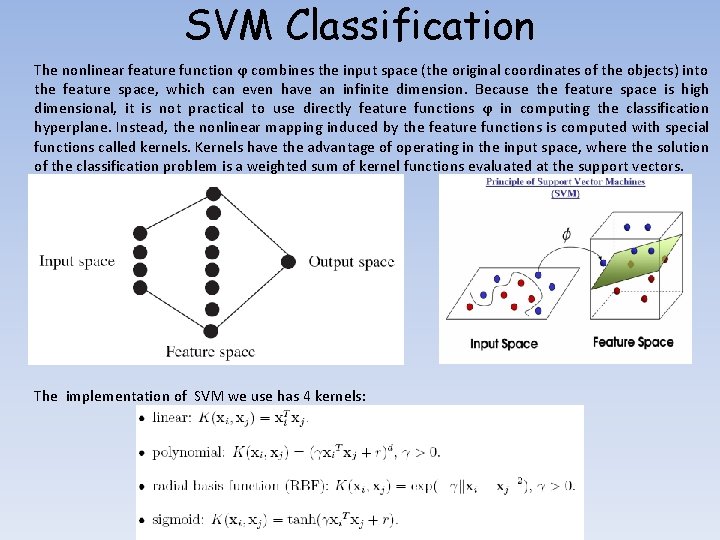

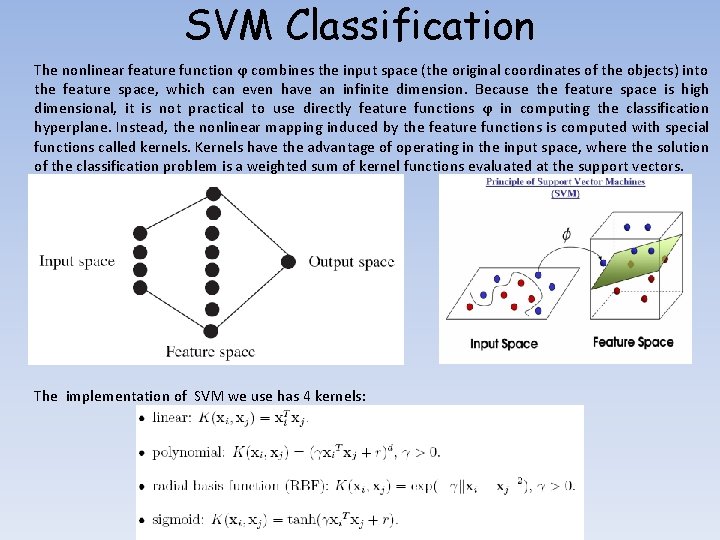

SVM Classification

The nonlinear feature function ϕ maps the input space (the original coordinates of the objects) into the feature space, which can even have infinite dimension. Because the feature space is high dimensional, it is not practical to use the feature functions ϕ directly in computing the classification hyperplane. Instead, the nonlinear mapping induced by the feature functions is computed with special functions called kernels. Kernels have the advantage of operating in the input space, where the solution of the classification problem is a weighted sum of kernel functions evaluated at the support vectors. The implementation of SVM we use provides four kernels.
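As a rough illustration, the sketch below writes out four kernels in the forms documented for LIBSVM (linear, polynomial, radial basis function, sigmoid), assuming that is the implementation meant here. The function names and default parameter values are illustrative choices, not LIBSVM's API. The last lines check the "kernel trick" for one case: a degree-2 polynomial kernel evaluated in the input space gives the same number as a dot product in an explicit three-dimensional feature space.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def linear_kernel(u, v):
    return dot(u, v)

def polynomial_kernel(u, v, gamma=1.0, coef0=0.0, degree=2):
    return (gamma * dot(u, v) + coef0) ** degree

def rbf_kernel(u, v, gamma=0.5):
    squared_distance = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-gamma * squared_distance)

def sigmoid_kernel(u, v, gamma=1.0, coef0=0.0):
    return math.tanh(gamma * dot(u, v) + coef0)

def phi_poly2(x):
    """Explicit feature map for (u.v)^2 in two dimensions (illustrative)."""
    return (x[0] ** 2, math.sqrt(2.0) * x[0] * x[1], x[1] ** 2)

u, v = (1.0, 2.0), (3.0, -1.0)
k_implicit = polynomial_kernel(u, v)           # computed in the input space
k_explicit = dot(phi_poly2(u), phi_poly2(v))   # computed in the feature space
```

The two values agree up to rounding, which is why the feature functions never need to be evaluated explicitly. For the radial basis function kernel the corresponding feature space is infinite dimensional, so the kernel form is the only practical one.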

SVM-TOY

This is a nice, simple toy application developed by the creators of LIBSVM (Chih-Wei Hsu, Chih-Chung Chang, and Chih-Jen Lin) to illustrate a simple case.

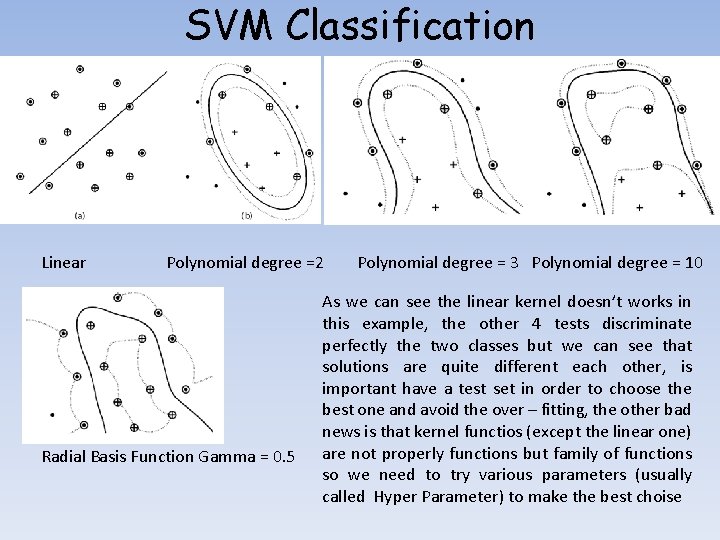

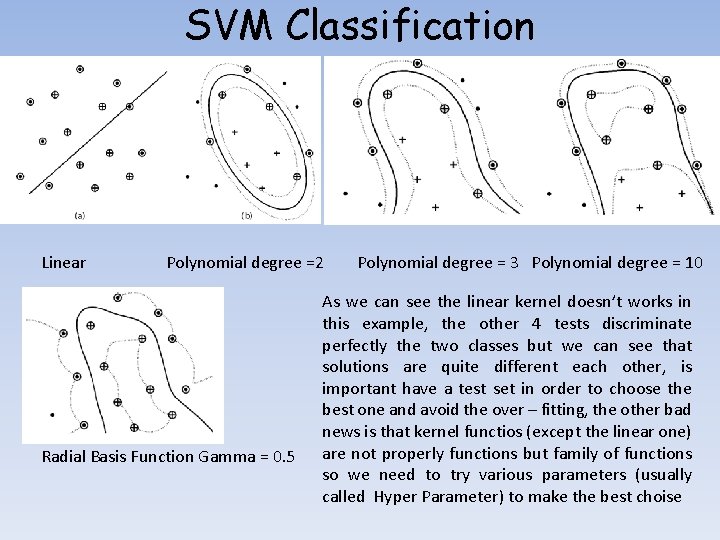

SVM Classification

To illustrate the SVM capability of training nonlinear classifiers, consider the patterns from the table (columns: Param 1, Param 2). This is a synthetic dataset of two-dimensional patterns, designed to investigate the properties of the SVM classification method. In all figures, class +1 patterns are represented by +, whereas class -1 patterns are represented by black dots. The SVM hyperplane is drawn with a continuous line, whereas the margins of the SVM hyperplane are represented by dotted lines. Support vectors from class +1 are represented as + inside a circle, whereas support vectors from class -1 are represented as a black dot inside a circle.

SVM Classification

Panels: Linear; Polynomial, degree = 2; Radial Basis Function, gamma = 0.5; Polynomial, degree = 3; Polynomial, degree = 10.

As we can see, the linear kernel does not work in this example. The other four tests discriminate the two classes perfectly, but their solutions are quite different from each other, so it is important to have a test set in order to choose the best one and avoid overfitting. The other bad news is that kernel functions (except the linear one) are not single functions but families of functions, so we need to try various parameters (usually called hyperparameters) to make the best choice.

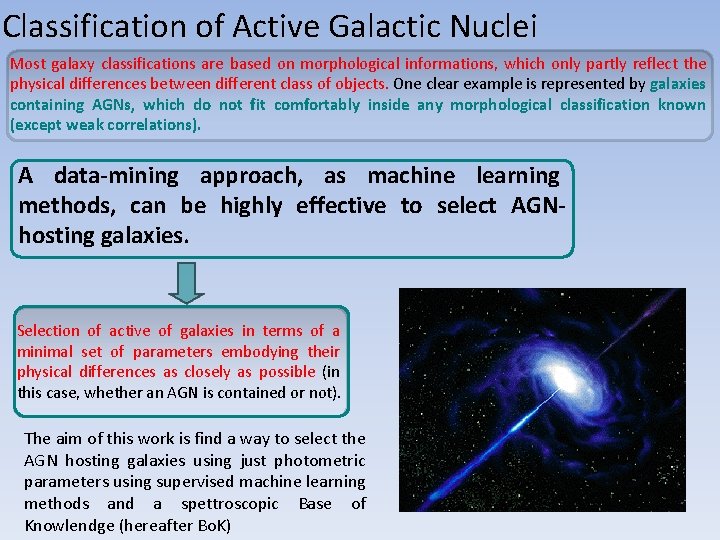

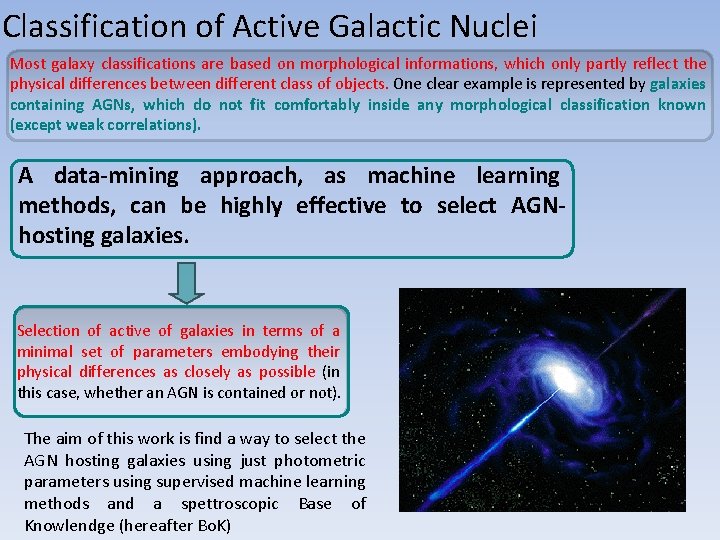

Classification of Active Galactic Nuclei

Most galaxy classifications are based on morphological information, which only partly reflects the physical differences between different classes of objects. One clear example is represented by galaxies containing AGNs, which do not fit comfortably inside any known morphological classification (except for weak correlations). A data-mining approach, such as machine learning methods, can be highly effective in selecting AGN-hosting galaxies: active galaxies are selected in terms of a minimal set of parameters embodying their physical differences as closely as possible (in this case, whether an AGN is contained or not). The aim of this work is to find a way to select AGN-hosting galaxies from photometric parameters alone, using supervised machine learning methods and a spectroscopic Base of Knowledge (hereafter BoK).

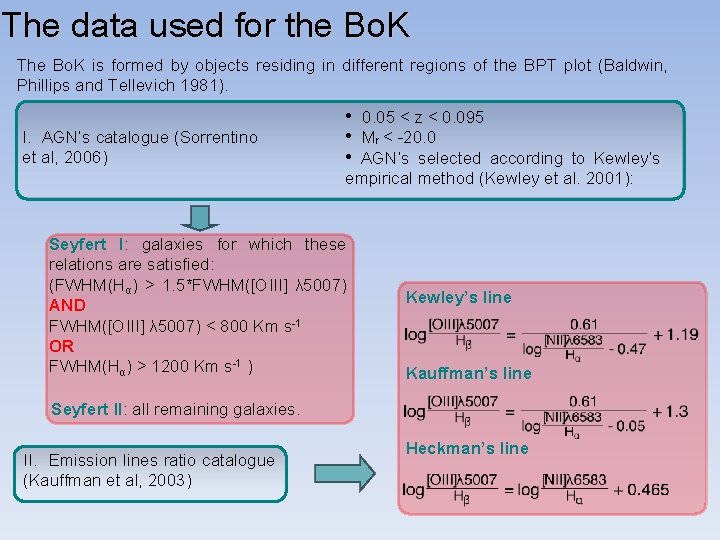

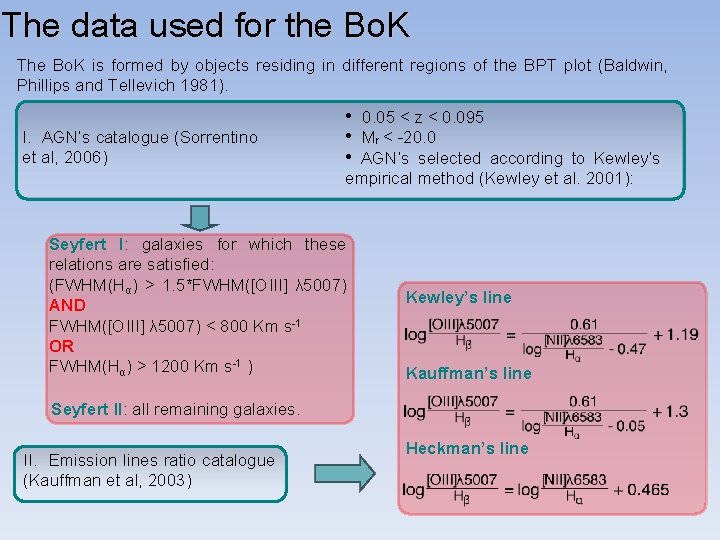

The data used for the BoK

The BoK is formed by objects residing in different regions of the BPT plot (Baldwin, Phillips and Terlevich 1981).

I. AGN catalogue (Sorrentino et al., 2006)

• 0.05 < z < 0.095

• Mr < -20.0

• AGNs selected according to Kewley's empirical method (Kewley et al. 2001):

Seyfert I: galaxies for which these relations are satisfied: (FWHM(Hα) > 1.5 × FWHM([OIII] λ5007) AND FWHM([OIII] λ5007) < 800 km s⁻¹ OR FWHM(Hα) > 1200 km s⁻¹)

Seyfert II: all remaining galaxies.

II. Emission line ratio catalogue (Kauffmann et al., 2003)

(Figure: BPT plot showing Kewley's line, Kauffmann's line and Heckman's line.)
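The Seyfert I / Seyfert II split above is a simple rule on two line widths, which can be sketched as a small function. This is a sketch under an assumption: the slide does not show how AND and OR are grouped, so the code reads the condition with the usual precedence (AND binds before OR). The function names are hypothetical, and widths are taken to be in km/s.

```python
def is_seyfert_1(fwhm_halpha, fwhm_oiii):
    """Seyfert I test on FWHM(H-alpha) and FWHM([OIII] 5007), both in km/s.

    Assumed grouping: (A AND B) OR C, i.e. standard operator precedence.
    """
    broad_relative = fwhm_halpha > 1.5 * fwhm_oiii and fwhm_oiii < 800.0
    broad_absolute = fwhm_halpha > 1200.0
    return broad_relative or broad_absolute

def seyfert_type(fwhm_halpha, fwhm_oiii):
    """All AGNs that fail the Seyfert I test are labelled Seyfert II."""
    return "Seyfert I" if is_seyfert_1(fwhm_halpha, fwhm_oiii) else "Seyfert II"

# Illustrative values only, not measurements from the catalogue.
examples = [seyfert_type(1000.0, 500.0),   # H-alpha much broader than [OIII]
            seyfert_type(600.0, 500.0),    # comparable widths
            seyfert_type(1500.0, 1200.0)]  # very broad H-alpha
```

If the intended grouping were different, only the two boolean lines inside `is_seyfert_1` would need to change.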

The BoK

Figure: BPT plot of log([OIII]/Hβ) versus log([NII]/Hα), showing Heckman's line, Kewley's line and Kauffmann's line.
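The slides do not give the equations of these lines. As a hedged sketch, the code below uses the commonly quoted forms of the Kewley et al. (2001) and Kauffmann et al. (2003) curves in the log([NII]/Hα) versus log([OIII]/Hβ) plane to assign an object to a region of the BPT plot. The coefficients and the region names are assumptions brought in for illustration, and Heckman's line (used to split Seyferts from LINERs) is deliberately left out.

```python
def kewley_line(x):
    """Assumed Kewley et al. (2001) curve; x = log10([NII]/Halpha), x < 0.47."""
    return 0.61 / (x - 0.47) + 1.19

def kauffmann_line(x):
    """Assumed Kauffmann et al. (2003) curve; x = log10([NII]/Halpha), x < 0.05."""
    return 0.61 / (x - 0.05) + 1.3

def bpt_region(x, y):
    """Classify a point with x = log10([NII]/Halpha), y = log10([OIII]/Hbeta).

    Region names are illustrative: above Kewley's line -> "AGN",
    between the two lines -> "mixed", below Kauffmann's line -> "star-forming".
    Points to the right of a curve's asymptote count as above that curve.
    """
    if x >= 0.47 or y > kewley_line(x):
        return "AGN"
    if x >= 0.05 or y > kauffmann_line(x):
        return "mixed"
    return "star-forming"

# Illustrative coordinates only, not catalogue values.
regions = [bpt_region(0.0, 1.0), bpt_region(-0.2, 0.0), bpt_region(-0.5, -0.5)]
```

The "AGN" and "mixed" regions are the kind of labels that a Base of Knowledge built from the BPT plot would assign to training objects.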

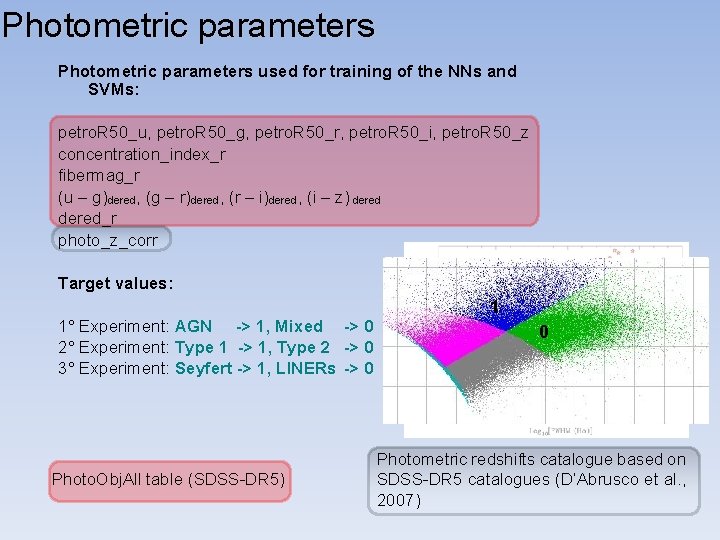

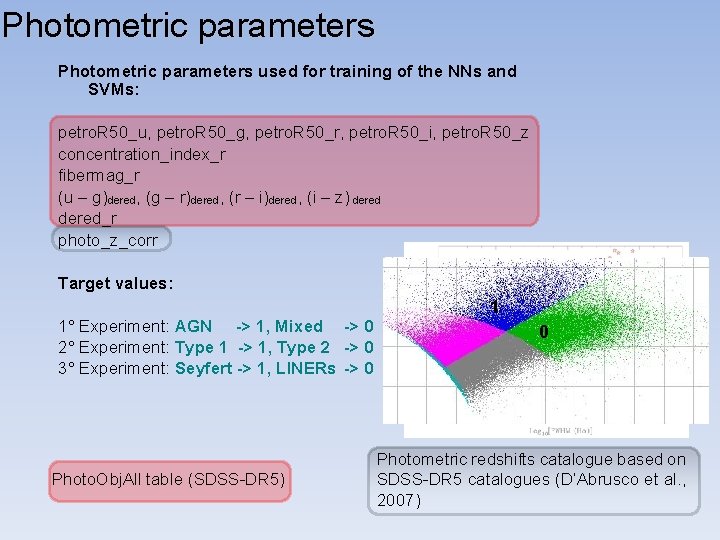

Photometric parameters used for training of the NNs and SVMs:

petroR50_u, petroR50_g, petroR50_r, petroR50_i, petroR50_z

concentration_index_r

fibermag_r

(u – g)dered, (g – r)dered, (r – i)dered, (i – z)dered

dered_r

photo_z_corr

Sources: PhotoObjAll table (SDSS-DR5); photometric redshifts catalogue based on SDSS-DR5 catalogues (D’Abrusco et al., 2007).

Target values:

Experiment 1: AGN -> 1, Mixed -> 0

Experiment 2: Type 1 -> 1, Type 2 -> 0

Experiment 3: Seyfert -> 1, LINERs -> 0

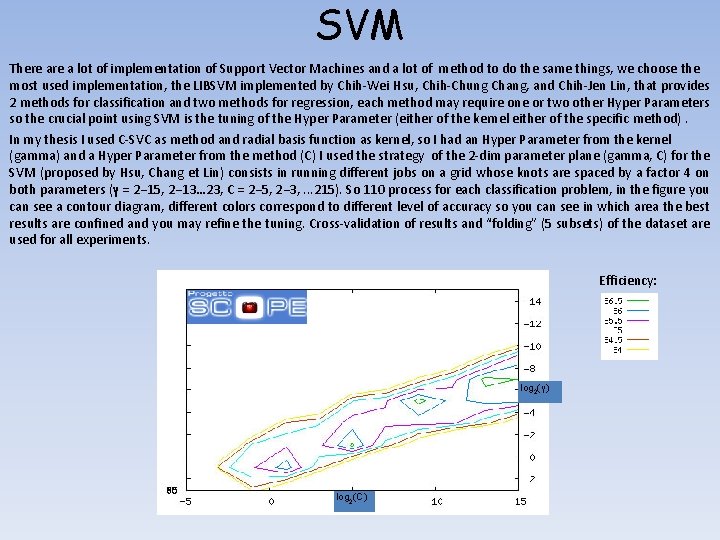

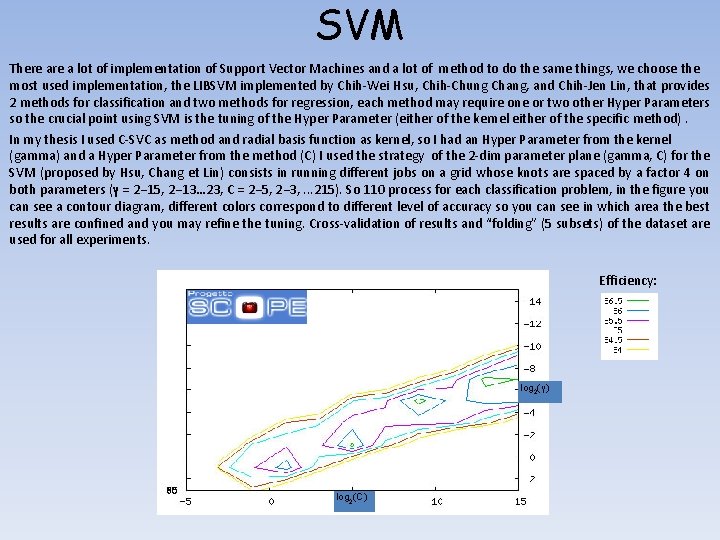

SVM

There are many implementations of Support Vector Machines and many methods to do the same thing. We chose the most widely used implementation, LIBSVM by Chih-Wei Hsu, Chih-Chung Chang, and Chih-Jen Lin, which provides two methods for classification and two methods for regression. Each method may require one or two additional hyperparameters, so the crucial point in using SVM is the tuning of the hyperparameters (both those of the kernel and those of the specific method). In my thesis I used C-SVC as the method and the radial basis function as the kernel, so I had one hyperparameter from the kernel (gamma) and one from the method (C).

I used the strategy proposed by Hsu, Chang and Lin for the two-dimensional parameter plane (gamma, C): it consists in running different jobs on a grid whose knots are spaced by a factor of 4 in both parameters (γ = 2⁻¹⁵, 2⁻¹³, …, 2³; C = 2⁻⁵, 2⁻³, …, 2¹⁵). That makes 110 processes for each classification problem. The figure shows a contour diagram in which different colors correspond to different levels of accuracy, so you can see in which area the best results are confined and refine the tuning there. Cross-validation of results and “folding” (5 subsets) of the dataset are used for all experiments.

Figure: efficiency as a function of log2(γ) and log2(C).
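The grid described above is easy to write down explicitly. The sketch below builds the knots and a simple 5-fold split in plain Python. It does not call LIBSVM: the callback `cv_accuracy(gamma, C)` passed to `best_knot` is a hypothetical placeholder for "train a C-SVC with an RBF kernel and return its cross-validated accuracy", and the fold assignment (every fifth sample) is an illustrative choice.

```python
from itertools import product

# Exponents of 2: a step of 2 in the exponent is a factor of 4 in the parameter.
gamma_exponents = list(range(-15, 4, 2))   # -15, -13, ..., 3  (10 values)
c_exponents = list(range(-5, 16, 2))       # -5, -3, ..., 15   (11 values)

# Every (gamma, C) knot of the grid: 10 * 11 = 110 jobs.
grid = [(2.0 ** g, 2.0 ** c) for g, c in product(gamma_exponents, c_exponents)]

def five_fold_indices(n_samples, n_folds=5):
    """Return one (train, validation) pair of index lists per fold."""
    folds = []
    for k in range(n_folds):
        validation = [i for i in range(n_samples) if i % n_folds == k]
        train = [i for i in range(n_samples) if i % n_folds != k]
        folds.append((train, validation))
    return folds

def best_knot(knots, cv_accuracy):
    """Return the knot with the highest score.

    cv_accuracy(gamma, C) is a hypothetical callback that trains and
    cross-validates one SVM and returns its accuracy.
    """
    return max(knots, key=lambda knot: cv_accuracy(*knot))
```

Once the best area of the grid is known, the same functions can be reused on a finer grid (smaller exponent steps) restricted to that area.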

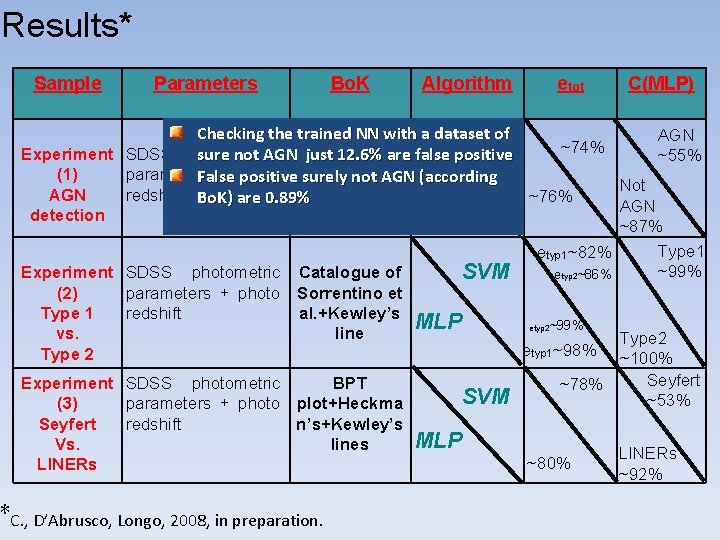

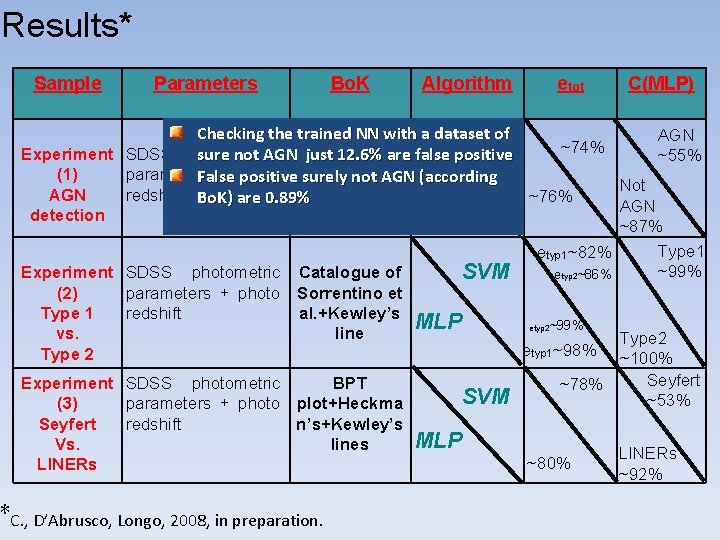

Results*

Columns: Sample, Parameters, BoK, Algorithm, etot, C(MLP).

Experiment (1), AGN detection (AGN vs. Not AGN). Parameters: SDSS photometric parameters + photo redshift. BoK: BPT plot + Kewley’s line.

Experiment (2), Type 1 vs. Type 2. Parameters: SDSS photometric parameters + photo redshift. BoK: catalogue of Sorrentino et al. + Kewley’s line.

Experiment (3), Seyfert vs. LINERs. Parameters: SDSS photometric parameters + photo redshift. BoK: BPT plot + Heckman’s + Kewley’s lines.

Algorithm, etot and C(MLP) entries:

AGN ~74% SVM ~55% Not AGN ~76% MLP

SVM MLP ~87% Type 1 etyp1 ~82% ~99% etyp2 ~86% etyp2 ~99% SVM Type 2 etyp1 ~98% ~100%

Seyfert ~78% ~53% MLP ~80% LINERs ~92%

Checking the trained NN with a dataset of sure not AGN, just 12.6% are false positives. False positives among surely not AGN (according to the BoK) are 0.89%.

*C., D’Abrusco, Longo, 2008, in preparation.

Ongoing work: improving AGN classification

AGN classification can be refined by:

1. Improving the accuracy of the photometric parameters by deriving them directly from the images (in coll. with R. De Carvalho and F. La Barbera).

2. Improving the effectiveness of the separation between different families by using better spectroscopic indicators, i.e. using a better BoK (in coll. with P. Rafanelli and S. Ciroi).

The improvement of the Base of Knowledge can be accomplished not only by enhancing the quality of the spectroscopic classification, but also by enlarging the wavelength range from which the BoK is extracted. This is a possible approach to connect different types of AGNs.