Summarization and Personal Information Management Carolyn Penstein Rosé

- Slides: 30

Summarization and Personal Information Management Carolyn Penstein Rosé Language Technologies Institute/ Human-Computer Interaction Institute

Announcements

- Questions?
- Homework 3 technically due today
  - Problems with homework assignment?
- Plan for Today
  - Nenkova et al., 2006
  - Li et al., 2006
  - Yang et al., 2007
- Student presentation by Xiaonan Zhang

Common Themes

- Evaluating alternative relevance measures
  - Some evaluations more solid than others
- Avoiding redundancy
- Focus on events as a unit of information
  - Difference in granularity: what is an event?

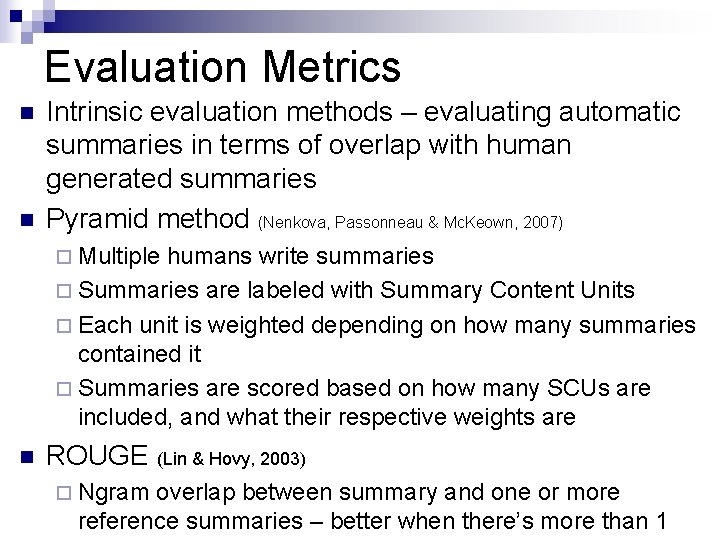

Evaluation Metrics

- Intrinsic evaluation methods: evaluating automatic summaries in terms of overlap with human-generated summaries
- Pyramid method (Nenkova, Passonneau & McKeown, 2007)
  - Multiple humans write summaries
  - Summaries are labeled with Summary Content Units (SCUs)
  - Each unit is weighted depending on how many summaries contained it
  - Summaries are scored based on how many SCUs are included, and what their respective weights are
- ROUGE (Lin & Hovy, 2003)
  - N-gram overlap between summary and one or more reference summaries; better when there is more than one
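
A minimal sketch of the two intrinsic metrics on this slide, under simplifying assumptions: SCUs are supplied as sets of labels per human summary (real pyramid annotation is done by hand), the pyramid score is normalized by the best weight achievable with the same number of SCUs, and ROUGE is reduced to plain n-gram recall over whitespace tokens. All function names are hypothetical.

```python
from collections import Counter

def scu_weights(human_scus):
    """Weight each SCU by the number of human summaries that contain it."""
    weights = Counter()
    for scus in human_scus:
        weights.update(set(scus))
    return weights

def pyramid_score(candidate_scus, human_scus):
    """Observed SCU weight divided by the best weight achievable with as many SCUs."""
    weights = scu_weights(human_scus)
    candidate = set(candidate_scus)
    observed = sum(weights[s] for s in candidate)
    best = sum(sorted(weights.values(), reverse=True)[:len(candidate)])
    return observed / best if best else 0.0

def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n_recall(candidate, references, n=1):
    """Clipped n-gram matches summed over references, divided by reference n-gram count."""
    cand = ngrams(candidate.lower().split(), n)
    matched = total = 0
    for ref in references:
        ref_counts = ngrams(ref.lower().split(), n)
        matched += sum(min(c, cand[g]) for g, c in ref_counts.items())
        total += sum(ref_counts.values())
    return matched / total if total else 0.0
```

Passing several references to `rouge_n_recall` pools their n-grams, which is one simple way to use more than one reference summary.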

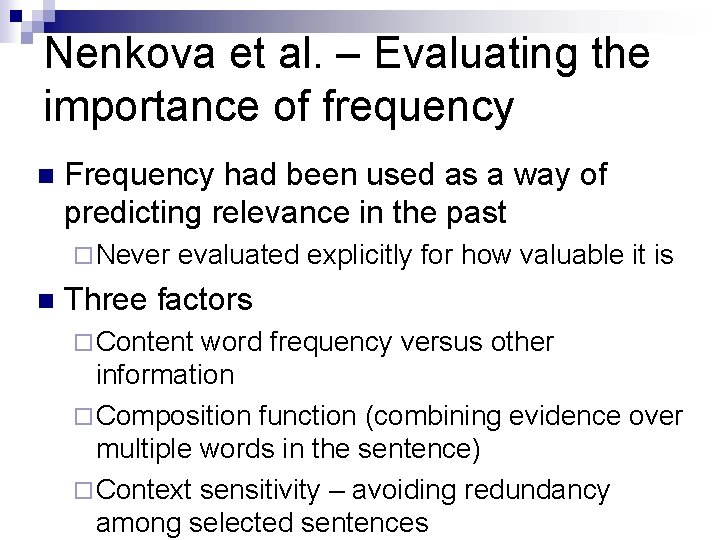

Nenkova et al.: Evaluating the importance of frequency

- Frequency had been used as a way of predicting relevance in the past
  - Never evaluated explicitly for how valuable it is
- Three factors
  - Content word frequency versus other information
  - Composition function (combining evidence over multiple words in the sentence)
  - Context sensitivity: avoiding redundancy among selected sentences
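
The three factors can be illustrated with a small frequency-driven sentence selector. This is a sketch under stated assumptions, not the exact system evaluated in the paper: the stopword list is a toy one, the composition function is the average word probability, and context sensitivity is approximated by squaring the probability of words already covered.

```python
from collections import Counter

# Toy stopword list (assumption); a real system would use a fuller one.
STOPWORDS = {"the", "a", "an", "of", "in", "to", "and", "is", "was", "by", "on"}

def content_words(sentence):
    """Lowercased whitespace tokens with stopwords removed."""
    return [w for w in sentence.lower().split() if w not in STOPWORDS]

def summarize(sentences, max_sentences=2):
    # Factor 1: content word frequency, as a unigram probability over the input.
    words = [w for s in sentences for w in content_words(s)]
    prob = {w: c / len(words) for w, c in Counter(words).items()}

    def score(sentence):
        # Factor 2: composition function, here the average word probability.
        cw = content_words(sentence)
        return sum(prob[w] for w in cw) / len(cw) if cw else 0.0

    remaining = list(sentences)
    summary = []
    while remaining and len(summary) < max_sentences:
        best = max(remaining, key=score)
        summary.append(best)
        remaining.remove(best)
        # Factor 3: context sensitivity. Squaring lowers the weight of covered words,
        # so near-duplicates of a chosen sentence score worse on the next pass.
        for w in set(content_words(best)):
            prob[w] = prob[w] ** 2
    return summary
```

With the inputs "storm hits coast", "storm hits coast again" and "rescue teams arrive", the second pick is "rescue teams arrive" rather than the near-duplicate, because the words of the first pick have been down-weighted.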

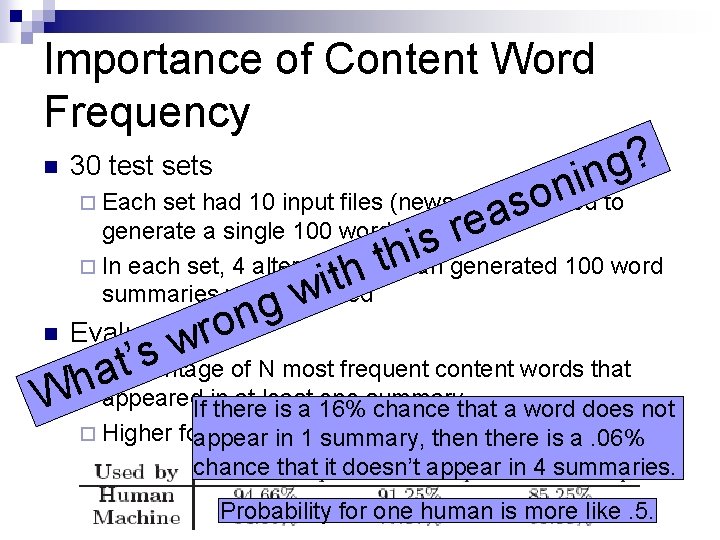

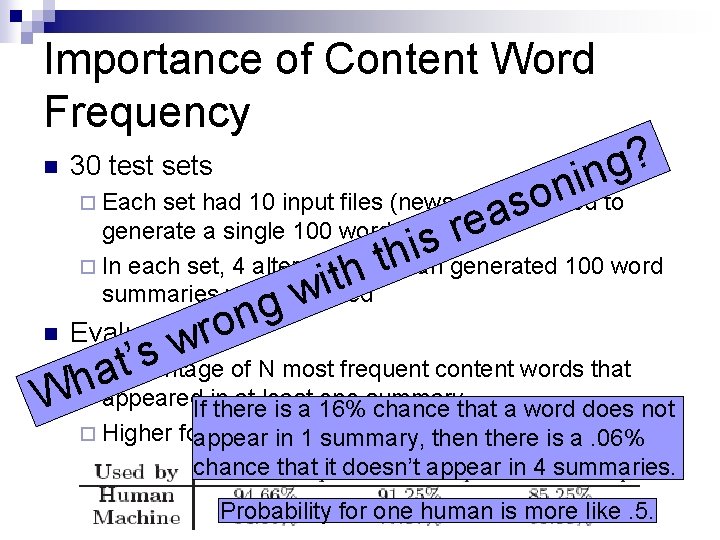

Importance of Content Word Frequency

- 30 test sets
  - Each set had 10 input files (news stories) used to generate a single 100-word summary
  - In each set, 4 alternative human-generated 100-word summaries were included
- Evaluations
  - Percentage of the N most frequent content words that appeared in at least one summary
  - Higher for humans

What's wrong with this reasoning? If there is a 16% chance that a word does not appear in 1 summary, then there is a 0.06% chance that it doesn't appear in 4 summaries. Probability for one human is more like 0.5.
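
The arithmetic in the annotation can be checked directly. The sketch below assumes, as the quoted reasoning does, that the four summaries behave like independent draws; the function name is hypothetical.

```python
def prob_missing_from_all(p_missing_one, n_summaries=4):
    """Chance a word is absent from every summary, ASSUMING summaries are independent."""
    return p_missing_one ** n_summaries

for p in (0.16, 0.5):
    print(f"miss one: {p:.2f} -> miss all four: {prob_missing_from_all(p):.4%}")
```

Under that assumption a 16% miss rate gives roughly 0.066% for four summaries, while a 50% miss rate gives 6.25%, which is about a hundred times larger.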

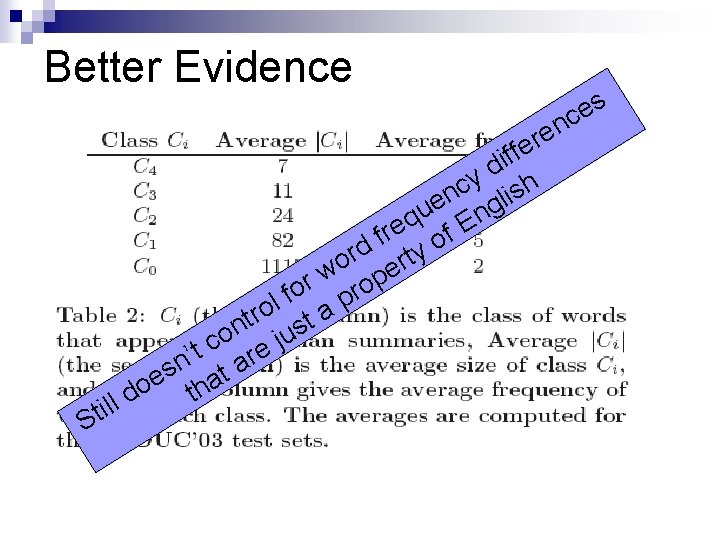

Better Evidence

Still doesn't control for word frequency differences that are just a property of English

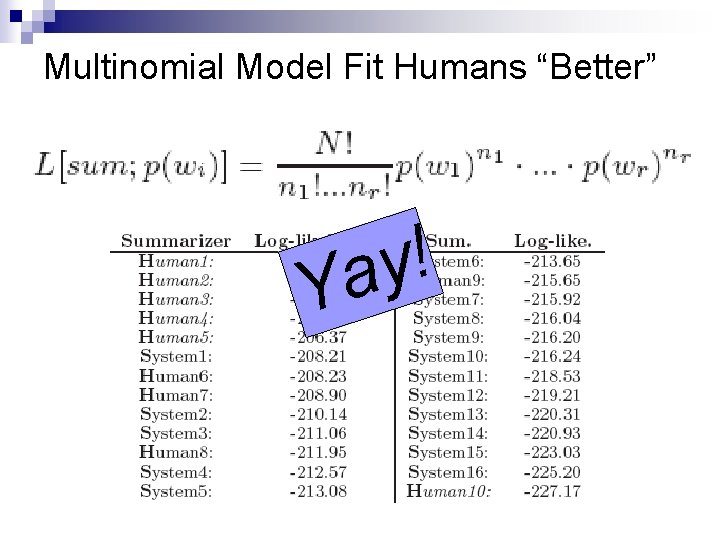

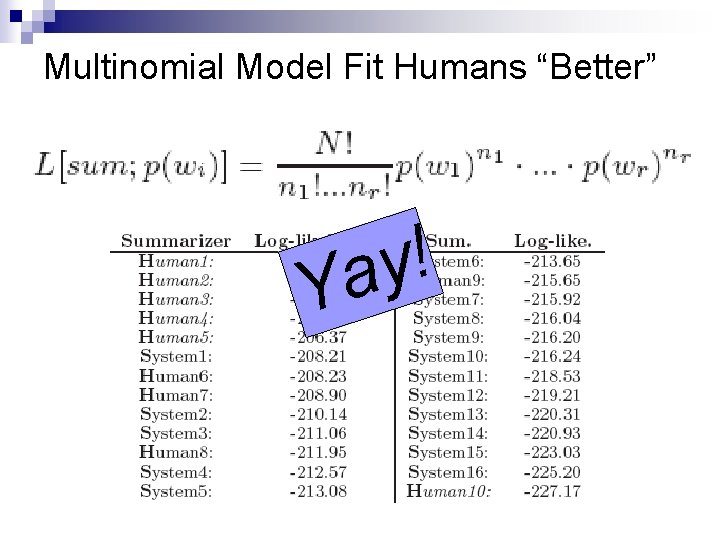

Multinomial Model Fit

- Humans “Better”
- Yay!

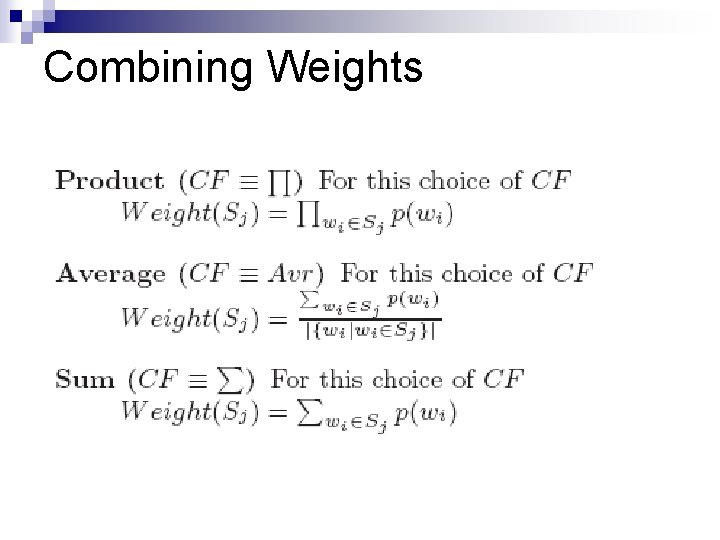

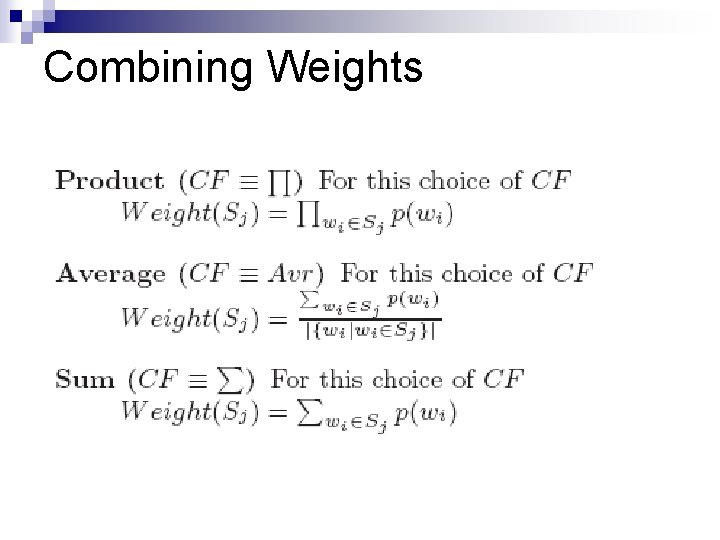

Combining Weights

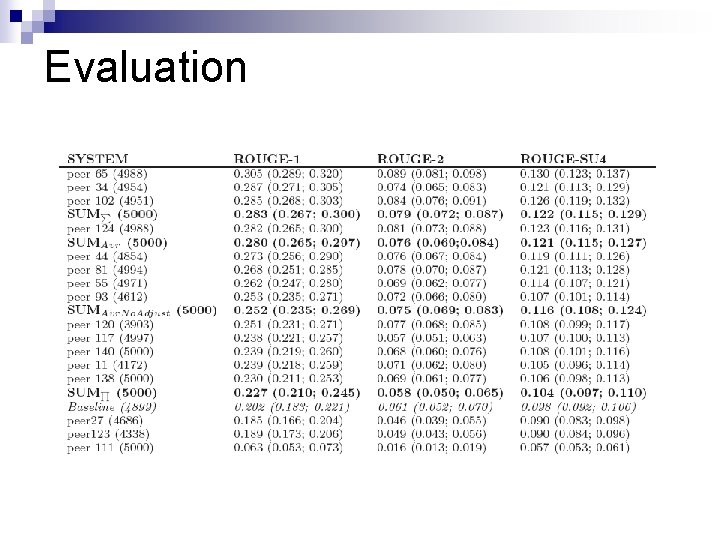

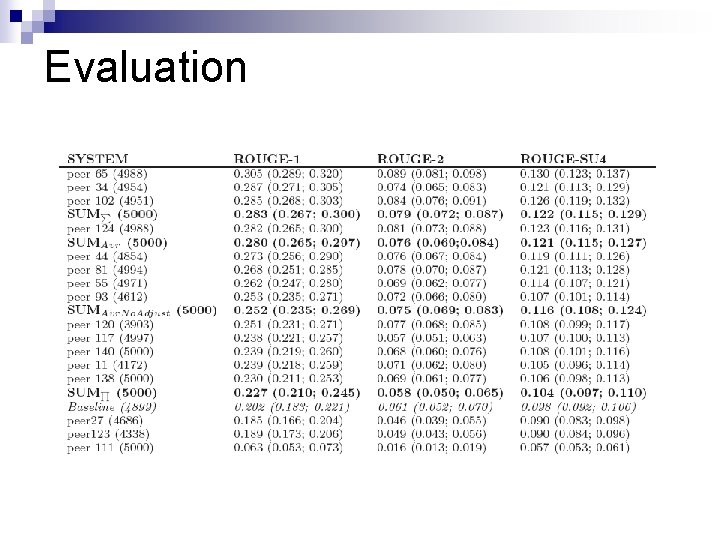

Evaluation

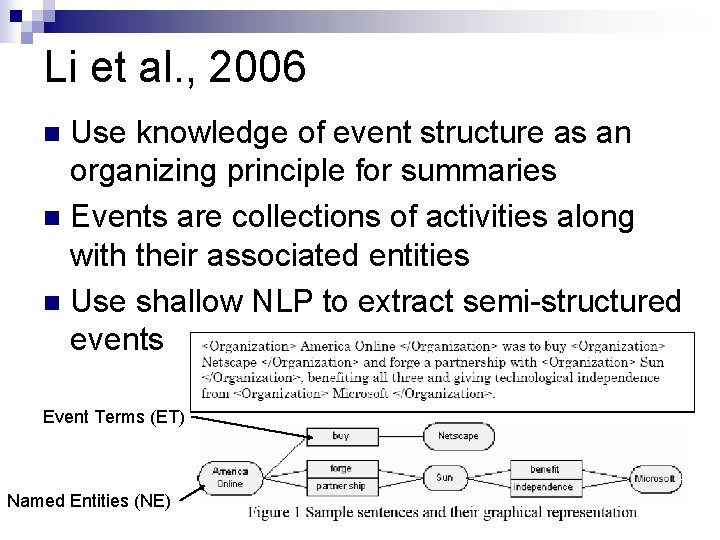

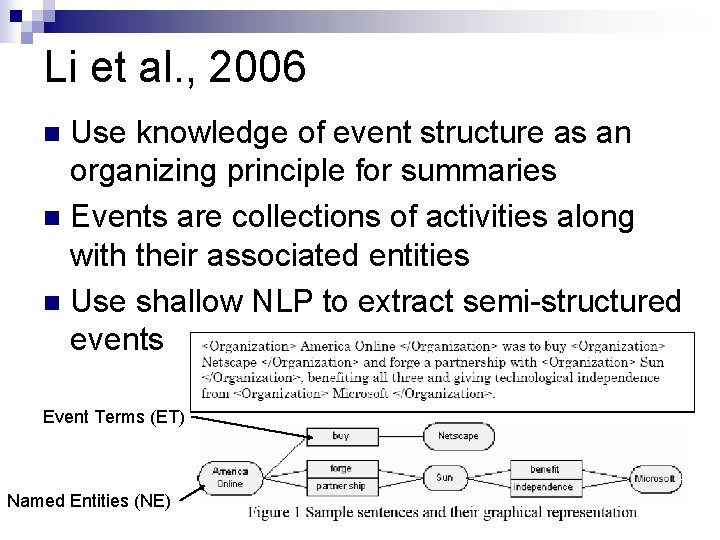

Li et al., 2006

- Use knowledge of event structure as an organizing principle for summaries
- Events are collections of activities along with their associated entities
- Use shallow NLP to extract semi-structured events
  - Event Terms (ET)
  - Named Entities (NE)

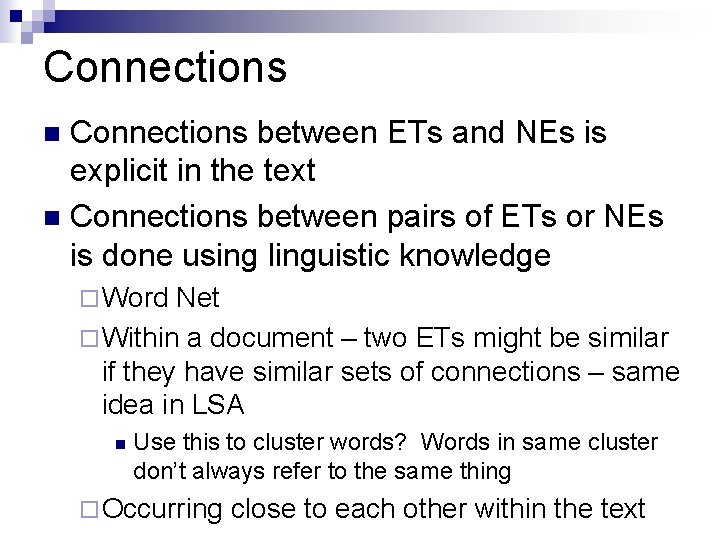

- Connections between ETs and NEs are explicit in the text
- Connections between pairs of ETs or NEs are made using linguistic knowledge
  - WordNet
  - Within a document: two ETs might be similar if they have similar sets of connections (same idea as in LSA)
    - Use this to cluster words? Words in the same cluster don't always refer to the same thing
  - Occurring close to each other within the text
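
One way to picture "similar sets of connections" is to compare event terms by the named entities they co-occur with. The sketch below is an illustration only: the co-occurrence counts are made up, and cosine similarity is an assumed choice of measure rather than the one used in the paper.

```python
import math

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

# Hypothetical ET -> NE co-occurrence counts taken from one document.
connections = {
    "escaped": {"Texas": 2, "convicts": 3},
    "fled": {"Texas": 1, "convicts": 2},
    "elected": {"senate": 2, "Texas": 1},
}

for other in ("fled", "elected"):
    print("escaped vs", other, round(cosine(connections["escaped"], connections[other]), 3))
```

Here "escaped" and "fled" come out far more similar than "escaped" and "elected", even though all three share the entity "Texas".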

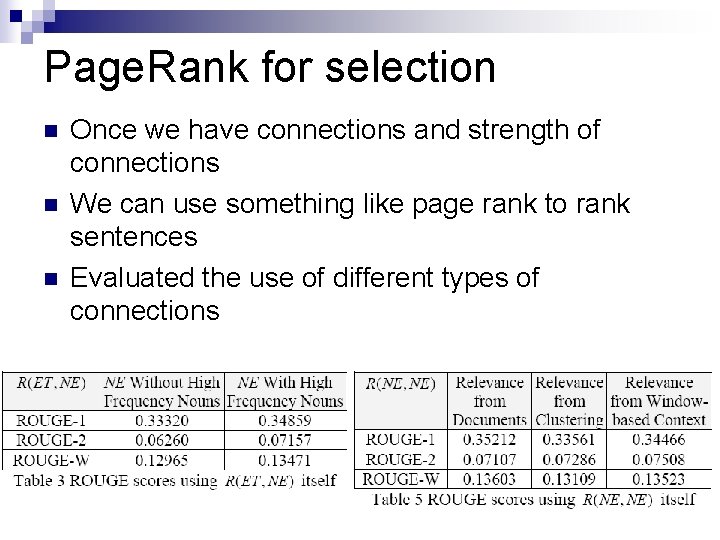

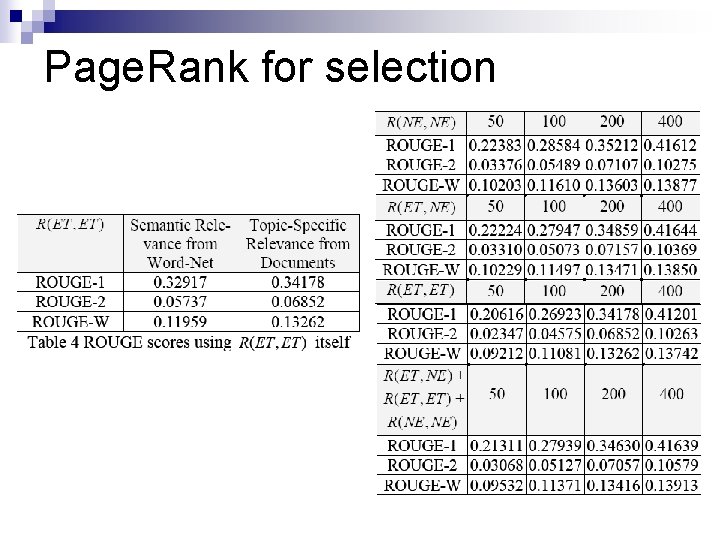

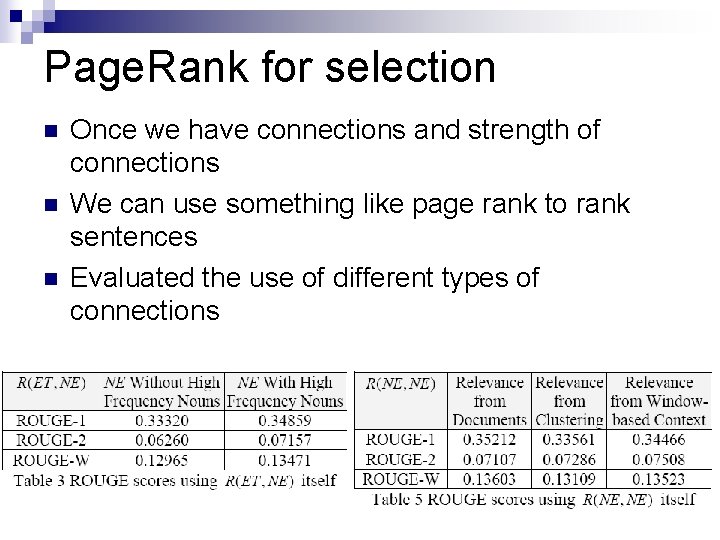

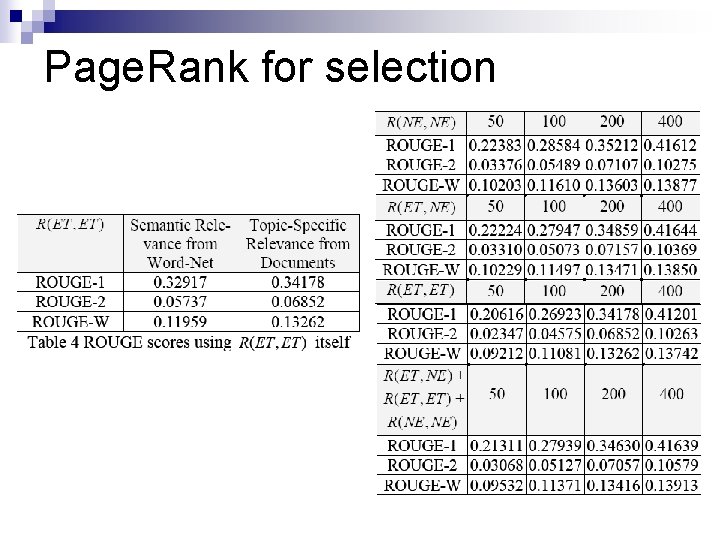

PageRank for selection

- Once we have connections and strength of connections
  - We can use something like PageRank to rank sentences
- Evaluated the use of different types of connections
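
A minimal power-iteration sketch of PageRank over a weighted connection graph. The damping factor of 0.85, the fixed iteration count, and the matrix layout are assumptions for illustration; the nodes stand for whatever units are being ranked.

```python
def pagerank(weights, damping=0.85, iterations=50):
    """weights[i][j] is the connection strength from node i to node j."""
    n = len(weights)
    rank = [1.0 / n] * n
    out = [sum(row) for row in weights]
    for _ in range(iterations):
        new = [(1.0 - damping) / n] * n
        for i in range(n):
            if out[i] == 0:
                # Dangling node: spread its rank evenly over all nodes.
                for j in range(n):
                    new[j] += damping * rank[i] / n
            else:
                # Pass rank along each edge in proportion to its strength.
                for j in range(n):
                    new[j] += damping * rank[i] * weights[i][j] / out[i]
        rank = new
    return rank

# Node 0 is connected to both other nodes, more strongly to node 1.
graph = [
    [0, 2, 1],
    [2, 0, 0],
    [1, 0, 0],
]
print(pagerank(graph))
```

The node with the most and strongest connections ends up ranked highest, which is the property used for selection.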


Utility-based Information Distillation Over Temporally Sequenced Documents

Yang, Y., Lad, A., Lao, N., Harpale, A., Kisiel, B., Rogati, M.

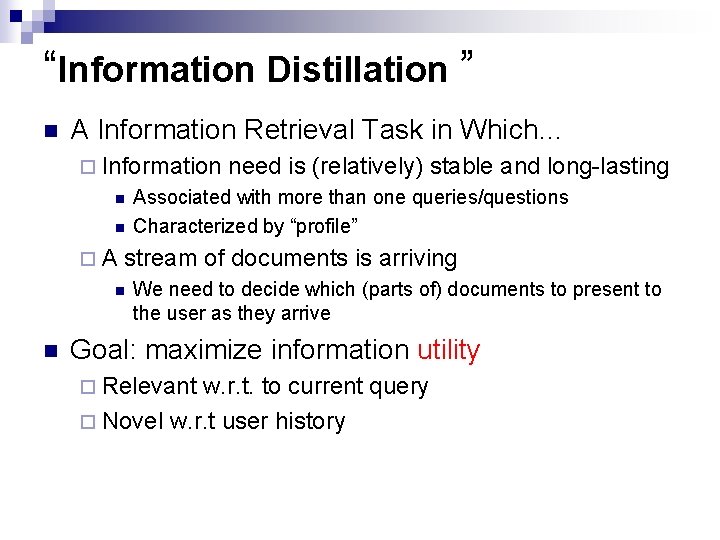

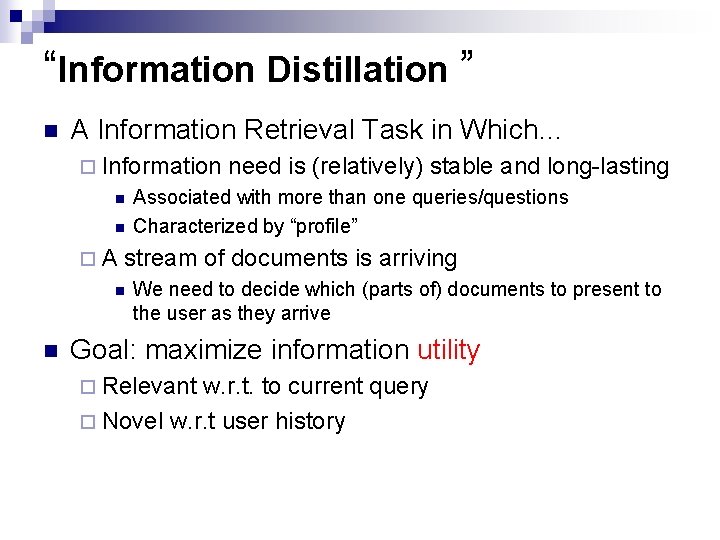

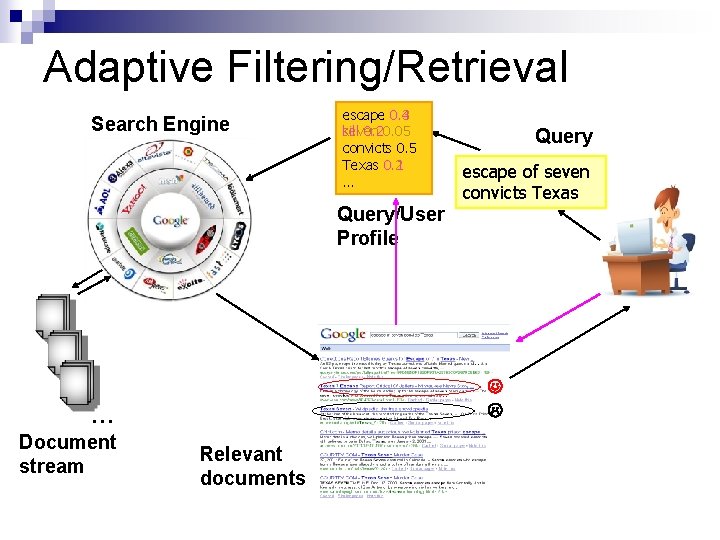

“Information Distillation”

- An Information Retrieval task in which…
  - Information need is (relatively) stable and long-lasting
    - Associated with more than one query/question
    - Characterized by a “profile”
  - A stream of documents is arriving
    - We need to decide which (parts of) documents to present to the user as they arrive
- Goal: maximize information utility
  - Relevant w.r.t. current query
  - Novel w.r.t. user history

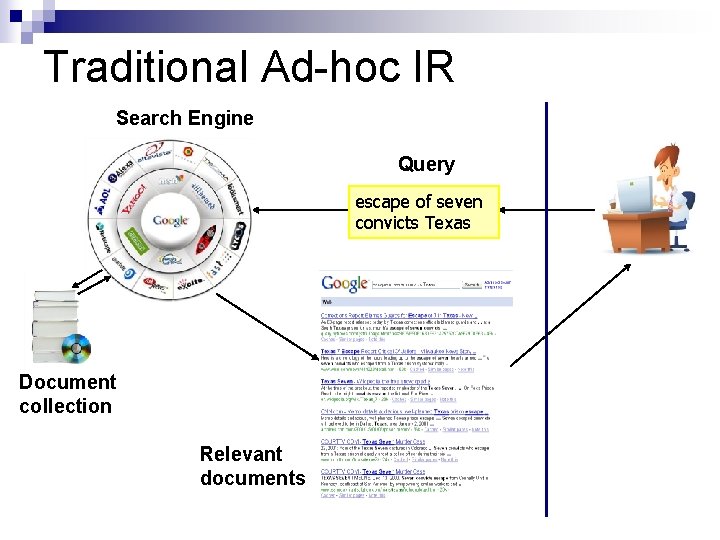

Traditional Ad-hoc IR

[Diagram: the query "escape of seven convicts Texas" goes to a search engine over a document collection, which returns relevant documents.]

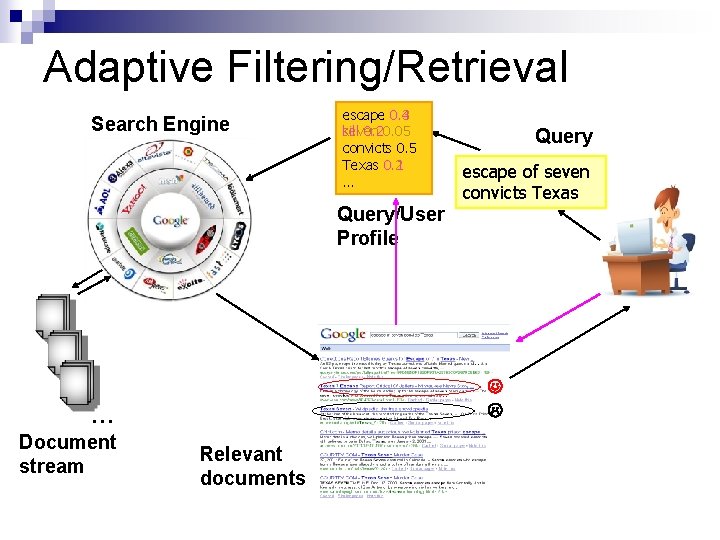

Adaptive Filtering/Retrieval

[Diagram: the query "escape of seven convicts Texas" plus a query/user profile of weighted terms (escape, seven, kill, convicts, Texas, …, with weights between 0.05 and 0.5) go to a search engine over a document stream, which returns relevant documents.]

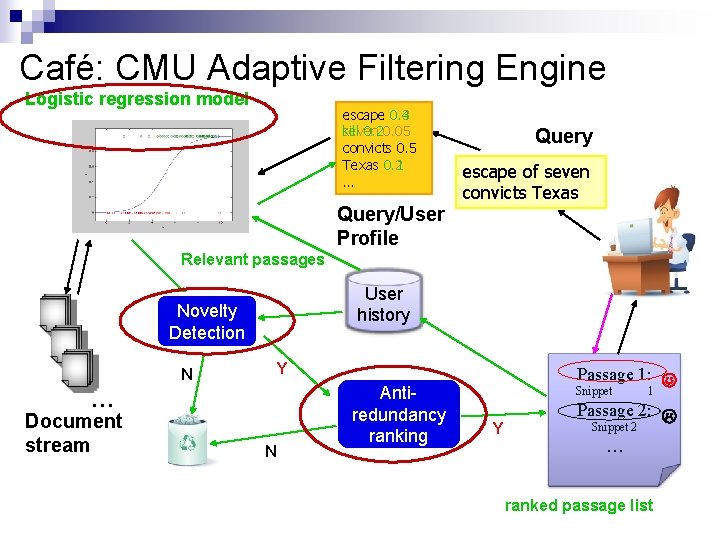

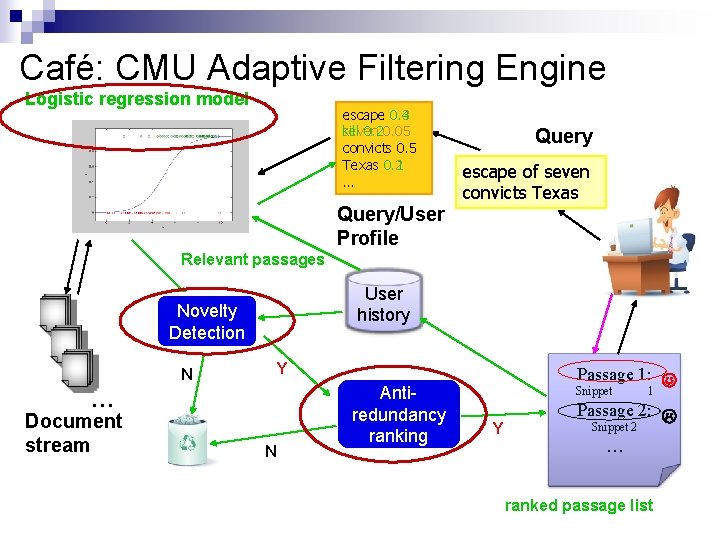

Café: CMU Adaptive Filtering Engine

[Diagram: the query "escape of seven convicts Texas" and a query/user profile of weighted terms (escape, seven, kill, convicts, Texas, …) drive a logistic regression model over the document stream. Relevant passages go through novelty detection against the user history (Y/N) and then anti-redundancy ranking (Y/N), producing a ranked passage list (Passage 1: Snippet 1, Passage 2: Snippet 2, …).]

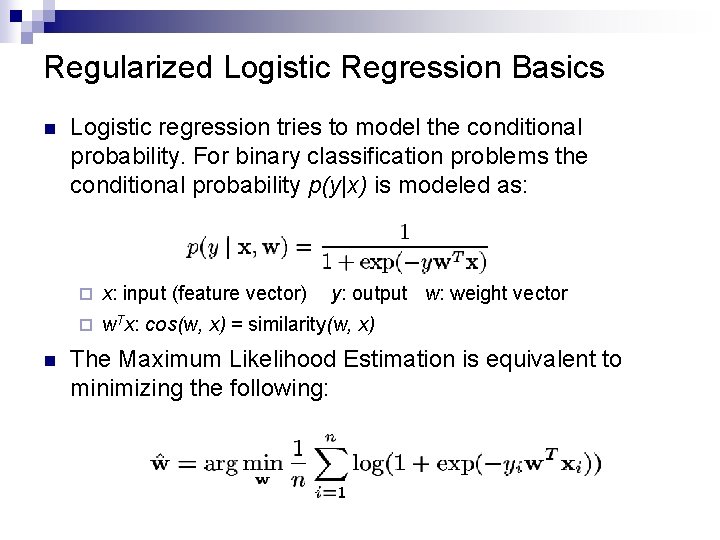

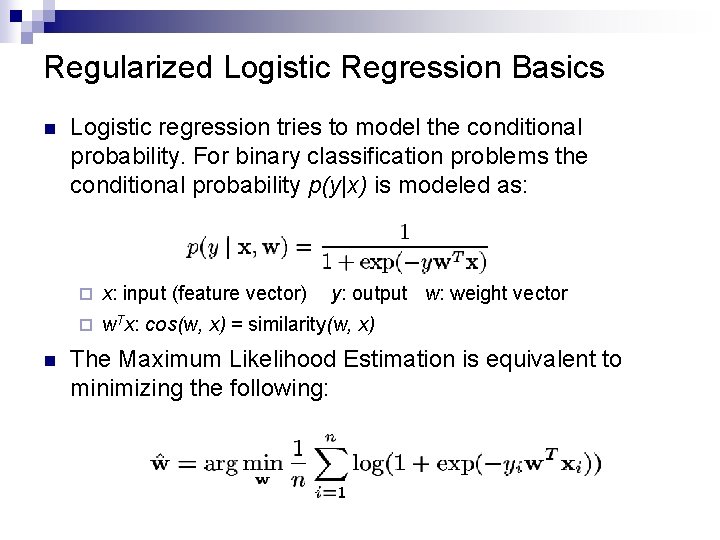

Regularized Logistic Regression Basics

- Logistic regression tries to model the conditional probability. For binary classification problems the conditional probability p(y|x) is modeled as:
  - x: input (feature vector); y: output; w: weight vector
  - w^T x: proportional to cos(w, x), i.e. similarity(w, x)
- The Maximum Likelihood Estimation is equivalent to minimizing the following:

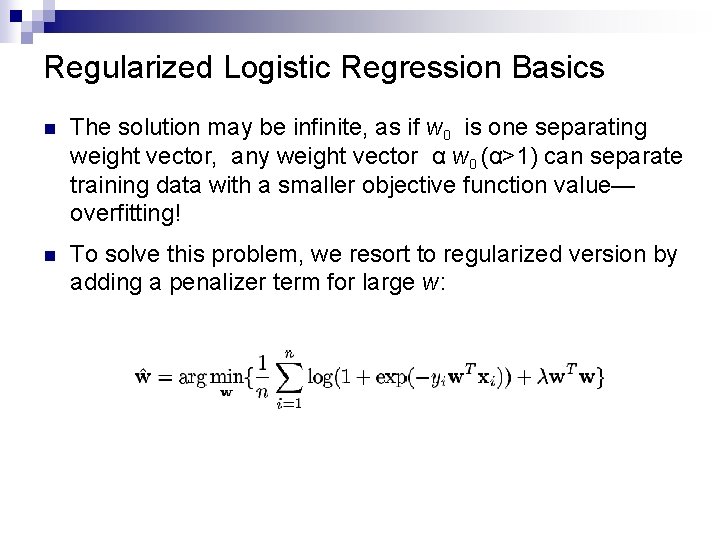

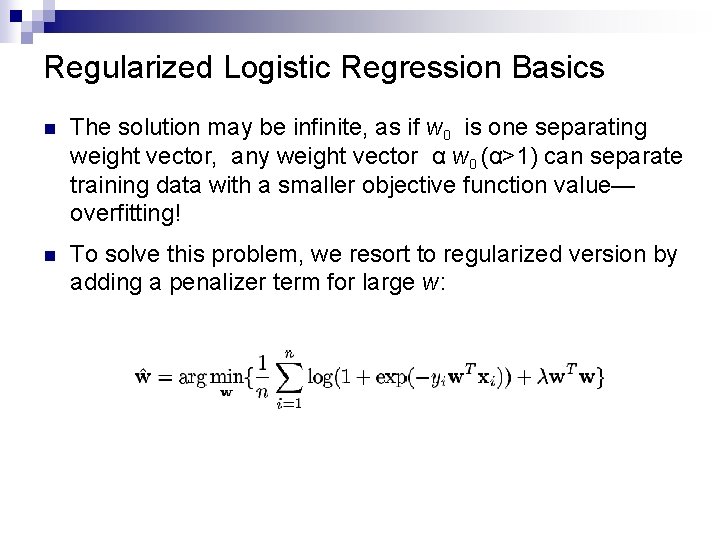

Regularized Logistic Regression Basics

- The solution may be infinite: if w_0 is one separating weight vector, any weight vector αw_0 (α > 1) also separates the training data and has a smaller objective function value. Overfitting!
- To solve this problem, we resort to a regularized version by adding a penalizer term for large w:

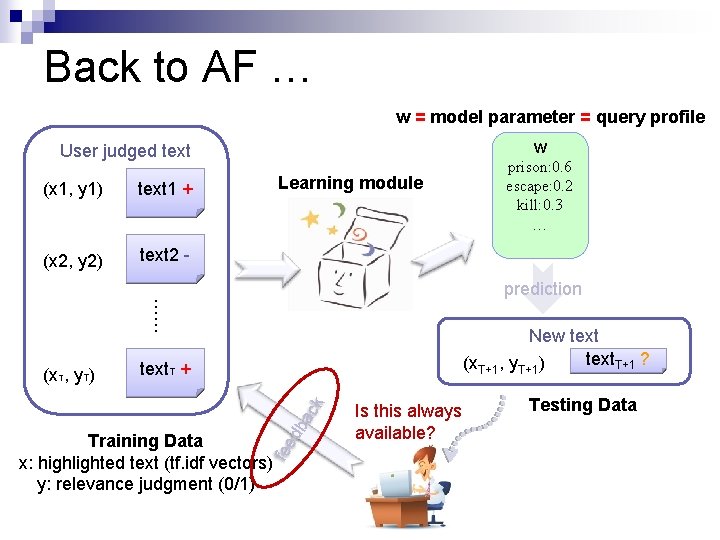

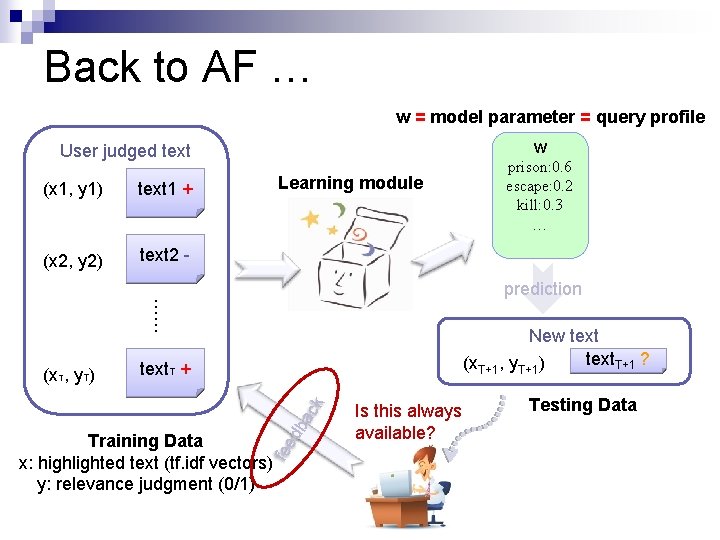
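
The scaling argument can be checked numerically. The sketch below is not the paper's exact objective: it assumes labels encoded as −1/+1, the standard logistic loss log(1 + exp(−y·wᵀx)), and an L2 penalty λ‖w‖² with a hypothetical λ = 0.1. The toy data and function names are made up for illustration.

```python
import math

def nll(w, data):
    """Negative log-likelihood with labels y in {-1, +1} (an assumed encoding)."""
    return sum(
        math.log(1.0 + math.exp(-y * sum(wi * xi for wi, xi in zip(w, x))))
        for x, y in data
    )

def regularized(w, data, lam):
    """Negative log-likelihood plus an L2 penalty lam * ||w||^2."""
    return nll(w, data) + lam * sum(wi * wi for wi in w)

# Linearly separable toy data: w0 separates it perfectly.
data = [((1.0, 0.0), 1), ((2.0, 1.0), 1), ((-1.0, 0.0), -1), ((-2.0, -1.0), -1)]
w0 = (1.0, 0.0)

for alpha in (1, 2, 4, 8):
    w = tuple(alpha * wi for wi in w0)
    print(alpha, round(nll(w, data), 4), round(regularized(w, data, lam=0.1), 4))
```

On this data the unregularized loss keeps shrinking as α grows, so it has no finite minimizer, while the penalized objective eventually rises again and therefore does.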

Back to AF …

[Diagram: a learning module holds w = model parameter = query profile (e.g. prison: 0.6, escape: 0.2, kill: 0.3, …). User-judged texts (x_1, y_1) text 1 +, (x_2, y_2) text 2 −, …, (x_T, y_T) text T + arrive as feedback and form the training data. The module then makes a prediction for a new text T+1, (x_{T+1}, y_{T+1}) = ?, which is the testing data.]

- x: highlighted text (tf.idf vectors)
- y: relevance judgment (0/1)
- Is this always available?

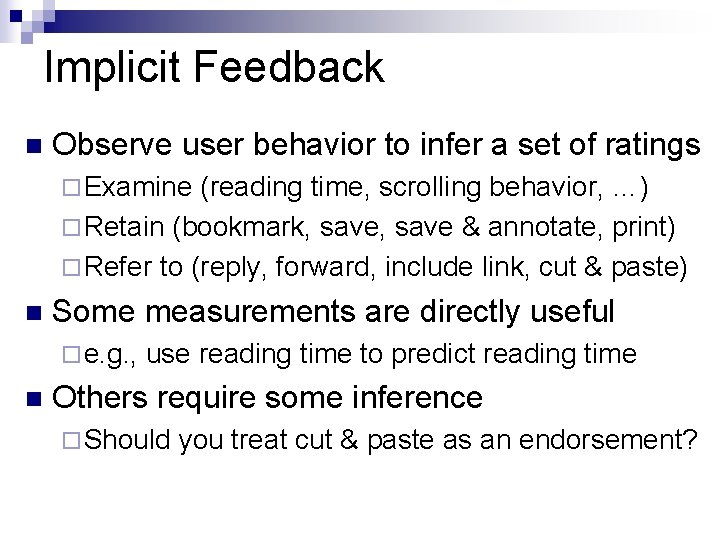

Implicit Feedback

- Observe user behavior to infer a set of ratings
  - Examine (reading time, scrolling behavior, …)
  - Retain (bookmark, save & annotate, print)
  - Refer to (reply, forward, include link, cut & paste)
- Some measurements are directly useful
  - e.g., use reading time to predict reading time
- Others require some inference
  - Should you treat cut & paste as an endorsement?

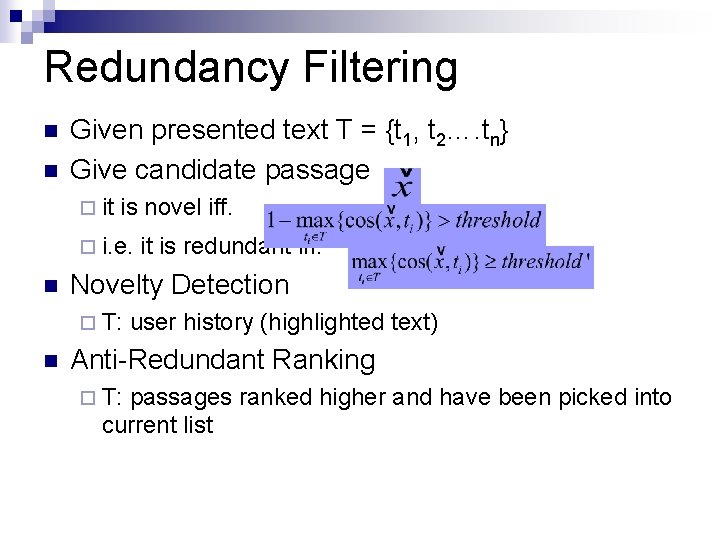

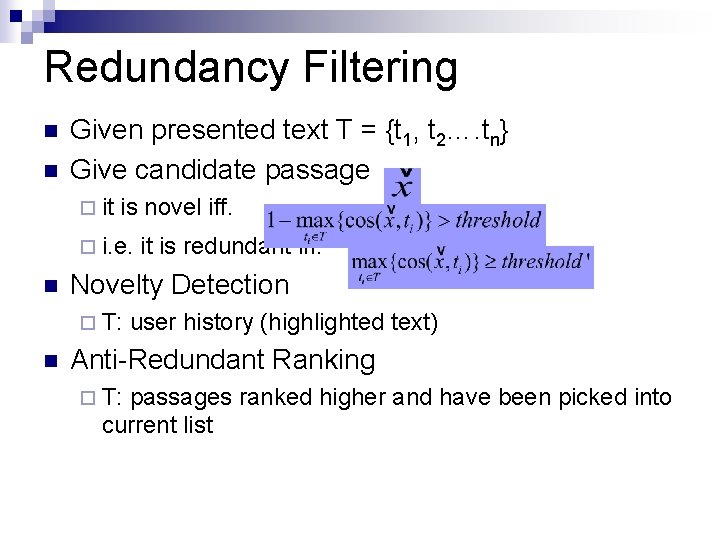

Redundancy Filtering

- Given presented text T = {t_1, t_2, …, t_n}
- Given candidate passage [equation]
  - it is novel iff [equation]
  - i.e. it is redundant iff [equation]
- Novelty Detection
  - T: user history (highlighted text)
- Anti-Redundant Ranking
  - T: passages ranked higher and have been picked into current list

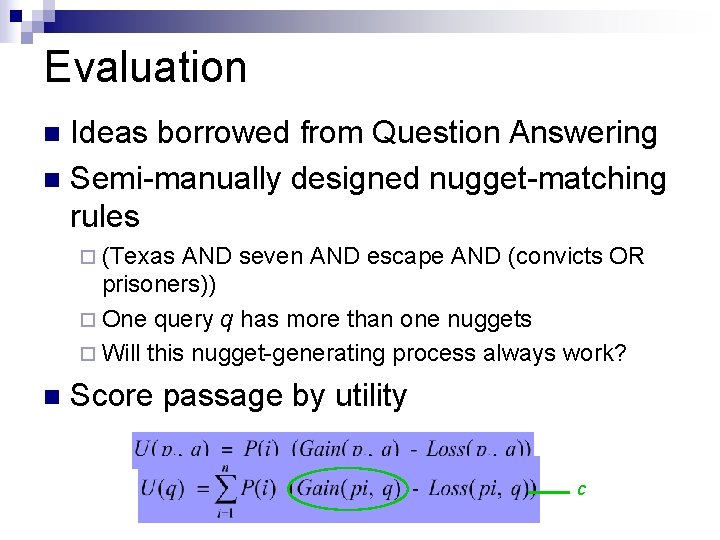

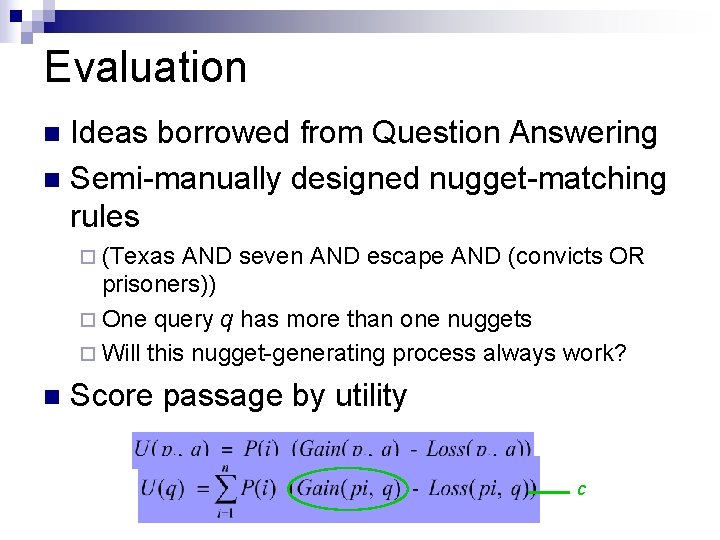
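
The two levels of redundancy filtering can be sketched as one novelty test applied against two different sets T. The condition itself is not preserved in the text above, so this sketch assumes a common form: a passage is novel when its maximum cosine similarity to every text in T stays below a threshold. Both the bag-of-words cosine and the 0.8 threshold are assumptions.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two Counter bag-of-words vectors."""
    dot = sum(v * b[k] for k, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def is_novel(candidate, presented, threshold=0.8):
    """Novel if the most similar presented text stays below the threshold."""
    cand = Counter(candidate.lower().split())
    sims = [cosine(cand, Counter(t.lower().split())) for t in presented]
    return max(sims, default=0.0) < threshold

def anti_redundant_ranking(passages, history, threshold=0.8):
    """Keep passages, in ranked order, that are novel w.r.t. history and earlier picks."""
    picked = []
    for p in passages:
        # Level 1: T is the user history. Level 2: T is what was already picked.
        if is_novel(p, history, threshold) and is_novel(p, picked, threshold):
            picked.append(p)
    return picked
```

For example, with a history containing "seven convicts escape texas prison", a ranked list that repeats that passage and then lists "police search for the escaped convicts" twice is reduced to a single copy of the police passage.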

Evaluation

- Ideas borrowed from Question Answering
- Semi-manually designed nugget-matching rules
  - (Texas AND seven AND escape AND (convicts OR prisoners))
  - One query q has more than one nugget
  - Will this nugget-generating process always work?
- Score passage by utility
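
A nugget-matching rule like the one on this slide can be evaluated mechanically. The sketch below assumes exact, case-insensitive word matching and represents the rule as nested tuples; both choices are illustrative, and the helper names are hypothetical.

```python
import re

def tokens(passage):
    """Lowercased alphanumeric word set of a passage."""
    return set(re.findall(r"[a-z0-9]+", passage.lower()))

def matches(rule, words):
    """Evaluate a nested rule: a string, or ("AND" | "OR", sub-rule, ...)."""
    if isinstance(rule, str):
        return rule.lower() in words
    op, *parts = rule
    results = (matches(p, words) for p in parts)
    return all(results) if op == "AND" else any(results)

# The rule from the slide, written as nested tuples.
RULE = ("AND", "Texas", "seven", "escape", ("OR", "convicts", "prisoners"))

print(matches(RULE, tokens("Seven prisoners escape from a Texas jail")))
print(matches(RULE, tokens("Seven prisoners escaped from a Texas jail")))
```

With exact matching the second passage fails because "escaped" is not "escape", which is one concrete way such rules can miss relevant text.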

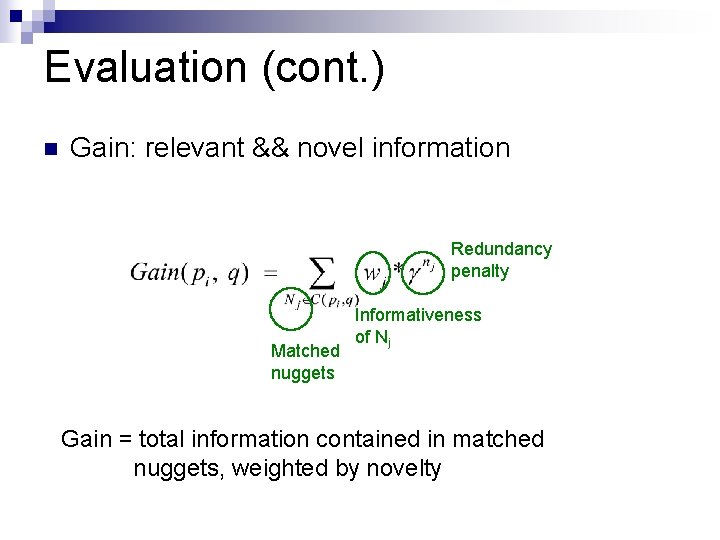

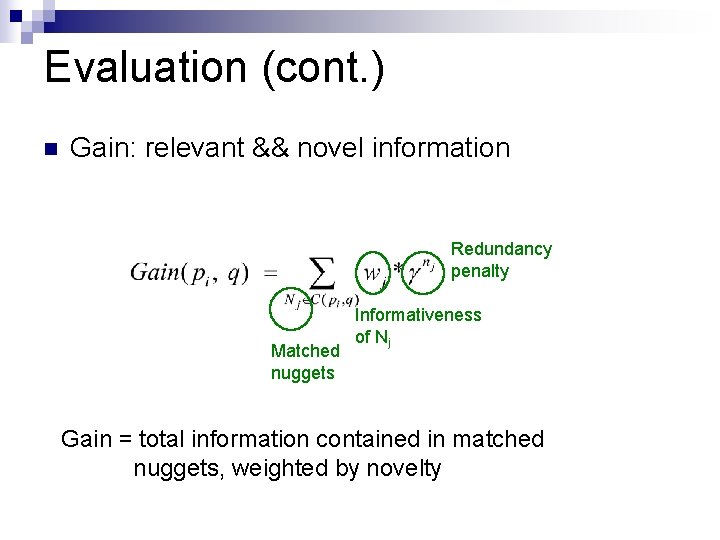

Evaluation (cont.)

- Gain: relevant && novel information
  - Formula annotations: redundancy penalty; matched nuggets; informativeness of N_j
  - Gain = total information contained in matched nuggets, weighted by novelty
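
The idea of gain as nugget information weighted by novelty can be sketched as follows. The exact formula is not preserved in the text, so the geometric discount used here (each earlier match of a nugget multiplies its credit by a factor gamma) and the value of gamma are assumptions, as are the names.

```python
def passage_gains(matched_nuggets, informativeness, gamma=0.1):
    """Gain per passage in a ranked list.

    matched_nuggets: one set of nugget ids per passage, in ranked order.
    informativeness: nugget id -> weight.
    gamma: assumed redundancy penalty in [0, 1]; each repeat multiplies the credit by gamma.
    """
    seen = {}
    gains = []
    for nuggets in matched_nuggets:
        # Credit each matched nugget, discounted by how often it was already shown.
        gain = sum(informativeness[n] * gamma ** seen.get(n, 0) for n in nuggets)
        for n in nuggets:
            seen[n] = seen.get(n, 0) + 1
        gains.append(gain)
    return gains

matched = [{"n1", "n2"}, {"n1"}, set()]
weights = {"n1": 1.0, "n2": 0.5}
print(passage_gains(matched, weights, gamma=0.5))
```

The second passage repeats nugget n1, so it earns only a discounted share of that nugget's weight, and a passage matching no nuggets earns nothing.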

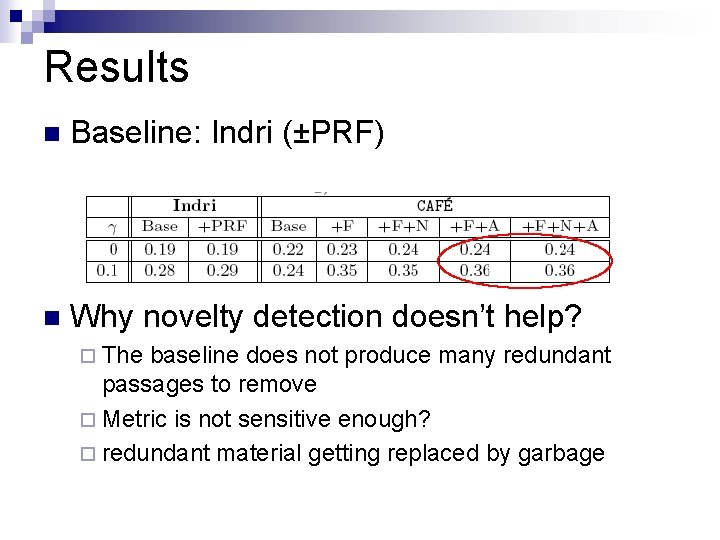

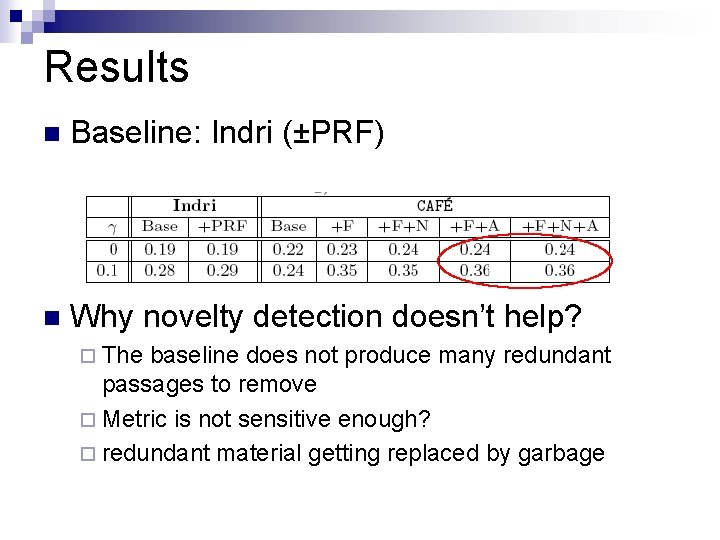

Results

- Baseline: Indri (±PRF)
- Why doesn't novelty detection help?
  - The baseline does not produce many redundant passages to remove
  - Metric is not sensitive enough?
  - Redundant material getting replaced by garbage

Summary

- Finer level of retrieval
- Adaptive filtering with flexible feedback + two-level redundancy filtering
- Answer-key based evaluation metric
- Things to think about
  - How to get user feedback: explicit vs. implicit
  - How to get novelty detection to work better

Student Quote

- Yang et al.: This paper makes two assumptions which seem in conflict with each other. The first is that "a real user may be willing to provide more informative, fine-grained feedback via highlighting some pieces of text in a retrieved document as relevant". The second is "passages not marked by the user are taken as negative examples." The consequence of these assumptions means that users are not allowed to be lazy -- users who do not highlight *all* relevant pieces of text are likely to see less accurate results than users who do.

Questions?
