Sharing Cloud Networks Lucian Popa Gautam Kumar Mosharaf

- Slides: 23

Sharing Cloud Networks. Lucian Popa, Gautam Kumar, Mosharaf Chowdhury, Arvind Krishnamurthy, Sylvia Ratnasamy, Ion Stoica. UC Berkeley

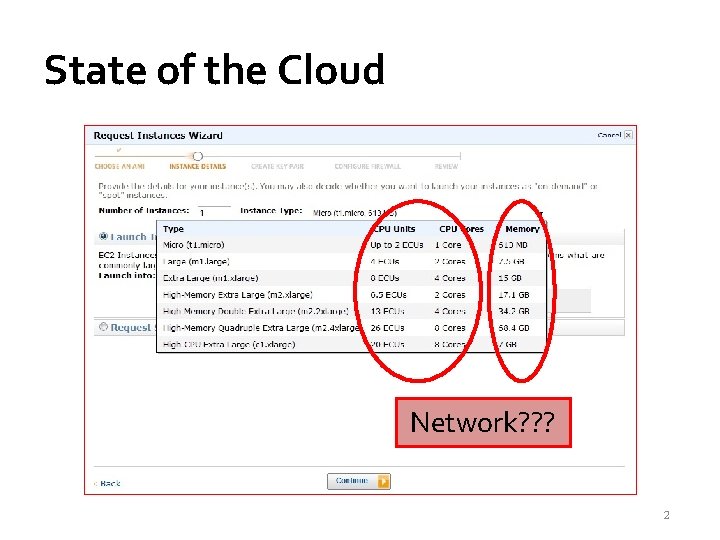

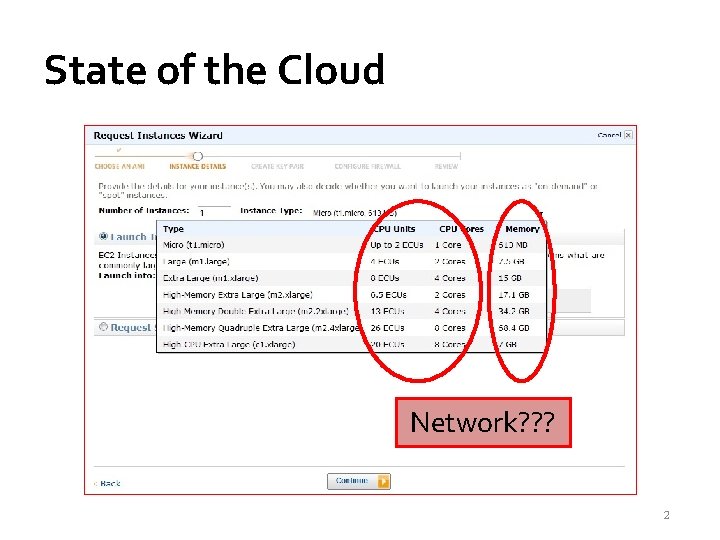

State of the Cloud Network?

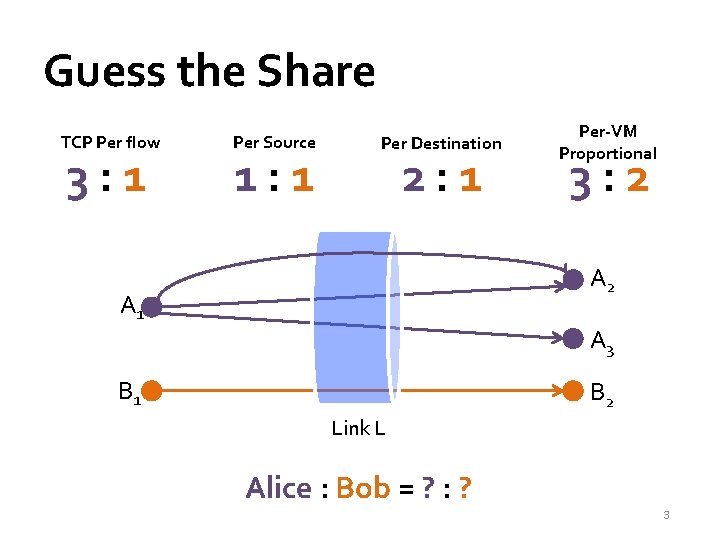

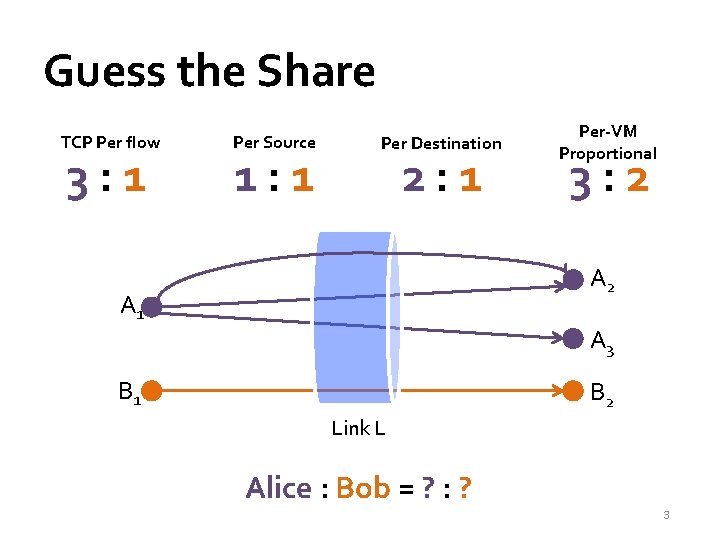

Guess the Share: on link L, Alice : Bob = ? : ?

- TCP (per flow): 3 : 1
- Per source: 1 : 1
- Per destination: 2 : 1
- Per-VM proportional: 3 : 2

Figure: Alice's VMs (A1, A3) and Bob's VMs (B1, B2) communicate across link L.
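The four answers come from counting different things on link L. The Python sketch below reproduces them under a hypothetical flow set. The slide labels only A1, A3, B1 and B2, so VM A2 and the two parallel TCP flows from A1 to A2 are assumptions, chosen to match the slide's ratios. `tenant_weights` is an illustrative helper, not code from the authors.

```python
# Hypothetical flow set chosen to match the slide's ratios: the slide labels
# only A1, A3, B1, B2, so VM A2 and the duplicated A1->A2 flow are assumptions.
FLOWS = [
    ("Alice", "A1", "A2"),  # two parallel TCP flows from A1 to A2
    ("Alice", "A1", "A2"),
    ("Alice", "A1", "A3"),
    ("Bob", "B1", "B2"),
]
VMS = {"Alice": {"A1", "A2", "A3"}, "Bob": {"B1", "B2"}}


def tenant_weights(policy: str) -> dict[str, int]:
    """Relative weight each tenant receives on link L under `policy`."""
    weights = {}
    for tenant, vms in VMS.items():
        flows = [(src, dst) for t, src, dst in FLOWS if t == tenant]
        if policy == "per_flow":            # TCP: each flow competes equally
            weights[tenant] = len(flows)
        elif policy == "per_source":        # each sending VM gets one share
            weights[tenant] = len({src for src, _ in flows})
        elif policy == "per_destination":   # each receiving VM gets one share
            weights[tenant] = len({dst for _, dst in flows})
        elif policy == "per_vm":            # proportional to the tenant's VM count
            weights[tenant] = len(vms)
        else:
            raise ValueError(f"unknown policy: {policy}")
    return weights


for policy in ("per_flow", "per_source", "per_destination", "per_vm"):
    w = tenant_weights(policy)
    print(f"{policy:16} Alice : Bob = {w['Alice']} : {w['Bob']}")
```

Each policy gives a different ratio for the same traffic: 3 : 1, 1 : 1, 2 : 1 and 3 : 2.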

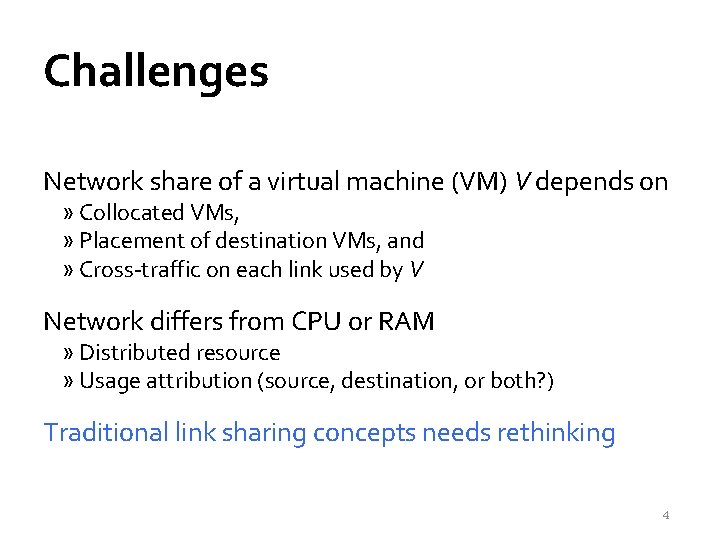

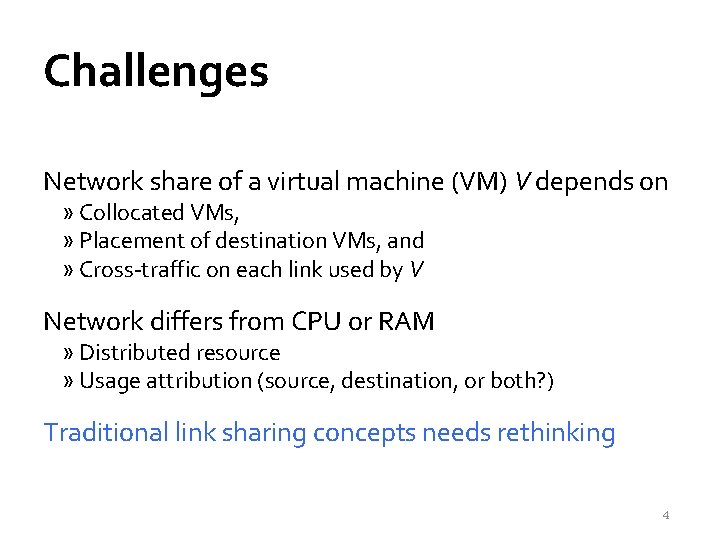

Challenges

The network share of a virtual machine (VM) V depends on:
- collocated VMs,
- placement of destination VMs, and
- cross-traffic on each link used by V.

The network differs from CPU or RAM:
- it is a distributed resource;
- usage attribution is ambiguous (source, destination, or both?).

Traditional link-sharing concepts need rethinking.

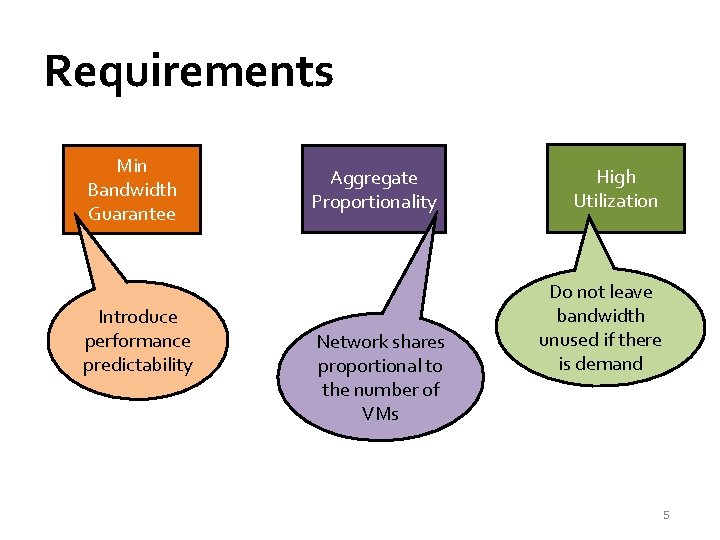

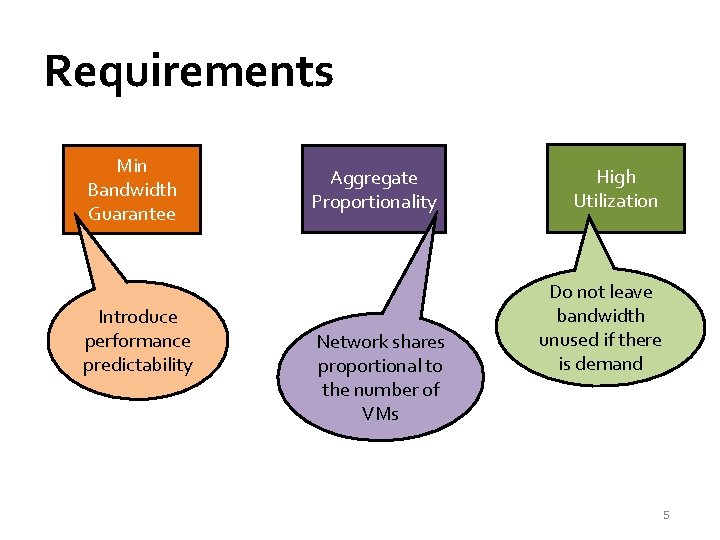

Requirements
- Min bandwidth guarantee: introduce performance predictability.
- Aggregate proportionality: network shares proportional to the number of VMs.
- High utilization: do not leave bandwidth unused if there is demand.

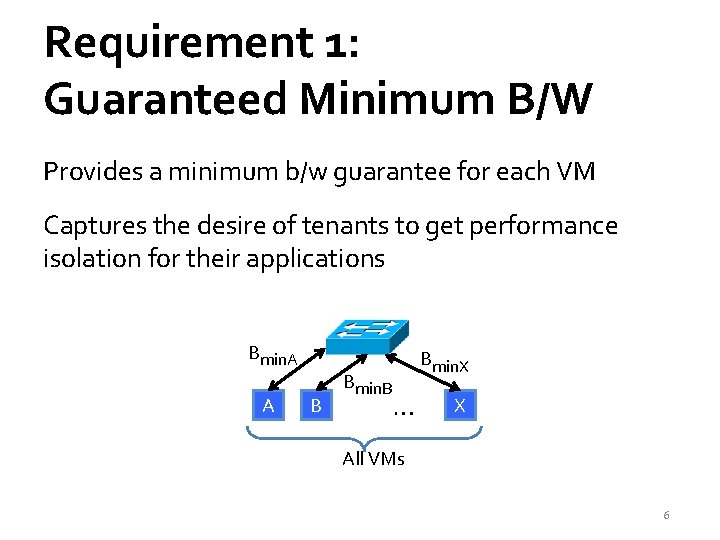

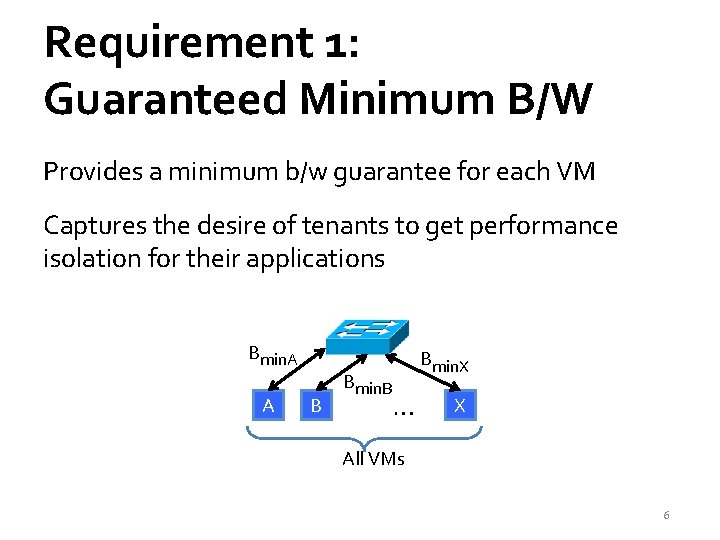

Requirement 1: Guaranteed Minimum B/W
- Provides a minimum b/w guarantee for each VM.
- Captures the desire of tenants to get performance isolation for their applications.

Figure: every VM (A, B, …, X) is guaranteed Bmin.
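Per-VM guarantees only hold if every link can carry them at the same time. A minimal admission-check sketch follows. It assumes the hose model, which the deck mentions later for OSPES: each VM may send and receive up to Bmin. Under that assumption, a link that separates m of a tenant's N VMs from the other N − m must reserve min(m, N − m) · Bmin for that tenant. `TenantOnLink`, `required_reservation` and `link_admits` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class TenantOnLink:
    """How one tenant's VMs are split by a link (hypothetical model)."""
    vms_on_one_side: int   # m: VMs on one side of the link
    total_vms: int         # N: all VMs of the tenant
    bmin: float            # per-VM guarantee, e.g. in Mbps


def required_reservation(t: TenantOnLink) -> float:
    """Hose-model bandwidth the link must reserve for this tenant.

    Traffic crossing the link is bounded both by what the m VMs on one side
    can send (m * Bmin) and by what the N - m VMs on the other side can
    receive ((N - m) * Bmin), so the smaller of the two suffices.
    """
    m, n = t.vms_on_one_side, t.total_vms
    return min(m, n - m) * t.bmin


def link_admits(tenants: list[TenantOnLink], capacity: float) -> bool:
    """True if every tenant's minimum guarantee fits on the link at once."""
    return sum(required_reservation(t) for t in tenants) <= capacity


tenants = [TenantOnLink(2, 5, 100.0), TenantOnLink(1, 4, 200.0)]
print(link_admits(tenants, 400.0))
```

In the usage example, the two tenants need 200 each, so a 400-unit link admits both and a 399-unit link would not.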

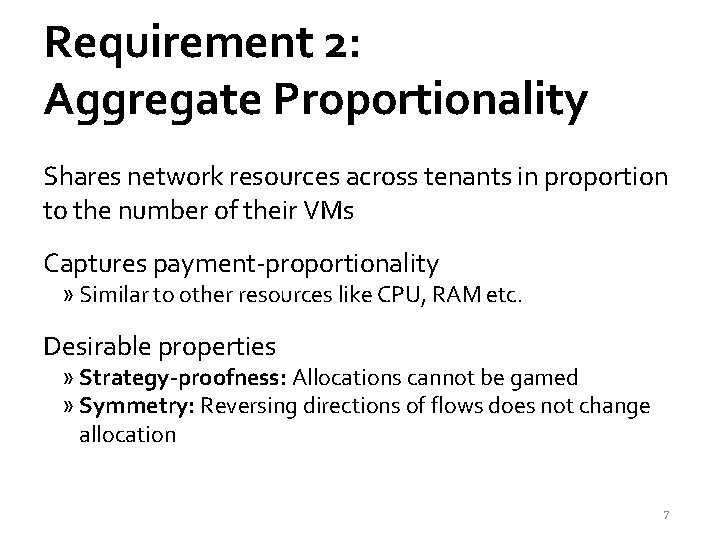

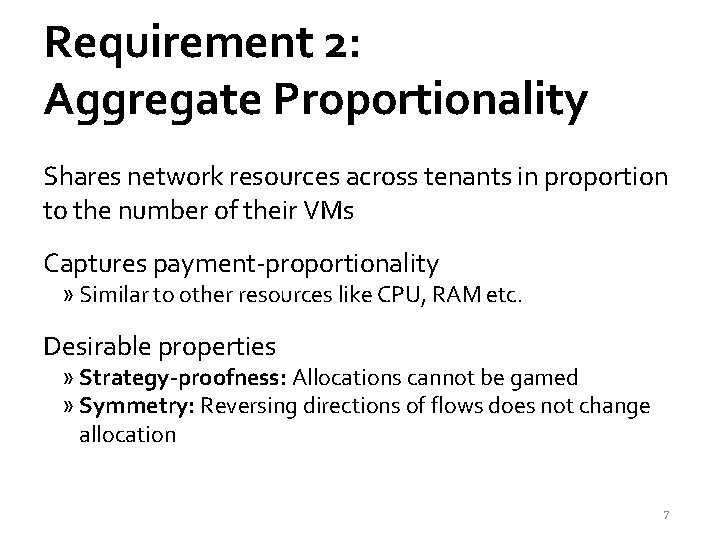

Requirement 2: Aggregate Proportionality
- Shares network resources across tenants in proportion to the number of their VMs.
- Captures payment proportionality, similar to other resources such as CPU and RAM.
- Desirable properties:
  - Strategy-proofness: allocations cannot be gamed.
  - Symmetry: reversing the direction of flows does not change the allocation.
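As a target, aggregate proportionality is simple arithmetic: tenant i should get a fraction N_i / ΣN of the bandwidth the tenants obtain network-wide. The sketch below assumes that total is a single known number, which is a simplification. `aggregate_shares` is a hypothetical helper, and exact `Fraction` arithmetic avoids rounding.

```python
from fractions import Fraction


def aggregate_shares(vm_counts: dict[str, int], total_bandwidth: int) -> dict[str, Fraction]:
    """Split the tenants' total network bandwidth in proportion to VM counts."""
    total_vms = sum(vm_counts.values())
    return {
        tenant: Fraction(total_bandwidth) * n / total_vms
        for tenant, n in vm_counts.items()
    }


shares = aggregate_shares({"A": 2, "B": 3}, 1000)
print(shares)
```

With 2 and 3 VMs and 1000 units in total, A's target is 400 and B's is 600. The target depends only on VM counts, so opening extra flows or reversing flow directions cannot change it. The hard part, discussed in the following slides, is enforcing it on individual links.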

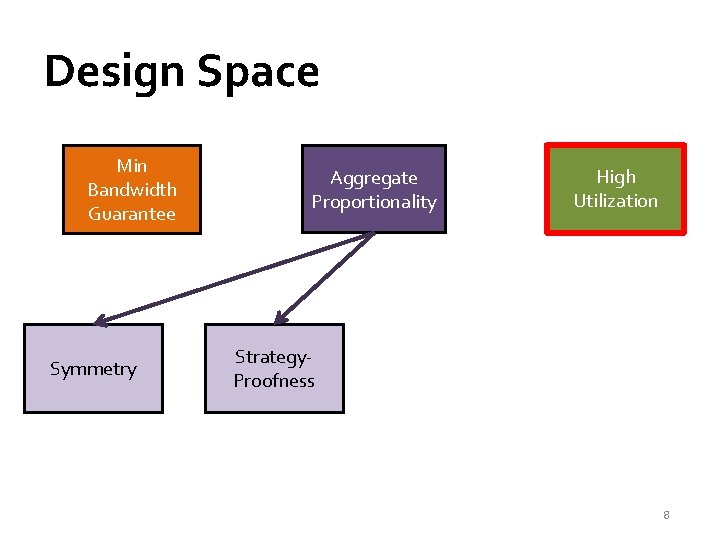

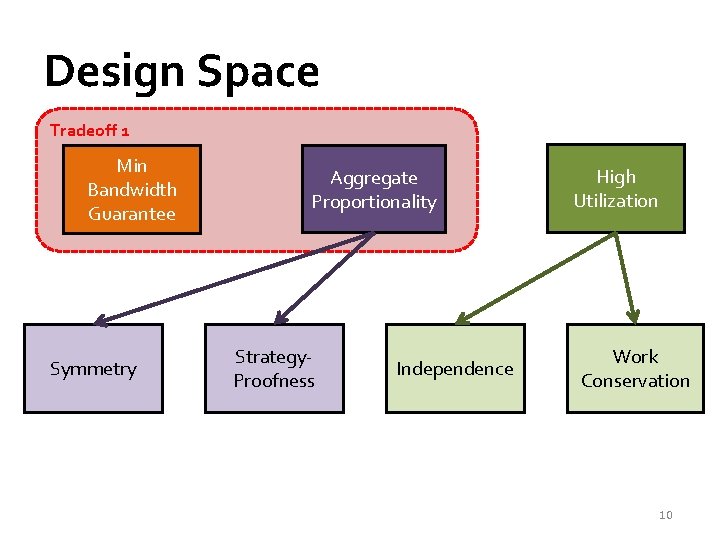

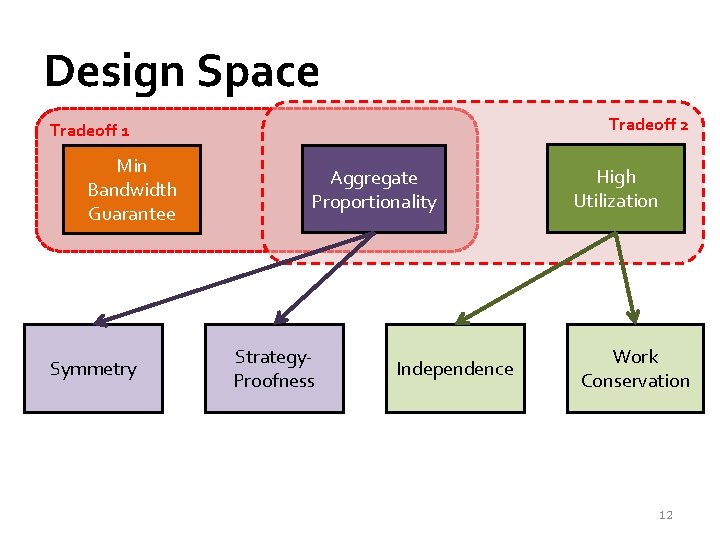

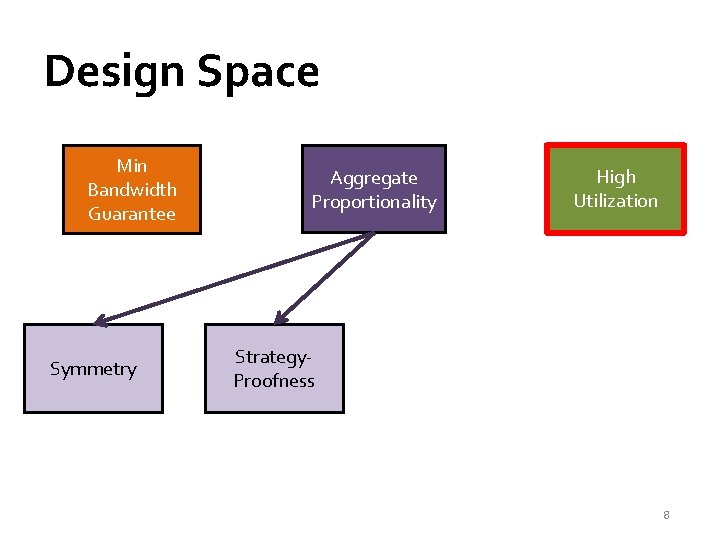

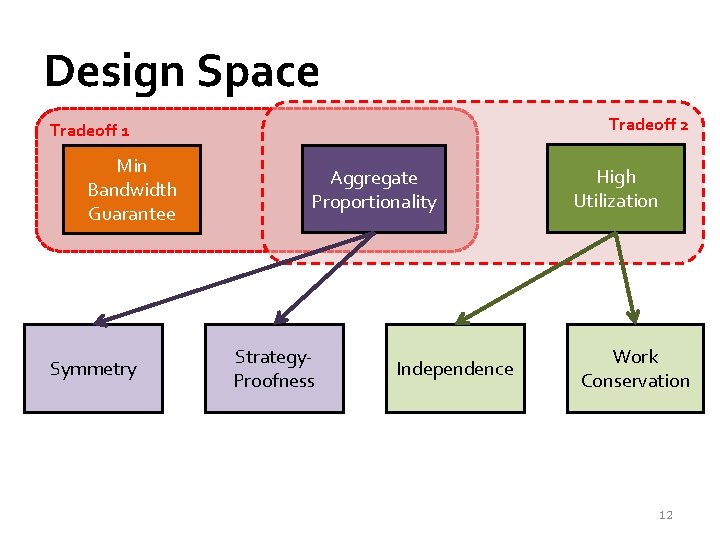

Design Space
- Min Bandwidth Guarantee
- Aggregate Proportionality: Symmetry, Strategy-Proofness
- High Utilization

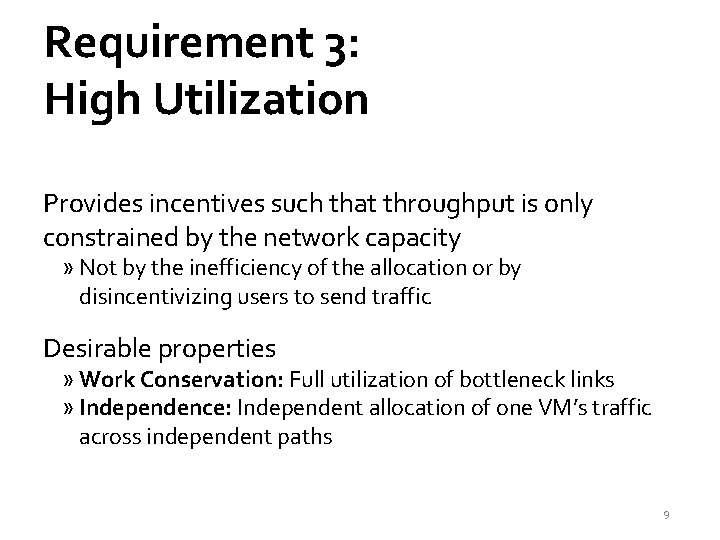

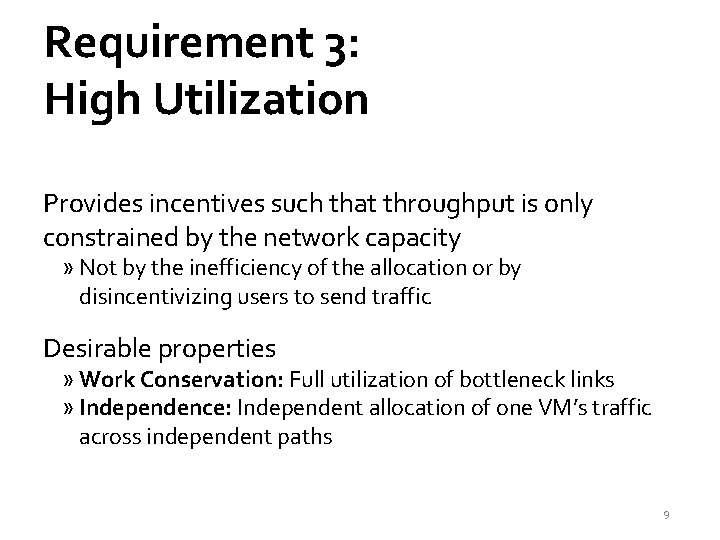

Requirement 3: High Utilization
- Provides incentives so that throughput is constrained only by network capacity, not by an inefficient allocation or by an allocation that discourages users from sending traffic.
- Desirable properties:
  - Work conservation: bottleneck links are fully utilized.
  - Independence: a VM's traffic on independent paths is allocated independently.
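Work conservation on a single bottleneck can be illustrated with weighted max-min fairness (progressive filling). This is a standard technique that the slides do not prescribe, so the sketch is an assumption-laden illustration. Flow names, demands and weights are hypothetical inputs; the weights could be, for example, per-VM weights. Independence across paths is not modeled because the sketch covers only one link.

```python
def weighted_max_min(
    capacity: float, demands: dict[str, float], weights: dict[str, float]
) -> dict[str, float]:
    """Work-conserving weighted max-min allocation on one link.

    Flows whose demand is below their weighted fair share get their demand;
    the capacity they leave unused is redistributed among the rest, so the link
    is left idle only if total demand is below capacity. Weights must be > 0.
    """
    alloc = {f: 0.0 for f in demands}
    active = {f for f, d in demands.items() if d > 0}
    remaining = capacity
    while active:
        total_weight = sum(weights[f] for f in active)
        per_unit = remaining / total_weight  # fair share per unit of weight
        satisfied = {f for f in active if demands[f] <= per_unit * weights[f]}
        if not satisfied:
            # Every remaining flow wants more than its share: split what is left.
            for f in active:
                alloc[f] = per_unit * weights[f]
            break
        for f in satisfied:
            alloc[f] = demands[f]
            remaining -= demands[f]
        active -= satisfied
    return alloc


print(weighted_max_min(10.0, {"a": 2.0, "b": 10.0, "c": 10.0},
                       {"a": 1.0, "b": 1.0, "c": 1.0}))
```

With capacity 10 and demands of 2, 10 and 10 at equal weights, flow `a` gets 2. The 4/3 it leaves unused is shared by `b` and `c`, which get 4 each, so no capacity sits idle while there is demand.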

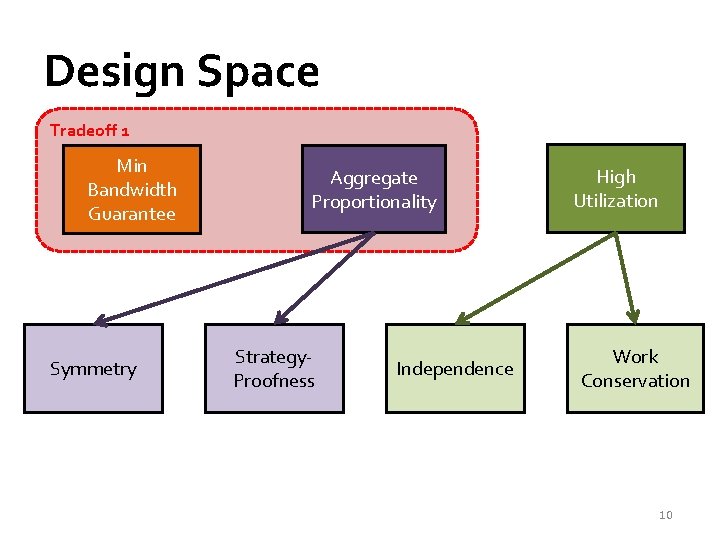

Design Space
- Min Bandwidth Guarantee
- Aggregate Proportionality: Symmetry, Strategy-Proofness
- High Utilization: Independence, Work Conservation
- Tradeoff 1: Min Bandwidth Guarantee vs. Aggregate Proportionality

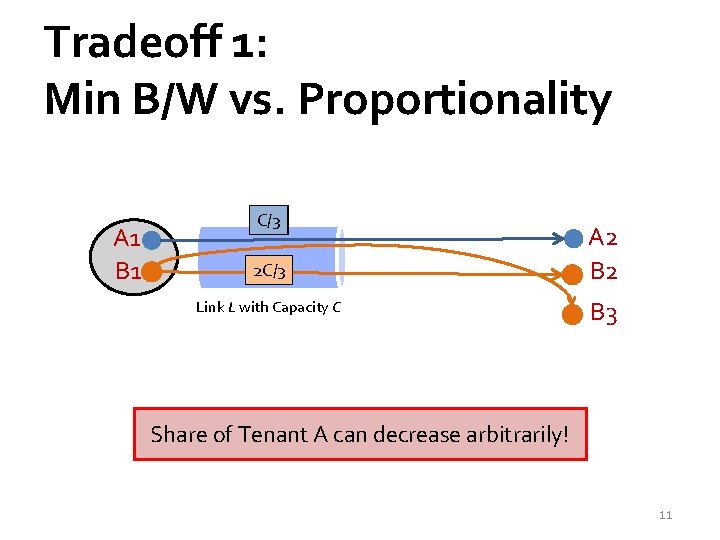

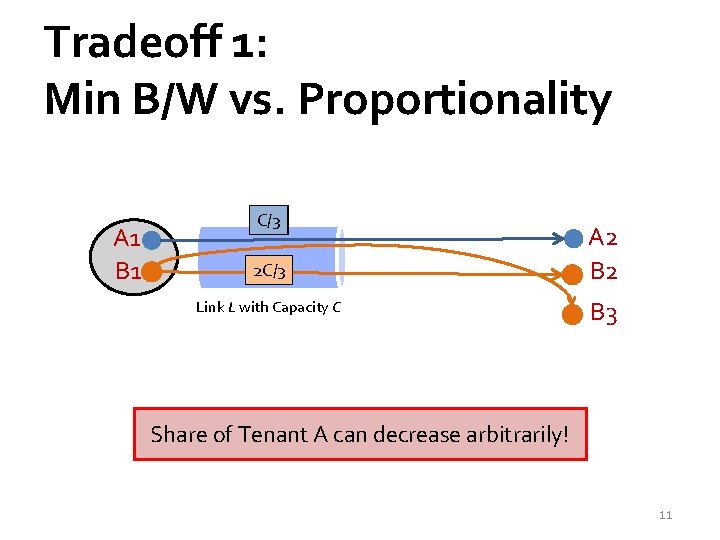

Tradeoff 1: Min B/W vs. Proportionality

Figure: tenant A (VMs A1, A2) and tenant B (VMs B1, B2, B3) share link L with capacity C; link shares are labeled C/2, C/3, 2C/3, and C/2.

Share of tenant A can decrease arbitrarily!
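The claim can be made concrete with a deliberately simplified model. This is an assumption, not the slide's exact scenario: suppose link L is split between the two tenants in proportion to their VM counts. A's share is then C · N_A / (N_A + N_B). That equals C/2 when both tenants have the same number of VMs, but it shrinks toward zero as B adds VMs, so no fixed minimum for A survives. `share_of_a` and `first_violation` are hypothetical helpers.

```python
from fractions import Fraction


def share_of_a(capacity: int, n_a: int, n_b: int) -> Fraction:
    """A's share of link L if L is split in proportion to VM counts."""
    return Fraction(capacity) * n_a / (n_a + n_b)


def first_violation(capacity: int, n_a: int, bmin: int) -> int:
    """Smallest VM count for B at which A's share drops below bmin (bmin > 0)."""
    n_b = 1
    while share_of_a(capacity, n_a, n_b) >= bmin:
        n_b += 1
    return n_b


print(share_of_a(1000, 2, 2))         # 500, i.e. C/2 with equal VM counts
print(first_violation(1000, 2, 100))  # 19
```

For any positive Bmin, some VM count for B pushes A below it. Here B needs 19 VMs to push A below 100 on a 1000-unit link.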

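Design Space
- Min Bandwidth Guarantee
- Aggregate Proportionality: Symmetry, Strategy-Proofness
- High Utilization: Independence, Work Conservation
- Tradeoff 1: Min Bandwidth Guarantee vs. Aggregate Proportionality
- Tradeoff 2: Aggregate Proportionality vs. High Utilization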

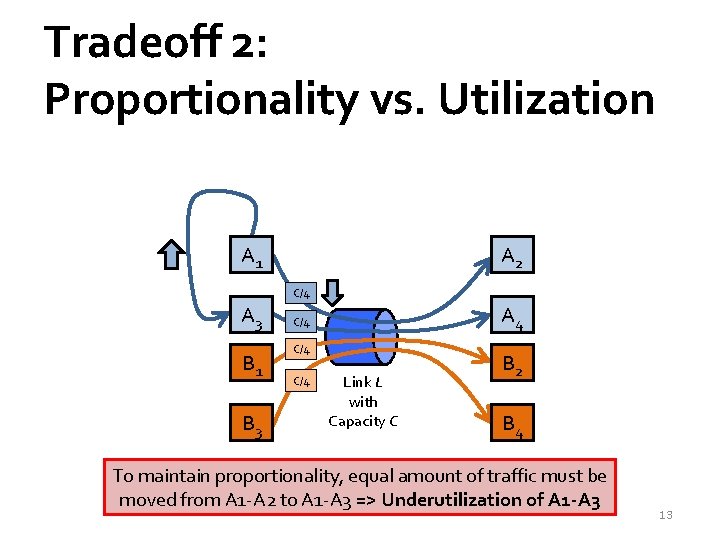

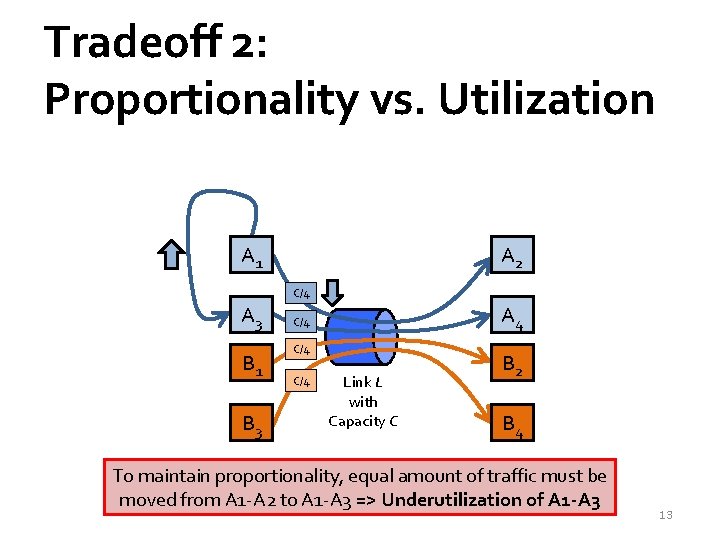

Tradeoff 2: Proportionality vs. Utilization

Figure: tenant A (VMs A1-A4) and tenant B (VMs B1-B4) share link L with capacity C; three flows are labeled C/4.

To maintain proportionality, an equal amount of traffic must be moved from A1-A2 to A1-A3, resulting in underutilization of A1-A3.

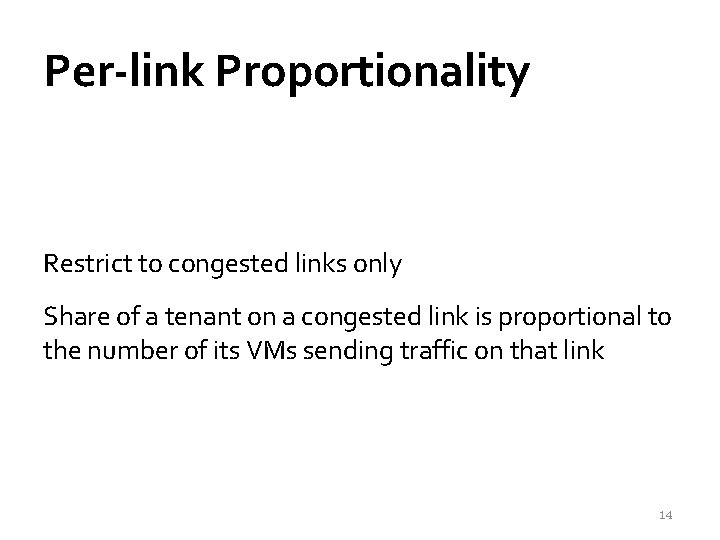

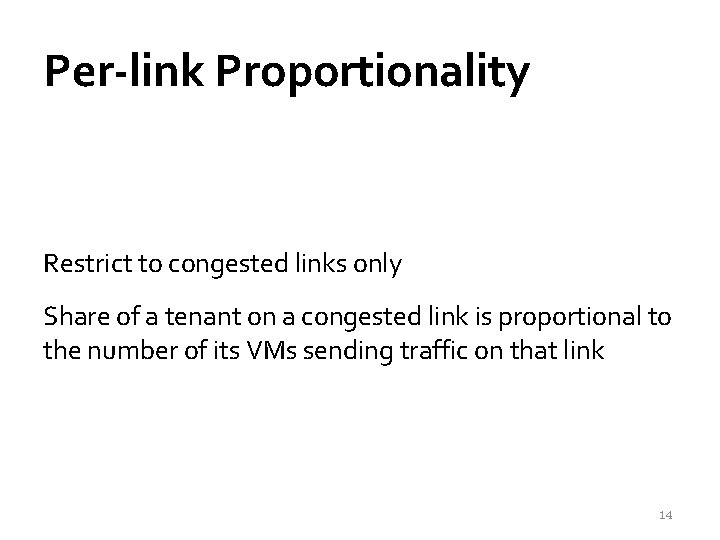

Per-link Proportionality
- Restrict proportionality to congested links only.
- The share of a tenant on a congested link is proportional to the number of its VMs sending traffic on that link.
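A minimal sketch of the per-link rule: count, for each tenant, the distinct VMs sending on the congested link, and split the link's capacity in that ratio. The flow list and the name `per_link_shares` are hypothetical. VMs that do not send on this link do not count, which is what distinguishes this rule from the network-wide aggregate target.

```python
from collections import defaultdict
from fractions import Fraction


def per_link_shares(capacity: int, flows: list[tuple[str, str, str]]) -> dict[str, Fraction]:
    """Split a congested link among tenants by how many of their VMs send on it.

    `flows` lists (tenant, source VM, destination VM) for traffic crossing the link.
    VMs of a tenant that do not send on this link do not count toward its share.
    """
    senders: dict[str, set[str]] = defaultdict(set)
    for tenant, src, _dst in flows:
        senders[tenant].add(src)
    total = sum(len(vms) for vms in senders.values())
    return {tenant: Fraction(capacity) * len(vms) / total for tenant, vms in senders.items()}


link_flows = [("A", "A1", "A2"), ("A", "A1", "A3"), ("B", "B1", "B2"), ("B", "B3", "B2")]
print(per_link_shares(900, link_flows))
```

Tenant A has one sender (A1) and tenant B has two (B1, B3), so on a 900-unit link A gets 300 and B gets 600.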

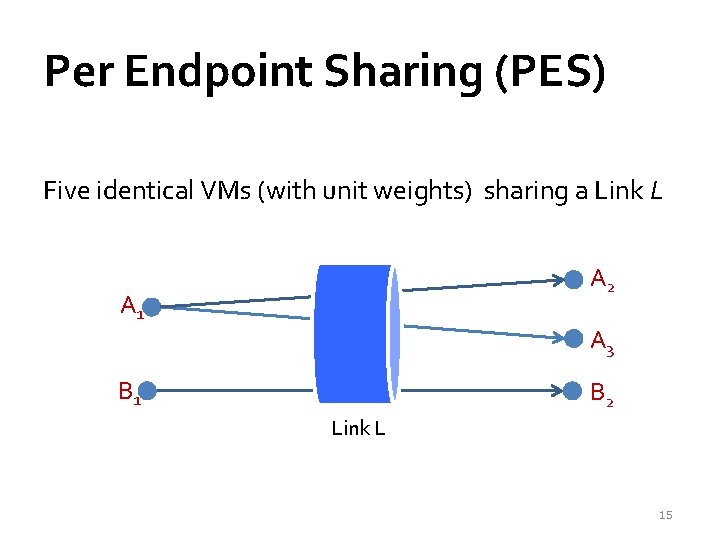

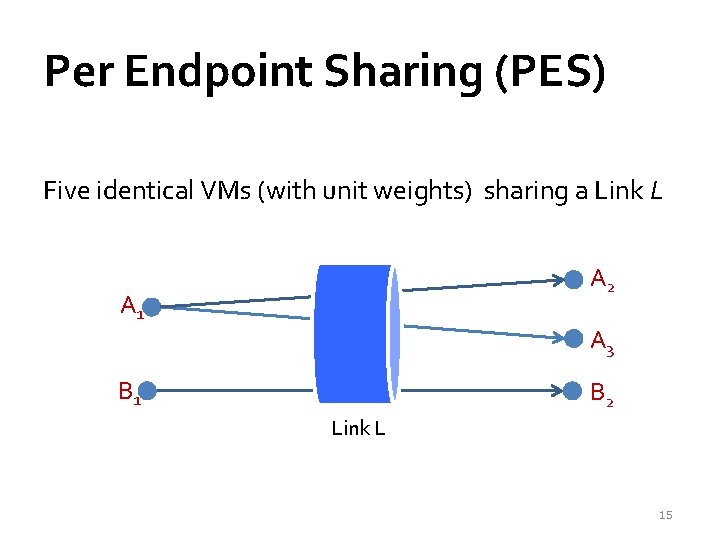

Per Endpoint Sharing (PES)

Example: five identical VMs (with unit weights), A1, A2, A3 of tenant A and B1, B2 of tenant B, share link L.

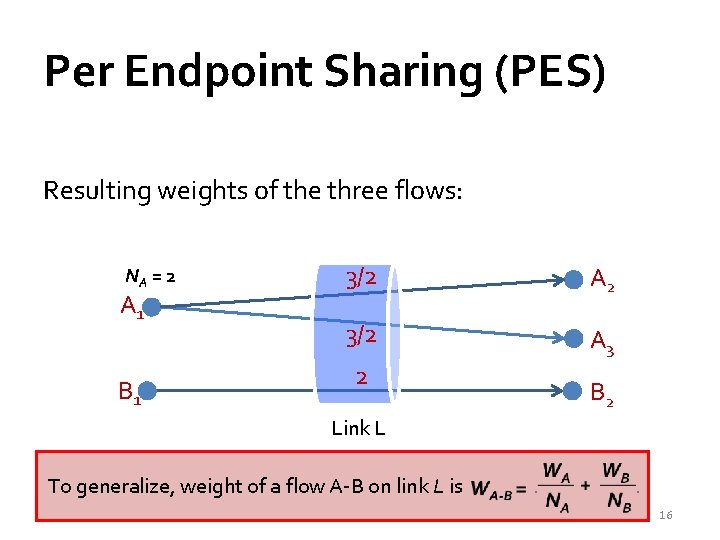

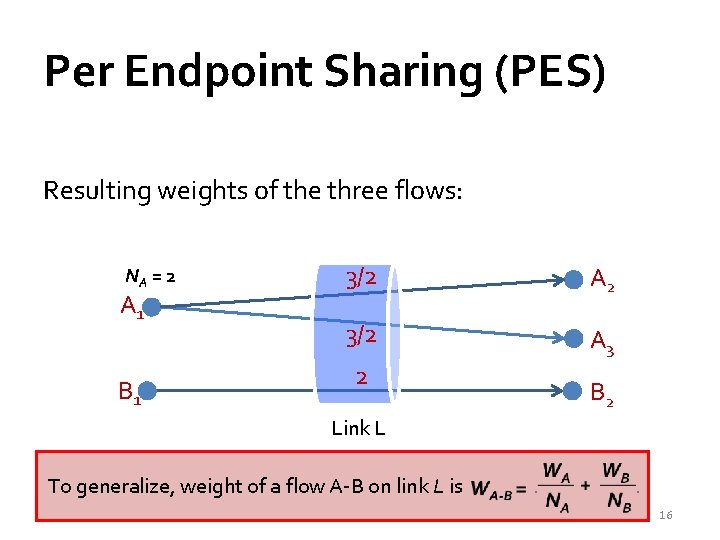

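Per Endpoint Sharing (PES)

Resulting weights of the three flows, where A1 communicates with two VMs across L ($N_{A1} = 2$):
- A1-A2: 3/2
- A1-A3: 3/2
- B1-B2: 2

To generalize, the weight of a flow A-B on link L is $W_{A-B} = \frac{W_A}{N_A} + \frac{W_B}{N_B}$, where $W_X$ is the weight of VM X and $N_X$ is the number of VMs that X communicates with across L.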
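The example can be checked by computing PES weights directly from $W_{A-B} = \frac{W_A}{N_A} + \frac{W_B}{N_B}$. The Python sketch below assumes the three flows are A1-A2, A1-A3 and B1-B2, the pairing implied by the figure labels. It also uses a hypothetical naming convention in which a VM's tenant is the first letter of its name. `pes_weights` is an illustrative helper, not the authors' code.

```python
from collections import defaultdict
from fractions import Fraction


def pes_weights(flows, vm_weight):
    """PES weight of each flow (a, b) crossing link L.

    W_{a-b} = W_a / N_a + W_b / N_b, where N_x is the number of distinct VMs
    that x communicates with across L.
    """
    peers = defaultdict(set)
    for a, b in flows:
        peers[a].add(b)
        peers[b].add(a)
    return {
        (a, b): Fraction(vm_weight[a], len(peers[a])) + Fraction(vm_weight[b], len(peers[b]))
        for a, b in flows
    }


flows = [("A1", "A2"), ("A1", "A3"), ("B1", "B2")]
unit = {vm: 1 for vm in ("A1", "A2", "A3", "B1", "B2")}
weights = pes_weights(flows, unit)
for flow, w in weights.items():
    print(flow, w)

# Hypothetical convention: a VM's tenant is the first letter of its name.
tenant_total = defaultdict(Fraction)
for (a, _b), w in weights.items():
    tenant_total[a[0]] += w
print(dict(tenant_total))
```

The sketch yields 3/2 for A1-A2 and A1-A3 and 2 for B1-B2. Tenant A's flows therefore sum to 3 and tenant B's to 2, matching each tenant's number of unit-weight VMs on L. If all three flows are backlogged and L is shared in proportion to these weights, A receives 3/5 of L and B receives 2/5.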

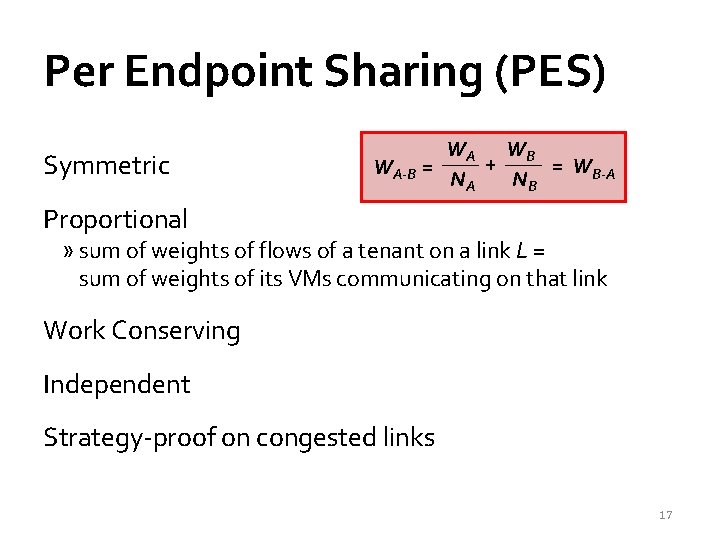

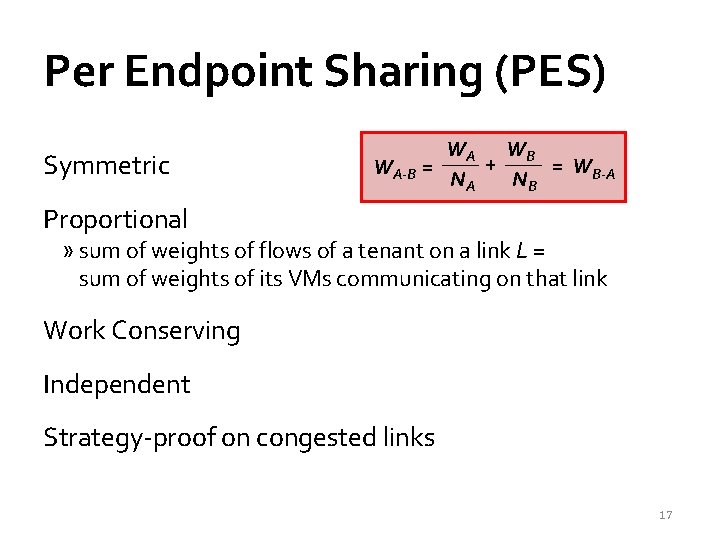

Per Endpoint Sharing (PES)
- Symmetric: $W_{A-B} = W_{B-A} = \frac{W_A}{N_A} + \frac{W_B}{N_B}$
- Proportional: the sum of the weights of a tenant's flows on link L equals the sum of the weights of its VMs communicating on that link.
- Work conserving
- Independent
- Strategy-proof on congested links
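Two of the listed properties, symmetry and proportionality, can be spot-checked numerically. The sketch below generates random intra-tenant flows across L with random directions and VM weights, and recomputes PES weights with $W_{A-B} = \frac{W_A}{N_A} + \frac{W_B}{N_B}$. It then verifies two things: reversing every flow leaves the weights unchanged, and each tenant's flow weights sum to the weights of its VMs active on L. Several choices here are hypothetical: the scenario generator, the first-letter tenant naming, and the assumption that each VM pair appears at most once. Work conservation, independence and strategy-proofness are not tested.

```python
import itertools
import random
from collections import defaultdict
from fractions import Fraction


def pes_weights(flows, vm_weight):
    """W_{a-b} = W_a / N_a + W_b / N_b, N_x = number of peers of x across L."""
    peers = defaultdict(set)
    for a, b in flows:
        peers[a].add(b)
        peers[b].add(a)
    return {
        (a, b): Fraction(vm_weight[a], len(peers[a])) + Fraction(vm_weight[b], len(peers[b]))
        for a, b in flows
    }


def random_scenario(rng, tenants="ABC", vms_per_tenant=5):
    """Random intra-tenant flows across L with random directions and VM weights."""
    flows, vm_weight = [], {}
    for t in tenants:
        vms = [f"{t}{i}" for i in range(1, vms_per_tenant + 1)]
        vm_weight.update({vm: rng.randint(1, 5) for vm in vms})
        pairs = list(itertools.combinations(vms, 2))  # each VM pair at most once
        for a, b in rng.sample(pairs, rng.randint(1, len(pairs))):
            flows.append((a, b) if rng.random() < 0.5 else (b, a))
    return flows, vm_weight


def check_properties(flows, vm_weight):
    """Return (symmetric, proportional) for one scenario."""
    w = pes_weights(flows, vm_weight)
    w_rev = pes_weights([(b, a) for a, b in flows], vm_weight)
    symmetric = all(w[(a, b)] == w_rev[(b, a)] for a, b in flows)

    flow_sum = defaultdict(Fraction)  # sum of flow weights per tenant
    vm_sum = defaultdict(Fraction)    # sum of weights of VMs active on L
    for (a, _b), weight in w.items():
        flow_sum[a[0]] += weight      # tenant = first letter (hypothetical naming)
    for vm in {vm for pair in flows for vm in pair}:
        vm_sum[vm[0]] += vm_weight[vm]
    return symmetric, flow_sum == vm_sum


rng = random.Random(0)
results = [check_properties(*random_scenario(rng)) for _ in range(200)]
print(all(s and p for s, p in results))
```

Proportionality holds by construction. A VM X with $N_X$ peers appears in exactly $N_X$ flows, each carrying $W_X / N_X$, so its contributions sum to $W_X$.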

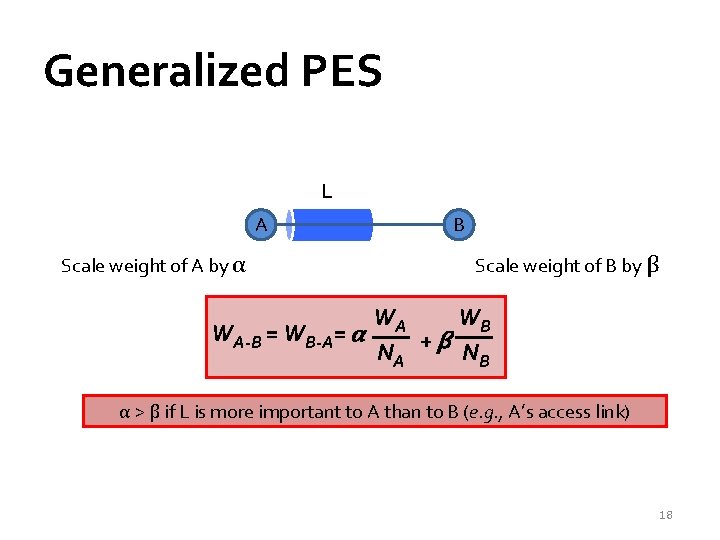

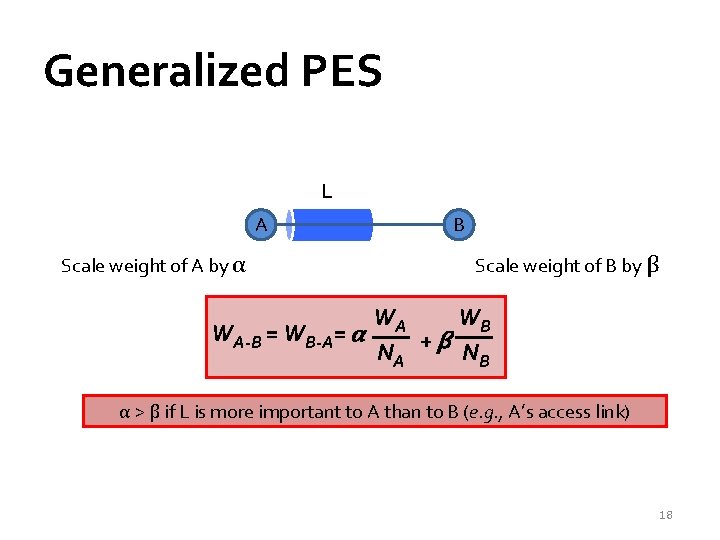

Generalized PES

Scale the weight of A by α and the weight of B by β:

$$W_{A-B} = W_{B-A} = \alpha \frac{W_A}{N_A} + \beta \frac{W_B}{N_B}$$

Choose α > β if L is more important to A than to B (e.g., L is A's access link).

One-Sided PES (OSPES)

Uses the generalized PES weight $W_{A-B} = W_{B-A} = \alpha \frac{W_A}{N_A} + \beta \frac{W_B}{N_B}$ with:
- α = 1, β = 0 if A is closer to L;
- α = 0, β = 1 if B is closer to L.

On links closer to the source it behaves like per-source sharing; on links closer to the destination, like per-destination sharing. It provides the highest b/w guarantee (in the hose model).
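A minimal sketch of how generalized PES and OSPES compute a flow's weight. Two choices are assumptions for illustration, not taken from the slides: "closer to L" is measured in hops, and ties go to A. `gpes_weight` and `ospes_coefficients` are hypothetical names. Setting α = β = 1 recovers plain PES.

```python
from fractions import Fraction


def gpes_weight(w_a: int, n_a: int, w_b: int, n_b: int, alpha: int, beta: int) -> Fraction:
    """Generalized PES: W_{A-B} = alpha * W_A / N_A + beta * W_B / N_B."""
    return alpha * Fraction(w_a, n_a) + beta * Fraction(w_b, n_b)


def ospes_coefficients(hops_a_to_link: int, hops_b_to_link: int) -> tuple[int, int]:
    """OSPES: all weight goes to the endpoint closer to link L.

    Closeness is measured in hops here, and ties go to A; both choices are
    assumptions for illustration, not taken from the slides.
    """
    if hops_a_to_link <= hops_b_to_link:
        return 1, 0
    return 0, 1


# L is A's access link (0 hops from A, 3 hops from B); A talks to 2 VMs across L.
alpha, beta = ospes_coefficients(0, 3)
print(gpes_weight(1, 2, 1, 1, alpha, beta))  # 1/2: only A's endpoint counts
print(gpes_weight(1, 2, 1, 1, 1, 1))         # 3/2: alpha = beta = 1 is plain PES
```

On A's access link, A's unit weight is split evenly across its flows, regardless of how many flows the destinations have. This is why OSPES behaves like per-source sharing near the source.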

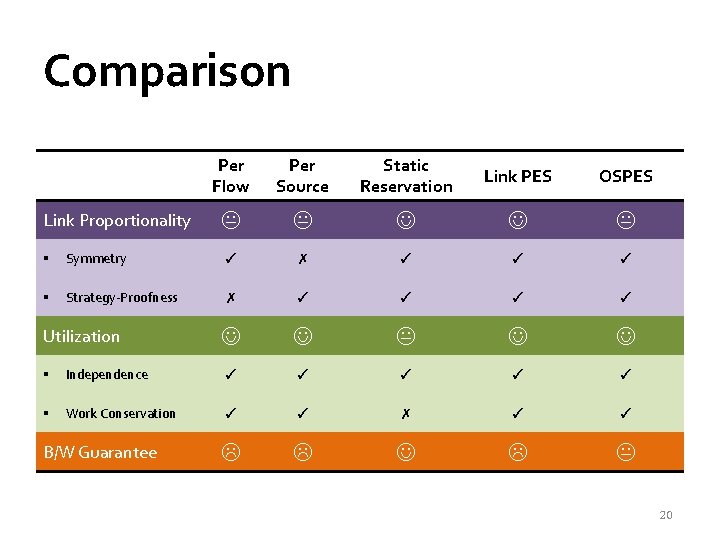

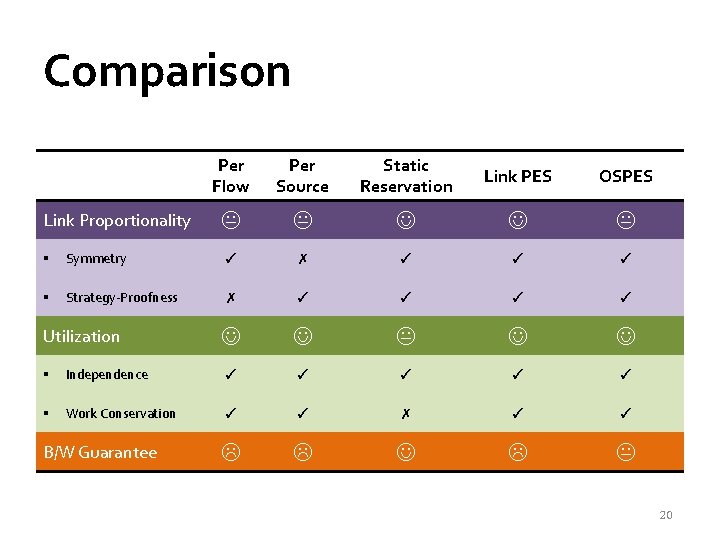

Comparison: Per Flow, Per Source, Static Reservation, Link PES, OSPES
- Link proportionality
  - Symmetry: ✓ ✗ ✓ ✓ ✓
  - Strategy-proofness: ✗ ✓ ✓
- Utilization
  - Independence: ✓ ✓ ✓
  - Work conservation: ✓ ✓ ✗ ✓ ✓
- B/W guarantee

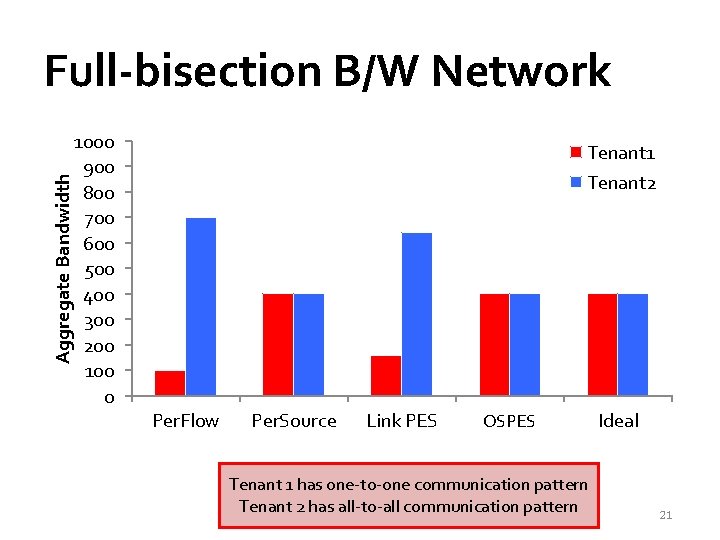

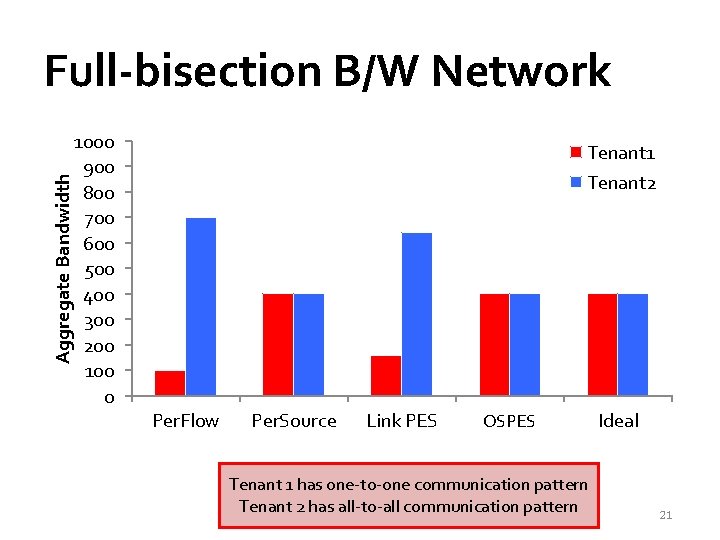

Aggregate Bandwidth, Full-Bisection B/W Network (chart of aggregate bandwidth for Tenant 1 and Tenant 2 under Per Flow, Per Source, Link PES, Network OSPES, and Ideal). Tenant 1 has a one-to-one communication pattern; Tenant 2 has an all-to-all communication pattern.

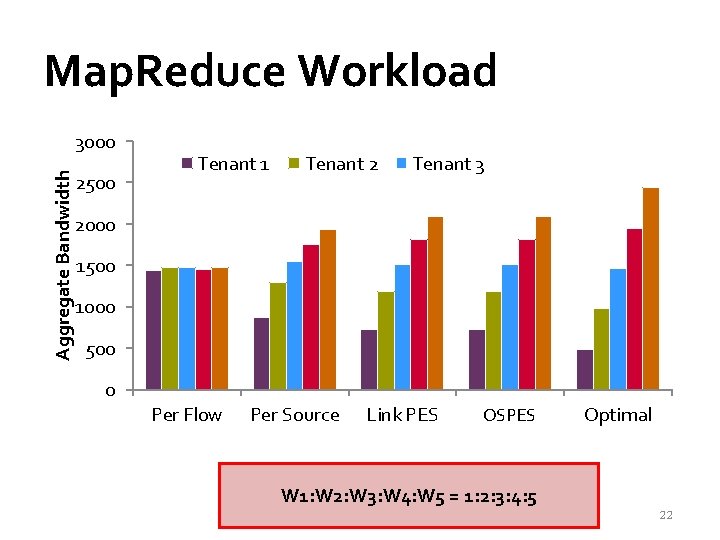

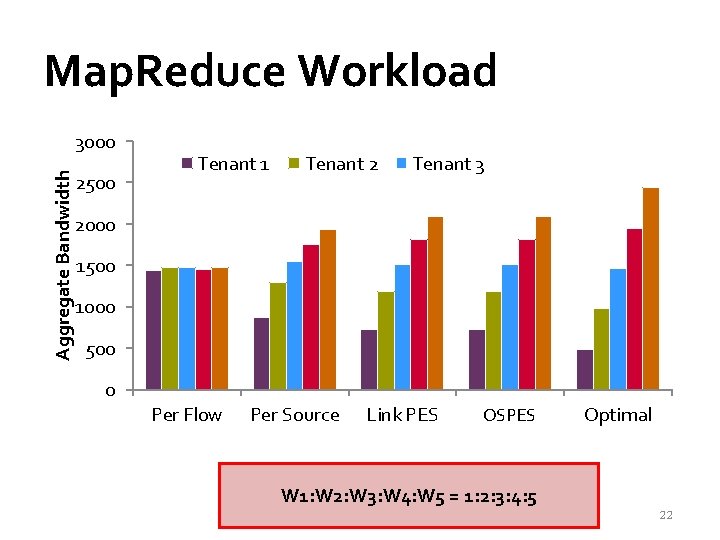

MapReduce Workload (chart of aggregate bandwidth for Tenants 1, 2, and 3 under Per Flow, Per Source, Link PES, Network OSPES, and Optimal). Weights W1 : W2 : W3 : W4 : W5 = 1 : 2 : 3 : 4 : 5.

Summary
- Sharing cloud networks is all about making tradeoffs:
  - min b/w guarantee vs. proportionality;
  - proportionality vs. utilization.
- The desired solution is not obvious:
  - it depends on several conflicting requirements and properties;
  - it is influenced by the end goal.