
RESEARCH INSTRUMENTS

RECAP AND RESEARCH SAYS

Competency: Constructs an instrument and establishes its validity and reliability - CS_RS 12 -IIa-c-3

Activity:
1. Why is a research instrument required in research?
2. What is the significance of using an instrument?
3. How do we know if an instrument is good?
4. What types of instruments do you know, in qualitative research and in quantitative research?

Research instruments, or tools, refer to the questionnaire or other data-gathering instrument to be constructed, validated, and administered. A tool can also be an interview guide and/or a checklist. If the instrument is prepared by the researcher, it should be tested for validity and reliability. If the instrument is standardized, however, the student should describe its items, scoring, and qualification. The researcher must explain its parts and how the instrument will be validated. The instrument to be used should be appended (except for standardized instruments). For scientific and experimental research, the materials and equipment to be used in the experiment must be specified.

The qualities of a good research instrument are (1) validity, (2) reliability, and (3) usability. Validity is the degree to which an instrument measures what it intends to measure. The validity of a measuring instrument has to do with its soundness: what the test or questionnaire measures, how effectively it measures it, and how it can be applied.

Types of Validity

Content validity is the extent to which the content or topic of the test is truly representative of the content of the course. It involves, essentially, the systematic examination of the research instrument's content to determine whether it covers a representative sample of the behavior domain to be measured. It is commonly used in evaluating achievement tests. In short, it is a measure of whether the items actually measure the latent trait they are intended to measure. This is often evaluated through expert review of the items and revision in response to expert opinion.

Criterion validity is the degree to which the test agrees or correlates with a criterion set up as an acceptable measure. The criterion is always available at the time of testing. It is applicable to tests used to diagnose existing status rather than to predict future outcomes. It is also described as the degree to which a measure correlates with other measures of the same latent trait (also called concurrent validity). Generally, qualitative measures are used to establish criterion validity for quantitative instruments, although quantitative or alternative qualitative measures (e.g., interviews) can be used to validate survey instruments.

Predictive validity, as described by Aquino and Garcia (2004), is determined by showing how well predictions made from the test are confirmed by evidence gathered at some subsequent time. The criterion measure for this type of validity is important because it is the later outcome of the subjects that is being predicted.

Communication validity: Researchers develop surveys in order to generate an understanding of a study population. While researchers often assume that participants will interpret questions as intended, explicitly considering this aspect of instrument validity can generate important insights (e.g., Lopez, 1996).

The construct validity of a test is the extent to which the test measures a theoretical construct or trait. This involves tests of understanding, appreciation, and interpretation of data. Examples are intelligence and mechanical aptitude tests. It is also described as a measure of whether strong support exists for the content of the items. This can be estimated by examining both the convergence and the divergence between theory and reality.

Reliability is the extent to which a “test is dependable, self-consistent and stable” (Merriam, 1995). In other words, the test agrees with itself. It is concerned with the consistency of responses from moment to moment: if a person takes the same test twice, the test yields the same results. However, a reliable test is not always valid.
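One common way to check this "same test twice" consistency is test-retest reliability: give the test to the same respondents on two occasions and correlate the two sets of scores. The slides do not name a coefficient, so the use of the Pearson correlation coefficient r here is an assumption, and the scores are hypothetical. A value close to +1 suggests the test agrees with itself. The same computation can also serve for criterion validity, with the second list holding scores on the accepted criterion measure.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares of deviations from the means
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    if s_xx == 0 or s_yy == 0:
        raise ValueError("scores have no variation; r is undefined")
    return s_xy / math.sqrt(s_xx * s_yy)


# Hypothetical scores of five respondents on two administrations of the same test
first_try = [18, 25, 30, 22, 27]
second_try = [20, 24, 29, 21, 28]
print(f"Test-retest r = {pearson_r(first_try, second_try):.2f}")
```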

Practicality, also known as usability, is the degree to which the research instrument can be satisfactorily used by teachers, researchers, supervisors, and school managers without undue expenditure of time, money, and effort. In other words, usability means practicability.

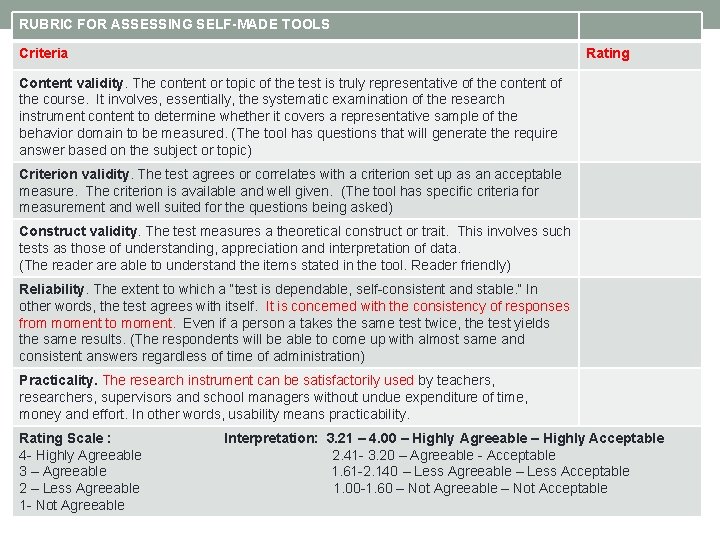

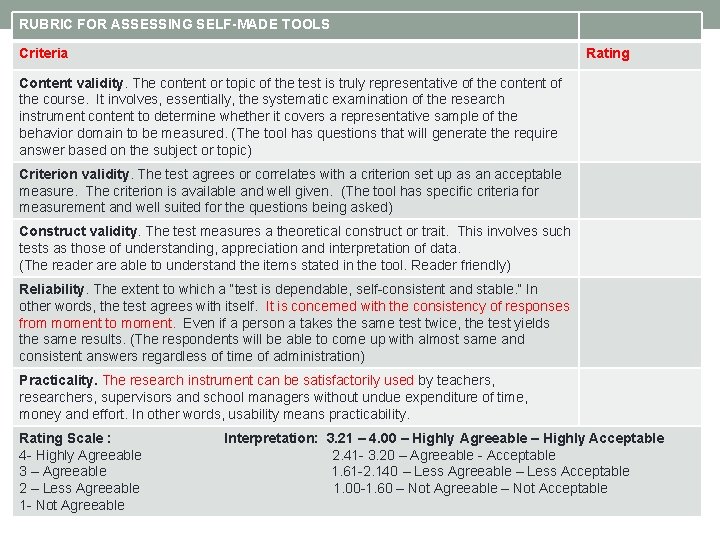

RUBRIC FOR ASSESSING SELF-MADE TOOLS

Criteria (each to be given a rating):

1. Content validity. The content or topic of the test is truly representative of the content of the course. It involves, essentially, the systematic examination of the research instrument's content to determine whether it covers a representative sample of the behavior domain to be measured. (The tool has questions that will generate the required answers based on the subject or topic.)
2. Criterion validity. The test agrees or correlates with a criterion set up as an acceptable measure. The criterion is available and well given. (The tool has specific criteria for measurement and is well suited to the questions being asked.)
3. Construct validity. The test measures a theoretical construct or trait. This involves tests of understanding, appreciation, and interpretation of data. (Readers are able to understand the items stated in the tool; the tool is reader-friendly.)
4. Reliability. The extent to which a “test is dependable, self-consistent and stable.” In other words, the test agrees with itself. It is concerned with the consistency of responses from moment to moment: if a person takes the same test twice, the test yields the same results. (The respondents will be able to come up with almost the same, consistent answers regardless of the time of administration.)
5. Practicality. The research instrument can be satisfactorily used by teachers, researchers, supervisors, and school managers without undue expenditure of time, money, and effort. In other words, usability means practicability.

Rating scale:
4 - Highly Agreeable
3 - Agreeable
2 - Less Agreeable
1 - Not Agreeable

Interpretation of the mean rating:
3.21 to 4.00: Highly Agreeable / Highly Acceptable
2.41 to 3.20: Agreeable / Acceptable
1.61 to 2.40: Less Agreeable / Less Acceptable
1.00 to 1.60: Not Agreeable / Not Acceptable
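Once the validators have rated the tool, the interpretation bands can be applied mechanically. The Python sketch below averages hypothetical ratings from three validators for each criterion and maps each mean to its interpretation. Rounding the mean to two decimals before comparing is an assumption, made so that every mean falls into exactly one band.

```python
# Interpretation bands from the rubric: (lower bound of the band, interpretation)
BANDS = [
    (3.21, "Highly Acceptable"),
    (2.41, "Acceptable"),
    (1.61, "Less Acceptable"),
    (1.00, "Not Acceptable"),
]


def interpret(mean_rating):
    """Map a mean rating on the 1-4 scale to its interpretation."""
    m = round(mean_rating, 2)  # ASSUMPTION: bands are read to two decimal places
    if not 1.00 <= m <= 4.00:
        raise ValueError("mean rating must lie between 1.00 and 4.00")
    # The range check above guarantees that one of the bands matches
    for lower_bound, label in BANDS:
        if m >= lower_bound:
            return label


# Hypothetical ratings (1-4) given by three validators
ratings = {
    "Content validity": [4, 4, 3],
    "Criterion validity": [3, 3, 3],
    "Construct validity": [4, 3, 3],
    "Reliability": [3, 2, 3],
    "Practicality": [4, 4, 4],
}

for criterion, scores in ratings.items():
    mean = sum(scores) / len(scores)
    print(f"{criterion}: {mean:.2f} ({interpret(mean)})")
```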

Instruments:
1. Questionnaire
2. Interview Guide
3. Rating Scale
4. Checklist
5. Sociometry
6. Document or Content Analysis
7. Scoreboard
8. Researcher-Made Tool
9. Recording the Data
10. Opinionnaire
11. Observation
12. Psychological Tests
13. Standardized Questions

QUESTIONNAIRE

Two forms:
A. Closed form: calls for short check-mark responses. Examples: true or false, yes or no, multiple choice, statements with options (strongly agree, agree, etc.).
B. Open form: unrestricted, because it calls for a free response in the respondent's own words. Examples: essay-type questions, completion tests, definition of terms.

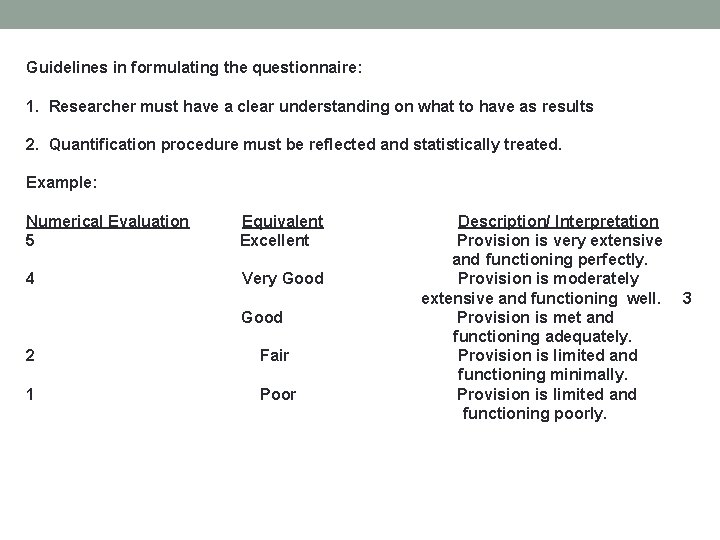

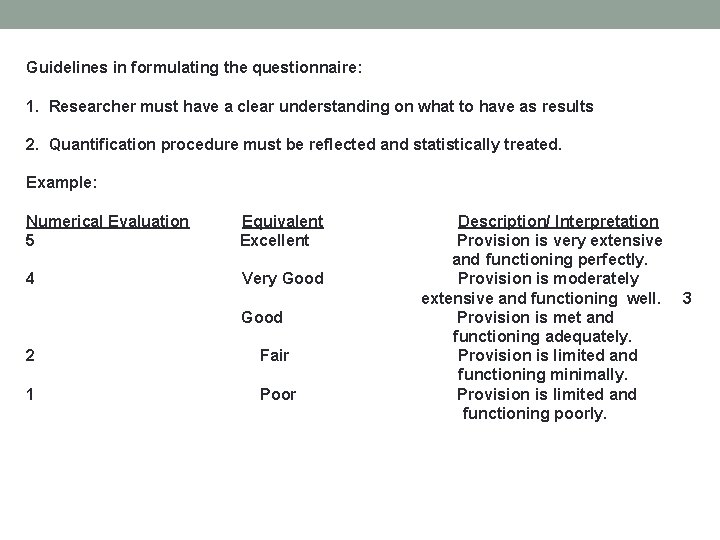

Guidelines in formulating the questionnaire:
1. The researcher must have a clear understanding of the results to be obtained.
2. The quantification procedure must be reflected in the questionnaire so that the responses can be statistically treated. Example (numerical evaluation, equivalent, description/interpretation):
   5 - Excellent: Provision is very extensive and functioning perfectly.
   4 - Very Good: Provision is moderately extensive and functioning well.
   3 - Good: Provision is met and functioning adequately.
   2 - Fair: Provision is limited and functioning minimally.
   1 - Poor: Provision is limited and functioning poorly.
3. Find out how each item contributes to the specific objectives listed.
4. Comprehensiveness is necessary.
5. The items or questions must be applicable.
6. The questions must be understandable.
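For the statistical treatment in guideline 2, a common choice is the weighted mean of the responses to an item, WM = Σ(f × x) / N, where f is the number of respondents who chose scale value x and N is the total number of respondents. The Python sketch below computes it and maps it to the verbal equivalents of the example scale. The interval limits (1.00 to 1.80 Poor, 1.81 to 2.60 Fair, 2.61 to 3.40 Good, 3.41 to 4.20 Very Good, 4.21 to 5.00 Excellent) are an assumption; the slide gives only the whole-number scale points. The tally is hypothetical.

```python
# Verbal equivalents of the 5-point numerical evaluation
EQUIVALENTS = {5: "Excellent", 4: "Very Good", 3: "Good", 2: "Fair", 1: "Poor"}

# ASSUMED interval limits of equal width (5 - 1) / 5 = 0.80: (lower bound, scale point)
LIMITS = [(4.21, 5), (3.41, 4), (2.61, 3), (1.81, 2), (1.00, 1)]


def weighted_mean(frequencies):
    """frequencies maps each scale value (1-5) to the number of respondents choosing it."""
    n = sum(frequencies.values())
    if n == 0:
        raise ValueError("no responses")
    return sum(value * count for value, count in frequencies.items()) / n


def equivalent(mean):
    """Verbal equivalent of a weighted mean under the assumed interval limits."""
    m = round(mean, 2)
    for lower_bound, point in LIMITS:
        if m >= lower_bound:
            return EQUIVALENTS[point]
    raise ValueError("weighted mean must be at least 1.00")


# Hypothetical tally for one item answered by 20 respondents
tally = {5: 10, 4: 6, 3: 3, 2: 1, 1: 0}
wm = weighted_mean(tally)
print(f"Weighted mean: {wm:.2f} ({equivalent(wm)})")
```

With this tally, WM = (50 + 24 + 9 + 2 + 0) / 20 = 4.25, which falls in the assumed Excellent interval.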

Requirements in formulating questions:
1. The language must be clear.
2. The questions must be well framed and grammatically correct.
3. Avoid leading questions.
4. The researcher and the respondents must have a common frame of reference about the topic.
5. Pre-test the questions.

Disadvantages of the use of questionnaires (Barrientos-Tan, 1997):
1. Printing and mailing are costly.
2. The response rate may be low.
3. Respondents may provide only socially acceptable answers.
4. There is less chance to clarify ambiguous answers.
5. Respondents must be literate and have no physical handicaps.
6. The retrieval rate can be low because retrieval itself is difficult.

STANDARDIZED TEST

Ready-to-use research instruments, usually the products of many years of study. Examples: Stanford-Binet Test, Wechsler Intelligence Scale, Rorschach Inkblot Test.

Characteristics:
a. Very objective
b. Reliable
c. Valid

Validated Tool: a tool used in previous similar studies that is not standardized or published.

Modified Tool: an existing published tool that the researcher modifies to suit the nature of the research respondents and locale.

Researcher-Made Tool: a tool crafted by the researcher to answer the problem of the study. The researcher must be an expert in the field or must seek the expertise of an authority or professional for validation. The researcher must then pretest the instrument on at least 10 to 20 respondents who share the same characteristics as the intended respondents, and then compute the difficulty and discrimination indices of the items.
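The difficulty and discrimination indices come from an item analysis of the pretest results. The Python sketch below uses the upper-lower group method: respondents are ranked by total score, the difficulty index of an item is the proportion answering it correctly across the upper and lower groups, and the discrimination index D is the proportion correct in the upper group minus the proportion correct in the lower group. Scoring items as 1 (correct) or 0 (wrong) and using the top and bottom 27% of respondents as the groups are assumptions; 27% is a common convention, not something the slides specify. The pretest data are hypothetical.

```python
def item_analysis(responses, group_fraction=0.27):
    """Return a (difficulty, discrimination) pair for each item.

    responses: one list per respondent of item scores, 1 = correct, 0 = wrong.
    group_fraction: ASSUMED share of respondents in each of the upper and lower groups.
    """
    if not responses:
        raise ValueError("no responses")
    if not 0 < group_fraction <= 0.5:
        raise ValueError("group_fraction must be in (0, 0.5] so groups do not overlap")
    n = len(responses)
    k = max(1, round(n * group_fraction))  # size of each extreme group
    ranked = sorted(responses, key=sum, reverse=True)  # highest total score first
    upper, lower = ranked[:k], ranked[-k:]
    results = []
    for item in range(len(responses[0])):
        p_upper = sum(r[item] for r in upper) / k
        p_lower = sum(r[item] for r in lower) / k
        difficulty = (p_upper + p_lower) / 2  # proportion correct in both groups
        discrimination = p_upper - p_lower    # ranges from -1 to +1
        results.append((difficulty, discrimination))
    return results


# Hypothetical pretest: 10 respondents, 4 items
pretest = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 1],
    [1, 0, 1, 1],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
for number, (p, d) in enumerate(item_analysis(pretest), start=1):
    print(f"Item {number}: difficulty = {p:.2f}, discrimination = {d:.2f}")
```

A higher difficulty index means an easier item (more respondents answered it correctly), while a D near zero or negative flags an item that does not separate high scorers from low scorers.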

For the profile of the respondents, make sure you provide the choices and options. Example:
Sex: ____ Male ____ Female ____ No response
Age: ____ 16 years old ____ 17 years old ____ 18 years old
Please specify: ____
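Closed options like these make the profile easy to tally. Profile data are commonly presented as frequencies and percentages; the Python sketch below does this for the Sex item using hypothetical responses, and rejects any answer that is not one of the listed options.

```python
from collections import Counter


def frequency_table(options, responses):
    """Return {option: (frequency, percentage)} for every listed option."""
    counts = Counter(responses)
    unknown = set(counts) - set(options)
    if unknown:
        raise ValueError(f"responses outside the listed options: {unknown}")
    total = len(responses)
    return {
        option: (counts[option], 100 * counts[option] / total if total else 0.0)
        for option in options
    }


sex_options = ["Male", "Female", "No response"]
answers = ["Female", "Male", "Female", "No response", "Male", "Female"]  # hypothetical
for option, (freq, pct) in frequency_table(sex_options, answers).items():
    print(f"{option}: {freq} ({pct:.1f}%)")
```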

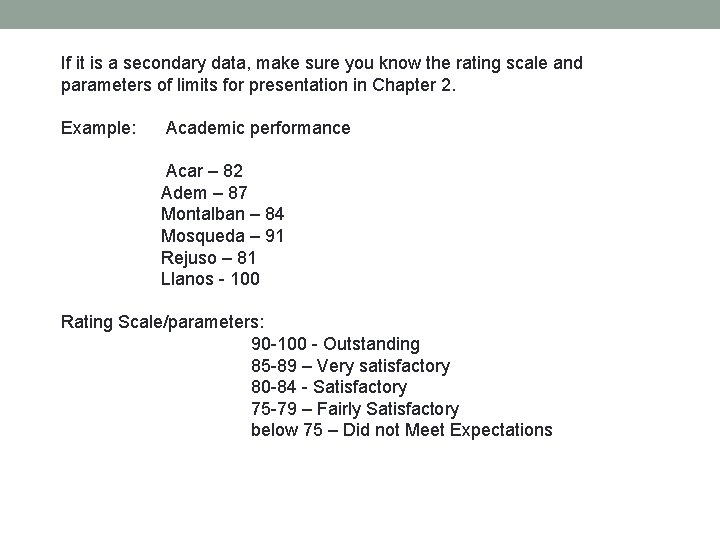

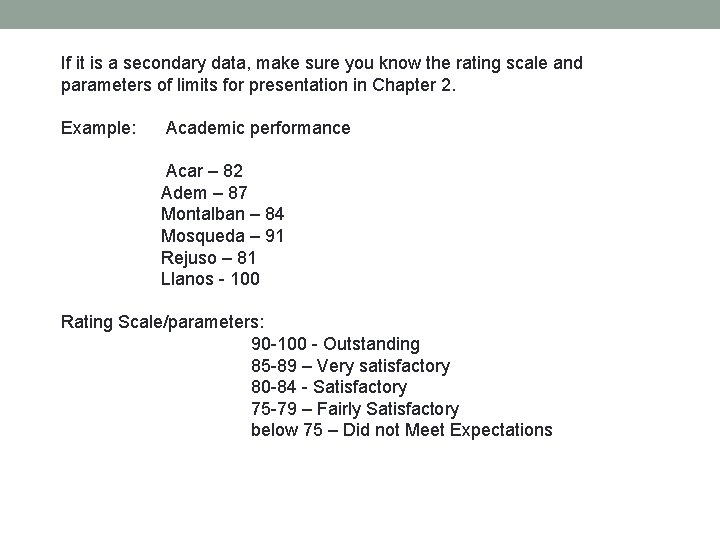

If the data are secondary, make sure you know the rating scale and its limits for presentation in Chapter 2. Example:

Academic performance:
Acar - 82
Adem - 87
Montalban - 84
Mosqueda - 91
Rejuso - 81
Llanos - 100

Rating scale/parameters:
90 to 100: Outstanding
85 to 89: Very Satisfactory
80 to 84: Satisfactory
75 to 79: Fairly Satisfactory
Below 75: Did Not Meet Expectations
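The rating scale can be applied to each grade, and to the group mean, with a simple lookup. The Python sketch below uses the grades and descriptors from the example. Reading a non-whole-number value by its lower bound (so 87.5 counts as Very Satisfactory) is an assumption, since the scale lists whole-number grades only.

```python
# Descriptors from the rating scale, as (lowest grade in the band, descriptor)
SCALE = [
    (90, "Outstanding"),
    (85, "Very Satisfactory"),
    (80, "Satisfactory"),
    (75, "Fairly Satisfactory"),
]


def descriptor(grade):
    """Descriptor for a grade; ASSUMES fractional values use the band's lower bound."""
    for lowest, label in SCALE:
        if grade >= lowest:
            return label
    return "Did Not Meet Expectations"  # below 75


grades = {"Acar": 82, "Adem": 87, "Montalban": 84,
          "Mosqueda": 91, "Rejuso": 81, "Llanos": 100}

for name, grade in grades.items():
    print(f"{name}: {grade} ({descriptor(grade)})")

mean = sum(grades.values()) / len(grades)
print(f"Mean: {mean:.2f} ({descriptor(mean)})")
```

With these six grades, the mean is 525 / 6 = 87.50, which falls in the Very Satisfactory band.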

Application: Let us examine a 5-point Likert scale with 18 items that measures good leadership.
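A minimal Python sketch of how responses to such an 18-item, 5-point Likert scale might be scored: each respondent's total and mean score, plus the mean of each item across respondents. It assumes every item is positively worded (no reverse scoring) and uses hypothetical responses, since the actual items appear only in the slide images.

```python
NUM_ITEMS = 18
VALID = {1, 2, 3, 4, 5}  # 1 = lowest agreement, 5 = highest agreement


def score_respondent(answers):
    """Return (total score, mean score) for one respondent's 18 answers."""
    if len(answers) != NUM_ITEMS:
        raise ValueError(f"expected {NUM_ITEMS} answers, got {len(answers)}")
    if any(a not in VALID for a in answers):
        raise ValueError("answers must be whole numbers from 1 to 5")
    total = sum(answers)
    return total, total / NUM_ITEMS


def item_means(all_answers):
    """Mean rating of each item across all respondents."""
    n = len(all_answers)
    return [sum(resp[i] for resp in all_answers) / n for i in range(NUM_ITEMS)]


# Hypothetical responses of three respondents
responses = [
    [5, 4, 4, 5, 3, 4, 5, 5, 4, 4, 3, 5, 4, 4, 5, 4, 3, 4],
    [4, 4, 3, 4, 4, 3, 4, 5, 4, 3, 3, 4, 4, 3, 4, 4, 4, 4],
    [3, 3, 4, 4, 2, 3, 4, 4, 3, 3, 2, 4, 3, 3, 4, 3, 3, 3],
]
for number, answers in enumerate(responses, start=1):
    total, mean = score_respondent(answers)
    print(f"Respondent {number}: total = {total}, mean = {mean:.2f}")
print([round(m, 2) for m in item_means(responses)])
```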

Application/Assignment: Go to your group and discuss the instrument you will probably use. You may use a:
1. Self-Made Tool
2. Validated Tool
3. Modified Tool
4. Standardized Tool

Submit your questionnaire on January 5, 2016 for critiquing and acceptance.