REINFORCEMENT LEARNING WITH FUNCTION APPROXIMATION FOR TRAFFIC SIGNAL

- Slides: 10

REINFORCEMENT LEARNING WITH FUNCTION APPROXIMATION FOR TRAFFIC SIGNAL CONTROL

ADITI BHAUMICK (ab3585)

OBJECTIVES: To apply a reinforcement learning algorithm with function approximation to traffic signal control. Feature-based state representations are implemented that characterize the level of congestion broadly as low, medium or high. Reinforcement learning is used because it allows the optimal signal-timing strategy to be learnt without assuming any model of the system.

OBJECTIVES: In high-dimensional state-action spaces, function approximation techniques are used to achieve computational efficiency. A form of reinforcement learning called Q-learning is used to develop a traffic light control (TLC) algorithm that incorporates function approximation.

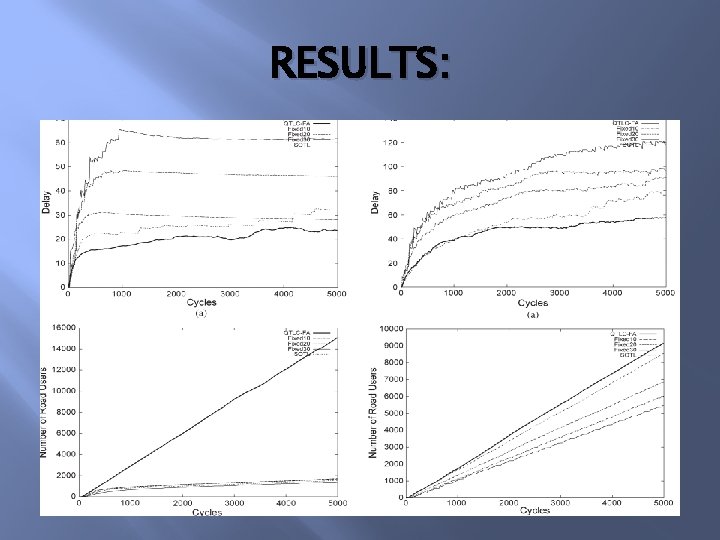

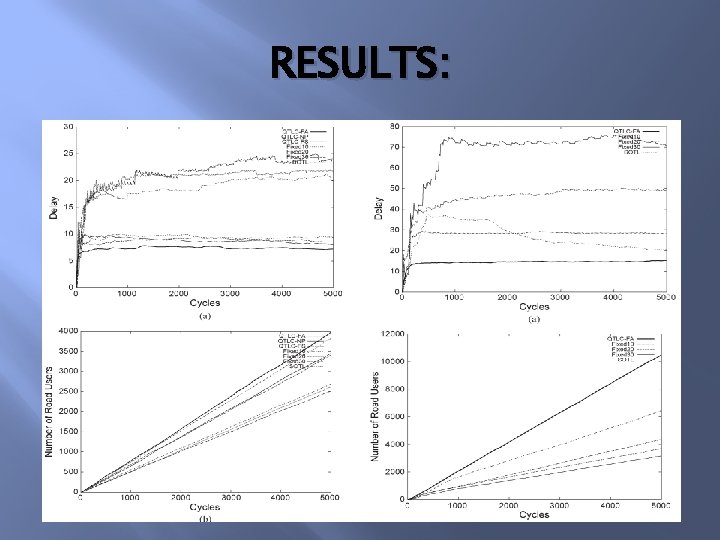

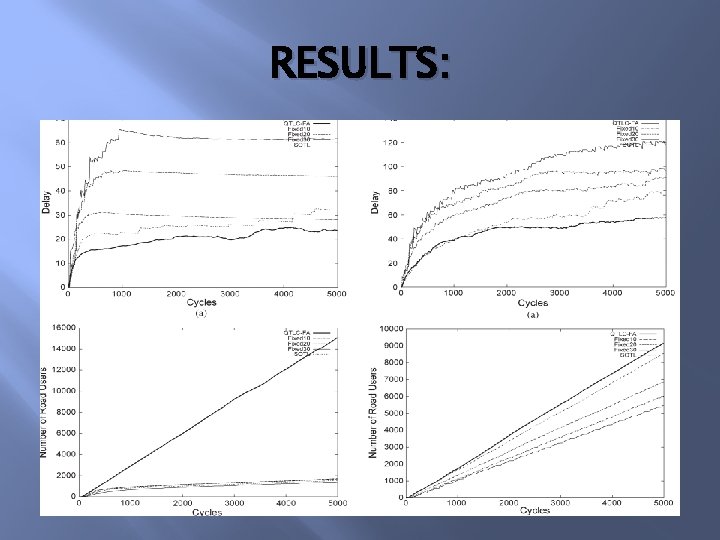

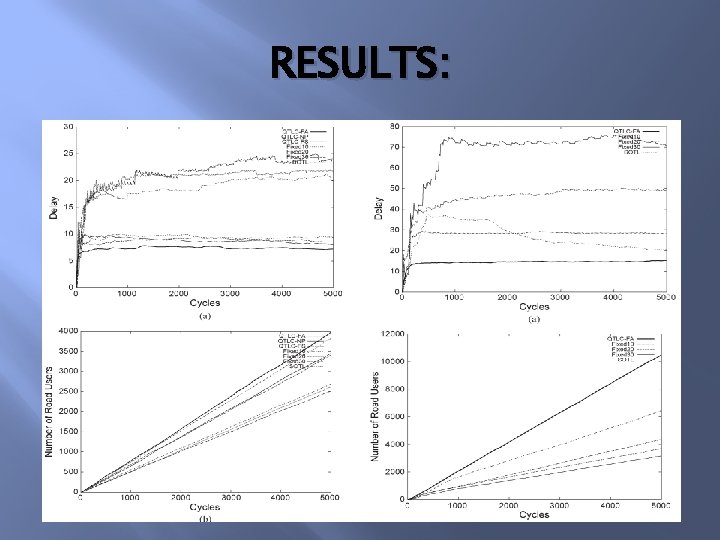

RESULTS: The QTLC-FA algorithm (Q-learning TLC with function approximation) is implemented. A number of other algorithms are also implemented for comparison with it. All algorithms are implemented in Green Light District, an open-source, Java-based software package. The single-stage cost function is k(s, a), with r1 = s1 = 0.5.
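The slide gives only the name k(s, a) and the setting r1 = s1 = 0.5. As a rough illustration, the sketch below assumes that r1 weights the total queue length and s1 weights the total elapsed time since each lane last had a green signal. That functional form, the function name and the argument names are assumptions made for illustration, not the formula from the slides.

```python
from typing import Sequence

R1 = 0.5  # value stated on the slide; assumed here to weight queue lengths
S1 = 0.5  # value stated on the slide; assumed here to weight elapsed times


def single_stage_cost(queue_lengths: Sequence[float],
                      elapsed_times: Sequence[float],
                      r1: float = R1,
                      s1: float = S1) -> float:
    """Assumed form: k = r1 * sum(queue lengths) + s1 * sum(elapsed times)."""
    if len(queue_lengths) != len(elapsed_times):
        raise ValueError("expected one queue length and one elapsed time per lane")
    return r1 * sum(queue_lengths) + s1 * sum(elapsed_times)


# Two lanes: queues of 4 and 10 vehicles, elapsed times of 30 and 0 seconds.
cost = single_stage_cost([4, 10], [30, 0])
```

With equal weights, congestion and waiting time contribute equally to the cost that the controller tries to minimize.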

RESULTS:


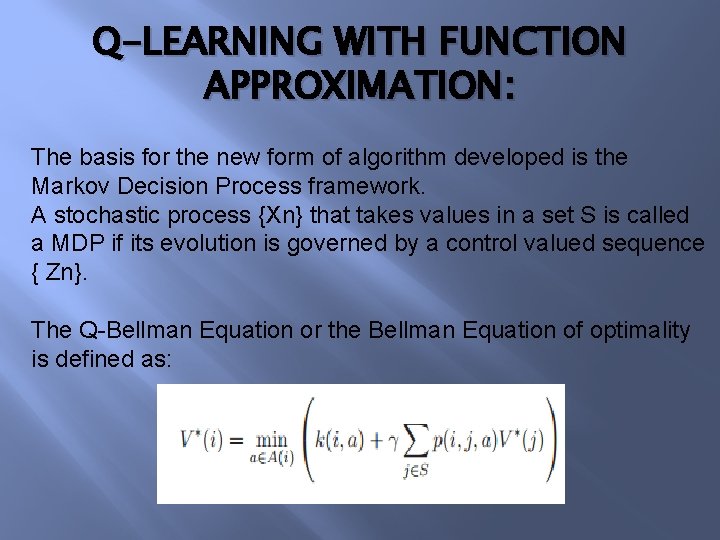

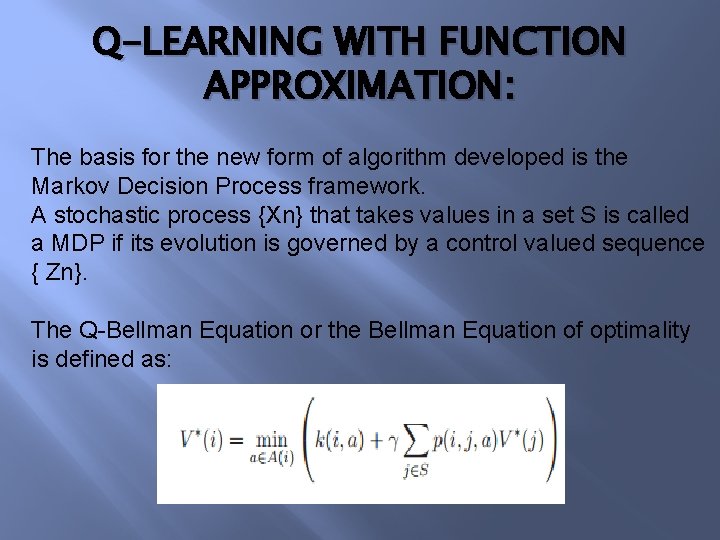

Q-LEARNING WITH FUNCTION APPROXIMATION: The new algorithm is based on the Markov Decision Process (MDP) framework. A stochastic process {X_n} that takes values in a set S is called an MDP if its evolution is governed by a control-valued sequence {Z_n}. The Q-Bellman equation, or Bellman equation of optimality, is defined as:

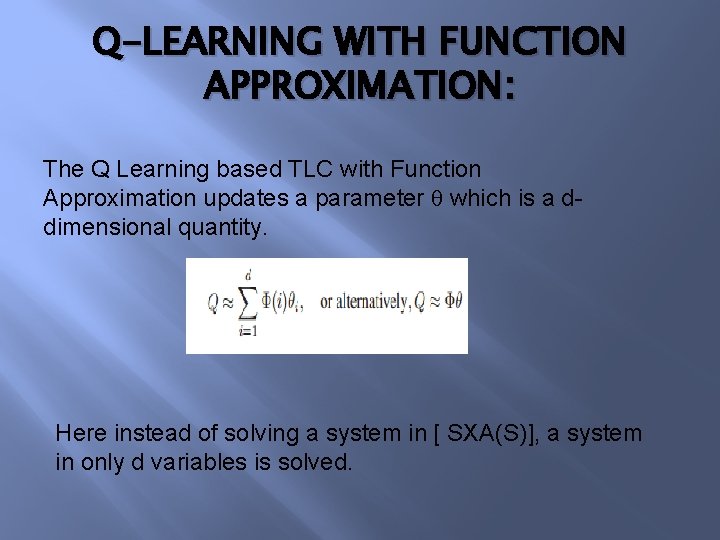

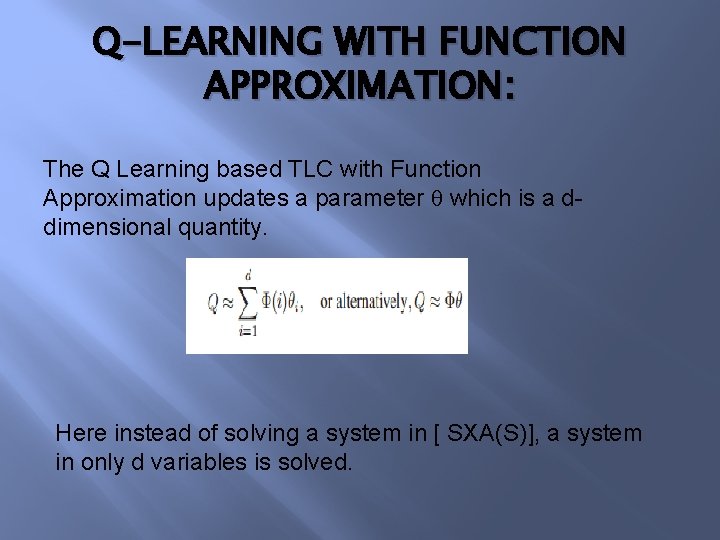
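For readers without access to the images, a commonly used discounted-cost form of the Q-Bellman equation is written out here. It may differ in notation from the slide: the transition probability p(i, a, j) and the discount factor γ are assumed symbols, while S, A(·) and k(·, ·) follow the text.

```latex
Q^*(i, a) = \sum_{j \in S} p(i, a, j)
            \left( k(i, a) + \gamma \min_{b \in A(j)} Q^*(j, b) \right),
\qquad i \in S,\ a \in A(i)
```

The minimum appears because k is a cost to be reduced, so the optimal action in state i is the one that minimizes Q*(i, a).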

Q-LEARNING WITH FUNCTION APPROXIMATION: The Q-learning-based TLC with function approximation updates a parameter θ, which is a d-dimensional quantity. Here, instead of solving a system in |S × A(S)| variables, a system in only d variables is solved.
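A minimal sketch of how a d-dimensional θ can be updated, assuming the usual linear approximation Q(s, a) ≈ θ · σ(s, a) and a cost-minimizing Q-learning step. The feature vectors, step size and discount factor are illustrative assumptions, and the function names are hypothetical rather than taken from the slides.

```python
from typing import List, Sequence


def q_value(theta: Sequence[float], features: Sequence[float]) -> float:
    """Approximate Q(s, a) as the inner product of theta and the feature vector."""
    return sum(t * f for t, f in zip(theta, features))


def q_update(theta: Sequence[float],
             features: Sequence[float],
             cost: float,
             next_features: Sequence[Sequence[float]],
             step_size: float = 0.1,   # assumed value
             discount: float = 0.9     # assumed value
             ) -> List[float]:
    """One Q-learning step on theta; only d numbers are stored and updated.

    next_features holds one feature vector per action available in the next state.
    """
    # Costs are minimized, so the target uses the smallest next-state Q-value.
    best_next = min(q_value(theta, f) for f in next_features)
    td_error = cost + discount * best_next - q_value(theta, features)
    return [t + step_size * td_error * f for t, f in zip(theta, features)]


theta = [0.0, 0.0, 0.0]
theta = q_update(theta, [1.0, 0.0, 1.0], cost=2.0,
                 next_features=[[0.0, 1.0, 0.0], [1.0, 1.0, 1.0]])
```

The memory and per-step work scale with d rather than with the number of state-action pairs, which is the efficiency gain the slide describes.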

Q-LEARNING WITH FUNCTION APPROXIMATION: The metrics used for this algorithm are:

- The number of lanes, N
- The thresholds on queue lengths, L1 and L2
- The threshold on elapsed time, T1

None of these metrics depends on the full state representation of the entire network.
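The thresholds above can be turned into per-lane features along the following lines. This is a sketch under stated assumptions: the handling of values exactly at a threshold, the two-level label for elapsed time, the function names and the numeric thresholds in the usage line are all illustrative and not taken from the slides.

```python
from typing import List, Sequence, Tuple


def congestion_level(queue_length: float, l1: float, l2: float) -> str:
    """Map a lane's queue length to low / medium / high using thresholds L1 < L2."""
    if queue_length < l1:
        return "low"
    if queue_length <= l2:
        return "medium"
    return "high"


def elapsed_level(elapsed_time: float, t1: float) -> str:
    """Flag lanes whose elapsed time exceeds T1 (assumed two-level split)."""
    return "high" if elapsed_time > t1 else "low"


def lane_features(queue_lengths: Sequence[float],
                  elapsed_times: Sequence[float],
                  l1: float, l2: float, t1: float) -> List[Tuple[str, str]]:
    """Build one (congestion, elapsed) label pair per lane, N lanes in total."""
    return [(congestion_level(q, l1, l2), elapsed_level(t, t1))
            for q, t in zip(queue_lengths, elapsed_times)]


# Hypothetical thresholds for three lanes.
features = lane_features([3, 10, 20], [50, 200, 10], l1=6, l2=14, t1=130)
```

Each lane is described by a small, fixed set of labels, so the feature size grows with the number of lanes N rather than with the number of possible network states.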

CONCLUSION: The QTLC-FA Algorithm outperforms all the other algorithms it is compared with. Future work would involve the application of other efficient RL algorithms with function approximation. Effects of driver behavior could also be incorporated into the framework.