Reinforcement Learning: Learning Algorithms, Function Approximation (Yishay Mansour)


Yishay Mansour, Tel-Aviv University

Outline
- Week I: Basics
  - Mathematical model (MDP)
  - Planning
    - Value iteration
    - Policy iteration
- Week II: Learning algorithms
  - Model based
  - Model free
- Week III: Large state space

Learning Algorithms
- Given only the ability to perform actions (and observe the resulting rewards and states), perform:
  1. Policy evaluation.
  2. Control: find an optimal policy.
- Two approaches:
  1. Model based (dynamic programming).
  2. Model free (Q-learning, SARSA).

Learning: Policy Improvement
- Assume that, given a policy $\pi$, we can compute the $V$ and $Q$ functions of $\pi$.
- Then we can perform policy improvement: $\pi' = \mathrm{Greedy}(Q^\pi)$.
- The process converges to an optimal policy if the estimates are accurate.
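
To make the improvement loop concrete, here is a minimal sketch in which $V$ and $Q$ are computed exactly from a known model. This is therefore planning-style policy iteration rather than learning. The 3-state chain, `GAMMA`, and every function name are hypothetical and chosen only for illustration.

```python
GAMMA = 0.9
STATES, ACTIONS = range(3), range(2)   # actions: 0 = left, 1 = right

def transitions(s, a):
    """Hypothetical known model: list of (probability, next_state, reward)."""
    if s == 2:                                  # absorbing goal state
        return [(1.0, 2, 0.0)]
    if a == 1:                                  # right; reward 1 on reaching the goal
        return [(1.0, s + 1, 1.0 if s + 1 == 2 else 0.0)]
    return [(1.0, max(s - 1, 0), 0.0)]          # left

def backup(v, s, a):
    """Expected one-step return r + GAMMA * V(s') for taking action a in state s."""
    return sum(p * (r + GAMMA * v[n]) for p, n, r in transitions(s, a))

def evaluate(policy, sweeps=500):
    """Policy evaluation: repeatedly apply V(s) <- backup(V, s, policy[s])."""
    v = [0.0 for _ in STATES]
    for _ in range(sweeps):
        v = [backup(v, s, policy[s]) for s in STATES]
    return v

def greedy(v):
    """Greedy(Q^pi) with Q^pi(s, a) = backup(V^pi, s, a); ties go to the lower action."""
    return [max(ACTIONS, key=lambda a: backup(v, s, a)) for s in STATES]

def policy_iteration():
    policy = [0 for _ in STATES]                # start from "always left"
    while True:
        improved = greedy(evaluate(policy))
        if improved == policy:                  # no state has a better action
            return policy
        policy = improved

print(policy_iteration())  # [1, 1, 0]
```

The loop stops as soon as the greedy policy no longer changes. In this toy model that happens after two improvement steps, when both non-goal states move right. The action chosen in the absorbing state is irrelevant because both actions have value 0 there.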

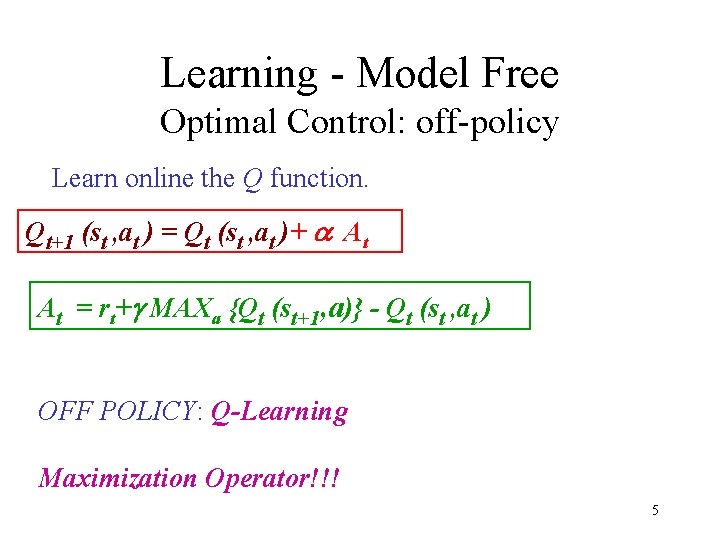

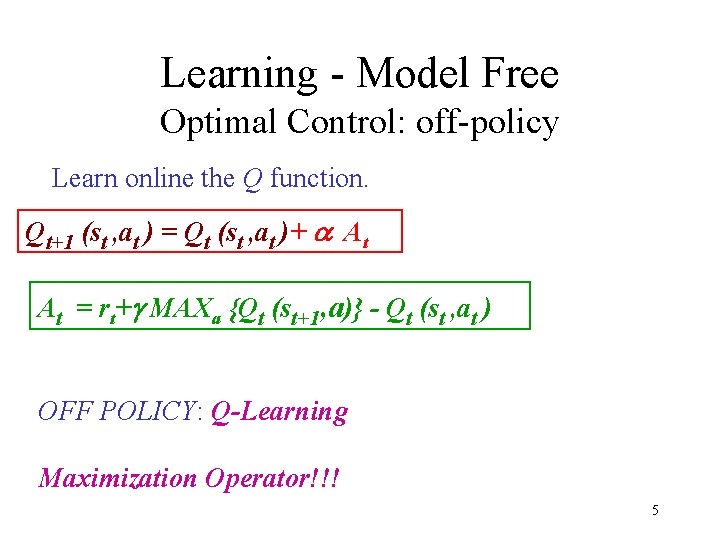

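Learning - Model Free: Optimal Control, Off-Policy
- Learn the $Q$ function online: $Q_{t+1}(s_t, a_t) = Q_t(s_t, a_t) + \alpha \Delta_t$, where $\Delta_t = r_t + \gamma \max_a Q_t(s_{t+1}, a) - Q_t(s_t, a_t)$.
- Off-policy: Q-learning. Note the maximization operator in the target: the update does not depend on which action is actually taken next.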
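
A minimal tabular sketch of the off-policy update follows. It assumes a hypothetical 5-state chain: the agent moves left or right, and reaching the right end gives reward 1 and ends the episode. The behaviour policy here is uniformly random. Because the target takes the max over next actions, the table should still approach $Q^*$. `step`, `q_learning`, and the parameter values are illustrative choices, not part of the original material.

```python
import random

GOAL = 4                     # hypothetical chain: states 0..4, state 4 is terminal
ACTIONS = (0, 1)             # 0 = left, 1 = right

def step(s, a):
    """Deterministic chain; reward 1 for reaching the goal, 0 otherwise."""
    nxt = min(s + 1, GOAL) if a == 1 else max(s - 1, 0)
    return nxt, (1.0 if nxt == GOAL else 0.0)

def q_learning(episodes=500, alpha=0.1, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(GOAL + 1)]  # q[s][a]; the terminal row stays 0
    for _ in range(episodes):
        s = 0
        while s != GOAL:
            a = rng.choice(ACTIONS)             # behaviour policy: uniformly random
            s2, r = step(s, a)
            # Target uses the max over next actions, whatever is actually played next.
            target = r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])   # alpha times the TD error
            s = s2
    return q
```

The agent only ever acts randomly. Even so, the greedy policy read off `q` (argmax per row) should move right in every non-terminal state, which is what "off-policy" buys.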

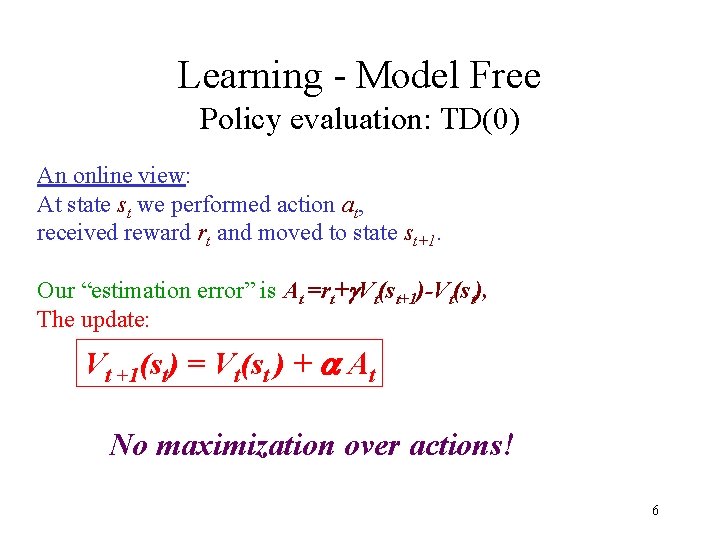

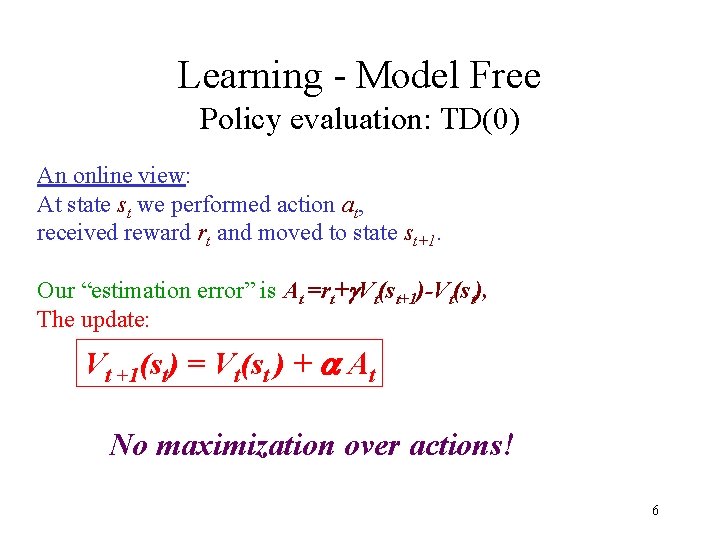

Learning - Model Free: Policy Evaluation, TD(0)
- An online view: at state $s_t$ we performed action $a_t$, received reward $r_t$ and moved to state $s_{t+1}$.
- Our "estimation error" is $\Delta_t = r_t + \gamma V_t(s_{t+1}) - V_t(s_t)$.
- The update: $V_{t+1}(s_t) = V_t(s_t) + \alpha \Delta_t$.
- No maximization over actions!
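
As a sketch of the TD(0) update, the code below evaluates the uniformly random policy on a hypothetical 5-state random walk, the classic textbook example. States 1..5 sit between terminal states 0 (reward 0) and 6 (reward 1), with $\gamma = 1$, so the true values are $1/6, \ldots, 5/6$. The step size and episode count are assumptions picked so that the constant-step noise stays small.

```python
import random

def td0_random_walk(episodes=10000, alpha=0.005, gamma=1.0, seed=0):
    """TD(0) evaluation of the random policy on a hypothetical 5-state walk."""
    rng = random.Random(seed)
    v = [0.0] + [0.5] * 5 + [0.0]        # terminal values stay fixed at 0
    for _ in range(episodes):
        s = 3                             # start in the middle state
        while s not in (0, 6):
            s2 = s + rng.choice((-1, 1))  # policy: step left or right at random
            r = 1.0 if s2 == 6 else 0.0
            delta = r + gamma * v[s2] - v[s]   # estimation error; no max over actions
            v[s] += alpha * delta
            s = s2
    return v[1:6]
```

Only the state just left, $s_t$, is updated, and the update bootstraps from the current estimate of $s_{t+1}$ rather than waiting for the episode's return.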

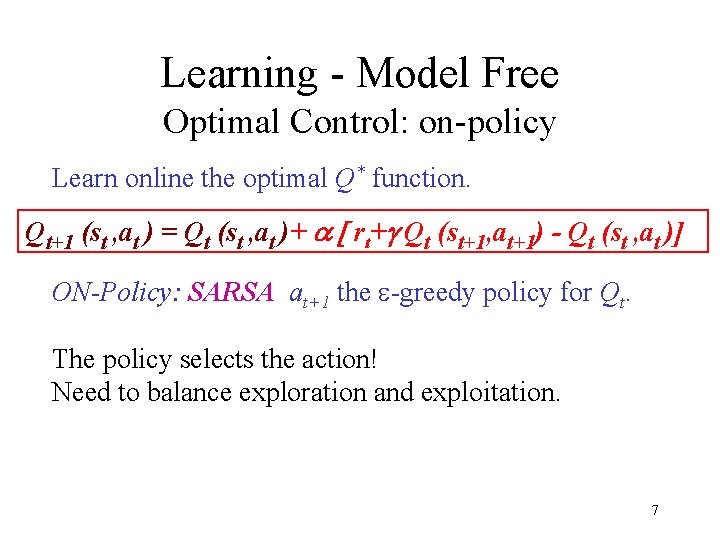

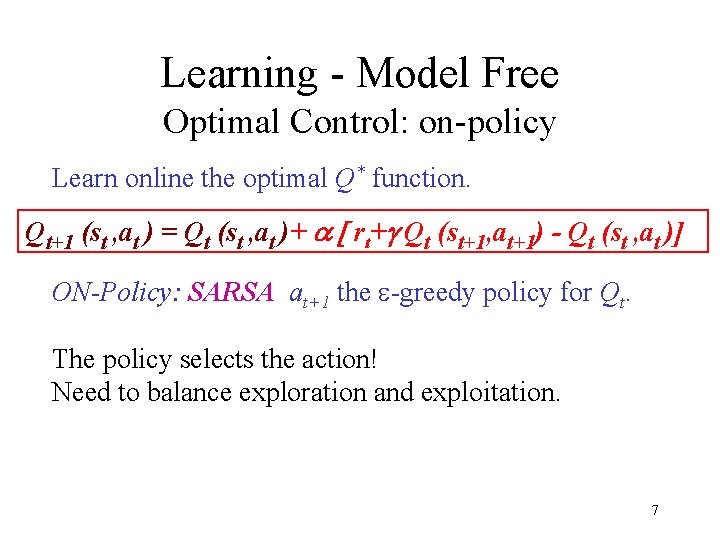

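Learning - Model Free: Optimal Control, On-Policy
- Learn the optimal $Q^*$ function online: $Q_{t+1}(s_t, a_t) = Q_t(s_t, a_t) + \alpha \left[ r_t + \gamma Q_t(s_{t+1}, a_{t+1}) - Q_t(s_t, a_t) \right]$.
- On-policy: SARSA. The action $a_{t+1}$ is chosen by the $\epsilon$-greedy policy for $Q_t$, so the policy selects the action!
- Need to balance exploration and exploitation (converging to $Q^*$ requires $\epsilon$ to decrease over time).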
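
The on-policy version differs from Q-learning in one line: the target uses the action the $\epsilon$-greedy policy actually picks next. The sketch below reuses a hypothetical 5-state chain (reward 1 on reaching the right end). It keeps $\epsilon$ fixed, so it estimates the $Q$ function of the $\epsilon$-greedy policy rather than $Q^*$ exactly. Ties are broken at random so that the all-zero initial table does not lock the agent into one direction. All names and constants are illustrative.

```python
import random

GOAL = 4                     # hypothetical chain: states 0..4, state 4 is terminal
ACTIONS = (0, 1)             # 0 = left, 1 = right

def step(s, a):
    nxt = min(s + 1, GOAL) if a == 1 else max(s - 1, 0)
    return nxt, (1.0 if nxt == GOAL else 0.0)

def eps_greedy(q, s, eps, rng):
    """Explore with probability eps; otherwise greedy, breaking ties at random."""
    if rng.random() < eps:
        return rng.choice(ACTIONS)
    best = max(q[s])
    return rng.choice([a for a in ACTIONS if q[s][a] == best])

def sarsa(episodes=1000, alpha=0.1, gamma=0.9, eps=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(GOAL + 1)]
    for _ in range(episodes):
        s = 0
        a = eps_greedy(q, s, eps, rng)
        while s != GOAL:
            s2, r = step(s, a)
            if s2 == GOAL:
                target, a2 = r, None                # terminal: no next action
            else:
                a2 = eps_greedy(q, s2, eps, rng)    # the policy selects a_{t+1}
                target = r + gamma * q[s2][a2]      # no max: uses a_{t+1}
            q[s][a] += alpha * (target - q[s][a])
            s, a = s2, a2
    return q
```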

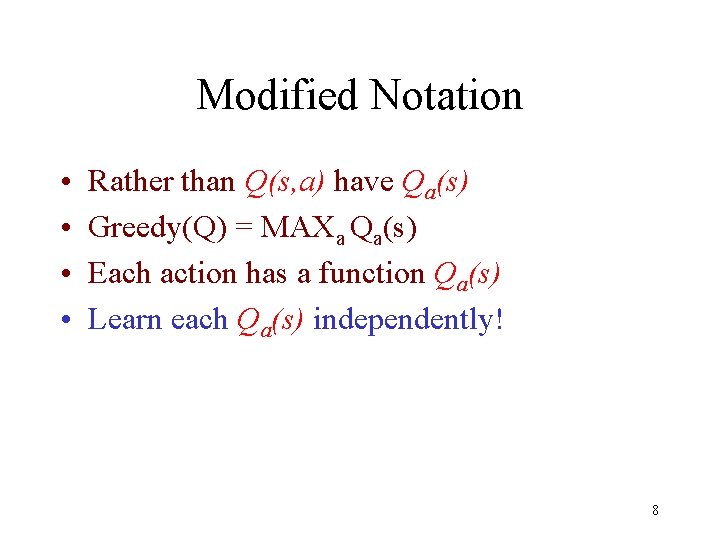

Modified Notation
- Rather than $Q(s, a)$, write $Q_a(s)$: each action has its own function $Q_a(s)$.
- $\mathrm{Greedy}(Q)(s) = \arg\max_a Q_a(s)$.
- Learn each $Q_a(s)$ independently!

Large State Space
- Reduce the number of states:
  - Symmetries (X-O)
  - Cluster states:
    - Define attributes
    - Limited number of attributes
    - Some distinct states will become identical (same attribute values)

Example: X-O
- For each action (square):
  - Consider the row, column, and diagonal(s) through it.
  - The state encodes the status of these "rows":
    - Two X's
    - Two O's
    - Mixed (both X and O)
    - One X
    - One O
    - Empty
  - By symmetry there are only three types of squares/actions (corner, edge, center).
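
One way to turn the "status of rows" idea into an attribute vector is sketched below: for a given empty square, count how many of the lines through it fall into each of the six statuses. Encoding the status as counts, the board layout (a list of 9 cells holding `'X'`, `'O'` or `' '`), and all function names are assumptions made for illustration.

```python
# Hypothetical board layout: 9 cells indexed 0..8 row by row, each 'X', 'O' or ' '.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),      # rows
         (0, 3, 6), (1, 4, 7), (2, 5, 8),      # columns
         (0, 4, 8), (2, 4, 6)]                 # diagonals
STATUSES = ("two_x", "two_o", "mixed", "one_x", "one_o", "empty")

def line_status(board, line):
    """Classify a line that still has at least one empty cell."""
    xs = sum(board[i] == 'X' for i in line)
    os_ = sum(board[i] == 'O' for i in line)
    if xs and os_:
        return "mixed"
    if xs == 2:
        return "two_x"
    if os_ == 2:
        return "two_o"
    if xs == 1:
        return "one_x"
    if os_ == 1:
        return "one_o"
    return "empty"

def square_features(board, square):
    """Counts of line statuses over the lines through an empty (legal) square."""
    assert board[square] == ' ', "only legal moves (empty squares) are encoded"
    counts = dict.fromkeys(STATUSES, 0)
    for line in LINES:
        if square in line:
            counts[line_status(board, line)] += 1
    return [counts[k] for k in STATUSES]

def square_type(square):
    """The three symmetry classes of squares."""
    if square == 4:
        return "center"
    return "corner" if square in (0, 2, 6, 8) else "edge"
```

On an empty board the center lies on 4 lines, a corner on 3 and an edge square on 2. This is why the three square types need separate weights.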

Clustering States
- Need to create attributes.
- Attributes should be "game dependent".
- Different "real" states may share the same representation.
- How do we choose a move?
  - Estimate the value of each action.
  - Consider only legal actions.
  - Play the "best" action.

Function Approximation
- Use a limited model for $Q_a(s)$.
- Have an attribute vector: each state $s$ has a vector $\mathrm{vec}(s) = (x_1, \ldots, x_k)$, where normally $k \ll |S|$.
- Examples:
  - Neural network
  - Decision tree
  - Linear function: weights $\theta_1, \ldots, \theta_k$, value $\sum_i \theta_i x_i$
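
A minimal sketch of the linear case combines it with the "consider only legal actions, play the best action" rule from clustering states. Each action $a$ has its own weight vector, $Q_a(s) = \sum_i \theta_i x_i$ is computed from that action's attribute vector, and the argmax is taken over the legal actions only. The dictionary-based interface and the action names are hypothetical.

```python
from typing import Dict, Sequence

def linear_value(theta: Sequence[float], x: Sequence[float]) -> float:
    """Q_a(s) = sum_i theta_i * x_i, where x is the attribute vector for action a."""
    return sum(t * xi for t, xi in zip(theta, x))

def best_legal_action(weights: Dict[str, Sequence[float]],
                      features: Dict[str, Sequence[float]]) -> str:
    """Return the legal action with the highest estimated Q_a(s).

    `features` holds attribute vectors for the legal actions only, so an
    illegal action is never chosen even if it has (large) weights.
    """
    return max(features, key=lambda a: linear_value(weights[a], features[a]))

# Illustrative use: action "c" has large weights but is not legal here.
weights = {"a": [1.0, 0.0], "b": [0.0, 2.0], "c": [10.0, 10.0]}
print(best_legal_action(weights, {"a": [3.0, 1.0], "b": [1.0, 1.0]}))  # a
```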

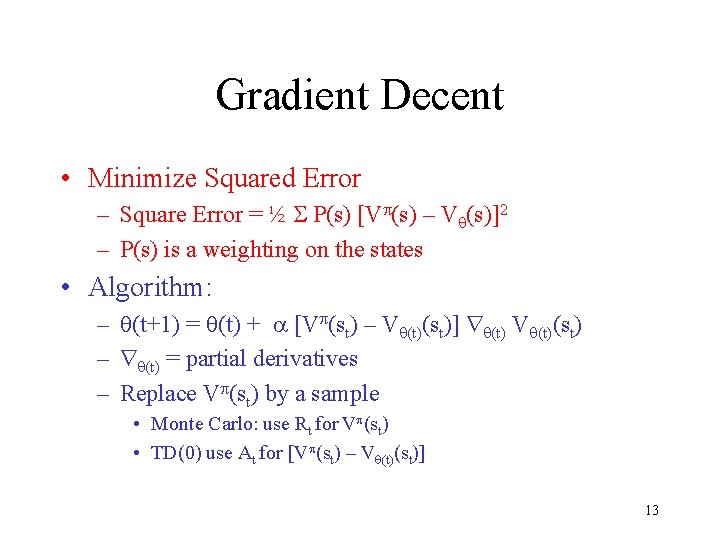

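Gradient Descent
- Minimize the squared error: $\mathrm{SE}(\theta) = \frac{1}{2} \sum_s P(s) \left[ V^\pi(s) - V_\theta(s) \right]^2$, where $P(s)$ is a weighting on the states.
- Algorithm: $\theta_{t+1} = \theta_t + \alpha \left[ V^\pi(s_t) - V_{\theta_t}(s_t) \right] \nabla_\theta V_{\theta_t}(s_t)$, where $\nabla_\theta$ is the vector of partial derivatives with respect to $\theta$.
- Replace $V^\pi(s_t)$ by a sample:
  - Monte Carlo: use the return $R_t$ for $V^\pi(s_t)$.
  - TD(0): use $\Delta_t$ for $\left[ V^\pi(s_t) - V_{\theta_t}(s_t) \right]$.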
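
The update rule follows from differentiating the weighted squared error. The derivation below fills in that step, under the usual assumption that $s_t$ is drawn according to $P(s)$, so that a single sampled term stands in for the sum. The factor $\frac{1}{2}$ cancels the 2 from the chain rule, and the step moves against the gradient with step size $\alpha$:

$$
\begin{aligned}
\mathrm{SE}(\theta) &= \tfrac{1}{2} \sum_s P(s) \left[ V^\pi(s) - V_\theta(s) \right]^2 \\
\nabla_\theta \mathrm{SE}(\theta) &= -\sum_s P(s) \left[ V^\pi(s) - V_\theta(s) \right] \nabla_\theta V_\theta(s) \\
\theta_{t+1} &= \theta_t + \alpha \left[ V^\pi(s_t) - V_{\theta_t}(s_t) \right] \nabla_\theta V_{\theta_t}(s_t)
\end{aligned}
$$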

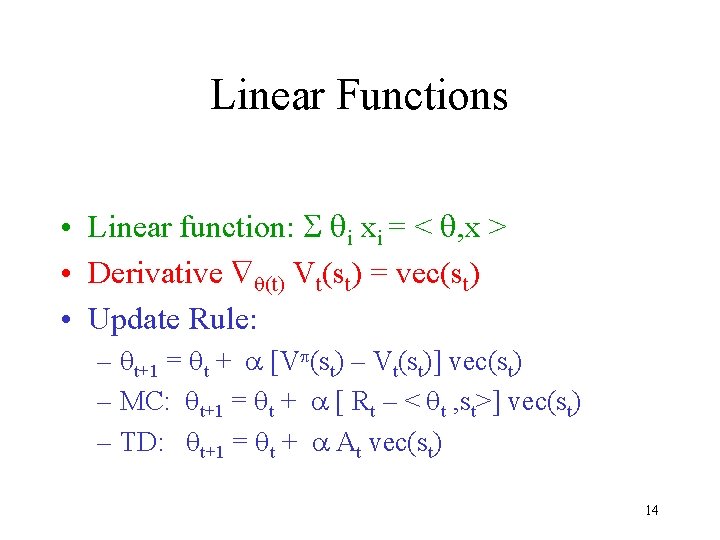

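Linear Functions
- Linear function: $\sum_i \theta_i x_i = \langle \theta, x \rangle$, so $V_t(s) = \langle \theta_t, \mathrm{vec}(s) \rangle$.
- Derivative: $\nabla_\theta V_t(s_t) = \mathrm{vec}(s_t)$.
- Update rule:
  - $\theta_{t+1} = \theta_t + \alpha \left[ V^\pi(s_t) - V_t(s_t) \right] \mathrm{vec}(s_t)$
  - MC: $\theta_{t+1} = \theta_t + \alpha \left[ R_t - \langle \theta_t, \mathrm{vec}(s_t) \rangle \right] \mathrm{vec}(s_t)$
  - TD: $\theta_{t+1} = \theta_t + \alpha \Delta_t \, \mathrm{vec}(s_t)$, with $\Delta_t = r_t + \gamma \langle \theta_t, \mathrm{vec}(s_{t+1}) \rangle - \langle \theta_t, \mathrm{vec}(s_t) \rangle$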
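
The two linear update rules translate almost line for line into code. The sketch below uses plain lists for $\theta$ and $\mathrm{vec}(s)$. The `terminal` flag, which treats the value after a terminal transition as 0, is an assumption added so the TD rule can be used at episode ends; the function names are illustrative.

```python
def dot(theta, x):
    """<theta, x> = sum_i theta_i * x_i."""
    return sum(t * xi for t, xi in zip(theta, x))

def mc_update(theta, x, ret, alpha):
    """theta <- theta + alpha * [R_t - <theta, vec(s_t)>] * vec(s_t)."""
    err = ret - dot(theta, x)
    return [t + alpha * err * xi for t, xi in zip(theta, x)]

def td0_update(theta, x, r, x_next, alpha, gamma, terminal=False):
    """theta <- theta + alpha * Delta_t * vec(s_t), with the TD error Delta_t."""
    v_next = 0.0 if terminal else dot(theta, x_next)
    delta = r + gamma * v_next - dot(theta, x)
    return [t + alpha * delta * xi for t, xi in zip(theta, x)]

# One MC step: error 1 - 0 = 1, so theta moves by 0.1 * vec(s) = [0.1, 0.2].
print(mc_update([0.0, 0.0], [1.0, 2.0], 1.0, 0.1))
```

Since the gradient of a linear function is just $\mathrm{vec}(s_t)$, each update costs $O(k)$ regardless of $|S|$.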

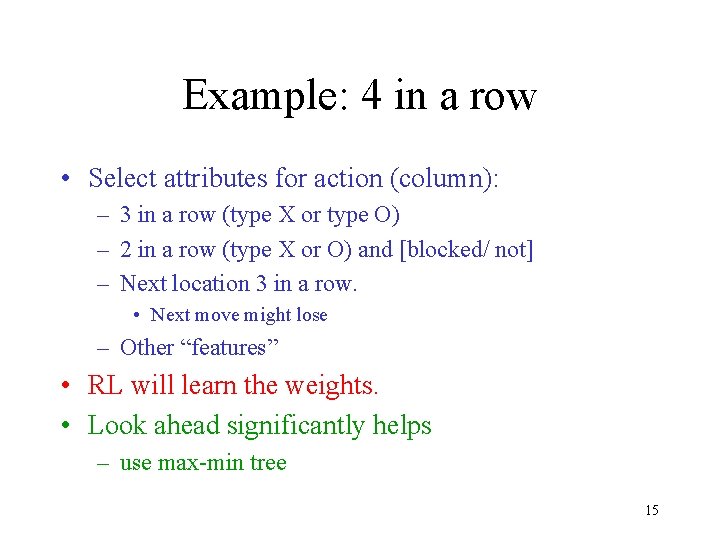

Example: 4 in a Row
- Select attributes for each action (column):
  - 3 in a row (type X or type O)
  - 2 in a row (type X or O), blocked or not
  - Next location is 3 in a row: the next move might lose
  - Other "features"
- RL will learn the weights.
- Look-ahead helps significantly: use a max-min tree.

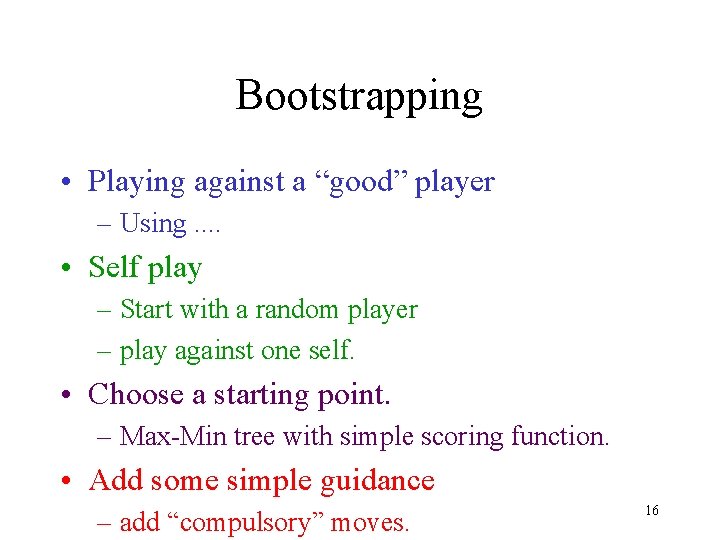

Bootstrapping
- Playing against a "good" player
  - Using…
- Self-play
  - Start with a random player
  - Play against itself
- Choose a starting point
  - Max-min tree with a simple scoring function
- Add some simple guidance
  - Add "compulsory" moves

Scoring Function
- Checkers: number of pieces, number of queens
- Chess: weighted sum of pieces
- Othello/Reversi: difference in number of pieces
- Can be used with a max-min tree with $(\alpha, \beta)$ pruning
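
The following is a generic sketch of a depth-limited max-min search with $(\alpha, \beta)$ pruning, in which a scoring function is applied at the depth cut-off and at leaves. The `children` and `score` callables are hypothetical hooks that a concrete game would supply: for example, move generation and a piece-difference count.

```python
import math

def alphabeta(node, depth, alpha, beta, maximizing, children, score):
    """Max-min value of `node`, searching `depth` plies with (alpha, beta) pruning."""
    kids = children(node)
    if depth == 0 or not kids:
        return score(node)              # scoring function at the search frontier
    if maximizing:
        value = -math.inf
        for child in kids:
            value = max(value, alphabeta(child, depth - 1, alpha, beta,
                                         False, children, score))
            alpha = max(alpha, value)
            if alpha >= beta:
                break                   # MIN already has a better option elsewhere
        return value
    value = math.inf
    for child in kids:
        value = min(value, alphabeta(child, depth - 1, alpha, beta,
                                     True, children, score))
        beta = min(beta, value)
        if alpha >= beta:
            break                       # MAX already has a better option elsewhere
    return value

# Toy tree: MAX at "A", MIN at "B" and "C"; leaf "G" is never scored (pruned).
tree = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F", "G"]}
leaf = {"D": 3, "E": 5, "F": 2, "G": 9}
print(alphabeta("A", 2, -math.inf, math.inf, True,
                lambda n: tree.get(n, []), lambda n: leaf.get(n, 0)))  # 3
```

After "B" is worth 3 to MAX, finding "F" = 2 under "C" shows that "C" can be worth at most 2, so "G" need not be examined.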

Example: Reversi (Othello)
- Use a simple scoring function:
  - Difference in pieces
  - Edge pieces
  - Corner pieces
- Use a max-min tree.
- RL: optimize the weights.
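
This sketch shows one possible scoring function of this form. Each feature is a difference (my count minus the opponent's), and the score is a weighted sum whose weights RL would tune. The board encoding (8 strings over `'B'`, `'W'`, `'.'`), the choice to count corners separately from edges, and the sample weights are all assumptions.

```python
def othello_features(board, me, opp):
    """[piece difference, edge-piece difference, corner-piece difference].

    Edge pieces exclude corners, which are counted in their own feature.
    """
    n = len(board)
    corners = {(0, 0), (0, n - 1), (n - 1, 0), (n - 1, n - 1)}
    diff = edge = corner = 0
    for r, row in enumerate(board):
        for c, cell in enumerate(row):
            sign = 1 if cell == me else -1 if cell == opp else 0
            if sign == 0:
                continue
            diff += sign
            if (r, c) in corners:
                corner += sign
            elif r in (0, n - 1) or c in (0, n - 1):
                edge += sign
    return [diff, edge, corner]

def othello_score(board, me, opp, weights):
    """Linear scoring function: sum of weight * feature."""
    return sum(w * f for w, f in zip(weights, othello_features(board, me, opp)))
```

A function like `othello_score` can serve as the leaf evaluation in the max-min tree, with hypothetical starting weights such as `[1.0, 5.0, 25.0]` that learning would then adjust.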

Advanced Issues
- Time constraints: fast and slow modes
- Opening: can help
- End game: many cases have few pieces and can be solved efficiently
- Train on a specific state: might be helpful, but it is not clear that it's worth the effort

What is Next?
- Create teams: DONE!
- Basic implementation: deadline Dec 17, 2012
- System specification
  - Project outline
  - High-level component planning
  - Dec 31, 2012
  - Class presentation: Jan 7, 2012

Schedule (more)
- Build system
- Project completion: April 15, 2012
- All supporting documents in HTML!
- From next week:
  - Each group works on its own.
  - Feel free to contact us.