Regression

Hal Varian, 10 April 2006


What is regression?
- History
- Curve fitting vs. statistics
- Correlation and causation
- Statistical models
  - Gauss-Markov theorem
  - Maximum likelihood
  - Conditional mean
- What can go wrong…
- Examples

Francis Galton, 1877
- Plotted the first regression line:
  - Diameter of sweet peas vs. diameter of their parents
  - Heights of fathers vs. heights of sons
- Sons of unusually tall fathers tend to be tall, but shorter than their fathers.
  - Galton called this “regression to mediocrity”.
  - But this is also true the other way around: fathers of unusually tall sons tend to be tall, but shorter than their sons!
- Regression-to-the-mean fallacy:
  - Pick the lowest-scoring 10% on the midterm and give them extra tutoring.
  - If they do better on the final, what can you conclude? Did the tutoring help?
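The tutoring question can be explored with a small simulation. This is a sketch under assumed numbers that are not from the slides: ability ~ N(70, 10), independent N(0, 10) noise on each exam, 10,000 students, and no tutoring effect at all. The bottom 10% on the midterm still score noticeably higher on the final, purely through regression to the mean.

```python
import random
import statistics


def regression_to_mean_demo(n_students=10_000, seed=0):
    """Return (midterm mean, final mean) for the bottom 10% on the midterm.

    Assumed model (illustrative only): score = ability + independent noise,
    with no tutoring or other treatment effect between the two exams.
    """
    rng = random.Random(seed)
    ability = [rng.gauss(70, 10) for _ in range(n_students)]
    midterm = [a + rng.gauss(0, 10) for a in ability]
    final = [a + rng.gauss(0, 10) for a in ability]

    # Students whose midterm score falls in the lowest 10%
    cutoff = sorted(midterm)[n_students // 10]
    low = [i for i in range(n_students) if midterm[i] < cutoff]

    return (statistics.mean(midterm[i] for i in low),
            statistics.mean(final[i] for i in low))


mid, fin = regression_to_mean_demo()
print(f"bottom 10%: midterm {mid:.1f}, final {fin:.1f}")
```

Because nothing was done to these students, their improvement alone cannot show that tutoring helped; a comparison group selected the same way would be needed.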

Regression analysis
- Assume a linear relation between two variables and estimate the unknown parameters:
  - $y_t = a + b x_t + e_t$ for $t = 1, \dots, T$
  - observed = fitted + error (or residual)
  - dependent variable ~ independent variables / predictors / correlates

Curve fitting vs. regression
- Often choose $(a, b)$ to minimize the sum of squared residuals (“least squares”).
  - Why not the sum of the absolute values of the residuals?
  - Why not fit $x_t = a + b y_t$?
  - How much can you trust the estimated values?
- Need a statistical model to answer these questions!
- Linear regression: linear in the parameters
  - Nonlinear regression, local regression, generalized linear models, generalized additive models: the same principles apply.
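For one regressor, least squares has a closed form: $b = \sum_t (x_t - \bar x)(y_t - \bar y) / \sum_t (x_t - \bar x)^2$ and $a = \bar y - b \bar x$. The Python sketch below uses made-up toy data and also addresses “why not fit $x_t = a + b y_t$?”: the reverse regression minimizes horizontal rather than vertical distances, so it gives a different line unless the fit is perfect.

```python
def ols(x, y):
    """Least-squares intercept a and slope b for the line y = a + b*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


x = [1, 2, 3, 4, 5]  # toy data, for illustration only
y = [2, 4, 5, 4, 5]

a, b = ols(x, y)  # regress y on x
c, d = ols(y, x)  # regress x on y: x = c + d*y
print(f"y on x: y = {a:.2f} + {b:.2f} x")
print(f"x on y, solved for y: y = {-c / d:.2f} + {1 / d:.2f} x")
```

Here the slopes are 0.60 and 1.00. In general the product of the two slopes, $b \cdot d$, equals the squared correlation $r^2$ (0.6 for this data), so the two lines coincide only when $|r| = 1$.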

Possible goals
- Estimate parameters ($a$, $b$, and the error variance)
- Test hypotheses (such as “$x$ has no influence on $y$”)
- Make predictions about $y$ conditional on observing a new $x$ value
- Summarize data (the most common unstated goal!)
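Two of these goals, estimating the error variance and predicting $y$ at a new $x$, can be sketched in a few lines. The error variance is estimated by $s^2 = \text{RSS}/(T-2)$, since two parameters were fitted, and the standard error for a new observation at $x_0$ is the usual simple-regression formula $s\sqrt{1 + 1/T + (x_0 - \bar x)^2 / \sum_t (x_t - \bar x)^2}$, which assumes the error properties on the statistical-model slide. The function name and toy data are hypothetical.

```python
import math


def fit_and_predict(x, y, x_new):
    """Fit y = a + b*x by least squares and predict y at x_new.

    Returns (a, b, s2, y_hat, se_pred): s2 estimates the error variance,
    se_pred is the standard error for a new observation at x_new.
    """
    T = len(x)
    mx, my = sum(x) / T, sum(y) / T
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    a = my - b * mx

    rss = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    s2 = rss / (T - 2)  # two parameters (a, b) were estimated

    y_hat = a + b * x_new
    se_pred = math.sqrt(s2 * (1 + 1 / T + (x_new - mx) ** 2 / sxx))
    return a, b, s2, y_hat, se_pred


a, b, s2, y_hat, se = fit_and_predict([1, 2, 3, 4, 5], [2, 4, 5, 4, 5], x_new=6)
print(f"s^2 = {s2:.3f}, prediction at x = 6: {y_hat:.2f} (se {se:.2f})")
```

Note that the prediction standard error grows as $x_0$ moves away from $\bar x$.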

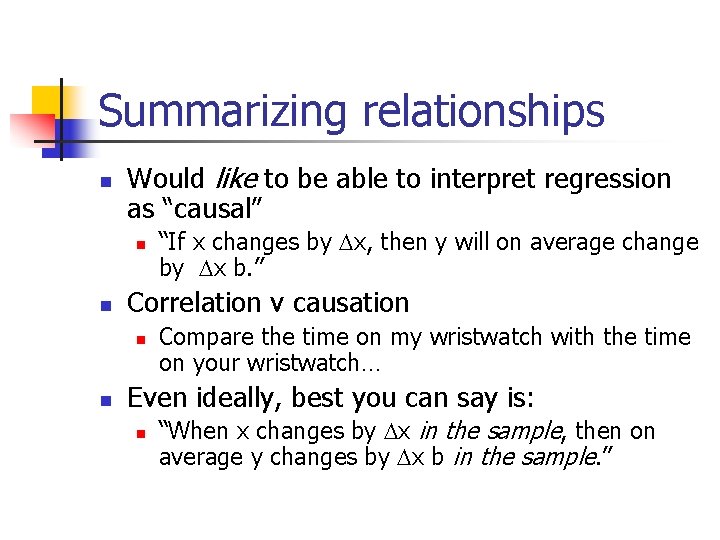

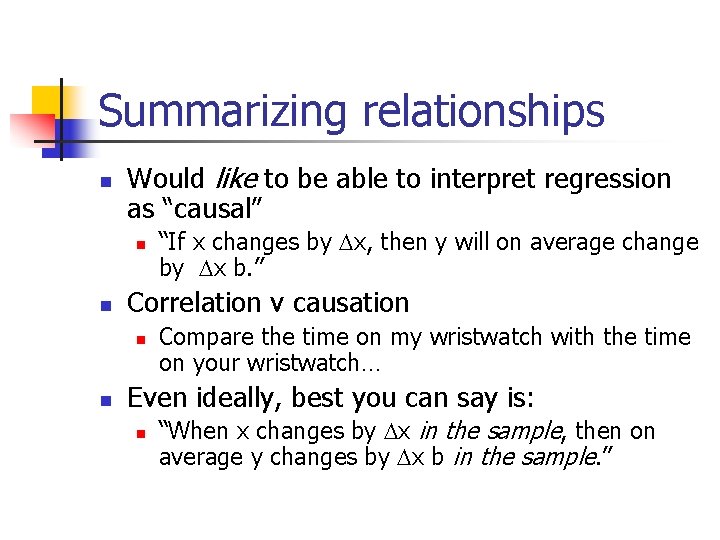

Summarizing relationships
- Would like to be able to interpret regression as “causal”:
  - “If $x$ changes by $\Delta x$, then $y$ will on average change by $b\,\Delta x$.”
- Correlation vs. causation
  - Compare the time on my wristwatch with the time on your wristwatch…
- Even ideally, the best you can say is:
  - “When $x$ changes by $\Delta x$ in the sample, then on average $y$ changes by $b\,\Delta x$ in the sample.”

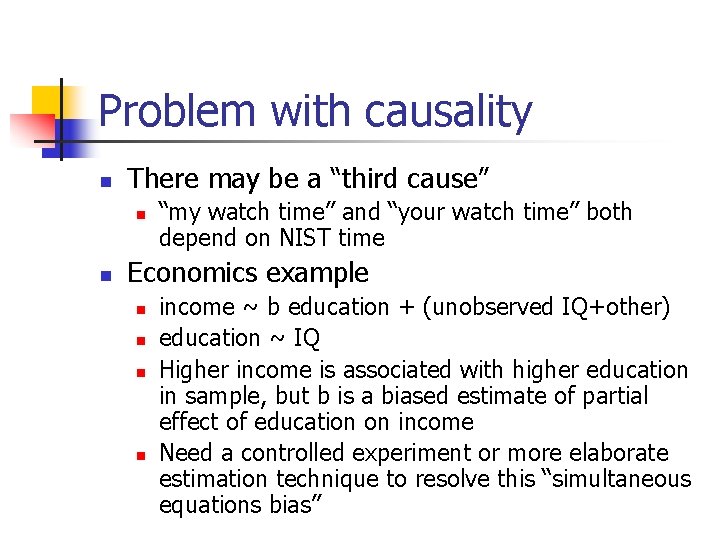

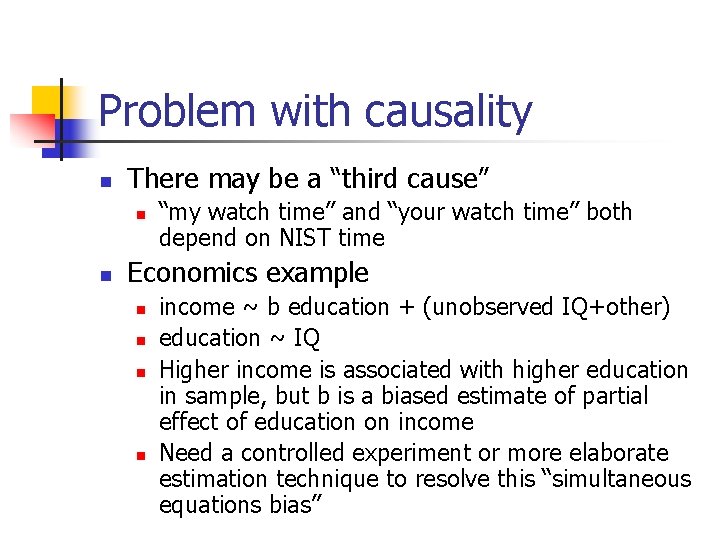

Problem with causality
- There may be a “third cause”:
  - “My watch time” and “your watch time” both depend on NIST time.
- Economics example:
  - income ~ $b$ education + (unobserved IQ + other)
  - education ~ IQ
  - Higher income is associated with higher education in the sample, but $b$ is a biased estimate of the partial effect of education on income.
- Need a controlled experiment or a more elaborate estimation technique to resolve this “simultaneous equations bias”.
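A toy simulation makes the bias in $b$ concrete. Everything here is an assumed model, not data: IQ is standard normal, education = IQ + noise, and income = 1.0·education + 2.0·IQ + noise. Regressing income on education alone credits education with part of IQ's effect; the standard omitted-variable formula predicts a slope of $1 + 2\,\operatorname{Cov}(\text{educ}, \text{IQ})/\operatorname{Var}(\text{educ}) = 2$. Adding IQ as a regressor, possible here only because the simulation lets us observe it, should recover roughly the true value of 1.

```python
import random


def cov(u, v):
    """Sample covariance with divisor n (the divisor cancels in the slope ratios)."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    return sum((ui - mu) * (vi - mv) for ui, vi in zip(u, v)) / n


def omitted_variable_demo(n=20_000, seed=1):
    """Return (slope omitting IQ, slope controlling for IQ) under an assumed model."""
    rng = random.Random(seed)
    iq = [rng.gauss(0, 1) for _ in range(n)]
    educ = [q + rng.gauss(0, 1) for q in iq]              # education ~ IQ
    income = [1.0 * e + 2.0 * q + rng.gauss(0, 1)         # true education effect: 1.0
              for e, q in zip(educ, iq)]

    # Short regression: income on education only (IQ omitted)
    b_short = cov(educ, income) / cov(educ, educ)

    # Long regression: income on education and IQ, solving the 2x2 normal equations
    s11, s22, s12 = cov(educ, educ), cov(iq, iq), cov(educ, iq)
    s1y, s2y = cov(educ, income), cov(iq, income)
    b_long = (s22 * s1y - s12 * s2y) / (s11 * s22 - s12 ** 2)
    return b_short, b_long


b_short, b_long = omitted_variable_demo()
print(f"omitting IQ: {b_short:.2f}; controlling for IQ: {b_long:.2f}; true: 1.00")
```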

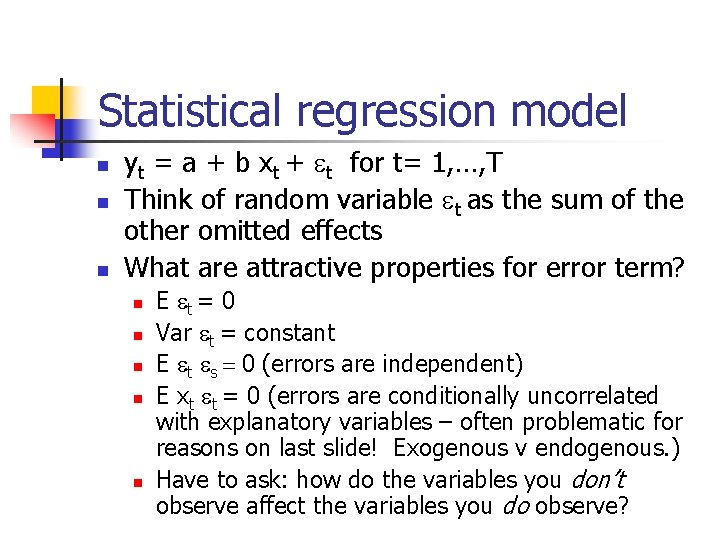

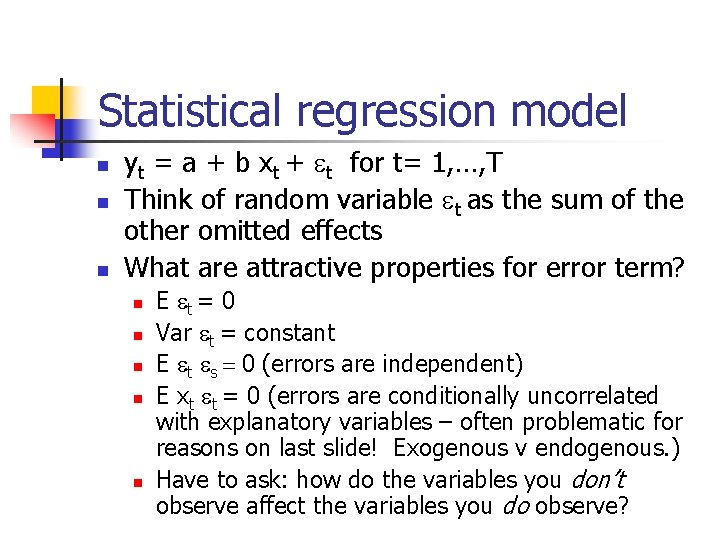

Statistical regression model
- $y_t = a + b x_t + e_t$ for $t = 1, \dots, T$
- Think of the random variable $e_t$ as the sum of the other, omitted effects.
- What are attractive properties for the error term?
  - $E[e_t] = 0$
  - $\operatorname{Var}(e_t) = \sigma^2$, a constant
  - $E[e_t e_s] = 0$ for $t \ne s$ (errors are uncorrelated with each other)
  - $E[x_t e_t] = 0$ (errors are uncorrelated with the explanatory variables; often problematic for the reasons on the previous slide! Exogenous vs. endogenous.)
- Have to ask: how do the variables you don’t observe affect the variables you do observe?
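The last property cannot be checked by inspecting least-squares residuals. The normal equations force the residuals to sum to zero and to be exactly uncorrelated with $x_t$ in the sample, whether or not $E[x_t e_t] = 0$ holds for the true errors. The sketch below assumes a made-up endogenous model in which an unobserved factor `u` enters both $x_t$ and $e_t$; the function name is hypothetical.

```python
import random


def endogenous_fit(n=5_000, seed=2):
    """Fit y = a + b*x by OLS when the true error is correlated with x.

    Assumed model (illustrative): an unobserved factor u enters both x and
    the error e; the true slope is 0.5. Returns (b_hat, corr(x, e),
    sum of residuals, sum of x * residual).
    """
    rng = random.Random(seed)
    u = [rng.gauss(0, 1) for _ in range(n)]
    x = [ui + rng.gauss(0, 1) for ui in u]           # x depends on u: endogenous
    e = [ui + rng.gauss(0, 1) for ui in u]           # the true error contains u too
    y = [1.0 + 0.5 * xi + ei for xi, ei in zip(x, e)]

    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    a = my - b * mx
    resid = [yi - a - b * xi for xi, yi in zip(x, y)]

    # Correlation of x with the TRUE error (observable only in a simulation)
    me = sum(e) / n
    see = sum((ei - me) ** 2 for ei in e)
    corr_xe = sum((xi - mx) * (ei - me) for xi, ei in zip(x, e)) / (sxx * see) ** 0.5

    return b, corr_xe, sum(resid), sum(xi * ri for xi, ri in zip(x, resid))


b_hat, corr_xe, sum_resid, sum_x_resid = endogenous_fit()
print(f"b_hat = {b_hat:.2f} (true 0.5), corr(x, e) = {corr_xe:.2f}")
print(f"sum of residuals = {sum_resid:.1e}, sum of x*residual = {sum_x_resid:.1e}")
```

Under this model the slope estimate should land near 1.0 rather than 0.5 and the correlation near 0.5, while both residual sums are zero up to rounding error: the residuals show no trace of the problem.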

Optimality properties
- Gauss-Markov theorem: if the error term has these properties, then the least-squares estimates of $(a, b)$ are BLUE, the “best linear unbiased estimates”: out of all unbiased estimates that are linear in $y_t$, the least-squares estimates have minimum variance.
- If the $e_t$ are IID Normal, then the OLS (ordinary least squares) estimates are also maximum likelihood estimates.
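A Monte Carlo sketch of the Gauss-Markov theorem, under an assumed model $y_t = 1 + 2x_t + e_t$ with IID $N(0,1)$ errors and fixed $x_t = 1, \dots, 10$. The competitor, an “endpoint” slope $(y_T - y_1)/(x_T - x_1)$, is a hypothetical choice for illustration; it is also linear in $y_t$ and unbiased. Theory gives $\operatorname{Var}(\hat b_{\text{OLS}}) = \sigma^2/\sum_t (x_t - \bar x)^2 = 1/82.5 \approx 0.0121$ versus $2/81 \approx 0.0247$ for the endpoint slope.

```python
import random
import statistics


def compare_estimators(reps=20_000, seed=3):
    """Monte Carlo comparison of two linear unbiased slope estimators.

    Assumed model (illustrative): y_t = 1 + 2*x_t + e_t, e_t IID N(0, 1),
    fixed x_t = 1, ..., 10. Returns (mean, variance) for OLS, then for
    the hypothetical 'endpoint' estimator (y_T - y_1) / (x_T - x_1).
    """
    rng = random.Random(seed)
    x = list(range(1, 11))
    mx = sum(x) / len(x)
    sxx = sum((xi - mx) ** 2 for xi in x)          # = 82.5
    ols, endpoint = [], []
    for _ in range(reps):
        y = [1 + 2 * xi + rng.gauss(0, 1) for xi in x]
        # OLS slope written as a weighted sum of the y_t (linear in y)
        ols.append(sum((xi - mx) * yi for xi, yi in zip(x, y)) / sxx)
        endpoint.append((y[-1] - y[0]) / (x[-1] - x[0]))
    return (statistics.mean(ols), statistics.variance(ols),
            statistics.mean(endpoint), statistics.variance(endpoint))


m_ols, v_ols, m_end, v_end = compare_estimators()
print(f"OLS:      mean {m_ols:.3f}, variance {v_ols:.4f}")
print(f"endpoint: mean {m_end:.3f}, variance {v_end:.4f}")
```

Both means should sit near the true slope of 2; the OLS variance should be roughly half the endpoint variance.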

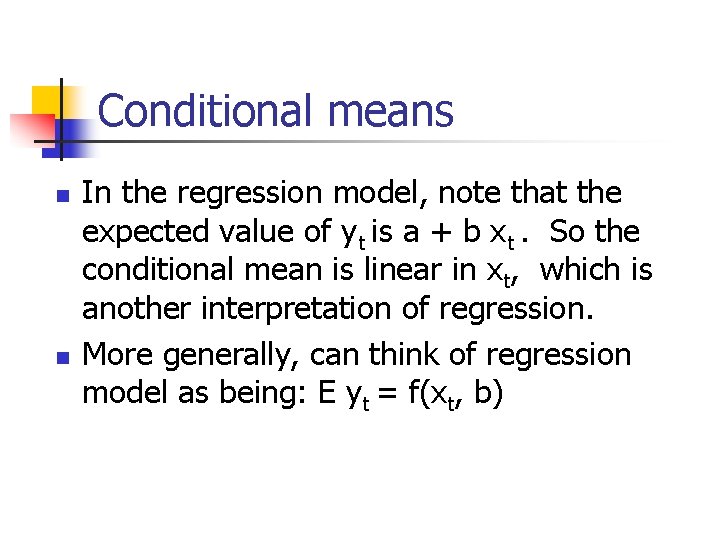

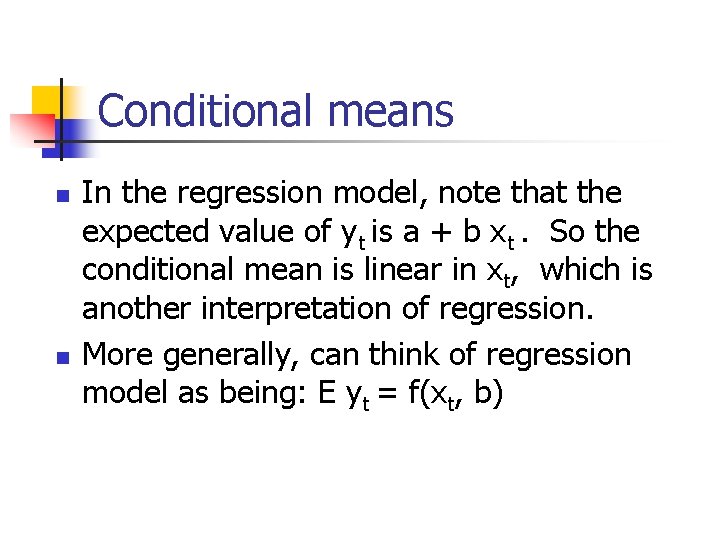

Conditional means
- In the regression model, note that the expected value of $y_t$ given $x_t$ is $a + b x_t$.
- So the conditional mean is linear in $x_t$, which is another interpretation of regression.
- More generally, we can think of the regression model as $E[y_t \mid x_t] = f(x_t, b)$.

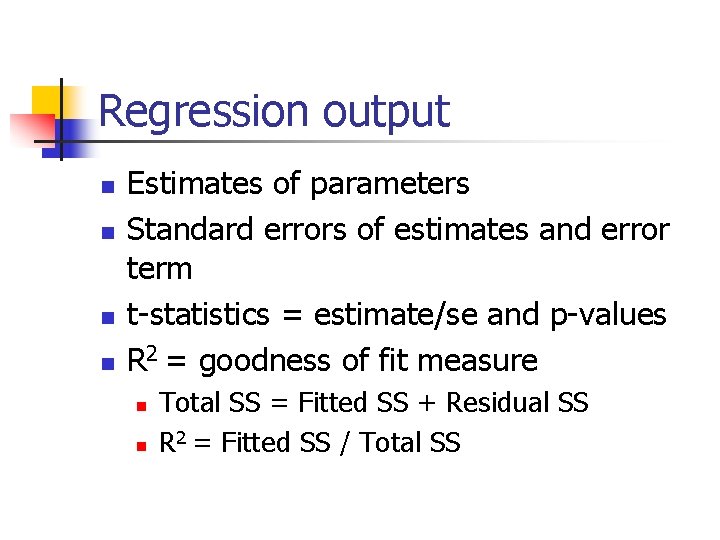

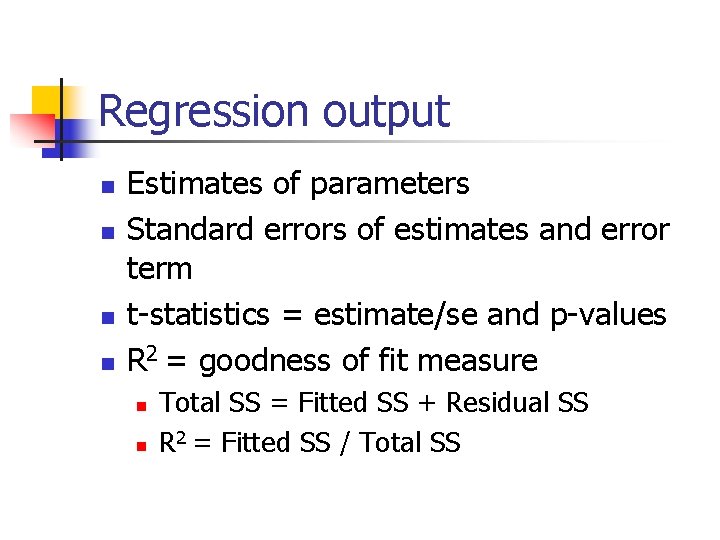

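Regression output
- Estimates of the parameters
- Standard errors of the estimates, and the residual standard error (the estimated standard deviation of the error term)
- t-statistics (= estimate / standard error) and p-values
- $R^2$: a goodness-of-fit measure
  - Total SS = Fitted SS + Residual SS (when the model includes an intercept)
  - $R^2$ = Fitted SS / Total SS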
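The items in this output can be computed directly for simple regression. The sketch below (hypothetical function name, made-up toy data) returns the estimates, their standard errors $s/\sqrt{\sum_t (x_t-\bar x)^2}$ for $b$ and $s\sqrt{1/T + \bar x^2/\sum_t (x_t-\bar x)^2}$ for $a$, the t-statistics, and the sums of squares behind $R^2$. p-values are left out because they need a t-distribution CDF, which the Python standard library does not provide.

```python
import math


def regression_output(x, y):
    """Return a dict of simple-regression summary statistics (with intercept)."""
    T = len(x)
    mx, my = sum(x) / T, sum(y) / T
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    a = my - b * mx
    fitted = [a + b * xi for xi in x]

    total_ss = sum((yi - my) ** 2 for yi in y)
    fitted_ss = sum((fi - my) ** 2 for fi in fitted)
    resid_ss = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))

    s = math.sqrt(resid_ss / (T - 2))          # residual standard error
    se_b = s / math.sqrt(sxx)
    se_a = s * math.sqrt(1 / T + mx ** 2 / sxx)
    return {
        "a": a, "se_a": se_a, "t_a": a / se_a,
        "b": b, "se_b": se_b, "t_b": b / se_b,
        "sigma": s,
        "total_ss": total_ss, "fitted_ss": fitted_ss, "resid_ss": resid_ss,
        "r2": fitted_ss / total_ss,
    }


out = regression_output([0, 1, 2, 3, 4], [1, 3, 2, 5, 4])
for key, value in out.items():
    print(f"{key:>9}: {value:.4f}")
```

For this toy data Total SS = 10, Fitted SS = 6.4, and Residual SS = 3.6, so $R^2 = 0.64$.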

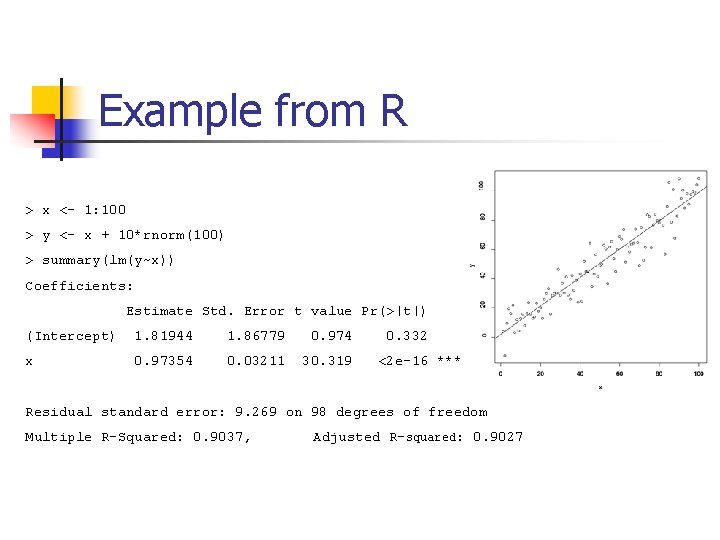

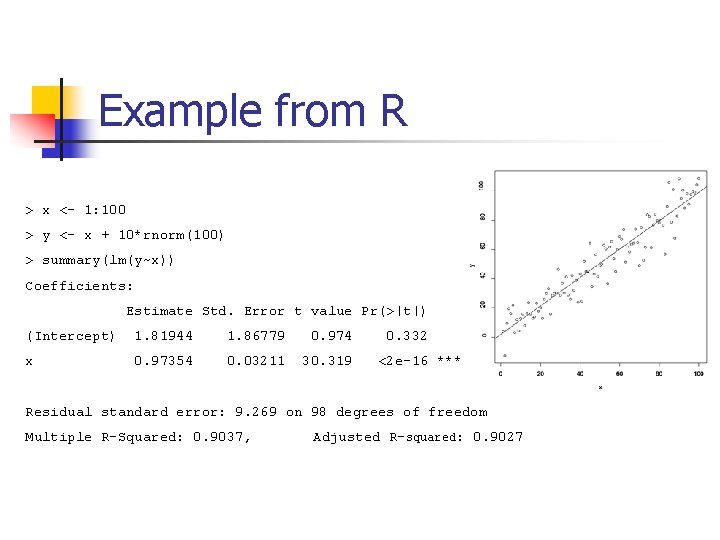

Example from R

```r
> x <- 1:100
> y <- x + 10*rnorm(100)
> summary(lm(y~x))
Coefficients:
            Estimate Std. Error t value Pr(>|t|)    
(Intercept)  1.81944    1.86779   0.974    0.332    
x            0.97354    0.03211  30.319   <2e-16 ***

Residual standard error: 9.269 on 98 degrees of freedom
Multiple R-Squared: 0.9037,     Adjusted R-squared: 0.9027
```

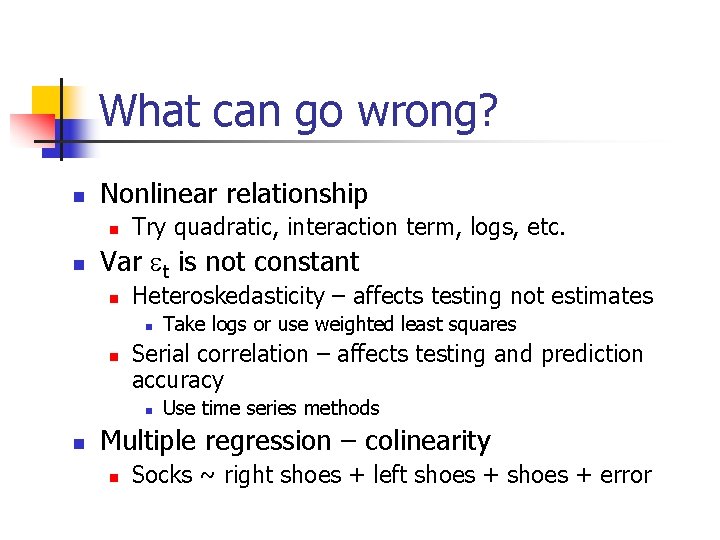

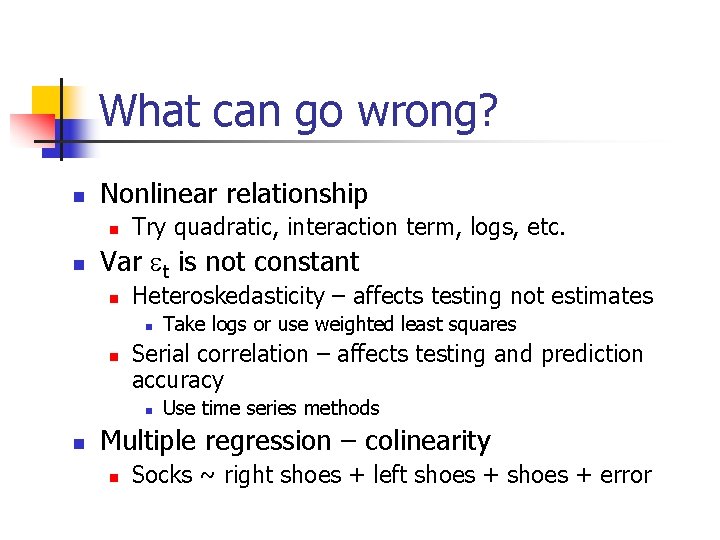

What can go wrong?
- Nonlinear relationship
  - Try quadratic terms, interaction terms, logs, etc.
- $\operatorname{Var}(e_t)$ is not constant (heteroskedasticity): affects testing, but does not bias the estimates
  - Take logs or use weighted least squares.
- Serial correlation: affects testing and prediction accuracy
  - Use time series methods.
- Multiple regression: collinearity
  - Socks ~ right shoes + left shoes + error
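The socks example can be made concrete. With made-up data in which everyone owns equal numbers of right and left shoes and one sock per shoe, the right-shoe and left-shoe columns are identical, so $X^\top X$ is singular: only the sum $b_{\text{right}} + b_{\text{left}}$ is identified, not the separate effects. The helper names `residuals` and `det3` are hypothetical.

```python
def residuals(beta, rows, y):
    """Residuals y - X @ beta for a small design matrix given as a list of rows."""
    return [yi - sum(bj * xj for bj, xj in zip(beta, row)) for row, yi in zip(rows, y)]


def det3(m):
    """Determinant of a 3x3 matrix (cofactor expansion along the first row)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


# Hypothetical data: equal numbers of right and left shoes, one sock per shoe
right = [1, 2, 3, 4, 5]
left = list(right)
socks = [r + l for r, l in zip(right, left)]

X = [[1, r, l] for r, l in zip(right, left)]  # columns: intercept, right, left
XtX = [[sum(row[i] * row[j] for row in X) for j in range(3)] for i in range(3)]
print("det(X'X) =", det3(XtX))  # 0: the normal equations have no unique solution

# Very different (intercept, b_right, b_left) vectors fit the data perfectly
for beta in [(0, 1, 1), (0, 2, 0), (0, -3, 5)]:
    print(beta, residuals(beta, X, socks))
```

With real data the columns are usually only nearly collinear; then $X^\top X$ is invertible but ill-conditioned, and the individual coefficients get large standard errors.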

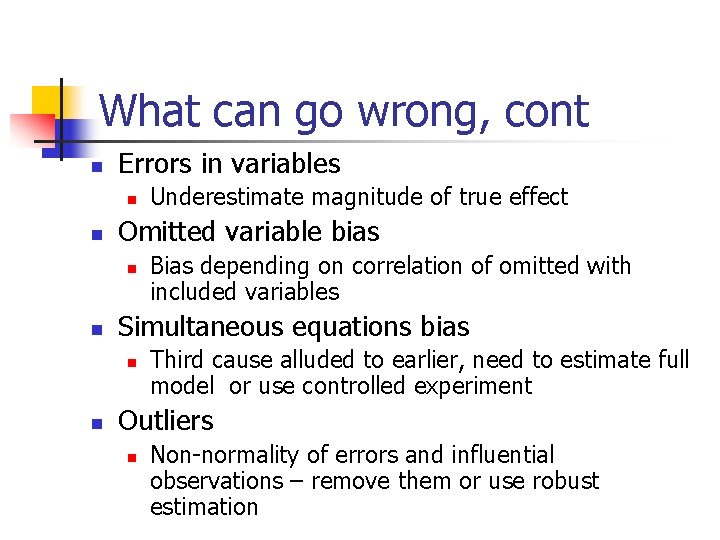

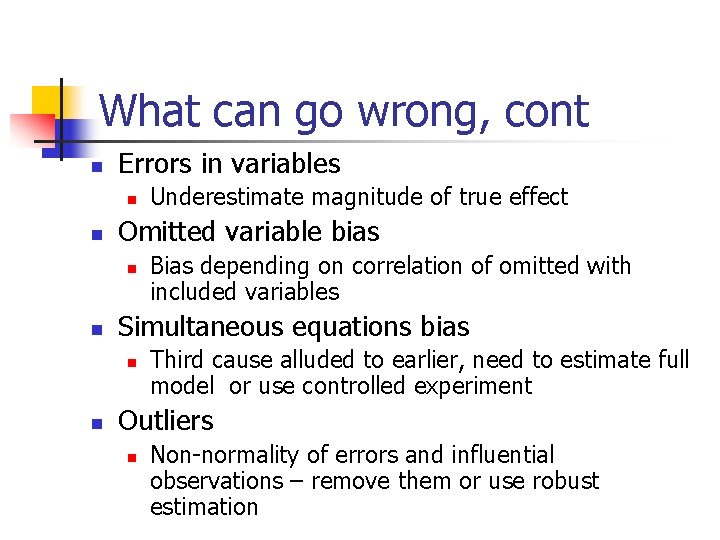

What can go wrong, cont.
- Errors in variables
  - Underestimate the magnitude of the true effect
- Omitted variable bias
  - Bias depending on the correlation of the omitted variables with the included ones
- Simultaneous equations bias
  - The “third cause” alluded to earlier; need to estimate the full model or use a controlled experiment
- Outliers
  - Non-normality of errors and influential observations: remove them or use robust estimation
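The underestimate under errors in variables is the attenuation effect: if the regressor is measured with independent noise $u$, the least-squares slope shrinks toward zero by the factor $\operatorname{Var}(x^*)/(\operatorname{Var}(x^*) + \operatorname{Var}(u))$, where $x^*$ is the true regressor. The simulation below assumes unit variances and a true slope of 2, so the estimate should come out near 1; the model and function name are illustrative only.

```python
import random


def attenuation_demo(n=20_000, seed=4, b_true=2.0):
    """Slope from regressing y on a noisily measured x (assumed model).

    True x* ~ N(0, 1), measurement error u ~ N(0, 1), y = b_true * x* + N(0, 1).
    Theory: plim of the OLS slope = b_true * var(x*) / (var(x*) + var(u)) = b_true / 2.
    """
    rng = random.Random(seed)
    x_star = [rng.gauss(0, 1) for _ in range(n)]
    y = [b_true * xs + rng.gauss(0, 1) for xs in x_star]
    x_obs = [xs + rng.gauss(0, 1) for xs in x_star]   # what we actually observe

    mx, my = sum(x_obs) / n, sum(y) / n
    return (sum((xi - mx) * (yi - my) for xi, yi in zip(x_obs, y))
            / sum((xi - mx) ** 2 for xi in x_obs))


print(f"true slope 2.0, estimated slope {attenuation_demo():.2f}")
```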

Diagnostics
- Look at the residuals!
- R lets you plot various regression diagnostics:

```r
reg <- lm(y ~ x)
plot(reg)  # standard diagnostic plots, e.g. residuals vs. fitted values
```

- Examples to follow…
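As a text-only stand-in for a residuals-vs-fitted plot, the sketch below (hypothetical function name, made-up data $y = x^2$) fits a straight line to a curved relationship and prints the sign of each residual. The residuals come out positive at both ends and negative in the middle, the kind of systematic pattern a residual plot would reveal; under a correctly specified linear model the signs should look random.

```python
def residual_signs(x, y):
    """Fit y = a + b*x by least squares and return the residual signs as a string."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = (sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
         / sum((xi - mx) ** 2 for xi in x))
    a = my - b * mx
    return "".join("+" if yi - (a + b * xi) > 0 else "-" for xi, yi in zip(x, y))


x = list(range(11))
y = [xi ** 2 for xi in x]        # a curved relationship fitted with a straight line
print(residual_signs(x, y))      # ++-------++
```

The remedy listed under “Nonlinear relationship” applies here: adding a quadratic term would remove the pattern.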

January 2006 calendar

January 2006 calendar 2006 calendar year

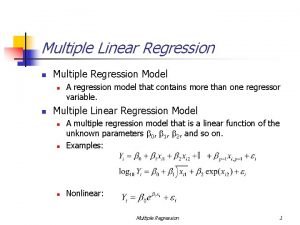

2006 calendar year Simple linear regression and multiple linear regression

Simple linear regression and multiple linear regression Multiple regression

Multiple regression Survival analysis vs logistic regression

Survival analysis vs logistic regression Logistic regression vs linear regression

Logistic regression vs linear regression Pengumuman biasanya memuat hal-hal yang bersifat

Pengumuman biasanya memuat hal-hal yang bersifat Perhatikan hal-hal berikut.

Perhatikan hal-hal berikut. Hal-hal yang perlu diperhatikan dalam membuat puisi

Hal-hal yang perlu diperhatikan dalam membuat puisi Hal hal yang membuat gambar cerita terlihat menarik adalah

Hal hal yang membuat gambar cerita terlihat menarik adalah Sebutkan hal-hal yang dicantumkan dalam surat pesanan

Sebutkan hal-hal yang dicantumkan dalam surat pesanan Perhatikan hal-hal berikut.

Perhatikan hal-hal berikut. Perhatikan hal-hal berikut.

Perhatikan hal-hal berikut. Jelaskan ruang lingkup dari layout toko

Jelaskan ruang lingkup dari layout toko Hal-hal yang perlu diinquiry dalam tes rorscach kecuali

Hal-hal yang perlu diinquiry dalam tes rorscach kecuali Hal-hal yang esensial dalam membuat lagu

Hal-hal yang esensial dalam membuat lagu Catatan mesyuarat

Catatan mesyuarat