Recovering low rank and sparse matrices from compressive measurements


Recovering low rank and sparse matrices from compressive measurements

Aswin C. Sankaranarayanan, Richard G. Baraniuk, Andrew E. Waters. Rice University.

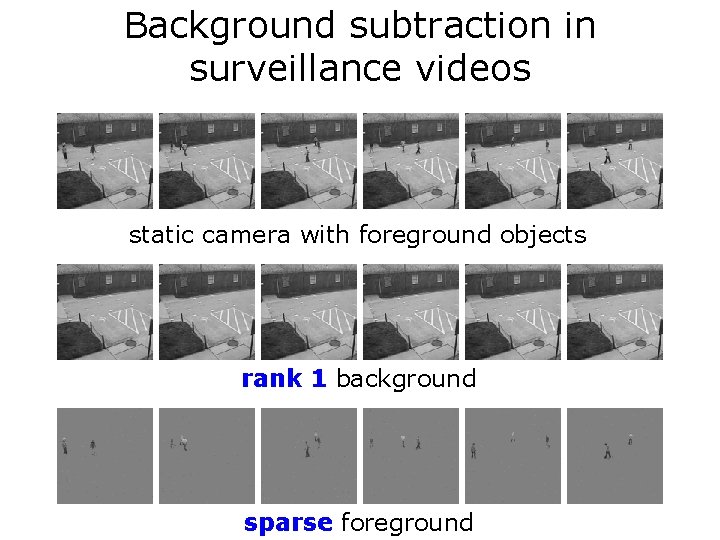

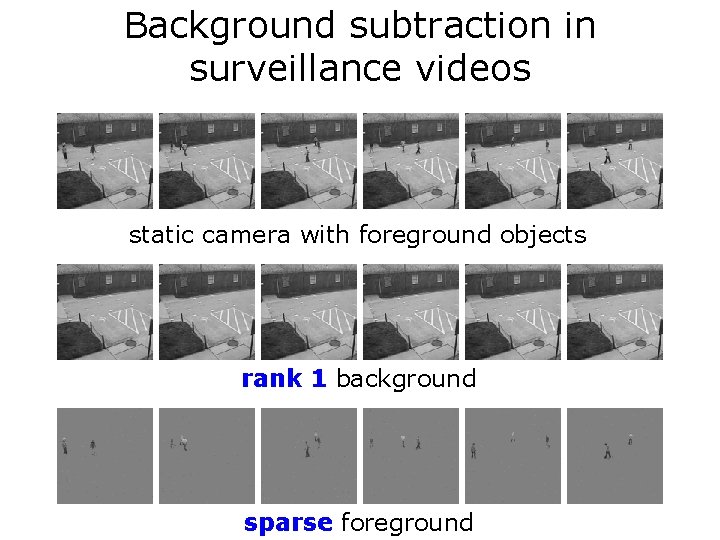

Background subtraction in surveillance videos: a static camera with foreground objects. The video splits into a rank-1 background and a sparse foreground.
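The rank-1 plus sparse structure can be checked numerically. The following is a minimal sketch under assumed, purely illustrative sizes and a synthetic "video"; the names `background`, `L`, `S`, and `M` are hypothetical and not taken from the slides.

```python
import numpy as np

rng = np.random.default_rng(0)

n_pixels, n_frames = 64, 20           # hypothetical sizes, chosen for illustration
background = rng.random(n_pixels)     # one static background image, vectorized

# Static camera: every frame (column) holds the same background,
# so the background matrix is an outer product and has rank 1.
L = np.outer(background, np.ones(n_frames))

# Foreground: a small object covering 3 pixels that moves one pixel per frame.
S = np.zeros((n_pixels, n_frames))
for t in range(n_frames):
    S[t:t + 3, t] = 1.0

M = L + S                             # synthetic video, one frame per column

rank_L = np.linalg.matrix_rank(L)
nnz_S = np.count_nonzero(S)
print(rank_L, nnz_S, M.shape)
```

Here the foreground occupies only 60 of the 1280 entries, which is what makes a sparse model plausible for it.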

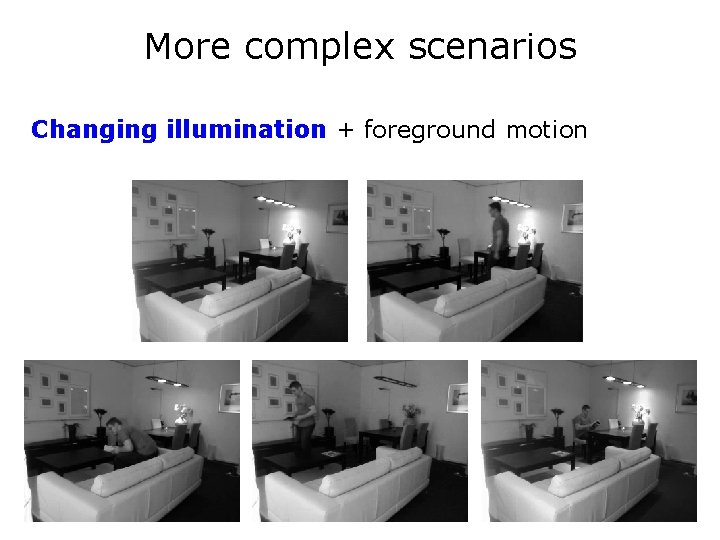

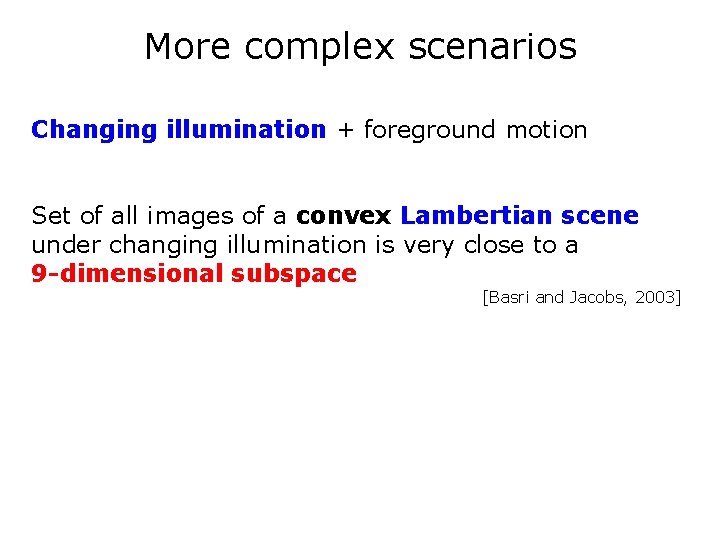

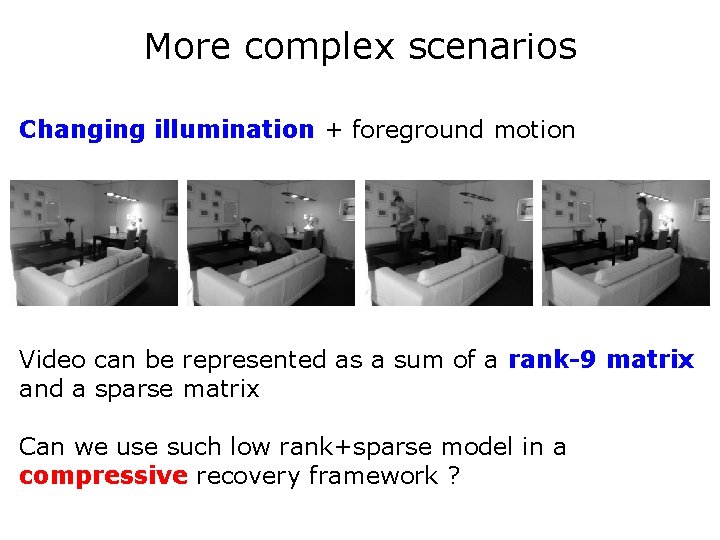

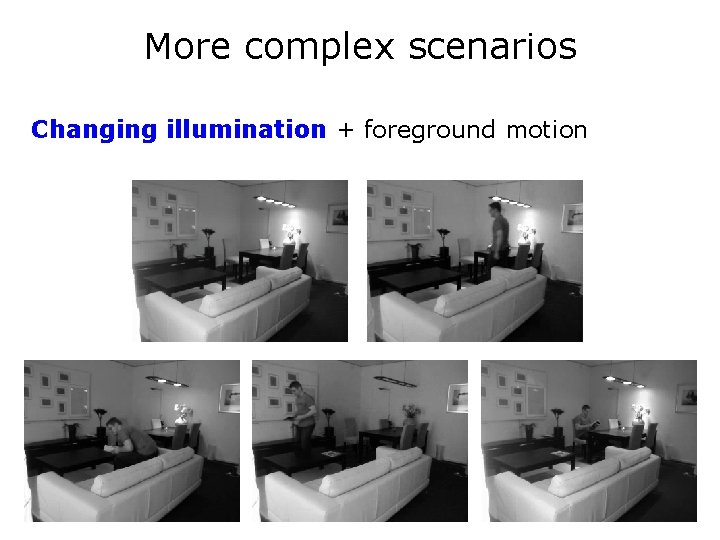

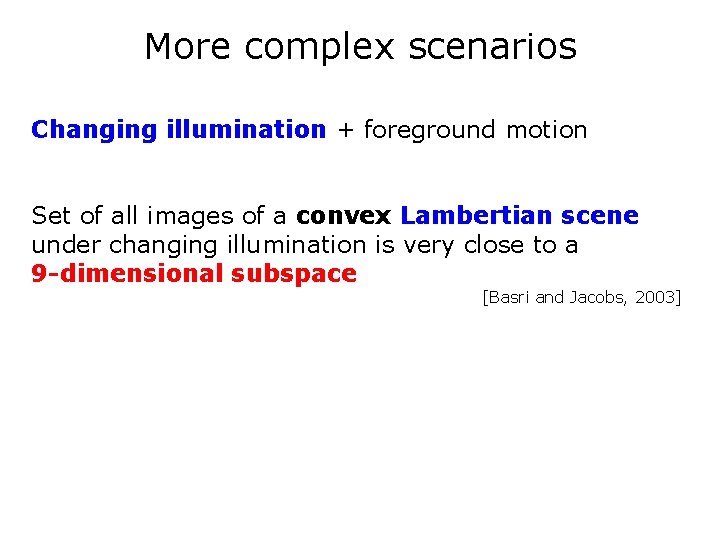

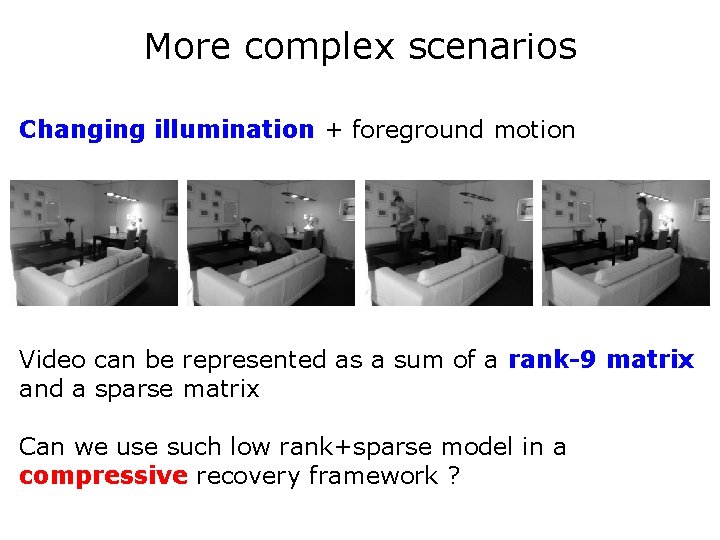

More complex scenarios: changing illumination + foreground motion

- The set of all images of a convex Lambertian scene under changing illumination is very close to a 9-dimensional subspace [Basri and Jacobs, 2003]
- The video can be represented as the sum of a rank-9 matrix and a sparse matrix
- Can we use such a low rank + sparse model in a compressive recovery framework?

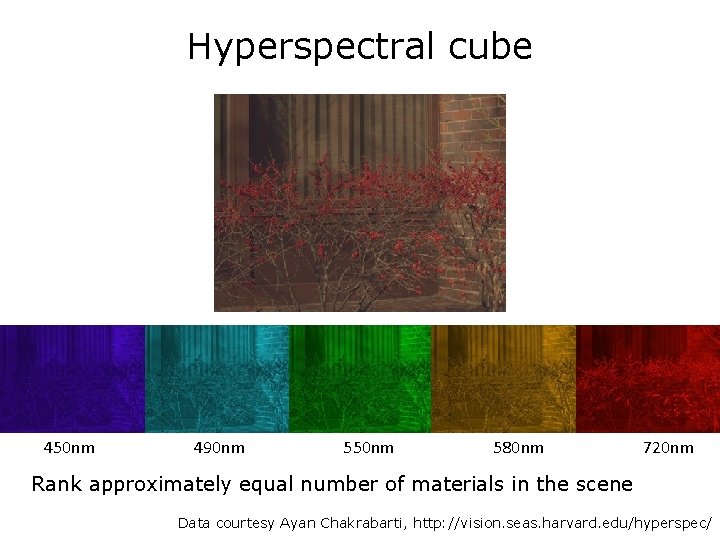

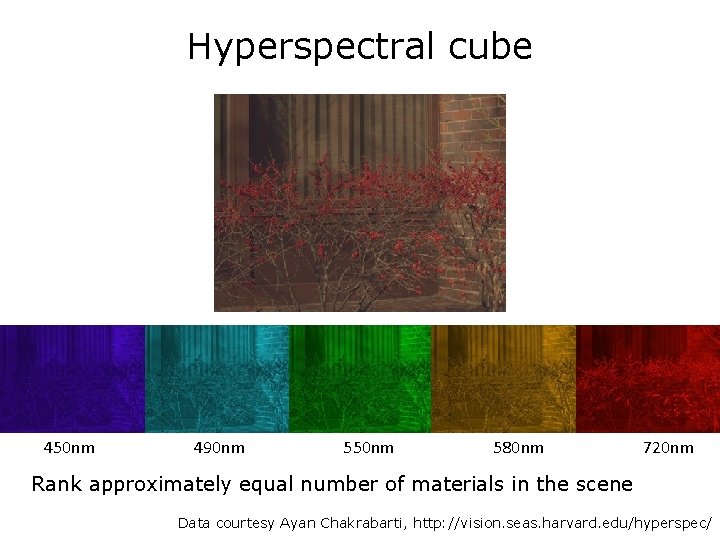

Hyperspectral cube (bands shown: 450 nm, 490 nm, 550 nm, 580 nm, 720 nm). The rank is approximately equal to the number of materials in the scene. Data courtesy of Ayan Chakrabarti, http://vision.seas.harvard.edu/hyperspec/

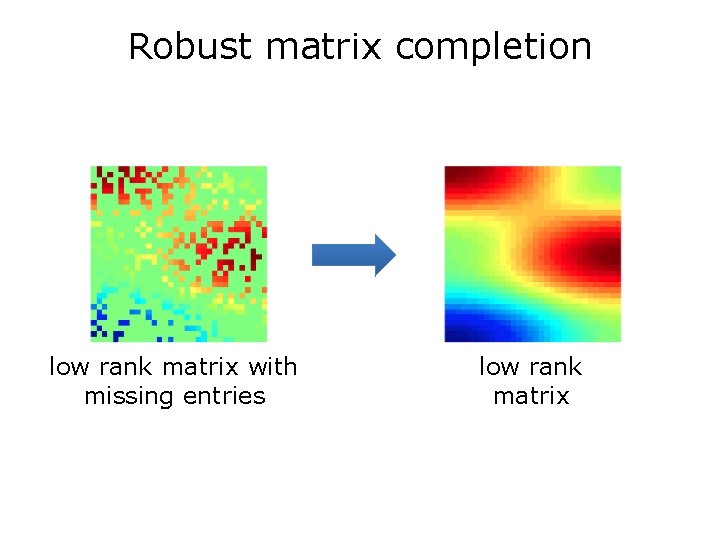

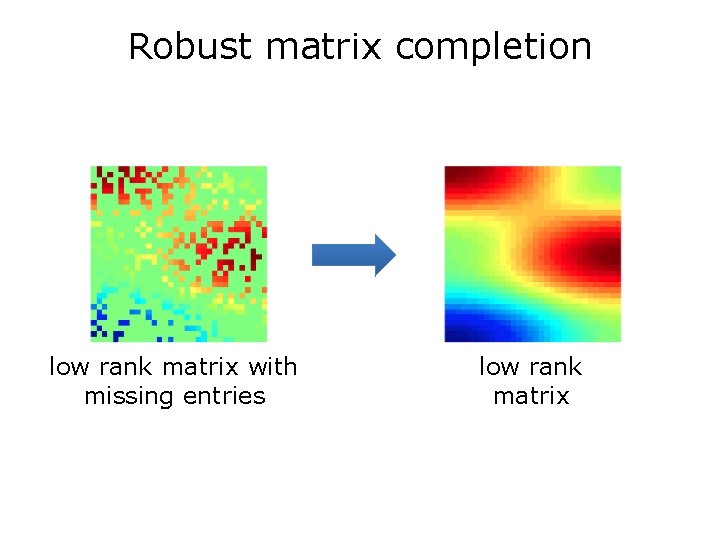

Robust matrix completion: from a low rank matrix with missing entries, recover the low rank matrix.

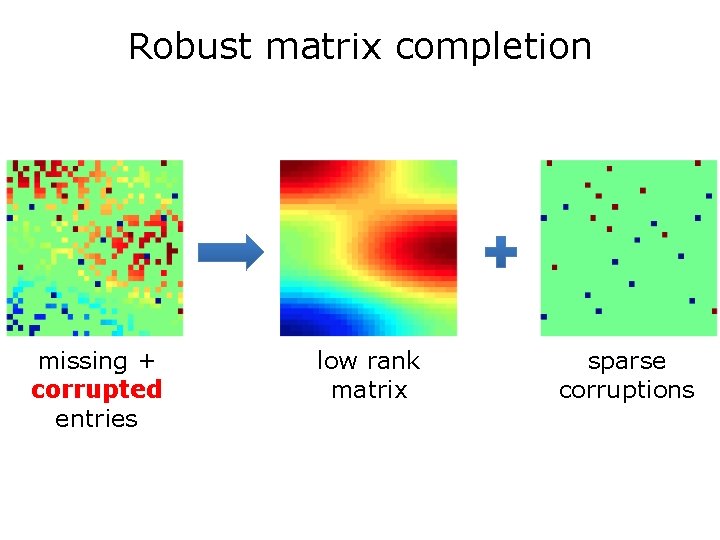

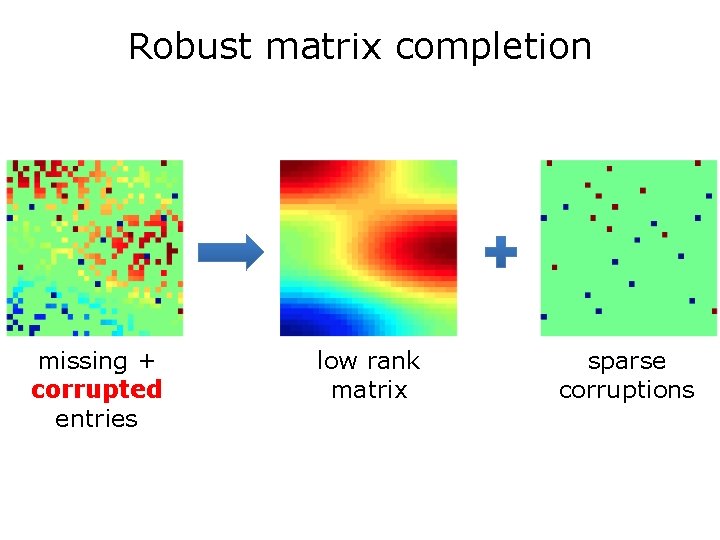

Robust matrix completion with missing + corrupted entries: low rank matrix plus sparse corruptions.

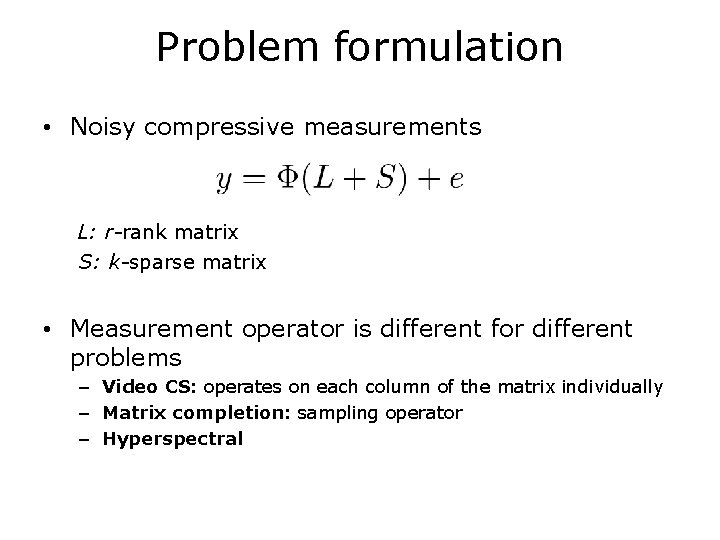

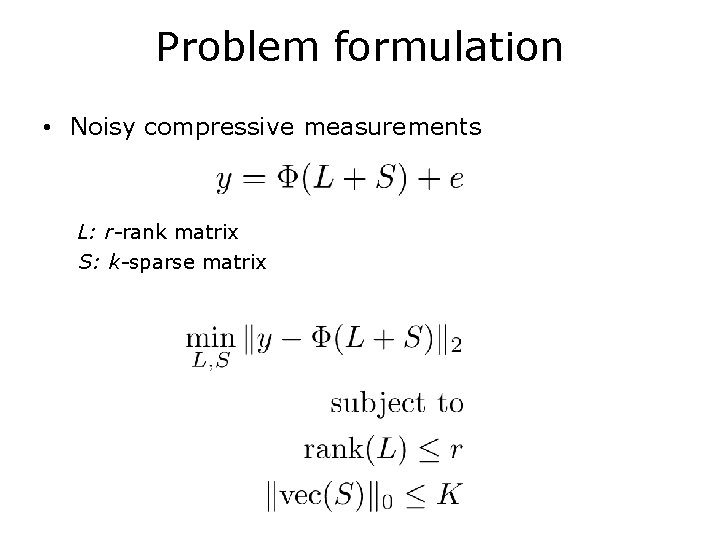

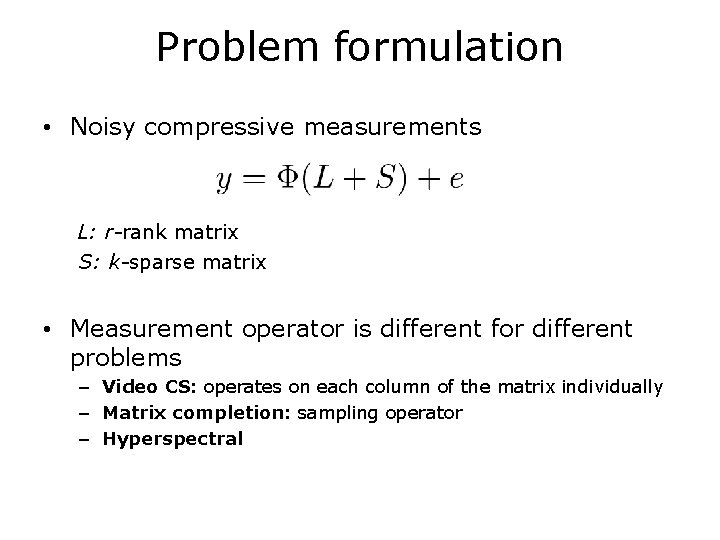

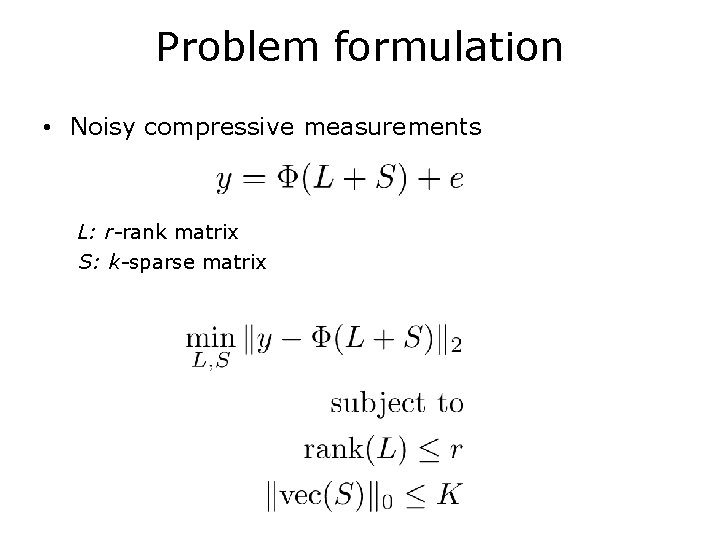

Problem formulation

- Noisy compressive measurements of L + S, where L is a rank-r matrix and S is a K-sparse matrix
- The measurement operator is different for different problems
  - Video CS: operates on each column of the matrix individually
  - Matrix completion: sampling operator
  - Hyperspectral
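To make the two named operators concrete, here is a minimal sketch. It assumes a separate random Gaussian matrix per column for video CS and a random entry mask for matrix completion; the sizes, the sampling rate, and the names `Phis`, `video_cs`, `mask`, and `sample_entries` are all hypothetical choices for illustration, not details from the slides.

```python
import numpy as np

rng = np.random.default_rng(1)
n, t, m = 32, 10, 12                  # hypothetical: pixels per frame, frames, measurements per frame

M = rng.standard_normal((n, t))       # stand-in for the matrix L + S

# Video CS (assumed form): a separate random matrix is applied to each column.
Phis = rng.standard_normal((t, m, n)) / np.sqrt(m)

def video_cs(X):
    """Column-wise measurements: y[:, j] = Phis[j] @ X[:, j]."""
    return np.einsum("jmn,nj->mj", Phis, X)

# Matrix completion: the operator samples a subset of the entries.
mask = rng.random((n, t)) < 0.3       # assumed 30% sampling rate

def sample_entries(X):
    """Return the observed entries of X as a vector."""
    return X[mask]

y_video = video_cs(M)
y_mc = sample_entries(M)
print(y_video.shape, y_mc.shape)
```

Both operators are linear in the matrix, which is why the same recovery framework can cover both problems.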


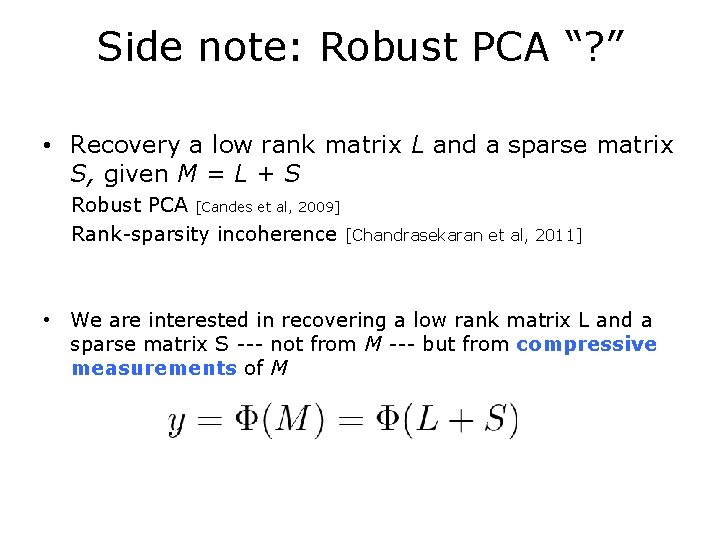

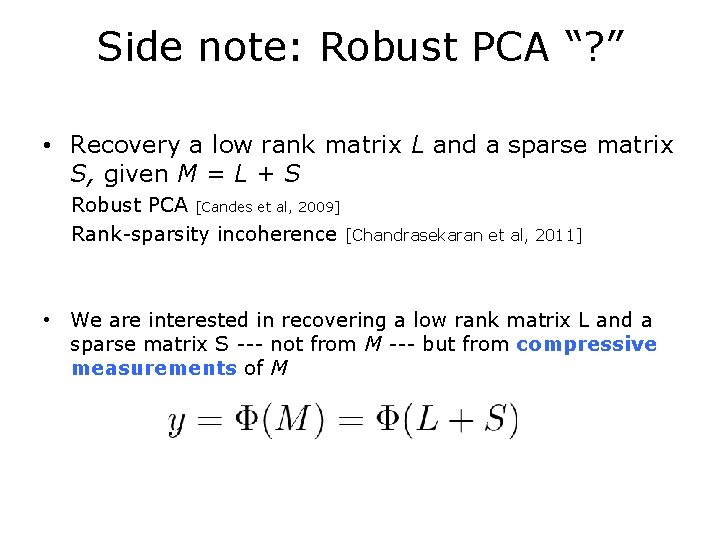

Side note: Robust PCA

- Recover a low rank matrix L and a sparse matrix S, given M = L + S
  - Robust PCA [Candes et al., 2009]
  - Rank-sparsity incoherence [Chandrasekaran et al., 2011]
- We are interested in recovering a low rank matrix L and a sparse matrix S not from M itself, but from compressive measurements of M

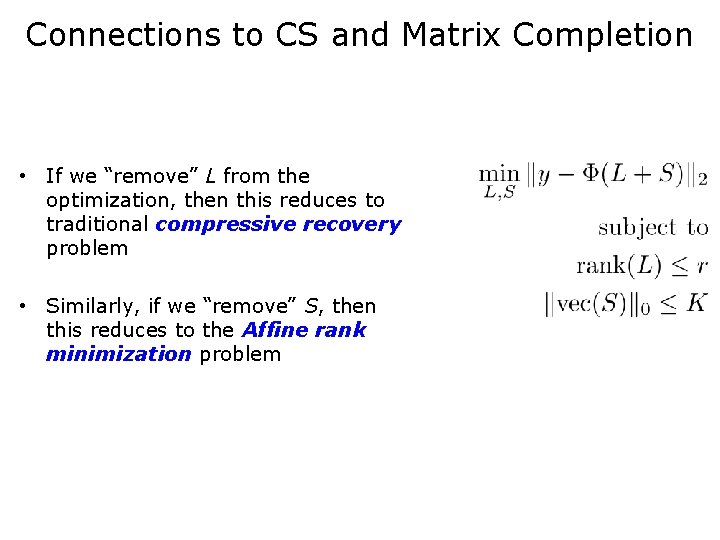

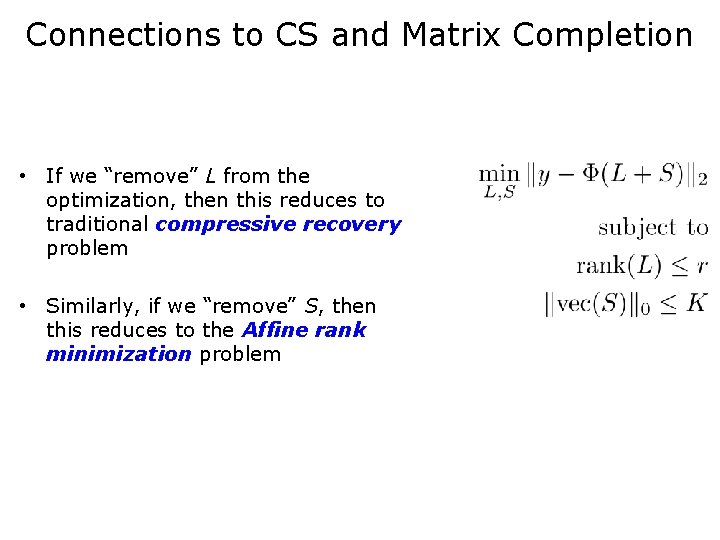

Connections to CS and Matrix Completion

- If we “remove” L from the optimization, it reduces to the traditional compressive recovery problem
- Similarly, if we “remove” S, it reduces to the affine rank minimization problem
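The optimization referred to here is not reproduced in the extracted text. Assuming it has the rank- and sparsity-constrained least-squares form commonly used for this problem (the symbols $y$ for the measurements and $\mathcal{A}$ for the linear measurement operator are assumptions), the two reductions can be written side by side:

```latex
\begin{align*}
\text{low rank + sparse:}\quad
  & \min_{L,\,S}\ \|y - \mathcal{A}(L + S)\|_2
    \quad \text{s.t. } \operatorname{rank}(L) \le r,\ \|\operatorname{vec}(S)\|_0 \le K \\
\text{``remove'' } L:\quad
  & \min_{S}\ \|y - \mathcal{A}(S)\|_2
    \quad \text{s.t. } \|\operatorname{vec}(S)\|_0 \le K \\
\text{``remove'' } S:\quad
  & \min_{L}\ \|y - \mathcal{A}(L)\|_2
    \quad \text{s.t. } \operatorname{rank}(L) \le r
\end{align*}
```

The second line is a sparse recovery problem in the compressive sensing sense, and the third is a rank-constrained (affine rank minimization) problem.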

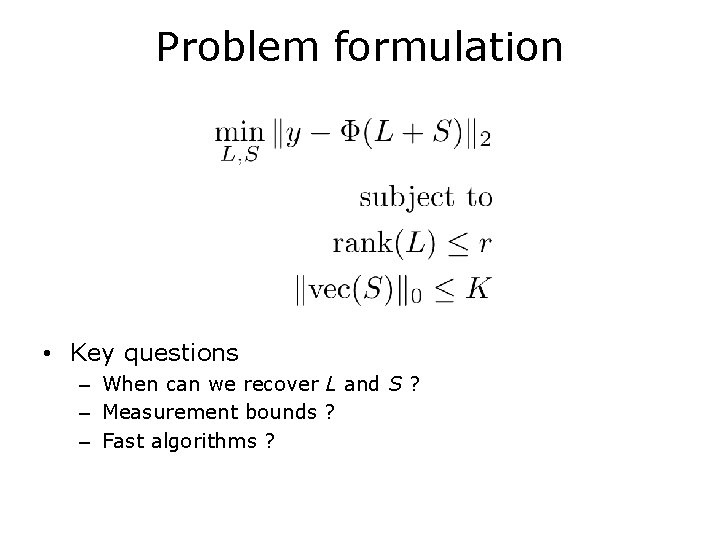

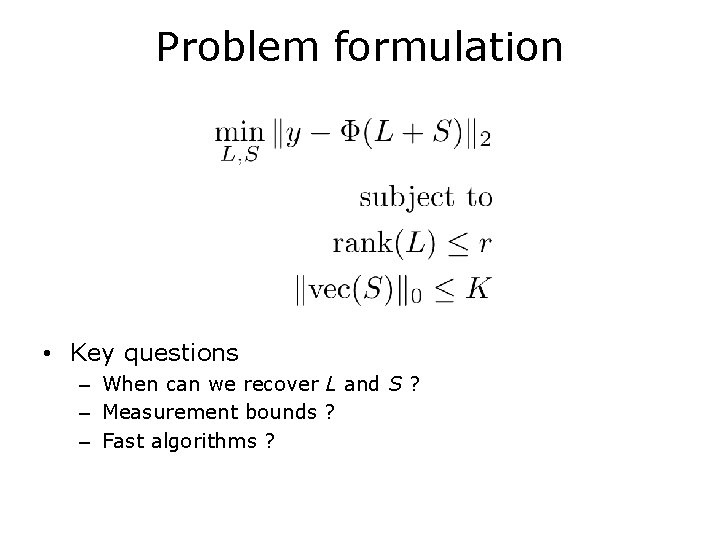

Problem formulation: key questions

- When can we recover L and S?
- Measurement bounds?
- Fast algorithms?

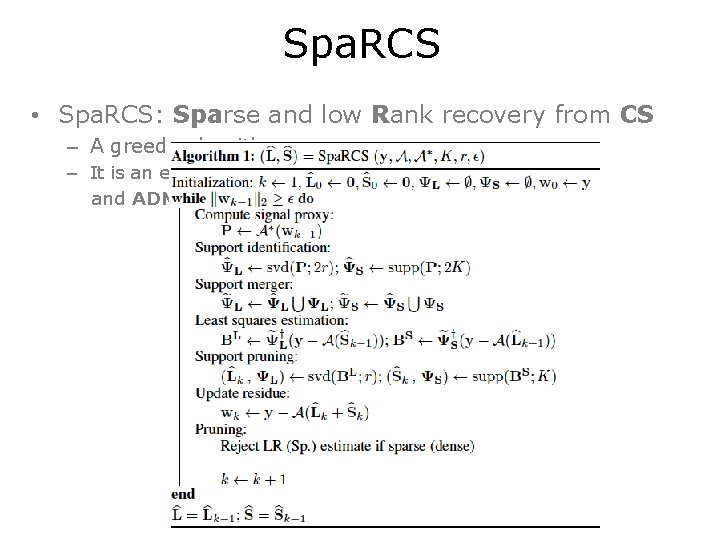

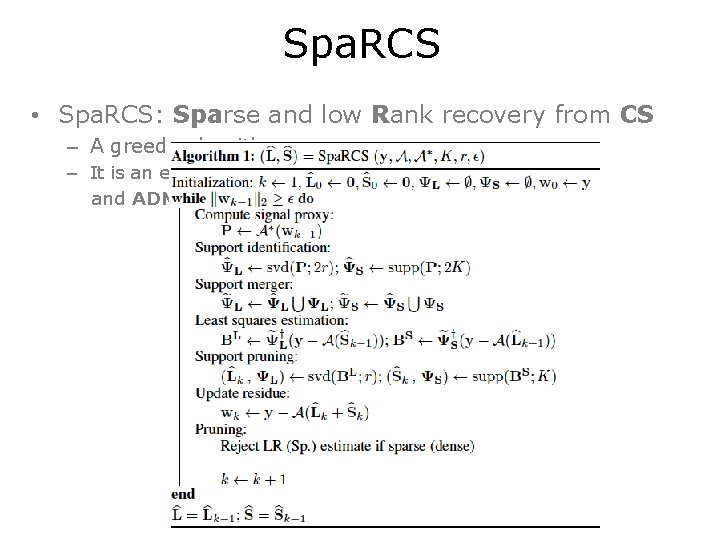

SpaRCS

- SpaRCS: Sparse and low Rank recovery from CS
  - A greedy algorithm
  - It is an extension of CoSaMP [Tropp and Needell, 2009] and ADMiRA [Lee and Bresler, 2010]
- Claim
  - If the measurement operator satisfies both RIP and rank-RIP with small constants,
  - and the low rank matrix is sufficiently dense, and the sparse matrix has random support (or bounded column/row degree),
  - then SpaRCS converges exponentially to the right answer
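Greedy methods in the CoSaMP and ADMiRA family repeatedly prune an estimate back to the assumed model: the K largest entries for a sparse signal, or the top r singular components for a low rank matrix. The sketch below shows only these two pruning steps; it is not the SpaRCS algorithm itself, and the function names `best_k_sparse` and `best_rank_r` are hypothetical.

```python
import numpy as np

def best_rank_r(X, r):
    """Closest rank-r matrix to X in Frobenius norm, via truncated SVD."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U[:, :r] * s[:r]) @ Vt[:r]

def best_k_sparse(X, k):
    """Keep the k largest-magnitude entries of X and zero out the rest."""
    out = np.zeros_like(X)
    if k > 0:
        # Indices (into the flattened array) of the k largest magnitudes.
        idx = np.argpartition(np.abs(X), -k, axis=None)[-k:]
        out.flat[idx] = X.flat[idx]
    return out

rng = np.random.default_rng(2)
X = rng.standard_normal((8, 6))       # arbitrary test matrix
L_hat = best_rank_r(X, 2)
S_hat = best_k_sparse(X, 5)
print(np.linalg.matrix_rank(L_hat), np.count_nonzero(S_hat))
```

A full greedy solver would alternate steps like these with support and subspace identification and a least-squares update against the measurements, which this sketch leaves out.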

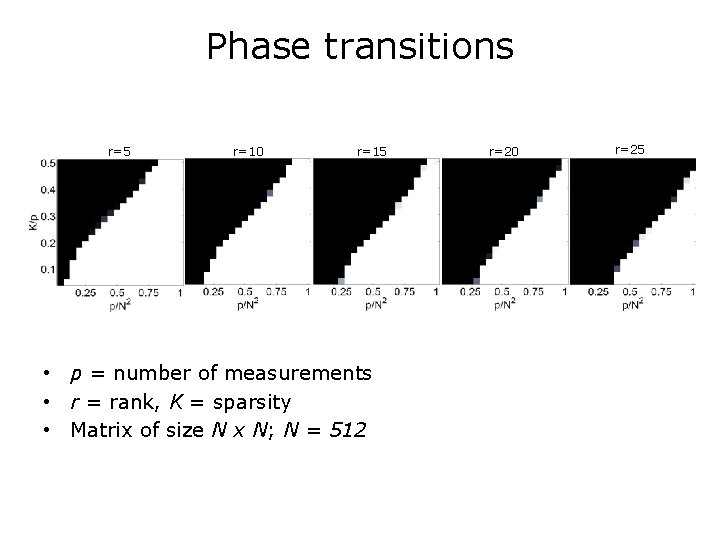

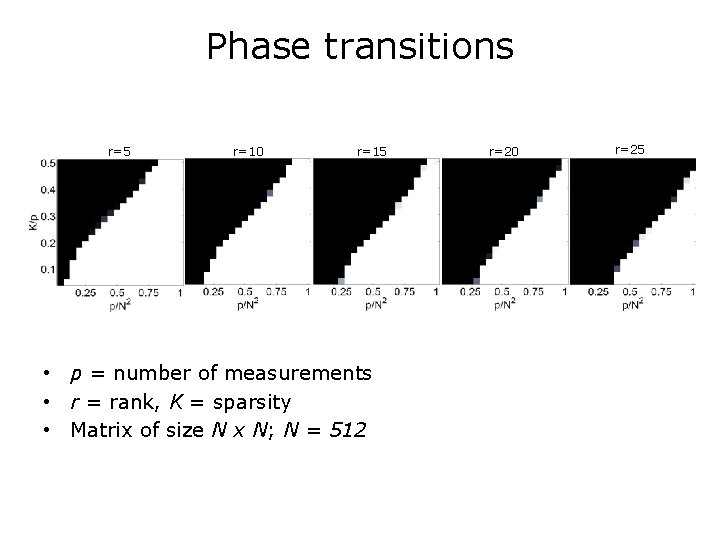

Phase transitions (panels: r = 5, 10, 15, 20, 25)

- p = number of measurements
- r = rank, K = sparsity
- Matrix of size N x N; N = 512

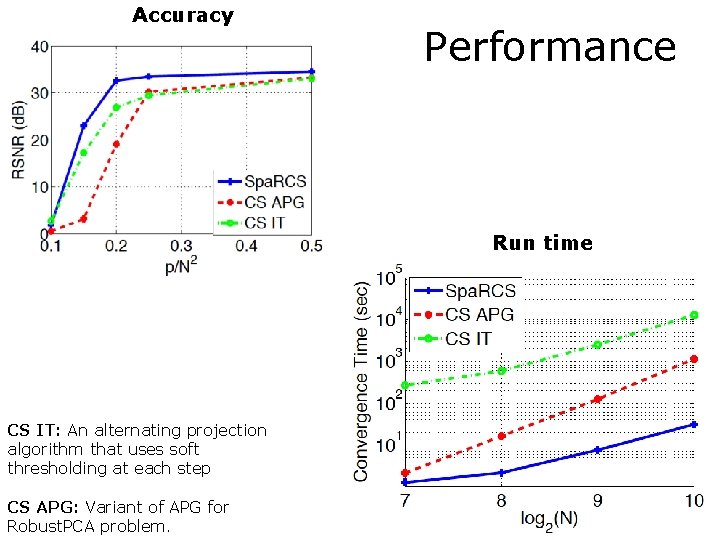

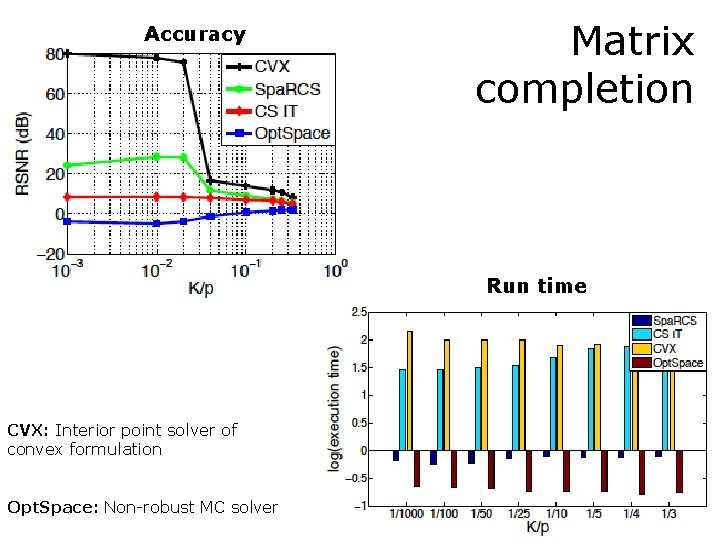

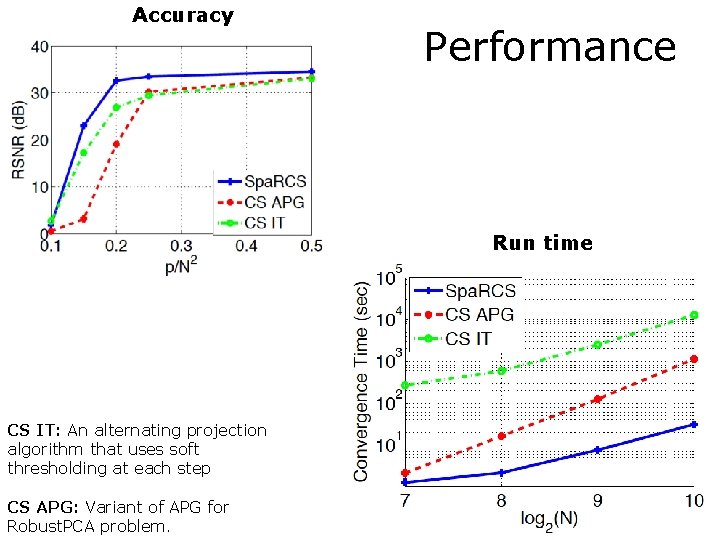

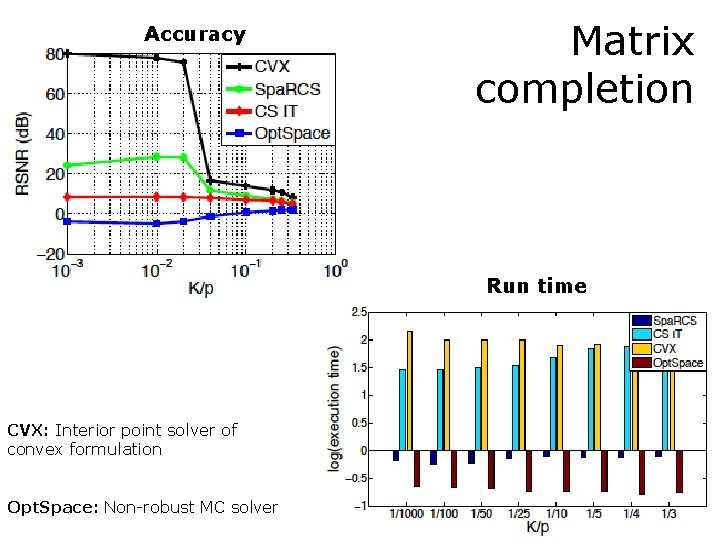

Performance (panels: accuracy, run time)

- CS IT: an alternating projection algorithm that uses soft thresholding at each step
- CS APG: a variant of APG for the Robust PCA problem

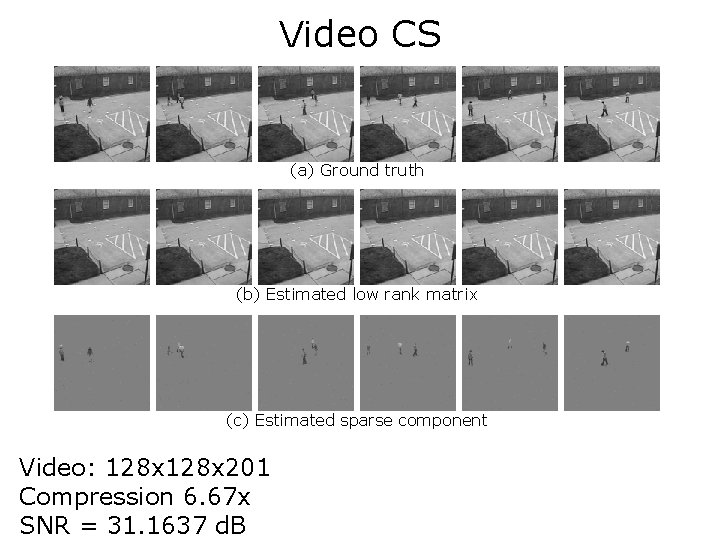

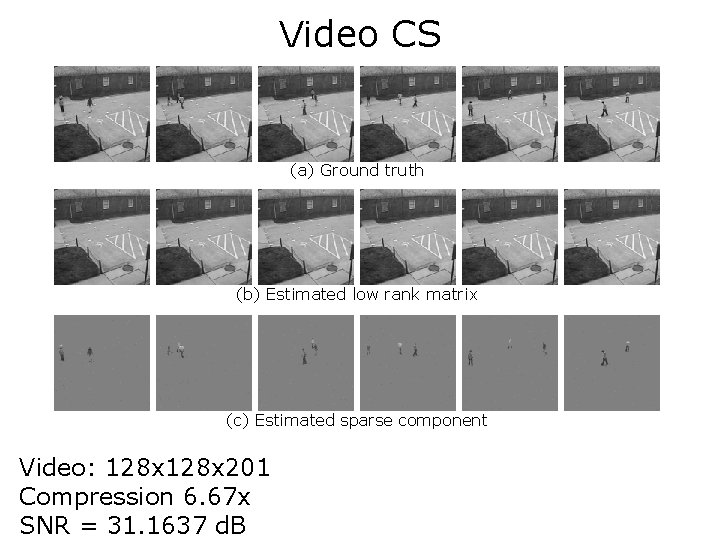

Video CS: (a) ground truth, (b) estimated low rank matrix, (c) estimated sparse component. Video: 128 x 201; compression 6.67x; SNR = 31.1637 dB
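The slides do not say how SNR and compression are computed. A minimal sketch, assuming the usual definitions (recovery SNR as the ratio of signal energy to error energy in dB, and compression as the number of signal entries divided by the number of measurements), is shown below; both function names are hypothetical.

```python
import numpy as np

def recovery_snr_db(X, X_hat):
    """Assumed definition: 20 * log10(||X||_F / ||X - X_hat||_F)."""
    return 20.0 * np.log10(np.linalg.norm(X) / np.linalg.norm(X - X_hat))

def compression_ratio(n_entries, n_measurements):
    """Assumed definition: signal entries per measurement."""
    return n_entries / n_measurements

# Toy check: a 4 x 4 all-ones matrix with one entry off by 0.1.
X = np.ones((4, 4))
X_hat = X.copy()
X_hat[0, 0] = 0.9
snr = recovery_snr_db(X, X_hat)       # signal norm 4, error norm 0.1
ratio = compression_ratio(1000, 150)
print(round(snr, 2), round(ratio, 2))
```

Under these definitions, a compression of 6.67x corresponds to keeping about 15% as many measurements as there are entries.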

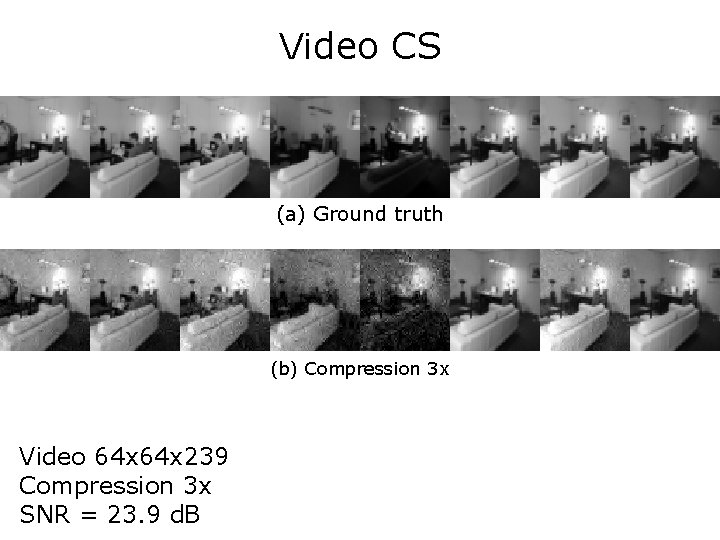

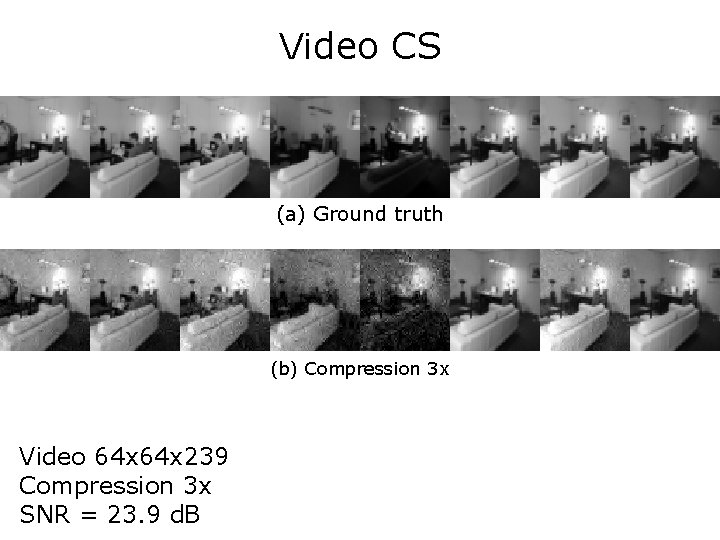

Video CS: (a) ground truth, (b) compression 3x. Video: 64 x 239; compression 3x; SNR = 23.9 dB

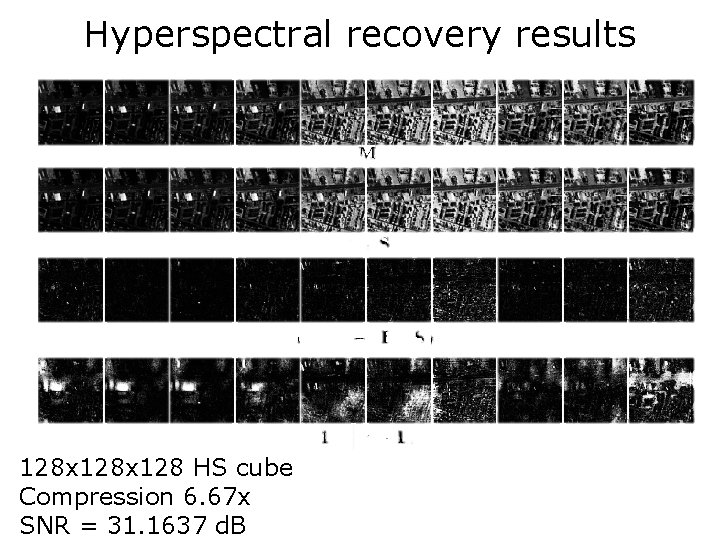

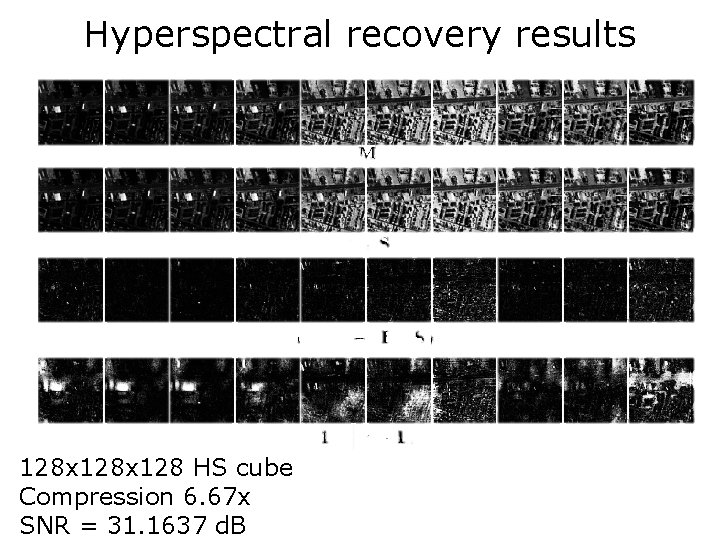

Hyperspectral recovery results: 128 x 128 HS cube; compression 6.67x; SNR = 31.1637 dB

Matrix completion (panels: accuracy, run time)

- CVX: interior point solver of the convex formulation
- OptSpace: non-robust MC solver

Open questions

- Convergence results for the greedy algorithm
- Low rank component is sparse/compressible in a wavelet basis
  - Is it even possible?

![CSLDS S et al SIAM J IS Low rank model CS-LDS • [S, et al. , SIAM J. IS*] • Low rank model –](https://slidetodoc.com/presentation_image/bd32c9d4fdda38bdec07f91794e3c85d/image-24.jpg)

CS-LDS

- [S et al., SIAM J. IS*]
- Low rank model
  - Sparse rows (in a wavelet transformation)
- Hyperspectral data
  - 2300 spectral bands
  - Spatial resolution 128 x 64
  - Rank 5

Figure labels: ground truth; 25.2 dB; 24.7 dB; 2%; 1%

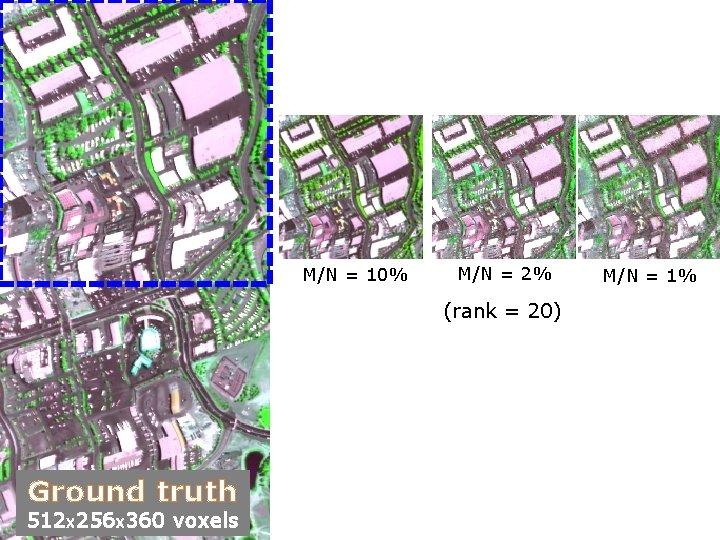

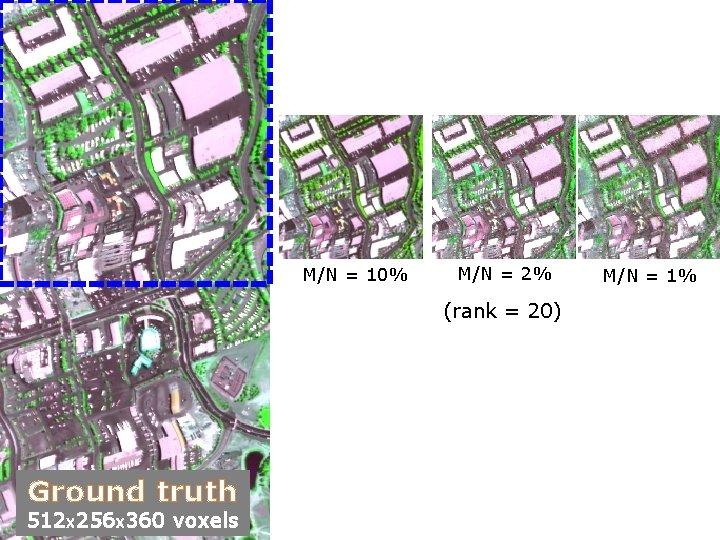

Figure labels: ground truth, 512 x 256 x 360 voxels; M/N = 10%; M/N = 2% (rank = 20); M/N = 1%

Open questions

- Convergence results for the greedy algorithm
- Low rank matrix is sparse/compressible in a wavelet basis
  - Is it even possible?
- Streaming recovery, etc.

dsp.rice.edu