Direct Methods for Sparse Linear Systems Lecture 4

- Slides: 39

Direct Methods for Sparse Linear Systems Lecture 4 Alessandra Nardi Thanks to Prof. Jacob White, Suvranu De, Deepak Ramaswamy, Michal Rewienski, and Karen Veroy

Last lecture review • Solution of system of linear equations • Existence and uniqueness review • Gaussian elimination basics – GE basics – LU factorization – Pivoting

Outline • Error Mechanisms • Sparse Matrices – Why are they nice – How do we store them – How can we exploit and preserve sparsity

Error Mechanisms • Round-off error – Pivoting helps • Ill conditioning (almost singular) – Bad luck: property of the matrix – Pivoting does not help • Numerical Stability of Method

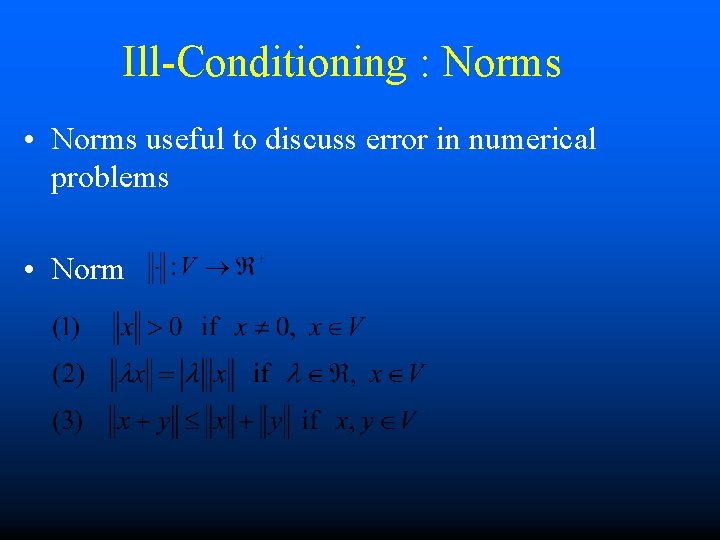

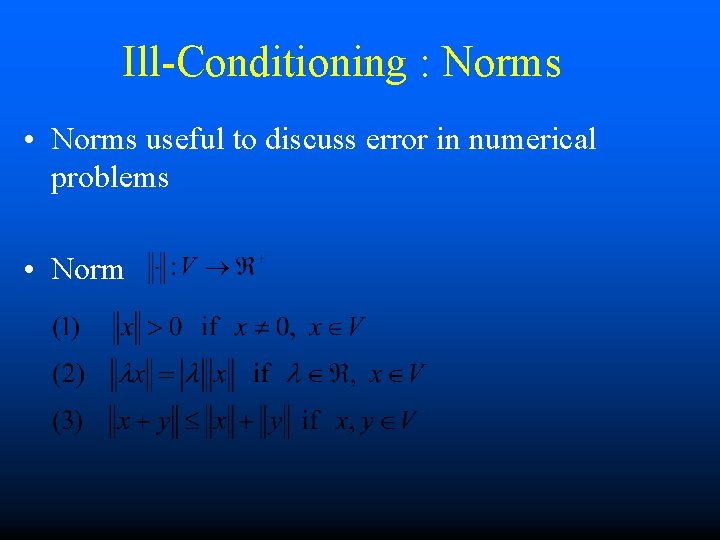

Ill-Conditioning : Norms • Norms useful to discuss error in numerical problems • Norm

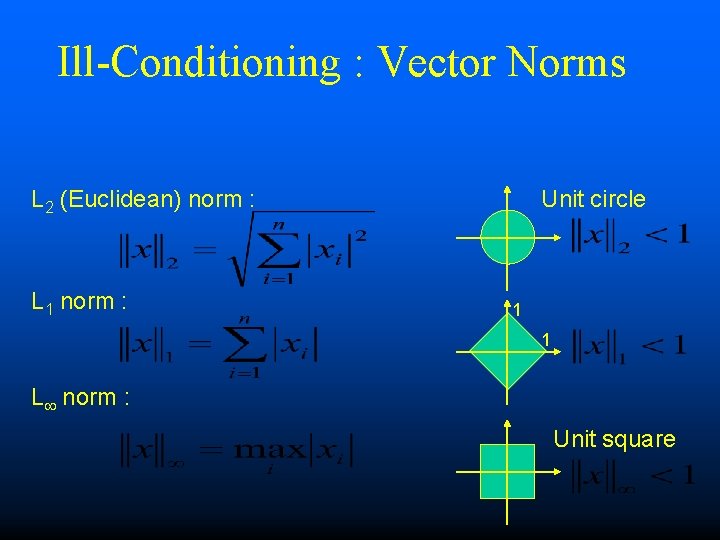

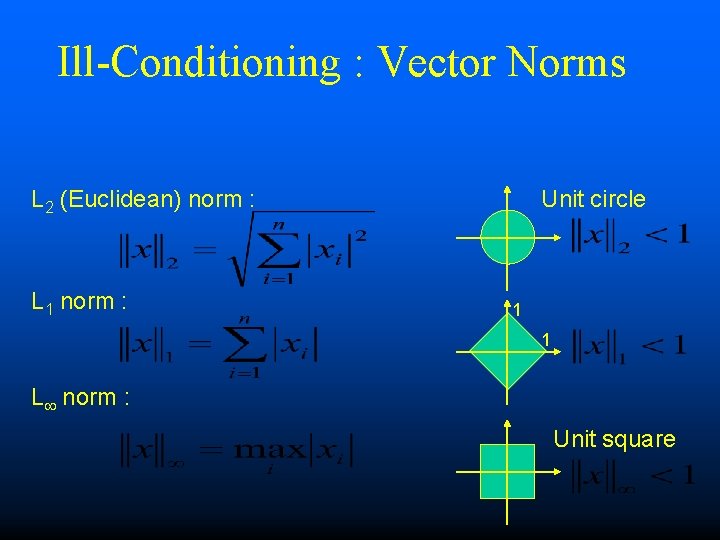

Ill-Conditioning : Vector Norms L 2 (Euclidean) norm : L 1 norm : Unit circle 1 1 L norm : Unit square

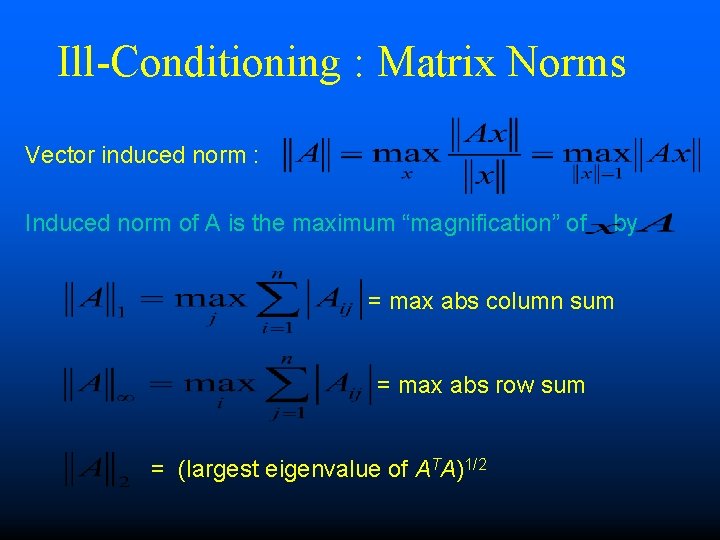

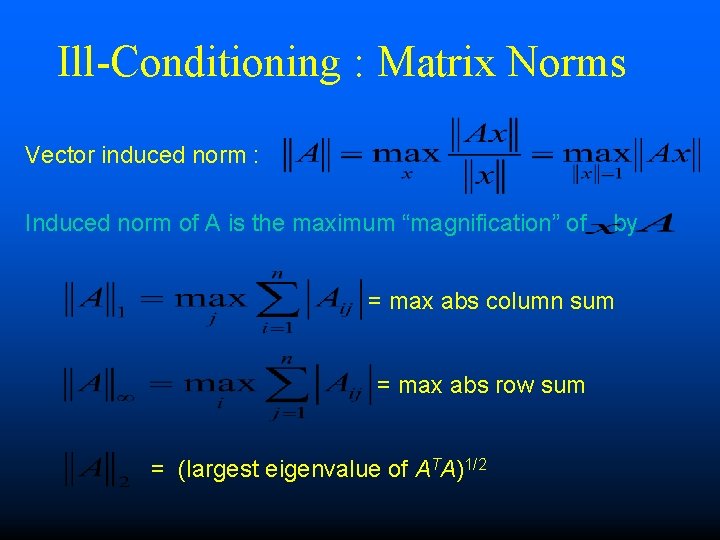

Ill-Conditioning : Matrix Norms Vector induced norm : Induced norm of A is the maximum “magnification” of by = max abs column sum = max abs row sum = (largest eigenvalue of ATA)1/2

Ill-Conditioning : Matrix Norms • More properties on the matrix norm: • Condition Number: -It can be shown that: -Large k(A) means matrix is almost singular (ill-conditioned)

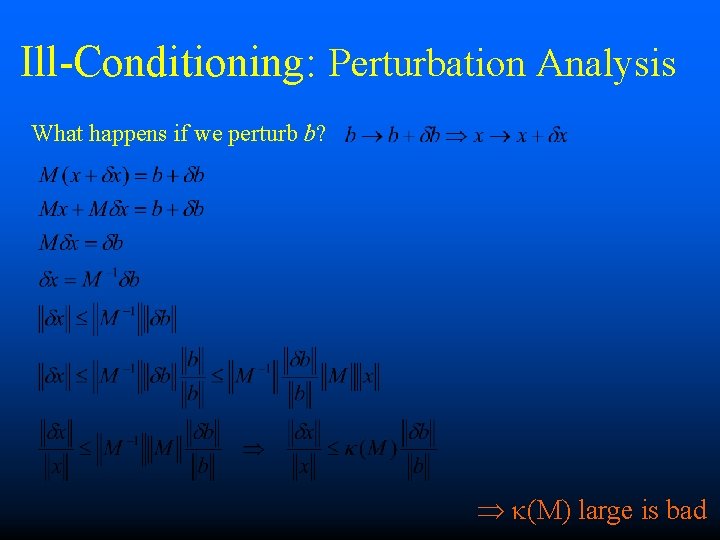

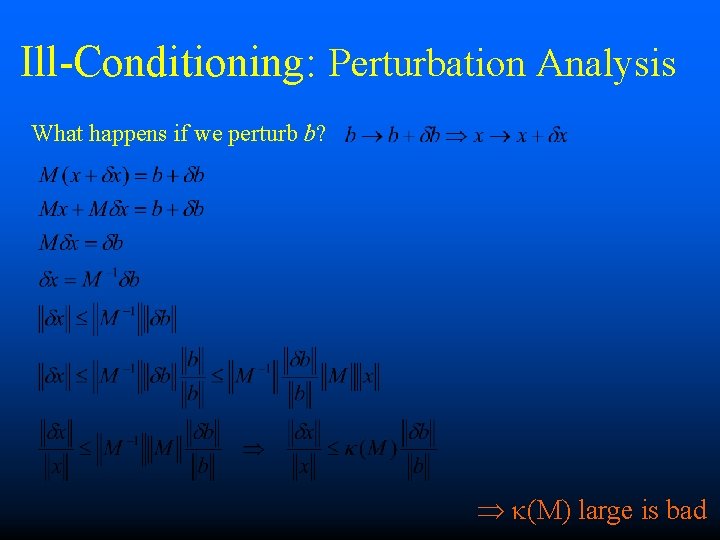

Ill-Conditioning: Perturbation Analysis What happens if we perturb b? k(M) large is bad

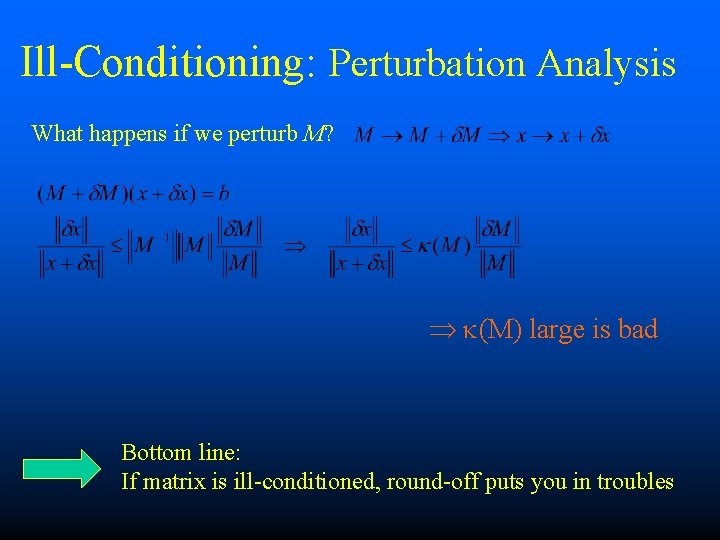

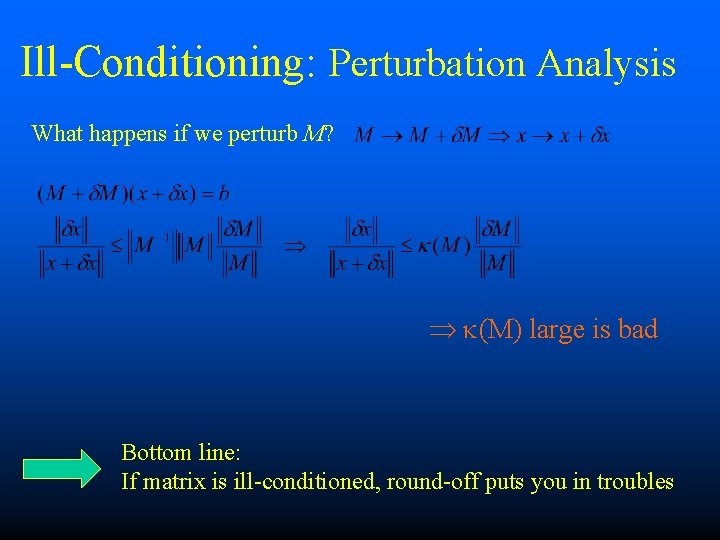

Ill-Conditioning: Perturbation Analysis What happens if we perturb M? k(M) large is bad Bottom line: If matrix is ill-conditioned, round-off puts you in troubles

Ill-Conditioning: Perturbation Analysis Geometric Approach is more intuitive Vectors are orthogonal Vectors are nearly aligned When vectors are nearly aligned, Hard to decide how much of versus how much of

Numerical Stability • Rounding errors may accumulate and propagate unstably in a bad algorithm. • Can be proven that for Gaussian elimination the accumulated error is bounded

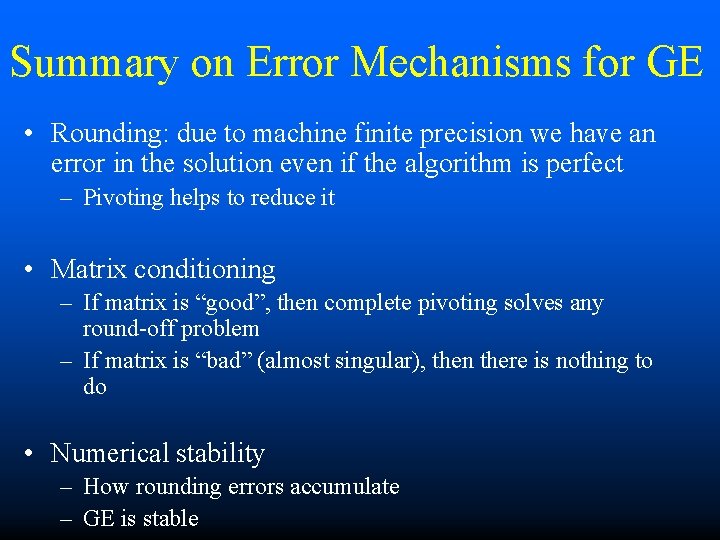

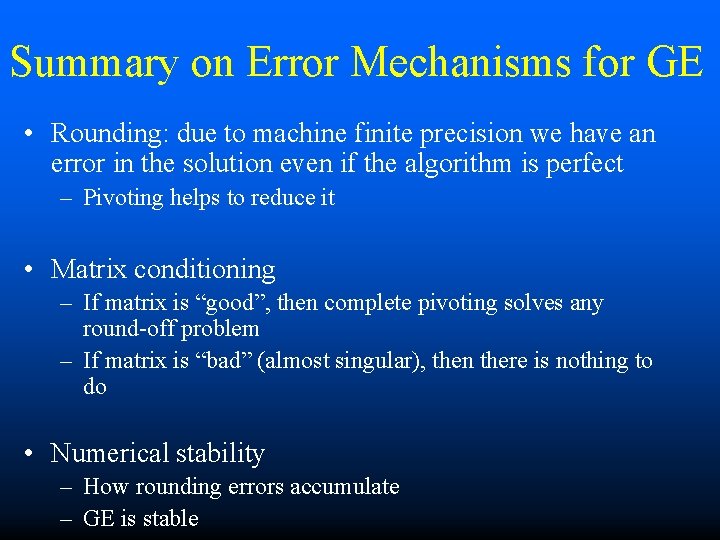

Summary on Error Mechanisms for GE • Rounding: due to machine finite precision we have an error in the solution even if the algorithm is perfect – Pivoting helps to reduce it • Matrix conditioning – If matrix is “good”, then complete pivoting solves any round-off problem – If matrix is “bad” (almost singular), then there is nothing to do • Numerical stability – How rounding errors accumulate – GE is stable

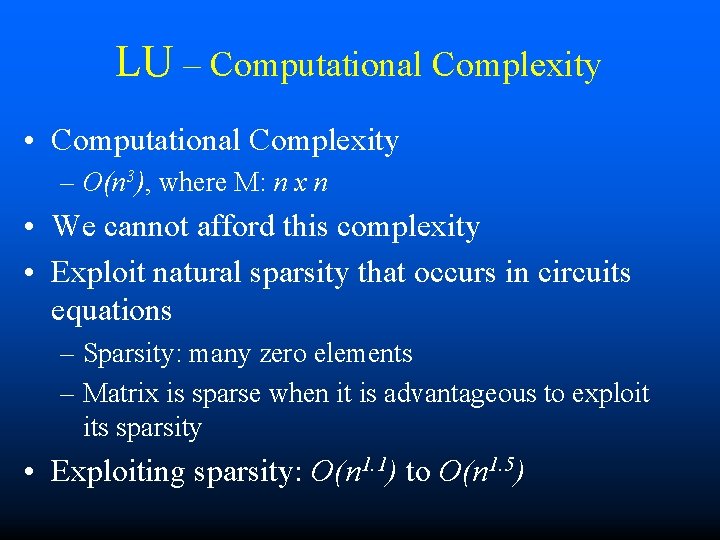

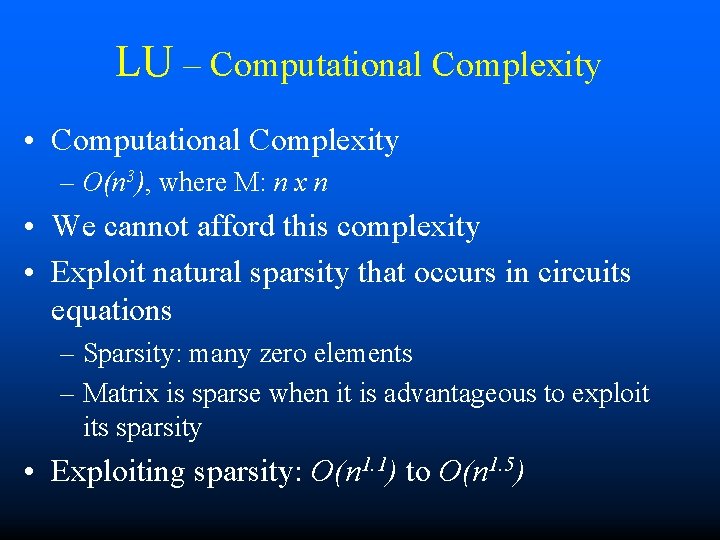

LU – Computational Complexity • Computational Complexity – O(n 3), where M: n x n • We cannot afford this complexity • Exploit natural sparsity that occurs in circuits equations – Sparsity: many zero elements – Matrix is sparse when it is advantageous to exploit its sparsity • Exploiting sparsity: O(n 1. 1) to O(n 1. 5)

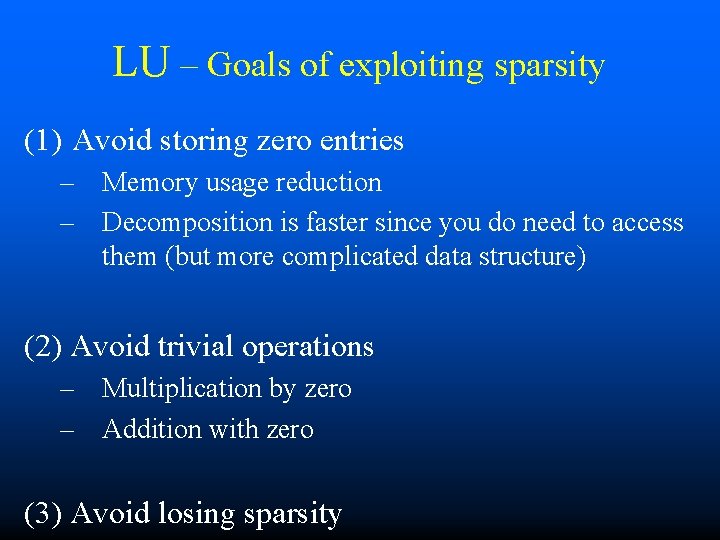

LU – Goals of exploiting sparsity (1) Avoid storing zero entries – Memory usage reduction – Decomposition is faster since you do need to access them (but more complicated data structure) (2) Avoid trivial operations – Multiplication by zero – Addition with zero (3) Avoid losing sparsity

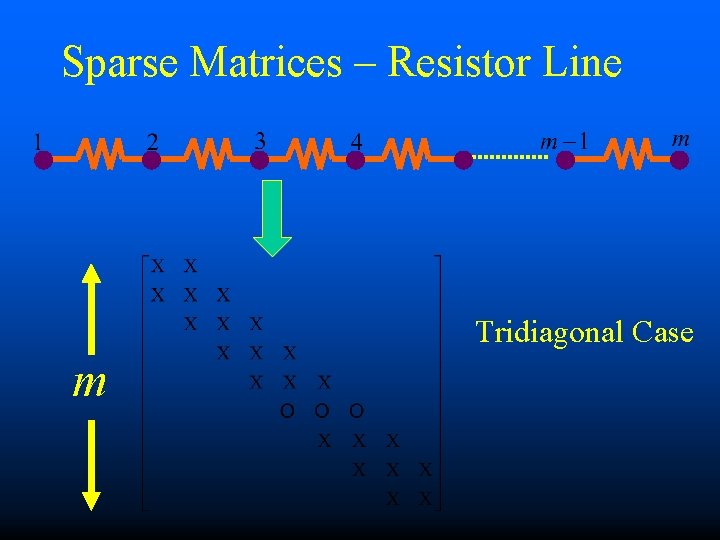

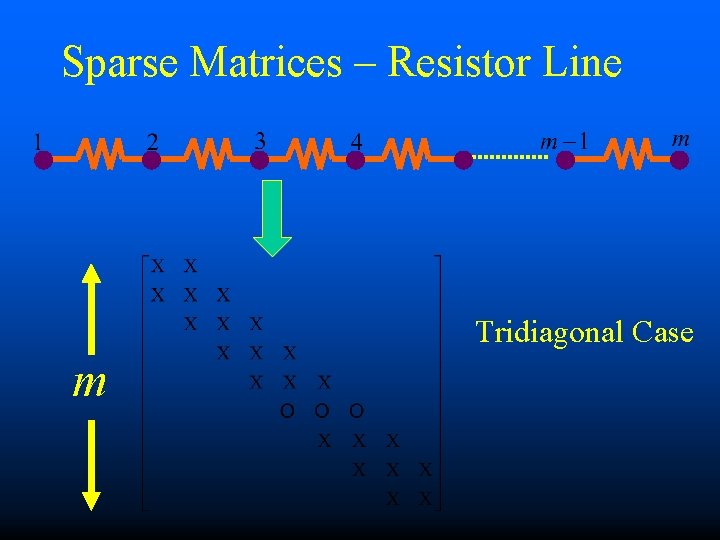

Sparse Matrices – Resistor Line Tridiagonal Case m

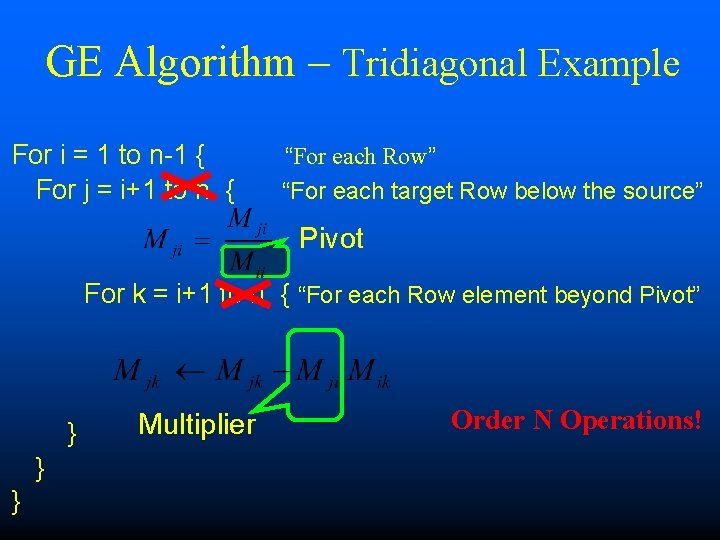

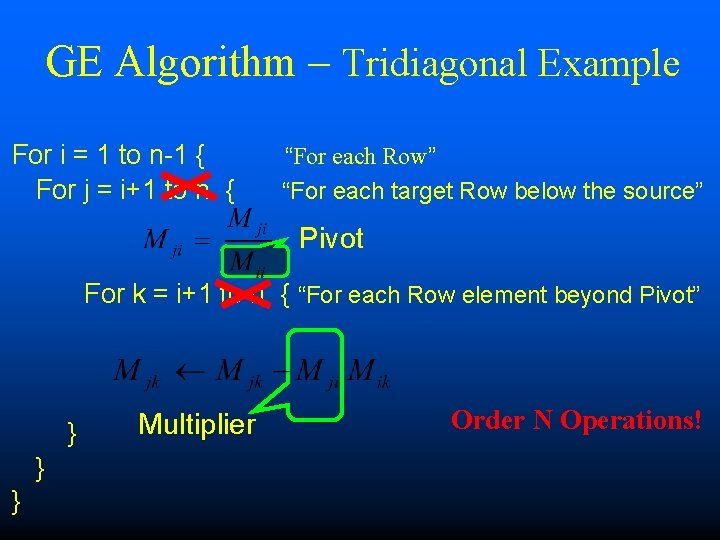

GE Algorithm – Tridiagonal Example For i = 1 to n-1 { For j = i+1 to n { “For each Row” “For each target Row below the source” Pivot For k = i+1 to n { “For each Row element beyond Pivot” } } } Multiplier Order N Operations!

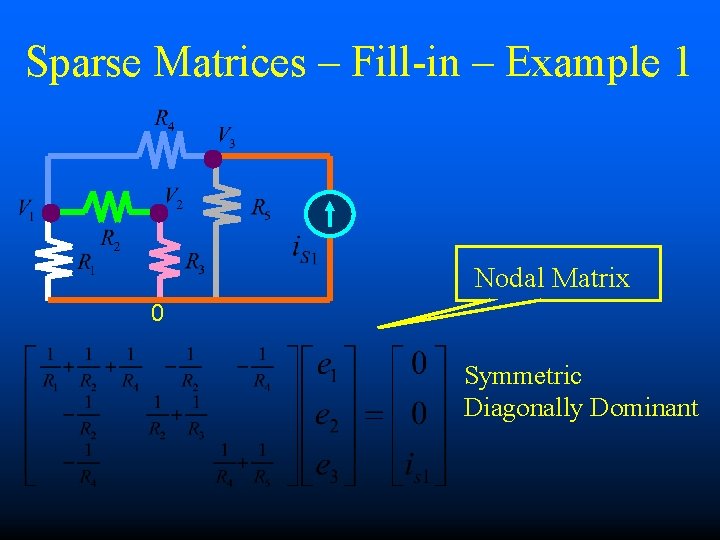

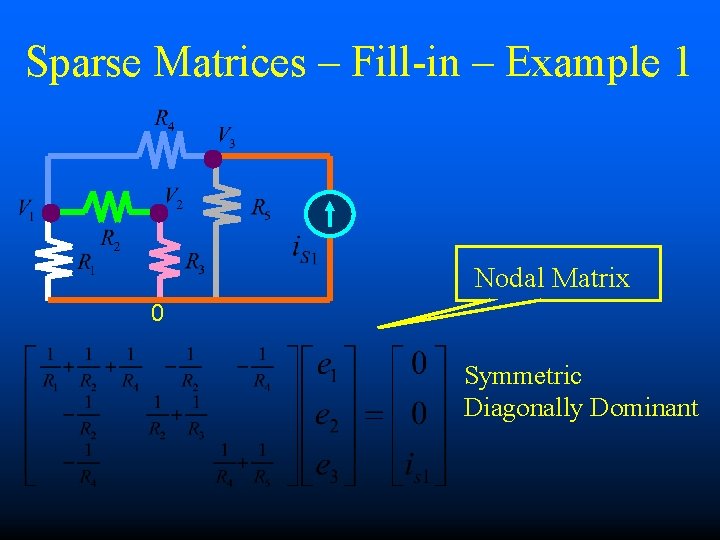

Sparse Matrices – Fill-in – Example 1 Nodal Matrix 0 Symmetric Diagonally Dominant

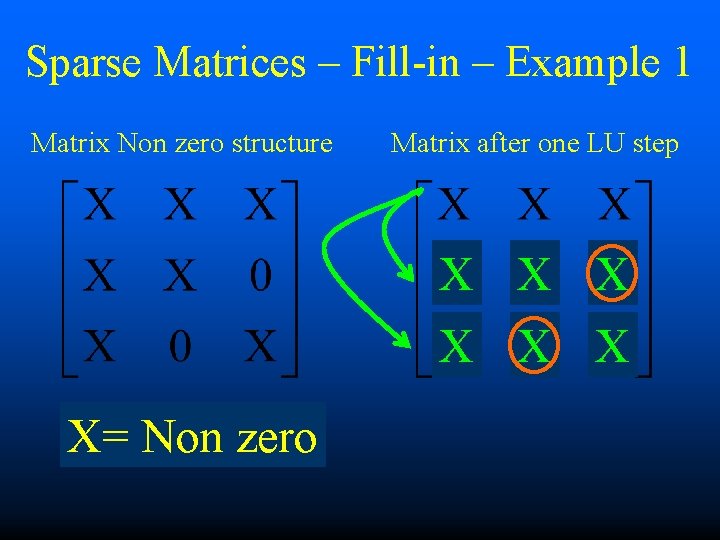

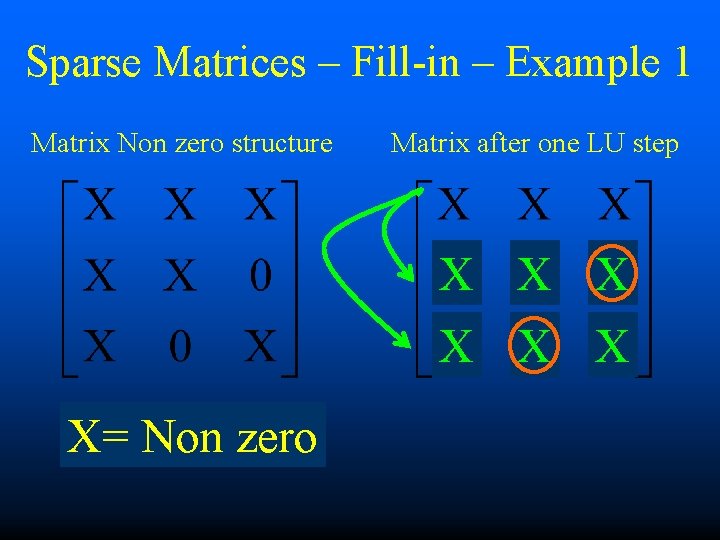

Sparse Matrices – Fill-in – Example 1 Matrix Non zero structure Matrix after one LU step X X X X= Non zero

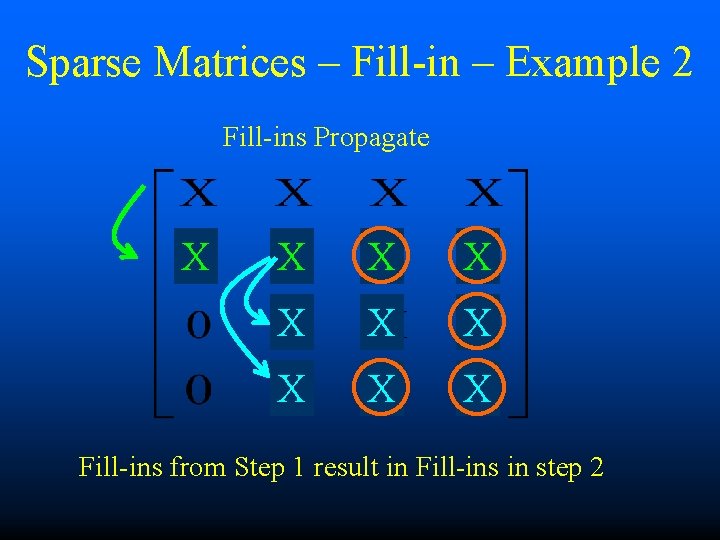

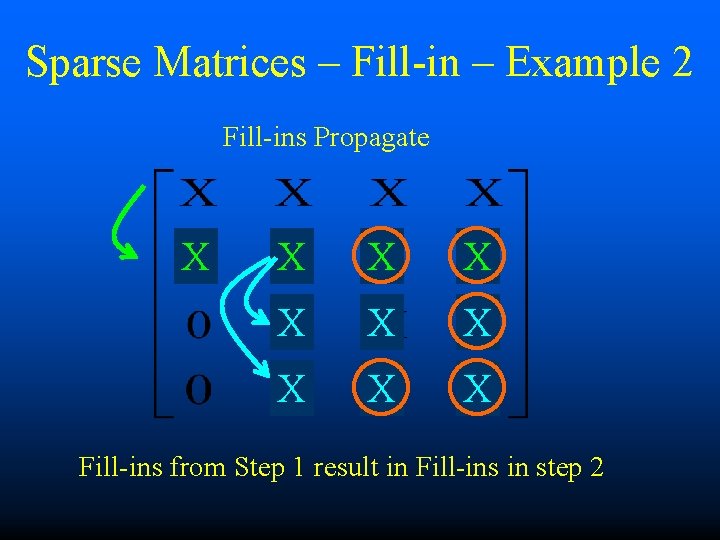

Sparse Matrices – Fill-in – Example 2 Fill-ins Propagate X X X X X Fill-ins from Step 1 result in Fill-ins in step 2

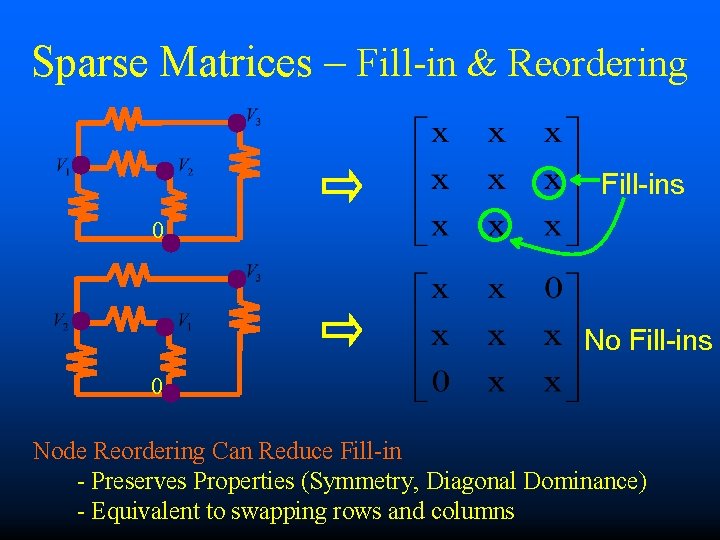

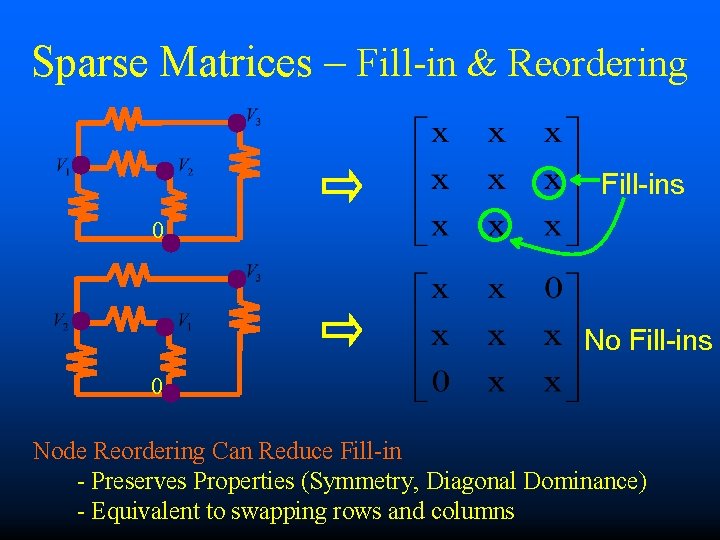

Sparse Matrices – Fill-in & Reordering Fill-ins 0 Node Reordering Can Reduce Fill-in - Preserves Properties (Symmetry, Diagonal Dominance) - Equivalent to swapping rows and columns

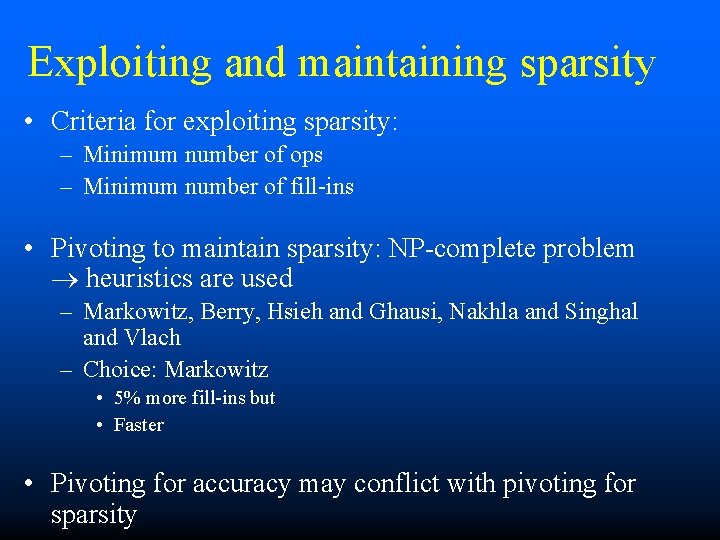

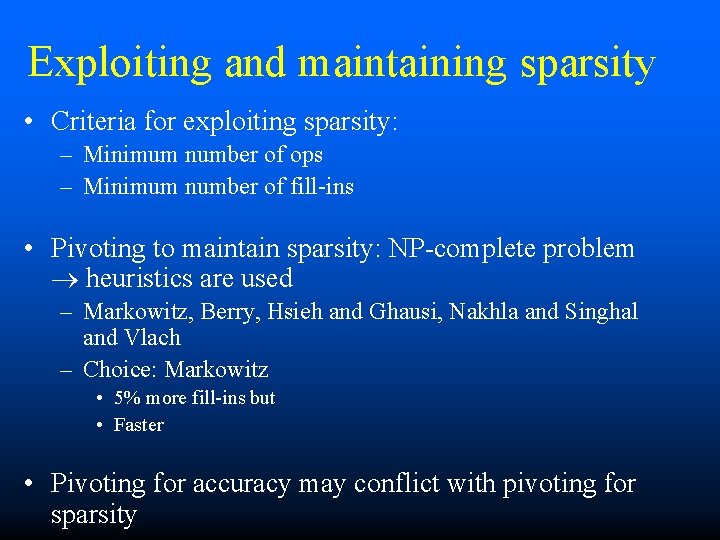

Exploiting and maintaining sparsity • Criteria for exploiting sparsity: – Minimum number of ops – Minimum number of fill-ins • Pivoting to maintain sparsity: NP-complete problem heuristics are used – Markowitz, Berry, Hsieh and Ghausi, Nakhla and Singhal and Vlach – Choice: Markowitz • 5% more fill-ins but • Faster • Pivoting for accuracy may conflict with pivoting for sparsity

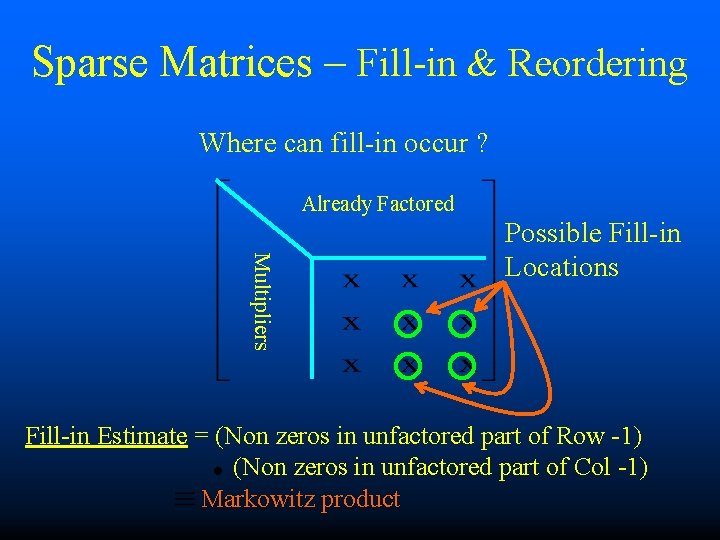

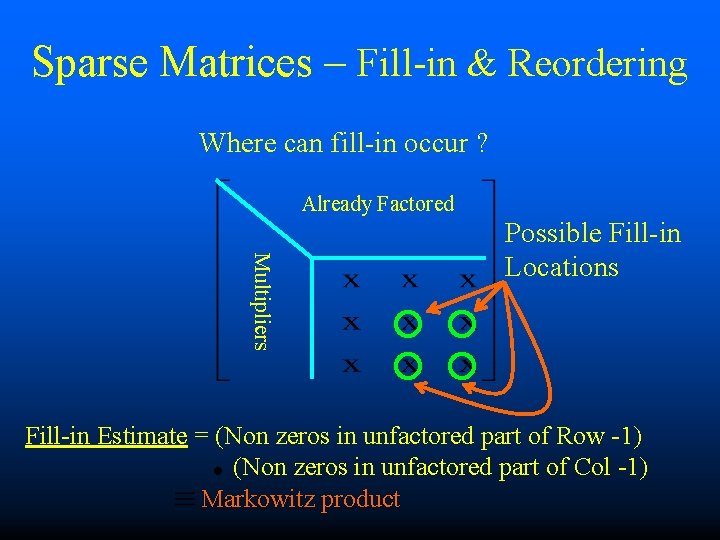

Sparse Matrices – Fill-in & Reordering Where can fill-in occur ? Already Factored Multipliers Possible Fill-in Locations Fill-in Estimate = (Non zeros in unfactored part of Row -1) (Non zeros in unfactored part of Col -1) Markowitz product

Sparse Matrices – Fill-in & Reordering Markowitz Reordering (Diagonal Pivoting) Greedy Algorithm (but close to optimal) !

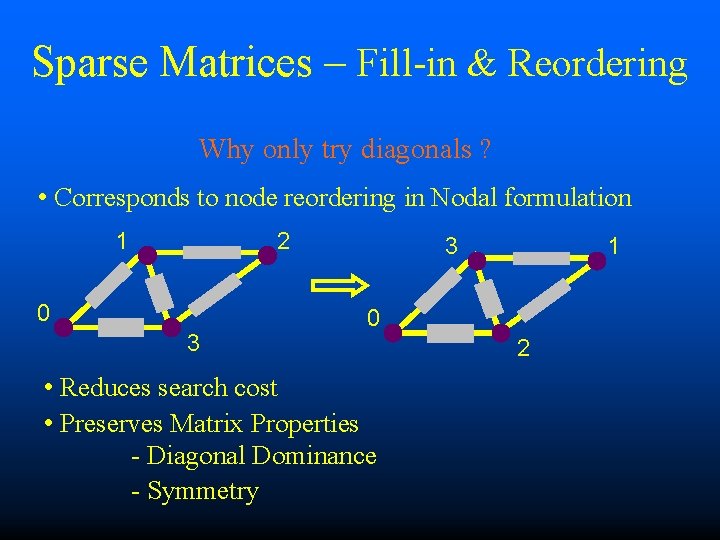

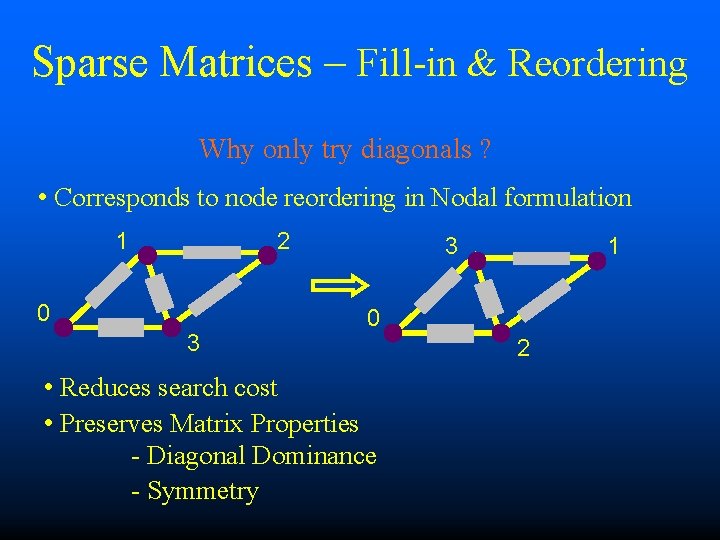

Sparse Matrices – Fill-in & Reordering Why only try diagonals ? • Corresponds to node reordering in Nodal formulation 1 2 0 3 3 1 0 • Reduces search cost • Preserves Matrix Properties - Diagonal Dominance - Symmetry 2

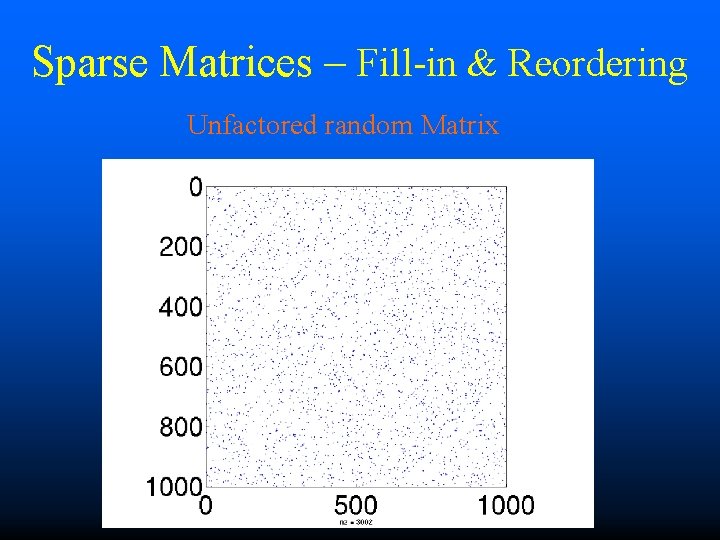

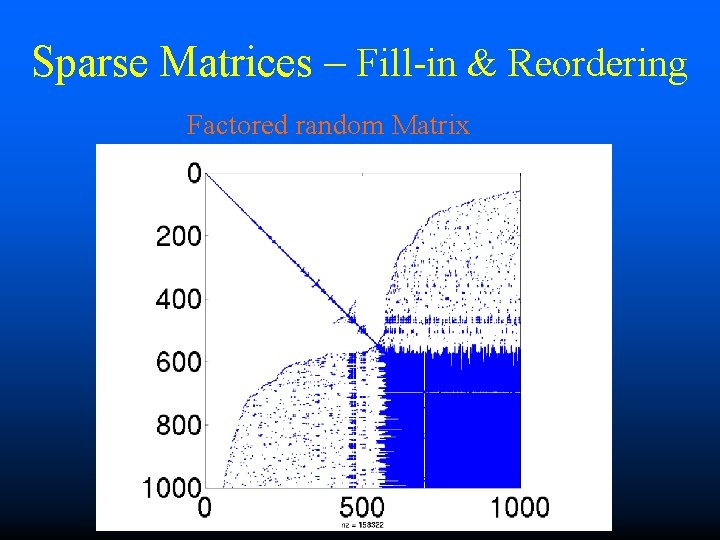

Sparse Matrices – Fill-in & Reordering Pattern of a Filled-in Matrix Very Sparse Dense

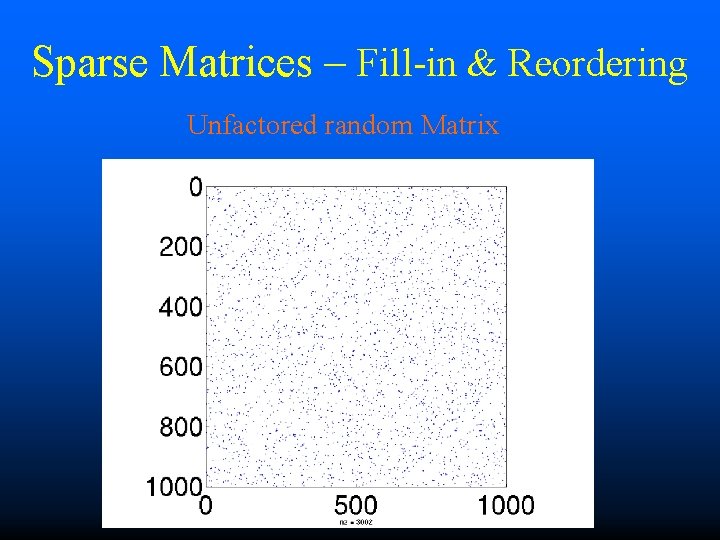

Sparse Matrices – Fill-in & Reordering Unfactored random Matrix

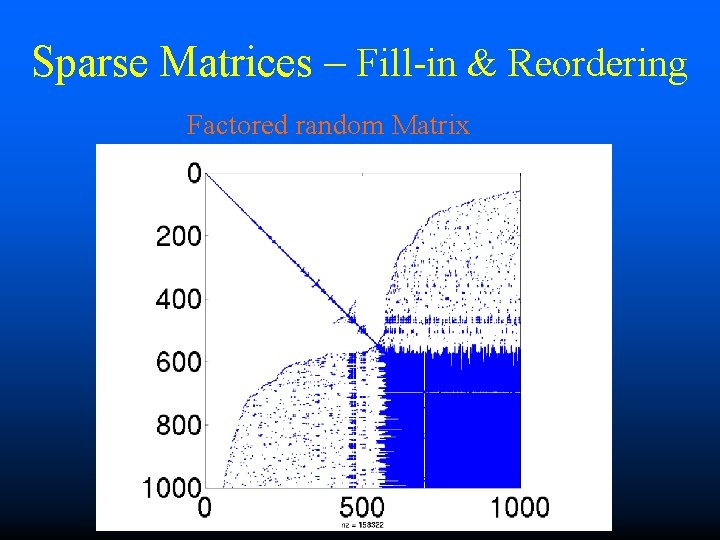

Sparse Matrices – Fill-in & Reordering Factored random Matrix

Sparse Matrices – Data Structure • Several ways of storing a sparse matrix in a compact form • Trade-off – Storage amount – Cost of data accessing and update procedures • Efficient data structure: linked list

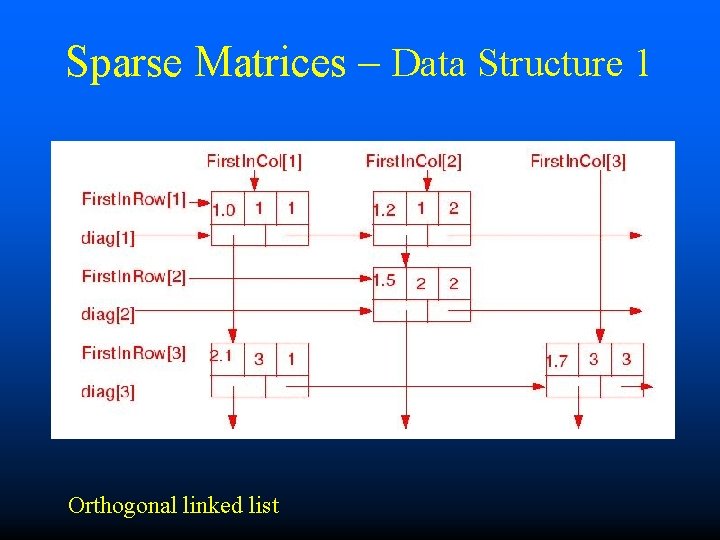

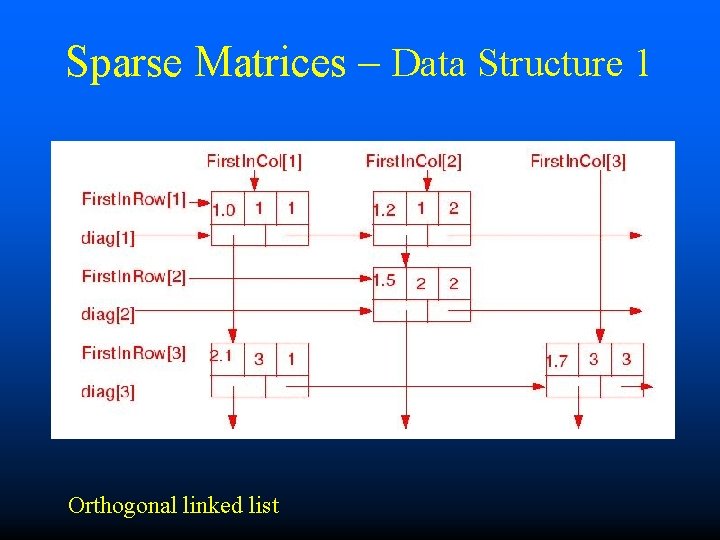

Sparse Matrices – Data Structure 1 Orthogonal linked list

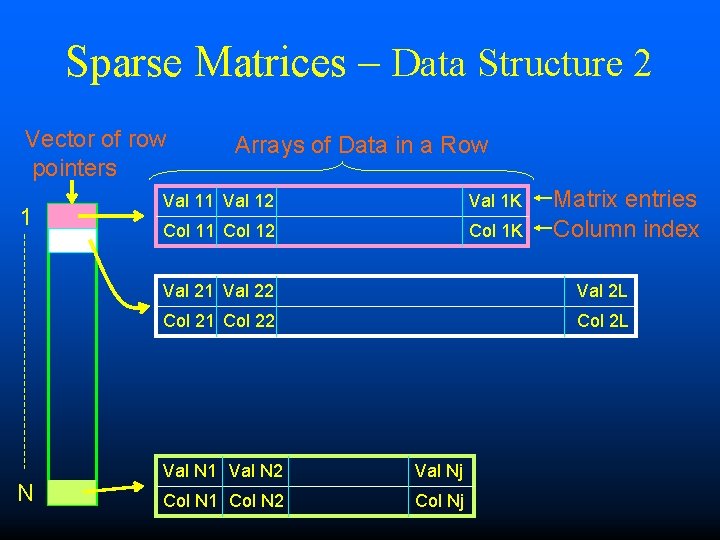

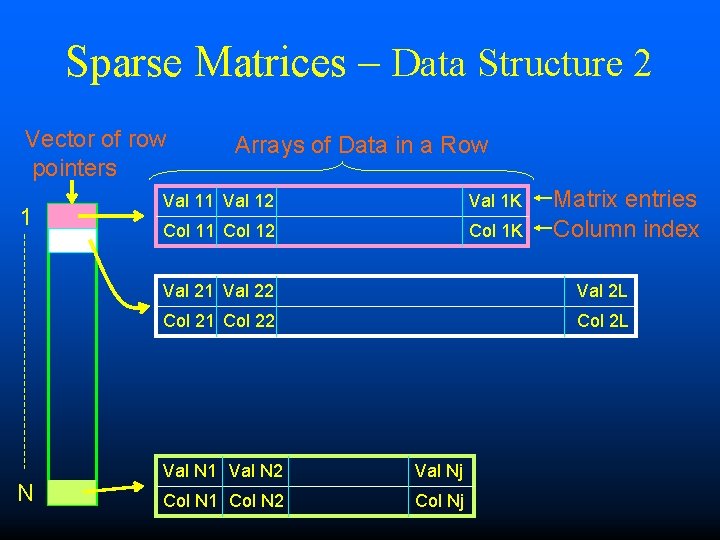

Sparse Matrices – Data Structure 2 Vector of row pointers 1 N Arrays of Data in a Row Val 11 Val 12 Val 1 K Col 11 Col 12 Col 1 K Matrix entries Column index Val 21 Val 22 Val 2 L Col 21 Col 22 Col 2 L Val N 1 Val N 2 Val Nj Col N 1 Col N 2 Col Nj

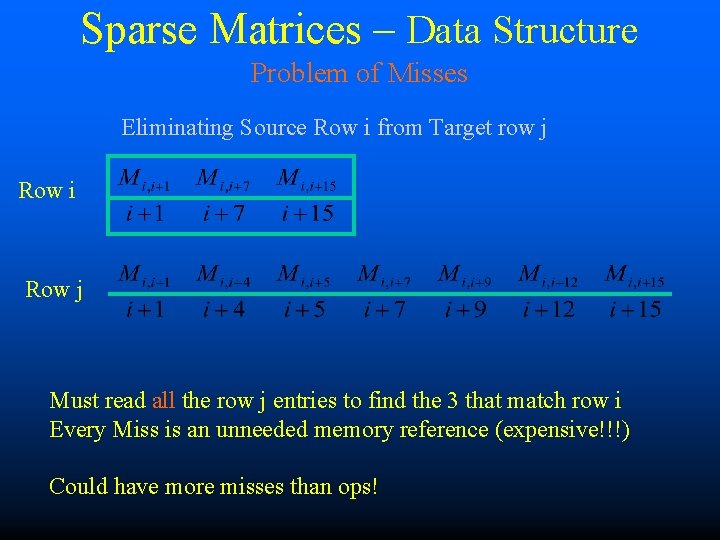

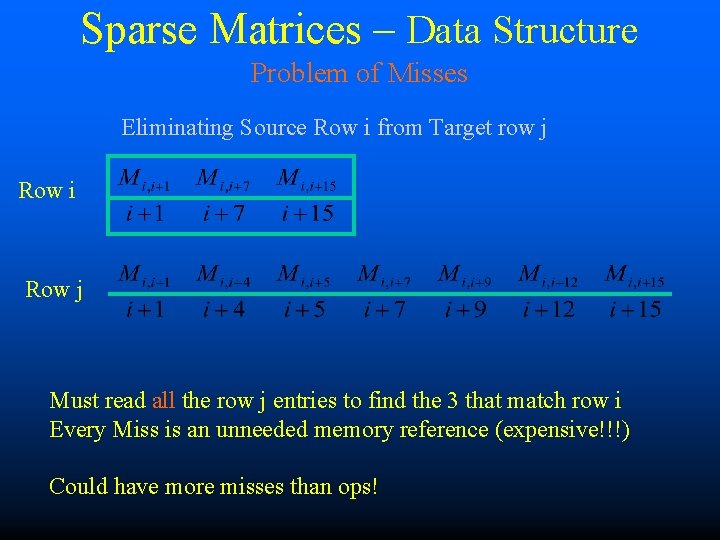

Sparse Matrices – Data Structure Problem of Misses Eliminating Source Row i from Target row j Row i Row j Must read all the row j entries to find the 3 that match row i Every Miss is an unneeded memory reference (expensive!!!) Could have more misses than ops!

Sparse Matrices – Data Structure Scattering for Miss Avoidance Row j 1) Read all the elements in Row j, and scatter them in an n-length vector 2) Access only the needed elements using array indexing!

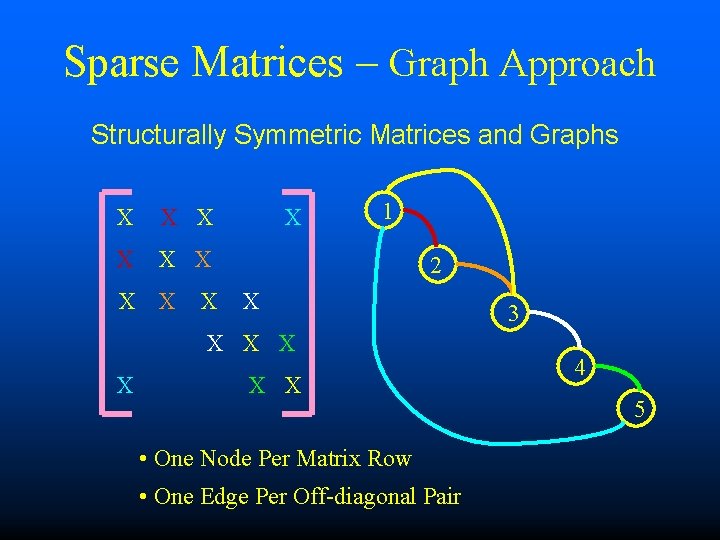

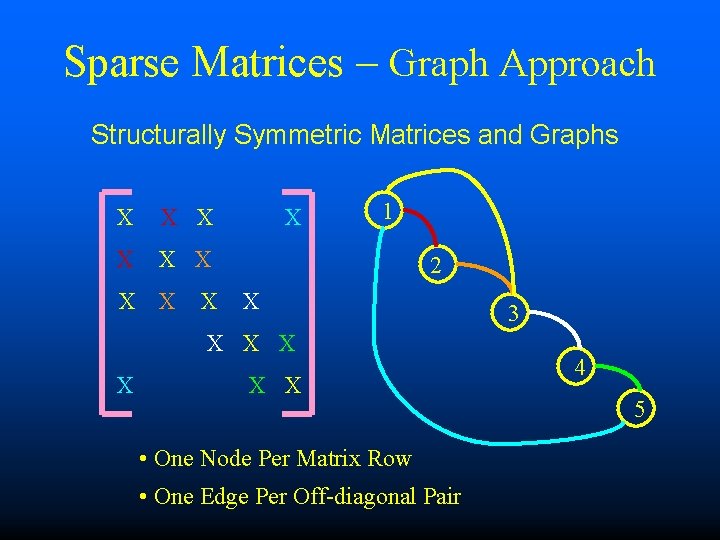

Sparse Matrices – Graph Approach Structurally Symmetric Matrices and Graphs X X X X X 1 2 X X X X • One Node Per Matrix Row • One Edge Per Off-diagonal Pair 3 4 5

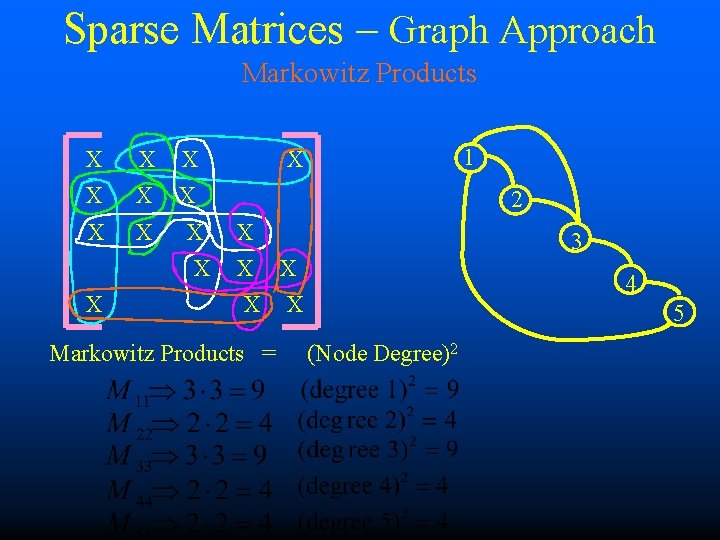

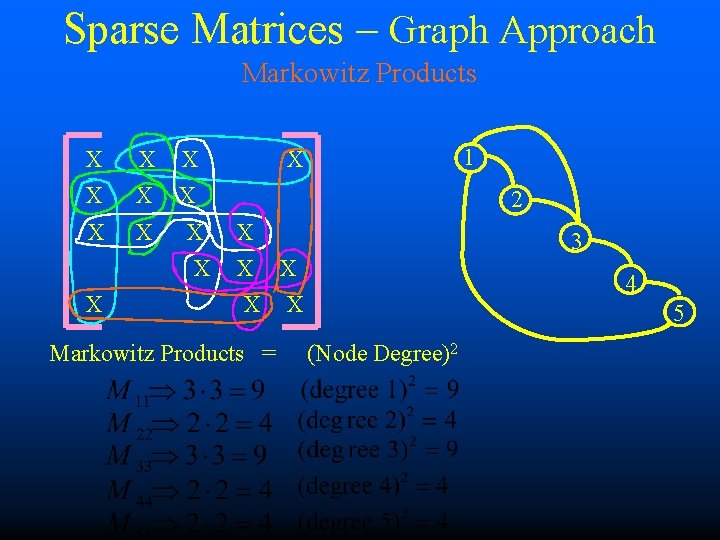

Sparse Matrices – Graph Approach Markowitz Products X X X X X 1 X 2 X X X Markowitz Products = 3 X 4 X 5 (Node Degree)2

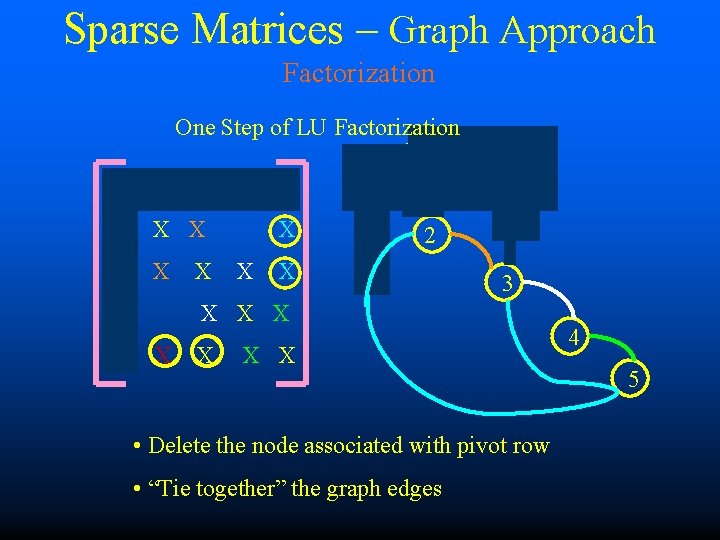

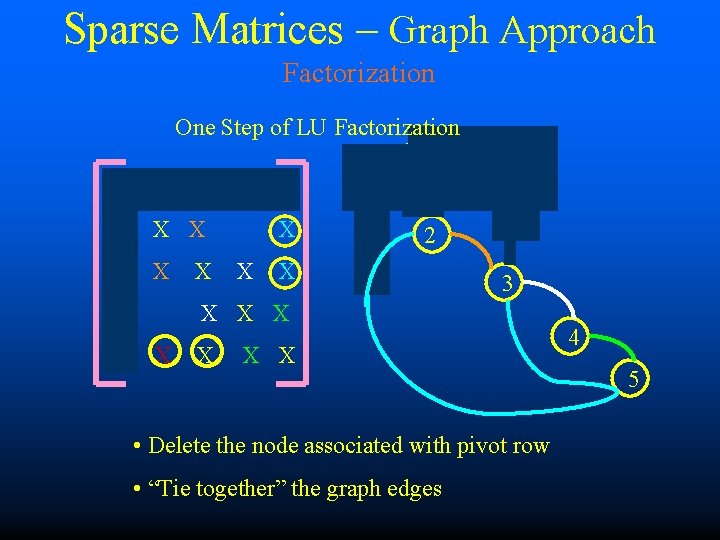

Sparse Matrices – Graph Approach Factorization One Step of LU Factorization X X X 1 2 X 3 X X X X • Delete the node associated with pivot row • “Tie together” the graph edges 4 5

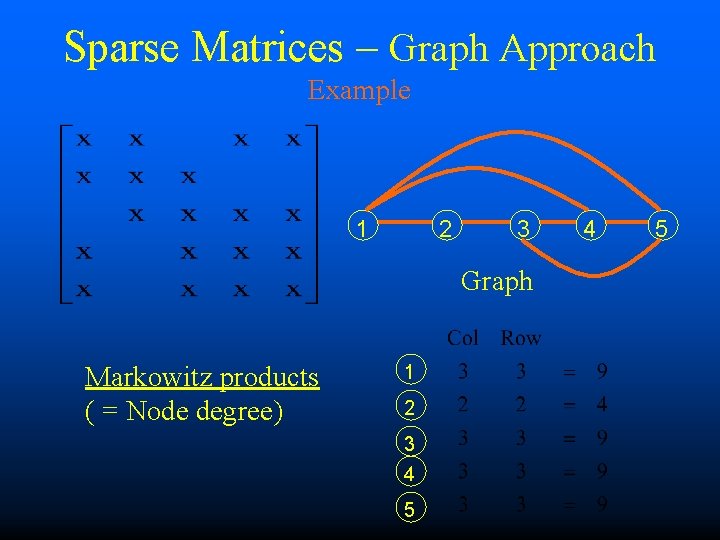

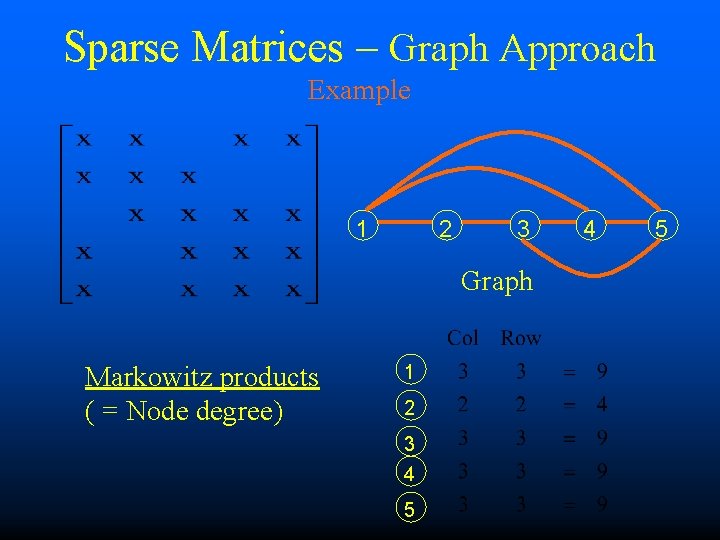

Sparse Matrices – Graph Approach Example 1 2 3 Graph Markowitz products ( = Node degree) 1 2 3 4 5

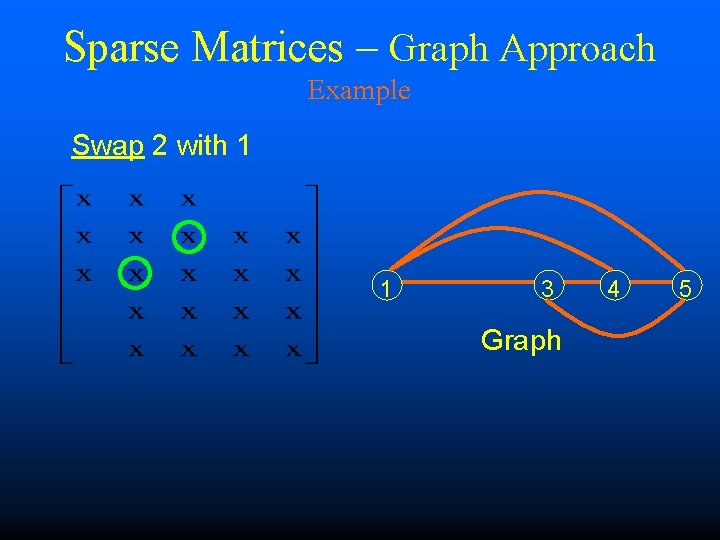

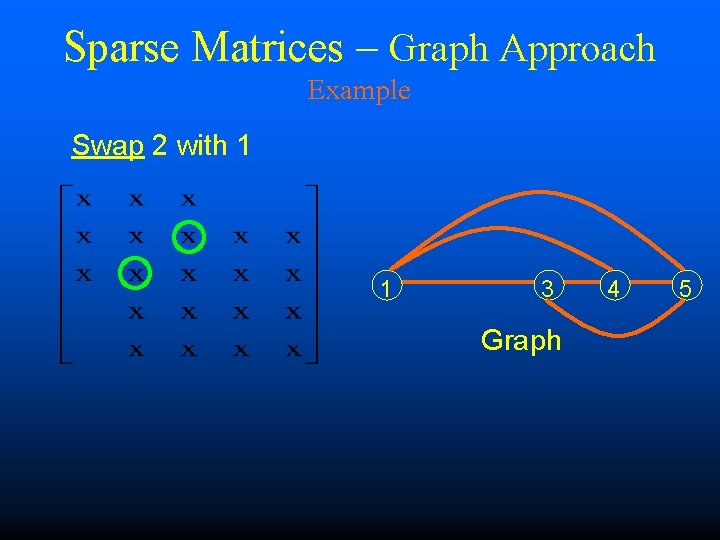

Sparse Matrices – Graph Approach Example Swap 2 with 1 1 3 Graph 4 5

Summary • Gaussian Elimination Error Mechanisms – Ill-conditioning – Numerical Stability • Gaussian Elimination for Sparse Matrices – Improved computational cost: factor in O(N 1. 5) operations (dense is O(N 3) ) – Example: Tridiagonal Matrix Factorization O(N) – Data structure – Markowitz Reordering to minimize fill-ins – Graph Based Approach