PowerPoint presentation on Anatomy of a Large-Scale Hypertextual Web Search Engine

- Slides: 21

PowerPoint presentation on The Anatomy of a Large-Scale Hypertextual Web Search Engine, presented by Vamsee Raja Jarugula for CIS 764, Kansas State University.

Presentation Overview
- Problem Definition
- Design Goals
- Google Search Engine Characteristics
- Google Architecture
- Scalability
- Conclusions

Vamsee Raja Jarugula CIS 764

Problem
- The Web is vast and ever expanding, and it is being flooded with data.
- This data is heterogeneous and takes many forms: text, images, ASCII, Java applets.
- Lists maintained by humans cannot keep track of it.
- Human attention is confined to roughly 10 to 1000 documents.
- Previous search methodologies relied on keyword matching, producing inferior-quality results.

Solution = Search Engine
- Search engines let users reach the text or documents of their choice with a click of the mouse.
- Some examples of search engines: Google, AltaVista, MetaCrawler, Kosmix.
- For a comprehensive list of search engines, visit: http://en.wikipedia.org/wiki/List_of_search_engines

Specific Design Goals
- Deliver results that have very high precision, even at the expense of recall.
- Bring search engine technology into the academic realm in order to support novel research activities.
- Make search engine technology transparent, i.e. advertising shouldn’t bias results.
- Make the system user friendly.

Google Search Engine Features
- Uses the link structure of the web (PageRank).
- Uses the text surrounding hyperlinks to improve the accuracy of document retrieval.
- Other features include:
  - Takes into account word proximity in documents.
  - Uses font size, word position, etc. to weight words.
  - Stores the full raw HTML of pages.

PageRank For Layman

Imagine a web surfer doing a simple random walk on the entire web for an infinite number of steps. Occasionally, the surfer will get bored and, instead of following a link pointing outward from the current page, will jump to another random page. At some point, the percentage of time spent at each page will converge to a fixed value. This value is known as the PageRank of the page.
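
The random walk described above can be simulated directly. The following is only a rough sketch: the three-page web, the `jump_prob` value, and the function name `random_surfer` are assumptions made for illustration and do not come from the slides.

```python
import random
from collections import Counter


def random_surfer(links, steps, jump_prob=0.15, seed=0):
    """Estimate the fraction of time a random surfer spends on each page."""
    rng = random.Random(seed)
    pages = sorted(links)
    visits = Counter()
    current = rng.choice(pages)
    for _ in range(steps):
        visits[current] += 1
        outgoing = links[current]
        # The surfer gets "bored" (or hits a dead end): jump to a random page.
        if not outgoing or rng.random() < jump_prob:
            current = rng.choice(pages)
        else:
            current = rng.choice(outgoing)
    return {page: visits[page] / steps for page in pages}


# Hypothetical three-page web: A and B both link to C, and C links back to A.
toy_web = {"A": ["C"], "B": ["C"], "C": ["A"]}
shares = random_surfer(toy_web, steps=20000)
```

In this toy web, page B has no incoming links, so the surfer only reaches it by a random jump and it ends up with the smallest share of visits.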

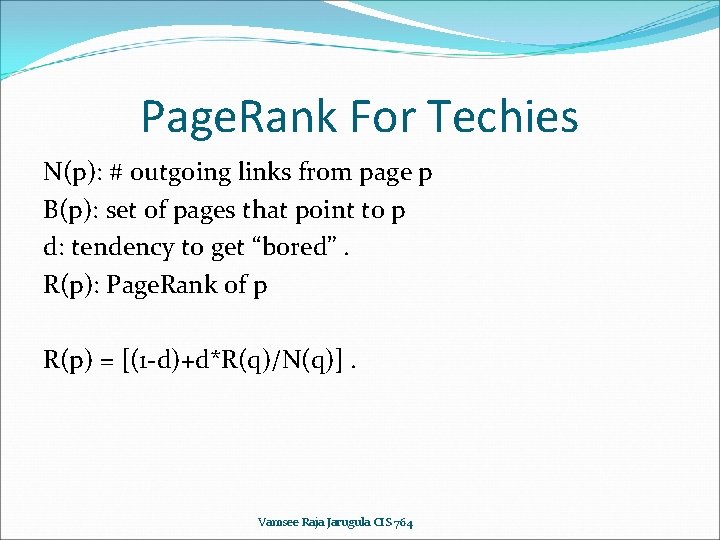

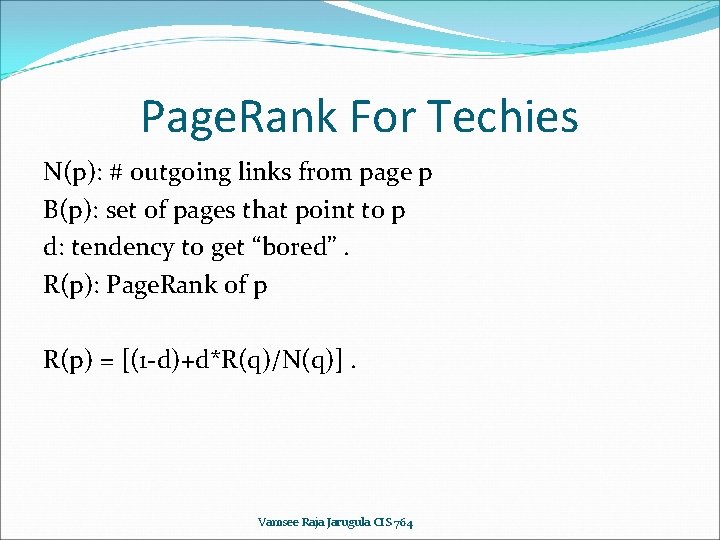

PageRank For Techies
- N(p): number of outgoing links from page p
- B(p): set of pages that point to p
- d: damping factor, related to the surfer's tendency to get “bored”
- R(p): PageRank of p

$$R(p) = (1 - d) + d \sum_{q \in B(p)} \frac{R(q)}{N(q)}$$
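
A minimal sketch of how R(p) could be computed by repeated substitution, using the definitions of N(p), B(p) and d given above. The toy graph, the value d = 0.85, the iteration count, and the function name `pagerank` are illustrative assumptions, not the production implementation.

```python
def pagerank(links, d=0.85, iterations=100):
    """Iterate R(p) = (1 - d) + d * sum(R(q) / N(q) for q in B(p))."""
    pages = list(links)
    # B(p): pages that point to p, derived from the outgoing-link lists.
    backlinks = {p: [] for p in pages}
    for q, targets in links.items():
        for p in targets:
            backlinks[p].append(q)
    rank = {p: 1.0 for p in pages}
    for _ in range(iterations):
        # N(q) is len(links[q]); every q in B(p) has at least one outgoing link.
        rank = {
            p: (1 - d) + d * sum(rank[q] / len(links[q]) for q in backlinks[p])
            for p in pages
        }
    return rank


# Hypothetical web: A links to B and C, B links to C, C links back to A.
toy_web = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
ranks = pagerank(toy_web)
```

With this form of the formula the ranks of a graph without dead ends sum to the number of pages, and page C, which is pointed to by both other pages, ends up ranked highest.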

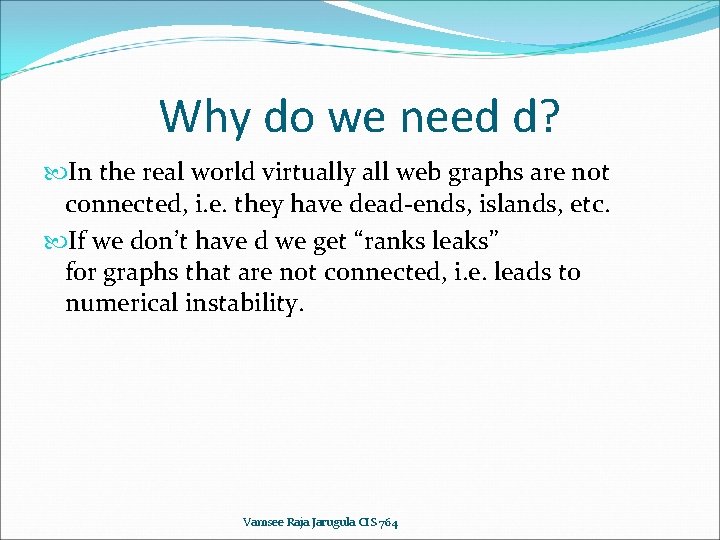

Why do we need d?
- In the real world virtually no web graph is fully connected, i.e. graphs have dead ends, islands, etc.
- Without d we get “rank leaks” on graphs that are not connected, which leads to numerical instability.
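
To illustrate the leak, the sketch below iterates the rank formula on a two-page graph with a dead end, once with no damping (d = 1) and once with d = 0.85. The graph, the values of d, and the helper name `total_rank` are assumptions chosen for the example.

```python
def total_rank(links, d, iterations):
    """Sum of all ranks after iterating R(p) = (1 - d) + d * sum(R(q) / N(q))."""
    rank = {p: 1.0 for p in links}
    for _ in range(iterations):
        new_rank = {p: 1 - d for p in links}
        for q, targets in links.items():
            for p in targets:
                new_rank[p] += d * rank[q] / len(targets)
        rank = new_rank
    return sum(rank.values())


# Hypothetical graph with a dead end: A links to B, and B links nowhere.
dead_end_web = {"A": ["B"], "B": []}
leaky = total_rank(dead_end_web, d=1.0, iterations=5)    # no damping
damped = total_rank(dead_end_web, d=0.85, iterations=5)  # with damping
```

Without damping, all rank drains out through the dead end and the total falls to zero; with d = 0.85 every page keeps a baseline of 1 - d and the total settles at a positive value.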

Justifications for using PageRank
- Attempts to model user behavior.
- Captures the notion that the more a page is pointed to by “important” pages, the more it is worth looking at.
- Takes into account the global structure of the web.

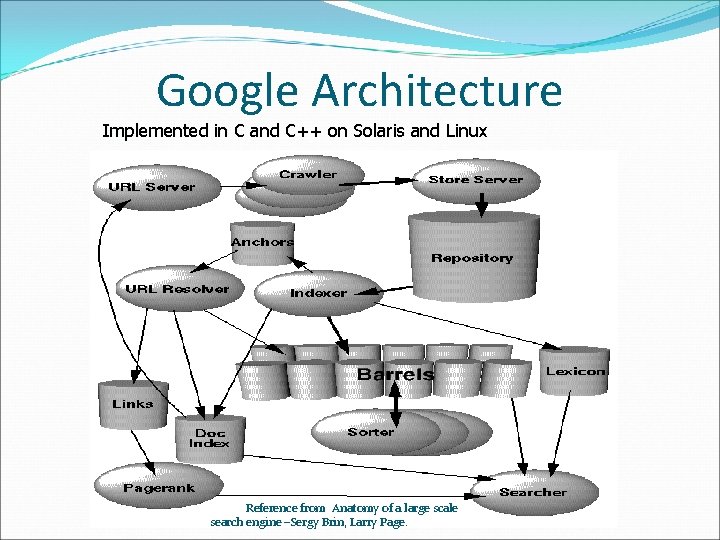

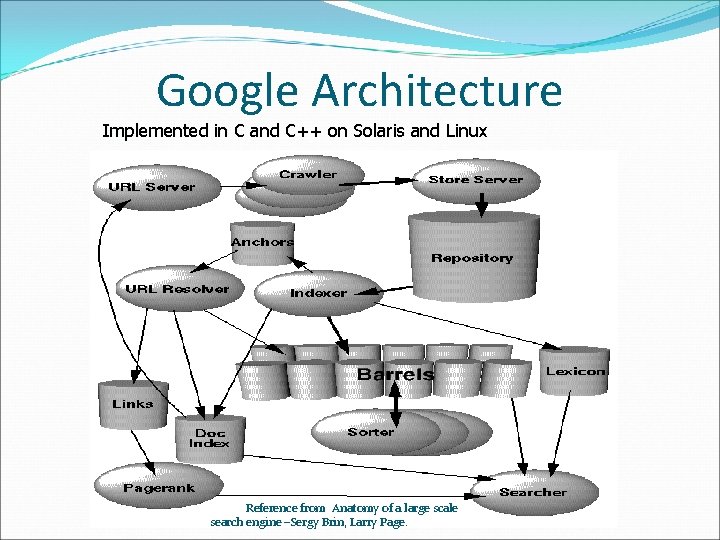

Google Architecture
- Implemented in C and C++ on Solaris and Linux.
- Reference: The Anatomy of a Large-Scale Hypertextual Web Search Engine, Sergey Brin and Larry Page.

Preliminary

A “hitlist” is defined as the list of occurrences of a particular word in a particular document, including additional meta info:
- position of word in doc
- font size
- capitalization
- descriptor type, e.g. title, anchor, etc.
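
One way a single hit could be packed into a small integer is sketched below. The bit widths (1 bit for capitalization, 3 for font size, 12 for position) are an assumption based on the plain-hit layout described in the Brin and Page paper; the descriptor type is left out, and the function names are hypothetical.

```python
# Assumed bit widths for one "plain" hit: 1 + 3 + 12 = 16 bits in total.
CAP_BITS, FONT_BITS, POS_BITS = 1, 3, 12
MAX_FONT = (1 << FONT_BITS) - 1  # 7
MAX_POS = (1 << POS_BITS) - 1    # 4095


def pack_hit(capitalized, font_size, position):
    """Pack one hit into a 16-bit integer, clamping values that do not fit."""
    font = min(font_size, MAX_FONT)
    pos = min(position, MAX_POS)
    return (int(capitalized) << (FONT_BITS + POS_BITS)) | (font << POS_BITS) | pos


def unpack_hit(hit):
    """Recover (capitalized, font_size, position) from a packed hit."""
    position = hit & MAX_POS
    font_size = (hit >> POS_BITS) & MAX_FONT
    capitalized = bool(hit >> (FONT_BITS + POS_BITS))
    return capitalized, font_size, position


packed = pack_hit(True, 3, 42)
```

A hitlist for a word in a document is then just a list of such integers, which is what makes the encoding compact.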

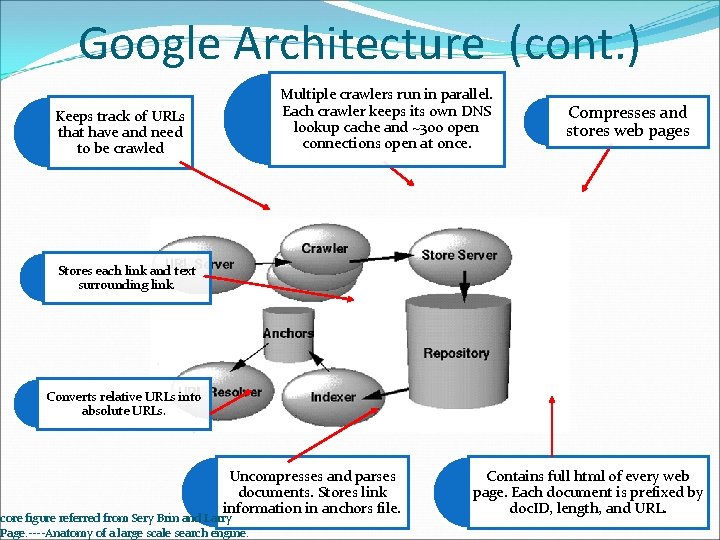

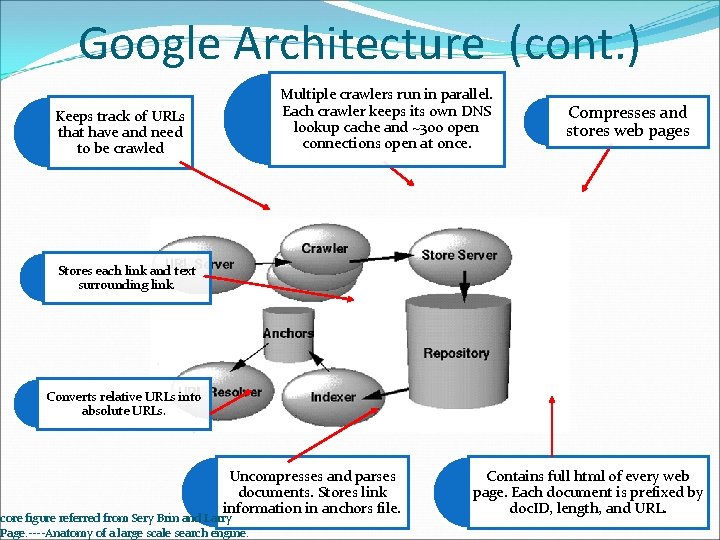

Google Architecture (cont.)
- Multiple crawlers run in parallel. Each crawler keeps its own DNS lookup cache and ~300 connections open at once.
- Keeps track of URLs that have been crawled and URLs that still need to be crawled.
- Compresses and stores web pages.
- Stores each link and the text surrounding the link.
- Converts relative URLs into absolute URLs.
- Uncompresses and parses documents. Stores link information in the anchors file.
- Contains the full HTML of every web page. Each document is prefixed by docID, length, and URL.

Core figure from Sergey Brin and Larry Page, The Anatomy of a Large-Scale Hypertextual Web Search Engine.
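
Converting relative URLs into absolute ones can be sketched with Python's standard `urllib.parse.urljoin`. The helper name `resolve_links` and the example URLs are hypothetical; the original system was written in C and C++, so this only illustrates the idea.

```python
from urllib.parse import urljoin


def resolve_links(page_url, hrefs):
    """Turn each href found on page_url into an absolute URL."""
    return [urljoin(page_url, href) for href in hrefs]


absolute = resolve_links(
    "http://example.com/docs/index.html",
    ["intro.html", "../about.html", "http://other.example/x"],
)
```

Links that are already absolute pass through unchanged, while relative ones are resolved against the URL of the page they were found on.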

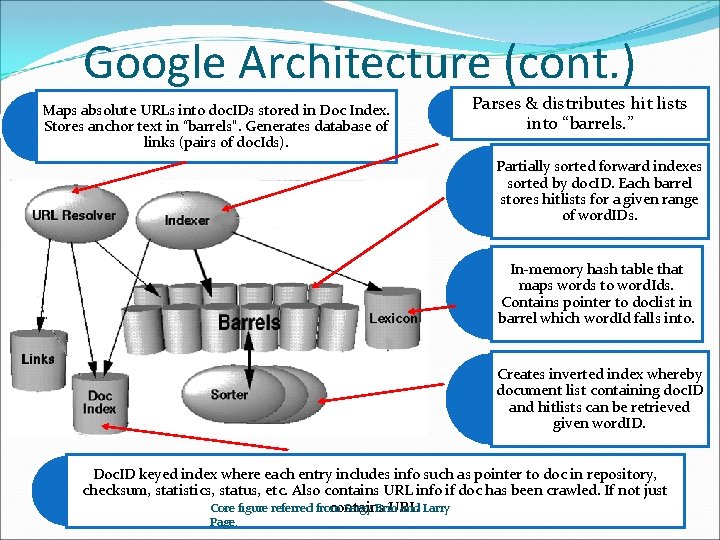

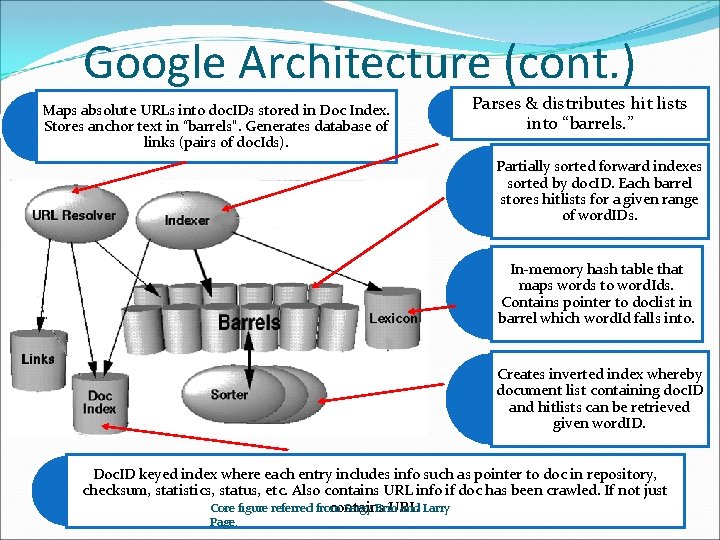

Google Architecture (cont.)
- Maps absolute URLs into docIDs stored in the Doc Index. Stores anchor text in “barrels”. Generates a database of links (pairs of docIDs).
- Parses and distributes hit lists into “barrels”.
- Partially sorted forward indexes, sorted by docID. Each barrel stores hitlists for a given range of wordIDs.
- In-memory hash table that maps words to wordIDs. Contains a pointer to the doclist in the barrel that the wordID falls into.
- Creates the inverted index, whereby the document list containing docIDs and hitlists can be retrieved given a wordID.
- DocID-keyed index where each entry includes info such as a pointer to the doc in the repository, checksum, statistics, status, etc. Also contains URL info if the doc has been crawled; if not, it just contains the URL.

Core figure from Sergey Brin and Larry Page.
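
The relationship between the lexicon, the forward index and the inverted index can be sketched in a few lines. This is a simplified in-memory model: hitlists are reduced to word positions, barrels are ignored, and all function names and sample documents are assumptions made for the example.

```python
from collections import defaultdict


def build_lexicon(documents):
    """Assign a wordID to every distinct word, in order of first appearance."""
    lexicon = {}
    for text in documents.values():
        for word in text.lower().split():
            lexicon.setdefault(word, len(lexicon))
    return lexicon


def build_forward_index(documents, lexicon):
    """docID -> {wordID: hitlist}, where a hitlist is a list of positions."""
    forward = {}
    for doc_id, text in documents.items():
        hits = defaultdict(list)
        for position, word in enumerate(text.lower().split()):
            hits[lexicon[word]].append(position)
        forward[doc_id] = dict(hits)
    return forward


def invert(forward):
    """wordID -> list of (docID, hitlist) pairs, ordered by docID."""
    inverted = defaultdict(list)
    for doc_id in sorted(forward):
        for word_id, hitlist in forward[doc_id].items():
            inverted[word_id].append((doc_id, hitlist))
    return dict(inverted)


docs = {1: "web search engine", 2: "search the web web"}
lexicon = build_lexicon(docs)
inverted = invert(build_forward_index(docs, lexicon))
```

Given a wordID, the inverted index returns the doclist directly, which is the lookup the searcher needs at query time.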

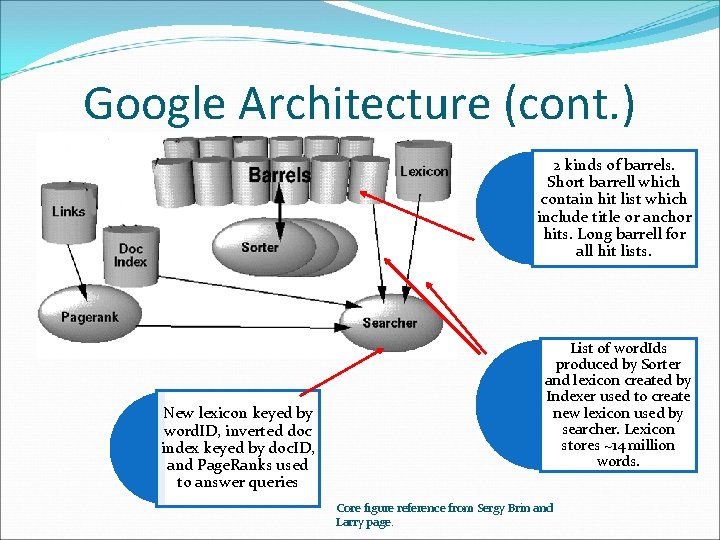

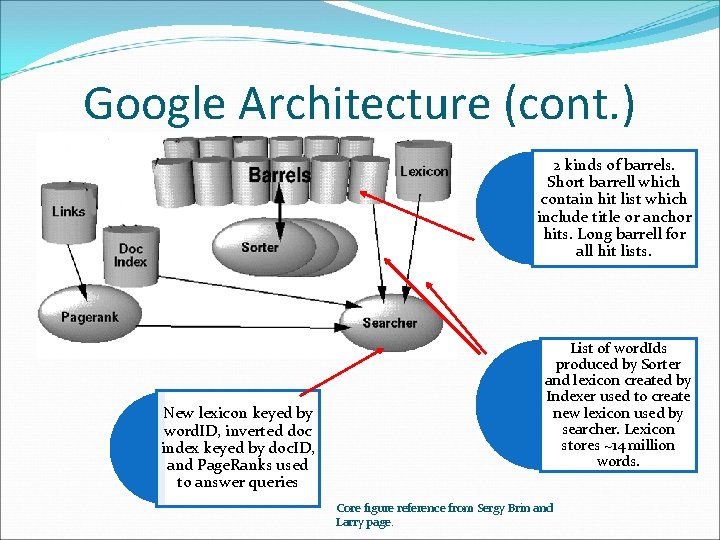

Google Architecture (cont.)
- Two kinds of barrels: short barrels, which contain hit lists that include title or anchor hits, and long barrels for all hit lists.
- The new lexicon keyed by wordID, the inverted doc index keyed by docID, and PageRanks are used to answer queries.
- The list of wordIDs produced by the Sorter and the lexicon created by the Indexer are used to create the new lexicon used by the searcher. The lexicon stores ~14 million words.

Core figure from Sergey Brin and Larry Page.

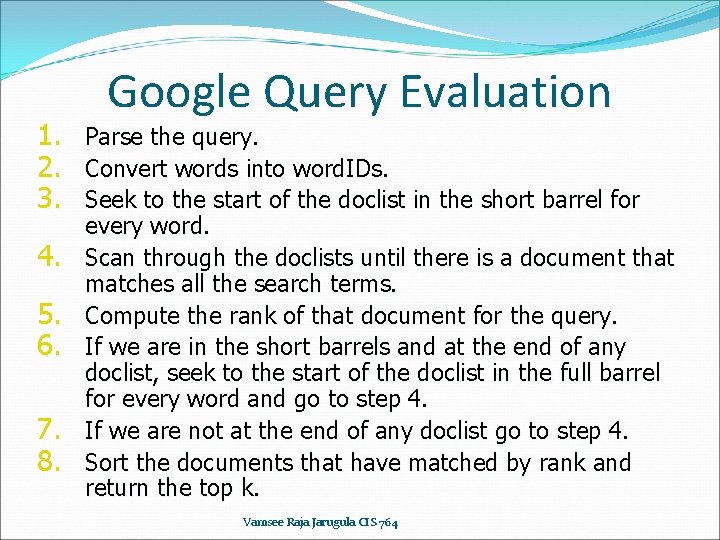

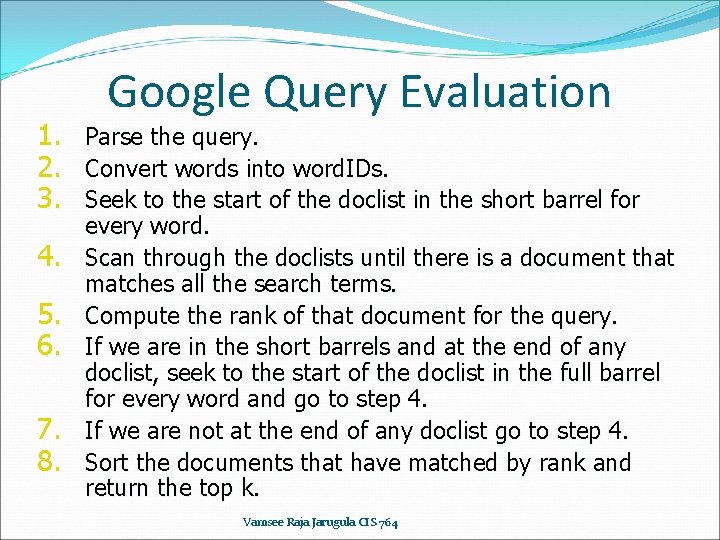

Google Query Evaluation
1. Parse the query.
2. Convert words into wordIDs.
3. Seek to the start of the doclist in the short barrel for every word.
4. Scan through the doclists until there is a document that matches all the search terms.
5. Compute the rank of that document for the query.
6. If we are in the short barrels and at the end of any doclist, seek to the start of the doclist in the full barrel for every word and go to step 4.
7. If we are not at the end of any doclist go to step 4.
8. Sort the documents that have matched by rank and return the top k.
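
The evaluation loop can be approximated as follows. This sketch replaces the sequential doclist scan with a set intersection, models each barrel as a dictionary from wordID to {docID: hitlist}, and takes the ranking function as a parameter; these choices, the names, and the sample data are all assumptions for illustration.

```python
def evaluate_query(query, lexicon, short_barrel, full_barrel, rank, k=10):
    """Return the top-k docIDs that contain every query word."""
    words = query.lower().split()            # step 1: parse the query
    if not words or any(w not in lexicon for w in words):
        return []                            # an unknown word matches nothing
    word_ids = [lexicon[w] for w in words]   # step 2: words -> wordIDs
    scored = {}
    # Steps 3-7: go through the short barrel first, then the full barrel.
    for barrel in (short_barrel, full_barrel):
        doclists = [barrel.get(w, {}) for w in word_ids]
        matching = set(doclists[0]).intersection(*doclists[1:])
        for doc_id in matching:
            if doc_id not in scored:         # keep the first score computed
                scored[doc_id] = rank(doc_id, [dl[doc_id] for dl in doclists])
    # Step 8: sort by rank (ties broken by docID) and keep the best k.
    return sorted(scored, key=lambda doc_id: (-scored[doc_id], doc_id))[:k]


def hit_count(doc_id, hitlists):
    """Toy ranking function: total number of hits across all query words."""
    return sum(len(hits) for hits in hitlists)


lexicon = {"web": 0, "search": 1}
short_barrel = {0: {1: [0]}, 1: {1: [1]}}
full_barrel = {0: {1: [0, 5], 2: [3]}, 1: {1: [1], 2: [0, 4, 7]}}
top = evaluate_query("web search", lexicon, short_barrel, full_barrel, hit_count)
```

Here document 1 is found in the short barrel and document 2 only in the full barrel; the toy ranking function then orders them by hit count.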

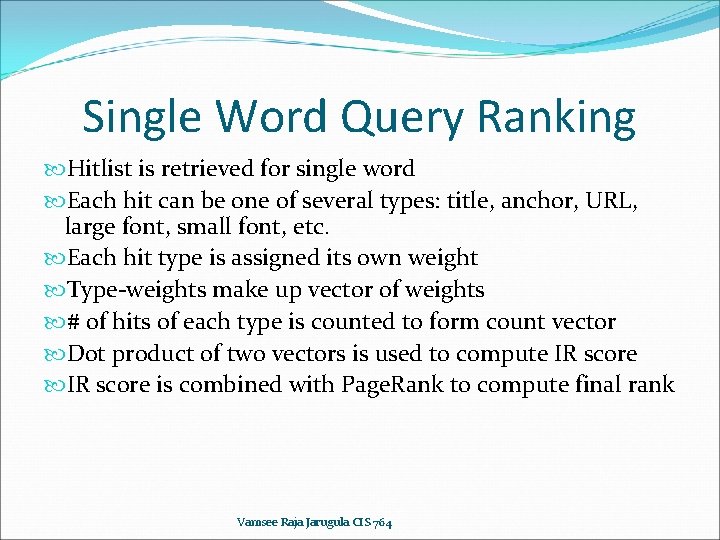

Single Word Query Ranking
- The hitlist is retrieved for the single word.
- Each hit can be one of several types: title, anchor, URL, large font, small font, etc.
- Each hit type is assigned its own weight.
- The type-weights make up a vector of weights.
- The number of hits of each type is counted to form a count vector.
- The dot product of the two vectors is used to compute the IR score.
- The IR score is combined with PageRank to compute the final rank.
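
A small sketch of the dot-product scoring follows. The weight values, the `alpha` mixing parameter, and the weighted-sum combination with PageRank are purely hypothetical; the slides do not say how the two scores are combined.

```python
from collections import Counter

# Hypothetical type-weights; the real values are not given in the slides.
TYPE_WEIGHTS = {
    "title": 8.0,
    "anchor": 6.0,
    "url": 4.0,
    "large_font": 2.0,
    "small_font": 1.0,
}


def ir_score(hit_types):
    """Dot product of the count vector with the type-weight vector."""
    counts = Counter(hit_types)
    return sum(TYPE_WEIGHTS[t] * counts[t] for t in TYPE_WEIGHTS)


def final_rank(hit_types, pagerank, alpha=0.5):
    """Assumed combination: a weighted sum of IR score and PageRank."""
    return alpha * ir_score(hit_types) + (1 - alpha) * pagerank


hits = ["title", "anchor", "anchor", "small_font"]
score = ir_score(hits)
rank = final_rank(hits, pagerank=3.0)
```

For these hits the count vector is (1, 2, 0, 0, 1), so the IR score is 8 + 12 + 1 = 21, and the assumed combination gives a final rank of 12.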

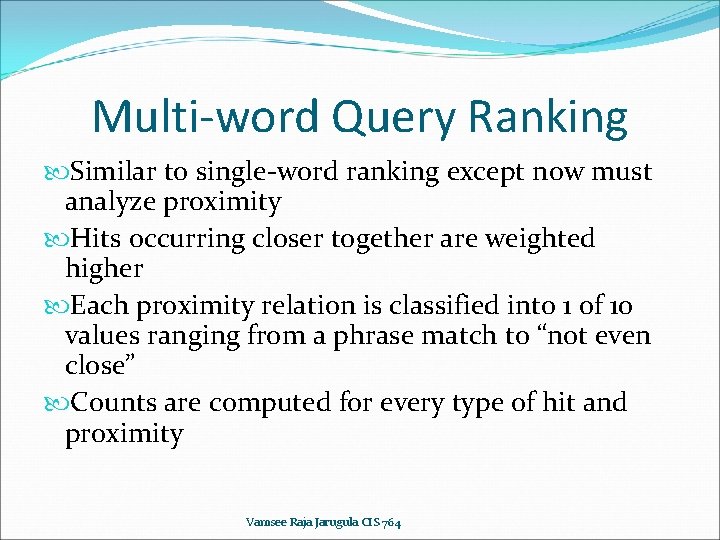

Multi-word Query Ranking
- Similar to single-word ranking, except that proximity must now be analyzed.
- Hits occurring closer together are weighted higher.
- Each proximity relation is classified into 1 of 10 values ranging from a phrase match to “not even close”.
- Counts are computed for every type of hit and proximity.
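
The proximity classification might look like the sketch below. Only the number of classes (10) comes from the slides; the bin edges, the use of the minimum distance between hit positions, and the function names are assumptions, and adjacency is used as a rough stand-in for a phrase match.

```python
from bisect import bisect_left

# Hypothetical bin edges, measured in word positions (9 edges give 10 classes).
PROXIMITY_EDGES = [1, 2, 3, 5, 8, 13, 21, 34, 55]


def min_distance(hits_a, hits_b):
    """Smallest gap between any position of word A and any position of word B."""
    return min(abs(a - b) for a in hits_a for b in hits_b)


def proximity_class(hits_a, hits_b):
    """0 = adjacent words (phrase match) ... 9 = 'not even close'."""
    return bisect_left(PROXIMITY_EDGES, min_distance(hits_a, hits_b))


adjacent = proximity_class([4], [5])
nearby = proximity_class([10, 40], [44])
far_apart = proximity_class([0], [100])
```

Counting how many hit pairs fall into each class, per hit type, would then give the count vectors that the multi-word ranking works from.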

Scalability
- Cluster architecture combined with Moore’s Law makes for high scalability.
- At time of writing:
  - ~24 million documents indexed in one week
  - ~518 million hyperlinks indexed
  - Four crawlers collected 100 documents/sec

Summary of Key Optimization Techniques
- Each crawler maintains its own DNS lookup cache.
- Flex is used to generate a lexical analyzer with its own stack for parsing documents.
- Parallelization of the indexing phase.
- In-memory lexicon.
- Compression of the repository.
- Compact encoding of hitlists, accounting for major space savings.
- The indexer is optimized to be just faster than the crawler, so that crawling is the bottleneck.
- The document index is updated in bulk.
- Critical data structures are placed on local disk.
- The overall architecture is designed to avoid disk seeks wherever possible.

References:
- http://video.google.com/videoplay?docid=1400721382961784115
- http://google.stanford.edu
- http://en.wikipedia.org/wiki/List_of_search_engines
- The Anatomy of a Large-Scale Hypertextual Web Search Engine, Sergey Brin and Lawrence Page (pdf).

The audio presentation of my lecture will be posted on my home page and will be given to Dr. Hankley.

www.cis.ksu.edu/~vamsee

Presentation topics for students in hindi

Presentation topics for students in hindi Power point presentation design west vancouver

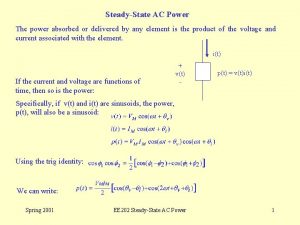

Power point presentation design west vancouver Real power and reactive power

Real power and reactive power Powerbi in powerpoint

Powerbi in powerpoint Point point power

Point point power Traub's area surface anatomy

Traub's area surface anatomy Mentovertical diameter

Mentovertical diameter Leopold maneuver

Leopold maneuver Hand and power tool safety ppt

Hand and power tool safety ppt Point of view presentation

Point of view presentation The starting point in a presentation

The starting point in a presentation Solar power satellites and microwave power transmission

Solar power satellites and microwave power transmission Potential power

Potential power Flex28024a

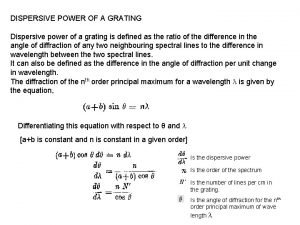

Flex28024a Dispersive power of grating value

Dispersive power of grating value Power of a power property

Power of a power property General power rule

General power rule Power angle curve in power system stability

Power angle curve in power system stability Power delivered vs power absorbed

Power delivered vs power absorbed Evangelio del domingo en power point

Evangelio del domingo en power point Aplausos sonido

Aplausos sonido Powerpoint boutique

Powerpoint boutique