Performance Measurement Community Literacy March 19 2007 Harry

- Slides: 18

Performance Measurement: Community Literacy

March 19, 2007

Harry P. Hatry, The Urban Institute, Washington DC

Key Distinctions

- Performance Measurement vs. Program Evaluation
- Performance Measurement vs. Performance Management

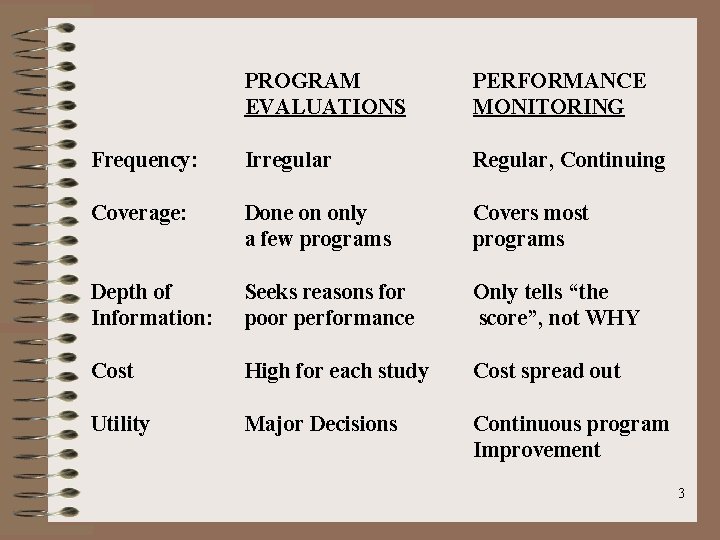

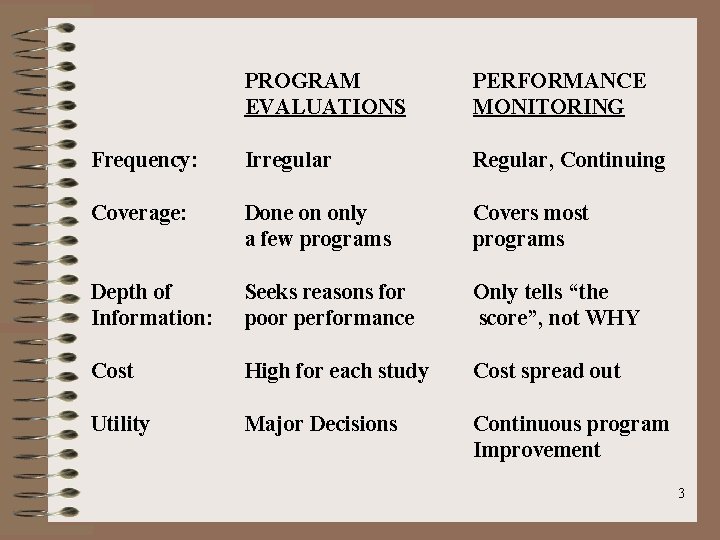

| | PROGRAM EVALUATIONS | PERFORMANCE MONITORING |
|---|---|---|
| Frequency | Irregular | Regular, continuing |
| Coverage | Done on only a few programs | Covers most programs |
| Depth of information | Seeks reasons for poor performance | Only tells “the score”, not WHY |
| Cost | High for each study | Cost spread out |
| Utility | Major decisions | Continuous program improvement |

Performance Measurement Information, Plus Use of That Information to Improve Services, Produces Performance Management

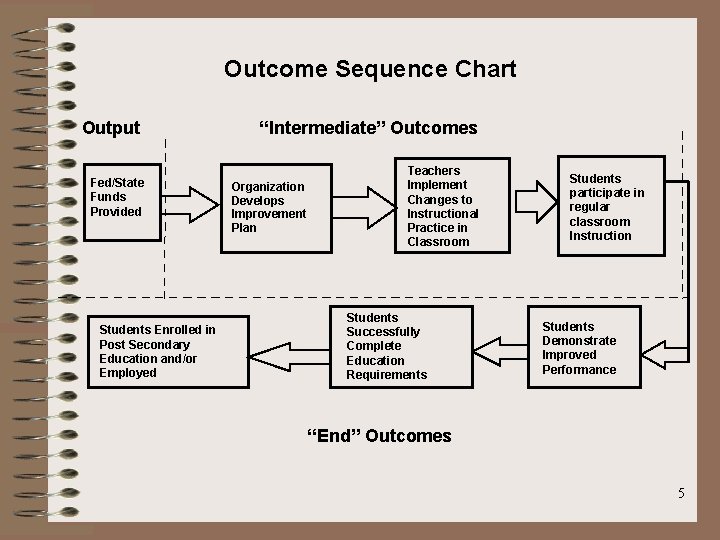

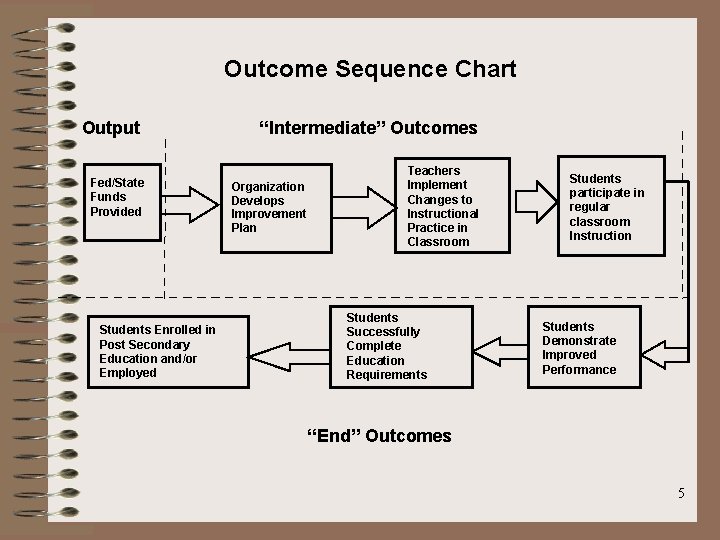

Outcome Sequence Chart

Stage labels: Output, “Intermediate” Outcomes, “End” Outcomes

Chart boxes:

- Fed/State Funds Provided (Output)
- Students Enrolled in Post Secondary Education and/or Employed
- Organization Develops Improvement Plan
- Teachers Implement Changes to Instructional Practice in Classroom
- Students Successfully Complete Education Requirements
- Students Participate in Regular Classroom Instruction
- Students Demonstrate Improved Performance

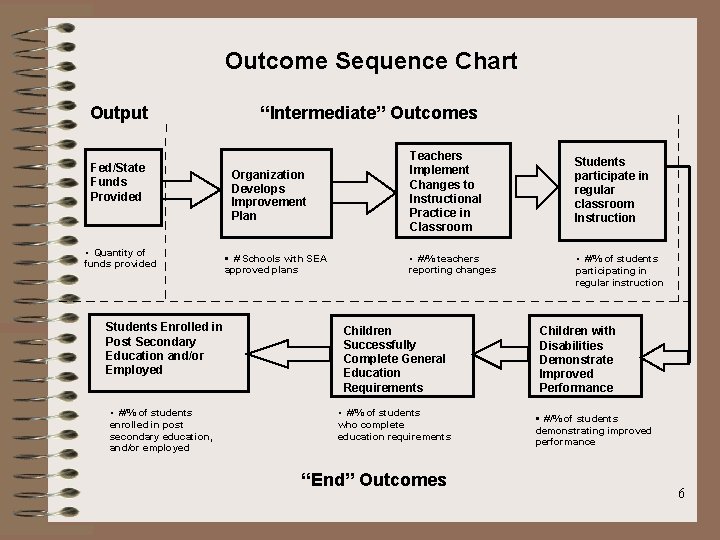

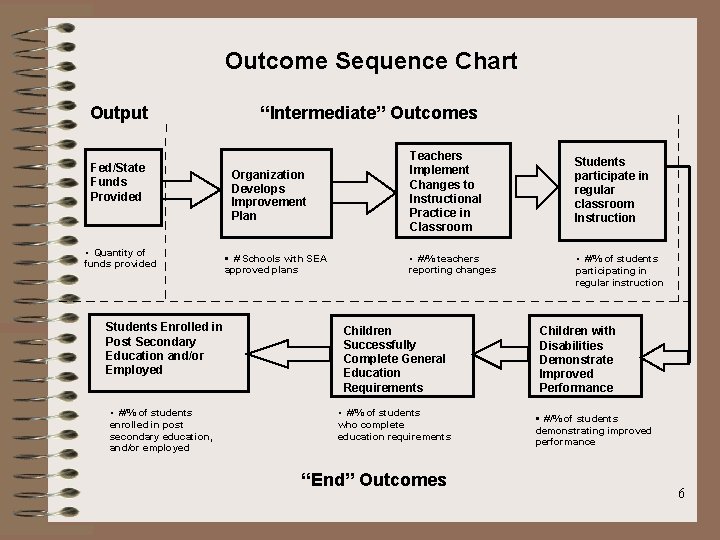

Outcome Sequence Chart, with Indicators

Stage labels: Output, “Intermediate” Outcomes, “End” Outcomes

| Chart box | Indicator |
|---|---|
| Fed/State Funds Provided (Output) | Quantity of funds provided |
| Students Enrolled in Post Secondary Education and/or Employed | #/% of students enrolled in post secondary education, and/or employed |
| Organization Develops Improvement Plan | # of schools with SEA-approved plans |
| Teachers Implement Changes to Instructional Practice in Classroom | #/% of teachers reporting changes |
| Children Successfully Complete General Education Requirements | #/% of students who complete education requirements |
| Students Participate in Regular Classroom Instruction | #/% of students participating in regular instruction |
| Children with Disabilities Demonstrate Improved Performance | #/% of students demonstrating improved performance |

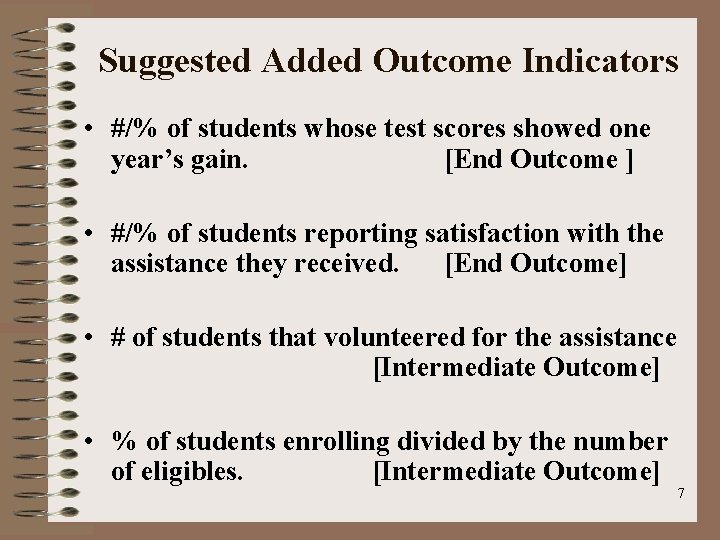

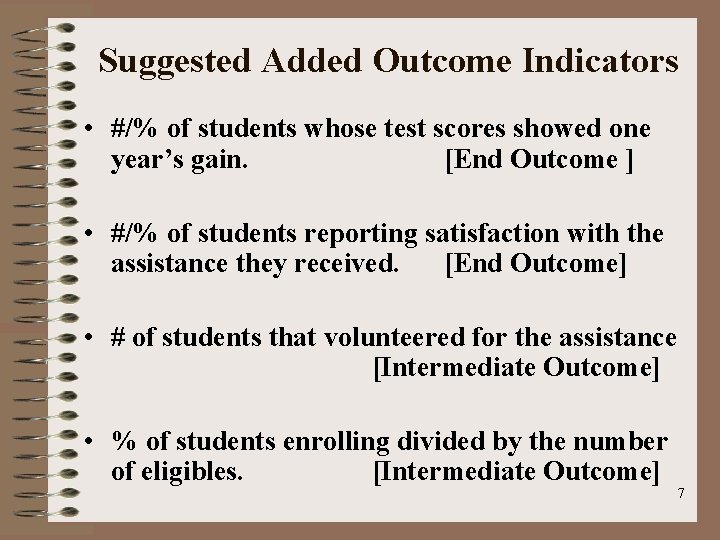

Suggested Added Outcome Indicators

- #/% of students whose test scores showed one year’s gain. [End Outcome]
- #/% of students reporting satisfaction with the assistance they received. [End Outcome]
- # of students who volunteered for the assistance. [Intermediate Outcome]
- % of eligible students who enrolled (number of students enrolling divided by the number of eligibles). [Intermediate Outcome]
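The "#/%" indicators above pair a raw count with a percentage of some base population. A minimal sketch of that arithmetic follows; the helper name `percent_indicator` and all counts are hypothetical and chosen only for illustration, not taken from the presentation.

```python
def percent_indicator(numerator: int, denominator: int) -> float:
    """Return numerator as a percentage of denominator, rounded to one decimal."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return round(100 * numerator / denominator, 1)


# Hypothetical counts, for illustration only.
eligible_students = 400
enrolled_students = 120
students_with_one_year_gain = 45

# Intermediate outcome: share of eligible students who enrolled.
enrollment_rate = percent_indicator(enrolled_students, eligible_students)

# End outcome: share of enrolled students whose scores showed one year's gain.
gain_rate = percent_indicator(students_with_one_year_gain, enrolled_students)

print(f"Enrolled: {enrolled_students} ({enrollment_rate}% of eligibles)")
print(f"One year's gain: {students_with_one_year_gain} ({gain_rate}% of enrolled)")
```

Reporting the count and the percentage together keeps the base visible, which matters when the number of eligibles changes from year to year.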

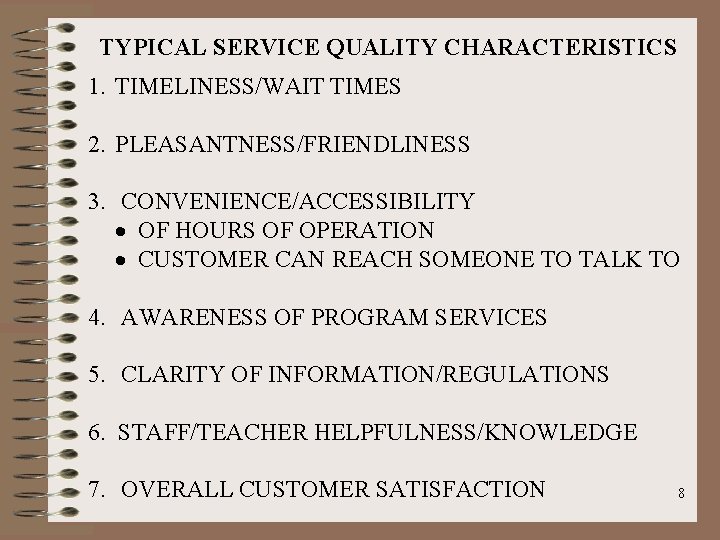

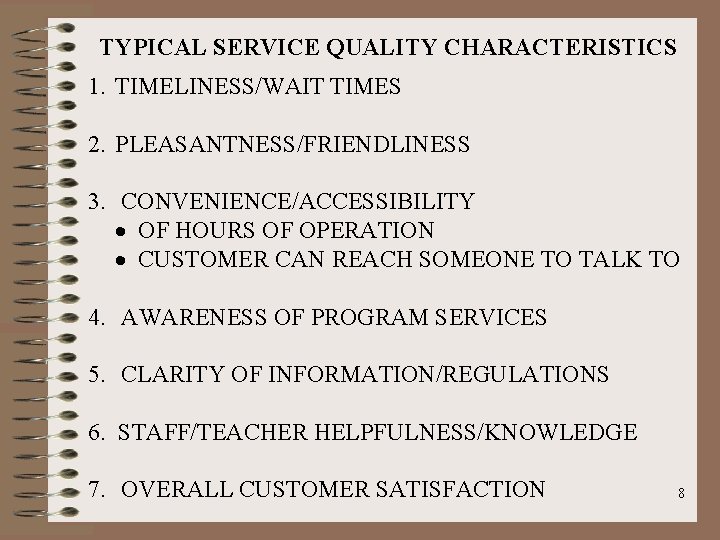

TYPICAL SERVICE QUALITY CHARACTERISTICS

1. TIMELINESS/WAIT TIMES
2. PLEASANTNESS/FRIENDLINESS
3. CONVENIENCE/ACCESSIBILITY
   - OF HOURS OF OPERATION
   - CUSTOMER CAN REACH SOMEONE TO TALK TO
4. AWARENESS OF PROGRAM SERVICES
5. CLARITY OF INFORMATION/REGULATIONS
6. STAFF/TEACHER HELPFULNESS/KNOWLEDGE
7. OVERALL CUSTOMER SATISFACTION

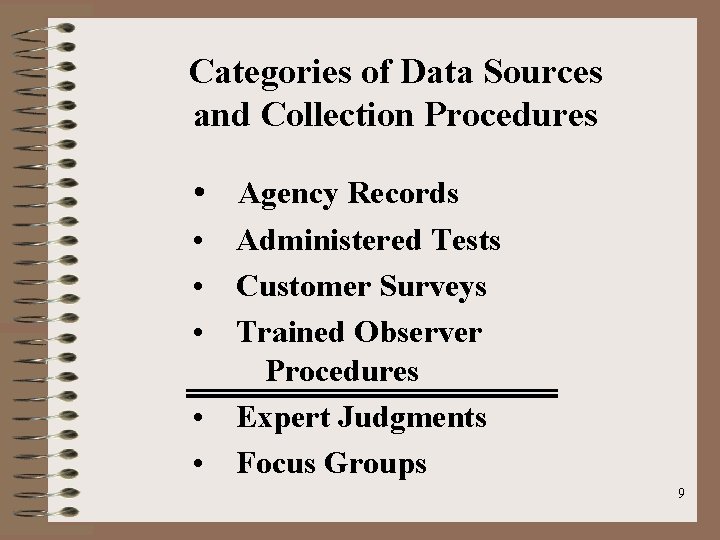

Categories of Data Sources and Collection Procedures

- Agency Records
- Administered Tests
- Customer Surveys
- Trained Observer Procedures
- Expert Judgments
- Focus Groups

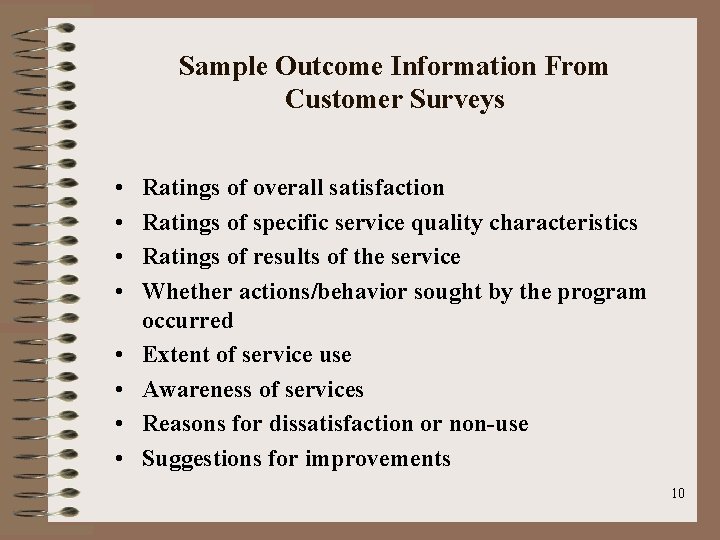

Sample Outcome Information From Customer Surveys

- Ratings of overall satisfaction
- Ratings of specific service quality characteristics
- Ratings of results of the service
- Whether actions/behavior sought by the program occurred
- Extent of service use
- Awareness of services
- Reasons for dissatisfaction or non-use
- Suggestions for improvements

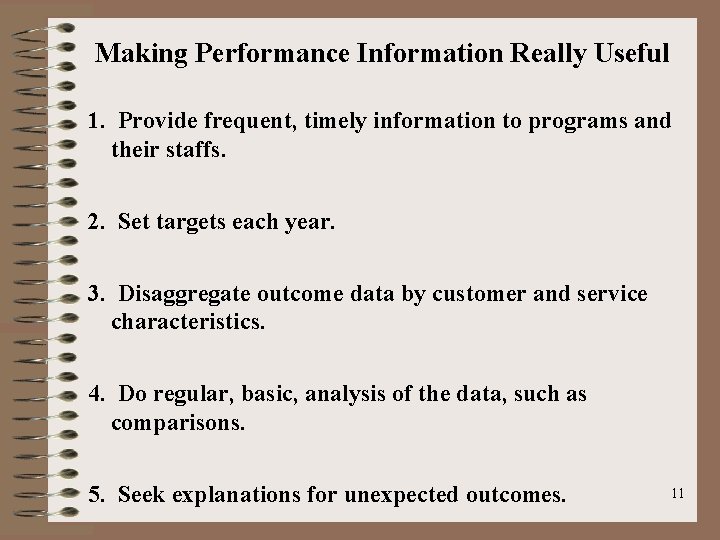

Making Performance Information Really Useful

1. Provide frequent, timely information to programs and their staffs.
2. Set targets each year.
3. Disaggregate outcome data by customer and service characteristics.
4. Do regular, basic analysis of the data, such as comparisons.
5. Seek explanations for unexpected outcomes.

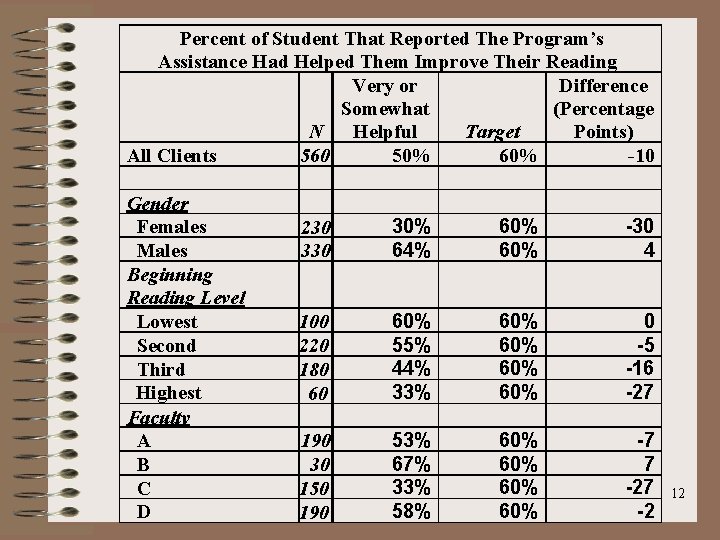

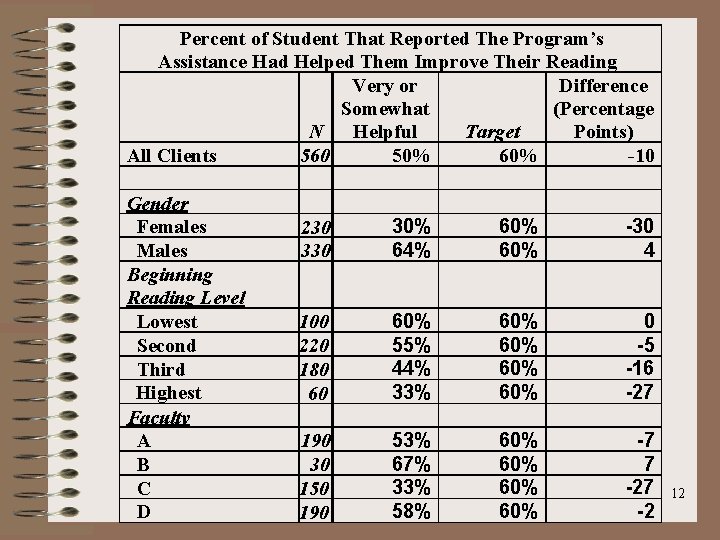

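Percent of Students Who Reported the Program’s Assistance Had Helped Them Improve Their Reading

| | N | Very or Somewhat Helpful | Target | Difference (Percentage Points) |
|---|---|---|---|---|
| All Clients | 560 | 50% | 60% | -10 |
| Gender | | | | |
| Females | 230 | 30% | 60% | -30 |
| Males | | 64% | 60% | 4 |
| Beginning Reading Level | | | | |
| Lowest | 100 | 60% | 60% | 0 |
| Second | 220 | 55% | 60% | -5 |
| Third | 180 | 44% | 60% | -16 |
| Highest | 60 | 33% | 60% | -27 |
| Faculty | | | | |
| A | 190 | 53% | 60% | -7 |
| B | 30 | 67% | 60% | 7 |
| C | 150 | 33% | 60% | -27 |
| D | 190 | 58% | 60% | -2 |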
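The "Difference (Percentage Points)" column is each group's percentage minus the 60% target. The sketch below reproduces that arithmetic for the beginning-reading-level rows, using the figures from the slide; the variable and function names are illustrative assumptions, and the weighted average is included only as a consistency check against the "All Clients" row.

```python
TARGET = 60  # percent, the target shown on the slide

# (group, N, percent reporting "very or somewhat helpful"), from the slide
reading_level_rows = [
    ("Lowest", 100, 60),
    ("Second", 220, 55),
    ("Third", 180, 44),
    ("Highest", 60, 33),
]


def difference_from_target(percent: int, target: int = TARGET) -> int:
    """Difference in percentage points between actual and target."""
    return percent - target


differences = {group: difference_from_target(pct) for group, _, pct in reading_level_rows}
below_target = [group for group, diff in differences.items() if diff < 0]

# Weighted average across groups, to check against the "All Clients" row.
total_n = sum(n for _, n, _ in reading_level_rows)
overall = round(sum(n * pct for _, n, pct in reading_level_rows) / total_n)

for group, diff in differences.items():
    print(f"{group}: {diff:+d} points vs. target")
print(f"Overall: {overall}% of {total_n} clients")
```

Breaking the single overall figure out this way shows that the shortfall is concentrated among students who started at the higher reading levels, which is the kind of lead that disaggregation is meant to surface.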

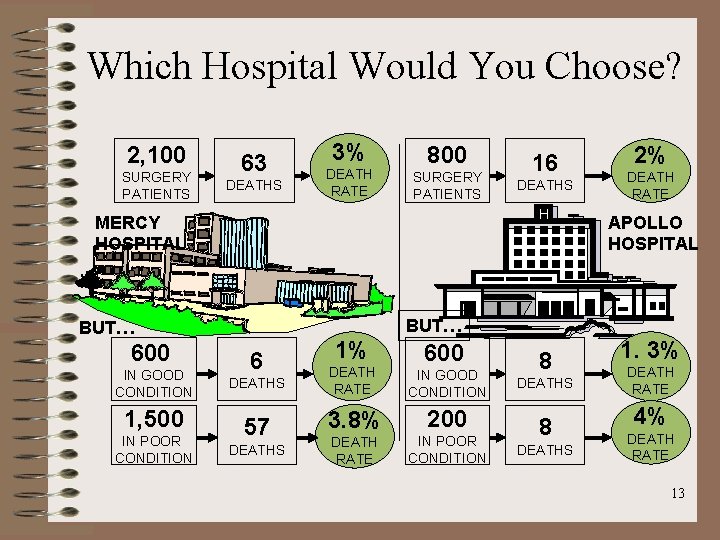

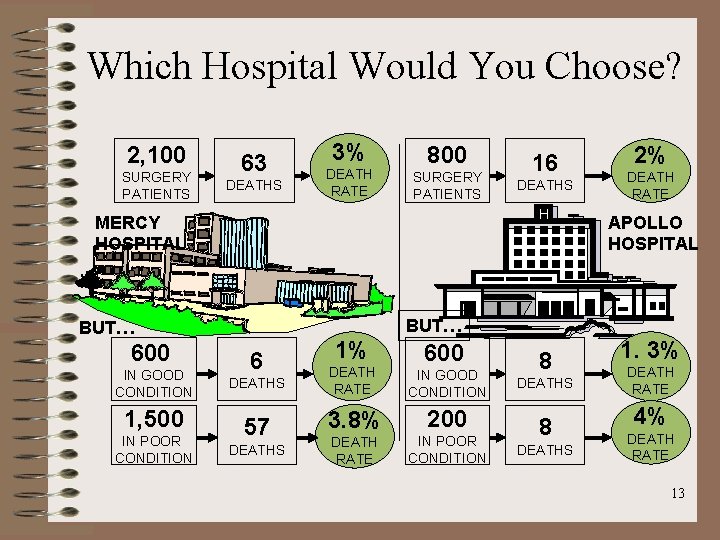

Which Hospital Would You Choose?

| | MERCY HOSPITAL | APOLLO HOSPITAL |
|---|---|---|
| Surgery patients | 2,100 | 800 |
| Deaths | 63 | 16 |
| Death rate | 3% | 2% |

BUT…

| Patient condition | MERCY HOSPITAL | APOLLO HOSPITAL |
|---|---|---|
| In good condition | 600 patients, 6 deaths, 1% death rate | 600 patients, 8 deaths, 1.3% death rate |
| In poor condition | 1,500 patients, 57 deaths, 3.8% death rate | 200 patients, 8 deaths, 4% death rate |
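The hospital comparison can be checked with a few lines of arithmetic. The sketch below recomputes the overall and by-condition death rates from the patient and death counts on the slide. The data layout and names are illustrative assumptions, and the count of 8 deaths among Apollo's poor-condition patients is read as the remainder of Apollo's 16 total deaths, which matches the 4% rate shown.

```python
# (patients, deaths) by patient condition, from the slide
hospitals = {
    "Mercy": {"good": (600, 6), "poor": (1500, 57)},
    "Apollo": {"good": (600, 8), "poor": (200, 8)},
}


def death_rate(patients: int, deaths: int) -> float:
    """Deaths as a percentage of patients, rounded to one decimal."""
    return round(100 * deaths / patients, 1)


overall = {}
by_condition = {}
for name, groups in hospitals.items():
    total_patients = sum(patients for patients, _ in groups.values())
    total_deaths = sum(deaths for _, deaths in groups.values())
    overall[name] = death_rate(total_patients, total_deaths)
    by_condition[name] = {
        condition: death_rate(patients, deaths)
        for condition, (patients, deaths) in groups.items()
    }

print("Overall:", overall)
print("By condition:", by_condition)
```

Apollo has the lower overall rate, yet Mercy has the lower rate within each patient-condition group, because Mercy treats far more patients in poor condition. Aggregate outcome data can mislead when client mix differs across providers.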

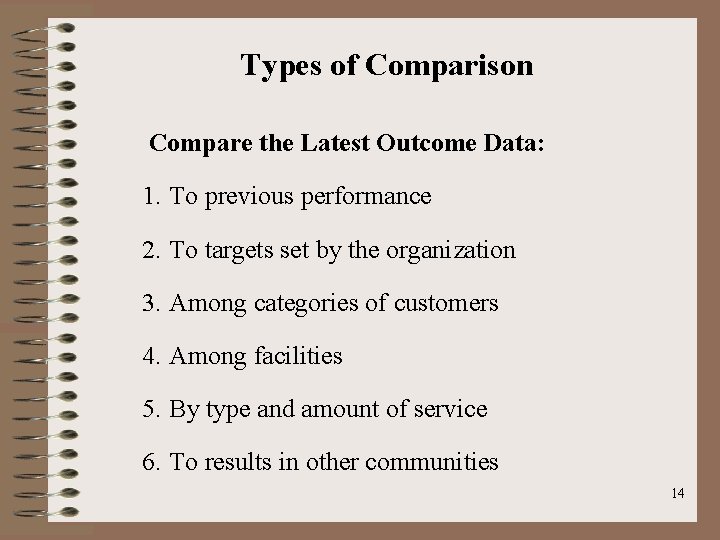

Types of Comparison

Compare the Latest Outcome Data:

1. To previous performance
2. To targets set by the organization
3. Among categories of customers
4. Among facilities
5. By type and amount of service
6. To results in other communities

Making Performance Information Really Useful (Continued)

6. Hold “How Are We Doing?” sessions after each performance report.
7. Prepare “Service Improvement Action Plans” for areas with low performance.
8. Provide recognition rewards.
9. Identify successful practices.


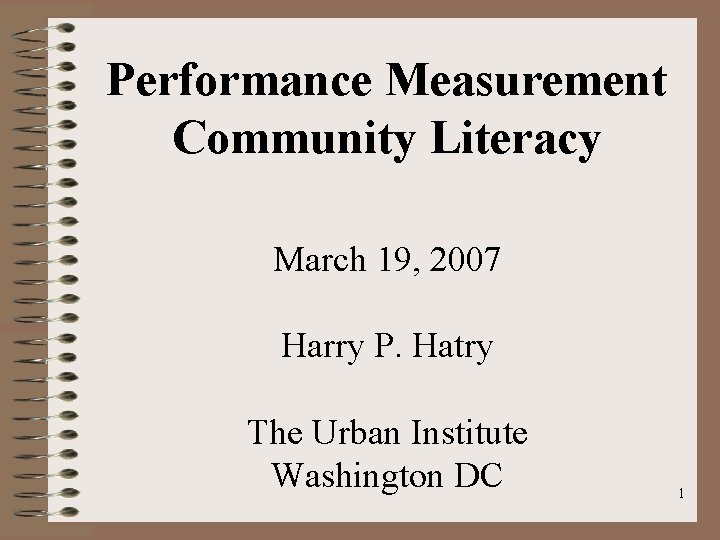

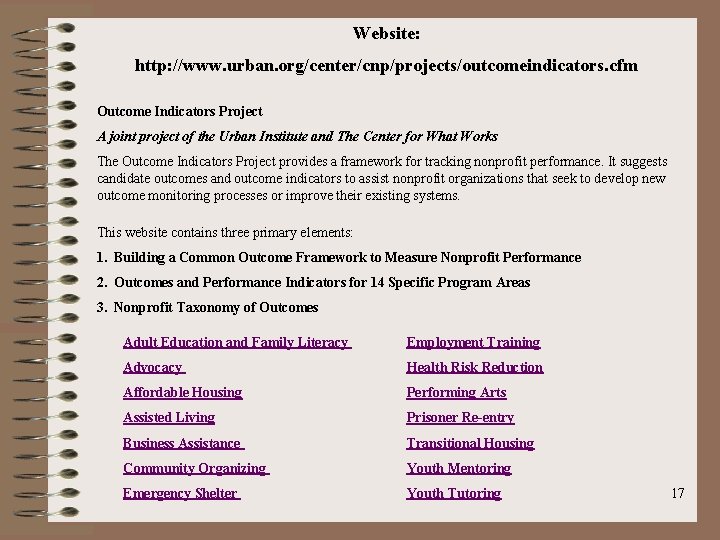

Outcome Indicators Project

A joint project of the Urban Institute and The Center for What Works

Website: http://www.urban.org/center/cnp/projects/outcomeindicators.cfm

The Outcome Indicators Project provides a framework for tracking nonprofit performance. It suggests candidate outcomes and outcome indicators to assist nonprofit organizations that seek to develop new outcome monitoring processes or improve their existing systems.

This website contains three primary elements:

1. Building a Common Outcome Framework to Measure Nonprofit Performance
2. Outcomes and Performance Indicators for 14 Specific Program Areas
3. Nonprofit Taxonomy of Outcomes

Program areas:

- Adult Education and Family Literacy
- Advocacy
- Affordable Housing
- Assisted Living
- Business Assistance
- Community Organizing
- Emergency Shelter
- Employment Training
- Health Risk Reduction
- Performing Arts
- Prisoner Re-entry
- Transitional Housing
- Youth Mentoring
- Youth Tutoring

Crocodiles May Get You, But in the End It Should Be Very Worthwhile for Student Literacy