OpenCL: Open Computing Language



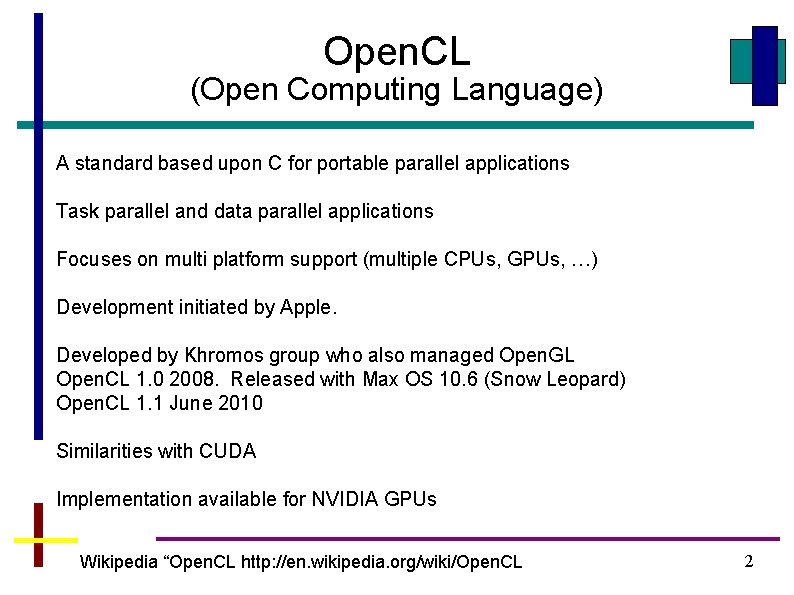

OpenCL (Open Computing Language)

- A standard based upon C for portable parallel applications
- Supports task-parallel and data-parallel applications
- Focuses on multi-platform support (multiple CPUs, GPUs, …)
- Development initiated by Apple; developed by the Khronos Group, which also manages OpenGL
- OpenCL 1.0: 2008; released with Mac OS X 10.6 (Snow Leopard)
- OpenCL 1.1: June 2010
- Has similarities with CUDA
- Implementation available for NVIDIA GPUs

Wikipedia, “OpenCL”, http://en.wikipedia.org/wiki/OpenCL

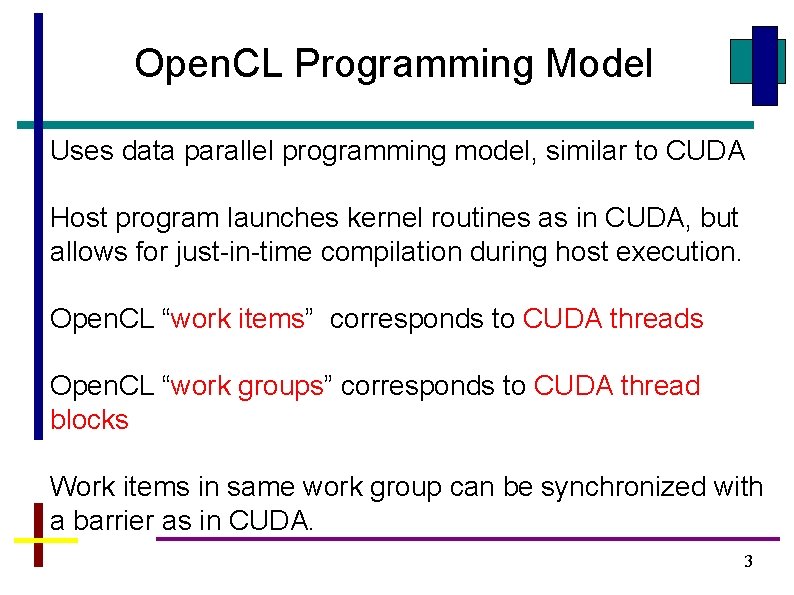

OpenCL Programming Model

- Uses a data-parallel programming model, similar to CUDA
- The host program launches kernel routines as in CUDA, but OpenCL also allows just-in-time compilation of kernels during host execution
- OpenCL “work items” correspond to CUDA threads
- OpenCL “work groups” correspond to CUDA thread blocks
- Work items in the same work group can be synchronized with a barrier, as in CUDA
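
The correspondence between work items and CUDA threads can be made concrete with a small CPU-side sketch. It only illustrates the index arithmetic in one dimension and assumes no global offset. The function names `global_id_1d` and `num_groups_1d` are hypothetical helpers, not part of the OpenCL API.

```c
#include <stddef.h>

/* CPU-side illustration only (hypothetical helper, not an OpenCL call):
   global ID of a work item from its work-group ID and local ID,
   one dimension, no global offset. This mirrors the CUDA expression
   blockIdx.x * blockDim.x + threadIdx.x. */
size_t global_id_1d(size_t group_id, size_t local_id, size_t local_size)
{
    return group_id * local_size + local_id;
}

/* Number of work groups covering global_size work items.
   Assumes global_size is a multiple of local_size. */
size_t num_groups_1d(size_t global_size, size_t local_size)
{
    return global_size / local_size;
}
```

For example, with 256 work items per work group, the work item with local ID 5 in work group 2 has global ID 517.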

Sample OpenCL code to add two vectors

To illustrate OpenCL commands, we will use OpenCL code to add two vectors, A and B, which are transferred to the device (GPU). The result, C, is returned to the host (CPU), similar to the CUDA vector addition example.

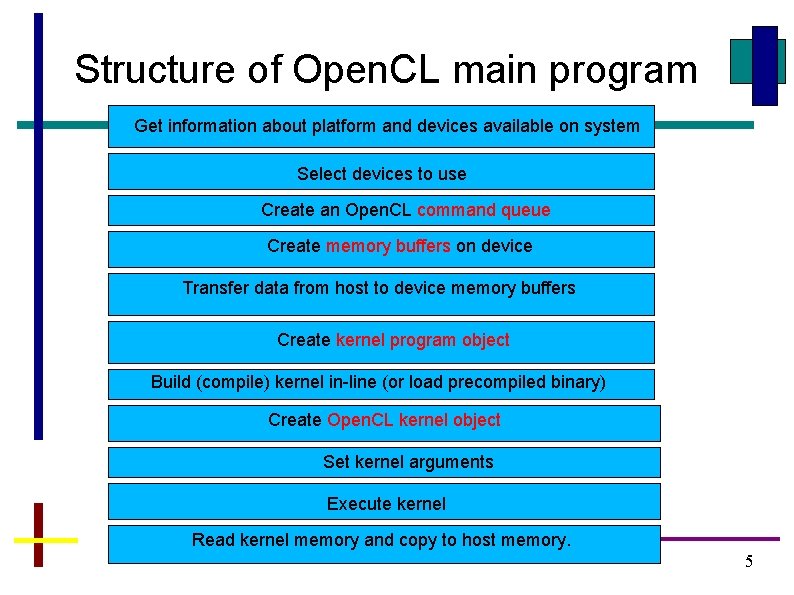

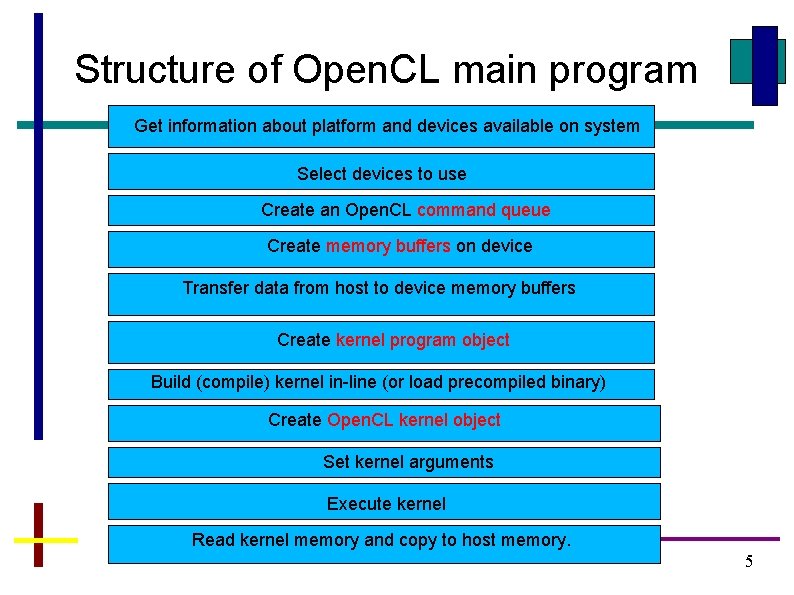

Structure of OpenCL main program

- Get information about platform and devices available on system
- Select devices to use
- Create an OpenCL command queue
- Create memory buffers on device
- Transfer data from host to device memory buffers
- Create kernel program object
- Build (compile) kernel in-line (or load precompiled binary)
- Create OpenCL kernel object
- Set kernel arguments
- Execute kernel
- Read kernel memory and copy to host memory

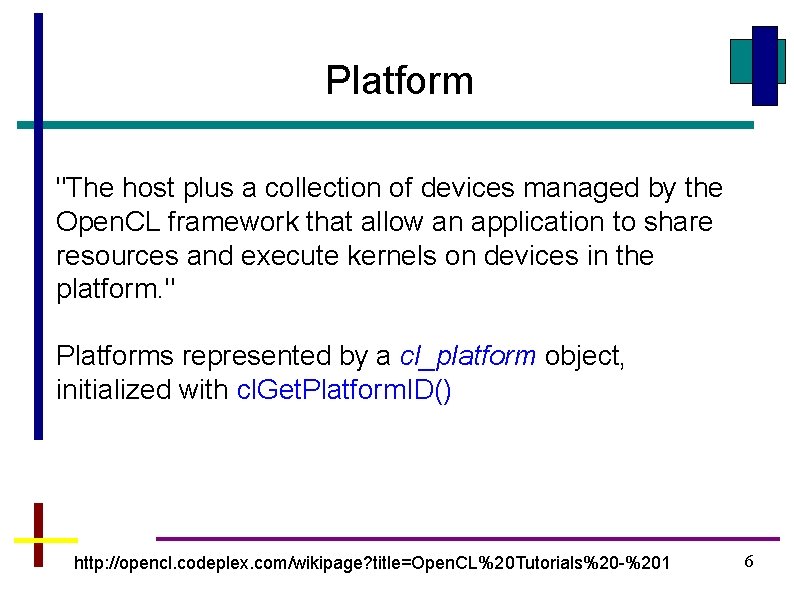

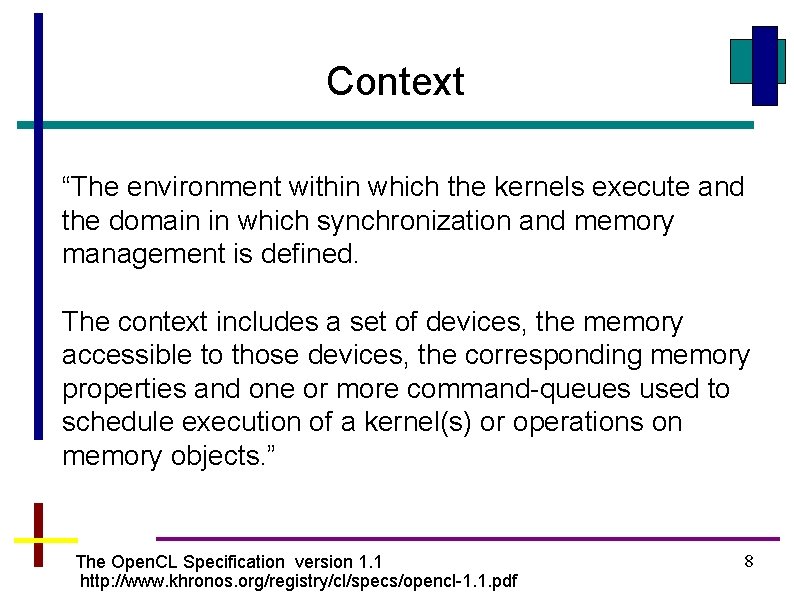

Platform

"The host plus a collection of devices managed by the OpenCL framework that allow an application to share resources and execute kernels on devices in the platform."

Platforms are represented by a cl_platform_id object, initialized with clGetPlatformIDs().

http://opencl.codeplex.com/wikipage?title=OpenCL%20Tutorials%20-%201

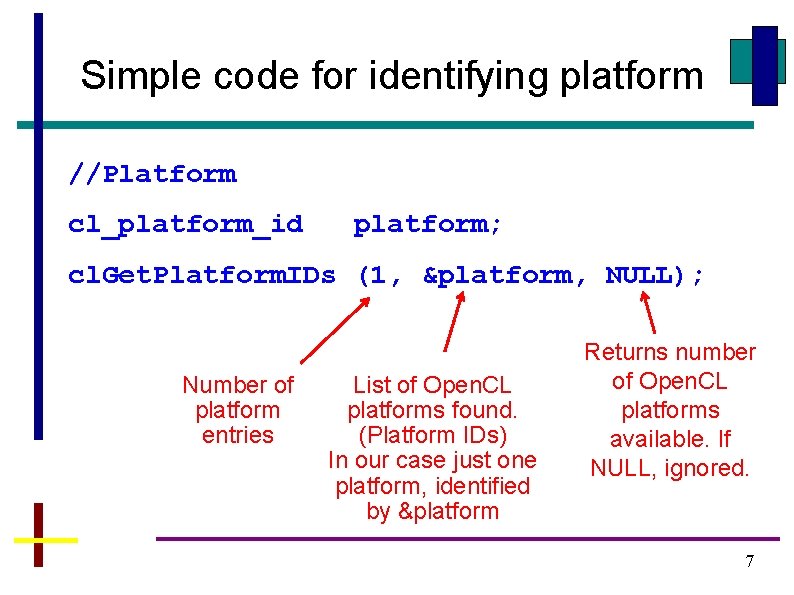

Simple code for identifying platform

    //Platform
    cl_platform_id platform;
    clGetPlatformIDs(1, &platform, NULL);

- First argument (1): number of platform entries
- Second argument (&platform): list of OpenCL platforms found (platform IDs); in our case just one platform, identified by &platform
- Third argument (NULL): returns the number of OpenCL platforms available; if NULL, ignored

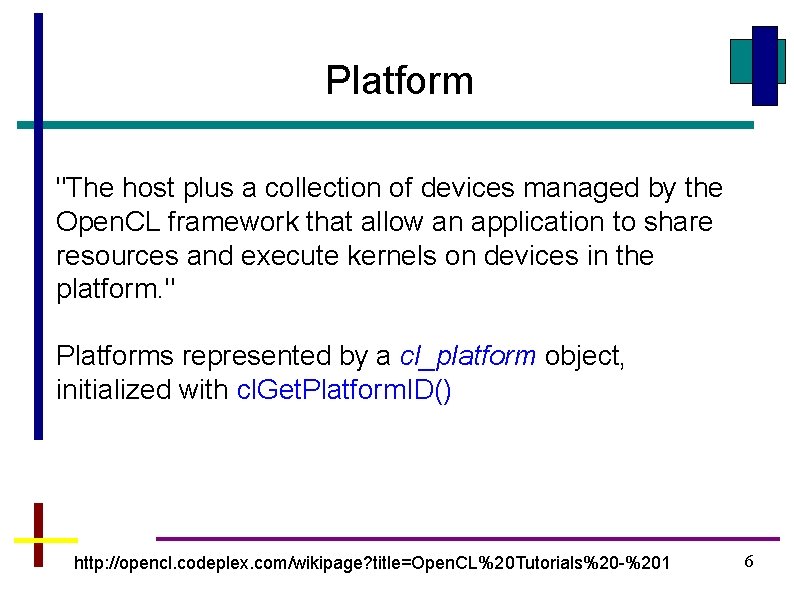

Context

“The environment within which the kernels execute and the domain in which synchronization and memory management is defined. The context includes a set of devices, the memory accessible to those devices, the corresponding memory properties and one or more command-queues used to schedule execution of a kernel(s) or operations on memory objects.”

The OpenCL Specification version 1.1, http://www.khronos.org/registry/cl/specs/opencl-1.1.pdf


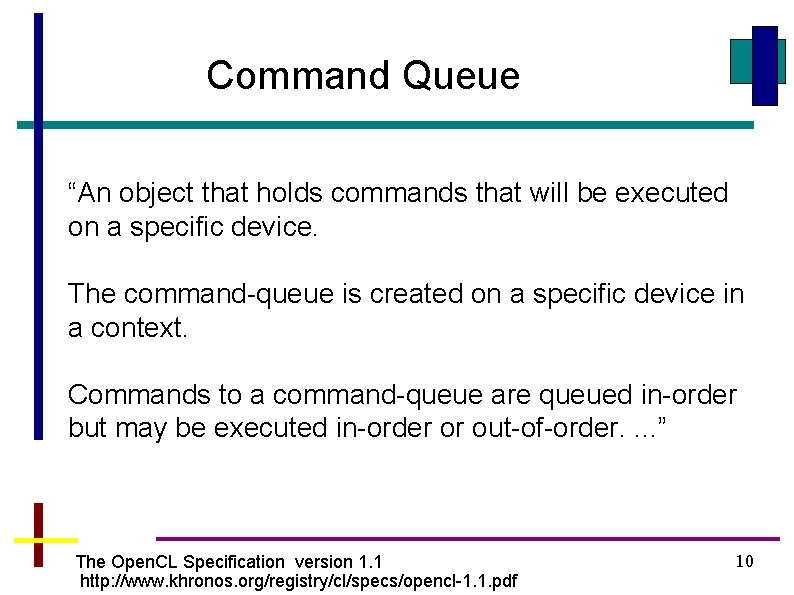

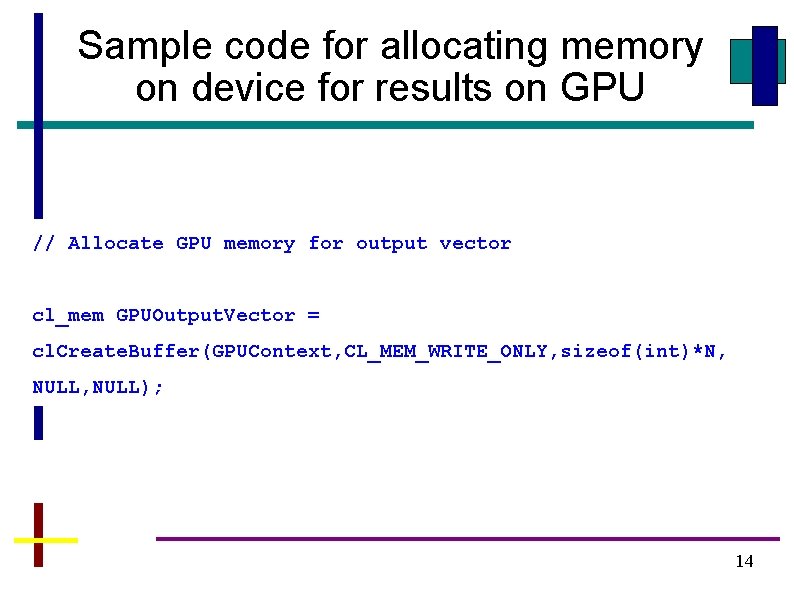

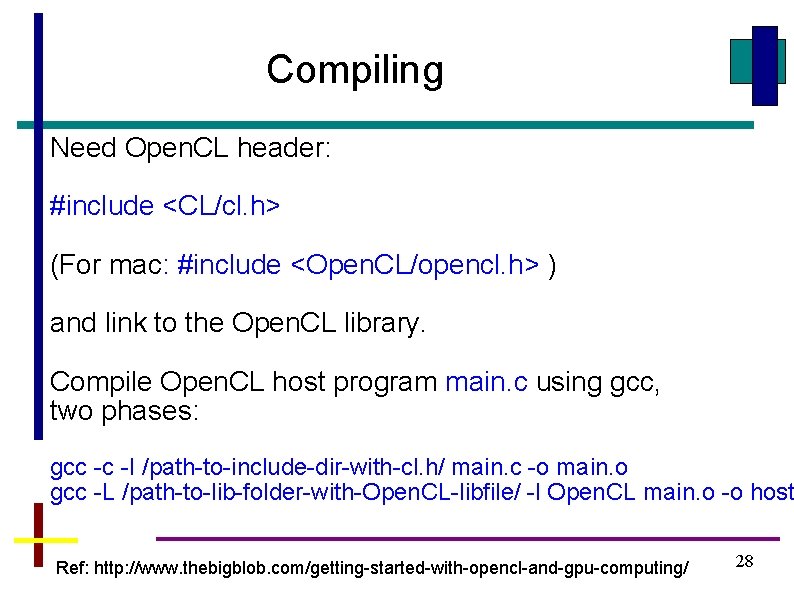

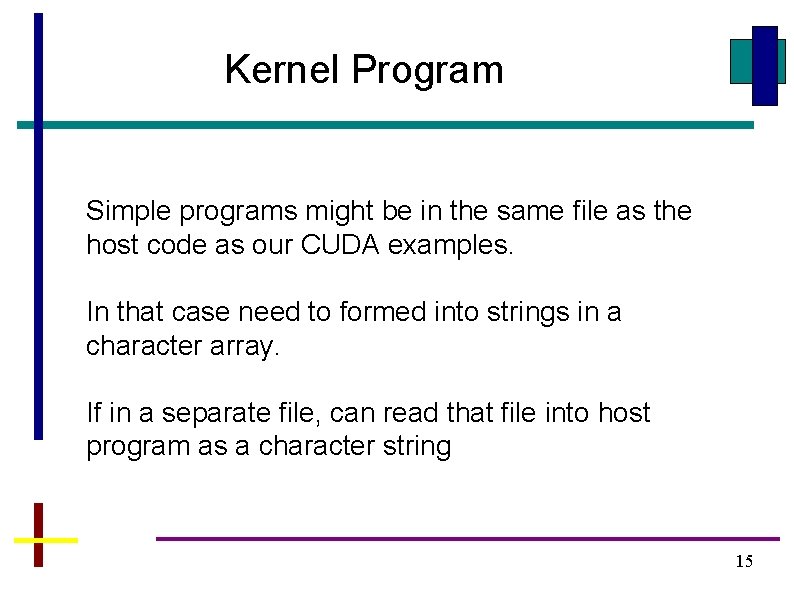

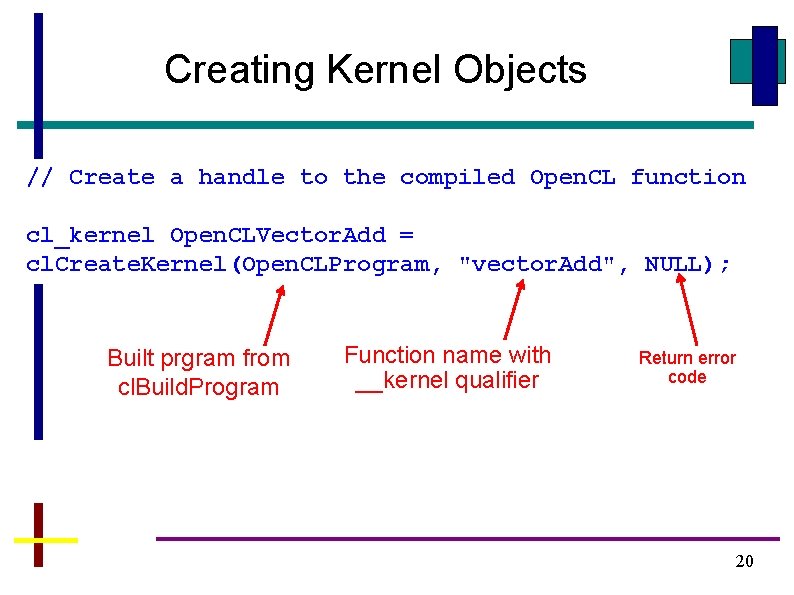

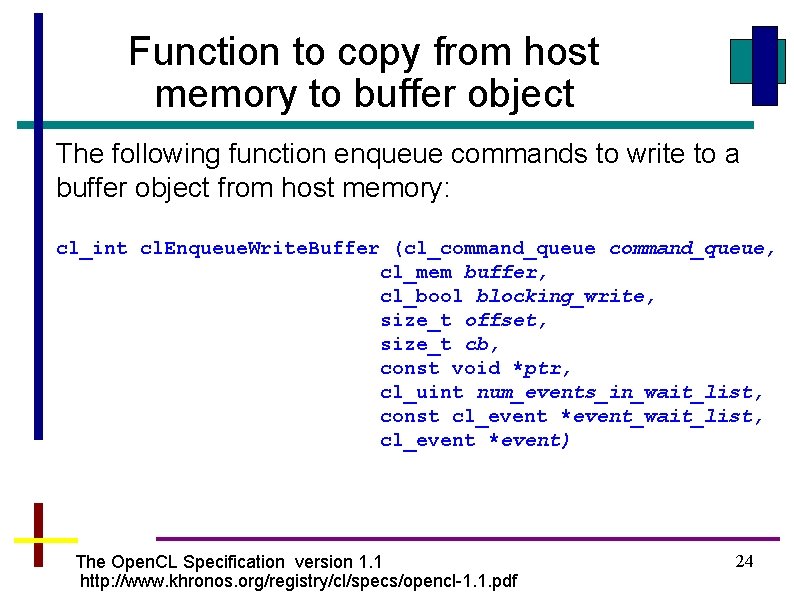

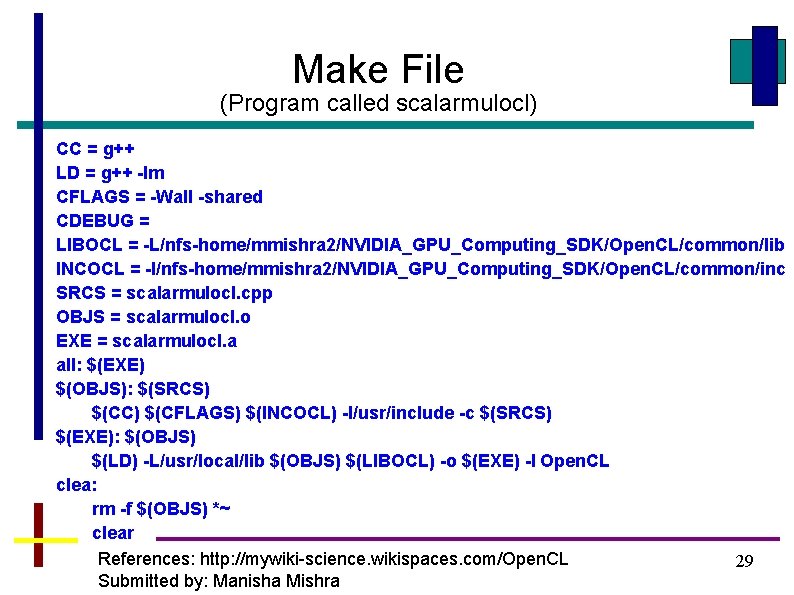

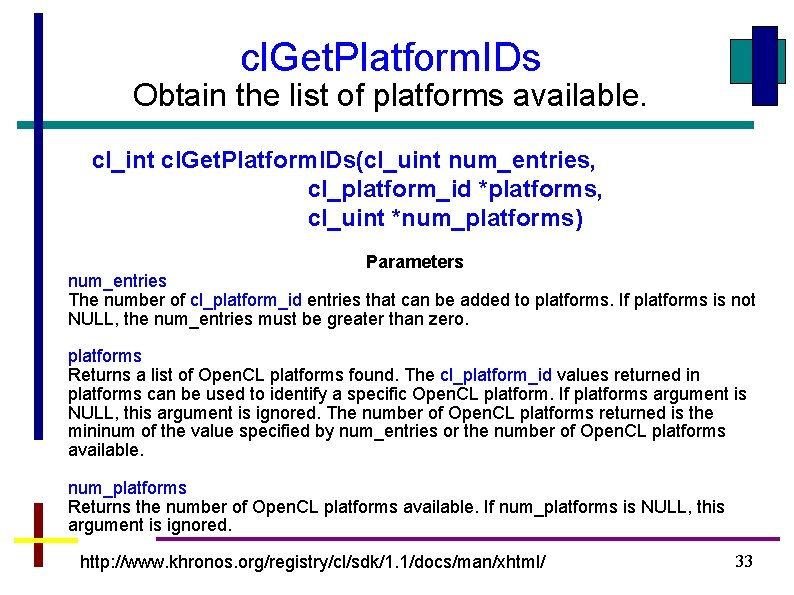

Code for context

    //Context
    cl_context_properties props[3];
    props[0] = (cl_context_properties) CL_CONTEXT_PLATFORM;
    props[1] = (cl_context_properties) platform;
    props[2] = (cl_context_properties) 0;
    // Arguments: properties, device type, notification callback, callback data, error code
    cl_context GPUContext = clCreateContextFromType(props, CL_DEVICE_TYPE_GPU, NULL, NULL, NULL);

    //Context info
    size_t ParmDataBytes;
    // First call returns the size in bytes of the device list
    clGetContextInfo(GPUContext, CL_CONTEXT_DEVICES, 0, NULL, &ParmDataBytes);
    cl_device_id* GPUDevices = (cl_device_id*)malloc(ParmDataBytes);
    // Second call fills in the device list
    clGetContextInfo(GPUContext, CL_CONTEXT_DEVICES, ParmDataBytes, GPUDevices, NULL);

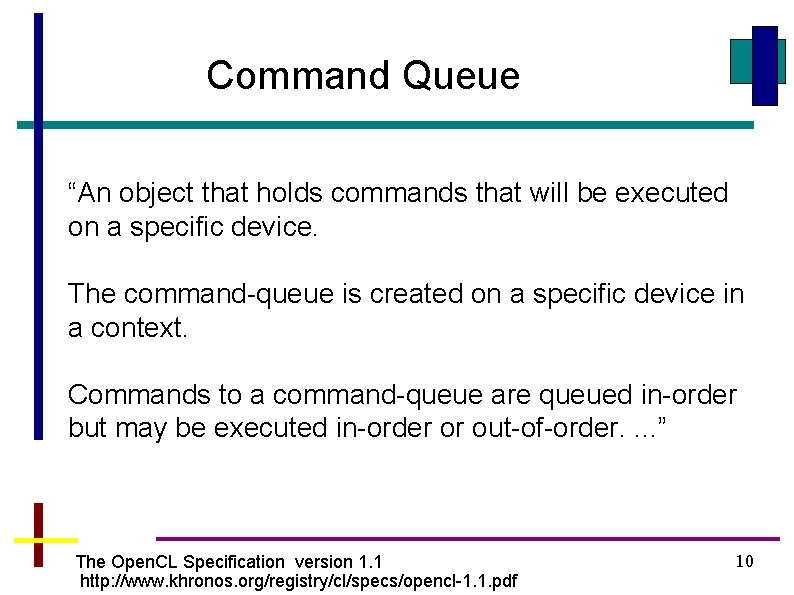

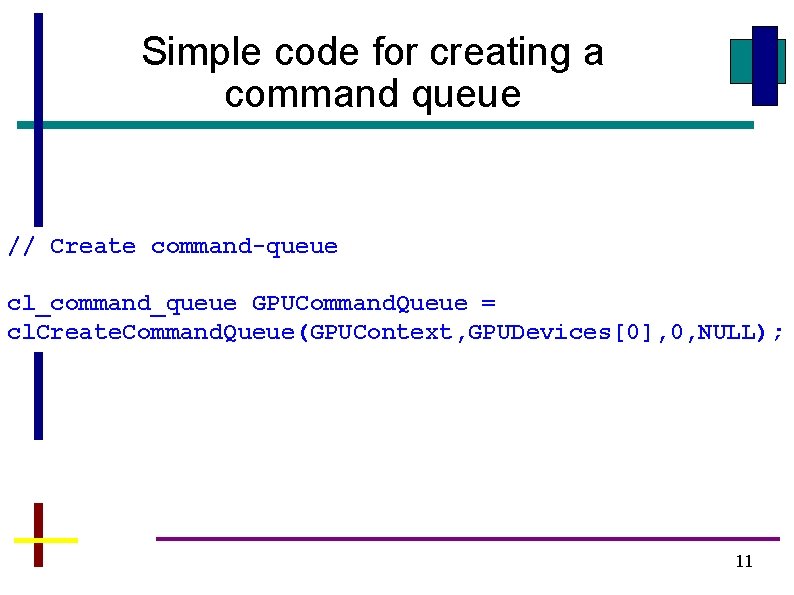

Command Queue

“An object that holds commands that will be executed on a specific device. The command-queue is created on a specific device in a context. Commands to a command-queue are queued in-order but may be executed in-order or out-of-order.”

The OpenCL Specification version 1.1, http://www.khronos.org/registry/cl/specs/opencl-1.1.pdf

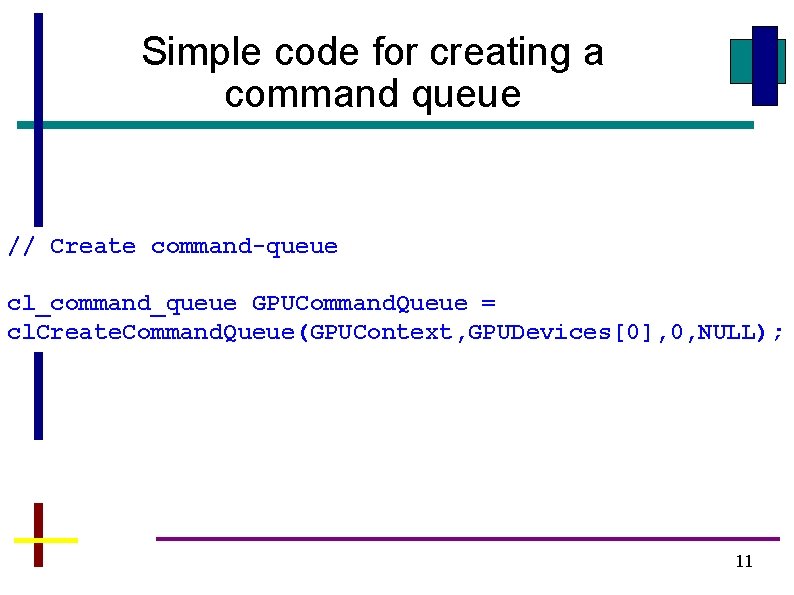

Simple code for creating a command queue

    // Create command-queue
    cl_command_queue GPUCommandQueue = clCreateCommandQueue(GPUContext, GPUDevices[0], 0, NULL);

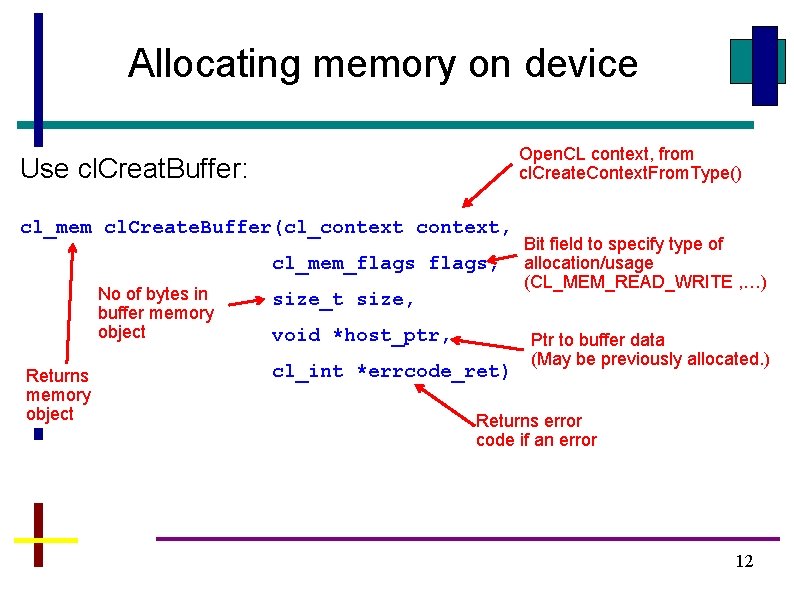

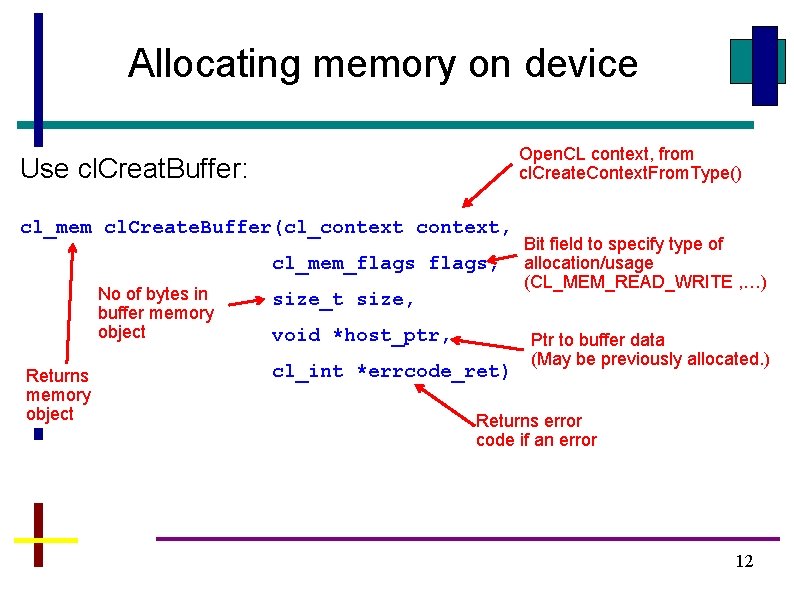

Allocating memory on device

Use clCreateBuffer:

    cl_mem clCreateBuffer(cl_context context, cl_mem_flags flags, size_t size, void *host_ptr, cl_int *errcode_ret)

- context: OpenCL context, from clCreateContextFromType()
- flags: bit field to specify type of allocation/usage (CL_MEM_READ_WRITE, …)
- size: number of bytes in buffer memory object
- host_ptr: pointer to buffer data (may be previously allocated)
- errcode_ret: returns error code if an error occurs
- Return value: the memory object

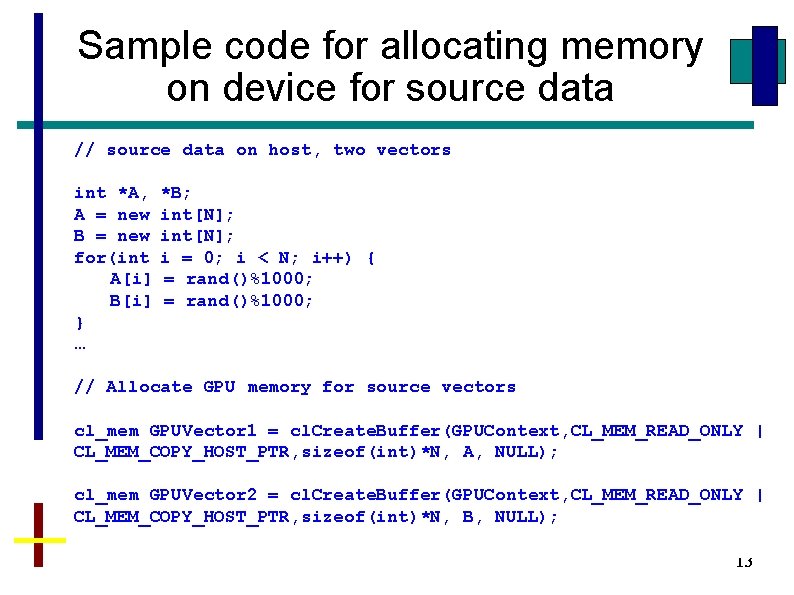

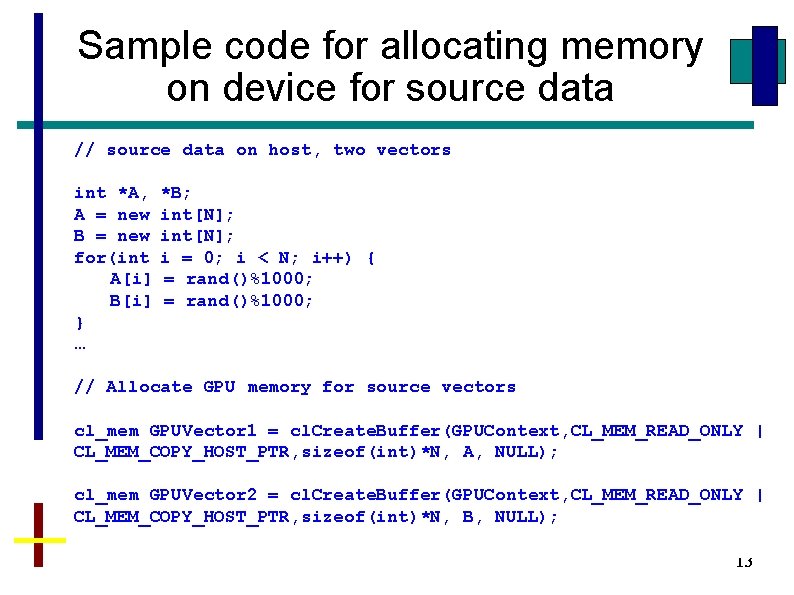

Sample code for allocating memory on device for source data

    // source data on host, two vectors
    int *A, *B;
    A = new int[N];
    B = new int[N];
    for(int i = 0; i < N; i++) {
        A[i] = rand()%1000;
        B[i] = rand()%1000;
    }
    …
    // Allocate GPU memory for source vectors
    cl_mem GPUVector1 = clCreateBuffer(GPUContext, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR, sizeof(int)*N, A, NULL);
    cl_mem GPUVector2 = clCreateBuffer(GPUContext, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR, sizeof(int)*N, B, NULL);

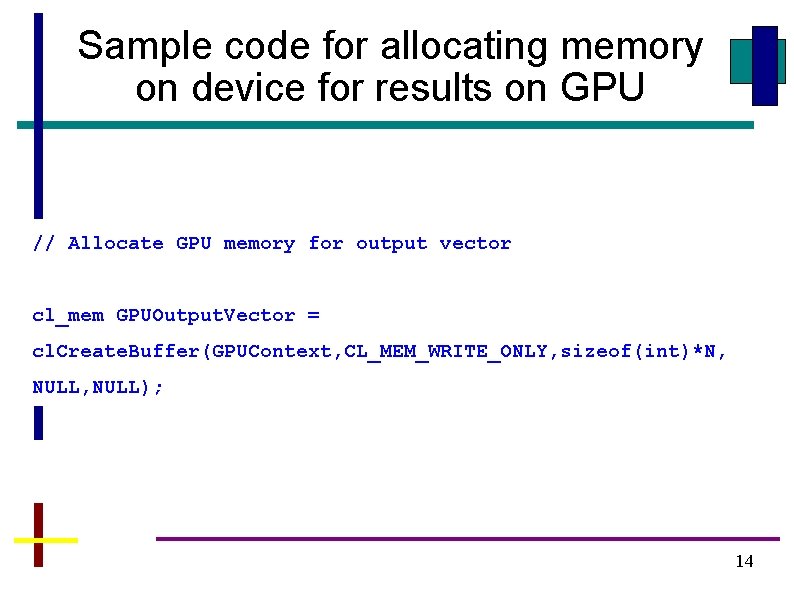

Sample code for allocating memory on device for results on GPU

    // Allocate GPU memory for output vector
    // (no host pointer and no error-code pointer, so both are NULL)
    cl_mem GPUOutputVector = clCreateBuffer(GPUContext, CL_MEM_WRITE_ONLY, sizeof(int)*N, NULL, NULL);

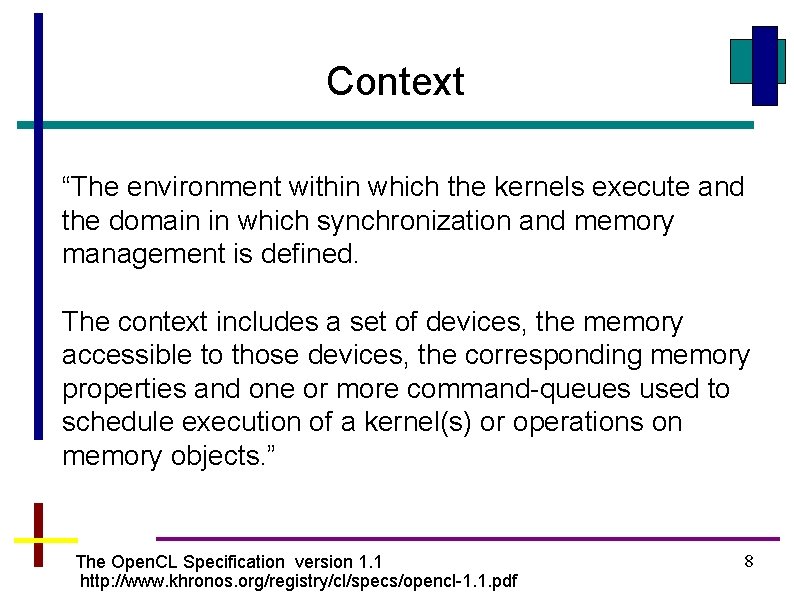

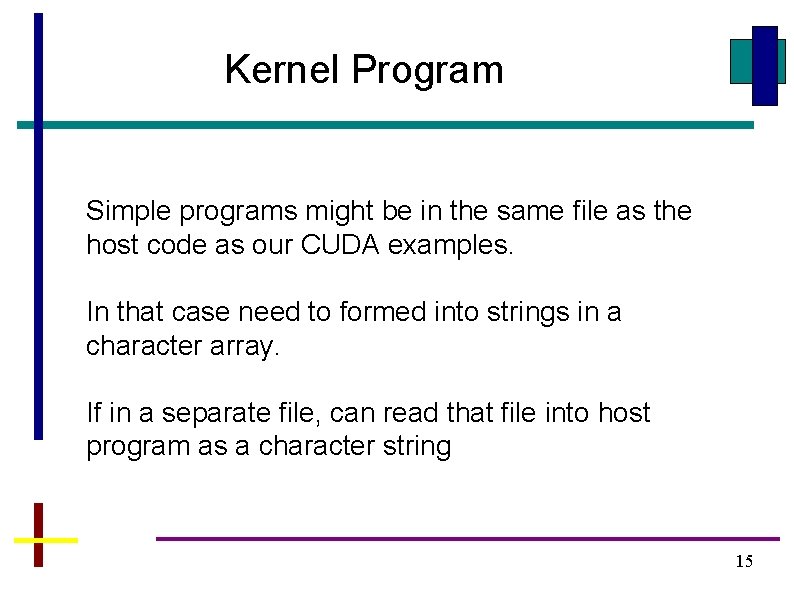

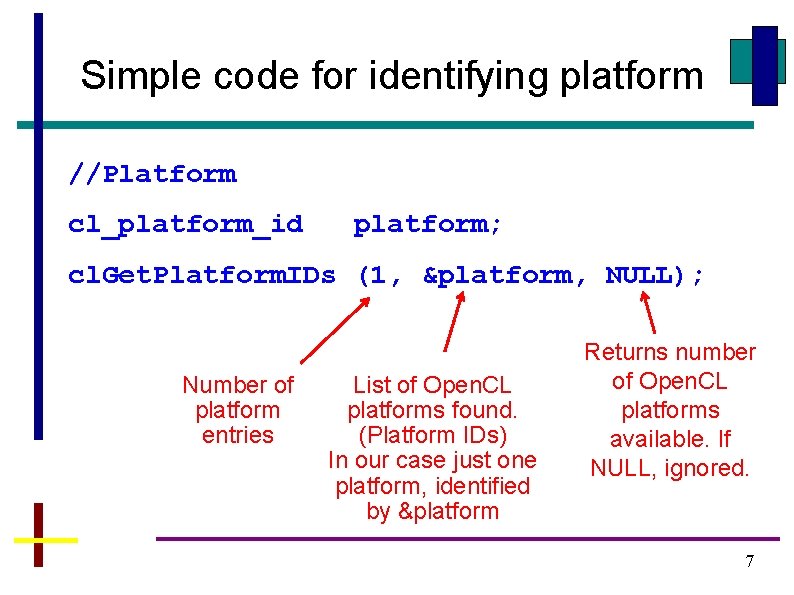

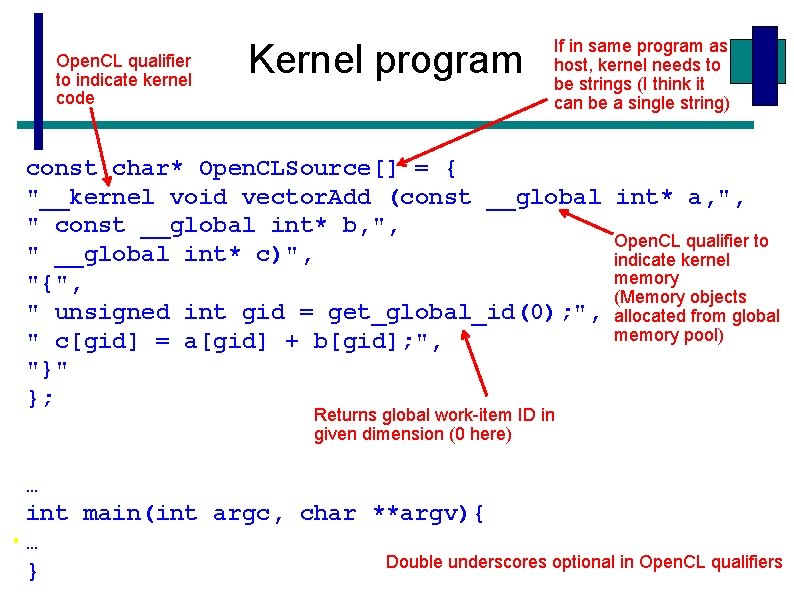

Kernel Program

Simple programs might be in the same file as the host code, as in our CUDA examples. In that case the kernel needs to be formed into strings in a character array. If the kernel is in a separate file, that file can be read into the host program as a character string.

Kernel program

If in the same program as the host, the kernel needs to be strings (I think it can be a single string):

    const char* OpenCLSource[] = {
        "__kernel void vectorAdd (const __global int* a, ",
        "                         const __global int* b, ",
        "                         __global int* c)",
        "{",
        "   unsigned int gid = get_global_id(0); ",
        "   c[gid] = a[gid] + b[gid]; ",
        "}"
    };
    …
    int main(int argc, char **argv){
    …
    }

- __kernel: OpenCL qualifier to indicate kernel code
- __global: OpenCL qualifier to indicate kernel memory (memory objects allocated from global memory pool)
- get_global_id(0): returns global work-item ID in given dimension (0 here)
- Double underscores are optional in OpenCL qualifiers

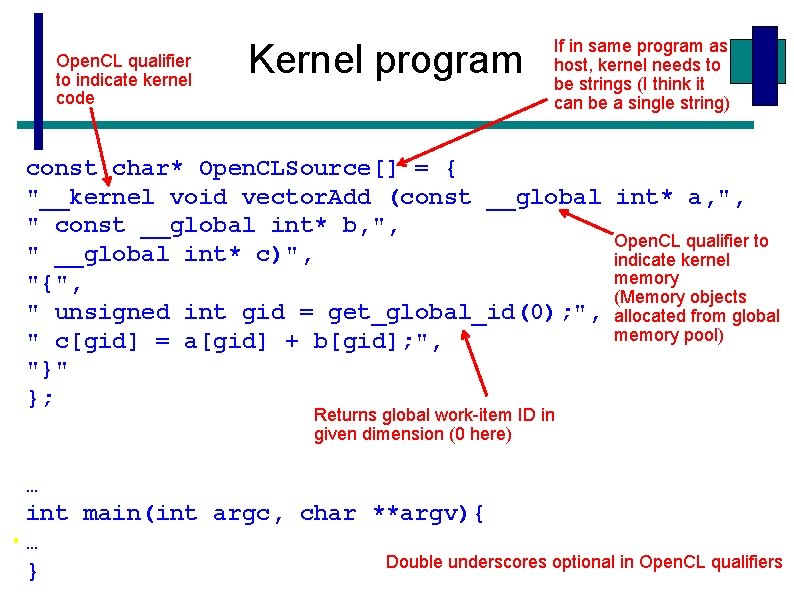

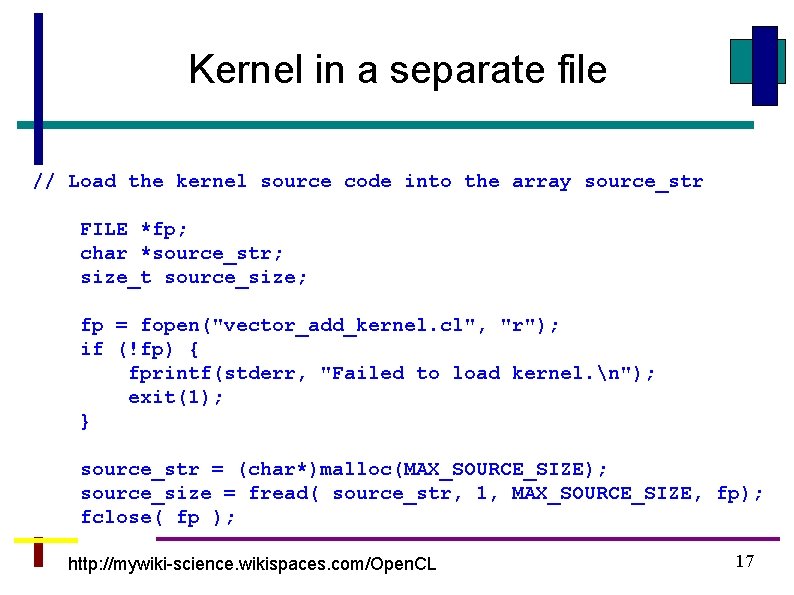

Kernel in a separate file

    // Load the kernel source code into the array source_str
    FILE *fp;
    char *source_str;
    size_t source_size;

    fp = fopen("vector_add_kernel.cl", "r");
    if (!fp) {
        fprintf(stderr, "Failed to load kernel.\n");
        exit(1);
    }
    source_str = (char*)malloc(MAX_SOURCE_SIZE);
    source_size = fread(source_str, 1, MAX_SOURCE_SIZE, fp);
    fclose(fp);

http://mywiki-science.wikispaces.com/OpenCL
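
The loader above relies on a fixed MAX_SOURCE_SIZE buffer. As an alternative sketch, the file size can be measured first so the buffer fits the file exactly and is null-terminated. The helper name `read_kernel_source` is hypothetical, and the code uses only the C standard library, no OpenCL calls.

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper: reads a whole file into a newly allocated,
   null-terminated buffer. Returns NULL on failure; the caller frees
   the result. If out_size is non-NULL it receives the bytes read. */
char *read_kernel_source(const char *path, size_t *out_size)
{
    FILE *fp = fopen(path, "rb");
    if (!fp) return NULL;

    /* Measure the file by seeking to its end. */
    if (fseek(fp, 0, SEEK_END) != 0) { fclose(fp); return NULL; }
    long len = ftell(fp);
    if (len < 0) { fclose(fp); return NULL; }
    rewind(fp);

    char *buf = malloc((size_t)len + 1);   /* +1 for the terminator */
    if (!buf) { fclose(fp); return NULL; }

    size_t got = fread(buf, 1, (size_t)len, fp);
    fclose(fp);
    buf[got] = '\0';

    if (out_size) *out_size = got;
    return buf;
}
```

The returned string and size play the roles of source_str and source_size in the slide's code.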


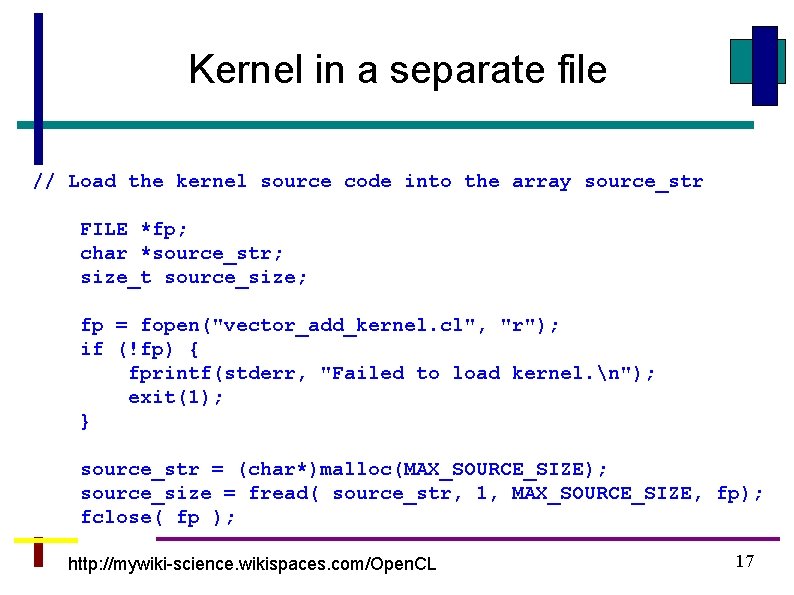

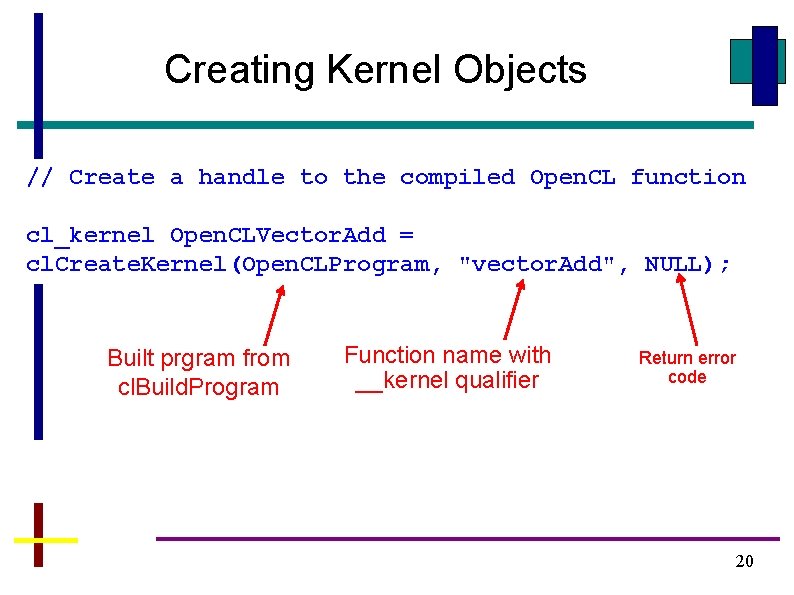

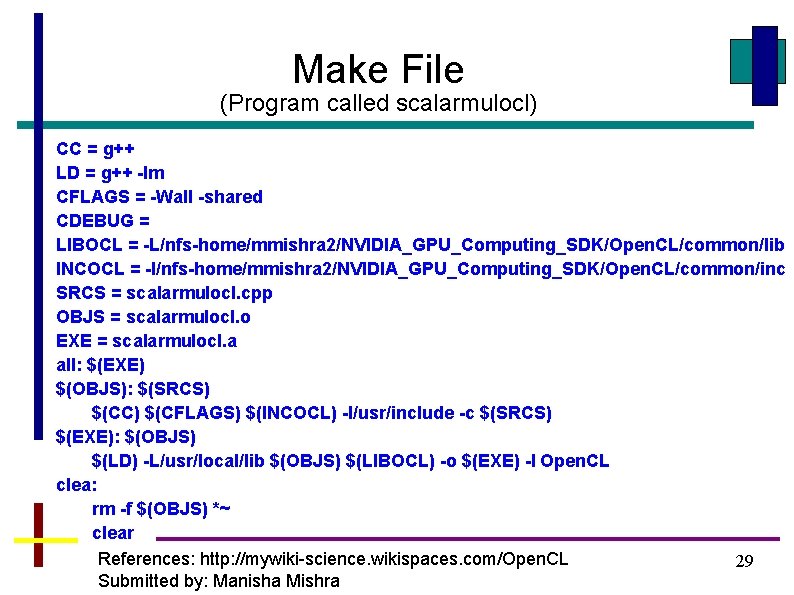

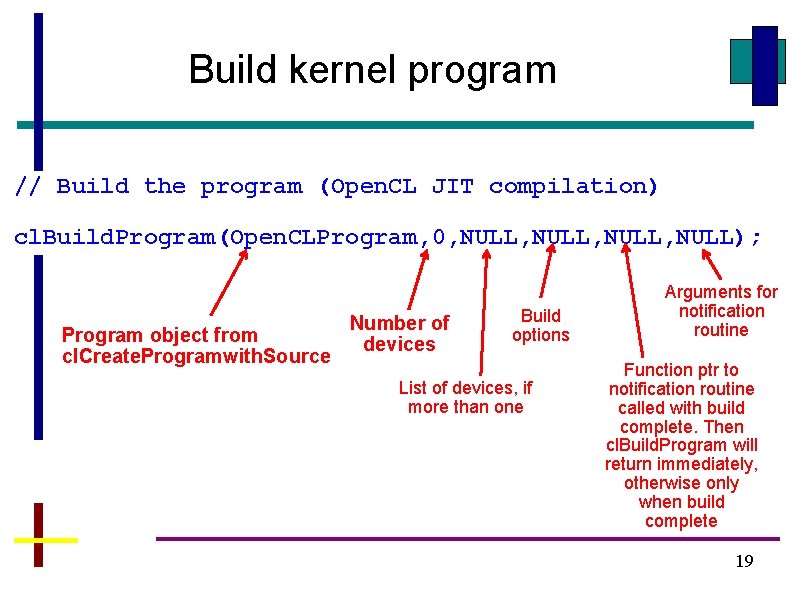

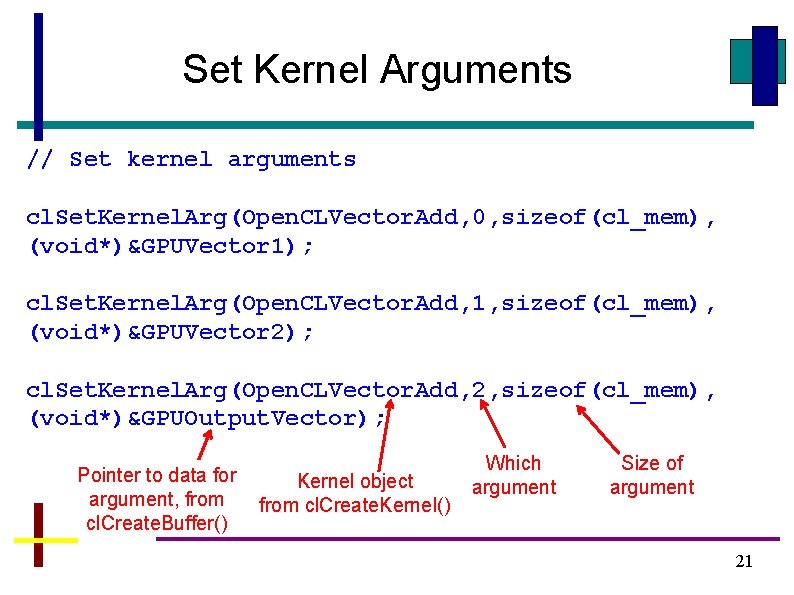

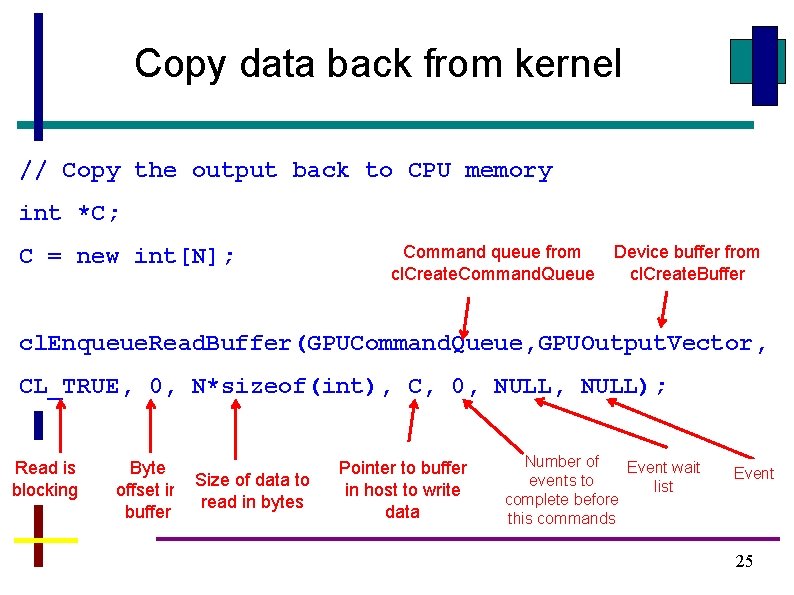

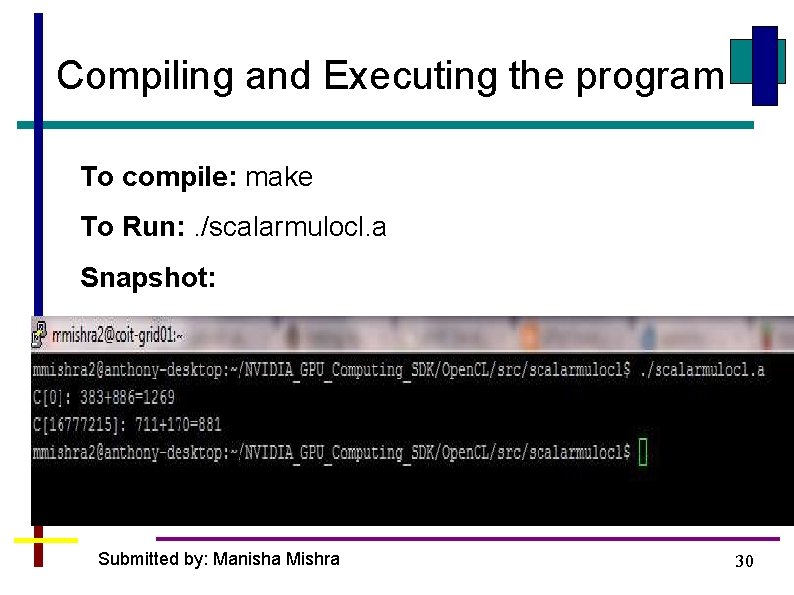

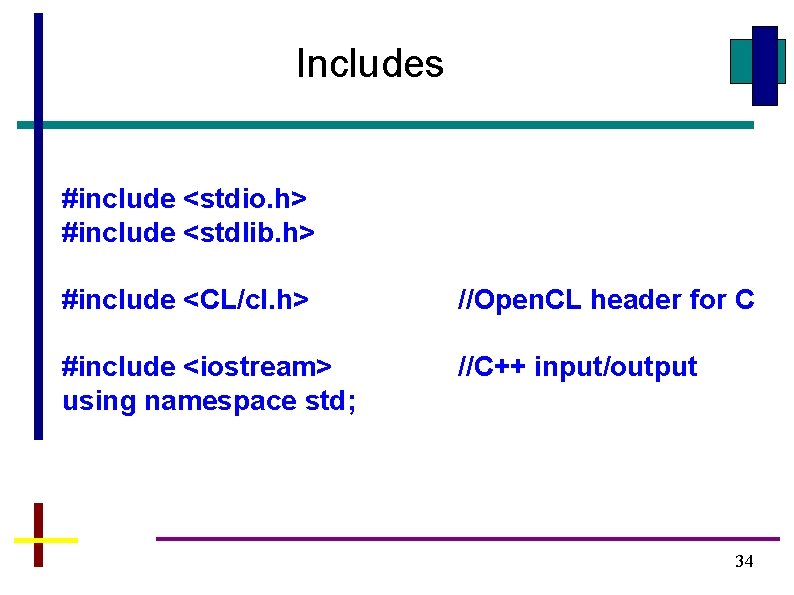

Create kernel program object

This example uses a single file for both host and kernel code. clCreateProgramWithSource() can also be used with a separate kernel file read into the host program.

    const char* OpenCLSource[] = {
    …
    };

    int main(int argc, char **argv)
    …
    // Create OpenCL program object
    cl_program OpenCLProgram = clCreateProgramWithSource(GPUContext, 7, OpenCLSource, NULL, NULL);

- Second argument (7): number of strings in kernel program array
- Fourth argument (NULL): array giving the length of each string, used if the strings are not null-terminated
- Fifth argument (NULL): used to return error code if an error occurs
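
The string count 7 is hard-coded in the call above. As a sketch, the count can be derived from the array itself, and the strings can be joined into one null-terminated string, which could then be passed with a count of 1. `ARRAY_COUNT` and `join_source_strings` are hypothetical names, and the code is plain C with no OpenCL calls.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical macro: element count of a true array (not a pointer). */
#define ARRAY_COUNT(a) (sizeof(a) / sizeof((a)[0]))

/* Hypothetical helper: concatenates count null-terminated strings into
   one newly allocated string. Returns NULL if allocation fails; the
   caller frees the result. */
char *join_source_strings(const char **parts, size_t count)
{
    size_t total = 0;
    for (size_t i = 0; i < count; i++)
        total += strlen(parts[i]);

    char *joined = malloc(total + 1);
    if (!joined) return NULL;

    char *out = joined;
    for (size_t i = 0; i < count; i++) {
        size_t len = strlen(parts[i]);
        memcpy(out, parts[i], len);
        out += len;
    }
    *out = '\0';
    return joined;
}
```

With the slide's array, ARRAY_COUNT(OpenCLSource) would evaluate to 7, so the count stays correct if lines are added to the kernel.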

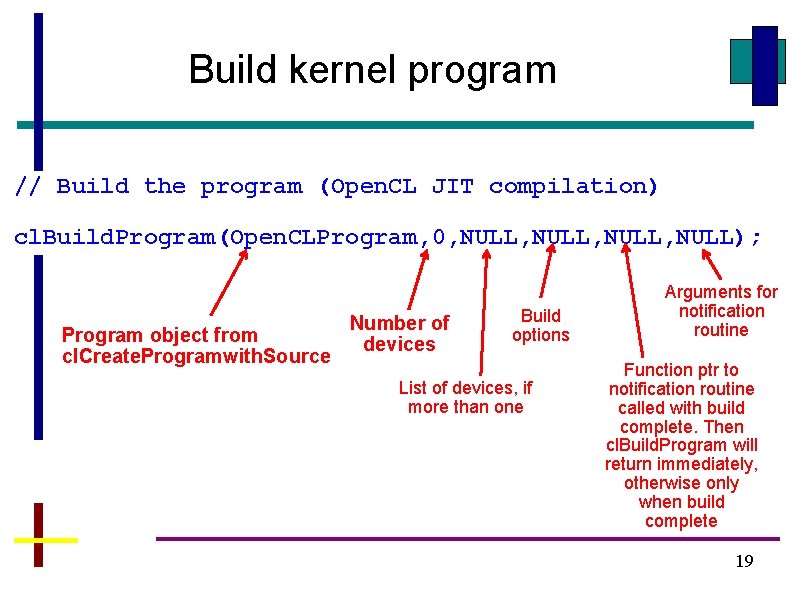

Build kernel program

    // Build the program (OpenCL JIT compilation)
    clBuildProgram(OpenCLProgram, 0, NULL, NULL, NULL, NULL);

Arguments, in order:

- Program object from clCreateProgramWithSource
- Number of devices
- List of devices, if more than one
- Build options
- Function pointer to a notification routine called when the build completes. If given, clBuildProgram returns immediately; otherwise it returns only when the build is complete
- Arguments for the notification routine

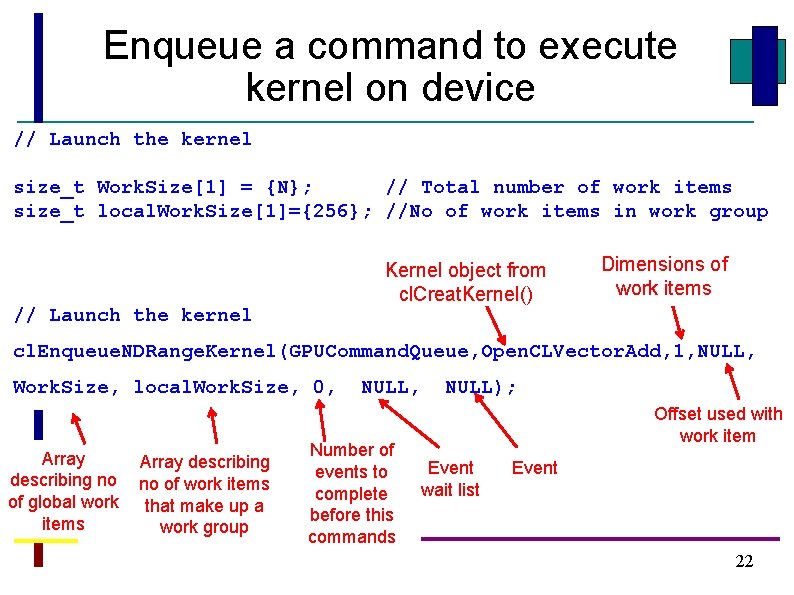

Creating Kernel Objects

    // Create a handle to the compiled OpenCL function
    cl_kernel OpenCLVectorAdd = clCreateKernel(OpenCLProgram, "vectorAdd", NULL);

- First argument: built program from clBuildProgram
- Second argument: function name with __kernel qualifier
- Third argument: returns error code

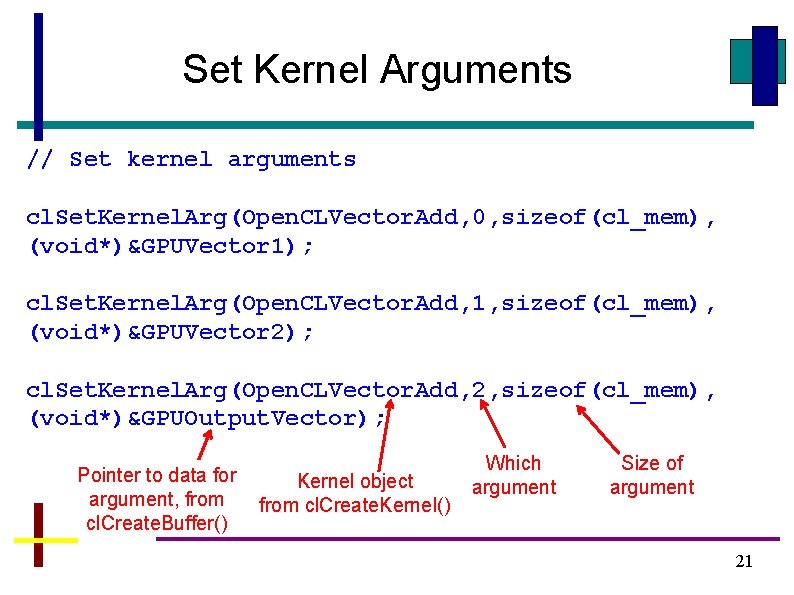

Set Kernel Arguments

    // Set kernel arguments
    clSetKernelArg(OpenCLVectorAdd, 0, sizeof(cl_mem), (void*)&GPUVector1);
    clSetKernelArg(OpenCLVectorAdd, 1, sizeof(cl_mem), (void*)&GPUVector2);
    clSetKernelArg(OpenCLVectorAdd, 2, sizeof(cl_mem), (void*)&GPUOutputVector);

- First argument: kernel object from clCreateKernel()
- Second argument: which argument
- Third argument: size of argument
- Fourth argument: pointer to data for argument, from clCreateBuffer()

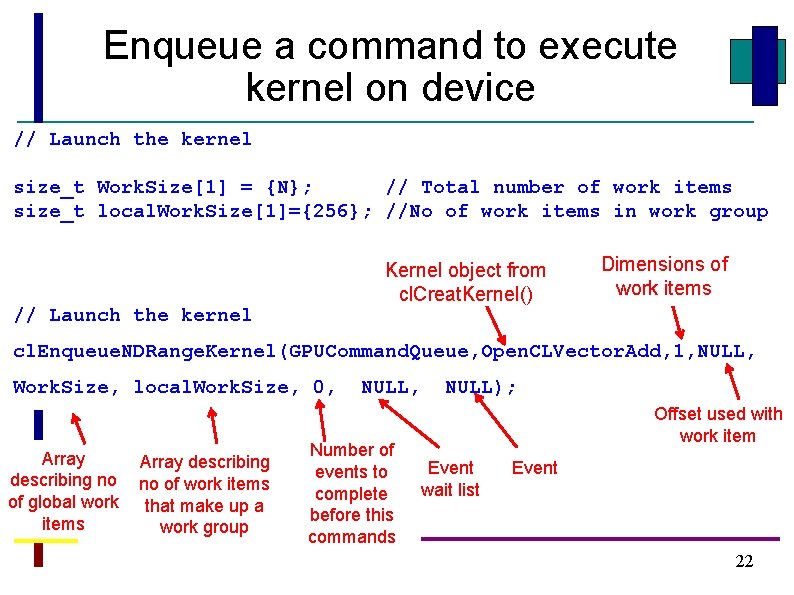

Enqueue a command to execute kernel on device

    // Launch the kernel
    size_t WorkSize[1] = {N};          // Total number of work items
    size_t localWorkSize[1] = {256};   // No of work items in work group

    // Launch the kernel
    clEnqueueNDRangeKernel(GPUCommandQueue, OpenCLVectorAdd, 1, NULL, WorkSize, localWorkSize, 0, NULL, NULL);

Arguments, in order:

- Command queue
- Kernel object from clCreateKernel()
- Dimensions of work items (1)
- Offset used with work item (NULL)
- Array describing no of global work items
- Array describing no of work items that make up a work group
- Number of events to complete before this command (0)
- Event wait list (NULL)
- Event (NULL)
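
In OpenCL 1.x, when a work-group size is given, the total number of work items must be evenly divisible by it, so the launch above works only if N is a multiple of 256. A common workaround, sketched here, is to round the global size up to the next multiple. `round_up_global_size` is a hypothetical helper, not an OpenCL function. If the size is padded, the kernel would also need a bounds check on gid, which the vectorAdd kernel in these slides does not have.

```c
#include <stddef.h>

/* Hypothetical helper: smallest multiple of local_size that is >= n.
   Assumes local_size > 0. */
size_t round_up_global_size(size_t n, size_t local_size)
{
    size_t remainder = n % local_size;
    return remainder == 0 ? n : n + (local_size - remainder);
}
```

For example, 1000 elements with a work-group size of 256 give a global size of 1024, so the last 24 work items have no element to process.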

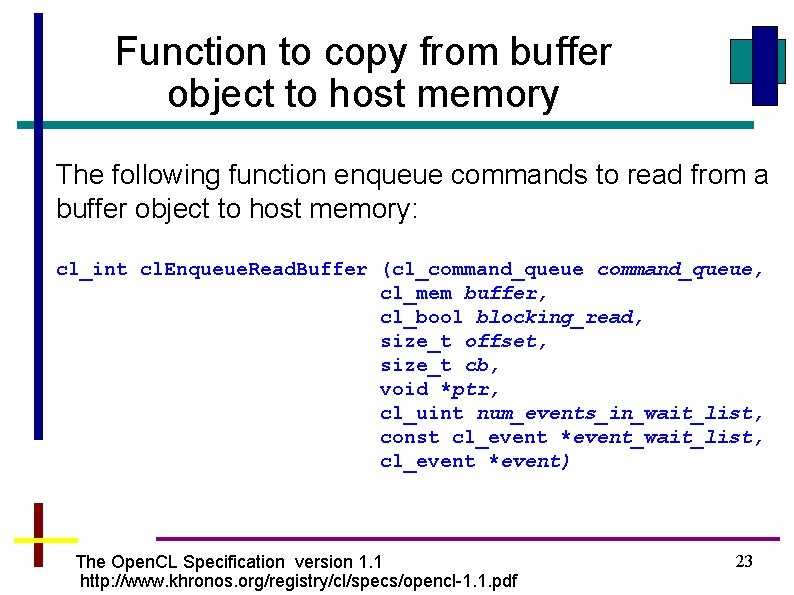

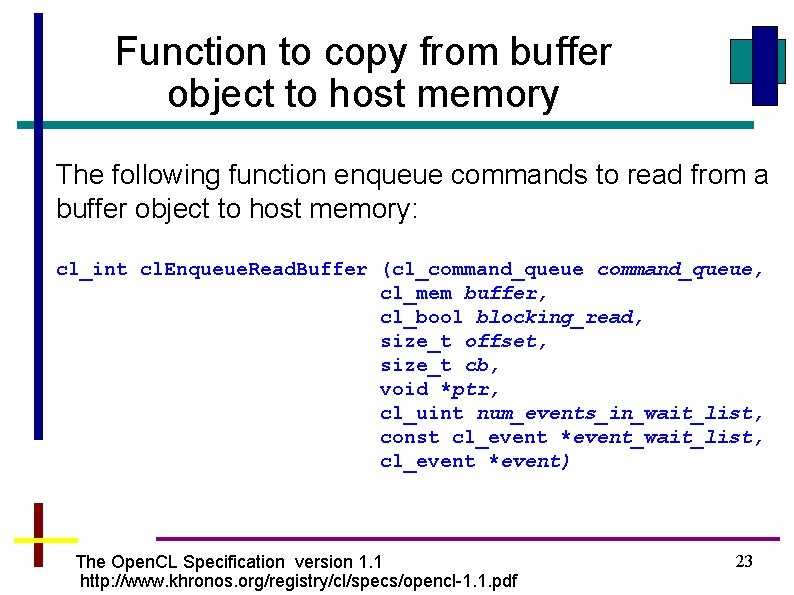

Function to copy from buffer object to host memory

The following function enqueues commands to read from a buffer object to host memory:

    cl_int clEnqueueReadBuffer (cl_command_queue command_queue, cl_mem buffer, cl_bool blocking_read, size_t offset, size_t cb, void *ptr, cl_uint num_events_in_wait_list, const cl_event *event_wait_list, cl_event *event)

The OpenCL Specification version 1.1, http://www.khronos.org/registry/cl/specs/opencl-1.1.pdf

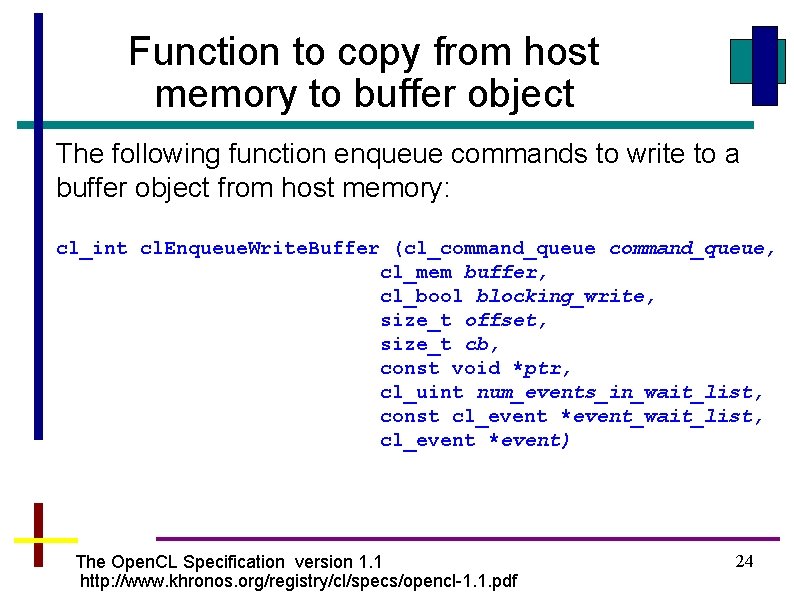

Function to copy from host memory to buffer object

The following function enqueues commands to write to a buffer object from host memory:

    cl_int clEnqueueWriteBuffer (cl_command_queue command_queue, cl_mem buffer, cl_bool blocking_write, size_t offset, size_t cb, const void *ptr, cl_uint num_events_in_wait_list, const cl_event *event_wait_list, cl_event *event)

The OpenCL Specification version 1.1, http://www.khronos.org/registry/cl/specs/opencl-1.1.pdf

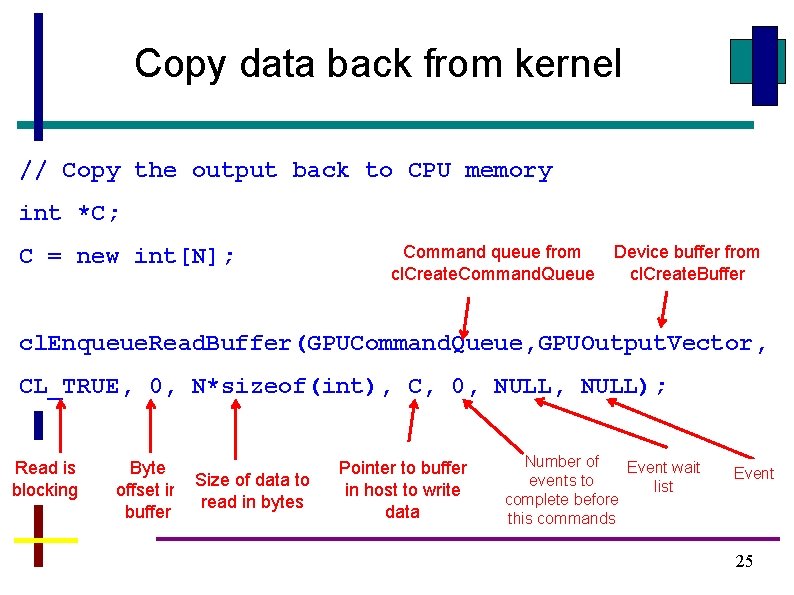

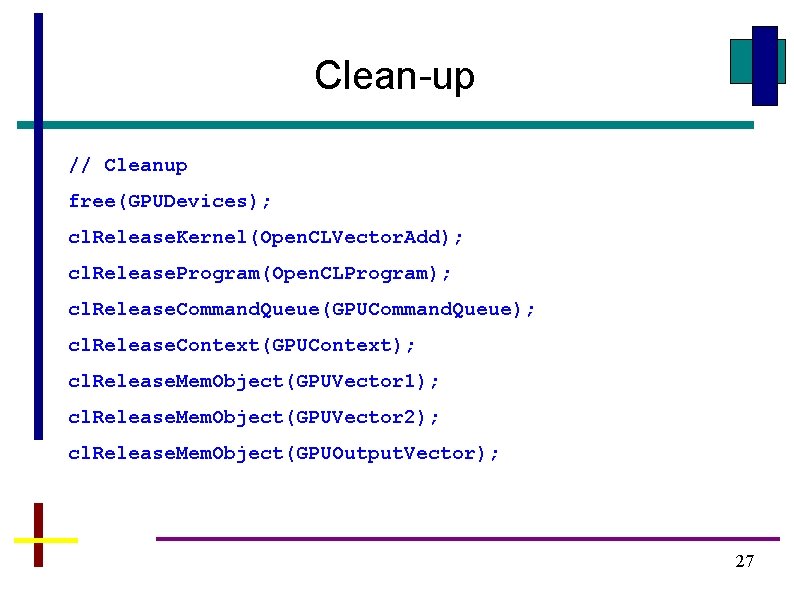

Copy data back from kernel

    // Copy the output back to CPU memory
    int *C;
    C = new int[N];
    clEnqueueReadBuffer(GPUCommandQueue, GPUOutputVector, CL_TRUE, 0, N*sizeof(int), C, 0, NULL, NULL);

Arguments, in order:

- Command queue from clCreateCommandQueue
- Device buffer from clCreateBuffer
- Read is blocking (CL_TRUE)
- Byte offset in buffer (0)
- Size of data to read in bytes
- Pointer to buffer in host to write data
- Number of events to complete before this command (0)
- Event wait list (NULL)
- Event (NULL)
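
Once C is back in host memory, it can be checked against a CPU computation of the same sum. The sketch below is plain C and is not part of the original slides. `first_mismatch` is a hypothetical helper name.

```c
#include <stddef.h>

/* Hypothetical helper: returns the index of the first element where
   c[i] != a[i] + b[i], or n if every element matches. */
size_t first_mismatch(const int *a, const int *b, const int *c, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        if (c[i] != a[i] + b[i])
            return i;
    }
    return n;
}
```

Calling first_mismatch(A, B, C, N) after the blocking read returns N when the device result agrees with the host for every element.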

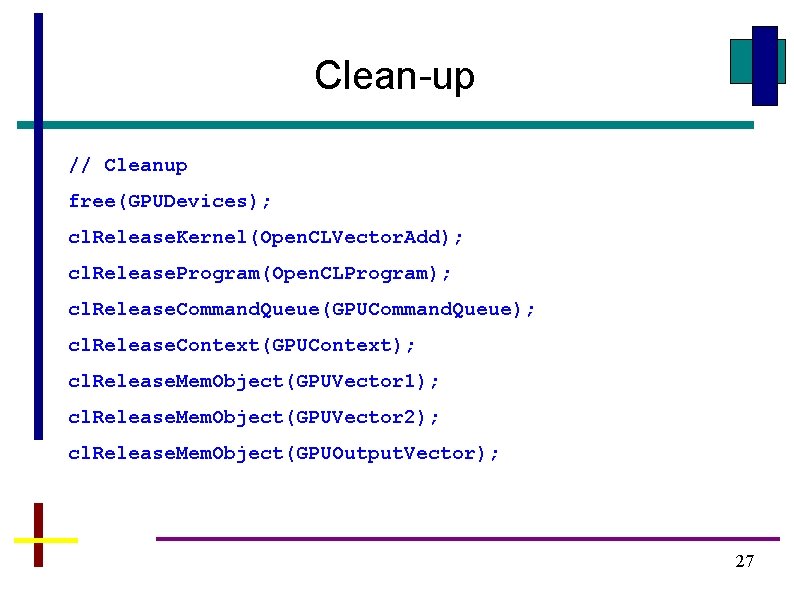

Clean-up

    // Cleanup
    free(GPUDevices);
    clReleaseKernel(OpenCLVectorAdd);
    clReleaseProgram(OpenCLProgram);
    clReleaseCommandQueue(GPUCommandQueue);
    clReleaseContext(GPUContext);
    clReleaseMemObject(GPUVector1);
    clReleaseMemObject(GPUVector2);
    clReleaseMemObject(GPUOutputVector);

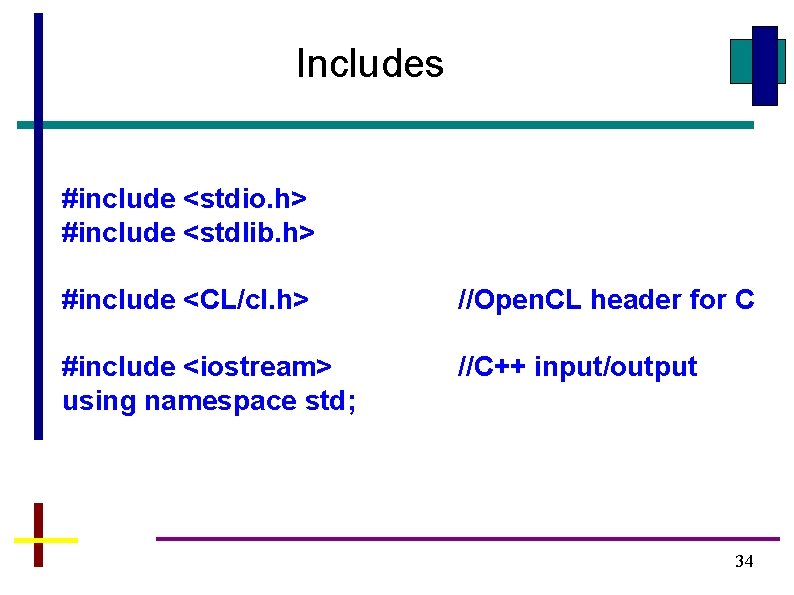

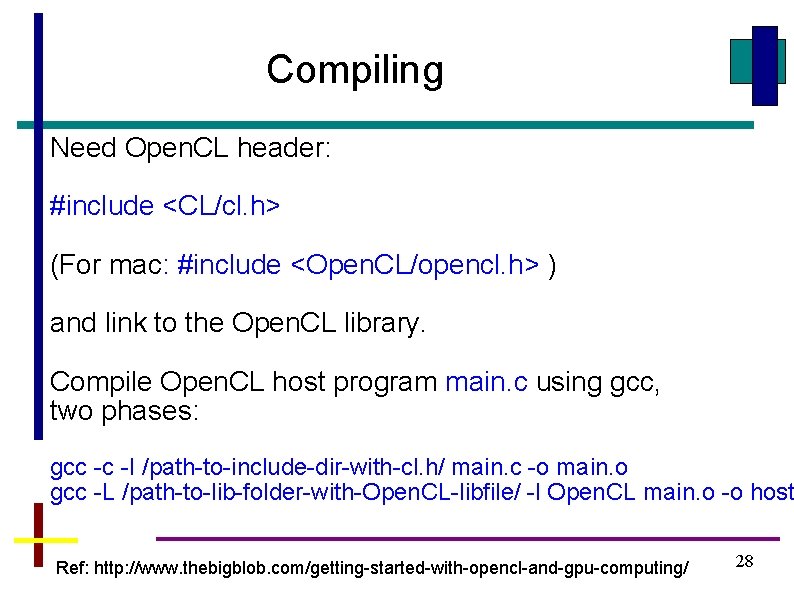

Compiling

Need the OpenCL header:

    #include <CL/cl.h>

(For Mac: #include <OpenCL/opencl.h>) and link to the OpenCL library.

Compile the OpenCL host program main.c using gcc, in two phases:

    gcc -c -I /path-to-include-dir-with-cl.h/ main.c -o main.o
    gcc -L /path-to-lib-folder-with-OpenCL-libfile/ -l OpenCL main.o -o host

Ref: http://www.thebigblob.com/getting-started-with-opencl-and-gpu-computing/

Make File (Program called scalarmulocl)

    CC = g++
    LD = g++ -lm
    CFLAGS = -Wall -shared
    CDEBUG =
    LIBOCL = -L/nfs-home/mmishra2/NVIDIA_GPU_Computing_SDK/OpenCL/common/lib
    INCOCL = -I/nfs-home/mmishra2/NVIDIA_GPU_Computing_SDK/OpenCL/common/inc
    SRCS = scalarmulocl.cpp
    OBJS = scalarmulocl.o
    EXE = scalarmulocl.a

    all: $(EXE)

    $(OBJS): $(SRCS)
        $(CC) $(CFLAGS) $(INCOCL) -I/usr/include -c $(SRCS)

    $(EXE): $(OBJS)
        $(LD) -L/usr/local/lib $(OBJS) $(LIBOCL) -o $(EXE) -l OpenCL

    clean:
        rm -f $(OBJS) *~
        clear

References: http://mywiki-science.wikispaces.com/OpenCL

Submitted by: Manisha Mishra

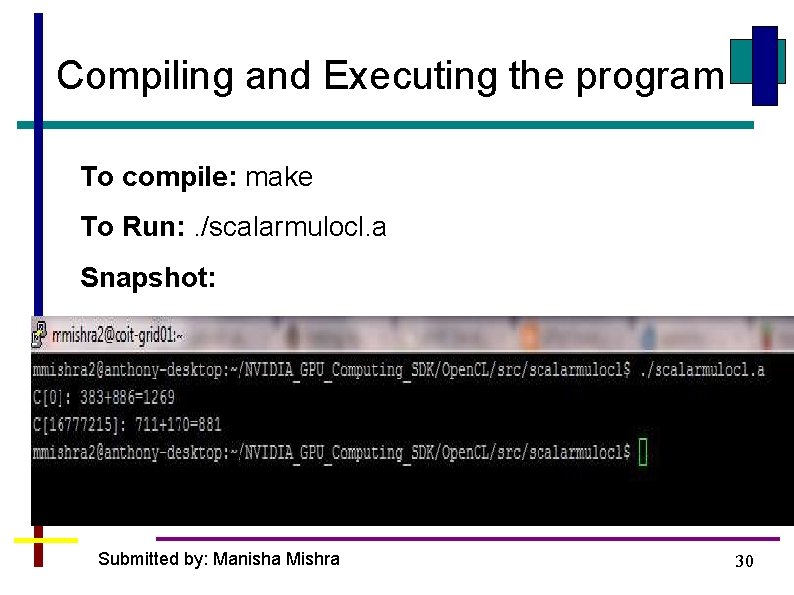

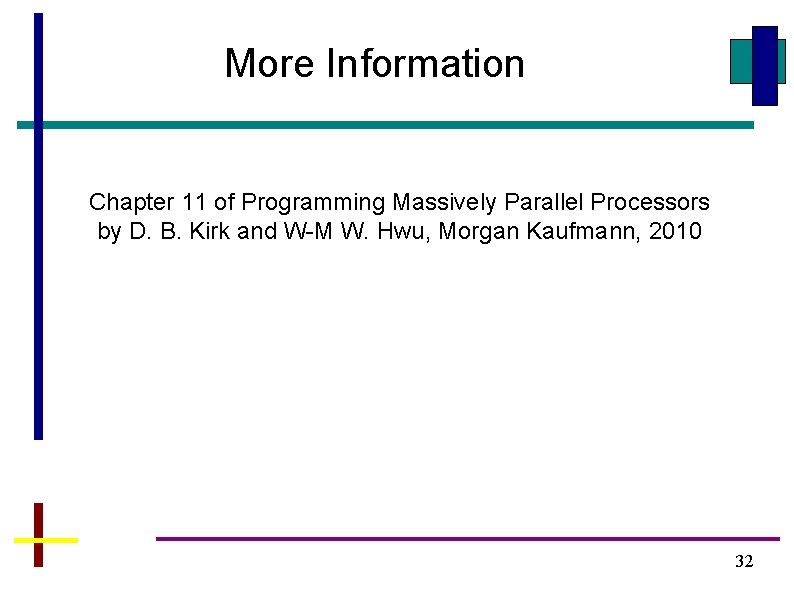

Compiling and Executing the program

- To compile: make
- To run: ./scalarmulocl.a

Questions

More Information

Chapter 11 of Programming Massively Parallel Processors by D. B. Kirk and W-M W. Hwu, Morgan Kaufmann, 2010

clGetPlatformIDs

Obtain the list of platforms available.

    cl_int clGetPlatformIDs(cl_uint num_entries, cl_platform_id *platforms, cl_uint *num_platforms)

Parameters

- num_entries: The number of cl_platform_id entries that can be added to platforms. If platforms is not NULL, num_entries must be greater than zero.
- platforms: Returns a list of OpenCL platforms found. The cl_platform_id values returned in platforms can be used to identify a specific OpenCL platform. If the platforms argument is NULL, this argument is ignored. The number of OpenCL platforms returned is the minimum of the value specified by num_entries or the number of OpenCL platforms available.
- num_platforms: Returns the number of OpenCL platforms available. If num_platforms is NULL, this argument is ignored.

http://www.khronos.org/registry/cl/sdk/1.1/docs/man/xhtml/

Includes

    #include <stdio.h>
    #include <stdlib.h>
    #include <CL/cl.h>      //OpenCL header for C
    #include <iostream>
    using namespace std;    //C++ input/output