OSCAR: Open Source Cluster Application Resources

Overview
- What is OSCAR?
- History
- Installation
- Operation
- Spin-offs
- Conclusions

History
- CCDK (Community Cluster Development Kit)
- OCG (Open Cluster Group)
- OSCAR (Open Source Cluster Application Resources)
- IBM, Dell, SGI and Intel working closely together
- ORNL – Oak Ridge National Laboratory

First Meeting
- Tim Mattson and Stephen Scott
- The group agreed on the following:
  - The adoption of clusters for mainstream, high-performance computing is inhibited by a lack of well-accepted software stacks that are robust and easy to use by the general user.
  - The group embraces the open-source model of software distribution. Anything contributed to the group must be freely distributable, preferably as source code under the Berkeley open-source license.
  - The group can accomplish its goals by propagating best-known practices built up through many years of hard work by cluster computing pioneers.

Initial Thoughts
- Differing architectures (small, medium, large)
- Two paths of progress: R&D and ease of use
- Primarily for non-computer-savvy users
  - Scientists
  - Academics
- Homogeneous system

Timeline
- Initial meeting in 2000
- Beta development started the same year
- First distribution, OSCAR 1.0, released in 2001 at LinuxWorld Expo in New York City
- Today up to OSCAR 5.1
  - Heterogeneous system
  - Far more robust
  - More user friendly

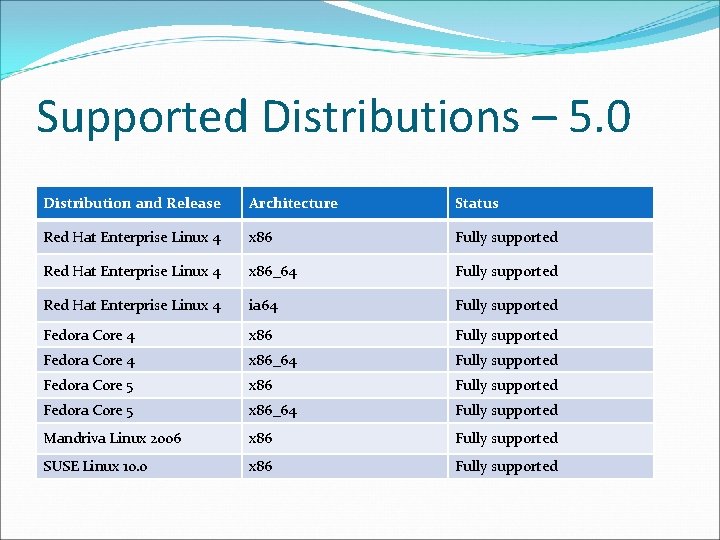

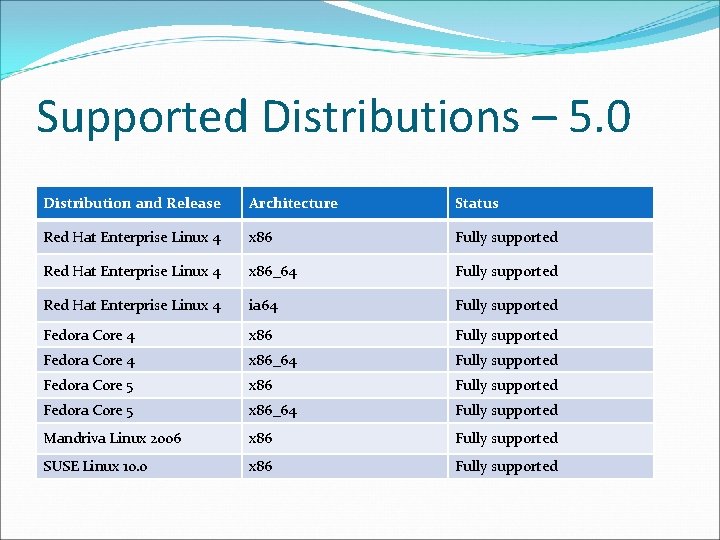

Supported Distributions – 5.0

| Distribution and Release | Architecture | Status |
| --- | --- | --- |
| Red Hat Enterprise Linux 4 | x86 | Fully supported |
| Red Hat Enterprise Linux 4 | x86_64 | Fully supported |
| Red Hat Enterprise Linux 4 | ia64 | Fully supported |
| Fedora Core 4 | x86_64 | Fully supported |
| Fedora Core 5 | x86_64 | Fully supported |
| Mandriva Linux 2006 | x86 | Fully supported |
| SUSE Linux 10.0 | x86 | Fully supported |

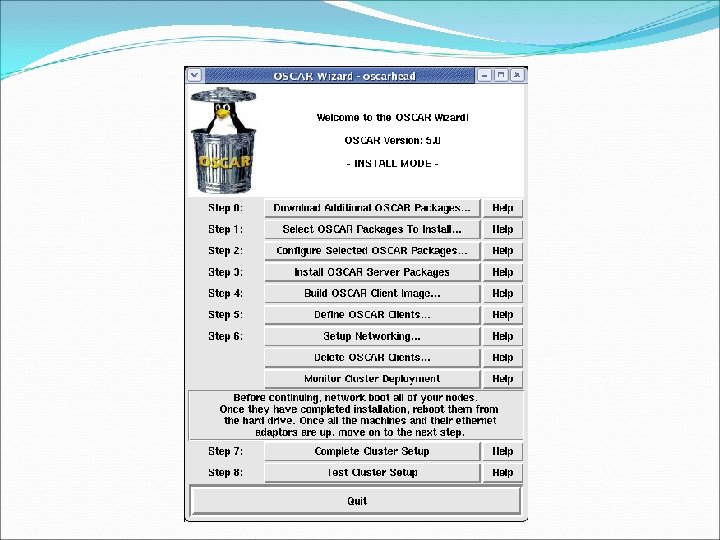

Installation
- Detailed installation notes
- Detailed user guide
- Basic idea:
  - Configure head node (server)
  - Configure image for client nodes
  - Configure network
  - Distribute node images
  - Manage your own cluster!

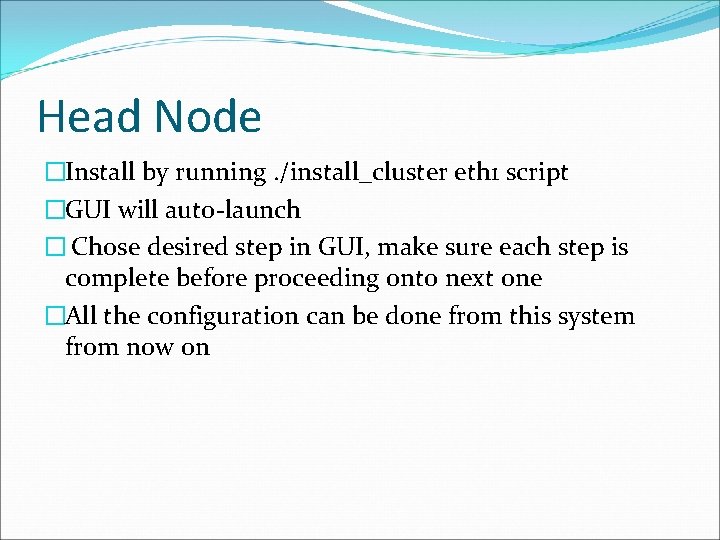

Head Node
- Install by running the `./install_cluster eth1` script
- The GUI launches automatically
  - Choose the desired step in the GUI, and make sure each step is complete before proceeding to the next one
- All configuration can be done from this system from now on

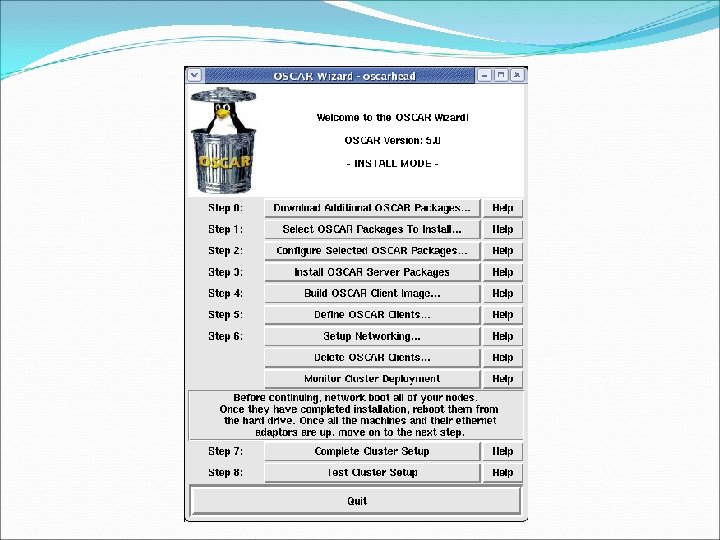

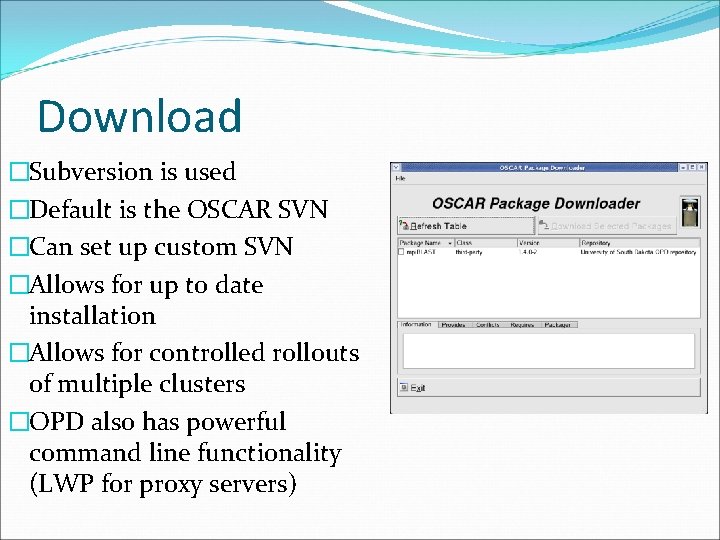

Download
- Subversion is used
- Default is the OSCAR SVN repository
- A custom SVN repository can be set up
- Allows for up-to-date installation
- Allows for controlled rollouts across multiple clusters
- OPD (OSCAR Package Downloader) also has powerful command-line functionality (LWP for proxy servers)

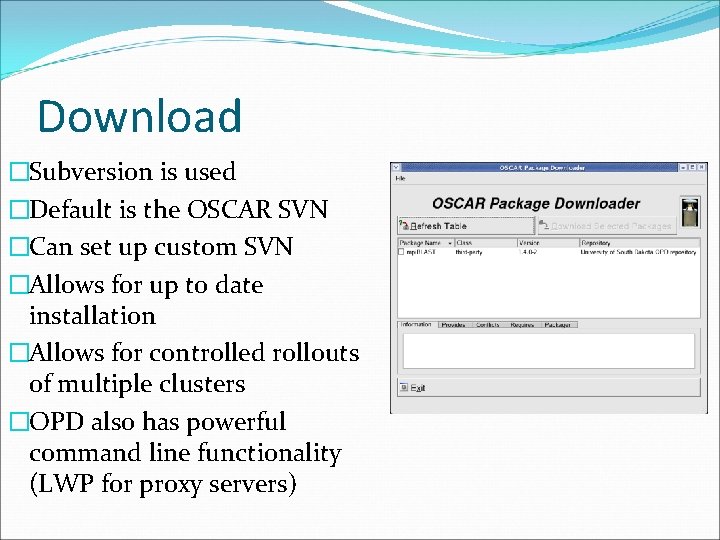

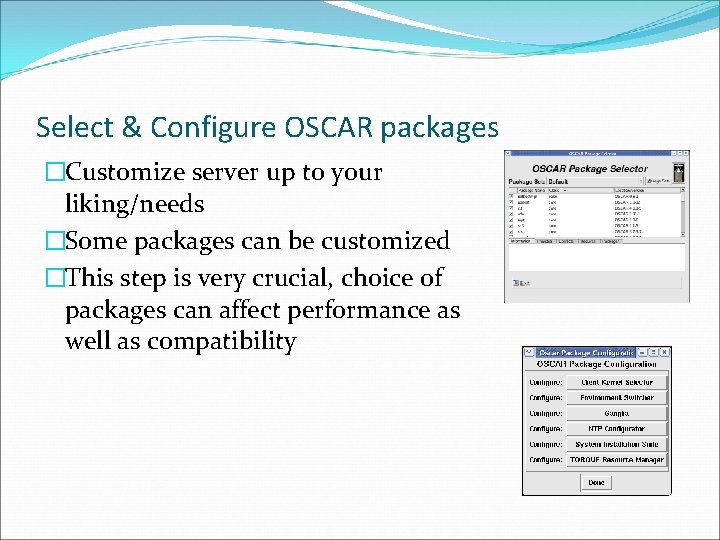

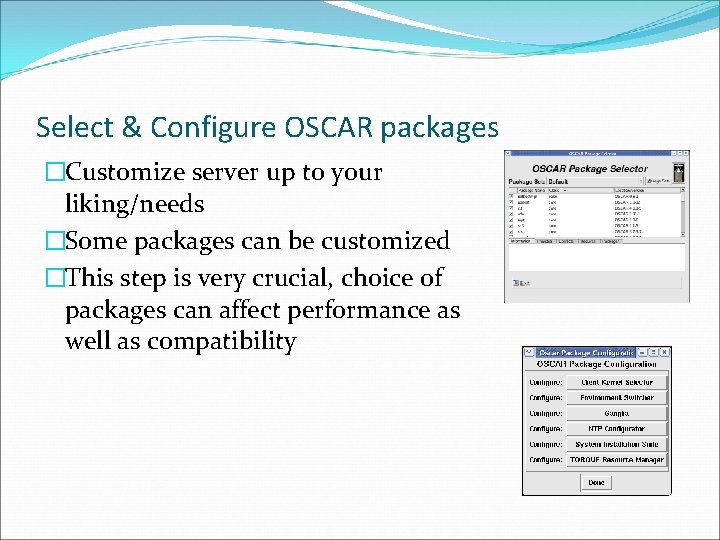

Select & Configure OSCAR packages
- Customize the server to your needs
- Some packages can be customized
- This step is crucial: the choice of packages can affect performance as well as compatibility

Installation of Server Node
- Simply installs the packages that were selected
- Automatically configures the server node
- The head (server) node is now ready to manage, administer and schedule jobs for its client nodes

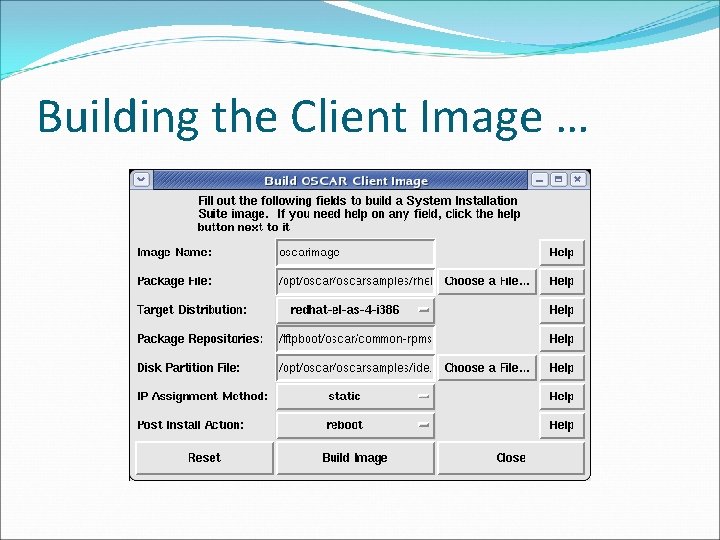

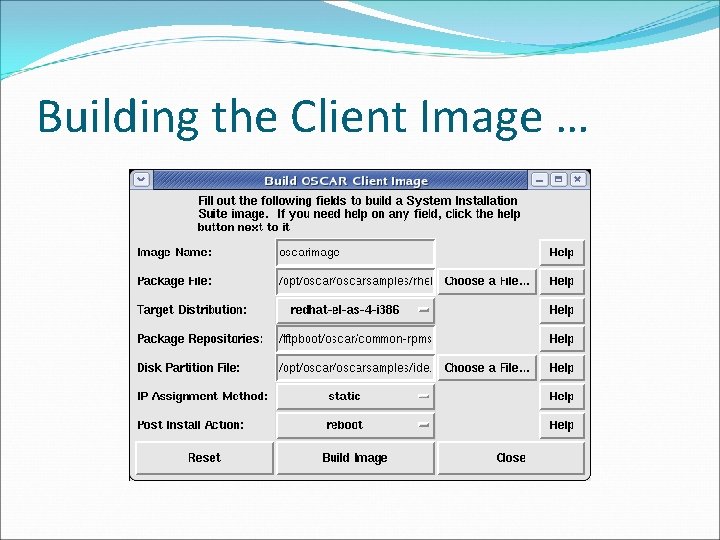

Build Client Image
- Choose a name
- Specify packages within the package file
- Specify the distribution
- Be wary of automatic reboot if network boot has been manually set as the default, since the node may then boot straight back into the installer

Building the Client Image …

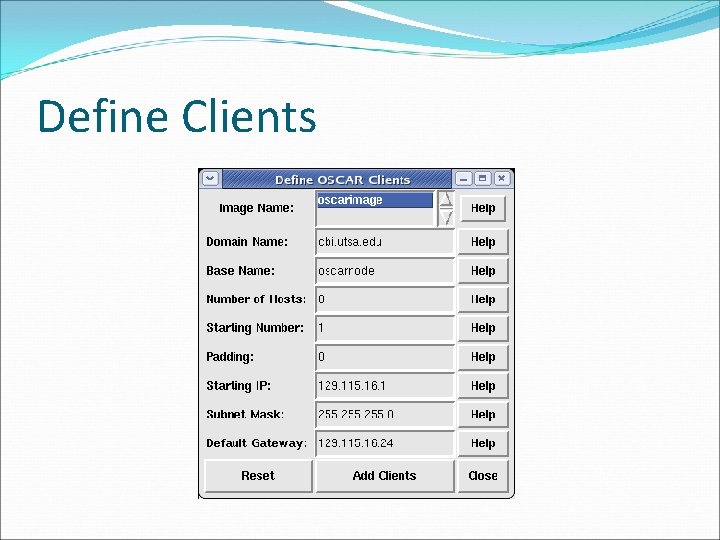

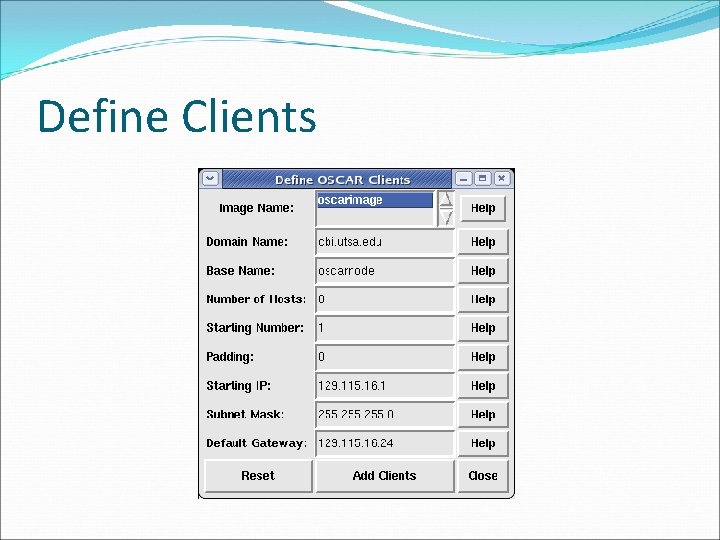

Define Clients
- This step creates the network structure of the nodes
- It is advisable to assign IP addresses based on physical links
- The GUI has shortcomings when dealing with multiple IP spans
- An incorrect setup can lead to an error during node installation
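The advice to assign addresses in physical order can be sketched in a few lines of Python. This is only an illustration and not part of OSCAR: the `10.0.0.0/24` subnet, the `oscarnode` name prefix, and the `plan_clients` helper are all assumptions made for the example.

```python
import ipaddress


def plan_clients(subnet, count, first_host=2, prefix="oscarnode"):
    """Return (hostname, ip) pairs, numbering nodes in physical order.

    Hypothetical helper: the subnet, name prefix, and starting offset
    are assumptions, not values taken from OSCAR.
    """
    hosts = list(ipaddress.ip_network(subnet).hosts())
    # first_host is 1-based among usable addresses, so the default of 2
    # leaves the first usable address free for the head node.
    start = first_host - 1
    if start + count > len(hosts):
        raise ValueError("subnet too small for the requested number of clients")
    return [(f"{prefix}{i + 1}", str(hosts[start + i])) for i in range(count)]


if __name__ == "__main__":
    for name, ip in plan_clients("10.0.0.0/24", 4):
        print(name, ip)
```

Planning the list up front makes it easier to spot a range that is too small before node installation starts.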

Define Clients

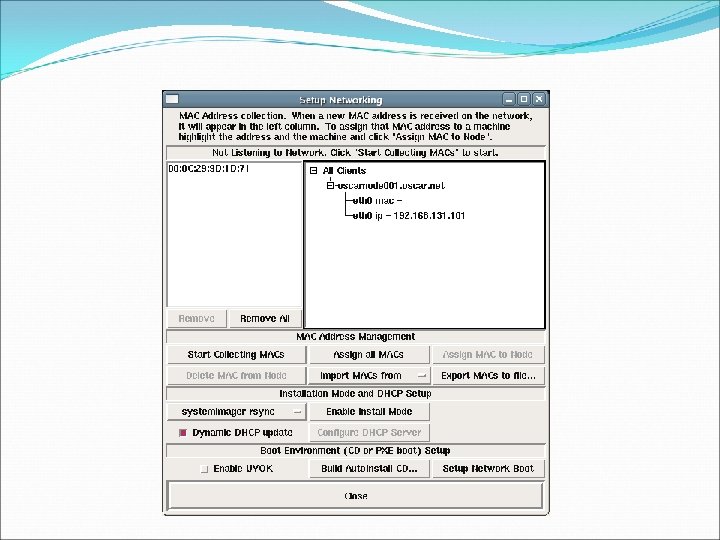

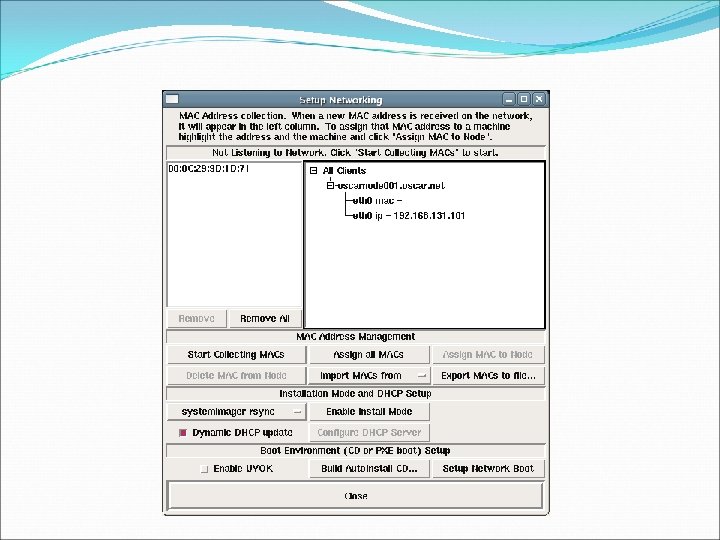

Setup Networking
- SIS – System Installation Suite
  - SystemImager
- Client MAC addresses are collected by scanning the network
- Each MAC address must be linked to a node
- A network boot method must be selected (rsync, multicast, BitTorrent)
- Clients must support PXE boot; otherwise, boot CDs must be created
- A custom kernel can be used if the one supplied with SIS does not work

Client Installation and Test
- After the network is properly configured, installation can begin
- All nodes are installed and rebooted
- Once the system imaging is complete, a test can be run to ensure the cluster is working properly
- At this point, the cluster is ready to begin parallel job scheduling

Operation
- Admin packages:
  - Torque Resource Manager
  - Maui Scheduler
  - C3
  - pfilter
  - System Imager Suite
  - Switcher Environment Manager
  - OPIUM
  - Ganglia

Operation
- Library packages:
  - LAM/MPI
  - Open MPI
  - MPICH
  - PVM

Torque Resource Manager
- Server runs on the head node
- "mom" daemon runs on the clients
- Handles job submission and execution
- Keeps track of cluster resources
- Has its own scheduler, but OSCAR uses Maui by default
- Commands are not intuitive; the documentation must be read
- Derived from OpenPBS
- http://svn.oscar.openclustergroup.org/wiki/oscar:5.1:administration_guide:ch4.1.1_torque_overview
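Because the Torque commands are not intuitive, it can help to see what a minimal job script looks like. The Python sketch below only builds the text of such a script; the `make_pbs_script` helper, the job name, and the `./hello` program are hypothetical, while the `#PBS -N`, `#PBS -l nodes=...:ppn=...` and `#PBS -l walltime=...` directives and the `PBS_O_WORKDIR` variable are standard Torque/PBS conventions.

```python
def make_pbs_script(job_name, nodes, ppn, walltime, command):
    """Build the text of a minimal Torque/PBS job script.

    Hypothetical helper for illustration; it does not submit anything.
    """
    lines = [
        "#!/bin/sh",
        f"#PBS -N {job_name}",               # job name shown in the queue
        f"#PBS -l nodes={nodes}:ppn={ppn}",  # nodes and processors per node
        f"#PBS -l walltime={walltime}",      # maximum run time (HH:MM:SS)
        "cd $PBS_O_WORKDIR",                 # directory the job was submitted from
        command,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # "./hello" is a placeholder for a real MPI program.
    print(make_pbs_script("hello", 2, 2, "00:10:00", "mpirun -np 4 ./hello"))
```

The resulting text would typically be saved to a file and handed to Torque's `qsub` command on the head node.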

Maui Scheduler
- Handles job scheduling
- Sophisticated algorithms
- Customizable
- Much literature exists on its algorithms
- A commercial next generation of Maui, called Moab, is available
- Accepted as the unofficial HPC standard for scheduling
- http://www.clusterresources.com/pages/resources/documentation.php

C3 - Cluster Command Control
- Developed by ORNL
- Collection of tools for cluster administration
- Commands:
  - cget, cpush, crm, cpushimage
  - cexec, cexecs, ckill, cshutdown
  - cnum, cname, clist
- Cluster configuration files
- http://svn.oscar.openclustergroup.org/wiki/oscar:5.1:administration_guide:ch4.3.1_c3_overview
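Cluster configuration files typically name compute nodes with a bracketed range such as `node[1-64]` rather than listing each host. The Python sketch below shows how such an entry expands into individual host names. It assumes that bracketed notation; the `expand_nodes` helper is hypothetical and is not the C3 parser itself.

```python
import re

# Matches entries like "oscarnode[1-4]": a name prefix plus a numeric range.
_RANGE = re.compile(r"^(?P<prefix>[^\[\]]+)\[(?P<start>\d+)-(?P<end>\d+)\]$")


def expand_nodes(entry):
    """Expand a ranged node entry into a list of host names.

    Hypothetical helper; entries without a range are returned unchanged.
    """
    entry = entry.strip()
    match = _RANGE.match(entry)
    if match is None:
        return [entry]
    start, end = int(match["start"]), int(match["end"])
    if start > end:
        raise ValueError(f"invalid range in {entry!r}")
    return [f"{match['prefix']}{i}" for i in range(start, end + 1)]


if __name__ == "__main__":
    print(expand_nodes("oscarnode[1-4]"))
```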

pfilter
- Cluster traffic filter
- By default, client nodes can only send outgoing communications to hosts outside the cluster
- To open up client nodes, the pfilter configuration file must be modified

System Imager Suite
- Tool for network-based Linux installations
- Image based; you can even chroot into an image
- Also has a database that contains cluster configuration information
- Tied in with C3
- Can handle multiple images per cluster
- Completely automated once the image is created
- http://wiki.systemimager.org/index.php/Main_Page

Switcher Environment Manager
- Handles "dot" files
- Does not limit advanced users
- Designed to help non-savvy users
- Has guards in place that prevent system destruction
- Selects which MPI implementation to use on a per-user basis
- Operates on two levels: user and system
- The Modules package is included for advanced users (and is used by Switcher)

OPIUM
- Login is handled by the head node
- Once a connection is established, client nodes do not require authentication
- Synchronization is run by root at intervals
- It stores hash values of the password in the .ssh folder, along with a "salt"
- Password changes must be made on the head node, as all changes propagate from there
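The idea of storing a password hash together with a "salt" can be illustrated with Python's standard library. This is a generic sketch of salted hashing, not the scheme OPIUM actually uses: the PBKDF2 algorithm, the iteration count, and the helper names are all assumptions chosen for the example.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor for this illustration


def hash_password(password, salt=None):
    """Return (salt, digest) for a password, generating a random salt if needed."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Recompute the hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)


if __name__ == "__main__":
    salt, digest = hash_password("secret")
    print(verify_password("secret", salt, digest))
    print(verify_password("wrong", salt, digest))
```

Only the salt and the digest need to be distributed to other nodes; the plain-text password never has to leave the machine where it was entered.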

Ganglia
- Distributed monitoring system
- Low overhead per node
- XML for data representation
- Robust
- Used in most cluster and grid solutions
- http://ganglia.info/papers/science.pdf
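Since Ganglia represents its data as XML, monitoring output can be read with any XML parser. The Python sketch below parses a small, simplified sample modeled on the cluster/host/metric layout of Ganglia's XML; the host names, addresses, and metric values are invented for illustration, and the `metrics_by_host` helper is hypothetical.

```python
import xml.etree.ElementTree as ET

# Simplified, made-up sample; real output carries many more attributes.
SAMPLE = """<GANGLIA_XML VERSION="3.0.0" SOURCE="gmond">
  <CLUSTER NAME="oscar_cluster">
    <HOST NAME="oscarnode1" IP="10.0.0.2">
      <METRIC NAME="load_one" VAL="0.12" TYPE="float" UNITS=""/>
      <METRIC NAME="mem_free" VAL="512000" TYPE="uint32" UNITS="KB"/>
    </HOST>
    <HOST NAME="oscarnode2" IP="10.0.0.3">
      <METRIC NAME="load_one" VAL="1.50" TYPE="float" UNITS=""/>
    </HOST>
  </CLUSTER>
</GANGLIA_XML>"""


def metrics_by_host(xml_text):
    """Return {host name: {metric name: value string}} from the XML text."""
    root = ET.fromstring(xml_text)
    result = {}
    for host in root.iter("HOST"):
        result[host.get("NAME")] = {
            metric.get("NAME"): metric.get("VAL")
            for metric in host.findall("METRIC")
        }
    return result


if __name__ == "__main__":
    for host, metrics in metrics_by_host(SAMPLE).items():
        print(host, metrics)
```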

LAM/MPI
- LAM - Local Area Multicomputer
- LAM initializes the runtime environment on a select number of nodes
- Supports MPI-1 and some of MPI-2
- MPICH2 can be used if installed
- A two-tiered debugging system exists: snapshot and communication log
- Daemon based
- http://www.lam-mpi.org/

Open MPI
- Replacement for LAM/MPI
- Same team working on it
- LAM/MPI is relegated to upkeep only; all new development happens in Open MPI
- Much more robust (OS, schedulers)
- Full MPI-2 compliance
- Much higher performance
- http://www.open-mpi.org/

PVM – Parallel Virtual Machine
- Serves the same purpose as LAM/MPI
- Can be run outside the scope of Torque and Maui
- Supports Windows nodes as well
- Much better portability
- Not as robust and powerful as Open MPI
- http://www.csm.ornl.gov/pvm/

Spin-offs
- HA-OSCAR - http://xcr.cenit.latech.edu/ha-oscar/
- VMware with OSCAR - http://www.vmware.com/vmtn/appliances/directory/341
- SSI-OSCAR - http://ssi-oscar.gforge.inria.fr/
- SSS-OSCAR - http://www.csm.ornl.gov/oscar/sss/

Conclusions
- Future direction
  - Open MPI
  - Windows, Mac OS?