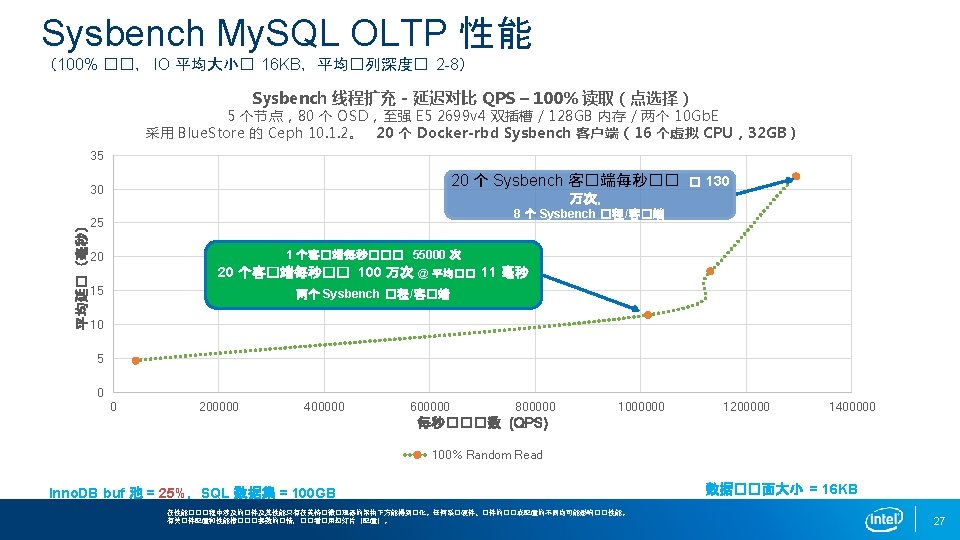

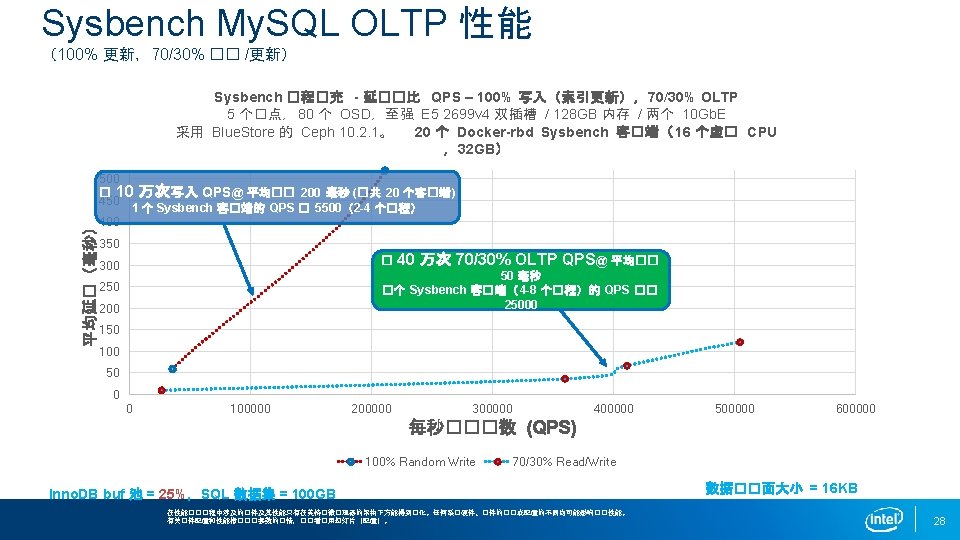


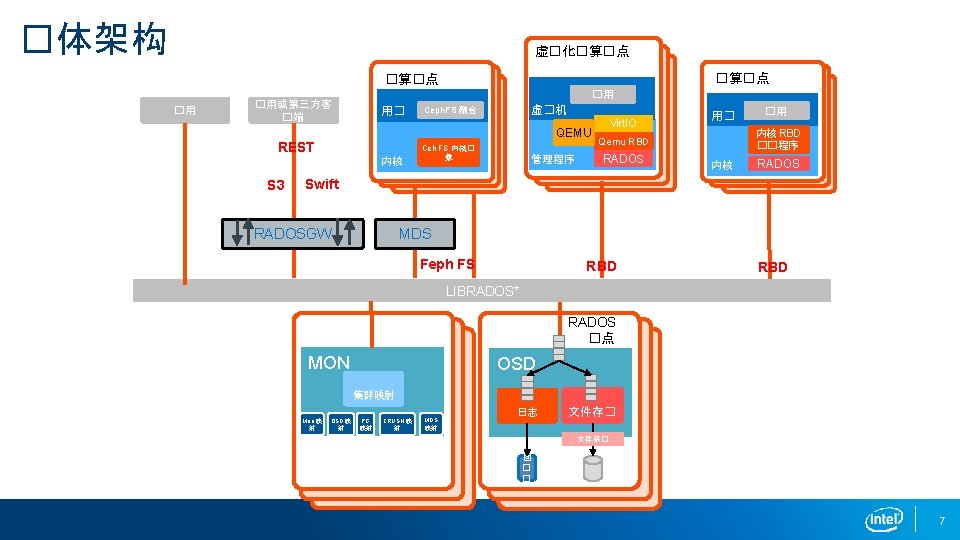

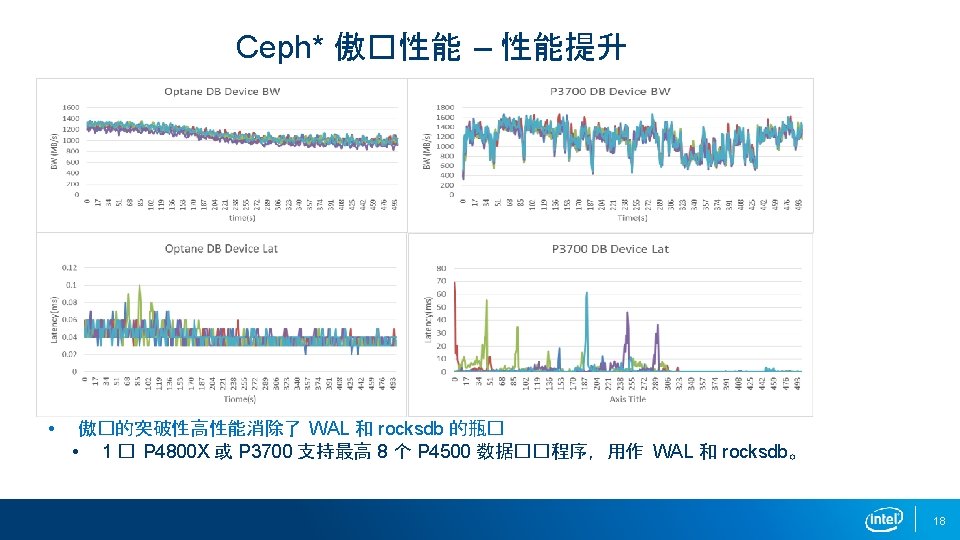

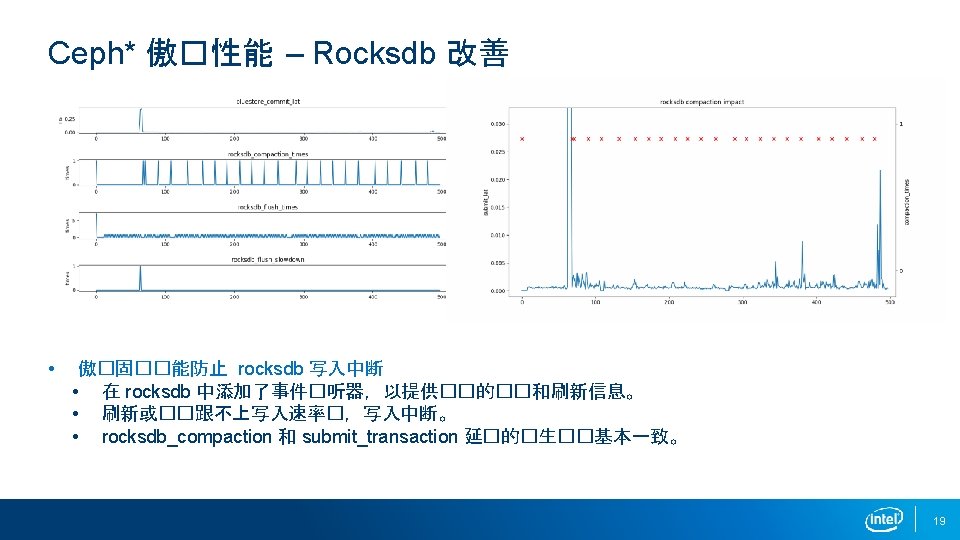

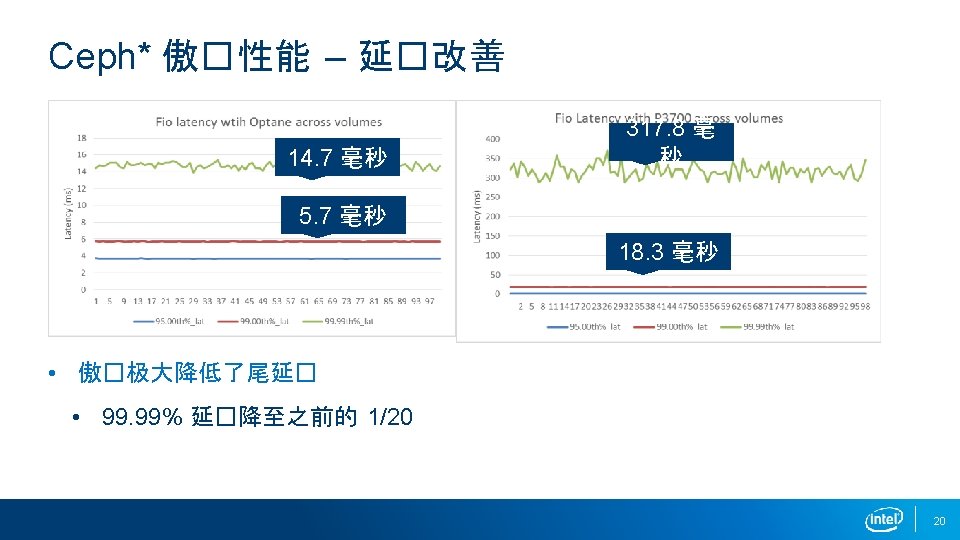

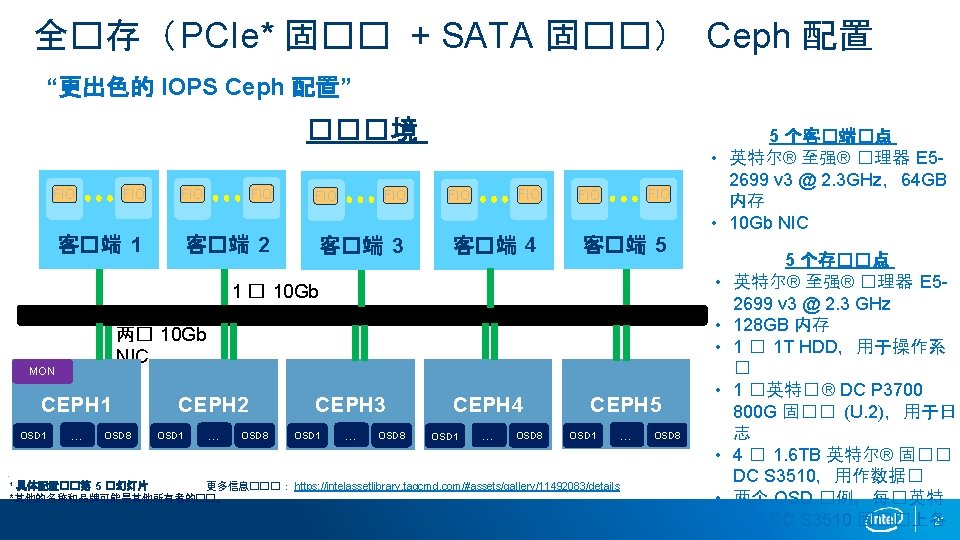

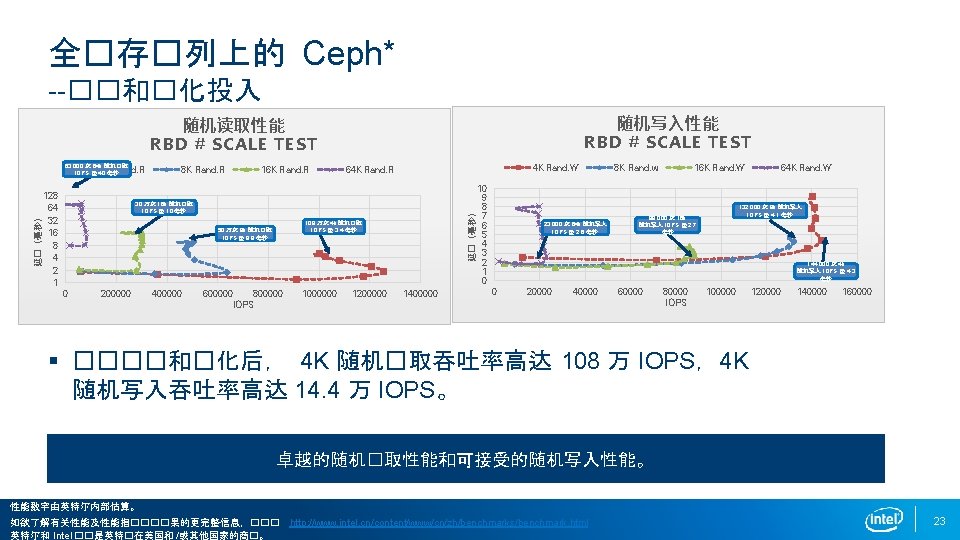

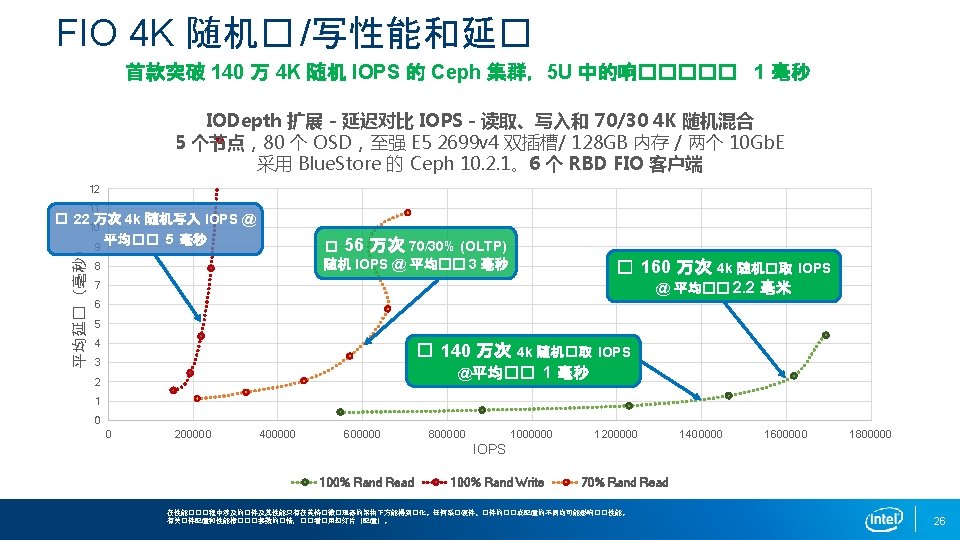

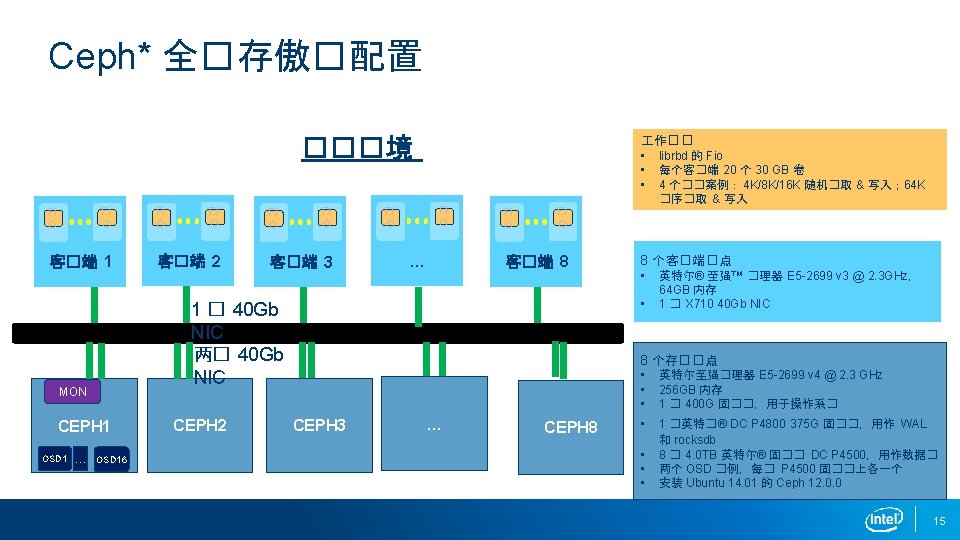

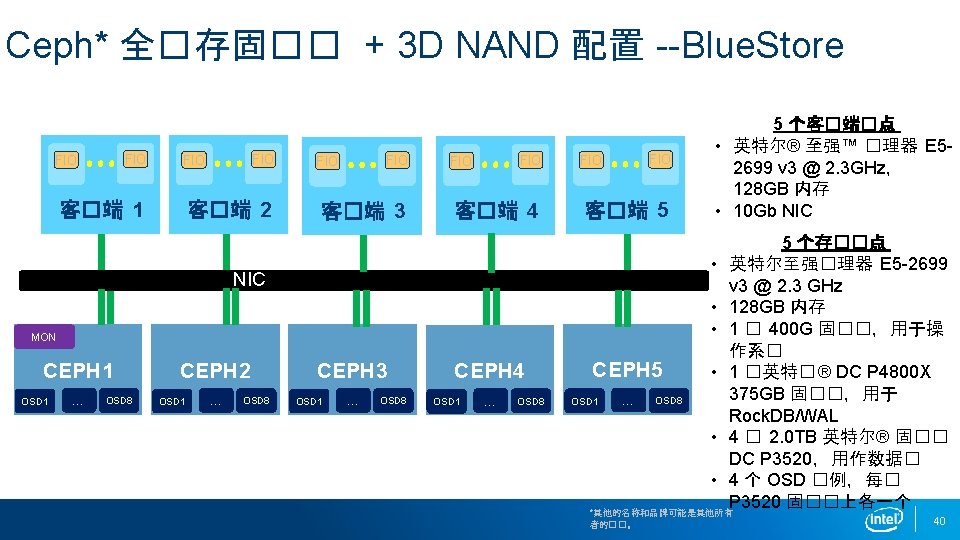

![Ceph* all-flash configuration, global section of ceph.conf](https://slidetodoc.com/presentation_image/39134fa8fd0ead708b3b1e64919d2c2b/image-46.jpg)

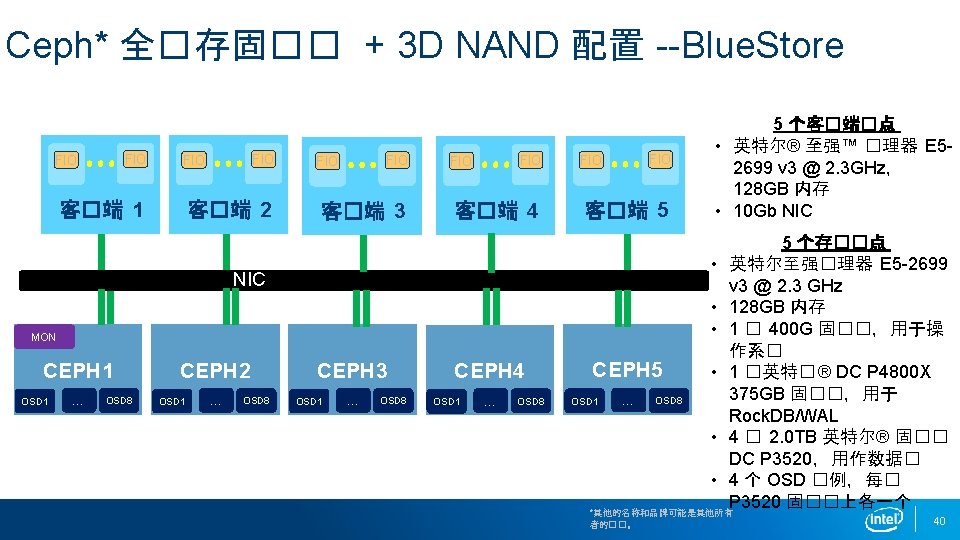

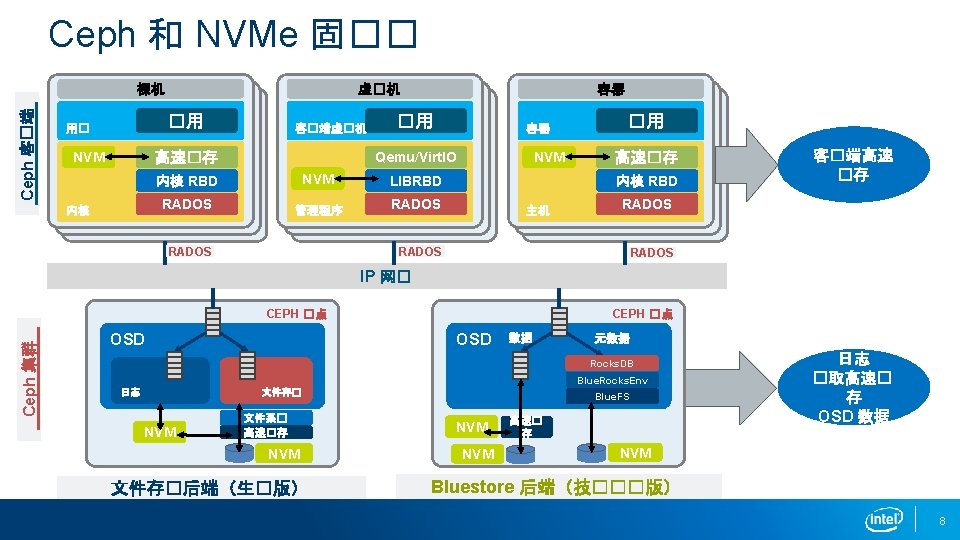

- Slides: 47

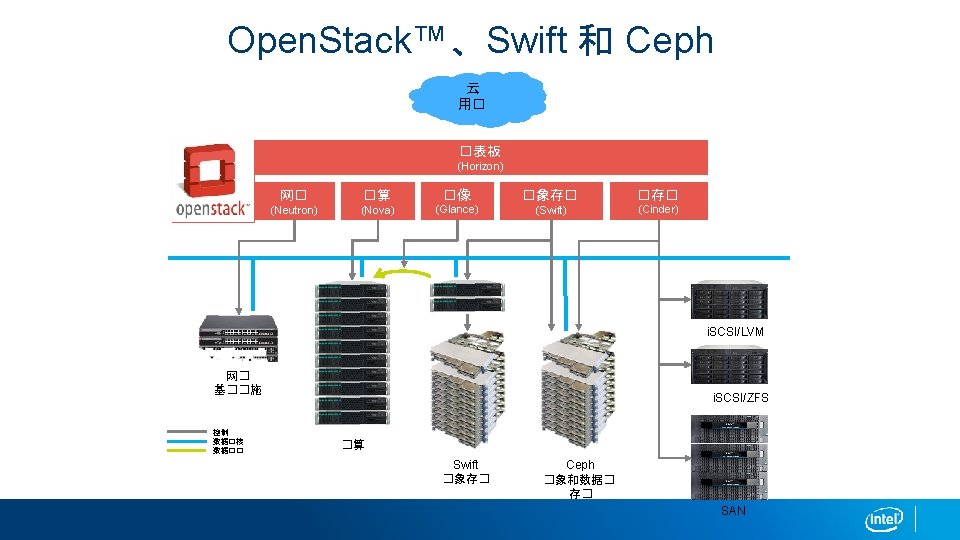

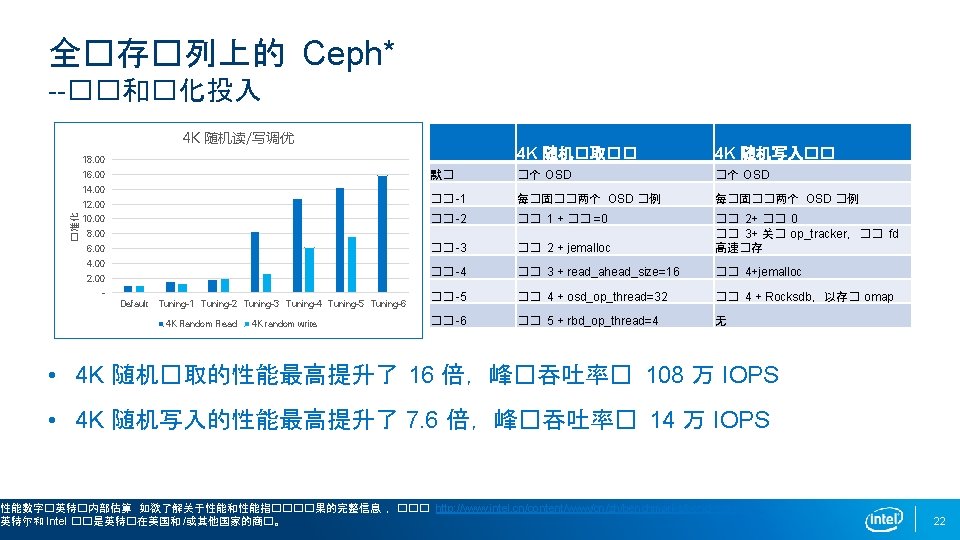

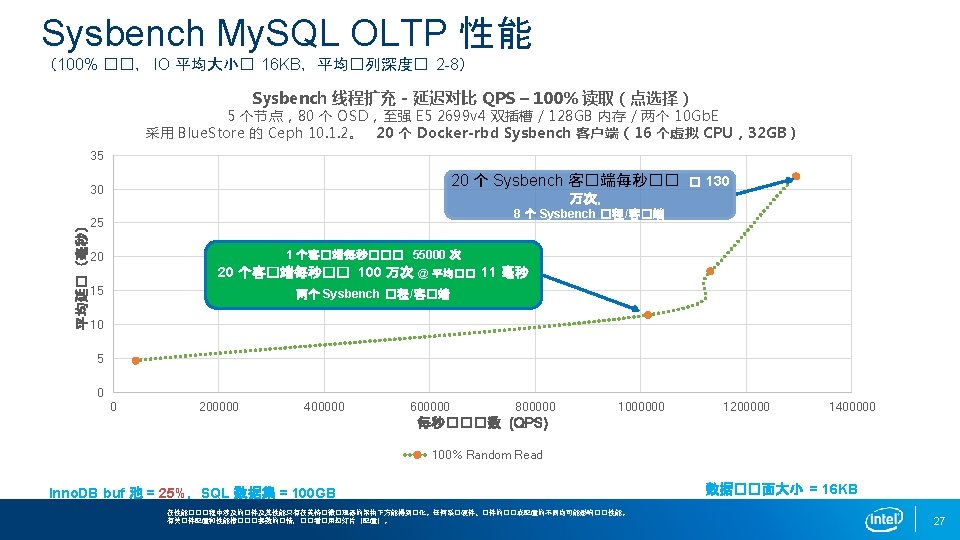

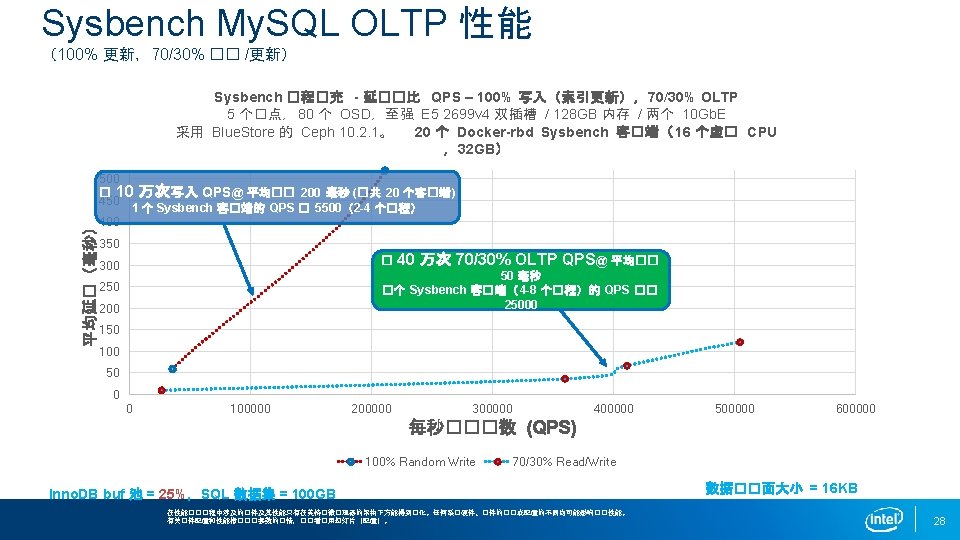

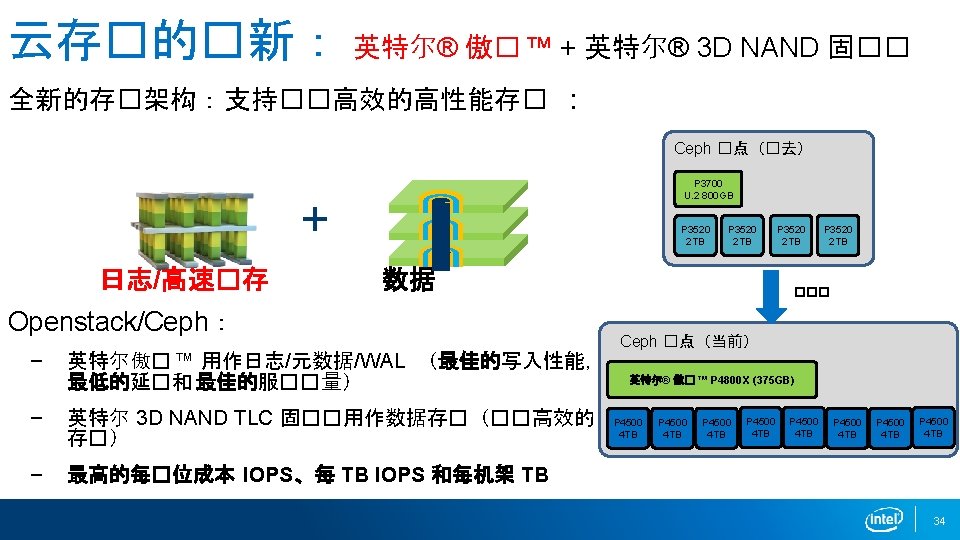

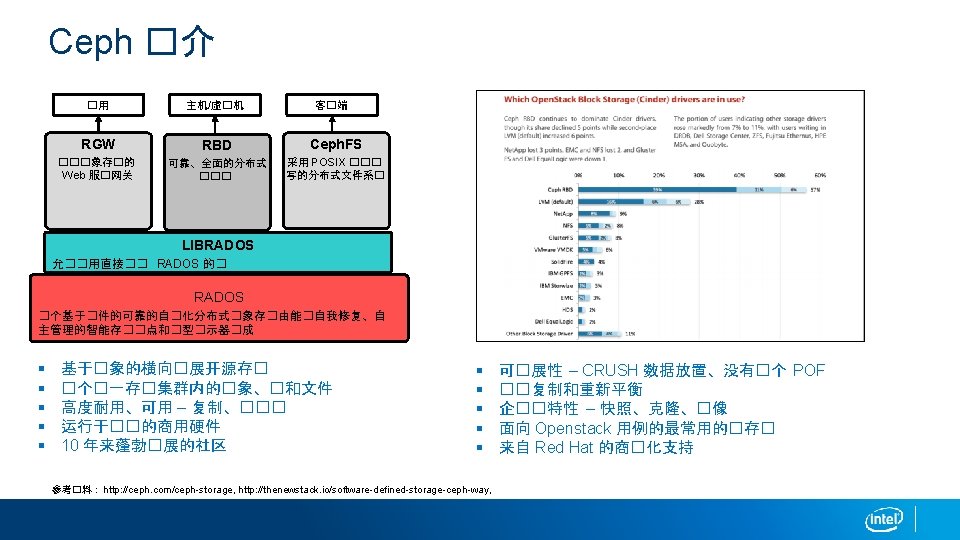

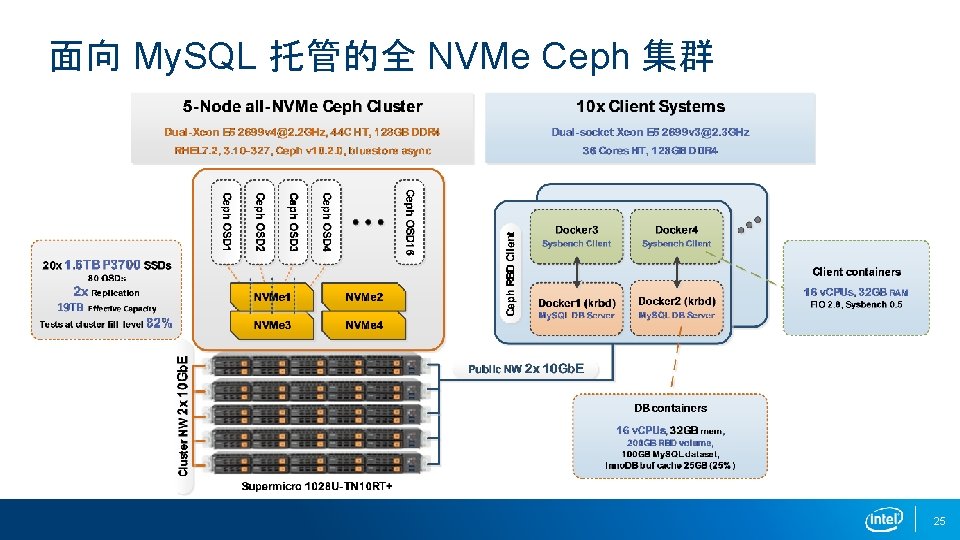

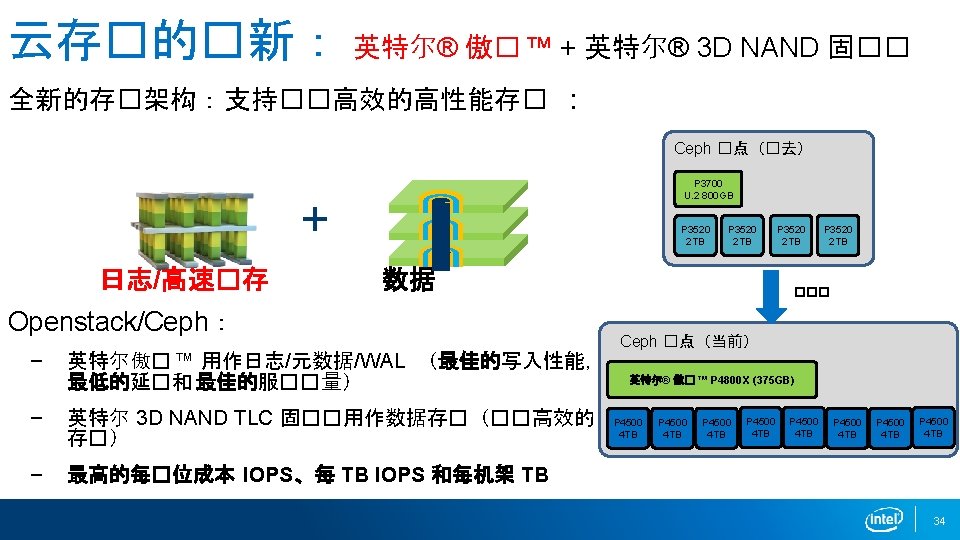

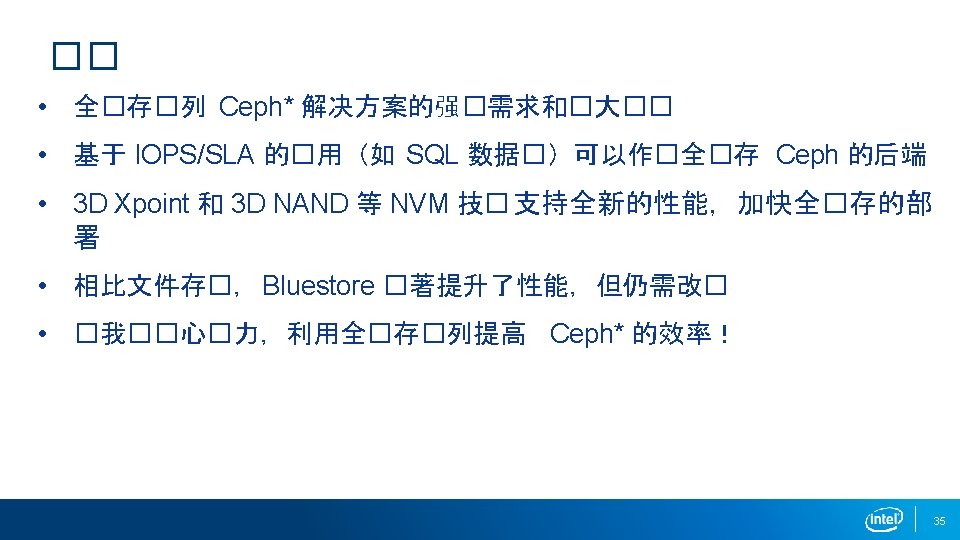

All-NVMe Ceph cluster for MySQL hosting

Available reference architectures (solutions)

- http://www.redhat.com/en/files/resources/en-rhst-cephstorage-supermicro-INC0270868_v2_0715.pdf
- http://www.qct.io/account/download?order_download_id=1065&dtype=Reference%20Architecture
- https://www.redhat.com/en/resources/red-hat-ceph-storage-hardware-configuration-guide
- https://www.percona.com/resources/videos/accelerating-ceph-database-workloads-all-pcie-ssd-cluster
- https://www.percona.com/resources/videos/mysql-cloud-head-performance-lab
- https://www.thomas-krenn.com/en/products/storage-systems/suse-enterprise-storage/ses-appliance-performance.html
- https://intelassetlibrary.tagcmd.com/#assets/gallery/11492083


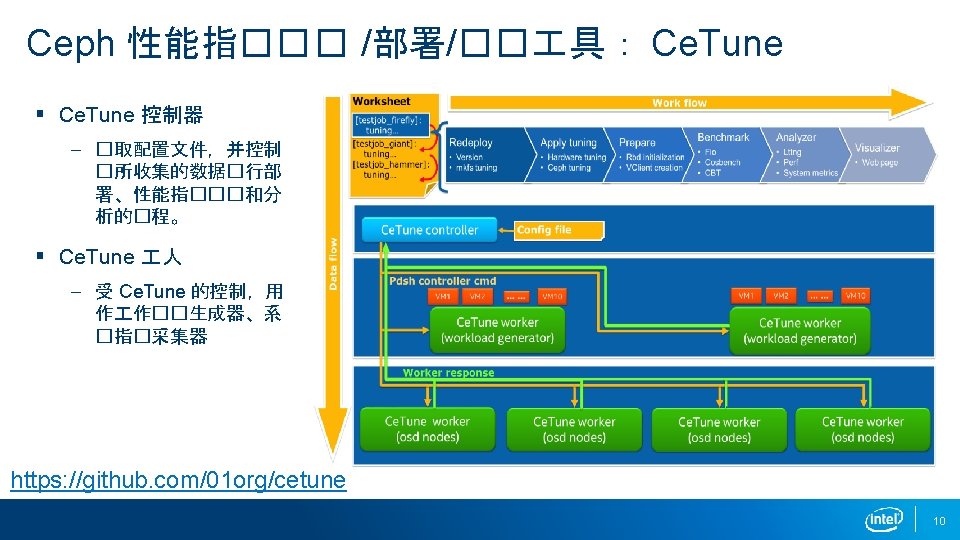

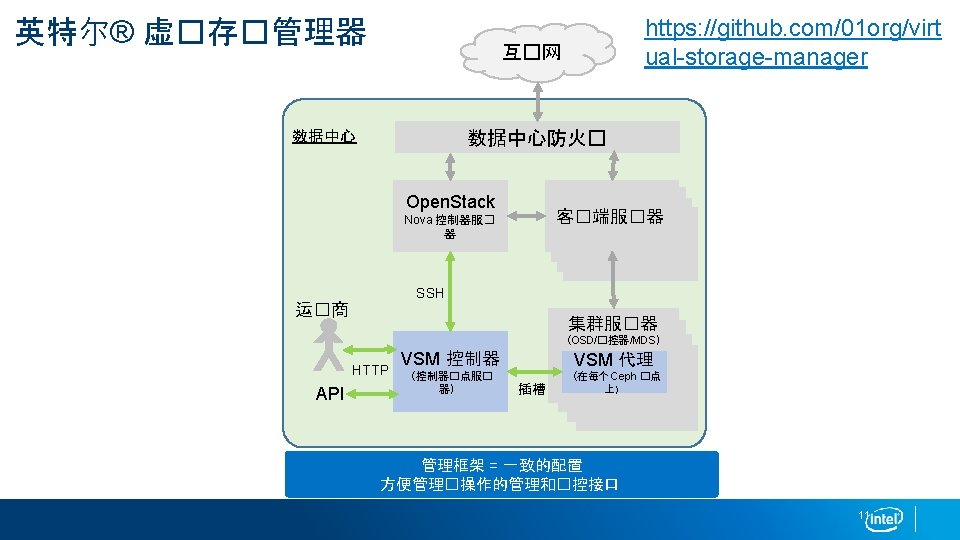

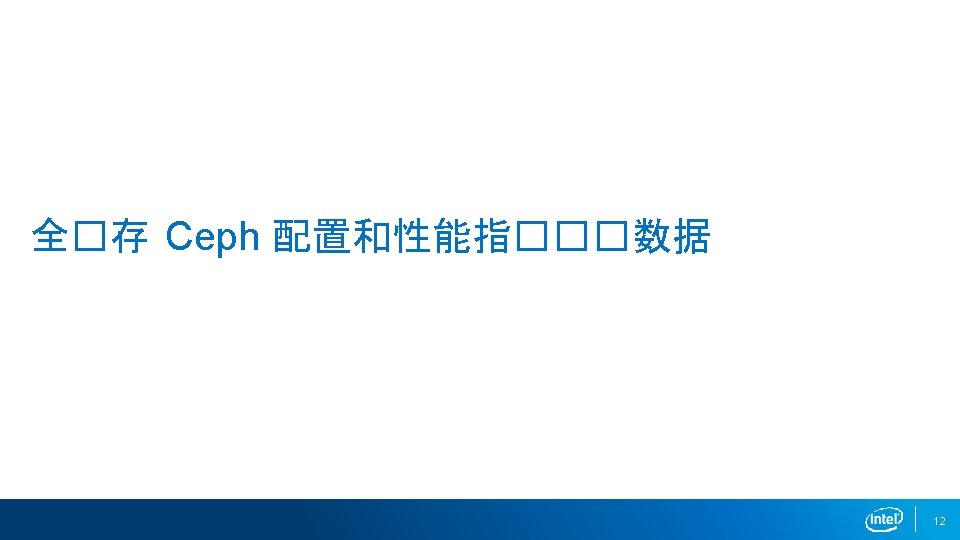

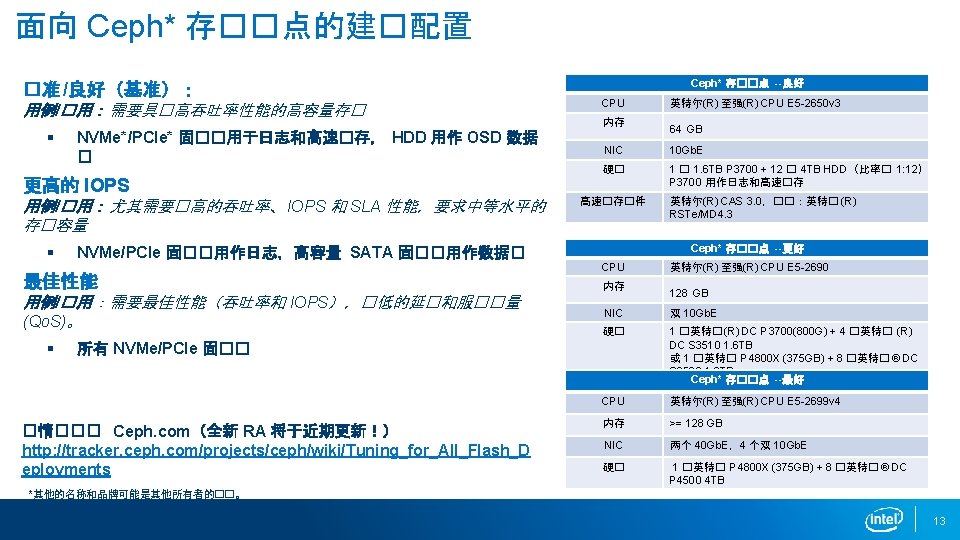

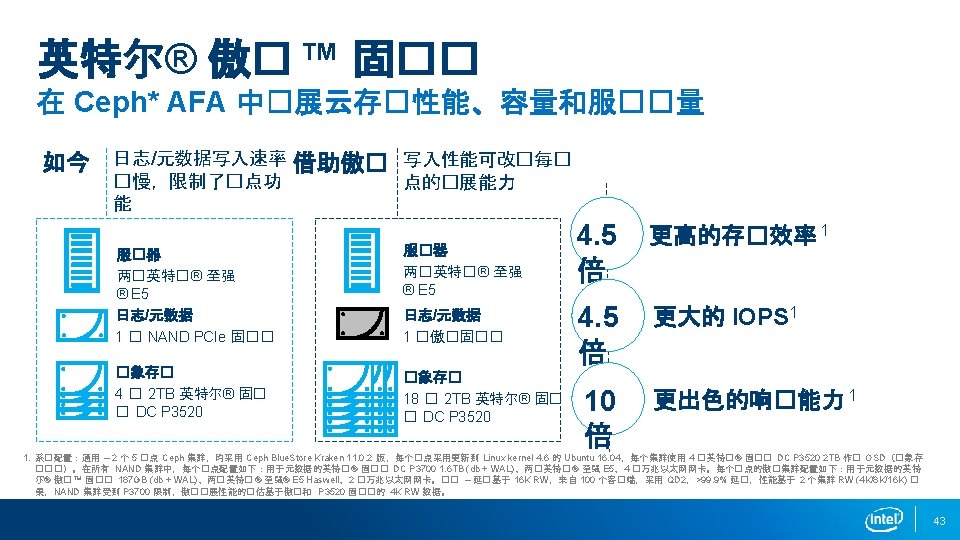

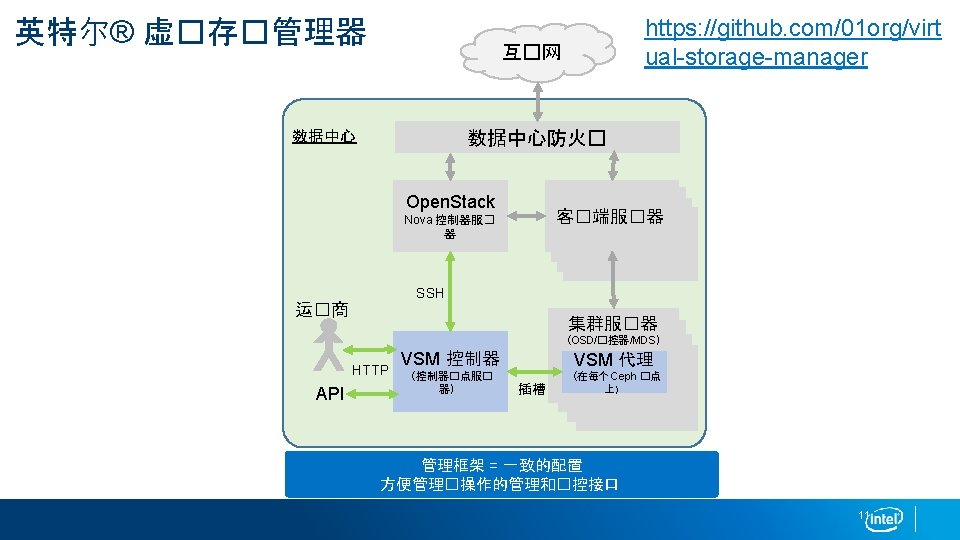

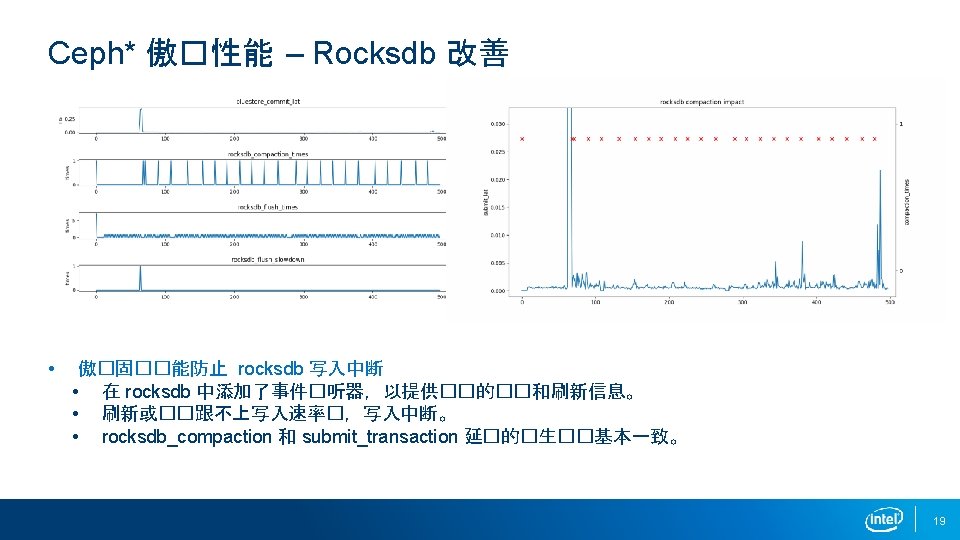

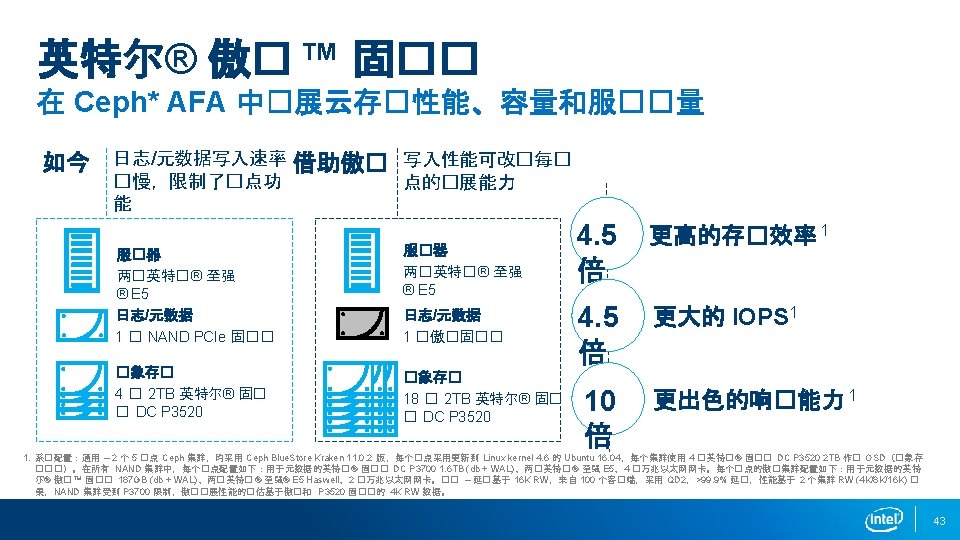

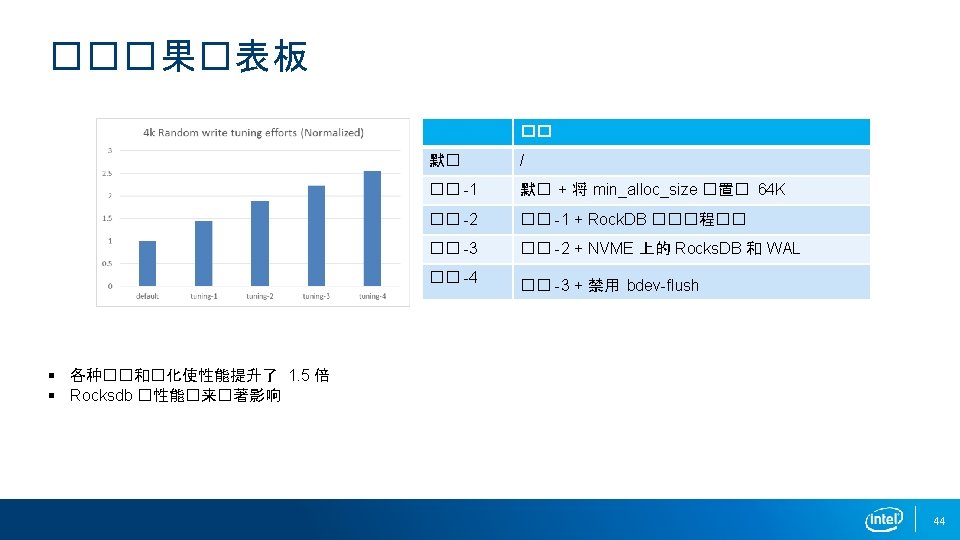

Ceph* all-flash configuration

    [global]
    debug paxos = 0/0
    debug journal = 0/0
    debug mds_balancer = 0/0
    debug mds = 0/0
    mon_pg_warn_max_per_osd = 10000
    debug lockdep = 0/0
    debug auth = 0/0
    debug mds_log = 0/0
    debug mon = 0/0
    debug perfcounter = 0/0
    debug monc = 0/0
    debug rbd = 0/0
    debug throttle = 0/0
    debug mds_migrator = 0/0
    debug client = 0/0
    debug rgw = 0/0
    debug finisher = 0/0
    debug journaler = 0/0
    debug ms = 0/0
    debug hadoop = 0/0
    debug mds_locker = 0/0
    debug tp = 0/0
    debug context = 0/0
    debug osd = 0/0
    debug bluestore = 0/0
    debug objclass = 0/0
    debug objecter = 0/0
    debug log = 0
    debug filer = 0/0
    debug mds_log_expire = 0/0
    debug crush = 0/0
    debug optracker = 0/0
    debug rados = 0/0
    debug heartbeatmap = 0/0
    debug buffer = 0/0
    debug asok = 0/0
    debug objectcacher = 0/0
    debug filestore = 0/0
    debug timer = 0/0
    mutex_perf_counter = True
    rbd_cache = False
    ms_crc_header = False
    ms_crc_data = False
    osd_pool_default_pgp_num = 32768
    osd_pool_default_size = 2
    rbd_op_threads = 4
    cephx require signatures = False
    cephx sign messages = False
    osd_pool_default_pg_num = 32768
    throttler_perf_counter = False
    auth_service_required = none
    auth_cluster_required = none
    auth_client_required = none
    osd_mount_options_xfs = rw,noatime,inode64,logbsize=256k,delaylog
    osd_mkfs_type = xfs
    filestore_queue_max_ops = 5000
    osd_client_message_size_cap = 0
    objecter_inflight_op_bytes = 1048576000
    ms_dispatch_throttle_bytes = 1048576000
    osd_mkfs_options_xfs = -f -i size=2048
    filestore_wbthrottle_enable = True
    filestore_fd_cache_shards = 64
    objecter_inflight_ops = 1024000
    filestore_queue_committing_max_bytes = 1048576000
    osd_op_num_threads_per_shard = 2
    filestore_queue_max_bytes = 10485760000
    osd_op_threads = 32
    osd_op_num_shards = 16
    filestore_max_sync_interval = 10
    filestore_op_threads = 16
    osd_pg_object_context_cache_count = 10240
    journal_queue_max_ops = 3000
    journal_queue_max_bytes = 10485760000
    journal_max_write_entries = 1000
    filestore_queue_committing_max_ops = 5000
    journal_max_write_bytes = 1048576000
    osd_enable_op_tracker = False
    filestore_fd_cache_size = 10240
    osd_client_message_cap = 0
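A tuning profile like the `[global]` settings above is easy to get subtly wrong when copied by hand, so a quick sanity check can help. The following is a minimal sketch, not part of the original slides: it uses Python's standard `configparser` to load a small excerpt of the settings, reports any `debug` option that is not fully silenced, and estimates PG replicas per OSD for a single pool created with the default values. The helper names (`load_global`, `noisy_debug_options`, `pg_replicas_per_osd`) and the OSD count of 64 are hypothetical; the slides do not state the number of OSDs.

```python
import configparser

# Excerpt of the [global] section from the slide (not the full profile).
SAMPLE = """\
[global]
debug paxos = 0/0
debug osd = 0/0
debug log = 0
osd_pool_default_size = 2
osd_pool_default_pg_num = 32768
mon_pg_warn_max_per_osd = 10000
osd_mount_options_xfs = rw,noatime,inode64,logbsize=256k,delaylog
"""


def load_global(text):
    """Parse ceph.conf-style text and return the [global] options as a dict."""
    # interpolation=None keeps any '%' in values from being interpreted.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return dict(parser["global"])


def noisy_debug_options(settings):
    """Return the debug options whose log or in-memory level is not 0."""
    noisy = {}
    for key, value in settings.items():
        # Ceph accepts spaces and underscores interchangeably in option names.
        if key.replace("_", " ").startswith("debug "):
            if any(int(level) != 0 for level in value.split("/")):
                noisy[key] = value
    return noisy


def pg_replicas_per_osd(settings, num_osds):
    """Estimate PG replicas per OSD for ONE pool created with the defaults."""
    pg_num = int(settings["osd_pool_default_pg_num"])
    size = int(settings["osd_pool_default_size"])
    return pg_num * size / num_osds


settings = load_global(SAMPLE)
print("non-zero debug options:", noisy_debug_options(settings))

# Assumption: 64 OSDs, chosen only for illustration.
per_osd = pg_replicas_per_osd(settings, num_osds=64)
limit = int(settings["mon_pg_warn_max_per_osd"])
print(f"PG replicas per OSD: {per_osd:.0f} (warning threshold {limit})")
```

Under that assumed OSD count, one default pool gives 32768 × 2 / 64 = 1024 PG replicas per OSD, which is well above commonly recommended densities and is presumably why the profile raises `mon_pg_warn_max_per_osd` to 10000.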