CostPI: Cost-Effective Performance Isolation for Shared NVMe SSDs

- Slides: 35

CostPI: Cost-Effective Performance Isolation for Shared NVMe SSDs

Jiahao Liu, Fang Wang, Dan Feng

WNLO, KLISS, Ministry of Education of China; School of Computer Science and Technology, HUST

ICPP 2019: Proceedings of the 48th International Conference on Parallel Processing

Outline

- Introduction
- Background and Motivation
- Design
- Evaluation
- Conclusion

Introduction(1/2)

- NVMe SSDs have been widely adopted to provide storage services in cloud platforms where diverse workloads are colocated.
- To achieve performance isolation, existing solutions partition the shared SSD into multiple isolated regions and assign each workload a separate region.
- However, these isolation solutions can result in inefficient resource utilization and imbalanced wear.

Introduction(2/2)

- In this paper, we present CostPI, which improves isolation and resource utilization by providing:
  1. latency-sensitive workloads with dedicated resources;
  2. throughput-oriented and capacity-oriented workloads with shared resources.


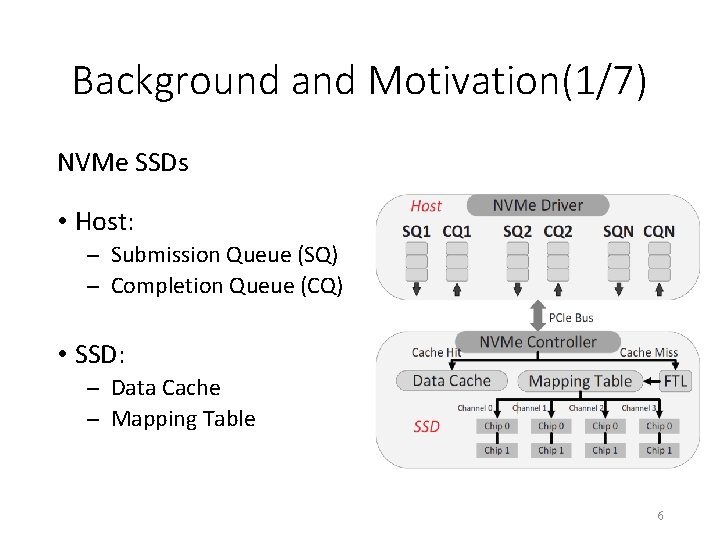

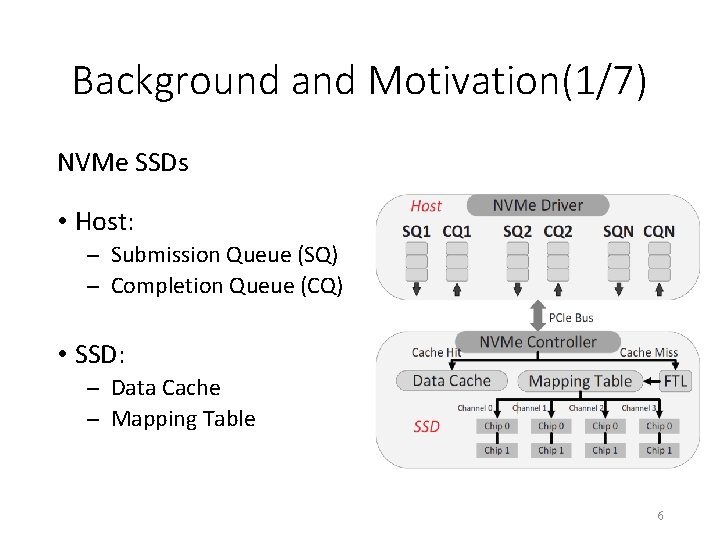

Background and Motivation(1/7)

NVMe SSDs

- Host: Submission Queue (SQ), Completion Queue (CQ)
- SSD: Data Cache, Mapping Table
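The host-side queue pair named above can be pictured as two queues: the host places commands in the SQ, and the SSD consumes them and posts results to the CQ. The following is a minimal sketch only. The class and method names (`QueuePair`, `submit`, `device_process`, `reap`) are hypothetical, and real NVMe details such as doorbells, phase bits, and fixed queue depths are left out.

```python
from collections import deque

class QueuePair:
    """Toy model of one NVMe submission/completion queue pair (illustrative only)."""

    def __init__(self):
        self.sq = deque()  # submission queue: commands written by the host
        self.cq = deque()  # completion queue: entries written by the SSD

    def submit(self, cmd_id, opcode, lba):
        # Host side: enqueue a command for the device.
        self.sq.append((cmd_id, opcode, lba))

    def device_process(self):
        # Device side: fetch pending commands in order and post a completion for each.
        while self.sq:
            cmd_id, _opcode, _lba = self.sq.popleft()
            self.cq.append((cmd_id, "OK"))

    def reap(self):
        # Host side: drain the completion queue.
        done = list(self.cq)
        self.cq.clear()
        return done

qp = QueuePair()
qp.submit(1, "read", 0)
qp.submit(2, "write", 8)
qp.device_process()
completions = qp.reap()
```

In this toy model a deeper SQ simply means more commands outstanding at once, which is the role the iodepth parameter plays in the experiments on the following slides.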

Background and Motivation(2/7)

- Diverse workloads on the shared NVMe SSDs cause:
  1. Performance interference from cache contention
     a) Data cache contention
     b) Mapping table cache contention
  2. Inefficient resource utilization
  3. Wear imbalance for the shared SSD

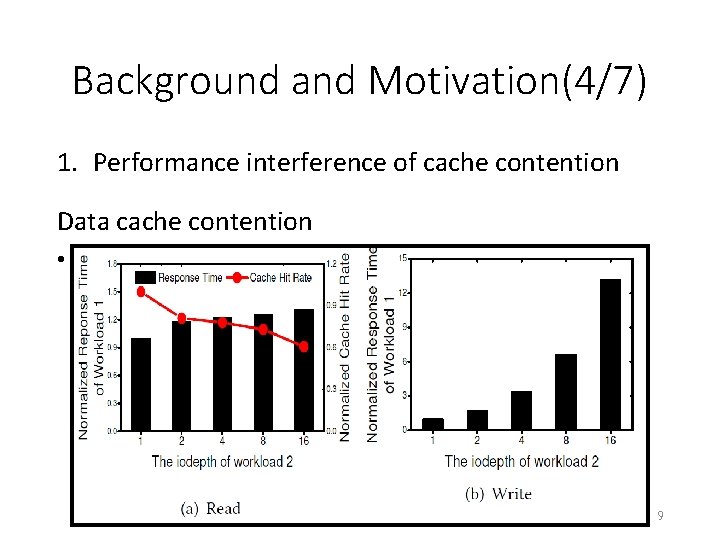

Background and Motivation(3/7)

1. Performance interference of cache contention

Experiment setup

- Use MQSim to generate two workloads; each workload has a dedicated mapping table cache.
- Isolate the workloads at the hardware level.
- Both workloads issue 4 KB random requests.

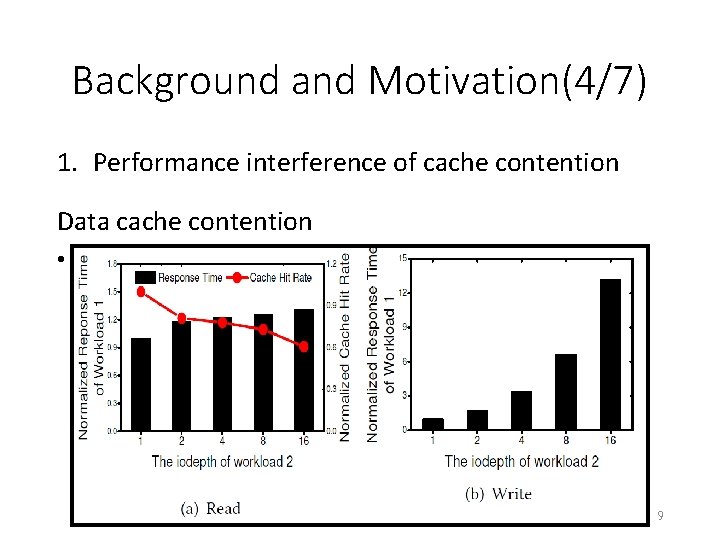

Background and Motivation(4/7)

1. Performance interference of cache contention

Data cache contention

- The iodepth:
  a) Workload 1: 1
  b) Workload 2: varies from 1 to 16

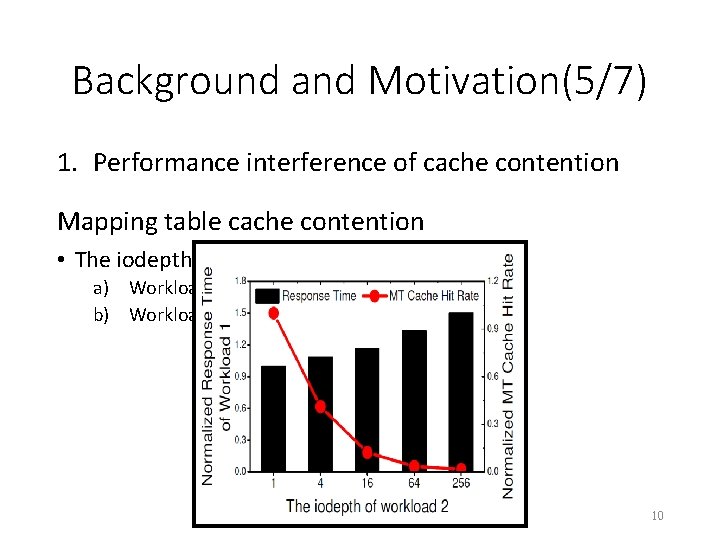

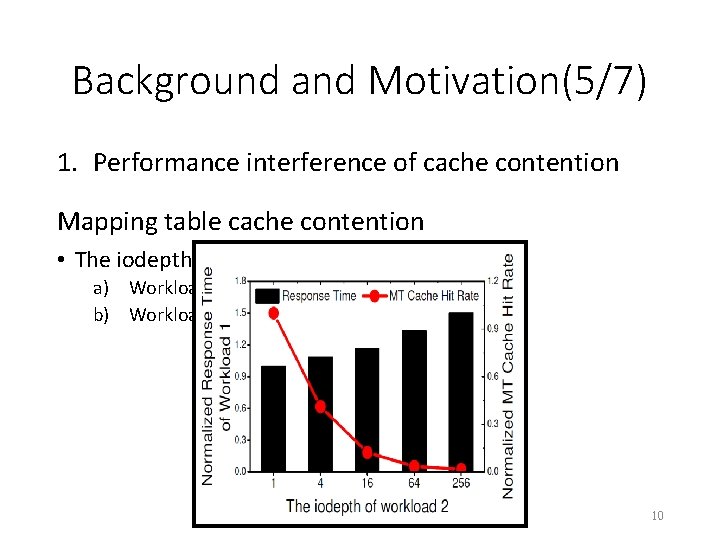

Background and Motivation(5/7)

1. Performance interference of cache contention

Mapping table cache contention

- The iodepth:
  a) Workload 1: 1
  b) Workload 2: varies from 1 to 256

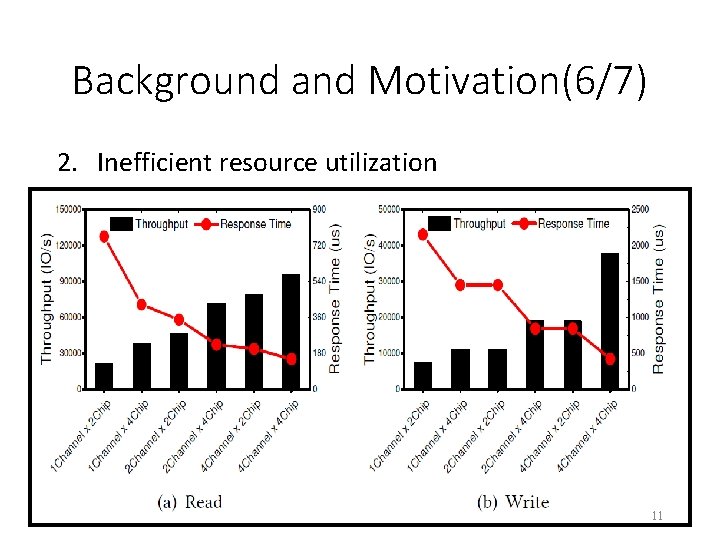

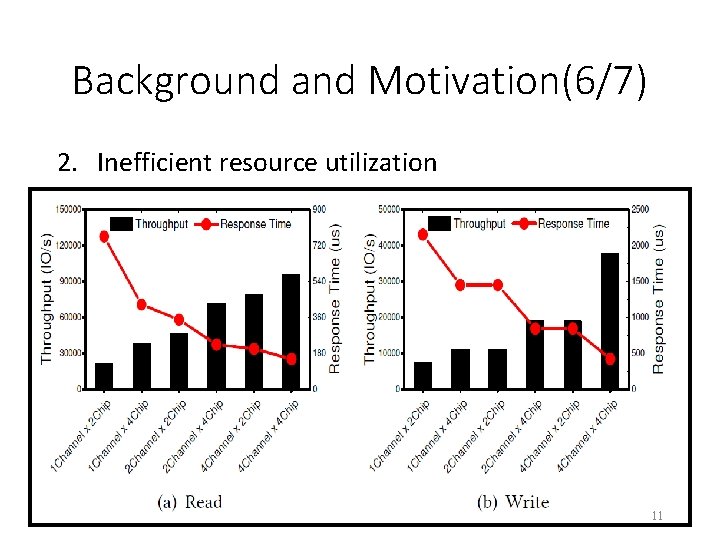

Background and Motivation(6/7)

2. Inefficient resource utilization

- Higher capacity comes with higher throughput and lower response time.
- However, physical isolation solutions bind parallelism and capacity together, so an allocation often cannot be parallelism-efficient and capacity-efficient at the same time.
- This is because the performance requirements of workloads do not always match their capacity requirements.
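A small worked example may make the binding problem concrete. The sketch below assumes a hypothetical SSD in which every channel contributes a fixed capacity and a fixed bandwidth. All numbers and names (`CHANNEL_CAPACITY_GB`, `CHANNEL_THROUGHPUT_MBPS`, `channels_needed`) are made up for illustration and are not taken from the paper.

```python
import math

CHANNEL_CAPACITY_GB = 128        # hypothetical capacity behind one channel
CHANNEL_THROUGHPUT_MBPS = 400    # hypothetical bandwidth of one channel

def channels_needed(capacity_gb, throughput_mbps):
    """Channels a workload must own when capacity and parallelism are bound together."""
    for_capacity = math.ceil(capacity_gb / CHANNEL_CAPACITY_GB)
    for_throughput = math.ceil(throughput_mbps / CHANNEL_THROUGHPUT_MBPS)
    return max(for_capacity, for_throughput), for_capacity, for_throughput

# Throughput-hungry workload with a small footprint.
fast = channels_needed(capacity_gb=64, throughput_mbps=1600)
# Capacity-hungry workload with modest throughput.
big = channels_needed(capacity_gb=512, throughput_mbps=200)

# Capacity left idle by the fast workload, in GB.
idle_capacity_gb = fast[0] * CHANNEL_CAPACITY_GB - 64
# Bandwidth left idle by the big workload, in MB/s.
idle_throughput_mbps = big[0] * CHANNEL_THROUGHPUT_MBPS - 200
```

Under these assumed numbers both workloads end up owning four channels: the first leaves 448 GB of capacity unused, and the second leaves 1400 MB/s of bandwidth unused.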

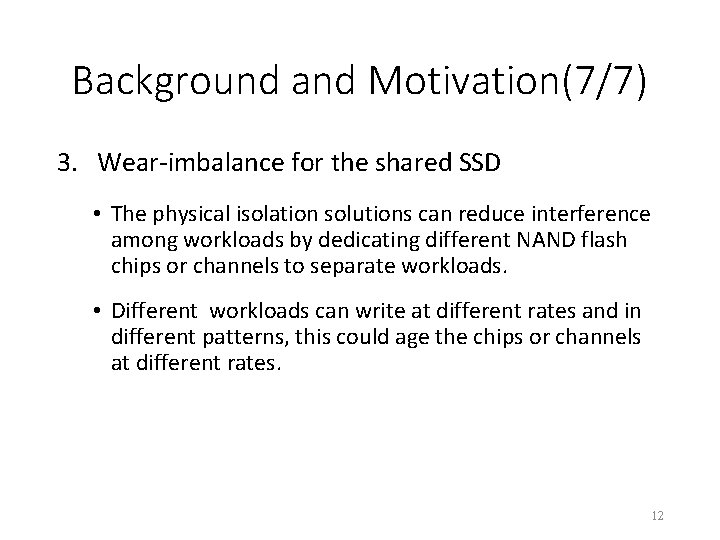

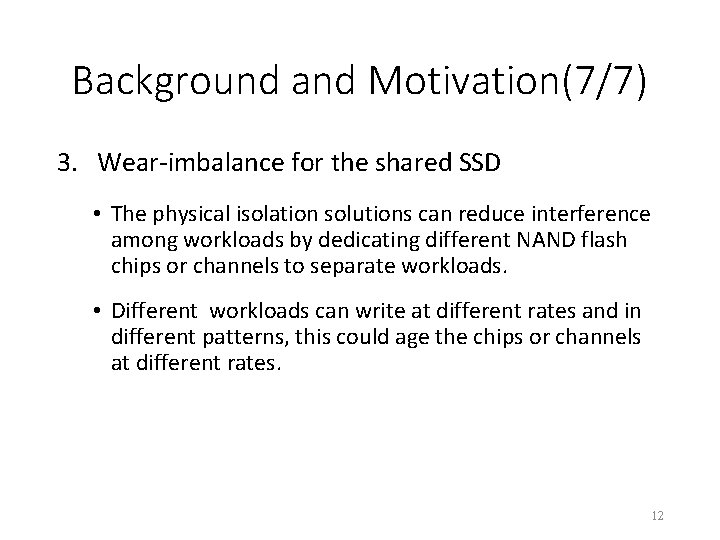

Background and Motivation(7/7)

3. Wear imbalance for the shared SSD

- Physical isolation solutions can reduce interference among workloads by dedicating different NAND flash chips or channels to separate workloads.
- Different workloads can write at different rates and in different patterns, which can age the chips or channels at different rates.
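One way to put a number on wear imbalance is the ratio of the highest erase count to the mean erase count across channels. This metric and the erase counts below are assumptions chosen for illustration; the slides do not state how the paper measures wear imbalance.

```python
def wear_imbalance(erase_counts):
    """Ratio of the most-worn unit's erase count to the mean (1.0 means perfectly even)."""
    mean = sum(erase_counts) / len(erase_counts)
    return max(erase_counts) / mean

# Hypothetical erase counts per channel after some period of use.
isolated = [9000, 9000, 1000, 1000]   # a write-heavy workload owns channels 0 and 1
shared = [5000, 5000, 5000, 5000]     # the same total writes spread over all channels

isolated_ratio = wear_imbalance(isolated)
shared_ratio = wear_imbalance(shared)
```

With these made-up counts, the isolated layout wears its busiest channels at 1.8 times the average rate, while the shared layout stays at 1.0.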


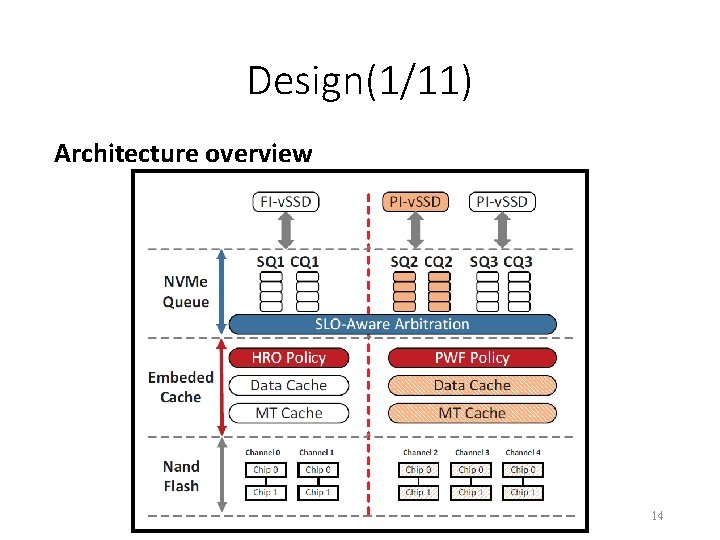

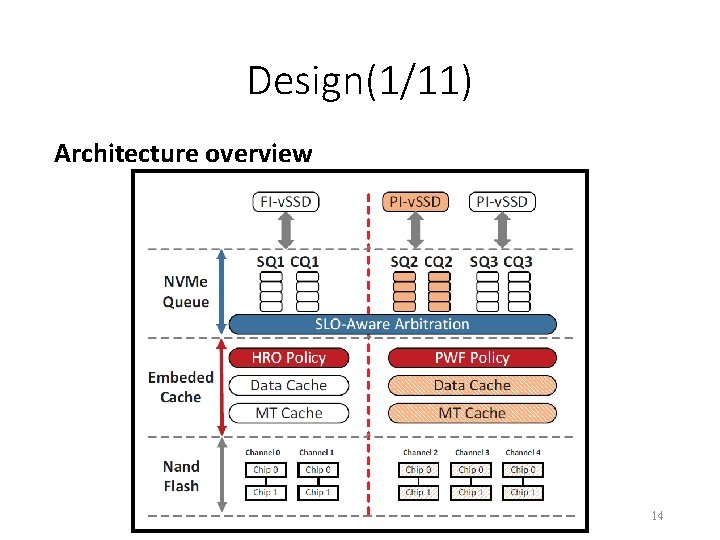

Design(1/11) Architecture overview

Design(2/11) Architecture overview

1. Full-isolated virtualized SSDs (FI-vSSDs)
   - FI-vSSDs provide storage services for latency-sensitive workloads.
2. Para-isolated virtualized SSDs (PI-vSSDs)
   - PI-vSSDs provide storage services for throughput-oriented workloads and capacity-oriented workloads.

Design(3/11) Architecture overview

1. Hit Rate Oriented (HRO) cache
   - For the dedicated data cache
   - For latency-sensitive workloads
2. Prioritized Write-Friendly (PWF) cache
   - For the shared data cache
   - For throughput-oriented workloads and capacity-oriented workloads
   - The PWF policy can prioritize cache pages of different workloads and reduce the amount of flash writes.
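The mapping from workload class to virtual SSD type and cache policy described on these two slides can be summarized as a lookup. This is a sketch of the mapping only; the names `WorkloadType` and `place` are hypothetical and do not come from the paper.

```python
from enum import Enum

class WorkloadType(Enum):
    LATENCY_SENSITIVE = "latency-sensitive"
    THROUGHPUT_ORIENTED = "throughput-oriented"
    CAPACITY_ORIENTED = "capacity-oriented"

def place(workload_type):
    """Return (vSSD kind, data cache, cache policy) for a workload type, per the slides."""
    if workload_type is WorkloadType.LATENCY_SENSITIVE:
        return ("FI-vSSD", "dedicated", "HRO")
    # Throughput-oriented and capacity-oriented workloads share resources.
    return ("PI-vSSD", "shared", "PWF")

placements = {t.value: place(t) for t in WorkloadType}
```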

Design(4/11) Architecture overview

1. SLO-aware Arbitration
2. Asymmetric Cache Allocation
3. Channel-granularity NAND Flash Allocation

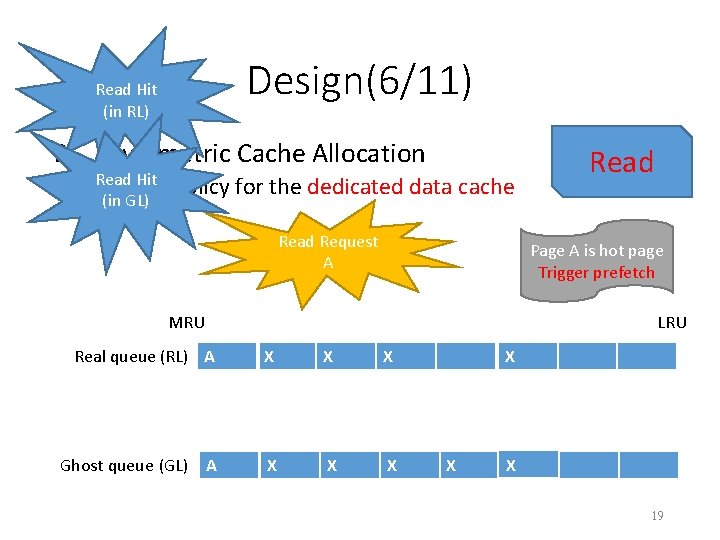

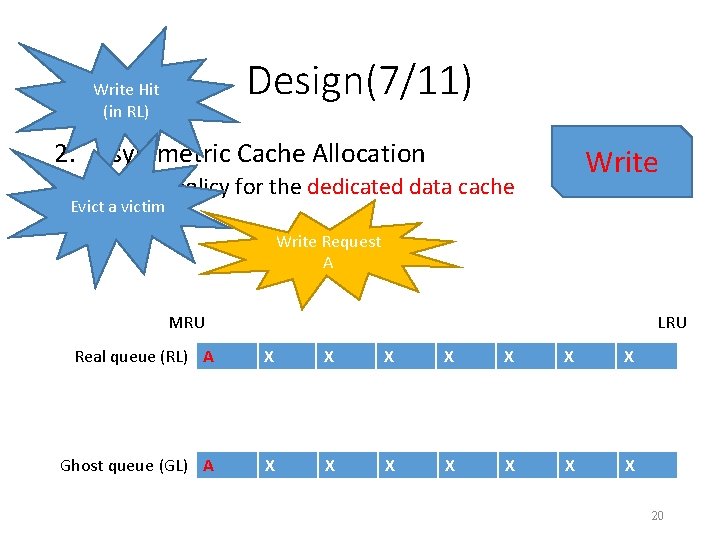

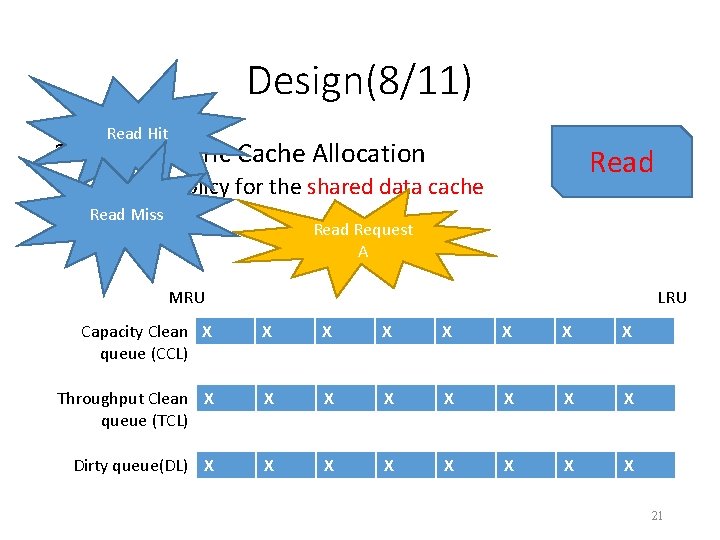

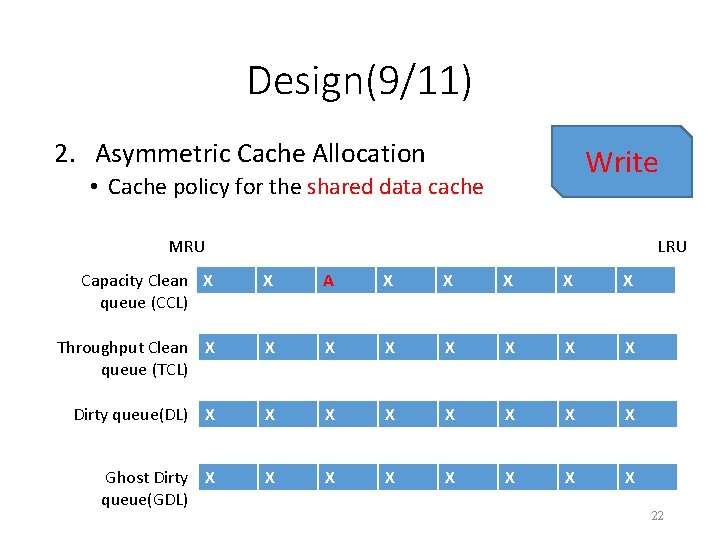

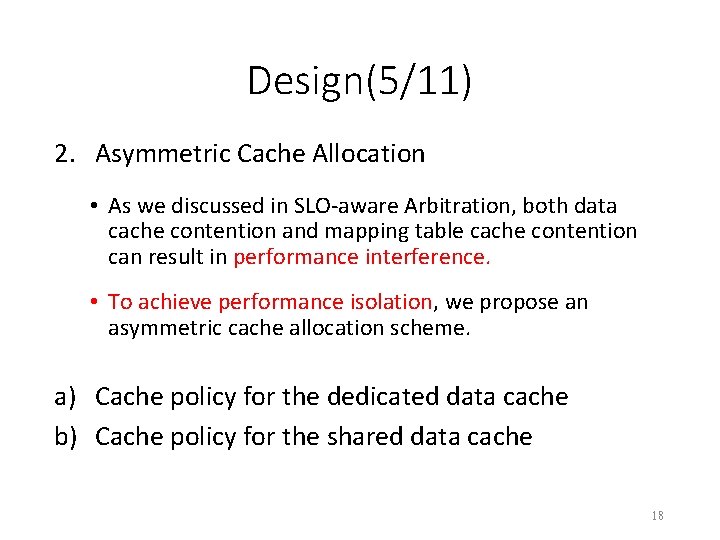

Design(5/11) 2. Asymmetric Cache Allocation

- As we discussed in SLO-aware Arbitration, both data cache contention and mapping table cache contention can result in performance interference.
- To achieve performance isolation, we propose an asymmetric cache allocation scheme:
  a) Cache policy for the dedicated data cache
  b) Cache policy for the shared data cache

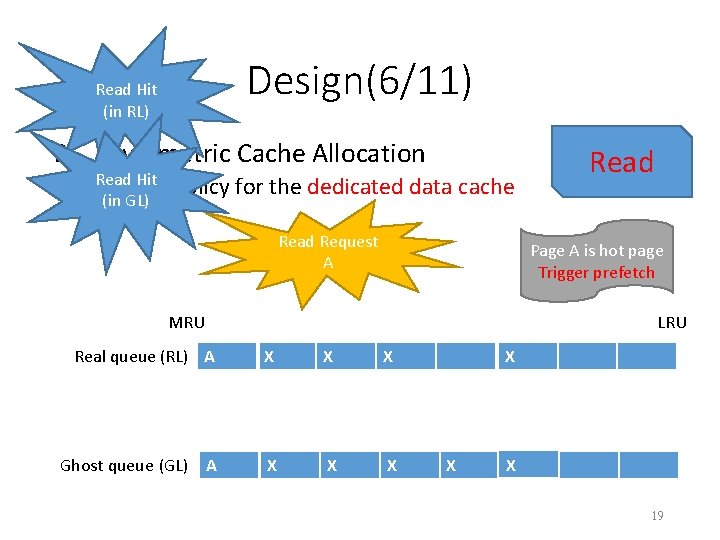

Design(6/11) 2. Asymmetric Cache Allocation

- Cache policy for the dedicated data cache: Read

[Figure: read request A against the real queue (RL) and the ghost queue (GL), each ordered from MRU to LRU. Cases shown: hit in RL; hit in GL, where page A is a hot page and a prefetch is triggered.]

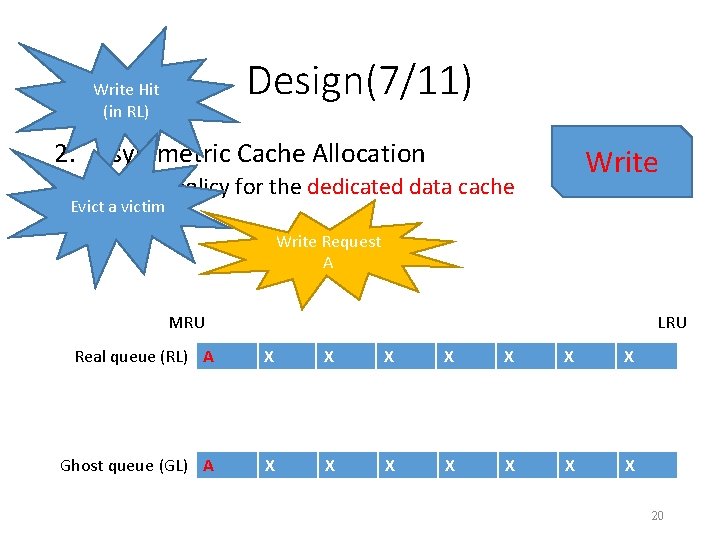

Design(7/11) 2. Asymmetric Cache Allocation

- Cache policy for the dedicated data cache: Write

[Figure: write request A against the real queue (RL) and the ghost queue (GL), each ordered from MRU to LRU. Cases shown: hit in RL; a write that evicts a victim page B.]
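The real queue (RL) and ghost queue (GL) on these two slides resemble a familiar pattern: an LRU cache that also remembers the identifiers of recently evicted pages. The sketch below shows that generic pattern, not the paper's HRO policy. The class name `GhostLRU`, the return labels, and the rule that a ghost hit marks a page as hot are assumptions, and the prefetch itself is not modeled.

```python
from collections import OrderedDict

class GhostLRU:
    """LRU cache (real queue) plus a ghost queue of recently evicted page ids."""

    def __init__(self, real_size, ghost_size):
        self.real_size, self.ghost_size = real_size, ghost_size
        self.real = OrderedDict()   # page -> data; the last item is the MRU page
        self.ghost = OrderedDict()  # page -> None; ids only, the last item is MRU

    def _insert(self, page, data):
        self.real[page] = data
        self.real.move_to_end(page)
        if len(self.real) > self.real_size:
            victim, _ = self.real.popitem(last=False)  # evict the LRU page
            self.ghost[victim] = None
            self.ghost.move_to_end(victim)
            if len(self.ghost) > self.ghost_size:
                self.ghost.popitem(last=False)

    def read(self, page):
        if page in self.real:
            self.real.move_to_end(page)
            return "hit-RL"
        hot = page in self.ghost
        if hot:
            del self.ghost[page]
        self._insert(page, "data-from-flash")
        # Assumption: a ghost hit marks the page as hot; a real design might prefetch here.
        return "hit-GL" if hot else "miss"

    def write(self, page, data):
        self.ghost.pop(page, None)
        self._insert(page, data)

cache = GhostLRU(real_size=2, ghost_size=2)
cache.write("A", b"a")
cache.write("B", b"b")
cache.write("C", b"c")          # evicts A into the ghost queue
results = [cache.read("C"), cache.read("A"), cache.read("Z")]
```

The ghost queue stores no data, so it costs little memory while still revealing that a page was evicted too early.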

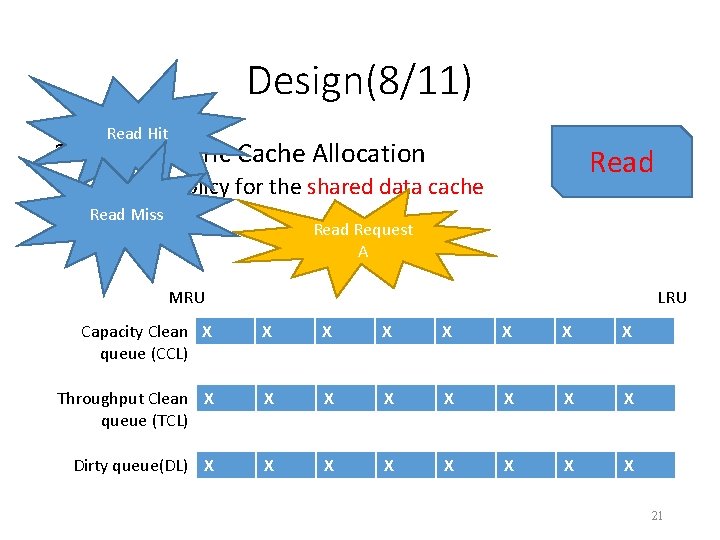

Design(8/11) 2. Asymmetric Cache Allocation

- Cache policy for the shared data cache: Read

[Figure: read request A against three queues, each ordered from MRU to LRU: Capacity Clean queue (CCL), Throughput Clean queue (TCL), and Dirty queue (DL). Cases shown: read hit and read miss.]

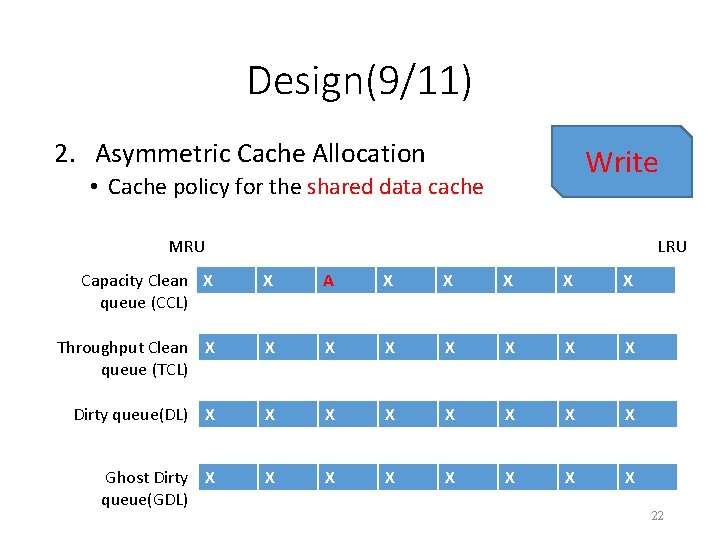

Design(9/11) 2. Asymmetric Cache Allocation

- Cache policy for the shared data cache: Write

[Figure: a write against four queues, each ordered from MRU to LRU: Capacity Clean queue (CCL), Throughput Clean queue (TCL), Dirty queue (DL), and Ghost Dirty queue (GDL).]
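One plausible reading of "prioritized" and "write-friendly" is a victim-selection order over the queues named above: evict clean pages before dirty ones, so that fewer evictions force a flash write, and evict capacity-oriented clean pages before throughput-oriented ones. That order, the use of the GDL to remember evicted dirty pages, and the function name `pick_victim` are all assumptions made for this sketch; the slides do not spell out the actual PWF rules.

```python
from collections import deque

def pick_victim(ccl, tcl, dl, gdl):
    """Pick an eviction victim; the queue order here is an assumption, not the paper's rule."""
    for name, queue in (("CCL", ccl), ("TCL", tcl)):
        if queue:
            return name, queue.pop(), False   # clean page: no flash write needed
    page = dl.pop()                            # dirty page: must be flushed to flash first
    gdl.appendleft(page)                       # remember its id in the ghost dirty queue
    return "DL", page, True

# Each deque is ordered MRU (left) to LRU (right), as in the slide figure.
ccl, tcl = deque(["c2", "c1"]), deque(["t1"])
dl, gdl = deque(["d2", "d1"]), deque()
evictions = [pick_victim(ccl, tcl, dl, gdl) for _ in range(4)]
```

In this run the first three evictions cost no flash write, and only the fourth has to flush a dirty page.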

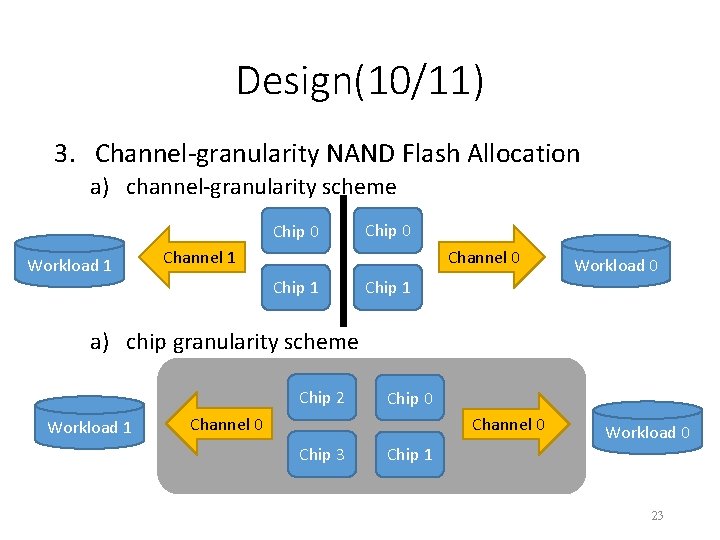

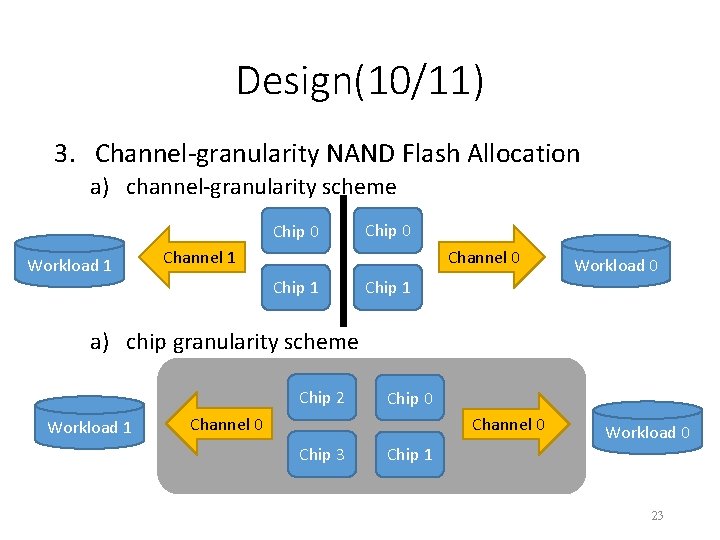

Design(10/11) 3. Channel-granularity NAND Flash Allocation

[Figure: two schemes for allocating flash to Workload 0 and Workload 1, drawn over channels and chips: a) channel-granularity scheme; b) chip-granularity scheme.]
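The difference between the two schemes can be checked mechanically: under chip-granularity allocation, chips on the same channel may belong to different workloads, so those workloads share the channel bus. The sketch below assumes a hypothetical layout of 2 channels with 2 chips each, numbered channel by channel; the function name `shared_channels` and the owner lists are illustrative only.

```python
def shared_channels(chip_owner, chips_per_channel):
    """Return the channels whose chips belong to more than one workload."""
    owners_by_channel = {}
    for chip, owner in enumerate(chip_owner):
        owners_by_channel.setdefault(chip // chips_per_channel, set()).add(owner)
    return sorted(ch for ch, owners in owners_by_channel.items() if len(owners) > 1)

# Hypothetical layout: 2 channels x 2 chips, chips numbered channel by channel.
channel_granularity = ["W0", "W0", "W1", "W1"]  # each workload owns a whole channel
chip_granularity = ["W0", "W1", "W0", "W1"]     # workloads interleave within a channel

contended_channel_scheme = shared_channels(channel_granularity, 2)
contended_chip_scheme = shared_channels(chip_granularity, 2)
```

In this layout the channel-granularity scheme leaves no channel shared between workloads, while the chip-granularity scheme shares both.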

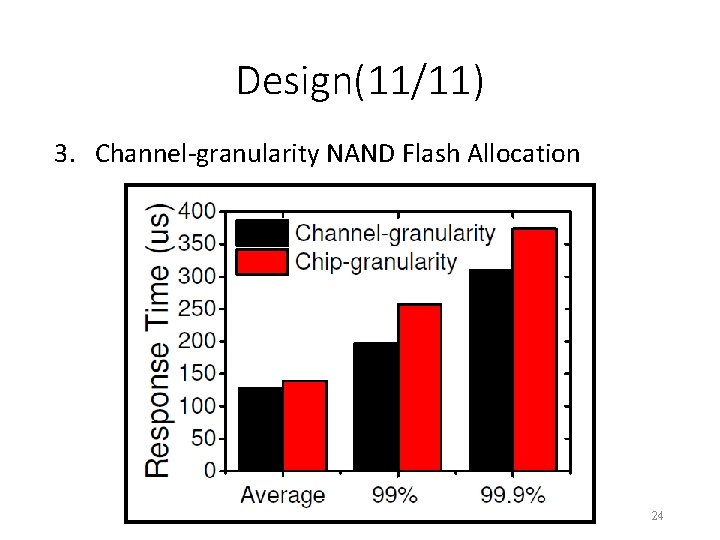

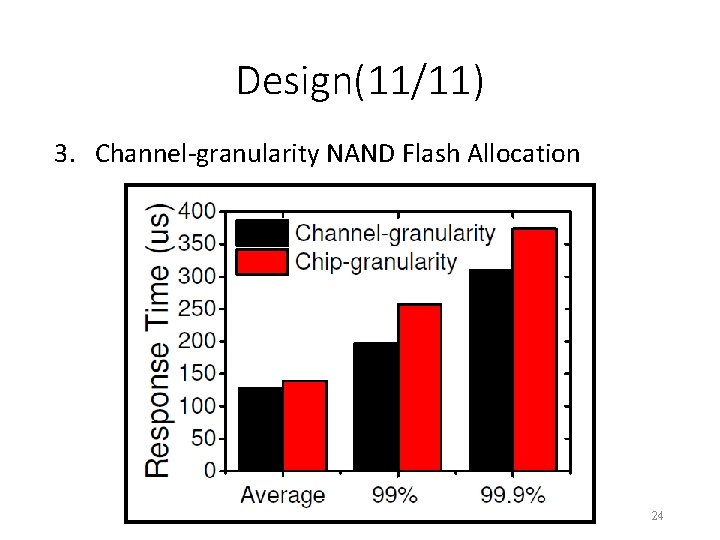

Design(11/11) 3. Channel-granularity NAND Flash Allocation


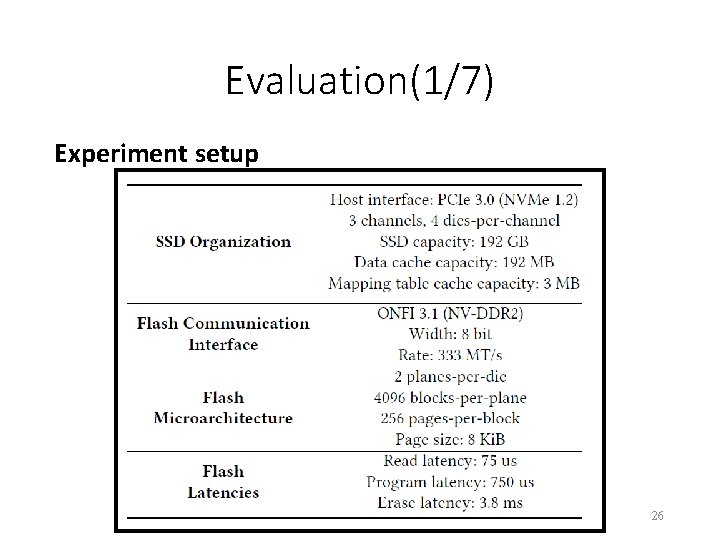

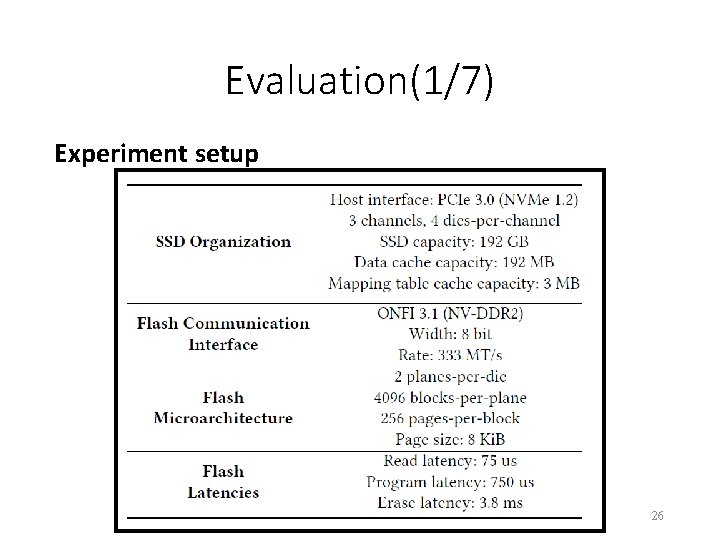

Evaluation(1/7) Experiment setup

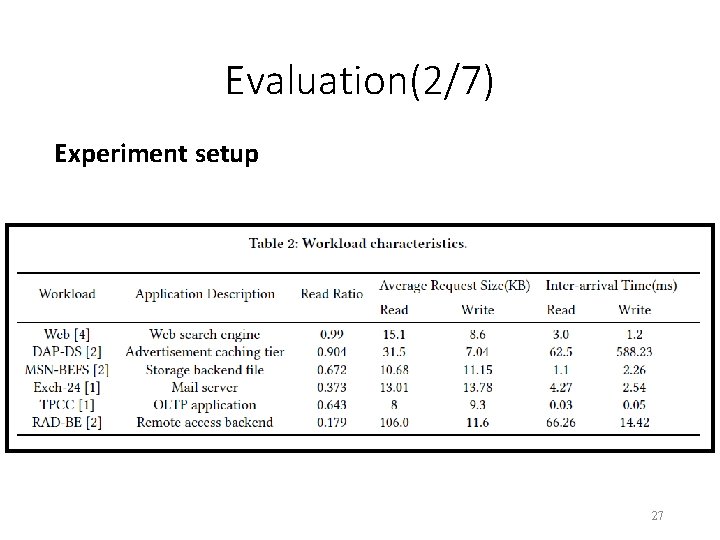

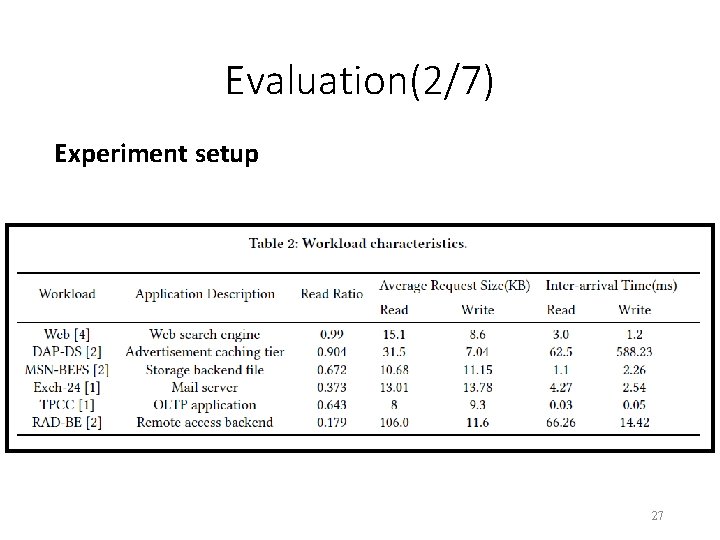

Evaluation(2/7) Experiment setup

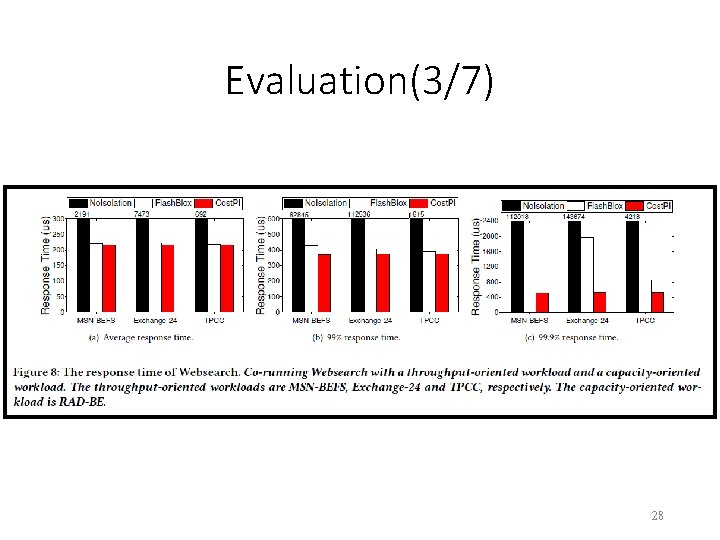

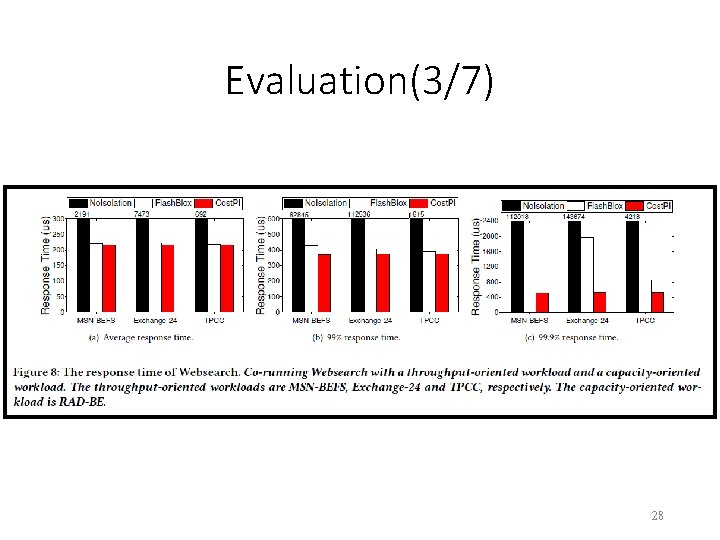

Evaluation(3/7)

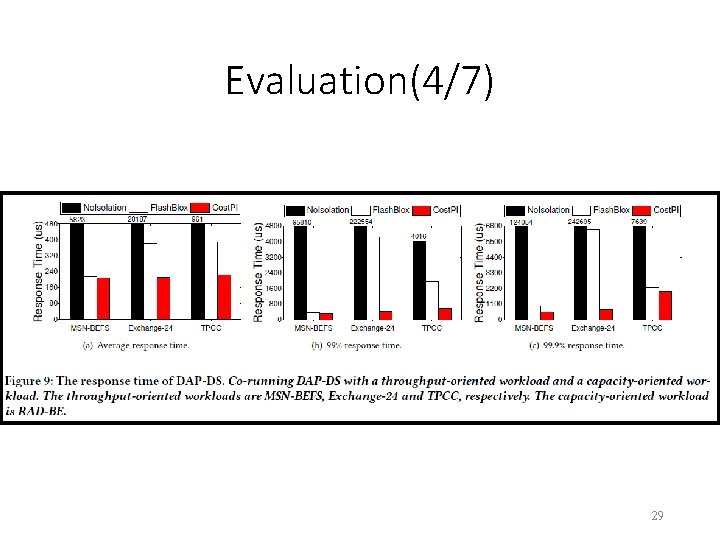

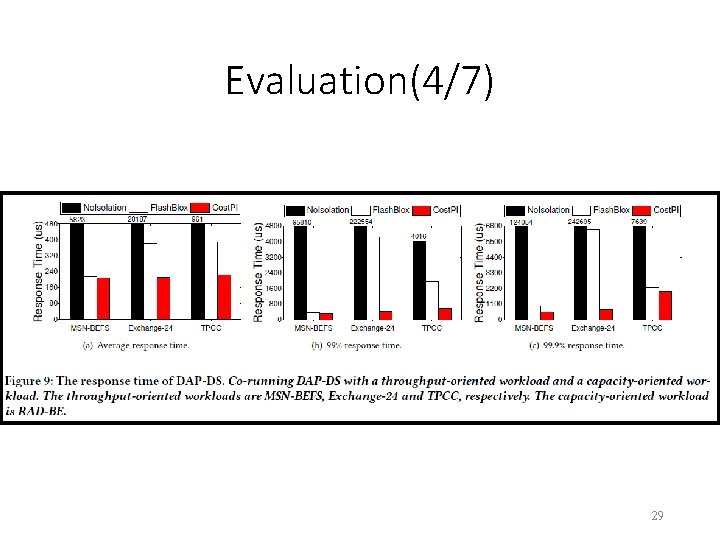

Evaluation(4/7)

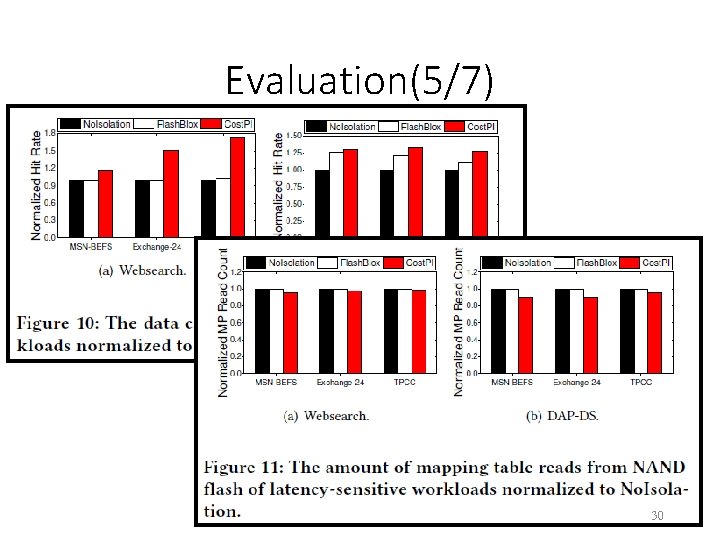

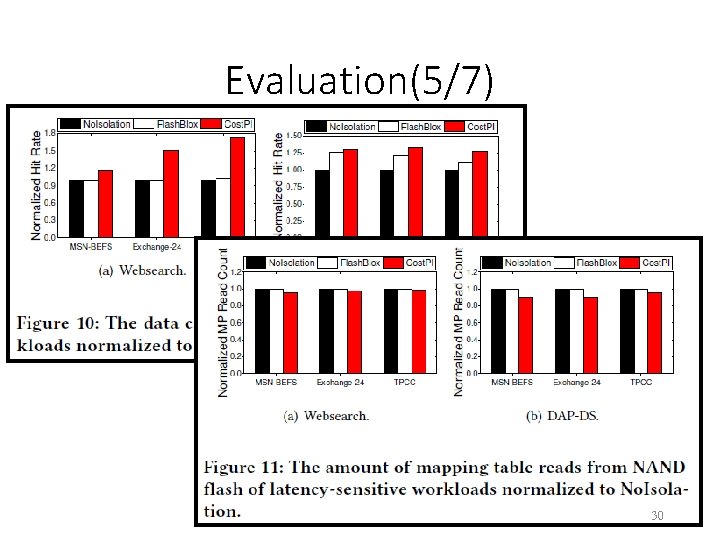

Evaluation(5/7)

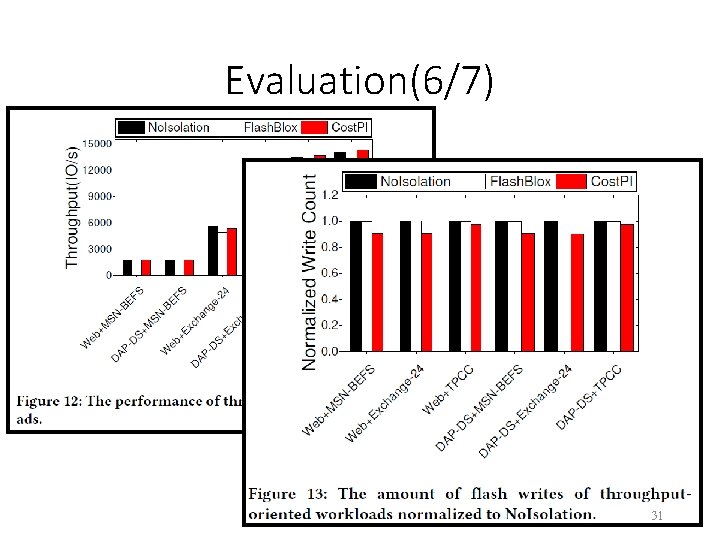

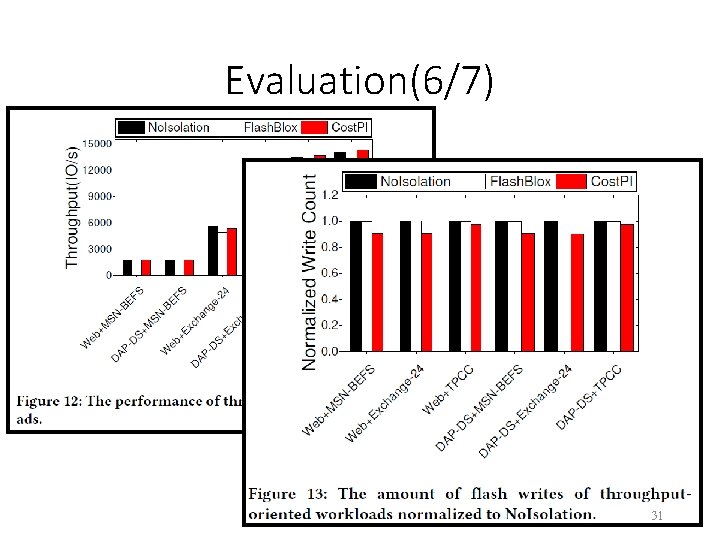

Evaluation(6/7)

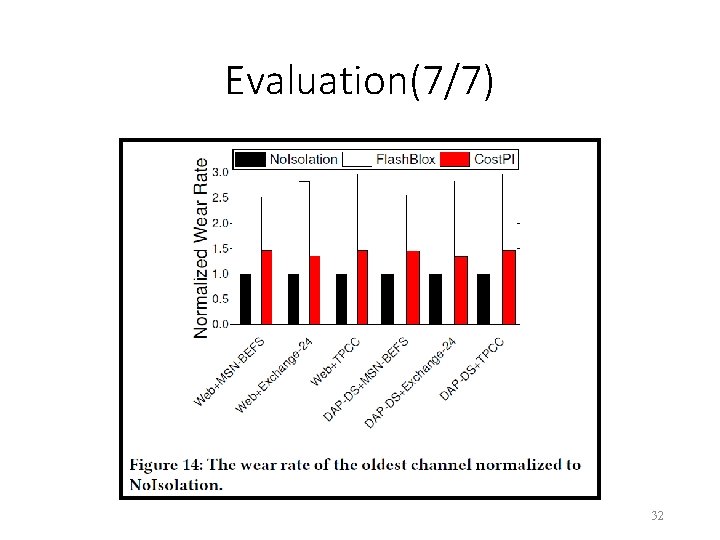

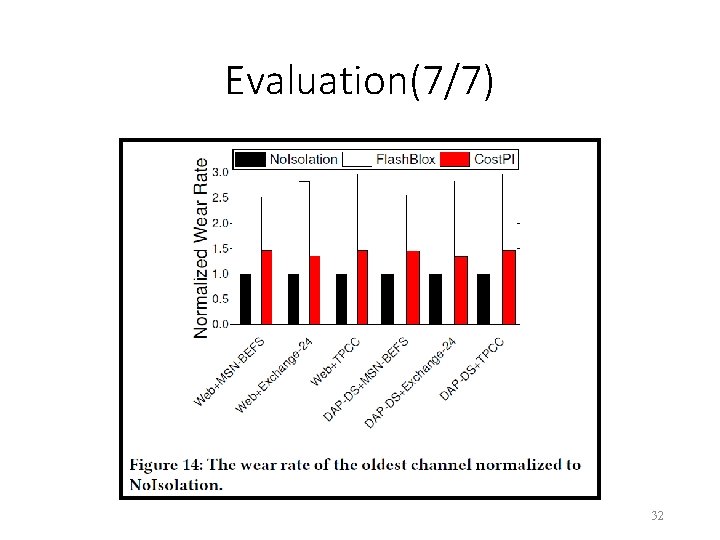

Evaluation(7/7)


Conclusion(1/1)

- We present a cost-effective performance isolation scheme for the shared NVMe SSD.
- Through FI-vSSDs and PI-vSSDs, CostPI can achieve end-to-end performance isolation while improving resource utilization and reducing wear imbalance.
- CostPI can reduce the average response time for latency-sensitive workloads.
- CostPI can increase the throughput of throughput-oriented workloads and reduce the wear imbalance of the shared SSD.

Thank you for listening