Mining of Massive Datasets Jure Leskovec Anand Rajaraman


Mining of Massive Datasets
Jure Leskovec, Anand Rajaraman, Jeff Ullman
Stanford University
http://www.mmds.org

What is Data Mining?
Knowledge discovery from data

Data Mining
But to extract the knowledge, data needs to be:
• Stored
• Managed
• And ANALYZED
Data Mining ≈ Big Data ≈ Predictive Analytics ≈ Data Science
J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

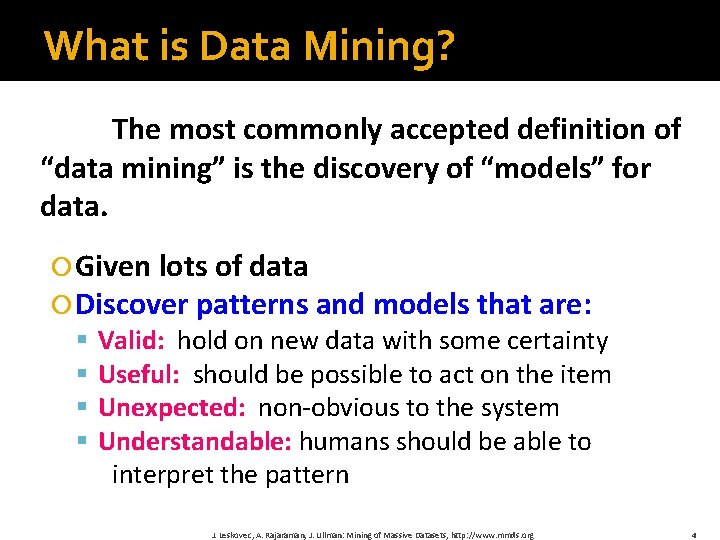

What is Data Mining?
The most commonly accepted definition of “data mining” is the discovery of “models” for data. Given lots of data, discover patterns and models that are:
• Valid: hold on new data with some certainty
• Useful: should be possible to act on the item
• Unexpected: non-obvious to the system
• Understandable: humans should be able to interpret the pattern

A “model,” however, can be one of several things. We mention below the most important directions in modeling:
• Statistical modeling: e.g., a Gaussian distribution.
• Machine learning.
• Computational approaches to modeling:
  o Summarization: e.g., PageRank, clustering.
  o Feature extraction: e.g., frequent itemsets, similar items.
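The statistical-modeling direction can be illustrated with a minimal sketch, assuming the data is simply a list of numbers that we decide to model as a Gaussian. The whole "model" is then two numbers: the maximum-likelihood mean and standard deviation. The function names `fit_gaussian` and `gaussian_pdf` and the sample values are hypothetical, chosen only for illustration.

```python
import math
import statistics


def fit_gaussian(values):
    """Return the maximum-likelihood (mean, std) of a Gaussian fit to values.

    The ML estimate of the variance divides by n, so we use the population
    standard deviation (pstdev) rather than the sample version (stdev).
    """
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values, mu)
    return mu, sigma


def gaussian_pdf(x, mu, sigma):
    """Density of the fitted Gaussian model at point x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


# Hypothetical data set; after fitting, (mu, sigma) stands in for all of it.
data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
mu, sigma = fit_gaussian(data)
print(mu, sigma)  # 5.0 2.0
```

Once fitted, the model can answer questions about values never seen in the data (for example, via `gaussian_pdf`), which is what distinguishes a model from the raw data.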

Statistical Limits on Data Mining:
1. Total Information Awareness
2. Bonferroni’s Principle

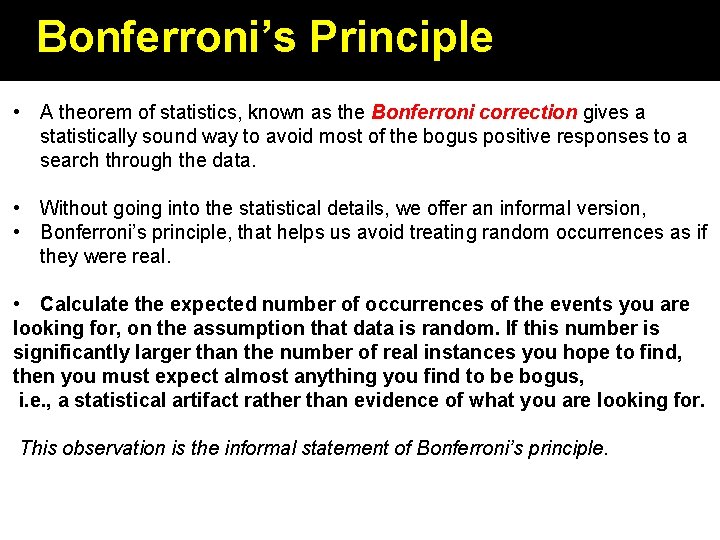

Bonferroni’s Principle
• A theorem of statistics known as the Bonferroni correction gives a statistically sound way to avoid most of the bogus positive responses to a search through the data.
• Without going into the statistical details, we offer an informal version, Bonferroni’s principle, that helps us avoid treating random occurrences as if they were real.
• Calculate the expected number of occurrences of the events you are looking for, on the assumption that the data is random. If this number is significantly larger than the number of real instances you hope to find, then you must expect almost anything you find to be bogus, i.e., a statistical artifact rather than evidence of what you are looking for. This observation is the informal statement of Bonferroni’s principle.
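The principle comes down to one expected-value calculation. Below is a minimal sketch under a hypothetical surveillance scenario in the spirit of Total Information Awareness: we flag any pair of people who were at the same hotel on two different days. Every number (population, days, visit probability, hotel count, number of real pairs) is an assumption for illustration, and people are assumed to choose days and hotels independently and uniformly at random.

```python
from math import comb

# All values below are hypothetical assumptions, not measured data.
PEOPLE = 10**9      # people under observation
DAYS = 1000         # days of hotel records examined
P_VISIT = 0.01      # chance a given person is at some hotel on a given day
HOTELS = 100_000    # hotels, each equally likely to be chosen
REAL_PAIRS = 10     # genuine pairs we hope to find


def expected_random_hits():
    """Expected number of (pair of people, pair of days) where both people
    were at the same hotel on both days, if everyone behaves randomly."""
    # Both people at a hotel on one given day, and it is the same hotel.
    p_same_hotel_one_day = P_VISIT * P_VISIT / HOTELS
    # The same coincidence, independently, on two given days.
    p_two_days = p_same_hotel_one_day ** 2
    # Multiply by the number of pairs of people and pairs of days.
    return comb(PEOPLE, 2) * comb(DAYS, 2) * p_two_days


expected = expected_random_hits()
print(f"expected random 'suspects': {expected:,.0f}")
print(f"ratio to real pairs: {expected / REAL_PAIRS:,.0f}")
```

Under these assumptions the expected number of purely random matches is roughly 250,000, against only 10 real pairs, so by Bonferroni’s principle almost every flagged pair would be a statistical artifact.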

What Is Big Data?
• Big data is a term that describes the large volume of data, both structured and unstructured, that inundates a business on a day-to-day basis.
• Big data can be analyzed for insights that lead to better decisions and strategic business moves.
According to Gartner, “Big data is high-volume, high-velocity and high-variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision making.”

Characteristics Of Big Data
The mainstream definition of big data is the three V’s:
• Volume. Organizations collect data from a variety of sources, including business transactions, social media, and sensor or machine-to-machine data. In the past, storing it would have been a problem, but new technologies (such as Hadoop) have eased the burden.
• Velocity. Data streams in at an unprecedented speed and must be dealt with in a timely manner. RFID tags, sensors, and smart metering are driving the need to deal with torrents of data in near-real time.
• Variety. Data comes in all types of formats, from structured, numeric data in traditional databases to unstructured text documents, email, video, audio, stock ticker data, and financial transactions.

We consider two additional dimensions when it comes to big data:
• Variability. In addition to the increasing velocities and varieties of data, data flows can be highly inconsistent, with periodic peaks. Is something trending in social media? Daily, seasonal, and event-triggered peak data loads can be challenging to manage, even more so with unstructured data.
• Complexity. Today's data comes from multiple sources, which makes it difficult to link, match, cleanse, and transform data across systems. However, it is necessary to connect and correlate relationships, hierarchies, and multiple data linkages, or your data can quickly spiral out of control.

Types of Big Data
• Structured
• Unstructured
• Semi-structured

Types of Big Data
• Structured data means data that can be processed, stored, and retrieved in a fixed format.
• It refers to highly organized information that can be readily and seamlessly stored in and accessed from a database by simple search engine algorithms.
• For instance, an employee table in a company database is structured: the employee details, job positions, salaries, etc., are present in an organized manner.
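To make the employee-table example concrete, here is a minimal sketch using Python's built-in `sqlite3` module with an in-memory database. The table name, columns, and rows are hypothetical.

```python
import sqlite3

# Hypothetical employee table: every row has the same fixed columns and types.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employee ("
    "id INTEGER PRIMARY KEY, name TEXT, position TEXT, salary INTEGER)"
)
conn.executemany(
    "INSERT INTO employee (name, position, salary) VALUES (?, ?, ?)",
    [
        ("Asha", "Engineer", 90000),
        ("Ben", "Analyst", 70000),
        ("Chen", "Engineer", 95000),
    ],
)

# Because the schema is fixed, a short declarative query retrieves exactly
# the rows and columns we want.
rows = conn.execute(
    "SELECT name, salary FROM employee WHERE position = ? ORDER BY salary DESC",
    ("Engineer",),
).fetchall()
print(rows)  # [('Chen', 95000), ('Asha', 90000)]
conn.close()
```

The fixed schema is what makes retrieval this simple: the database knows in advance where every field lives and what type it has.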

Types of Big Data
• Unstructured data refers to data that lacks any specific form or structure whatsoever.
• This makes it very difficult and time-consuming to process and analyze unstructured data.
• Email is an example of unstructured data.

Types of Big Data
• Semi-structured data refers to data that contains both of the formats mentioned above, that is, structured and unstructured data.
• To be precise, it refers to data that, although not classified under a particular repository (database), contains vital information or tags that separate individual elements within the data.
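A minimal sketch of semi-structured data, assuming JSON as the format (one common choice; the slide does not name one). The records share no fixed schema, yet every value is labeled by a key that acts as a tag. The records themselves are hypothetical.

```python
import json

# Hypothetical records: no fixed schema, but keys (tags) label each element.
raw = """
[
  {"from": "asha@example.com", "subject": "Q3 report", "body": "Draft attached."},
  {"from": "ben@example.com", "tags": ["urgent"], "body": "Call me back."},
  {"from": "chen@example.com", "subject": "Lunch?", "attachments": 2}
]
"""

records = json.loads(raw)

# Fields vary from record to record, so code must tolerate missing keys.
for rec in records:
    subject = rec.get("subject", "(no subject)")
    print(rec["from"], "|", subject, "|", sorted(rec.keys()))

# The union of keys acts as a schema discovered from the data itself.
all_keys = sorted({key for rec in records for key in rec})
print(all_keys)  # ['attachments', 'body', 'from', 'subject', 'tags']
```

Unlike the employee table, no column list is declared up front; the structure is carried inside each record by its tags.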

Why Is Big Data Important?
You can take data from any source and analyze it to find answers that enable 1) cost reductions, 2) time reductions, 3) new product development and optimized offerings, and 4) smart decision making. When you combine big data with high-powered analytics, you can accomplish business-related tasks such as:
• Determining root causes of failures, issues and defects in near-real time.
• Generating coupons at the point of sale based on the customer’s buying habits.
• Recalculating entire risk portfolios in minutes.
• Detecting fraudulent behaviour before it affects your organization.

I ♥ data
How do you want that data?

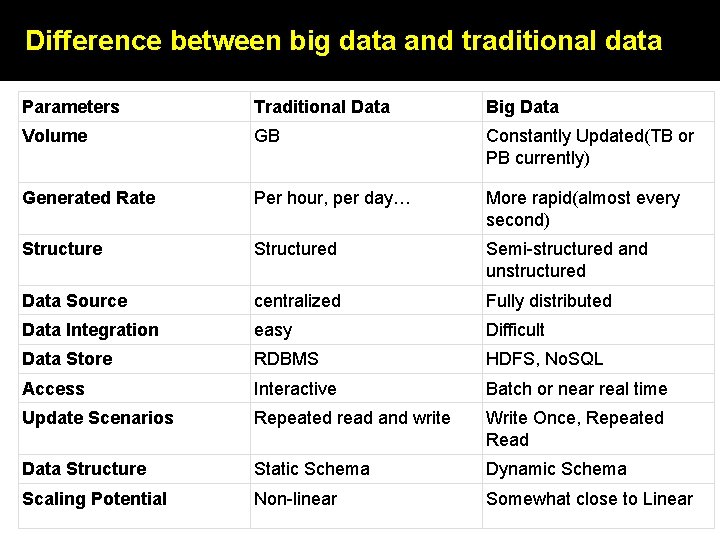

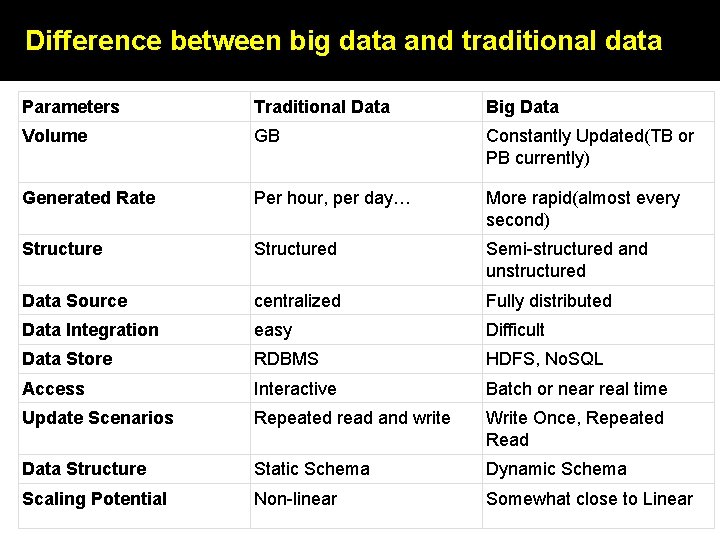

Difference between big data and traditional data

| Parameter | Traditional Data | Big Data |
|---|---|---|
| Volume | GB | Constantly updated (TB or PB currently) |
| Generation rate | Per hour, per day… | More rapid (almost every second) |
| Structure | Structured | Semi-structured and unstructured |
| Data source | Centralized | Fully distributed |
| Data integration | Easy | Difficult |
| Data store | RDBMS | HDFS, NoSQL |
| Access | Interactive | Batch or near real time |
| Update scenarios | Repeated read and write | Write once, repeated read |
| Data structure | Static schema | Dynamic schema |
| Scaling potential | Non-linear | Somewhat close to linear |

Mining of massive datasets solution

Mining of massive datasets solution Stanford mining massive datasets

Stanford mining massive datasets Cs 246 stanford

Cs 246 stanford Cs 246

Cs 246 Leskovec

Leskovec Rajmohan rajaraman

Rajmohan rajaraman Arvind rajaraman

Arvind rajaraman Proc datasets noprint

Proc datasets noprint Myafsaccount

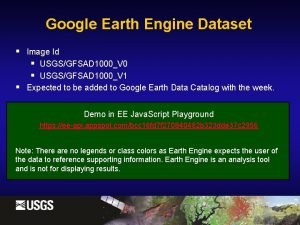

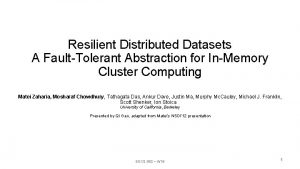

Myafsaccount Resilient distributed datasets

Resilient distributed datasets Adsl dataset example

Adsl dataset example Iteratrion

Iteratrion Sklearn.datasets.samples_generator

Sklearn.datasets.samples_generator Mining multimedia databases in data mining

Mining multimedia databases in data mining Strip mining vs open pit mining

Strip mining vs open pit mining Eck

Eck Difference between strip mining and open pit mining

Difference between strip mining and open pit mining Strip mining vs open pit mining

Strip mining vs open pit mining